Abstract

Both visual and vestibular sensory cues are important for perceiving one's direction of heading during self-motion. Previous studies have identified multisensory, heading-selective neurons in the dorsal medial superior temporal area (MSTd) and the ventral intraparietal area (VIP). Both MSTd and VIP have strong recurrent connections with the pursuit area of the frontal eye field (FEFsem), but whether FEFsem neurons may contribute to multisensory heading perception remains unknown. We characterized the tuning of macaque FEFsem neurons to visual, vestibular, and multisensory heading stimuli. About two-thirds of FEFsem neurons exhibited significant heading selectivity based on either vestibular or visual stimulation. These multisensory neurons shared many properties, including distributions of tuning strength and heading preferences, with MSTd and VIP neurons. Fisher information analysis also revealed that the average FEFsem neuron was almost as sensitive as MSTd or VIP cells. Visual and vestibular heading preferences in FEFsem tended to be either matched (congruent cells) or discrepant (opposite cells), such that combined stimulation strengthened heading selectivity for congruent cells but weakened heading selectivity for opposite cells. These findings demonstrate that, in addition to oculomotor functions, FEFsem neurons also exhibit properties that may allow them to contribute to a cortical network that processes multisensory heading cues.

Keywords: frontal eye field, multisensory, optic flow, self-motion, vestibular

Introduction

To generate robust estimates of heading during navigation, the brain needs to integrate multiple sensory signals, including visual (optic flow) and vestibular (inertial motion) cues (see reviews by Angelaki et al. 2009; Fetsch, DeAngelis et al. 2010). Previous research has focused primarily on the dorsal medial superior temporal area (MSTd) of the macaque extrastriate visual cortex, where neurons are tuned to the direction of self-motion based on both visual and vestibular cues (Duffy 1998; Bremmer et al. 1999; Page and Duffy 2003; Gu et al. 2006; Takahashi et al. 2007). A subpopulation of neurons with matched visual and vestibular heading preferences (congruent cells) tends to show enhanced heading selectivity when the 2 cues are provided simultaneously, thus allowing for more precise heading judgments (Gu et al. 2008; Fetsch et al. 2013). Artificially perturbing neural activity further confirms that area MSTd is directly involved in heading perception based on optic flow or vestibular motion signals (Britten and van Wezel 1998, 2002; Gu et al. 2012).

There is emerging evidence, however, that MSTd may not be the only cortical area relevant to multisensory heading perception. Specifically, MSTd inactivation results suggested that optimal heading judgments rely on neural activity from other cortical areas in addition to MSTd (Gu et al. 2012). In support of this conclusion, the ventral intraparietal (VIP) (Colby et al. 1993; Bremmer et al. 1999; Bremmer et al. 2002; Schlack et al. 2002; Zhang et al. 2004; Britten 2008; Maciokas and Britten 2010; Zhang and Britten 2010; Chen et al. 2011a) and visual posterior sylvian (VPS) (Chen et al. 2011b) areas also exhibit visual and vestibular tuning for direction of self-motion. Another cortical area that is anatomically connected with MSTd is the pursuit area of the frontal eye fields (FEFsem), located in the fundus of the anterior bank and the posterior bank of the arcuate sulcus (MacAvoy et al. 1991; Gottlieb et al. 1993; Gottlieb et al. 1994; for review, see Lynch and Tian 2006). Whether FEFsem contains neurons with multisensory heading selectivity remains unknown.

Traditionally, FEFsem has been considered to be part of the macaque smooth pursuit system (Gottlieb et al. 1994; Tanaka and Fukushima 1998; Fukushima et al. 2000; Tanaka and Lisberger 2002; see review by Fukushima 2003). Although it is known that FEFsem neurons respond to visual motion (MacAvoy et al. 1991; Krauzlis 2004; Fujiwara et al. 2014), whether they are also tuned to the complex optic flow patterns experienced during navigation has never been tested. Furthermore, responses of FEFsem neurons are modulated by vestibular stimuli, including both rotations (Fukushima et al. 2005; Fukushima et al. 2006; Akao et al. 2007) and translations (Fukushima et al. 2005; Akao et al. 2009), and FEFsem receives direct vestibular projections via the thalamus (Ebata et al. 2004). These studies suggested that FEFsem neurons might integrate visual and vestibular cues to translational self-motion.

We used a virtual reality system (Gu et al. 2006) to systematically characterize the activity of FEFsem neurons in response to heading stimuli defined by inertial (vestibular) motion and optic flow. We first quantified each FEFsem neuron's heading selectivity in terms of tuning strength and direction preference. We then used Fisher information to assess the cells' heading sensitivity in comparison to MSTd and VIP neurons (Gu et al. 2008; Fetsch et al. 2012; Chen et al. 2013a). We show that responses of FEFsem neurons to 3D heading stimuli share many features with visual/vestibular neurons in other multisensory cortical areas.

Materials and Methods

Subjects

Physiological experiments were performed in 3 male rhesus monkeys (Macaca mulatta) weighing 6–8 kg. The animals were chronically implanted with a plastic head-restraint ring that was firmly anchored to the apparatus during the experiment to minimize head movement. All monkeys were implanted with a scleral coil in 1 eye, for measuring eye movements in a magnetic field (Robinson 1963). After sufficient recovery, animals were trained using standard operant conditioning to fixate visual targets for fluid reward. All animal surgeries and experimental procedures were approved by the Institutional Animal Care and Use Committee at Washington University and were in accordance with NIH guidelines.

Motion Stimuli

Translation of the monkey in 3D space was accomplished by a motion platform (MOOG 6DOF2000E; Moog, East Aurora, NY). To activate vestibular otolith organs, each transient inertial motion stimulus followed a smooth trajectory with a Gaussian velocity profile having a peak velocity of 0.25 m/s and a peak acceleration of approximately 1 m/s². Note that we did not apply any rotational stimuli in the current study; thus, our stimuli activated vestibular processing pathways driven by the otolith organs but not the semicircular canals. A 3-chip DLP projector (Christie Digital Mirage 2000) was mounted on the motion platform and rear-projected images (subtending 90 × 90° of visual angle) onto a tangent screen in front of the monkey. Visual stimuli depicted movement through a 3D cloud of “stars” that occupied a virtual space 100 cm wide, 100 cm tall, and 40 cm deep. Star density was 0.01 cm⁻³, with each star being a 0.15 × 0.15 cm triangle. Stimuli were presented stereoscopically as red/green anaglyphs and were viewed through Kodak Wratten filters (red #29, green #61). The display contained a variety of depth cues, including horizontal disparity, motion parallax, and size information. All visual motion stimuli were presented at 100% coherence.
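As an illustration of how the stated peak velocity and peak acceleration constrain the motion profile, the following Python sketch derives the width of a Gaussian velocity profile from those two numbers. It is not the stimulus-generation code used in the experiments. The names `SIGMA` and `T0`, the 2 s duration taken from Figure 1B, and the assumption that the profile is centered in the stimulus period are all illustrative choices.

```python
import math

PEAK_VELOCITY = 0.25      # m/s, from the text
PEAK_ACCELERATION = 1.0   # m/s^2, approximate value from the text
DURATION = 2.0            # s, stimulus duration (Fig. 1B)

# For v(t) = v_peak * exp(-(t - t0)^2 / (2 * sigma^2)), |dv/dt| is largest at
# t0 +/- sigma, where it equals v_peak / (sigma * sqrt(e)). Solving for sigma:
SIGMA = PEAK_VELOCITY / (PEAK_ACCELERATION * math.sqrt(math.e))  # s (derived, assumed)
T0 = DURATION / 2.0  # s (assumption: profile centered in the stimulus period)


def velocity(t):
    """Gaussian velocity (m/s) at time t (s) after stimulus onset."""
    return PEAK_VELOCITY * math.exp(-((t - T0) ** 2) / (2.0 * SIGMA ** 2))


def acceleration(t):
    """Analytic derivative of velocity(t); biphasic around T0 (m/s^2)."""
    return -(t - T0) / SIGMA ** 2 * velocity(t)


if __name__ == "__main__":
    for t in (0.25, 0.5, T0 - SIGMA, T0, T0 + SIGMA, 1.5):
        print(f"t={t:.3f} s  v={velocity(t):.4f} m/s  a={acceleration(t):+.4f} m/s^2")
```

Under these assumptions the velocity 500 ms after onset is well below 1% of its peak, which is consistent with the statement that little stimulus-related activity is expected early in the trial.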

Behavioral Task and Experimental Protocols

Monkeys were required to maintain fixation on a head-fixed visual target located at the center of the display screen during each stimulus presentation. Trials were aborted if eye position deviated from a 2 × 2° electronic window centered on the fixation point. The experimental paradigm consisted of 3 randomly interleaved stimulus conditions: 1) In the “vestibular” condition, the monkey was translated by the motion platform while fixating a head-fixed target on a blank screen. Motion was along one of the 26 headings that spanned all combinations of azimuth and elevation angles in steps of 45° (Fig. 1A). In this condition, the main source of heading information was otolith-driven signals from the vestibular system (Gu et al. 2006; Gu et al. 2007; Takahashi et al. 2007). 2) In the “visual” condition, the motion platform remained stationary while optic flow simulated the same set of 26 headings. 3) In the “combined” condition, congruent inertial motion and optic flow were provided, with visual and vestibular stimuli being temporally synchronized. Each of these 78 stimulus conditions was repeated a minimum of 3 times, but typically 5 times. To measure the spontaneous activity of each neuron, additional trials without platform motion or optic flow were interleaved, resulting in a total of 395 trials for 5 repetitions of each distinct stimulus. Across all 229 neurons recorded in FEFsem, the average spontaneous activity was 12.3 ± 12.0 spikes/s (mean ± SD). During all 3 stimulus conditions, the animal was required to fixate a central target (0.2° in diameter) at a viewing distance of 30 cm and to maintain fixation within a 2 × 2° electronic window. Animals were rewarded with a drop of liquid (∼0.1 mL) at the end of each trial for maintaining fixation throughout the stimulus presentation. Because we only monitored the movements of 1 eye, vergence angle was not measured.
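The stimulus counts quoted above can be checked with a short enumeration. The sketch below is illustrative only: the function name `enumerate_headings` is hypothetical, the two vertical headings are stored with azimuth 0 by convention, and the assumption of one null (no-motion) trial per repetition is inferred from the total of 395 trials rather than stated explicitly.

```python
def enumerate_headings(step_deg=45):
    """Return (azimuth, elevation) pairs in degrees sampled on a sphere."""
    headings = []
    for elevation in range(-90, 91, step_deg):
        if abs(elevation) == 90:
            # Straight up or down: azimuth is undefined, stored as 0 by convention.
            headings.append((0, elevation))
        else:
            for azimuth in range(0, 360, step_deg):
                headings.append((azimuth, elevation))
    return headings


headings = enumerate_headings()
n_conditions = 3 * len(headings)              # vestibular, visual, combined
repetitions = 5
n_trials = repetitions * (n_conditions + 1)   # +1 null trial per repetition (assumed)

if __name__ == "__main__":
    print(len(headings), n_conditions, n_trials)
```

Eight azimuths at each of three elevations (−45°, 0°, 45°) plus the two poles give the 26 headings, and three stimulus conditions give the 78 distinct stimuli.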

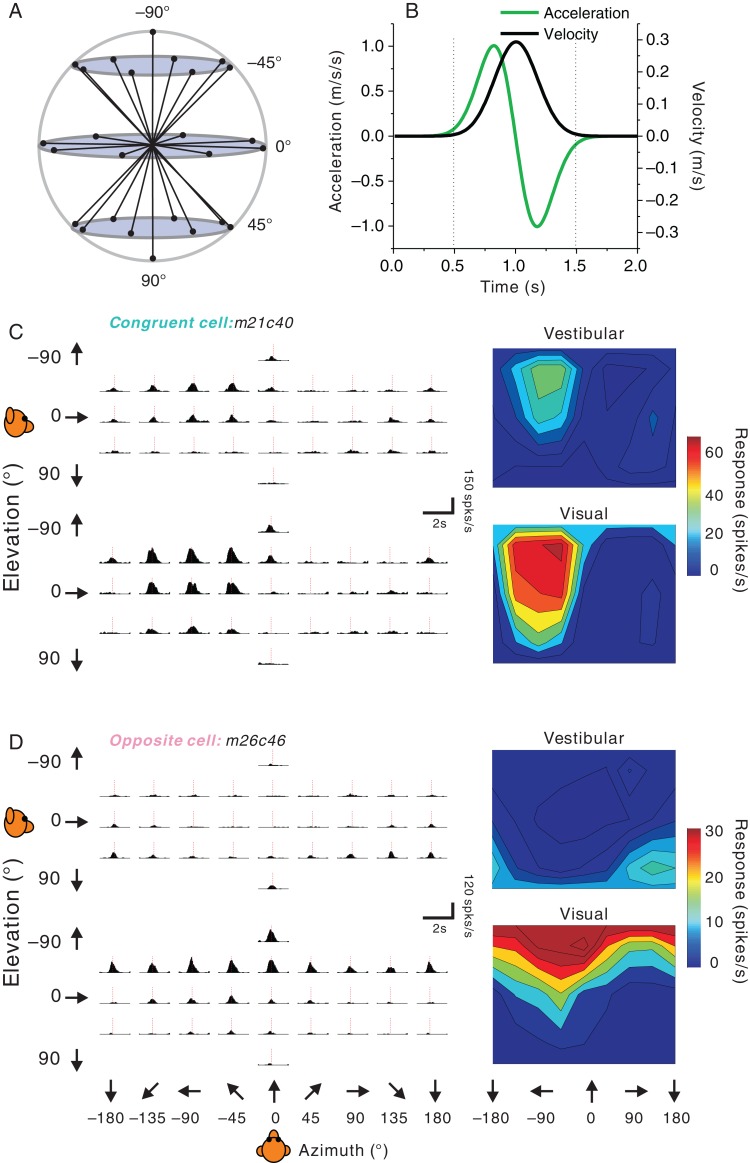

Figure 1.

Stimuli and examples of single-peaked 3D heading tuning. (A) Schematic of the 26 movement trajectories in 3D, spaced 45° apart in both azimuth and elevation. (B) The 2 s stimulus profile: velocity (black), acceleration (green). (C) Vestibular (top) and visual (bottom) PSTHs (left panels) and 3D heading tuning profiles (right panels) for a congruent FEFsem neuron. The dashed red vertical lines indicate the single peak time (vestibular: 925 ms, visual: 875 ms). (D) PSTHs and spatial tuning profiles for an FEFsem neuron with opposite heading preferences for the vestibular and visual conditions. For both example neurons, 3D tuning profiles are illustrated as color contour maps (Lambert cylindrical equal area projection of spherical data). Firing rate is based on spikes counted during the middle 1 s of the stimulus period. Dashed red vertical lines indicate the single peak times (vestibular: 1000 ms, visual: 900 ms).

In contrast to the saccade area of the frontal eye field, where electrical microstimulation evokes contralateral saccadic eye movements (Bruce et al. 1985), stimulating the pursuit area typically evokes smooth ipsilateral eye movements (MacAvoy et al. 1991; Gottlieb et al. 1993; Gottlieb et al. 1994; Tanaka and Lisberger 2002). In the present experiments, FEFsem was identified through a combination of structural magnetic resonance imaging (MRI) scans and the pattern of microstimulation-evoked smooth eye movements (MacAvoy et al. 1991; Gottlieb et al. 1994; Tanaka and Fukushima 1998; Fukushima 2003). During mapping, an electrical current was delivered through a tungsten microelectrode (FHC, tip diameter ∼3 µm, impedance ∼1 MΩ) placed into the peri-arcuate area. Current delivery was triggered at the end of each trial after the animal maintained fixation of a central visual target for 1500 ms. Microstimulation consisted of 200 Hz pulse trains (biphasic: cathodal-anodal; pulse width: 200 µs for each phase duration; interpulse interval: 100 µs; peak magnitude: 50 µA) that were either 50 or 300 ms in duration. The short duration stimulation was used to evoke saccadic eye movements, while the long duration stimulation was used to evoke smooth pursuit eye movements (MacAvoy et al. 1991; Gottlieb et al. 1994; Tanaka and Fukushima 1998; Fukushima 2003).

Saccade and pursuit areas of the FEF were identified as such when the respective eye movement type could be observed for at least 50% of microstimulation trials. Consistent with previous studies (Bruce et al. 1985), saccadic eye movements were evoked from the anterior bank of the arcuate sulcus, and their directions were typically opposite to the recorded hemisphere. Specifically, the center of the FEF saccade area (FEFsac) was located approximately 25 mm anterior to the interaural plane and 10–20 mm lateral to the midline, in the anterior bank of the arcuate sulcus. In contrast, smooth pursuit eye movements were evoked from more posterior areas (20–25 mm anterior to the interaural plane and 10–15 mm lateral to the midline), and their directions were typically ipsilateral to the recorded hemisphere, in agreement with previous studies (MacAvoy et al. 1991; Gottlieb et al. 1994; Tanaka and Fukushima 1998; Fukushima 2003). Along some penetrations, near the border between the 2 areas, either saccadic or smooth pursuit eye movements could be evoked alternately as the electrode was lowered into gray matter.

Once the area of interest was mapped, we recorded from any well-isolated neuron encountered within the pursuit area of FEF. To verify whether cells recorded within the microstimulation-defined pursuit area were indeed pursuit-related neurons, some cells were also tested with a smooth pursuit protocol. In this protocol, animals were required to pursue a fixation spot that appeared at the center of the screen and then moved along one of 8 equally spaced directions within the frontoparallel plane (Gottlieb et al. 1994). Viewing distance was again 30 cm. Because pursuit target direction was unpredictable at the beginning of each trial, a larger fixation window of 5° × 5° was enforced during the initiation of pursuit. The fixation window then shrank to 3° × 3° once the animal initiated smooth pursuit, and eye position was required to stay within this window throughout the rest of the trial. Pursuit trials had a duration of 2 s, and motion of the pursuit target followed a Gaussian velocity profile with a peak speed of 10 deg/s (Fig. 10B).

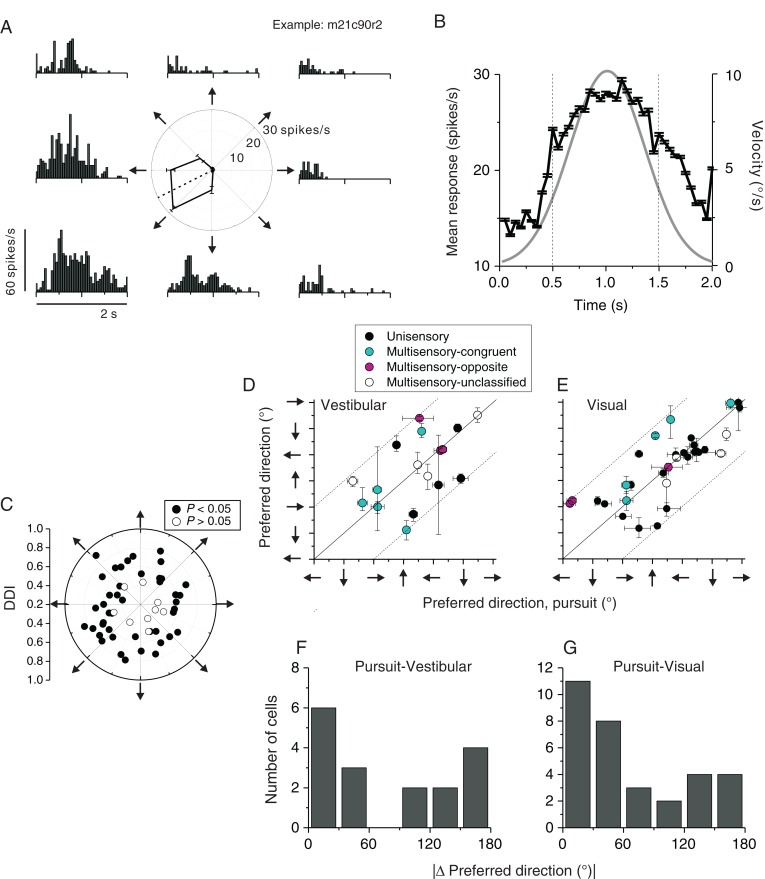

Figure 10.

Pursuit response properties. (A) An example FEFsem neuron's activity during smooth pursuit of a target moving along 8 directions in the frontoparallel plane (indicated by arrows around the polar plot). The tuning curve in the polar plot was based on average firing rate during the middle 1 s of stimulus duration (vertical dashed lines in B). (B) Population PSTH from 54 neurons tested during pursuit. Responses were pooled from the maximum pursuit direction in the vertical plane for each neuron, regardless of whether the neuron's modulation to pursuit was significant or not (P < 0.05, 1-way ANOVA, see below). Target speed followed a Gaussian velocity profile (gray line). (C) Joint distribution of preferred pursuit direction and response strength assessed by DDI. Filled symbols represent neurons with significant pursuit tuning (N = 45, P < 0.05) while open symbols represent insignificant tuning (N = 9, P > 0.05). (D and E) Comparison of the preferred pursuit direction and preferred vestibular heading (D) or the preferred visual heading (E). Error bars represent 95% confidence intervals. Filled black symbols: unisensory cells responding only to vestibular (D) or visual (E) heading stimuli; filled cyan symbols: congruent multisensory neurons (|Δ preferred heading, vestibular-visual| < 60°); filled magenta symbols: opposite multisensory neurons (|Δ preferred heading, vestibular-visual| > 120°); open symbols: unclassified multisensory neurons (|Δ preferred heading, vestibular-visual| > 60° and |Δ preferred heading, vestibular-visual| < 120°). Arrows next to the axes represent pursuit direction, vestibular heading direction, or the simulated heading in the visual condition. For example, a rightward arrow designates pursuit to the right, or heading to the right. (F and G) Distribution of difference in preferred direction, |Δ preferred direction|, between pursuit and vestibular heading (F, n = 17) or visual heading (G, n = 32) conditions.

Data Analysis

Analysis of spike data and statistical tests were performed using MATLAB (MathWorks). Peristimulus time histograms (PSTHs) were constructed for each direction of translation using 25 ms time bins. The temporal modulation of the response for each stimulus direction was considered significant when the spike count distributions from the time bin containing the maximum and/or minimum response differed significantly from the baseline response distribution (−100 to 300 ms after stimulus onset; Wilcoxon matched-pairs test, P < 0.01) (for details, see Chen et al. 2011a, c). We calculated the maximum response of the neuron across stimulus directions for each 25 ms time bin between 0.5 and 2 s after motion onset. This time window was chosen because it contains most of the stimulus-related response modulations (Fig. 3E,F). Due to the Gaussian velocity profile of our stimuli, in which stimulus velocity was negligible during the first 500 ms (Fig. 1B), there is little stimulus-related activity during the first 500 ms of the stimulus presentation.
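For readers unfamiliar with PSTH construction, a minimal Python sketch of binning spike times into 25 ms bins follows. It is an illustration rather than the MATLAB analysis code used here. The function name `psth`, the representation of each trial as a list of spike times in milliseconds, and the 0 to 2000 ms window are assumptions made for the example.

```python
def psth(trials_ms, t_start=0.0, t_stop=2000.0, bin_ms=25.0):
    """Trial-averaged firing rate (spikes/s) in consecutive time bins.

    trials_ms: list of trials, each a list of spike times in ms relative
    to stimulus onset (hypothetical data layout).
    """
    if not trials_ms:
        raise ValueError("at least one trial is required")
    n_bins = int(round((t_stop - t_start) / bin_ms))
    counts = [0] * n_bins
    for spikes in trials_ms:
        for t in spikes:
            if t_start <= t < t_stop:
                counts[int((t - t_start) // bin_ms)] += 1
    # Convert summed counts to spikes/s, averaged across trials.
    scale = 1000.0 / (bin_ms * len(trials_ms))
    return [c * scale for c in counts]


if __name__ == "__main__":
    example = [[10.0, 30.0, 40.0], [12.0, 26.0]]  # two made-up trials
    rates = psth(example)
    print(len(rates), rates[:2])
```

One such histogram would be built per heading and per stimulus condition before the bin-by-bin significance tests described above.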

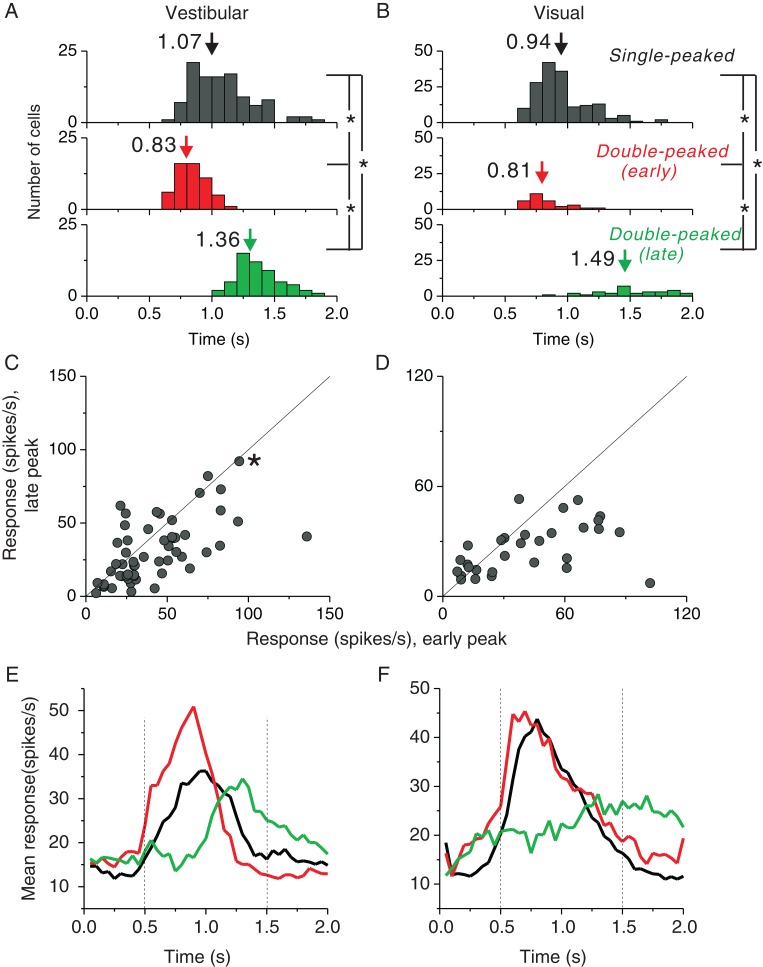

Figure 3.

Comparison of temporal response properties of single-peaked and double-peaked neurons. (A and B) Distributions of peak times for the vestibular and visual conditions, respectively. Top panels: peak times for single-peaked cells; middle panels: early peak times for double-peaked cells; bottom panels: late peak times for double-peaked cells. Arrows denote mean values. (C and D) Comparison of the response strength (averaged within a window of ±200 ms around each peak time) at the early peak versus late peak times for double-peaked cells in the vestibular (n = 55) and visual (n = 30) conditions, respectively. Solid lines show unity slope diagonals. The asterisk in C represents the example cell shown in Figure 2A and B, which is double-peaked only in the vestibular condition. (E and F) Population PSTHs for single-peaked and double-peaked cells in the vestibular (single-peaked cells: n = 106; double-peaked cells: n = 55) and visual (single-peaked cells: n = 164; double-peaked cells: n = 30) conditions, respectively. Black curves: single-peaked cells; red curves: double-peaked cells, with the maximum firing direction defined by PSTHs at the early peak time; green curves: double-peaked cells, with the maximum firing direction defined by PSTHs at the late peak time. Vertical dotted lines illustrate the middle 1 s period used for computing firing rates (spike counts) in the main analyses.

We used ANOVA to assess the statistical significance of direction tuning as a function of time and to evaluate whether there are multiple time periods in which a neuron shows significant directional tuning (for details, see Chen et al. 2011a, c). “Peak times” were defined as the times of local maxima at which distinct epochs of directional tuning were observed, using the following criteria: 1) Significant tuning (based on an ANOVA, P < 0.01) for 5 consecutive time bins centered on the putative local maximum, and 2) a continuous temporal sequence of time bins for which direction tuning is significantly positively correlated with the tuning curve at the peak time. Based on the number of distinct peak times, FEFsem cells were divided into 3 groups: 1) cells with a single temporal epoch of directional selectivity (single-peaked), 2) cells with 2 distinct epochs of directional tuning (double-peaked), and 3) cells that were not significantly direction-selective at any time period (not tuned).

Direction Tuning Curve

The strength of directional tuning was quantified using a direction discrimination index (DDI, Takahashi et al. 2007) given by:

$$\mathrm{DDI} = \frac{R_{\max} - R_{\min}}{R_{\max} - R_{\min} + 2\sqrt{\mathrm{SSE}/(N - M)}} \tag{1}$$

where Rmax and Rmin are the maximum and minimum responses from the 3D tuning function, respectively. SSE is the sum-squared error around the mean response. N is the total number of observations (trials), and M is the number of stimulus directions (M = 26). The DDI compares the difference in firing rate between the preferred and null directions against response variability and quantifies the reliability of a neuron for distinguishing between preferred and null motion directions. Neurons with large response modulations relative to the noise level will have DDI values close to 1, whereas neurons with weak response modulation will have DDI values close to 0.
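A minimal Python sketch of the DDI follows, assuming it takes the form (Rmax − Rmin) / (Rmax − Rmin + 2·sqrt(SSE / (N − M))) with SSE computed around the mean response of each direction. The function name `ddi` and the input layout (one list of single-trial responses per direction) are hypothetical, and this is not the analysis code used in the study.

```python
import math


def ddi(responses_by_direction):
    """Direction discrimination index from single-trial responses.

    responses_by_direction: list with one entry per stimulus direction,
    each entry a list of single-trial firing rates (hypothetical layout).
    """
    means = [sum(r) / len(r) for r in responses_by_direction]
    r_max, r_min = max(means), min(means)
    # Sum-squared error of every trial around its own direction's mean (assumed).
    sse = sum((x - m) ** 2
              for r, m in zip(responses_by_direction, means)
              for x in r)
    n = sum(len(r) for r in responses_by_direction)   # total trials, N
    m_dirs = len(responses_by_direction)              # number of directions, M
    spread = r_max - r_min
    denom = spread + 2.0 * math.sqrt(sse / (n - m_dirs))
    return spread / denom if denom > 0 else 0.0


if __name__ == "__main__":
    print(ddi([[12.0, 8.0], [2.0, 0.0]]))
```

With noiseless responses the index equals 1, and it falls toward 0 as trial-to-trial variability grows relative to the difference between the preferred and null directions.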

Weighted Linear Sum Model

We used a weighted linear summation model to predict responses during cue combination from responses to each single-cue condition (Gu et al. 2008):

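$$R_{\mathrm{combined}} = w_{\mathrm{vestibular}} R_{\mathrm{vestibular}} + w_{\mathrm{visual}} R_{\mathrm{visual}} \tag{2}$$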

where Rvestibular and Rvisual are evoked responses (i.e., baseline activity subtracted) from the single-cue conditions, and wvestibular and wvisual represent weights (constrained between −20 and +20) applied to the vestibular and visual responses, respectively. The weights were determined by minimizing the sum-squared error between predicted and measured responses in the combined condition. The Pearson correlation coefficient (R) from a linear regression fit, which ranged from −1 to 1, was used to assess goodness of fit.
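The fitting step can be sketched in Python as an ordinary least-squares problem in two unknown weights. This is an illustration under stated assumptions, not the authors' procedure: the function names are hypothetical, the model is taken to have no constant term, and the ±20 bound is imposed by simple clipping, which matches a properly constrained fit only when the unconstrained solution already lies within the bounds.

```python
import math


def fit_weights(r_vest, r_vis, r_comb, bound=20.0):
    """Least-squares weights for r_comb ~ w_vest * r_vest + w_vis * r_vis."""
    a11 = sum(v * v for v in r_vest)
    a12 = sum(v * s for v, s in zip(r_vest, r_vis))
    a22 = sum(s * s for s in r_vis)
    b1 = sum(v * c for v, c in zip(r_vest, r_comb))
    b2 = sum(s * c for s, c in zip(r_vis, r_comb))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("single-cue responses are collinear; weights are not unique")
    # Solve the 2x2 normal equations by Cramer's rule.
    w_vest = (b1 * a22 - b2 * a12) / det
    w_vis = (a11 * b2 - a12 * b1) / det
    # Clipping is a simplification of the bounded fit (assumption).
    clip = lambda w: max(-bound, min(bound, w))
    return clip(w_vest), clip(w_vis)


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    vest = [1.0, 2.0, 3.0, 0.0]   # made-up evoked responses
    vis = [0.0, 1.0, 0.0, 2.0]
    comb = [0.5 * a + 1.5 * b for a, b in zip(vest, vis)]
    w_vest, w_vis = fit_weights(vest, vis, comb)
    predicted = [w_vest * a + w_vis * b for a, b in zip(vest, vis)]
    print(w_vest, w_vis, pearson_r(predicted, comb))
```

In practice each input list would hold one baseline-subtracted response per heading, so that the fit uses all 26 directions at once.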

Fisher Information Analysis

To investigate the heading information capacity in FEFsem, we estimated the precision of the average neuron for discriminating heading by computing Fisher information (for more details, see Gu et al. 2010). Briefly, Fisher information (IF) provides an upper limit on the precision with which any unbiased estimator can discriminate between small variations in a variable (x) around a reference value (xref) (Seung and Sompolinsky 1993; Pouget et al. 1998; Abbott and Dayan 1999). For a population of neurons with independent Poisson-like statistics, population Fisher information can be computed from the equation:

$$I_F(x_{\mathrm{ref}}) = \sum_{i=1}^{N} \frac{R_i'(x_{\mathrm{ref}})^2}{\sigma_i^2(x_{\mathrm{ref}})} \tag{3}$$

In this equation, N denotes the number of neurons in the population, $R_i'(x_{\mathrm{ref}})$ denotes the derivative of the tuning curve for the ith neuron at xref, and $\sigma_i^2(x_{\mathrm{ref}})$ is the variance of the response of the ith neuron at xref. To compute tuning curve slope, $R_i'(x_{\mathrm{ref}})$, we used a spline function (1° resolution) to interpolate among the coarsely sampled data points (45° spacing). Since Equation 3 only quantifies Fisher information for discrimination around a specific reference heading, we performed this computation many times using all possible headings (in 1° increments) as reference values. For each different reference heading (xref), $R_i'(x_{\mathrm{ref}})$ was computed based on the responses at its 2 neighboring reference values, that is, xref − 1° and xref + 1°, respectively. The average Fisher information can be computed by summing Fisher information across all neurons and dividing by the number of neurons.
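The computation described above (squared tuning-curve slope divided by response variance, summed over neurons and divided by the number of neurons) can be sketched in Python as follows. This is a simplified illustration and departs from the study's procedure in two labeled ways: the spline interpolation of measured data is replaced by a hypothetical analytic tuning function `tuning`, and the variance is assumed equal to the mean response (Poisson). All parameter values are invented for the example.

```python
import math


def tuning(x_deg, pref_deg, baseline=5.0, amplitude=30.0, kappa=1.5):
    """Hypothetical von Mises-like tuning curve (spikes per 1 s window)."""
    delta = math.radians(x_deg - pref_deg)
    return baseline + amplitude * math.exp(kappa * (math.cos(delta) - 1.0))


def fisher_single(x_ref, pref_deg):
    """Fisher information (deg^-2) of one neuron at reference heading x_ref."""
    # Slope from the two neighboring headings, x_ref - 1 deg and x_ref + 1 deg.
    slope = (tuning(x_ref + 1.0, pref_deg) - tuning(x_ref - 1.0, pref_deg)) / 2.0
    variance = tuning(x_ref, pref_deg)  # Poisson assumption: variance = mean
    return slope ** 2 / variance


def average_fisher(x_ref, prefs_deg):
    """Population Fisher information divided by the number of neurons."""
    return sum(fisher_single(x_ref, p) for p in prefs_deg) / len(prefs_deg)


if __name__ == "__main__":
    prefs = [0.0, 90.0, 180.0, 270.0]  # made-up preferred headings
    for x_ref in range(0, 360, 45):
        print(x_ref, average_fisher(float(x_ref), prefs))
```

A neuron contributes nothing at its own preferred heading, where the slope is zero, and contributes most where its tuning curve is steepest relative to its variance.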

Results

In 3 adult rhesus macaques, we recorded neural activity from 229 single neurons (monkey Z: n = 103; monkey B: n = 96; monkey M: n = 30) located in the pursuit area of FEF (FEFsem). Heading tuning in 3D was measured in response to vestibular cues alone, visual (optic flow) cues alone, or both cues together. Optic flow stimuli included horizontal disparity, motion parallax, and size cues to depth (see Materials and Methods). In each case, 26 headings sampled on a sphere were presented (Fig. 1A). In the vestibular condition, a motion platform was used to translate the animal. To activate pathways driven by the otolith organs, the temporal waveform of the stimulus had a biphasic acceleration profile (peak = 1 m/s²) and a Gaussian velocity profile (Fig. 1B). In the visual condition, the same set of 3D motion trajectories was simulated using optic flow. Finally, in the combined condition, we provided synchronized and congruent vestibular and visual heading cues (Gu et al. 2006). We first describe how single FEFsem neurons are modulated by vestibular and visual cues to heading, and then we examine whether combining vestibular and visual cues can enhance FEFsem heading selectivity.

Single-Cue Responses

Response PSTHs from a typical example neuron with matched vestibular and visual heading tuning are illustrated in Figure 1C. Responses roughly followed the Gaussian velocity profile of the stimulus, rather than the biphasic linear acceleration profile, a property that is commonly encountered in other cortical areas including MSTd (Gu et al. 2006; Takahashi et al. 2007; Fetsch, Rajguru et al. 2010), VIP (Chen et al. 2011a, c), and PIVC (Chen et al. 2010). In line with these previous studies, we quantified heading tuning by counting spikes in the middle 1 s of the trial epoch, when stimulus velocity was large (Fig. 1B, vertical dashed lines). This example neuron showed similar heading selectivity for visual and vestibular stimuli, as illustrated by the color contour maps (Fig. 1C, right). We refer to such neurons as “congruent” cells. The largest response was observed for a leftward and slightly upward heading.

A typical example of an FEFsem neuron with mismatched visual and vestibular heading tuning (referred to as an “opposite” cell) is shown in Figure 1D. This neuron exhibited roughly opposite heading preferences for vestibular and visual inputs: while it preferred an upward and backward motion (top panels) defined by inertial cues, its heading preference was downward and forward when stimulated using optic flow (bottom panels). Note that both example cells shown in Figure 1C and D show a single peak in their response PSTHs and have a single temporal peak of directional tuning. We refer to such neurons, having a single temporal epoch of directional selectivity, as “single-peaked” (Chen et al. 2011a, c).

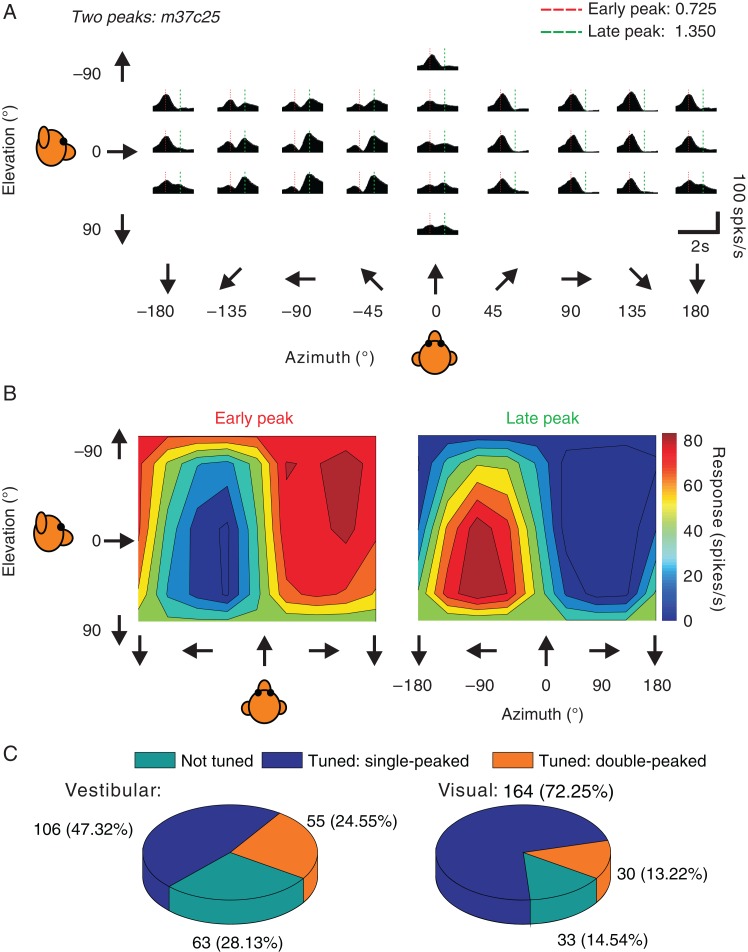

A second, and smaller, group of neurons showed 2 distinct response peaks that occurred at different times and different directions, as illustrated by the vestibular responses from another example cell in Figure 2A. As shown in Figure 2B (left), the early responses of this neuron showed a clear preference for rightward and slightly upward headings, and this peak of selectivity was reached at 725 ms after stimulus onset (Fig. 2A, red dashed lines). In addition, a second period of strong directional selectivity emerged at approximately 1350 ms (Fig. 2A, green dashed lines), with a preference for leftward and slightly downward headings. We refer to such neurons, with 2 distinct temporal periods of directional selectivity, as “double-peaked” (Chen et al. 2011a, c).

Figure 2.

Double-peaked example neuron and summary of response dynamics. (A) Example of an FEFsem neuron with double-peaked heading tuning in the vestibular condition. PSTHs are shown for 26 directions of vestibular translation. The red and green dashed vertical lines indicate the 2 peak times. (B) 3D heading tuning of the same example neuron at each of the 2 (early and late) peak times. Spikes were counted during a 400 ms window around each peak time. (C) Pie charts for the vestibular (left) and visual (right) conditions, showing the proportions of neurons in FEFsem with significant single-peaked tuning and double-peaked tuning, as well as neurons that were not tuned.

Among 229 neurons recorded, 106 (∼46%) cells were single-peaked in the vestibular condition and 164 (∼72%) cells were single-peaked in the visual condition (Fig. 2C, blue). An additional 55 (24%) cells were double-peaked in the vestibular condition, and 30 (13%) cells were double-peaked in the visual condition (Fig. 2C, orange). The remaining cells were not significantly tuned in the visual (n = 33), vestibular (n = 63), or both (n = 14) conditions (Fig. 2C, cyan). The fact that the proportion of double-peaked cells was significantly greater in the vestibular than visual condition (P = 4 × 10⁻⁵, χ² test) is similar to previous findings for areas VIP, MSTd, and VPS (Chen et al. 2011a, c, b) and may be due to stronger acceleration contributions to vestibular responses than visual responses. Consistent with this idea, previous studies of pretectal neurons in birds (Cao et al. 2004) and neurons in macaque area MT (Lisberger and Movshon 1999; Price et al. 2005) have shown that visual acceleration signals are weak or present in only a small fraction of neurons. Another possible explanation for the second peak of activity in double-peaked cells is a rebound excitation following an initially suppressive stimulus. We cannot rule out this possibility, but we note that some double-peaked neurons do not show an initial phase of suppression preceding the second peak of response.
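The comparison of double-peaked proportions can be illustrated with a Pearson χ² test on a 2 × 2 table, sketched below in Python. The text does not state which cells entered the test, so the table used here (single-peaked versus double-peaked counts among tuned cells in each condition) is an assumption, as is the absence of a continuity correction. The function name `chi2_2x2` is hypothetical.

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (chi2, p) with p the upper-tail probability for 1 degree of freedom.
    """
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p


if __name__ == "__main__":
    # Rows: vestibular, visual. Columns: double-peaked, single-peaked (assumed layout).
    chi2, p = chi2_2x2(55, 106, 30, 164)
    print(f"chi2 = {chi2:.2f}, p = {p:.2e}")
```

Restricting the table to tuned cells gives a P value of the same order as the one reported, whereas counting all 229 cells in each condition gives a larger P value; this is an inference from the published counts rather than a description of the authors' test.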

For single-peaked cells, the average peak time was 1.07 s for the vestibular condition (Fig. 3A) and 0.94 s for the visual condition (Fig. 3B). For double-peaked cells, the average times of the first peak of selectivity (vestibular condition: 0.83 s; visual condition: 0.81 s) were significantly earlier than the average peak times of single-peaked cells (P ≪ 0.001, t-test). On the other hand, the average times of the late peak of selectivity (vestibular condition: 1.36 s; visual condition: 1.49 s) were significantly later than the average peak times of single-peaked cells (P < 0.001, t-test). Double-peaked cell responses at the late peak time were generally weaker than those at the early peak time (P < 0.001, paired t-tests) in both vestibular and visual conditions, as illustrated by the scatter plots in Figure 3C and D. Overall, the timing of responses of double-peaked cells is roughly consistent with the 2 peak times being related, at least in part, to stimulus acceleration and deceleration (Chen et al. 2010).

The relatively weaker responses at the late peak time can also be seen in the population PSTHs (Fig. 3E and F, red vs. green curves for double-peaked cells; black curves for single-peaked cells), and this difference in response strength between early and late phases was greater for the visual condition (Fig. 3F). Most of the response variation for single-peaked cells or the early activity of double-peaked cells could be captured by the middle 1 s analysis window we have used (vertical dashed lines, Fig. 3E and F). In the following analysis, we computed mean firing rates based on spikes counted in this middle 1 s window, thus partially discounting the second response peak of double-peaked cells.

Summary of Heading Selectivity in Single-Cue Conditions

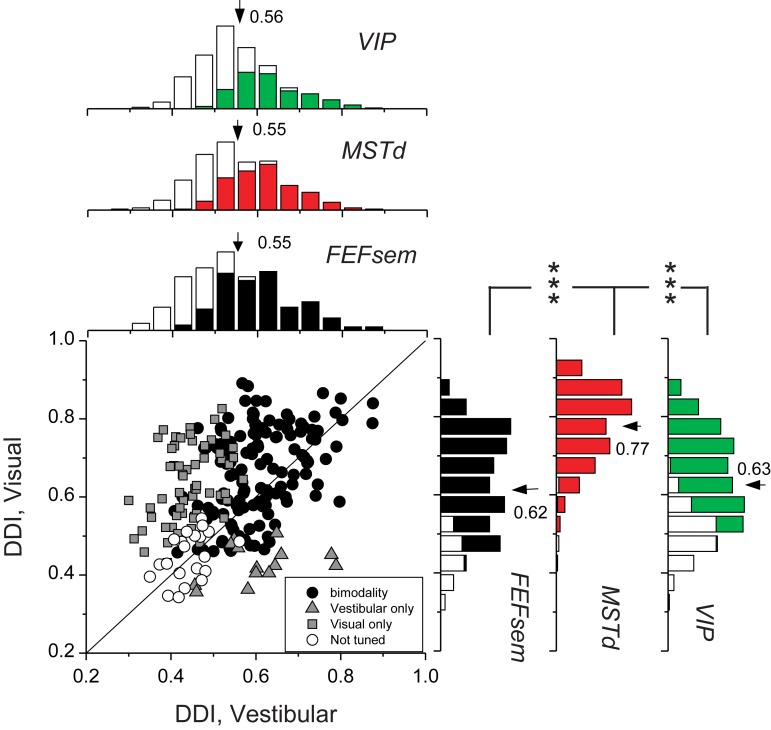

We first assessed the tuning strength of FEFsem neurons by computing a DDI (see Materials and Methods). Figure 4 compares DDI values between the visual and vestibular conditions for all 229 FEFsem neurons recorded. The average visual DDI (0.62 ± 0.0086, mean ± SEM) was significantly greater than the mean vestibular DDI (0.55 ± 0.0075; P = 8.1 × 10⁻¹⁵, paired t-test). We further compared the visual and vestibular DDI values across cortical areas, reanalyzing data recorded previously from MSTd (Gu et al. 2006) and VIP (Chen et al. 2011a) using the same methods (Fig. 4, marginal distributions). In the vestibular condition, the mean DDI value for FEFsem neurons was not significantly different from those for MSTd (mean DDI = 0.55, P = 0.58, t-test) or VIP (mean DDI = 0.56, P = 0.43, t-test) neurons. In the visual condition, the mean DDI of FEFsem neurons was significantly less than that of MSTd neurons (mean DDI = 0.77, P = 1 × 10⁻⁴², t-test), but not significantly different from that for VIP neurons (mean DDI = 0.62, P = 0.91, t-test). Thus, overall, FEFsem neurons showed robust heading selectivity that was comparable to, but not better than, the selectivity of MSTd and VIP neurons.

Figure 4.

Summary of heading selectivity. Selectivity was assessed by computing a DDI for the vestibular and visual conditions. Filled circles (n = 134) represent cells with significant vestibular and visual tuning (P < 0.05, 1-way ANOVA); gray squares (n = 60) indicate cells with significant visual tuning only; gray triangles (n = 14) represent cells with significant vestibular tuning only; open circles (n = 21) denote cells without significant tuning for either stimulus condition. Solid line shows the unity slope diagonal. Marginal distributions of DDI for FEFsem (black) are compared with those for areas MSTd (red, n = 286) and VIP (green, n = 443). Filled bars: significant modulation to heading stimuli (P < 0.05, 1-way ANOVA); open bars: insignificant modulation to heading stimuli (P > 0.05, 1-way ANOVA). Arrows, mean DDI values. Brackets with *** illustrate significant differences between areas (P < 0.001, t-test). In the absence of brackets, the mean DDI was not significantly different between each pair of areas (P > 0.05).

We also explored whether the tuning strength of FEFsem neurons depended on recording location within an area covering approximately 4 by 4 mm. We searched for correlations between vestibular and visual DDI values and the anterior–posterior (AP) or medial–lateral (ML) coordinates of recording locations, but found no consistent trends across animals. For vestibular tuning, 1 animal showed a marginally significant dependence on both AP location (Monkey M: R = −0.4, P = 0.03, N = 30, Spearman rank correlation) and ML location (R = −0.4, P = 0.02), but the other animals showed no significant dependence on either AP or ML location (P > 0.05). For visual tuning, 1 animal showed a significant dependence on AP location, such that visual tuning was stronger for more anterior recording sites (Monkey Z: R = 0.42, P = 1.1E−5, N = 103, Spearman rank correlation). However, there was no significant dependence of visual DDI on either AP or ML location otherwise (P > 0.05). Hence, vestibular and visual tuning strength were roughly uniform within FEFsem.

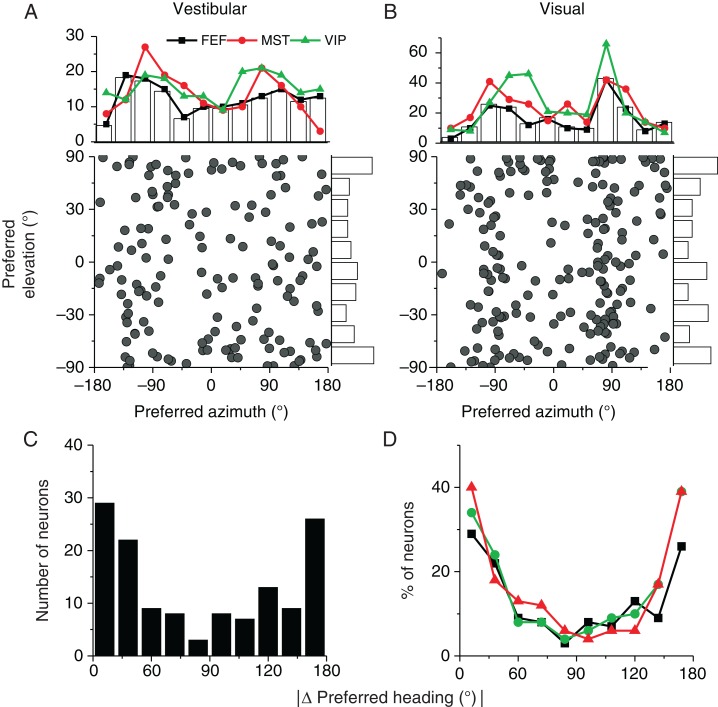

To examine the heading preferences of neurons in FEFsem, we computed the preferred heading using a vector sum algorithm for each neuron with significant heading tuning. Figure 5A and B show distributions of heading preferences for 148 cells that were significantly tuned in the vestibular condition and 194 cells that were significantly tuned in the visual condition (P < 0.05, 1-way ANOVA). Overall, heading preferences were distributed throughout 3D space. In line with findings for areas MSTd (Gu et al. 2006; Takahashi et al. 2007) and VIP (Chen et al. 2011a), more FEFsem neurons preferred leftward or rightward headings than forward or backward headings in the horizontal plane, and this trend toward bimodality was highly significant for the visual condition (Fig. 5B, P ≪ 0.001, uniformity test; Puni ≪ 0.001, Pbi > 0.5, modality test) (see Gu et al. 2006), but only marginally significant for the vestibular condition (P = 0.05, uniformity test, Fig. 5A). Interestingly, the distribution of differences between vestibular and visual heading preferences was significantly bimodal (Fig. 5C, P ≪ 0.001, uniformity test; Puni = 0.04, Pbi = 0.6, modality test), reflecting the presence of roughly equal numbers of congruent and opposite cells. This pattern of results is very similar to that described previously for areas MSTd and VIP (Fig. 5D, see also Gu et al. 2006; Gu et al. 2007; Takahashi et al. 2007; Chen et al. 2011a).
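The vector sum computation and the 3D difference between two heading preferences can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names and the spherical coordinate convention (azimuth measured in the horizontal plane, elevation measured from that plane, both in degrees) are assumptions made for the example.

```python
import numpy as np

def unit_vector(az_deg, el_deg):
    """Cartesian unit vector(s) for azimuth/elevation given in degrees."""
    az, el = np.radians(az_deg), np.radians(el_deg)
    return np.array([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el)])

def preferred_heading(azimuth_deg, elevation_deg, mean_rate):
    """Vector-sum preferred heading: each stimulus direction is a unit
    vector weighted by the mean response in that direction."""
    vectors = unit_vector(np.asarray(azimuth_deg, dtype=float),
                          np.asarray(elevation_deg, dtype=float))
    x, y, z = (vectors * np.asarray(mean_rate, dtype=float)).sum(axis=1)
    pref_az = np.degrees(np.arctan2(y, x)) % 360.0
    pref_el = np.degrees(np.arctan2(z, np.hypot(x, y)))
    return pref_az, pref_el

def heading_difference(az1, el1, az2, el2):
    """Absolute 3D angle (0 to 180 degrees) between two headings."""
    cos_angle = np.dot(unit_vector(az1, el1), unit_vector(az2, el2))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))
```

With this measure, a difference near 0° corresponds to matched vestibular and visual preferences and a difference near 180° to opposite preferences.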

Figure 5.

Heading preferences in FEFsem. (A and B) Scatter plot and marginal distributions of azimuth (abscissa) and elevation (ordinate) coordinates of heading preferences in the vestibular (n = 148) and visual (n = 194) conditions, respectively. Marginal bar graphs and black curves show distributions from FEFsem, while red and green curves show data from MSTd (vestibular, n = 162; visual, n = 280) and VIP (vestibular, n = 187; visual, n = 302), respectively. (C) Distribution of the absolute difference in 3D heading preferences between vestibular and visual conditions for FEFsem (n = 134). (D) Comparison of the absolute difference in vestibular and visual heading preferences among 3 cortical areas: FEFsem (black, n = 134), MSTd (red, n = 161), and VIP (green, n = 159). Since different numbers of neurons were recorded in each area, the ordinate represents percentage of cells. In C and D, only cells with significant heading tuning for both the vestibular and visual conditions were included.

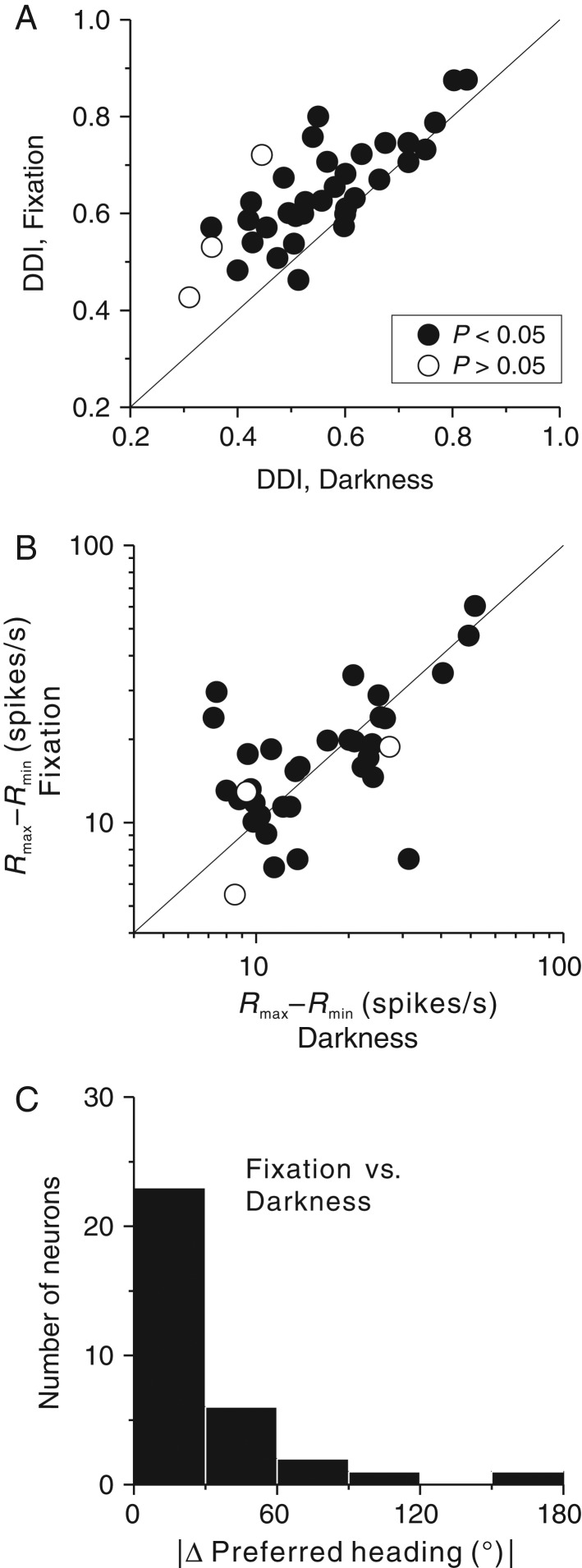

Vestibular Responses in Darkness

It is known from previous studies that FEFsem neurons show vestibular response modulations to translation in total darkness (Akao et al. 2009). In the present experiments, although no visual motion stimulus was presented in the vestibular condition, the animal was required to maintain fixation on a small spot at the center of the display. It is thus possible that “vestibular” responses could result from retinal slip of the fixation point or the projector's faint background texture, due to incompletely suppressed eye movements (Chowdhury et al. 2009). Indeed, small (<2 degrees/s) eye movements were present, particularly during lateral translation (see Supplementary Fig. 1). To verify that these small residual eye movements did not have a major effect on vestibular responses, a subpopulation of 36 FEFsem neurons with significant vestibular heading tuning was also tested in total darkness after turning off the projector. The majority of cells (33/36 = 91.7%) remained significantly tuned in the dark, although their heading selectivity was somewhat reduced (mean DDIfixation = 0.64, mean DDIdarkness = 0.55, P = 1.8E−7, paired t-test, Fig. 6A). However, the peak to trough response modulation was not significantly reduced in the dark (P = 0.7, paired t-test, Fig. 6B), suggesting that the reduction in DDI was due to increased response variability in darkness, and this may have resulted from unconstrained eye movements. For the 33 neurons with significant tuning in total darkness, vestibular heading preferences remained largely unchanged, compared with our standard vestibular condition (median Δ preferred heading = 18.1°, Fig. 6C). These data suggest that the non-visual tuning characterized in Figures 3–5 was predominantly extra-retinal in origin and did not simply result from retinal slip artifacts.

Figure 6.

Vestibular heading selectivity in darkness. (A) Comparison of DDI values for FEFsem neurons tested in darkness (abscissa) and during the standard vestibular condition (involving fixation on a head-fixed target, ordinate). All cells were significantly tuned in the fixation condition (n = 36). Filled circles represent cells maintaining significant heading tuning in the dark (n = 33), and open circles represent cells that lose their tuning in darkness (n = 3). (B) Comparison of peak to trough response modulation of FEFsem neurons during fixation and darkness conditions; same format as in A. (C) Distribution of the absolute difference in preferred heading between darkness and fixation conditions. Only cells tuned in both conditions are included in this comparison (n = 33).

Heading Selectivity During Cue Combination

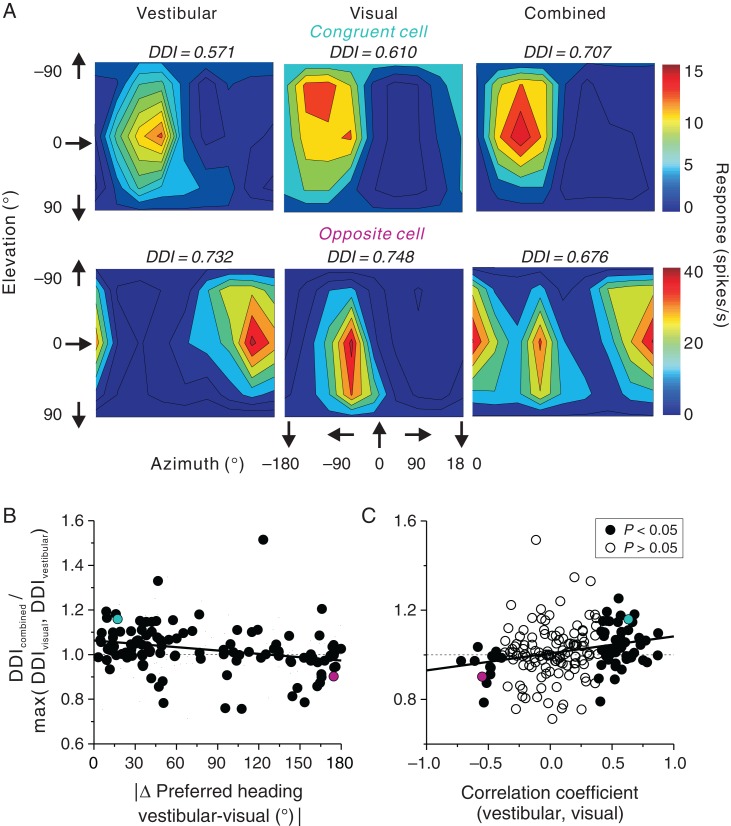

As noted above, more than half of FEFsem neurons (134/229 = 58.5%) showed significant heading selectivity in both the vestibular and visual conditions, suggesting that FEFsem neurons may contribute to integrating visual and vestibular heading signals during self-motion. To explore how heading selectivity changed during cue combination, we tested 186 FEFsem neurons with synchronized and congruent vestibular and optic flow stimuli. Figure 7 shows data from 2 example cells that were tested with all 3 stimuli. The first example neuron, a congruent cell, preferred leftward motion in both vestibular and visual conditions (Fig. 7A, top panels). In the combined condition, the heading preference remained the same while heading selectivity was enhanced by 16% (DDIcombined = 0.71) compared with the maximum single-cue DDI value (DDIvestibular = 0.57, DDIvisual = 0.61). The second example FEFsem neuron, an opposite cell, preferred rightward/backward headings in the vestibular condition and leftward/forward headings in the visual condition. During combined stimulation, this cell showed 2 peaks in its heading tuning profile (Fig. 7A, bottom panels), which resulted in weaker heading selectivity compared with the single-cue conditions (DDIcombined = 0.68, DDIvestibular = 0.73, DDIvisual = 0.75).

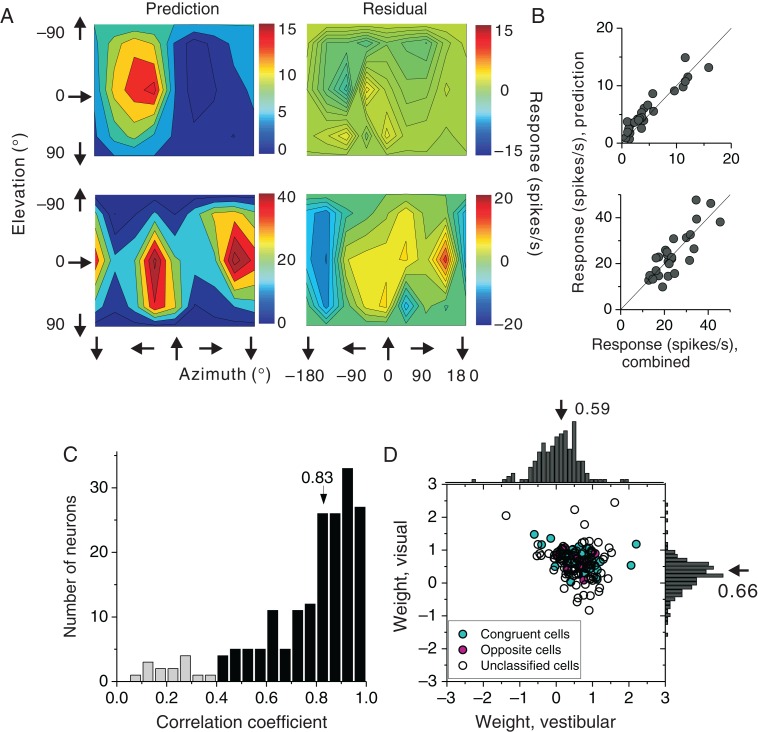

Figure 7.

Vestibular-visual integration in area FEFsem. (A) Contour maps of 3D heading tuning for 2 example neurons (top: congruent cell; bottom: opposite cell) during the vestibular, visual, and combined conditions. (B) Ratio of the combined DDI over the maximum of the single-cue DDI values is plotted as a function of the absolute difference in heading preference between visual and vestibular conditions (n = 113, only neurons with significant tuning are included). (C) Ratio of the combined DDI over the maximum of the single-cue DDI values is plotted as a function of the correlation coefficient between visual and vestibular tuning curves (n = 186). Filled circles represent significant congruent cells (n = 55, r > 0, P < 0.05, Pearson correlation coefficient) or opposite cells (n = 13, r < 0, P < 0.05) and open circles represent cells not assigned to either group (n = 118, P > 0.05). The cyan and magenta circles represent the neurons shown in A. Solid lines represent results of a type II linear regression. Notice that positive values on the x-axis in C and small values on the x-axis in B both represent congruent cells; thus, the opposite trends in B and C are expected.

We summarized the effect of cue combination on selectivity by computing the ratio of DDIcombined to the maximum of the single-cue DDI values (DDIvestibular, DDIvisual) and by plotting this ratio as a function of visual/vestibular congruency. Congruency was quantified in 2 ways. First, congruency was defined as the difference in heading preference between the vestibular and visual tuning profiles (Fig. 7B). There was a significant trend (R = −0.26, P = 0.003, Spearman rank correlation) such that neurons with congruent vestibular and visual heading preferences tended to exhibit enhanced heading selectivity in the combined condition, whereas neurons with mismatched vestibular and visual heading preferences did not. This comparison only included cells with significant heading tuning for both visual and vestibular stimuli. Second, congruency was defined as the correlation coefficient between visual and vestibular tuning profiles (Fig. 7C). The advantage of this congruency measure is that it can be computed for all 186 neurons (flat tuning curves would produce correlation coefficients close to 0). Again, there was a significant correlation (R = 0.23, P = 0.001, Spearman rank correlation), indicating that neurons with similar vestibular and visual tuning tended to show increased heading selectivity in the combined condition, whereas neurons with dissimilar tuning tended to have reduced selectivity. Specifically, the average ratio of DDIcombined/max(DDIvestibular, DDIvisual) for the 55 congruent neurons in Figure 7C was significantly >1 (mean = 1.047, P = 1.5E−4, t-test), whereas the average ratio for the 13 opposite neurons was significantly <1 (mean = 0.948, P = 0.017, t-test). Thus, as found previously for MSTd (Gu et al. 2008) and VIP (Chen et al. 2013a), congruent and opposite FEFsem neurons showed improved and impaired heading selectivity, respectively, during cue combination.
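The two quantities used in this analysis can be written compactly. The sketch below is illustrative only: the function names are hypothetical, and the tuning profiles are assumed to be vectors of mean responses sampled at the same set of heading directions in both conditions.

```python
import numpy as np

def combination_ratio(ddi_combined, ddi_vestibular, ddi_visual):
    """Combined DDI relative to the better of the two single-cue DDIs.
    Values above 1 indicate enhanced selectivity during cue combination."""
    return ddi_combined / max(ddi_vestibular, ddi_visual)

def tuning_congruency(vestibular_tuning, visual_tuning):
    """Pearson correlation between the two tuning profiles.
    Positive values indicate congruent tuning, negative values opposite."""
    return float(np.corrcoef(vestibular_tuning, visual_tuning)[0, 1])
```

Applied to the example neurons of Figure 7A, the ratio is 0.71/0.61 ≈ 1.16 for the congruent cell (the 16% enhancement noted earlier) and 0.68/0.75 ≈ 0.91 for the opposite cell.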

How are responses from single-cue conditions integrated into the combined response? It has been shown previously that MSTd and VIP neurons follow a simple linear, but sub-additive, summation rule (Gu et al. 2006; Gu et al. 2008; Morgan et al. 2008; Chen et al. 2011a). We applied the same approach to FEFsem neurons to explore the vestibular and visual weights during cue combination (see Materials and Methods). We illustrate this analysis in Figure 8A for the 2 example neurons from Figure 7A. The predicted responses from the linear model are strongly correlated with responses measured in the combined condition (Fig. 8B, R = 0.92 and 0.75 for the 2 example neurons, P < 0.001, Spearman rank correlation). Across the population (Fig. 8C), the median correlation coefficient was 0.83, and 170 out of 184 (92.4%) cells showed significant correlations (P < 0.05, Pearson correlation coefficient), indicating that the linear weighted-sum model generally predicted the combined responses quite successfully. In addition, the quality of model predictions was not significantly different between congruent (median R = 0.912) and opposite (median R = 0.916) cells (P = 0.45, Kolmogorov–Smirnov test).
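A weighted linear summation fit of this kind can be sketched with ordinary least squares. The model form assumed here, combined response = w_vestibular × vestibular response + w_visual × visual response + constant, follows the description in the text, but the precise fitting procedure is specified in Materials and Methods, so the function below (including its name and return values) is an assumption for illustration.

```python
import numpy as np

def fit_weighted_sum(r_vestibular, r_visual, r_combined):
    """Least-squares fit of r_combined ~ w_ves*r_ves + w_vis*r_vis + c.

    Each input holds mean responses across the same set of headings.
    Returns (w_ves, w_vis, c, r), where r is the Pearson correlation
    between predicted and measured combined responses.
    """
    r_ves = np.asarray(r_vestibular, dtype=float).ravel()
    r_vis = np.asarray(r_visual, dtype=float).ravel()
    r_comb = np.asarray(r_combined, dtype=float).ravel()
    # Design matrix: one column per cue plus a constant offset term.
    design = np.column_stack([r_ves, r_vis, np.ones_like(r_ves)])
    coef, *_ = np.linalg.lstsq(design, r_comb, rcond=None)
    predicted = design @ coef
    r = float(np.corrcoef(predicted, r_comb)[0, 1])
    return float(coef[0]), float(coef[1]), float(coef[2]), r
```

In this formulation, weights that are each below 1 correspond to sub-additive combination, and the ratio of the two weights indicates which cue dominates the combined response.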

Figure 8.

Results from weighted linear summation model. (A) Color contour maps showing model fits for the same neurons as in Figure 7 (Prediction, left panels) and the corresponding errors of the fit (Residuals, right panels). (B) Comparison of measured and predicted responses for the combined condition. Solid lines show unity slope diagonals. (C) Distributions of correlation coefficients between measured and predicted responses (n = 184, 2 cells with negative correlation coefficients are not shown). Black bars represent fits with significant correlation coefficients (P < 0.05, Pearson correlation) (n = 170) and light gray bars represent non-significant fits (P > 0.05). Arrow represents the median value for all cases. (D) Comparison of vestibular and visual weights for FEFsem cells with significant model fits in C (n = 170). Cyan: congruent cells, n = 54; magenta: opposite cells, n = 9; open circles: unclassified cells, n = 107. Arrows: mean values.

We then examined the cue combination weights for 170 cells that were well fit by the linear summation model (Fig. 8D). Overall, there was a significant negative correlation between vestibular and visual weights (R = −0.23, P = 0.002, Spearman rank correlation). This trend is similar to that reported previously in area MSTd (Gu et al. 2008), indicating that cortical neurons show combination rules that vary continuously from visual dominance to vestibular dominance. The average vestibular weight was 0.59 ± 0.04 (mean ± SEM) and the average visual weight was 0.66 ± 0.03 (mean ± SEM). These data demonstrate sub-additive linear combination of visual and vestibular responses. Interestingly, the weights assigned to each cue were roughly equal in magnitude (P = 0.24, paired t-test). Notably, matched visual/vestibular weights have also been reported for area VIP (Chen et al. 2011a), in contrast to the visual dominance observed in area MSTd (Gu et al. 2006; Gu et al. 2008; Morgan et al. 2008; Fetsch et al. 2013).

Fisher Information of Heading Signals in FEFsem

To assess how visual and vestibular heading sensitivity in FEFsem compares with that in MSTd and VIP, we used Fisher information (Seung and Sompolinsky 1993; Pouget et al. 1998; Abbott and Dayan 1999) to estimate the maximum amount of heading information that an unbiased decoder can extract from the population of FEFsem neurons (see Materials and Methods). For simplicity, this analysis was restricted to headings in the horizontal plane, as done previously for MSTd (Gu et al. 2010). Figure 9A illustrates the analysis for an example cell that prefers leftward motion (approximately −90°; Fig. 9A, black curve) and has the steepest slope of its tuning curve around straight forward. As a result, the computed Fisher information peaked at approximately 0° (Fig. 9A, orange curve).
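The relationship between tuning-curve slope and Fisher information can be illustrated with a short sketch. It assumes the standard single-neuron expression for independent noise, squared slope of the tuning curve divided by response variance, summed across neurons for a population. How the variance was estimated is described in Materials and Methods; supplying it as an explicit argument, as well as the function names, are assumptions of this example.

```python
import numpy as np

def fisher_information(theta_deg, rate, variance):
    """Fisher information of one neuron at each sampled heading.

    theta_deg: headings in degrees (monotonically increasing).
    rate:      mean firing rate at each heading (tuning curve).
    variance:  response variance at each heading.
    Returns information in units of 1/rad^2.
    """
    theta = np.radians(np.asarray(theta_deg, dtype=float))
    rate = np.asarray(rate, dtype=float)
    slope = np.gradient(rate, theta)      # numerical derivative d(rate)/d(theta)
    return slope ** 2 / np.asarray(variance, dtype=float)

def population_fisher_information(per_neuron_information):
    """Sum across neurons (rows), assuming independent noise."""
    return np.asarray(per_neuron_information, dtype=float).sum(axis=0)
```

For a broadly tuned cell that prefers −90°, the slope is near zero at the preferred heading and steepest about 90° away, so the information is lowest at the peak of the tuning curve and highest around straight forward, as described for the example neuron.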

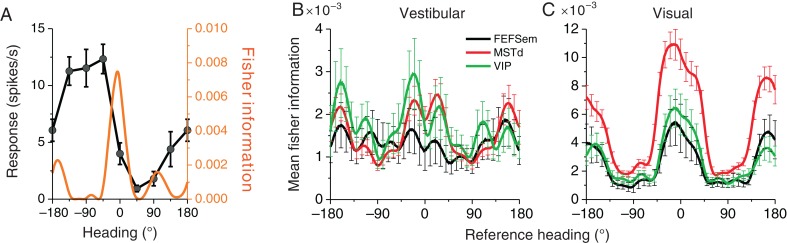

Figure 9.

Fisher information analysis. (A) Example tuning curve in the horizontal plane from an example FEFsem neuron (black) and the corresponding Fisher information (orange). (B and C) Average Fisher information in FEFsem, plotted as a function of reference heading direction (black, n = 104 and 167), is compared with the corresponding data from MSTd (red, n = 556 and 992) and VIP (green, n = 117 and 238) in the vestibular and visual conditions, respectively. Error bars represent 95% confidence intervals. Only neurons that are significantly tuned (ANOVA P < 0.05) in the horizontal plane in the vestibular or visual condition were included in this analysis.

The average Fisher information across all FEFsem neurons with significant heading tuning in the horizontal plane for the vestibular and visual conditions is shown in Figure 9B and C, respectively. The overall shape of the average Fisher information curve was similar across all 3 areas, with peaks around the straight-forward reference heading and troughs around leftward and rightward headings. This pattern was clearest for the visual condition (Fig. 9C), but can also be observed for the vestibular condition (Fig. 9B). This pattern arises because a majority of neurons have broad heading tuning and heading preferences that cluster around lateral motion (Fig. 5A and B, top marginal distributions). Indeed, the weaker peak in the Fisher information profile for FEFsem neurons in the vestibular condition, compared with MSTd and VIP, is likely explained by the less clearly bimodal distribution of vestibular heading preferences in FEFsem relative to the other areas (marginal distributions in Fig. 5A). For the vestibular condition, the overall magnitudes of Fisher information were similar across brain areas (Fig. 9B). In contrast, for the visual condition, the overall magnitude of Fisher information is similar for FEFsem and VIP (overlapping 95% confidence intervals), but substantially greater in MSTd (Fig. 9C, P < 0.05, non-overlapping 95% confidence intervals). Overall, these data show that FEFsem neurons carry robust heading information based on both vestibular and visual cues, similar to MSTd and VIP, except for the fact that MSTd shows greater discriminability of heading based on optic flow. The latter finding may reflect the fact that MSTd is more closely connected to other visual motion processing areas (Felleman and Van Essen 1991).

Pursuit Response Properties of FEFsem Neurons

Previous studies have shown that FEFsem neurons respond during smooth ocular pursuit of a moving target and have suggested that these signals could be related to gaze (Gottlieb et al. 1994; Tanaka and Fukushima 1998; Fukushima et al. 2000; Tanaka and Lisberger 2002). We recorded responses of a subpopulation of FEFsem neurons (N = 54) during smooth pursuit of a target moving along 8 directions in the frontoparallel (i.e., vertical) plane. As illustrated for an example neuron in Figure 10A, responses during pursuit were often directionally selective. This cell fired maximally during pursuit of a leftward and downward moving target.

The population PSTH across all 54 neurons (each cell contributing responses along its preferred direction) showed robust responses particularly during the middle 1 s of the stimulus period (peak of stimulus velocity; Fig. 10B). We used mean firing rate during this time period to compute tuning curves for pursuit direction and DDI values. The majority of neurons (45/54 = 83.3%) were significantly tuned during smooth pursuit (P < 0.05, 1-way ANOVA, Fig. 10C), and the average DDI value was 0.60 ± 0.02 (mean ± SEM, N = 54). Thus, most neurons within the pursuit region of FEF identified by microstimulation were also tuned to pursuit.

We tested whether the preferred direction for pursuit was correlated with the vestibular or visual heading preference within the frontoparallel plane. Since vestibular and visual heading data were collected in 3D space (Fig. 1A), we extracted data corresponding to headings in the frontoparallel plane and computed the heading preference within this plane by vector sum, such that it could be compared with the preferred direction of pursuit in the same plane. This comparison was possible for 17 neurons that were significantly tuned for both pursuit and vestibular heading stimuli (Fig. 10D and F) and for 32 neurons that were significantly tuned for both pursuit and visual heading stimuli (Fig. 10E and G). We found no significant correlation between the preferred direction for pursuit and the vestibular/visual heading preference (pursuit vs. vestibular: r = −0.15, P = 0.11; pursuit vs. visual: r = 0.04, P = 0.35, circular correlation coefficients). This is further illustrated by the broad distribution of differences in preferred direction (|Δ preferred direction|) between pursuit and vestibular or visual heading (Fig. 10F and G). The distribution of |Δ preferred direction| was not significantly different from uniform for pursuit versus vestibular (Fig. 10F, P = 0.117, n = 17, uniformity test) and was only marginally different from uniform for pursuit versus visual (Fig. 10G, P = 0.034, n = 32, uniformity test). A further modality test did not reveal a significantly bimodal distribution (Puni = 0.15, Pbi = 0.89, modality test), but is limited by the small sample size. Thus, although there was a tendency for the preferred pursuit direction to be either congruent or opposite to the preferred visual heading, it was not significant.

Finally, we also examined whether the relationship between the preferred pursuit direction and preferred vestibular/visual heading depended on the congruency of vestibular/visual tuning. Due to the small data sample, we could only examine this relationship for 12 neurons including 5 congruent cells (|Δ preferred heading, vestibular-visual|<60°, cyan symbols in Fig. 10D and E), 3 opposite cells (|Δ preferred heading, vestibular-visual|>120°, magenta symbols in Fig. 10D and E), and 4 unclassified cells (60°<|Δ preferred heading, vestibular-visual|<120°, open symbols in Fig. 10D and E). Even for these few congruent cells, we could see that the preferred pursuit direction could either match (n = 3) or mismatch (n = 2) the preferred vestibular/visual heading. Similarly, for the few opposite cells, the preferred pursuit direction either matched the vestibular heading preference (n = 1) or the visual heading preference (n = 2).
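The congruency grouping used here, together with the within-plane |Δ preferred direction| measure, can be expressed as a short sketch. The 60° and 120° thresholds come from the text; the function names are hypothetical, and assigning values of exactly 60° or 120° to the unclassified group is an assumption, since the text does not specify the boundary cases.

```python
def circular_difference(angle_a_deg, angle_b_deg):
    """Absolute difference between two directions in a plane,
    wrapped to the range 0 to 180 degrees."""
    return abs((angle_a_deg - angle_b_deg + 180.0) % 360.0 - 180.0)

def classify_congruency(delta_preferred_heading_deg):
    """Group a cell by its |vestibular - visual| heading difference."""
    if delta_preferred_heading_deg < 60.0:
        return "congruent"
    if delta_preferred_heading_deg > 120.0:
        return "opposite"
    return "unclassified"  # includes the boundary values by assumption
```

For instance, preferred directions of 350° and 10° differ by 20° after wrapping, which would fall in the congruent group.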

In summary, these results show that the majority of FEFsem cells that we studied were pursuit neurons. These neurons showed robust responses during smooth pursuit of a moving target in the frontoparallel plane, but our limited sample of neurons did not reveal a consistent relationship between the preferred pursuit direction and the vestibular/visual heading preferences.

Discussion

We have used a virtual reality system (Gu et al. 2006) to systematically characterize the activity of single FEFsem neurons in response to 3D heading stimuli defined by vestibular inertial motion and visual optic flow cues. We discovered that many FEFsem neurons are significantly tuned for heading in 3D based on both sensory modalities. Response strength and overall tuning properties were quite similar to those found in areas MSTd and VIP. Specifically, we found that: 1) visual and vestibular cues in all areas are combined based on a linear, sub-additive scheme; 2) all areas show a tendency for heading preferences to be clustered around lateral motion directions and for peak heading discriminability to occur around forward headings, with the latter property being clearest for the visual condition; 3) all areas show roughly equal proportions of congruent and opposite cells, with selectivity being enhanced by cue combination for congruent cells but not opposite cells. Our findings support the hypothesis that FEFsem, like MSTd and VIP, may contribute to multisensory heading perception.

Vestibular Heading Signals in FEFsem

The present results show that nearly two-thirds of FEFsem neurons are tuned to linear translation in 3D space based on inertial motion signals. Although more variable, these responses to vestibular stimulation persisted during motion in darkness, thus eliminating the possibility that they arise simply because of retinal slip due to residual eye movements. Vestibular response modulation during sinusoidal translation and rotation in darkness has been reported previously in this area (Fukushima et al. 2005, 2006; Akao et al. 2007, 2009; Fujiwara et al. 2009). The vestibular signals in FEFsem originate from at least 2 sources: 1) directly from the thalamus (Ebata et al. 2004) and 2) indirectly from other cortical areas, including VIP, 3a, VPS, and PIVC, where clear vestibular response modulation has been identified (Tian and Lynch 1996; Stanton et al. 2005; Lynch and Tian 2006). A more detailed analysis of the spatial-temporal properties of vestibular responses in FEFsem, compared with other areas, may help clarify the flow of vestibular signals in the brain and is the topic of an ongoing study.

The vestibular signals reported previously in FEFsem have been implicated in oculomotor functions, including maintaining stability of objects on the fovea during rotation/translation of the body in space (see reviews by Fukushima 2003; Ilg and Thier 2008; Fukushima et al. 2011). Evidence supporting this hypothesis arises from the fact that most neurons had matched direction preferences for pursuit and VOR suppression signals elicited by whole-body rotation, although some neurons with mismatched preferences were also found (Fukushima et al. 2000; Akao et al. 2007). Our finding of vestibular heading tuning in FEFsem is not surprising, as vestibular signals originating from the otolith organs have been reported previously for the caudal pursuit area of FEF (Akao et al. 2009). Although the response modulation of some neurons in that study also exhibited gaze-velocity properties, which was similar to the rotation signals observed in the majority of FEFsem neurons, the relationship between preferred pursuit direction and translational VOR-cancellation direction was not clear, likely due to a small sample size (N = 14 neurons). In the present study, we also found FEFsem neurons with either matched or mismatched preferred directions for pursuit and frontoparallel heading. However, our small sample size (N = 17) limits the power of the quantitative comparisons that we can make between pursuit and heading preferences.

The convergence of vestibular signals with optic flow responses that we have observed suggests that FEFsem may also contribute to multisensory processing of self-motion cues, in addition to oculomotor control. In fact, tuning strength, average Fisher information, and temporal response modulation properties are all broadly similar to those reported previously for areas MSTd and VIP (Gu et al. 2006, 2010; Chen et al. 2011a). A role for FEFsem in both oculomotor and heading estimation functions may not be surprising, as these 2 functions naturally co-occur during navigation (see review by Britten 2008).

Visual Heading Signals in FEFsem and Relationship to Vestibular Signals

It was shown previously that FEFsem neurons carry visual motion signals by responding to moving targets (for reviews, see MacAvoy et al. 1991; Krauzlis 2004). A recent study also showed that FEFsem neurons respond to looming visual stimuli (Fujiwara et al. 2014), although this study did not directly assess visual heading tuning. Anatomical studies have revealed that FEFsem receives direct inputs from several cortical areas involved in visual-motion processing, including areas MST, FST, MT, and VIP (Boussaoud et al. 1990; Maioli et al. 1998; Stanton et al. 2005; for review, see Lynch and Tian 2006).

In the current study, we showed that more than two-thirds of FEFsem neurons are also tuned to optic flow patterns that are typically experienced during self-motion. Such responsiveness in FEFsem could propagate directly from extrastriate visual cortical areas, including MSTd and VIP, that are known to be selective for optic flow (Tanaka et al. 1986; Tanaka et al. 1989; Duffy and Wurtz 1991, 1995; Britten and van Wezel 1998; Vaina 1998; Bremmer et al. 2002; Britten and Van Wezel 2002; Schlack et al. 2002; Gu et al. 2006, 2008; Chen et al. 2013a; Fetsch et al. 2013). Indeed, our analyses have revealed that tuning strength and Fisher information in FEFsem are both comparable to analogous measurements from VIP (Chen et al. 2011a), albeit a bit weaker than corresponding observations from area MSTd (Gu et al. 2006, 2010).

Our data also indicate that optic flow signals in FEFsem interact with vestibular signals in a congruency-dependent manner (Fig. 7). Specifically, congruent neurons with matched visual and vestibular heading preferences tend to show enhanced heading selectivity in the combined condition. Psychophysical studies with both humans and non-human primates have demonstrated that heading discrimination becomes more precise during integration of vestibular and visual signals (Gu et al. 2008; Butler et al. 2010, 2011; Fetsch et al. 2012). The congruent cells identified in FEFsem may thus provide a potential neural substrate for visual-vestibular cue integration, as previously suggested for MSTd (Gu et al. 2008; Fetsch et al. 2012) and VIP (Chen et al. 2013a). Further experiments will be required to determine whether inactivation of FEFsem impairs heading judgments.

In contrast to congruent cells, opposite cells with mismatched vestibular and visual heading preference were also identified. These cells might not be useful for heading perception, because their heading selectivity will be reduced during navigation through a stationary environment, when both visual and vestibular cues are present together. We have suggested previously that the presence of these 2 cell types may imply distinct and potentially complementary functions. The differential involvement of congruent and opposite cells in heading perception was indicated previously in a fine heading discrimination task in which the monkeys actively judged their heading while neuronal activity was monitored simultaneously (Gu et al. 2008; Chen et al. 2013a). We found that congruent cells in MSTd and VIP were significantly correlated with monkeys' perceptual decisions on a trial-by-trial basis, whereas opposite cells were not. Opposite cells may play other roles in behavior, such as helping to distinguish self-motion from object motion.

As in MSTd (Gu et al. 2006; Takahashi et al. 2007) and VIP (Chen et al. 2011a), the clustering of vestibular and visual heading preferences around leftward and rightward directions makes FEFsem neurons sensitive to small changes in heading around straightforward, as quantified by the Fisher information analysis (Figs 5 and 9). Heading information capacity is greatest for reference headings along the forward–backward axis, and smallest for reference headings along the lateral axis (see also Gu et al. 2010). However, probably due to a relatively smaller sample size, the bimodal distribution of vestibular heading preferences in FEFsem was only marginally significant (Fig. 5A), leading to a less clear bimodal profile of population Fisher information (Fig. 9B).

In addition to many similarities across the 3 areas, we also found some notable differences. While vestibular heading selectivity is roughly equally strong across the 3 areas, visual selectivity is substantially stronger in MSTd than in FEFsem or VIP (Fig. 4). These differences among areas may be accounted for by their respective locations within the hierarchy of visual processing (Felleman and Van Essen 1991). Area MSTd receives strong projections from area MT, which is known to process visual motion signals (Van Essen et al. 1981; Maunsell and van Essen 1983, 1987). In contrast, FEFsem and VIP are thought to lie closer to motor and decision-related circuits (Maunsell and van Essen 1983; Bruce et al. 1985; Maunsell and Van Essen 1987; Felleman and Van Essen 1991; Gottlieb et al. 1993; Gottlieb et al. 1994; Lewis and Van Essen 2000; Cooke et al. 2003). These differences in visual heading selectivity between areas may imply that, during cue combination, visual responses would be more dominant in area MSTd than in VIP or FEFsem. This is, indeed, suggested by fits of the weighted linear sum model, for which the vestibular and visual weights are roughly matched in FEFsem and VIP, whereas the average visual weight is greater than the vestibular weight in MSTd (Gu et al. 2006, 2008; Chen et al. 2011a; but see Chen et al. 2013a). These results add to a body of work that has revealed a continuous range of modality dominance across areas, from visual dominance in MSTd (Gu et al. 2006), to roughly equal balance of visual and vestibular signals in VIP (Chen et al. 2011a) and FEFsem (Fig. 8D), to partial vestibular dominance in VPS (Chen et al. 2011b) and complete vestibular dominance in PIVC (Chen et al. 2010).

In summary, our results show that vestibular and visual motion signals in FEFsem are strong and sufficiently reliable for this area to potentially contribute to heading perception. Whether this is indeed the case requires further study. Future experiments need to more directly examine whether these signals contribute functionally to heading perception, as well as determine whether FEFsem represents heading in different reference frames than have been found to operate in MSTd (Fetsch et al. 2007) and VIP (Chen et al. 2013b, c). The fact that multiple areas, including MSTd, VIP, and FEFsem, carry similar heading signals may suggest that precise and accurate heading estimation is not based on a single area, but rather a network. Still, it remains unclear why the brain would employ multiple areas for heading processing, and it is important to interrogate the function of these areas using causal manipulations (as previously done by Gu et al. 2012 in MSTd). Thus, further experiments must address both the homogeneous and heterogeneous functions of each of these nodes in the network, including their functional specializations. For example, compared with MSTd and VIP, FEFsem contains more motor-related responses, because electrical stimulation directly evokes smooth pursuit eye movements (MacAvoy et al. 1991; Gottlieb et al. 1993; Gottlieb et al. 1994; Tanaka and Lisberger 2002). It is thus possible that FEFsem plays a prominent role in coordinating volitional eye movements during self-motion.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This work was supported by grants from the National Institutes of Health (EY017866 to D.E.A. and EY016178 to G.C.D.); the National Natural Science Foundation of China Project (31471048); the Recruitment Program of Global Youth Experts; and the Shanghai Pujiang Program (13PJ1409400 to Y.G.).

Notes

Conflict of Interest: None declared.

References

- Abbott LF, Dayan P. 1999. The effect of correlated variability on the accuracy of a population code. Neural Comput. 11(1):91–101.

- Akao T, Kurkin S, Fukushima J, Fukushima K. 2009. Otolith inputs to pursuit neurons in the frontal eye fields of alert monkeys. Exp Brain Res. 193(3):455–466.

- Akao T, Saito H, Fukushima J, Kurkin S, Fukushima K. 2007. Latency of vestibular responses of pursuit neurons in the caudal frontal eye fields to whole body rotation. Exp Brain Res. 177(3):400–410.

- Angelaki DE, Gu Y, DeAngelis GC. 2009. Multisensory integration: psychophysics, neurophysiology, and computation. Curr Opin Neurobiol. 19(4):452–458.

- Boussaoud D, Ungerleider LG, Desimone R. 1990. Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J Comp Neurol. 296(3):462–495.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. 2002. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 16(8):1569–1586.

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. 1999. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann NY Acad Sci. 871:272–281.

- Britten KH. 2008. Mechanisms of self-motion perception. Annu Rev Neurosci. 31:389–410.

- Britten KH, van Wezel RJ. 2002. Area MST and heading perception in macaque monkeys. Cereb Cortex. 12(7):692–701.

- Britten KH, van Wezel RJ. 1998. Electrical microstimulation of cortical area MST biases heading perception in monkeys. Nat Neurosci. 1(1):59–63.

- Bruce CJ, Goldberg ME, Bushnell MC, Stanton GB. 1985. Primate frontal eye fields. II. Physiological and anatomical correlates of electrically evoked eye movements. J Neurophysiol. 54(3):714–734.

- Butler JS, Campos JL, Bulthoff HH, Smith ST. 2011. The role of stereo vision in visual-vestibular integration. Seeing Perceiving. 24(5):453–470.

- Butler JS, Smith ST, Campos JL, Bulthoff HH. 2010. Bayesian integration of visual and vestibular signals for heading. J Vis. 10(11):23.

- Cao P, Gu Y, Wang SR. 2004. Visual neurons in the pigeon brain encode the acceleration of stimulus motion. J Neurosci. 24(35):7690–7698.

- Chen A, DeAngelis GC, Angelaki DE. 2011c. A comparison of vestibular spatiotemporal tuning in macaque parietoinsular vestibular cortex, ventral intraparietal area, and medial superior temporal area. J Neurosci. 31(8):3082–3094.

- Chen A, DeAngelis GC, Angelaki DE. 2011b. Convergence of vestibular and visual self-motion signals in an area of the posterior sylvian fissure. J Neurosci. 31(32):11617–11627.

- Chen X, DeAngelis GC, Angelaki DE. 2013b. Diverse spatial reference frames of vestibular signals in parietal cortex. Neuron. 80(5):1310–1321.

- Chen X, DeAngelis GC, Angelaki DE. 2013c. Eye-centered representation of optic flow tuning in the ventral intraparietal area. J Neurosci. 33(47):18574–18582.

- Chen A, DeAngelis GC, Angelaki DE. 2013a. Functional specializations of the ventral intraparietal area for multisensory heading discrimination. J Neurosci. 33(8):3567–3581.

- Chen A, DeAngelis GC, Angelaki DE. 2010. Macaque parieto-insular vestibular cortex: responses to self-motion and optic flow. J Neurosci. 30(8):3022–3042.

- Chen A, DeAngelis GC, Angelaki DE. 2011a. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci. 31(33):12036–12052.

- Chowdhury SA, Takahashi K, DeAngelis GC, Angelaki DE. 2009. Does the middle temporal area carry vestibular signals related to self-motion? J Neurosci. 29(38):12020–12030.

- Colby CL, Duhamel JR, Goldberg ME. 1993. Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol. 69(3):902–914.

- Cooke DF, Taylor CS, Moore T, Graziano MS. 2003. Complex movements evoked by microstimulation of the ventral intraparietal area. Proc Natl Acad Sci USA. 100(10):6163–6168.

- Duffy CJ. 1998. MST neurons respond to optic flow and translational movement. J Neurophysiol. 80(4):1816–1827.

- Duffy CJ, Wurtz RH. 1995. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. J Neurosci. 15(7 Pt 2):5192–5208.

- Duffy CJ, Wurtz RH. 1991. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J Neurophysiol. 65(6):1329–1345.

- Ebata S, Sugiuchi Y, Izawa Y, Shinomiya K, Shinoda Y. 2004. Vestibular projection to the periarcuate cortex in the monkey. Neurosci Res. 49(1):55–68.

- Felleman DJ, Van Essen DC. 1991. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1(1):1–47.

- Fetsch CR, DeAngelis GC, Angelaki DE. 2013. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat Rev Neurosci. 14(6):429–442.

- Fetsch CR, DeAngelis GC, Angelaki DE. 2010. Visual-vestibular cue integration for heading perception: applications of optimal cue integration theory. Eur J Neurosci. 31(10):1721–1729.

- Fetsch CR, Pouget A, DeAngelis GC, Angelaki DE. 2012. Neural correlates of reliability-based cue weighting during multisensory integration. Nat Neurosci. 15(1):146–154.

- Fetsch CR, Rajguru SM, Karunaratne A, Gu Y, Angelaki DE, DeAngelis GC. 2010. Spatiotemporal properties of vestibular responses in area MSTd. J Neurophysiol. 104(3):1506–1522.

- Fetsch CR, Wang S, Gu Y, DeAngelis GC, Angelaki DE. 2007. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci. 27(3):700–712.

- Fujiwara K, Akao T, Kawakami S, Fukushima J, Kurkin S, Fukushima K. 2014. Neural correlates of time-to-collision estimation from visual motion in monkeys. Equilibrium Res. 73(3):144–153.

- Fujiwara K, Akao T, Kurkin S, Fukushima K. 2009. Discharge of pursuit neurons in the caudal part of the frontal eye fields during cross-axis vestibular-pursuit training in monkeys. Exp Brain Res. 195(2):229–240.

- Fukushima J, Akao T, Kurkin S, Kaneko CR, Fukushima K. 2006. The vestibular-related frontal cortex and its role in smooth-pursuit eye movements and vestibular-pursuit interactions. J Vestib Res. 16(1–2):1–22.

- Fukushima K. 2003. Frontal cortical control of smooth-pursuit. Curr Opin Neurobiol. 13(6):647–654.

- Fukushima K, Akao T, Kurkin S, Fukushima J. 2005. Role of vestibular signals in the caudal part of the frontal eye fields in pursuit eye movements in three-dimensional space. Ann NY Acad Sci. 1039:272–282.

- Fukushima K, Fukushima J, Warabi T. 2011. Vestibular-related frontal cortical areas and their roles in smooth-pursuit eye movements: representation of neck velocity, neck-vestibular interactions, and memory-based smooth-pursuit. Front Neurol. 2:78.

- Fukushima K, Sato T, Fukushima J, Shinmei Y, Kaneko CR. 2000. Activity of smooth pursuit-related neurons in the monkey periarcuate cortex during pursuit and passive whole-body rotation. J Neurophysiol. 83(1):563–587.

- Gottlieb JP, Bruce CJ, MacAvoy MG. 1993. Smooth eye movements elicited by microstimulation in the primate frontal eye field. J Neurophysiol. 69(3):786–799.

- Gottlieb JP, MacAvoy MG, Bruce CJ. 1994. Neural responses related to smooth-pursuit eye movements and their correspondence with electrically elicited smooth eye movements in the primate frontal eye field. J Neurophysiol. 72(4):1634–1653.

- Gu Y, Angelaki DE, DeAngelis GC. 2008. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 11(10):1201–1210.

- Gu Y, DeAngelis GC, Angelaki DE. 2012. Causal links between dorsal medial superior temporal area neurons and multisensory heading perception. J Neurosci. 32(7):2299–2313.

- Gu Y, DeAngelis GC, Angelaki DE. 2007. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci. 10(8):1038–1047.

- Gu Y, Fetsch CR, Adeyemo B, DeAngelis GC, Angelaki DE. 2010. Decoding of MSTd population activity accounts for variations in the precision of heading perception. Neuron. 66(4):596–609.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. 2006. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci. 26(1):73–85.

- Ilg UJ, Thier P. 2008. The neural basis of smooth pursuit eye movements in the rhesus monkey brain. Brain Cogn. 68(3):229–240.

- Krauzlis RJ. 2004. Recasting the smooth pursuit eye movement system. J Neurophysiol. 91(2):591–603.

- Lewis JW, Van Essen DC. 2000. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J Comp Neurol. 428(1):112–137.

- Lisberger SG, Movshon JA. 1999. Visual motion analysis for pursuit eye movements in area MT of macaque monkeys. J Neurosci. 19(6):2224–2246.

- Lynch JC, Tian JR. 2006. Cortico-cortical networks and cortico-subcortical loops for the higher control of eye movements. Prog Brain Res. 151:461–501.

- MacAvoy MG, Gottlieb JP, Bruce CJ. 1991. Smooth-pursuit eye movement representation in the primate frontal eye field. Cereb Cortex. 1(1):95–102.

- Maciokas JB, Britten KH. 2010. Extrastriate area MST and parietal area VIP similarly represent forward headings. J Neurophysiol. 104(1):239–247.

- Maioli MG, Squatrito S, Samolsky-Dekel BG, Sanseverino ER. 1998. Corticocortical connections between frontal periarcuate regions and visual areas of the superior temporal sulcus and the adjoining inferior parietal lobule in the macaque monkey. Brain Res. 789(1):118–125.

- Maunsell JH, Van Essen DC. 1983. The connections of the middle temporal visual area (MT) and their relationship to a cortical hierarchy in the macaque monkey. J Neurosci. 3(12):2563–2586.

- Maunsell JH, Van Essen DC. 1987. Topographic organization of the middle temporal visual area in the macaque monkey: representational biases and the relationship to callosal connections and myeloarchitectonic boundaries. J Comp Neurol. 266(4):535–555.

- Morgan ML, DeAngelis GC, Angelaki DE. 2008. Multisensory integration in macaque visual cortex depends on cue reliability. Neuron. 59(4):662–673.

- Page WK, Duffy CJ. 2003. Heading representation in MST: sensory interactions and population encoding. J Neurophysiol. 89(4):1994–2013.

- Pouget A, Zhang K, Deneve S, Latham PE. 1998. Statistically efficient estimation using population coding. Neural Comput. 10(2):373–401.

- Price NS, Ono S, Mustari MJ, Ibbotson MR. 2005. Comparing acceleration and speed tuning in macaque MT: physiology and modeling. J Neurophysiol. 94(5):3451–3464.

- Robinson DA. 1963. A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Trans Biomed Eng. 10:137–145.

- Schlack A, Hoffmann KP, Bremmer F. 2002. Interaction of linear vestibular and visual stimulation in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 16(10):1877–1886.

- Seung HS, Sompolinsky H. 1993. Simple models for reading neuronal population codes. Proc Natl Acad Sci USA. 90(22):10749–10753.