Abstract

Visual perceptual learning (VPL) is long-term performance improvement as a result of perceptual experience. It is unclear whether VPL is associated with refinement in representations of the trained feature (feature-based plasticity), improvement in processing of the trained task (task-based plasticity), or both. Here, we provide empirical evidence that VPL of motion detection is associated with both types of plasticity which occur predominantly in different brain areas. Before and after training on a motion detection task, subjects' neural responses to the trained motion stimuli were measured using functional magnetic resonance imaging. In V3A, significant response changes after training were observed specifically to the trained motion stimulus but independently of whether subjects performed the trained task. This suggests that the response changes in V3A represent feature-based plasticity in VPL of motion detection. In V1 and the intraparietal sulcus, significant response changes were found only when subjects performed the trained task on the trained motion stimulus. This suggests that the response changes in these areas reflect task-based plasticity. These results collectively suggest that VPL of motion detection is associated with the 2 types of plasticity, which occur in different areas and therefore have separate mechanisms at least to some degree.

Keywords: fMRI, motion, perceptual learning, 2-plasticity model, vision

Introduction

Visual perceptual learning (VPL) is regarded as a manifestation of plasticity in the brain as a result of visual experience (Dosher and Lu 1998; Sasaki et al. 2010; Xu et al. 2010; Sagi 2011; Byers and Serences 2012, 2014; Shibata et al. 2014). Although there has been a vast amount of research conducted to study VPL, what type(s) of neural information processing is changed in association with VPL has remained elusive (Sasaki et al. 2010; Shibata et al. 2014).

Different possibilities about changes in mechanisms associated with VPL have been discussed. One possibility is that VPL reflects refinement of a neural representation of a visual feature presented during training (Karni and Sagi 1991; Poggio et al. 1992; Shiu and Pashler 1992; Yu et al. 2004; Gilbert and Li 2012; Xu, Jiang, et al. 2012). Tuning property changes related to the trained feature in the low- or midlevel visual cortex of primates (Schoups et al. 2001; Yang and Maunsell 2004; Hua et al. 2010) and activation changes in the primary visual cortex of humans (Furmanski et al. 2004; Yotsumoto et al. 2008, 2009) have been often regarded as evidence for a refinement of a feature representation (Bejjanki et al. 2011). Another possibility is that VPL is associated with changes in the processing related to the trained task (Dosher and Lu 1998; Petrov et al. 2005) and/or in some cognitive processing including the attentional system (Green and Bavelier 2003; Xiao et al. 2008; Zhang et al. 2010; Kim et al. 2015). A number of researchers have suggested that VPL is associated with connectivity changes between feature representation and decision units (Dosher and Lu 1998; Petrov et al. 2005). The possibility of involvement of more cognitive processing than feature representation refinement has been supported by response changes in the lateral intraparietal area (LIP) of monkeys (Law and Gold 2008), elimination of location specificity of some types of VPL due to double training (Xiao et al. 2008), and activation changes in brain areas beyond the visual cortex (Lewis et al. 2009; Kahnt et al. 2011; Chen et al. 2015).

However, the possibility that VPL is associated with more than 1 type of plasticity mechanism has been raised. An increasing number of studies have empirically and theoretically supported this possibility (Watanabe et al. 2002; Gold and Watanabe 2010; Harris et al. 2012; Dosher et al. 2013; Hung and Seitz 2014; Shibata et al. 2014; Watanabe and Sasaki 2015; Chen et al. 2015; Talluri et al. 2015). Nevertheless, direct evidence for the mechanisms of more than 1 type of plasticity involved in VPL is scant.

In the present study, we propose a new model of VPL, termed the 2-plasticity model. In this model, VPL occurs as a result of at least 2 different types of plasticity that provide distinctive functions: feature- and task-based plasticity. Feature-based plasticity refers to refinement of a neural representation of a visual feature that is used during training. It is hypothesized that feature-based plasticity occurs in a specific visual area where the trained visual feature is mainly processed. On the other hand, task-based plasticity refers to improvement in task-related processing due to training on a task. These 2 types of plasticity are so different from each other that they may occur in different brain areas. To test the validity of the 2-plasticity model, we measured neural response changes that may reflect feature-based plasticity and neural response changes that may reflect task-based plasticity. Consider an experiment in which a subject is trained on a task with a visual feature. Before and after training (pre- and post-tests), neural responses to the trained feature are measured while the subject performs the same trained task as in training. We call this condition an active-test condition. However, the active-test condition alone cannot determine whether response changes between the pre- and post-tests in a brain area occurred due to changes in representations of the trained feature, processing involved in the trained task, or both. To determine this, it is necessary to measure the neural responses to the trained feature without the trained task processing being involved. For this purpose, we need to measure response changes when the same subject is passively exposed to the feature. We call this condition a passive-test condition. In the passive-test condition, if a brain area reflects only task-based plasticity, response changes should not be observed between the pre- and post-tests since the subject does not perform the trained task in this condition. In contrast, if the area reflects feature-based plasticity, significant response changes should be observed since feature-based plasticity by definition should occur without changing the processing related to the trained task. Thus, in this study, we compared changes in neural activities between the active- and passive-test conditions to clarify whether different areas involved in visual information processing are associated with feature-based plasticity, task-based plasticity or both.

The results of the experiment using functional magnetic resonance imaging (fMRI) provide the first empirical evidence that supports the 2-plasticity model in VPL of global motion. Subjects were trained in a global motion detection task. In the pre- and post-tests, subjects' neural responses to the motion stimuli were measured in both the passive- and active-test conditions using fMRI. In V3A, we found significant response changes in both active- and passive-test conditions. On the other hand, in V1 and the intraparietal sulcus (IPS), significant response changes were observed only in the active-test condition. These results support the 2-plasticity model and demonstrate that, at least in VPL of global motion, VPL results from at least feature- and task-based plasticity, which in turn reflect improvements in different aspects of neural information processing for visual perception subserved by different areas.

Materials and Methods

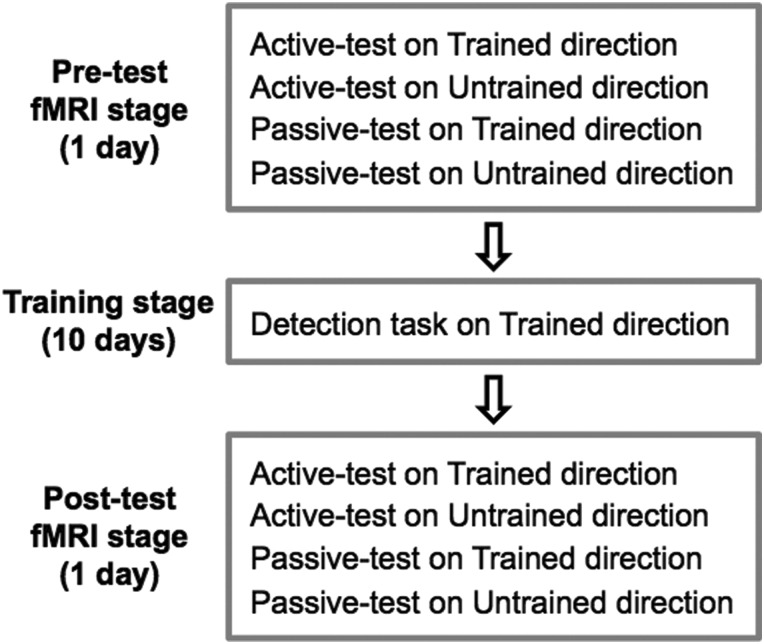

The complete experiment consisted of 3 stages: pre-test fMRI (1 day), behavioral training (10 days), and post-test fMRI (1 day) stages (Fig. 1). Different stages were separated by at least 24 h.

Figure 1.

Experimental design. The complete experiment consisted of 3 stages: pre-test fMRI (1 day), training (10 days), and post-test fMRI (1 day) stages. In the training stage, subjects were trained on a motion detection task on a particular motion direction (trained direction). In the pre- and post-test fMRI stages, the same group of subjects participated in all 4 different conditions during each of which fMRI responses to the motion stimuli were measured.

The training stage aimed to train subjects in a detection task on global motion in a particular motion direction (trained direction) so that VPL of global motion would occur specifically for the trained direction (see Training stage section for details). Before and after the training stage, the pre- and post-test fMRI stages were conducted to test whether, and if so how, each of the brain areas in visual information processing is involved in feature- or task-based plasticity of the VPL of the trained motion direction (see Pre- and Post-test fMRI Stages section for details).

Subjects

Thirteen naïve subjects (21–28 years old; 12 males and 1 female) with normal or corrected-to-normal vision participated in the study, which was approved by the Institutional Review Board of Advanced Telecommunications Research Institute International (ATR). All subjects gave written informed consent.

Motion Stimulus

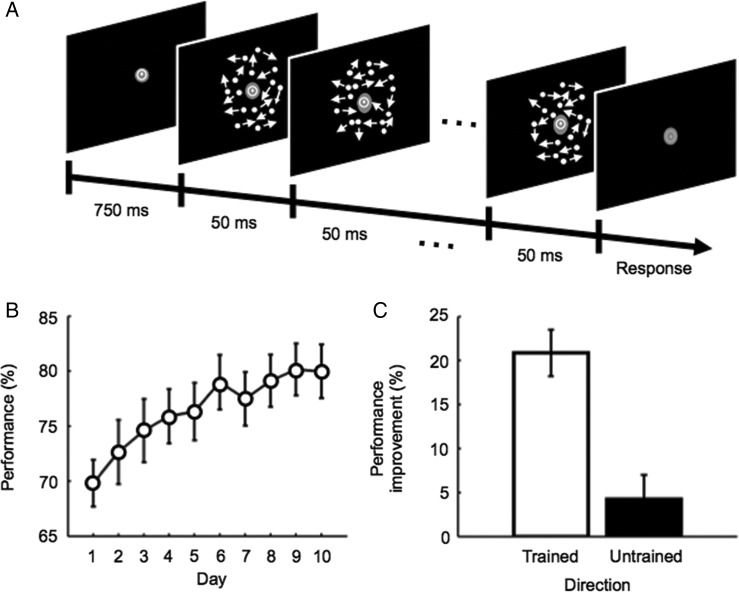

Moving dots were presented within an annulus subtending from 1.5 to 10 deg diameter on a black background (Fig. 2A). The dot density was 0.91 dots/deg². The color of the dots was white. The display consisted of coherently moving (signal) dots and randomly moving (noise) dots. In each frame (16.7 ms per frame), each dot (0.1 deg²) was randomly classified into either a signal or noise dot. Signal dots moved in a predetermined direction at a speed of 14.2 deg/s, while noise dots were plotted in random positions (Newsome and Pare 1988).
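The per-frame dot update described above can be sketched as follows. This is a minimal illustration, not the stimulus code used in the study: the function names, the direction convention (0 deg = rightward, counterclockwise positive), the use of the coherence level as the per-frame probability that a dot is a signal dot, and the rule that a signal dot leaving the annulus is replotted at random are all assumptions made for the example.

```python
import math
import random

FRAME_S = 0.0167                 # frame duration from the text (16.7 ms)
SPEED_DEG_S = 14.2               # signal-dot speed from the text
INNER_R, OUTER_R = 0.75, 5.0     # annulus radii in deg (1.5 to 10 deg diameter)
DENSITY = 0.91                   # dots per square degree, from the text
EPS = 1e-9                       # tolerance for floating-point boundary checks

# Number of dots implied by the density and the annulus area.
AREA = math.pi * (OUTER_R ** 2 - INNER_R ** 2)
N_DOTS = round(DENSITY * AREA)

def random_position(rng):
    """Sample a point uniformly (by area) inside the annulus."""
    r = math.sqrt(rng.uniform(INNER_R ** 2, OUTER_R ** 2))
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return (r * math.cos(theta), r * math.sin(theta))

def in_annulus(x, y):
    """True if (x, y) lies within the annulus."""
    return INNER_R - EPS <= math.hypot(x, y) <= OUTER_R + EPS

def step(dots, coherence, direction_deg, rng):
    """Advance all dots by one frame and return the new positions."""
    step_len = SPEED_DEG_S * FRAME_S
    dx = step_len * math.cos(math.radians(direction_deg))
    dy = step_len * math.sin(math.radians(direction_deg))
    new_dots = []
    for x, y in dots:
        # Each dot is re-classified as signal or noise on every frame.
        if rng.random() < coherence:
            nx, ny = x + dx, y + dy
            # Assumption: a signal dot that exits the annulus is replotted.
            if in_annulus(nx, ny):
                new_dots.append((nx, ny))
            else:
                new_dots.append(random_position(rng))
        else:
            # Noise dots are plotted at random positions.
            new_dots.append(random_position(rng))
    return new_dots
```

For example, `step(dots, 0.10, 23.0, random.Random(0))` would advance a 10% coherence display by one frame toward 23 deg, and `step(dots, 0.0, 0.0, rng)` would produce the entirely random display.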

Figure 2.

Motion detection task and behavioral results. (A) Example of the time-course of a trial in the training stage. Subjects were asked to report whether coherent motion in a particular direction was presented or not. (B) Mean (±SEM) behavioral performance across subjects for the trained direction during the training stage. (C) Mean (±SEM) behavioral performance improvement across subjects in the motion detection task for the trained (white bar) and untrained (black bar) directions from the pre- to post-test fMRI stages.

Training Stage

During the 10-day training stage, subjects were trained on a motion detection task (Fig. 2A) in a particular motion direction (trained direction). Throughout the task, subjects were asked to fixate on a bull's eye point at the center of a gray disk (1.5 deg diameter) presented at the center of the display. Each trial began with a 750-ms interval, during which subjects fixated on the fixation point. Then, the motion stimulus was presented and lasted for 500 ms. After the offset of the motion stimulus, the fixation color turned from white to light gray. During this light gray fixation period, subjects were asked to report whether the stimulus contained coherent motion or not by pressing 1 of the 2 buttons on a keyboard. This particular procedure of the detection task was adopted because it is highly suitable for the measurements of fMRI signals for a pattern-classification analysis (see fMRI analysis section for details of the pattern-classification analysis). After the button press, the fixation color turned back to white, and the next trial started. In half of the trials, a stimulus contained 10% coherent motion in the trained direction, and in the other half of the trials the stimulus was entirely random. For each subject, the trained direction was randomly chosen from the off-cardinal directions (23, 68, 113, 158, 203, 248, 293, and 338 deg). No accuracy feedback was given to subjects. Each subject completed 640 trials on each day (∼60 min). A brief break period was provided after every 40 trials upon a subject's request.

Pre- and Post-Test fMRI Stage

The pre- and post-test fMRI stages were conducted to experimentally determine whether feature-based plasticity, task-based plasticity, or both occur in each of the regions of interest (ROIs; see Regions of interest section for ROI definition). For this purpose, the same group of subjects participated in 4 different conditions (Fig. 1), as described below.

There were 2 different test conditions: active- and passive-test conditions. In the active-test condition (see the following sections for details), subjects were asked to perform the same motion detection task as in the training stage. On the other hand, in the passive-test condition (see the following sections for details) subjects were passively exposed to the motion stimuli without conducting the trained motion detection task.

In addition to the 2 test conditions (active- vs. passive-test conditions), 2 stimulus conditions (trained vs. untrained directions) were included to test whether both VPL of global motion and neural changes in association with the VPL are specific to the trained feature, as found in previous studies of VPL (Schoups et al. 2001; Furmanski et al. 2004; Law and Gold 2008; Hua et al. 2010; Shibata et al. 2011, 2012; Jehee et al. 2012; Chen et al. 2015). The untrained direction was 90 deg away from the trained direction.

Thus, in the pre- and post-test fMRI stages all subjects underwent the 4 different conditions (Fig. 1): active-test on trained-direction condition, active-test on untrained-direction condition, passive-test on trained-direction condition and passive-test on untrained-direction condition. In each of the pre- and post-test fMRI stages, all subjects completed 12 fMRI runs (see the following sections for details). For each fMRI run, 1 of the 4 conditions was selected in a random order. Each condition was repeated 3 times in each of the pre- and post-test fMRI stages.
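The 2 × 2 design and the run schedule can be sketched as below. The text states only that each of the 4 conditions was run 3 times in random order, so the unconstrained shuffle across all 12 runs is an assumption, as are the function and variable names.

```python
import random
from collections import Counter

TASKS = ("active", "passive")          # test conditions
DIRECTIONS = ("trained", "untrained")  # stimulus conditions
REPEATS = 3                            # repetitions per condition and stage

def make_run_order(rng):
    """Return a shuffled list of 12 (task, direction) runs for one fMRI stage."""
    conditions = [(task, direction) for task in TASKS for direction in DIRECTIONS]
    runs = conditions * REPEATS
    # Assumption: run order is shuffled without blocking constraints.
    rng.shuffle(runs)
    return runs

run_order = make_run_order(random.Random(1))
counts = Counter(run_order)  # every condition appears exactly REPEATS times
```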

Stimulus and Time-Course

In the pre- and post-test fMRI stages, each fMRI run consisted of 40 trials. The visual stimulus presented in the test stages was identical to that in the training stage, except that a sequence of 10 white alphabetical letters was presented on the gray disk presented at the center of the display, concurrently with the motion stimulus (Supplementary Fig. 1). In 20 out of the 40 trials, the stimulus contained 10% coherent motion toward a predetermined target direction (the trained or untrained direction). In the other 20 trials of the run, the stimulus contained 0% coherent motion (random motion). Irrespective of the motion stimuli, in 20 out of the 40 trials the letter sequence contained a predetermined target letter (L or T). In another 20 trials, the letter sequence did not contain the target letter. The target direction and letter were randomly determined and counterbalanced across the runs.

The time-course of each trial was identical to that of the training stage except for the light gray fixation period. The length of the light gray fixation period for each trial ranged from 4.75 to 10.75 s, as determined using OptSeq software (http://surfer.nmr.mgh.harvard.edu/optseq). Thus, the length of each trial ranged from 6 to 12 s. Each run (330 s) consisted of the 40 trials preceded by a 4-s light gray fixation period and followed by a 6-s light gray fixation period. A brief break period was provided after each run upon a subject's request.
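The timing figures above can be checked with a few lines of arithmetic. The sketch below uses only durations stated in the text, plus the TR of 2 s given in the MRI Parameters section. The mean trial length and the number of volumes per run are derived here for illustration and are not values reported by the authors.

```python
PRE_STIM_S = 0.75                   # initial fixation interval (750 ms)
STIM_S = 0.5                        # motion stimulus duration (500 ms)
GRAY_MIN_S, GRAY_MAX_S = 4.75, 10.75  # light gray fixation period range
N_TRIALS = 40
LEAD_IN_S, LEAD_OUT_S = 4.0, 6.0    # light gray fixation before and after the trials
RUN_S = 330.0
TR_S = 2.0                          # from the MRI Parameters section

# Shortest and longest possible trials.
trial_min = PRE_STIM_S + STIM_S + GRAY_MIN_S
trial_max = PRE_STIM_S + STIM_S + GRAY_MAX_S

# Time left for the 40 trials, and the mean trial length this implies.
trials_total = RUN_S - LEAD_IN_S - LEAD_OUT_S
mean_trial = trials_total / N_TRIALS
mean_gray = mean_trial - PRE_STIM_S - STIM_S

# Derived number of functional volumes per run.
volumes_per_run = RUN_S / TR_S
```

Under these figures a trial lasts 6 to 12 s, the 40 trials occupy 320 s, and the jittered light gray period must average 6.75 s for a run to total 330 s.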

Active-Test Condition

Before the onset of an fMRI run with the active-test condition, subjects were informed that, during the run, they would conduct the same motion detection task on the target direction (the trained or untrained direction) by pressing 1 of the 2 buttons with their right hand.

Passive-Test Condition

Before the onset of an fMRI run with the passive-test condition, subjects were informed that they would conduct a letter detection task. In the letter detection task, subjects were asked to report whether the letter sequence contained the target letter (L or T) by pressing 1 of the 2 buttons during fMRI measurement.

Button Correspondence

The button correspondence (1 button for target present, the other button for target absent) was randomly reversed across runs, and subjects were informed of the correspondence prior to each run. This manipulation was made so that fMRI data would reflect subjects' perceptual contents rather than motor components related to their button presses.

Retinotopic Mapping

At the end of the pre-test fMRI stage, a standard retinotopic mapping procedure (Sereno et al. 1995; Engel et al. 1997; Tootell et al. 1997; Tootell and Hadjikhani 2001; Fize et al. 2003) was conducted. No retinotopic mapping procedure was conducted in the post-test fMRI stage.

Regions of Interest

We selected V1, V3A, MT+, and IPS as ROIs for the following reasons. As described in the Introduction, we hypothesized that feature-based plasticity occurs in a specific visual area where a trained visual feature is mainly processed. A number of studies in humans have indicated that global motion strongly activates V3A (Braddick et al. 2000, 2001; Koyama et al. 2005; Koldewyn et al. 2011; Maus et al. 2013) and that VPL of global motion is specifically associated with response changes in V3A, but not other visual areas (Shibata et al. 2012; Chen et al. 2015). MT+ is the human homolog of the monkey middle temporal area and has been implicated in processing of motion stimuli (Maunsell and Van Essen 1983; Huk and Heeger 2002), although recent studies reported little or no change in this area in association with VPL of motion in humans or monkeys (Law and Gold 2008; Chen et al. 2015). We also hypothesized that task-based plasticity occurs in an area associated with processing of a trained visual task. V1 has been implicated in various types of VPL (Crist et al. 2001; Schoups et al. 2001; Schwartz et al. 2002; Furmanski et al. 2004; Li et al. 2004, 2008; Yotsumoto et al. 2008; Hua et al. 2010; Shibata et al. 2011; Jehee et al. 2012) and is known to be differentially involved in different visual tasks including a detection task (Dupont et al. 1993; Orban et al. 1996; Huk and Heeger 2000; Jehee et al. 2011; Gilbert and Li 2013; Saproo and Serences 2014). IPS was selected because previous studies have reported that monkey LIP, which is known as an important area for a perceptual decision-making task on global motion (Shadlen and Newsome 2001; Law and Gold 2008), is involved in VPL of global motion (Law and Gold 2008, 2009), and monkey LIP is homologous to part of human IPS (Culham and Kanwisher 2001; Shikata et al. 2008). In addition, a recent human study showed that IPS is implicated in VPL of global motion (Chen et al. 2015).

The 4 ROIs (V1, V3A, MT+, and IPS) were identified for each subject. We used a standard retinotopy analysis (Tootell et al. 1995, 1997; Fize et al. 2003; Yotsumoto et al. 2009; Thompson et al. 2013) to delineate the retinotopic regions of V1, V3A, and MT+ on flattened cortical representations. IPS was anatomically defined using the BrainVoyager QX software (Brain Innovation, the Netherlands). Voxels from the left and right hemispheres were merged for each ROI.

FMRI Analysis

To quantify changes in fMRI responses to motion stimuli in each ROI between the pre- and post-test fMRI stages, a pattern-classification analysis (see below for details) was conducted. The pattern-classification analysis quantifies how precisely patterns of the fMRI responses in each ROI reflect the specific motion stimuli presented to subjects.

Measured fMRI signals were preprocessed using the BrainVoyager QX software. All functional images underwent 3D motion correction. No spatial or temporal smoothing was applied. Rigid-body transformations were performed to align the functional images to the structural image for each subject. A gray matter mask was used to extract fMRI data only from gray matter voxels for further analyses.

We used spatiotemporal patterns of fMRI signals for the pattern-classification analysis for each ROI. First, a time-course of fMRI signal intensities was extracted from each voxel of an ROI using the Matlab software (Mathworks, USA). Second, a linear trend was removed from the time-course. Third, the time-course was z-score normalized for each run to minimize baseline differences across the runs. Finally, the data sample for each trial was created by calculating changes in the fMRI signal intensities for 3 volumes after the trial onset for each voxel. Thus, the number of features used for the pattern-classification analysis for each ROI was 3 multiplied by the number of voxels in the ROI.
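The four preprocessing steps above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' Matlab code. The text does not specify how the "changes" in signal intensity were computed or exactly which 3 volumes were taken, so the sketch uses the detrended, z-scored values of the 3 volumes that follow the onset volume. All function names and the `onset_volumes` input are hypothetical.

```python
from statistics import mean, pstdev

def detrend(ts):
    """Remove a least-squares linear trend (and the mean) from a time course."""
    n = len(ts)
    t_mean = (n - 1) / 2
    y_mean = mean(ts)
    sxx = sum((i - t_mean) ** 2 for i in range(n))
    sxy = sum((i - t_mean) * (y - y_mean) for i, y in enumerate(ts))
    slope = sxy / sxx
    return [y - (y_mean + slope * (i - t_mean)) for i, y in enumerate(ts)]

def zscore(ts):
    """Normalize a time course to zero mean and unit standard deviation."""
    m, s = mean(ts), pstdev(ts)
    return [(y - m) / s for y in ts]

def trial_features(run, onset_volumes, n_volumes=3):
    """Build one feature vector per trial for a single run.

    run: list of voxel time courses (one list of intensities per voxel).
    onset_volumes: index of the volume at each trial onset (hypothetical input).
    Returns a list of vectors, each of length n_volumes * number of voxels.
    """
    cleaned = [zscore(detrend(voxel)) for voxel in run]
    samples = []
    for onset in onset_volumes:
        # Assumption: use the n_volumes volumes that follow the onset volume.
        sample = [voxel[onset + k]
                  for voxel in cleaned
                  for k in range(1, n_volumes + 1)]
        samples.append(sample)
    return samples
```

With 2 voxels and the default 3 volumes, each trial yields a 6-element vector, matching the rule that the feature count equals 3 multiplied by the number of voxels in the ROI.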

As a classifier for the pattern-classification analysis, we used a sparse logistic regression (Miyawaki et al. 2008; Yamashita et al. 2008; Kuncheva and Rodriguez 2010; Ryali et al. 2010; Shibata et al. 2011; Tong and Pratte 2012), which automatically selected the relevant features (see above for the definition of the features) for pattern classification. The mean (±SEM) numbers of features selected by the classifier across the conditions and subjects were 16.3 ± 0.1 for V1, 16.9 ± 0.2 for V3A, 16.5 ± 0.1 for MT+, and 15.3 ± 0.2 for IPS.

Performance of the classifier was evaluated using a leave-one-run-out cross-validation procedure for each subject. For each combination of the ROIs and 4 conditions (shown in Fig. 1), we trained the classifier to associate a pattern of the fMRI responses to the motion stimuli (random or coherent motion) using 80 data samples (40 samples for random motion, 40 samples for coherent motion) from 2 runs. We then calculated classification performance (percent correct) by testing how accurately the classifier predicted the motion stimuli (random or coherent motion) using independent data samples (20 samples for random motion, 20 samples for coherent motion) from a remaining run.
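The leave-one-run-out scheme can be sketched as below. To keep the example short and dependency-free, a nearest-centroid classifier stands in for the sparse logistic regression used in the study, so the sketch illustrates only the cross-validation logic and not the authors' classifier. Averaging percent correct across the 3 folds is also an assumption, and all names are hypothetical.

```python
def centroid(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit_nearest_centroid(samples, labels):
    """Stand-in classifier: store one centroid per class label."""
    classes = sorted(set(labels))
    return {c: centroid([s for s, l in zip(samples, labels) if l == c])
            for c in classes}

def predict(model, sample):
    """Return the label whose centroid is closest to the sample."""
    return min(model, key=lambda c: sq_dist(model[c], sample))

def leave_one_run_out(runs):
    """runs: list of (samples, labels) tuples, one per fMRI run.

    Each run is held out once; the classifier is trained on the other runs.
    Returns the mean percent correct across folds.
    """
    fold_scores = []
    for held_out in range(len(runs)):
        train_x, train_y = [], []
        for i, (x, y) in enumerate(runs):
            if i != held_out:
                train_x.extend(x)
                train_y.extend(y)
        model = fit_nearest_centroid(train_x, train_y)
        test_x, test_y = runs[held_out]
        correct = sum(predict(model, s) == l for s, l in zip(test_x, test_y))
        fold_scores.append(100.0 * correct / len(test_y))
    return sum(fold_scores) / len(fold_scores)
```

With 3 runs of 40 trials per condition, each fold trains on 80 samples and tests on 40, as described above. Chance level for the random versus coherent classification is 50%.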

Apparatus

Visual stimuli were presented on an LCD display (CV722X, Tohoku; 1024 × 768 resolution, 60 Hz refresh rate) during the training stage and via an LCD projector (DLA-HD10KHK, Victor; 1024 × 768 resolution, 60 Hz refresh rate) during fMRI measurements in a dim room. All visual stimuli were made using the Matlab software and Psychtoolbox (Brainard 1997) on Mac OS X.

MRI Parameters

Subjects were scanned in a 3 T MR scanner (Siemens, Trio) with a head coil in the ATR Brain Activation Imaging Center. Subjects' fMRI responses were acquired using a gradient EPI sequence. In all fMRI experiments, 33 contiguous slices (TR = 2 s, voxel size = 3 × 3 × 3.5 mm³, 0 mm slice gap) oriented parallel to the AC-PC plane were acquired, covering the entire brain. For an inflated format of the cortex used for retinotopic mapping, T1-weighted MR images (MP-RAGE; 256 slices, voxel size = 1 × 1 × 1 mm³, 0 mm slice gap) were acquired during the pre-test fMRI stage.

Results

Behavioral Results

During the training stage, subjects' detection performance on the trained direction significantly improved (Day 1 vs. Day 10, paired t-test, t(12) = 6.052, P < 10⁻⁴, Fig. 2B; see Supplementary Fig. 2A for the additional analysis based on the signal detection theory; Wickens 2001).

We compared subjects' detection performance in the pre- and post-test fMRI stages and found that direction-specific motion VPL occurred (Fig. 2C and Supplementary Fig. 2C). We performed a 2-way ANOVA with repeated measures with factors being stage (pre- vs. post-test fMRI stages) and stimulus condition (trained vs. untrained directions) on performance in the active-test condition in which subjects conducted the motion detection task. A significant main effect was found for stage (F(1,12) = 45.029, P < 10⁻⁴), but not for stimulus condition (F(1,12) = 2.166, P = 0.167). We also found a significant interaction between the 2 factors (F(1,12) = 18.195, P = 0.001). These results indicate that the 10-day training resulted in VPL specifically of the trained direction (see Supplementary Fig. 2B for the additional analysis based on the signal detection theory; see Supplementary Fig. 2D for behavioral performance in each of the pre- and post-test fMRI stages in the passive-test condition).

FMRI Results

One purpose of this study was to test whether, and if so how, each ROI reflects feature- and task-based plasticity. According to our hypothesis as described in the Introduction, feature-based plasticity occurs in a specific visual area that processes the trained visual feature, whereas task-based plasticity occurs in an area that is associated with processing of the trained visual task. To test the hypothesis, we selected V1, V3A, MT+, and IPS as ROIs (see Regions of interest section in Materials and Methods section for details).

To test whether each of the ROIs reflected feature-based plasticity, task-based plasticity, or both, we compared fMRI responses to the motion stimuli between the pre- and post-test fMRI stages (Fig. 1). To quantify changes in the fMRI responses, the pattern-classification analysis was used (see fMRI analysis section in Materials and Methods section for details). The pattern-classification analysis quantifies how precisely patterns of the fMRI responses in each area reflect the specific motion stimuli presented in each condition.

In particular, we focused on fMRI responses to the trained motion direction. As described in Introduction, task-based plasticity and feature-based plasticity can be revealed based on the comparison of changes in fMRI responses to the trained motion direction between the active- and passive-test conditions. Since the behavioral results showed the motion VPL specific to the trained motion direction, we assumed that neither feature- nor task-based plasticity occurred for the untrained direction, which was 90 deg away from the trained direction. This assumption is based on previous studies showing that VPL of global motion leads to neural changes largely specific to the trained motion direction (Shibata et al. 2012; Chen et al. 2015).

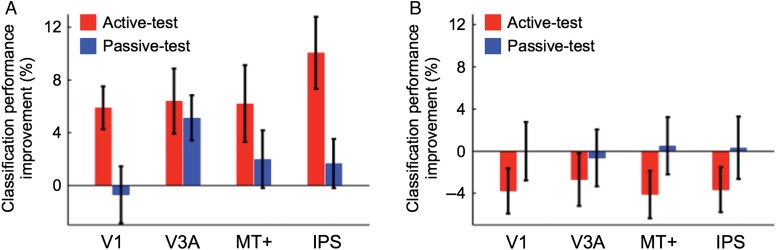

The following results of 4 statistical analyses suggest that V3A reflects feature-based plasticity, while V1 and IPS (and possibly MT+) reflect task-based plasticity in association with the VPL of global motion.

First, we tested whether the 4 ROIs showed different patterns of changes in fMRI responses to the trained motion direction (Fig. 3A and Supplementary Fig. 3; see Supplementary Fig. 4A for an additional analysis based on the signal detection theory). A 3-way ANOVA with repeated measures with factors being ROI (V1, V3A, MT+, vs. IPS), test (pre- vs. post-test fMRI stages), and task condition (active- vs. passive-test conditions) was applied to the classification performance. A significant main effect was found for test (F(1,12) = 7.532, P = 0.019), but not for ROI (F(3,36) = 0.660, P = 0.582), or task condition (F(1,12) = 0.402, P = 0.538). In addition, we found significant interactions between ROI and test (F(3,36) = 3.293, P = 0.031), between test and task condition (F(1,12) = 5.363, P = 0.039), and among the 3 factors (F(3,36) = 4.120, P = 0.013). These results are likely to reflect the difference between feature- and task-based plasticity.

Figure 3.

(A) Mean (±SEM) classification performance improvements across subjects for the trained direction from the pre- to post-test fMRI stages for V1, V3A, MT+, and IPS. Red and blue bars represent the classification performance improvements for the active- and passive-test conditions, respectively. (B) Mean (±SEM) classification performance improvements across subjects for the untrained direction from the pre- to post-test fMRI stages for V1, V3A, MT+, and IPS.

Second, we tested whether each of the 4 ROIs reflected feature- or task-based plasticity (Fig. 3A and Supplementary Fig. 3; see below for details of statistical results). If an ROI reflects feature-based plasticity, the classification performance in the ROI should improve between the pre- and post-test fMRI stages, irrespective of the task condition (active- or passive-test condition) during the fMRI measurements. That is, a significant main effect of the test stage should be observed, while there should be no significant main effect of the task condition or interaction between the test stage and task condition. On the other hand, if an ROI reflects task-based plasticity, the classification performance improvement in the ROI should be observed only in the active-test condition. In this case, a significant interaction between the test stage and task condition should be observed.
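The decision logic in this paragraph can be written down as a small rule applied to the three P values of each ROI's 2-way ANOVA (main effect of test, main effect of task condition, and their interaction). The sketch is a simplification for illustration only: the threshold of 0.05 is an assumption, the function name is hypothetical, and a significant interaction by itself does not show that the improvement is confined to the active-test condition, which is why simple main effects are also examined.

```python
ALPHA = 0.05  # assumption: conventional significance threshold

def interpret(p_test, p_task, p_interaction, alpha=ALPHA):
    """Map the three P values of a test x task ANOVA onto an interpretation."""
    if p_interaction < alpha:
        # Pre-to-post change depends on the task performed during scanning.
        return "task-based"
    if p_test < alpha and p_task >= alpha:
        # Pre-to-post change is present irrespective of task condition.
        return "feature-based"
    return "inconclusive"

# P values as reported for each ROI in this section.
reported = {
    "V3A": (0.005, 0.524, 0.627),
    "V1": (0.133, 0.512, 0.008),
    "IPS": (0.012, 0.355, 0.006),
    "MT+": (0.071, 0.968, 0.192),
}
labels = {roi: interpret(*p) for roi, p in reported.items()}
```

Under these assumptions the rule labels V3A as feature-based, V1 and IPS as task-based, and MT+ as inconclusive.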

In V3A (Fig. 3A and Supplementary Fig. 3B), 2-way ANOVA with repeated measures with factors being test (pre- vs. post-test fMRI stages) and task condition (active- vs. passive-test conditions) was conducted on the classification performance for the trained direction. The results showed a significant main effect of test (F(1,12) = 11.712, P = 0.005), but neither the main effect of task condition (F(1,12) = 0.431, P = 0.524) nor interaction between the 2 factors (F(1,12) = 0.248, P = 0.627) was found to be significant. These results are in accord with the hypothesis that neural sensitivity of V3A to the trained direction significantly improved due to training on the motion detection task, irrespective of whether subjects performed the task during the fMRI test stages. That is, the classification performance improvement obtained in the passive-test condition is not likely to be due to improvement in the task-related processing but due to refinement in representation of the trained motion direction. These results are in accord with the hypothesis that feature-based plasticity occurs in V3A.

In V1 (Fig. 3A and Supplementary Fig. 3A), the 2-way ANOVA on the classification performance showed a significant interaction between test and task condition (F(1,12) = 10.024, P = 0.008), while no significant main effect was found for test (F(1,12) = 2.606, P = 0.133) or task condition (F(1,12) = 0.455, P = 0.512). Post hoc tests showed a simple main effect of test in the active-test condition (F(1,24) = 9.467, P = 0.005), but not in the passive-test condition (F(1,24) = 0.135, P = 0.716). That is, the significant change in the fMRI responses in V1 was observed only when subjects engaged in the trained task on the trained direction during the fMRI measurements. These results are in accord with the hypothesis that V1 reflects task-based plasticity. This hypothesis is also supported by the result of previous studies that V1 is involved in a detection task (Dupont et al. 1993; Orban et al. 1996; Huk and Heeger 2000; Jehee et al. 2011; Gilbert and Li 2013; Saproo and Serences 2014).

In IPS (Fig. 3A and Supplementary Fig. 3D), the 2-way ANOVA on the classification performance showed a significant main effect of test (F(1,12) = 8.815, P = 0.012) and interaction between the 2 factors (F(1,12) = 11.422, P = 0.006), but not the main effect of task condition (F(1,12) = 0.925, P = 0.355). These results suggest that IPS is involved in task-based plasticity.

In MT+ (Fig. 3A and Supplementary Fig. 3C), the 2-way ANOVA on the classification performance showed only a marginal effect of test (F(1,12) = 3.920, P = 0.071). Neither task condition (F(1,12) = 0.002, P = 0.968) nor interaction between the 2 factors (F(1,12) = 1.908, P = 0.192) was significant. That is, although a certain degree of change occurred in MT+ in association with the VPL of global motion, the change was not as robust as in V1, V3A, and IPS. This weak tendency of changes in MT+ is consistent with the previous findings that showed little or no change in monkey MT (Law and Gold 2008) or human MT+ (Chen et al. 2015) in association with VPL of global motion.

Third, we further examined the degree to which classification performance improvement in each ROI is task-dependent. We calculated a task-dependency index (Supplementary Fig. 5) for each of V1, V3A, and IPS, which had shown significant classification performance improvement (Fig. 3A). The results are in accord with the results of ANOVA in that response changes in IPS and V1 reflected significant task-dependency, whereas changes in V3A did not.

Finally, we tested the validity of the aforementioned assumption that the fMRI responses to the untrained direction should not change when VPL of the trained motion direction occurs. Figure 3B shows the classification performance improvement for the untrained direction (see Supplementary Fig. 4B for an additional analysis based on the signal detection theory). A 3-way ANOVA with factors being ROI, test, and task condition was applied to the classification performance for the untrained direction. While we found a significant main effect of ROI (F(3,36) = 4.945, P = 0.006), neither the main effect of test (F(1,12) = 0.989, P = 0.340) nor that of task condition (F(1,12) = 0.004, P = 0.954) was significant. We did not find any significant interactions between ROI and test (F(3,36) = 0.021, P = 0.996), ROI and task condition (F(3,36) = 0.847, P = 0.477), test and task condition (F(1,12) = 1.407, P = 0.259), or among the 3 factors (F(3,36) = 0.377, P = 0.770). These results indicate that VPL of the trained direction influenced the fMRI responses specifically to the trained direction, but not to the untrained direction. This result is in accord with the behavioral results (Fig. 2C).

Discussion

The purpose of the present study was to provide empirical evidence that supports the 2-plasticity model of VPL. After training on a motion detection task, the fMRI response in V3A to the motion stimuli changed specifically for the trained direction, regardless of whether subjects performed the motion detection task on the motion stimuli or were passively exposed to them. This is in accord with the hypothesis that feature-based plasticity involves V3A, which has been suggested to be a main area that processes the type of motion stimulus used in this experiment (Braddick et al. 2000, 2001; Koyama et al. 2005; Koldewyn et al. 2011; Maus et al. 2013). On the other hand, in V1 and IPS, significant changes in fMRI responses were found only when subjects performed the motion detection task on the trained direction. This result is consistent with the hypothesis that task-based plasticity involves V1 and IPS, which are among the areas that have been reported to be differentially activated depending on visual tasks (Dupont et al. 1993; Orban et al. 1996; Huk and Heeger 2000; Shadlen and Newsome 2001; Law and Gold 2008; Jehee et al. 2011; Gilbert and Li 2013; Saproo and Serences 2014). Collectively, these results support the 2-plasticity model, in which VPL of global motion results from at least 2 qualitatively different types of plasticity (refinement in sensory representation and improvement in task processing), which are subserved by different areas.

Could the absence of a significant change in the classification performance for IPS in the passive-test condition (Fig. 3A, blue) simply be due to weak fMRI responses in this condition? In the pre-test fMRI stage, the mean classification performance for IPS was significantly above chance (50%) in both the active- (1-sample t-test, t12 = 4.145, P = 0.001) and passive- (t12 = 5.749, P < 0.001) test conditions (Supplementary Fig. 3D, blue). In addition, there was no significant difference in the mean classification performance for IPS between the active- and passive-test conditions in the pre-test fMRI stage (paired t-test, t12 = 0.484, P = 0.637). These results are at odds with the possibility that the absence of change in the classification performance for IPS in the passive-test condition in the post-test stage was merely due to weak fMRI responses in IPS in that condition.
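The two control tests described above (a 1-sample t-test against the 50% chance level and a paired t-test between conditions) can be sketched as follows. This is a minimal illustration using only the Python standard library, not the analysis code used in the study; the function names and the accuracy values are hypothetical, and the sketch reports only the t statistic and degrees of freedom, because computing a P value would additionally require the t distribution (for example, from a statistics package).

```python
import math
import statistics


def one_sample_t(values, mu0):
    """Return (t, df) for a 1-sample t-test of mean(values) against mu0."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    return (mean - mu0) / (sd / math.sqrt(n)), n - 1


def paired_t(a, b):
    """Return (t, df) for a paired t-test: a 1-sample test on a - b vs. 0."""
    diffs = [x - y for x, y in zip(a, b)]
    return one_sample_t(diffs, 0.0)


# Hypothetical per-subject classification accuracies (%), NOT the study's data.
# Thirteen values are used only so that df = 12, matching the reported t12.
active = [58, 62, 55, 60, 57, 63, 59, 61, 56, 64, 58, 60, 62]
passive = [57, 61, 56, 59, 58, 62, 60, 60, 55, 63, 59, 61, 61]

t_active, df = one_sample_t(active, 50.0)   # above-chance test
t_passive, _ = one_sample_t(passive, 50.0)  # above-chance test
t_diff, _ = paired_t(active, passive)       # active vs. passive

print(f"active vs. chance:  t{df} = {t_active:.3f}")
print(f"passive vs. chance: t{df} = {t_passive:.3f}")
print(f"active vs. passive: t{df} = {t_diff:.3f}")
```

The logic of the control is that both conditions must yield large t values against chance while the paired comparison yields a small one; only then can the lack of post-training change in the passive-test condition not be attributed to a weaker baseline signal.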

The pattern-classification analysis in this study revealed significant activation changes in V3A consistent with feature-based plasticity, and activation changes in V1 and IPS consistent with task-based plasticity (Fig. 3A). Did the activation changes in these areas occur independently of each other? If so, there should be no significant correlation in the classification performance improvements among these areas across subjects. However, we found significant correlations between the classification performance improvements among V1, V3A, and IPS (Supplementary Fig. 6). Thus, although V3A may be predominantly involved in feature-based plasticity and V1 and IPS may be predominantly involved in task-based plasticity, these 2 types of plasticity may cooperate with each other at some level. Future investigation is necessary to clarify the nature of this cooperation.
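An across-subject correlation of this kind can be sketched as follows. The text does not state which correlation coefficient was used, so this sketch assumes a Pearson correlation; the function names and the improvement values are hypothetical and serve only as an illustration, not as the study's data or code.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """Convert r to a t statistic with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical per-subject classification improvements (post minus pre, in
# percentage points) for two ROIs. These are NOT the study's data.
improve_v3a = [4.0, 7.5, 2.0, 9.0, 5.5, 6.0, 1.5, 8.0, 3.0, 7.0, 4.5, 6.5, 5.0]
improve_ips = [3.5, 6.0, 2.5, 8.5, 4.0, 6.5, 2.0, 7.0, 3.5, 5.5, 5.0, 6.0, 4.5]

r = pearson_r(improve_v3a, improve_ips)
t = r_to_t(r, len(improve_v3a))
print(f"r = {r:.3f}, t{len(improve_v3a) - 2} = {t:.3f}")
```

If the changes in two areas were fully independent, r would be expected to lie near zero across subjects; a reliably positive r is what motivates the suggestion that the 2 types of plasticity cooperate at some level.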

Interestingly, no significant classification performance improvement was observed for the untrained direction (Fig. 3B) in either the active-test or the passive-test condition, in V1, MT+, or IPS in which active plasticity may have occurred. This result suggests that feature-based attention to the trained feature (Watanabe et al. 1998; Treue and Martinez Trujillo 1999; Saenz et al. 2002) is involved in task-based plasticity.

Note that the finding that V3A reflects feature-based plasticity in association with the VPL of global motion does not necessarily indicate that V3A is associated with feature-based plasticity in all types of VPL. As discussed above, a feature representation for global motion may occur mainly in V3A. We believe that is why the feature-based plasticity is associated with changes in V3A. Thus, it is likely that feature-based plasticity in VPL of a visual feature occurs in other areas depending on the feature.

Why did previous brain studies not clarify the 2 types of plasticity? The majority of previous studies measured neural activity in only one of the active- and passive-test conditions. Although some studies (Li et al. 2004, 2008; Law and Gold 2008; Adab and Vogels 2011) did employ both active- and passive-test conditions, they did not compare results from these 2 conditions to discern the 2 different types of plasticity in a brain area. As discussed in the Introduction, without such a comparison it is impossible to determine whether the area is involved in feature-based plasticity, task-based plasticity, or both.

One previous fMRI study has reported that VPL of global motion is associated with changes in both V3A and connectivity between V3A and IPS (Chen et al. 2015). This result is in accord with our 2-plasticity model. However, their study did not show functional differences between these 2 areas. As discussed above, it is necessary to compare neural activations between the active- and passive-test conditions to determine whether a brain area is involved in feature-based plasticity or task-based plasticity. The present study compared neural activations between the 2 conditions and revealed, for the first time, the differential functional roles of V3A and IPS in VPL of global motion.

Note that VPL occurs as a result of exposure to a feature that is irrelevant to a given task (task-irrelevant VPL; Watanabe et al. 2001, 2002; Seitz and Watanabe 2003; Xu, He, et al. 2012; Chang et al. 2014) as well as training on a feature that is task-relevant (task-relevant VPL). One previous study has reported that task-relevant VPL (Supplementary Fig. 7, top) is associated with changes in an area beyond the visual cortex, whereas task-irrelevant VPL (Supplementary Fig. 7, bottom) is associated with changes in visual areas (Zhang and Kourtzi 2010). The purpose and findings of this previous study are entirely different from those of our present study. The previous study found that the area associated with VPL depends on the type of training (task performance vs. exposure) and, therefore, the type of VPL (task-relevant VPL vs. task-irrelevant VPL). On the other hand, the present study was concerned only with task-relevant VPL and found that training on a task leads to 2 different types of plasticity, feature-based and task-based plasticity.

It has been unclear whether task-relevant VPL is associated with refinement of a neural representation of a trained feature, improvement of processing related to a trained task, or both. Although some recent studies have suggested that task-relevant VPL is associated with changes in multiple brain areas (Sagi 2011; Chen et al. 2015), the roles of these areas have remained unclear. The results of the present study suggest that task-relevant VPL is the end result of qualitatively different types of neural plasticity that reflect refinement of the visual representation and improvement in task-related processing, which are subserved by different brain areas. These findings support the 2-plasticity model and represent an important step toward a comprehensive understanding of VPL (Shibata et al. 2014; Watanabe and Sasaki 2015).

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This work was conducted under the ImPACT Program of Council for Science, Technology and Innovation, Cabinet Office, Government of Japan. This work was also partially supported by NIH R01 EY015980, R01 EY019466, R01 MH091801; NSF 1539717; JSPS; and "Development of BMI Technologies for Clinical Application" of the Strategic Research Program for Brain Sciences supported by Japan Agency for Medical Research and Development (AMED). Funding to pay the Open Access publication charges for this article was provided by Advanced Telecommunications Research Institute International, Japan.

Notes

We thank Jonathan Dobres for editing an early draft of the paper and Yuka Oshima for technical assistance. Conflict of Interest: None declared.

References

- Adab HZ, Vogels R. 2011. Practicing coarse orientation discrimination improves orientation signals in macaque cortical area V4. Curr Biol. 21:1661–1666.

- Bejjanki VR, Beck JM, Lu ZL, Pouget A. 2011. Perceptual learning as improved probabilistic inference in early sensory areas. Nat Neurosci. 14:642–648.

- Braddick OJ, O'Brien JM, Wattam-Bell J, Atkinson J, Hartley T, Turner R. 2001. Brain areas sensitive to coherent visual motion. Perception. 30:61–72.

- Braddick OJ, O'Brien JM, Wattam-Bell J, Atkinson J, Turner R. 2000. Form and motion coherence activate independent, but not dorsal/ventral segregated, networks in the human brain. Curr Biol. 10:731–734.

- Brainard DH. 1997. The psychophysics toolbox. Spat Vis. 10:433–436.

- Byers A, Serences JT. 2014. Enhanced attentional gain as a mechanism for generalized perceptual learning in human visual cortex. J Neurophysiol. 112:1217–1227.

- Byers A, Serences JT. 2012. Exploring the relationship between perceptual learning and top-down attentional control. Vision Res. 74:30–39.

- Chang LH, Shibata K, Andersen GJ, Sasaki Y, Watanabe T. 2014. Age-related declines of stability in visual perceptual learning. Curr Biol. 24:2926–2929.

- Chen N, Bi T, Zhou T, Li S, Liu Z, Fang F. 2015. Sharpened cortical tuning and enhanced cortico-cortical communication contribute to the long-term neural mechanisms of visual motion perceptual learning. NeuroImage. 115:17–29.

- Crist RE, Li W, Gilbert CD. 2001. Learning to see: experience and attention in primary visual cortex. Nat Neurosci. 4:519–525.

- Culham JC, Kanwisher NG. 2001. Neuroimaging of cognitive functions in human parietal cortex. Curr Opin Neurobiol. 11:157–163.

- Dosher BA, Jeter P, Liu J, Lu ZL. 2013. An integrated reweighting theory of perceptual learning. Proc Natl Acad Sci U S A. 110:13678–13683.

- Dosher BA, Lu ZL. 1998. Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proc Natl Acad Sci U S A. 95:13988–13993.

- Dupont P, Orban GA, Vogels R, Bormans G, Nuyts J, Schiepers C, De Roo M, Mortelmans L. 1993. Different perceptual tasks performed with the same visual stimulus attribute activate different regions of the human brain: a positron emission tomography study. Proc Natl Acad Sci U S A. 90:10927–10931.

- Engel SA, Glover GH, Wandell BA. 1997. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb Cortex. 7:181–192.

- Fize D, Vanduffel W, Nelissen K, Denys K, Chef d'Hotel C, Faugeras O, Orban GA. 2003. The retinotopic organization of primate dorsal V4 and surrounding areas: a functional magnetic resonance imaging study in awake monkeys. J Neurosci. 23:7395–7406.

- Furmanski CS, Schluppeck D, Engel SA. 2004. Learning strengthens the response of primary visual cortex to simple patterns. Curr Biol. 14:573–578.

- Gilbert CD, Li W. 2012. Adult visual cortical plasticity. Neuron. 75:250–264.

- Gilbert CD, Li W. 2013. Top-down influences on visual processing. Nat Rev Neurosci. 14:350–363.

- Gold JI, Watanabe T. 2010. Perceptual learning. Curr Biol. 20:R46–R48.

- Green CS, Bavelier D. 2003. Action video game modifies visual selective attention. Nature. 423:534–537.

- Harris H, Gliksberg M, Sagi D. 2012. Generalized perceptual learning in the absence of sensory adaptation. Curr Biol. 22:1813–1817.

- Hua T, Bao P, Huang Z, Xu J, Zhou Y, Lu ZL. 2010. Perceptual learning improves contrast sensitivity of V1 neurons in cats. Curr Biol. 20:887–894.

- Huk AC, Heeger DJ. 2002. Pattern-motion responses in human visual cortex. Nat Neurosci. 5:72–75.

- Huk AC, Heeger DJ. 2000. Task-related modulation of visual cortex. J Neurophysiol. 83:3525–3536.

- Hung SC, Seitz AR. 2014. Prolonged training at threshold promotes robust retinotopic specificity in perceptual learning. J Neurosci. 34:8423–8431.

- Jehee JF, Brady DK, Tong F. 2011. Attention improves encoding of task-relevant features in the human visual cortex. J Neurosci. 31:8210–8219.

- Jehee JF, Ling S, Swisher JD, van Bergen RS, Tong F. 2012. Perceptual learning selectively refines orientation representations in early visual cortex. J Neurosci. 32:16747–16753.

- Kahnt T, Grueschow M, Speck O, Haynes JD. 2011. Perceptual learning and decision-making in human medial frontal cortex. Neuron. 70:549–559.

- Karni A, Sagi D. 1991. Where practice makes perfect in texture discrimination: evidence for primary visual cortex plasticity. Proc Natl Acad Sci U S A. 88:4966–4970.

- Kim YH, Kang DW, Kim D, Kim HJ, Sasaki Y, Watanabe T. 2015. Real-time strategy video game experience and visual perceptual learning. J Neurosci. 35:10485–10492.

- Koldewyn K, Whitney D, Rivera SM. 2011. Neural correlates of coherent and biological motion perception in autism. Dev Sci. 14:1075–1088.

- Koyama S, Sasaki Y, Andersen GJ, Tootell RB, Matsuura M, Watanabe T. 2005. Separate processing of different global-motion structures in visual cortex is revealed by FMRI. Curr Biol. 15:2027–2032.

- Kuncheva LI, Rodriguez JJ. 2010. Classifier ensembles for fMRI data analysis: an experiment. Magn Reson Imaging. 28:583–593.

- Law CT, Gold JI. 2008. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nat Neurosci. 11:505–513.

- Law CT, Gold JI. 2009. Reinforcement learning can account for associative and perceptual learning on a visual-decision task. Nat Neurosci. 12:655–663.

- Lewis CM, Baldassarre A, Committeri G, Romani GL, Corbetta M. 2009. Learning sculpts the spontaneous activity of the resting human brain. Proc Natl Acad Sci U S A. 106:17558–17563.

- Li W, Piech V, Gilbert CD. 2008. Learning to link visual contours. Neuron. 57:442–451.

- Li W, Piech V, Gilbert CD. 2004. Perceptual learning and top-down influences in primary visual cortex. Nat Neurosci. 7:651–657.

- Maunsell JH, Van Essen DC. 1983. Functional properties of neurons in middle temporal visual area of the macaque monkey. I. Selectivity for stimulus direction, speed, and orientation. J Neurophysiol. 49:1127–1147.

- Maus GW, Fischer J, Whitney D. 2013. Motion-dependent representation of space in area MT+. Neuron. 78:554–562.

- Miyawaki Y, Uchida H, Yamashita O, Sato MA, Morito Y, Tanabe HC, Sadato N, Kamitani Y. 2008. Visual image reconstruction from human brain activity using a combination of multiscale local image decoders. Neuron. 60:915–929.

- Newsome WT, Pare EB. 1988. A selective impairment of motion perception following lesions of the middle temporal visual area (MT). J Neurosci. 8:2201–2211.

- Orban GA, Dupont P, Vogels R, De Bruyn B, Bormans G, Mortelmans L. 1996. Task dependency of visual processing in the human visual system. Behav Brain Res. 76:215–223.

- Petrov AA, Dosher BA, Lu ZL. 2005. The dynamics of perceptual learning: an incremental reweighting model. Psychol Rev. 112:715–743.

- Poggio T, Fahle M, Edelman S. 1992. Fast perceptual learning in visual hyperacuity. Science. 256:1018–1021.

- Ryali S, Supekar K, Abrams DA, Menon V. 2010. Sparse logistic regression for whole-brain classification of fMRI data. NeuroImage. 51:752–764.

- Saenz M, Buracas GT, Boynton GM. 2002. Global effects of feature-based attention in human visual cortex. Nat Neurosci. 5:631–632.

- Sagi D. 2011. Perceptual learning in vision research. Vision Res. 51:1552–1566.

- Saproo S, Serences JT. 2014. Attention improves transfer of motion information between V1 and MT. J Neurosci. 34:3586–3596.

- Sasaki Y, Nanez JE, Watanabe T. 2010. Advances in visual perceptual learning and plasticity. Nat Rev Neurosci. 11:53–60.

- Schoups A, Vogels R, Qian N, Orban G. 2001. Practising orientation identification improves orientation coding in V1 neurons. Nature. 412:549–553.

- Schwartz S, Maquet P, Frith C. 2002. Neural correlates of perceptual learning: a functional MRI study of visual texture discrimination. Proc Natl Acad Sci U S A. 99:17137–17142.

- Seitz AR, Watanabe T. 2003. Psychophysics: Is subliminal learning really passive? Nature. 422:36.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. 1995. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 268:889–893.

- Shadlen MN, Newsome WT. 2001. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. J Neurophysiol. 86:1916–1936.

- Shibata K, Chang L, Kim D, Náñez JE, Kamitani Y, Watanabe T, Sasaki Y. 2012. Decoding reveals plasticity in V3A as a result of motion perceptual learning. PLoS One. 7:e44003.

- Shibata K, Sagi D, Watanabe T. 2014. Two-stage model in perceptual learning: toward a unified theory. Ann N Y Acad Sci. 1316:18–28.

- Shibata K, Watanabe T, Sasaki Y, Kawato M. 2011. Perceptual learning incepted by decoded fMRI neurofeedback without stimulus presentation. Science. 334:1413–1415.

- Shikata E, McNamara A, Sprenger A, Hamzei F, Glauche V, Buchel C, Binkofski F. 2008. Localization of human intraparietal areas AIP, CIP, and LIP using surface orientation and saccadic eye movement tasks. Hum Brain Mapp. 29:411–421.

- Shiu LP, Pashler H. 1992. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Percept Psychophys. 52:582–588.

- Talluri BC, Hung SC, Seitz AR, Series P. 2015. Confidence-based integrated reweighting model of task-difficulty explains location-based specificity in perceptual learning. J Vis. 15:17.

- Thompson B, Tjan BS, Liu Z. 2013. Perceptual learning of motion direction discrimination with suppressed and unsuppressed MT in humans: an fMRI study. PLoS One. 8:e53458.

- Tong F, Pratte MS. 2012. Decoding patterns of human brain activity. Annu Rev Psychol. 63:483–509.

- Tootell RB, Hadjikhani N. 2001. Where is “dorsal V4” in human visual cortex? Retinotopic, topographic and functional evidence. Cereb Cortex. 11:298–311.

- Tootell RB, Mendola JD, Hadjikhani NK, Ledden PJ, Liu AK, Reppas JB, Sereno MI, Dale AM. 1997. Functional analysis of V3A and related areas in human visual cortex. J Neurosci. 17:7060–7078.

- Tootell RB, Reppas JB, Dale AM, Look RB, Sereno MI, Malach R, Brady TJ, Rosen BR. 1995. Visual motion aftereffect in human cortical area MT revealed by functional magnetic resonance imaging. Nature. 375:139–141.

- Treue S, Martinez Trujillo JC. 1999. Feature-based attention influences motion processing gain in macaque visual cortex. Nature. 399:575–579.

- Watanabe T, Harner AM, Miyauchi S, Sasaki Y, Nielsen M, Palomo D, Mukai I. 1998. Task-dependent influences of attention on the activation of human primary visual cortex. Proc Natl Acad Sci U S A. 95:11489–11492.

- Watanabe T, Nanez JE, Sasaki Y. 2001. Perceptual learning without perception. Nature. 413:844–848.

- Watanabe T, Nanez JE Sr., Koyama S, Mukai I, Liederman J, Sasaki Y. 2002. Greater plasticity in lower-level than higher-level visual motion processing in a passive perceptual learning task. Nat Neurosci. 5:1003–1009.

- Watanabe T, Sasaki Y. 2015. Perceptual learning: toward a comprehensive theory. Annu Rev Psychol. 66:197–221.

- Wickens TD. 2001. Elementary signal detection theory. Oxford: Oxford University Press.

- Xiao LQ, Zhang JY, Wang R, Klein SA, Levi DM, Yu C. 2008. Complete transfer of perceptual learning across retinal locations enabled by double training. Curr Biol. 18:1922–1926.

- Xu JP, He ZJ, Ooi TL. 2010. Effectively reducing sensory eye dominance with a push-pull perceptual learning protocol. Curr Biol. 20:1864–1868.

- Xu JP, He ZJ, Ooi TL. 2012. Perceptual learning to reduce sensory eye dominance beyond the focus of top-down visual attention. Vision Res. 61:39–47.

- Xu S, Jiang W, Poo MM, Dan Y. 2012. Activity recall in a visual cortical ensemble. Nat Neurosci. 15:449–455, S441–442.

- Yamashita O, Sato MA, Yoshioka T, Tong F, Kamitani Y. 2008. Sparse estimation automatically selects voxels relevant for the decoding of fMRI activity patterns. NeuroImage. 42:1414–1429.

- Yang T, Maunsell JH. 2004. The effect of perceptual learning on neuronal responses in monkey visual area V4. J Neurosci. 24:1617–1626.

- Yotsumoto Y, Sasaki Y, Chan P, Vasios CE, Bonmassar G, Ito N, Nanez JE Sr., Shimojo S, Watanabe T. 2009. Location-specific cortical activation changes during sleep after training for perceptual learning. Curr Biol. 19:1278–1282.

- Yotsumoto Y, Watanabe T, Sasaki Y. 2008. Different dynamics of performance and brain activation in the time course of perceptual learning. Neuron. 57:827–833.

- Yu C, Klein SA, Levi DM. 2004. Perceptual learning in contrast discrimination and the (minimal) role of context. J Vis. 4:169–182.

- Zhang J, Kourtzi Z. 2010. Learning-dependent plasticity with and without training in the human brain. Proc Natl Acad Sci U S A. 107:13503–13508.

- Zhang JY, Zhang GL, Xiao LQ, Klein SA, Levi DM, Yu C. 2010. Rule-based learning explains visual perceptual learning and its specificity and transfer. J Neurosci. 30:12323–12328.
