Abstract

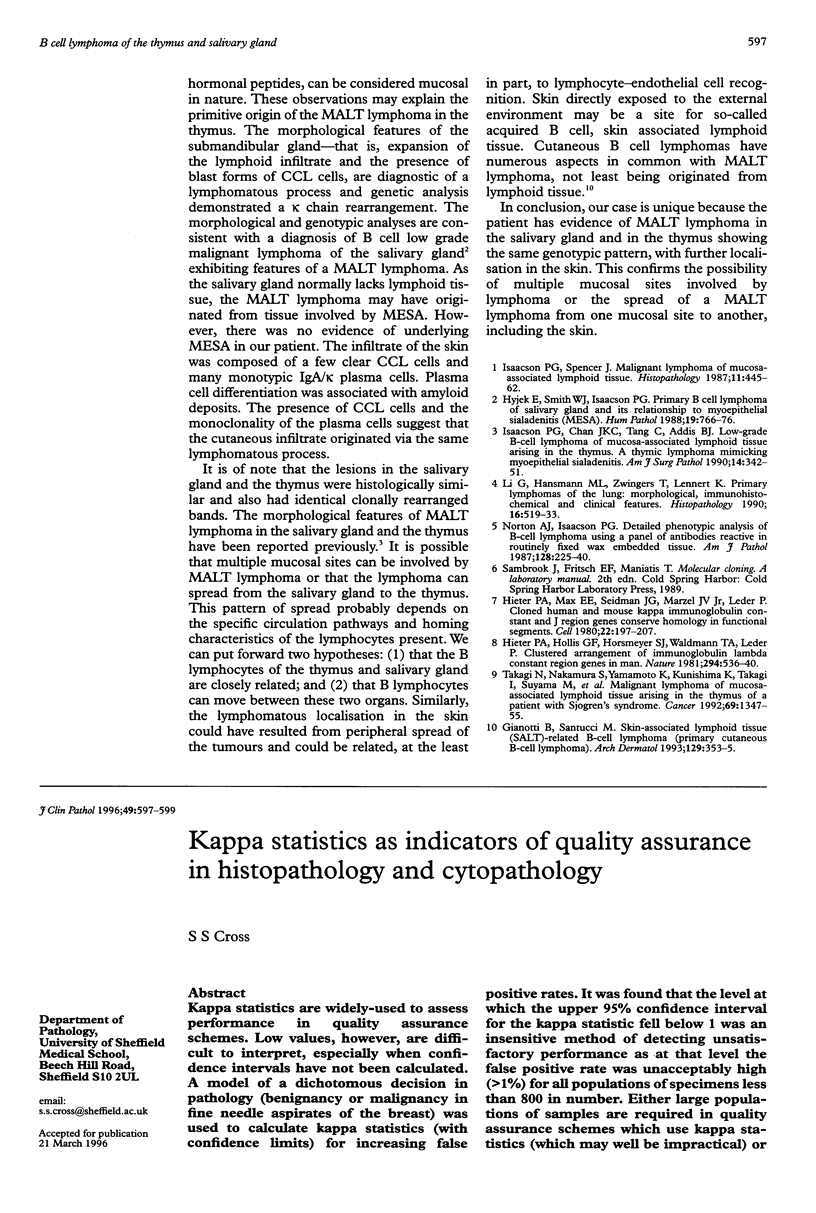

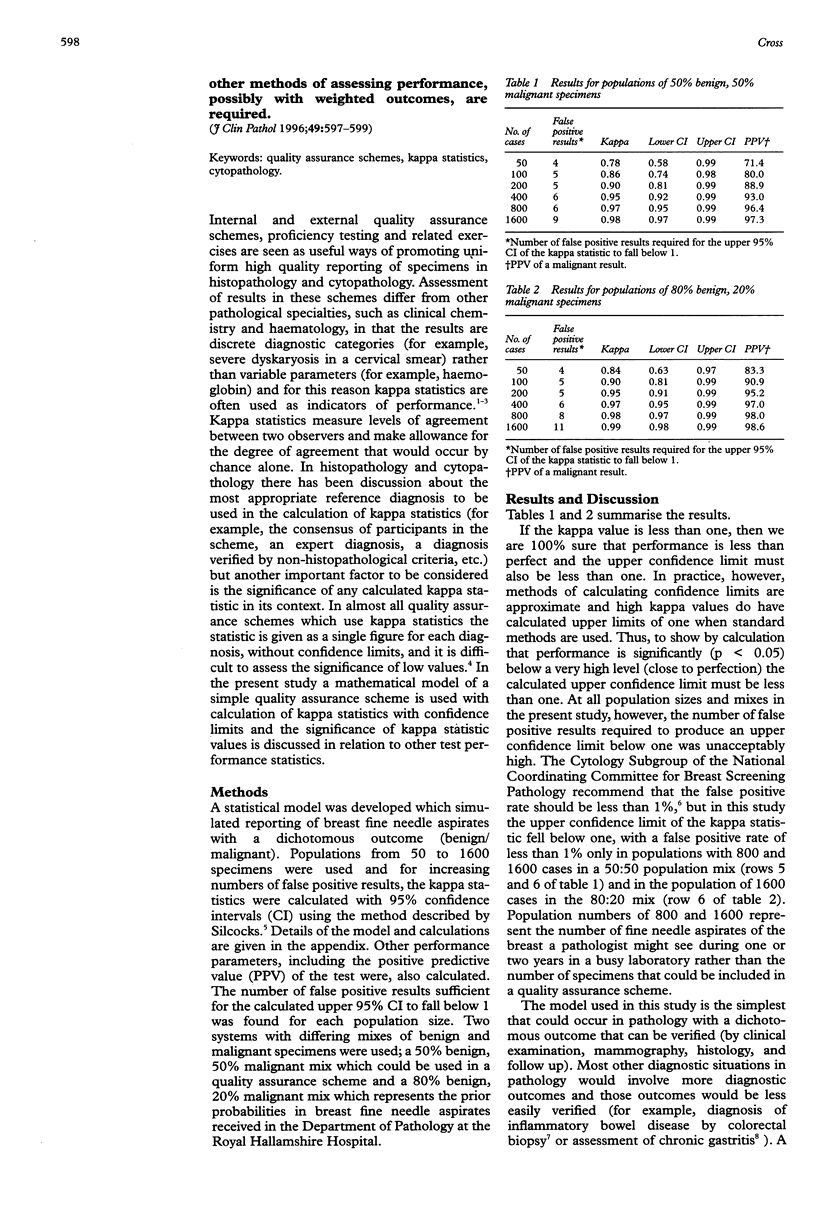

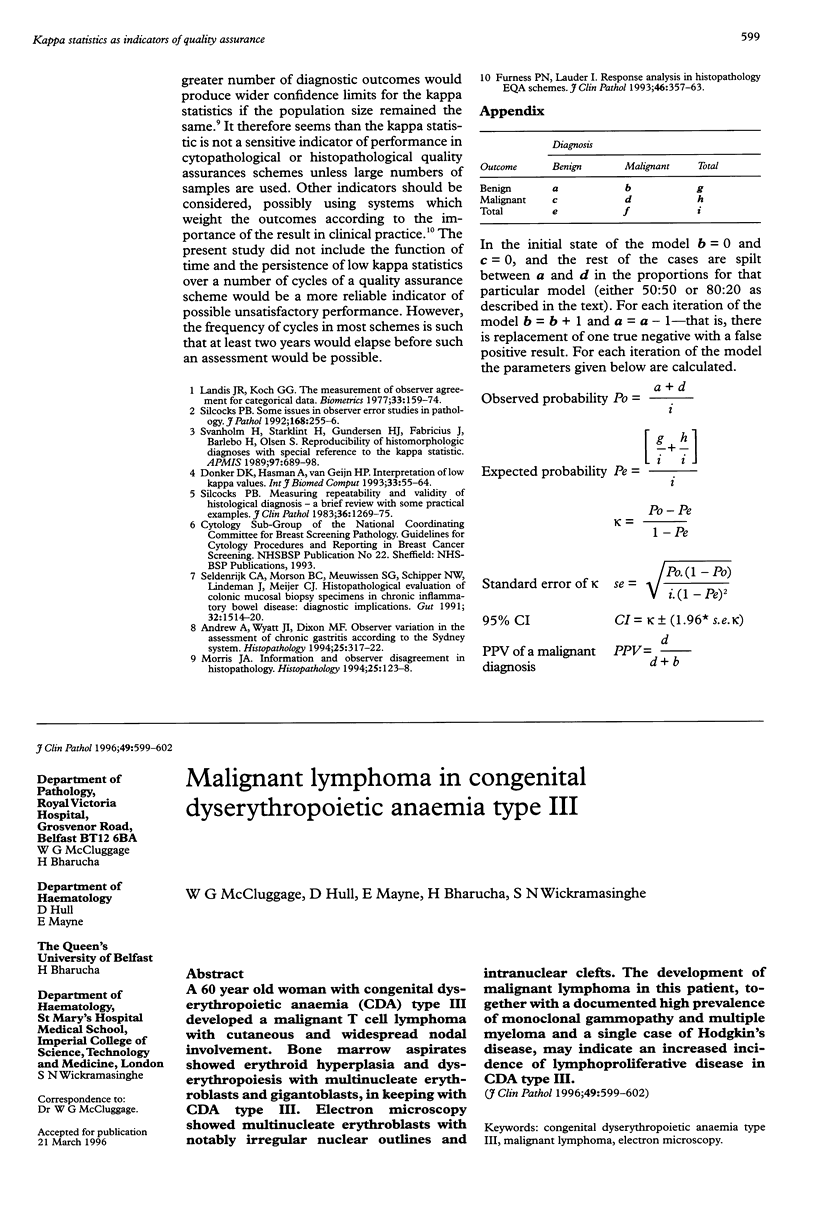

Kappa statistics are widely used to assess performance in quality assurance schemes. Low values, however, are difficult to interpret, especially when confidence intervals have not been calculated. A model of a dichotomous decision in pathology (benign or malignant in fine needle aspirates of the breast) was used to calculate kappa statistics, with confidence limits, for increasing false positive rates. The level at which the upper 95% confidence limit for the kappa statistic fell below 1 proved to be an insensitive criterion for detecting unsatisfactory performance: at that level the false positive rate was unacceptably high (> 1%) for all populations of fewer than 800 specimens. Quality assurance schemes that use kappa statistics therefore require large populations of samples, which may well be impractical; otherwise, other methods of assessing performance, possibly with weighted outcomes, are required.
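The kind of calculation described above can be illustrated with a minimal sketch. It is not the authors' model: the abstract does not state how the confidence limits were derived or what proportion of specimens was malignant. The sketch assumes Cohen's kappa for a 2×2 table of observer against reference diagnosis, the simple large-sample standard error sqrt(po(1 − po) / (n(1 − pe)²)), an even split of benign and malignant specimens, and no false negatives. The function names `kappa_with_limits` and `first_detectable_false_positives` are hypothetical.

```python
import math


def kappa_with_limits(tp, fp, fn, tn, z=1.96):
    """Cohen's kappa for a 2x2 table with approximate 95% confidence limits.

    Assumption: uses the simple large-sample standard error
    sqrt(po * (1 - po) / (n * (1 - pe) ** 2)), which may differ from the
    method used in the paper. The limits are not truncated at 1.
    """
    n = tp + fp + fn + tn
    po = (tp + tn) / n  # observed agreement
    # Agreement expected by chance, from the row and column totals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    if pe == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    kappa = (po - pe) / (1 - pe)
    se = math.sqrt(po * (1 - po) / (n * (1 - pe) ** 2))
    return kappa, kappa - z * se, kappa + z * se


def first_detectable_false_positives(n_specimens, malignant_fraction=0.5):
    """Smallest number of false positives at which the upper limit falls below 1.

    Assumptions: the observer makes no false negative calls, and
    malignant_fraction (illustrative, not taken from the paper) gives the
    share of truly malignant specimens.
    """
    malignant = round(n_specimens * malignant_fraction)
    benign = n_specimens - malignant
    for fp in range(1, benign + 1):
        _, _, upper = kappa_with_limits(tp=malignant, fp=fp, fn=0, tn=benign - fp)
        if upper < 1:
            return fp
    return None  # never detected within this population


if __name__ == "__main__":
    for n in (100, 200, 400, 800):
        fp = first_detectable_false_positives(n)
        benign = n - round(n * 0.5)
        print(f"n={n}: first detected at {fp} false positives "
              f"({100 * fp / benign:.1f}% of benign specimens)")
```

Under these assumptions, the script reports the false positive rate at which the upper limit first falls below 1 for each population size. The specific figures depend on the assumed prevalence and standard error formula, so they should not be read as reproducing the paper's results.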
