Abstract

We investigated the neural correlates supporting three kinds of memory judgments after very short delays using naturalistic material. In two functional magnetic resonance imaging (fMRI) experiments, subjects watched short movie clips and, after a short retention interval (1.5–2.5 s), made mnemonic judgments about specific aspects of the clips. In Experiment 1, subjects were presented with two scenes and required to choose either the scene that happened earlier in the clip (“scene‐chronology”), the scene with the correct spatial arrangement (“scene‐layout”), or the scene that had been shown (“scene‐recognition”). To segregate activity specific to seen versus unseen stimuli, only one probe image (either target or foil) was presented in Experiment 2. Across the two experiments, we replicated three patterns of activity underlying the three specific forms of memory judgment: the precuneus was activated during temporal‐order retrieval, the superior parietal cortex was activated bilaterally for spatial‐configuration judgments, and the medial frontal cortex was activated during scene recognition. Conjunction analyses with a previous study that used analogous retrieval tasks but a much longer delay (>1 day) demonstrated that this dissociation pattern is independent of retention delay. We conclude that analogous brain regions mediate task‐specific retrieval across vastly different delays, consistent with the proposal of scale‐invariance in episodic memory retrieval. Hum Brain Mapp 36:2495–2513, 2015. © 2015 The Authors. Human Brain Mapping published by Wiley Periodicals, Inc.

Keywords: cinematic material, scale‐invariance, short‐/long‐delays, “what‐where‐when” tripartite pattern

INTRODUCTION

In memory research, there is a long‐held distinction between the mechanisms involved in short‐term memory (STM) and long‐term memory [LTM; see Shallice and Warrington, 1970, for classic neuropsychological data in patients]. Baddeley and Hitch [1974] posited that there are multiple short‐term buffers that act as temporary memory stores, each separate from the stores for long‐term retention. More recently, a growing body of evidence has challenged this dissociation [Jonides et al., 2008; Nee et al., 2008; Ranganath and Blumenfeld, 2005]. For example, it has been proposed that different local circuits within the same cortical area mediate STM and LTM processes [Gaffan, 2002; Howard et al., 2005]. On these views, the distinction between STM and LTM can be explained by differences in intraregional neuronal dynamics within the same memory areas [Fuster, 2006, 2009], or by differences in the level of activity within these common memory areas/networks [Jonides et al., 2005; Postle, 2006].

We sought to contribute to this debate by characterizing task‐specific functional dissociations during the immediate retrieval of “what‐where‐when” information using complex and naturalistic material [Holland and Smulders, 2011]. Previous neuroimaging studies of LTM have investigated memory retrieval based on this classification [Burgess et al., 2001; Ekstrom and Bookheimer, 2007; Ekstrom et al., 2011; Fujii et al., 2004; Hayes et al., 2004; Nyberg et al., 1996]. However, the brain regions involved in attribute‐specific retrieval after long retention delays [Furman et al., 2012; Magen et al., 2009] do not appear to correspond to those involved after short delays [Mohr et al., 2006; Munk et al., 2002]. We suggest that this lack of correspondence may arise because the paradigms used to tax STM and LTM retrieval have been designed differently to accommodate the vast difference in delays.

In STM studies, the use of simple stimuli has enabled researchers to control many stimulus parameters accurately (e.g., the number of objects and stimulus position), but has led to experimental conditions that differ substantially from everyday situations, which require the processing of complex and dynamic sensory input. While memory for simple visual displays, words, and spatial positions is thought to be supported by capacity‐limited buffers that can hold only a few items [Baddeley and Hitch, 1974], memory for other types of material that more closely reflect the dynamic nature of real‐life situations [e.g., unfamiliar faces, and possibly scenes; see Shiffrin, 1973] may tap into the same high‐capacity system as long‐term episodic memory [Shallice and Cooper, 2011].

The main aim of this study was to investigate task‐specific functional dissociations during immediate memory retrieval using naturalistic stimuli, and to compare these with the results of a previous LTM study that used analogous material and tasks. We devised a short‐delay version of the paradigm that we previously used to investigate different types of judgment during retrieval from LTM [Kwok et al., 2012]. In the original LTM paradigm, participants watched a 42‐min television (TV) film and, 24 h later, made discriminative choices between scenes extracted from the film during fMRI. Subjects were presented with two scenes and required to choose either the scene that happened earlier in the film, the scene with the correct spatial arrangement, or the scene that had been shown. This paradigm revealed a tripartite dissociation within the retrieval network [Hayama et al., 2012], comprising the precuneus and the angular gyrus for temporal‐order judgments, dorsal frontoparietal cortices for spatial‐configuration judgments, and anteromedial temporal regions for simple recognition of objects/scenes.

Here, we used modified versions of the original paradigm and tested for task‐specific dissociations in two fMRI experiments with a delay of just 1.5–2.5 s between the end of the encoding phase and the retrieval phase. In both experiments, we asked the participants to watch short TV commercial clips (7.72–11.4 s) and, after a brief delay (1.5–2.5 s), to make memory judgments about the “temporal‐chronology,” “spatial‐layout,” or “scene‐recognition” of the encoded stimuli. The stimuli for encoding comprised video clips of naturalistic events, which are unlikely to be stored in capacity‐limited memory buffers and more closely reflect the dynamic nature of real‐life situations. Because of the stimulus complexity, memory retrieval could be supported by a combination of strategies, each potentially used to a different extent in the three tasks. Some differences may therefore be expected between the current studies and previous STM studies that used simpler, stereotyped stimuli (such as geometrical shapes or lists of words), which permit more rigorous control over processes specific to the retrieval of temporal, spatial, and object details [e.g., Harrison et al., 2010; see also the Discussion section]. Nonetheless, for consistency with our previous LTM study [Kwok et al., 2012], in the sections below we adopt the original labels of “Temporal,” “Spatial,” and “Object” tasks.

In the first experiment (Exp 1), each retrieval trial included two scenes (still frames) presented side‐by‐side, and the participants were required to choose either the scene that happened earlier in the clip (“scene‐chronology” judgment; Temporal task), the scene with the correct spatial arrangement (“scene‐layout” judgment; Spatial task), or the scene that had been shown during encoding (“scene‐recognition” judgment; Object task). Thus, the retrieval cues were identical to those used in the previous LTM experiment, which enabled us to assess the imaging data using exactly the same contrasts/subtractions. In the second experiment (Exp 2), we again assessed task‐specific dissociations during immediate retrieval, but now presented a single probe at retrieval (i.e., either a “seen” or an “unseen” image for the scene‐layout and scene‐recognition tasks; see Methods). This allowed us to compare the three retrieval tasks using only trials with seen/old probe images, avoiding any possible confound related to the presentation of unseen foil images. Moreover, the substantial changes in task structure between Experiments 1 and 2 enabled us to confirm any task‐specific dissociation under different sets of task constraints.

On the assumption that memory for complex, naturalistic events depends negligibly on capacity‐limited memory buffers, we hypothesized that task‐specific functional dissociations during retrieval after short delays would be analogous to those observed during retrieval after long delays. We tested this by identifying any task‐specific brain activation during retrieval after short delays (“task‐specific” analyses, Exp 1 and 2), and by comparing these effects with data from the previous LTM experiment [“conjunction analyses” between the current Exp 1 and the LTM data from Kwok et al., 2012]. Finding task‐specific dissociations equivalent to those previously reported for LTM would support the view that memory for naturalistic material can accommodate a vast range of retention delays [see also Maylor et al., 2001, for scale‐invariance in free recall]. In addition, we verified that the task‐specific effects at retrieval were not merely due to the new encoding of unencountered material (“seen‐only” analyses, Exp 2), and tested whether task specificity was determined by successful retrieval or instead reflected the attempt to recover relevant mnemonic details [Rugg and Wilding, 2000]. Confirmation of our hypotheses would indicate that the processes governing memory for naturalistic events are guided by similar task‐specific constraints across different retention delays, and would provide evidence for the notion of scale‐invariance in memory functions [Brown et al., 2007; Howard and Kahana, 2002].

MATERIALS AND METHODS

Overview

The trial structures of Exp 1 and Exp 2 were analogous: the subject watched a short video clip and then performed one of three retrieval tasks about some details of the clip. The retrieval tasks aimed to tap into different processes associated with the judgment of scene‐chronology (Temporal task), scene‐layout (Spatial task), or scene‐recognition (Object task). The retrieval task was cued only at the onset of the probe images. This cueing procedure ensured that the subjects encoded the movie clips without anticipating which specific stimulus detail would be probed at retrieval (see Fig. 1A).

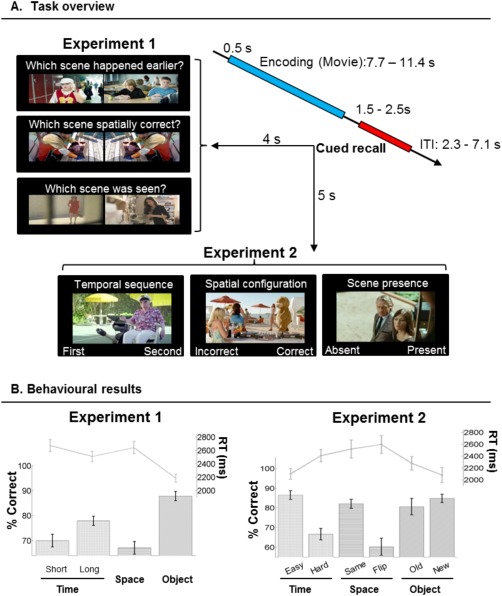

Figure 1.

Task schema and behavioral performance for Experiments 1 and 2. (A) Trial timeline, probe images, and cues at retrieval. Each trial had an identical structure, consisting of the presentation of a short movie clip followed by a cued retrieval test. The retrieval test comprised either a pair of probe images (Exp 1) or a single probe (either target or foil; Exp 2). (B) Accuracy shown as bars (% correct; left ordinate axes) and response times as lines (ms; right ordinate axes) for Experiment 1 (left panel) and Experiment 2 (right panel). Error bars are standard errors of the mean.

In Exp 1, the retrieval phase comprised the presentation of two images side‐by‐side. For the Temporal task, both scenes were extracted from the clip and the subject was asked to choose the scene that had happened at an earlier time point in the clip (target). For the Spatial and Object tasks, the judgment at retrieval was between one scene that had been presented (target) and another one that had not been presented (foil). We investigated task‐specific dissociations by directly comparing each of the tasks with the average of the other two tasks. Further, we performed conjunction analyses between the current dataset of Exp 1 and a previous LTM experiment that made use of the same retrieval procedure, but with a longer movie (42‐min) and a retention delay of 24 h [Kwok et al., 2012]. This enabled us to formally test whether there was any functional overlap between the patterns of task‐specific dissociation following short and long delays (1.5–2.5 s vs. 24 h).

Exp 1 was designed to match the previous LTM study [Kwok et al., 2012] and thus included two images (target and foil) in each retrieval trial. However, with this procedure the retrieval display of the scene‐recognition (Object) task included an unseen image, and the display of the scene‐layout (Spatial) task included an unseen image configuration. The presentation of these unseen foils may have contaminated any task‐specific effects associated with the Spatial and Object tasks (e.g., through incidental encoding of new images or new image configurations). This was addressed in Exp 2, which entailed the presentation of a single image at retrieval and required the subject to give a yes/no response. In the Spatial and Object tasks, the probe was either a seen/old image extracted from the video or an unseen image. The Temporal task always presented a probe image extracted from the clip, but the task now involved judging whether the image belonged to the first or the second half of the clip. We again assessed task‐specific dissociations during immediate retrieval, but now compared the three tasks using only trials with seen/old probe images, to avoid any possible confound related to the unseen images.

Subjects

Seventeen participants took part in Exp 1 (mean age: 25.8 years, range 21–33; 8 females), and a different group of 17 participants took part in Exp 2 (mean age: 25.4 years, range 20–35; 7 females). All participants gave written informed consent, had normal or corrected‐to‐normal vision, had no known history of psychiatric/neurological problems, and had no MRI contraindications. The study was approved by the Independent Ethics Committee of the Fondazione Santa Lucia (Scientific Institute for Research, Hospitalization and Health Care), in accordance with the Declaration of Helsinki.

Stimuli

Movie clips

We purchased a collection of non‐Italian TV commercial clips from an advertising archive (http://www.coloribus.com/) and edited them into 160 short clips using video editing software (Final Cut Pro, Apple Inc.). Each clip was purposely edited to depict a coherent storyline with multiple scene changes. The mean clip length was 9.59 s (range 7.72–11.40 s). We preserved the background sound and used only segments without dialogue. The whole set of 160 clips was used in Exp 1, with each clip assigned to one of the three retrieval tasks: 96 Temporal, 32 Spatial, and 32 Object clips. For Exp 2, we selected a subset of 144 clips, and each clip was used for all three tasks, counterbalanced across subjects.
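The counterbalancing scheme for the 144 Exp 2 clips is not specified. A minimal sketch, assuming a hypothetical Latin‐square rotation in which the clip list is split into three sets of 48 and the set‐to‐task mapping shifts by one position for each successive subject:

```python
TASKS = ("Temporal", "Spatial", "Object")


def assign_tasks(n_clips: int, subject_index: int) -> dict[int, str]:
    """Map each clip index to a retrieval task for one subject.

    ASSUMPTION: hypothetical Latin-square rotation; the study's actual
    counterbalancing scheme is not described in the text.
    """
    if n_clips % len(TASKS) != 0:
        raise ValueError("n_clips must be divisible by the number of tasks")
    set_size = n_clips // len(TASKS)
    assignment = {}
    for clip in range(n_clips):
        clip_set = clip // set_size  # which third of the clip list this clip falls in
        # Shift the set-to-task mapping by one position for each successive subject.
        assignment[clip] = TASKS[(clip_set + subject_index) % len(TASKS)]
    return assignment


# Example: the task plan for the first subject of Exp 2.
plan = assign_tasks(144, subject_index=0)
```

With this rotation, any three consecutive subjects see every clip once under each task, and each subject receives 48 clips per task, matching the 48 trials per task reported for Exp 2.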

Clip analyses

We performed a frame‐by‐frame analysis to mark the scene changes in each clip. The scene changes included both between‐scene cuts (no scene continuity, i.e., an edit cut from one scene to another) and within‐scene cuts [scene continuity, but with a change of camera perspective; Smith and Henderson, 2008]. This frame‐by‐frame analysis allowed us to identify the boundary frames that divided each clip into segments. The number of segments per clip ranged from 4 to 10. The first and last segments were always excluded from the retrieval tests to mitigate end‐of‐list distinctiveness effects [Cabeza et al., 1997; Konishi et al., 2002]. For Exp 2 specifically, we also divided each clip into 20 segments of equal duration. The memory probe images were extracted from 12 of these segments: 3–5 (1st quartile), 7–9 (2nd quartile), 12–14 (3rd quartile), and 16–18 (4th quartile) (cf. Temporal task).
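The Exp 2 probe segments can be expressed as time windows within a clip. The sketch below (the names are illustrative, not from the study) converts a clip duration into the start/end times of the 12 eligible segments. Note that the listed segments are symmetric about the clip midpoint (segment s pairs with segment 21 - s), so first‐half and second‐half probes are sampled from mirror‐image positions.

```python
N_SEGMENTS = 20  # equal-duration segments per clip (Exp 2)
QUARTER = N_SEGMENTS // 4  # 5 segments per quartile

# 1-based segment numbers used for probes, grouped by quartile (as stated in the text).
PROBE_SEGMENTS = {1: (3, 4, 5), 2: (7, 8, 9), 3: (12, 13, 14), 4: (16, 17, 18)}


def probe_windows(clip_duration_s: float) -> dict[int, list[tuple[float, float]]]:
    """Return (start_s, end_s) of each eligible segment, grouped by quartile."""
    seg_len = clip_duration_s / N_SEGMENTS
    windows = {}
    for quartile, segments in PROBE_SEGMENTS.items():
        # Sanity check: every listed segment lies inside its own quartile.
        if not all((quartile - 1) * QUARTER < s <= quartile * QUARTER for s in segments):
            raise ValueError(f"segment outside quartile {quartile}")
        windows[quartile] = [((s - 1) * seg_len, s * seg_len) for s in segments]
    return windows


# Example: a 10-s clip has 0.5-s segments; 1st-quartile probes come from 1.0-2.5 s.
example = probe_windows(10.0)
```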

Probe images extraction

Exp 1

After excluding the first and last segments, for each Temporal clip we pseudorandomly selected two images from two different segments. This yielded a range of temporal distances between the two probe images across all Temporal trials, varying between 0.60 and 4.96 s. The Spatial trials were generated by pairing a target image with its own mirror image (obtained by laterally flipping the target image). The Object trials were generated by pairing a target image with an unseen image that contained the same object/character, taken from an edited‐out part of the original clip.
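Creating the Spatial foil is a simple image operation. A sketch in Python with NumPy, assuming frames are stored as height × width (× channel) arrays; `make_spatial_pair` and `lateral_asymmetry` are hypothetical helpers, not part of the original stimulus pipeline:

```python
import numpy as np


def make_spatial_pair(target: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return (target, foil), where the foil is the left-right mirror image of the target."""
    # np.fliplr reverses the column (x) axis; for an H x W (x C) frame this is a lateral flip.
    foil = np.fliplr(target).copy()
    return target, foil


def lateral_asymmetry(frame: np.ndarray) -> float:
    """Mean absolute difference between a frame and its mirror image (0 if perfectly symmetric)."""
    f = frame.astype(float)
    return float(np.mean(np.abs(f - np.fliplr(f))))


# Example with a tiny synthetic 2 x 3 RGB "frame".
frame = np.arange(18).reshape(2, 3, 3)
target, foil = make_spatial_pair(frame)
```

A frame that is nearly left‐right symmetric would yield a foil almost identical to its target; a check such as `lateral_asymmetry` is one possible way to flag such frames, although the text does not say whether or how this was done.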

Exp 2

Irrespective of task, we selected one probe image from each clip, which served as the target image. This image was extracted with equal likelihood from one of the four quartiles (see Clip analyses, above), so that half of the targets came from the first half and the other half from the second half of the clip. For the Spatial task, the foil images were mirror images of the targets, whereas for the Object task the foils were new images extracted from an edited‐out part of the original clip.

Trial structure

The trial structure was identical in Exp 1 and 2: each trial began with a green fixation cross for 0.5 s, followed by a clip lasting 7.72–11.4 s and then a blank screen for 1.5–2.5 s. This was followed by a retrieval test (Fig. 1A). The two experiments differed in how memory was probed during retrieval. The retrieval test of Exp 1 contained a pair of probe images and a written cue above the images, both presented for 4 s, whereas that of Exp 2 contained only one probe image and a written cue, both presented for 5 s. In both experiments, the written cue specified which task the subject had to perform on that trial. Trials were separated by a white fixation cross, with jittered intertrial intervals (ITIs) sampled from a truncated logarithmic distribution (trial durations ranged between 20.7 and 22.8 s). We presented the stimuli using Cogent Toolbox (http://www.vislab.ucl.ac.uk/cogent.php) implemented in MATLAB 7.4 (The MathWorks, Natick, MA). During fMRI scanning, all stimuli were back‐projected onto a screen at the back of the MR bore and viewed through a mirror system (20 × 15° visual angle). Before the experiments began, participants were given detailed written instructions and the task requirements were explained to them.
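The within‐trial timing can be reconstructed from the durations given above. The sketch assumes that the ITI simply fills the gap between probe offset and the end of a trial whose total duration lies in the reported 20.7–22.8 s range. Because the parameters of the truncated logarithmic distribution are not reported here, `sample_trial` draws the delay and the trial duration uniformly as a clearly labeled placeholder, not as the procedure actually used; all names are hypothetical.

```python
import random
from dataclasses import dataclass

FIXATION_S = 0.5                        # green fixation cross
PROBE_DURATION_S = {1: 4.0, 2: 5.0}     # probe display time in Exp 1 and Exp 2
TRIAL_RANGE_S = (20.7, 22.8)            # reported range of total trial durations


@dataclass
class TrialTimeline:
    clip_onset: float
    probe_onset: float
    probe_offset: float
    trial_end: float

    @property
    def iti(self) -> float:
        """White-fixation interval between probe offset and the next trial."""
        return self.trial_end - self.probe_offset


def build_trial(clip_s: float, delay_s: float, experiment: int, trial_duration_s: float) -> TrialTimeline:
    clip_onset = FIXATION_S
    probe_onset = clip_onset + clip_s + delay_s          # clip, then blank retention delay
    probe_offset = probe_onset + PROBE_DURATION_S[experiment]
    if trial_duration_s < probe_offset:
        raise ValueError("trial duration shorter than the fixed trial events")
    return TrialTimeline(clip_onset, probe_onset, probe_offset, trial_duration_s)


def sample_trial(clip_s: float, experiment: int, rng: random.Random) -> TrialTimeline:
    delay = rng.uniform(1.5, 2.5)               # ASSUMPTION: uniform placeholder for the delay
    duration = rng.uniform(*TRIAL_RANGE_S)      # ASSUMPTION: placeholder for the truncated log distribution
    return build_trial(clip_s, delay, experiment, duration)
```

Even the longest clip with the longest delay (Exp 2: 0.5 + 11.4 + 2.5 + 5 = 19.4 s) ends before 20.7 s, so every combination leaves a positive ITI.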

Retrieval Tasks

Experiment 1

The retrieval test included three main experimental conditions: Temporal task (96 trials), Spatial task (32 trials), and Object task (32 trials). The reason for the larger number of Temporal trials was that this task comprised two different trial types (Tshort and Tlong) that were classified—and modeled separately in the main fMRI analysis—according to the temporal distance between the two probe images (see also Supporting Information Table 1). This distinction was motivated by previous findings showing that the temporal separation of the probes at encoding can affect retrieval performance and brain activity during temporal order judgments with naturalistic material [Kwok et al., 2012; St. Jacques et al., 2008].

For all three tasks, each retrieval trial comprised a pair of probe images arranged side by side (left/right): one image was the target, the other the foil. The left/right position of the target and foil images was counterbalanced within conditions. Subjects indicated the left or right image by pressing one of two keys on a response box. Scene‐chronology judgment (Temporal task, T): Subjects were instructed to remember the order of the events in the clip and to choose the image that had occurred at the earlier time point. Scene‐layout judgment (Spatial task, S): Subjects were instructed to focus on the spatial layout and choose the probe image with the same spatial arrangement as depicted in the clip. Scene‐recognition judgment (Object task, O): Subjects were instructed to focus on the content of the probe images and to identify the image they had seen in the clip.

It should be noted that the retrieval of complex stimuli, as those used here, can be achieved via many different strategies. For example, to solve the Spatial task participants may attend to and store the location of a specific person in the scene, the facing direction of a car and so on, rather than processing the overall spatial details within the scene. For this reason, we emphasize that the “labels” used here (Temporal/Spatial/Object) do not imply any exclusive strategy for solving the tasks, but rather are meant to refer to the specificity of the type of judgment implicated during retrieval.

Experiment 2

The aim of Exp 2 was to test for task‐specific dissociations comparing trials that included only “seen” images (i.e., images extracted from the video). The retrieval test included the three main conditions (Temporal task, 48 trials; Spatial task, 48 trials; and Object task, 48 trials), but each trial now comprised a single probe image. Scene‐chronology (Temporal): Subjects were required to recall the temporal location of the probe image relative to the midpoint of the clip, choosing between the “first half” and the “second half” of the clip. Subjects pressed the left button if the probe image occurred in the first half and the right button if it occurred in the second half. Scene‐layout (Spatial): Subjects were instructed to focus on the spatial layout and to judge whether the probe depicted the scene with the same spatial arrangement as in the clip or was a mirror image. An identical spatial configuration required a press of the “correct” button, whereas a mirror image required a press of the “incorrect” button. The left/right mapping of the “correct/incorrect” buttons was counterbalanced across subjects. Scene‐recognition (Object): Subjects were instructed to focus on the content of the probe image and to indicate whether the image had been shown in the clip. If it had been shown, the subject pressed the “present” button; otherwise, the “absent” button. Again, the left/right mapping of “present/absent” responses was counterbalanced across subjects.

Since only a single image was now presented at retrieval, the Temporal task could not involve any factor of “temporal distance” (cf. Tshort/Tlong in Exp 1). Instead, in Exp 2, we found that the behavioral performance was affected by the “temporal position” of the probe image during encoding (easier, if extracted from the start/end of the clip; harder, if extracted from around the middle). Accordingly, the temporal trials were now classified, and modeled separately in the fMRI analysis, as Teasy and Thard (see also Supporting Information Table 1).

fMRI Data Acquisition and Preprocessing

The functional images were acquired on a Siemens Allegra (Siemens Medical Systems, Erlangen, Germany) 3 Tesla scanner equipped for echo‐planar imaging (EPI). A quadrature volume head coil was used for radio frequency transmission and reception. Head movement was minimized by mild restraint and cushioning. Thirty‐two slices of functional MR images were acquired using blood oxygenation level‐dependent imaging (3 × 3 mm in‐plane, 2.5 mm thick, 50% distance factor, repetition time = 2.08 s, echo time = 30 ms, flip angle = 70°, FOV = 192 mm, acquisition order = continuous, ascending), covering the whole cortex. We acquired 1,648 and 1,488 fMRI volumes for each subject in Exp 1 and 2, respectively. Images were acquired across 4 fMRI runs in both experiments. The total acquisition periods lasted for 55 and 49 min, respectively.

Data preprocessing was performed with SPM8 (http://www.fil.ion.ucl.ac.uk/spm) implemented in MATLAB 7.4 (The MathWorks, Natick, MA). After discarding the first 4 volumes of each run, images were realigned to correct for head movements. Slice‐acquisition delays were corrected using the middle slice as a reference. Images were then normalized to the MNI EPI template, resampled to 3‐mm isotropic voxels, and spatially smoothed with an isotropic Gaussian kernel (FWHM = 8 mm).
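For readers who prefer the Gaussian standard deviation to FWHM, the standard conversion applied to the 8‐mm kernel gives:

```latex
\sigma = \frac{\mathrm{FWHM}}{2\sqrt{2\ln 2}}
       = \frac{8\ \mathrm{mm}}{2.3548}
       \approx 3.40\ \mathrm{mm}
       \approx 1.13\ \text{voxels (at } 3\ \mathrm{mm}\text{)}
```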

fMRI Analyses

Statistical inference was based on a random effects approach. This comprised two steps: first‐level analyses estimating contrasts of interest for each subject followed by second‐level analyses for statistical inference at the group‐level [Penny and Holmes, 2004]. For each of the two experiments, we used several different models depending on the specific questions that we sought to address (see below, and Supporting Information Table 1).

All first‐level, subject‐specific models included one regressor modeling the presentation of the video clips irrespective of task, plus several regressors modeling the retrieval phase according to the different conditions of interest (see leftmost column in Supporting Information Table 1). The regressor modeling the “encoding phase” (Movie regressor) was time‐locked to the onset of each video, with an event duration equal to the duration of that video (i.e., 7.72–11.40 s). All retrieval‐related regressors were time‐locked to the onset of the probe image(s), with a duration equal to the probe presentation at retrieval, that is, 4 s in Exp 1 and 5 s in Exp 2. All regressors were convolved with the SPM8 canonical hemodynamic response function. Time series were high‐pass filtered at 128 s and prewhitened using a first‐order autoregressive model, AR(1). The sections below detail how trials were assigned to the retrieval regressors/conditions in the different analyses.
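The regressor construction described above (event boxcars convolved with a canonical HRF) can be sketched as follows. This is an approximation in Python using SciPy, not SPM8's own code: the double‐gamma parameters (response shape 6, undershoot shape 16, undershoot ratio 1/6, 32‐s kernel) follow SPM's default canonical HRF, while the 0.1‐s time grid and sampling at the start of each scan are simplifying assumptions (SPM uses a microtime grid and a reference slice). High‐pass filtering and AR(1) prewhitening are omitted.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(dt: float, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF with SPM-style default parameters, sampled every dt seconds."""
    t = np.arange(0.0, length_s + dt, dt)
    response = gamma.pdf(t, a=6.0)     # main response (gamma mode at 5 s)
    undershoot = gamma.pdf(t, a=16.0)  # late undershoot (gamma mode at 15 s)
    hrf = response - undershoot / 6.0  # undershoot scaled by 1/6
    return hrf / hrf.sum()             # normalize to unit sum


def make_regressor(onsets_s, durations_s, n_scans: int, tr: float, dt: float = 0.1) -> np.ndarray:
    """Boxcar of events (onset, duration in s) convolved with the HRF, sampled once per scan."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    for onset, duration in zip(onsets_s, durations_s):
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        boxcar[start:stop] = 1.0
    convolved = np.convolve(boxcar, canonical_hrf(dt))[:n_fine]
    # ASSUMPTION: sample at the start of each scan (SPM uses a reference-slice microtime bin).
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return convolved[scan_idx]


# Example: one Exp 1 retrieval event (4-s probe at t = 10 s) with TR = 2.08 s.
reg = make_regressor([10.0], [4.0], n_scans=100, tr=2.08)
```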

Experiment 1

For Exp 1, we used two different sets of first‐level models (see Supporting Information Table 1, leftmost column). The first set of models included the encoding regressor (Movie) plus five retrieval‐related conditions: temporal‐short (Tshort), temporal‐long (Tlong), spatial (S), and object (O) trials, and a condition modeling all error trials irrespective of task (Error). The trials of the Temporal task were modeled with two separate regressors accounting for the factor of temporal distance [Tshort vs. Tlong; cf. Kwok et al., 2012]. All Temporal trials with probes separated by less than 1.9 s were modeled as Tshort, and those separated by more than 1.9 s as Tlong.
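The Tshort/Tlong assignment reduces to thresholding the interval between the two probe frames. A sketch with a hypothetical helper; the text does not say how a distance of exactly 1.9 s would be classified, so the function raises an error in that case rather than guessing:

```python
THRESHOLD_S = 1.9  # Tshort/Tlong cut-off on probe temporal distance (Exp 1)


def classify_temporal_trial(time_a_s: float, time_b_s: float) -> tuple[str, str]:
    """Return (condition, target) for a Temporal trial with probes at time_a_s and time_b_s.

    The target is the probe that occurred earlier in the clip ("a" or "b").
    """
    if time_a_s == time_b_s:
        raise ValueError("probes must come from different time points")
    distance = abs(time_b_s - time_a_s)
    target = "a" if time_a_s < time_b_s else "b"
    if distance < THRESHOLD_S:
        return "Tshort", target
    if distance > THRESHOLD_S:
        return "Tlong", target
    # Exactly 1.9 s: the assignment is not specified in the text, so refuse to guess.
    raise ValueError("temporal distance equals the threshold")
```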

The main second‐level analysis consisted of a within‐subjects analysis of variance (ANOVA) modeling the four effects of interest: Tshort, Tlong, S, and O. For each subject and each of the four conditions, a contrast image was computed in the first‐level model by averaging the corresponding parameter estimates across the 4 fMRI runs. These subject‐specific contrast images were entered into the second‐level ANOVA. To identify task‐specific retrieval effects, we compared each task with the mean of the other two tasks, averaging the two Temporal conditions: “T(short+long)/2 > (S + O)/2”; “S > (T(short+long)/2 + O)/2”; and “O > (T(short+long)/2 + S)/2.” Sphericity correction was applied to all group‐level ANOVAs to account for any nonindependence of the error terms across repeated measures and any difference in error variance across conditions [Friston et al., 2002]. P‐values were corrected for multiple comparisons using a cluster‐level threshold of P‐FWE‐corr. = 0.05, considering the whole brain as the volume of interest. The cluster size was estimated using an initial voxel‐level threshold of P‐unc. = 0.001. To ensure the specificity of any task effect, the main differential contrast was inclusively masked with two additional effects, corresponding to the activation for the critical task vs. each of the two other tasks: for example, the Temporal contrast “T(short+long)/2 > (S + O)/2” was inclusively masked with “T(short+long)/2 > S” and “T(short+long)/2 > O.” For these additional, nonindependent masking contrasts, the threshold was set to P‐unc. = 0.05. Alongside the main contrasts comparing the three retrieval tasks, within this ANOVA we also tested for the effect of temporal distance (Tshort > Tlong).
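The task‐specific contrasts and the inclusive masking step can be written as weight vectors over the four ANOVA conditions. The condition order and helper names below are illustrative assumptions; `inclusive_mask` shows only the voxel‐level logic (main contrast at P‐unc. < 0.001, both masks at P‐unc. < 0.05) and does not implement the subsequent cluster‐level FWE correction.

```python
import numpy as np

CONDITIONS = ("Tshort", "Tlong", "S", "O")  # order of the four effects of interest


def weights(**w: float) -> np.ndarray:
    """Contrast vector over CONDITIONS; conditions not named get weight 0."""
    unknown = set(w) - set(CONDITIONS)
    if unknown:
        raise KeyError(f"unknown conditions: {sorted(unknown)}")
    return np.array([float(w.get(c, 0.0)) for c in CONDITIONS])


MAIN = {
    "Temporal": weights(Tshort=0.5, Tlong=0.5, S=-0.5, O=-0.5),    # T(short+long)/2 > (S + O)/2
    "Spatial": weights(Tshort=-0.25, Tlong=-0.25, S=1.0, O=-0.5),  # S > (T(short+long)/2 + O)/2
    "Object": weights(Tshort=-0.25, Tlong=-0.25, S=-0.5, O=1.0),   # O > (T(short+long)/2 + S)/2
}

# Inclusive masks: the critical task vs. each of the other two tasks separately.
MASKS = {
    "Temporal": [weights(Tshort=0.5, Tlong=0.5, S=-1.0), weights(Tshort=0.5, Tlong=0.5, O=-1.0)],
    "Spatial": [weights(Tshort=-0.5, Tlong=-0.5, S=1.0), weights(S=1.0, O=-1.0)],
    "Object": [weights(Tshort=-0.5, Tlong=-0.5, O=1.0), weights(S=-1.0, O=1.0)],
}

DISTANCE = weights(Tshort=1.0, Tlong=-1.0)  # Tshort > Tlong


def inclusive_mask(p_main, p_masks, voxel_p: float = 0.001, mask_p: float = 0.05) -> np.ndarray:
    """Voxels passing the main contrast AND every masking contrast (voxel level only)."""
    keep = np.asarray(p_main) < voxel_p
    for p in p_masks:
        keep = keep & (np.asarray(p) < mask_p)
    return keep
```

Each main contrast sums to zero, so it compares conditions without testing the overall mean; the masks require the critical task to exceed each of the other tasks individually, not just their average.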

Using the same first‐level models, we ran a second within‐subject ANOVA that now also included the average response times (RT) for each condition per subject as a covariate of no interest (Supporting Information Table 1; “Control for task difficulty”). The aim of this was to reassess the pattern of task‐specific dissociation after having accounted for any differences in task difficulty (Fig. 1B). This RT‐controlled analysis was not independent of the main analysis and the results are reported in the Supporting Information.

Experiment 2

The aim of Exp 2 was to account for the possible influence of the “unseen/new” images presented as foils during the retrieval phase of the Spatial and Object tasks in Exp 1 (e.g., incidental encoding during retrieval). In Exp 2, a single probe image was presented during the retrieval phase of each trial (Fig. 1A, Experiment 2). This procedure imposed a new constraint on the Temporal task, which could no longer include the factor of “temporal distance.” Instead, we taxed temporal retrieval by asking participants to judge whether the single probe image had been extracted from the first or second half of the clip. As expected, participants were faster and more accurate for probes extracted near the beginning or the end of the clip than for probes extracted from around the middle of the clip. We therefore divided the Temporal trials into Teasy and Thard to account for this behavioral effect.

For Exp 2, we performed one single set of first‐level models and a single second‐level group‐analysis. The first‐level models included the encoding‐regressor (Movie) and 6 retrieval‐related regressors: Teasy (probe extracted from the 1st or 4th quartile), Thard (probe extracted from the 2nd or 3rd quartile), Ssame, Sflipped, Oold, and Onew, plus one regressor modeling all the error trials (see Supporting Information Table 1).
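The quartile of the probe determines both the Temporal condition label and the correct response in Exp 2. A minimal sketch with hypothetical helper names, using the left = first half / right = second half button mapping described above:

```python
VALID_QUARTILES = (1, 2, 3, 4)


def temporal_condition(quartile: int) -> str:
    """Exp 2 Temporal trials: 1st/4th-quartile probes are 'Teasy', 2nd/3rd are 'Thard'."""
    if quartile in (1, 4):
        return "Teasy"
    if quartile in (2, 3):
        return "Thard"
    raise ValueError(f"quartile must be 1-4, got {quartile}")


def correct_button(quartile: int) -> str:
    """Correct response: left for a first-half probe, right for a second-half probe."""
    if quartile not in VALID_QUARTILES:
        raise ValueError(f"quartile must be 1-4, got {quartile}")
    return "left" if quartile <= 2 else "right"
```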

The group‐level analysis consisted of a within‐subjects ANOVA that included the 6 conditions of interest: Teasy, Thard, Ssame, Sflipped, Oold, and Onew. Within this ANOVA, we first tested for task‐specific effects by comparing each task with the mean of the other two tasks. In this initial analysis, we averaged the Teasy and Thard conditions of the Temporal task, as well as the seen and unseen images of the Spatial and Object tasks, for example, [T(easy+hard) > (S(same+flipped) + O(old+new))/2]. The statistical thresholds were the same as in Exp 1: statistical maps were corrected for multiple comparisons using a cluster‐level threshold of P‐FWE‐corr. = 0.05 (whole brain), with the cluster size estimated using an initial voxel‐level threshold of P‐unc. = 0.001. Again, all comparisons were inclusively masked with the effect of the main task vs. each of the two other tasks (see Exp 1 above for details).

Within the same ANOVA, a second set of contrasts sought to replicate the task‐specific effects using only seen/old images (Supporting Information Table 1, “Seen‐only images”), thereby excluding any possible confound associated with the presentation of unseen images during retrieval. We used the following contrasts: [T(easy+hard) > (Ssame + Oold)]; [Ssame > (T(easy+hard)/2 + Oold)/2]; and [Oold > (T(easy+hard)/2 + Ssame)/2]. The statistical thresholds and masking procedure were identical to those of Exp 1 and of the initial Exp 2 analysis.
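As for Exp 1, the Exp 2 contrasts can be written as weight vectors, here over six conditions. The sketch (condition order and names are illustrative) encodes the all‐images Temporal example and the three seen‐only contrasts exactly as written in the text; in the seen‐only set, Sflipped and Onew receive zero weight, which is what removes the unseen probes from the comparison.

```python
import numpy as np

CONDITIONS = ("Teasy", "Thard", "Ssame", "Sflipped", "Oold", "Onew")


def weights(**w: float) -> np.ndarray:
    """Contrast vector over CONDITIONS; conditions not named get weight 0."""
    unknown = set(w) - set(CONDITIONS)
    if unknown:
        raise KeyError(f"unknown conditions: {sorted(unknown)}")
    return np.array([float(w.get(c, 0.0)) for c in CONDITIONS])


# Initial analysis, all images: T(easy+hard) > (S(same+flipped) + O(old+new))/2
ALL_IMAGES_TEMPORAL = weights(Teasy=1, Thard=1, Ssame=-0.5, Sflipped=-0.5, Oold=-0.5, Onew=-0.5)

# Seen-only analysis: unseen probes (Sflipped, Onew) receive zero weight.
SEEN_ONLY = {
    "Temporal": weights(Teasy=1, Thard=1, Ssame=-1, Oold=-1),          # T(easy+hard) > (Ssame + Oold)
    "Spatial": weights(Teasy=-0.25, Thard=-0.25, Ssame=1, Oold=-0.5),  # Ssame > (T(easy+hard)/2 + Oold)/2
    "Object": weights(Teasy=-0.25, Thard=-0.25, Ssame=-0.5, Oold=1),   # Oold > (T(easy+hard)/2 + Ssame)/2
}
```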

In Exp 2, we also performed control tests to rule out the possibility that our main findings of task‐specific dissociations merely reflected differences in task difficulty (Supporting Information Table 1, “Task difficulty”). This was addressed by comparing conditions that behaviorally resulted in difficult vs. easy retrieval: the “hard” versus “easy” trials of the Temporal task, and the “flipped” versus “same” trials of the Spatial task (Fig. 1B). The results of these additional analyses are reported in the Supporting Information.

Conjunction analyses: Between immediate memory and LTM

To compare the current findings on task‐specific retrieval after short delays with previous data using analogous retrieval tasks but a much longer retention delay [∼24 h; Kwok et al., 2012], we performed “between‐studies” analyses. Note that the two paradigms differed not only in retention delay (by a factor of about 43,200, i.e., 24 h vs. a mean delay of 2 s), but also in the presence and amount of interleaving events between encoding and retrieval. In the LTM version, participants were exposed to 24 h of new episodic experiences following encoding, which might cause retroactive interference with the already acquired information [Wohldmann et al., 2008], whereas such retroactive interference was absent in the current study of immediate retrieval. The video clips were also shorter, by a factor of about 260 (mean clip length 9.59 s vs. the 42‐min episode used in the LTM paradigm). Because of these differences, our analyses focused on commonalities rather than differences between LTM and immediate retrieval. These analyses considered the current Exp 1, which included the same retrieval tasks as the LTM experiment. Separately for each of the three tasks, we computed the relevant contrasts (e.g., T > (S+O)/2) in the two studies and entered them into three separate two‐sample t‐tests. To identify common activation across studies, we performed null‐conjunction analyses (e.g., [T > (S+O)/2]LTM ∩ [T > (S+O)/2]ImMem). Statistical thresholds were set at P‐FWE‐corr. = 0.05, cluster‐level corrected at the whole‐brain level (cluster size estimated and displayed at P‐unc. = 0.001), consistent with all the analyses above. For completeness, the contrasts testing for any differences between studies are reported in Supporting Information Table 2.
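The null‐conjunction test amounts to thresholding the voxel‐wise minimum of the statistics from the two studies (the minimum‐statistic approach): a voxel counts as common only if it is significant in both. A sketch in Python; the degrees of freedom passed to `voxel_threshold` are a hypothetical input, since the LTM sample size is not given in this section, and cluster‐level FWE correction is not shown.

```python
import numpy as np
from scipy import stats


def voxel_threshold(p_unc: float, df: int) -> float:
    """One-sided t threshold corresponding to an uncorrected voxel-level p-value."""
    return float(stats.t.isf(p_unc, df))


def null_conjunction(t_maps, t_thresh: float):
    """Minimum-statistic conjunction: a voxel survives only if every map exceeds t_thresh."""
    stacked = np.stack([np.asarray(m, dtype=float) for m in t_maps])
    min_t = stacked.min(axis=0)
    return min_t, min_t > t_thresh


# Example with toy 3-voxel t-maps; df = 30 is a hypothetical value.
thr = voxel_threshold(0.001, df=30)
t_ltm = np.array([5.0, 5.0, 1.0])    # e.g., [T > (S+O)/2] in the LTM study
t_imm = np.array([4.0, 1.0, 5.0])    # e.g., [T > (S+O)/2] in Exp 1
min_t, common = null_conjunction([t_ltm, t_imm], thr)
```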

Additional control analyses: Retrieval success

Finally, we asked to what extent any task‐specific effects identified in our main analyses depended on the successful retrieval of stored information in the clips. For this, we carried out additional analyses testing for retrieval performance in Exp 1 (“Task × Accuracy,” in T and S tasks), and for accurate performance on seen/old vs. unseen/new stimuli in Exp 2 (“Task × Seen‐unseen,” in S and O tasks). Please see Supporting Information for details of these analyses and the corresponding results.

RESULTS

Behavioral Results

Experiment 1

Subjects performed significantly above chance in all three tasks (all P < 0.001). Accuracy (percentage correct) and response times (RT) were analyzed using repeated‐measures ANOVAs with one factor of three levels corresponding to the T/S/O tasks. These showed a main effect of task for both accuracy [F(2, 32) = 81.42, P < 0.001] and RT [F(2, 32) = 31.23, P < 0.001]. Post hoc t‐tests revealed that subjects performed significantly better and faster in the Object than in the Spatial task [Accuracy: t(16) = 13.11, P < 0.001; RT: t(16) = 6.52, P < 0.001] and in the Object than in the Temporal task [Accuracy: t(16) = 9.79, P < 0.001; RT: t(16) = 6.71, P < 0.001]. Subjects were also more accurate on Temporal than on Spatial trials [Accuracy: t(16) = 3.48, P < 0.005; no difference in RT: P > 0.1]. For the Temporal task, we also examined the effect of temporal distance, expecting retrieval to be more difficult when the two probes were temporally close (Tshort, < 1.9 s) than when they were temporally far apart (Tlong, > 1.9 s) during encoding. Indeed, t‐tests showed that subjects responded less accurately and more slowly in short‐ than in long‐distance trials [Accuracy: t(16) = 3.91, P < 0.005; RT: t(16) = 3.15, P < 0.01] (Fig. 1B, plot on the left).
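The structure of these behavioral tests can be sketched with NumPy. The sketch below assumes a subjects × tasks array with n = 17 participants (as implied by the reported t(16)), applies no sphericity correction (none is mentioned in the text), and uses simulated rather than real data; it is an illustration, not the authors' analysis code. With k = 3 tasks it yields the F(2, 32) degrees of freedom reported above, and with k = 2 conditions the repeated‐measures F equals the squared paired t.

```python
import numpy as np


def rm_anova_oneway(data: np.ndarray):
    """One-way repeated-measures ANOVA.

    data: array of shape (n_subjects, k_conditions), e.g. accuracy per task.
    Returns (F, df_effect, df_error).
    """
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * np.sum((data.mean(axis=0) - grand) ** 2)  # between tasks
    ss_subj = k * np.sum((data.mean(axis=1) - grand) ** 2)  # between subjects
    ss_total = np.sum((data - grand) ** 2)
    ss_error = ss_total - ss_cond - ss_subj                 # task x subject residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df_effect) / (ss_error / df_error)
    return F, df_effect, df_error


def paired_t(a: np.ndarray, b: np.ndarray) -> float:
    """Paired t statistic (df = n - 1), as used for post hoc comparisons."""
    d = a - b
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size))


# Simulated accuracy for 17 subjects in the T, S and O tasks (hypothetical values).
rng = np.random.default_rng(seed=1)
acc = rng.normal(loc=[0.75, 0.70, 0.90], scale=0.05, size=(17, 3))

F, df1, df2 = rm_anova_oneway(acc)
print(f"F({df1}, {df2}) = {F:.2f}")
t_os = paired_t(acc[:, 2], acc[:, 1])  # Object vs. Spatial post hoc test
```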

Experiment 2

In Exp 2, subjects also performed better than chance in all three tasks (all P < 0.001). Repeated‐measures ANOVAs showed main effects of task for both accuracy [F(2, 32) = 9.19, P < 0.005] and RT [F(2, 32) = 17.66, P < 0.001]. Post hoc t‐tests revealed that subjects performed with higher accuracy and shorter RT in the Object than in the Spatial task [Accuracy: t(16) = 4.06, P < 0.001; RT: t(16) = 5.32, P < 0.001] and in the Object than in the Temporal task [Accuracy: t(16) = 2.77, P < 0.05; RT: t(16) = 3.83, P < 0.005]. The difference in accuracy between the Temporal and Spatial tasks approached significance (P < 0.09), while there was no RT difference between these two tasks (P > 0.1). Additional t‐tests directly compared the trial types within each task. For the Temporal task, subjects were more accurate and faster in the "easy" than in the "hard" trials [Accuracy: t(16) = 6.57, P < 0.001; RT: t(16) = 5.27, P < 0.001]. For the Spatial task, they were more accurate in recognizing "same" images than in correctly rejecting "flipped" images [Accuracy: t(16) = 5.34, P < 0.001; RT: P > 0.1]. For the Object task, subjects responded faster to unseen Onew images than to seen Oold images [RT: t(16) = 2.94, P < 0.01], without any difference in accuracy (P > 0.1) (Fig. 1B, plot on the right).
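Reported P bounds can be sanity‐checked against the t statistics. The sketch below computes an exact two‐tailed P for Student's t with an even number of degrees of freedom, using the classical closed‐form series for that case; it needs only the standard library and, as a simplifying assumption, rejects odd df. The function name is hypothetical. For df = 16 it shows, for example, that t(16) = 4.06 corresponds to P < 0.001, whereas t(16) = 3.48 (the Temporal vs. Spatial accuracy comparison in Exp 1) corresponds to P ≈ 0.003.

```python
import math


def t_two_tailed_p(t: float, df: int) -> float:
    """Exact two-tailed P for Student's t with an even number of df.

    Uses P(|T| < t) = sin(theta) * sum_j c_j * cos(theta)**(2j), with
    theta = atan(|t| / sqrt(df)), c_0 = 1, c_j = c_{j-1} * (2j - 1) / (2j),
    and j = 0 .. df/2 - 1.
    """
    if df < 2 or df % 2:
        raise ValueError("this sketch only handles even df >= 2")
    x = df / (df + t * t)                     # cos(theta) ** 2
    sin_theta = abs(t) / math.sqrt(df + t * t)
    term, total = 1.0, 1.0
    for j in range(1, df // 2):
        term *= (2 * j - 1) / (2 * j) * x
        total += term
    return 1.0 - sin_theta * total


for t_value in (3.48, 4.06):
    print(f"t(16) = {t_value}: P = {t_two_tailed_p(t_value, 16):.4f}")
```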

Functional Neuroimaging: Task‐Specific Retrieval

In both Exp 1 and 2, we assessed brain activity specific to each of the three retrieval tasks (Temporal, Spatial and Object) by comparing each task versus the mean of the other two (“Task specificity” contrasts, see Supporting Information Table 1). In Exp 2, we also carried out additional, nonindependent, control tests using only trials containing seen/old images (“Seen‐only images” contrasts). The latter served to ensure that any task‐specific dissociation did not arise from the presentation of unseen/new images during the retrieval phase of the Spatial and Object tasks (i.e., flipped‐ and new‐image foils).
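The two families of Exp 2 contrasts can be written as weight vectors over the six conditions. The sketch below assumes that each task is represented by the mean of its two trial types, and the condition order and helper names are hypothetical. Exact fractions make it easy to verify that every contrast sums to zero, so that it compares tasks rather than overall activation level.

```python
from fractions import Fraction

# Hypothetical ordering of the six Exp 2 conditions in the design matrix.
CONDITIONS = ("T_easy", "T_hard", "S_same", "S_flipped", "O_old", "O_new")


def average(*conds):
    """Weights for the mean of the named conditions."""
    return {c: Fraction(1, len(conds)) for c in conds}


def task_vs_others(target, *others):
    """Weights for `target` minus the mean of the `others` (all weight dicts)."""
    w = {c: Fraction(0) for c in CONDITIONS}
    for c, v in target.items():
        w[c] += v
    for other in others:
        for c, v in other.items():
            w[c] -= v / len(others)
    return [w[c] for c in CONDITIONS]


T = average("T_easy", "T_hard")
S = average("S_same", "S_flipped")
O = average("O_old", "O_new")

# "Task specificity": S(same+flipped) > [T(easy+hard) + O(old+new)]/2
spatial_all = task_vs_others(S, T, O)
# "Seen-only images": S_same > [T(easy+hard)/2 + O_old]/2
spatial_seen = task_vs_others(average("S_same"), T, average("O_old"))

for name, w in (("all", spatial_all), ("seen-only", spatial_seen)):
    print(name, [str(x) for x in w], "sum =", sum(w))
```

In the seen‐only version, the Sflipped and Onew conditions receive zero weight, which is what removes the unseen/new foils from the comparison.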

Scene‐chronology judgment (Temporal task)

Exp 1

The comparison of the Temporal task with the other two retrieval conditions revealed significant activation in the right precuneus, bilateral caudate and bilateral cuneus (see Table 1, and Fig. 2A, clusters in blue/magenta). The signal plots in Figure 2 (panels on the left) show that the precuneus and the right angular gyrus were activated more by the Temporal task (average of Tshort and Tlong) than by the Spatial and Object tasks. Note that the effect in the right angular gyrus was detected only at an uncorrected threshold (P‐unc. < 0.001); it is reported here because it was fully significant in Exp 2 (see below) as well as in the LTM dataset [Kwok et al., 2012].

Table 1.

Task‐specific retrieval activation

| Region | Exp. 1 k | Exp. 1 P‐corr. | Exp. 1 Z | Exp. 1 x y z | Exp. 2 k | Exp. 2 P‐corr. | Exp. 2 Z | Exp. 2 x y z | Exp. 2 Seen‐only k | Exp. 2 Seen‐only P‐corr. | Exp. 2 Seen‐only Z | Exp. 2 Seen‐only x y z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Scene‐chronology (Temporal) | | | | | | | | | | | | |
| Precuneus B | 258 | < 0.001 | 7.10 | 6 −54 47 | 2666 | < 0.001 | 5.17 | 4 −72 43 | 1835 | < 0.001 | 4.00 | 6 −70 41 |
| Post. cingulate cortex B | — | — | — | — | | | 5.64 | 0 −30 27 | | | 5.35 | 6 −36 31 |
| Caudate B | 148 | 0.005 | 4.30 | −12 −15 14 | — | — | — | — | — | — | — | — |
| Cuneus L | 82 | 0.052 | 5.22 | −15 −60 17 | — | — | — | — | — | — | — | — |
| Cuneus R | 88 | 0.042 | 5.21 | 18 −57 20 | — | — | — | — | — | — | — | — |
| Inferior frontopolar cortex R | — | — | — | — | 874 | < 0.001 | 5.47 | 28 60 9 | 706 | 0.001 | 5.75 | 28 60 7 |
| Superior frontal cortex R | — | — | — | — | 685 | 0.001 | 5.35 | 26 24 55 | 889 | 0.001 | 5.60 | 26 28 53 |
| Angular gyrus R | 43 | *< 0.001* | 4.63 | 45 −69 29 | 457 | 0.007 | 4.84 | 44 −66 45 | 509 | 0.004 | 4.58 | 44 −68 43 |
| Scene‐layout (Spatial) | | | | | | | | | | | | |
| Superior parietal gyrus L | 1142 | < 0.001 | 6.90 | −21 −66 59 | 2530 | < 0.001 | 6.67 | −18 −68 59 | 377 | 0.016 | 4.99 | −18 −66 59 |
| Dorsal occipital cortex L | | | 5.11 | −27 −75 9 | | | 5.21 | −28 −80 9 | — | — | — | — |
| Supramarginal gyrus L | | | 6.21 | −60 −33 38 | — | — | — | — | — | — | — | — |
| Intraparietal sulcus L | | | 5.77 | −42 −39 41 | | | 5.56 | −36 −44 45 | — | — | — | — |
| Superior parietal gyrus R | 1314 | < 0.001 | 6.72 | 21 −63 59 | 3026 | < 0.001 | 7.55 | 16 −66 59 | 1262 | < 0.001 | 4.74 | 26 −68 47 |
| Dorsal occipital cortex R | | | 4.12 | 30 −93 23 | | | 7.40 | 34 −78 29 | | | 4.10 | 30 −74 39 |
| Intraparietal sulcus R | | | 6.25 | 36 −39 44 | 334 | 0.026 | 5.48 | 40 −40 45 | — | — | — | — |
| Supramarginal gyrus R | | | 5.97 | 60 −24 35 | — | — | — | — | — | — | — | — |
| Superior frontal sulcus L | 213 | 0.001 | 6.45 | −24 3 62 | 238 | 0.078 | 5.43 | −26 −2 51 | — | — | — | — |
| Superior frontal sulcus R | 182 | 0.002 | 5.92 | 30 −3 56 | 220 | 0.097 | 4.70 | 28 0 53 | — | — | — | — |
| Inferior frontal gyrus L | 92 | 0.036 | 5.00 | −51 6 26 | — | — | — | — | — | — | — | — |
| Inferior frontal gyrus R | 304 | < 0.001 | 6.40 | 54 12 11 | 536 | 0.003 | 6.07 | 52 12 25 | 238 | 0.078 | 4.80 | 50 12 25 |
| Inferior occipital gyrus L | 152 | 0.005 | 4.81 | −57 −60 −10 | 894 | < 0.001 | 6.03 | −52 −64 −11 | 591 | 0.002 | 5.03 | −50 −66 −11 |
| Inferior occipital gyrus R | 186 | 0.002 | 6.77 | 54 −60 −13 | 2277 | < 0.001 | 6.82 | 56 −58 −11 | 1161 | < 0.001 | 4.87 | 50 −58 −17 |
| Occipital pole B | 218 | 0.001 | 4.84 | −9 −93 2 | — | — | — | — | — | — | — | — |
| Scene‐recognition (Object) | | | | | | | | | | | | |
| Medial frontal cortex B | 478 | < 0.001 | 5.19 | −3 51 47 | 2215 | < 0.001 | 5.96 | −8 56 39 | 314 | 0.032 | 4.64 | −10 56 39 |
| Middle temporal gyrus L | 490 | < 0.001 | 5.94 | −60 −12 −25 | 1661 | < 0.001 | 6.77 | −62 −14 −19 | 162 | *< 0.001* | 4.29 | −60 −4 −25 |
| Middle temporal gyrus R | 304 | < 0.001 | 6.15 | 60 −12 −16 | 1017 | < 0.001 | 5.69 | 60 −6 −23 | 15 | *< 0.002* | 2.96 | 62 −4 −23 |
| Orbito‐frontal cortex B | 95 | 0.014 | 5.04 | 0 51 −22 | 227 | 0.049 | 5.06 | 0 44 −19 | — | — | — | — |
| Angular gyrus L | 227 | 0.001 | 5.30 | −51 −54 29 | — | — | — | — | — | — | — | — |
| Angular gyrus R | 142 | 0.006 | 4.98 | 57 −60 38 | — | — | — | — | — | — | — | — |
| RSC/post. cingulate cortex B | 474 | < 0.001 | 4.90 | 3 −42 35 | — | — | — | — | — | — | — | — |

In Exp 1, t‐contrasts compared each condition versus the mean of the other two conditions (e.g., T(short+long)/2 > (S + O)/2) and were inclusively masked with the activation for the critical condition versus each of the two other conditions (e.g., T(short+long)/2 > S/2 and T(short+long)/2 > O/2). In Exp 2, we conducted two separate sets of group‐level contrasts. First, t‐contrasts using all six conditions compared each task versus the mean of the other two tasks (e.g., S(same+flipped) > [T(easy+hard) + O(old+new)]/2); see the middle columns. Second, for the Spatial and Object conditions, we were interested in activity elicited by the responses associated with "seen" trials only (e.g., Ssame > [T(easy+hard)/2 + Oold]/2); see the rightmost columns and also Supporting Information Table 1. Brain regions highlighted in bold correspond to the task‐specific effects consistently observed across all experiments/analyses; these regions are also displayed in Figures 2, 3, 4. P‐values are corrected for multiple comparisons, except for the right angular gyrus in Exp 1 and the middle temporal gyri in Exp 2 Seen‐only, which are reported at a lower, uncorrected threshold (in italics). Post, posterior; x y z, coordinates in the standard MNI space of the activation peaks in the clusters; k, number of voxels in each cluster; RSC, retrosplenial cortex; L/R/B, left/right hemisphere/bilateral.
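The inclusive‐masking logic described in this note can be sketched for Exp 1 as follows. This is a simplified illustration: it assumes per‐subject condition estimates (betas) are available as an array, runs a plain one‐sample t‐test per voxel with an illustrative threshold, and does not reproduce the cluster‐level FWE correction used in the study. The condition order, weight names and thresholds are hypothetical. A voxel is kept only if the main contrast T > (S + O)/2 is significant and the Temporal task also exceeds each of the other two tasks on its own.

```python
import numpy as np

# Hypothetical condition order for Exp 1: T_short, T_long, S, O.
MAIN_T = np.array([0.5, 0.5, -0.5, -0.5])      # mean(T) > (S + O)/2
MASK_T_VS_S = np.array([0.5, 0.5, -1.0, 0.0])  # mean(T) > S
MASK_T_VS_O = np.array([0.5, 0.5, 0.0, -1.0])  # mean(T) > O


def one_sample_t(values: np.ndarray) -> np.ndarray:
    """Group-level t per voxel; values has shape (n_subjects, n_voxels)."""
    n = values.shape[0]
    return values.mean(axis=0) / (values.std(axis=0, ddof=1) / np.sqrt(n))


def contrast_t(betas: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Apply condition weights to betas of shape (subjects, conditions, voxels)."""
    return one_sample_t(np.einsum("c,scv->sv", weights, betas))


def task_specific(betas, main, masks, t_main=3.69, t_mask=3.69):
    """Keep voxels where the main contrast AND every mask exceed threshold.

    3.69 is roughly the one-tailed P = 0.001 cutoff for df = 16 (illustrative).
    """
    keep = contrast_t(betas, main) > t_main
    for weights in masks:
        keep &= contrast_t(betas, weights) > t_mask
    return keep


# Three toy voxels; rows are conditions (T_short, T_long, S, O), columns voxels.
means = np.array([[2.0, 2.0, 0.0],
                  [2.0, 2.0, 0.0],
                  [0.0, 3.0, 1.0],
                  [0.0, -3.0, 1.0]])
rng = np.random.default_rng(0)
betas = means[None, :, :] + 0.1 * rng.standard_normal((17, 4, 3))
print(task_specific(betas, MAIN_T, [MASK_T_VS_S, MASK_T_VS_O]))
```

Voxel 1 shows why the masks matter: its mean T exceeds the average of S and O only because O is strongly negative, while S is actually higher than T, so the mean(T) > S mask excludes it.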

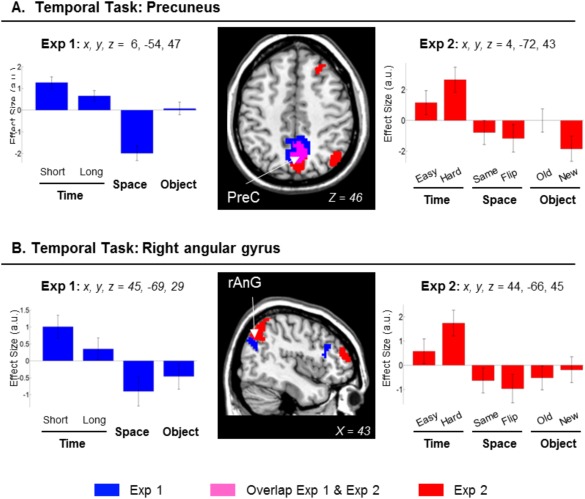

Figure 2.

Clusters of brain activation and signal plots for the scene‐chronology judgment (Temporal task). Clusters of activation and signal plots for (A) the precuneus, and (B) the right angular gyrus. These regions were selectively activated during the Temporal task in Exp 1 (in blue) and Exp 2 (in red; overlap between Exp 1 and 2 shown in magenta). The activation selective for the Temporal task was computed with the contrast: T(short+long)/2 > (S + O)/2, inclusively masked with T(short+long)/2 > S/2 and with T(short+long)/2 > O/2. The involvement of the precuneus and the right angular gyrus was further confirmed using only "seen/old" images (Table 1, rightmost columns). Activation clusters were estimated and displayed at P‐unc. = 0.001, thresholded at a minimum of 40 voxels. Effect sizes are mean adjusted (sum to zero) and expressed in arbitrary units (a.u. ± 90% CI). L/R, left/right; PreC, precuneus; AnG, angular gyrus.

In addition to the main comparison for the Temporal task in Exp 1, we assessed the effect of temporal distance by comparing Tshort vs. Tlong trials. In previous studies using LTM protocols, we found that temporal distance further modulated activity in the precuneus [Kwok et al., 2012, 2014; see also St. Jacques et al., 2008]. Here, this contrast did not reveal any significant effect. This contrasts with the significant behavioral cost of retrieving Tshort vs. Tlong temporal distances (see behavioral results, and Fig. 1B, plot on the left), which was analogous to that in our previous LTM protocol. Similar behavioral patterns have been observed in many other short‐term studies [Hacker, 1980; Konishi et al., 2002; Milner et al., 1991; Muter, 1979], yet, to our knowledge, there is no clear consensus on the neural correlates of the temporal distance effect that separates two temporally very close events/items.

Exp 2

In Exp 2, we replicated the effect of the Temporal task in the precuneus and found a fully significant effect in the right angular gyrus (see Fig. 2, clusters in red/magenta). In addition, Exp 2 showed significant activation of the right superior frontal cortex and the right inferior frontopolar cortex, which was not found in Exp 1 (Table 1). The involvement of the precuneus and the right angular gyrus was further confirmed using only trials including seen images (cf. "Exp 2 Seen‐only," Table 1). The signal plots in Figure 2 (panels on the right) show the activity of the precuneus and the right angular gyrus for all the conditions of Exp 2. In both regions, the average activity in the Temporal task [T(easy+hard)/2] was larger than in the Ssame and Oold conditions. Notably, Exp 2 presented a single image and asked participants to judge the absolute temporal position of the probe, rather than the relative temporal order of two probes. Despite this important change in how temporal memory was probed, the analogous patterns of activation across both experiments highlight the link between immediate retrieval of temporal information and activation of the precuneus and angular gyrus.

Scene‐layout judgment (Spatial task)

Exp 1

The contrast concerning the retrieval of the spatial layout revealed activation in the superior parietal cortex bilaterally, with the cluster extending to the intraparietal sulcus and the supramarginal gyrus. This contrast also showed activation of the superior frontal sulci, the inferior frontal gyri and the inferior occipital cortex (see Table 1, and Fig. 3, clusters displayed in blue/magenta, signal plots on the left side).

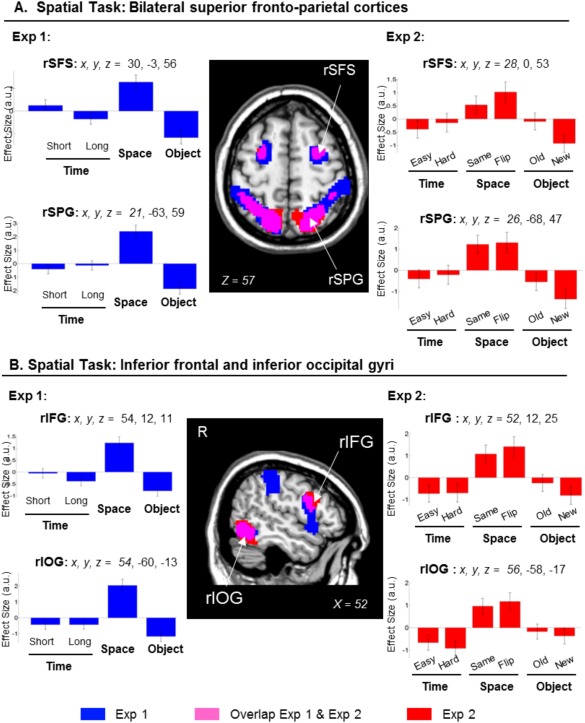

Figure 3.

Clusters of brain activation and signal plots for the Scene‐layout judgment (Spatial task). Clusters of activation and signal plots for (A) the superior frontal cortex and the superior parietal cortex bilaterally and (B) the right inferior occipital cortex and the right inferior frontal cortex. These regions activated for the Spatial task in Exp 1 (blue) and in Exp 2 (red; overlap between Exp 1 and Exp 2 in magenta). The activation selective for the Spatial task was computed with the contrast: S > [T(short+long)/2 + O]/2, inclusively masked with S > T(short+long)/2 and with S > O. The effects in the superior parietal gyri, the inferior occipital gyri and the right inferior frontal gyrus were replicated when considering only same/old images in Exp 2 (Table 1, rightmost columns). Activation clusters were estimated and displayed at P‐unc. = 0.001, thresholded at a minimum of 80 voxels. Effect sizes are mean adjusted (sum to zero) and are expressed in arbitrary units (a.u. ± 90% CI). L/R, left/right; SFS, superior frontal sulcus; SPG, superior parietal gyrus; IFG, inferior frontal gyrus; IOG, inferior occipital gyrus.

Exp 2

The same comparison in Exp 2 replicated the results of Exp 1, showing activation in the superior parietal cortex bilaterally, the superior frontal sulci, the inferior and dorsal occipital cortices and the right inferior frontal gyrus (Fig. 3, clusters in red/magenta). When we considered only the conditions including seen/old images ("Exp 2 Seen‐only"), we replicated the effects in the superior parietal gyri, the inferior occipital gyri and the right inferior frontal gyrus (see Table 1, and Fig. 3, signal plots on the right). The activation in the intraparietal sulci and the superior frontal sulci did not reach statistical significance in this analysis. Inspection of the signal plots indicated that in these areas there was less activation in the Ssame than in the Sflipped trials (cf. bar 3 vs. bar 4, signal plots of the superior frontal sulcus, Fig. 3A, plot on the right). Nonetheless, the activity in the Ssame condition was larger than that in the T and O conditions (cf. bar 3 vs. bars 1–2 and 5–6).

Scene‐recognition judgment (Object task)

Exp 1

The Object task was associated with activation of the medial frontal cortex (MFC) and the orbitofrontal cortex (although the latter did not fully replicate in Exp 2; see Table 1, and Fig. 4, clusters in blue/magenta and signal plots on the left), together with bilateral activation in the middle temporal gyri, the retrosplenial/posterior cingulate gyrus, and a portion of the angular gyrus. The same contrast also revealed a bilateral effect in the hippocampus, which fell just below statistical significance (P‐FWE‐corr. = 0.052; Z = 4.58; at x, y, z = 21 −6 −19; and P‐FWE‐corr. = 0.077; Z = 4.58; at x, y, z = −24 −9 −25; considering the whole brain as the volume of interest). It is reported here because the same effect was previously found in the LTM dataset [Kwok et al., 2012; see also Discussion Section].

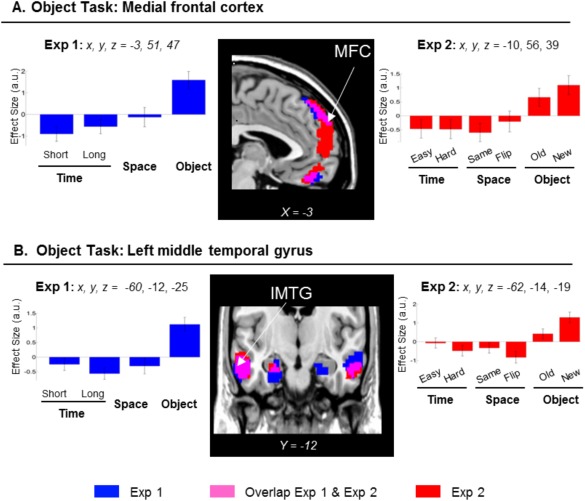

Figure 4.

Clusters of brain activation and signal plots for the Scene‐recognition judgment (Object task). Clusters of activation and signal plots for (A) the MFC, and (B) the MTG. These regions activated for the Object task in Exp 1 (blue) and in Exp 2 (red; overlap in magenta). The activation selective for the Object task was computed with the contrast: O > [T(short+long)/2 + S]/2, inclusively masked with O > T(short+long)/2 and with O > S. The signal plots refer to the left hemisphere, but analogous patterns of activation were found in the right hemisphere (Table 1). In Exp 2, the task‐specific effect of the Object task in the MFC was replicated when considering only the same/old images (Table 1, rightmost columns). Inspection of the signal plot for the left MTG revealed less activity in the Oold than Onew trials (plots on the right, bars 5 and 6), although the Oold trials showed numerically larger parameter estimates than the T and S conditions (see p‐uncorr. reported in italics in Table 1, rightmost columns). Activation clusters were estimated and displayed at P‐unc. = 0.001, thresholded at a minimum of 80 voxels. Effect sizes are mean adjusted (sum to zero) and expressed in arbitrary units (a.u. ± 90% CI). L/R, left/right; MTG, middle temporal gyrus; MFC, medial frontal cortex.

Exp 2

An almost identical pattern of activation was found in Exp 2 when we considered all the conditions: O(old+new) > (T(easy+hard) + S(same+flipped))/2. Significant effects were found in the middle temporal gyri and in a large cluster including the medial frontal gyrus and extending ventrally into the orbitofrontal cortex (Fig. 4, clusters in red/magenta). However, when we considered only the trials including seen/old images [Oold > (T(easy+hard)/2 + Ssame)/2], the Object task significantly activated only the MFC (Table 1, rightmost columns), indicating that the MFC effect was not driven by incidental encoding of the new images.

In summary, the brain activation for each of the three tasks was similar across Exp 1 and Exp 2. The scene‐chronology (Temporal) task activated the precuneus and the right angular gyrus; the scene‐layout (Spatial) task was associated with activation of the posterior/superior parietal cortex and the right inferior frontal gyrus; while the scene‐recognition (Object) task activated the MFC and the middle temporal gyrus (see Table 1, areas highlighted in bold). The control analysis of Exp 2, which considered only trials including seen/old images, confirmed these task‐specific effects, indicating that the pattern of dissociation (in particular for the Spatial and Object tasks) was not merely driven by the unseen/new images presented during the retrieval phase.

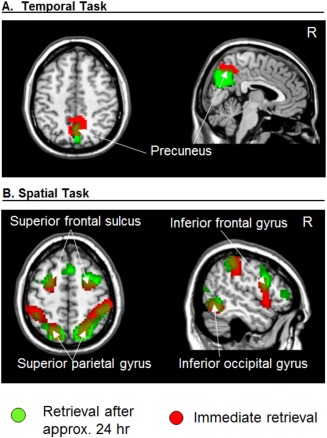

Functional Neuroimaging: Correspondence with An Analogous LTM Dataset

We directly compared the task‐specific dissociation observed in the current dataset (Exp 1) with our previous LTM study, which included the same retrieval tasks but a much longer retention delay [i.e., 24 h between encoding and retrieval; Kwok et al., 2012]. The conjunction analyses revealed activation common to the two datasets in the precuneus for the Temporal task (Fig. 5A and Table 2), and in the dorsal frontoparietal network plus the right inferior frontal gyrus and right occipital cortex for the Spatial task (Fig. 5B and Table 2). These formal tests show that the Temporal and Spatial task‐specific dissociations observed here for immediate retrieval closely matched the patterns observed during retrieval from LTM. By contrast, for the Object task, overlapping effects in the MFC and in the hippocampus were detected only at a more lenient uncorrected threshold of P‐unc. = 0.001. In the hippocampus, the lack of a fully significant "common" effect across datasets arose because, in the current Exp 1, the contrast comparing the Object task versus the two other tasks did not survive correction for multiple comparisons with the whole brain as the volume of interest (P‐corr. = 0.052 and 0.077; cf. above). Indeed, the formal test assessing differences between the two datasets (i.e., "Task × Experiment" interactions; see Supporting Information Table 2) did not reveal any significant difference between LTM and immediate retrieval in the hippocampus, consistent with this region being involved in the scene‐recognition judgment irrespective of the retention delay (see also Discussion Section).

Figure 5.

Task‐specific retrieval activation common in studies after immediate delays and a longer delay. Activation for the LTM study [∼24 h; Kwok et al., 2012] is depicted in green and activation for the current immediate retrieval study (data from current Exp 1) depicted in red. Activation clusters for both studies were estimated and displayed at P‐unc. = 0.001.

Table 2.

Common memory retrieval activation

| ImMem ∩ LTM common effects | Cluster k | Cluster P‐corr. | Voxel Z | Voxel x y z |
|---|---|---|---|---|
| Scene‐chronology (Temporal) | | | | |
| Precuneus B | 112 | 0.011 | 4.72 | 3 −57 47 |
| Angular gyrus R | 98 | *< 0.001* | 4.00 | 54 −54 17 |
| Scene‐layout (Spatial) | | | | |
| Superior parietal gyrus L | 206 | 0.001 | 5.95 | −21 −69 56 |
| Supramarginal gyrus L | 129 | 0.008 | 3.46 | −60 −36 44 |
| Intraparietal sulcus L | | | 4.87 | −36 −45 41 |
| Superior parietal gyrus R | 651 | < 0.001 | 5.20 | 24 −63 59 |
| Dorsal occipital cortex R | | | 3.72 | 30 −75 29 |
| Intraparietal sulcus R | | | 5.20 | 42 −39 47 |
| Supramarginal gyrus R | | | 4.82 | 57 −33 53 |
| Superior frontal sulcus L | 100 | 0.022 | 4.82 | −24 3 56 |
| Superior frontal sulcus R | 145 | 0.004 | 4.81 | 30 0 53 |
| Inferior frontal gyrus R | 95 | 0.027 | 4.75 | 54 9 26 |
| Inferior occipital gyrus R | 98 | 0.024 | 5.15 | 54 −57 −10 |

Task‐specific activation after immediate delays (current Exp 1; ImMem) and a previous study that used a long delay between encoding and retrieval [∼24 h; LTM: Kwok et al., 2012]. Statistical thresholds were set at P‐FWE‐corr. = 0.05 (cluster‐level), considering the whole brain as the volume of interest. Note that the "between‐studies" overlap activation in the right angular gyrus was found at a lower uncorrected threshold (in italics); importantly, within this area no differences or interactions between ImMem and LTM studies were found (see differential effects between the two studies in Supporting Information Table 2). L/R/B, left/right hemisphere/bilateral.

DISCUSSION

We have identified distinct sets of brain areas that showed task‐specific activation during immediate retrieval following the encoding of dynamic cinematic material. The precuneus and the right angular gyrus were activated while retrieving the temporal order of events (scene‐chronology judgment), the superior parietal cortex was recruited during spatial‐related recall (scene‐layout judgment), whereas activity in the MFC and the middle temporal gyrus (MTG) reflected processes associated with scene‐recognition judgments. These task‐specific effects occurred independently of whether subjects were asked to judge seen/old or unseen/new stimuli (cf. "Seen‐only images" contrasts in Exp 2), and independently of retrieval success and general task difficulty (see Supporting Information). With respect to the hypothesized correspondence between immediate retrieval and retrieval from LTM, we found that these task‐specific dissociations are independent of the information's mnemonic history (24 h vs. 2 s retention, i.e., delays differing by a factor of 43,200).
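One way to make the scale‐invariance argument concrete is a ratio rule in the spirit of SIMPLE‐type models [Brown et al., 2007], in which items are located on a logarithmically compressed temporal dimension. In the minimal sketch below, the function name, the distinctiveness parameter c and the toy item ages are hypothetical. Each item's discriminability is the inverse of its summed similarity to all items, with similarity exp(−c·|log t_i − log t_j|). Multiplying every retention interval by the same factor (here 43,200, i.e., 24 h = 86,400 s versus ∼2 s) leaves all log differences, and therefore all discriminabilities, unchanged. This illustrates the concept only; it is not a model fitted to the present data.

```python
import math


def log_ratio_discriminability(ages, c=1.0):
    """Discriminability of each item from its time since encoding.

    Items sit on a log-compressed time axis; similarity between items i and j
    is exp(-c * |log t_i - log t_j|), and discriminability is
    1 / (sum of similarities to all items, including itself).
    """
    logs = [math.log(a) for a in ages]
    return [1.0 / sum(math.exp(-c * abs(li - lj)) for lj in logs) for li in logs]


scale = 24 * 60 * 60 / 2                    # 24 h vs. 2 s retention
short_ages = [2.0, 4.0, 8.0]                 # seconds (toy values)
long_ages = [a * scale for a in short_ages]  # same ratios at a much longer scale

d_short = log_ratio_discriminability(short_ages)
d_long = log_ratio_discriminability(long_ages)
print(scale, [round(x, 4) for x in d_short], [round(x, 4) for x in d_long])
```

Because only ratios of retention intervals enter the computation, any pattern that depends on discriminability is predicted to look the same at both timescales, which is the sense of "scale‐invariance" at stake here.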

One important qualifier for the following discussion is that memory retrieval in all our tasks depended minimally on memory buffers, compared with the simpler items/displays typically used in "standard" short‐term/working memory tasks [Shallice and Cooper, 2011; Shiffrin, 1973]. In each trial, the number of items presented during encoding was clearly well beyond the 4 ± 1 items stipulated by classical STM models [Cowan, 2001]. While memories of some materials (e.g., words, spatial positions, simple visual displays) are supported by limited‐capacity buffers [Baddeley and Hitch, 1974], memory for other categories [e.g., unknown faces; and scenes, see Shiffrin, 1973] taps into LTM when the task requires the encoding of two or more items [Shallice and Cooper, 2011]. For this reason, we restrict our discussion of the tripartite, task‐specific dissociations to highly complex, naturalistic material.

Precuneus and Inferior Parietal Cortex Support Temporal Order Recollection

The scene‐chronology judgment task activated the precuneus in both Exp 1 and 2, despite the considerable changes in the trial structure for probing the retrieval of temporal information (i.e., relative temporal order between two probes in Exp 1 vs. absolute temporal position of a single probe in Exp 2). The chronology‐judgment task required retrieving the event sequences of many complex stimuli (scenes) that entail high‐level conceptual/hierarchical relationships between them [Swallow et al., 2011; Zacks et al., 2001]. The precuneus was previously found to activate in our LTM protocol that used the same temporal order judgment [Kwok et al., 2012; see also the results of the conjunction analyses]. The current finding of precuneal activation using very different (much shorter) delays fits well with the view that these processes are not strictly associated with retrieval after long retention delays, suggesting that this medial parietal region is implicated in temporal order retrieval irrespective of delay (Fig. 5A and Table 2). Specifically, we link the effect of chronological retrieval in the precuneus with processes involving an active reconstruction of past events [see also St. Jacques et al., 2008], and possibly memory‐related imagery [Fletcher et al., 1995; Ishai et al., 2000]. These processes would characterize temporal order judgments using complex naturalistic material, but not order judgments with simpler stimulus material, which previous studies have associated with other brain regions [e.g., middle/prefrontal areas, Suzuki et al., 2002].

Together with the precuneus, temporal‐related judgments also activated the right angular gyrus (AG). One possible interpretation relates the inferior parietal cortex to the engagement of attentional processes [Majerus et al., 2007, 2010]. The scene‐chronology judgment of Exp 2 provides a further qualifier to this kind of temporal‐related attention. Although the Temporal task in Exp 2 did not require any explicit temporal order judgment (only classifying a scene into either the first or second half of the movie), an accurate decision still required evaluating the target scene against many other segments of the movie. This operation is fundamentally different from the evaluation implicated in the scene‐layout judgment, which required comparing a single scene with its corresponding encoded snapshot; the latter involves visuospatial analysis of the configuration within scenes. The AG may subserve different types of attention, one of which is linked with memory search operations [see Majerus et al., 2006, 2010, also showing coactivation of these two regions during order retrieval of simple stimuli after short delays].

Superior Parietal Cortex Evaluates Spatial Details Against Memory Representation

The second pattern consistently found across experiments (Exp 1 and Exp 2, see Fig. 3) and across studies with different retention delays (conjunction between immediate retrieval and LTM studies, see Fig. 5B and Table 2) was the activation of the superior parietal lobule (SPL) during the scene‐layout judgment. Previous studies showed sustained activation in the parietal cortex during working memory delays [Courtney et al., 1998] and that activity there correlates with memory load [Todd and Marois, 2004]. Here, the amount of information contained in the clips was equated across the three tasks, especially in Exp 2, where we counterbalanced the assignment of movies across tasks. The subjects also could not anticipate which specific type of information would be tested at retrieval and needed to keep rehearsing different aspects of the clips. Because of this, we deem it unlikely that the activation of the SPL, which here was specific to the scene‐layout task, reflected general rehearsal processes [Magen et al., 2009] and/or updating operations [Bledowski et al., 2009; Leung et al., 2007].

Instead, we suggest a role for the SPL in mediating an interface between the internal memory representation and external information. It has been proposed that the superior parietal cortex is engaged in allocating top‐down attention to aspects of the available visual input and of information retrieved from memory [Ishai et al., 2002; Lepsien and Nobre, 2007; Summerfield et al., 2006]. In line with this, we found SPL activation when the subjects had to make decisions by comparing their memory of the encoded clips with the scene stimuli presented at retrieval. This procedure might act on some visuospatial storage held by the lateral occipital cortex and mediated by the intraparietal sulcus (Fig. 3B). The immediate memory task here was analogous to the Spatial task of our previous LTM study [Kwok et al., 2012], which also required matching/comparing the available external visual input with internal information stored in memory [see also Nobre et al., 2004]. This interface involves a range of top‐down processes such as monitoring and verification [Cabeza et al., 2008; Ciaramelli et al., 2008], which are independent of the probe image's mnemonic status, as shown by the comparable effect sizes for the "same" and "flipped" image conditions (see signal plots in Fig. 3, and also "Retrieval success" analyses of Exp 2 in Supporting Information).

Recognition Processes in the Medial Frontal Cortex and the Temporal Cortex

The third retrieval task involved scene‐recognition judgments and was associated with the activation of the MFC and the MTG. Moreover, in Exp 1 we found a statistical trend in the hippocampus bilaterally: an area that we previously associated with scene‐recognition judgment from LTM (see next section about “Possible correspondences between retrieval from short‐ and long‐term memory”).

The MFC was engaged in both Exp 1 and 2 but was not activated in the other two tasks; thus, a broad prefrontal cortex/working memory correspondence [Tsuchida and Fellows, 2009] seems insufficient to explain this task‐specific effect. The scene‐recognition task in Exp 2 taxed both the recognition of "old" scenes and the correct rejection of "new" scenes, suggesting that the MFC here did not distinguish between the correct rejection of new information (unseen/new trials) and the recognition of old images. One candidate cognitive process common to both is the feeling‐of‐knowing (FOK). FOK judgments depend on cue specification, content retrieval and appraisal of the retrieved memory fragments, but are independent of the ultimate determination of content appropriateness [Schnyer et al., 2005]. Using imaging data from a sentence‐completion recognition task, Schnyer et al. [2005] modeled a FOK‐related directional path. The path implicated the temporal cortex and the hippocampus and engaged the ventral MFC at the end, which is consistent with our findings for the Object task implicating the MFC, the middle temporal cortex and, at a lower statistical threshold, the hippocampus.

By contrast, the activity in the MTG was larger for unseen/new images than for seen/old memory probes (Fig. 4B). This suggests that new encoding may have contributed to the activation of the MTG during the retrieval phase of the Object task in Exp 1. The scene‐recognition task required lower precision in retrieving details about the encoded material and could be solved by simple recognition [Wixted and Squire, 2010]. Alternatively, therefore, the larger activation for "new vs. old" stimuli may relate to reduced activation associated with familiarity processes on old trials [see Henson et al., 1999, who reported reduced familiarity‐related activation in the middle temporal gyri bilaterally; and Henson and Rugg, 2003, for review]. However, we should point out that in Exp 2 the unseen images were extracted from edited‐out parts of the original clips and therefore contained the same objects/characters depicted in the target images. We therefore deem it more likely that the relational associations between objects, rather than their presence/absence in the scenes, helped subjects to distinguish targets from foils. We thus suggest that the activation of the MTG reflects processes utilizing the relational details among objects embedded in the probe images to reach the recognition decision [Diana et al., 2007; Finke et al., 2008; Hannula et al., 2006; Olson et al., 2006]. Future studies should consider including correlative behavioral measures that index familiarity processes to elucidate this issue further. Moreover, while the Temporal and Spatial tasks required making relative comparisons about aspects of the encoded video clip, the scene‐recognition judgment merely required judging whether the image presented at retrieval was present (or not) within the clip. This may entail different processing dynamics in the Object task compared with the other two tasks (cf. also differences in retrieval times). The latter could be addressed using more subtle experimental manipulations for the scene‐recognition judgment, for example, by using digitally modified foil images in which a single object in the scene has been modified/removed.

Possible Correspondences Between Retrieval from Short‐ and Long‐Term Memory

The overlap between our current study and the previous LTM study using analogous tasks and materials lends weight to the notion of scale‐invariance in memory mechanisms [Brown et al., 2007], as has previously been demonstrated in free recall tasks, where the rate of item recall was unvarying across recall spans (day, week, year) and even extended from the past to the prospective future [Maylor et al., 2001]. These previous behavioral findings imply that scale‐invariance is a form of self‐similarity, in that the holistic pattern can exist at multiple levels of magnification [and even across different memory systems, e.g., Maylor, 2002]. Here, our data affirmed the importance of the posterior parietal cortex in both immediate and long‐term retrieval [Berryhill et al., 2007, 2011] and further characterized its involvement in processing the spatial relations of objects within scenes [Bricolo et al., 2000; Buiatti et al., 2011]. Our data also demonstrated that such a short‐/long‐term correspondence exists in the precuneus/angular gyrus for temporal‐order retrieval (cf. the scene‐chronology judgment task). In contrast, the bilateral hippocampus and MFC activations did not reach full statistical significance in the ImMem ∩ LTM conjunction analysis. At first sight, this may indicate that the scene‐recognition task tapped into distinct recognition‐based mechanisms as a function of retention delay. However, we deem this unlikely because the direct comparison between the two datasets did not show any significant effect in these regions (see Supporting Information Table 2). Indeed, a more targeted test of whether the hippocampal clusters previously found in the LTM study were also activated in the current immediate retrieval experiments revealed significant effects in both experiments (Exp 1: x y z = −24 −9 −25, P‐FWE‐corr. < 0.001, Z = 4.58; x y z = 21 −9 −22, P‐FWE‐corr. = 0.001, Z = 4.51; Exp 2: x y z = −24 −14 −21, P‐FWE‐corr. = 0.004, Z = 3.96; using the LTM clusters for small volume correction). These additional tests point to a more general issue of sensitivity that future studies may address using different methodological approaches, such as multivariate methods, which can be more sensitive than the standard univariate analyses used here. Moreover, multivariate methods would make it possible to capture task‐specific effects that are expressed as patterns of distributed activation, rather than the local changes of activity reported here [e.g., Jimura and Poldrack, 2012].
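The logic of requiring an effect in both datasets can be illustrated with a minimal sketch. The text does not specify the exact conjunction procedure; here we assume a minimum‐statistic test against the conjunction null, in which a voxel counts as jointly active only if its statistic exceeds the threshold in every contrast. The function name, the toy Z values and the illustrative threshold (Z = 3.09, roughly p < 0.001 one‐tailed, uncorrected) are hypothetical and do not reproduce the reported data.

```python
import numpy as np


def min_statistic_conjunction(z_maps, threshold):
    """Boolean mask of voxels whose minimum Z across all maps exceeds threshold.

    Assumption: conjunction-null logic (every contrast must pass on its own);
    this is an illustration, not necessarily the procedure used in the study.
    """
    stacked = np.stack(z_maps, axis=0)  # shape: (n_contrasts, n_voxels)
    min_z = stacked.min(axis=0)         # weakest evidence at each voxel
    return min_z > threshold


# Hypothetical toy Z values for five voxels (not the study's data).
z_immediate = np.array([4.6, 3.9, 1.2, 5.1, 2.8])  # e.g., an immediate-retrieval contrast
z_long_term = np.array([4.1, 2.0, 4.8, 4.9, 3.5])  # e.g., the matching LTM contrast

mask = min_statistic_conjunction([z_immediate, z_long_term], threshold=3.09)
print(mask.tolist())  # [True, False, False, True, False]
```

The minimum statistic is deliberately conservative: the third voxel is strongly active in one dataset only and is therefore excluded, which is one way a region can be significant in each experiment under targeted tests yet fall short in a whole‐brain conjunction.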

Task Difficulty and Retrieval Success

We used several control analyses to show that the task‐specific patterns of activation, and the correspondence between the current data and our previous LTM study, do not merely reflect an effect of general task difficulty (see Supporting Information). First, areas involved in overall task difficulty should show progressively greater activation with progressively more difficult tasks. In Exp 1, this would entail S > T > O. However, the patterns in the precuneus and the angular gyrus (T > O > S, Fig. 2A) and in the MFC (O > S > T, Fig. 4A) did not fit the predictions based on overall task difficulty. We also assessed the effect of difficulty using the Temporal and Spatial tasks in Exp 2. The “hard > easy” contrast for Temporal trials revealed a single activation cluster in the supplementary frontal eye field, which has been associated with difficult decision making [Heekeren et al., 2004]. None of the critical task‐specific regions (Figs 2, 3, 4) was activated by this “hard > easy” comparison. Behaviorally, there were also differences between “flipped” and “same” Spatial trials. The corresponding contrast showed only one activation cluster, in the left inferior frontal gyrus. This region was initially associated with the Spatial task in Exp 1, but no corresponding effect was found in Exp 2 (see Table 1). For Exp 1, we additionally replicated the main findings of task‐specific dissociation after entering RT as a covariate of no interest to account for task difficulty. Taken together, these results indicate that the task‐specific dissociation during immediate retrieval cannot simply be attributed to task difficulty. The same pattern was previously demonstrated with analogous control analyses of the LTM dataset [see p. 2949, Kwok et al., 2012], indicating that differences in overall task difficulty cannot account for the task‐specific correspondence between retrieval after short and long retention delays.
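The difficulty argument can be made concrete by comparing rank orders. A minimal sketch, assuming that a pure difficulty account predicts activation ranked in the same order as task difficulty (S > T > O in Exp 1). The orderings are taken from the text; the Kendall‐style concordance score and the function name are illustrative choices, not an analysis reported in the study.

```python
from itertools import combinations


def rank_concordance(predicted, observed):
    """Kendall-style tau between two strict orderings (listed highest first).

    Returns +1.0 if the orderings agree on every pair of tasks and
    -1.0 if they disagree on every pair.
    """
    pos_pred = {task: i for i, task in enumerate(predicted)}
    pos_obs = {task: i for i, task in enumerate(observed)}
    concordant = discordant = 0
    for a, b in combinations(predicted, 2):
        # A pair is concordant if both orderings rank a and b the same way round.
        if (pos_pred[a] < pos_pred[b]) == (pos_obs[a] < pos_obs[b]):
            concordant += 1
        else:
            discordant += 1
    return (concordant - discordant) / (concordant + discordant)


difficulty_order = ["S", "T", "O"]  # hardest to easiest in Exp 1 (S > T > O)
precuneus_order = ["T", "O", "S"]   # precuneus/angular gyrus: T > O > S
mfc_order = ["O", "S", "T"]         # MFC: O > S > T

for region, order in [("precuneus/angular gyrus", precuneus_order), ("MFC", mfc_order)]:
    tau = rank_concordance(difficulty_order, order)
    print(f"{region}: tau = {tau:+.2f}")
```

A region driven purely by difficulty would yield tau = +1.00; both profiles instead print tau = -0.33, because each disagrees with the difficulty ordering on two of the three task pairs.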

As in the previous LTM study [Kwok et al., 2012], the task‐specific activation patterns here were independent of retrieval success (see Supporting Information). This emphasizes the centrality of “retrieval attempts” [Rugg and Wilding, 2000] both for immediate retrieval and for retrieval after long delays, in line with the proposal that the neural correlates of memory retrieval may be time‐scale invariant. In Exp 1, the task‐specific activation associated with the scene‐chronology (Temporal) and scene‐layout (Spatial) tasks was common to correct and incorrect trials. In Exp 2, the task‐specific effects for the scene‐layout and scene‐recognition (Object) tasks were common to trials with old/seen pictures and trials with unseen/new stimuli. These results are in line with our analogous analyses of the LTM dataset [p. 2950, Kwok et al., 2012] and indicate that the task‐specific dissociation found for both immediate and long‐delay retrieval relates to the “attempt” to retrieve specific types of information, rather than to the successful recovery of stimulus content from memory.

Finally, rather than claiming that retrieval from STM and retrieval from LTM are “the same,” here we sought to characterize the specific demands of each task and map the corresponding neural correlates. A limitation of the current approach using naturalistic material is that the complexity of the movie stimuli may have led participants to use a mixture of different strategies during retrieval. For instance, subjects could attempt to solve the scene‐chronology task by remembering which objects occurred more recently (i.e., a recency judgment), or rely on non‐spatial details within the scenes to identify the target image in the scene‐layout task. Nonetheless, the analogous dissociations found in both Exp 1 and Exp 2 [and in the LTM study, Kwok et al., 2012], which used considerably different tasks to probe memory for the same what/where/when types of information, suggest that these correspondences are largely independent of task characteristics. Instead, each task might flexibly recruit a subset of a host of “atomic” cognitive processes to subserve task‐specific functions [Van Snellenberg and Wager, 2009], meeting retrieval demands across different retention intervals.

CONCLUSION

Cast in a “what‐where‐when” framework, we showed task‐specific functional dissociations during the immediate retrieval of information about complex, naturalistic material. The precuneus and the right angular gyrus were activated during retrieval of the temporal order of events and the superior parietal cortex during spatial‐related judgments, whereas the MFC, together with middle temporal areas, was activated during scene recognition. By comparing the current results, obtained with short retention delays, with those of a previous LTM paradigm with a 24‐h delay between encoding and retrieval, we found a delay‐independent functional correspondence of the judgment‐specific patterns. These findings can be ascribed to a subset of atomic processes, guided by task‐specific (what/where/when) and stimulus‐specific (complex scenes) constraints, that operate in a scale‐free manner across a wide range of retention delays.

Supporting information


ACKNOWLEDGEMENT

The authors thank Prof. Tim Shallice for his substantial contribution to the refinement of many ideas in this article; and Sara Pernigotti for editing the video clips.

Correction added after online publication 12 March 2015. The order of the authors' affiliations has been corrected.

REFERENCES

- Baddeley A, Hitch GJ (1974): Working memory. In: Bower GH, editor. The Psychology of Learning and Motivation: Advances in Research and Theory. New York: Academic Press. pp 47–89.

- Berryhill ME, Phuong L, Picasso L, Cabeza R, Olson IR (2007): Parietal lobe and episodic memory: Bilateral damage causes impaired free recall of autobiographical memory. J Neurosci 27:14415–14423.

- Berryhill ME, Chein J, Olson IR (2011): At the intersection of attention and memory: The mechanistic role of the posterior parietal lobe in working memory. Neuropsychologia 49:1306–1315.

- Bledowski C, Rahm B, Rowe JB (2009): What “works” in working memory? Separate systems for selection and updating of critical information. J Neurosci 29:13735–13741.

- Bricolo E, Shallice T, Priftis K, Meneghello F (2000): Selective space transformation deficit in a patient with spatial agnosia. Neurocase 6:307–319.

- Brown GDA, Neath I, Chater N (2007): A temporal ratio model of memory. Psychol Rev 114:539–576.

- Buiatti T, Mussoni A, Toraldo A, Skrap M, Shallice T (2011): Two qualitatively different impairments in making rotation operations. Cortex 47:166–179.

- Burgess N, Maguire EA, Spiers HJ, O'Keefe J (2001): A temporoparietal and prefrontal network for retrieving the spatial context of lifelike events. NeuroImage 14:439–453.

- Cabeza R, Mangels JA, Nyberg L, Habib R, Houle S, McIntosh AR, Tulving E (1997): Brain regions differentially involved in remembering what and when: A PET study. Neuron 19:863–870.

- Cabeza R, Ciaramelli E, Olson IR, Moscovitch M (2008): The parietal cortex and episodic memory: An attentional account. Nat Rev Neurosci 9:613–625.

- Ciaramelli E, Grady CL, Moscovitch M (2008): Top‐down and bottom‐up attention to memory: A hypothesis (AtoM) on the role of the posterior parietal cortex in memory retrieval. Neuropsychologia 46:1828–1851.

- Courtney SM, Petit L, Maisog JM, Ungerleider LG, Haxby JV (1998): An area specialized for spatial working memory in human frontal cortex. Science 279:1347–1351.

- Cowan N (2001): The magical number 4 in short‐term memory: A reconsideration of mental storage capacity. Behav Brain Sci 24:87–114.

- Diana RA, Yonelinas AP, Ranganath C (2007): Imaging recollection and familiarity in the medial temporal lobe: A three‐component model. Trends Cogn Sci 11:379–386.

- Ekstrom AD, Bookheimer SY (2007): Spatial and temporal episodic memory retrieval recruit dissociable functional networks in the human brain. Learn Mem 14:645–654.

- Ekstrom AD, Copara MS, Isham EA, Wang W‐C, Yonelinas AP (2011): Dissociable networks involved in spatial and temporal order source retrieval. NeuroImage 56:1803–1813.

- Finke C, Braun M, Ostendorf F, Lehmann T‐N, Hoffmann K‐T, Kopp U, Ploner CJ (2008): The human hippocampal formation mediates short‐term memory of colour–location associations. Neuropsychologia 46:614–623.