Abstract

The present fMRI study examined whether upper‐limb action classes differing in their motor goal are encoded by different sectors of posterior parietal cortex (PPC). Action observation was used as a proxy for action execution. Subjects viewed actors performing object‐related (e.g., grasping), skin‐displacing (e.g., rubbing the skin), and interpersonal upper‐limb actions (e.g., pushing someone). Observation of the three action classes activated a three‐level network including occipito‐temporal, parietal, and premotor cortex. The parietal region common to observing all three action classes was located dorsal to the left intraparietal sulcus (DIPSM/DIPSA border). Regions specific for observing an action class were obtained by combining the interaction between observed action class and stimulus type with exclusive masking by the maps of the other classes; for regions considered preferentially active for a class, the interaction was instead exclusively masked with the regions common to all observed actions. The left putative human anterior intraparietal area (phAIP) was specific for observing manipulative actions, and the left parietal operculum, including the putative human SII region, was specific for observing skin‐displacing actions. Control experiments demonstrated that this latter activation depended on seeing the skin being moved and not simply on seeing touch. Psychophysiological interactions showed that the two specific parietal regions had similar connectivity. Finally, observing interpersonal actions preferentially activated a dorsal sector of left DIPSA, possibly the homologue of the ventral intraparietal area (VIP), coding the impingement of the target person's body into the peripersonal space of the actor. These results support segregation according to action class as a principle of posterior parietal cortex organization for action observation and, by implication, for action execution. Hum Brain Mapp 36:3845–3866, 2015. © 2015 The Authors. Human Brain Mapping published by Wiley Periodicals, Inc.

Keywords: vision, skin‐displacing actions, object manipulation, interpersonal actions, functional imaging

INTRODUCTION

Posterior parietal cortex (PPC) is generally viewed as a mosaic of areas primarily involved in sensorimotor transformations for action planning [Andersen and Buneo, 2002; Bremmer et al., 2001; Culham and Valyear, 2006; Graziano and Cooke, 2006; Guipponi et al., 2013; Huang et al., 2012; Jeannerod et al., 1995; Kaas et al., 2011; Pitzalis et al., 2013; Rizzolatti et al., 1998; Stepniewska et al., 2009]. A debated question is whether PPC is organized in terms of single or multiple effectors. Brain imaging studies in which subjects performed actions have shown that some PPC sectors encode specific, single‐effector actions such as grasping, reaching, pointing, and eye movements, while other sectors encode actions involving multiple effectors [Binkofski et al., 1998; Cavina‐Pratesi et al., 2010; Connolly et al., 2003; Culham et al., 2003; Filimon et al., 2009; Filimon, 2010; Frey et al., 2005; Gallivan et al., 2011; Hinkley et al., 2009; Konen et al., 2013; Levy et al., 2007; Nishimura et al., 2007]. This has led some authors [Heed et al., 2011; Leoné et al., 2014] to suggest an organization based on the nature of the actions planned rather than on effectors, at least for body actions, as the eyes may be controlled by separate parietal regions [Beurze et al., 2009; Vesia and Crawford, 2012; Van Der Werf et al., 2010]. To test this idea further, one needs to compare actions of a different nature performed with the same effector, such as manipulating objects, scratching one's own skin, or striking a conspecific, all performed with the upper limb. Such an imaging experiment, however, is impossible to perform, as these movements alter the magnetic field of the scanner, and some targets, such as conspecifics, simply do not fit in such a constrained environment.

Given these technical difficulties, we explored whether action observation can serve as a proxy for action execution, providing an alternative approach for ascertaining the organization of human PPC. This approach is supported by a vast literature showing that observing actions performed by others activates the same parietal regions as those active during actual movements [for review see: Caspers et al., 2010; Grosbras et al., 2012; Molenberghs et al., 2012; Rizzolatti et al., 2014; Rizzolatti and Craighero, 2004]. Such a strategy was successfully used to study climbing and locomotion actions [Abdollahi et al., 2013], which cannot be performed in the scanner. These authors used action observation as a proxy, contrasting object‐grasping actions with climbing, both of which use the hand as the effector. The results revealed distinct localizations for observing the two classes of hand actions: object grasping was localized, as expected, in the putative human anterior intraparietal area (phAIP), and climbing in the dorsal superior parietal lobule (SPL), extending into the precuneus.

The intent of this study is to clarify the PPC organization for action classes, that is, actions differing in their goals and in the way the effectors move to reach those goals, as in Abdollahi et al. [2013], but executed with the same effectors. We addressed this issue by presenting human volunteers, in an fMRI experiment, with three classes of actions, all performed with the upper limb: objects manipulated by an actor (object‐manipulative actions), actions directed toward the actor's own skin (skin‐displacing actions), and actions directed toward another person (interpersonal actions). The results showed that observing these action classes activates specific parietal areas according to their action goal, in addition to common visual and visuo‐motor areas. Based on previous experiments [Abdollahi et al., 2013; Jastorff et al., 2010], we predicted that phAIP would be specifically activated by the class of manipulative actions. phAIP is thought to be connected to the premotor and occipito‐temporal levels of the action observation network (AON), as is its monkey homologue AIP [Nelissen et al., 2011]. Therefore, we complemented the study of the activation pattern with psychophysiological interaction analyses of the specific parietal sites, to compare their connectivity with that of phAIP.

MATERIAL AND METHODS

Participants

Twenty‐eight volunteers participated in the main experiment (14 females; mean age 23 years, range 19–29 years). Two of them also participated in a subsequent control experiment performed with 13 volunteers (6 females; mean age 23.9 years, range 20–30 years). All participants were right‐handed, had normal or corrected‐to‐normal visual acuity, and had no history of mental illness or neurological disease. The study was approved by the Ethical Committee of Parma Province, and all volunteers gave written informed consent in accordance with the Declaration of Helsinki prior to the experiment.

Stimuli and Experimental Conditions

Main experiment

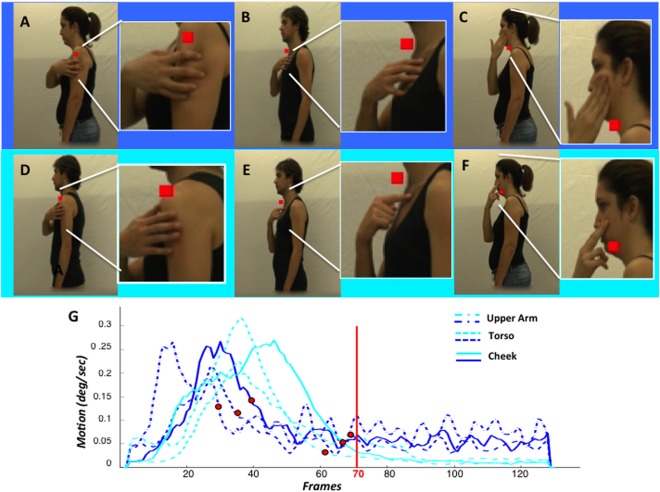

Experimental stimuli of the main experiment consisted of video clips (448 × 336 pixels, 50 frames/s) showing an actor, viewed from the side, performing hand actions directed toward objects (manipulative actions), toward his or her own skin (skin‐displacing actions), or toward another person (interpersonal actions; Fig. 1). Each of the three action classes included 16 videos: four action exemplars, each in four versions (two actors × two targets).

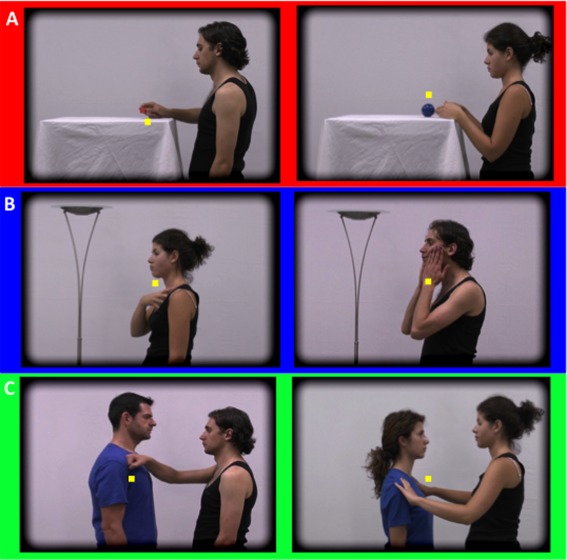

Figure 1.

Action classes in the main experiment: manipulative (A, red), skin‐related (B, blue), and interpersonal actions (C, green). Frames from action videos: using the right hand (left) or both hands (right). Yellow dot: fixation point, above or below action target.

The videos of manipulative hand actions showed human actors using the right hand to grasp or drag an object, or both hands to drop or push an object. The actions were performed by a male or female actor on a small red cube or a larger blue ball, yielding four versions of each action exemplar (Table 1). The objects lay on a table, with the hands resting on the table next to the object until the action began. Hence, manipulative actions involved only the wrist and fingers.

Table 1.

Schematic overview of experimental videos

| Action class | Targets (two sizes) | Positive‐valence actions | Negative‐valence actions | Actors |
|---|---|---|---|---|
| Manipulation | Big ball; small cube | Grasp (R hand); drag (R hand) | Drop (2 hands); push (2 hands) | Female; male |
| Skin‐displacing | (L) torso; (L) cheek | Massage (R hand); rub (2 hands) | Hit (2 hands); scratch (R hand) | Female; male |
| Interpersonal | Short girl; tall boy | Touch (R hand); hug (2 hands) | Punch (R hand); push (2 hands) | Female; male |

The videos of skin‐displacing actions showed human actors using the right hand to scratch the skin or massage their muscles and overlying skin, or both hands to hit or rub the skin. The actions were performed by a male or female actor and were directed toward either the chest or the face of the actor for bimanual actions, or toward the left side of the face or chest for unimanual actions, again yielding four versions of each action exemplar. At the start of the action, the arms rested along the body; hence these actions also involved the shoulders and elbows, in addition to the wrist and fingers.

The videos of interpersonal actions showed human actors using the right hand to touch or punch another person, or both hands to hug or push this other person. The actions were performed by a male or female actor toward either a short girl or a tall boy, again yielding four versions of each exemplar. In these actions, the target person did not react. Again, at the start of the actions, the arms rested along the body. Hence these actions, like the skin‐displacing actions, involved the shoulder and elbow in addition to the fingers and wrist. Thus, the first action class involved only the distal parts of the upper limb, while the last two involved the whole upper limb. Of course, the upper‐limb segments moved in different ways in the three action classes, depending on the goal to be achieved, but this is part of the definition of an action class.

We took extensive precautions to equalize as many visual, motor, and cognitive aspects as possible across the three classes (Table 1): the same two actors, wearing the same clothing and performing the actions without expressing any particular emotion; the same proportion of uni‐ and bimanual actions; the same proportion of actions with positive and negative valence; and the same proportion of small and large targets. Efforts were also made to equalize the scenes in which the actions took place. Lighting and background were identical, as was the general organization of the scene. The actor stood on the right and kept his or her hands in the middle of the scene while performing the action, with the target on the left. The objects to be manipulated were placed on a table occupying the left part of the scene, as was the person targeted by the interpersonal actions. To have an “object” on the left in the skin‐displacing actions as well, we introduced a lamp into these videos.

Two types of control stimuli were used. The rationale is as follows: an action may be described as an integration of two main components, a figural component (the shape of the body) and a motion component (the motion vectors of the body). To control for both, we used static images taken from the action videos and “dynamic scrambled” stimuli, derived by animating a noise pattern with the motion extracted from the original action video. The static images consisted of three frames taken from the beginning, middle, and end of the video, capturing the shape of the actor at different stages of the action. These images control both for shape and for lower‐order static features such as color or spatial frequency. To create “dynamic scrambled” control videos, the local motion vector was computed for each pixel on a frame‐by‐frame basis [Pauwels and Van Hulle, 2009]. These vectors were then used to animate a random‐dot texture pattern (isotropic noise image). The resulting videos contained exactly the same amount of local motion as the originals, but no static configuration information. These videos were further processed in two steps, improving on the temporal scrambling procedure used by Abdollahi et al. [2013]. First, each frame of the video was divided into 128 squares whose side length increased toward the edge of the frame (from 0.26° to 1.2°). The starting frame was randomized for each square, thereby temporally scrambling the global motion pattern. Second, to remove the biological kinematics, the optic flow within each square was replaced, in each frame, by a uniform translation whose speed and direction equaled the mean (averaged over the square) speed and direction of the optic flow. This procedure eliminated the global perception of a moving human arm and hand, but within each square, local motion remained identical to that in the original video. Mean luminance of the “dynamic scrambled” and original videos was identical.

Each video clip thus had two corresponding control stimuli (static and dynamic scrambled), resulting in a 3 × 3 design with the factors action class (three levels) and video type (three levels). To assess the visual nature of the fMRI signals, we included an additional fixation baseline condition, in which a gray rectangle of the same size and average luminance as the videos was shown. This minimized changes in luminance, and thus in pupil size, across conditions.
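The per‐square processing of the dynamic scrambled controls can be sketched as follows. This is a minimal, hypothetical Python illustration, not the authors' code. It assumes that the optic flow of one square is already available as a list of frames, each a list of per‐pixel (dx, dy) vectors (in the study these came from the algorithm of Pauwels and Van Hulle [2009]). It reads "mean speed and direction" as the mean vector length and the direction of the mean vector, and it assumes that the randomized starting frame wraps around circularly, which the text does not specify. The function names are illustrative.

```python
import math
import random


def mean_translation(vectors):
    """Replace one square's per-pixel flow by a single uniform translation.

    Speed = mean vector length; direction = direction of the mean vector
    (one reading of 'mean speed and direction', assumed for this sketch).
    """
    n = len(vectors)
    speed = sum(math.hypot(dx, dy) for dx, dy in vectors) / n
    mean_dx = sum(dx for dx, _ in vectors) / n
    mean_dy = sum(dy for _, dy in vectors) / n
    angle = math.atan2(mean_dy, mean_dx)
    return (speed * math.cos(angle), speed * math.sin(angle))


def scramble_square(flow_frames, rng):
    """Temporally scramble one square, then remove its biological kinematics.

    flow_frames: list over frames of lists of (dx, dy) pixel vectors.
    The random start frame wraps around circularly (assumption).
    """
    start = rng.randrange(len(flow_frames))
    shifted = flow_frames[start:] + flow_frames[:start]
    return [mean_translation(frame) for frame in shifted]


# Usage: two frames of a toy square containing two pixels.
frames = [[(1.0, 0.0), (3.0, 0.0)], [(0.0, 2.0), (0.0, 2.0)]]
translations = scramble_square(frames, random.Random(1))
print(translations)
```

Each of the 128 squares would receive its own random offset, so the global motion pattern is desynchronized while each square keeps its own motion time course.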

Control experiment

In the first session of the control experiment, the skin‐displacing action videos of the main experiment were used again. Stimuli in the second session consisted of video clips (448 × 336 pixels, 50 frames/s, 2.6 s) showing an actor performing two subclasses of skin‐directed actions: simple touch, or skin‐displacing actions characterized by a motion of the hand to or over the skin of a body region. The two subclasses had similar reaching stages but differed in their final stages: in simple touch actions, the actor's right hand reached the body region and then remained motionless; in skin‐displacing actions, the skin was actively manipulated once the body part was contacted. Each subclass included four exemplars: for skin‐displacing actions, those used in the main experiment (massaging, scratching, rubbing, and hitting); for simple touch actions, four types of touch (contacting the skin of the body region with one, two, or four fingers, or with the lateral edge of the hand). Each of these eight actions was executed by two actors (male and female), always with the right hand, toward three body regions: upper torso, left cheek, and left shoulder. The three body parts and the two action subclasses produced a 3 × 2 experimental design with six conditions, plus a fixation condition used as an explicit visual baseline. Because we only wanted to compare the two skin‐related action subclasses directly, we did not use control conditions.

General stimulus characteristics

All videos in both the main and control experiments measured 17.7° × 13.2° and lasted 2.6 s. The edges were blurred with an elliptical mask (14.3° × 9.6°), which left the actor and the background of the video unchanged but blended the video gradually and smoothly into the black surround. A 0.2° fixation point was shown in all conditions. For the action videos of the main experiment, this point was presented in two positions, above and below the location of the movement in the video (Fig. 1), to avoid retinotopic effects [Jastorff et al., 2010]. The variation in fixation position was similar for the three action classes, with the action occurring above the fixation point in half the videos and below it in the other half. How this was achieved differed between the action classes, as they had different targets. For manipulative actions, the fixation point was positioned below the hands for grasping and dropping, and above them for pushing and dragging. For skin‐displacing actions, it was positioned at a level between the two targets, the cheek and the chest; for interpersonal actions, it was positioned between the shoulder levels of the short and tall persons. For control stimuli, the fixation point was placed at the same position as in the original videos. In the first session of the control experiment, the fixation point was positioned as in the main experiment. In the second session, it was positioned just above (4 runs) or just below (the remaining 4 runs) the hand–body contact zone.
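As a worked example of the stimulus geometry, the sketch below converts the reported visual angles into video pixels. It assumes that the 448 × 336‐pixel frame maps linearly onto the 17.7° × 13.2° display area; this is an assumption for illustration, and it ignores the head‐mounted display's own 800 × 600 resolution and any rescaling. The helper names are hypothetical.

```python
VIDEO_PX = (448, 336)      # video width, height in pixels
VIDEO_DEG = (17.7, 13.2)   # same extent in degrees of visual angle


def px_per_deg(axis=0):
    """Pixels per degree along the horizontal (0) or vertical (1) axis."""
    return VIDEO_PX[axis] / VIDEO_DEG[axis]


def deg_to_px(deg, axis=0):
    """Convert a visual angle to video pixels under the linear-mapping assumption."""
    return deg * px_per_deg(axis)


print(f"horizontal: {px_per_deg(0):.2f} px/deg, vertical: {px_per_deg(1):.2f} px/deg")
print(f"fixation point (0.2 deg): {deg_to_px(0.2):.1f} px")
print(f"scrambling squares: {deg_to_px(0.26):.1f} to {deg_to_px(1.2):.1f} px")
print(f"elliptical mask: {deg_to_px(14.3, 0):.0f} x {deg_to_px(9.6, 1):.0f} px")
```

Under this assumption the two axes give nearly the same scale (about 25.3 and 25.5 px/deg), so the fixation point spans roughly 5 video pixels and the scrambling squares roughly 7 to 30 pixels.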

Presentation order of conditions

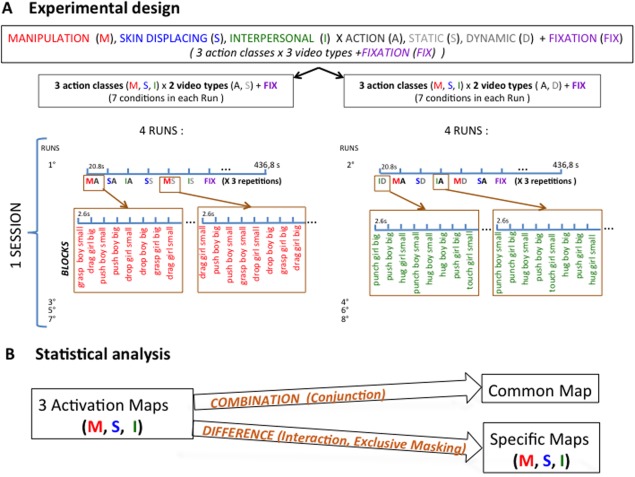

The main experiment included a single session per subject. Each run included either static or dynamic control blocks, reducing the 3 × 3 design to two 2 × 3 designs (Fig. 2A). Four runs of each type (static or dynamic controls) were acquired per subject in an interleaved manner during a single session (in half of the subjects, odd runs had static controls; in the other half, even runs). Within a run, the seven conditions of the main experiment (three action classes, three controls, and the fixation baseline) were presented in blocks lasting 20.8 s, each condition being presented three times, for a total of 436.8 s (Fig. 2A). Each experimental block included eight videos of a given class, corresponding to the four action exemplars performed by both actors. Both the individual videos within a block and the order of the blocks were selected pseudorandomly, and counterbalanced across runs and participants. Over the course of two runs, all video clips for a given class (n = 16: 4 exemplars × 2 genders × 2 targets) were shown three times. In runs with static control blocks, each of the three static blocks of a given class used a different frame of the video (start, middle, or end).
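The block timing can be checked with simple arithmetic. The sketch below uses a hypothetical helper with default values taken from the text; it derives the clip duration, the number of blocks per condition, the number of 3‐s volumes per run, and how often each of the 16 videos of a class is shown across two runs. Rounding the run length up to whole volumes is an assumption, and dummy volumes are not included.

```python
import math


def run_summary(run_s=436.8, block_s=20.8, n_conditions=7,
                videos_per_block=8, tr_s=3.0, n_runs=8, videos_per_class=16):
    """Derive timing quantities of one run from the reported design values."""
    blocks_per_run = round(run_s / block_s)            # 436.8 / 20.8 = 21 blocks
    reps_per_condition = blocks_per_run // n_conditions
    video_s = block_s / videos_per_block               # duration of one clip
    vols_per_run = math.ceil(run_s / tr_s)             # whole TRs covering the run
    # Each class fills reps_per_condition blocks per run, 8 clips per block.
    shows_over_two_runs = 2 * reps_per_condition * videos_per_block / videos_per_class
    return {
        "video_s": video_s,
        "reps_per_condition": reps_per_condition,
        "vols_per_run": vols_per_run,
        "vols_per_session": vols_per_run * n_runs,
        "shows_over_two_runs": shows_over_two_runs,
    }


print(run_summary())
```

With the default values this gives 2.6‐s clips, three blocks per condition per run, 146 volumes per run, and 1,168 volumes per eight‐run session, consistent with the volume count reported for the main session; each clip of a class is shown three times over two runs.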

Figure 2.

Methods: A: Order of conditions of the main experiment; B: statistical analysis of the main experiment.

The control experiment included two MRI sessions. The first consisted of 8 runs including two experimental conditions (skin‐displacing actions and their static or dynamic controls) plus the fixation condition as an explicit visual baseline. As this session was intended to localize the parietal opercular (OP) region in each subject, the skin‐displacing action and control stimuli were exactly those used in the main experiment. Dynamic and static control stimuli were placed in the same run but in different repetitions of the blocks. In each 20.8‐s block, eight videos were shown. Each run repeated the conditions six times (i.e., with three static and three dynamic control blocks), for a total run duration of 375 s. In half the runs, the control condition was presented first and the skin‐displacing actions second; in the other half, this order was inverted. The fixation condition was always the last of the three. Across the six action blocks of a run, the 16 variants of skin‐displacing videos were each shown three times. The second session was devoted to the videos of the skin‐directed action subclasses. The seven conditions (3 body parts × 2 subclasses, plus the fixation baseline) were presented in 20.8‐s blocks and repeated three times in each run, for a total of 436.8 s. Each experimental block included all eight videos of a given factorial condition, corresponding to four action exemplars performed by both actors. Both the order of the blocks and the videos within a block were selected pseudorandomly and counterbalanced across runs and participants. Eight runs were acquired per subject during the second session. All runs started with the acquisition of four dummy volumes to ensure that the fMRI signal had reached a steady state.

Presentation and Data Collection

Participants lay supine in the bore of the scanner. Visual stimuli were presented in the fronto‐parallel plane by means of a head‐mounted display (60 Hz refresh rate; Resonance Technology, Northridge, CA) with a resolution of 800 × 600 pixels (horizontal × vertical) in each eye. The display was controlled by an ATI Radeon 2400 DX dual‐output video card (AMD, Sunnyvale, CA). Sound‐attenuating headphones were used to muffle scanner noise and to give instructions to subjects. Stimulus presentation was controlled by E‐Prime software (Psychology Software Tools, Sharpsburg, PA). To reduce head motion during scanning, the subjects’ heads were padded with PolyScan™ vinyl‐coated cushions. Throughout the scanning session, eye movements were recorded with an infrared eye‐tracking system (60 Hz; Resonance Technology, Northridge, CA).

Scanning was performed on a 3T MR scanner (GE Discovery MR750, Milwaukee, WI) with an 8‐channel receiver coil, located at the University Hospital of the University of Parma. Functional images were acquired using gradient‐echo echo‐planar imaging with the following parameters: 49 horizontal slices (2.5 mm slice thickness; 0.25 mm gap), repetition time (TR) = 3 s, echo time (TE) = 30 ms, flip angle = 90°, 96 × 96 matrix with a 240 mm field of view (FOV; 2.5 × 2.5 mm in‐plane resolution), and ASSET factor of 2. The 49 slices of each volume covered the entire brain from cerebellum to vertex. A three‐dimensional (3D) high‐resolution T1‐weighted IR‐prepared fast SPGR (BRAVO) image covering the entire brain was acquired in one of the scanning sessions and used for anatomical reference. Its acquisition parameters were: TE/TR 3.7/9.2 ms; inversion time 650 ms; flip angle 12°; acceleration factor (ARC) 2; 186 sagittal slices acquired at 1 × 1 × 1 mm³ resolution. A single scanning session required about 90 min. In total, 1,168 volumes were collected in a main experimental session, and 1,008 and 1,168 volumes in the first and second sessions of the control experiment, respectively.
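The functional acquisition geometry follows directly from the listed parameters. A minimal sketch (hypothetical helper) computing the in‐plane voxel size, the slice spacing, and the slab thickness covered by the 49 slices, assuming the 0.25‐mm gap occurs only between adjacent slices:

```python
def epi_geometry(fov_mm=240.0, matrix=96, n_slices=49, thickness_mm=2.5, gap_mm=0.25):
    """In-plane voxel size, centre-to-centre slice spacing, and total slab coverage (mm)."""
    in_plane_mm = fov_mm / matrix                          # 240 / 96 = 2.5 mm
    slice_spacing_mm = thickness_mm + gap_mm               # 2.5 + 0.25 mm
    coverage_mm = n_slices * thickness_mm + (n_slices - 1) * gap_mm
    return in_plane_mm, slice_spacing_mm, coverage_mm


print(epi_geometry())  # (2.5, 2.75, 134.5)
```

The acquired slices are therefore 2.5 mm thick and spaced 2.75 mm apart, before resampling to 2‐mm voxels during normalization.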

Analysis of Main Experiment

Data analysis was performed using the SPM8 software package (Wellcome Department of Cognitive Neurology, London, UK) running under MATLAB (The MathWorks, Natick, MA). Preprocessing for the main experiment involved: (1) realignment of the images, (2) coregistration of the anatomical image with the mean functional image, (3) spatial normalization of all images to a standard stereotaxic space (MNI) with a voxel size of 2 × 2 × 2 mm, and (4) smoothing of the resulting images with an isotropic Gaussian kernel of 6 mm. Data from two subjects were discarded because more than 10% of their volumes were corrupted, either because the global signal deviated by more than 1.5% from its mean value or because scan‐to‐scan movement exceeded 0.5 mm per TR in any of the six realignment parameters (ArtRepair toolbox for SPM8). For each subject, condition onsets and durations were modeled with a general linear model (GLM). The design matrix comprised 13 regressors: seven modeling the conditions (three actions, three controls, and baseline) and six from the realignment process (three translations and three rotations). All regressors were convolved with the canonical hemodynamic response function. We performed both statistical parametric mapping (SPM) and psychophysiological interaction analyses.
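Two steps of this pipeline can be sketched in plain Python; this is an illustration, not the SPM8 or ArtRepair code. The first pair of functions flags volumes with the two criteria given above (global‐signal deviation above 1.5% of the mean, or a scan‐to‐scan change above 0.5 mm in any realignment parameter) and applies the 10% exclusion rule; it assumes the rotation parameters have already been expressed in millimeters, which ArtRepair handles in its own way. The remaining functions build one condition regressor by convolving a block boxcar with a double‐gamma HRF that uses the default shape parameters of SPM's canonical HRF (response delay 6 s, undershoot delay 16 s, undershoot ratio 1/6, 32‐s kernel); sampling once per TR is a coarse approximation of SPM's finer microtime resolution. All names are hypothetical.

```python
import math


def flag_volumes(global_signal, motion, sig_pct=1.5, move_mm=0.5):
    """Indices of volumes violating either artifact criterion (sketch)."""
    mean_sig = sum(global_signal) / len(global_signal)
    bad = set()
    for i, g in enumerate(global_signal):
        # Criterion 1: global signal deviates from its mean by more than sig_pct %.
        if abs(g - mean_sig) / mean_sig * 100.0 > sig_pct:
            bad.add(i)
        # Criterion 2: scan-to-scan change above move_mm in any of the six
        # realignment parameters (all assumed to be expressed in mm).
        if i > 0 and any(abs(a - b) > move_mm for a, b in zip(motion[i], motion[i - 1])):
            bad.add(i)
    return sorted(bad)


def exclude_subject(n_bad, n_volumes, max_fraction=0.10):
    """Discard a subject when more than 10% of its volumes are flagged."""
    return n_bad / n_volumes > max_fraction


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def canonical_hrf(tr, length_s=32.0):
    """Double-gamma HRF (shapes 6 and 16, undershoot ratio 1/6), normalized to unit sum."""
    n = int(length_s / tr) + 1
    h = [gamma_pdf(i * tr, 6.0) - gamma_pdf(i * tr, 16.0) / 6.0 for i in range(n)]
    total = sum(h)
    return [v / total for v in h]


def block_regressor(onsets_s, duration_s, n_scans, tr):
    """Boxcar sampled once per TR, convolved with the HRF above."""
    box = [0.0] * n_scans
    for onset in onsets_s:
        for k in range(n_scans):
            if onset <= k * tr < onset + duration_s:
                box[k] = 1.0
    h = canonical_hrf(tr)
    return [sum(box[k - j] * h[j] for j in range(len(h)) if k - j >= 0)
            for k in range(n_scans)]
```

For example, `block_regressor([0.0, 145.6], 20.8, 146, 3.0)` would model one condition presented at two hypothetical block onsets within a 146‐volume run.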

For the SPM analysis, we calculated contrast images for each participant and performed a second‐level random‐effects analysis [Holmes and Friston, 1998] for the group of 26 subjects. We defined three different types of statistical maps (Fig. 2B). In the computation of these maps, simple or interaction contrasts were defined at the first level, while conjunctions and masking were performed at the second level. The first type of map took the conjunction [conjunction null, Nichols et al., 2005] of the contrasts comparing the action condition to the static and to the dynamic control conditions. This conjunction was inclusively masked by the contrast action condition versus fixation at P < 0.01 uncorrected, to define the activation map for each action class, as in the previous study [Abdollahi et al., 2013]. Thus, the activation map indicates the network of visually responsive brain regions that are significantly more activated by the observation of that action class than by either the static or the dynamic control condition. The second type of map used in the present analysis is the conjunction [conjunction null, Nichols et al., 2005] of the activation maps of the three action classes. It defines the common activation map, comprising the visually responsive regions that were significantly activated, relative to controls, by the observation of all three action classes.
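The construction of the activation and common maps can be sketched voxel by voxel. Under the conjunction null, a voxel passes a conjunction only if every contrast exceeds the threshold, that is, if the minimum statistic does [Nichols et al., 2005]. The sketch below works on plain lists of t values, and it treats the common map as a logical AND of binary activation maps, a simplification of the second‐level conjunction. Thresholds and data are placeholders, not those of the study, and the function names are hypothetical.

```python
def conjunction(maps, threshold):
    """Minimum-statistic conjunction: True where every map exceeds the threshold."""
    return [min(values) > threshold for values in zip(*maps)]


def activation_map(t_vs_static, t_vs_dynamic, t_vs_fix, thr, mask_thr):
    """Action > static AND action > dynamic, inclusively masked by action > fixation."""
    conj = conjunction([t_vs_static, t_vs_dynamic], thr)
    return [c and f > mask_thr for c, f in zip(conj, t_vs_fix)]


def common_map(*activation_maps):
    """Voxels present in the activation maps of all action classes."""
    return [all(v) for v in zip(*activation_maps)]


# Usage with three toy voxels and placeholder thresholds.
print(activation_map([4.0, 4.0, 1.0], [3.5, 0.5, 4.0], [5.0, 5.0, 5.0], 3.0, 2.33))
```

The first voxel passes because both contrasts exceed the threshold; each of the other two fails one of them.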

The third type of map examined the interaction between action class and video type, to determine which regions were differentially activated by the observation of a particular action class compared with the observation of the two remaining classes. This interaction analysis ensures that the reported differences in activity cannot be explained by lower‐order factors also present in the control conditions. It can be written as the conjunction of the interaction for the static controls, (a1 − st1) − ½(a2 − st2) − ½(a3 − st3), and the interaction for the dynamic controls, (a1 − ds1) − ½(a2 − ds2) − ½(a3 − ds3), where a, st, and ds denote the action, static, and dynamic scrambled conditions, 1 denotes the class of interest, and 2 and 3 the two other classes. To ensure that the interaction is due to strong activity in the action condition for the class of interest (a1) rather than to strong activity in the control conditions of the two other classes (st2,3 and ds2,3), this contrast was masked with the activation map of the class of interest at P < 0.01. It was also inclusively masked with the contrast a1 − fix at P < 0.01 uncorrected, to ensure that the activation reflected visual responses. As an interaction guarantees only that the activation by one class is larger than that evoked by the two other classes, we also exclusively masked the contrast with the activation maps of the two other classes at P < 0.01 uncorrected. This ensures that there is little or no significant activation for the other classes. This combination of the interaction with its inclusive and exclusive masks defines the specific map for a given class, which includes the visually responsive regions activated specifically by observing that action class. Replacing the activation maps of the other two classes by the less stringent common activation map as the exclusive mask of the interaction, while keeping the two inclusive masks, defines the preferential map of a given class. This possibility was explored only when the specific map for an action class was empty.

All maps were thresholded at P < 0.05, FWE corrected at either the voxel or cluster level. Voxels reaching P < 0.001 uncorrected are indicated in the activation maps and the common map (figures and tables) for illustrative purposes and to avoid threshold effects (e.g., in interhemispheric asymmetry).
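The interaction weights and the masking logic described above can be sketched as follows. The regressor order (three actions, the three controls of one type, fixation) is an assumption for illustration; the same weights apply with c standing for either the static (st) or dynamic scrambled (ds) controls. The map functions combine Boolean voxel lists such as those produced by a thresholded contrast. All names are hypothetical.

```python
from fractions import Fraction

# Assumed regressor order; c1..c3 stand for st1..st3 or ds1..ds3.
CONDITIONS = ["a1", "a2", "a3", "c1", "c2", "c3", "fix"]


def interaction_weights(cls):
    """Weights of (a_k - c_k) - 1/2 (a_j - c_j) - 1/2 (a_l - c_l), k = class of interest."""
    w = {name: Fraction(0) for name in CONDITIONS}
    for k in (1, 2, 3):
        sign = Fraction(1) if k == cls else Fraction(-1, 2)
        w[f"a{k}"] += sign
        w[f"c{k}"] -= sign
    return [w[name] for name in CONDITIONS]


def specific_map(interaction, own_activation, action_vs_fix, other_activations):
    """Interaction, inclusively masked by the two own maps, exclusively by each other class map."""
    return [i and a and f and not any(others)
            for i, a, f, *others in zip(interaction, own_activation,
                                        action_vs_fix, *other_activations)]


def preferential_map(interaction, own_activation, action_vs_fix, common):
    """Same inclusive masks; only the common activation map is used as exclusive mask."""
    return [i and a and f and not c
            for i, a, f, c in zip(interaction, own_activation, action_vs_fix, common)]


print(interaction_weights(1))
```

The weights sum to zero, so the interaction is insensitive to any effect shared equally by all conditions; using exact fractions keeps the ½ weights free of rounding.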

A psychophysiological interaction (PPI) analysis examines how functional connectivity depends on stimulus conditions. It assesses a limited form of effective connectivity, in that a direction away from the seed region is implied [Friston et al., 1997; Gitelman et al., 2003]. As our interest in this study is the functional organization of the PPC, we used PPIs to investigate which cortical regions receive inputs from those parietal regions selectively encoding the observation of an action class, that is, sites belonging to class‐specific maps. We used the contrast “action minus controls,” which defines the activation maps, to define the seed regions in individual subjects, retaining the voxels reaching P < 0.05 centered on a local maximum (LM) located within 20 mm of the group LM of the specific parietal region (interaction in the random‐effects analysis). The threshold for the PPI was set at P < 0.05, FWE corrected for multiple comparisons at either the voxel or cluster level. As we were interested in the connectivity of the specific sites with the other parts of the activation map to which they belong, we used the activation map at P < 0.01 as an a priori region of interest (ROI) for small volume correction (SVC), to supplement the whole‐brain analysis.
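In its simplest form, a PPI regressor is the product of the seed time course and the psychological variable [Friston et al., 1997]. The sketch below builds that product at the BOLD level, with a mean‐centered seed time course and a psychological variable coded +1 for action blocks and −1 for control blocks; this coding is an assumption, as the text does not specify it. It is also a simplification: SPM's implementation [Gitelman et al., 2003] forms the product at the neuronal level after deconvolution. Function and variable names are hypothetical.

```python
def ppi_design(seed, psych):
    """Return the columns [psych, seed, ppi] of a simplified, BOLD-level PPI design.

    seed:  ROI-averaged time course of the seed region, one value per scan.
    psych: psychological variable per scan (e.g., +1 action, -1 control; assumption).
    """
    if len(seed) != len(psych):
        raise ValueError("seed and psychological vectors must have equal length")
    mean_seed = sum(seed) / len(seed)
    centered = [s - mean_seed for s in seed]
    ppi = [c * p for c, p in zip(centered, psych)]
    return psych, seed, ppi


psych, seed, ppi = ppi_design([1.0, 3.0, 2.0, 2.0], [1, 1, -1, -1])
print(ppi)
```

A target voxel whose coupling with the seed is stronger during action blocks than during control blocks loads positively on the ppi column, after the main effects of seed and condition are accounted for by the other two columns.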

The activation SPMs, interaction SPMs, and PPI maps were projected (enclosing‐voxel projection) onto flattened left and right hemispheres of the human PALS‐B12 atlas [Van Essen, 2005, http://sumsdb.wustl.edu:8081/sums/directory.do?id=636032] using the Caret software package [Van Essen et al., 2001, http://brainvis.wustl.edu/caret]. Occipital activation sites were identified by comparison with the maximum probability atlas of retinotopic regions [Abdollahi et al., 2014]. Activity profiles plotted the mean (and standard error) across subjects of the MR signal change from the fixation baseline, expressed in percent of average activity, for the different conditions of the experiment. Profiles of specific sites were computed by averaging the voxels reaching P < 0.001 uncorrected in the interaction, with the masking described above. These profiles were obtained with a split analysis, using two runs to define the ROI and the remaining six runs to compute the activity in the different conditions. The main purpose of the activity profiles, which plot the visual responses in the different conditions, was to confirm (1) the nature of the interaction (i.e., increased activation for observing the action class of interest) and (2) the visual nature of the responses. In addition to confirming that the contrasts operate as intended, they also provide a sense of the degree of selectivity. Activity profiles were also computed for the seed regions of the PPI analysis, to confirm the definition of these sites functionally. Finally, activity profiles were used to confirm the involvement of cytoarchitectonic areas in a specific activation site. In this case, the threshold for the interaction defining the specific activation was lowered to P < 0.05 uncorrected, maintaining all masking procedures, and profiles were computed for all voxels of an area reaching that level.
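The activity profiles reduce to a percent signal change per condition followed by a mean and standard error across subjects. A minimal sketch, assuming that each subject's ROI‐averaged signal has already been summarized as one mean value per condition (estimated, in the split analysis, from the six runs not used to define the ROI) and that "average activity" is the subject's mean signal over all conditions; both are assumptions about details the text leaves open. Names are hypothetical.

```python
import math
from statistics import mean, stdev


def percent_change(cond_means, fix_key="fix"):
    """Signal change from fixation, in percent of the subject's average signal."""
    grand = mean(list(cond_means.values()))
    base = cond_means[fix_key]
    return {c: 100.0 * (v - base) / grand for c, v in cond_means.items() if c != fix_key}


def group_profile(subjects):
    """Mean and standard error across subjects for each non-fixation condition."""
    per_subject = [percent_change(s) for s in subjects]
    profile = {}
    for cond in per_subject[0]:
        values = [p[cond] for p in per_subject]
        profile[cond] = (mean(values), stdev(values) / math.sqrt(len(values)))
    return profile


subjects = [
    {"fix": 100.0, "action": 102.0, "static": 100.0},
    {"fix": 100.0, "action": 101.0, "static": 100.0},
]
print(group_profile(subjects))
```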

Analysis of Control Experiment

The 16 runs of the two control sessions were preprocessed together, following the same steps as for the main experiment. The two sessions were then analyzed (SPM8) separately using the data of all 13 subjects. The first session was intended to localize, in each subject, the OP region specific for observing skin‐displacing actions. To that end, a GLM was computed for each subject with the following design matrix: skin‐displacing action, corresponding controls (static and dynamic), fixation, and six regressors from the realignment process (three translations and three rotations). All regressors were convolved with the canonical hemodynamic response function. An OP ROI was defined in individual subjects in three steps. First, the contrast skin‐displacing action versus control, masked with skin‐displacing action versus fixation (both thresholded at P < 0.05), was computed for each subject. Second, we looked for the local maximum nearest to the OP site obtained in the main experiment (−62, −18, 20). Finally, we defined a 27‐voxel ROI around each individual maximum. As for the PPI analysis, we accepted only local maxima less than 20 mm from the original group site. As one subject did not meet this criterion, this subject was omitted, and the following analysis was performed on a sample of 12 subjects.
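The individual ROI definition can be sketched as follows. The sketch assumes that each subject's local maxima (from the thresholded, masked contrast of the first step) are given as MNI coordinates in mm on the 2‐mm grid used after normalization, and it takes the 27‐voxel ROI to be the 3 × 3 × 3 cube of voxels centered on the maximum, which is one natural reading of the text. Names are hypothetical; `math.dist` requires Python 3.8 or later.

```python
import math
from itertools import product

GROUP_OP = (-62, -18, 20)  # group OP site from the main experiment (MNI, mm)


def nearest_maximum(local_maxima, target=GROUP_OP, max_dist_mm=20.0):
    """Closest individual local maximum to the group site, or None if not within 20 mm."""
    if not local_maxima:
        return None
    best = min(local_maxima, key=lambda p: math.dist(p, target))
    return best if math.dist(best, target) < max_dist_mm else None


def cube_roi(center, voxel_mm=2):
    """27-voxel ROI: the center voxel and its 26 neighbors on the voxel grid."""
    offsets = (-voxel_mm, 0, voxel_mm)
    return [tuple(c + d for c, d in zip(center, delta))
            for delta in product(offsets, repeat=3)]


peak = nearest_maximum([(-60, -20, 22), (-40, -40, 40)])
print(peak, len(cube_roi(peak)))
```

A subject returning `None` would be excluded, as was done for one subject in the control experiment.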

The GLM of the second session had a design matrix with the following 13 regressors: skin action torso, skin action shoulder, skin action cheek, touch torso, touch shoulder, touch cheek, fixation, and six motion regressors taken from the realignment process. This GLM was created for each subject independently. Activity profiles were computed in the OP ROIs of individual subjects and then averaged across all 12 subjects. To investigate the relationship between MR activity and the stimuli, we calculated the speed of motion in the videos as a function of time (Fig. 9). To that end, we first extracted the motion in each frame of a video (computing the displacement of each pixel between successive frames with the same algorithm as that used to create the control stimuli, and averaging over the pixels), and then averaged the speed of motion across all eight videos of each condition.
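The motion‐speed time course can be sketched as below, assuming that the per‐pixel displacements between successive frames are already available (in the study they came from the same optic‐flow algorithm used for the control stimuli). Speed is computed as the mean displacement magnitude over pixels and converted from pixels per frame to pixels per second with the 50 frames/s rate; conversion to degrees per second would additionally require the pixel‐to‐degree scale. Names are hypothetical.

```python
import math

FPS = 50  # frame rate of the videos


def speed_time_course(flow_frames, fps=FPS):
    """Mean displacement magnitude over pixels for each frame transition, in px/s."""
    return [fps * sum(math.hypot(dx, dy) for dx, dy in frame) / len(frame)
            for frame in flow_frames]


def condition_speed(videos, fps=FPS):
    """Average the per-video speed time courses across the videos of one condition."""
    courses = [speed_time_course(v, fps) for v in videos]
    n_frames = min(len(c) for c in courses)
    return [sum(c[t] for c in courses) / len(courses) for t in range(n_frames)]


# Usage: two toy videos, each with two frame transitions of two pixels.
v1 = [[(3.0, 4.0), (0.0, 0.0)], [(1.0, 0.0), (1.0, 0.0)]]
v2 = [[(0.0, 2.0), (0.0, 2.0)], [(0.0, 0.0), (0.0, 0.0)]]
print(condition_speed([v1, v2]))
```

For a real condition, `videos` would hold the flow fields of its eight clips; the truncation to the shortest clip only matters if clip lengths differ.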

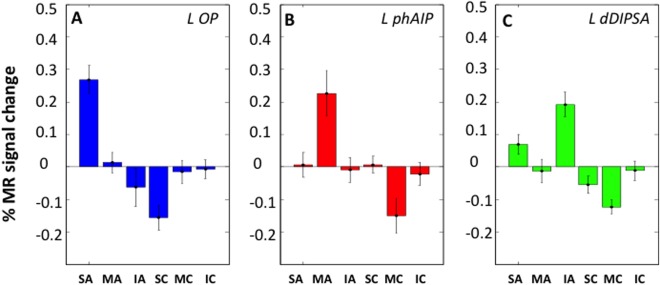

Figure 9.

PPI analysis of main experiment. A and B: average activity profiles of seed regions: left OP (A, n = 21) and left phAIP (B, n = 19); C and D: PPI maps of left phAIP (C) and left OP (D) on flatmap of left hemisphere. Colored patches indicate regions reaching P < 0.001 uncorrected and numbers indicate significant sites (Table 3). In A and B, vertical bars indicate SE. Stars in C, D: seed regions; black outlines in C, D: activation maps (P < 0.01) for observing manipulation (C) and skin‐displacing actions (D), taken from Figures 3 and 4.

RESULTS

Three classes of actions, and their corresponding controls, were presented to the volunteers while they fixated a target in the center of the display. These three action classes were object manipulation, skin‐displacing actions, and interpersonal actions. Examples are shown in Figure 1 (see also Table 1). Eye recordings (see Methods) of all but one of the 28 subjects entering the GLM were suitable for analysis. The subjects averaged 9.6 (SD 1.7) saccades per minute. The number of saccades did not differ across conditions (F 6,20 = 0.3, P = 0.9).

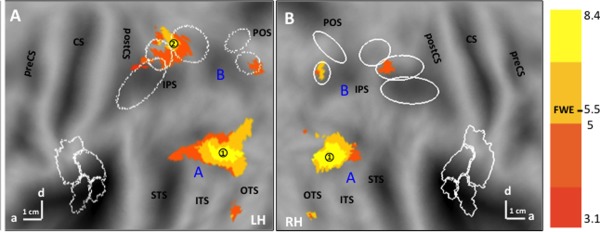

Activation Maps Resulting From the Observation of Each Action Class

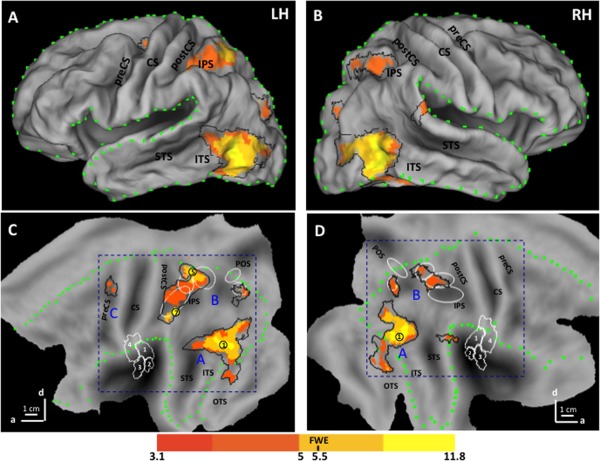

Figure 3 shows the activation map resulting from the observation of object manipulation actions on a rendered brain (3A), an inflated brain (3B), and flat‐maps (3C and D). The map was generated by the conjunction of the contrasts “action observation versus static and versus dynamic controls” (see Methods). The colored areas in C and D reached P < 0.001 uncorrected (see color scale), while the black outlines show the P < 0.01 uncorrected region used for masking in a subsequent analysis (see Methods). The distance between the outline and the colored voxels provides an estimate of the steepness of the SPM. The numbers correspond to activation sites reaching significance (see Methods) and listed in Table 2.
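A conjunction of two contrasts is commonly implemented as a minimum‐statistic test: a voxel survives only if both contrasts exceed the threshold. The sketch below assumes that form (the exact conjunction procedure is given in Methods, not here); the threshold value and voxel t scores are hypothetical illustrations, not the study's values.

```python
def conjunction_map(t_vs_static, t_vs_dynamic, t_threshold):
    """Minimum-statistic conjunction: keep a voxel only if the smaller of its two
    t values (action > static control, action > dynamic control) exceeds t_threshold."""
    return [min(a, b) > t_threshold for a, b in zip(t_vs_static, t_vs_dynamic)]

# Four hypothetical voxels; 3.1 is an illustrative threshold, not the study's exact value.
t_static = [4.0, 5.0, 2.0, 3.5]
t_dynamic = [3.5, 1.0, 4.0, 3.2]
print(conjunction_map(t_static, t_dynamic, 3.1))  # [True, False, False, True]
```

Applying the same function with a laxer threshold (the one corresponding to P < 0.01) would give the outline used for masking.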

Figure 3.

SPM corresponding to the activation map for observation of manipulation: left hemisphere (LH) on rendered brain (A), inflated Caret brain (B), and flatmap (C) and the right hemisphere (RH) on flatmap (D). Color code indicates t score for the conjunction of contrasts action versus static and dynamic controls (see inset); yellow dots and numbers: local maxima reaching FWE corrected level (Table 2); Blue letters A, B, C: three levels of AON; black outlines P < 0.01 uncorrected level; green dotted lines: border of lateral view in A and B; white ellipses from rostral to caudal: phAIP, DIPSA, DIPSM, POIPS, VIPS [Georgieva et al., 2009]; white contours OP1‐4 regions [Eickhoff et al., 2007]. Scale indicates anterior (a) and dorsal (d) directions; one branch indicates 1 cm. Abbreviations: Cgs, cingulate sulcus; SFS, superior frontal sulcus; IFS, inferior frontal sulcus; PreCS, precentral sulcus; CS, central sulcus; PostCS, postcentral sulcus; IPS, intraparietal sulcus; POS, parieto‐occipital sulcus; STS, superior temporal sulcus; ITS, inferior temporal sulcus; OTS, occipito‐temporal sulcus; ColS, collateral sulcus.

Table 2.

Local maxima of the SPM of the activation maps and the common activation map

| Manipulation | Skin‐displacing | Interpersonal | Common | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Left | Right | Left | Right | Left | Right | Left | Right | ||

| Occipito‐temporal | |||||||||

| EVC | −22 −88 −6 | ||||||||

| 1 pITS | −42 −72 2 | 48 −66 2 | −42 −70 6 | 46 −68 4 | −42 −70 6 | 46 −68 2 | 1 | −42 −72 4 | 46 −68 4 |

| 2 OTS | −42 −46 −20 | −42 −46 −16 | 42 −42 −20 | −42 −44 −18 | 42 −46 −14 | −42 −46 20 | 42 −46 14 | ||

| 3 STG | 56 −32 26 | 64 −34 18 | 58 −40 16 | ||||||

| Parietal | |||||||||

| 4 VIPS | −22 −88 28 | 28 −76 28 | 24 −82 40 | −18 −86 22 | 26 −80 34 | −22 −88 28 | 26 −80 32 | ||

| 5 DIPSM | −30 −54 66 | 24 −64 60 | −32 −54 64 | 26 −54 60 | 2 | −24 −60 60 | |||

| 6 DIPSA | 34 −54 58 | −32 −54 64 | 26 −54 60 | −32 −52 64 | 28 −54 62 | 32 −54 54 | |||

| 7 phAIP | −48 −42 50 | ||||||||

| 8 OP | −56 −28 20 | ||||||||

| Premotor | |||||||||

| 9 D PrCG | −46 −6 52 | 46 −2 54 | |||||||

| 10 D PrCS | −28 −14 52 | −28 −10 56 | |||||||

Numbered sites reach FWE corrected P < 0.05 at cluster level (red) or voxel level (yellow hatching) in at least one map; other sites reach P < 0.001 uncorrected in at least 10 voxels.

EVC: early visual cortex = V1‐3 (here ventral V3).

The activation pattern was largely symmetrical, although some local maxima were present in only one hemisphere and premotor activation was left‐sided (Table 2). Both hemispheres were activated at the three processing stages typical of action observation: occipito‐temporal (A in Fig. 3), parietal (B in Fig. 3), and premotor cortex (C in Fig. 3) [Caspers et al., 2010; Jastorff et al., 2010; Molenberghs et al., 2012; Rizzolatti et al., 2014; Rizzolatti and Craighero, 2004]. The occipito‐temporal activation reached its maximum near the posterior end of the inferior temporal sulcus (ITS, 1 in Fig. 3), in the vicinity of human middle temporal (hMT)/V5+, which overlaps with the extrastriate body area [Ferri et al., 2013]. This activation showed two rostrally directed branches: a dorsal one extending into the posterior superior temporal sulcus (pSTS) on the left and the posterior superior temporal gyrus (STG) on the right, and a ventral one extending into the posterior occipito‐temporal sulcus (pOTS). These two branches most likely correspond to the upper and lower banks of the monkey STS, respectively [Jastorff et al., 2012b].

The parietal activation included three bilateral motion‐sensitive areas [Sunaert et al., 1999]: ventral intraparietal sulcus (VIPS), dorsal intraparietal sulcus medial (DIPSM), and dorsal intraparietal sulcus anterior (DIPSA) areas (3 of the 5 white ellipses in Fig. 3). It has been proposed that DIPSM and DIPSA correspond in the monkey to the anterior sector of the lateral intraparietal (LIP) area and posterior AIP, respectively [Durand et al., 2009; Vanduffel et al., 2014]. The PPC activation also included the left phAIP region, proposed to be the homologue of the anterior part of monkey AIP [Georgieva et al., 2009; Vanduffel et al., 2014]. As is typical of action observation, the activations were more extensive in the left hemisphere [Buccino et al., 2001; Jastorff et al., 2010; Wheaton et al., 2004], and only two sites in the left hemisphere (DIPSM and phAIP) reached significance. The premotor activation was located in the upper portion of the precentral sulcus of the left hemisphere, but failed to reach significance.

The activation maps for observing skin‐displacing actions are shown in Figure 4A,B. The map included the three levels of AON, as in Figure 3. The activation pattern in the right hemisphere is similar to that resulting from the observation of manipulative actions, with the activation reaching significance at the DIPSM/DIPSA border (Table 2). Differences from the manipulation map appear primarily in left parietal cortex: skin‐displacing actions activated a smaller part of phAIP than manipulation actions and, most interestingly, significantly activated a sector (8 in Fig. 4A) overlapping the parietal OP regions (white outlines). In addition, the premotor activation was bilateral and located on the precentral gyrus, reaching significance in the left hemisphere.

Figure 4.

SPMs corresponding to the activation maps for observation of skin‐displacing (A,B) and interpersonal actions (C,D) on flatmaps of left (A,C) and right (B,D) hemispheres. Same conventions as Figure 3.

The activation maps for observing interpersonal actions are shown in Figure 4C,D. Again, the occipito‐temporal, parietal, and premotor levels of the AON are activated. The activation pattern in the right hemisphere is similar to that obtained for the two other action classes. In the left hemisphere, differences can be observed in the parietal cortex: the observation of interpersonal actions did not activate the OP regions, unlike observing skin‐displacing actions, and activated a smaller sector of phAIP than observing manipulation. Left phAIP included 425 voxels, 131 of which reached P < 0.001 uncorrected for observing manipulation, compared with 9 for interpersonal actions and 6 for skin‐displacing actions (χ² = 236, df = 2, P < 0.001). In contrast, the observation of interpersonal actions produced a more significant DIPSA activation, extending into DIPSM and more dorsally into SPL, and elicited a stronger parieto‐occipital activation than did observing the two other action classes.
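One reading of the χ² test above that reproduces the reported value is a Pearson test on a 2 × 3 contingency table of significant versus non‐significant phAIP voxels per action class. The exact test is not stated in this section, so treat that reading as an assumption; the function name is hypothetical. For df = 2 the χ² survival function has the closed form exp(−χ²/2), which avoids any statistics library.

```python
import math

def chi2_2xk(significant, totals):
    """Pearson chi-square for a 2 x k table of significant vs non-significant voxel counts."""
    rows = [list(significant), [n - s for s, n in zip(significant, totals)]]
    grand = sum(totals)
    row_sums = [sum(row) for row in rows]
    chi2 = 0.0
    for r, row in enumerate(rows):
        for c, observed in enumerate(row):
            expected = row_sums[r] * totals[c] / grand
            chi2 += (observed - expected) ** 2 / expected
    df = (len(rows) - 1) * (len(totals) - 1)
    return chi2, df

# Left phAIP (425 voxels): voxels at P < 0.001 for manipulation, interpersonal, skin-displacing.
chi2, df = chi2_2xk([131, 9, 6], [425, 425, 425])
p = math.exp(-chi2 / 2)  # exact chi-square survival function, valid only for df = 2
print(f"chi2 = {chi2:.1f}, df = {df}, p = {p:.1e}")
```

The statistic comes out at about 236 with 2 degrees of freedom, matching the value quoted in the text.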

Activation Map Common to All Three Classes of Actions

The common activation map, that is, the voxels belonging to all three activation maps, was relatively restricted and almost symmetrical across the two hemispheres, except for a more extensive parietal activation on the left (Fig. 5, Table 2). The occipito‐temporal activation was centered on pITS and extended toward the pSTS and, to a lesser degree, the pOTS. The parietal activation included a dorsal intraparietal sulcus (IPS) site centered on the DIPSM/DIPSA boundary, extending into the SPL. Other common parietal sites (right DIPSA, bilateral VIPS) did not reach significance (Table 2).
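Defined this way, the common map is simply the intersection of the three activation maps. A minimal sketch, treating each map as a set of voxel indices (a simplification of thresholded SPM images); the voxel indices below are hypothetical.

```python
def common_map(*activation_maps):
    """Voxels belonging to every activation map (maps given as sets of voxel indices)."""
    return set.intersection(*(set(m) for m in activation_maps))

# Hypothetical voxel indices for the three activation maps.
manipulation = {1, 2, 3, 5}
skin_displacing = {1, 2, 3, 6}
interpersonal = {2, 3, 7}
print(sorted(common_map(manipulation, skin_displacing, interpersonal)))  # [2, 3]
```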

Figure 5.

SPM of the common activation map for observing all three action classes on flatmaps of left (A) and right (B) hemispheres. Same conventions as Figure 3.

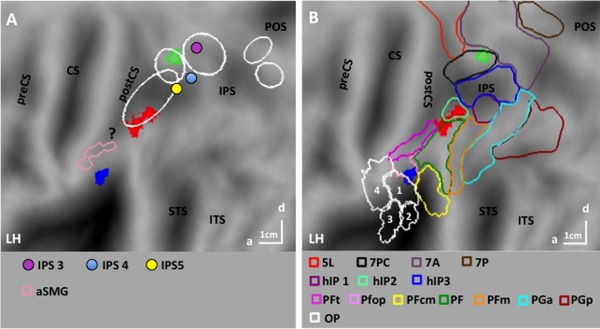

Specific Maps for the Action Classes

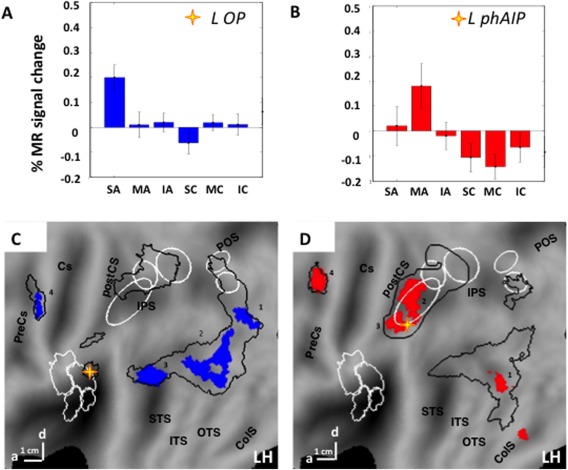

The sites specifically activated during the observation of one of the three action classes, that is, the voxels appearing in only a single activation map (see Methods), were restricted to left parietal cortex (Fig. 6A,B). The activation site specific for observing manipulative actions (red‐filled patch in Fig. 6A,B) was located in the rostral part of left phAIP, at some distance from IPS5, as expected [Konen et al., 2013]. It straddled the boundary between cytoarchitectonic PF [Caspers et al., 2006] and hIP2 [Choi et al., 2006]. The LM (−54, −36, 50) reached significance (t = 4.4, P < 0.001 uncorrected), as did the 47 voxels defining the site. Given its relatively large size, the site reached FWE correction at the cluster level (P < 0.04). Its activity profile (Fig. 7B) shows that the site is indeed very specific, indicating that the contrasts used to define it operate as expected.

Figure 6.

SPMs of the specific maps for manipulation (red‐filled nodes) and skin‐displacing actions (blue‐filled nodes), and of the preferential map for interpersonal actions (green outlines with stripes), on flatmaps of the left hemisphere, superimposed (A) onto the motion‐sensitive parietal regions DIPSA/M and phAIP (ellipses), outlines of the aSMG region [pink, Peeters et al., 2013], centers of the retinotopic regions IPS3‐5 [Konen and Kastner, 2008, colored dots], and the center of the IPL region involved in seen touch [?, Chan & Baker, 2015]; and (B) onto cytoarchitectonic OP regions 1–4 [Eickhoff et al., 2007], IPL regions [Caspers et al., 2006, see inset], IPS regions hIP1‐3 [Choi et al., 2006], and SPL regions [Scheperjans et al., 2008]. Other conventions as in Figure 3.

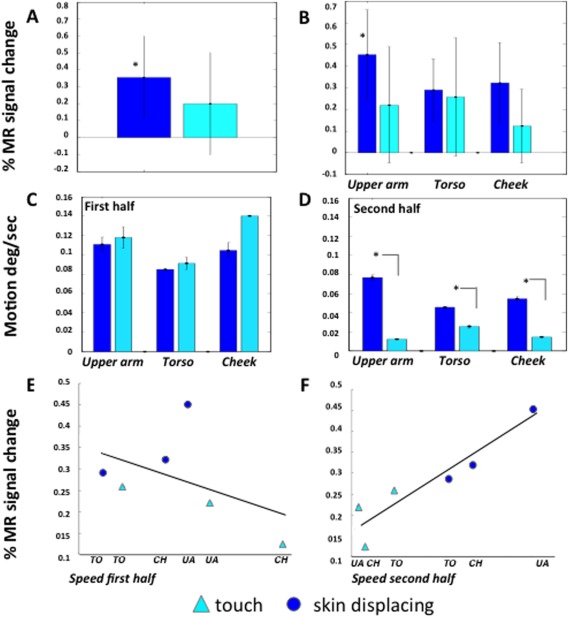

Figure 7.

Activity profiles of left OP (22 voxels, A), phAIP (33 voxels, B), and dDIPSA (10 voxels, C) sites (split analysis, see Methods). Vertical bars: standard errors (SEs). 2‐way ANOVAs: A: main effect video type F 1,25 = 23.1 (P < 10‐6), main effect of action class F 2,50 = 6.4 (P < 0.005), interaction F 2,50 = 30.3 (P < 10‐6); post hoc t‐tests: skin‐displacing action condition > five other conditions (all P < 0.001); B: main effect video type F 1,25 = 9.5 (P < 0.005), main effect of action class F 2,50 = 4.2 (P < 0.05), interaction F 2,50 = 10.5 (P < 0.001); post hoc t‐tests: manipulative actions > five other conditions (all P < 0.01); C: main effect video type F 1,25 = 34.4 (P < 10‐6), main effect action class F 2,50 = 29.1 (P < 10‐6), interaction F 2,50 = 2.9 (P < 0.1); post hoc t‐tests: interpersonal actions > two other action classes (both P < 0.001).

One parietal site was specific for observing skin‐displacing actions (blue‐filled patch in Fig. 6A,B). This site in left OP cortex included only 22 voxels reaching P < 0.001 uncorrected, but its LM (−58, −26, 20) reached FWE correction (t = 6.3, P < 0.005 FWE). The OP site is located on the boundary between PFop [Caspers et al., 2006] and OP1, which corresponds to the somatosensory area SII mapped by Eickhoff et al. [2007]. The activity profile of the OP site (Fig. 7A) illustrates its specificity, again confirming the efficiency of the masking procedure in complementing the interaction contrast. To further investigate the relative involvement of OP1 and PFop in the OP site, the threshold of the interaction was lowered to P < 0.05 uncorrected, yielding 70 voxels that were about equally divided between the two areas (Fig. 8A; OP1: 32; PFop: 38). The profiles of these two groups of voxels were very similar (Fig. 8B,C), confirming that the OP site belonged to both OP1 and PFop. There was no parietal site specific for observing interpersonal actions.

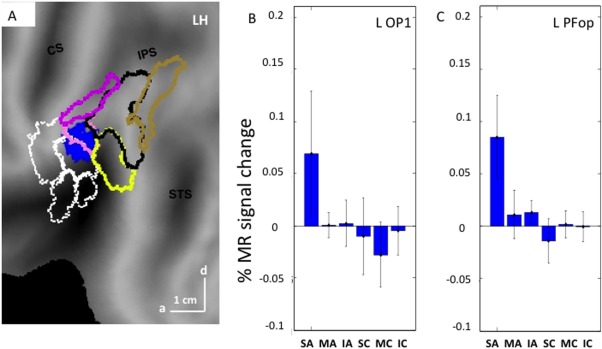

Figure 8.

A: SPM of the specific map for observing skin‐displacing actions on flatmap of LH, centered on the parietal operculum; blue patch: voxels reaching P < 0.05 uncorrected in the interaction; B and C: activity profiles for the voxels selected in OP1 (B: 32 voxels) and PFop (C: 38 voxels). Vertical bars: SE.

Preferential Maps for Interpersonal Actions

The absence of any specific parietal region for observing interpersonal actions prompted us to assess which areas were preferentially activated for this class of actions, that is, whether any visually responsive voxels outside the common map were more strongly activated in the interpersonal map than in the other two (see Methods). One region was preferentially activated during observation of interpersonal actions (Fig. 6A,B, green outline). This site was located in the SPL of the left hemisphere near the IPS, more precisely in the caudal half of cytoarchitectonic area 7PC [Scheperjans et al., 2008]. Its proximity to the common activation map may explain why the exclusive masking used to define specific sites prevented it from qualifying as specific. The site was small, including only 14 voxels reaching P < 0.001 uncorrected, but its LM (−30, −52, 62) reached FWE correction at voxel level (t = 5.9, P < 0.02 FWE). The profile of this site (Fig. 7C) indicates that it was activated more strongly by observation of interpersonal actions than by any other condition and, to a lesser degree, also by observing skin‐displacing actions (see figure legend for statistical analysis).
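The difference between the two masking schemes described in the Abstract (specific: interaction exclusively masked with the other classes' activation maps; preferential: interaction exclusively masked with the common map) can be sketched with voxel sets. All maps below are hypothetical toy data, and the function names are illustrative. Voxel 11 mimics the situation proposed for the dDIPSA site: it also belongs to the skin‐displacing map, so exclusive masking removes it from the specific map, but it survives as preferential.

```python
def specific_map(interaction_voxels, other_class_maps):
    """Interaction voxels that fall outside every other class's activation map."""
    excluded = set().union(*other_class_maps)
    return set(interaction_voxels) - excluded

def preferential_map(interaction_voxels, common_voxels):
    """Interaction voxels that fall outside the map common to all classes."""
    return set(interaction_voxels) - set(common_voxels)

# Hypothetical activation maps (voxel indices), e.g. thresholded at P < 0.01.
manipulation = {1, 2, 5}
skin_displacing = {1, 2, 6, 11}
interpersonal = {1, 2, 10, 11}
common = manipulation & skin_displacing & interpersonal  # {1, 2}

# Voxels significant in the interaction contrast favouring interpersonal actions.
interaction_interpersonal = {10, 11}

print(sorted(specific_map(interaction_interpersonal, [manipulation, skin_displacing])))  # [10]
print(sorted(preferential_map(interaction_interpersonal, common)))  # [10, 11]
```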

Effective Connectivity of the Specific Parietal Regions

To better understand the connections of the parietal regions encoding the different classes of actions, we estimated their PPIs with the psychological factor action versus control conditions. The PPI analysis targeted the two specific parietal sites, left phAIP and left OP. We reasoned that if the OP region specific for observing skin‐displacing actions plays a functional role in encoding others’ skin‐displacing actions, in a manner similar to phAIP in encoding others’ manipulative actions, then this region should exhibit a similar pattern of effective connectivity.

Seed regions were obtained in 21 subjects for the left OP, and in 19 for the left phAIP. The specificity of these two seed regions is illustrated by the activity profiles of left OP and phAIP (Fig. 9A,B), both validating the procedures used to define specific regions in individual subjects. As predicted, the projections from left phAIP that were functionally modulated by the experimental conditions were directed to the premotor and occipito‐temporal regions of its own activation map (Fig. 9C, Table 3), that is, those AON nodes receiving and sending connections to the parietal cortex [Nelissen et al., 2011]. The projections from the left OP (Table 3) modulated by the stimulus conditions were likewise directed toward premotor and occipito‐temporal regions of its own activation network (Fig. 9D). Thus, the projections from OP and phAIP exhibit similar patterns, targeting the AON levels other than PPC, but with distinct locations within these levels.
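The core of a PPI model is an interaction regressor formed from the seed time course and the psychological factor, entered alongside the two main‐effect regressors. The sketch below is deliberately simplified: it forms the product at the BOLD level, whereas the standard SPM implementation first estimates the underlying neural signal by deconvolution. The +1/−1/0 coding of action, control, and other scans is an assumption, and the function name is hypothetical.

```python
def ppi_regressors(seed_ts, psych):
    """Return the three PPI design columns: interaction, physiological, psychological.

    seed_ts: seed-region time course (one value per scan).
    psych:   psychological vector, assumed coded +1 for action scans,
             -1 for control scans and 0 otherwise.
    Simplification: the product is formed at the BOLD level, without the
    deconvolution step that SPM applies before multiplying."""
    mean = sum(seed_ts) / len(seed_ts)
    centred = [y - mean for y in seed_ts]
    interaction = [y * p for y, p in zip(centred, psych)]
    return interaction, centred, list(psych)

ppi, phys, psy = ppi_regressors([1.0, 3.0, 5.0, 7.0], [1, 1, -1, -1])
print(ppi)  # [-3.0, -1.0, -1.0, -3.0]
```

Voxels whose time courses load significantly on the interaction column, over and above the other two columns, are those whose coupling with the seed changes between action and control conditions.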

Table 3.

The local maxima of the PPI analysis: whole brain and SVC

| Seed / site | MNI coordinates | t value | Brain level: FWE voxel | Brain level: FWE cluster | SVC level: FWE voxel | SVC level: FWE cluster |
|---|---|---|---|---|---|---|
| Seed: left phAIP | | | | | | |
| Col S | −34 −42 −14 | 5.5 | 0.9 | 0.9 | – | – |
| 1 pITS | −38 −66 −2 | 7 | 0.7 | 0.01 | 0.002 | 0.000 |
| 2 dors phAIP | −38 −40 50 | 5.3 | 0.9 | 0.006 | 0.06 | 0.000 |
| 3 ant phAIP | −48 −30 42 | 5 | 0.9 | 0.8 | 0.1 | 0.000 |
| ant STS | −42 −50 −2 | 4.7 | 0.8 | 0.9 | – | – |
| 4 dors PrCS | −26 −12 58 | 6.9 | 0.7 | 0.08 | 0.002 | 0.000 |
| Seed: left OP | | | | | | |
| 1 V3A/V3B | −24 −86 8 | 5.8 | 0.4 | 0.3 | 0.01 | 0.03 |
| 2 pMTG | −38 −60 6 | 7.4 | 0.016 | 0.000 | 0.000 | 0.000 |
| 3 ant STS | −52 −48 14 | 5.7 | 0.3 | 0.3 | 0.01 | 0.03 |
| 4 dors PrCG | −40 −6 50 | 10 | 0.000 | 0.5 | 0.000 | 0.002 |

Numbered sites reach P < 0.05 FWE corrected (bold) in whole brain or with SVC.

Dors, dorsal; ant, anterior.

Control Experiment: What Drives OP in the Skin‐Displacing Action Videos?

The skin‐displacing actions include three components that might explain the activation of OP: (1) the arm reaching movement, (2) skin contact, and (3) hand movements over the skin. Because the activity profile of OP (Figs. 7 and 8) excluded any contribution of the first component, a control experiment was designed to assess the effects of the latter two. To dissociate them, we compared the observation of actions moving the skin (Fig. 10A–C) with that of simple skin touch (Fig. 10D–F), each applied to three body regions: upper arm (Fig. 10A,D), torso (Fig. 10B,E), and cheek (Fig. 10C,F).

Figure 10.

Control experiment: A–F frames taken from videos (insets: enlarged view of target region illustrating the skin displacements) showing skin‐displacing actions (A–C) and touch actions (D–F) toward left upper arm (A, D), left torso (B, E) or left cheek (C, F); G: average time‐course of speed for the six video types: darker blue indicating the skin‐displacing actions (see inset); red dot: time of skin contact.

As in the main experiment, subjects maintained fixation well, averaging 9.5 and 8.7 saccades/min in the first and second sessions, respectively. The number of saccades did not significantly differ between the three conditions of session one (F 2,7 = 0.62, P > 0.5), or the seven conditions of session two (F 6,3 = 0.12, P > 0.9).

The OP site could be recovered in 12 subjects using the data of session 1, contrasting observation of skin‐displacing actions with their controls. An ANOVA was performed to investigate the effects of the factors type of action and body region, tested in session two, on the activation of OP as defined in session one. Both main effects reached significance, including that of type of action (F 1,11 = 5.7, P < 0.05). As shown in Figure 11A, the activation was indeed stronger for skin‐displacing action observation than for the observation of simple touch. However, the interaction between type of action and body region was also significant (F 2,22 = 5.8, P < 0.01): the difference between observing skin‐displacing actions and simple touch was much greater for the upper arm and cheek than for the torso (Fig. 11B).

Figure 11.

Activity of OP site in control experiment: A, B: activity profiles (vertical bars: SE) for skin‐displacing (dark blue) and touch (light blue) actions averaged over body regions (A) and for body regions separately (B). C, D: average speed in the first (C) and second half (D) of the videos for the six different conditions; E, F: correlation of OP activity with average speed in the first (E, r = −0.44, P > 0.3) and second (F, r = 0.93, P < 0.01) halves of the videos.

We noticed that the skin over the torso, being attached to the sternum, is far less mobile than that of the upper arm and cheek (Fig. 10A–F). We therefore analyzed the speed of hand movements in the videos as a function of time (Fig. 10G), averaging speeds over the whole display. All curves reached a maximum in the first half of the video, corresponding to the reaching component, but later stages differed among the subclasses. The speed for the skin‐displacing actions decreased until 30–40 frames after contact (red symbols), but then hovered at a small but nonzero value until the end of the video (Fig. 10G). The speed in the touch videos decreased later, approaching zero between 60 and 70 frames after onset, at contact (red symbols), and thereafter remained very close to zero. As a consequence, throughout the second half of the video the speed was higher for skin‐displacing actions than for touch videos, with greater differences for the cheek and upper arm than for the torso, as predicted (Fig. 11D). In the first halves of the videos, speeds were similar across conditions and, if anything, higher in the touch videos (Fig. 11C).

Given that OP activity and speed in the second halves of the videos varied similarly across conditions, we tested for a correlation (Fig. 11F) and found a strong relationship (r = 0.93, P < 0.01). In contrast, OP activity did not correlate (Fig. 11E) with speed in the first halves of the videos (r = −0.44, P > 0.35). To test whether these two correlation coefficients differed significantly, the correlations were also computed for single subjects. The median correlation for the second half was 0.68, compared with −0.23 for the first half, a significant difference (paired‐sample Wilcoxon signed‐rank test, Z = 2.8, P < 0.005). This analysis indicates that the activity in OP largely reflects hand and skin movements after contact between hand and skin has been established, rather than contact with the skin itself. Indeed, speed in the second halves of the videos explains over 85% of the variance in OP activity across conditions (r² = 0.93² ≈ 0.86). OP also appears to be almost completely insensitive to the initial reaching component of the skin‐displacing actions.
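The arithmetic behind this paragraph can be checked with a few lines: splitting a speed time course into halves, computing a Pearson correlation across conditions, converting r to the variance explained (r²), and testing r against zero with n − 2 degrees of freedom. The helper names are hypothetical, and only the group value r = 0.93 over the six video conditions is taken from the text; the per‐condition activity and speed values are not reproduced here.

```python
import math

def half_means(curve):
    """Mean of the first and second halves of a speed time course."""
    mid = len(curve) // 2
    first, second = curve[:mid], curve[mid:]
    return sum(first) / len(first), sum(second) / len(second)

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def r_to_t(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

# Reported group correlation across the six video conditions (second halves).
r_second_half = 0.93
print(f"r^2 = {r_second_half ** 2:.4f}")          # r^2 = 0.8649
print(f"t(4) = {r_to_t(r_second_half, 6):.2f}")   # t(4) = 5.06
```

A t of about 5.06 with 4 degrees of freedom exceeds the two‐tailed 0.01 critical value (about 4.60), consistent with the reported P < 0.01, and r² ≈ 0.86 is the "over 85%" figure.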

DISCUSSION

To investigate how actions differing in motor goal, but executed with the same effector, are encoded in PPC, we instructed volunteers to observe videos showing three classes of upper limb action: object manipulation, skin‐displacing actions, and interpersonal actions. Results showed that each action class recruits its own PPC sector (a specific one for manipulation and skin‐displacing actions, a preferential one for interpersonal actions), in addition to a cortical “core circuit” active during the observation of all classes.

Skin‐Displacing Actions

The sector specifically activated during observation of skin‐displacing actions was located in the left OP cortex, involving both PFop, an IPL region [Caspers et al., 2006], and OP1, considered the homologue [Disbrow et al., 2000; Eickhoff et al., 2007] of monkey SII [Kaas and Collins, 2001]. The location of this activation on the caudal border of OP1 is consistent with the somatotopic organization of the SII region in human and nonhuman primates [Disbrow et al., 2003; Eickhoff et al., 2007]. The OP site neighbors the anterior supramarginal gyrus (aSMG) tool region (pink outline in Fig. 6A), which is co‐extensive with PFt [Peeters et al., 2013]. The OP activation was specific, as it was evoked neither by observing object manipulation nor by an actor interacting with another person. A control experiment in which we “dissected” the various somatosensory and motor components in the videos of the skin‐displacing actions showed that the OP activation was predominantly due to observing skin manipulation rather than seeing touch, as over 85% of the variance in its activity across conditions was explained by the speed in the second half of the videos, after the hand had touched the skin.

Activation of putative SII, sometimes combined with that of SI, was previously reported during the observation of others being touched by a conspecific's hand [Blakemore et al., 2005; Ebisch et al., 2008; Keysers et al., 2004]. The SII activation was interpreted as resulting from “mirroring” tactile perception. Chan and Baker [2015] provided strong evidence against the involvement of SI or SII in observed touch, which, according to these authors, activates an IPL region in the ventral part of the postcentral sulcus (“?” in Fig. 6). Whether this latter region represents seen touch or observation of tool use (their videos showed a brush stroking a hand), which activates nearby aSMG [Peeters et al., 2009, 2013, pink outline in Fig. 6], remains to be seen. At any rate, these studies all addressed passive touch, that is, a body observed being touched, whereas our study included observing active touch, that is, an actor touching a target. There seems to be a distinction between the active touch of one's own skin and touching an external animate or inanimate “object,” in that only the former drove SII and neighboring PFop to some degree. Our control experiment, however, demonstrated that observing skin‐displacing actions was far more efficient than observing active touch, even of the actors’ own skin, in activating this OP region. Furthermore, the strong dependence of this OP activation on the speed in the second half of the videos supports the view that it reflects observation of the others’ actions rather than seen touch.

Interpersonal Actions

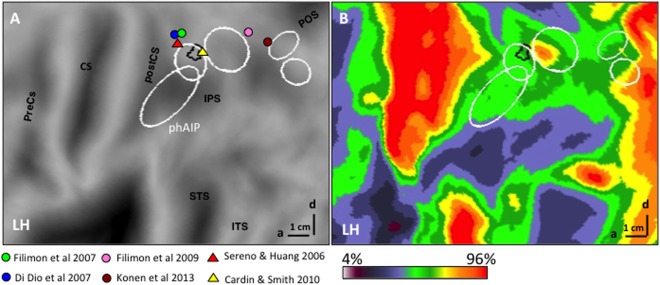

Although no cortical area was active exclusively during the observation of interpersonal actions, the dorsal part of left DIPSA was significantly more active when observing this action class relative to the other two. Its location (Fig. 12A) between the human homologues of the ventral intraparietal (VIP) area proposed by Sereno and Huang [2006] and Cardin and Smith [2010] suggests that this dorsal part of DIPSA may be the putative homologue of VIP. This proposal is consistent with its location near DIPSM, the homologue of anterior LIP [Vanduffel et al., 2014], and near a heavily myelinated zone of the IPS [Fig. 12B, Abdollahi et al., 2014] overlapping DIPSM, possibly homologous to densely myelinated ventral LIP [Lewis and Van Essen, 2000]. It is also consistent with the resemblance between some of the interpersonal actions and the defensive movements evoked in the macaque monkey by air puffs to the face and by electrical stimulation of VIP [Cooke et al., 2003; Cooke and Graziano, 2003]. The observation of actions performed in the peripersonal space of another person activates VIP neurons [Ishida et al., 2010]. A similar “mirror” coding of others’ peripersonal space was also demonstrated, albeit indirectly, in humans by a spatial alignment effect: facilitation of left/right hand movements when the affording part of an object is spatially aligned with the responding hand, provided the object falls within the reaching space of the individual, or outside his peripersonal space but within that of another individual [Ambrosini et al., 2012; Cardellicchio et al., 2011; Costantini et al., 2010]. Thus, the activation of dDIPSA may signal an individual entering the peripersonal space of the actor and contacting his/her body during the interpersonal actions.

Figure 12.

Parietal dDIPSA site preferential for interpersonal actions in relationship to LM from other studies (see inset) (A) and myelin density (color code in inset) map (B) from Abdollahi et al. [2014].

Core Reaching‐to‐Act Circuit and Object‐Related Actions

In humans, the observation of reaching‐to‐grasp actions activates cortex within and around the IPS [Caspers et al., 2010; Grosbras et al., 2012; Jastorff et al., 2010; Molenberghs et al., 2012; Rizzolatti et al., 2014; Rizzolatti and Craighero, 2004]. Reaching‐to‐grasp actions include three components: a proximal transport component, a wrist orientation component, and a distal hand‐shaping component [Jeannerod et al., 1995]. The latter component is prominent in the manipulative action class, and a ventro‐rostral sector within this large reaching‐to‐grasp PPC region, phAIP, was selective for observing manipulative actions. These results are in agreement with previous fMRI studies in humans [Abdollahi et al., 2013; Jastorff et al., 2010], as well as single‐neuron [Pani et al., 2014] and fMRI studies in macaque monkeys [Nelissen et al., 2011].

While two classes, skin‐displacing and interpersonal actions, included a transport component, this component was absent in the manipulative actions, as the hand remained close to the object at the start of the action in our videos. Hence, it is likely that the common circuit relates mostly to the wrist component of reaching‐to‐grasp actions or, more precisely, of reaching‐to‐act in general. The wrist may be the prototypical effector for these regions, just as the hand is for phAIP [Jastorff et al., 2010], the ankle being another effector. This function would explain the location of the main portion of the common parietal regions (vDIPSA/DIPSM) between the grasping observation region (phAIP) and the PPC region dorsal to the IPS, which is involved in observation of reaching without grasping [Di Dio et al., 2013; Filimon et al., 2007, 2009; Konen et al., 2013]. The common regions may also reflect common visual processes, for example, 3D shape‐from‐motion, known to activate DIPSA/M [Vanduffel et al., 2002]. That they correspond to common cognitive processes such as spatial attention or temporal prediction is less likely, insofar as these activate more caudal and dorsal regions [Cotti et al., 2011; Szczepanski et al., 2013].

Action Observation and Execution

Area phAIP is specifically involved in observing grasping and manipulative actions [Abdollahi et al., 2013; present results], but it is well established that this area is also active during actual grasping [Begliomini et al., 2007; Binkofski et al., 1998; Cavina‐Pratesi et al., 2010; Culham et al., 2003; Frey et al., 2005, 2014; Konen et al., 2013]. In the macaque monkey, AIP has also been shown to be active during both the observation [Nelissen et al., 2011] and execution of grasping [Nelissen and Vanduffel, 2011]. Thus, our results directly support the view that observation can serve as a proxy for execution in PPC, at least for manipulative actions. Our results further suggest that this view might also apply to skin‐displacing actions, the observation of which specifically activated putative SII and PFop. Indeed, the effective connectivity of the OP site (putative SII plus PFop) was similar to that of phAIP. This view is also consistent with a recent single‐cell study [Ishida et al., 2013] investigating motor properties of neurons in SII and caudal OP regions. Although most neurons (74%) responded to somatosensory stimuli, as expected [Robinson and Burton, 1980], a subset (32%) exhibited clear motor properties during hand manipulation, generally without somatosensory responses. Similarly, the dorsal DIPSA region, activated preferentially by observing interpersonal actions, may be involved in the planning of these actions using signals of impingement into peripersonal space.

The generalization of the “proxy” proposal from manipulation to the other two action classes that we propose must take into account four factors: lateralization, generalization over effectors, timing, and the motor state of the observer. First, action execution is typically lateralized, in right‐handed subjects, to the left hemisphere. In contrast, action observation might be more bilateral or display lateralization related to nonmotor factors such as location within the visual field. Second, as discussed above, action observation may generalize over more effectors than does execution, which might manifest a typical or dominant effector [Jastorff et al., 2010; Leoné et al., 2014]. Third, the representations related to action execution must maintain a fixed relationship to motor onset, while those related to action observation may be more flexible with regard to visual input onset, especially when the action is unpredictable [Rotman et al., 2006]. Finally, the observer may, unlike the agent, be passive or active. Preparation for ensuing action can indeed influence representations of a previously observed action [Jastorff et al., 2012a]. The last three factors are unlikely to influence our study, as we showed predictable actions, performed with the typical effector, to passive subjects. As all action‐class‐specific sites proved left‐sided in our study, even lateralization is unlikely to intervene. Thus, action observation can be considered a valid proxy for action execution in parietal cortex, at least for the action classes investigated. Even if an extension to other action classes is likely, this need not imply that the proxy hypothesis applies to other parts of cortex; it does not apply to primary motor cortex [Caspers et al., 2010]. Likewise, this does not necessarily imply that parietal activations by execution and observation are fully coextensive.
Taking AIP as an example, one would expect the observation signals to impinge on its predominantly motor sector rather than on the more visual portion related to object affordances. Indeed, the activation by manipulation observation was located in rostral phAIP, sparing DIPSA. However, further work using techniques compatible with action execution, such as surface electroencephalography (EEG) or, preferably, intracranial EEG, is needed to confirm the overlap between the parietal regions involved in observing and executing these upper‐limb actions and to extend the proxy view to other action classes.

Functional Organization of PPC

The activation maps (Figs. 3 and 4) show that observation of the three classes of upper limb actions systematically involved three parts of PPC: a large middle region centered above the IPS, a rostral region in supramarginal/OP cortex, and a caudal region between caudal IPS and posterior occipital sulcus. This broad region includes both a common component located more caudally (Fig. 5) and three specific parts located more rostrally: phAIP, OP/PF, and dorsal DIPSA (Fig. 6). These specific regions support our view that the PPC is organized functionally, that is, according to the nature of the action observed. Indeed, the specific activation of the phAIP site could be explained by the involvement of different upper limb segments, but that of the OP site cannot, as skin‐displacing and interpersonal actions used exactly the same limb segments. Furthermore, the second action class driving dorsal DIPSA in addition to interpersonal actions is not the one predicted from the upper limb segments used. However, the fact that observing all three classes of upper limb actions activates the same general region of PPC could be taken as an argument that the effector used, here the upper limb, is in fact the dominant factor in PPC organization. There is evidence from another action observation study [Abdollahi et al., 2013], however, showing that using the upper limb in climbing drives more dorsal and posterior parts of the PPC, indicating that the body part used cannot be the sole explanation.

Is there, then, a more comprehensive explanation for the fact that observing the three classes of upper limb action broadly engages similar parts of PPC? The proxy view, which seems to apply to PPC, suggests one. Using the upper limb to attain different goals in fact requires different inputs: somatosensory information for skin‐displacing actions; object shape, provided by the visual and also the somatosensory channels, for manipulative actions; and peripersonal space, provided by vision and supplemented by somatosensory inputs, for interpersonal actions. During execution, this diverse sensory information is processed in different but neighboring PPC areas, all of which use the hand as the prototypical effector [Jastorff et al., 2010]. In our opinion, the combination of these two factors, sensory and motor, dictates where a given sensorimotor transformation is performed in PPC. Applying the proxy view then suggests that the PPC regions performing a sensorimotor transformation are also involved in observing the corresponding actions. Thus, our choice to compare observation of different upper limb actions may explain the relative proximity of the specific parietal sites reported here. Based on the results of Jastorff et al. [2010], who showed similar phAIP activation for observing objects manipulated with the hand, foot, or mouth, we might speculate that these specific parietal sites generalize to lower limb or head actions having similar goals.

REFERENCES

- Abdollahi RO, Jastorff J, Orban GA (2013): Common and segregated processing of observed actions in human SPL. Cereb Cortex 23:2734–2753.

- Abdollahi RO, Kolster H, Glasser MF, Robinson EC, Coalson TS, Dierker D, Jenkinson M, Van Essen DC, Orban GA (2014): Correspondences between retinotopic areas and myelin maps in human visual cortex. Neuroimage 99:509–524.

- Ambrosini E, Scorolli C, Borghi AM, Costantini M (2012): Which body for embodied cognition? Affordance and language within actual and perceived reaching space. Conscious Cogn 21:1551–1557.

- Andersen RA, Buneo CA (2002): Intentional maps in posterior parietal cortex. Annu Rev Neurosci 25:189–220.

- Begliomini C, Wall MB, Smith AT, Castiello U (2007): Differential cortical activity for precision and whole‐hand visually guided grasping in humans. Eur J Neurosci 25:1245–1252.

- Beurze SM, de Lange FP, Toni I, Medendorp WP (2009): Spatial and effector processing in the human parietofrontal network for reaches and saccades. J Neurophysiol 101:3053–3062.

- Binkofski F, Dohle C, Posse S, Stephan KM, Hefter H, Seitz RJ, Freund HJ (1998): Human anterior intraparietal area subserves prehension: A combined lesion and functional MRI activation study. Neurology 50:1253–1259.

- Blakemore SJ, Bristow D, Bird G, Frith C, Ward J (2005): Somatosensory activations during the observation of touch and a case of vision‐touch synaesthesia. Brain 128:1571–1583.

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann KP, Zilles K, Fink GR (2001): Polymodal motion processing in posterior parietal and premotor cortex: A human fMRI study strongly implies equivalencies between humans and monkeys. Neuron 29:287–296.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund HJ (2001): Action observation activates premotor and parietal areas in a somatotopic manner: An fMRI study. Eur J Neurosci 13:400–404.

- Cardellicchio P, Sinigaglia C, Costantini M (2011): The space of affordances: A TMS study. Neuropsychologia 49:1369–1372.

- Cardin V, Smith AT (2010): Sensitivity of human visual and vestibular cortical regions to egomotion‐compatible visual stimulation. Cereb Cortex 20:1964–1973.

- Caspers S, Geyer S, Schleicher A, Mohlberg H, Amunts K, Zilles K (2006): The human inferior parietal cortex: Cytoarchitectonic parcellation and interindividual variability. Neuroimage 33:430–448.

- Caspers S, Zilles K, Laird AR, Eickhoff SB (2010): ALE meta‐analysis of action observation and imitation in the human brain. Neuroimage 50:1148–1167.

- Cavina‐Pratesi C, Monaco S, Fattori P, Galletti C, McAdam TD, Quinlan DJ, Goodale MA, Culham JC (2010): Functional magnetic resonance imaging reveals the neural substrates of arm transport and grip formation in reach‐to‐grasp actions in humans. J Neurosci 30:10306–10323.

- Chan AWY, Baker CI (2015): Seeing is not feeling: Posterior parietal but not somatosensory cortex engagement during touch observation. J Neurosci 35:1468–1480.

- Choi HJ, Zilles K, Mohlberg H, Schleicher A, Fink GR, Armstrong E, Amunts K (2006): Cytoarchitectonic identification and probabilistic mapping of two distinct areas within the anterior ventral bank of the human intraparietal sulcus. J Comp Neurol 495:53–69.

- Connolly J, Andersen R, Goodale M (2003): fMRI evidence for a parietal reach region in the human brain. Exp Brain Res 153:140–145.

- Cooke DF, Graziano MSA (2003): Defensive movements evoked by air puff in monkeys. J Neurophysiol 90:3317–3329.

- Cooke DF, Taylor CSR, Moore T, Graziano MSA (2003): Complex movements evoked by microstimulation of the ventral intraparietal area. Proc Natl Acad Sci USA 100:6163–6168.

- Costantini M, Ambrosini E, Tieri G, Sinigaglia C, Committeri G (2010): Where does an object trigger an action? An investigation about affordances in space. Exp Brain Res 207:95–103.

- Cotti J, Rohenkohl G, Stokes M, Nobre AC, Coull JT (2011): Functionally dissociating temporal and motor components of response preparation in left intraparietal sulcus. Neuroimage 54:1221–1230.

- Culham JC, Valyear KF (2006): Human parietal cortex in action. Curr Opin Neurobiol 16:205–212.

- Culham JC, Danckert SL, DeSouza JFX, Gati JS, Menon RS, Goodale MA (2003): Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res 153:180–189.

- Di Dio C, Di Cesare G, Higuchi S, Roberts N, Vogt S, Rizzolatti G (2013): The neural correlates of velocity processing during the observation of a biological effector in the parietal and premotor cortex. Neuroimage 64:425–436.

- Disbrow E, Roberts T, Krubitzer L (2000): Somatotopic organization of cortical fields in the lateral sulcus of Homo sapiens: Evidence for SII and PV. J Comp Neurol 418:1–21.

- Disbrow E, Litinas E, Recanzone GH, Padberg J, Krubitzer L (2003): Cortical connections of the second somatosensory area and the parietal ventral area in macaque monkeys. J Comp Neurol 462:382–399.

- Durand JB, Peeters R, Norman JF, Todd JT, Orban GA (2009): Parietal regions processing visual 3D shape extracted from disparity. Neuroimage 46:1114–1126.

- Ebisch SJH, Perrucci MG, Ferretti A, Del Gratta C, Romani GL, Gallese V (2008): The sense of touch: Embodied simulation in a visuotactile mirroring mechanism for observed animate or inanimate touch. J Cogn Neurosci 20:1611–1623.

- Eickhoff SB, Grefkes C, Zilles K, Fink GR (2007): The somatotopic organization of cytoarchitectonic areas on the human parietal operculum. Cereb Cortex 17:1800–1811.

- Ferri S, Kolster H, Jastorff J, Orban GA (2013): The overlap of the EBA and the MT/V5 cluster. Neuroimage 66:412–425.

- Filimon F (2010): Human cortical control of hand movements: Parietofrontal networks for reaching, grasping, and pointing. Neuroscientist 16:388–407.

- Filimon F, Nelson JD, Hagler DJ, Sereno MI (2007): Human cortical representations for reaching: Mirror neurons for execution, observation, and imagery. Neuroimage 37:1315–1328.

- Filimon F, Nelson JD, Huang R‐S, Sereno MI (2009): Multiple parietal reach regions in humans: Cortical representations for visual and proprioceptive feedback during on‐line reaching. J Neurosci 29:2961–2971.

- Frey SH, Vinton D, Norlund R, Grafton ST (2005): Cortical topography of human anterior intraparietal cortex active during visually guided grasping. Cogn Brain Res 23:397–405.

- Frey SH, Hansen M, Marchal N (2014): Grasping with the press of a button: Grasp‐selective responses in the human anterior intraparietal sulcus depend on nonarbitrary causal relationships between hand movements and end‐effector actions. J Cogn Neurosci 1:1–15.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ (1997): Psychophysiological and modulatory interactions in neuroimaging. Neuroimage 6:218–229.

- Gallivan JP, McLean DA, Smith FW, Culham JC (2011): Decoding effector‐dependent and effector‐independent movement intentions from human parieto‐frontal brain activity. J Neurosci 31:17149–17168.

- Georgieva S, Peeters R, Kolster H, Todd JT, Orban GA (2009): The processing of three‐dimensional shape from disparity in the human brain. J Neurosci 29:727–742.

- Gitelman DR, Penny WD, Ashburner J, Friston KJ (2003): Modeling regional and psychophysiologic interactions in fMRI: The importance of hemodynamic deconvolution. Neuroimage 19:200–207.

- Graziano MSA, Cooke DF (2006): Parieto‐frontal interactions, personal space, and defensive behavior. Neuropsychologia 44:2621–2635.

- Grosbras MH, Beaton S, Eickhoff SB (2012): Brain regions involved in human movement perception: A quantitative voxel‐based meta‐analysis. Hum Brain Mapp 33:431–454.

- Guipponi O, Wardak C, Ibarrola D, Comte J‐C, Sappey‐Marinier D, Pinède S, Ben Hamed S (2013): Multimodal convergence within the intraparietal sulcus of the macaque monkey. J Neurosci 33:4128–4139.

- Heed T, Beurze SM, Toni I, Roder B, Medendorp WP (2011): Functional rather than effector‐specific organization of human posterior parietal cortex. J Neurosci 31:3066–3076.

- Hinkley LBN, Krubitzer LA, Padberg J, Disbrow EA (2009): Visual‐manual exploration and posterior parietal cortex in humans. J Neurophysiol 102:3433–3446.

- Holmes A, Friston K (1998): Generalisability, random effects and population inference. Neuroimage 7:S754.

- Huang R‐S, Chen C‐f, Tran AT, Holstein KL, Sereno MI (2012): Mapping multisensory parietal face and body areas in humans. Proc Natl Acad Sci USA 109:18114–18119.

- Ishida H, Nakajima K, Inase M, Murata A (2010): Shared mapping of own and others’ bodies in visuotactile bimodal area of monkey parietal cortex. J Cogn Neurosci 22:83–96.