Abstract

Umbrella sampling efficiently yields equilibrium averages that depend on exploring rare states of a model by biasing simulations to windows of coordinate values and then combining the resulting data with physical weighting. Here, we introduce a mathematical framework that casts the step of combining the data as an eigenproblem. The advantage to this approach is that it facilitates error analysis. We discuss how the error scales with the number of windows. Then, we derive a central limit theorem for averages that are obtained from umbrella sampling. The central limit theorem suggests an estimator of the error contributions from individual windows, and we develop a simple and computationally inexpensive procedure for implementing it. We demonstrate this estimator for simulations of the alanine dipeptide and show that it emphasizes low free energy pathways between stable states in comparison to existing approaches for assessing error contributions. Our work suggests the possibility of using the estimator and, more generally, the eigenvector method for umbrella sampling to guide adaptation of the simulation parameters to accelerate convergence.

I. INTRODUCTION

One of the main uses of molecular simulations is the calculation of equilibrium averages. For understanding reaction processes, the free energy projected onto selected coordinates (collective variables) is of special interest. It relates directly to the probabilities of the coordinates taking particular values, and it can provide valuable information about the stable states, the barriers between them, and the origin of their stabilization. Furthermore, it is the starting point for most rate theories. Although in principle the free energy can be estimated from a long unbiased simulation, in practice doing so is challenging because bottlenecks slow the exploration of the configuration space. In other words, transitions between regions of the space are very infrequent in comparison to local fluctuations.

Various methods have been introduced to overcome this problem. Here, we consider one of the oldest and still most widely used such methods, umbrella sampling (US).1,2 In this approach, the collective-variable interval of interest is covered by a series of simulations, in each of which the system is biased such that sampling is restricted to a relatively narrow window of values of the collective variables. This can be accomplished by addition of a biasing potential that is small in the window and large outside it. The information from the different simulations must be combined, and the effect of the bias removed, to obtain the overall free energy profile. This requires consistently normalizing the probabilities in different windows, a task that is complicated by the fact that the simulations are run independently.

Considerable effort has been devoted to determining how best to combine the results from different simulations. Initially, researchers manually adjusted the zero of free energy in each window to make the full free energy profile continuous and, often, smooth; conflicting results arising from limited sampling at the window peripheries were removed. The desire to use all the simulation data motivated the introduction of estimators that allow for systematically combining the data from different simulations. By far, the most widely used of these in chemical physics applications is the weighted histogram analysis method (WHAM). The multistate Bennett acceptance ratio (MBAR) method, as it is referred to in the molecular-simulation literature and will be referred to here, is closely related but does not rely on binning the data.3–6 Both WHAM and MBAR can be derived from maximum-likelihood or minimum asymptotic variance principles, assuming independent, identically distributed sampling in each window, and have corresponding statistical optimality properties under those conditions. Recent extensions seek to improve performance when the sampling is limited and to extend the algorithm to more general ensembles.7,8

In the present paper, we introduce an alternative scheme for estimating the free energy from US simulation data. In this approach, the normalization constants needed to combine information from separate simulation windows are the components of the eigenvector of a stochastic matrix that can be constructed from running averages in the windows. We thus term our method Eigenvector Method for Umbrella Sampling (EMUS). The advantage of our method is that it lends itself to error analysis. Following previous work,9–11 we measure error with the asymptotic variance.

Our paper is organized as follows. After giving some background on US in Section II, we formulate EMUS in Section III. In Sections IV and V, we show that EMUS performs comparably to WHAM and MBAR, and discuss its connection with the latter. In Section VI, we use scaling arguments with simplifying assumptions to show that accounting for the error associated with combining the data is important and limits the speedups that can be achieved by increasing the number of simulation windows. In Section VII, we provide the full numerical analysis, which applies generally, without simplifying assumptions. Specifically, we derive a central limit theorem for averages from EMUS and use it to develop a means for estimating the error contributions from individual windows. We demonstrate the method for the free energy projected onto the ϕ and ψ dihedral angles of the alanine dipeptide and compare the error contributions with those from an estimator introduced by Zhu and Hummer.12 We conclude in Section VIII.

II. BACKGROUND ON UMBRELLA SAMPLING

Here, we review umbrella sampling and establish basic terms and notation. The goal is the calculation of an average of an observable g over a time-independent probability distribution π,

$$\langle g \rangle = \int g(x)\, \pi(dx). \tag{1}$$

At thermal equilibrium, π is the Boltzmann distribution,

$$\pi(dx) = \frac{e^{-H_0(x)/k_B T}\, dx}{\int e^{-H_0(x')/k_B T}\, dx'}, \tag{2}$$

where H0 is the system Hamiltonian, kB is Boltzmann’s constant, and T is the temperature. In particular, we can express the free energy difference between two states S1 and S2 as

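$$\Delta G_{12} = -k_B T \ln\!\left( \frac{\langle \mathbf{1}_{S_1} \rangle}{\langle \mathbf{1}_{S_2} \rangle} \right), \tag{3}$$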

where 1 is the indicator function

$$\mathbf{1}_{S}(x) = \begin{cases} 1, & x \in S, \\ 0, & \text{otherwise}. \end{cases} \tag{4}$$

Similarly, the reversible work to constrain a collective variable q(x) to a particular value q′, also known as the potential of mean force (PMF), may be written as

$$W(q') = -k_B T \ln \left\langle \delta\big(q(x) - q'\big) \right\rangle. \tag{5}$$

For complex systems, averages of the form in (1) must be evaluated numerically. Typically, this is done by generating a chain of related configurations, Xt, using Metropolis Monte Carlo methods or molecular dynamics, and by assuming ergodicity. Namely, as the number of configurations N goes to infinity, 〈g〉 is the limit of the sample mean,

$$\langle g \rangle = \lim_{N \to \infty} \frac{1}{N} \sum_{t=0}^{N-1} g(X_t). \tag{6}$$
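As a concrete illustration of the sample mean in (6), the following minimal sketch estimates an average along a Metropolis Monte Carlo chain. The harmonic Hamiltonian, the step size, the chain length, and the function name `metropolis_mean` are illustrative assumptions and are not taken from the simulations reported in this paper.

```python
import math
import random

def metropolis_mean(g, energy, kT=1.0, n_steps=50_000, step=1.0, x0=0.0, seed=0):
    """Sample mean of g along a Metropolis chain targeting pi ∝ exp(-energy/kT)."""
    rng = random.Random(seed)
    x, e = x0, energy(x0)
    total = 0.0
    for _ in range(n_steps):
        x_new = x + rng.uniform(-step, step)   # symmetric trial move
        e_new = energy(x_new)
        # Accept with probability min(1, exp(-(e_new - e)/kT)).
        if e_new <= e or rng.random() < math.exp(-(e_new - e) / kT):
            x, e = x_new, e_new
        total += g(x)                          # rejected moves repeat the old state
    return total / n_steps

# Hypothetical test case: H0(x) = x^2/2 with kT = 1, for which <x^2> = 1 exactly.
estimate = metropolis_mean(lambda x: x * x, lambda x: 0.5 * x * x)
```

Successive states of such a chain are correlated, so the effective number of independent samples is smaller than `n_steps`; this is the effect discussed in the next paragraph.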

In all practical sampling methods, successive configurations are strongly correlated. While ergodicity guarantees that sample means converge to averages over π, convergence can be extremely slow if the correlation between subsequent points is strong. This is the case when sampling π relies on visiting low-probability states, such as transition states of chemical reactions.

US methods address this issue by enforcing sampling of different regions of configuration space (windows), introducing L nonnegative bias functions ψi and then using L independent simulations to sample from the biased probability distributions,

$$\pi_i(dx) = \frac{\psi_i(x)\, \pi(dx)}{\int \psi_i(x')\, \pi(dx')}. \tag{7}$$

The essential idea is that sampling each πi is fast because ψi is chosen so that relatively likely states under ψi are not separated by relatively unlikely states. This is accomplished by restricting the set of states on which ψi is non-negligible so that π is closer to constant on that set. In Section VI C we make this point more carefully by examining a regime in which umbrella sampling can be shown to be exponentially more efficient than direct simulation. A popular choice is to use bias functions that take a Gaussian form,

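$$\psi_i(x) = \exp\!\left[ -\frac{k_i \left( q(x) - q_i^0 \right)^2}{2 k_B T} \right], \tag{8}$$

such that

$$\pi_i(dx) \propto \exp\!\left[ -\frac{H_0(x) + \tfrac{1}{2} k_i \left( q(x) - q_i^0 \right)^2}{k_B T} \right] dx. \tag{9}$$

This corresponds to adding a harmonic potential centered at $q_i^0$ with spring constant ki to the system Hamiltonian. We call the relative normalization constant of the ith biased distribution zi,

$$z_i = \frac{\langle \psi_i \rangle}{\sum_{k=1}^{L} \langle \psi_k \rangle}. \tag{10}$$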

We also define the free energy in window i as

$$G_i = -k_B T \ln z_i. \tag{11}$$

We denote averages over the biased distributions by

$$\langle g \rangle_i = \int g(x)\, \pi_i(dx). \tag{12}$$

Overall averages of interest, 〈g〉, can be estimated as zi-weighted sums of averages computed in each of the windows. We detail our prescription in Section III.
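To make the window quantities concrete, the sketch below evaluates the relative normalization constants zi and window free energies Gi by quadrature for a one-dimensional model. The double-well potential, the grid, the window centers, the force constant, and the function names are hypothetical choices; the sketch also assumes that zi is the average of ψi over π, normalized so that the zi sum to one, and that Gi = −kBT ln zi.

```python
import math

KT = 1.0  # k_B T in arbitrary energy units (assumed)

def potential(q):
    """Hypothetical double-well potential with minima at q = -1 and q = +1."""
    return 5.0 * (q * q - 1.0) ** 2

def gaussian_bias(q, center, k):
    """Gaussian bias function exp(-k (q - center)^2 / (2 kT))."""
    return math.exp(-0.5 * k * (q - center) ** 2 / KT)

def window_constants(centers, k, lo=-1.5, hi=1.5, n_grid=3001):
    """Return (z, G): relative normalization constants and window free energies."""
    dq = (hi - lo) / (n_grid - 1)
    grid = [lo + j * dq for j in range(n_grid)]
    boltz = [math.exp(-potential(q) / KT) for q in grid]
    norm = sum(boltz)
    # <psi_i> under pi by a Riemann sum (the grid spacing cancels in the ratio).
    psi_avg = [sum(gaussian_bias(q, c, k) * b for q, b in zip(grid, boltz)) / norm
               for c in centers]
    total = sum(psi_avg)
    z = [a / total for a in psi_avg]
    G = [-KT * math.log(zi) for zi in z]
    return z, G

centers = [-1.0 + 0.25 * i for i in range(9)]   # nine windows from -1 to +1
z, G = window_constants(centers, k=40.0)
```

In this model the window centered on the barrier top (index 4) has the largest Gi, and the two windows centered on the minima have equal values by symmetry.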

III. THE EIGENVECTOR METHOD FOR UMBRELLA SAMPLING

In this section, we present the Eigenvector Method for Umbrella Sampling (EMUS). We begin by defining

$$g^*(x) = \frac{g(x)}{\sum_{k=1}^{L} \psi_k(x)} \tag{13}$$

for any function g. Then, we observe that

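$$\langle g \rangle = \sum_{i=1}^{L} \int g^*(x)\, \psi_i(x)\, \pi(dx) = \sum_{i=1}^{L} \left( \sum_{k=1}^{L} \langle \psi_k \rangle \right) z_i \langle g^* \rangle_i. \tag{14}$$

The factor in parentheses can be taken out of the sum over i. To express this factor in terms of computable averages, we repeat the same steps with g = 1,

$$1 = \left( \sum_{k=1}^{L} \langle \psi_k \rangle \right) \sum_{i=1}^{L} z_i \langle 1^* \rangle_i, \tag{15}$$

so that

$$\langle g \rangle = \frac{\sum_{i=1}^{L} z_i \langle g^* \rangle_i}{\sum_{i=1}^{L} z_i \langle 1^* \rangle_i}. \tag{16}$$

Consequently, if we can evaluate the zi, the $\langle g^* \rangle_i$, and the $\langle 1^* \rangle_i$, then we can assemble the original average of interest. The averages can be computed from sequences (typically independent for each i) that sample the πi. Umbrella sampling methods differ primarily in how the zi are computed.

To express the constants zi in terms of averages over the biased distributions, we take g(x) = ψj(x) in (14). Then, zi solves

$$z_j = \sum_{i=1}^{L} z_i F_{ij}, \quad \text{where} \quad F_{ij} = \left\langle \frac{\psi_j}{\sum_{k=1}^{L} \psi_k} \right\rangle_i. \tag{17}$$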

That is, the vector of normalization constants z is a left eigenvector of the matrix F with eigenvalue one. Under conditions to be elaborated in Section III B, the solution to (17) is uniquely specified when we notice that

$$\sum_{i=1}^{L} z_i = 1. \tag{18}$$
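The eigenvector property can be checked numerically on a small model. The sketch below assumes that the entries of F are the averages of ψj/Σk ψk over the ith biased distribution and that the zi are the averages of ψi over π normalized to sum to one; the grid, the double-well weights, the window centers, and the function name are hypothetical.

```python
import math

def exact_F_and_z(psi, weights):
    """psi[i][x]: bias i at grid point x; weights[x]: unnormalized pi at x."""
    L, n = len(psi), len(weights)
    psi_sum = [sum(psi[k][x] for k in range(L)) for x in range(n)]
    # Unnormalized <psi_i>; dividing by their sum gives z with sum(z) = 1.
    raw = [sum(psi[i][x] * weights[x] for x in range(n)) for i in range(L)]
    z = [r / sum(raw) for r in raw]
    # F_ij = average of psi_j / sum_k psi_k under pi_i ∝ psi_i * pi.
    F = [[sum(psi[i][x] * weights[x] * psi[j][x] / psi_sum[x] for x in range(n)) / raw[i]
          for j in range(L)] for i in range(L)]
    return F, z

grid = [-1.5 + 0.01 * x for x in range(301)]
weights = [math.exp(-5.0 * (q * q - 1.0) ** 2) for q in grid]   # double well, kT = 1
centers = [-1.0, -0.5, 0.0, 0.5, 1.0]
psi = [[math.exp(-20.0 * (q - c) ** 2) for q in grid] for c in centers]

F, z = exact_F_and_z(psi, weights)
# Left multiplication: (zF)_j = sum_i z_i F_ij should reproduce z_j.
zF = [sum(z[i] * F[i][j] for i in range(len(z))) for j in range(len(z))]
```

Up to floating-point roundoff, `zF` equals `z` and every row of `F` sums to one.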

A. Computational procedure

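In the EMUS algorithm, we estimate the entries of F and the averages $\langle g^* \rangle_i$ and $\langle 1^* \rangle_i$ by sample means, then assemble the estimate of 〈g〉 using (16). To be precise, for a sequence $X_t^i$, t = 0, …, Ni − 1, that samples πi, we denote the sample means by

$$\bar{g}^*_i = \frac{1}{N_i} \sum_{t=0}^{N_i - 1} g^*(X_t^i), \tag{19}$$

$$\bar{1}^*_i = \frac{1}{N_i} \sum_{t=0}^{N_i - 1} 1^*(X_t^i), \tag{20}$$

$$\bar{F}_{ij} = \frac{1}{N_i} \sum_{t=0}^{N_i - 1} \frac{\psi_j(X_t^i)}{\sum_{k=1}^{L} \psi_k(X_t^i)}. \tag{21}$$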

EMUS proceeds as follows:

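1. Choose the biasing functions ψi.

2. Compute trajectories that sample states from the biased distributions πi.

3. Calculate the matrix $\bar{F}$ and the averages $\bar{g}^*_i$ and $\bar{1}^*_i$.

4. Solve the eigenvector problem

$$z^{\mathrm{EMUS}} \bar{F} = z^{\mathrm{EMUS}} \tag{22}$$

for the vector $z^{\mathrm{EMUS}}$, normalized so that its entries sum to one as in (18).

5. Compute the estimate of 〈g〉,

$$\langle g \rangle \approx \frac{\sum_{i=1}^{L} z_i^{\mathrm{EMUS}}\, \bar{g}^*_i}{\sum_{i=1}^{L} z_i^{\mathrm{EMUS}}\, \bar{1}^*_i}, \tag{23}$$

by substituting zEMUS and the sample means in (16).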
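The steps above can be sketched in a few lines of Python. This is not the implementation referred to in the text: it is a minimal illustration on a discrete state space, with the eigenvector found by power iteration. The double-well weights, the window placement, the independent sampling with `random.choices`, and all function and variable names are assumptions made for the example.

```python
import math
import random

def emus(samples, psi, g):
    """EMUS estimate of <g>.

    samples[i] : state indices drawn from the biased distribution pi_i
    psi[k][x]  : bias function k evaluated at state x
    g[x]       : observable evaluated at state x
    """
    L = len(psi)
    denom = [sum(psi[k][x] for k in range(L)) for x in range(len(g))]
    F_bar = [[0.0] * L for _ in range(L)]
    g_star, one_star = [0.0] * L, [0.0] * L
    for i, traj in enumerate(samples):           # step 3: sample means
        for x in traj:
            for j in range(L):
                F_bar[i][j] += psi[j][x] / denom[x] / len(traj)
            g_star[i] += g[x] / denom[x] / len(traj)
            one_star[i] += 1.0 / denom[x] / len(traj)
    z = [1.0 / L] * L                            # step 4: z <- z F_bar until fixed
    for _ in range(100_000):
        z_new = [sum(z[i] * F_bar[i][j] for i in range(L)) for j in range(L)]
        total = sum(z_new)
        z_new = [v / total for v in z_new]
        converged = max(abs(a - b) for a, b in zip(z, z_new)) < 1e-13
        z = z_new
        if converged:
            break
    num = sum(z[i] * g_star[i] for i in range(L))    # step 5: assemble <g>
    den = sum(z[i] * one_star[i] for i in range(L))
    return z, num / den

rng = random.Random(1)
grid = [-1.5 + 0.015 * x for x in range(201)]
weights = [math.exp(-5.0 * (q * q - 1.0) ** 2) for q in grid]      # pi, kT = 1
centers = [-1.25 + 0.25 * i for i in range(11)]                    # step 1
psi = [[math.exp(-10.0 * (q - c) ** 2) for q in grid] for c in centers]
samples = [rng.choices(range(len(grid)),                           # step 2
                       weights=[p * w for p, w in zip(psi_i, weights)], k=5000)
           for psi_i in psi]
g = [1.0 if q > 0 else 0.0 for q in grid]   # indicator of the right-hand well
z, estimate = emus(samples, psi, g)
exact = sum(gi * w for gi, w in zip(g, weights)) / sum(weights)
```

Power iteration is used only to keep the sketch free of dependencies; any eigensolver that returns the left eigenvector with eigenvalue one would serve equally well.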

We have provided an implementation of this algorithm online, along with implementations of the iteration described in Section IV and the asymptotic error estimate found in Section VII B 1.14 We remark that when one wishes to compute a free energy difference or a ratio of two observables, as in Equation (3), it is not necessary to compute the $\bar{1}^*_i$. Instead, one may use the formula

$$\frac{\langle g_1 \rangle}{\langle g_2 \rangle} = \frac{\sum_{i=1}^{L} z_i \langle g_1^* \rangle_i}{\sum_{i=1}^{L} z_i \langle g_2^* \rangle_i}, \tag{24}$$

where g1 and g2 are arbitrary functions.

B. The eigenvector problem

In this section, we give conditions under which the eigenvector problem has a unique solution. First, we show that F is a stochastic matrix; that is, each element Fij is nonnegative and every row of F sums to one:

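$$\sum_{j=1}^{L} F_{ij} = \left\langle \frac{\sum_{j=1}^{L} \psi_j}{\sum_{k=1}^{L} \psi_k} \right\rangle_i = 1. \tag{25}$$

The entries of F are nonnegative since we require that the bias functions be nonnegative. One can show that the matrix $\bar{F}$ is also stochastic by similar arguments.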

A stochastic matrix J has a unique eigenvector with eigenvalue one if it is irreducible: for every possible grouping of the indices into two distinct sets, A and B, Jij ≠ 0 for some i ∈ A and j ∈ B.15 In fact, this statement remains true when J is nonnegative with largest eigenvalue equal to one. For any such matrix we let z(J) denote the continuous function returning the unique left eigenvector of J corresponding to eigenvalue one.

In the case of the particular stochastic matrix F defined in (17) these statements imply that if, for any division of the indices into sets A and B, there is a sufficient overlap between the sets ∪i∈A{x : ψi(x) > 0} and ∪j∈B{x : ψj(x) > 0} then there will be a unique solution z(F) to (17) which necessarily equals the relative normalization constants z defined in (10). Because z(J) is a continuous function of its arguments, $z(\bar{F})$ converges to z as $\bar{F}$ converges to F. Consequently, EMUS produces a consistent estimator in the sense that if the sample averages used to estimate the entries Fij and the $\langle g^* \rangle_i$ converge (in probability or with probability one) to the true values, then the estimate of 〈g〉 also converges (in the same sense).
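In practice, irreducibility of an estimated matrix can be checked before solving the eigenproblem. The sketch below uses the standard equivalence between irreducibility of a nonnegative matrix and strong connectivity of the directed graph that has an edge from i to j whenever Jij > 0. The function name and the threshold argument `tol` are illustrative assumptions.

```python
from collections import deque

def is_irreducible(J, tol=0.0):
    """True if the graph with an edge i -> j whenever J[i][j] > tol is strongly connected."""
    n = len(J)

    def reaches_all(has_edge):
        # Breadth-first search from index 0.
        seen = {0}
        queue = deque([0])
        while queue:
            i = queue.popleft()
            for j in range(n):
                if j not in seen and has_edge(i, j):
                    seen.add(j)
                    queue.append(j)
        return len(seen) == n

    # Strongly connected: every index is reachable from 0 along the edges,
    # and 0 is reachable from every index (search on the reversed graph).
    forward = reaches_all(lambda i, j: J[i][j] > tol)
    backward = reaches_all(lambda i, j: J[j][i] > tol)
    return forward and backward

# Neighboring windows overlap: irreducible.
chain = [[0.7, 0.3, 0.0],
         [0.2, 0.6, 0.2],
         [0.0, 0.3, 0.7]]
# The third window overlaps with no other window: reducible.
split = [[0.7, 0.3, 0.0],
         [0.3, 0.7, 0.0],
         [0.0, 0.0, 1.0]]
```

A positive `tol` treats very small overlaps as absent, which can flag windows that are connected only through a handful of samples.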

IV. THE CONNECTION BETWEEN EMUS AND MBAR

Building upon earlier work in the statistics literature,3,4,16 Shirts and Chodera6 suggested a class of algorithms for estimating free energy differences between states, which they termed MBAR. This method is similar to WHAM but does not require binning the simulation data to form histograms (see Tan et al.9). In this section, we explain the relation between EMUS and MBAR.6 We also derive a new iterative method for solving the MBAR equations, and we show that our iteration leads naturally to a new family of related consistent estimators.

The starting point of Shirts and Chodera6 is the identity (see their (5))

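$$z_i \langle \alpha_{ij} \psi_j \rangle_i = z_j \langle \alpha_{ij} \psi_i \rangle_j, \tag{26}$$

where αij(x) is an arbitrary function. They proposed the choice

$$\alpha_{ij}(x) = \frac{n_j / z_j}{\sum_{k=1}^{L} n_k \psi_k(x) / z_k}, \tag{27}$$

where ni is the number of uncorrelated samples in window i. Substituting (27) into (26) and summing over j gives

$$z_i = \sum_{j=1}^{L} n_j \left\langle \frac{\psi_i}{\sum_{k=1}^{L} n_k \psi_k / z_k} \right\rangle_j. \tag{28}$$

We can cast (28) in a form reminiscent of EMUS by writing

$$z_j = \sum_{i=1}^{L} z_i F_{ij}(z), \tag{29}$$

where

$$F_{ij}(w) = \left\langle \frac{(n_i / w_i)\, \psi_j}{\sum_{k=1}^{L} n_k \psi_k / w_k} \right\rangle_i \tag{30}$$

for any vector w with positive entries. EMUS corresponds to setting w = n so that

$$F_{ij}(n) = \left\langle \frac{\psi_j}{\sum_{k=1}^{L} \psi_k} \right\rangle_i = F_{ij} \tag{31}$$

and (26) reduces to the eigenproblem (17).

In practice, one must replace the matrix Fij(w) in (30) by the sample mean approximation

$$\bar{F}_{ij}(w) = \frac{1}{N_i} \sum_{t=0}^{N_i - 1} \frac{(n_i / w_i)\, \psi_j(X_t^i)}{\sum_{k=1}^{L} n_k \psi_k(X_t^i) / w_k}. \tag{32}$$

Substituting $\bar{F}_{ij}(z)$ for Fij(z) in (29) yields the equation

$$z_j^{\mathrm{MBAR}} = \sum_{i=1}^{L} z_i^{\mathrm{MBAR}}\, \bar{F}_{ij}(z^{\mathrm{MBAR}}) \tag{33}$$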

for zMBAR, which we refer to here as the MBAR estimator. If the samples are independent, MBAR is the nonparametric maximum-likelihood estimator of z.3

In practice, the samples are not independent for a given i, and the ni must be estimated from data. Several algorithms for estimating the ni have been proposed.17–19 Shirts and Chodera6 base their estimates on the integrated autocorrelation times of physically motivated coordinates, and we follow this common practice here. In fact, once the ni have been estimated, Shirts and Chodera6 suggest replacing sample averages over all Ni points by sample averages over the ni points obtained by including only every Ni/ni-th sample along the trajectory. We note that both the subsampling approach and the one in (32) correspond to approximations of expression (26) with (27), and we regard both as variations on the MBAR estimator. When the samples are independent, the two approaches are the same. In tests of the iterative EMUS algorithm introduced below, we find estimates to be insensitive to the choice of ni and they can be set equal to 1, though in that case the estimator no longer corresponds directly to MBAR.
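One common way to obtain ni from a trajectory is to divide the number of samples by the integrated autocorrelation time of a chosen coordinate. The sketch below is a generic estimator, not necessarily the one used here or by Shirts and Chodera: it sums the normalized autocorrelation function until it first becomes non-positive, and that truncation rule, the function name, and the synthetic test series are assumptions.

```python
import random

def effective_samples(series):
    """Estimate n = N / tau, where tau = 1 + 2 * sum_t rho(t) is the integrated
    autocorrelation time, truncating the sum at the first non-positive rho(t)."""
    n = len(series)
    mean = sum(series) / n
    dev = [v - mean for v in series]
    var = sum(d * d for d in dev) / n
    if var == 0.0:
        return float(n)
    tau = 1.0
    for lag in range(1, n // 2):
        rho = sum(dev[t] * dev[t + lag] for t in range(n - lag)) / ((n - lag) * var)
        if rho <= 0.0:
            break
        tau += 2.0 * rho
    return n / tau

# Synthetic correlated series x_t = 0.9 x_{t-1} + noise, whose exact tau is 19.
rng = random.Random(0)
x, correlated = 0.0, []
for _ in range(5000):
    x = 0.9 * x + rng.gauss(0.0, 1.0)
    correlated.append(x)
n_eff = effective_samples(correlated)
```

For an uncorrelated series the estimate stays close to the number of samples, whereas for the correlated series above it is smaller by roughly a factor of the autocorrelation time.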

As written above, the MBAR estimator (33) resembles an eigenvector problem. However, the dependence of $\bar{F}$ on z implies that the solution must be obtained self-consistently. The approach advocated by Shirts and Chodera for computing the MBAR estimator corresponds in the framework described here to solving (33) by a Newton-type iteration. However, the eigenvector form of (33) suggests an alternative approach. Rather than Newton’s method, we employ the following algorithm:

1. As an initial guess for zMBAR, choose a vector z0 with positive entries. Estimate the ni. Set m = 0.

2. (a) Calculate $\bar{F}(z^m)$ according to (32).

   (b) Calculate a new estimate $z^{m+1}$ of zMBAR by solving the eigenproblem

$$z^{m+1}\, \bar{F}(z^m) = z^{m+1}. \tag{34}$$

3. If the change from $z^m$ to $z^{m+1}$ exceeds a chosen tolerance,

   (a) Increment m;

   (b) Go to Step 2.

A similar algorithm was proposed by Meng and Wong.20
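The self-consistent iteration can be sketched as follows. The sketch assumes that the sample-mean matrix for a positive vector w has entries equal to the window-i average of (ni/wi) ψj / Σk (nk ψk/wk), which reduces to the EMUS matrix when w = n. The discrete model, the power-iteration eigensolver, the relative-change stopping rule, and all names are illustrative assumptions.

```python
import math
import random

def left_eigenvector(M, tol=1e-13, max_iter=100_000):
    """Left eigenvector for the largest eigenvalue of a nonnegative matrix."""
    L = len(M)
    z = [1.0 / L] * L
    for _ in range(max_iter):
        z_new = [sum(z[i] * M[i][j] for i in range(L)) for j in range(L)]
        total = sum(z_new)
        z_new = [v / total for v in z_new]
        done = max(abs(a - b) for a, b in zip(z, z_new)) < tol
        z = z_new
        if done:
            break
    return z

def f_bar(samples, psi, n, w):
    """Sample mean over window i of (n_i/w_i) psi_j / sum_k (n_k psi_k / w_k)."""
    L = len(psi)
    M = [[0.0] * L for _ in range(L)]
    for i, traj in enumerate(samples):
        for x in traj:
            denom = sum(n[k] * psi[k][x] / w[k] for k in range(L))
            for j in range(L):
                M[i][j] += (n[i] / w[i]) * psi[j][x] / denom / len(traj)
    return M

def iterative_emus(samples, psi, n, tol=1e-6, max_iter=100):
    z = [v / sum(n) for v in n]          # z^0 = n: the first pass is plain EMUS
    for m in range(max_iter):
        z_new = left_eigenvector(f_bar(samples, psi, n, z))
        change = max(abs(a - b) / b for a, b in zip(z, z_new))
        z = z_new
        if change <= tol:
            break
    return z, m + 1                      # estimate and number of passes used

rng = random.Random(2)
grid = [-1.5 + 0.015 * x for x in range(201)]
weights = [math.exp(-5.0 * (q * q - 1.0) ** 2) for q in grid]
centers = [-1.5 + 0.5 * i for i in range(7)]
psi = [[math.exp(-4.0 * (q - c) ** 2) for q in grid] for c in centers]
samples = [rng.choices(range(len(grid)),
                       weights=[p * w for p, w in zip(psi_i, weights)], k=1000)
           for psi_i in psi]
n = [1000.0] * 7                         # independent draws, so n_i = N_i
z, n_passes = iterative_emus(samples, psi, n)
```

At convergence the returned vector satisfies the self-consistent equation to within the tolerance, which can be verified by recomputing the matrix at `z` and multiplying from the left.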

To show that this iteration makes sense, we must prove that the eigenproblem (34) always has a unique solution and that zm converges to zMBAR as m goes to infinity. To see that the eigenproblem has a solution, first observe that if $\bar{F}(w)$ is irreducible for one vector with positive entries, w, then it is irreducible for all vectors with positive entries. When applying the EMUS method, we thus assume that $\bar{F}$ is irreducible. Moreover, observe that for any positive vector w, the vector with entries ni/wi is a right eigenvector of $\bar{F}(w)$ with eigenvalue one and positive entries. It follows from the Perron-Frobenius theorem that the matrix $\bar{F}(w)$ has a unique left eigenvector with eigenvalue one and that this eigenvector has positive entries. Thus, the eigenproblem always has a unique solution. We do not have a proof that the iterates converge. However, since zMBAR is a fixed point of the iteration, if the iterates do converge, their limit must be zMBAR. In practice, we find that the iteration converges quickly, usually to a relative error of 10⁻⁶ within 10 iterates.

In addition to its apparently rapid convergence, another argument in favor of the algorithm that we introduce above for solving (33) is that each iteration of the scheme results in a new consistent estimator. We will use the term iterative EMUS to refer to this family of estimators. With the initial guess z0 = n, the result, z1, of the first iteration is the EMUS estimator defined in Section III. In the Appendix, we show that for any fixed finite number of iterations m, zm is also a consistent estimator of the vector z of normalization constants. By contrast, other schemes, such as Newton’s method, for solving (33) may require that the number of iterations goes to infinity to obtain a consistent estimate. We also remark that the consistency result in the Appendix holds as long as the ni converge to non-random, positive values with increasing numbers of samples Ni. They can be chosen as described above, or simply set to a fixed value.

Differences between the iterative EMUS scheme above and the application of Newton’s method proposed by Shirts and Chodera6 are mostly matters of implementation. As we will see in Section V, the results are not very sensitive to these computational details; most of the accuracy in the iterative EMUS approach is achieved in the first step. In any case, we remind the reader that the primary goal of this paper is to characterize those properties of the broader umbrella sampling approach that are essential to its success, not to analyze details of implementation.

While we focus here on potentials of mean force, the MBAR estimator has been applied to a broader category of free energy problems, including the analysis of single-molecule pulling experiments and alchemical free energy calculations.6,21,22 The close relation between EMUS and MBAR indicates that error analysis of EMUS may provide insight into the sources of error in MBAR for these problems, but we do not pursue this idea further in the present work.

V. NUMERICAL COMPARISON

To test the algorithm numerically, we performed 100 independent umbrella sampling calculations for the PMF of the ϕ coordinate of the alanine dipeptide (i.e., N-acetyl-alanyl-N′-methylamide) in vacuum. Simulations were run using GROMACS version 5.1.1 with harmonic bias potentials applied using the PLUMED 2.2.0 software package.23,24 The molecule was represented by the CHARMM 27 force field without CMAP corrections,25 with covalent bonds to hydrogen atoms constrained by the LINCS algorithm.26 Twenty windows were evenly spaced along the ϕ dihedral angle. The force constant was ki = 0.007 605 35 × 10⁻² kcal mol⁻¹ deg⁻², such that the standard deviation of the Gaussian bias functions was 9°. In each window, we integrated the equations of motion with the GROMACS leap-frog Langevin integrator with a 1 fs time step and a time constant of 0.1 ps. The temperature was 300 K. The system was equilibrated for 40 ps and then sampled for 100 ps, saving structures every 10 fs.

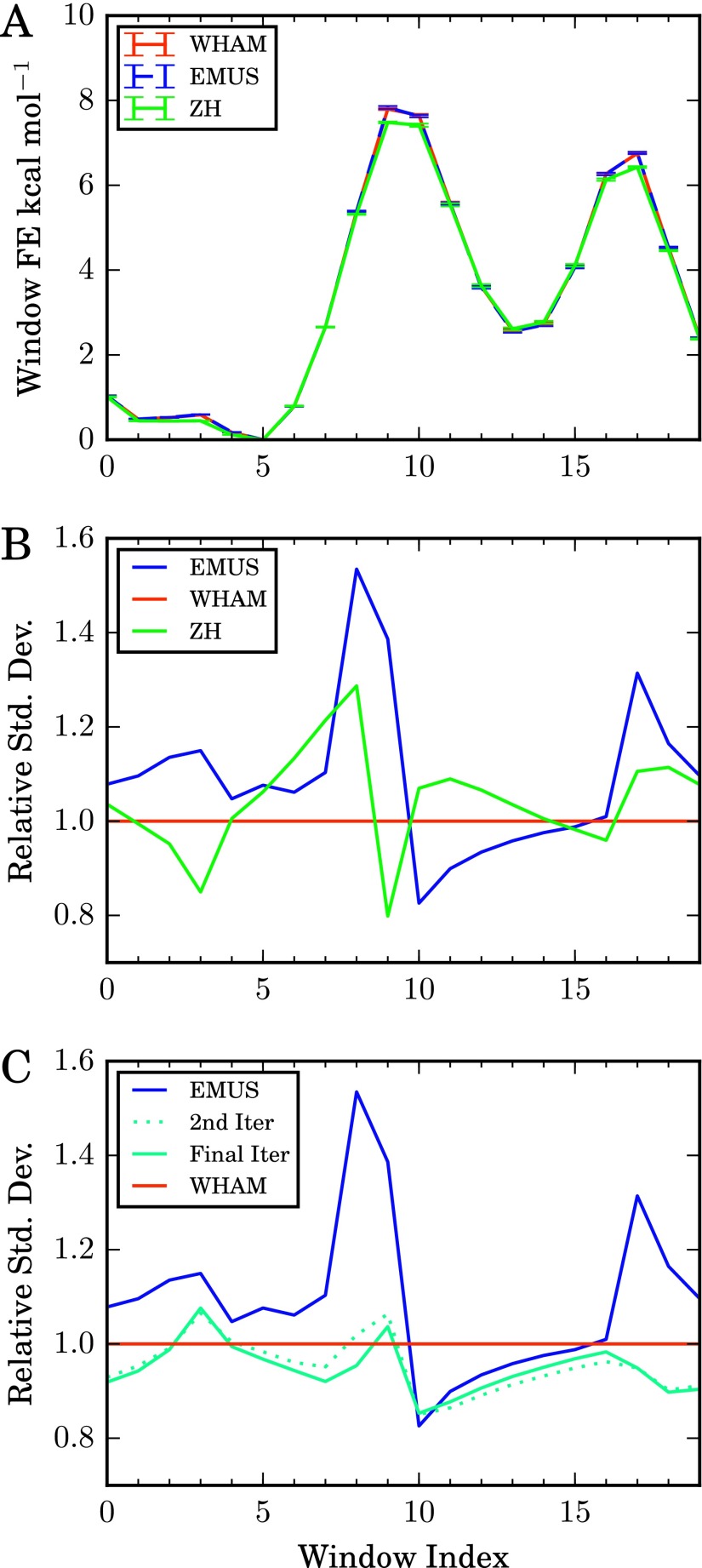

The data were then analyzed with EMUS, Grossfield’s implementation of WHAM,27 and the algorithm proposed by Zhu and Hummer (ZH) (see Equation (A1) and the discussion following it in Appendix A of that paper12). The data were also analyzed with pyMBAR;6 as pyMBAR gave results virtually identical to WHAM, the results are not shown. In Figure 1, we show the resulting average potentials of mean force, as well as the standard deviation of the estimates over the 100 runs. WHAM and EMUS converge to the same result. This is to be expected, as both algorithms are consistent (i.e., they converge to the exact result as the amount of samples in each window tends to infinity; see Section VII), although WHAM exhibits a small bias from the binning of data for the histograms.6 The standard deviations of the free energies are slightly higher for EMUS than for the other two algorithms, but these differences are negligible compared with those expected for physically weighted simulations. Moreover, the relative performances are likely to be problem dependent. We note that ZH is based on thermodynamic integration, and the finite number of integration points causes quadrature error,28 and, in turn, a systematic error in the barrier height.

FIG. 1.

Comparison of umbrella sampling methods applied to simulation data for the alanine dipeptide. (a) Average window free energies, Gi, for the indicated methods. Error bars are estimated standard deviations of the means. (b) Standard deviation of each method relative to that of the WHAM algorithm. Colors are the same as in (a). (c) EMUS as the first step in a self-consistent iteration to solve the MBAR equations (see text). The number of uncorrelated samples in each window (ni) was estimated by calculating the integrated autocorrelation of the ϕ dihedral angle from each trajectory. Results shown are for identical molecular dynamics data (see text for simulation details); the methods differ only with respect to combination of the data to estimate the free energies.

In Figure 1(c), we apply the self-consistent iteration described in Section IV. For this calculation, we estimate the number of independent samples in each window (ni) from the integrated autocorrelation time of the ϕ dihedral angle time series. We plot the standard deviation of the values of z calculated after the first iteration (EMUS), the second iteration, and after convergence to a relative residual smaller than 10⁻⁶. In general, convergence is achieved after an average of 9 iterations; none of the 100 data sets required more than 15 iterations. However, we note that after two iterations, the estimates of z already have a standard deviation equivalent to that of the WHAM algorithm. In this article we focus on the scheme corresponding to the first iteration only and do not attempt to analyze the improvement due to multiple iterations. In our tests the performance gain from multiple iterations is negligible compared to the improvement over direct approximation of free energy differences using long unbiased trajectories.

VI. JUSTIFICATION FOR UMBRELLA SAMPLING BY SCALING ARGUMENTS

The quality of a statistical estimate from umbrella sampling depends strongly on the choices made for the simulation windows. In this section, we discuss how the error scales as properties of the simulation change. We begin in Section VI A with a description of a prevalent justification for the use of US. We show in Section VI B that this argument is incomplete and, in turn, misleading. In Section VI C, we provide an alternative justification; namely, we show that in the low temperature limit, the cost to achieve a fixed accuracy by US grows slowly compared to direct simulation. In this section we make several simplifying assumptions that allow us to draw precise conclusions about the scaling properties of EMUS. In Section VII we provide error bounds for EMUS under much more general assumptions.

A. Scaling in the limit of many windows

To justify umbrella sampling, it is often suggested that the total computational time required to accurately sample statistics is inversely proportional to the number of windows, L.17,29–32 The argument for this scaling proceeds as follows.

- Divide a one-dimensional collective variable space into L windows of equal length, inversely proportional to L (i.e., proportional to L⁻¹).

- Assume the windows are small enough that no free energy barriers exist in each window. The time to explore a window should be diffusion limited and proportional to the length of the window squared. Therefore, the simulation time required to accurately sample statistics in one window is also proportional to L⁻².

- Because there are L windows, the total simulation time required to compute averages to fixed accuracy should scale as L × L⁻² = L⁻¹.

While this argument is now standard,17,29–32 Virnau and Müller33 observed that the error for computing the free energy difference between phases of Lennard-Jones particles with an approximately fixed amount of sampling was insensitive to the number of windows in practice, and they noted that the argument above neglects the error associated with combining the data from different simulation windows. Nguyen and Minh recently made a similar suggestion for a related class of methods.34 This intuition is supported by our analysis in Section VI B, which shows that the total computational cost to achieve a fixed accuracy should be at best insensitive to the choice of L, so long as it is sufficiently large.

B. A simple model problem

To perform a more precise analysis, we make a number of simplifying assumptions. We emphasize that these assumptions are in force only for the purposes of the scaling arguments in this section. We provide more general error bounds for EMUS in Section VII.

Assumption 6.1.

The total computation time, N, is divided equally among the windows such that Ni = N/L.

Assumption 6.2.

The ψi are functions on the one-dimensional interval [0, 1], and the set of points where ψi is non-zero, {q : ψi > 0}, is an interval of length |{q : ψi > 0}| ≤ γ/L. We also assume that ψiψj = 0 unless |j − i| ≤ 1. Consequently, both the exact matrix F and the sample mean $\bar{F}$ are tri-diagonal. This assumption clearly does not hold when the bias functions ψi are Gaussian. Nonetheless, the rapid decay of Gaussian bias functions away from their peaks guarantees that entries of F and $\bar{F}$ far from the diagonal are very small, such that we expect our conclusions to still hold (though their justification would be more complicated).

Assumption 6.3.

The overlap of ψi and ψi±1 (i.e., the integral of their product) is large enough that

| (35) |

for all L and for all i ≤ L. If our last assumption holds, but this one does not, then we can find more than one vector z satisfying Equations (17) and (18). This assumption is a slightly stronger version of the notion of irreducibility that we defined earlier (see Section III B). Note that we require the irreducibility to hold uniformly in the large L limit, and we thus introduce the δ, which is independent of L.

Assumption 6.4.

Sample averages computed in different windows are independent, i.e., $\bar{F}_{ik}$ and $\bar{F}_{jl}$ for j ≠ i are independent. We do not assume (here or anywhere else in this paper) that samples generated within a single window are independent. Indeed, even if the samples from πi are independent, $\bar{F}_{i,i+1}$ and $\bar{F}_{i,i-1}$ are dependent random variables.

As an example average, let us consider the error in the free energy difference between the first and last windows,

| (36) |

Assumption 6.2 is sufficient for $\bar{F}$ to be in detailed balance with $z^{\mathrm{EMUS}}$ (Kelly Ref. 35, Lemma 1.5 and Section 1.3),

| (37) |

Using (37) recursively,

| (38) |

To understand the error (variance) of the terms in (38), we must further specify Fi,i+1 and Fi,i−1.

Assumption 6.5.

For Nmin and Lmin sufficiently large, when Ni ≥ Nmin and L ≥ Lmin,

| (39) |

for i = 2, 3, …, L − 1, and the same upper and lower bounds hold for the corresponding boundary terms with i = 1 and i = L. This is just a precise interpretation of the diffusion limited sampling assumption made in the standard justification of US reproduced in Section VI A. Under such an assumption we expect both $\bar{F}_{i,i+1}$ and $\bar{F}_{i,i-1}$ to have variance on the order of 1/(Ni L²) and, in light of (35), the function ln(x/y) is smooth near (x, y) = (Fi,i+1, Fi,i−1). These considerations are closely related to Lemma 7.2 in Section VII A.

With all the assumptions in hand, we now complete the argument by taking the variance of both sides of (38). Since samples from different windows are independent, the variance of the estimated free energy difference is a sum of contributions from each window,

| (40) |

Using (39) and substituting Ni = N/L, we find that, as long as N/L ≥ Nmin and L ≥ Lmin,

| (41) |
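The bookkeeping behind this conclusion can be sketched as follows. The sketch assumes, as our reading of the discussion around (39) rather than a transcription of it, that window i contributes a variance $\sigma_i^2$ bounded above and below by constants Kmax and Kmin divided by Ni L². The symbol $\sigma_i^2$ is introduced only for this illustration.

$$
\begin{aligned}
\frac{K_{\min}}{N_i L^2} \le \sigma_i^2 \le \frac{K_{\max}}{N_i L^2}, \quad N_i = \frac{N}{L}
\;&\Longrightarrow\; \frac{K_{\min}}{N L} \le \sigma_i^2 \le \frac{K_{\max}}{N L}, \\
\text{summing over the } L \text{ windows}
\;&\Longrightarrow\; \frac{K_{\min}}{N} \le \sum_{i=1}^{L} \sigma_i^2 \le \frac{K_{\max}}{N}.
\end{aligned}
$$

Under these assumptions the factor of L gained by shrinking each window is cancelled by the L contributions that must be summed, so the total variance depends on the total cost N but not on L.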

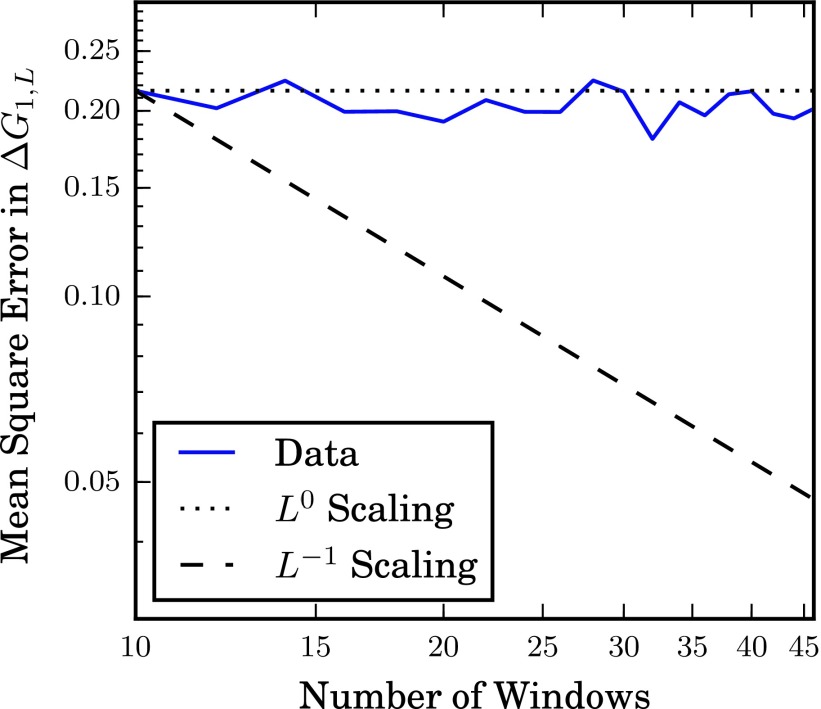

To verify that this conclusion carries over to harmonic bias potentials, we performed multiple umbrella sampling calculations for a Brownian particle on a flat potential on the interval [0, 1] with a stepsize of 1.0 × 10⁻⁶ and kBT = 1.0 using Gaussian bias functions with a standard deviation of 1/L. The number of windows was varied from L = 10 to 46 in steps of 2. For each value, a total of 10⁷ steps were distributed equally in the windows, and the US calculation was repeated 480 times. We then calculated the mean square error of the free energy difference between the first and last window over the 480 replicates and determined how the mean square error scaled with L. Rather than the mean square error varying inversely with the number of windows, the data plotted in Figure 2 support a scaling of L⁰, consistent with (41).

FIG. 2.

The scaling of umbrella sampling error with number of windows on a flat potential. A Brownian particle on a flat, one-dimensional potential was simulated for 480 identical runs, and the free energy difference between the first and last windows was calculated, as described in the text. Here, the mean square error from the exact result is plotted against the number of windows. The lines show the scaling in error predicted by the L⁻¹ and L⁰ scalings. Fitting the data on a log-log scale gives a scaling exponent of −0.026 ± 0.028.

It is worth noting that the inverse scaling with total cost N in (41) is exactly the scaling one would expect for the variance of an estimate of the free energy difference constructed from a molecular dynamics trajectory of length N. Because US and direct simulations of comparable total numbers of steps require comparable computational effort (ignoring the overhead associated with combining the simulation data, which is typically small in comparison with the computational cost of the sampling), the benefits of US must be encoded in the constants Kmin and Kmax. A dramatic demonstration of this observation is the purpose of Section VI C.

C. The low temperature limit

To understand the benefits of umbrella sampling, we must study its performance in the presence of free energy barriers. In particular, we compare the performance of umbrella sampling to physically weighted sampling as the temperature goes to zero. In this limit, the cost of direct sampling increases exponentially with 1/T, while, as we show, the cost of umbrella sampling increases only algebraically. A formal discussion is given in a separate publication;36 here, we present a simple plausibility argument.

Owing to the free energy barriers, the assumption of diffusive dynamics in each window no longer holds. Instead, we expect a form typical of reaction rate theories in each window. We define ΔWi as the maximum difference in the PMF in window i,

| (42) |

Assumption 6.5′.

We now replace the upper and lower bounds in (39) by the upper bound

| (43) |

for i = 2, 3, …, L − 1 with analogous replacements for i = 1 and i = L, as long as Ni ≥ Nmin and L ≥ Lmin. The constant K here is assumed to be independent of temperature. This bound captures the diffusion limited sampling assumption when L is very large, but is more detailed than (39) in that it captures (crudely) the increasing difficulty of the sampling problem as the temperature decreases with all other parameters held fixed. Under reasonable additional assumptions on the underlying potential, the bias functions ψi, and the sampling scheme, one can rigorously establish an asymptotic (large Ni) bound of the form in (43).36

Substituting this new bound into (40), we find that, if L ≥ Lmin and N/L ≥ Nmin, then

| (44) |

As the temperature decreases, we choose to increase L such that ΔWi/kBT is bounded above. This can be achieved by scaling L linearly with 1/T: if the derivative of the PMF is bounded (in absolute value) by , choosing L so that

| (45) |

ensures that ΔWi/kBT is bounded by Ω (since we have assumed that the argument of W is in [0, 1]). On the other hand, our assumption that the length of {q : ψi > 0} (Assumption 6.2) does not exceed γ/L implies that

| (46) |

Consequently, as long as (45) holds,

| (47) |

Finally, substituting this result into (44) we find that if L ≥ Lmin and N/L ≥ Nmin, then

| (48) |

With the best possible (smallest) choice of L allowed by (45), this bound becomes

| (49) |

The remarkable feature of the bound in (49) is that it is independent of T. This does not mean that the cost to achieve a fixed accuracy is independent of T. However, it does imply that as the temperature is decreased, we do not have to increase Nmin to maintain a fixed accuracy. Expression (45) and the fact that Ni ≥ Nmin together imply that, under the assumptions of this section, the computational cost of obtaining an accurate estimate by US increases algebraically with (kBT)⁻¹. That scaling is to be compared with the cost of achieving the same accuracy by direct simulation, which increases exponentially with (kBT)⁻¹.
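As a rough numerical illustration of the choice of L discussed above, the following Python sketch uses a toy double-well PMF on [0, 1] and windows of length γ/L. The toy PMF, the slope bound `M` computed numerically, the specific rule L ≥ Mγ/(Ω kBT), and the parameter values are all assumptions made for illustration; the sketch relies only on the elementary estimate ΔWi ≤ Mγ/L, which follows from a bounded derivative and a window length of at most γ/L. It is not a substitute for the formal argument in Ref. 36.

```python
import math

def pmf(q):
    """Toy double-well PMF on [0, 1] with barrier height 1 (units with k_B = 1)."""
    return 16.0 * q**2 * (1.0 - q) ** 2

def pmf_slope_bound(n=10_000):
    """Numerical bound M on |W'(q)| over [0, 1] from finite differences."""
    h = 1.0 / n
    return max(abs(pmf((k + 1) * h) - pmf(k * h)) / h for k in range(n))

def windows_needed(kT, omega=1.0, gamma=2.0):
    """Smallest L with M * gamma / L <= omega * kT (assumed form of the rule)."""
    return math.ceil(pmf_slope_bound() * gamma / (omega * kT))

def max_scaled_variation(L, kT, gamma=2.0, n_grid=200):
    """max_i (Delta W_i / kT) for windows of length gamma / L on a uniform grid."""
    half = 0.5 * gamma / L
    worst = 0.0
    for i in range(L):
        centre = (i + 0.5) / L
        lo, hi = max(0.0, centre - half), min(1.0, centre + half)
        vals = [pmf(lo + (hi - lo) * k / n_grid) for k in range(n_grid + 1)]
        worst = max(worst, max(vals) - min(vals))
    return worst / kT

for kT in (0.2, 0.1, 0.05):
    L = windows_needed(kT)
    # The last column is the Arrhenius-like factor exp(barrier / kT), shown
    # only to contrast exponential growth with the linear growth of L.
    print(kT, L, round(max_scaled_variation(L, kT), 3), round(math.exp(1.0 / kT), 1))
```

In this toy setting, halving kBT roughly doubles the number of windows needed to keep ΔWi/kBT below Ω, whereas the Arrhenius-like factor is squared.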

Finally, we remark that while our analysis provides a convincing explanation for the performance benefits offered by umbrella sampling, it neglects a number of practical realities. First, we have ignored the cost of equilibrating the simulation in each window, which can be challenging. Second, we have ignored the practical difficulties that arise when the number of windows grows large. The bias functions (restraints) can introduce additional free energy barriers that slow mixing in the degrees of freedom orthogonal to the collective variable, and, if sufficiently restrictive, could in principle necessitate modifying the elementary simulation step sizes. Our analysis reveals, however, that these difficulties are not responsible for the slow (L⁰) error scaling observed for large L.

VII. ANALYSIS OF THE ERROR OF EMUS

In this section, we study the error of EMUS in full generality, without imposing the simplifying assumptions of Section VI. Our main results are a central limit theorem for EMUS (Theorem 7.4) and an easily computed, practical error estimator which reveals the contributions of the different windows to the total error. These results may be used to compare the efficiency of EMUS and other methods and to study how the efficiency of EMUS depends on parameters such as the number of samples allocated to each window.

A. A central limit theorem for EMUS

Before developing the error analysis, we define a single notation for EMUS which incorporates both the case of a free-energy difference and the case of an ensemble average. In either case, one must compute and also and for two real valued functions g1 and g2. To compute a free energy difference, we choose based on (3),

| (50) |

To compute an ensemble average 〈g〉, we choose based on (16),

| (51) |

We furthermore define the function

| (52) |

so that

| (53) |

where we remind the reader that, for each i, the process samples the biased distribution πi. Define

| (54) |

and let

| (55) |

denote the corresponding vector of exact averages. Using the notation defined in Section III B, the EMUS estimator takes the form , where for a free-energy difference,

| (56) |

and for an ensemble average,

| (57) |

We now proceed with the error analysis. First, we characterize the error of the sample means over the biased distributions. As discussed by Frenkel and Smit (Ref. 17, Appendix D), the variance of a sample mean may be expanded in terms of the integrated autocovariance of the process. We define the autocovariance function of to be

| (58) |

where T denotes a vector transpose, and here the outer 〈…〉i denotes the exact average not only over sampled from πi but also subsequent points of the sequence . Note that Ci(t) is an (L + 2) × (L + 2) matrix. We define the integrated autocovariance to be

| (59) |

The integrated autocovariance is the leading order coefficient in an expansion of the covariance (see Ref. 17, D.1.3),

| (60) |

where o(1/Ni) denotes terms that go to zero faster than 1/Ni (i.e., Ni · o(1/Ni) → 0 as Ni → ∞).
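The statement that the integrated autocovariance is the leading coefficient of the variance of a sample mean can be checked numerically on a process whose autocovariance is known in closed form. The sketch below uses a scalar AR(1) process, which is a stand-in chosen for illustration and is not part of the analysis in this section; the function names and parameter values are likewise hypothetical.

```python
import numpy as np

def ar1_sample_means(rho, n_steps, n_chains, rng):
    """Sample means of n_chains independent, stationary AR(1) trajectories
    x_{t+1} = rho * x_t + eps_t with unit-variance Gaussian noise."""
    stat_std = 1.0 / np.sqrt(1.0 - rho**2)
    x = rng.normal(scale=stat_std, size=n_chains)  # start in equilibrium
    total = np.zeros(n_chains)
    for _ in range(n_steps):
        total += x
        x = rho * x + rng.normal(size=n_chains)
    return total / n_steps

def ar1_integrated_autocovariance(rho):
    """Sum over all integer lags t of C(t) = rho**|t| / (1 - rho**2)."""
    return 1.0 / (1.0 - rho) ** 2

rng = np.random.default_rng(0)
rho, n_steps, n_chains = 0.5, 2000, 4000
means = ar1_sample_means(rho, n_steps, n_chains, rng)

print("N * var(sample mean):     ", n_steps * means.var())
print("integrated autocovariance:", ar1_integrated_autocovariance(rho))
print("i.i.d. value C(0):        ", 1.0 / (1.0 - rho**2))
```

The first two printed numbers should agree up to statistical noise, while the i.i.d. value C(0) underestimates the variance because it ignores the time needed to decorrelate.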

Under certain conditions on the process , one can strengthen the expansion of the covariance (60) to a central limit theorem (CLT) for . We expect such a CLT to hold for most problems and most sampling methods in computational statistical physics. However, to avoid a lengthy and technical digression, we simply take the CLT as an assumption; we justify this assumption in more detail in another work,36 and we refer to the work of Lelièvre et al. (Ref. 37, Section 2.3.1.2) for a general discussion of the CLT in the context of computational statistical physics.

Assumption 7.1 Central limit theorem for —

We assume that

| (61) |

where Σi ∈ ℝ^{(L+2)×(L+2)} is the integrated autocovariance matrix defined in (59). The symbol denotes convergence in distribution as Ni → ∞. Notice that when the elements of the sequence are independent and drawn from πi then . More generally, samples are correlated, so Σi includes a factor that accounts for the time to decorrelate.

Having characterized the errors in the sample means, we now study how these errors propagate through the EMUS algorithm. Our goal is to prove a CLT for EMUS. We accomplish this using the delta method.

Lemma 7.2 The delta method; Proposition 6.2 of Bilodeau and Brenner38 —

Let θN be a sequence of random variables taking values in ℝ^d. Assume that a central limit theorem holds for θN with mean μ ∈ ℝ^d and asymptotic covariance matrix Σ ∈ ℝ^{d×d}; that is, assume

$$\sqrt{N}\,(\theta_N - \mu) \xrightarrow{d} N(0, \Sigma). \tag{62}$$

Let Φ : ℝ^d → ℝ be a function differentiable at μ. Then we have the central limit theorem

$$\sqrt{N}\,\bigl(\Phi(\theta_N) - \Phi(\mu)\bigr) \xrightarrow{d} N\bigl(0,\ \nabla\Phi(\mu)^{T}\,\Sigma\,\nabla\Phi(\mu)\bigr) \tag{63}$$

for the sequence of random variables Φ(θN).

To motivate the delta method, we observe that if X has distribution N(μ, Σ), then ∇Φ(μ)^T X has distribution N(∇Φ(μ)^T μ, ∇Φ(μ)^T Σ ∇Φ(μ)). That is, according to the delta method, the asymptotic distribution of Φ(X) is the linearization of Φ at μ applied to the asymptotic distribution of X. Thus, one may regard the delta method as a rigorous version of the standard error propagation formula based on linearization.
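A small numerical check can make the delta method concrete. The sketch below compares the variance predicted by linearization, ∇Φ(μ)^T Σ ∇Φ(μ), with the empirical variance of √N (Φ(θN) − Φ(μ)) for a ratio of two means. The choice Φ(θ) = θ1/θ2, the Gaussian model, and all numerical values are assumptions made for illustration and are unrelated to the EMUS function B.

```python
import numpy as np

def phi(theta):
    """Illustrative nonlinear function: ratio of the two components."""
    return theta[..., 0] / theta[..., 1]

def grad_phi(mu):
    """Gradient of phi evaluated at mu."""
    return np.array([1.0 / mu[1], -mu[0] / mu[1] ** 2])

def delta_method_variance(mu, cov):
    """Asymptotic variance predicted by linearization of phi at mu."""
    g = grad_phi(mu)
    return float(g @ cov @ g)

def empirical_variance(mu, cov, n_samples, n_repeats, rng):
    """Variance of sqrt(N) * (phi(theta_N) - phi(mu)) over repeated experiments.

    For i.i.d. Gaussian data the sample mean theta_N is exactly
    N(mu, cov / N), so it is drawn directly instead of averaging raw samples.
    """
    theta = rng.multivariate_normal(mu, cov / n_samples, size=n_repeats)
    return float(n_samples * np.var(phi(theta)))

mu = np.array([1.0, 2.0])
cov = np.array([[1.0, 0.3], [0.3, 0.5]])
rng = np.random.default_rng(0)

predicted = delta_method_variance(mu, cov)
observed = empirical_variance(mu, cov, n_samples=10_000, n_repeats=20_000, rng=rng)
print("delta method:", predicted)
print("empirical:   ", observed)
```

The two values should agree to within the Monte Carlo error of the repeated experiments, which is the sense in which the delta method makes linearized error propagation rigorous.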

We prove the CLT for EMUS by applying the delta method with taking the place of θN and with the function B taking the place of Φ. We require the following assumptions in addition to Assumption 7.1.

Assumption 7.3.

We assume the following:

- 1. The proportion of the total number of samples drawn from each window is constant in the limit as N → ∞; that is,

$$\lim_{N \to \infty} \frac{N_i}{N} = \kappa_i > 0. \tag{64}$$

- 2. Sampling in different windows is independent; that is, is independent of when j ≠ i.

- 3. The biasing functions ψi are chosen so that F is irreducible; see Section III B.

We now give the CLT for EMUS.

Theorem 7.4 Central Limit Theorem for EMUS —

Let Assumptions 7.1 and 7.3 hold. Let

| (65) |

denote the partial derivative of B with respect to . Under the assumptions stated above,

| (66) |

where

| (67) |

We refer to σ² as the asymptotic variance of EMUS.

Proof.

First, we write down a central limit theorem for . We have that

| (68) |

by Assumption 7.1 and (64). Since the sampling in different windows is assumed to be independent, (68) implies

| (69) |

where Σ ∈ ℝ^{L(L+2)×L(L+2)} is the block diagonal matrix

| (70) |

Second, we verify that B is differentiable at . Since F is assumed to be an irreducible stochastic matrix, is differentiable at . We refer to Thiede et al.39 (Lemma 3.1) for a complete explanation. It follows from the chain rule that B is differentiable at .

Finally, applying Lemma 7.2 with B playing the role of Φ and the role of θN concludes the proof. □

The asymptotic variance σ² appearing in Theorem 7.4 measures the rate at which the error of EMUS decreases with the number of samples. To make this precise, we observe that Theorem 7.4 is equivalent to the following asymptotic result concerning confidence intervals. For every α > 0,

| (71) |

where P denotes a probability and erf denotes the error function.
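To connect this statement to practice, the following sketch converts an estimate of the asymptotic variance into an approximate confidence interval. It assumes the usual reading of a central limit theorem with √N scaling, namely that the error lies within ασ/√N with asymptotic probability erf(α/√2); the function names and the numerical inputs are hypothetical.

```python
import math

def asymptotic_coverage(alpha):
    """Probability that a standard normal variable lies in [-alpha, alpha]."""
    return math.erf(alpha / math.sqrt(2.0))

def half_width(sigma2, n_samples, alpha):
    """Half-width alpha * sigma / sqrt(N) of the asymptotic confidence interval."""
    return alpha * math.sqrt(sigma2 / n_samples)

# Hypothetical inputs: an estimated asymptotic variance and a total sample count.
sigma2_hat, n_total = 4.0, 10_000
for alpha in (1.0, 1.96, 3.0):
    print(alpha, asymptotic_coverage(alpha), half_width(sigma2_hat, n_total, alpha))
```

Because the interval is asymptotic, it should be trusted only when N is large enough for the central limit theorem to be a good approximation.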

The asymptotic variance is commonly used to measure the efficiency of an estimator. We refer to the work of van der Vaart40 for an explanation and for a discussion of other possibilities. In Section VII B, we explain how the proportion κi of samples allocated to each window may be adjusted to minimize the asymptotic variance of EMUS, thereby maximizing efficiency.

We note that a central limit theorem similar to Theorem 7.4 has been proved for the MBAR estimator by Gill et al. (Ref. 4, Proposition 2.2). However, the authors of that work do not study the dependence of the asymptotic variance on the parameters, as we do. In fact, the MBAR estimator is significantly more complicated than EMUS, and its dependence on the number of windows and the allocation of samples is harder to understand.

In Ref. 36, we use a result similar to Theorem 7.4 to generalize the conclusions of Section VI to periodic and multi-dimensional reaction coordinates and to a wider class of observables than free energy differences. We show there both that the asymptotic variance is constant in the limit of large L and that the work required to compute an average to fixed precision increases only algebraically in the low temperature limit. In addition, we use recently developed perturbation estimates for Markov chains39 to quantify the dependence of the asymptotic variance of EMUS on the degree to which the bias functions overlap.

B. Estimating the asymptotic variance of EMUS

Our goal in this section is to derive a computable estimate of the asymptotic variance σ², which can be decomposed to assess the contributions from individual windows to errors in averages. We recall that formula (67) for σ² involves partial derivatives of B. Our estimate of σ² requires explicit formulas for these partial derivatives. We provide the appropriate expressions, both for ensemble averages and for free-energy differences, in Lemma 7.5. Following the derivation of the partial derivatives, we present an algorithm for evaluating the estimate and demonstrate it for the alanine dipeptide. Finally, we compare with the output of a procedure from Zhu and Hummer (ZH)12 in Section VII B 3.

Lemma 7.5.

We have the following formulas for :

- 1.

- 2. When EMUS is used to compute a free-energy difference, B is defined by (56), and we have

| (75) |

| (76) |

| (77) |

- 3. When EMUS is used to compute the free energy of the kth window, B is defined by (11), and we have

| (78) |

Note that for a free energy difference between windows, we can simply subtract derivatives for the corresponding windows.

| (79) |

Proof.

We begin by reminding the reader that the output of EMUS is the vector of window normalization constants, z, which depends on the sample mean . Because all other averages and, in turn, their derivatives rely on z, we need to determine the sensitivity of each element of z to each element of (i.e., ). Since is a stochastic matrix, some care must be taken in defining this derivative. We resolve the technical difficulties in detail elsewhere; see Ref. 36 and Ref. 39 (Lemma 3.1). Here, to obtain the derivative , evaluated at , we perturb around ,

| (80) |

where E is an arbitrary matrix, ε is a scalar, and we assume that the sum is also a stochastic matrix. The right-hand side follows from the chain rule, effectively treating each element of the matrix as a separate argument to each element z. Then, we employ a relation from Golub and Meyer (Ref. 13, Theorem 3.1),

| (81) |

where # denotes the group inverse, a generalized matrix inverse similar to the Moore–Penrose inverse. It is defined as satisfying AA^#A = A, A^#AA^# = A^#, and AA^# = A^#A. We refer to Golub and Meyer13 for further discussion of the group inverse and an algorithm for computing it. Finally, we equate (80) and (81) and solve for the derivative of interest,

| (82) |

Thus the sensitivity of each element of z to each element of can be computed from linear algebra operations.

With (82), we can now compute the derivatives of B. We derive the formulas for the free-energy difference explicitly; the other cases are similar. In this case,

| (83) |

By the chain rule,

| (84) |

and

| (85) |

The stated result follows by substituting g1 = 1_{S1}, g2 = 1_{S2}, and the expression in (82) for . □
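The perturbation argument used in the proof can be checked numerically. The sketch below computes the group inverse of I − F for a small irreducible stochastic matrix and compares the resulting directional derivative of the invariant vector z with a finite-difference estimate. Two caveats apply: the group inverse is obtained from the identity (I − F)^# = (I − F + 1z^T)^{-1} − 1z^T, which holds for irreducible stochastic F with z normalized to sum to one, rather than from the algorithm of Golub and Meyer; and the matrices F and E, as well as all function names, are hypothetical examples.

```python
import numpy as np

def stationary_vector(F):
    """Row vector z with z F = z and sum(z) = 1, for irreducible stochastic F."""
    L = F.shape[0]
    # Stack the equations z (I - F) = 0 with the normalization sum(z) = 1.
    A = np.vstack([(np.eye(L) - F).T, np.ones((1, L))])
    b = np.zeros(L + 1)
    b[-1] = 1.0
    z, *_ = np.linalg.lstsq(A, b, rcond=None)
    return z

def group_inverse(F, z):
    """(I - F)^# computed as (I - F + 1 z^T)^{-1} - 1 z^T."""
    L = F.shape[0]
    ones_z = np.outer(np.ones(L), z)
    return np.linalg.inv(np.eye(L) - F + ones_z) - ones_z

def stationary_derivative(F, E):
    """d/d(eps) of z(F + eps E) at eps = 0, for E with zero row sums."""
    z = stationary_vector(F)
    return z @ E @ group_inverse(F, z)

rng = np.random.default_rng(0)
F = rng.random((4, 4)) + 0.1
F /= F.sum(axis=1, keepdims=True)      # row-stochastic, strictly positive
E = rng.normal(size=(4, 4))
E -= E.mean(axis=1, keepdims=True)     # zero row sums keep F + eps E stochastic

z = stationary_vector(F)
analytic = stationary_derivative(F, E)
eps = 1e-6
numeric = (stationary_vector(F + eps * E) - stationary_vector(F - eps * E)) / (2 * eps)
print(analytic)
print(numeric)
```

The agreement between the two printed vectors illustrates that the sensitivities of z require only standard linear algebra once the group inverse is available.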

1. Computational procedure

We now provide a practical procedure that uses the derivatives above to estimate σ² from trajectories that sample the distributions πi. For clarity, we assume that the system is equilibrated (i.e., has distribution πi, so that the process is stationary) throughout this section.

We begin by rewriting (67) as

| (86) |

where

| (87) |

Defining the sequence

| (88) |

we find that

| (89) |

which is the integrated autocovariance of .

We thus propose the following algorithm, given simulation data:

- 1. Compute .

- 2. Compute and using the algorithm of Golub and Meyer.13

- 3. Evaluate at using the formulas in Lemma 7.5.

- 4. Compute

| (90) |

- 5. Compute an estimate of the integrated autocovariance of using an algorithm such as ACOR.41

- 6. Compute the estimate of σ²,

| (91) |

Since , , and z are all computed in the process of obtaining the EMUS averages, estimating only requires one additional pass over the simulation data. This additional cost is insignificant compared with that of computing the trajectories.
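The last two steps of the procedure can be sketched as follows, under several assumptions that should be stated plainly. The per-window scalar sequences from step 4 are taken as given (here they are replaced by synthetic data); the integrated autocovariance is estimated with a simple batch-means estimator standing in for ACOR; and the estimate of σ² is assembled as a sum over windows of the integrated autocovariance divided by κi = Ni/N, which is the form implied by combining the per-window central limit theorems with the delta method. The names `batch_means_iac`, `variance_decomposition`, and `xi_by_window` are hypothetical.

```python
import numpy as np

def batch_means_iac(xi, n_batches=None):
    """Batch-means estimate of the integrated autocovariance of a scalar,
    stationary time series (a stand-in for ACOR)."""
    xi = np.asarray(xi, dtype=float)
    n = xi.size
    if n_batches is None:
        n_batches = max(2, int(np.sqrt(n)))
    m = n // n_batches                          # batch length
    means = xi[: m * n_batches].reshape(n_batches, m).mean(axis=1)
    return m * means.var(ddof=1)

def variance_decomposition(xi_by_window):
    """Per-window contributions chi_i^2 / kappa_i and their sum."""
    counts = np.array([len(xi) for xi in xi_by_window], dtype=float)
    kappa = counts / counts.sum()               # fraction of samples per window
    chi2 = np.array([batch_means_iac(xi) for xi in xi_by_window])
    terms = chi2 / kappa
    return terms, float(terms.sum())

# Synthetic stand-ins for the per-window sequences of step 4.
rng = np.random.default_rng(1)
n = 250_000
white = rng.normal(size=n)                      # integrated autocovariance 1
kernel = 0.5 ** np.arange(60)                   # truncated AR(1) response, rho = 0.5
correlated = np.convolve(rng.normal(size=n + 59), kernel, mode="valid")  # about 4

terms, sigma2_hat = variance_decomposition([white, correlated])
print("per-window contributions:", terms)
print("estimated sigma^2:", sigma2_hat)
```

With equal sample counts in the two synthetic windows, the slowly decorrelating window dominates the estimate, which is the kind of information the decomposition is meant to expose.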

Both (67) and its approximation (91) decompose the asymptotic variance of EMUS into a sum of contributions from each window. By comparing the sizes of terms in the sum, we can determine the degrees to which different windows contribute to the error. In principle, this information can be used to guide modification of the parameters of the simulation to improve efficiency. For instance, one might adjust the proportion of samples allocated to each window, κi, to minimize the asymptotic variance. From (86), the asymptotic variance σ² is minimized when κi ∝ χi (see Equation (42) of Ref. 12). Consequently, we can define the relative importance of window i as

| (92) |

where the normalization is chosen so that μi = 1, regardless of L, if all windows have the same importance. The relative importance represents how many samples would be allocated to a window to optimally estimate a specific observable, compared to a uniform distribution over all windows.
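The allocation argument can be illustrated with a few lines of Python. The sketch assumes that the asymptotic variance has the form Σi χi²/κi and that the relative importance is normalized as μi = L χi / Σj χj; the latter is one normalization consistent with the requirement that μi = 1 when all windows are equally important, and both forms are assumptions of this example, as are the values of χi.

```python
import numpy as np

def asymptotic_variance(chi, kappa):
    """sigma^2 = sum_i chi_i^2 / kappa_i for a given allocation kappa."""
    chi = np.asarray(chi, dtype=float)
    kappa = np.asarray(kappa, dtype=float)
    return float(np.sum(chi**2 / kappa))

def optimal_allocation(chi):
    """Allocation kappa_i proportional to chi_i, normalized to sum to one."""
    chi = np.asarray(chi, dtype=float)
    return chi / chi.sum()

def relative_importances(chi):
    """mu_i = L * chi_i / sum_j chi_j, equal to 1 for every window if all chi_i agree."""
    chi = np.asarray(chi, dtype=float)
    return chi.size * chi / chi.sum()

chi = [1.0, 2.0, 5.0]                           # hypothetical window contributions
uniform = np.full(len(chi), 1.0 / len(chi))

print("importances:        ", relative_importances(chi))
print("variance (uniform): ", asymptotic_variance(chi, uniform))
print("variance (optimal): ", asymptotic_variance(chi, optimal_allocation(chi)))
```

By the Cauchy–Schwarz inequality, the optimal allocation attains the value (Σi χi)², so the gain over uniform allocation grows with the spread of the χi.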

2. Numerical results

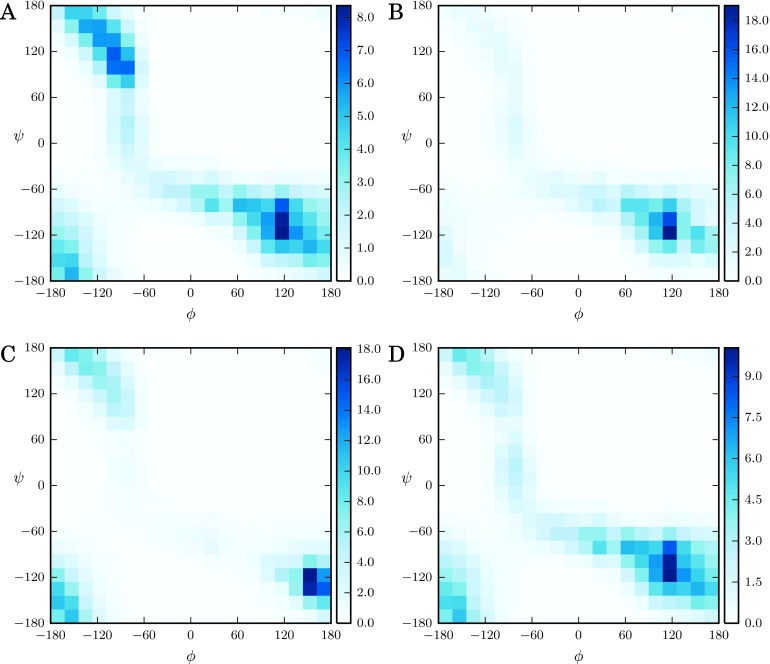

To study the behavior of these estimates, we performed a two-dimensional umbrella sampling calculation with restraints on the ϕ and ψ dihedral angles of the alanine dipeptide. Parameters were the same as in the one-dimensional calculation above, with the addition of 20 bias functions in the ψ dihedral with the same force constant, creating a grid of 400 windows. Each window was equilibrated for 40 ps and sampled for a further 150 ps, with the collective variable values output every 10 fs.

In Figures 3 and 4(a), we plot the two-dimensional PMF from EMUS and the importances for the free energy difference between two windows located at the C7 equatorial and C7 axial configurations. Comparison shows that the importances are high for windows on low free energy pathways between the two windows of interest. Two such pathways exist. In the representation in Figure 3, one proceeds up and to the left of the C7 equatorial basin and then (via the periodic boundaries) enters the C7 axial basin through transition state 1 (TS1 in Figure 3). The other pathway proceeds down then right through transition state 2 (TS2 in Figure 3). Of these two pathways, the first has a lower free energy barrier. We observe that the EMUS importances are larger for windows located on this pathway. In contrast, windows off these pathways in regions with high free energies have very low importances presumably because, though sampling error may be large in those regions, they do not contribute significantly to the desired averages. We refer the reader to Thiede et al.39 for a mathematical discussion of the sensitivities of the averages.

FIG. 3.

Potential of mean force obtained from US with biases on the ϕ and ψ dihedral angles. Major basins and barriers on pathways connecting them are indicated. Angles are measured in degrees. The scale bar indicates PMF values in kcal/mol, and the contour spacing is 2 kBT. The surface is constructed from simulation data accumulated in histograms with 100 bins in each collective variable. See text for simulation details.

FIG. 4.

EMUS relative importances. Angles are measured in degrees. (a) Relative importances for the free energy difference between windows in the C7 axial and C7 equatorial basins. The window in the C7 equatorial basin is centered at (–81°,81°), and the window in the C7 axial basin at (63°,–63°). (b) Window importances for the free energy difference between windows in the C7 axial basin and at TS1. Windows are centered at (63°,–63°) and (135°,−117°), respectively. (c) Importances for the free energy of the window at TS1. (d) Importances for the window in the C7 axial basin.

We expect the importances to depend on the computed average. To illustrate that this is the case numerically, we show the log importances for the free energy difference between a window in the C7 axial basin and one located on TS1 in Figure 4(b). Compared to Figure 4(a), the importances are higher in the C7 axial basin and lower in the C7 equatorial basin, which highlights that the importances depend on the average computed and do not simply mirror the free energy. In Figures 4(c) and 4(d), we plot the importances for estimating the window free energy (not the free energy difference) of the window on TS1 and the window in the C7 axial basin, respectively. We note that the importances in the C7 equatorial basin are higher in Figures 4(c) and 4(d) than in 4(b). This suggests that when the free energy difference between the two windows is considered, there is some cancellation of the errors arising in the C7 equatorial basin.

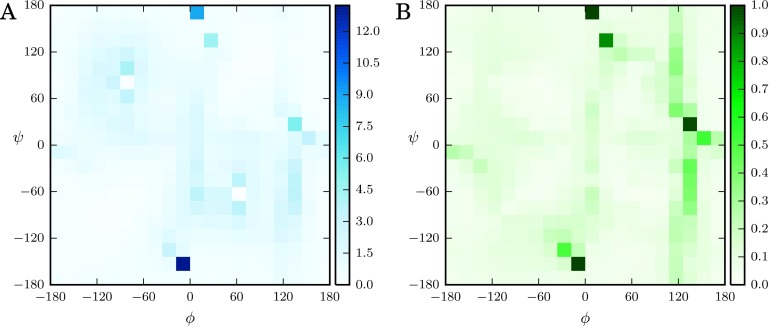

3. Comparison with other algorithms for determining error contributions

Zhu and Hummer12 proposed an algorithm for determining window free energies by calculating the mean restraining forces for each window and using thermodynamic integration to estimate free energy differences between adjacent windows. These are combined using least squares to calculate window free energies. Like EMUS, this algorithm allows one to construct error estimates that can be decomposed into contributions from individual windows. The authors give an expression for the error in the free energy of one window. This expression can be easily extended to the free energy difference between two windows, giving

| (93) |

where is the average force exerted by the bias function for window k in the αth dimension. The constants cikα and cjkα are defined in Appendix A of Zhu and Hummer.12 The authors propose that these error estimates are applicable to WHAM and other umbrella sampling algorithms.

Both (67) and (93) are sums of contributions from individual windows. Using the formalism introduced in Section VII B 1, we define the process

| (94) |

and as the integrated autocovariance of . This allows us to define importances for the Zhu and Hummer algorithm analogously to those for EMUS (see (92)).

We applied the ZH error analysis to the two-dimensional umbrella sampling data used in Section VII B 2 and calculated the importances for the same free energy difference as in Figure 4(a) (Figure 5(a)). Rather than falling along the low free energy pathways, as for EMUS, the ZH importances mirror the autocorrelation times (Figure 5(b)). This indicates that windows have large ZH importances if they have large fluctuations in free energy. We thus see that different algorithms emphasize different windows in US. We can understand the behaviors of these two algorithms by considering (88) and (94). The factor in (88) depends explicitly on the normalization constant for each window (see Lemma 7.5). By contrast, the factor in (94) depends only on the relative positions of the windows and not on their free energies.

FIG. 5.

Comparison to the work of Zhu and Hummer. (a) ZH estimates for the relative importances for the free energy difference between windows in the C7 axial and C7 equatorial basins. Compare with Figure 4(a). (b) Autocorrelation times of the trajectory in each window. The largest value observed is 3 ps, but the scale is limited to 1 ps for visual clarity.

VIII. CONCLUSIONS

The success of an umbrella sampling simulation depends on the choice of windows (i.e., how the system is biased) and the estimator used to determine the normalization constants of the windows from trajectory data. Here, we show that the normalization constants can be obtained from an eigenvector of a stochastic matrix. This eigenvector method for umbrella sampling (EMUS) can be viewed as the first step in an implementation of the MBAR estimator. In our experience, this first step is nearly converged, and machine precision is reached in only a few iterations. Moreover, each iteration yields a consistent estimate. Most importantly, error analysis is considerably easier for EMUS than MBAR because the elements of the stochastic matrix do not depend on the normalization constants.

Within this framework, we revisited a common scaling argument for justifying umbrella sampling and showed that once the number of windows becomes sufficiently large, the scheme does not benefit from the addition of more windows (i.e., the variance is not further reduced for a fixed computational effort). We show that an alternative scaling regime in which temperature decreases (or, equivalently, free-energy barrier heights increase) as the number of windows increases best demonstrates the potential benefits of the umbrella sampling strategy; in that regime the efficiency improvement over direct simulation is exponential in the (inverse) temperature.

Our main theoretical result is a central limit theorem for the statistical averages obtained from EMUS. This result relies on the delta method, which we use to characterize the propagation of the asymptotic error through the solution of a stochastic matrix eigenproblem. The central limit theorem provides an expression for the asymptotic variance of the averages of interest. It is a sum of contributions from individual windows, and we use it to develop a prescription for estimating the relative importances of windows for averages from the trajectory data. For free energy differences of states of the alanine dipeptide, we find numerically that the importances are largest for low free energy pathways that connect the specific states of interest. These results suggest that the importances could serve as the basis for adaptive schemes that focus computational effort on the windows of most importance. Even more interesting would be to adjust the bias functions as the simulation progresses. How best to do this remains an open area of investigation.

Acknowledgments

This research was supported by National Institutes of Health (NIH) Grant No. 5 R01 GM109455-02. We wish to thank Jonathan Mattingly, Jeremy Tempkin, and Charlie Matthews for helpful discussions.

APPENDIX: CONSISTENCY OF ITERATIVE EMUS

Here, we prove that for fixed finite m, zm is a consistent estimator of the vector of normalization constants z. With the initial guess z0 = n, the result, z1, of the first iteration is the EMUS estimator. We now show that z2 is also consistent in the sense that if the trajectory averages defining converge then z2 converges to z. Because the various sequences in question are sequences of random variables, one must specify what is meant by convergence. The argument below applies when convergence refers either to convergence in probability or convergence with probability one (almost sure convergence) as long as the notion of convergence is consistent throughout. Consistency of zm follows by induction on m using a similar argument.

For any positive vector w, we define

| (A1) |

and we write

| (A2) |

where

| (A3) |

We then observe that

| (A4) |

Because hij(u, x) ≤ ui/uj,

| (A5) |

Therefore,

| (A6) |

for a continuous function γ defined for positive vectors u and and such that γ(u, u) = 0 for any u. The function must explode when the entries of u or approach 0. Now define

| (A7) |

where z is the exact vector of normalization constants. By (A6), we have

| (A8) |

As the number of samples N increases, converges to F(z). Moreover, since z1 is the EMUS estimate of z, z1 converges to z. Therefore, u converges to , and (A8) implies that converges to Fij(z). Finally, since the function mapping an irreducible, stochastic matrix to its invariant vector is continuous, it follows that z2 converges to the invariant vector of F(z), which is z. This verifies the consistency of z2.

REFERENCES

- 1. Torrie G. M. and Valleau J. P., J. Comput. Phys. , 187 (1977). doi:10.1016/0021-9991(77)90121-8

- 2. Pangali C., Rao M., and Berne B., J. Chem. Phys. , 2975 (1979). doi:10.1063/1.438701

- 3. Vardi Y., Ann. Stat. , 178 (1985). doi:10.1214/aos/1176346585

- 4. Gill R. D., Vardi Y., and Wellner J. A., Ann. Stat. , 1069 (1988). doi:10.1214/aos/1176350948

- 5. Kumar S., Bouzida D., Swendsen R. H., Kollman P. A., and Rosenberg J. M., J. Comput. Chem. , 1011 (1992). doi:10.1002/jcc.540130812

- 6. Shirts M. R. and Chodera J. D., J. Chem. Phys. , 124105 (2008). doi:10.1063/1.2978177

- 7. Rosta E. and Hummer G., J. Chem. Theory Comput. , 276 (2014). doi:10.1021/ct500719p

- 8. Mey A. S., Wu H., and Noé F., Phys. Rev. X , 041018 (2014). doi:10.1103/PhysRevX.4.041018

- 9. Tan Z., Gallicchio E., Lapelosa M., and Levy R. M., J. Chem. Phys. , 144102 (2012). doi:10.1063/1.3701175

- 10. Lelièvre T., Stoltz G., and Rousset M., Free Energy Computations: A Mathematical Perspective (World Scientific, 2010).

- 11. Minh D. D. and Chodera J. D., J. Chem. Phys. , 134110 (2009). doi:10.1063/1.3242285

- 12. Zhu F. and Hummer G., J. Comput. Chem. , 453 (2012). doi:10.1002/jcc.21989

- 13. Golub G. H. and Meyer C. D., Jr., SIAM J. Algebraic Discrete Methods , 273 (1986). doi:10.1137/0607031

- 14. Thiede E. H., EMUS, 2016, https://github.com/ehthiede/EMUS.

- 15. Schneider H., Linear Algebra Appl. , 139 (1977). doi:10.1016/0024-3795(77)90070-2

- 16. Tan Z., J. Am. Stat. Assoc. , 1027 (2004). doi:10.1198/016214504000001664

- 17. Frenkel D. and Smit B., Understanding Molecular Simulation: From Algorithms to Applications (Academic Press, 2001).

- 18. Geyer C. J., Stat. Sci. , 473 (1992). doi:10.1214/ss/1177011137

- 19. Doss H. and Tan A., J. R. Stat. Soc.: Ser. B , 683 (2014). doi:10.1111/rssb.12049

- 20. Meng X.-L. and Wong W. H., Statistica Sinica , 831 (1996).

- 21. Paliwal H. and Shirts M. R., J. Chem. Theory Comput. , 4700 (2013). doi:10.1021/ct4005068

- 22. Shirts M. R., Mobley D. L., and Chodera J. D., Annu. Rep. Comput. Chem. , 41 (2007). doi:10.1016/S1574-1400(07)03004-6

- 23. Abraham M. J., Murtola T., Schulz R., Páll S., Smith J. C., Hess B., and Lindahl E., SoftwareX , 19 (2015). doi:10.1016/j.softx.2015.06.001

- 24. Tribello G. A., Bonomi M., Branduardi D., Camilloni C., and Bussi G., Comput. Phys. Commun. , 604 (2014). doi:10.1016/j.cpc.2013.09.018

- 25. MacKerell A. D., Banavali N., and Foloppe N., Biopolymers , 257 (2000).

- 26. Hess B., Bekker H., Berendsen H. J. C., and Fraaije J. G. E. M., J. Comput. Chem. , 1463 (1997).

- 27. Grossfield A., “WHAM: The weighted histogram analysis method (version 2.0.9),” 2013, http://membrane.urmc.rochester.edu/content/wham.

- 28. Süli E. and Mayers D. F., An Introduction to Numerical Analysis (Cambridge University Press, 2003).

- 29. Chandler D., Introduction to Modern Statistical Mechanics (Oxford University Press, 1987).

- 30. Chipot C. and Pohorille A., Free Energy Calculations (Springer, 2007), p. 86.

- 31. van Duijneveldt J. and Frenkel D., J. Chem. Phys. , 4655 (1992). doi:10.1063/1.462802

- 32. Wojtas-Niziurski W., Meng Y., Roux B., and Bernèche S., J. Chem. Theory Comput. , 1885 (2013). doi:10.1021/ct300978b

- 33. Virnau P. and Müller M., J. Chem. Phys. , 10925 (2004). doi:10.1063/1.1739216

- 34. Nguyen T. H. and Minh D. D., J. Chem. Theory Comput. , 2154 (2016). doi:10.1021/acs.jctc.6b00060

- 35. Kelly F. P., Reversibility and Stochastic Networks (Cambridge University Press, 2011).

- 36. Dinner A. R., Thiede E. H., Van Koten B., and Weare J., “Stratification of Markov chain Monte Carlo sampling” (unpublished).

- 37. Lelièvre T., Stoltz G., and Rousset M., Free Energy Computations: A Mathematical Perspective (Imperial College Press, Hackensack, NJ, 2010), p. 458.

- 38. Bilodeau M. and Brenner D., Theory of Multivariate Statistics (Springer Science & Business Media, 2008).

- 39. Thiede E., Van Koten B., and Weare J., SIAM J. Matrix Anal. Appl. , 917 (2015). doi:10.1137/140987900

- 40. van der Vaart A. W., Asymptotic Statistics, Cambridge Series on Statistical and Probabilistic Mathematics (Cambridge University Press, Cambridge, New York, 1998), p. 443.

- 41. Foreman-Mackey D. and Goodman J., ACOR 1.1.1, 2014, https://pypi.python.org/pypi/acor/1.1.1.