SUMMARY

The number of large-scale genomics projects is increasing due to the availability of affordable high-throughput sequencing (HTS) technologies. The use of HTS for bacterial infectious disease research is attractive because one whole-genome sequencing (WGS) run can replace multiple assays for bacterial typing, molecular epidemiology investigations, and more in-depth pathogenomic studies. The computational resources and bioinformatics expertise required to accommodate and analyze the large amounts of data pose new challenges for researchers embarking on genomics projects for the first time. Here, we present a comprehensive overview of bacterial genomics projects from beginning to end, with a particular focus on the planning and computational requirements for HTS data, and provide a general understanding of the analytical concepts needed to develop a workflow that meets the objectives and goals of HTS projects.

INTRODUCTION

High-throughput sequencing (HTS) has transformed biomedical research. Declining costs and development of accessible computing options have resulted in the widespread adoption of these technologies in the scientific community. PCR and Sanger sequencing (often referred to as “traditional sequencing” methods) required proportionally more time generating the data than was needed for downstream analysis; in contrast, HTS platforms can produce massive amounts of data relatively quickly compared to the time needed for analysis and interpretation. The bottleneck between data generation and meaningful interpretation has generated a need for new, efficient, and innovative data management and analysis methods.

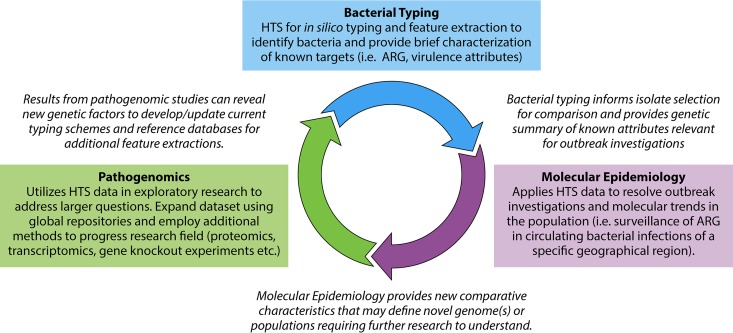

Here, we provide a comprehensive review of how to conduct an HTS project in bacterial genomics, with particular emphasis on infectious disease microbiology. Although basic scientific processes and experimental design have not changed, the additional steps and scale of data generation have caused a paradigm shift in the time and resource allocations required to successfully complete HTS projects. We present the process in the context of three applications of varying scope, with the goal of making this review relevant and scalable to many areas of infectious disease genomics research. The three applications are (i) bacterial typing, (ii) molecular epidemiology, and (iii) pathogenomics. Figure 1 illustrates how HTS-based whole-genome sequencing (WGS) can support projects of increasing scope in a feedback loop. The whole-genome data can be mined for comparison with current typing schemes or used to create expanded “fingerprints” of the bacteria (bacterial typing), which in turn can contribute to investigating a larger defined bacterial population (molecular epidemiology). Population trends, novel strains, or genomic features identified through such comparisons can then be studied in more depth using complementary research methods to understand pathogenic mechanisms (pathogenomics).

FIG 1.

Example of three HTS applications for infectious disease bacterial genomics. These applications use HTS data to answer common questions regarding bacterial pathogenesis in a public health/clinical microbiological research setting from bacterial typing to molecular epidemiology and in-depth pathogenomic investigations. These applications are shown in a feedback loop to demonstrate that HTS provides data that can be analyzed to various degrees (both depth and breadth) based on the hypotheses under test and the number of isolates included for comparative genomics.

To overcome the bottleneck associated with big data analysis, a shift in resource allocation is needed to ensure that adequate computational resources and expertise are available to efficiently produce high-quality data and results. Therefore, proper planning and a multidisciplinary team are essential to successfully execute large-scale HTS projects. This review provides a resource for conducting HTS projects from beginning to end, based on expertise from successful infectious disease genomics projects in the literature and personal experiences.

PREPARATION

Reallocating resources to efficiently handle increasing sample sizes and the large amounts of HTS data produced presents new challenges to researchers. The amount of data generated often exceeds the storage and computing capacity of local systems, requiring researchers to find additional resources to organize and manage the data throughout their analysis workflows. Therefore, an end-to-end understanding of microbial HTS projects and the available options will better equip researchers to anticipate bottlenecks and prepare sufficient resources to mitigate them.

HTS technologies enhance our ability to characterize and differentiate clinically relevant bacterial populations, understand and predict epidemiological trends, and create new analytical tools or improve existing non-HTS molecular tests (Fig. 1). The timeline for project completion depends on many variables, such as the scope of the project (i.e., number of samples, size of the research team, and depth of research questions), the biological characteristics of the bacteria under study, the sequencing platform(s) used, and the outcome goals. Figure 2 illustrates a generalized timeline of the major stages in a large-scale HTS project. We place strong emphasis on the planning stage prior to data generation and on the need for ongoing project management to keep tasks moving forward through each stage. The analysis has been separated into three stages: primary, secondary, and tertiary. Primary analysis is the first analytical pass: quality assurance (QA) and quality control (QC) of the HTS data. Secondary analysis employs common (likely automated) workflows typically performed on newly generated genomes, which can include reference mapping and de novo assembly. Tertiary analysis is the “sense-making” stage of the project, where interpretations and conclusions are drawn from comparative analyses, and it includes more specialized or focused processes.

FIG 2.

General HTS project stages and timeline. The importance and time requirements for the project planning stage and ongoing project management are sometimes underestimated but are invaluable for large-scale HTS projects for which hundreds of samples and terabytes of data are produced. The stages are divided by natural timeline progression and also increasing depth of investigation and specialized analysis requirements.

Project Management

The project manager role is often filled by the lead principal investigator or may be divided among senior members of the project. For some large-scale projects, a dedicated project manager may be assigned. In general terms, the project manager is responsible for organizing and controlling performance as the project progresses (1). HTS project-specific considerations are summarized in Table 1. Project management tasks can be categorized into communication, logistical facilitation (i.e., transfer of materials/data), and data management. For more detailed information on data management, we refer readers to recent publications that summarize the need for data management throughout the data life cycle in HTS projects (i.e., raw, intermediate, and result data) and propose some best-practice guidance to develop policies for the management, analysis, and sharing of data within HTS projects (2, 3).

TABLE 1.

Project management principles and how they relate to HTS research projects

| Principle | General components | HTS project-specific considerations |
|---|---|---|
| Planning | Identify questions to be addressed; identify stakeholders; identify tasks; identify outcomes; identify risks and plan for them; establish roles and responsibilities | Funding and ethics timelines; equipment costs/availability for all stages; choose sequencing platform and configurations; computational resources and analysis plan; appropriate strain selection; metadata organization and coding of samples; anticipate risks or bottlenecks in the workflow |
| Organizing | Organize resources; organize data | Staff training if required; data management; procurement; data collection |
| Controlling performance | Organize, focus, and motivate personnel; track work and results; communicate; update stakeholders; monitor and mitigate risks | Curate data on an ongoing basis; identify bottlenecks/issues and facilitate progression to next steps; confirm and assess work against milestones; progress reports as required |

Experimental Design

The experimental design should be established during the planning stage and encompass the entire project from the initial question/hypothesis through the sampling strategy, data generation methodology, and analysis plans to defining the outcome goals and deliverable endpoints. There are many legitimate sampling strategies in scientific research; the application will dictate which strategy is appropriate. Table 2 describes some general examples of sampling strategies for the three applications targeted in this review.

TABLE 2.

Sampling strategy examples for 3 applications of HTS projects

| Example of sampling strategy | Application(s)ᵃ |
|---|---|
| Unbiased prospective or retrospective (or combined) sampling: sampling of all strains meeting a specific definition (e.g., over a time period or region) for unbiased discovery (typically population-based studies) | |
| ∙ Characterization of genome population: what is circulating? | BT, ME |
| ∙ Sample and reveal trends for strains based on geography or time | ME |
| Differential or niche sampling: categorically biased sampling to assess anticipated population differences (typically case-vs-control or cohort studies) | |
| ∙ Compare genomic differences between epidemiologically defined groups (e.g., community vs hospital acquired; presence vs absence of a pathogenic phenotype or clinical outcome) | BT, ME, P |
| ∙ Characterize and compare genomes of closely related strains from different environmental niches | BT, ME, P |
| ∙ Pathogenic vs commensal isolates within the same species | ME, P |
| ∙ Characterization of new pathogen genotype(s) and/or novel strains | P |

ᵃBT, bacterial typing; ME, molecular epidemiology; P, pathogenomics.

The data describing each sample, known as the “metadata,” are crucial for extracting meaningful biological interpretations from the analysis results. At a minimum, information such as the source, location, and collection date should be supplied for each sample to ensure that results can be correctly inferred for the surveyed population. The Metadata Coverage Index (MCI) has been suggested as a standardized metric for quantifying database annotation richness. In the future, the MCI might be used to assess the richness of metadata coverage for genomics standards compliance, quality filtering, and reporting (4), although manual curation of metadata is unlikely to be eliminated. Efforts are under way globally to establish performance specifications and to fit HTS within existing communities of practice (regulatory or professional standards) (5, 6). Hence, in this changing context, HTS processing and quality guidelines will remain a “space to watch” for the foreseeable future.
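To make the minimal-metadata requirement concrete, the sketch below checks each sample record for a small set of required fields and reports an overall fill rate. It is a minimal illustration, assuming records are Python dictionaries; the field names are hypothetical, and the fill rate is a simple stand-in for completeness, not the published MCI definition (4).

```python
# Hypothetical minimal field set, based on the examples named in the text
# (source, location, collection date); adapt to the project's metadata standard.
REQUIRED_FIELDS = ("source", "location", "collection_date")


def is_filled(value) -> bool:
    """Treat None and empty or whitespace-only strings as missing."""
    return value is not None and str(value).strip() != ""


def missing_fields(record: dict, fields=REQUIRED_FIELDS) -> list:
    """Return the required fields that are absent or blank in one sample record."""
    return [f for f in fields if not is_filled(record.get(f))]


def fill_rate(records: list, fields=REQUIRED_FIELDS) -> float:
    """Fraction of (record, field) cells that are filled across all records.

    A simple completeness score for illustration; not the published MCI formula.
    """
    if not records:
        return 0.0
    filled = sum(1 for r in records for f in fields if is_filled(r.get(f)))
    return filled / (len(records) * len(fields))


# Made-up sample records for illustration.
samples = [
    {"sample_id": "S001", "source": "blood", "location": "Site A", "collection_date": "2015-03-02"},
    {"sample_id": "S002", "source": "urine", "location": "", "collection_date": None},
]
for s in samples:
    print(s["sample_id"], missing_fields(s))
print(round(fill_rate(samples), 2))
```

Running such a check when samples are received, rather than at analysis time, gives the submitting laboratory a chance to supply missing values while they are still easy to retrieve.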

One of the first and most important steps in any scientific investigation is the generation of a hypothesis. Although the large data sets generated by genomics technologies do permit data-driven research, these studies are typically designed to help sharpen a broad hypothesis, not to resolve it (7). Once the project has a defined question or hypothesis, the outcome goals and deliverables can be established. These goals will guide the course that the analysis workflow should follow. Because the analysis could likely pursue multiple paths, establishing a clear objective and a defined endpoint early will help ensure that the project is completed in a timely fashion and that resources are applied most efficiently. Depending on the purpose and nature of the study, deliverables may include publications, presentations, regulatory/response action, or policy changes. Knowledge translation in the form of public data sharing should be treated as a high priority to enhance global data repository resources and analysis tool development.

Computational infrastructure resources.

The large amount of data generated by HTS and the processing required to perform comparative genomics demand a substantial computing infrastructure and sophisticated software. Before undertaking an HTS project, careful consideration should be given to the computing requirements and to the qualified experts (i.e., computational biologists and bioinformaticians) needed to complete the data analysis. For example, a single Illumina MiSeq run can produce up to 15 gigabases of sequence, and many contemporary large-scale projects require multiple MiSeq runs or the use of higher-capacity platforms. Consequently, analysis of such output data sets can take a significant amount of time and resources. Although some of the most rudimentary analyses of a single genome can be performed on modern desktop computers with the proper software and configuration, generating accurate and timely results for hundreds of simultaneously analyzed genomes requires considerably more computational “muscle.” A standard desktop computer may have only 8 gigabytes (GB) of memory, 4 processing cores, and 1 terabyte (TB) of storage space, whereas high-end machines in large data centers are likely to have hundreds of gigabytes of memory, as many as 64 processing cores per machine, and access to hundreds or thousands of terabytes of storage. These high-end machines can be linked together to form high-performance computing clusters capable of simultaneously analyzing hundreds or thousands of genomes. Computing on this scale typically has its own administrator and must be housed in a data center with redundant, uninterruptible power supplies and industrial-scale cooling systems. Although such large-scale computing clusters may not be required for small or even some medium-size HTS projects, the HTS project planning stage should include advance estimates of the computational resources required.
If the requisite computing infrastructure is not available locally or as a shared resource within an institution, a popular alternative is commercial cloud computing services, in which large-scale computational resources are provided on demand for a fee.
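As a worked example of scaling from run output to sample numbers, the sketch below estimates how many genomes fit on one run at a chosen mean coverage, using the 15-gigabase MiSeq figure given above. The ~5 Mb genome size (typical of E. coli) and the 100× coverage target are assumptions for illustration; practical numbers per run will be lower once reads lost to quality filtering and uneven sample pooling are taken into account.

```python
def genomes_per_run(run_yield_bp: int, genome_size_bp: int, target_coverage: int) -> int:
    """Upper bound on genomes per run at a target mean depth of coverage.

    Mean coverage = total sequenced bases / genome size, so each genome needs
    genome_size_bp * target_coverage bases. Reads lost to QC, trimming, and
    uneven sample pooling are ignored, so practical numbers will be lower.
    """
    bases_per_genome = genome_size_bp * target_coverage
    return run_yield_bp // bases_per_genome


# Assumed inputs: 15 Gb run yield, ~5 Mb genome, hypothetical 100x coverage target.
print(genomes_per_run(15_000_000_000, 5_000_000, 100))  # 30
```

Halving the coverage target doubles the number of genomes per run, which is one of the main levers when balancing sequencing cost against downstream analysis quality.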

(i) Estimating computational resources.

Estimating computational resources should include attention to items such as physical memory of the machine (random access memory [RAM]) and processing power (central processing unit [CPU] cores and speed), as well as network bandwidth for large data transfers (e.g., transfer of data from the HTS instrument to its interim data storage location or final archive).

Computational resource comparisons are often made with respect to secondary processes such as reference mapping (the alignment/mapping of HTS sequence reads to a reference genome) or de novo assembly (the process of combining sequence reads to reconstruct the original genome without the guidance of a reference). Both processes are fully described in the Secondary Analysis section. While resource requirements vary with the task and the software chosen, they are often on the order of several gigabytes of memory and several hours per genome (8). For small numbers of genomes, secondary analyses can be performed sequentially on a single workstation or even a high-end laptop; however, large projects with many samples multiply these resource requirements. Thus, depending on the software's computational time requirements, the number of genomes to be analyzed, and the project deadlines, different software and/or upgraded computers may be required.
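The way per-genome requirements multiply with sample numbers can be estimated with simple arithmetic. The sketch below, a rough planning aid under stated assumptions, computes how many jobs fit on a machine at once (limited by whichever of cores or memory runs out first) and the resulting wall-clock time. The per-job figures (4 cores, 6 GB, 3 h) and the 256-GB server are hypothetical values in the ranges described in the text, and every genome is assumed to take the same time.

```python
import math


def concurrent_jobs(total_cores: int, total_mem_gb: float,
                    cores_per_job: int, mem_gb_per_job: float) -> int:
    """How many jobs fit at once; whichever resource runs out first sets the limit."""
    return min(total_cores // cores_per_job, int(total_mem_gb // mem_gb_per_job))


def wall_clock_hours(n_genomes: int, hours_per_genome: float, total_cores: int,
                     total_mem_gb: float, cores_per_job: int, mem_gb_per_job: float) -> float:
    """Rough wall-clock time if genomes run in equal-length batches of parallel jobs."""
    slots = concurrent_jobs(total_cores, total_mem_gb, cores_per_job, mem_gb_per_job)
    if slots < 1:
        raise ValueError("a single job does not fit on this machine")
    return math.ceil(n_genomes / slots) * hours_per_genome


# Hypothetical job: 4 cores, 6 GB of memory, 3 h per genome; 500 genomes.
desktop = wall_clock_hours(500, 3, total_cores=4, total_mem_gb=8, cores_per_job=4, mem_gb_per_job=6)
server = wall_clock_hours(500, 3, total_cores=64, total_mem_gb=256, cores_per_job=4, mem_gb_per_job=6)
print(desktop, server)  # 1500 96
```

In this example the server is core-limited (16 concurrent jobs) rather than memory-limited, so adding memory alone would not shorten the run; checking which resource binds is the point of such an estimate.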

Network bandwidth is another important consideration, particularly if cloud computing or offsite computing resources are used, or if additional data are required from external resources such as NCBI's Sequence Read Archive (SRA) (9). Hence, the time needed to transfer these data should be taken into account before initiating an HTS project. Gigabit network cards are affordable, and comparable Internet speeds are increasingly available from most service providers; such speeds are adequate for timely transfers of HTS data.
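Transfer time can be estimated in advance from data volume and link speed. The sketch below converts decimal gigabytes to bits and divides by the effective bandwidth; the 70% efficiency default and the 500-GB archive size are assumptions for illustration, and real throughput should be measured on the network actually used.

```python
def transfer_hours(data_gb: float, link_gbit_s: float, efficiency: float = 0.7) -> float:
    """Estimate hours to move data_gb (decimal gigabytes) over a link of link_gbit_s gigabits/s.

    efficiency is an assumed fraction of the nominal speed actually achieved
    (protocol overhead, shared links, disk limits); measure it on your own network.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_gb * 8e9  # 1 GB = 8 x 10^9 bits
    seconds = bits / (link_gbit_s * 1e9 * efficiency)
    return seconds / 3600


# Hypothetical 500 GB project archive over a gigabit link vs a 100 Mbit/s link.
for speed in (1.0, 0.1):
    print(f"{speed} Gbit/s: {transfer_hours(500, speed):.1f} h")
```

A tenfold slower link means a tenfold longer transfer, which can turn an overnight upload into most of a day.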

(ii) Data storage requirements.

Storage requirements for an HTS project include both storage of the initial sequence reads and the space needed to perform data analysis. Although storage is relatively inexpensive, with most standard hard drives capable of storing 1 TB or more, the large size of raw sequence data files, as well as the incorporation of publicly available sequence read data into many analysis pipelines, can take up significant storage space. Common file formats used to store sequence reads include FASTQ (10), BAM (11), and the SRA (12) format. These formats store both the individual bases of each sequence read (A, C, G, T) and a Phred quality score encoding the probability of an error in each base (13), often in a compressed form. The sequence reads for a single Escherichia coli genome stored in FASTQ format may require on the order of several hundred megabytes. Hence, permanent raw data storage requirements must be scaled accordingly for larger numbers of bacterial genomes.
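The Phred score relates to the base-call error probability P by Q = −10 log10(P), so Q20 corresponds to a 1% chance of error and Q30 to 0.1%. In FASTQ files, each score is stored as a single ASCII character. The sketch below decodes one made-up record, assuming the Phred+33 offset used by Sanger and Illumina 1.8+ FASTQ files (some older Illumina data used an offset of 64).

```python
def phred_to_error_prob(q: int) -> float:
    """Phred quality Q relates to error probability P by Q = -10 * log10(P)."""
    return 10 ** (-q / 10)


def decode_qualities(quality_line: str, offset: int = 33) -> list:
    """Convert a FASTQ quality string to integer Phred scores.

    offset=33 assumes the Sanger/Illumina 1.8+ encoding; some older
    Illumina data used an offset of 64.
    """
    return [ord(ch) - offset for ch in quality_line.rstrip("\n")]


# A single made-up FASTQ record: header, bases, separator, qualities.
record = ["@read_1", "ACGT", "+", "II5#"]
scores = decode_qualities(record[3])
print(scores)  # [40, 40, 20, 2]
print([phred_to_error_prob(q) for q in scores])
```

Because each base carries one quality character, uncompressed FASTQ needs roughly two bytes per sequenced base plus header lines, which is why compression matters for raw read storage.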

Estimating the storage requirements for data analysis is even more challenging owing to the numerous analytical possibilities and the large temporary interim files generated. These analysis steps often produce multiple redundant copies of the compressed reads along with large internal temporary files, expanding the initial storage requirements severalfold. Although many of these large temporary files can eventually be deleted, maintaining these copies over the course of an investigation may be desirable for troubleshooting and validation of the results. When considering the tens, hundreds, or thousands of genomes to be processed in parallel, for example, when generating large-scale whole-genome phylogenies, one quickly realizes the impact of HTS data volume on data storage and on the computing resources and qualified personnel required to manage it.
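A first-pass storage budget can combine the raw read volume with an assumed multiplier for interim files. In the sketch below, the 0.3 GB per genome (in line with "several hundred megabytes" per E. coli genome) and the threefold interim multiplier are hypothetical planning values, not measurements; actual ratios depend heavily on the pipeline.

```python
def project_storage_gb(n_genomes: int, raw_gb_per_genome: float,
                       interim_multiplier: float, keep_interim: bool = True) -> float:
    """Rough storage projection: raw reads plus retained interim analysis files.

    interim_multiplier is an assumed ratio of interim-file volume to raw-read
    volume (the text describes interim files expanding needs severalfold).
    """
    raw = n_genomes * raw_gb_per_genome
    interim = raw * interim_multiplier if keep_interim else 0.0
    return raw + interim


# Hypothetical: 1,000 genomes at ~0.3 GB of compressed reads each, 3x interim data.
print(project_storage_gb(1000, 0.3, 3))
print(project_storage_gb(1000, 0.3, 3, keep_interim=False))
```

Comparing the two figures shows how much space is tied up by keeping interim files for troubleshooting, which helps decide how long to retain them.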

(iii) Cloud-based computing.

Cloud-based analysis environments, where computational resources are provided by large-scale commercial data centers, have become increasingly commonplace and can provide high-performance computing on demand. Cloud computing can be divided into three service models: Infrastructure as a Service (IaaS), which provides physical computing resources (e.g., 40 CPU cores and 160 GB of memory) and complete control over the operating system and installed software; Platform as a Service (PaaS), which provides a preinstalled operating system and suite of standard software; and Software as a Service (SaaS), which provides access to specific software applications through a common interface such as a Web browser. Amazon Web Services (Amazon.com Inc., Seattle, WA, USA), Google Cloud Platform (Google, Mountain View, CA, USA), and Microsoft Azure (Microsoft, Redmond, WA, USA), shown in Table 3, provide a mixture of IaaS and PaaS cloud services and have been used for large-scale bioinformatics analysis (14–16). However, setting up and configuring a cloud-based HTS analysis environment may still require considerable time and expertise. SaaS providers, such as Illumina's BaseSpace (San Diego, CA, USA), add value by removing the need to set up and maintain HTS computing environments. For those lacking the resources or time to build a local HTS data analysis environment, SaaS may be the preferred option, provided that the requisite analysis software is available to achieve project goals.

TABLE 3.

List of bioinformatics analysis resources

| Type | Name | Cost | Comments |
|---|---|---|---|
| Cloud services (IaaS/PaaS) | Amazon Web Services (Amazon.com Inc., Seattle, WA, USA) | Commercial | Commercial cloud environments providing resources to construct customized high-performance computing environments; acts as a base from which additional software (e.g., Galaxy) can be utilized |
| | Microsoft Azure (Microsoft, Redmond, WA, USA) | Commercial | As above |
| | Google Cloud Platform (Google, Mountain View, CA, USA) | Commercial | As above |
| Cloud services (SaaS) | Illumina BaseSpace (San Diego, CA, USA) | Commercial | Commercial cloud-based bioinformatics analysis environments associated with different sequencing instruments; provides analysis tools and data management fine-tuned for each sequencing instrument; often integrates free and open-source bioinformatics tools described in this review (e.g., FastQC for quality control of sequence reads) |
| | Thermo Fisher Cloud (South San Francisco, CA, USA) | Commercial | As above |
| | Metrichor (Oxford, UK) | Commercial | As above |
| | DNAnexus (Mountain View, CA, USA) | Commercial | Cloud-based bioinformatics environment not specifically tied to any sequencing platform |
| Web services | Galaxy (24) | Free | Free and open-source bioinformatics analysis environment available at https://galaxyproject.org/; private instances can be installed on local hardware or within a cloud-based environment |
| | RAST (29) | Free | Web service focused on genome annotation; available at http://rast.nmpdr.org/ |
| | Center for Genomic Epidemiology (30–32) | Free | Available at http://www.genomicepidemiology.org/; provides access to free tools related to genomic epidemiology (e.g., genome sequence typing or construction of phylogenetic trees) |
| Desktop based | CLC Genomics Workbench (CLC Bio, Aarhus, Denmark) | Commercial | Commercial desktop-based bioinformatics environments; may also provide support for integration with high-performance computing environments; often integrates existing free and open-source bioinformatics tools (e.g., Velvet for de novo assembly) |
| | BioNumerics (Applied Maths, Sint-Martens-Latem, Belgium) | Commercial | As above |
| | Ridom SeqSphere+ (Ridom GmbH, Münster, Germany) | Commercial | As above |

For any cloud-based solution, data privacy and security become a consideration. In the United States, the Health Insurance Portability and Accountability Act (HIPAA) defines a set of standards for the protection and security of electronic health information (http://www.hhs.gov/hipaa/). In particular, the HIPAA Privacy Rule establishes standards for the use of “protected health information” (i.e., individually identifiable health information) held by “covered entities” (e.g., health care providers) (17). Interest in using cloud computing within health services has driven the development of HIPAA-compliant and other privacy-compliant cloud systems, which often require technical measures such as well-defined access controls, data encryption, and auditing (18). Most cloud providers publish their privacy and security policies, and interested readers are encouraged to review these policies for additional information.

While HIPAA is concerned with the protection of personally identifiable information, such as clinical records, there are fewer restrictions on the use or disclosure of deidentified health information (17). DNA has previously been excluded from being regarded as personally identifiable (17), although this is increasingly being called into question for human-derived data, from which identifiable information may be deduced in certain circumstances (19). As reported in “Pathogen Genomics into Practice” from the PHG Foundation (20), this risk of personal identifiability is much lower for microbial HTS data generated from isolated microbial cultures, unless contaminating human DNA has (unusually) been carried over. HTS sequences from uncultured samples sourced from humans (i.e., metagenomics) are perceived as higher risk owing to the presence of human genomic information; such human-derived data can and should be removed in the primary data processing stage. Metadata associated with clinical samples (e.g., description of isolate source) carry the highest risk, as they often include personally identifiable information.
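Removing human-derived reads typically relies on an upstream step that identifies them (for example, by aligning reads against a human reference genome); that step is not shown here. Assuming it has already produced a set of flagged read IDs, the sketch below shows only the filtering itself on 4-line FASTQ records. The records and IDs are hypothetical.

```python
def filter_fastq(lines: list, exclude_ids: set) -> list:
    """Drop 4-line FASTQ records whose read ID is in exclude_ids.

    The read ID is taken as the first whitespace-delimited token of the
    header after '@'. Assumes well-formed records without line wrapping.
    """
    if len(lines) % 4 != 0:
        raise ValueError("expected a multiple of 4 lines (one record per 4 lines)")
    kept = []
    for i in range(0, len(lines), 4):
        record = lines[i:i + 4]
        if not record[0].startswith("@"):
            raise ValueError(f"malformed header at line {i + 1}: {record[0]!r}")
        read_id = record[0][1:].split()[0]
        if read_id not in exclude_ids:
            kept.extend(record)
    return kept


# Hypothetical reads; "r1" stands in for a read flagged as human by an upstream screen.
fastq = ["@r1 sample=S001", "ACGT", "+", "IIII",
         "@r2 sample=S001", "GGCC", "+", "IIII"]
human_ids = {"r1"}
print(filter_fastq(fastq, human_ids))
```

Filtering before the data leave the local environment means that any copy shared with a cloud service or public archive never contains the flagged reads.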

Thus, for the use of cloud computing services, or more broadly for sharing data into the public archives, a clear definition of what constitutes personally identifiable information should be established in advance. For privacy compliance purposes, only deidentified information (e.g., HTS data) should be shared with and stored within cloud services where possible, with more sensitive information kept separate. The GenomeTrakr network in the United States addresses this challenge by segregating the HTS data from the sensitive metadata. In that system, only HTS data from microbial cultures and a minimal set of metadata are deposited in public archives to facilitate efficient monitoring of foodborne pathogens nationally and globally, while the more sensitive information is kept confidential (21). However, even if no identifiable information is stored within cloud services, plans for data control, security, and accountability in the event of a cloud service failure should be made in advance (22).
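The segregation of shareable and sensitive metadata described above can be enforced at the point of export. The sketch below splits each sample record using an allow-list, so that any field not explicitly approved for sharing stays private by default. The field names are hypothetical and are not the GenomeTrakr field set.

```python
# Hypothetical allow-list of fields considered safe to share publicly;
# an illustration only, not the GenomeTrakr field set.
PUBLIC_FIELDS = {"sample_id", "organism", "collection_year", "country", "isolation_source_category"}


def split_metadata(record: dict) -> tuple:
    """Split one sample record into (public, private) dictionaries.

    Anything not on the allow-list stays private by default; sample_id is
    kept in both so the two parts can be rejoined internally.
    """
    public = {k: v for k, v in record.items() if k in PUBLIC_FIELDS}
    private = {k: v for k, v in record.items() if k not in PUBLIC_FIELDS}
    private["sample_id"] = record["sample_id"]
    return public, private


# Made-up record for illustration.
record = {
    "sample_id": "S001",
    "organism": "Salmonella enterica",
    "collection_year": 2016,
    "country": "USA",
    "isolation_source_category": "clinical",
    "patient_age": 54,
    "hospital_ward": "ICU-2",
}
public, private = split_metadata(record)
print(sorted(public))
print(sorted(private))
```

An allow-list is used rather than a list of fields to remove, so that a new sensitive field added to the database later is not shared by accident.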

Software and workflow management.

Analysis of HTS data requires the execution of a large collection of software through a series of stages, called workflows or pipelines, before the final result is produced and interpretation can begin. The individual software components at each analysis stage are available from a variety of sources, such as free and open-source downloadable packages, Web services, or commercial software. Organizing these software components into a data analysis workflow can be challenging. For example, the output of one program is often needed as input to the next step but may not conform to the expected format; thus, these workflows require appropriate data transformation and management. Software to assist in this process has been developed, spanning a spectrum from generic scientific workflow managers to extremely customized data analysis pipelines. As part of the HTS planning stage, these software solutions should be evaluated with the desired results of the project in mind, along with the cost, including the expertise needed to set up and maintain any software selected. A number of available software options are described below and also shown in Table 3. Readers are encouraged to refer to additional reviews (23) or the software-specific citations for further details.
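At their core, workflow managers run steps in dependency order and pass each step's output to the steps that need it. The toy runner below illustrates only that idea using Python's standard-library `graphlib` (Python 3.9+); the step names and placeholder functions are hypothetical, and real workflow managers add job scheduling, resumption after failure, and logging.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def run_workflow(steps: dict) -> dict:
    """Run steps in dependency order, passing upstream outputs to each step.

    steps maps a step name to (set_of_dependency_names, function); each
    function receives a dict {dependency_name: output} and returns its output.
    """
    graph = {name: deps for name, (deps, _) in steps.items()}
    outputs = {}
    for name in TopologicalSorter(graph).static_order():
        deps, func = steps[name]
        outputs[name] = func({d: outputs[d] for d in deps})
    return outputs


# Hypothetical placeholder steps; real ones would call QC, trimming,
# assembly, and annotation software and return file paths.
steps = {
    "qc": (set(), lambda up: "S001_qc_report.txt"),
    "trim": ({"qc"}, lambda up: "S001.trimmed.fastq"),
    "assemble": ({"trim"}, lambda up: f"contigs({up['trim']})"),
    # A small transformation step adapting one tool's output for the next tool.
    "to_fasta": ({"assemble"}, lambda up: f"fasta({up['assemble']})"),
    "annotate": ({"to_fasta"}, lambda up: f"annotation({up['to_fasta']})"),
}
print(run_workflow(steps)["annotate"])  # annotation(fasta(contigs(S001.trimmed.fastq)))
```

Declaring dependencies explicitly, rather than hard-coding the order, makes it straightforward to insert a format-conversion step between two tools whose inputs and outputs do not match.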

Galaxy (24) is a popular Web-based bioinformatics data and workflow management platform. Galaxy provides a large collection of data analysis and statistical software as well as data manipulation tools that can be executed through a standard Web browser. Software and tools required for the analysis can be linked together within Galaxy to create automated workflows. The customized workflow can subsequently be configured to run multiple data sets in parallel using high-performance computing environments. Many workflows are publicly available, making it easier for novice Galaxy users to run standard analysis pipelines without having to create complex workflows themselves. Tools, software, and workflows are continually being added by a large community of bioinformaticians and software developers through the Galaxy ToolShed (25). Galaxy is publicly available online (https://usegalaxy.org/) but may not provide the required storage and rapid processing time for large-scale data analysis or offer requisite data privacy. Galaxy is free, open-source software allowing anyone to download and install it on a local computing environment, be it a desktop/laptop or high-performance computing cluster. Unfortunately, the setup and maintenance of such an environment require considerable expertise well beyond the skill set and interest of most nonbioinformaticians. CloudMan (26) provides a method to alleviate some of the setup and maintenance difficulties by simplifying the process of deploying Galaxy within a cloud environment and has been used successfully, for example, by the University of Melbourne researchers to develop the Genomics Virtual Laboratory (27). However, the varying quality of documentation and support for individual tools may leave Galaxy less suited for clinical applications. 
Commercially supported Galaxy environments, such as Globus Genomics (28) and the BioTeam Galaxy Appliance (BioTeam Inc., Middleton, MA, USA), are available and attempt to address some of these shortcomings for a cost.

Alternatively, many cloud-based SaaS platforms have been developed with fine-tuned pipelines for HTS data analysis. This software requires no installation or local computational infrastructure and is commonly used through a standard Web browser. Illumina provides BaseSpace, while Thermo Fisher (South San Francisco, CA, USA) provides Thermo Fisher Cloud. Pacific Biosciences (PacBio; Menlo Park, CA, USA) provides its single-molecule real-time (SMRT) analysis software as a downloadable virtual machine image that can be executed locally or in cloud-based environments, and it provides additional analysis support through partner companies. Oxford Nanopore (Oxford, United Kingdom) provides cloud-based analysis for its nanopore sequencers, such as the portable MinION and the high-throughput PromethION, via the company Metrichor. In addition to sequencer-specific cloud-based analysis platforms, companies such as DNAnexus (Mountain View, CA, USA) can provide alternative options.

For many SaaS platforms, HTS data can be directly uploaded to the cloud either via a Web interface or directly from compatible sequencing platforms. Once uploaded, a variety of software applications can be executed on these data for tasks such as de novo assembly or variant identification. These software applications may be linked together to form complex scientific workflows. Unfortunately, not all data analysis types (such as constructing whole-genome phylogenies) or pipeline operating procedures are supported. Alternative, user-supplied solutions may be required. Additionally, many of these solutions are provided only commercially and associated costs may be prohibitive.

As an alternative to Web-based cloud software, a variety of commercial desktop applications have been developed. Unlike cloud-based software, desktop applications are installed on a specific local machine and are operated through a point-and-click graphical user interface (GUI). Data analysis can be performed locally, or data can be submitted to a preconfigured high-performance computing cluster for more complex analysis procedures. The list of desktop-based bioinformatics software for analysis of HTS data is large and growing; popular options include CLC Genomics Workbench (CLC Bio, Aarhus, Denmark), BioNumerics (Applied Maths, Sint-Martens-Latem, Belgium), and Ridom SeqSphere+ (Ridom GmbH, Münster, Germany). Each application provides built-in analysis modules for standard analysis types, such as de novo assembly, and more advanced analysis modules may also be available. In particular, BioNumerics and Ridom SeqSphere+ have both been developing whole-genome and core genome multilocus sequence typing (MLST) modules (as wgMLST and cgMLST, respectively), thereby enabling rapid phylogenomic comparisons of many genomes. However, the cost of some of these applications may be prohibitive for smaller-scale HTS projects or for some investigators.
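Gene-by-gene approaches such as cgMLST and wgMLST compare isolates by their allele calls at many loci, and a common pairwise measure is the number of loci with differing alleles. The sketch below computes that count for two hypothetical profiles. Skipping loci that are missing in either isolate is one convention among several (some tools count missing calls as differences), so it is an assumption here, not the behavior of any particular product.

```python
from typing import Dict, Optional


def allele_distance(a: Dict[str, Optional[int]], b: Dict[str, Optional[int]]) -> int:
    """Number of loci with different allele calls between two profiles.

    Loci absent or uncalled (None) in either profile are skipped, one common
    convention; some tools instead count missing calls as differences.
    """
    shared = [locus for locus in a
              if locus in b and a[locus] is not None and b[locus] is not None]
    return sum(1 for locus in shared if a[locus] != b[locus])


# Hypothetical 5-locus profiles (real cgMLST schemes use hundreds to thousands of loci).
iso1 = {"locus1": 1, "locus2": 4, "locus3": 7, "locus4": 2, "locus5": 9}
iso2 = {"locus1": 1, "locus2": 5, "locus3": 7, "locus4": None, "locus5": 3}
print(allele_distance(iso1, iso2))  # 2
```

A matrix of such pairwise distances across all isolates is the typical input for clustering or tree-building in gene-by-gene comparisons.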

Another set of software includes the variety of free bioinformatics Web services. These are operated using a standard Web browser, with data analysis performed on remote computing infrastructure. However, unlike generic SaaS providers, these services are often focused on a particular analysis type, such as the RAST server (29) for genome annotation, and provide minimal data management capabilities. The Center for Genomic Epidemiology provides a large collection of free Web services for analysis types such as in silico MLST (30), identification of antimicrobial resistance genes (31), and construction of whole-genome phylogenies (32). These services can provide a rapid means of data analysis; however, users have minimal control over the operating procedures of each pipeline, caps may be placed on the amount of data that can be uploaded, and no guarantee is given as to when results will be completed. Data are generally processed on demand, and analyses and results are typically retained only for a short time.
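In silico MLST ultimately reduces to a table lookup: once an allele number has been called at each scheme locus, the combination is matched against known profiles to assign a sequence type (ST). The sketch below uses the seven housekeeping locus names of the E. coli Achtman scheme, but the allele numbers and ST labels are hypothetical placeholders, and the allele-calling step that precedes the lookup is not shown.

```python
# Seven housekeeping loci of the E. coli Achtman MLST scheme; the allele
# numbers and ST labels below are hypothetical placeholders, not real profiles.
LOCI = ("adk", "fumC", "gyrB", "icd", "mdh", "purA", "recA")
PROFILES = {
    (1, 2, 3, 4, 5, 6, 7): "ST-hypothetical-1",
    (1, 2, 3, 4, 5, 6, 8): "ST-hypothetical-2",
}


def assign_st(allele_calls: dict, profiles: dict = PROFILES, loci: tuple = LOCI):
    """Return the ST for an exact allele-profile match, or None.

    None covers both incomplete calls (a locus missing) and novel
    combinations that are not yet in the profile table.
    """
    if any(locus not in allele_calls for locus in loci):
        return None
    return profiles.get(tuple(allele_calls[locus] for locus in loci))


calls = {"adk": 1, "fumC": 2, "gyrB": 3, "icd": 4, "mdh": 5, "purA": 6, "recA": 8}
print(assign_st(calls))  # ST-hypothetical-2
```

Distinguishing "incomplete" from "novel" results (both return None here) is a refinement a real pipeline would likely need, because a novel profile may be worth submitting to the scheme curators.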

(i) Data analysis reproducibility.

Reproducibility of analysis results is an important aspect of scientific research (33); however, reproducibility in the data sciences can be challenging owing to the use of complex analysis workflows and incomplete recording of the details and software necessary to replicate a study (34, 35). The use of HTS data for infectious disease analysis is a growing field, with a large collection of data analysis software and pipelines actively under development. The previously mentioned workflow managers and analysis software are useful, but no single software package can handle all data types and all analyses of interest to the typical research laboratory (although efforts are being made in this area; see, for example, http://irida.ca). Thus, it is common to analyze HTS data using a variety of software from multiple sources, desktop based or Web based, commercial or open source, before a final result can be generated (23). Data transformations between software are also common, often requiring custom-written scripts. Reference databases used in many types of analysis (e.g., genome annotation) change over time, and software is continually revised. This complexity makes analyses difficult to repeat and creates the potential for introducing errors and propagating them through to the final result. Differences in the choice of bioinformatics software, databases, and analysis strategies for the same data sets have been shown to lead to differences in the final results and potential misinterpretations (36, 37). At minimum, a thorough record of all software (and versions), databases, data transformations, and software operating parameters used to generate the final results is necessary to identify errors and to assess analysis reproducibility.
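As a minimal sketch of such record keeping, the Python below builds a provenance manifest for one analysis: SHA-256 checksums of the input files plus the tool versions, database versions, and parameters that the analyst supplies. The function and field names are hypothetical rather than a community standard, and tool versions are entered by hand rather than queried from the software.

```python
import hashlib
import json
import platform
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 checksum of a file, read in 1-MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(inputs, tools, databases, parameters) -> dict:
    """Assemble a provenance record for one analysis run (hypothetical schema).

    inputs:     input file paths; checksums make silent data changes detectable
    tools:      tool name -> version string, as recorded by the analyst
    databases:  database name -> version or download date
    parameters: tool name -> command-line options actually used
    """
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "python": platform.python_version(),
        "inputs": {str(p): sha256_of(Path(p)) for p in inputs},
        "tools": tools,
        "databases": databases,
        "parameters": parameters,
    }
```

Writing the dictionary with `json.dump` next to the results gives a plain-text record that can be compared line by line between two runs of the same analysis.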

Laboratory resources: choosing an HTS platform.

The term “laboratory” in this review refers to the wet laboratory component of HTS projects, that is, the preanalytical steps, including sample processing and data generation. Sample processing includes thawing archived strains and/or isolating the bacteria through culturing, followed by DNA extraction, which most molecular biology or microbiology laboratories are well equipped to perform. Some laboratories may already have sequencers or ready access to HTS platforms, whereas other projects may need to incur the cost of purchasing such equipment or of sending samples to a third-party sequencing service center.

The HTS field is fluid, with regular updates and technology developments; thus, we present general terminology and considerations for those embarking on HTS projects and refer readers to several excellent reviews of the currently available HTS platforms (38–41). Additionally, the “NGS Field Guide” (first published in 2011 [42]) is now updated online and provides a comprehensive comparison of HTS platforms (http://www.molecularecologist.com/next-gen-fieldguide-2016/). Beyond the constraints of cost and accessibility, selecting the optimal HTS platform(s) to meet the project outcome goals should take four additional key features into consideration: (i) read length, (ii) read type, (iii) error types and rates, and (iv) coverage and run output. It should be noted that these features are not necessarily exclusive or fixed; modifications can be made to improve affordability and to meet the project needs within the constraints of one platform or by combining technologies.

(i) Read length.

Read length is a general but distinguishing feature of the currently available platforms, with short-read sequencers producing reads between 75 and 1,000 bp and long-read sequencers producing reads from 1,000 to >30,000 bp; however, by the time that this review is published, these numbers may have changed. The most common short-read HTS platforms include the HiSeq, NextSeq, and MiSeq platforms from Illumina and the Ion PGM and S5 platforms from Thermo Fisher (South San Francisco, CA, USA). The longer-read, single-molecule sequencing technologies are the Sequel and RSII systems from Pacific Biosciences and the MinION, PromethION, and SmidgION by Oxford Nanopore Technologies. The outcome goals and biology of the microbes being sequenced will dictate the read lengths required to provide accurate data to traverse repetitive DNA elements and unambiguously resolve the order and orientation of genomic sequences flanked by such repetitive elements. If only short-read sequencers are available, modifying the read type (see below) may be one avenue to traverse low-complexity regions.

(ii) Read type.

Once the sequencing platform is chosen, there are additional options for how the template libraries are prepared and/or how the instrument is run to optimize the data toward the project goals. With respect to the ubiquitous Illumina technology (as a short-read sequencer example), libraries can be prepared and indexed as single-end (SE) reads, paired-end (PE) reads, or mate-pairs (MP). The choice of library determines how the DNA is fragmented or sheared. The sequencing kits have a “cycle” number, which is the number of times that the instrument will add a nucleotide to the DNA fragment copy. For example, a “600-cycle kit” could theoretically synthesize sequences up to 600 bp long in massively parallel clusters. If an SE library is chosen, the user would set the instrument parameters to sequence a 600-bp fragment in one direction only. A PE library would reduce the individual read lengths achievable with the same sequencing kit but would read the same template library fragment from both directions (similar in concept to the forward- and reverse-strand sequencing reads on the Sanger platform). For example, if the DNA fragments are 1,000 bp in length (insert size), a PE run could generate 300-bp reads from either end of the 1,000-bp library fragment, leaving an intervening gap (inner distance) of 400 bp that remains unsequenced. The known inner distance between the PE reads can be applied algorithmically to traverse repeat regions larger than the single-read length alone. MP libraries are also known as “long-insert paired-end” libraries, as procedural differences in the library preparation utilize much longer DNA fragments and leave a greater inner distance between the two PE sequences, enabling one to effectively traverse larger repetitive regions in the genome.
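The paired-end arithmetic in the paragraph above can be expressed as a short helper. It assumes that a kit's cycles are split evenly between the two reads (a 600-cycle kit run as 2 × 300 bp) and ignores index-read cycles; the function name is illustrative.

```python
def paired_end_layout(insert_size: int, kit_cycles: int) -> dict:
    """Read length and inner distance for a PE run that splits cycles evenly."""
    read_length = kit_cycles // 2
    inner_distance = insert_size - 2 * read_length
    return {
        "read_length": read_length,
        "inner_distance": inner_distance,  # a negative value means the mates overlap
        "reads_overlap": inner_distance < 0,
    }


print(paired_end_layout(insert_size=1000, kit_cycles=600))
# {'read_length': 300, 'inner_distance': 400, 'reads_overlap': False}
```

With a 500-bp insert and the same kit, the inner distance becomes -100 bp: the two reads overlap, which is useful for read merging but adds nothing for spanning repeats.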

Knowing some genome biology for the microbes being sequenced can aid greatly in the selection and design of the HTS library. For example, monomorphic organisms such as Bacillus anthracis or Mycobacterium tuberculosis containing small-scale variations may be suitably sequenced using short single reads. Organisms with highly promiscuous genomes and those with multiple internally repeated sequences (e.g., ribosomal operons and insertion sequence [IS] elements) and foreign acquired DNA (e.g., prophage and genomic islands) may require multiple data types—PE, MP, and/or long-read sequence data—from a complementary platform in order to suitably assemble the genome.

The long-read sequencing technologies are evolving quickly because fast, accurate data that can traverse repetitive or low-complexity genomic regions are in high demand (43, 44). Several library approaches can be used with Pacific Biosciences single-molecule real-time (SMRT) technology to produce continuous long reads (CLR; 1,000 to 25,000 bp) and shorter, more accurate circular consensus reads (CCS; 500 to 1,000 bp). An optimal approach is to combine the longer but more error-prone reads with the shorter but more accurate, higher-coverage data from the same platform (45) or another platform. In another useful development, the Oxford Nanopore system offers longer reads with new real-time flexibility options, such as resequencing regions for higher coverage or stopping the sequencer midrun to focus on specific microorganisms in a metagenomic sample (46). Illumina, meanwhile, also offers a library preparation kit to produce synthetic long reads (Moleculo) that have been shown to improve resolution of low-complexity genomic regions (47).

(iii) Error types and rates.

Error types and rates vary between platform technologies. Short-read technologies such as Illumina have lower error rates, more comparable to those of traditional Sanger sequencing, at ∼2%. Despite this low overall error rate, Illumina sequences are prone to single nucleotide substitutions (48–50). Substitution errors can usually be overcome with sufficient coverage depth (essentially, sequencing redundancy at each base) (51) and an adequate number of replicates to identify true variants between genomes (52). In contrast, ion-measuring sequencers remain prone to insertions/deletions (indels) owing to base-calling errors in homopolymer (homonucleotide) regions. The ion-based sequencers also have lower error rates (∼4%) than the long reads produced by platforms such as Pacific Biosciences and Oxford Nanopore, which are more prone to deletion errors (∼18%). However, as mentioned above, options within the long-read platforms have been developed to improve their consensus base call accuracy. In all cases, the key to overcoming most platform error types is ensuring sufficient depth of read coverage.
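To illustrate why depth helps with random substitution errors, the sketch below computes an upper bound on the probability that a simple majority vote across reads miscalls a base. It assumes that errors occur independently between reads, which holds poorly for systematic errors such as indels in homopolymer regions, and it uses the ∼2% figure quoted above purely as an example input.

```python
from math import ceil, comb


def majority_error_bound(error_rate: float, depth: int) -> float:
    """Upper bound on the chance that a majority-vote consensus is wrong at one site.

    Assumes each read's base call is wrong independently with probability
    error_rate (a binomial model). Every outcome in which at least half of
    the reads are wrong is counted, even though those errors could be spread
    across different alternative bases, so the true miscall rate is lower.
    """
    k_min = ceil(depth / 2)
    return sum(
        comb(depth, k) * error_rate**k * (1 - error_rate) ** (depth - k)
        for k in range(k_min, depth + 1)
    )


for depth in (1, 5, 10, 30):
    print(depth, f"{majority_error_bound(0.02, depth):.2e}")
```

The bound falls by many orders of magnitude as depth increases, which is the intuition behind relying on coverage to overcome random substitution errors.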

(iv) Coverage.

Based on the experimental design and outcome goals, the depth of coverage and quality of the resultant assembled genome(s) should be a major focus when choosing a sequencing platform. The term “coverage” is often used interchangeably with “depth” or “sequence redundancy” and refers to the number of times that a base is represented in the raw sequencing data (51). Because the reads produced by the instrument are not evenly distributed across the genome, coverage is usually reported as an average (e.g., 10× coverage) and is used to plan in advance the number of samples placed simultaneously on a sequencing run. The theoretical average coverage (C) can be calculated with the Lander-Waterman equation as C = LN/G, where L is the length of the read, N is the number of reads, and G is the length of the genome in base pairs (53). Knowing that the reads will not be evenly distributed across the entire genome, it may be wise to overestimate the coverage required for each sample so that lower-coverage regions are still sufficient for downstream analyses such as variant calling (e.g., if a minimum of 50× coverage is deemed necessary for confident variant calling, plan for an expected average coverage of 75 to 90× per sample so that all regions meet the minimum requirement). All HTS platform vendors provide the theoretical run output information needed to calculate the number of genomes that can be combined on a run (i.e., multiplexed) once the desired coverage has been stipulated. Note that owing to wet lab inefficiencies and operational complexity, it is not always possible to achieve the theoretical run output stated in the vendor specification, so conservative estimates are recommended when configuring runs.
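The Lander-Waterman relationship and the conservative multiplexing estimate described above can be sketched as follows. N counts individual reads (each mate of a pair counts once); the function names, the 80% efficiency factor applied to the vendor's theoretical output, and the genome size and run output in the usage lines are illustrative assumptions, not specifications of any instrument.

```python
from math import ceil, floor


def average_coverage(read_length: int, n_reads: int, genome_size: int) -> float:
    """Lander-Waterman average coverage, C = L * N / G."""
    return read_length * n_reads / genome_size


def reads_needed(target_coverage: float, read_length: int, genome_size: int) -> int:
    """Invert C = L * N / G to get the reads required for a target coverage."""
    return ceil(target_coverage * genome_size / read_length)


def genomes_per_run(run_output_bp: float, genome_size: int,
                    target_coverage: float, efficiency: float = 0.8) -> int:
    """Genomes that fit on one run after discounting the theoretical output.

    efficiency is a hypothetical safety margin for wet lab inefficiencies.
    """
    usable_bp = run_output_bp * efficiency
    return floor(usable_bp / (genome_size * target_coverage))


# Placeholder inputs: 5-Mb genome, 250-bp reads, 90x target, 15-Gb run output.
print(reads_needed(90, 250, 5_000_000))              # 1800000
print(average_coverage(250, 1_800_000, 5_000_000))   # 90.0
print(genomes_per_run(15e9, 5_000_000, 90))          # 26
```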

SAMPLE PROCESSING AND DATA GENERATION

DNA Extraction and Template Assessment

Steps to avoid contamination, ongoing programs of staff competency training, and proactive method improvement procedures are considered good standards of practice. Similar to Sanger sequencing, the input template is often the cause of HTS failure. Poorly prepared samples rarely make good libraries for HTS. Quality monitoring and control in the wet lab workflow begin with quantification and assessment of extracted nucleic acid template quality (yield, purity, and integrity [size]).

Accurate quantitation is critical to successful HTS. Most library preparation protocols are very sensitive to DNA input concentration (inaccurate input can lead to poor library yields or smaller fragment sizes). Template concentrations should therefore be measured by two methods, such as absorbance (e.g., spectrophotometer or NanoDrop) and fluorescence (e.g., Qubit) systems. Fluorescence approaches (e.g., PicoGreen) are more precise than UV absorbance-based methods and therefore yield more accurate measures of template concentration. However, if the concentration measurements from the two approaches are grossly different, the sample is likely contaminated and will need to be cleaned up.

HTS is far more sensitive than Sanger sequencing to contaminants carried over in the templates. Impurities are problematic because they negatively impact many enzymatic stages of HTS library preparation; hence, all templates should be assessed for excess proteins, organics, and/or other enzyme inhibitors such as bile salts or carbohydrates (e.g., bacterial capsular slime), which is where absorbance measurements are beneficial. Template purity is assessed by calculating absorbance ratios, namely, A260/A280 (the absorbance at 260 nm divided by the absorbance at 280 nm) and A260/A230; lower ratios indicate that more contaminants are present. Low A260/A280 ratios (below 1.8) suggest the presence of contaminating protein, phenol, or surfactant micelles; nucleic acids that are not fully resuspended can also scatter light, resulting in low A260/A280 ratios. Elevated absorption at 230 nm is caused by contamination with particulates (e.g., silica particles), precipitates such as carryover of chaotropic salt crystals (e.g., guanidine thiocyanate, LiCl, or NaI), phenolate ions, solvents, and other organic compounds, which may also cause abnormal A260/A280 ratios. Although A260/A280 ratios lack sensitivity for protein contamination in nucleic acids, a DNA sample is considered sufficiently pure when an A230:A260:A280 ratio of at least 1:1.8:1 is achieved (54). Elevated A260/A280 ratios (above 2.1) usually indicate the presence of RNA; this can be tested by running the sample (∼1 μg) on an agarose gel. Protein or phenol contamination is indicated by A230/A260 ratios greater than 0.5. Additional RNase treatment after nucleic acid extraction and postextraction cleanup of templates may be required.
Lastly, although isolation of virtually intact high-molecular-weight genomic DNA (gDNA) is not essential for short-read HTS technologies (such as Illumina), it is crucial for longer-read platforms (e.g., PacBio and Oxford Nanopore). Hence, template integrity (size of extracted gDNA) should be qualitatively assessed by agarose gel electrophoresis or an equivalent system (e.g., Agilent TapeStation) before proceeding to HTS library generation. Although templates will appear as smears, the predominant DNA species should be located very high in the gel or digital image (close to the loading well), indicating high-molecular-weight (intact) template.
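The absorbance-ratio cut-offs quoted above (A260/A280 below 1.8 or above 2.1, and A230/A260 above 0.5) can be encoded as a simple screening helper. This is a minimal sketch: the function name and flag messages are illustrative, and a flag is a prompt for gel inspection or cleanup, not a diagnosis.

```python
def assess_purity(a230: float, a260: float, a280: float) -> list[str]:
    """Flag likely contaminants from absorbance readings using the text's cut-offs."""
    flags = []
    r260_280 = a260 / a280
    r230_260 = a230 / a260
    if r260_280 < 1.8:
        flags.append("low A260/A280: possible protein, phenol, or surfactant")
    elif r260_280 > 2.1:
        flags.append("high A260/A280: possible RNA; check on an agarose gel")
    if r230_260 > 0.5:
        flags.append("high A230/A260: possible protein, phenol, salt, or organic carryover")
    return flags


print(assess_purity(0.50, 1.00, 0.55))  # ratios 1.82 and 0.50: no flags
print(assess_purity(0.70, 1.00, 0.70))  # both ratios out of range
```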

HTS Library Preparation and Sequencing

As discussed in the Preparation section, the outcome goals will determine the sequencing data needed (i.e., read length, read type [SE, PE, MP], and average coverage), and the chosen HTS platform(s) will dictate the library preparation options available to generate those data. All HTS platform vendors and commercial library preparation kit vendors provide protocols, with procedural stopping points that offer opportunities for library quality monitoring and control. There are diverse library preparation methods, each with its own nuances. We do not comment on them in detail here, because such decisions depend not only on the project goals but also on the laboratory equipment available; instead, readers are referred to the proprietary protocols for their chosen library kit(s) and HTS platform(s). Users are urged to consider these protocols carefully in light of their own experience and training and to identify appropriate stopping points for controls and quality checks, even beyond those minimally recommended by the manufacturers.

PRIMARY ANALYSIS

For meaningful, confident biological inference and interpretation, all HTS users should implement robust quality assurance (QA) and quality control (QC) procedures, formalized in a quality management system (QMS) for reproducibility. QA specifies the laboratory operational measures taken to produce data of documented accuracy, whereas QC procedures are applied to demonstrate that the process is robust. QC processes are designed to immediately detect errors caused by, for example, HTS (the test system) failure, adverse environmental conditions, or operator error. In HTS, QA procedures are implemented to determine the quality of laboratory data (measured against internal and external quality control measures), as in proficiency panel comparisons or training, and to monitor the accuracy and precision of the method's performance over time. Although quality best practices for microbial genomics/forensics deploying HTS have lagged behind those of the clinical genetics field (5, 55), significant global efforts such as the Global Coalition for Regulatory Science Research (GCRSR) (56), the OIE Ad Hoc Working Group on High Throughput Sequencing and Bioinformatics and Computational Biology (HTS-BCG; Massimo Palmorini, personal communication), and the Global Microbial Identifier (GMI) (57) are under way, aiming to formalize such standards and quality metrics for infectious disease surveillance, food regulatory activities, and clinical diagnostics (58).

This section describes general quality practices for wet lab workflows and data generation for HTS. Quality practices for the analyses of resultant data are described in subsequent sections of the review.

The computational analysis of HTS sequence data can be conceptualized in primary, secondary, and tertiary stages. Primary HTS data analysis may be performed on-instrument (i.e., on the HTS sequencer) or directly after the data have been generated. On-instrument primary analysis output includes reports and visualizations of HTS run metrics that are proprietary to each HTS platform. These outputs summarize run characteristics for monitoring platform performance and assessing HTS data quality; some are provided even before all data are collected (e.g., cluster density on Illumina instruments). At minimum, metrics for a completed run should meet the performance specifications established by the HTS platform manufacturer. Ideally, any HTS run should come close to the instrument's expected specification for the numbers of raw (unprocessed) output reads and of on-instrument quality-filtered reads (e.g., the percentage of bases with a Q score of >30). Additional run performance metrics may include the density or number of read-generating templates, G+C content template bias, or first base read success.
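Q scores follow the Phred scale, Q = −10 log10(p), where p is the probability that a base call is wrong, so Q30 corresponds to a 1-in-1,000 error. The sketch below computes the percentage of bases at or above a threshold from an uncompressed, four-line-per-record FASTQ file with Phred+33 quality encoding (both assumptions); vendors commonly report this metric as %≥Q30, and the function names are illustrative.

```python
def phred_scores(quality_line: str, offset: int = 33) -> list[int]:
    """Decode a FASTQ quality string (Phred+33 by default) into integer Q scores."""
    return [ord(ch) - offset for ch in quality_line]


def error_probability(q: int) -> float:
    """Invert Q = -10 * log10(p): p = 10 ** (-Q / 10)."""
    return 10 ** (-q / 10)


def percent_at_or_above(fastq_path: str, threshold: int = 30) -> float:
    """Percentage of all base calls in a FASTQ file with Q >= threshold."""
    passing = total = 0
    with open(fastq_path) as handle:
        for line_number, line in enumerate(handle):
            if line_number % 4 == 3:  # the fourth line of each record holds qualities
                scores = phred_scores(line.rstrip())
                passing += sum(q >= threshold for q in scores)
                total += len(scores)
    return 100.0 * passing / total if total else 0.0
```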

HTS runs should be assessed not only to ascertain whether the sequencer performed and collected sound data but also to assess whether project requirements/expectations of the data will be met by the data generated (59). Assessment of the HTS read quality with respect to base call quality scores is but one important consideration. So, too, is the read signal intensity plotted over the read length: an expected decline over cumulative bases is observed for most HTS platforms, affecting the accuracy of individual base calls. Thus, base calling error rates are typically dependent on the length of read and where (within the read) the base error rate is measured. Regardless of the HTS application or platform, representative additional metrics that should be evaluated include depth of coverage, uniformity of coverage, and whether multiplexed libraries were well balanced or if particular genomic regions or sequences are under- or overrepresented.
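One of the checks listed above, whether multiplexed libraries were well balanced, can be sketched by comparing each sample's read count with an equal share of the run. The sample names, counts, and the 0.5 cut-off (half the expected share) in this example are hypothetical choices for illustration.

```python
def relative_shares(reads_per_sample: dict[str, int]) -> dict[str, float]:
    """Each sample's reads relative to an equal share of the run (1.0 = exactly equal)."""
    total = sum(reads_per_sample.values())
    expected = total / len(reads_per_sample)
    return {sample: count / expected for sample, count in reads_per_sample.items()}


def underrepresented(reads_per_sample: dict[str, int], min_ratio: float = 0.5) -> list[str]:
    """Samples below min_ratio of their expected share (hypothetical cut-off)."""
    shares = relative_shares(reads_per_sample)
    return sorted(sample for sample, ratio in shares.items() if ratio < min_ratio)


counts = {"S1": 1_000_000, "S2": 950_000, "S3": 250_000, "S4": 1_800_000}
print(underrepresented(counts))  # ['S3']
```

Samples flagged this way may fall short of the planned coverage and are candidates for resequencing or for rebalancing library pooling on the next run.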

All HTS platforms provide the metrics described above; however, third-party software has also been developed to assess raw HTS data before downstream analysis begins. These tools are important when sequencing run assessment metrics are unavailable or when applying standardized quality checks across a large and varied set of data, particularly when incorporating publicly available sequence read data. NCBI's SRA (12) provides a few common quality assessments, such as base-quality charts of the reads, but sequencer-specific quality metrics are missing, and quality standards may have been inconsistently applied before the data were deposited.

FastQC (http://www.bioinformatics.babraham.ac.uk/projects/fastqc/) is a popular open-source software package that can be used for a general overview of the sequence read data quality. FastQC produces a summary report consisting of a series of charts for aspects such as base quality and G+C content of the sequenced reads. The report is evaluated by FastQC and given a grade of “pass,” “warning,” or “failure” based on built-in criteria. Guidance for interpreting such reports is available on the FastQC website.

In addition to quality reports, cleaning of the reads may be performed to generate higher-quality read sets for more stringent downstream analyses. Cleaning of reads is accomplished by removing low-quality reads, masking (replacing low-quality bases with an “N” to represent an “undetermined” base), trimming low-quality ends of reads, and removing adaptors and other sequencing artifacts. Software for cleaning reads includes the FASTX-Toolkit (http://hannonlab.cshl.edu/fastx_toolkit/), Trimmomatic (60), and PEAT (61). The effectiveness of read cleaning methods has been studied, and the methods have been shown by some to have a positive impact on de novo assembly, reference mapping, and variant calling (62, 63). However, not all read cleaning methods are equally effective. While the method of read trimming has been shown by some to aid in variant calling (62), other studies suggest that read trimming can also increase the number of misaligned reads, leading to an increase in the number of false-positive variants called (64, 65). This is supported by others (63), who recommend masking as a more effective read cleaning method due to the removal of low-quality base calls while still maximizing the information retained within each read.
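The difference between masking and trimming discussed above can be seen in a small sketch. Masking replaces each low-quality base with “N” and keeps the read length, whereas the simple trimming shown here only removes low-quality bases from the 3′ end, leaving internal low-quality bases in place. The Q20 default is a hypothetical threshold, and dedicated tools offer more elaborate trimming strategies than this one.

```python
def mask_low_quality(seq: str, quals: list[int], min_q: int = 20) -> str:
    """Masking: replace each base whose Q score is below min_q with 'N'."""
    return "".join(base if q >= min_q else "N" for base, q in zip(seq, quals))


def trim_3prime(seq: str, quals: list[int], min_q: int = 20) -> tuple[str, list[int]]:
    """Trimming: drop bases from the 3' end until the last base has Q >= min_q."""
    end = len(seq)
    while end > 0 and quals[end - 1] < min_q:
        end -= 1
    return seq[:end], quals[:end]


seq, quals = "ACGTAC", [35, 12, 30, 30, 15, 8]
print(mask_low_quality(seq, quals))  # ANGTNN  (length preserved)
print(trim_3prime(seq, quals))       # ('ACGT', [35, 12, 30, 30])  (internal Q12 base kept)
```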

Another quality control step for sequencing reads is the detection and possible removal of contaminating DNA sequences. Adaptor sequences, ligated onto the ends of DNA fragments during library preparation, can be included in the read data set if the DNA fragments are shorter than the sequencing read length. Such sequences can be detected by software such as FastQC and removed with tools such as Cutadapt (66). Other sources of contamination include the sequencing control library (e.g., the PhiX bacteriophage control used on Illumina instruments) (67) or a potentially contaminated/mixed sample. Programs designed to identify and, in some cases, filter contaminants include Kraken, Deconseq, MGA, QC-Chain, and Genome Peek (68–72). Ensuring that the input data are of high quality and cleaned of potential contamination will improve the quality of downstream analysis results.
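To show what adaptor removal does, the sketch below clips an adaptor from the 3′ end of a read by exact matching, including a partial adaptor at the very end of the read. It assumes the common 13-bp prefix of the Illumina TruSeq adaptors (AGATCGGAAGAGC) and a 3-base minimum overlap, both illustrative choices. Tools such as Cutadapt additionally tolerate mismatches and indels, and very short overlaps like 3 bases will occasionally clip genuine sequence by chance.

```python
ADAPTER = "AGATCGGAAGAGC"  # common 5' prefix of Illumina TruSeq adaptors


def clip_adapter(read: str, adapter: str = ADAPTER, min_overlap: int = 3) -> str:
    """Remove an adaptor from the 3' end of a read using exact matching only.

    First looks for a complete adaptor anywhere in the read; otherwise
    removes the longest adaptor prefix (>= min_overlap bases) that ends the read.
    """
    position = read.find(adapter)
    if position != -1:
        return read[:position]
    stop = max(min_overlap, 1) - 1  # never test k = 0, which would empty the read
    for k in range(min(len(adapter) - 1, len(read)), stop, -1):
        if read.endswith(adapter[:k]):
            return read[:-k]
    return read


print(clip_adapter("ACGTACGT" + "AGATCGGAAGAGCACAC"))  # ACGTACGT (full adaptor)
print(clip_adapter("ACGTACGTAGATC"))                    # ACGTACGT (partial adaptor)
```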

SECONDARY ANALYSIS

Reference Mapping and Variant Calling

One of the most common types of analysis performed on newly generated HTS data is comparison with other, closely related genomes to identify the variation between them. This type of analysis is carried out by reference mapping followed by variant calling. Reference mapping is the process of determining the optimal placement of reads along a previously assembled, closely related reference genome, and variant calling is the process of detecting variation from the reference genome in the form of single nucleotide variants (SNVs), insertions/deletions (indels), or other types of structural variation. The output of the reference mapping process is a file called a “pileup,” containing the optimal placement of the sequence reads along the chosen reference genome, often stored in the sequence alignment/map (SAM) format or its binary version (BAM) (11). The aligned reads are further processed in a subsequent variant calling stage, which examines the pileup and produces a list of identified variants, often stored in variant call format (VCF) or its binary version (BCF) (73, 74). Table 4 provides a sample of popular software used for reference mapping and variant calling, and readers are encouraged to refer to additional reviews (75, 76) for more details.

TABLE 4.

List of reference mapping and variant calling software

| Type | Name (reference[s]) | Comments |
|---|---|---|
| Reference mapping | Bowtie 2 (83) | Available at http://bowtie-bio.sourceforge.net/bowtie2/ |
| | BWA-SW (84) | Available at http://bio-bwa.sourceforge.net/ |
| | SMALT | Available at http://www.sanger.ac.uk/science/tools/smalt-0 |
| Variant calling | GATK (Unified Genotyper) (90) | Part of the GATK toolkit for variant calling of nondiploid organisms |
| | FreeBayes | Uses Bayesian inference to detect variants; available at https://github.com/ekg/freebayes |
| | BreakDancer (86) | Detects structural variation from anomalous read pairs produced by mate-pair sequencing; available at http://breakdancer.sourceforge.net/ |
| | MetaSV (245) | Executes and combines results from many different structural variation detection tools; available at http://bioinform.github.io/metasv/ |
| Variant annotation | SnpEff (88) | Available at http://snpeff.sourceforge.net/ |
| | TRAMS (89) | Available at https://sourceforge.net/projects/strathtrams/files/Latest/ or as integrated within Galaxy; free for academic use, requires written consent from authors for commercial use |
| Toolkits | GATK (77, 90) | All-in-one toolkit for reference mapping and variant analysis; free for academic use, nonfree for commercial use; available at https://www.broadinstitute.org/gatk/ |
| | SAMtools (11, 73) | Toolkit for working with sequence alignments in SAM/BAM formats; can also be used for variant calling but assumes a diploid model; available at http://www.htslib.org/ |
| | BCFtools (11, 73) | Toolkit for working with variants in VCF/BCF formats; available at http://www.htslib.org/ |
| | VCFtools (74) | Toolkit for working with variants in VCF format; available at https://vcftools.github.io/ |
| | Picard | Toolkit for working with sequence alignments and variants in SAM/BAM or VCF formats; available at http://broadinstitute.github.io/picard/ |
| Visualization | IGV (85) | Available at https://www.broadinstitute.org/igv/; visualization of multiple tracks of information and multiple genomes |
| | Tablet (246) | Available at https://ics.hutton.ac.uk/tablet/; visualization of sequence alignments, variants, or genes |
| | iobio (247) | Web service for upload and visualization of sequence alignments (BAM format) or variants (VCF format); available at http://iobio.io/ |

Guidelines for variant calling, such as the Genome Analysis Toolkit (GATK) best practices (77), have been published previously. However, these guidelines often default to instructions for human and other eukaryotic data sets and so contain recommendations that are not suitable for variant calling in microbial genomes. In particular, a diploid model is often assumed, as is the case with the SAMtools package (73). Variant calling software that assumes a diploid model may produce heterozygous variant calls, which are unexpected for haploid organisms and can indicate false positives introduced by read misalignment or copy number variation of repetitive regions (78). Within the GATK best practices, the Unified Genotyper, rather than the Haplotype Caller, is recommended for nondiploid organisms (77).
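The haploid reasoning above can be made concrete with a small classifier based on the fraction of reads supporting the alternate allele at a site. In a clonal haploid isolate, that fraction should be near 0 (reference) or near 1 (variant); intermediate values are the haploid analogue of a “heterozygous” call and point to misalignment, copy number differences in repeats, or a mixed sample. The 0.1 and 0.9 cut-offs and the function name are hypothetical. Some callers also allow ploidy to be declared directly (BCFtools `call`, for example, has a `--ploidy` option).

```python
def classify_haploid_site(ref_count: int, alt_count: int,
                          low: float = 0.1, high: float = 0.9) -> str:
    """Classify a site in a haploid genome from reference/alternate read counts.

    low and high are hypothetical cut-offs on the alternate-allele fraction.
    """
    depth = ref_count + alt_count
    if depth == 0:
        return "no coverage"
    fraction = alt_count / depth
    if fraction <= low:
        return "reference"
    if fraction >= high:
        return "variant"
    return "ambiguous"  # unexpected for a clonal haploid isolate; inspect the pileup


print(classify_haploid_site(48, 2))   # reference
print(classify_haploid_site(1, 49))   # variant
print(classify_haploid_site(25, 25))  # ambiguous
```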

Reference mapping issues.

There are a number of common issues that can impact the results of reference mapping. One such issue is the presence of repetitive regions in the sequenced genome, the reference genome, or both. A combination of short read lengths for existing HTS technologies (on the order of hundreds of base pairs) and repetitive regions on the reference genome will result in ambiguity in selecting the best location to align matching reads (79). Approaches aimed at mitigating such ambiguity include completely ignoring reads aligning to multiple locations, picking a random location for reads with equal-scoring mapping locations, or reporting all nonunique read alignment locations. Potential caveats of these approaches range from excluding potential variation in the final results to misidentifying variants. Repetitive regions within the sequenced genome that are not present in the reference genome will lead to an unusually large pileup of reads in the repeat region (e.g., a genome sequenced to 50× coverage will show 100× coverage or more in the repetitive region depending on the number of extra copies harbored by the sequenced genome). Treangen and Salzberg describe in more detail the effect of reference mapping in repetitive regions (79).
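The coverage signature described above (e.g., 100× in a repeat of a genome sequenced to 50×) can be screened for by scanning per-position depth in fixed windows. In this minimal sketch the per-position depths are assumed to come from a tool such as `samtools depth -a` (third column), and the 100-bp window and the 1.8-fold cut-off relative to the genome-wide median are hypothetical parameters.

```python
from statistics import median


def high_coverage_windows(depths: list[int], window: int = 100,
                          fold: float = 1.8) -> list[tuple[int, int, float]]:
    """Windows whose mean depth exceeds fold x the genome-wide median depth.

    depths: per-position read depth along the reference (0-based positions).
    Returns (start, end, mean_depth) tuples; such windows are candidate
    repeats present in more copies in the sequenced genome than in the reference.
    """
    baseline = median(depths)
    hits = []
    for start in range(0, len(depths), window):
        chunk = depths[start:start + window]
        mean_depth = sum(chunk) / len(chunk)
        if mean_depth > fold * baseline:
            hits.append((start, start + len(chunk), mean_depth))
    return hits


depths = [50] * 1000
depths[300:400] = [100] * 100  # simulated two-copy repeat
print(high_coverage_windows(depths))  # [(300, 400, 100.0)]
```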

In addition to repeat regions, structural variation (e.g., deletions or translocations) and additional mobile elements (e.g., plasmids, transposons, and prophage) can be problematic for reference mapping. Structural variation can cause reads to be mapped incorrectly, while mobile elements absent from the reference genome are excluded from the reference mapping analysis altogether. One approach to capture mobile elements not present in the reference genome is to perform de novo assembly, gene prediction, and annotation of a newly sequenced genome's unmapped reads. If this analysis reveals many mobile-element-associated genes, a putative mobile element likely exists in the sequenced genome but is absent from the chosen reference genome. Detecting structural variation often requires alternative analysis strategies and potentially alternative sequencing methods (e.g., long-read or mate-pair sequencing) (80).

Selecting a reference genome.

Selecting an appropriate reference genome is an important first step to reference mapping and yet can be a nuanced decision. Ideally, the reference genome chosen should have no gaps or errors in the sequence data and should be genetically a very close match to the sequenced genome. NCBI (http://www.ncbi.nlm.nih.gov/) provides access to a large collection of previously published reference genomes that can be used, and yet caution should be exercised since publicly available genomes may be too genetically dissimilar for use as a reference in one's own investigation. Generating a high-quality reference genome in an ad hoc manner is possible, especially with longer-read technologies such as PacBio's SMRT sequencing, which can often produce completely or nearly ungapped genomes. Closely related draft genomes can be used as a reference; however, it should be noted that contiguous consensus sequence (contig) breaks and collapsed repeats in such draft genomes are problematic for mapping HTS reads, and extra consideration, such as manual inspection of the pileup in these regions and possibly masking of these regions, should be conducted before variant selection.

Quality control of input data.

As mentioned in the Primary Analysis section above, the sequence reads should be inspected to verify that they pass standard quality checks before proceeding with any secondary analysis. Additionally, it should be assessed whether an appropriate depth of coverage has been achieved. Low coverage can lead to false-negative variant calls, while excessive coverage is wasteful and can lead to performance issues such as longer running times or higher memory usage (76). A read coverage of at least 50× has been recommended for the best results (37, 81). Sequenced genomes that do not pass these quality checks can be excluded from further analysis or resequenced to generate a better-quality data set. Once a raw data set has been selected, the sequence reads can be cleaned (as described in the Primary Analysis section) to ensure that the data are of sufficient quality for downstream use.
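Because reads are unevenly distributed, a sample can exceed 50× on average while part of the genome falls below it. The sketch below therefore reports both the mean depth and the fraction of positions meeting the minimum, using the 50× figure cited above as the default. The per-position depths are assumed to come from the mapping step, and how large a below-minimum fraction is acceptable remains a project-specific decision.

```python
def coverage_summary(depths: list[int], min_depth: int = 50) -> dict[str, float]:
    """Mean depth and fraction of positions with depth >= min_depth."""
    n = len(depths)
    mean_depth = sum(depths) / n
    breadth = sum(d >= min_depth for d in depths) / n
    return {"mean_depth": mean_depth, "fraction_at_min_depth": breadth}


# 90% of positions at 80x and 10% at 20x: mean above 50x, yet 10% fall short.
print(coverage_summary([80] * 90 + [20] * 10))
# {'mean_depth': 74.0, 'fraction_at_min_depth': 0.9}
```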

Generating a read pileup.

After quality control of the input sequence reads, the quality-filtered reads are aligned to a reference genome, producing a collection of mapped reads (each placed at its optimal position on the reference) that together form a read pileup against the reference. A large collection of software has been developed for efficiently aligning HTS reads to a reference genome (76, 82); popular options include Bowtie2 (83) and BWA-SW (84). Standard inputs are the sequence reads (in FASTQ format) and a reference genome (typically in FASTA format). The output pileup is usually stored in the SAM (text-based, uncompressed) or BAM (binary, compressed) file format (11).
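The most basic summary of a pileup is the number of reads covering each reference position. The Python sketch below computes that depth directly from SAM text by walking each record's CIGAR string. It assumes a single reference sequence, skips unmapped reads and secondary alignments, and is meant only to show what a pileup represents, not to replace SAMtools; the function names are hypothetical.

```python
from __future__ import annotations

import re
from typing import Iterable

CIGAR_OP = re.compile(r"(\d+)([MIDNSHP=X])")


def add_alignment(depth: list[int], pos: int, cigar: str) -> None:
    """Add one alignment to `depth`; `pos` is the 1-based SAM POS (column 4)."""
    ref = pos - 1  # 0-based reference index
    for length, op in CIGAR_OP.findall(cigar):
        n = int(length)
        if op in "M=X":  # aligned bases consume the reference and add depth
            for i in range(ref, min(ref + n, len(depth))):
                depth[i] += 1
            ref += n
        elif op in "DN":  # deletions/skips consume the reference without adding depth
            ref += n
        # I, S, H and P do not consume reference positions


def pileup_depth(sam_lines: Iterable[str], ref_length: int) -> list[int]:
    """Per-position read depth for a single-reference SAM file."""
    depth = [0] * ref_length
    for line in sam_lines:
        if line.startswith("@"):
            continue  # header line
        fields = line.rstrip("\n").split("\t")
        flag, pos, cigar = int(fields[1]), int(fields[3]), fields[5]
        if flag & 0x4 or flag & 0x100 or cigar == "*":
            continue  # unmapped read or secondary alignment
        add_alignment(depth, pos, cigar)
    return depth
```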

Quality control of a read alignment pileup.

Following the generation of a sequence read alignment pileup, validation should occur to verify that the pileup is correct. A large collection of bioinformatics toolkits, such as SAMtools (11), have been developed for inspection of read alignment files and generating summary statistics. Additional visual inspection and quality analysis of the pileup can be performed with software such as the Integrative Genomics Viewer (IGV) (85).
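Summary statistics such as mean depth and breadth of coverage (the fraction of reference positions covered by at least a given number of reads) are a common first check on a pileup. The Python sketch below assumes the per-position depths come from the tab-separated output of `samtools depth -a` (chromosome, position, depth) for a single reference; the 10× breadth threshold and function names are illustrative assumptions.

```python
from __future__ import annotations

from statistics import mean, median
from typing import Iterable


def parse_samtools_depth(lines: Iterable[str]) -> list[int]:
    """Read the third (depth) column of tab-separated `samtools depth -a` output."""
    return [int(line.split("\t")[2]) for line in lines if line.strip()]


def depth_summary(depth: list[int], min_depth: int = 10) -> dict[str, float]:
    """Mean/median depth and breadth of coverage (fraction of positions >= a depth)."""
    n = len(depth)
    return {
        "mean_depth": mean(depth),
        "median_depth": median(depth),
        "breadth_1x": sum(d >= 1 for d in depth) / n,
        f"breadth_{min_depth}x": sum(d >= min_depth for d in depth) / n,
    }


print(depth_summary([0, 10, 20, 10]))
```

A low breadth with an otherwise adequate mean depth suggests that parts of the reference are absent from the sequenced isolate, which is worth visual follow-up in IGV.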

One important issue to evaluate is whether a high percentage of reads is unmapped, which can indicate quality issues with the read data or contamination, or could indicate a large number of regions in the sequenced genome that are absent from the reference (e.g., a mobile element). SAMtools and other tools can report the percentage of unmapped reads. High numbers of unmapped reads may also indicate that the reference genome selection was inappropriate; in this case, selection of a new reference genome is advised.
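The unmapped percentage can be read from the FLAG column of a SAM file, where bit 0x4 marks an unmapped segment. The Python sketch below is a minimal stand-in for the kind of summary `samtools flagstat` reports; it assumes SAM text input (for example, from `samtools view -h`) and skips secondary (0x100) and supplementary (0x800) records so that each read is counted once. Any cutoff for "too many" unmapped reads is a project-specific choice, not something this sketch defines.

```python
from __future__ import annotations

from typing import Iterable

UNMAPPED, SECONDARY, SUPPLEMENTARY = 0x4, 0x100, 0x800  # SAM FLAG bits


def unmapped_fraction(sam_lines: Iterable[str]) -> float:
    """Fraction of reads with the 'segment unmapped' FLAG bit set.

    Secondary and supplementary records are skipped so each read is counted once.
    """
    total = unmapped = 0
    for line in sam_lines:
        if line.startswith("@"):
            continue  # header line
        flag = int(line.split("\t")[1])
        if flag & (SECONDARY | SUPPLEMENTARY):
            continue
        total += 1
        if flag & UNMAPPED:
            unmapped += 1
    return unmapped / total if total else 0.0
```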

Variant calling, filtering, and annotation.

Variant calling is the process of scanning the SAM/BAM file for areas of significant variation from the reference genome. Because the sequenced reads are short, this is often limited to SNVs, small insertions/deletions, and other small regions of variation. Larger-scale variant detection is possible with appropriate sequencing techniques, such as mate-pair sequencing with longer insert sizes. Here, each pair of reads is mapped, and anomalies in the distance between paired reads or in their relative orientation are used to detect larger structural variations such as insertions, deletions, or inversions (86). Variant callers typically report potential variants in the VCF (text-based variant call format) or BCF (more compact, efficient binary) file format (73, 74). Examples of variant calling software are given in Table 4, and additional reviews (75) provide more details.
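The paired-read signal described above can be illustrated by classifying a single mapped pair against the library's expected insert size and orientation. The Python sketch below is a simplified assumption-laden illustration: it uses a mean ± 3 SD cutoff and a default forward-reverse orientation, both of which are hypothetical parameters (many mate-pair protocols instead yield reverse-forward pairs, so `expected` must match the library). Real structural variant callers cluster many discordant pairs before reporting an event; one anomalous pair is not evidence on its own.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ReadPair:
    """A mapped read pair on one reference (0-based, half-open coordinates)."""
    left_start: int    # start of the leftmost mate
    left_strand: str   # '+' or '-'
    right_end: int     # end of the rightmost mate
    right_strand: str  # '+' or '-'


def classify_pair(pair: ReadPair, mean_insert: float, sd_insert: float,
                  expected: tuple[str, str] = ("+", "-"), n_sd: float = 3.0) -> str:
    """Label a pair as concordant or by the type of anomaly it shows."""
    span = pair.right_end - pair.left_start
    if (pair.left_strand, pair.right_strand) != expected:
        return "orientation"  # e.g., one mate lies in an inverted segment
    if span > mean_insert + n_sd * sd_insert:
        return "too_long"     # mates farther apart than expected: possible deletion
    if span < mean_insert - n_sd * sd_insert:
        return "too_short"    # mates closer than expected: possible insertion
    return "concordant"


print(classify_pair(ReadPair(100, "+", 1500, "-"), mean_insert=500, sd_insert=50))
```

The span logic follows from mapping against the reference. If the sample lacks a segment that the reference contains, the mates land farther apart on the reference than the true fragment length. An insertion in the sample has the opposite effect.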

Variant filtering removes variants that do not meet defined thresholds, so that false positives are excluded from further analysis. Many metrics can be used for filtering variants, such as the depth of coverage or the QUAL field of a VCF file, which provides a Phred-scaled quality score for the listed variant (74). The GATK best practices describe a process known as variant quality score recalibration, which requires a known set of true variant calls to calibrate the variant quality scores, followed by removal of variants with low scores (77). For novel variant discovery in microbial genomes, such known variant sets may not be available, so appropriate quality score thresholds are unknown. Instead, hard-filtering thresholds can be used to remove poor-quality variants, such as a minimum depth of coverage or a minimum proportion of reads supporting a variant call (e.g., a minimum of 10 reads, with at least 75% of all reads supporting the call) (77, 87).
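The example hard filter above (at least 10 reads, at least 75% supporting the call) and the Phred scale behind the QUAL field can be written out directly. In the Python sketch below, the `Variant` record is a simplified, hypothetical structure; in a real VCF the depth and allele counts would come from fields such as DP and AD, whose availability depends on the variant caller.

```python
from __future__ import annotations

from dataclasses import dataclass


def phred_to_error_prob(q: float) -> float:
    """Phred scale: Q = -10 log10(P), so P = 10^(-Q/10); Q30 means a 1-in-1,000 error chance."""
    return 10 ** (-q / 10)


@dataclass
class Variant:
    pos: int
    ref: str
    alt: str
    qual: float     # VCF QUAL column (Phred-scaled)
    depth: int      # reads covering the site
    alt_reads: int  # reads supporting the alternative allele


def passes_hard_filters(v: Variant, min_depth: int = 10, min_alt_fraction: float = 0.75,
                        min_qual: float | None = None) -> bool:
    """Apply depth, allele-fraction and (optional) QUAL thresholds."""
    if v.depth == 0 or v.depth < min_depth:
        return False
    if v.alt_reads / v.depth < min_alt_fraction:
        return False
    if min_qual is not None and v.qual < min_qual:
        return False
    return True


calls = [Variant(1200, "A", "G", 220.0, 35, 34), Variant(5400, "C", "T", 45.0, 8, 8),
         Variant(9100, "G", "A", 60.0, 20, 10)]
kept = [v.pos for v in calls if passes_hard_filters(v)]
print(kept)  # [1200]
```

The second call fails on depth and the third on allele fraction. A 50% allele fraction in a haploid bacterial genome often points to mixed samples, paralogous regions, or mapping artifacts rather than a true variant.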

Once there is adequate evidence that the variants are true, they can be annotated relative to an annotated reference genome. Variant annotation is the process of placing each variant in the context of the genomic features that contain it and describing its effects on those features, such as amino acid changes and frameshifts. Software for variant annotation includes snpEff (88), TRAMS (89), and GATK (90). Each program requires an annotated reference genome and a list of variant calls (in VCF/BCF format) as input and produces a list of the predicted effects of these variants. Although the variant calling process can be automated, variants should be manually inspected to ensure that the gene annotations are accurate, and ideally, those inferred to alter metabolic processes or virulence mechanisms should be further confirmed by laboratory experimentation, as described under “Bacterial Pathogenomics.”
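At its core, annotating a single-nucleotide variant within a coding sequence means locating the affected codon and comparing the reference and alternative amino acids. The Python sketch below illustrates that step for a gene on the forward strand only. It assumes an uppercase CDS string and a 0-based position within the CDS, and it does not handle indels or reverse-strand genes (which require reverse-complementing first). The function name and the toy gene are hypothetical and are not how snpEff or TRAMS are implemented.

```python
from __future__ import annotations

from itertools import product

# Standard genetic code in TCAG order; NCBI translation tables 1 and 11 (bacterial)
# share this amino-acid string and differ only in their alternative start codons.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product("TCAG", repeat=3), AMINO_ACIDS)}


def snv_effect(cds: str, cds_pos: int, alt: str) -> str:
    """Classify an SNV at 0-based `cds_pos` in a forward-strand, uppercase CDS."""
    codon_start, offset = cds_pos - cds_pos % 3, cds_pos % 3
    ref_codon = cds[codon_start:codon_start + 3]
    alt_codon = ref_codon[:offset] + alt + ref_codon[offset + 1:]
    ref_aa, alt_aa = CODON_TABLE[ref_codon], CODON_TABLE[alt_codon]
    codon_number = cds_pos // 3 + 1
    if ref_aa == alt_aa:
        return f"synonymous ({ref_codon}>{alt_codon}, {ref_aa})"
    if ref_aa == "*":
        return f"stop_lost (*{codon_number}{alt_aa})"
    if alt_aa == "*":
        return f"nonsense ({ref_aa}{codon_number}*)"
    return f"missense ({ref_aa}{codon_number}{alt_aa})"


gene = "ATGAAATGGTAA"  # toy CDS: Met-Lys-Trp-Stop
print(snv_effect(gene, 5, "G"))  # synonymous (AAA>AAG, K)
print(snv_effect(gene, 3, "G"))  # missense (K2E)
print(snv_effect(gene, 8, "A"))  # nonsense (W3*)
```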

De Novo Assembly

De novo assembly is defined as the reconstruction of a genome from sequence reads without the aid of a reference. More technically, de novo assembly is the computational process of reconstructing longer contiguous consensus sequences (contigs) by determining the longest overlap and optimal placement of shorter reads. The result of this initial automated approach is considered a draft genome. If additional information such as optical mapping data, mate-pair, or long-read sequences is available, these contigs can be ordered into larger scaffolds; the resulting assembly is classified as a “high-quality draft genome.” A designation of “closed genome” requires that the gaps between these scaffolds be resolved. A “finished genome” requires the resolution of any misassemblies or other sequencing anomalies and uncertainties. The level of closure or finishing (sequence polishing) pursued for the genomes in a project will depend on requirements of the sequencing project as defined in the project planning phase. Additionally, the sequencing data for each isolate should be of sufficient quantity, quality, and type (e.g., paired-end or single short reads, long reads, or a combination of data types) to generate a de novo assembly that satisfies the project objectives determined in the planning phase.
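The idea of joining reads at their longest overlap can be shown with two reads. The Python sketch below finds the longest exact suffix-prefix overlap and merges the reads. It assumes error-free reads and a hypothetical 3-bp minimum overlap. Real assemblers must tolerate sequencing errors, consider reverse complements, and resolve conflicting placements, none of which this toy handles.

```python
from __future__ import annotations


def longest_overlap(a: str, b: str, min_len: int = 3) -> int:
    """Length of the longest suffix of `a` that exactly matches a prefix of `b`."""
    for length in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:length]):
            return length
    return 0  # no overlap of at least `min_len` bases


def merge_reads(a: str, b: str, min_len: int = 3) -> str | None:
    """Join `b` onto `a` at their longest overlap, or return None if they do not overlap."""
    olen = longest_overlap(a, b, min_len)
    return a + b[olen:] if olen else None


print(merge_reads("ACGTTGCA", "TGCAGG"))  # ACGTTGCAGG
```

Repeatedly merging the pair of reads with the largest overlap is essentially the greedy strategy that early assemblers used.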

Choosing de novo assembly software.

As HTS technology evolves, so too does the development of new and improved de novo assembly programs. Detailed reviews of assembly software can be found elsewhere (48, 91–94); however, we have included a comparative list of some popular assemblers, grouped by assembly algorithm, in Table 5. The major assembly algorithms are greedy, overlap-layout-consensus (OLC), and de Bruijn graph, and assemblers evolved in roughly this order, with early assemblers such as TIGR using the greedy approach during the Human Genome Project (95). OLC assemblers organize reads into graph structures, with each read as a node connected by an edge to other overlapping reads (48). This paradigm was more commonly used with Sanger data and longer HTS reads because the process is computationally intensive and, in the past, did not perform as well with high volumes of short, high-coverage HTS reads, although advances have been made to improve the performance of OLC-based assemblers (93). For example, the AMOS suite of assembly tools remains a popular choice for OLC-based assembly of HTS data (96). De Bruijn graph assemblers break the reads into overlapping subsequences of length k, called k-mers, which form the nodes of compact graph structures, allowing programs to handle larger data sets computationally. Early de Bruijn-based assemblers such as Euler (97) and Velvet (98) popularized these methods for bacterial genomes. Algorithms have since evolved and expanded upon these original paradigms to improve the assembly of long-read data (e.g., HGAP, Edena, and SGA [99–101]) and of short, high-coverage data (e.g., SOAPdenovo and SPAdes [102–104]).
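The de Bruijn approach described above can be sketched in a few lines. Following the description in the text, the Python toy below uses k-mers as nodes and links k-mers that appear next to each other in a read (and therefore overlap by k − 1 bases). It then spells out contigs as maximal non-branching paths. Other formulations use (k − 1)-mers as nodes and k-mers as edges. This sketch ignores reverse complements, sequencing errors, and isolated cycles, and its function names are hypothetical; it is not how Velvet or SPAdes are implemented.

```python
from __future__ import annotations


def build_graph(reads: list[str], k: int) -> dict[str, set[str]]:
    """Nodes are k-mers; an edge links k-mers that are adjacent (overlap by k-1) in a read."""
    graph: dict[str, set[str]] = {}
    for read in reads:
        kmers = [read[i:i + k] for i in range(len(read) - k + 1)]
        for km in kmers:
            graph.setdefault(km, set())
        for a, b in zip(kmers, kmers[1:]):
            graph[a].add(b)
    return graph


def contigs_from_graph(graph: dict[str, set[str]]) -> list[str]:
    """Spell out maximal non-branching paths (isolated cycles are ignored in this sketch)."""
    indegree = {node: 0 for node in graph}
    for successors in graph.values():
        for s in successors:
            indegree[s] += 1

    def one_in_one_out(node: str) -> bool:
        return indegree[node] == 1 and len(graph[node]) == 1

    contigs = []
    for node in graph:
        if one_in_one_out(node):
            continue  # interior of a path; reached from its start node
        if not graph[node] and indegree[node] == 0:
            contigs.append(node)  # isolated k-mer
        for nxt in sorted(graph[node]):
            contig = node + nxt[-1]
            while one_in_one_out(nxt):  # extend while the path is unambiguous
                nxt = next(iter(graph[nxt]))
                contig += nxt[-1]
            contigs.append(contig)
    return contigs


print(contigs_from_graph(build_graph(["ACGTTG", "GTTGCA"], k=4)))  # ['ACGTTGCA']
```

Contigs end wherever the graph branches, which is why repeats longer than k break assemblies. The choice of k trades off sensitivity to errors against the ability to span repeats, which is why some assemblers (e.g., SPAdes, per Table 5) use multiple k-mer lengths.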

TABLE 5.

List of de novo assembly software

| Type | Name | Read type | Comments |
|---|---|---|---|
| OLC | String Graph Assembler (SGA) | Illumina (>200-bp reads) | Performs best on larger genomes with high coverage; has a built-in error correction module; https://github.com/jts/sga |
| | MIRA | Sanger, Ion Torrent, Illumina, PacBio (CCS reads or error-corrected long CLR reads) | Can combine multiple libraries/sequencing technologies into a single, hybrid assembly; slower run times than other assemblers; capable of producing high-quality assemblies; requires higher level of expertise to set run parameters; https://sourceforge.net/p/mira-assembler/wiki/Home/ |
| | Hierarchical Genome Assembly Process (HGAP) | PacBio | Long-read de novo assembler for PacBio SMRT sequencing data; only one long-insert shotgun DNA library required; uses short reads to correct long reads within the same library; de novo assembly using Celera assembler; includes assembly polishing with Quiver; https://github.com/PacificBiosciences/Bioinformatics-Training/wiki/HGAP |
| De Bruijn graph | Velvet | Illumina, 454, Ion Torrent, Sanger | High memory requirement; easy to run; can map reads onto a reference sequence(s) to help guide the assembly (Columbus module); user must select a single k-mer length to use (VelvetOptimiser can be used to select the optimal k-mer length); https://www.ebi.ac.uk/~zerbino/velvet/ |
| | Velvet-SC | Illumina | Adaptation of Velvet assembler for single-cell sequencing data; no error-correction module; http://bix.ucsd.edu/projects/singlecell/ |
| | SOAPdenovo | Illumina | Has an error correction, scaffolder, and gap-filler module; relatively fast compared to other assemblers; http://soap.genomics.org.cn/soapdenovo.html |
| | Ray | Illumina, 454 | Can combine different technologies to create a hybrid assembly; well documented; http://denovoassembler.sourceforge.net/ |
| | A5-MiSeq | Illumina | Uses the IDBA-UD algorithm; easy to use; little bioinformatics experience required; relatively fast with low memory requirements; https://sourceforge.net/projects/ngopt/ |
| | ALLPATHS | Illumina, PacBio | Requires at least 2 specialized libraries (e.g., Illumina fragment and PacBio long-read or Illumina jump library); has an error correction module; http://www.broadinstitute.org/software/allpaths-lg/blog/ |
| | Assembly by Short Sequences (ABySS) | Illumina, 454, Sanger | http://www.bcgsc.ca/platform/bioinfo/software/abyss |
| | SPAdes | Illumina, Ion Torrent, PacBio, Nanopore | Can support single-cell sequencing input data; can handle nonuniform coverage; has an error correction module (BayesHammer/IonHammer) and scaffolder; uses multiple k-mer lengths; capable of producing high-quality assemblies; relatively fast assembler; most widely used assembler for bacterial genome assembly; http://bioinf.spbau.ru/spades |
| | Maryland Super-Read Celera Assembler (MaSuRCA) | Illumina only or mixture of short and long reads (Sanger, 454) | Attempts to create superreads using the paired-end reads; http://www.genome.umd.edu/masurca.html |
| OLC/de Bruijn hybrid | CLC Assembly Cell (CLC Bio, Aarhus, Denmark) | Illumina, 454, Ion Torrent | Commercial software with licensing fee; easy to use with point-and-click graphical user interface; contains a scaffolder module; fast |
| Proprietary algorithm | SeqMan NGen (DNAStar Inc., Madison, WI, USA) | Illumina, PacBio, 454, Ion Torrent | Commercial software with licensing fee; easy to use with point-and-click graphical user interface; fully integrated with Lasergene's SeqMan Pro; patented algorithm (black box) |

As this review is aimed at researchers working with bioinformaticians on HTS projects (not bioinformaticians themselves), we want to stress that it is not essential to understand the mathematical theory behind all de novo assemblers. It is, however, important to understand that all assemblers have their strengths and limitations. The performance of an assembler is influenced by the biology of the genome (e.g., repetitive elements, overall size, multiple extrachromosomal plasmids, etc.), the nature of the data (e.g., sequence length, orientation, coverage depth, and uniformity), and the computational resources available (105).

Evaluating de novo assemblies.

Without knowing the true genome structure of an organism, a de novo assembly is a hypothesis: short DNA segments compiled into contigs by computational models. Contiguity and correctness are two attributes of the resulting assembly that can be assessed, and several recent studies have compared the performance of assembly workflows (8, 106–109). Common summary statistics for genome assemblies include the total number and lengths of the contigs. Another popular measure is the N50 statistic: when contigs are sorted from longest to shortest, the N50 is the length of the contig at which the cumulative length first reaches 50% of the total assembled nucleotides. The same definition extends to the scaffold N50, and the NG50 uses the expected genome size in place of the total assembly length (107). These summary statistics, however, assess only the contiguity of the assembled sequences, not their correctness.
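The N50 and NG50 definitions translate directly into a cumulative sum over contig lengths sorted longest first. The Python sketch below takes a list of contig lengths (how they are obtained from an assembly FASTA is left out) and returns the N50, or the NG50 when an expected genome size is supplied. The function name and toy lengths are hypothetical.

```python
from __future__ import annotations


def n50(contig_lengths: list[int], genome_size: int | None = None) -> int:
    """N50 of an assembly, or NG50 when an expected `genome_size` is given."""
    lengths = sorted(contig_lengths, reverse=True)
    half = (sum(lengths) if genome_size is None else genome_size) / 2
    running = 0
    for length in lengths:
        running += length  # cumulative length, longest contigs first
        if running >= half:
            return length
    return 0  # NG50 is undefined when the assembly covers < 50% of the genome


contigs = [100, 80, 50, 30, 20]          # toy contig lengths (bp), total 280
print(n50(contigs))                      # 80
print(n50(contigs, genome_size=500))     # 30
```

The two values differ because the toy assembly (280 bp) is much shorter than the assumed genome (500 bp). NG50 penalizes such incomplete assemblies, whereas N50 does not.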

The correctness or accuracy of an assembly can be evaluated by mapping the original reads back onto the assembly to identify regions with unusually high coverage (possibly a collapsed repeat) or low coverage (possibly an incorrect join) (94). A growing number of programs have been developed to aid assembly evaluation, such as Amosvalidate, Quast, and REAPR, which are described in more detail elsewhere (110–113). There have also been genome assembly competitions, such as Assemblathon 1 and 2 (106, 107) and GAGE-B (109), in which participants constructed de novo assemblies from the same data. These studies concluded that no single assembler performed best across all organisms and all metrics used to evaluate assembly quality. Therefore, it may be useful to test a few de novo assemblers and select the workflow that meets the project's goals for assembly quality and runs efficiently within the available computational resources.
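The coverage-based check described above can be approximated by scanning per-position read depth (after mapping the reads back to the assembly) in fixed windows and flagging windows far from the genome-wide median. The Python sketch below uses hypothetical thresholds of 0.5× and 2× the median and a 100-bp window. These thresholds are illustrative assumptions, not values taken from Amosvalidate, Quast, or REAPR, which use more sophisticated evidence.

```python
from __future__ import annotations

from statistics import median


def flag_coverage_anomalies(depth: list[int], window: int = 100,
                            low: float = 0.5, high: float = 2.0) -> list[tuple[int, int, float, str]]:
    """Flag windows whose mean depth is < low x or > high x the genome-wide median depth.

    Returns (start, end, mean_depth, label) tuples with 0-based, half-open coordinates.
    """
    baseline = median(depth)
    flagged = []
    for start in range(0, len(depth), window):
        chunk = depth[start:start + window]
        avg = sum(chunk) / len(chunk)
        if avg > high * baseline:
            flagged.append((start, start + len(chunk), avg, "high: possible collapsed repeat"))
        elif avg < low * baseline:
            flagged.append((start, start + len(chunk), avg, "low: possible incorrect join"))
    return flagged


toy_depth = [30] * 300 + [90] * 100 + [5] * 100  # toy depth profile for one contig
for region in flag_coverage_anomalies(toy_depth):
    print(region)
```

Flagged windows are candidates for manual inspection rather than confirmed errors. Coverage can also dip in GC-extreme regions or rise over plasmids present in multiple copies.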

TERTIARY ANALYSIS