Abstract

The importance of cancer screening is well-recognized, yet there is great variation in how adherence is defined and measured. This manuscript identifies measures of screening adherence and discusses how to estimate them. We begin by describing why screening adherence is of interest: to anticipate long-term outcomes, to understand differences in outcomes across settings, and to identify areas for improvement. We outline questions of interest related to adherence, including questions about uptake, currency or being up-to-date, and longitudinal adherence, and then identify which measures are most appropriate for each question. Our discussion of how to select a measure focuses on study inclusion criteria and outcome definitions. Finally, we describe how to estimate different measures using data from two common data sources, survey studies and surveillance studies. Estimation requires consideration of data sources, inclusion criteria, and outcome definitions. Censoring often will be present and must be accounted for. We conclude that consistent definitions and estimation of adherence to cancer screening guidelines will facilitate comparison across studies, tests, and settings and help elucidate areas for future research and intervention.

Keywords: cancer screening, adherence, measurement

Introduction

The importance of cancer screening is well-recognized, yet there is no consensus on how adherence should be defined and measured. Based on work by the International Cancer Screening Network, Bulliard and colleagues published a review of cancer screening participation definitions and measures.1 Bulliard et al. focused primarily on participation in a single round of screening and noted that measures of participation across multiple rounds of screening are complex and in need of further research. We build upon Bulliard et al.’s work by considering in more detail measures not only of one-time screening participation, as are often used by national screening programs,2 but also of long-term adherence.

The goal of this paper is to describe definitions and measures of adherence appropriate for answering different questions and to specify how to estimate these measures. We first define adherence and describe reasons why adherence may be of interest. We review questions of interest regarding adherence, measures that allow us to quantify the answers to these questions, and statistical methods for estimating these quantities. Throughout, we identify strengths and limitations of alternative data sources for answering different questions and discuss their ability to estimate different types of measures related to adherence.

What is adherence?

In the context of cancer screening, adherence has several dimensions because recommendations are usually multi-faceted. For example, recommendations often include guidance on starting and stopping ages, types of screening tests, and frequency of screening. A person may be adherent to some aspects of a cancer screening recommendation but not others. This paper focuses on selecting measures of cancer screening adherence based on the question of interest. We first frame this discussion by addressing why adherence matters.

Why do we care about adherence?

To anticipate long-term outcomes

An underlying assumption in studying screening adherence is that better adherence leads to better outcomes. In the context of an intervention or program, studying adherence may help anticipate potential benefits before long-term outcome data are available. It will often take years for an effect on cancer mortality, or even incidence, to manifest itself. An early sign that an intervention may be working or failing is whether people undergo screening as intended; adherence is necessary, but not sufficient, for screening to be effective.

To understand differences across settings

The effectiveness of cancer screening interventions, programs, recommendations or guidelines depends, in part, on the level of adherence in the population in which the intervention takes place. Generalizability of results to other settings depends, in part, upon comparable levels of adherence. For example, a screening recommendation that reduces cancer mortality when adherence is nearly 100% would not be expected to be as effective in a setting where only 50% of the population was adherent. Measuring adherence may help explain why the effectiveness of screening varies across settings.

To identify areas for improvement

Measuring adherence may also help identify opportunities to develop interventions and programs that reduce disparities. If one group is more adherent to an intervention than another, or an intervention elicits better adherence in one setting over another, we can learn where there is room for improvement and greater equity.

How are data on adherence collected?

The way in which data are collected influences the types of adherence questions that can be answered. For the purposes of this discussion, we consider two data sources common in studies of cancer screening: surveys and surveillance.

Survey data

Healthcare delivery systems, health departments, medical clinics, and research studies often administer surveys. A common feature is that they can assess past cancer testing behavior and sometimes diagnoses of specific cancers. They may be administered once or multiple times.

A recent example of a survey used to assess cancer screening adherence was reported in Morbidity and Mortality Weekly Report (MMWR).3 The authors used cross-sectional data from the 2013 National Health Interview Survey (NHIS) to estimate the percentage of persons who were up-to-date with testing for different cancers. The interview included questions about the type and timing of different tests to detect cancer, as well as ages at diagnosis with specific cancers.4 MMWR reported the percent of persons who had been tested within the recommended interval (e.g., mammography within the past two years) in the period prior to survey administration.

Surveillance data

For the purposes of this paper, we consider surveillance data to be information on cancer screening and tests recorded in real-time (or close to real-time). These data may be available in medical records, administrative databases, or screening program databases. One key feature of surveillance data – as defined for our purposes – is that they do not rely on recall. Most countries with screening programs use this kind of data to monitor screening participation.2,5–8

In 2013, Green et al. published results from an RCT based on data from an electronic health record (EHR).9 Briefly, the 4-arm trial compared interventions to increase colorectal cancer screening. The outcome – a binary indicator of being up-to-date for testing in both years following randomization – was based on colonoscopy procedure codes and laboratory fecal occult blood tests (FOBT) in participants’ EHR, recorded as they occurred.

What kinds of questions can we ask about adherence?

For simplicity, we focus on three categories of questions about adherence: 1) questions about screening uptake, i.e., first-time screening after the inception of an intervention or becoming eligible to screen; 2) questions about being up-to-date for or not in need of screening at a given point in time; and 3) longitudinal adherence, i.e., screening over a period of time that generally spans more than one recommended screening interval (or round of screening, in the case of a screening program). There are other kinds of questions about adherence (e.g., over-use, stopping age), but these three categories cover some of the most frequently asked and important questions. Of note, all questions can be framed as comparisons between different groups, but for simplicity we focus on questions about a single group.

Questions about uptake

At what age do people start screening?

What is the prevalence of having been screened at least once?

Bulliard et al. point out that uptake can be a useful indicator of test acceptability, the efficiency of the screening process, and the impact of organizational characteristics.1 Outside of an organized program, uptake may reflect effects of the screening setting, such as the availability of providers offering screening, cost of screening, or health literacy.

Questions about currency or being up-to-date

What is the prevalence of being current for screening?

What is the prevalence of being out of compliance for screening?

What is the prevalence of needing screening?

What is the prevalence of not needing screening?

We propose a conceptual distinction between two outcomes in a population eligible for screening (e.g., on the basis of age or other cancer risk factors): not needing screening and being current (or up-to-date) for screening. Being classified as “not in need of screening” requires only that an individual was tested, regardless of the indication. For example, someone who has a diagnostic colonoscopy to investigate rectal bleeding has not been screened but is also not in need of screening. Data on testing for any indication help us understand who is and is not in need of screening; the MMWR analysis based on NHIS data is an example of this kind of analysis. In contrast, to be classified as “current for screening”, an individual must have received a test conducted for the purpose of screening, i.e., in the absence of signs or symptoms of cancer.

Questions about longitudinal adherence

How long do people remain adherent to screening guidelines?

What is the prevalence of receiving regular screening? (Note that “regular” could be defined in a number of ways.)

The RCT conducted by Green et al., described above, is an example of a study that assessed adherence over time. Surveillance and other sources of longitudinal data are well-suited to answering questions about the period of time over which individuals remain adherent to screening recommendations. Longitudinal adherence is sometimes described using the concept of “covered time,” the period of time during which an individual is considered to be not in need of screening. For instance, Vogt et al. incorporated the concept of covered time into the prevention index, an assessment of healthcare quality designed to be more sensitive than traditional measures like Health Plan Employer Data and Information Set (HEDIS) score.10

In the context of an organized screening program, one might refine questions based on the number of screening rounds that have occurred. Based on examples by Bulliard et al., we can pose questions such as:

In a given round of screening, does participation vary based on participation in past rounds?

What proportion of invitees participates in all screening rounds?
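Both round-based questions can be computed directly from per-invitee participation histories. The sketch below is a minimal illustration under a hypothetical data layout in which every invitee was invited to every round; real programs have staggered invitations and exits that would need additional handling. The function names are ours, not measures defined by Bulliard et al.

```python
from typing import Dict, Sequence

# Hypothetical input: one list per invitee, True if the invitee participated in
# that round (index 0 = first round). Assumes every invitee was invited to every round.
Histories = Sequence[Sequence[bool]]


def participation_by_prior_round(histories: Histories, round_index: int) -> Dict[bool, float]:
    """Participation in round `round_index`, split by participation in the previous round."""
    if round_index < 1:
        raise ValueError("round_index must have a preceding round")
    counts = {True: [0, 0], False: [0, 0]}  # prior status -> [participants, invitees]
    for history in histories:
        prior = bool(history[round_index - 1])
        counts[prior][1] += 1
        counts[prior][0] += int(bool(history[round_index]))
    return {prior: (n / d if d else float("nan")) for prior, (n, d) in counts.items()}


def proportion_all_rounds(histories: Histories) -> float:
    """Proportion of invitees who participated in every round."""
    return sum(all(history) for history in histories) / len(histories)


histories = [
    [True, True, True],
    [True, False, True],
    [False, False, False],
    [True, True, False],
    [False, True, True],
]
by_prior = participation_by_prior_round(histories, round_index=2)
print(by_prior)                          # round 3: 2/3 if attended round 2, 1/2 if not
print(proportion_all_rounds(histories))  # 0.2
```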

None of the questions described in this section are the right or wrong ones to ask; they focus on different aspects of adherence, and our list is not exhaustive.

What measures are best used to answer each question?

Once the question is refined, one must next consider corresponding measures of adherence. Examples of measures for different questions provided in Table 1 include those from prior studies of cancer screening,11–20 some from Bulliard et al.,1 measures adapted from the pharmacoepidemiology literature,21,22 the prevention index,10 and ones we have not seen before but think could be useful.

Table 1.

Scientific questions about screening adherence and corresponding measures

| Question | Measure |
|---|---|
| Questions about uptake | |
| At what age do people start screening? | |
| What is the prevalence of having been screened at least once? | |
| Questions about currency | |
| What is the prevalence of participation in the current round of screening? | |
| What is the prevalence of being current for screening? | |
| What is the prevalence of being out of compliance for screening? | |
| What is the prevalence of needing screening? | |
| What is the prevalence of not needing screening? | |
| Questions about longitudinal adherence | |
| How long do people remain adherent to screening guidelines? | |
| What is the prevalence of receiving regular screening? | |

Within table row, measures are functions of each other.

Covered = not yet recommended to screen again based on time since last test.

To estimate the measures in Table 1, one needs to determine the relevant denominator population and identify an outcome of interest corresponding to the question under study. Below, we describe some considerations in making these decisions.

Inclusion criteria

Determining who is eligible to be studied depends on the question of interest. Broadly, there are three possibilities: the entire population (i.e., everyone), people meeting eligibility criteria for screening, and people who have previously screened. A person’s eligibility may change over time, so a person may be included in analyses at some points but not others.

Entire population

For studies that focus on the proportion of the population in need of screening, the entire population of a country or health plan may be the denominator of interest. However, usually we are not interested in the entire population because we do not wish to study people who are not eligible for screening.

People eligible for screening

For most questions about screening adherence, we are interested in the population eligible for screening. This is consistent with an epidemiologic approach in which only people who are “at-risk” for the event of interest are included. In the context of cancer screening, relevant considerations for screening eligibility include those related to: risk level (questions 1–2 in Text Box); invitation to screen (question 3); prior cancer, symptoms, or tests (questions 4–6); and screening ascertainment (question 7).

1. Is the person at risk for the cancer of interest? Some people are not eligible for certain kinds of cancer screening because they lack the specific organ. For example, women with no cervix due to hysterectomy are not at risk of cervical cancer and, therefore, not in need of cervical cancer screening.

2. Is the person recommended for cancer screening? For some cancers, only specific persons are recommended for screening. We may be interested in adherence only among that subset of the population. For example, in a study of adherence to lung cancer screening, we might want to restrict our analysis to persons recommended for screening: individuals aged 55 to 80 years who have a 30 pack-year smoking history and currently smoke or have quit within the past 15 years.23

3. Was the person invited to be screened? In the context of a national screening program or health plan that intervenes on a population, we might be interested in people who have been invited to screen. In a setting without organized screening outreach, there is no such population to study.

4. Did the person have cancer prior to the period during which we are measuring adherence to screening? Persons with a prior diagnosis of the cancer under study are generally not considered eligible for routine screening. If a person was diagnosed with the cancer of interest during the follow-up period, they are not eligible for screening after their diagnosis but would have been before.

5. Is the person free from symptoms? Persons with symptoms of a cancer are not eligible for screening, which is defined as testing in the absence of signs or symptoms of the disease. Although the symptom-free population may be of greatest interest for certain scientific questions, symptom onset can be difficult or impossible to ascertain, particularly in patients who do not seek care.

6. Did the person have a recent diagnostic or screening test? Once a person has a diagnostic or screening test, there is a period of time during which she may not require screening. For example, if a woman has rectal bleeding, receives a diagnostic colonoscopy to investigate her symptoms, and is found to be free of cancer or high-risk findings, she will not need colorectal cancer screening for the subsequent 10 years. Similarly, once a person has been screened for colorectal cancer via colonoscopy, she will not need additional screening for 10 years. Whether these people should be included in the study depends on the question of interest.

7. Would a screening event be observable if one occurred? If the event of interest cannot be observed, a person should not be classified as “at-risk.” Including a person in the denominator who can never be part of the numerator will bias observed rates downward. For example, once someone moves out of the catchment area of an organized screening program, the program will no longer be aware of his screening behavior. Thus, such persons should no longer be included in the denominator after they move.
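As a sketch of how the seven Text Box questions translate into a denominator rule, the hypothetical function below decides whether a person belongs in the screening-eligible population on a given date. The record fields (`has_organ`, `enrollment`, and so on) are illustrative assumptions rather than fields of any particular data source. Whether recently tested people are excluded (question 6) is left as a switch because, as noted above, it depends on the question of interest.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional, Tuple


@dataclass
class Person:
    # All field names are hypothetical; map them to whatever the data source records.
    has_organ: bool                      # question 1: at risk for the cancer
    meets_recommendation: bool           # question 2: in the recommended group
    invited: bool = False                # question 3: invited by an organized program
    cancer_dx_date: Optional[date] = None
    symptom_onset_date: Optional[date] = None
    last_test_date: Optional[date] = None
    last_test_coverage: timedelta = timedelta(0)
    enrollment: List[Tuple[date, date]] = field(default_factory=list)  # observable periods


def eligible_on(p: Person, on: date, require_invitation: bool = False,
                exclude_recently_tested: bool = False) -> bool:
    """Is `p` in the screening-eligible denominator on date `on`?"""
    if not (p.has_organ and p.meets_recommendation):                     # questions 1-2
        return False
    if require_invitation and not p.invited:                             # question 3
        return False
    if p.cancer_dx_date is not None and p.cancer_dx_date <= on:          # question 4
        return False
    if p.symptom_onset_date is not None and p.symptom_onset_date <= on:  # question 5
        return False
    if (exclude_recently_tested and p.last_test_date is not None
            and p.last_test_date <= on < p.last_test_date + p.last_test_coverage):  # question 6
        return False
    # Question 7: a screening event on this date must be observable in the data.
    return any(start <= on <= end for start, end in p.enrollment)


person = Person(
    has_organ=True,
    meets_recommendation=True,
    cancer_dx_date=date(2018, 6, 1),
    last_test_date=date(2014, 1, 1),
    last_test_coverage=timedelta(days=3652),  # roughly 10 years of colonoscopy coverage
    enrollment=[(date(2010, 1, 1), date(2020, 12, 31))],
)
print(eligible_on(person, date(2015, 1, 1)))                                # True
print(eligible_on(person, date(2015, 1, 1), exclude_recently_tested=True))  # False
print(eligible_on(person, date(2019, 1, 1)))                                # False: diagnosed
```

Because eligibility is evaluated on a specific date, the same person can enter and leave the denominator over time, as described under Inclusion criteria.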

People previously screened

In some measures of interest, the denominator (population) is limited to persons previously screened. One example of this is a measure of time to rescreening.

Outcome of interest

To estimate the screening measures of interest, it is necessary to define the outcome, e.g., screened or in need of screening. If the goal of a study is to assess the need for screening, then the outcome of interest should be receipt of any test for the condition of interest, regardless of indication. For example, the MMWR study described above does not differentiate between screening and diagnostic tests. However, by reporting the percent of screen-eligible persons who have been tested (e.g., 72.6% who received a mammogram), the authors provide an estimate of the percent of the population in need of screening (100% minus 72.6%, or 27.4%, assuming persons do not have other conditions that contraindicate screening).

To be classified as “current for screening”, an individual must have received a test conducted for the purpose of screening, i.e., in the absence of signs or symptoms of cancer, within the relevant timeframe. However, even in a study of screening, it might be sufficient to measure testing for any indication. Some studies may wish to evaluate how well an intervention promotes screening. In the context of an intervention to increase screening (such as the RCT by Green et al.9), differences in rates or prevalence of testing across groups are likely to reflect differences in screening, because there is no reason to expect large differences across groups in the prevalence of symptoms or, therefore, of diagnostic testing. So, even if screening per se is the main outcome of interest, focusing on testing may still be informative and will be more straightforward to evaluate because it does not require knowledge of test indication or screening eligibility.

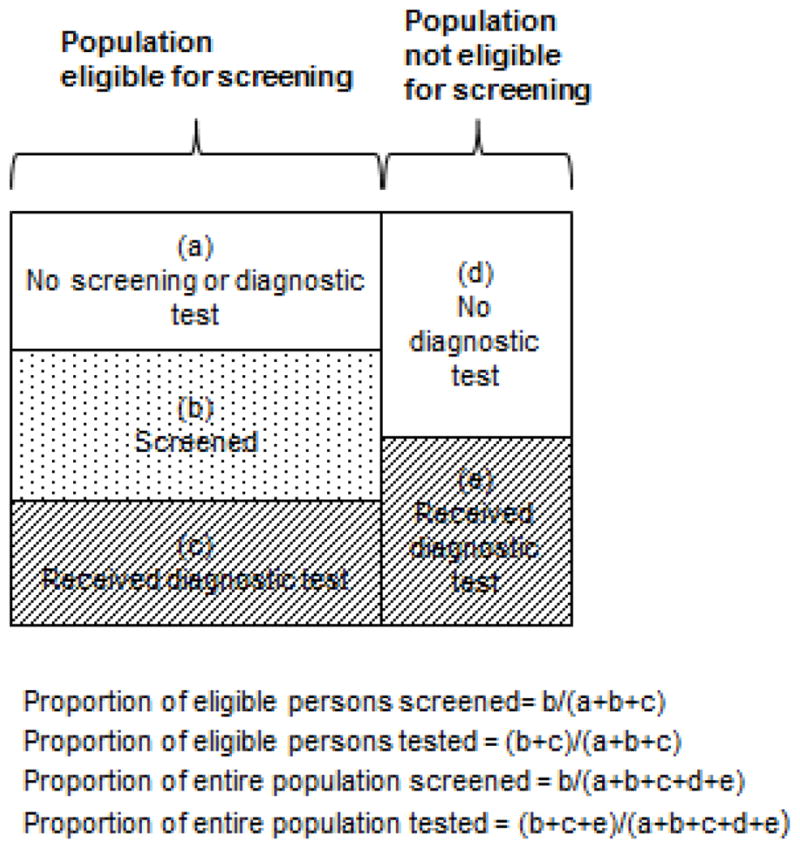

Figure 1 illustrates proportions that can be computed for alternative outcomes of interest: the proportion of eligible persons screened; the proportion of eligible persons tested; the proportion of the entire population screened; and the proportion of the entire population tested. Each may be of interest depending on the scientific question, and data availability may limit which are possible to compute.

Figure 1.

Figure 1 shows a hypothetical population consisting of persons ineligible and eligible for screening at a point in time. Unshaded boxes represent individuals who are not screened or tested; dotted boxes represent screened individuals; and hashed boxes represent persons who have received a diagnostic test.
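The four proportions described for Figure 1 differ only in their numerator (screened versus tested for any indication) and their denominator (eligible persons versus the entire population). A minimal sketch, assuming a hypothetical record layout of `(eligible, indication)` pairs; the counts in the example are made up for illustration and are not those drawn in Figure 1. The complement of the eligible-tested proportion is the share in need of screening, mirroring the MMWR calculation.

```python
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical record: (eligible for screening?, indication of the test received or None)
Record = Tuple[bool, Optional[str]]
ANY_TEST = {"screening", "diagnostic"}


def figure1_proportions(records: Sequence[Record]) -> Dict[str, float]:
    eligible = [r for r in records if r[0]]

    def share(group: Sequence[Record], indications: set) -> float:
        # Fraction of the group whose test indication is in `indications`.
        return sum(ind in indications for _, ind in group) / len(group)

    props = {
        "eligible_screened": share(eligible, {"screening"}),
        "eligible_tested": share(eligible, ANY_TEST),
        "population_screened": share(records, {"screening"}),
        "population_tested": share(records, ANY_TEST),
    }
    props["eligible_in_need"] = 1 - props["eligible_tested"]  # not tested for any indication
    return props


records = ([(True, "screening")] * 4 + [(True, "diagnostic")] * 2 + [(True, None)] * 2
           + [(False, "diagnostic"), (False, None)])
props = figure1_proportions(records)
print(props)  # eligible: 0.5 screened, 0.75 tested, 0.25 in need; population: 0.4 screened, 0.7 tested
```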

How do we estimate the measures of interest?

In this section, we describe approaches to estimating the measures of interest in Table 1. We consider how approaches may vary based on how data were collected. When comparing results across studies that have collected data differently, it is important to consider whether differences in results are attributable to data sources or whether the studies are answering slightly different questions.

Questions about uptake

Questions about uptake typically require estimating a summary measure of age at screening initiation. For instance, questions about the age at which individuals begin screening can be answered by treating age at first screening exam as the outcome of interest. If age at first screening exam is available for a representative sample of the target population, we could summarize it using simple descriptive statistics such as the mean age at first screening exam. However, with either surveillance or survey data, age at first screening is unlikely to be observed for the entire population under study, because some individuals will typically not yet have been screened. For these individuals, age at first screening is known only to be greater than their current age; that is, it is right censored. If the population of interest is the screening-eligible population, then age at first screening will also be right censored by events that remove individuals from the target population, such as a cancer diagnosis. Under right censoring, it is not possible to estimate the mean age at screening initiation without making strong parametric assumptions. Standard non-parametric survival analysis methods provide an alternative: they allow us to quantify age at first screening using summary statistics like the median age at first screening or the proportion of the population screened by a given age. This can be done by treating individuals who have not yet been screened as right censored at the most recent age at which data were collected, or at the time of other censoring events. Comparative questions about uptake can also be answered using survival analysis methods.
Summary statistics can be computed within strata (e.g., median age at first screening in group A vs B) or inferential statistical tests like the log-rank test can be used to test for a difference in uptake between strata.
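A minimal, dependency-free sketch of the survival approach for uptake: a Kaplan–Meier estimator of age at first screening that treats not-yet-screened people as right censored, the median and cumulative proportion screened read off the resulting curve, and a two-sample log-rank test. The ages are invented for illustration; in practice an established package (for example, R's `survival` or Python's `lifelines`) would normally be used instead.

```python
import math
from typing import List, Optional, Sequence, Tuple


def kaplan_meier(times: Sequence[float], events: Sequence[int]) -> List[Tuple[float, float]]:
    """(age, S(age)) at each distinct age where a first screening was observed.

    events[i] is 1 if the first screening was observed at times[i] and 0 if the
    person was right censored there (not yet screened, or left the target population).
    """
    data = sorted(zip(times, events))
    at_risk, surv, curve, i = len(data), 1.0, [], 0
    while i < len(data):
        t = data[i][0]
        screened = censored = 0
        while i < len(data) and data[i][0] == t:  # group tied ages
            if data[i][1]:
                screened += 1
            else:
                censored += 1
            i += 1
        if screened:
            surv *= 1 - screened / at_risk
            curve.append((t, surv))
        at_risk -= screened + censored  # people censored at t still count as at risk at t
    return curve


def median_age(curve: List[Tuple[float, float]]) -> Optional[float]:
    """Smallest age by which at least half are estimated to be screened; None if not reached."""
    for t, s in curve:
        if s <= 0.5:
            return t
    return None


def proportion_screened_by(curve: List[Tuple[float, float]], age: float) -> float:
    """Estimated cumulative proportion screened by `age`, i.e. 1 - S(age)."""
    s = 1.0
    for t, s_t in curve:
        if t > age:
            break
        s = s_t
    return 1 - s


def logrank(times_a, events_a, times_b, events_b) -> Tuple[float, float]:
    """Two-sample log-rank chi-square statistic (1 df) and its p-value."""
    pooled = [(t, e, 0) for t, e in zip(times_a, events_a)]
    pooled += [(t, e, 1) for t, e in zip(times_b, events_b)]
    o_minus_e = var = 0.0
    for t in sorted({t for t, e, _ in pooled if e}):
        n = sum(1 for ti, _, _ in pooled if ti >= t)
        n_a = sum(1 for ti, _, g in pooled if ti >= t and g == 0)
        d = sum(1 for ti, e, _ in pooled if ti == t and e)
        d_a = sum(1 for ti, e, g in pooled if ti == t and e and g == 0)
        o_minus_e += d_a - d * n_a / n  # observed minus expected screenings in group A
        if n > 1:
            var += d * (n_a / n) * (1 - n_a / n) * (n - d) / (n - 1)
    if var == 0:
        raise ValueError("no information to compare the groups")
    chi2 = o_minus_e ** 2 / var
    return chi2, math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df


ages_a, screened_a = [50, 51, 52, 55, 60], [1, 1, 0, 1, 0]
ages_b, screened_b = [55, 58, 62, 65, 70], [1, 0, 1, 1, 0]
curve_a = kaplan_meier(ages_a, screened_a)
print(median_age(curve_a), proportion_screened_by(curve_a, 52))  # 55, about 0.4
print(logrank(ages_a, screened_a, ages_b, screened_b))
```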

Questions about being up-to-date or not in need of screening

Questions about screening currency focus on point prevalence and thus can be answered using cross-sectional study designs. A single round of survey data provides a cross-sectional snapshot of screening currency, and it is straightforward to use these data to estimate quantities like the proportion of the target population who are up-to-date on screening. Surveillance data can also be used to answer prevalence questions but require additional specificity about the question of interest. For instance, surveillance data, such as those from an organized screening program, could be used to summarize the proportion of individuals up-to-date for screening (or not in need of screening, depending on the question) on a particular date (e.g., as of April 1, 2015, what proportion of the population was up-to-date for screening?) or at a particular age (e.g., among individuals 50–55 years of age, what proportion are up-to-date for screening?). One challenge in using surveillance data for this purpose is that not all individuals in the surveillance population will have enough data available to compute the outcome of interest. For instance, to estimate the proportion of the population aged 65 and over who are compliant with colorectal cancer screening guidelines, it is necessary to have data on all eligible individuals covering their entire relevant period of screening. Because a screening colonoscopy any time in the prior 10 years would result in an individual being classified as “current,” 10 years of prior data are required for all eligible individuals. In practice, complete data are unlikely to be available for all eligible individuals in the surveillance population.
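To make the lookback requirement concrete, the sketch below classifies one person as current on an index date from surveillance test records and returns `None` when the observable history is too short to rule out an unobserved covering test. The coverage intervals are assumptions for illustration (about 10 years for colonoscopy, as in the text; 1 year for FOBT is our placeholder), and `data_start` is a hypothetical field marking when a person's tests become observable. Whether `tests` holds only screening tests or tests for any indication depends on whether the question concerns being current or not needing screening.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

# Illustrative coverage intervals; take them from the guideline under study.
COVERAGE = {"colonoscopy": timedelta(days=3652), "fobt": timedelta(days=365)}
MAX_LOOKBACK = max(COVERAGE.values())


def currency_on(index_date: date, data_start: date,
                tests: List[Tuple[date, str]]) -> Optional[bool]:
    """True if an observed test still provides coverage on index_date, False if not,
    None if the history is too short to rule out an unobserved covering test."""
    for test_date, kind in tests:
        if test_date <= index_date < test_date + COVERAGE[kind]:
            return True
    if index_date - data_start < MAX_LOOKBACK:
        return None  # e.g. a colonoscopy before data_start could still cover index_date
    return False


index = date(2015, 4, 1)
print(currency_on(index, date(2000, 1, 1), [(date(2008, 6, 1), "colonoscopy")]))  # True
print(currency_on(index, date(2000, 1, 1), [(date(2013, 1, 1), "fobt")]))         # False
print(currency_on(index, date(2012, 1, 1), []))                                   # None
```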
Excluding individuals with incomplete information (i.e., including only those for whom at least 10 years of prior data are available) could lead to selection bias, since individuals who remain in the surveillance population over a lengthy period of time are not necessarily representative of all individuals eligible for screening. Methods to address selection bias, such as inverse probability weighting, can be used if it is possible to characterize the population that remains under surveillance for the complete period of time required using, e.g., demographic and health care utilization data.
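One simple form of inverse probability weighting weights each person with complete lookback data by the inverse of the completion rate in his or her stratum (for example, age group by health care utilization). The sketch below assumes that completeness depends only on these observed strata (data missing at random within strata); it uses made-up data and illustrative stratum labels, and it cannot represent strata in which no one has complete data. In practice a regression model for the probability of remaining under surveillance would usually replace the stratum frequencies.

```python
from collections import defaultdict
from typing import Hashable, Optional, Sequence, Tuple


def ipw_prevalence(people: Sequence[Tuple[Hashable, Optional[bool]]]) -> float:
    """people: (stratum, current), with current=None when lookback data are incomplete."""
    totals = defaultdict(int)
    complete = defaultdict(int)
    for stratum, current in people:
        totals[stratum] += 1
        if current is not None:
            complete[stratum] += 1
    num = den = 0.0
    for stratum, current in people:
        if current is None:
            continue
        weight = totals[stratum] / complete[stratum]  # 1 / P(complete data | stratum)
        num += weight * current
        den += weight
    return num / den


# Hypothetical strata: low health care users are less likely to have complete lookback.
people = ([("high_use", True)] * 3 + [("high_use", False)]
          + [("low_use", False)] * 2 + [("low_use", None)] * 2)
observed = [c for _, c in people if c is not None]
print(sum(observed) / len(observed))  # complete-case estimate: 0.5
print(ipw_prevalence(people))         # weighted estimate: 0.375
```

In this toy example, dropping incomplete records over-represents the high-use stratum, so the complete-case estimate is higher than the weighted one.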

Questions about longitudinal adherence

Estimating longitudinal measures of screening adherence requires longitudinal data. Because surveys most often collect data on screening during a defined period, multiple rounds of survey assessments of the same individuals are generally needed to estimate longitudinal adherence measures. Estimating longitudinal measures of adherence from repeated rounds of survey data can be challenging because these data take the form of discrete snapshots of individuals’ screening participation. The snapshots can be combined to reconstruct each individual’s screening history in order to determine how long he or she was adherent and when he or she first became non-adherent. Individuals may experience events that remove them from the target population between successive waves of the survey, such as a cancer diagnosis; they should be censored at the time of these events, if available, or otherwise at the last prior wave of the survey. Surveillance data that follow a cohort longitudinally lend themselves more naturally to answering questions about longitudinal adherence.
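The sketch below turns one person's repeated survey waves into the (time, event) pair used by survival methods, applying the censoring rule just described: censor at a known exit event, otherwise at the last wave before the person left the target population. The status labels are hypothetical, people are assumed to enter adherent at the first wave, and timing non-adherence at the first wave that reports it is an approximation, since the true transition lies somewhere between waves (interval censoring).

```python
from typing import Optional, Sequence, Tuple


def waves_to_survival(waves: Sequence[Tuple[float, str]],
                      exit_time: Optional[float] = None) -> Tuple[float, int]:
    """Turn one person's survey waves into (time since first wave, event).

    waves: (wave time, status) in time order; status is "adherent", "non_adherent",
    or "exited" (left the target population at an unknown time).
    exit_time: time of a population-exit event such as a cancer diagnosis, if known.
    event = 1 means non-adherence was observed; event = 0 means right censored.
    """
    start = waves[0][0]
    last_seen = start
    for t, status in waves:
        if exit_time is not None and t > exit_time:
            return exit_time - start, 0   # censor at the known exit time
        if status == "exited":
            return last_seen - start, 0   # exit time unknown: censor at last prior wave
        if status == "non_adherent":
            return t - start, 1           # first wave at which non-adherence is seen
        last_seen = t
    return last_seen - start, 0           # still adherent at the final wave


print(waves_to_survival([(0, "adherent"), (2, "adherent"), (4, "non_adherent")]))  # (4, 1)
print(waves_to_survival([(0, "adherent"), (2, "adherent"), (4, "adherent")], exit_time=3))  # (3, 0)
print(waves_to_survival([(0, "adherent"), (2, "exited")]))  # (0, 0)
```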

Longitudinal adherence questions about length of time adherent or age when an individual first becomes non-adherent can be answered using survival methods. It is necessary to define a starting point, such as the beginning of screening eligibility, for each individual and then follow them forward longitudinally until they become non-adherent or are censored from the cohort. The timescale for such analyses could be age or time since first eligibility. Censoring could be due to a variety of causes, including leaving the study catchment area, death, cancer diagnosis, or becoming ineligible for screening. The length of time people remain adherent to screening guidelines can be summarized in terms of the median time adherent using survival methods to account for right censoring. Comparative questions can be answered descriptively by comparing the median length of adherence or inferentially using regression models for right censored data such as the Cox proportional hazards model. Over any period of interest, the cumulative probability of having been screened can also be estimated using survival approaches.
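A sketch of how surveillance test dates could be converted into time from the start of eligibility to first non-adherence, assuming a person becomes non-adherent once the coverage from the most recent test lapses without a new test. The coverage `interval` and the `initial_grace` period allowed for a first test after becoming eligible are assumptions the analyst must set from the relevant guideline; the values in the example are hypothetical. The resulting (time, event) pairs can then be analyzed with Kaplan–Meier or Cox models.

```python
from typing import Sequence, Tuple


def time_to_nonadherence(start: float, end: float, test_times: Sequence[float],
                         interval: float, initial_grace: float) -> Tuple[float, int]:
    """(time from eligibility start, event) for one person, e.g. on an age timescale.

    interval: coverage provided by one test (assumed);
    initial_grace: time allowed after becoming eligible to get a first test (assumed);
    end: end of observation (death, cancer, leaving the catchment area, data cutoff).
    event = 1 if coverage lapsed before `end`; event = 0 if censored at `end`.
    """
    covered_until = start + initial_grace
    for t in sorted(test_times):
        if t > end:
            break                               # tests after censoring are not observed
        if t > covered_until:
            return covered_until - start, 1     # coverage lapsed before this test
        covered_until = max(covered_until, t + interval)
    if covered_until < end:
        return covered_until - start, 1         # coverage lapsed after the last test
    return end - start, 0                       # still covered when follow-up ended


# Hypothetical biennial test with a 1-year grace period after eligibility at age 50.
print(time_to_nonadherence(50, 60, [50.5, 52.3, 56.0], interval=2, initial_grace=1))  # about (4.3, 1)
print(time_to_nonadherence(50, 55, [50.5, 52.3, 54.0], interval=2, initial_grace=1))  # (5, 0)
```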

Other questions about longitudinal adherence may focus on the total time spent adherent to screening guidelines, rather than the length of time prior to the first instance of non-adherence. This could be summarized with a variety of different measures including the mean or proportion of time eligible for screening spent adherent to guidelines (i.e., covered time). The mean time adherent will typically not be a useful measure for summarizing adherence since it does not account for differences between individuals in the total amount of time eligible for screening and under observation. The proportion of eligible time spent adherent can be computed for each individual and then summarized across individuals as the mean proportion of time spent adherent. In interpreting the percent of time spent adherent, it is important to consider the average length of follow-up. Being adherent for 1 year is easier than being adherent for 10 years, especially when a screening test offers several years of coverage (e.g., mammography). We therefore suggest that reports of covered time also present the mean or median follow-up (observation) time in each group being studied.
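Covered time can be computed per person by merging the coverage windows of all tests, clipping them to the observation window, and dividing by the length of that window; the per-person proportions are then averaged. The sketch below assumes a single fixed coverage `interval` per test (2 years in the example, a hypothetical value) and, following the suggestion above, returns mean follow-up alongside the mean proportion covered. The data are invented.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple


def proportion_covered(entry: float, stop: float, test_times: Sequence[float],
                       interval: float) -> float:
    """Share of the observation window [entry, stop] covered by at least one test."""
    spans = sorted((max(t, entry), min(t + interval, stop)) for t in test_times)
    covered = 0.0
    cur_start = cur_end = None
    for s, e in spans:
        if e <= s:
            continue                              # coverage lies outside the window
        if cur_end is None or s > cur_end:
            if cur_end is not None:
                covered += cur_end - cur_start
            cur_start, cur_end = s, e             # start a new covered stretch
        else:
            cur_end = max(cur_end, e)             # overlapping coverage counted once
    if cur_end is not None:
        covered += cur_end - cur_start
    return covered / (stop - entry)


def summarize_covered_time(people: Sequence[Tuple[float, float, Sequence[float]]],
                           interval: float) -> Dict[str, float]:
    """Mean proportion of eligible time covered, reported with mean follow-up."""
    return {
        "mean_proportion_covered": mean(proportion_covered(a, b, ts, interval)
                                        for a, b, ts in people),
        "mean_follow_up": mean(b - a for a, b, _ in people),
    }


# (entry, end of follow-up, test times) in years; a test before entry can still cover time.
people = [(0, 4, [0, 2]), (0, 10, [1, 5]), (0, 2, [-1])]
summary = summarize_covered_time(people, interval=2)
print(summary)  # mean proportion covered about 0.633; mean follow-up about 5.33 years
```

The short third record (covered for half of a 2-year window) illustrates why the proportion covered should be read together with follow-up time.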

Summary

Adherence is an important concept in cancer screening and is becoming increasingly important as screening recommendations proliferate. Measurement of adherence can help predict long-term effectiveness of screening interventions, explain differences in screening outcomes across settings, and identify areas for improvement or intervention. There are many subtle variations in questions that one can ask about screening adherence, each with several measures that can be computed. Estimating these measures requires careful consideration of the data sources, inclusion criteria, and outcome definition. Censoring often will be present and must be accounted for. Consistent definitions and estimation of adherence to cancer screening guidelines will facilitate comparison across studies, tests, and settings and help elucidate areas for future research.

Acknowledgments

We thank Ms. Melissa Anderson, Dr. Noel Weiss, Dr. Sally Vernon, and Dr. Aruna Kamineni for comments on previous versions of the manuscript and Dr. Karen Wernli for helpful discussions during the early phases of this work.

Funding:

This study was funded by the National Cancer Institute at the National Institutes of Health, Award Numbers: U54CA163261 (Chubak), R03CA182986 (Hubbard), and UC2CA148576 (Buist and Doubeni). The content is solely the responsibility of the authors and does not necessarily express the views of the National Institutes of Health.

Footnotes

Declarations: The authors have no conflicts of interest in the past three years to report.

References

- 1.Bulliard JL, Garcia M, Blom J, Senore C, Mai V, Klabunde C. Sorting out measures and definitions of screening participation to improve comparability: The example of colorectal cancer. Eur J Cancer. 2013 doi: 10.1016/j.ejca.2013.09.015. [DOI] [PubMed] [Google Scholar]

- 2.Screening and Immunisations team, Health & Social Care Information Centre. Breast Screening Programme, England, Statistics for 2013–14. London: Government Statistical Service; 2015. [Google Scholar]

- 3.Sabatino SA, White MC, Thompson TD, Klabunde CN. Cancer screening test use - United States, 2013. MMWR Morb Mortal Wkly Rep. 2015;64(17):464–8. [PMC free article] [PubMed] [Google Scholar]

- 4.Centers for Disease Control and Prevention. 2013 NHIS Questionnaire - Sample Adult. Atlanta, GA: Centers for Disease Control and Prevention; 2014. [updated May 29, 2014; cited June 26 2015]; Available from: ftp://ftp.cdc.gov/pub/Health_Statistics/NCHS/Survey_Questionnaires/NHIS/2013/English/qadult.pdf. [Google Scholar]

- 5.Goulard H, Boussac-Zarebska M, Ancelle-Park R, Bloch J. French colorectal cancer screening pilot programme: results of the first round. Journal of Medical Screening. 2008;15(3):143–8. doi: 10.1258/jms.2008.008004. [DOI] [PubMed] [Google Scholar]

- 6.National Screening Unit. The National Cervical Screening Programme (NCSP) Register. Wellington, New Zealand: New Zealand Ministry of Health; [updated December 3, 2015; cited November 23 2015]; Available from: https://www.nsu.govt.nz/health-professionals/national-cervical-screening-programme/ncsp-register. [Google Scholar]

- 7.Metadata Online Registry. Cervical screening data 2012–2013. Canberra, Australia: Australian Institute of Health and Welfare; [updated February 6, 2014; cited November 23 2015]; Available from: http://meteor.aihw.gov.au/content/index.phtml/itemId/569669. [Google Scholar]

- 8.Metadata Online Registry. BreastScreen Australia data 2012–2013. Canberra, Australia: Australian Institute of Health and Welfare; [updated February 6, 2014; cited November 23 2015]; Available from: http://meteor.aihw.gov.au/content/index.phtml/itemId/569662. [Google Scholar]

- 9.Green BB, Wang CY, Anderson ML, Chubak J, Meenan RT, Vernon SW, Fuller S. An automated intervention with stepped increases in support to increase uptake of colorectal cancer screening: a randomized trial. Ann Intern Med. 2013;158(5 Pt 1):301–11. doi: 10.7326/0003-4819-158-5-201303050-00002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Vogt TM, Feldstein AC, Aickin M, Hu WR, Uchida AR. Electronic Medical Records and Prevention Quality: The Prevention Index. American Journal of Preventive Medicine. 2007;33(4):291–6. doi: 10.1016/j.amepre.2007.05.011. [DOI] [PubMed] [Google Scholar]

- 11.Ricardo-Rodrigues I, Jimenez-Garcia R, Hernandez-Barrera V, Carrasco-Garrido P, Jimenez-Trujillo I, Lopez-de-Andres A. Adherence to and predictors of participation in colorectal cancer screening with faecal occult blood testing in Spain, 2009–2011. Eur J Cancer Prev. 2014. doi: 10.1097/CEJ.0000000000000088.

- 12.Pornet C, Denis B, Perrin P, Gendre I, Launoy G. Predictors of adherence to repeat fecal occult blood test in a population-based colorectal cancer screening program. Br J Cancer. 2014. doi: 10.1038/bjc.2014.507.

- 13.Kearns B, Whyte S, Chilcott J, Patnick J. Guaiac faecal occult blood test performance at initial and repeat screens in the English Bowel Cancer Screening Programme. Br J Cancer. 2014. doi: 10.1038/bjc.2014.469.

- 14.de Munck L, Kwast A, Reiding D, de Bock GH, Otter R, Willemse PH, Siesling S. Attending the breast screening programme after breast cancer treatment: a population-based study. Cancer Epidemiol. 2013;37(6):968–72. doi: 10.1016/j.canep.2013.09.003.

- 15.Kapidzic A, Grobbee EJ, Hol L, van Roon AH, van Vuuren AJ, Spijker W, Izelaar K, van Ballegooijen M, Kuipers EJ, van Leerdam ME. Attendance and Yield Over Three Rounds of Population-Based Fecal Immunochemical Test Screening. Am J Gastroenterol. 2014. doi: 10.1038/ajg.2014.168.

- 16.Lo SH, Halloran S, Snowball J, Seaman H, Wardle J, von Wagner C. Colorectal cancer screening uptake over three biennial invitation rounds in the English bowel cancer screening programme. Gut. 2014. doi: 10.1136/gutjnl-2013-306144.

- 17.Long M, Lance T, Robertson D, Kahwati L, Kinsinger L, Fisher D. Colorectal Cancer Testing in the National Veterans Health Administration. Digestive Diseases and Sciences. 2012;57(2):288–93. doi: 10.1007/s10620-011-1895-4.

- 18.Martin-Lopez R, Hernandez-Barrera V, de Andres AL, Carrasco-Garrido P, de Miguel AG, Jimenez-Garcia R. Trend in cervical cancer screening in Spain (2003–2009) and predictors of adherence. Eur J Cancer Prev. 2012;21(1):82–8. doi: 10.1097/CEJ.0b013e32834a7e46.

- 19.Sinicrope PS, Goode EL, Limburg PJ, Vernon SW, Wick JB, Patten CA, Decker PA, Hanson AC, Smith CA, Beebe TJ, Sinicrope FA, Lindor NM, Brockman TA, Melton LJ, Petersen GM. A population-based study of prevalence and adherence trends in average risk colorectal cancer screening, 1997–2008. Cancer Epidemiology Biomarkers & Prevention. 2012;21(2):347–50. doi: 10.1158/1055-9965.EPI-11-0818.

- 20.Worthington C, McLeish K, Fuller-Thomson E. Adherence over time to cervical cancer screening guidelines: insights from the Canadian National Population Health Survey. J Womens Health (Larchmt). 2012;21(2):199–208. doi: 10.1089/jwh.2010.2090.

- 21.Andrade SE, Kahler KH, Frech F, Chan KA. Methods for evaluation of medication adherence and persistence using automated databases. Pharmacoepidemiol Drug Saf. 2006;15(8):565–74; discussion 575–7. doi: 10.1002/pds.1230.

- 22.Hess LM, Raebel MA, Conner DA, Malone DC. Measurement of adherence in pharmacy administrative databases: a proposal for standard definitions and preferred measures. Ann Pharmacother. 2006;40(7–8):1280–88. doi: 10.1345/aph.1H018.

- 23.Moyer VA; U.S. Preventive Services Task Force. Screening for lung cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2014;160(5):330–8. doi: 10.7326/M13-2771.