Abstract

We propose numerical algorithms for solving large deformation diffeomorphic image registration problems. We formulate the nonrigid image registration problem as a problem of optimal control. This leads to an infinite-dimensional partial differential equation (PDE) constrained optimization problem. The PDE constraint consists, in its simplest form, of a hyperbolic transport equation for the evolution of the image intensity. The control variable is the velocity field. Tikhonov regularization on the control ensures well-posedness. We consider standard smoothness regularization based on H1- or H2-seminorms. We augment this regularization scheme with a constraint on the divergence of the velocity field (control variable) rendering the deformation incompressible (Stokes regularization scheme) and thus ensuring that the determinant of the deformation gradient is equal to one, up to the numerical error. We use a Fourier pseudospectral discretization in space and a Chebyshev pseudospectral discretization in time. The latter allows us to reduce the number of unknowns and enables the time-adaptive inversion for nonstationary velocity fields. We use a preconditioned, globalized, matrix-free, inexact Newton–Krylov method for numerical optimization. A parameter continuation is designed to estimate an optimal regularization parameter. Regularity is ensured by controlling the geometric properties of the deformation field. Overall, we arrive at a black-box solver that exploits computational tools that are precisely tailored for solving the optimality system. We study spectral properties of the Hessian, grid convergence, numerical accuracy, computational efficiency, and deformation regularity of our scheme. We compare the designed Newton–Krylov methods with a globalized Picard method (preconditioned gradient descent). We study the influence of a varying number of unknowns in time. 
The reported results demonstrate excellent numerical accuracy, guaranteed local deformation regularity, and computational efficiency with an optional control on local mass conservation. The Newton–Krylov methods clearly outperform the Picard method if high accuracy of the inversion is required. Our method provides equally good results for stationary and nonstationary velocity fields for two-image registration problems.

Keywords: large deformation diffeomorphic image registration, optimal control, PDE constrained optimization, Stokes regularization, Newton–Krylov method, pseudospectral Galerkin method, Stokes solver

1. Introduction and motivation

Image registration has become a key area of research in computer vision and (medical) image analysis [54, 65]. The task is to establish spatial correspondence between two images, mR : Ω̄ → R, x ↦ mR(x), and mT : Ω̄ → R, x ↦ mT(x), with compact support on some domain Ω := (−π, π)^d ⊂ R^d via a mapping y : R^d → R^d, x ↦ y(x), such that mT ∘ y ≈ mR [22, 27], where ∘ denotes function composition. Here, mT is referred to as the template image (the image to be registered), mR is referred to as the reference image (the fixed image), and d ∈ {2, 3} is the data dimensionality. We limit ourselves to nonrigid image registration. The search for y is typically formulated as a variational optimization problem [27, 54],

$$\min_{y}\;\frac{1}{2}\,\bigl\|m_T\circ y - m_R\bigr\|_{L^2(\Omega)}^2 + \beta\,\mathcal{S}[y]. \tag{1.1}$$

The proximity between mR and mT ∘ y is measured on the basis of an L2-distance (other measures can be considered [54, 65]). The functional 𝒮 in (1.1) is a regularization model that is introduced to overcome ill-posedness. The regularization parameter β > 0 weights the contribution of 𝒮. Various regularization models 𝒮 have been considered (see [13, 14, 19, 22, 25, 26, 28, 42, 63] for examples).

A key requirement in many image registration problems is that the mapping y is a diffeomorphism [4, 14, 23, 68, 69, 70]. This translates into the necessary condition det(∇y) > 0, where ∇y ∈ R^{d×d} is the deformation gradient (also referred to as the Jacobian matrix). An intuitive approach is to explicitly safeguard against nondiffeomorphic mappings y by adding a constraint to (1.1) [39, 48, 61]. Another strategy is to perform the optimization on the manifold of diffeomorphic mappings [2, 3, 4, 44, 52, 53, 69, 70]. The latter models, in general, do not control geometric properties of the deformation field and may result in fields that are close to being nondiffeomorphic. Further, for certain image registration problems, restricting the search space to the manifold of diffeomorphisms does not necessarily guarantee that y is physically meaningful. Some applications may benefit from extending these types of models by introducing additional constraints. One example of such a constraint is incompressibility (i.e., enforcing det(∇y) = 1; see also [10, 16, 52, 62]). Incompressibility is a requirement that might be of interest in medical image computing applications. If required, we can modify the incompressibility constraint to control the deviation of det(∇y) from one. This will be the topic of a follow-up paper, in which we will extend our formulation. Here, we focus on the algorithmic issues of incorporating the incompressibility constraint. Furthermore, we remark that our optimal control formulation can naturally be extended to account for more complex constraints on the velocity field, for example, constraints related to biophysical models (examples of such models can be found in [31, 40, 45, 51, 66]).

In what follows, we outline our method (see section 1.1), summarize the key contributions (see section 1.2), list the limitations of our method (see section 1.3), and relate our work to existing approaches (see section 1.4).

1.1. Outline of the method

We assume the images are compactly supported functions, defined on the open set Ω := (−π, π)^d ⊂ R^d with boundary ∂Ω and closure Ω̄ := Ω ∪ ∂Ω. The deformation is modeled in an Eulerian frame of reference. We introduce a pseudotime variable t ∈ [0, 1] (i.e., a unit time horizon) and solve for a velocity field v : Ω̄ × [0, 1] → R^d, (x, t) ↦ v(x, t), as follows:

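$$\min_{v}\;\mathcal{J}[v] := \frac{1}{2}\,\bigl\|m_1 - m_R\bigr\|_{L^2(\Omega)}^2 + \mathcal{S}[v], \tag{1.2a}$$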

where

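$$\begin{aligned}
\partial_t m + v\cdot\nabla m &= 0 && \text{in } \Omega\times(0,1],\\
m &= m_T && \text{in } \Omega\times\{0\},\\
\gamma\,\nabla\cdot v &= 0 && \text{in } \Omega\times[0,1],
\end{aligned} \tag{1.2b}$$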

with periodic boundary conditions on ∂Ω. The parameter γ ∈ {0, 1} in (1.2b) is introduced to enable or disable the divergence constraint on the control v (third equation in (1.2b)). In PDE constrained optimization theory, m is referred to as the state variable and v as the control variable. The first equation in (1.2b), in combination with its initial condition (second equation), models the flow of mT subject to v, where m : Ω̄ × [0, 1] → R, (x, t) ↦ m(x, t), represents the transported intensities of mT. Accordingly, the final state m1 := m(·, 1), m1 : Ω̄ → R, x ↦ m1(x), corresponds to mT ∘ y in (1.1). We measure the proximity between the deformed template image m1 and the reference image mR in terms of an L2-distance. Once we have found v, we can compute y from v as a postprocessing step (this is also true for the deformation gradient ∇y; see Appendix D for details). The third equation in (1.2b) constrains the divergence of v and guarantees that the flow is incompressible (Stokes flow), i.e., that volume is conserved. This is equivalent to enforcing det(∇y) = 1 (see [33, p. 77ff.]).
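The equivalence between a divergence-free velocity and a unit Jacobian determinant can be made explicit with a standard identity (Jacobi's formula applied to the flow map). We state it here as a textbook derivation under the assumption that v is smooth, not as a reproduction of the argument in [33]. Let X(ξ, t) denote the position at time t of the material point that started at ξ, and let J be the determinant of its deformation gradient:

```latex
\begin{aligned}
\partial_t X(\xi,t) &= v\bigl(X(\xi,t),t\bigr), \qquad X(\xi,0) = \xi,\\
J(\xi,t) &:= \det \nabla_\xi X(\xi,t), \qquad J(\xi,0) = 1,\\
\partial_t J(\xi,t) &= (\nabla\cdot v)\bigl(X(\xi,t),t\bigr)\,J(\xi,t)
\;\Longrightarrow\;
J(\xi,t) = \exp\Bigl(\int_0^t (\nabla\cdot v)\bigl(X(\xi,s),s\bigr)\,ds\Bigr).
\end{aligned}
```

Because the exponential is positive, J cannot change sign for smooth v; if ∇·v ≡ 0, the exponent vanishes and J ≡ 1. The inverse map then also has unit Jacobian determinant, so det(∇y) = 1 irrespective of whether y denotes the forward or the backward flow map.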

We use either an H1- or an H2-seminorm for the smoothness regularization 𝒮 (resulting in Laplacian or biharmonic vector operators, respectively; see section 3.1). We report results for standard H1- and H2-regularization (neglecting ∇·v = 0, i.e., γ = 0 in (1.2b)) and for a Stokes regularization scheme (incompressible flow; enforcing ∇ ·v = 0, i.e., γ = 1 in (1.2b)).

In section 4 we will see that the optimality condition for (1.2) is a system of space-time nonlinear multicomponent PDEs for the transported image m, the velocity v, and the adjoint variables for the transport and the divergence condition. Efficiently solving this system is a significant challenge. For example, when we include the incompressibility constraint, the equation for the velocity ends up being a linear Stokes equation.

We solve for the first-order optimality conditions using a globalized, matrix-free, preconditioned Newton–Krylov method for the Schur complement of the velocity v (a linearized Stokes problem driven by the image mismatch). We first derive the optimality conditions and then discretize using a pseudospectral discretization in space with a Fourier basis. We use a second-order Runge–Kutta method in time. The preconditioner for the Newton–Krylov schemes is based on the exact spectral inverse of the second variation of 𝒮.

1.2. Contributions

The fundamental contributions are as follows:

- We design a numerical scheme for (1.2) with the following key features:
  - We use an adjoint-based Newton-type solver.
  - We provide a fast Stokes solver.
  - We introduce a spectral Galerkin method in time.
  - We design a parameter continuation method for automatically selecting the regularization parameter β.
  - Our framework guarantees deformation regularity.¹
- We provide a numerical study for the designed framework. We compare a globalized Picard method (preconditioned gradient descent) to an inexact Newton–Krylov and a Gauss–Newton–Krylov method. We report results for synthetic and real-world problems. We study spectral properties, grid convergence, and numerical accuracy of the proposed scheme. We study the effects of compressible (plain H1- and H2-regularization) and incompressible (Stokes regularization) deformation models. We report results for a varying number of unknowns in time (i.e., inverting for stationary and nonstationary velocity fields).

The numerical discretization (pseudospectral) allows for an efficient solution of the Stokes-like equations by eliminating the pressure (i.e., the adjoint variable for the incompressibility constraint ∇ ·v = 0 in (1.2b)).
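For periodic fields, eliminating the pressure amounts to projecting the velocity onto its divergence-free part, which in Fourier space is a pointwise multiplication by I − kkᵀ/|k|² (the Leray projector). The NumPy sketch below illustrates this in two dimensions. It is a standard construction given under our own assumptions (a 2π-periodic n1 × n2 nodal grid, hypothetical function names), not necessarily the elimination implemented in section 4.3.4.

```python
import numpy as np

def wavenumbers(n1, n2):
    """Integer wave numbers (as 2D arrays) for a 2*pi-periodic n1 x n2 nodal grid."""
    k1 = np.fft.fftfreq(n1, d=1.0 / n1)
    k2 = np.fft.fftfreq(n2, d=1.0 / n2)
    return np.meshgrid(k1, k2, indexing="ij")

def spectral_divergence(v1, v2):
    """Divergence of a periodic 2D vector field, evaluated in Fourier space."""
    K1, K2 = wavenumbers(*v1.shape)
    div_hat = 1j * K1 * np.fft.fft2(v1) + 1j * K2 * np.fft.fft2(v2)
    return np.real(np.fft.ifft2(div_hat))

def leray_project(v1, v2):
    """Divergence-free part of (v1, v2): w_hat(k) = (I - k k^T / |k|^2) v_hat(k).

    This removes the gradient component of v, i.e., exactly the part that a
    pressure gradient could balance for periodic fields."""
    K1, K2 = wavenumbers(*v1.shape)
    ksq = K1**2 + K2**2
    ksq[0, 0] = 1.0  # k = 0: K1 = K2 = 0, so the mean is left unchanged
    v1_hat, v2_hat = np.fft.fft2(v1), np.fft.fft2(v2)
    k_dot_v = (K1 * v1_hat + K2 * v2_hat) / ksq
    w1 = np.real(np.fft.ifft2(v1_hat - K1 * k_dot_v))
    w2 = np.real(np.fft.ifft2(v2_hat - K2 * k_dot_v))
    return w1, w2

# Band-limited test field on [-pi, pi)^2: divergence-free part + gradient part
n = 32
x = -np.pi + 2.0 * np.pi * np.arange(n) / n
X1, X2 = np.meshgrid(x, x, indexing="ij")
v1 = -2.0 * np.sin(X1) * np.cos(2 * X2) - 2.0 * np.sin(2 * X1) * np.sin(X2)
v2 = np.cos(X1) * np.sin(2 * X2) + np.cos(2 * X1) * np.cos(X2)
w1, w2 = leray_project(v1, v2)
max_div = np.max(np.abs(spectral_divergence(w1, w2)))
```

The test field is the sum of a divergence-free part (from the stream function sin x₁ sin 2x₂) and a gradient part (∇ of cos 2x₁ sin x₂), so the projection should return the former up to round-off. For even grid sizes, data with content at the Nyquist frequency need extra care (that mode is commonly zeroed); the band-limited example avoids this issue.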

The inf-sup condition for pressure spaces [12, p. 200ff.] is not an issue with our scheme.² In fact, for smooth images, our scheme is spectrally accurate in space and second-order accurate in time. We will see that we can numerically enforce incompressibility up to almost machine precision. Also, our scheme allows for efficient preconditioning of the Hessian: at the cost of a diagonal scaling we obtain a problem whose condition number is bounded independently of the spatial mesh size (it does, however, still depend on β; see section 4.2.1).

Overall, we demonstrate that the designed framework (i) is efficient and accurate, (ii) features a precise control of the deformation regularity, and (iii) does not require manual tuning of parameters.

1.3. Limitations

The main limitations of our method are as follows:

The considered model assumes a constant illumination of mR and mT (a consequence of the transport equation and the L2-distance in (1.2)). Therefore, it is (in its current state) not directly applicable to multimodal registration problems. Nevertheless, let us remark that the L2-distance is commonly used in practice [4, 16, 28, 41, 44, 48, 57, 71].

The efficient use of a Fourier discretization for the PDEs requires periodic boundary conditions. If the images are not periodic, we artificially introduce periodic boundary conditions by mollification and zero padding.

1.4. Related work

Due to the vast body of literature, it is not possible to provide a comprehensive review of numerical methods for nonrigid image registration. Background on image registration formulations and numerics can be found in [27, 54, 65]. We limit the discussion to approaches that (i) model the deformation via a velocity field v, (ii) view image registration as a problem of optimal control, and/or (iii) constrain v to be divergence free (i.e., introduce a mass conservation equation as an additional constraint).

Fluid mechanical models have been introduced [18, 19] to overcome limitations of small deformation models [13, 25, 26]. The work in [18, 19] has been extended in [4, 23, 53, 68] using concepts from differential geometry. This class of approaches is referred to as large deformation diffeomorphic metric mapping (LDDMM). Under the assumption that v is adequately smooth, it is guaranteed that y is a diffeomorphism [23, 68]. The associated smoothness requirements are enforced by the regularization model 𝒮 (typically an H2-norm) [3, 4]. The optimization is performed on the space of nonstationary velocity fields [4]. To reduce the number of unknowns, it has been suggested to perform the optimization either on the space of stationary velocity fields [1, 2, 44] or with respect to an initial momentum that entirely defines the flow of the map y [3, 71, 74].

The idea of parameterizing a diffeomorphism y via a stationary velocity field [1] has also been introduced to the demons registration framework [52, 69, 70]. Here, optimization is performed in a sequential fashion, alternating between updates resulting from the distance measure (forcing term) and the application of a smoothing operator to regularize the problem (typically through Gaussian smoothing [67, 69, 70]). This scheme resembles the Picard scheme we discuss in our paper, but it is unclear how one couples it with line search or trust region techniques.

Approaches that more closely reflect an optimal control PDE constrained optimization formulation (1.2) are described in [10, 16, 41, 48, 49, 57, 62, 71]. The model in [16] is equivalent to (1.2). The model in [62] follows the traditional optical flow formulation [46]. The conceptual difference between our formulation and the latter is that in optical flow, the transport equation constraint appears in the objective (see, e.g., [46, 47, 62]) and is therefore only fulfilled approximately in an L2 least squares sense. We treat it as a hard constraint instead. In [57] an optimal mass transport formulation is described, which is based on the Monge-Kantorovich problem. The formulations in [41, 71] do not account for incompressibility. The optical flow approach in [10] treats incompressibility as an L2-penalty. An optimal control formulation for a constant-in-time velocity field was proposed in [48, 49], in which the divergence of the velocity field is penalized along with smoothness constraints.

What sets our work apart are the numerical algorithms and the discretization scheme. Almost all existing efforts on large deformation diffeomorphic image registration that are closely related to our optimal control formulation exclusively use first-order information for numerical optimization [2, 4, 10, 16, 18, 19, 41, 44, 48, 49, 57, 62, 71, 74]. We use second-order information. The only work³ in the context of large deformation diffeomorphic image registration that, to our knowledge, uses second-order information is [3]. The model in [3] is based on the LDDMM framework [4, 23, 48, 49, 53, 44, 68]. As in [71], the inversion is performed with respect to an initial momentum. No additional constraints on v are considered. Another difference is that we use a Galerkin method in time to reduce the number of unknowns. This allows us to invert for stationary [1, 2, 44, 52, 69, 70] as well as time-varying [4, 10, 16, 41] velocity fields. Nothing changes in our formulation other than the number of unknowns. Furthermore, we globalize our methods with a line search strategy (i.e., we guarantee a sufficient decrease of the objective 𝒥). This is a standard, yet important, ingredient for guaranteeing convergence, which is often not accounted for [2, 16, 17, 41, 52, 69, 70].

Like [10, 16, 48, 49, 52, 62], we consider incompressibility as an optional constraint (see (1.2b)). Operating with divergence-free velocity fields is equivalent to enforcing det(∇y) = 1 up to numerical accuracy (see [33, p. 77ff.]; other formulations for controlling det(∇y) can be found in [14, 36, 37, 39, 50, 55, 58, 60, 61, 64, 73]). Unlike [10, 48, 49], which penalize the divergence of the velocity, we enforce the constraint exactly. We are not arguing that this approach is better per se. The use of penalties is adequate unless one has reasons to insist on an incompressible velocity field. In that case, a penalty method has to drive the penalty weight toward infinity to enforce ∇·v = 0 accurately, which results in ill-conditioning.

Finally, our pseudospectral formulation in space allows us to resolve several numerical difficulties related to the incompressibility constraint. For example, the inf-sup condition for pressure spaces is not an issue with our scheme. Regarding accuracy, for smooth images, our scheme is spectrally accurate in space and second-order accurate in time. We do not have to use different discretization models [16, 62] for solving the individual subsystems of the mixed-type (hyperbolic-elliptic) optimality conditions. Since we use second-order explicit time stepping in combination with Fourier spectral methods, we have at hand a scheme that displays minimal numerical diffusion and does not require flux limiters [10, 16, 41, 71]. As we will see, the conditioning of the Hessian that appears in our Newton–Krylov scheme can be quite bad. Although the literature on preconditioners for PDE constrained optimization problems is quite rich (e.g., [6, 10, 8, 34]), none of these methods directly applies to our formulation. Developing effective preconditioning schemes for our formulation is ongoing work in our group.

2. Outline

In section 3 the mathematical model is developed. The numerical strategies are described in section 4. In particular, we specify (i) the optimality conditions (see section 4.1), (ii) strategies for numerical optimization (see section 4.2), and (iii) implementation details (see section 4.3). Numerical experiments on synthetic and real-world data are reported in section 5. Final remarks can be found in section 6.

3. Continuous problem formulation

We provide a summary of the basic notation in Table 1. The original problem formulation is stated in section 1.1. The only missing building blocks are the choices for 𝒮 in (1.2a). This is what we discuss next. Note that we neglect any technicalities with respect to the associated function spaces; we assume that the considered functions are adequately smooth (i.e., sufficiently many derivatives exist and are bounded).

Table 1.

Notation (frequently used acronyms and symbols).

| Notation | Description |
|---|---|
| GN | Gauss–Newton |
| KKT system | Karush–Kuhn–Tucker system |
| PCG | preconditioned conjugate gradient method |
| PDE | partial differential equation |
| PDE solve | solution of hyperbolic PDEs of optimality systems (4.1) and (4.3) |
| mR | reference (fixed) image (mR : R^d → R) |
| mT | template image (image to be registered; mT : R^d → R) |
| y | mapping (deformation; y : R^d → R^d) |
| v | velocity field (control variable; v : R^d × [0, 1] → R^d) |
| m | state variable (transported image; m : R^d × [0, 1] → R) |
| m1 | state variable at t = 1 (deformed template image; m1 : R^d → R) |
| λ | adjoint variable (transported mismatch; λ : R^d × [0, 1] → R) |
| f | body force (drives the registration; f : R^d × [0, 1] → R^d) |
| F1 | deformation gradient (tensor field) at t = 1 (F1 : R^d → R^{d×d}) |
| 𝒥 | objective functional |
| 𝒮 | regularization functional |
| 𝒜 | differential operator (first and second variation of 𝒮) |
| β | regularization parameter |
| γ | parameter that enables (γ = 1) or disables (γ = 0) the incompressibility constraint |
| nt | number of time points (discretization) |
| nc | number of coefficient fields (spectral Galerkin method in time) |
| nx | number of grid points (discretization; $n_x = \prod_{i=1}^{d} n_x^i$) |
| g | reduced gradient (first variation of Lagrangian with respect to v) |
| ℋ | reduced Hessian (second variation of Lagrangian with respect to v) |

3.1. Regularization models

In contrast to [10] we do not explicitly enforce continuity in time. We relax the model to an L2-integrability instead (see (1.2a)). This relaxation still yields a velocity field that varies smoothly in time [16].

Quadratic smoothing regularization models are commonly used in nonrigid image registration [27, 54, 56, 65]. They can be defined as

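$$\mathcal{S}[v] := \frac{\beta}{2}\int_0^1 \bigl\langle \mathcal{B}[v], \mathcal{B}[v] \bigr\rangle_{L^2(\Omega)}\,dt, \tag{3.1}$$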

where ℬ is a differential operator that (together with its dual) defines the function space 𝒲 and β > 0 is a regularization parameter that balances the contribution of 𝒮.

As images are functions of bounded variation, regularity requirements on v ∈ 𝒱, 𝒱 := L2([0, 1]; 𝒲) (i.e., the choice of 𝒲 in (3.1)) have to be considered with care (for an analytical result see [16]). Experimental analysis suggests that an H1-seminorm is appropriate if incompressibility is considered (i.e., γ = 1 in (1.2b); see also [16]). Thus,

$$\mathcal{B}[v] := \nabla v.$$

This choice is motivated by continuum mechanics and yields a viscous model of linear Stokes flow (see section 4.1; Stokes regularization). If we neglect the incompressibility constraint (i.e., γ = 0 in (1.2b)), we use a vectorial Laplacian operator,

$$\mathcal{B}[v] := \Delta v$$

instead. This choice is motivated by the fact that H2-norm-based quadratic regularization is commonly used in large deformation diffeomorphic image registration [4, 41, 44].

4. Numerics

We describe the numerical methods used to solve (1.2) next. Whenever discretized quantities are considered, a superscript h is added to the continuous variables and operators (e.g., the discretized representation of v is denoted by vh). Likewise, if we refer to a discrete variable at a particular iteration, we add the iteration index as a subscript (e.g., vh at iteration k is denoted by $v^h_k$).

We discretize the data on a nodal grid in space and time. The number of spatial grid points is denoted by $n_x = \prod_{i=1}^{d} n_x^i \in \mathbb{N}$ with spatial step size $h_x = (h_x^1, \dots, h_x^d)$, $h_x^i > 0$. The number of time points is denoted by nt ∈ N with step size ht = 1/nt, ht > 0.

We use the method of Lagrange multipliers to numerically solve (1.2) with Lagrange multipliers λ : Ω̄ ×[0, 1] → R, (x, t) ↦ λ(x, t) (for the hyperbolic transport equation in (1.2b)), and p : Ω̄ ×[0, 1] → R, (x, t) ↦ p(x, t) (pressure; for the incompressibility constraint in (1.2b)). We use an optimize-then-discretize approach (for a discussion on advantages and disadvantages see [32, p. 57ff.]). The resulting optimality conditions are described next.

4.1. Optimality conditions

Computing variations of the Lagrangian with respect to perturbations of the state (m), adjoint (λ and p), and control (v) variables, respectively, yields the (necessary) first-order optimality (KKT) conditions (in strong form)

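$$\begin{aligned}
\partial_t m + v\cdot\nabla m &= 0 && \text{in } \Omega\times(0,1], && \text{(4.1a)}\\
-\partial_t \lambda - \nabla\cdot(\lambda v) &= 0 && \text{in } \Omega\times[0,1), && \text{(4.1b)}\\
\gamma\,\nabla\cdot v &= 0 && \text{in } \Omega\times[0,1], && \text{(4.1c)}\\
g := \mathcal{A}[v] + \gamma\,\nabla p + f &= 0 && \text{in } \Omega\times[0,1], && \text{(4.1d)}
\end{aligned}$$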

subject to the initial and terminal conditions

$$m_0 := m(\cdot,0) = m_T, \qquad \lambda_1 := \lambda(\cdot,1) = m_R - m_1,$$

and periodic boundary conditions on ∂Ω; the left-hand side of (4.1d) is referred to as the reduced gradient g, where f := λ∇m, f : Ω̄ × [0, 1] → R^d, (x, t) ↦ f(x, t), is the applied body force and 𝒜 = βℬ^Hℬ (with ℬ^H the adjoint of ℬ) is the Gâteaux derivative of 𝒮. In particular, we have

$$\mathcal{A}[v] = -\beta\,\Delta v, \tag{4.2a}$$

$$\mathcal{A}[v] = \beta\,\Delta^2 v, \tag{4.2b}$$

for the H1- and the H2-seminorm, respectively. We refer to (4.1a) (hyperbolic initial value problem) as the state equation, to (4.1b) as the adjoint equation (hyperbolic final value problem), and to (4.1d) as the control equation (elliptic problem). Note that the adjoint equation (4.1b), like (4.1a), is a scalar hyperbolic equation; it is written in conservation form and flows the mismatch between mR and m1 backward in time. If we neglect the incompressibility constraint in (1.2b), γ in (4.1) is set to zero (i.e., (4.1) consists only of (4.1a), (4.1b), and (4.1d)).

Taking second variations of the Lagrangian yields the system

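$$\begin{aligned}
\partial_t \tilde m + \tilde v\cdot\nabla m + v\cdot\nabla \tilde m &= 0 && \text{in } \Omega\times(0,1], && \text{(4.3a)}\\
-\partial_t \tilde\lambda - \nabla\cdot(\tilde\lambda v + \lambda \tilde v) &= 0 && \text{in } \Omega\times[0,1), && \text{(4.3b)}\\
\gamma\,\nabla\cdot \tilde v &= 0 && \text{in } \Omega\times[0,1], && \text{(4.3c)}\\
\mathcal{H}[\tilde v] := \mathcal{A}[\tilde v] + \gamma\,\nabla \tilde p + \tilde f &= -g && \text{in } \Omega\times[0,1], && \text{(4.3d)}
\end{aligned}$$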

subject to initial and terminal conditions m̃0 := m̃(·, 0) = 0, m̃0 : Ω̄ → R, x ↦ m̃0(x), and λ̃1 := λ̃(·, 1) = −m̃1, λ̃1 : Ω̄ → R, x ↦ λ̃1(x), m̃1 := m̃(·, 1), m̃1 : Ω̄ → R, x ↦ m̃1(x), respectively, and periodic boundary conditions on ∂Ω. Here, (4.3a), (4.3b), and (4.3d) are referred to as the incremental state, adjoint, and control equations, respectively; the incremental variables are denoted with a tilde. Further, ℋ in (4.3d) is referred to as the reduced Hessian and f̃ := λ̃∇m + λ∇m̃, f̃ : Ω̄ × [0, 1] → R^d, (x, t) ↦ f̃(x, t), is the incremental body force. The operator 𝒜 in (4.3d) represents the second variation of 𝒮 with respect to the control v. We use the same symbol as in (4.1), since 𝒮 is quadratic in v and its second variation with respect to v is therefore identical to its first variation (the corresponding vectorial differential operators are given in (4.2a) and (4.2b), respectively).

4.2. Numerical optimization

We discuss strategies for numerical optimization next. We consider second-order Newton–Krylov methods (see section 4.2.1) and a first-order Picard method (see section 4.2.3).

We use a backtracking line search subject to the Armijo condition with search direction sk ∈ Rn and step size αk > 0 at (outer) iteration k ∈ N0 to ensure a sequence of monotonically decreasing objective values 𝒥h (we use default parameters; see [59, Algorithm 3.1, p. 37]). Note that each evaluation of 𝒥h requires a forward solve (i.e., the solution of (4.1a) to obtain $m^h_1$ given some trial solution $v^h_k + \alpha s_k$). Therefore, it is desirable to keep the number of line search steps to a minimum.
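A minimal sketch of a backtracking line search with the Armijo (sufficient decrease) condition, in the spirit of [59, Algorithm 3.1], is given below. The sufficient-decrease constant c1 = 10⁻⁴ and the contraction factor ρ = 0.5 are our assumptions for the "default parameters"; the objective enters as a hypothetical callable J(α), so that each call stands for one forward solve.

```python
import numpy as np

def armijo_backtracking(J, J0, g0, s, alpha0=1.0, c1=1e-4, rho=0.5, max_iter=20):
    """Return a step alpha with J(alpha) <= J0 + c1 * alpha * <g0, s>.

    J      : callable alpha -> objective at v + alpha * s (one forward solve each)
    J0, g0 : objective value and gradient at the current iterate v
    s      : search direction; must be a descent direction (<g0, s> < 0)
    """
    slope = float(np.dot(g0, s))
    if slope >= 0.0:
        raise ValueError("s is not a descent direction")
    alpha = alpha0
    for _ in range(max_iter):
        if J(alpha) <= J0 + c1 * alpha * slope:  # Armijo condition
            return alpha
        alpha *= rho                             # backtrack
    raise RuntimeError("line search failed to find a sufficient decrease")

# Toy usage: J(v) = 0.5 * ||v||^2 with an overly long steepest-descent step
v = np.array([1.0, 1.0])
g = v.copy()
s = -3.0 * g
J = lambda a: 0.5 * np.dot(v + a * s, v + a * s)
alpha = armijo_backtracking(J, J(0.0), g, s)
```

In the toy example the full step overshoots (J(1) = 4 > J(0) = 1), so the search halves the step once and accepts α = 0.5. Keeping the initial trial step at α = 1 matters for the Newton–Krylov methods, where the full step is usually accepted near the solution.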

4.2.1. Inexact Newton–Krylov method

Applying Newton’s method to (4.1) yields a large KKT system that has to be solved numerically at each outer iteration k. We will refer to the iterative solution of this system as inner iterations.⁴ In reduced space methods, the incremental adjoint and state variables are eliminated from the system via block elimination (under the assumption that the state and adjoint equations are fulfilled exactly) [8, 9]. We obtain the reduced KKT system

$$\mathcal{H}^h_k\,\tilde{v}^h_k = -g^h_k, \tag{4.4}$$

where $\mathcal{H}^h_k$ corresponds to the reduced Hessian in (4.3d) (i.e., the Schur complement of the full Hessian for the control variable vh) and $\tilde{v}^h_k$ to the incremental control variable in (4.3) (which is nothing but the search direction sk mentioned earlier). Further, the right-hand side $-g^h_k$ is the negative of the reduced gradient in (4.1d).

The numerical scheme amounts to a sequential solution of the optimality conditions (4.1) and (4.3). Algorithm 1 illustrates a realization of an outer iteration.⁵ Note that we eliminate (4.1c) and (4.3c) from the optimality conditions (see section 4.3.4). The inner iteration (i.e., the solution of (4.4)) is what we discuss next.

Forming or storing ℋh in (4.4) is computationally prohibitive. Therefore, it is desirable to use an iterative solver for which ℋh does not have to be assembled in practice. Krylov-subspace methods are a popular choice [5, 8, 15, 34], as they only require matrix-vector products. We use a PCG method, exploiting the fact that ℋh is positive definite (i.e., ℋh ≻ 0; see section 4.2.2 for a discussion) and symmetric.

Solving (4.4) exactly can be prohibitively expensive and might not be justified if an iterate is far from the (true) solution [20]. A common strategy is to perform inexact solves. That is, starting with a large tolerance for the Krylov-subspace method we successively reduce the tolerance and by that solve more accurately for the search direction, as we approach a (local) minimizer [21, 24]. This can be achieved with the termination criterion

$$\bigl\|\mathcal{H}^h_k\,\tilde{v}^h_{k,\iota} + g^h_k\bigr\| \le \eta_k\,\bigl\|g^h_k\bigr\| \tag{4.5}$$

for the Krylov-subspace method. Here, $\eta_k := \min\bigl(0.5, (\|g^h_k\|/\|g^h_0\|)^{1/2}\bigr)$, ηk ∈ [0, 1), is referred to as a forcing sequence (this choice targets superlinear convergence; details can be found in, e.g., [59, p. 165ff.]); ι ∈ N in (4.5) is the iteration index of the inner iteration (i.e., of the iterative solution of (4.4)) at a given outer iteration k.
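The NumPy sketch below combines a forcing sequence with a matrix-free PCG solver that stops once the residual drops below η_k times the norm of the right-hand side, i.e., the criterion (4.5) for the Newton system ℋ s = −g. The forcing formula min(0.5, (‖g_k‖/‖g_0‖)^{1/2}) is an assumed superlinear choice, and ℋ and P⁻¹ enter only through hypothetical callables, mirroring the fact that ℋh is never assembled.

```python
import numpy as np

def forcing_term(g_norm, g0_norm):
    """Assumed superlinear forcing sequence: eta_k = min(0.5, sqrt(||g_k|| / ||g_0||))."""
    return min(0.5, float(np.sqrt(g_norm / g0_norm)))

def pcg(apply_H, apply_Pinv, b, rtol, max_iter=500):
    """Matrix-free PCG for H s = b; stops once ||b - H s|| <= rtol * ||b||.

    Only the actions of H and P^{-1} on vectors are needed (H is never formed)."""
    s = np.zeros_like(b)
    r = b.copy()                 # residual b - H s for the initial guess s = 0
    z = apply_Pinv(r)
    p = z.copy()
    rz = np.dot(r, z)
    b_norm = np.linalg.norm(b)
    for it in range(max_iter):
        if np.linalg.norm(r) <= rtol * b_norm:
            return s, it
        Hp = apply_H(p)
        alpha = rz / np.dot(p, Hp)
        s += alpha * p
        r -= alpha * Hp
        z = apply_Pinv(r)
        rz_new = np.dot(r, z)
        p = z + (rz_new / rz) * p
        rz = rz_new
    return s, max_iter

# Usage on a small SPD stand-in for the reduced Hessian (Jacobi preconditioner)
rng = np.random.default_rng(0)
A = rng.standard_normal((50, 50))
H = A @ A.T + 50.0 * np.eye(50)
g = rng.standard_normal(50)
eta = forcing_term(np.linalg.norm(g), np.linalg.norm(g))  # first outer iteration
d = np.diag(H)
s, n_inner = pcg(lambda u: H @ u, lambda r: r / d, -g, rtol=eta)
```

With b = −g_k and rtol = η_k, the stopping test ‖b − ℋ s‖ ≤ rtol ‖b‖ coincides with ‖ℋ s + g_k‖ ≤ η_k ‖g_k‖. Early outer iterations therefore receive cheap, loose solves, and the tolerance tightens as ‖g_k‖ decreases.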

Algorithm 1.

Outer iteration of the designed inexact Newton–Krylov method.

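1: compute $m^h_0$, $\lambda^h_0$, and $g^h_0$; k ← 0
2: while true do
3:     stop ← (4.9)
4:     if stop break
5:     sk ← solve (4.4) given $m^h_k$, $\lambda^h_k$, and $g^h_k$    ▷ Newton step
6:     αk ← perform line search on sk
7:     $v^h_{k+1} \leftarrow v^h_k + \alpha_k s_k$
8:     $m^h_{k+1,0} \leftarrow m^h_T$
9:     $m^h_{k+1} \leftarrow$ solve (4.1a) forward in time given $v^h_{k+1}$    ▷ forward solve
10:    $\lambda^h_{k+1,1} \leftarrow m^h_R - m^h_{k+1,1}$
11:    $\lambda^h_{k+1} \leftarrow$ solve (4.1b) backward in time given $v^h_{k+1}$ and $\lambda^h_{k+1,1}$    ▷ adjoint solve
12:    compute $f^h_{k+1}$ and $g^h_{k+1}$ given $m^h_{k+1}$, $\lambda^h_{k+1}$, and $v^h_{k+1}$
13:    k ← k + 1
14: end while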

The course of an inner iteration follows the standard PCG steps (see, e.g., [59, p. 119, Algorithm 5.3]). During each inner iteration ι we have to apply ℋh in (4.3d) to a vector. We summarize this matrix-vector product in Algorithm 2. As can be seen, each application of ℋh requires an additional forward and adjoint solve (i.e., the solution of the incremental state and adjoint equations (4.3a) and (4.3b), respectively). This is a direct consequence of the block elimination in reduced space methods.

The number of inner iterations essentially depends on the spectrum of the operator ℋh. Typically, ℋh displays poor conditioning. An optimal preconditioner P ∈ R^{n×n} renders the number of iterations independent of n and β. The design of such a preconditioner is an open area of research [6, 7, 8, 34]. Standard techniques like incomplete factorizations or algebraic multigrid are not applicable, as they require the assembly of ℋh in (4.4). Geometric, matrix-free preconditioners are a valid option. This is something we will investigate in the future. Here, we consider a left preconditioner based on the exact spectral inverse of the regularization part of ℋh. That is, P := 𝒜h (implementation details can be found in section 4.3.3). Note that the PCG method only requires the action of P^{-1} on a vector (i.e., a matrix-free implementation is in place). Since we use a Fourier spectral method, the cost of our preconditioning amounts to a spectral diagonal scaling. We will refer to this algorithm as the Newton-PCG (N-PCG) method.
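Because 𝒜 is a constant-coefficient operator on a periodic domain, applying P⁻¹ = (𝒜h)⁻¹ reduces to dividing Fourier coefficients by the symbol of 𝒜. The sketch below assumes 𝒜 = −βΔ (H1) or 𝒜 = βΔ² (H2), a square 2π-periodic grid, and scalar fields (each velocity component is treated separately, since the vector Laplacian acts componentwise). The treatment of the zero mode, which lies in the null space of 𝒜, is our own choice for illustration.

```python
import numpy as np

def spectral_symbol(n, beta, order):
    """Fourier symbol of A on a square 2*pi-periodic n x n grid:
    order = 1 -> A = -beta * Laplacian (H1), order = 2 -> A = beta * Laplacian^2 (H2)."""
    k = np.fft.fftfreq(n, d=1.0 / n)
    K1, K2 = np.meshgrid(k, k, indexing="ij")
    return beta * (K1**2 + K2**2) ** order

def apply_A(u, beta, order):
    """Apply A to one velocity component u (the vector operator acts componentwise)."""
    sym = spectral_symbol(u.shape[0], beta, order)
    return np.real(np.fft.ifft2(sym * np.fft.fft2(u)))

def apply_Pinv(r, beta, order):
    """Apply P^{-1} = A^{-1} as a diagonal scaling of the Fourier coefficients.
    The k = 0 mode spans the null space of A; passing it through unchanged is
    an assumption made for this sketch."""
    sym = spectral_symbol(r.shape[0], beta, order)
    sym[0, 0] = 1.0
    return np.real(np.fft.ifft2(np.fft.fft2(r) / sym))

n = 32
x = -np.pi + 2.0 * np.pi * np.arange(n) / n
X1, X2 = np.meshgrid(x, x, indexing="ij")
u = np.sin(X1) * np.cos(2 * X2) + np.cos(3 * X2)   # zero-mean test component
u_back = apply_Pinv(apply_A(u, 1e-2, 2), 1e-2, 2)  # should recover u
```

Each application costs two FFTs and one pointwise division, which is why this preconditioner (and the Picard step in (4.7)) is cheap compared with a Hessian matrix-vector product that needs two PDE solves.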

Algorithm 2.

Hessian matrix-vector product of the designed inexact Newton–Krylov algorithm at outer iteration k ∈ N. We illustrate the computational steps required for applying ℋh in (4.3d) to the PCG search direction $\tilde{v}^h_{\iota}$ at inner iteration index ι ∈ N.

4.2.2. GN approximation

Even though ℋh is positive semidefinite (ℋh ⪰ 0) in the vicinity of a (local) minimum, it can be indefinite or singular far away from the solution. Accordingly, the search direction is not guaranteed to be a descent direction. One remedy is to terminate the inner iteration whenever negative curvature occurs [21]. Another approach is to use a quasi-Newton approximation. We consider a GN approximation instead. Here, we drop certain terms of ℋh, which in turn guarantees that the approximate Hessian is positive semidefinite. In particular, we drop all terms in (4.3) in which λ appears. Accordingly, we obtain the (continuous) system

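$$\begin{aligned}
\partial_t \tilde m + \tilde v\cdot\nabla m + v\cdot\nabla \tilde m &= 0 && \text{in } \Omega\times(0,1], && \text{(4.6a)}\\
-\partial_t \tilde\lambda - \nabla\cdot(\tilde\lambda v) &= 0 && \text{in } \Omega\times[0,1), && \text{(4.6b)}\\
\gamma\,\nabla\cdot \tilde v &= 0 && \text{in } \Omega\times[0,1], && \text{(4.6c)}\\
\mathcal{A}[\tilde v] + \gamma\,\nabla \tilde p + \tilde\lambda\,\nabla m &= -g && \text{in } \Omega\times[0,1]. && \text{(4.6d)}
\end{aligned}$$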

We expect the rate of convergence to drop from quadratic to (super-)linear when turning to (4.6). However, if the L2-distance can be driven to zero, we recover fast local convergence close to the true solution v★, even if the adjoint variable is neglected. This is due to the fact that (4.1b) models the flow of the mismatch backward in time, such that λ → 0 for v → v★. We refer to this method as the (inexact) GN-PCG method [8, 9]. We remark that all algorithmic details described in this note apply to both Newton–Krylov methods.
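The role of λ in the GN approximation can be illustrated on a toy nonlinear least-squares problem ½‖r(x)‖² (a hypothetical two-parameter model, not the registration problem). The full Hessian is JᵀJ + Σᵢ rᵢ∇²rᵢ, whereas GN keeps only JᵀJ. Far from the solution the residual-weighted term can make the full Hessian indefinite; at a zero-residual solution both coincide, which mirrors λ → 0 for v → v★.

```python
import numpy as np

# Hypothetical toy model F(x) = (x0*x1, x0 + x1**2) with data d; x_star = (1, 2) fits exactly.
d = np.array([2.0, 5.0])

def residual(x):
    return np.array([x[0] * x[1], x[0] + x[1] ** 2]) - d

def jacobian(x):
    return np.array([[x[1], x[0]], [1.0, 2.0 * x[1]]])

# Hessians of the two residual components (constant for this model)
RESIDUAL_HESSIANS = (np.array([[0.0, 1.0], [1.0, 0.0]]),
                     np.array([[0.0, 0.0], [0.0, 2.0]]))

def hessians(x):
    """Full Newton Hessian and Gauss-Newton Hessian of 0.5 * ||r(x)||^2."""
    J, r = jacobian(x), residual(x)
    H_gn = J.T @ J                      # always positive semidefinite
    H_full = H_gn + sum(ri * Hi for ri, Hi in zip(r, RESIDUAL_HESSIANS))
    return H_full, H_gn

H_far, H_gn_far = hessians(np.array([0.0, 0.0]))   # large residual
H_sol, H_gn_sol = hessians(np.array([1.0, 2.0]))   # zero residual
```

At x = (0, 0) the full Hessian has a negative eigenvalue while the GN matrix does not, so a CG-based inner solver applied to the full Hessian could produce an ascent direction there; at x★ = (1, 2) the dropped term vanishes with the residual.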

4.2.3. Picard method

We consider a globalized Picard iteration (fixed point iteration) in addition to the described Newton–Krylov methods. Based on (4.1d) we have

$$v^h_{k+1} = -\bigl(\mathcal{A}^h\bigr)^{-1}\bigl[f^h_k + \gamma\,\nabla^h p^h_k\bigr]. \tag{4.7}$$

Since we use Fourier spectral methods, the inversion of 𝒜h in (4.7) comes at the cost of a diagonal scaling (implementation details can be found in section 4.3.3). Accordingly, this scheme does not require the (iterative) solution of a linear system. However, it potentially results in a larger number of outer iterations until convergence as we expect the optimization problem to be poorly conditioned.

We do not directly use the solution of (4.7) as a new iterate but compute a search direction sk instead. This in turn allows us to perform a line search on sk. That is, sk is the difference between the solution of (4.7) and the current iterate. This scheme can be viewed as a gradient descent in the function space induced by 𝒲 (i.e., a preconditioned gradient descent scheme; see Appendix C).
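The interpretation as a preconditioned gradient descent follows in one line from (4.1d). Assuming that 𝒜 is invertible on the space in which the iterates live (e.g., after removing its null space of constant fields), and writing the Picard update as the solution of (4.1d) for v with f and p frozen at iteration k, the search direction is:

```latex
\begin{aligned}
g_k &= \mathcal{A}[v_k] + \gamma\nabla p_k + f_k, \qquad
v^{\mathrm{P}}_{k+1} := -\mathcal{A}^{-1}\bigl[f_k + \gamma\nabla p_k\bigr],\\
s_k &:= v^{\mathrm{P}}_{k+1} - v_k
     = -\mathcal{A}^{-1}\bigl[\mathcal{A}[v_k] + \gamma\nabla p_k + f_k\bigr]
     = -\mathcal{A}^{-1}[g_k],\\
\langle g_k, s_k\rangle_{L^2} &= -\bigl\langle g_k, \mathcal{A}^{-1}[g_k]\bigr\rangle_{L^2} < 0
\quad \text{whenever } g_k \neq 0 .
\end{aligned}
```

Hence sk is the negative reduced gradient preconditioned by 𝒜⁻¹, and it is a descent direction whenever the reduced gradient does not vanish, which is what makes the Armijo line search applicable to the Picard scheme.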

Note that sk is, in contrast to Newton methods, arbitrarily scaled. Therefore, we provide an augmented implementation that tries to estimate an optimal scaling during the course of optimization. Details can be found in section 4.3.5.

4.2.4. Termination criteria

The termination criteria follow [56] (see [29, p. 305ff.] for a discussion) and are given by

| (4.8) |

Here, τ𝒥 > 0 is a user defined tolerance, εmach > 0 is the machine precision, and nopt ∈ N is the maximal number of outer iterations. The algorithm is terminated if

| (4.9) |

where ∧ denotes the logical and and ∨ the logical or operator, respectively.
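For illustration, the logic of such a test can be sketched in Python. Since (4.8) is not reproduced here, the sketch assumes the commonly used Gill–Murray–Wright form: small changes in 𝒥h and vh, a relative gradient bound, an absolute gradient bound of 1E3·εmach, and an iteration cap. The function names and all constants other than τ𝒥 and nopt are hypothetical.

```python
import numpy as np


def linf(x):
    """l_inf norm of an array of any shape."""
    return float(np.max(np.abs(x)))


def should_terminate(J_prev, J_curr, J0, v_prev, v_curr, g_curr, k,
                     tau_J=1e-3, n_opt=10**6):
    """Hypothetical Gill-Murray-Wright-style test; constants are assumptions."""
    eps_mach = np.finfo(float).eps
    c1 = abs(J_prev - J_curr) < tau_J * (1.0 + abs(J0))                  # objective stalls
    c2 = linf(v_prev - v_curr) < np.sqrt(tau_J) * (1.0 + linf(v_curr))   # iterate stalls
    c3 = linf(g_curr) < tau_J ** (1.0 / 3.0) * (1.0 + abs(J0))           # gradient is small
    c4 = linf(g_curr) < 1e3 * eps_mach                                   # gradient at round-off level
    c5 = k >= n_opt                                                      # iteration budget exhausted
    # (c1 AND c2 AND c3) OR c4 OR c5, i.e., "and" is ∧ and "or" is ∨
    return bool((c1 and c2 and c3) or c4 or c5)
```

The conjunction of c1 to c3 prevents stopping on a single stalled quantity, while c4 and c5 act as unconditional safeguards.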

4.3. Algorithmic details

This section provides additional specifics on the implementation. In particular, we describe (i) the numerical discretization (see section 4.3.1), (ii) the parameterization in time (see section 4.3.2), (iii) the inversion of the operator 𝒜h (see section 4.3.3), (iv) the elimination of p and p̃ (see section 4.3.4), and (v) strategies for the parameter selection (see section 4.3.5).

4.3.1. Numerical discretization

We use a (regular) nodal grid for the discretization in space and time. The problem is defined on the space-time interval Ω × [0, 1], where Ω := (−π, π)^d. Accordingly, we obtain the time step size via ht = 1/nt. The cell size (pixel or voxel size) of a spatial grid cell is $h_x^i = 2\pi / n_x^i$, $i = 1, \ldots, d$, where $n_x^i$ is the number of grid points along the ith spatial direction xi.

The derivative operators are discretized via Fourier spectral methods [11]. The time integrator for the forward and adjoint solves is an explicit second-order Runge–Kutta method, which, in connection with Fourier spectral methods, displays minimal numerical diffusion.
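The forward solve can be illustrated with a minimal 2D sketch. It assumes a stationary velocity field, a periodic grid on (−π, π)², and Heun's method as the explicit second-order Runge–Kutta scheme (the specific RK2 variant is an assumption). The function names are hypothetical.

```python
import numpy as np


def wavenumbers(n):
    """Integer wavenumbers for a 2*pi-periodic grid with n points."""
    k = np.fft.fftfreq(n, d=1.0 / n)
    if n % 2 == 0:
        k[n // 2] = 0.0  # drop the Nyquist mode for first derivatives
    return k


def spectral_gradient(m):
    """Gradient of a periodic 2D field on (-pi, pi)^2 via FFT."""
    K1, K2 = np.meshgrid(wavenumbers(m.shape[0]), wavenumbers(m.shape[1]),
                         indexing="ij")
    m_hat = np.fft.fft2(m)
    return (np.real(np.fft.ifft2(1j * K1 * m_hat)),
            np.real(np.fft.ifft2(1j * K2 * m_hat)))


def transport_rhs(m, v1, v2):
    """Right-hand side of dm/dt = -v . grad m."""
    dm1, dm2 = spectral_gradient(m)
    return -(v1 * dm1 + v2 * dm2)


def transport_rk2(m0, v1, v2, nt):
    """Integrate the transport equation on t in [0, 1] with Heun's RK2 method."""
    ht = 1.0 / nt
    m = m0.copy()
    for _ in range(nt):
        k1 = transport_rhs(m, v1, v2)
        k2 = transport_rhs(m + ht * k1, v1, v2)
        m = m + 0.5 * ht * (k1 + k2)
    return m
```

For a constant velocity v = (1, 0) and m0 = sin(x1), the exact solution at t = 1 is sin(x1 − 1), which gives a simple check of the scheme.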

Following standard numerical theory for hyperbolic equations, the step size ht > 0 is bounded from above by ht,max := εCFL/ max(||vh||∞ ⊘ hx), ht,max > 0 (Courant–Friedrichs–Lewy (CFL) condition). Here, ⊘ denotes a Hadamard division and εCFL > 0 is the CFL number. The theoretical bound for ht,max is attained for εCFL = 1. We use εCFL = 0.2 for all experiments. Since we use a spectral Galerkin method in time (see section 4.3.2), we can adaptively adjust nt (and therefore ht) for the forward and adjoint solves as required by the CFL condition.
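The CFL bound translates into a minimal number of time steps on [0, 1]. The helper below is a hypothetical sketch for the periodic domain (−π, π)^d, using the cell sizes hx,i = 2π/nx,i and the componentwise (Hadamard) division from the bound above.

```python
import numpy as np


def cfl_num_time_steps(v, nx, eps_cfl=0.2):
    """Smallest n_t such that h_t = 1/n_t satisfies h_t <= h_t,max.

    v  : velocity components, shape (d, n_1, ..., n_d)
    nx : number of grid points per direction on (-pi, pi)^d
    """
    hx = 2.0 * np.pi / np.asarray(nx, dtype=float)       # cell sizes h_x
    vmax = np.abs(v).reshape(len(nx), -1).max(axis=1)    # ||v_i||_inf per component
    rate = np.max(vmax / hx)                             # max(||v||_inf ⊘ h_x)
    if rate == 0.0:
        return 1                                         # no CFL restriction for v = 0
    ht_max = eps_cfl / rate
    return int(np.ceil(1.0 / ht_max))
```

For example, on a grid with equal nx in all directions, the fixed choice nt = 4 max(nx) yields the CFL number max_i ||v_i||∞/(8π), which stays below εCFL = 0.2 as long as max_i ||v_i||∞ ≤ 1.6π ≈ 5.03.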

4.3.2. Spectral Galerkin method

To reduce the number of unknowns, v is expanded in time in terms of basis functions bl : [0, 1] → R, t ↦ bl(t), l = 1, …, nc,

$$v(x, t) = \sum_{l=1}^{n_c} b_l(t)\, v_l(x) \tag{4.10}$$

where vl : Rd → Rd, x ↦ vl(x), is a coefficient field. The coefficients vl are the new unknowns of our problem. This reduces the number of unknowns in time from nt to nc, where nc ≪ nt. Thus, we can invert for a stationary (nc = 1) or a nonstationary velocity field as required. Apart from the number of unknowns, our formulation remains unchanged.

We use Chebyshev polynomials as basis functions bl on account of their excellent approximation properties as well as their orthogonality (see Appendix A for details). The expansion (4.10) solely affects 𝒮 and the (incremental) control equation (i.e., (4.1d) and (4.3d)); v is computed from the coefficient fields vl, l = 1, …, nc, during the forward and adjoint solves according to (4.10).
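Evaluating v at a given time from the coefficient fields is a short computation. The sketch assumes that the Chebyshev polynomials are mapped from [−1, 1] to [0, 1] via τ = 2t − 1, with bl(t) = T_{l−1}(2t − 1). Appendix A is not reproduced here, so this mapping is an assumption, as is the hypothetical coefficient array layout (nc, d, n1, n2).

```python
import numpy as np


def chebyshev_basis(t, nc):
    """Values b_l(t) = T_{l-1}(2t - 1), l = 1, ..., nc, for t in [0, 1]."""
    tau = 2.0 * t - 1.0                 # map [0, 1] to [-1, 1]
    b = np.empty(nc)
    b[0] = 1.0                          # T_0
    if nc > 1:
        b[1] = tau                      # T_1
    for l in range(2, nc):
        b[l] = 2.0 * tau * b[l - 1] - b[l - 2]   # three-term recurrence
    return b


def velocity_at(t, coeffs):
    """v(x, t) = sum_l b_l(t) v_l(x); coeffs has shape (nc, d, n1, n2)."""
    b = chebyshev_basis(t, coeffs.shape[0])
    return np.tensordot(b, coeffs, axes=(0, 0))  # shape (d, n1, n2)
```

With nc = 1, `velocity_at` returns the single coefficient field for every t, which is exactly the stationary case.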

4.3.3. Inversion: Regularization operators

The Picard iteration in (4.7) as well as the preconditioning of (4.4) require the inversion of the differential operator 𝒜h. Since we use Fourier spectral methods this inversion can be accomplished at the cost of a spectral diagonal scaling. However, 𝒜h has a nontrivial kernel (which only includes constant functions due to the periodic boundary conditions). We make 𝒜h invertible by setting the base frequency of the inverse of 𝒜h (including the scaling by β) to one. This ensures not only invertibility, but also that the constant part of the (incremental) body force f (or f̃, respectively) remains in the kernel of our regularization scheme. This in turn allows us to invert for constant velocity fields.
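The diagonal scaling can be sketched as follows, assuming 𝒜 = −Δ for the H1-seminorm and 𝒜 = Δ² for the H2-seminorm on (−π, π)² (the exact operators are defined in (4.2b), which is not shown here). Setting the zero-frequency entry of the Fourier symbol of β𝒜 to one makes the inverse at the base frequency equal to one, so constant parts of the input pass through unchanged. The function name is hypothetical, and β > 0 is assumed.

```python
import numpy as np


def apply_inverse_reg(f, beta, order=1):
    """Apply (beta * A)^{-1} to a periodic 2D field on (-pi, pi)^2 by diagonal scaling.

    order=1: A = -Laplacian (H1-seminorm); order=2: A = biharmonic (H2-seminorm).
    """
    n1, n2 = f.shape
    K1, K2 = np.meshgrid(np.fft.fftfreq(n1, d=1.0 / n1),
                         np.fft.fftfreq(n2, d=1.0 / n2), indexing="ij")
    symbol = beta * (K1**2 + K2**2) ** order   # Fourier symbol of beta * A
    symbol[0, 0] = 1.0                         # base frequency of the inverse set to one
    return np.real(np.fft.ifft2(np.fft.fft2(f) / symbol))
```

For a vector-valued body force, the same scaling would be applied to each component separately.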

4.3.4. Elimination of p and p̃

In our numerical scheme, we eliminate p, and thereby (4.1c), from (4.1). Details on the derivation can be found in Appendix B. We obtain

| (4.11) |

to replace (4.1d), where f is the body force as defined in section 4.1 and 𝒜 the first variation of 𝒮 with respect to v (see (4.2a) and (4.2b), respectively). It immediately follows that we obtain

| (4.12) |

to replace (4.3d); f̃ denotes the incremental body force defined in section 4.1.
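Appendix B is not reproduced here. A standard way to eliminate a pressure-like multiplier for the constraint ∇ · v = 0 on a periodic domain is the Leray projection K = I − ∇Δ⁻¹∇·, which is diagonal in Fourier space. Whether (4.11) and (4.12) use exactly this operator is an assumption. The sketch only shows how such a projection of the (incremental) body force could be applied spectrally; the function name is hypothetical.

```python
import numpy as np


def leray_project(f1, f2):
    """Project a periodic 2D vector field on (-pi, pi)^2 onto divergence-free fields."""
    n1, n2 = f1.shape
    K1, K2 = np.meshgrid(np.fft.fftfreq(n1, d=1.0 / n1),
                         np.fft.fftfreq(n2, d=1.0 / n2), indexing="ij")
    ksq = K1**2 + K2**2
    ksq[0, 0] = 1.0                      # avoid 0/0; the mean (k = 0) is kept as is
    F1, F2 = np.fft.fft2(f1), np.fft.fft2(f2)
    k_dot_F = K1 * F1 + K2 * F2          # Fourier image of the divergence (up to a factor i)
    P1 = F1 - K1 * k_dot_F / ksq         # F - k (k . F) / |k|^2
    P2 = F2 - K2 * k_dot_F / ksq
    return np.real(np.fft.ifft2(P1)), np.real(np.fft.ifft2(P2))
```

The projection removes the gradient part of a field and leaves its divergence-free part intact, which is the property the Stokes scheme relies on.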

4.3.5. Parameter selection

To the extent possible, it is desirable to design a numerical scheme that does not require a selection of parameters (black-box solver). This is challenging for previously unseen data. In general, the user should only be required to decide on the following:

The desired accuracy of the inversion (controlled by the tolerance τ𝒥; see section 4.2.4).

The desired properties of the mapping y (controlled by εθ or εF, respectively; see below).

The budget we are willing to assign to the computation (controlled by nopt; see section 4.2.4).

For the purpose of this numerical study we proceed as follows.

Optimization

We set the maximum number of iterations nopt (see (4.8)) to 1E6, as we do not want our algorithm to terminate early (i.e., we make sure that we terminate only if we either reach the defined tolerances or no longer observe a decrease in 𝒥h). For the convergence study in section 5.3.2, we use the relative change of the ℓ∞-norm of the gradient gh as a stopping criterion, as we are interested in studying convergence properties. This enables an unbiased comparison in terms of the work required to solve an optimization problem up to a desired accuracy. In particular, we terminate the optimization once the ℓ∞-norm of the reduced gradient gh has been reduced by at least three orders of magnitude relative to its initial value.

Following standard textbook literature [29, 56], we use the stopping criteria in (4.8) and (4.9) for the remainder of the experiments. We set the tolerance to τ𝒥 = 1E–3. For the experiments performed in this study, we did not observe qualitatively significant differences in the final results when turning to smaller tolerances. We will further elaborate on the required accuracy for the inversion (i.e., the registration quality) in a follow-up paper.

The tolerance of the PCG method is set as discussed in section 4.2.1 (see (4.5)). The maximal number of iterations for the PCG method is set to n (the order of the reduced KKT system in (4.4)). In exact arithmetic, this guarantees that the PCG method converges to a solution (provided the system is positive definite). This choice not only ensures that we provide an unbiased study (i.e., we do not terminate early) but also makes sure that we do not miss any issues in the implementation or parameter selection. In all experiments conducted in this study, the PCG method converged after only a fraction of n inner iterations. This statement is confirmed by the reported number of PDE solves.6
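A matrix-free PCG loop of this kind can be sketched as follows. The Hessian and the preconditioner enter only as callables (in the registration setting, each Hessian application would require the incremental forward and adjoint solves). The relative tolerance plays the role of the forcing term from (4.5), which is not reproduced here, and the function name `pcg` is hypothetical.

```python
import numpy as np


def pcg(apply_H, b, apply_Pinv, rtol, maxiter):
    """Matrix-free preconditioned CG for H x = b (H symmetric positive definite).

    Stops once ||r||_2 <= rtol * ||b||_2 or after maxiter iterations.
    Returns the iterate and the number of Hessian applications.
    """
    x = np.zeros_like(b)
    r = b.copy()                      # residual for the initial guess x = 0
    z = apply_Pinv(r)                 # preconditioned residual
    p = z.copy()                      # first search direction
    rz = float(np.dot(r, z))
    b_norm = np.linalg.norm(b)
    for it in range(maxiter):
        if np.linalg.norm(r) <= rtol * b_norm:
            return x, it
        Hp = apply_H(p)               # one Hessian matrix-vector product
        alpha = rz / float(np.dot(p, Hp))
        x += alpha * p
        r -= alpha * Hp
        z = apply_Pinv(r)
        rz_new = float(np.dot(r, z))
        p = z + (rz_new / rz) * p     # H-conjugate update of the direction
        rz = rz_new
    return x, maxiter
```

In a Newton step one would call it with b = −gh and maxiter = n, mirroring the choice described above.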

For all our experiments, we initialized the line search with a step size of αk = 1 (see section 4.2). This is a sensible choice, as search directions obtained from second-order methods are nicely scaled (i.e., we expect the full step αk = 1 to be accepted). However, this is not the case for the Picard scheme (i.e., the preconditioned gradient descent). Our implementation therefore features an option to memorize the scaling of sk for the next outer iteration. That is, we introduce an additional scaling factor α̃k > 0 that is applied to sk before entering the line search (initialized with α̃k = 1). If the line search reduces the step (αk < 1), we downscale α̃k by αk. Conversely, we double α̃k if the full step αk = 1 is accepted.
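The step-size memory can be sketched as a backtracking (Armijo) line search. The sufficient-decrease constant c1 = 1E–4 and the halving of αk are assumptions, as is the interface: `J` is a hypothetical callable that evaluates 𝒥h (one forward solve per call; see section 5.2), and `g` is the reduced gradient at `v`.

```python
import numpy as np


def line_search_with_memory(J, v, g, s, alpha_tilde, c1=1e-4, max_halvings=30):
    """Backtracking line search along alpha_tilde * s with a remembered scale.

    Returns (new iterate, alpha_k, updated alpha_tilde for the next iteration).
    """
    s_scaled = alpha_tilde * s                          # apply the memorized scaling first
    J_curr = J(v)
    slope = float(np.dot(g.ravel(), s_scaled.ravel()))  # directional derivative
    alpha = 1.0
    for _ in range(max_halvings):
        if J(v + alpha * s_scaled) <= J_curr + c1 * alpha * slope:  # Armijo condition
            break
        alpha *= 0.5
    else:
        return v, 0.0, alpha_tilde * alpha              # no acceptable step found; keep v
    if alpha == 1.0:
        new_tilde = 2.0 * alpha_tilde                   # full step accepted: upscale by two
    else:
        new_tilde = alpha_tilde * alpha                 # backtracking occurred: downscale by alpha_k
    return v + alpha * s_scaled, alpha, new_tilde
```

Because the scale carries over between outer iterations, a poorly scaled Picard direction needs backtracking only until α̃k has adapted, rather than in every iteration.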

PDE solver

The number of time steps nt is bounded from below due to stability requirements (see section 4.3.1). Since we use an expansion in time (see section 4.3.2), it is possible to adaptively adjust nt, so that numerical stability is attained.

However, we fix nt for the numerical experiments in section 5.3, as we are interested in studying the convergence behavior with respect to the employed grid size. We set nt to 4 max(nx). This is a pessimistic choice. If we still encounter instabilities (as judged by monitoring the CFL condition; see section 4.3.1), sk is repeatedly scaled by a factor of 0.5 before entering the line search, until numerical stability is attained. For all numerical experiments conducted in this study, we did not observe any instabilities for the Newton–Krylov methods. However, for the Picard method we observed instabilities when we did not use the rescaling procedure detailed above. This is due to the fact that sk is arbitrarily scaled for first-order methods (as opposed to second-order methods). By introducing the additional scaling parameter α̃k, we could stabilize the Picard method; we did not observe a violation of the CFL condition for any of the conducted experiments (for nt fixed).

Regularization

Estimating an optimal value for β is an area of research in itself. A variety of methods has been designed (see, e.g., [72]). A key difficulty is computational complexity. Methods based on the assumption that differences between model output and observed data are associated with random noise (such as generalized cross validation) might not be reliable in the context of nonrigid image registration, because the noise in the images is likely to be highly structured [38]. Another possibility is to estimate the regularization parameter on the basis of the spectral properties of the Hessian (see section 5.3.1). That is, we can estimate the condition number of the problem during the PCG solves for the unregularized problem using the Lanczos algorithm (see [30, p. 528]). We can do this very efficiently by initializing the problem with a zero velocity field. Given that v is zero, the application of the Hessian within the PCG is computationally inexpensive, as many of the terms in the optimality systems drop (see section 4.1). However, the level of regularization depends not only on properties of the data, but also on regularity requirements on y.
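The condition-number estimate can be illustrated with a standalone Lanczos iteration (rather than extracting the Lanczos coefficients from the PCG iterates, as described above). The sketch uses full reorthogonalization for robustness; `apply_H` is a hypothetical matrix-free Hessian application, and the function name is hypothetical as well.

```python
import numpy as np


def lanczos_extreme_eigs(apply_H, n, m, seed=0):
    """Estimate the extreme eigenvalues of a symmetric operator with m Lanczos steps."""
    rng = np.random.default_rng(seed)
    q = rng.standard_normal(n)
    q /= np.linalg.norm(q)
    Q = np.zeros((n, m))
    alphas, betas = [], []
    q_prev = np.zeros(n)
    beta = 0.0
    for j in range(m):
        Q[:, j] = q
        w = apply_H(q) - beta * q_prev
        alpha = float(np.dot(q, w))
        alphas.append(alpha)
        w = w - alpha * q
        w = w - Q[:, : j + 1] @ (Q[:, : j + 1].T @ w)   # full reorthogonalization
        beta = float(np.linalg.norm(w))
        if j == m - 1 or beta < 1e-12:                    # budget used or Krylov space exhausted
            break
        betas.append(beta)
        q_prev, q = q, w / beta
    T = np.diag(alphas) + np.diag(betas, 1) + np.diag(betas, -1)  # tridiagonal Lanczos matrix
    ritz = np.linalg.eigvalsh(T)
    return ritz[0], ritz[-1]
```

The ratio of the two returned Ritz values is an estimate of the condition number; in practice m would be much smaller than n, giving increasingly accurate estimates of the extreme eigenvalues as m grows.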

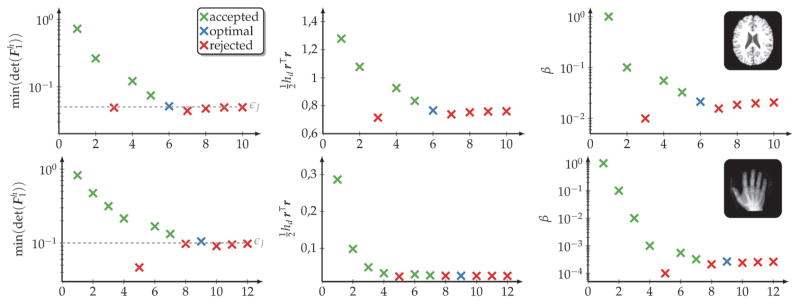

Another common strategy is to perform a parameter continuation in β (see, e.g., [35, 38]). In [38] it has been suggested to inform the algorithm about the required regularity of a solution on the basis of a lower bound on the L2-distance between the reference and the deformed template image. The choice of such a bound, however, might not be intuitive for practitioners. Further, one is ultimately interested not only in a small residual but also in a bounded determinant of the deformation gradient. Therefore, we propose to inform the algorithm on regularity requirements in terms of a lower bound εF ∈ (0, 1) on the determinant of the deformation gradient (i.e., a bound on the tolerable compression of a volume element). If the Stokes regularization scheme (γ = 1 in (1.2b) and 𝒮 is an H1-seminorm) is considered, the determinant of the deformation gradient is equal to one up to numerical error, so we instead use bounds on geometric properties of the deformation of a volume element. In particular, we use a lower bound εθ > 0 on the acute angle of a grid element. The upper bound on the obtuse angle is given by 2π − εθ. Note that it is actually necessary to monitor geometric properties to guarantee a local diffeomorphism; a lower bound on the determinant of the deformation gradient is not sufficient.

Our algorithm proceeds as follows. In the first step, the registration problem is solved for a large value of β (β = 1 in our experiments), so that we underfit the data.7 Subsequently, β is reduced by one order of magnitude at a time until the bound εF (or εθ) is breached. From there on, a binary search is performed. The algorithm is terminated if the change in β is below 5% of the value of β for which εF (or εθ) was breached. We add a lower bound of 1E–6 on β as well as a lower bound of 1E–2 on the relative change of the L2-distance to ensure that we do not perform unnecessary work. We never reached these bounds for the experiments conducted in this study.
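The continuation in β can be sketched as follows. Here `is_admissible(beta)` is a hypothetical callback that solves the registration problem for a given β and reports whether the bound εF (or εθ) holds. The bisection between the last admissible and the first breaching value, and the stopping rule based on 5% of the breaching value, are our reading of the description above; the bound on the relative change of the L2-distance is omitted.

```python
def beta_continuation(is_admissible, beta0=1.0, beta_min=1e-6, rel_tol=0.05):
    """Hypothetical continuation in beta: reduce by 10x, then bisect."""
    beta_ok = beta0                    # assumed admissible (strong regularization, underfitting)
    beta = beta0 / 10.0
    while beta >= beta_min and is_admissible(beta):
        beta_ok, beta = beta, beta / 10.0
    if beta < beta_min:
        return beta_ok                 # lower bound on beta reached
    beta_bad = beta                    # first value that breaches the bound
    tol = rel_tol * beta_bad           # 5% of the breaching value
    while beta_ok - beta_bad > tol:    # binary search between the two values
        mid = 0.5 * (beta_ok + beta_bad)
        if is_admissible(mid):
            beta_ok = mid
        else:
            beta_bad = mid
    return beta_ok
```

The returned β is always one for which the regularity bound was satisfied, so the final solution respects εF (or εθ) by construction.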

Presmoothing

A numerical challenge in image computing is that images are functions of bounded variation (i.e., they may contain discontinuities). Therefore, an accurate computation of derivatives becomes more involved. A common approach to ensure numerical stability and avoid the Gibbs phenomenon is to reduce high-frequency information in the data. We use a Gaussian smoothing, parametrized by a user-defined standard deviation σ > 0. We experimentally found σ = 2π/min(nx) (i.e., the largest cell size hx) to be adequate for the problems at hand. However, we note that we increased σ by a factor of 2 for one set of experiments in section 5.3.2. We also note that we implemented a method for grid and scale continuation for the images, which avoids the problem of deciding on σ. We will investigate an automatic selection strategy for σ in a follow-up paper.
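The presmoothing can be applied in Fourier space, where convolution with a Gaussian of standard deviation σ becomes multiplication by exp(−σ²|k|²/2). Performing the smoothing spectrally (and periodically) is an assumption that fits the Fourier discretization; the function name is hypothetical.

```python
import numpy as np


def gaussian_presmooth(m, sigma=None):
    """Periodic Gaussian smoothing of a 2D image on (-pi, pi)^2 via FFT."""
    n1, n2 = m.shape
    if sigma is None:
        sigma = 2.0 * np.pi / min(n1, n2)                  # default from the text
    K1, K2 = np.meshgrid(np.fft.fftfreq(n1, d=1.0 / n1),
                         np.fft.fftfreq(n2, d=1.0 / n2), indexing="ij")
    damping = np.exp(-0.5 * sigma**2 * (K1**2 + K2**2))   # Fourier transform of the Gaussian
    return np.real(np.fft.ifft2(damping * np.fft.fft2(m)))
```

Low frequencies are left nearly untouched while the highest resolved frequencies are damped most strongly, which is what suppresses Gibbs oscillations near intensity jumps.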

It is important to note that the sensitivity of second-order derivatives to noise in the data is problematic. Therefore, we refrain from applying the N-PCG method to nonsmooth images.

5. Numerical experiments

We report results only in two dimensions. We test the algorithm on real-world and synthetic registration problems (see section 5.1). The measures to analyze the registration results are summarized in section 5.2. We conduct a numerical study (see section 5.3), which includes an analysis of (i) the spectral properties of the Hessian (see section 5.3.1), (ii) grid convergence (see section 5.3.2), and (iii) the effects of varying the number of unknowns in time (see section 5.3.3). We additionally report results for a fully automatic registration on high-resolution images based on the designed parameter continuation in β (see section 5.4).

5.1. Data

We consider synthetic and real-world registration problems.8 These are illustrated in Figure 1. All images have been normalized to an intensity range of [0, 1]. The synthetic problems are constructed by solving the forward problem to create an artificial template image mT given some image mR and some velocity field v★ (sinusoidal images and UT images in Figure 1). Here, v★ is chosen to live on the manifold of divergence-free velocity fields to provide a testing environment for the Stokes regularization scheme. Further, v★ is by construction assumed to be constant in time (i.e., nc = 1). In particular, we have

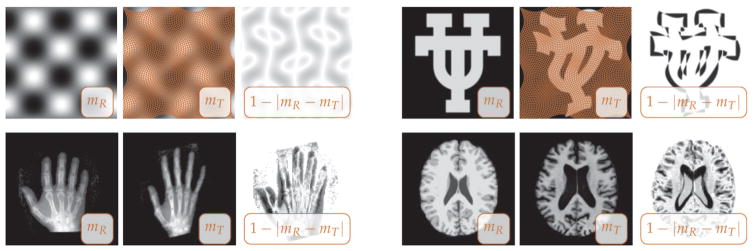

Figure 1.

Synthetic and real-world registration problems. All images have been normalized to an intensity range of [0, 1]. The registration problems are referred to as sinusoidal images (top row, left), UT images (top row, right), hand images (bottom row, left), and brain images (bottom row, right). Each row displays the reference image mR, the template (deformable) image mT, and a map of their pointwise difference (from left to right as identified by the inset in the images). We provide an illustration of the deformation pattern y (overlaid onto mT) for the synthetic problems. This mapping is computed from v★ in (5.1) via (D.1).

| (5.1) |

5.2. Measures of performance

We report the number of (outer) iterations and the number of hyperbolic PDE solves to assess the computational workload. The latter is a good proxy for the wall clock time. It provides a transparent comparison between the designed first- and second-order methods, given that the number of hyperbolic PDE solves varies between these methods: two hyperbolic PDE solves are required during each iteration of the Picard method (we have to solve (4.1a) and (4.1b) to evaluate the reduced gradient in (4.1d); see also Algorithm 1). For the Newton–Krylov methods, we require an additional two hyperbolic PDE solves per inner iteration (we have to solve (4.3a) and (4.3b) to compute the Hessian matrix-vector product given in (4.3d); see also Algorithm 2). Each evaluation of 𝒥h in (1.2) (i.e., each line search step) requires an additional hyperbolic PDE solve (we have to compute the deformed template image at t = 1 by solving (4.1a)). Note that the solution of the forward and adjoint problems is the key computational bottleneck of our algorithm. We will report wall clock times in a follow-up paper, in which we extend the current framework to a three-dimensional implementation. This study focuses on algorithmic features.

We report the relative change of (i) the L2-distance, (ii) the objective functional 𝒥h, and (iii) the ℓ∞-norm of the (reduced) gradient gh to assess the quality of the inversion. We additionally report values for the determinant of the deformation gradient to study local deformation properties. These measures are defined more explicitly in Table 2.

Table 2.

Overview of the quantitative measures that are used to assess the registration performance. We report the number of outer iterations (steps for updating the control variable vh) and the number of hyperbolic PDE solves (i.e., how often we have to solve one of the hyperbolic PDEs (4.1a), (4.1b), (4.3a), and (4.3b) that appear in the optimality systems) to assess the work load. We report the relative change of the L2-distance, the objective, and the reduced gradient to assess the quality of the inversion. We report values for the determinant of the deformation gradient to assess the regularity of the computed deformation map. We report the relative power spectrum of the coefficients to assess which of the coefficients of the expansion in (4.10) are significant.

| Description | Definition |
|---|---|
| # of required outer iterations | k★ |
| # of required hyperbolic PDE solves | nPDE |
| Relative change of L2-distance | |
| Relative change of objective value | |
| Relative change of reduced gradient | ‖gh‖∞,rel |
| Determinant of deformation gradient | det(F1) |
| Relative power spectrum of the coefficients vl | |

We visually support this quantitative analysis on the basis of snapshots of the registration results. Information on the reconstruction accuracy can be obtained from pointwise maps of the residual difference between mR and m1. The deformation regularity and the mass conservation can be assessed via images of the pointwise determinant of the deformation gradient and/or of a deformed grid overlaid onto m1. Details on how these are obtained and on how to interpret them can be found in Appendix D.

5.3. Numerical study

We study the spectral properties of the Hessian (see section 5.3.1), grid convergence (see section 5.3.2), as well as the influence of an increase in the number of the unknowns in time (see section 5.3.3).

5.3.1. Spectral analysis

Purpose

We study the ill-posedness and the ill-conditioning of the problem at hand. We report spectral properties of ℋh. We study the eigenvalues and the eigenvectors with respect to different choices for β. We study the differences between plain H2-regularization and the Stokes regularization scheme (H1-regularization).

Setup

This study is based on the UT images (the true solution vh,★ is divergence free; see section 5.1 for more details on the construction of this synthetic registration problem; nx = (64, 64)⊤ and nc = 1, so that n = 8192). The eigendecomposition $\mathcal{H}_h = V \Lambda V^{-1}$, $V = [\nu_1, \ldots, \nu_n]$, $\nu_i \in \mathbb{R}^n$, $\|\nu_i\|_2 = 1$, $\Lambda = \operatorname{diag}(\Lambda_{11}, \ldots, \Lambda_{nn})$, $\Lambda_{ii} > 0$, is computed at the true solution vh,★ to guarantee that ℋh ≻ 0. The spectrum is computed for three different choices of β: the unregularized problem (β = 0), an empirically determined (moderate) value (β = 1E–3), and a large value for which the regularization model dominates (β = 1E6).

Results

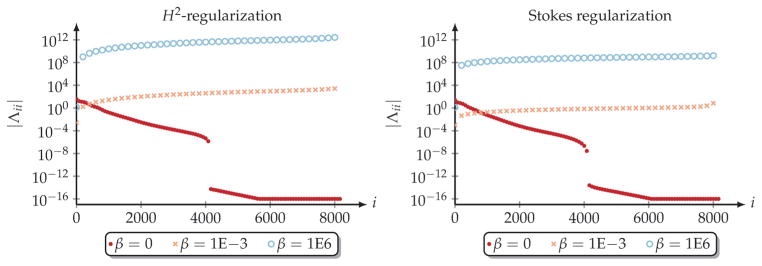

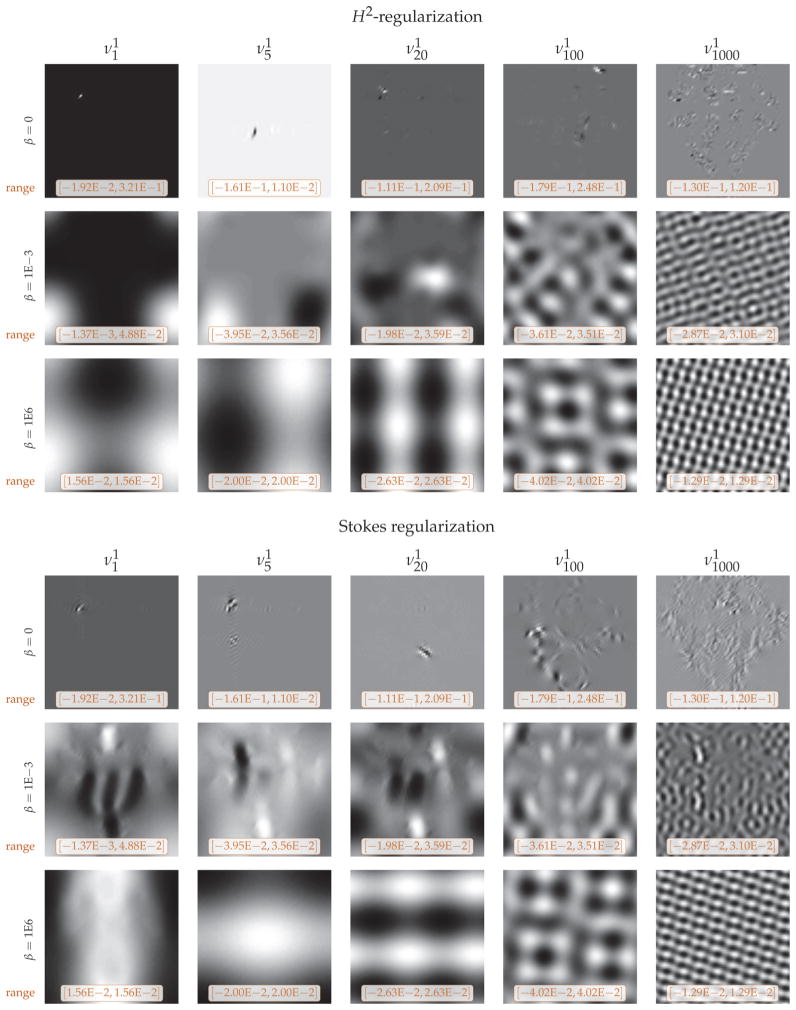

Figure 2 displays the trend of the absolute value of the eigenvalues Λii, i = 1, …, n. They are sorted in descending order for β = 0 and in ascending order otherwise. If an eigenvalue drops below machine precision (i.e., 1E–16), it is set to 1E–16 (only for visualization purposes). The extremal real and imaginary parts of the eigenvalues are summarized in Table 3. Figure 3 shows the spatial variation of the eigenvectors νi ∈ Rn that correspond to the eigenvalues Λii, i ∈ {1, 5, 20, 100, 1000}, in Figure 2 for different choices of β and different regularization schemes. We only display the first component of the coefficient field vl (l = nc = 1). The pattern for the second component is qualitatively similar.

Figure 2.

Trend of the absolute value of the eigenvalues Λii, i = 1, …, 8192, of the reduced Hessian ℋh for plain H2-regularization (γ = 0; left) and the Stokes regularization scheme (γ = 1; H1-regularization; right) for β ∈ {0, 1E–3, 1E6} (as indicated in the legend of the plots). We report the trend of the entire set of 8192 eigenvalues. The test problem is the UT images (see section 5.1 for details on the construction of this synthetic registration problem; nx = (64, 64)⊤ and nc = 1). The Hessian is computed at the true solution vh,★ to ensure that ℋh ≻ 0 (this statement is confirmed by the values reported in Table 3). The eigenvalues (absolute value) are sorted in descending order for the unregularized problem (i.e., for β = 0) and in ascending order otherwise (i.e., for β = 1E–3 and β = 1E6).

Table 3.

Extrema of the eigenvalues Λii, i = 1, …, 8192, of the reduced Hessian reported in Figure 2. We report values for plain H2-regularization (γ = 0; top block) and the Stokes regularization scheme (γ = 1; H1-regularization; bottom block). We refer to Figure 2 and the text for details on the experimental setup. We report the smallest and the largest real part as well as the largest absolute value of the imaginary part of the eigenvalues Λii for different choices of the regularization parameter β ∈ {0, 1E–3, 1E6}.

| Regularization | β | Smallest real part | Largest real part | Largest absolute imaginary part |
|---|---|---|---|---|
| H2-regularization (γ = 0) | 0 | −8.35E–15 | 3.72E1 | 3.26E–7 |
| | 1E–3 | 2.59E–3 | 4.19E3 | 0 |
| | 1E6 | 1.30 | 4.19E12 | 0 |
| Stokes regularization (γ = 1) | 0 | −3.74E–13 | 2.48E1 | 5.92E–6 |
| | 1E–3 | 1.00E–3 | 2.56E1 | 2.59E–6 |
| | 1E6 | 1.29 | 2.05E9 | 1.43E–7 |

Figure 3.

Eigenvector plots of the reduced Hessian ℋh ∈ Rn×n, n = 8192, for β ∈ {0, 1E–3, 1E6} for plain H2-regularization (γ = 0; top) and for the Stokes regularization scheme (γ = 1; H1-regularization; bottom). The results correspond to the eigenvalue plots reported in Figure 2. We refer to Figure 2 and the text for details on the experimental setup. Each plot provides the spatial variation of the portion of an eigenvector νi ∈ Rn associated with the first component of the coefficient field vl, l = nc = 1. The individual plots correspond to the eigenvalues Λii > 0, i = 1, 5, 20, 100, 1000, in Figure 2. The range of the displayed values is provided below each plot.

Observations

The most important observations are that (i) the regularization schemes display a very similar behavior (as judged by the clustering of the eigenvalues as well as the spatial variation of the eigenvectors for β = 1E6), (ii) the smoothness of the eigenvectors decreases with a decreasing regularization parameter β and increasing eigenvalues (for β = 1E–3 and β = 1E6) for both regularization schemes, and (iii) it is less clear how to identify the smooth eigenvectors within the eigenspace of the Stokes regularization scheme.

The eigenvalues Λii, i = 1, …, 8192, drop rapidly for the unregularized problem, approaching almost machine precision for i ≈ 4000 (see Figure 2). This demonstrates ill-conditioning and ill-posedness. The eigenvalues are bounded away from zero for the regularized problem. Increasing β shifts the trend of |Λii| to larger numbers. The values in Table 3 confirm that ℋh ≻ 0 (up to almost machine precision) at the true solution vh, ★.

Turning to the eigenvector plots, we can see that for the unregularized problem (β = 0) the first eigenvector displays a delta-peak-like structure for both regularization schemes, since there is no local coupling of the spatial information. For the regularized problem we can observe a smooth spatial variation for the eigenvectors associated with large eigenvalues for both regularization schemes. The first eigenvector plot is almost constant for β = 1E6 (bottom row of each block in Figure 3). The structure of the pattern for β = 1E6 is analogous for both schemes, which indicates similarities in their behavior. As the index i increases, the eigenvectors become more oscillatory. We can observe strong differences between the two schemes for a moderate regularization (β = 1E–3; middle row of each block in Figure 3). Also, we can observe a more complex structure for the Stokes regularization scheme for small eigenvalues (i.e., it is difficult to identify where the smoothest eigenvectors are located within the eigenspace).

Conclusion

We conclude that the Hessian operator is singular if we do not include a smoothness regularization model for the control variable. For practical values of the regularization parameter, the Hessian behaves as a compact operator; larger eigenvalues are associated with smooth eigenvectors. It is well known that designing a preconditioner for such operators is challenging.

5.3.2. Convergence study

We study the grid convergence of the considered iterative optimization methods on the basis of synthetic registration problems. We use a fixed setting to prevent bias originating from adaptive changes during the computations. That is, the results are computed on a single resolution level; no grid, scale, or parameter continuation is applied. The number of time points is fixed to nt = 4 max(nx) for all experiments. Further, we use empirically determined values for the regularization parameter β, namely, β ∈ {1E–2, 1E–3}. Since we are interested in studying the convergence properties of our method, we use the relative change of the ℓ∞-norm of the reduced gradient gh as a stopping criterion. This yields a fair comparison between the different optimization methods, as a reduction in the norm of gh directly reflects how well an optimization problem is solved (i.e., we exploit that gh = 0 is a necessary condition for a minimizer). We terminate once the ℓ∞-norm of gh has been reduced by at least three orders of magnitude. However, since the Picard method tends to converge slowly for small tolerances with respect to the gradient, we also stop if we detect a stagnation in the objective. In particular, we terminate the optimization if the change in the objective in ten consecutive iterations was less than or equal to 1E–6. We solve for a stationary velocity field (i.e., nc = 1).
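The two stopping rules of this study can be sketched as a small helper. Interpreting "change in the objective" as the absolute difference between successive values of 𝒥h is an assumption, and the function name is hypothetical.

```python
import numpy as np


def convergence_study_stop(grad_norms, objectives, reduction=1e3,
                           window=10, stall_tol=1e-6):
    """Hypothetical stop rule: gradient reduced by `reduction`, or stagnation.

    grad_norms : l_inf norms of g_h, index 0 = initial iterate
    objectives : values of J_h, index 0 = initial iterate
    """
    if grad_norms[-1] <= grad_norms[0] / reduction:      # three orders of magnitude
        return True
    if len(objectives) > window:
        changes = np.abs(np.diff(objectives[-(window + 1):]))
        if np.all(changes <= stall_tol):                   # ten stagnant iterations in a row
            return True
    return False
```

The Newton–Krylov runs in Table 4 stop via the first rule, whereas the Picard runs are stopped via the second.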

C∞ registration problem

Purpose

We study the numerical behavior for smooth registration problems. We report results for grid convergence and deformation regularity. We compare the Picard, GN-PCG, and N-PCG methods.

Setup

This experiment is based on the sinusoidal images (see section 5.1 for more details on the construction of this synthetic registration problem). Therefore, mT, mR ∈ C∞(Ω) and v★ ∈ L2([0, 1]; C∞(Ω)^d), so that the excellent convergence properties of Fourier spectral methods are expected to pay off. Additionally, it is not problematic to apply the N-PCG method. We report results for different grid sizes $n_x^i \in \{64, 128, 256\}$, i = 1, 2, with nt = 4 max(nx). No presmoothing is applied. We use an experimentally determined value of β = 1E–3 for all experiments. The remaining parameters are chosen as stated in the introduction to this section and in section 4.3.5.

Results

The grid convergence results are summarized in Table 4. Values derived from the deformation gradient are reported in Table 5. Exemplary results for the plain H2-regularization (γ = 0) and the Stokes regularization scheme (γ = 1; H1-regularization) are displayed in Figure 4. The definitions of the quantitative measures reported in Table 4 and Table 5 can be found in Table 2.

Table 4.

Quantitative analysis of the convergence of the Picard, N-PCG, and GN-PCG methods. The test problem is the sinusoidal images (see section 5.1 for details on the construction of this synthetic registration problem). We compare convergence results for plain H2-regularization (γ = 0; top block) and the Stokes regularization scheme (γ = 1; H1-regularization; bottom block). We report results for different grid sizes $n_x^i \in \{64, 128, 256\}$, i = 1, 2. We invert for a stationary velocity field (i.e., nc = 1). We terminate the optimization if the ℓ∞-norm of the reduced gradient gh has been reduced by at least three orders of magnitude or if the change in 𝒥h between 10 successive iterations is less than or equal to 1E–6 (i.e., the algorithm stagnates). The regularization parameter is empirically set to β = 1E–3. We report the number of (outer) iterations (k★), the number of hyperbolic PDE solves (nPDE), the relative change of (i) the L2-distance, (ii) the objective, and (iii) the (reduced) gradient (||gh||∞,rel), and the average number of required line search steps ᾱ. Note that we introduced a memory for the step size into the Picard method (preconditioned gradient descent) to stabilize the optimization (see section 4.3.5 and the description of the results). The definitions of the reported measures are summarized in Table 2.

| Regularization | Method | $n_x^i$ | k★ | nPDE | Rel. L2-distance | Rel. objective | ‖gh‖∞,rel | ᾱ |
|---|---|---|---|---|---|---|---|---|
| H2-regularization | Picard | 64 | 30 | 420 | 4.78E–3 | 6.05E–2 | 7.86E–3 | 1.72 |
| | | 128 | 34 | 414 | 4.69E–3 | 6.04E–2 | 6.48E–3 | 1.82 |
| | | 256 | 33 | 414 | 4.68E–3 | 6.03E–2 | 7.42E–3 | 1.75 |
| | GN-PCG | 64 | 5 | 78 | 4.63E–3 | 6.05E–2 | 5.57E–5 | 1.00 |
| | | 128 | 4 | 45 | 4.59E–3 | 6.04E–2 | 5.60E–4 | 1.00 |
| | | 256 | 4 | 45 | 4.58E–3 | 6.03E–2 | 5.10E–4 | 1.00 |
| | N-PCG | 64 | 5 | 63 | 4.63E–3 | 6.05E–2 | 1.94E–4 | 1.00 |
| | | 128 | 5 | 61 | 4.57E–3 | 6.04E–2 | 2.83E–4 | 1.00 |
| | | 256 | 5 | 61 | 4.57E–3 | 6.03E–2 | 2.86E–4 | 1.00 |
| Stokes regularization | Picard | 64 | 57 | 269 | 6.61E–4 | 1.56E–2 | 2.55E–3 | 1.68 |
| | | 128 | 52 | 250 | 5.79E–4 | 1.55E–2 | 3.55E–3 | 1.65 |
| | | 256 | 50 | 233 | 5.67E–4 | 1.55E–2 | 2.31E–3 | 1.71 |
| | GN-PCG | 64 | 5 | 88 | 5.86E–4 | 1.56E–2 | 7.30E–5 | 1.00 |
| | | 128 | 5 | 86 | 4.87E–4 | 1.55E–2 | 8.09E–5 | 1.00 |
| | | 256 | 5 | 86 | 4.86E–4 | 1.55E–2 | 8.33E–5 | 1.00 |
| | N-PCG | 64 | 5 | 75 | 5.86E–4 | 1.56E–2 | 2.60E–4 | 1.00 |
| | | 128 | 5 | 75 | 4.87E–4 | 1.55E–2 | 2.69E–4 | 1.00 |
| | | 256 | 5 | 75 | 4.87E–4 | 1.55E–2 | 2.51E–4 | 1.00 |

Table 5.

Obtained values for det(F1) of exemplary registration results of the convergence study reported in Table 4 for different iterative optimization methods (Picard, N-PCG, and GN-PCG). We refer to Table 4 and the text for details on the experimental setup. We report results for plain H2-regularization (γ = 0; top block) and for the Stokes regularization scheme (γ = 1; H1-regularization; bottom block) for a grid size of nx = (256, 256)⊤.

| Regularization | Method | | | | |
|---|---|---|---|---|---|
| H2-regularization | Picard | 8.81E–1 | 1.19 | 1.00 | 7.58E–2 |
| | GN-PCG | 8.83E–1 | 1.19 | 1.00 | 7.56E–2 |
| | N-PCG | 8.83E–1 | 1.19 | 1.00 | 7.54E–2 |
| Stokes regularization | Picard | 1.00 | 1.00 | 1.00 | 4.55E–12 |
| | GN-PCG | 1.00 | 1.00 | 1.00 | 4.52E–12 |
| | N-PCG | 1.00 | 1.00 | 1.00 | 4.52E–12 |

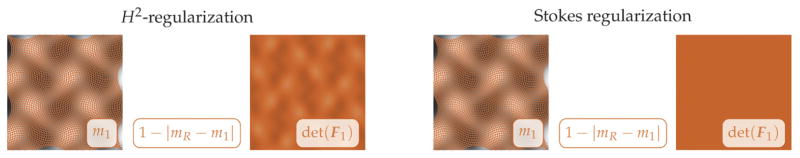

Figure 4.

Qualitative comparison of exemplary registration results of the convergence study reported in Table 4. In particular, we display the results for the N-PCG method for a grid size of nx = (256, 256)⊤. We refer to Table 4 and the text for details on the experimental setup. We report results for plain H2-regularization (γ = 0; images to the left) and the Stokes regularization scheme (γ = 1; H1-regularization; images to the right). We display the deformed template image m1, a pointwise map of the residual differences between mR and m1 (which appears completely white, as the residual differences are extremely small), as well as a pointwise map of the determinant of the deformation gradient det(F1) (from left to right as identified by the inset in the images). The values for det(F1) are reported in Table 5. Information on how to interpret these images can be found in Appendix D.

Observations

The most important observations are that (i) there are significant differences in computational work between the Picard and Newton–Krylov methods with the latter being much more efficient, (ii) the differences between the Newton–Krylov methods are insignificant, (iii) the rate of convergence is independent of the grid resolution, and (iv) the numerical accuracy is almost at the order of machine precision.

The registered images are in excellent agreement, both quantitatively (see Table 4) and qualitatively (see Figure 4). For the considered tolerance (reduction of the ℓ∞-norm of the reduced gradient by three orders of magnitude), we can reduce the L2-distance by three (compressible deformation) to four (incompressible deformation) orders of magnitude (see Table 4). The search directions of the Newton–Krylov methods are nicely scaled; no additional line search steps are necessary. On average, we require 1.65 to 1.82 line search steps for the Picard iteration. Note that we prescale the search direction of the Picard method by an additional parameter α̃k, which is estimated during the computation (see section 4.3.5 for details). Otherwise, the number of line search steps would be seven to eight on average for the Picard method. The Picard method stagnated during the computations, which is why the gradient has not been reduced by three orders of magnitude for this method. In general, however, it is possible to reduce the gradient accordingly. We report results for the Picard method only until stagnation, as the number of iterations would significantly increase without any real progress.

The Newton–Krylov methods display quick convergence. Only five outer iterations are necessary to reduce the gradient by more than four orders of magnitude. The results demonstrate a significant difference in the computational work between first- and second-order methods for the considered tolerance.
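The gap between first- and second-order methods can be reproduced on a small toy problem. The sketch below assumes a hypothetical strongly convex objective (a diagonal quadratic part plus a quartic term) and compares a matrix-free inexact Newton method, with conjugate gradients on Hessian-vector products and an assumed forcing term η_k = min(0.5, (‖g_k‖∞/‖g_0‖∞)^{1/2}), against plain gradient descent using the same Armijo line search. Unlike the Picard method in the text, this gradient descent is not preconditioned.

```python
import numpy as np


def make_problem(n=50, cond=1e2, seed=0):
    """Toy objective f(x) = 0.5 x^T D x + 0.25 sum(x^4) - b^T x (hypothetical)."""
    rng = np.random.default_rng(seed)
    d = np.logspace(0, np.log10(cond), n)          # eigenvalues of the quadratic part
    b = rng.standard_normal(n)
    f = lambda x: 0.5 * x @ (d * x) + 0.25 * np.sum(x**4) - b @ x
    grad = lambda x: d * x + x**3 - b
    hessvec = lambda x, v: d * v + 3.0 * x**2 * v  # matrix-free Hessian-vector product
    return f, grad, hessvec


def cg(matvec, rhs, rtol, maxiter=200):
    """Conjugate gradients for H p = rhs, stopped once ||r|| <= rtol * ||rhs||."""
    p = np.zeros_like(rhs)
    r = rhs.copy()
    s = r.copy()
    rr = r @ r
    stop = rtol**2 * rr
    it = 0
    while rr > stop and it < maxiter:
        Hs = matvec(s)
        a = rr / (s @ Hs)
        p += a * s
        r -= a * Hs
        rr_new = r @ r
        s = r + (rr_new / rr) * s
        rr = rr_new
        it += 1
    return p, it


def armijo(f, x, g, p, c=1e-4, max_trials=60):
    """Backtracking (step halving) line search; returns the step and the number of trials."""
    fx, slope, alpha = f(x), g @ p, 1.0
    for trial in range(1, max_trials + 1):
        if f(x + alpha * p) <= fx + c * alpha * slope:
            return alpha, trial
        alpha *= 0.5
    raise RuntimeError("line search failed")


def newton_krylov(f, grad, hessvec, x, tol=1e-3, maxit=50):
    """Inexact Newton-CG; returns (x, outer iterations, total inner CG iterations)."""
    g0 = np.linalg.norm(grad(x), np.inf)
    inner = 0
    for k in range(maxit):
        g = grad(x)
        gnorm = np.linalg.norm(g, np.inf)
        if gnorm <= tol * g0:
            return x, k, inner
        eta = min(0.5, np.sqrt(gnorm / g0))        # forcing term (assumed choice)
        p, it = cg(lambda v: hessvec(x, v), -g, eta)
        inner += it
        alpha, _ = armijo(f, x, g, p)
        x = x + alpha * p
    return x, maxit, inner


def gradient_descent(f, grad, x, tol=1e-3, maxit=100_000):
    """Steepest descent with the same line search; returns (x, iterations)."""
    g0 = np.linalg.norm(grad(x), np.inf)
    for k in range(maxit):
        g = grad(x)
        if np.linalg.norm(g, np.inf) <= tol * g0:
            return x, k
        alpha, _ = armijo(f, x, g, -g)
        x = x - alpha * g
    return x, maxit
```

On such a problem the Newton iteration is expected to need only a handful of outer iterations, whereas the number of gradient descent iterations grows with the condition number of the quadratic part.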

The reconstruction quality improves by approximately one order of magnitude when switching from plain H2-regularization (γ = 0) to the Stokes regularization scheme (γ = 1; H1-regularization), as judged by the relative change in the L2-distance. This is expected for two reasons. First, the synthetic problem has been created under the assumption of mass conservation (i.e., ∇ · v★ = 0). Second, for the same value of β, we expect the H1-regularization model to contribute less to the solution than the H2-regularization model.
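With a Fourier discretization, the incompressibility condition ∇ · v = 0 is diagonal in frequency space. The sketch below uses the standard Leray projection, ŵ = v̂ − k (k · v̂)/|k|², to illustrate that a divergence-free field can be obtained and verified to round-off on a periodic grid. This is an illustration only: we do not claim that the Stokes regularization scheme is implemented this way, and the periodic domain [0, 2π)² and the helper names are assumptions.

```python
import numpy as np


def wavenumbers(n):
    """Integer wavenumbers for an n x n grid on [0, 2*pi)^2 (axis 0 = x1, axis 1 = x2)."""
    k = np.fft.fftfreq(n, d=1.0 / n)
    return np.meshgrid(k, k, indexing="ij")


def divergence(v1, v2):
    """Spectral divergence d(v1)/dx1 + d(v2)/dx2 of a periodic 2D field."""
    k1, k2 = wavenumbers(v1.shape[0])
    div_hat = 1j * k1 * np.fft.fft2(v1) + 1j * k2 * np.fft.fft2(v2)
    return np.real(np.fft.ifft2(div_hat))


def leray_project(v1, v2):
    """Remove the gradient part of v: w_hat = v_hat - k (k . v_hat) / |k|^2."""
    k1, k2 = wavenumbers(v1.shape[0])
    ksq = k1**2 + k2**2
    ksq[0, 0] = 1.0  # the mean mode has k = 0, so it is left unchanged
    v1h, v2h = np.fft.fft2(v1), np.fft.fft2(v2)
    kv = (k1 * v1h + k2 * v2h) / ksq
    return (np.real(np.fft.ifft2(v1h - k1 * kv)),
            np.real(np.fft.ifft2(v2h - k2 * kv)))
```

A field that is already divergence-free (for example, one derived from a stream function) passes through the projection unchanged.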

From a theoretical point of view, we expect N-PCG to outperform GN-PCG (quadratic versus superlinear convergence). The reported results, however, demonstrate almost identical performance. This is because we can drive the residual almost to zero, so that the GN-PCG method recovers fast local convergence (see section 4.2.2 for details).
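Why Gauss–Newton recovers fast local convergence for (almost) zero residuals can be seen on a tiny nonlinear least-squares problem. The full Newton Hessian of ½‖r(x)‖² is JᵀJ + Σᵢ rᵢ∇²rᵢ; Gauss–Newton drops the second term, which vanishes at a zero-residual solution. The residual function below is a hypothetical toy example, not the registration problem.

```python
import numpy as np

X_TRUE = np.array([0.5, -0.3])  # hypothetical exact solution


def F(x):
    """Toy forward model with 3 outputs and 2 unknowns."""
    return np.array([x[0]**2 + x[1], np.exp(x[0]) + x[1]**2, x[0] * x[1]])


def J(x):
    """Jacobian of F."""
    return np.array([[2 * x[0], 1.0], [np.exp(x[0]), 2 * x[1]], [x[1], x[0]]])


def second_order_term(x, r):
    """sum_i r_i * Hess(r_i): the part of the Hessian that Gauss-Newton drops."""
    H0 = np.array([[2.0, 0.0], [0.0, 0.0]])
    H1 = np.array([[np.exp(x[0]), 0.0], [0.0, 2.0]])
    H2 = np.array([[0.0, 1.0], [1.0, 0.0]])
    return r[0] * H0 + r[1] * H1 + r[2] * H2


def solve(x, y, full_newton, tol=1e-10, maxit=50):
    """Full Newton (full_newton=True) or Gauss-Newton for min 0.5 * ||F(x) - y||^2."""
    errors = []
    for k in range(maxit):
        r = F(x) - y                  # residual; zero at the solution
        Jx = J(x)
        g = Jx.T @ r                  # gradient of 0.5 * ||r||^2
        errors.append(np.linalg.norm(x - X_TRUE))
        if np.linalg.norm(g) <= tol:
            return x, k, errors
        H = Jx.T @ Jx                 # Gauss-Newton approximation
        if full_newton:
            H = H + second_order_term(x, r)
        x = x - np.linalg.solve(H, g)
    return x, maxit, errors
```

With data y = F(X_TRUE), the dropped term tends to zero as the iterates approach the solution, so both variants are expected to converge in a few iterations, mirroring the almost identical behavior of N-PCG and GN-PCG reported above.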

The Picard method converges faster for the Stokes regularization scheme. However, the differences between the Picard and the Newton–Krylov methods remain significant, with an approximately four-fold difference in nPDE. For the Newton–Krylov methods, we observe an overall slight increase in the number of inner iterations when switching from plain H2-regularization to the Stokes regularization scheme. We attribute these differences to variations in the relative change of the reduced gradient.

The results reported in Table 5 demonstrate an excellent numerical accuracy for the mass conservation for all numerical schemes. The error in the determinant of the deformation gradient is 𝒪(1E–12), i.e., we achieve an accuracy that is almost at the order of machine precision for a grid resolution of nx = (256, 256)⊤ and nt = 1024.
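The link between incompressibility and det(F1) = 1 follows from Liouville's formula, d/dt det F = (∇ · v) det F along trajectories. For the toy linear velocity field v(x) = Ax (an assumption made for illustration), ∇v = A is constant, F solves dF/dt = AF, and det F(t) = exp(t tr A), which equals one exactly when tr A = ∇ · v = 0. The sketch integrates F with classical RK4 using nt = 1024 steps, as in the text; the function name is hypothetical.

```python
import numpy as np


def deformation_gradient(A, T=1.0, nt=1024):
    """Integrate dF/dt = A F, F(0) = I, with classical RK4 using nt time steps.

    For v(x) = A x, grad v = A is constant, so F(T) = expm(T A)
    and det F(T) = exp(T * trace(A)).
    """
    F = np.eye(A.shape[0])
    dt = T / nt
    for _ in range(nt):
        k1 = A @ F
        k2 = A @ (F + 0.5 * dt * k1)
        k3 = A @ (F + 0.5 * dt * k2)
        k4 = A @ (F + dt * k3)
        F = F + (dt / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return F
```

For a trace-free A (a divergence-free velocity) the computed det F stays within 1E–10 of one, whereas a compressible A with tr A = 0.3 yields det F ≈ exp(0.3).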

Conclusion

We conclude that the Newton–Krylov methods can be used interchangeably. Since N-PCG is more sensitive to noise and discontinuities in the data, we exclusively consider GN-PCG for the remainder of the experiments. Further, if an inversion with high accuracy is required, the Newton–Krylov methods clearly outperform the Picard method (i.e., the preconditioned gradient descent).

Images with sharp features

Purpose

We study the grid convergence and deformation regularity for an image with sharp features. We compare the Picard and the GN-PCG method.

Setup

We consider the UT images (see section 5.1 for details on the construction of this synthetic registration problem). We report results for experimentally determined values of β ∈ {1E–2, 1E–3} for different grid resolution levels , i = 1, 2, nt = 4 max(nx). The remaining parameters are chosen as stated in the introduction of this section and in section 4.3.5. We consider both plain H2-regularization (γ = 0) and the Stokes regularization scheme (γ = 1; H1-regularization).

For images of size nx = (256, 256)⊤ and the Stokes regularization scheme, we observed difficulties in the inversion due to a strong forcing: the sharp features pushed the solver at an early stage toward a solution that was far away from the final minimizer. Only the number of outer and inner iterations increased; the algorithm still converged to the same solution. As a remedy, we increased the smoothing (σ) by a factor of two. This is not an issue for the practical application of our algorithm, as our framework features a scale continuation as well as a continuation in the regularization parameter; the user therefore does not have to choose σ or β. In addition, we are currently investigating adaptive approaches that automatically detect insufficient smoothness during the optimization to prevent a deterioration of the convergence behavior.
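Smoothing the input images is cheap with a spectral discretization: convolution with a Gaussian becomes multiplication of the Fourier coefficients by exp(−σ²|k|²/2). The sketch below assumes periodic images on [0, 2π)² and measures σ in the same length units (the paper may define σ differently, for example in grid cells); it shows that doubling σ damps high frequencies further while preserving the mean intensity. The helper names are hypothetical.

```python
import numpy as np


def gaussian_smooth(m, sigma):
    """Spectral Gaussian smoothing of a periodic image on [0, 2*pi)^2."""
    n1, n2 = m.shape
    k1 = np.fft.fftfreq(n1, d=1.0 / n1)
    k2 = np.fft.fftfreq(n2, d=1.0 / n2)
    K1, K2 = np.meshgrid(k1, k2, indexing="ij")
    kernel = np.exp(-0.5 * sigma**2 * (K1**2 + K2**2))  # Fourier transform of a Gaussian
    return np.real(np.fft.ifft2(kernel * np.fft.fft2(m)))


def roughness(m):
    """Sum of squared periodic forward differences (a discrete |grad m|^2)."""
    return (np.sum((np.roll(m, -1, axis=0) - m) ** 2)
            + np.sum((np.roll(m, -1, axis=1) - m) ** 2))
```

Because the kernel equals one at k = 0 and decreases with σ at every other frequency, a larger σ can only reduce the energy in the high frequencies that drive the strong early forcing described above.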


Results

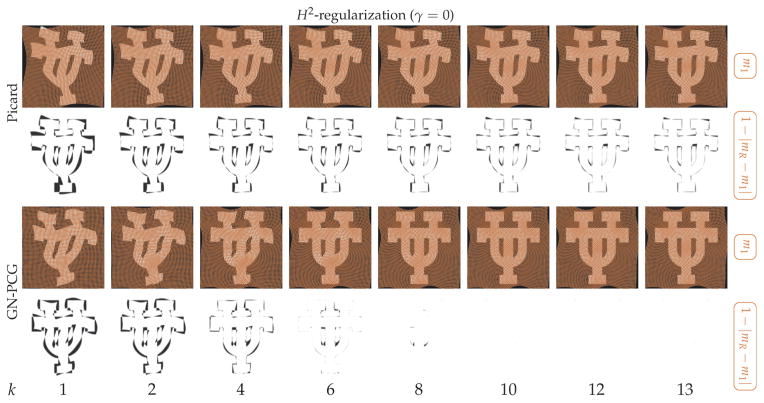

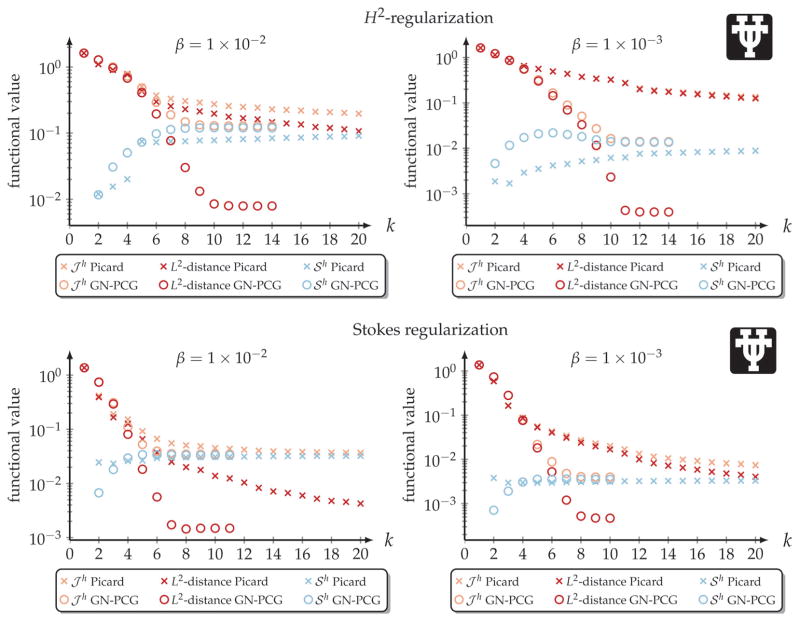

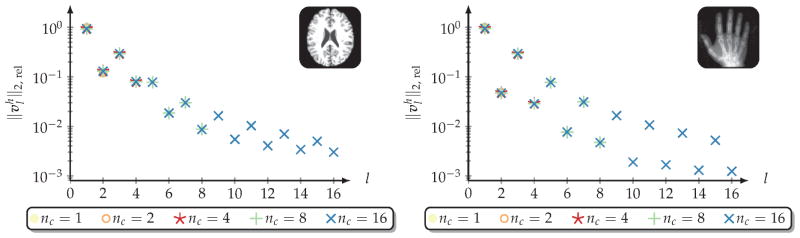

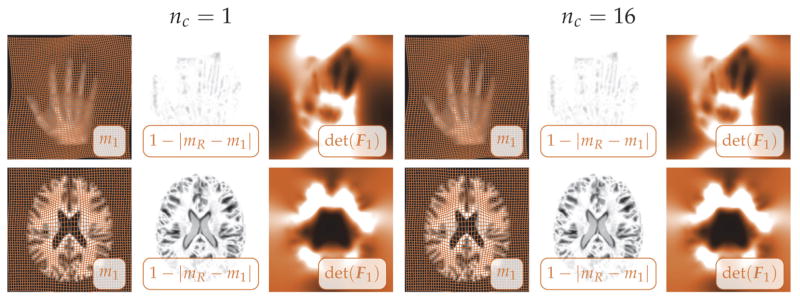

Table 6 summarizes the results of the convergence study. We illustrate intermediate results with respect to the first 13 (outer) iterations k in Figure 5 (plain H2-regularization; γ = 0). We report the trend of the individual building blocks of 𝒥h (contribution of the L2-distance and the regularization model 𝒮h) in Figure 6. We report measures of deformation regularity in Table 7.
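The building blocks of 𝒥h can be evaluated spectrally. The sketch below assumes that the objective has the form 𝒥 = ½‖m1 − mR‖²_{L2} + (β/2)|v|², with the H1-seminorm |v|² = ∫|∇v|² dx and, as a further assumption, the H2-seminorm |v|² = ∫|Δv|² dx; both are evaluated by Parseval's identity on a periodic grid on [0, 2π)². Function names are hypothetical.

```python
import numpy as np


def seminorm_sq(v, order):
    """Squared H1 (order=1: int |grad v|^2) or H2 (order=2: int |lap v|^2) seminorm.

    v has shape (2, n, n): a periodic 2D vector field on [0, 2*pi)^2.
    """
    n = v.shape[1]
    k = np.fft.fftfreq(n, d=1.0 / n)
    K1, K2 = np.meshgrid(k, k, indexing="ij")
    ksq = K1**2 + K2**2
    weight = ksq if order == 1 else ksq**2
    h2 = (2 * np.pi / n) ** 2                 # area of one grid cell
    vhat = np.fft.fft2(v, axes=(1, 2))
    # Parseval for numpy's unnormalized FFT: sum |x|^2 = sum |x_hat|^2 / n^2.
    return h2 * np.sum(weight * np.abs(vhat) ** 2) / n**2


def objective(m1, mR, v, beta, order):
    """Assumed J = 0.5 ||m1 - mR||^2 + 0.5 beta |v|^2; returns (J, distance, regularization)."""
    n = m1.shape[0]
    h2 = (2 * np.pi / n) ** 2
    dist = 0.5 * h2 * np.sum((m1 - mR) ** 2)
    reg = 0.5 * beta * seminorm_sq(v, order)
    return dist + reg, dist, reg
```

For v = (sin x1, 0), both seminorms equal 2π², which gives a quick sanity check; a constant field has zero seminorm.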

Table 6.

Quantitative analysis of the convergence for the Picard and the GN-PCG method. The test problem is the UT images (see section 5.1 for more details on the construction of this synthetic registration problem). We compare convergence results for plain H2-regularization (γ = 0; top block) and the Stokes regularization scheme (γ = 1; bottom block; H1-regularization) for empirically chosen regularization parameters β ∈ {1E–2, 1E–3}. We report results for different grid sizes , i = 1, 2, nt = 4 max(nx). We invert for a stationary velocity field (i.e., nc = 1). We terminate the optimization if the ℓ∞-norm of the reduced gradient gh has been reduced by at least three orders of magnitude (relative change) or if the change in 𝒥h between 10 successive iterations is below or equal to 1E–6 (i.e., the algorithm stagnates). We report the number of (outer) iterations (k★), the number of hyperbolic PDE solves (nPDE), the relative change of (i) the L2-distance, (ii) the objective, and (iii) the (reduced) gradient (||gh||∞,rel), as well as the average number of line search steps ᾱ. Note that we introduced a memory for the step size into the Picard method to stabilize the optimization (see section 4.3.5 and the description of the results). The definitions of the reported measures can be found in Table 2. This study directly relates to the results for the smooth registration problem (see section 5.3.2, in particular Table 4).

| Regularization | β | Method | nx | k★ | nPDE | L2-distance (rel.) | 𝒥h (rel.) | ‖gh‖∞,rel | ᾱ |
|---|---|---|---|---|---|---|---|---|---|
| H2-regularization | 1E–2 | Picard | (64, 64)⊤ | 130 | 752 | 1.99E–2 | 1.18E–1 | 2.06E–2 | 1.68 |
| | | | (128, 128)⊤ | 231 | 1589 | 8.29E–3 | 8.47E–2 | 4.03E–2 | 1.72 |
| | | | (256, 256)⊤ | 388 | 3022 | 5.05E–3 | 7.92E–2 | 9.86E–2 | 1.68 |
| | | GN-PCG | (64, 64)⊤ | 7 | 282 | 1.94E–2 | 1.16E–1 | 3.30E–4 | 1.00 |
| | | | (128, 128)⊤ | 9 | 450 | 8.09E–3 | 8.37E–2 | 6.07E–4 | 1.00 |
| | | | (256, 256)⊤ | 13 | 789 | 4.87E–3 | 7.85E–2 | 7.28E–4 | 1.00 |
| | 1E–3 | Picard | (64, 64)⊤ | 339 | 4410 | 1.84E–3 | 1.54E–2 | 2.46E–2 | 1.56 |
| | | | (128, 128)⊤ | 466 | 9671 | 7.19E–4 | 9.84E–3 | 5.98E–2 | 1.64 |
| | | | (256, 256)⊤ | 632 | 8690 | 5.35E–4 | 8.81E–3 | 1.08E–1 | 1.64 |
| | | GN-PCG | (64, 64)⊤ | 7 | 670 | 1.44E–3 | 1.50E–2 | 2.59E–4 | 1.00 |
| | | | (128, 128)⊤ | 8 | 929 | 4.47E–4 | 9.60E–3 | 4.76E–4 | 1.00 |
| | | | (256, 256)⊤ | 13 | 1744 | 2.45E–4 | 8.55E–3 | 4.04E–4 | 1.00 |
| Stokes regularization | 1E–2 | Picard | (64, 64)⊤ | 92 | 448 | 2.73E–3 | 3.62E–2 | 7.54E–3 | 1.64 |
| | | | (128, 128)⊤ | 136 | 958 | 8.92E–4 | 2.38E–2 | 1.63E–2 | 1.72 |
| | | | (256, 256)⊤ | 143 | 1004 | 1.09E–3 | 2.53E–2 | 1.75E–2 | 1.68 |
| | | GN-PCG | (64, 64)⊤ | 7 | 313 | 2.72E–3 | 3.59E–2 | 4.70E–4 | 1.00 |
| | | | (128, 128)⊤ | 9 | 437 | 8.88E–4 | 2.37E–2 | 5.59E–4 | 1.00 |
| | | | (256, 256)⊤ | 10 | 514 | 1.09E–3 | 2.52E–2 | 5.49E–4 | 1.00 |
| | 1E–3 | Picard | (64, 64)⊤ | 216 | 2823 | 9.99E–4 | 4.69E–3 | 1.34E–2 | 1.56 |
| | | | (128, 128)⊤ | 179 | 5465 | 3.57E–4 | 2.77E–3 | 1.79E–2 | 1.69 |
| | | | (256, 256)⊤ | 175 | 5569 | 5.27E–4 | 3.07E–3 | 2.22E–2 | 1.68 |
| | | GN-PCG | (64, 64)⊤ | 8 | 1162 | 7.57E–4 | 4.55E–3 | 8.23E–4 | 1.00 |
| | | | (128, 128)⊤ | 9 | 1069 | 2.15E–4 | 2.65E–3 | 5.67E–4 | 1.00 |
| | | | (256, 256)⊤ | 9 | 769 | 3.43E–4 | 2.92E–3 | 6.44E–4 | 1.00 |

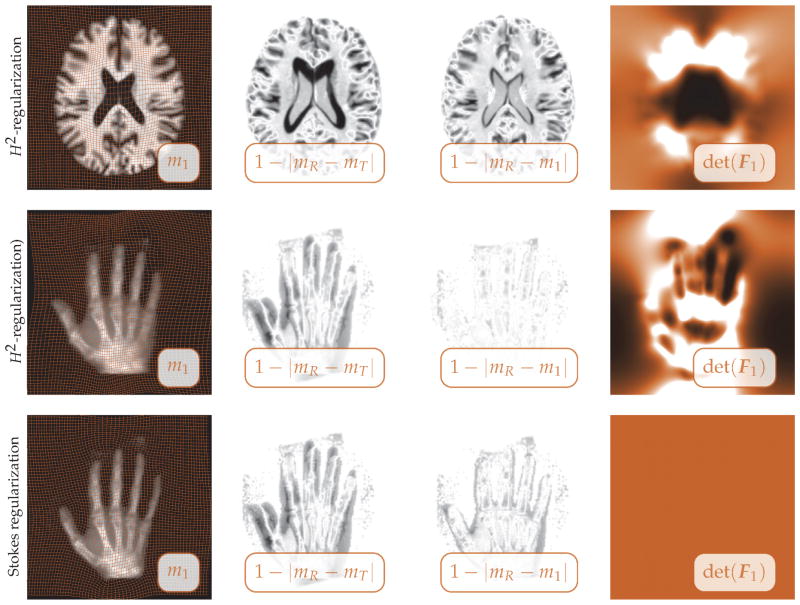

Figure 5.

Illustration of the course of the optimization for the Picard (top block) and the GN-PCG (bottom block) methods with respect to the (outer) iteration index k for exemplary results of the convergence study reported in Table 6. We refer to Table 6 and the text for details on the experimental setup. We report results for plain H2-regularization (γ = 0), with an empirically chosen regularization parameter of β = 1E–3 for images of grid size nx = (256, 256)⊤. We report results until convergence of the GN-PCG method (k★ = 13). We display the deformed template m1 (top row) and a map of the pointwise difference between mR and m1 (bottom row) for both iterative optimization methods (as identified by the inset on the right of the images). Information on how to interpret these images can be found in Appendix D.

Figure 6.

Trend of the objective 𝒥h, the L2-distance, and the regularization model 𝒮h (logarithmic scale) for the Picard and the GN-PCG method with respect to the (outer) iteration index k for exemplary results of the convergence study reported in Table 6. We refer to Table 6 and the text for details on the experimental setup. The trend of the functionals is plotted for different (empirically determined) choices of β (left column: β = 1E–2; right column: β = 1E–3) and a grid size of nx = (256, 256)⊤. We report results for plain H2-regularization (γ = 0; top row) and the Stokes regularization scheme (γ = 1; H1-regularization; bottom row).

Table 7.

Values for the determinant of the deformation gradient for exemplary results of the convergence study reported in Table 6. We report results for the Picard and the GN-PCG method. We refer to Table 6 and the text for details on the experimental setup. We report results for the Stokes regularization scheme (γ = 1; H1-regularization). The regularization parameter is set to β = 1E–3. The grid size is nx = (256, 256)⊤. These results directly relate to those reported for the smooth registration problem (see section 5.3.2, in particular Table 5).

| Method | | | | |
|---|---|---|---|---|
| Picard | 10.00E–1 | 1.00 | 1.00 | 2.03E–5 |
| GN-PCG | 10.00E–1 | 1.00 | 1.00 | 2.29E–5 |

Observations

The most important observations are that (i) the GN-PCG method converges more quickly than the Picard method, (ii) the Picard method cannot achieve the same inversion accuracy as the GN-PCG method, and (iii) the number of (inner and outer) iterations increases and is no longer independent of the resolution level.

The rate of convergence decreases compared to the results reported in the previous section (see Table 4). Overall, more outer and inner iterations are required to solve the registration problem.

The residual differences between mR and m1 clearly depend on the choice of β (see Table 6 and Figure 6). We achieve a similar reduction in the L2-distance for the Picard and the GN-PCG method (two to four orders of magnitude). Compared to the results reported in section 5.3.2, the reduction of the residual differences when switching from plain H2-regularization to the Stokes regularization scheme is less pronounced.

We cannot guarantee that the Picard method reduces the gradient by three orders of magnitude. Even without the stagnation criterion (i.e., the change in 𝒥h is below or equal to 1E–6 for 10 consecutive iterations), it is not possible for some of the experiments to reduce the gradient by three orders of magnitude, as the changes in the objective reach the limit of our numerical accuracy (which causes the line search to fail). We do not observe this issue for the GN-PCG method. Further, there are significant differences in computational work: if we do not account for the stagnation of the Picard method, we observe a number of hyperbolic PDE solves well above 𝒪(1E4). In a practical application, we would clearly terminate the Picard method at an earlier stage, as it no longer makes significant progress; in this part of the study, however, we are interested in the convergence properties. This experiment demonstrates that a high inversion accuracy (i.e., a significant reduction of the gradient) cannot be guaranteed with first-order methods. Note that we have stabilized the Picard method by introducing an additional scaling parameter for the search direction that prevents additional line search steps (see section 4.3.5). Without this scaling, we observe seven to nine line search steps on average for the considered problem (results not included in this study), and the optimization fails at an early stage. The search direction obtained via the GN-PCG method is well scaled; no additional line search steps are necessary.
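The effect of carrying step-size information across iterations can be illustrated with gradient descent and an Armijo backtracking line search. In the sketch below, the memory variant simply starts each line search from the previously accepted step; this is a hypothetical stand-in for the scaling parameter α̃k described in section 4.3.5, whose actual estimation rule is not reproduced here. The toy objective is an ill-conditioned quadratic, and the function name is hypothetical.

```python
import numpy as np


def gradient_descent_ls(f, grad, x, iters=200, memory=True, c=1e-4):
    """Gradient descent with Armijo backtracking (step halving).

    memory=True starts each line search from the previously accepted step
    (hypothetical step-size memory); memory=False restarts at alpha = 1.
    Returns the final iterate and the average number of trial steps per iteration.
    """
    alpha_prev, trials = 1.0, 0
    for _ in range(iters):
        g, fx = grad(x), f(x)
        alpha = alpha_prev if memory else 1.0
        trials += 1
        # Halve the step until the sufficient-decrease (Armijo) condition holds.
        while f(x - alpha * g) > fx - c * alpha * (g @ g):
            alpha *= 0.5
            trials += 1
        alpha_prev = alpha
        x = x - alpha * g
    return x, trials / iters
```

Starting from the remembered step avoids most backtracking, which is the quantity ᾱ reports. Because this simple memory never increases the step, it can, however, slow down progress on problems whose local curvature decreases during the optimization.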