Abstract

In this manuscript, we study the statistical properties of convex clustering. We establish that convex clustering is closely related to single linkage hierarchical clustering and k-means clustering. In addition, we derive the range of the tuning parameter for convex clustering that yields a non-trivial solution. We also provide an unbiased estimator of the degrees of freedom, and provide a finite sample bound for the prediction error for convex clustering. We compare convex clustering to some traditional clustering methods in simulation studies.

Keywords: Degrees of freedom, fusion penalty, hierarchical clustering, k-means, prediction error, single linkage

1. Introduction

Let $X \in \mathbb{R}^{n\times p}$ be a data matrix with n observations and p features. We assume for convenience that the rows of X are unique. The goal of clustering is to partition the n observations into K clusters, D1, . . . , DK, based on some similarity measure. Traditional clustering methods such as hierarchical clustering, k-means clustering, and spectral clustering take a greedy approach (see, e.g., Hastie, Tibshirani and Friedman, 2009).

In recent years, several authors have proposed formulations for convex clustering (Pelckmans et al., 2005; Hocking et al., 2011; Lindsten, Ohlsson and Ljung, 2011; Chi and Lange, 2014a). Chi and Lange (2014a) proposed efficient algorithms for convex clustering. In addition, Radchenko and Mukherjee (2014) studied the theoretical properties of a closely related problem to convex clustering, and Zhu et al. (2014) studied the condition needed for convex clustering to recover the correct clusters.

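Convex clustering of the rows, X1., . . . , Xn., of a data matrix X involves solving the convex optimization problem

$$\underset{U \in \mathbb{R}^{n\times p}}{\text{minimize}}\ \frac{1}{2}\|X - U\|_F^2 + \lambda Q_q(U), \tag{1}$$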

where $Q_q(U) = \sum_{i<i'} \|U_{i\cdot} - U_{i'\cdot}\|_q$ for q ∈ {1, 2, ∞}. The penalty Qq(U) generalizes the fused lasso penalty proposed in Tibshirani et al. (2005), and encourages the rows of Û, the solution to (1), to take on a small number of unique values. On the basis of Û, we define the estimated clusters as follows.

Definition 1

The ith and i′th observations are estimated by convex clustering to belong to the same cluster if and only if Ûi. = Ûi′..

The tuning parameter λ controls the number of unique rows of Û, i.e., the number of estimated clusters. When λ = 0, Û = X, and so each observation belongs to its own cluster. As λ increases, the number of unique rows of Û will decrease. For sufficiently large λ, all rows of Û will be identical, and so all observations will be estimated to belong to a single cluster. Note that (1) is strictly convex, and therefore the solution Û is unique.

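To simplify our analysis of convex clustering, we rewrite (1). Let $x = \text{vec}(X) \in \mathbb{R}^{np}$ and let $u = \text{vec}(U) \in \mathbb{R}^{np}$, where the vec(·) operator is such that $x_{(i-1)p+j} = X_{ij}$ and $u_{(i-1)p+j} = U_{ij}$. Construct $D \in \mathbb{R}^{[p\cdot n(n-1)/2] \times np}$, and define the index set $\mathcal{C}(i,i')$ such that the p × np submatrix $D_{\mathcal{C}(i,i')}$ satisfies $D_{\mathcal{C}(i,i')}u = U_{i\cdot} - U_{i'\cdot}$. Furthermore, for a vector $b \in \mathbb{R}^{p\cdot n(n-1)/2}$, we define

$$P_q(b) = \sum_{i<i'} \|b_{\mathcal{C}(i,i')}\|_q. \tag{2}$$

Thus, we have $Q_q(U) = P_q(Du)$. Problem (1) can be rewritten as

$$\underset{u \in \mathbb{R}^{np}}{\text{minimize}}\ \frac{1}{2}\|x - u\|_2^2 + \lambda P_q(Du). \tag{3}$$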

When q = 1, (3) is an instance of the generalized lasso problem studied in Tibshirani and Taylor (2011). Let û be the solution to (3). By Definition 1, the ith and i′th observations belong to the same cluster if and only if rows i and i′ of the fit coincide, i.e., $D_{\mathcal{C}(i,i')}\hat{u} = 0$. In what follows, we work with (3) instead of (1) for convenience.
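The vectorized formulation can be checked numerically. The sketch below assumes one particular layout that the text does not pin down: pairs (i, i′) with i < i′ are taken in lexicographic order, and D = Δ ⊗ I_p, where Δ is a hypothetical n(n − 1)/2 × n pairwise-difference matrix whose row for (i, i′) is e_i − e_i′. Under that assumption, block C(i, i′) of Du equals U_i. − U_i′. and P_q(Du) = Q_q(U). The helper names are illustrative.

```python
import itertools

import numpy as np


def build_difference_operator(n, p):
    """Hypothetical construction of D: one p x np block per pair i < i'."""
    pairs = list(itertools.combinations(range(n), 2))  # lexicographic order (assumption)
    delta = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        delta[l, i], delta[l, j] = 1.0, -1.0
    # Kronecker with I_p so that rows l*p, ..., l*p + p - 1 form D_{C(i,i')}.
    return np.kron(delta, np.eye(p)), pairs


def P_q(b, p, q):
    """Group norm (2): sum over pairs of the q-norm of each length-p block."""
    return sum(np.linalg.norm(block, ord=q) for block in b.reshape(-1, p))


rng = np.random.default_rng(0)
n, p = 5, 3
U = rng.normal(size=(n, p))
u = U.reshape(-1)                   # u_{(i-1)p+j} = U_ij (row-major stacking)
D, pairs = build_difference_operator(n, p)
Du_blocks = (D @ u).reshape(-1, p)  # row l holds D_{C(i,i')} u for the l-th pair
Q2 = sum(np.linalg.norm(U[i] - U[j]) for i, j in pairs)  # Q_2(U) computed directly
print(np.isclose(P_q(D @ u, p, 2), Q2))  # True
```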

Let $D^\dagger \in \mathbb{R}^{np \times [p\cdot n(n-1)/2]}$ be the Moore-Penrose pseudo-inverse of D. We state some properties of D and D† that will prove useful in later sections.

Lemma 1

The matrices D and D† have the following properties.

(i) rank(D) = p(n – 1).

(ii) $D^\dagger = \frac{1}{n}D^T$.

(iii) $(D^TD)^\dagger D^T = D^\dagger$ and $(DD^T)^\dagger D = (D^T)^\dagger$.

(iv) $DD^\dagger = \frac{1}{n}DD^T$ is a projection matrix onto the column space of D.

(v) Define Λmin(D) and Λmax(D) as the minimum non-zero singular value and maximum singular value of the matrix D, respectively. Then, Λmin(D) = Λmax(D) = √n.
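The properties in Lemma 1 are easy to confirm numerically for small n and p. The sketch below assumes a hypothetical construction D = Δ ⊗ I_p with pairs i < i′ in lexicographic order, and checks (i) to (v) with NumPy. It passes an explicit cutoff `rcond=1e-10` to the pseudo-inverse so that round-off in the zero singular values is not inverted. It is a sanity check, not a proof.

```python
import itertools

import numpy as np


def build_difference_operator(n, p):
    """Hypothetical D: row block for pair i < i' is (e_i - e_i')^T kron I_p."""
    pairs = list(itertools.combinations(range(n), 2))
    delta = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        delta[l, i], delta[l, j] = 1.0, -1.0
    return np.kron(delta, np.eye(p))


def pinv(a):
    # Explicit cutoff so numerically-zero singular values are treated as zero.
    return np.linalg.pinv(a, rcond=1e-10)


n, p = 6, 4
D = build_difference_operator(n, p)
D_pinv = pinv(D)
sv = np.linalg.svd(D, compute_uv=False)

checks = {
    "(i) rank": np.linalg.matrix_rank(D, tol=1e-8) == p * (n - 1),
    "(ii) D^+ = D^T / n": np.allclose(D_pinv, D.T / n),
    "(iii)": np.allclose(pinv(D.T @ D) @ D.T, D_pinv)
    and np.allclose(pinv(D @ D.T) @ D, pinv(D.T)),
}
proj = D @ D_pinv  # should be symmetric, idempotent, and fix the columns of D
checks["(iv) projection"] = (np.allclose(proj, proj.T) and np.allclose(proj @ proj, proj)
                             and np.allclose(proj @ D, D))
checks["(v) singular values"] = np.allclose(sv[sv > 1e-8], np.sqrt(n))
print(all(checks.values()))  # True
```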

In this manuscript, we study the statistical properties of convex clustering. In Section 2, we study the dual problem of (3) and use it to establish that convex clustering is closely related to single linkage hierarchical clustering. In addition, we establish a connection between k-means clustering and convex clustering. In Section 3, we present some properties of convex clustering. More specifically, we characterize the range of the tuning parameter λ in (3) such that convex clustering yields a non-trivial solution. We also provide a finite sample bound for the prediction error, and an unbiased estimator of the degrees of freedom for convex clustering. In Section 4, we conduct numerical studies to evaluate the empirical performance of convex clustering relative to some existing proposals. We close with a discussion in Section 5.

2. Convex clustering, single linkage hierarchical clustering, and k-means clustering

In Section 2.1, we study the dual problem of convex clustering (3). Through its dual problem, we establish a connection between convex clustering and single linkage hierarchical clustering in Section 2.2. We then show that convex clustering is closely related to k-means clustering in Section 2.3.

2.1. Dual problem of convex clustering

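We analyze convex clustering (3) by studying its dual problem. Let s, q ∈ {1, 2, ∞} satisfy 1/s + 1/q = 1. For a vector $b \in \mathbb{R}^{p\cdot n(n-1)/2}$, let $P_q^*(b)$ denote the dual norm of $P_q(b)$, which takes the form

$$P_q^*(b) = \max_{i<i'} \|b_{\mathcal{C}(i,i')}\|_s. \tag{4}$$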

We refer the reader to Chapter 6 in Boyd and Vandenberghe (2004) for an overview of the concept of duality.

Lemma 2

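The dual problem of convex clustering (3) is

$$\underset{\nu \in \mathbb{R}^{p\cdot n(n-1)/2}}{\text{minimize}}\ \frac{1}{2}\|x - D^T\nu\|_2^2 \quad \text{subject to} \quad P_q^*(\nu) \le \lambda, \tag{5}$$

where $\nu$ is the dual variable. Furthermore, let û and $\hat{\nu}$ be the solutions to (3) and (5), respectively. Then,

$$D\hat{u} = Dx - DD^T\hat{\nu}, \qquad P_q^*(\hat{\nu}) \le \lambda. \tag{6}$$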

While (3) is strictly convex, its dual problem (5) is not strictly convex, since D is not of full rank by Lemma 1(i). Therefore, the solution to (5) is not unique. Lemma 1(iv) indicates that $DD^\dagger = \frac{1}{n}DD^T$ is a projection matrix onto the column space of D. Thus, the solution Dû in (6) can be interpreted as the difference between Dx, the pairwise differences between rows of X, and the projection of a (scaled) dual variable onto the column space of D.
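Lemma 2 suggests a simple way to compute û: solve the dual (5), a smooth problem over a product of s-norm balls, and map back through û = x − Dᵀν̂, the primal-dual relationship behind (6). The sketch below uses projected gradient descent on (5) with step size 1/n, the reciprocal of the largest eigenvalue of DDᵀ by Lemma 1(v). This is a swapped-in textbook method for illustration, not the algorithm of Chi and Lange (2014a) or the cvxclustr package. The construction of D, the helper names, and the iteration count are assumptions, and only q ∈ {1, 2} is handled.

```python
import itertools

import numpy as np


def build_difference_operator(n, p):
    """Hypothetical D: row block for pair i < i' is (e_i - e_i')^T kron I_p."""
    pairs = list(itertools.combinations(range(n), 2))
    delta = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        delta[l, i], delta[l, j] = 1.0, -1.0
    return np.kron(delta, np.eye(p))


def project_onto_dual_ball(nu, p, q, lam):
    """Project every block nu_{C(i,i')} onto {||.||_s <= lam}, where 1/s + 1/q = 1."""
    blocks = nu.reshape(-1, p)
    if q == 1:  # s = inf: clip each coordinate to [-lam, lam]
        blocks = np.clip(blocks, -lam, lam)
    elif q == 2:  # s = 2: shrink blocks whose Euclidean norm exceeds lam
        norms = np.linalg.norm(blocks, axis=1, keepdims=True)
        too_big = norms > lam
        blocks = np.where(too_big, blocks * lam / np.where(too_big, norms, 1.0), blocks)
    else:
        raise ValueError("this sketch only handles q in {1, 2}")
    return blocks.reshape(-1)


def convex_clustering_via_dual(X, lam, q=2, n_iter=5000):
    """Projected gradient on the dual (5); returns U_hat = x - D^T nu_hat reshaped, and nu_hat."""
    n, p = X.shape
    D = build_difference_operator(n, p)
    x = X.reshape(-1)
    nu = np.zeros(D.shape[0])
    step = 1.0 / n  # 1 / largest eigenvalue of D D^T (Lemma 1(v))
    for _ in range(n_iter):
        gradient = -D @ (x - D.T @ nu)  # gradient of 0.5 * ||x - D^T nu||_2^2
        nu = project_onto_dual_ball(nu - step * gradient, p, q, lam)
    return (x - D.T @ nu).reshape(n, p), nu


rng = np.random.default_rng(1)
X = rng.normal(size=(6, 2))
U_hat, nu_hat = convex_clustering_via_dual(X, lam=0.3, q=2)
```

With λ = 0 the returned fit is X itself. For λ at least max_{i<i′}∥X_i. − X_i′.∥_2/n, every row of the returned fit equals the column means of X. After finitely many iterations, rows that should be fused are only approximately equal, so a practical implementation would need a fusion tolerance.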

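We now consider a modification to the convex clustering problem (3). Recall from Definition 1 that the ith and i′th observations are in the same estimated cluster if $D_{\mathcal{C}(i,i')}\hat{u} = 0$. This motivates us to estimate γ = Du directly by solving

$$\underset{\gamma \in \mathbb{R}^{p\cdot n(n-1)/2}}{\text{minimize}}\ \frac{1}{2}\|Dx - \gamma\|_2^2 + \lambda P_q(\gamma). \tag{7}$$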

We establish a connection between (3) and (7) by studying the dual problem of (7).

Lemma 3

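The dual problem of (7) is

$$\underset{\nu \in \mathbb{R}^{p\cdot n(n-1)/2}}{\text{minimize}}\ \frac{1}{2}\|Dx - \nu\|_2^2 \quad \text{subject to} \quad P_q^*(\nu) \le \lambda, \tag{8}$$

where $\nu$ is the dual variable. Furthermore, let $\hat{\gamma}$ and $\hat{\nu}$ be the solutions to (7) and (8), respectively. Then,

$$\hat{\gamma} = Dx - \hat{\nu}, \qquad P_q^*(\hat{\nu}) \le \lambda. \tag{9}$$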

Comparing (6) and (9), we see that the solutions to convex clustering (3) and the modified problem (7) are closely related. In particular, both Dû in (6) and $\hat{\gamma}$ in (9) involve taking the difference between Dx and some function of a dual variable whose dual norm is less than or equal to λ. The main difference is that in (6), the dual variable is projected onto the column space of D.

Problem (7) is quite simple: it amounts to a thresholding operation on Dx when q = 1 or q = 2. Specifically, the solution is obtained by performing soft thresholding on Dx, or group soft thresholding on $D_{\mathcal{C}(i,i')}x$ for all i < i′, respectively (Bach et al., 2011). When q = ∞, an efficient algorithm was proposed by Duchi and Singer (2009).
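Because (7) separates over the blocks γ_C(i,i′), its solution can be written down pair by pair. The sketch below applies elementwise soft thresholding (q = 1) or group soft thresholding (q = 2) to each difference X_i. − X_i′.. A block is set to zero exactly when the s-norm of the difference is at most λ, with s = ∞ for q = 1 and s = 2 for q = 2. The function names are hypothetical, and q = ∞ is omitted.

```python
import numpy as np


def threshold_block(b, lam, q):
    """argmin_g 0.5 * ||b - g||_2^2 + lam * ||g||_q for a single block b."""
    if q == 1:  # elementwise soft thresholding
        return np.sign(b) * np.maximum(np.abs(b) - lam, 0.0)
    if q == 2:  # group soft thresholding: shrink the whole block toward zero
        norm = np.linalg.norm(b)
        return np.zeros_like(b) if norm <= lam else (1.0 - lam / norm) * b
    raise ValueError("this sketch only handles q in {1, 2}")


def solve_separable_problem(X, lam, q):
    """Solution of (7), returned as {(i, i'): gamma_hat_{C(i,i')}} for i < i'."""
    n = X.shape[0]
    return {(i, j): threshold_block(X[i] - X[j], lam, q)
            for i in range(n) for j in range(i + 1, n)}


X = np.array([[0.0, 0.0], [1.0, 0.5], [3.0, 0.0]])
gamma_hat = solve_separable_problem(X, lam=1.2, q=2)
fused = sorted(pair for pair, g in gamma_hat.items() if not np.any(g))
print(fused)  # [(0, 1)]
```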

2.2. Convex clustering and single linkage hierarchical clustering

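In this section, we establish a connection between convex clustering and single linkage hierarchical clustering. Let $\hat{\gamma}$ be the solution to (7) with $P_q(\cdot)$ norm and let s, q ∈ {1, 2, ∞} satisfy 1/s + 1/q = 1. Since (7) is separable in $\gamma_{\mathcal{C}(i,i')}$ for all i < i′, by Lemma 2.1 in Haris, Witten and Simon (2015), it can be verified that

$$\hat{\gamma}_{\mathcal{C}(i,i')} = 0 \quad \text{if and only if} \quad \|X_{i\cdot} - X_{i'\cdot}\|_s \le \lambda. \tag{10}$$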

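It might be tempting to conclude that a pair of observations (i, i′) belongs to the same cluster if $\hat{\gamma}_{\mathcal{C}(i,i')} = 0$. However, by inspection of (10), it could happen that $\hat{\gamma}_{\mathcal{C}(i,i')} = 0$ and $\hat{\gamma}_{\mathcal{C}(i',i'')} = 0$, but $\hat{\gamma}_{\mathcal{C}(i,i'')} \neq 0$. For instance, this happens for three observations at 0, 1 and 2 on a line with λ = 1.5.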

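To overcome this problem, we define the n × n adjacency matrix Aq(λ) as

$$[A_q(\lambda)]_{ii'} = \begin{cases} 1 & \text{if } i = i' \text{ or } \hat{\gamma}_{\mathcal{C}(i,i')} = 0, \\ 0 & \text{otherwise,} \end{cases} \tag{11}$$

where $\mathcal{C}(i,i') = \mathcal{C}(i',i)$ for i > i′.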

Subject to a rearrangement of the rows and columns, Aq(λ) is a block-diagonal matrix whose R blocks correspond to the connected components of the graph with adjacency matrix Aq(λ). On the basis of Aq(λ), we define R estimated clusters: the indices of the observations in the rth cluster are the same as the indices of the observations in the rth block of Aq(λ).

We now present a lemma on the equivalence between single linkage hierarchical clustering and the clusters identified by (7) using (11). The lemma follows directly from the definition of single linkage clustering (see, for instance, Chapter 3.2 of Jain and Dubes, 1988).

Lemma 4

Let Ê1, . . . , ÊR index the blocks within the adjacency matrix Aq(λ). Let s satisfy 1/s + 1/q = 1. Let D̂1, . . . , D̂K denote the clusters that result from performing single linkage hierarchical clustering on the dissimilarity matrix defined by the pairwise distances ∥Xi. – Xi′.∥s between the observations, and cutting the dendrogram at height λ > 0. Then K = R, and there exists a permutation π : {1, . . . , K} → {1, . . . , K} such that D̂k = Êπ(k) for k = 1, . . . , K.

In other words, Lemma 4 implies that single linkage hierarchical clustering and (7) yield the same estimated clusters. Recalling the connection between (3) and (7) established in Section 2.1, this implies a close connection between convex clustering and single linkage hierarchical clustering.
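Lemma 4 can be checked empirically. The sketch below compares two partitions:

- the connected components of the graph that joins i and i′ whenever ∥X_i. − X_i′.∥_s ≤ λ, which by (10) are the blocks of A_q(λ);
- the clusters from a naive single linkage implementation whose dendrogram is cut at height λ.

Both implementations and their names are illustrative assumptions written for clarity rather than speed. The single linkage loop in particular is far slower than standard library routines.

```python
import numpy as np


def canonical(labels):
    """Relabel clusters by order of first appearance so partitions compare directly."""
    mapping = {}
    return [mapping.setdefault(label, len(mapping)) for label in labels]


def threshold_graph_clusters(X, lam, s):
    """Connected components of the graph with an edge when ||X_i. - X_i'.||_s <= lam."""
    n = X.shape[0]
    parent = list(range(n))

    def find(i):  # union-find root with path halving
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if np.linalg.norm(X[i] - X[j], ord=s) <= lam:
                parent[find(i)] = find(j)
    return canonical([find(i) for i in range(n)])


def single_linkage_clusters(X, lam, s):
    """Naive agglomerative single linkage, cutting the dendrogram at height lam."""
    n = X.shape[0]
    dist = np.array([[np.linalg.norm(X[i] - X[j], ord=s) for j in range(n)] for i in range(n)])
    clusters = [{i} for i in range(n)]
    while len(clusters) > 1:
        # Single linkage distance between two clusters = smallest pairwise distance.
        height, a, b = min((min(dist[i, j] for i in A for j in B), a, b)
                           for a, A in enumerate(clusters)
                           for b, B in enumerate(clusters) if a < b)
        if height > lam:  # the next merge would sit above the cut
            break
        merged = clusters.pop(b)  # b > a, so index a is unaffected
        clusters[a] |= merged
    labels = [0] * n
    for k, members in enumerate(clusters):
        for i in members:
            labels[i] = k
    return canonical(labels)


rng = np.random.default_rng(2)
X = rng.normal(size=(12, 3))
print(threshold_graph_clusters(X, 1.0, 2) == single_linkage_clusters(X, 1.0, 2))  # True
```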

2.3. Convex clustering and k-means clustering

We now establish a connection between convex clustering and k-means clustering. k-means clustering seeks to partition the n observations into K clusters by minimizing the within-cluster sum of squares. That is, the clusters are given by the partition D̂1, . . . , D̂K of {1, . . . , n} that solves the optimization problem

$$\underset{D_1,\ldots,D_K}{\text{minimize}}\ \sum_{k=1}^{K} \sum_{i \in D_k} \Big\|X_{i\cdot} - \frac{1}{|D_k|}\sum_{i' \in D_k} X_{i'\cdot}\Big\|_2^2. \tag{12}$$

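We consider convex clustering (1) with q = 0,

$$\underset{U \in \mathbb{R}^{n\times p}}{\text{minimize}}\ \frac{1}{2}\|X - U\|_F^2 + \lambda \sum_{i<i'} \mathbb{1}(U_{i\cdot} \neq U_{i'\cdot}), \tag{13}$$

where $\mathbb{1}(U_{i\cdot} \neq U_{i'\cdot})$ is an indicator function that equals one if Ui. ≠ Ui′.. Note that (13) is no longer a convex optimization problem.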

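We now establish a connection between (12) and (13). For a given value of λ, (13) is equivalent to

$$\underset{E_1,\ldots,E_K,\ \mu_1,\ldots,\mu_K}{\text{minimize}}\ \frac{1}{2}\sum_{k=1}^{K}\sum_{i \in E_k} \|X_{i\cdot} - \mu_k\|_2^2 + \lambda \sum_{k<k'} |E_k|\,|E_{k'}|, \tag{14}$$

subject to the constraint that {μ1, . . . , μK} are the unique rows of U and Ek = {i : Ui. = μk}. Note that $\mathbb{1}(U_{i\cdot} \neq U_{i'\cdot})$ equals one if and only if i ∈ Ek and i′ ∉ Ek for some k, so that $\sum_{i<i'} \mathbb{1}(U_{i\cdot} \neq U_{i'\cdot}) = \sum_{k<k'} |E_k|\,|E_{k'}|$. Thus, we see from (12) and (14) that k-means clustering is equivalent to convex clustering with q = 0, up to the penalty term $\lambda \sum_{k<k'} |E_k|\,|E_{k'}|$.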

To interpret the penalty term, we consider the case of two clusters E1 and E2. The penalty term then reduces to λ|E1| · (n – |E1|), where |E1| is the cardinality of the set E1. Over 1 ≤ |E1| ≤ n – 1, the term λ|E1| · (n – |E1|) is minimized when |E1| is either 1 or n – 1, which encourages one cluster to contain only a single observation. Thus, compared to k-means clustering, convex clustering with q = 0 has the undesirable behavior of producing clusters whose sizes are highly unbalanced.
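The size imbalance can be seen with a few lines of arithmetic. The sketch below evaluates the penalty λ Σ_{k<k′} |E_k||E_k′| for every two-cluster split of n = 30 observations, with λ = 1. This penalty counts the pairs of observations placed in different clusters. The function name is hypothetical.

```python
from itertools import combinations


def between_cluster_penalty(sizes, lam=1.0):
    """lam * sum_{k<k'} |E_k| |E_k'|, i.e. lam times the number of separated pairs."""
    return lam * sum(a * b for a, b in combinations(sizes, 2))


n = 30
penalty_by_size = {m: between_cluster_penalty([m, n - m]) for m in range(1, n)}
print(min(penalty_by_size.values()), penalty_by_size[15])  # 29.0 225.0

# The same count obtained directly from the indicator form of the penalty in (13).
labels = [0] * 5 + [1] * 7 + [2] * 3
separated = sum(labels[i] != labels[j] for i, j in combinations(range(len(labels)), 2))
print(separated, between_cluster_penalty([5, 7, 3]))  # 71 71.0
```

So a 1 versus 29 split costs 29λ, while a balanced 15 versus 15 split costs 225λ.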

3. Properties of convex clustering

We now study the properties of convex clustering (3) with q ∈ {1, 2}. In Section 3.1, we establish the range of the tuning parameter λ in (3) such that convex clustering yields a non-trivial solution with more than one cluster. We provide finite sample bounds for the prediction error of convex clustering in Section 3.2. Finally, we provide unbiased estimates of the degrees of freedom for convex clustering in Section 3.3.

3.1. Range of λ that yields a non-trivial solution

In this section, we establish the range of the tuning parameter λ such that convex clustering (3) yields a solution with more than one cluster.

Lemma 5

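Let

$$\lambda_{\text{upper}} = \min_{w \in \mathbb{R}^{p\cdot n(n-1)/2}} P_q^*\Big(\frac{1}{n}Dx + \Big[I - \frac{1}{n}DD^T\Big]w\Big). \tag{15}$$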

Convex clustering (3) with q = 1 or q = 2 yields a non-trivial solution of more than one cluster if and only if λ < λupper.

By Lemma 5, calculating λupper boils down to solving a convex optimization problem, which can be handled by a standard solver such as CVX in MATLAB. In the absence of such a solver, a loose upper bound on λupper is given by $\max_{i<i'} \|X_{i\cdot} - X_{i'\cdot}\|_\infty / n$ for q = 1, or $\max_{i<i'} \|X_{i\cdot} - X_{i'\cdot}\|_2 / n$ for q = 2.

Therefore, to obtain the entire solution path of convex clustering, we need only consider values of λ that satisfy λ ≤ λupper.
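The loose upper bound is cheap to compute. The sketch below evaluates it for q = 1 and q = 2 and also checks the reasoning behind it. Once λ is at least this bound, the point ν = Dx/n satisfies the dual constraint P*_q(ν) ≤ λ and minimizes ½∥x − Dᵀν∥₂² without constraints. The primal-dual relationship Dû = Dx − DDᵀν then gives Dû = 0, a single cluster. The construction of D (lexicographic pairs, D = Δ ⊗ I_p) and the function names are assumptions for illustration.

```python
import itertools

import numpy as np


def build_difference_operator(n, p):
    """Hypothetical D: row block for pair i < i' is (e_i - e_i')^T kron I_p."""
    pairs = list(itertools.combinations(range(n), 2))
    delta = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        delta[l, i], delta[l, j] = 1.0, -1.0
    return np.kron(delta, np.eye(p))


def loose_lambda_upper(X, q):
    """max_{i<i'} ||X_i. - X_i'.||_s / n with 1/s + 1/q = 1 (q = 1 or q = 2 only)."""
    s = {1: np.inf, 2: 2}[q]
    n = X.shape[0]
    return max(np.linalg.norm(X[i] - X[j], ord=s)
               for i, j in itertools.combinations(range(n), 2)) / n


rng = np.random.default_rng(3)
X = rng.normal(size=(7, 4))
n, p = X.shape
D = build_difference_operator(n, p)
x = X.reshape(-1)

nu = D @ x / n                       # candidate dual point
Du_hat = D @ x - D @ (D.T @ nu)      # D u_hat implied by the primal-dual relationship
for q, s in [(1, np.inf), (2, 2)]:
    dual_norm = max(np.linalg.norm(b, ord=s) for b in nu.reshape(-1, p))
    print(q, dual_norm <= loose_lambda_upper(X, q) + 1e-12)  # prints "1 True", then "2 True"
print(np.allclose(Du_hat, 0.0))      # True
```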

3.2. Bounds on prediction error

In this section, we assume the model x = u + ϵ, where $\epsilon \in \mathbb{R}^{np}$ is a vector of independent sub-Gaussian noise terms with mean zero and variance σ², and u is an arbitrary np-dimensional mean vector. We refer the reader to pages 24–25 in Boucheron, Lugosi and Massart (2013) for the properties of sub-Gaussian random variables. We now provide finite sample bounds for the prediction error of convex clustering (3). Let λ be the tuning parameter in (3) and let .

Lemma 6

Suppose that x = u + ϵ, where $\epsilon \in \mathbb{R}^{np}$ and the elements of ϵ are independent sub-Gaussian random variables with mean zero and variance σ². Let û be the estimate obtained from (3) with q = 1. If , then

holds with probability at least , where c1 and c2 are positive constants appearing in Lemma 10.

We see from Lemma 6 that the average prediction error is bounded by the oracle quantity ∥Du∥1 and a second term that decays to zero as n, p → ∞. Convex clustering with q = 1 is therefore prediction consistent provided that λ′∥Du∥1 = o(1). We now provide a scenario in which λ′∥Du∥1 = o(1) holds.

Suppose that we are in the high-dimensional setting in which p > n and the true underlying clusters differ only with respect to a fixed number of features (Witten and Tibshirani, 2010). Also, suppose that each element of Du, that is, Uij – Ui′j, is of order O(1). Then ∥Du∥1 = O(n²), since there are n(n – 1)/2 pairs of observations and, by assumption, only a fixed number of features have different means across clusters. Assume that . Under these assumptions, convex clustering with q = 1 is prediction consistent.

Next, we present a finite sample bound on the prediction error for convex clustering with q = 2.

Lemma 7

Suppose that x = u + ϵ, where $\epsilon \in \mathbb{R}^{np}$ and the elements of ϵ are independent sub-Gaussian random variables with mean zero and variance σ². Let û be the estimate obtained from (3) with q = 2. If , then

holds with probability at least , where c1 and c2 are positive constants appearing in Lemma 10.

Under the scenario described above, , and therefore . Convex clustering with q = 2 is prediction consistent if .

3.3. Degrees of freedom

Convex clustering recasts the clustering problem as a penalized regression problem, for which the notion of degrees of freedom is well established (Efron, 1986). Under this framework, we provide an unbiased estimator of the degrees of freedom for clustering. Recall that û is the solution to convex clustering (3). Suppose that Var(x) = σ²I. Then, the degrees of freedom for convex clustering is defined as $\text{df} = \sum_{j=1}^{np} \text{Cov}(\hat{u}_j, x_j)/\sigma^2$ (see, e.g., Efron, 1986). An unbiased estimator of the degrees of freedom for convex clustering with q = 1 follows directly from Theorem 3 in Tibshirani and Taylor (2012).

Lemma 8

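Assume that x ~ MVN(u, σ²I), and let û be the solution to (3) with q = 1. Furthermore, let $\hat{\mathcal{A}} = \{j : (D\hat{u})_j \neq 0\}$. We define the matrix $D_{-\hat{\mathcal{A}}}$ by removing the rows of D that correspond to $\hat{\mathcal{A}}$. Then

$$\widehat{\text{df}}_1 = \dim\big(\text{null}(D_{-\hat{\mathcal{A}}})\big) \tag{16}$$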

is an unbiased estimator of the degrees of freedom of convex clustering with q = 1.

The following corollary follows directly from Corollary 1 in Tibshirani and Taylor (2011).

Corollary 1

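Assume that x ~ MVN(u, σ²I), and let û be the solution to (3) with q = 1. The fit û has degrees of freedom $\text{df}_1 = \mathbb{E}\big[\dim\big(\text{null}(D_{-\hat{\mathcal{A}}})\big)\big]$, with $D_{-\hat{\mathcal{A}}}$ as in Lemma 8.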

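There is an interesting interpretation of the degrees of freedom estimator for convex clustering with q = 1. Suppose that there are K estimated clusters, and that the estimated means of any two distinct clusters differ in every one of the p features. Then the only rows of D that are not removed compare observations within the same estimated cluster, so the retained null space consists of the vectors u that are constant within each estimated cluster. The estimated degrees of freedom is therefore Kp, the product of the number of estimated clusters and the number of features.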
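The Kp interpretation can be checked directly. The sketch below builds a hypothetical fitted value Û with K = 3 clusters whose means differ in every coordinate. It removes the rows of D at which Dû is non-zero and computes the nullity np − rank of the remaining matrix. The construction of D (lexicographic pairs, D = Δ ⊗ I_p) and the tolerance used to decide that an entry of Dû is zero are assumptions.

```python
import itertools

import numpy as np


def build_difference_operator(n, p):
    """Hypothetical D: row block for pair i < i' is (e_i - e_i')^T kron I_p."""
    pairs = list(itertools.combinations(range(n), 2))
    delta = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        delta[l, i], delta[l, j] = 1.0, -1.0
    return np.kron(delta, np.eye(p))


def df_hat_q1(U_hat, tol=1e-10):
    """Nullity of D after removing the rows where (D u_hat)_j != 0."""
    n, p = U_hat.shape
    D = build_difference_operator(n, p)
    Du = D @ U_hat.reshape(-1)
    D_minus_A = D[np.abs(Du) <= tol]  # keep only rows where the fitted difference vanishes
    rank = np.linalg.matrix_rank(D_minus_A) if D_minus_A.shape[0] else 0
    return n * p - rank


rng = np.random.default_rng(4)
K, p = 3, 5
means = rng.normal(size=(K, p))             # distinct in every coordinate (almost surely)
labels = np.array([0, 0, 1, 1, 1, 2, 2, 0])
print(df_hat_q1(means[labels]), K * p)      # 15 15
```

The two extremes behave as expected. With all rows of Û distinct in every coordinate, no rows are retained and the estimate is np. With all rows fused, every row is retained and the estimate is p.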

Next, we provide an unbiased estimator of the degrees of freedom for convex clustering with q = 2.

Lemma 9

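Assume that x ~ MVN(u, σ²I), and let û be the solution to (3) with q = 2. Furthermore, let $\hat{\mathcal{A}} = \{(i,i') : D_{\mathcal{C}(i,i')}\hat{u} \neq 0\}$. We define the matrix $D_{-\hat{\mathcal{A}}}$ by removing the rows of D that correspond to $\mathcal{C}(i,i')$ for $(i,i') \in \hat{\mathcal{A}}$. Let $P = I - D_{-\hat{\mathcal{A}}}^T\big(D_{-\hat{\mathcal{A}}}D_{-\hat{\mathcal{A}}}^T\big)^\dagger D_{-\hat{\mathcal{A}}}$ be the projection matrix onto the complement of the space spanned by the rows of $D_{-\hat{\mathcal{A}}}$. Then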

| (17) |

is an unbiased estimator of the degrees of freedom of convex clustering with q = 2.

When λ = 0, $D_{\mathcal{C}(i,i')}\hat{u} \neq 0$ for all i < i′. Therefore, $D_{-\hat{\mathcal{A}}}$ has no rows, P = I, and the degrees of freedom estimate is equal to tr(I) = np. When λ is sufficiently large that $\hat{\mathcal{A}}$ is an empty set, one can verify that $P = I - D^T(DD^T)^\dagger D$ is a projection matrix of rank p, using the fact that rank(D) = p(n – 1) from Lemma 1(i). Therefore d̂f2 = tr(P) = p.
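The large-λ case can be verified numerically. With no rows removed, P = I − Dᵀ(DDᵀ)†D should have trace p. It should also map any x to the vector whose rows all equal the column means of X, because the complement of the row space of D is the set of u with identical rows. The sketch assumes a hypothetical construction of D (lexicographic pairs, D = Δ ⊗ I_p) and uses an explicit pseudo-inverse cutoff.

```python
import itertools

import numpy as np


def build_difference_operator(n, p):
    """Hypothetical D: row block for pair i < i' is (e_i - e_i')^T kron I_p."""
    pairs = list(itertools.combinations(range(n), 2))
    delta = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        delta[l, i], delta[l, j] = 1.0, -1.0
    return np.kron(delta, np.eye(p))


n, p = 6, 3
D = build_difference_operator(n, p)
# Projection onto the orthogonal complement of the row space of D.
P = np.eye(n * p) - D.T @ np.linalg.pinv(D @ D.T, rcond=1e-10) @ D

X = np.random.default_rng(5).normal(size=(n, p))
projected = (P @ X.reshape(-1)).reshape(n, p)
print(round(np.trace(P), 8))                       # 3.0
print(np.allclose(projected, X.mean(axis=0)))      # True
```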

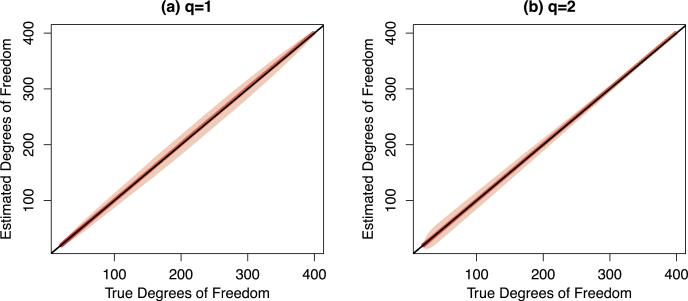

We now assess the accuracy of the proposed unbiased estimators of the degrees of freedom. We simulate Gaussian clusters with K = 2 as described in Section 4.1 with n = p = 20 and σ = 0.5. We perform convex clustering with q = 1 and q = 2 across a fine grid of tuning parameters λ. For each λ, we compare the quantities (16) and (17) to

$$\frac{1}{\sigma^2}\sum_{j=1}^{np} (\hat{u}_j - u_j)(x_j - u_j), \tag{18}$$

which is an unbiased estimator of the true degrees of freedom, $\sum_{j=1}^{np} \text{Cov}(\hat{u}_j, x_j)/\sigma^2$, averaged over 500 data sets. In addition, we plot the point-wise intervals of the estimated degrees of freedom (mean ± 2 × standard deviation). Note that (18) cannot be computed in practice, since it requires knowledge of the unknown quantity u. Results are displayed in Figure 1. We see that the estimated degrees of freedom are quite close to the true degrees of freedom.

Fig 1.

We compare the true degrees of freedom of convex clustering (x-axis), given in (18), to the proposed unbiased estimators of the degrees of freedom (y-axis), given in Lemmas 8 and 9. Panels (a) and (b) contain the results for convex clustering with q = 1 and q = 2, respectively. The red line is the mean of the estimated degrees of freedom for convex clustering over 500 data sets, obtained by varying the tuning parameter λ. The shaded bands indicate the point-wise intervals of the estimated degrees of freedom (mean ± 2 × standard deviation), over 500 data sets. The black line indicates y = x.

4. Simulation studies

We compare convex clustering with q = 1 and q = 2 to the following proposals:

Single linkage hierarchical clustering with the dissimilarity matrix defined by the Euclidean distance between two observations.

The k-means clustering algorithm (Lloyd, 1982).

Average linkage hierarchical clustering with the dissimilarity matrix defined by the Euclidean distance between two observations.

We apply convex clustering (3) with q ∈ {1, 2} using the R package cvxclustr (Chi and Lange, 2014b). In order to obtain the entire solution path for convex clustering, we use a fine grid of λ values for (3), in a range guided by Lemma 5. We apply the other methods by allowing the number of clusters to vary from 1 to n. To evaluate and quantify the performance of the different clustering methods, we use the Rand index (Rand, 1971). A high value of the Rand index indicates good agreement between the true and estimated clusters.
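The Rand index is the fraction of the n(n − 1)/2 pairs of observations on which two partitions agree. A pair counts as an agreement when both partitions place it in the same cluster, or both place it in different clusters. The following is a minimal sketch with a hypothetical function name, not the implementation used for the reported results.

```python
from itertools import combinations


def rand_index(labels_true, labels_pred):
    """Fraction of pairs (i, i') on which the two partitions agree (Rand, 1971)."""
    pairs = list(combinations(range(len(labels_true)), 2))
    agree = sum((labels_true[i] == labels_true[j]) == (labels_pred[i] == labels_pred[j])
                for i, j in pairs)
    return agree / len(pairs)


print(rand_index([0, 0, 1, 1], [1, 1, 0, 0]))  # 1.0 (cluster labels are arbitrary)

# Two balanced true clusters of 15, estimated as one singleton plus 29 observations.
truth = [0] * 15 + [1] * 15
singleton_split = [1] + [0] * 29
print(round(rand_index(truth, singleton_split), 3))  # 0.485
```

The second example gives 211/435 ≈ 0.485. A singleton-versus-rest split of two balanced clusters therefore scores close to 0.5.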

We consider two different types of clusters in our simulation studies: Gaussian clusters and non-convex clusters.

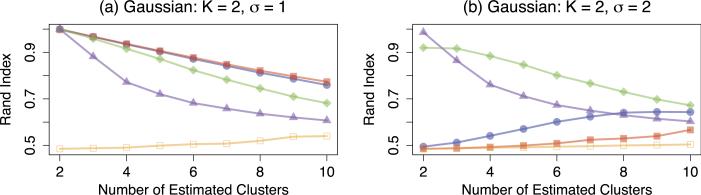

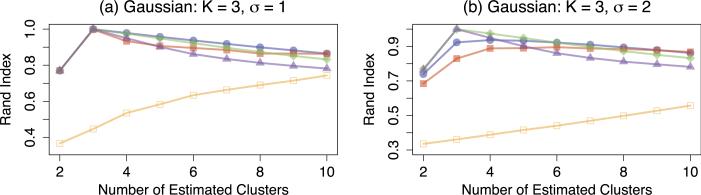

4.1. Gaussian clusters

We generate Gaussian clusters with K = 2 and K = 3 by randomly assigning each observation to a cluster with equal probability. For K = 2, we create the mean vectors μ1 = 1p and μ2 = −1p. For K = 3, we create the mean vectors μ1 = −3 · 1p, μ2 = 0p, and μ3 = 3 · 1p. We then generate the n × p data matrix X according to Xi. ~ MVN(μk, σ²I) for i ∈ Dk. We consider n = p = 30 and σ ∈ {1, 2}. The Rand indices for K = 2 and K = 3, averaged over 200 data sets, are summarized in Figures 2 and 3, respectively.
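The Gaussian-cluster design can be sketched as follows. The function name and the use of NumPy's `default_rng` are assumptions, and only the K = 2 and K = 3 mean vectors described above are supported.

```python
import numpy as np


def gaussian_clusters(n, p, K, sigma, rng):
    """Assign each observation to one of K clusters uniformly, then add N(0, sigma^2 I) noise."""
    if K == 2:
        means = np.stack([np.ones(p), -np.ones(p)])
    elif K == 3:
        means = np.stack([-3.0 * np.ones(p), np.zeros(p), 3.0 * np.ones(p)])
    else:
        raise ValueError("the design above only specifies K = 2 or K = 3")
    labels = rng.integers(0, K, size=n)            # equal probability for each cluster
    X = means[labels] + sigma * rng.standard_normal((n, p))
    return X, labels


X, labels = gaussian_clusters(n=30, p=30, K=3, sigma=1.0, rng=np.random.default_rng(6))
```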

Fig 2.

Simulation results for Gaussian clusters with K = 2, n = p = 30, averaged over 200 data sets, for two noise levels σ ∈ {1, 2}. Colored lines correspond to single linkage hierarchical clustering, average linkage hierarchical clustering, k-means clustering, convex clustering with q = 1, and convex clustering with q = 2.

Fig 3.

Simulation results for Gaussian clusters with K = 3, n = p = 30, averaged over 200 data sets, for two noise levels σ ∈ {1, 2}. Line types are as described in Figure 2.

Recall from Section 2.2 that there is a connection between convex clustering and single linkage clustering. However, we note that the two clustering methods are not equivalent. From Figure 2(a), we see that single linkage hierarchical clustering performs very similarly to convex clustering with q = 2 when the signal-to-noise ratio is high. However, from Figure 2(b), we see that single linkage hierarchical clustering outperforms convex clustering with q = 2 when the signal-to-noise ratio is low.

We also established a connection between convex clustering and k-means clustering in Section 2.3. From Figure 2(a), we see that k-means clustering and convex clustering with q = 2 perform similarly when two clusters are estimated and the signal-to-noise ratio is high. In this case, the first term in (14) can be made extremely small if the clusters are correctly estimated, and so both k-means and convex clustering yield the same (correct) cluster estimates. In contrast, when the signal-to-noise ratio is low, the first term in (14) is relatively large regardless of whether or not the clusters are correctly estimated, and so convex clustering focuses on minimizing the penalty term in (14). Therefore, when convex clustering with q = 2 estimates two clusters, one cluster is of size one and the other is of size n – 1, as discussed in Section 2.3. Figure 2(b) illustrates this phenomenon when both methods estimate two clusters: convex clustering with q = 2 has a Rand index of approximately 0.5 while k-means clustering has a Rand index of one.

All methods outperform convex clustering with q = 1. Moreover, k-means clustering and average linkage hierarchical clustering outperform single linkage hierarchical clustering and convex clustering when the signal-to-noise ratio is low. This suggests that the minimum signal needed for convex clustering to identify the correct clusters may be larger than that of average linkage hierarchical clustering and k-means clustering. We see similar results for the case when K = 3 in Figure 3.

4.2. Non-convex clusters

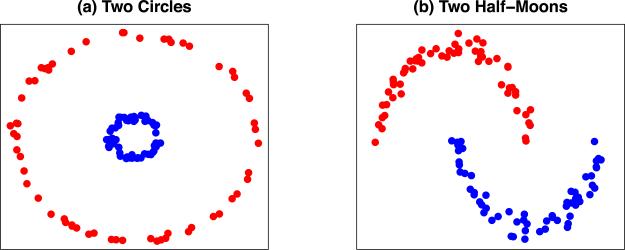

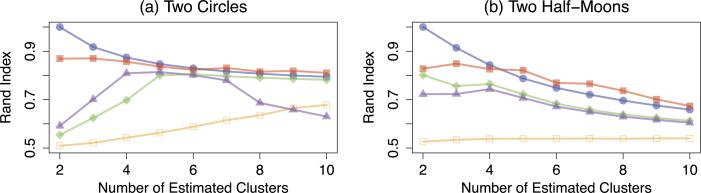

We consider two types of non-convex clusters: two circles clusters (Ng, Jordan and Weiss, 2002) and two half-moon clusters (Hocking et al., 2011; Chi and Lange, 2014a). For two circles clusters, we generate 50 data points from each of the two circles that are centered at (0, 0) with radii 2 and 10, respectively. We then add Gaussian random noise with mean zero and standard deviation 0.1 to each data point. For two half-moon clusters, we generate 50 data points from each of the two half-circles that are centered at (0, 0) and (30, 3) with radius 30, respectively. We then add Gaussian random noise with mean zero and standard deviation one to each data point. Illustrations of both types of clusters are given in Figure 4. The Rand indices for both types of clusters, averaged over 200 data sets, are summarized in Figure 5.
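The two non-convex designs can be sketched as below. The description above leaves some details open, so the following are assumptions:

- points are placed at angles drawn uniformly at random on each circle or half-circle;
- the first half-moon is the upper half of its circle and the second is the lower half;
- the function names are hypothetical.

```python
import numpy as np


def two_circles(rng, m=50, radii=(2.0, 10.0), noise_sd=0.1):
    """m points on each of two circles centered at (0, 0), plus Gaussian noise."""
    theta = rng.uniform(0.0, 2.0 * np.pi, size=(2, m))    # assumption: uniform angles
    points = [np.column_stack([r * np.cos(t), r * np.sin(t)]) for r, t in zip(radii, theta)]
    X = np.vstack(points) + noise_sd * rng.standard_normal((2 * m, 2))
    return X, np.repeat([0, 1], m)


def two_half_moons(rng, m=50, radius=30.0, centers=((0.0, 0.0), (30.0, 3.0)), noise_sd=1.0):
    """m points on each of two half-circles of the given radius, plus Gaussian noise."""
    angles = (rng.uniform(0.0, np.pi, size=m),             # assumption: upper half
              rng.uniform(np.pi, 2.0 * np.pi, size=m))     # assumption: lower half
    points = [np.column_stack([cx + radius * np.cos(t), cy + radius * np.sin(t)])
              for (cx, cy), t in zip(centers, angles)]
    X = np.vstack(points) + noise_sd * rng.standard_normal((2 * m, 2))
    return X, np.repeat([0, 1], m)


rng = np.random.default_rng(7)
X_circles, y_circles = two_circles(rng)
X_moons, y_moons = two_half_moons(rng)
```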

Fig 4.

Illustrations of two circles clusters and two half-moons clusters with n = 100.

Fig 5.

Simulation results for the two circles and two half-moons clusters with n = 100, averaged over 200 data sets. Line types are as described in Figure 2.

We see from Figure 5 that convex clustering with q = 2 and single linkage hierarchical clustering have similar performance, and that they outperform all of the other methods. Single linkage hierarchical clustering is able to identify non-convex clusters since it is an agglomerative algorithm that merges the closest pair of observations not yet belonging to the same cluster into one cluster. In contrast, average linkage hierarchical clustering and k-means clustering are known to perform poorly at identifying non-convex clusters (Ng, Jordan and Weiss, 2002; Hocking et al., 2011). Again, convex clustering with q = 1 has the worst performance.

4.3. Selection of the tuning parameter λ

Convex clustering (3) involves a tuning parameter λ, which determines the estimated number of clusters. Some authors have suggested a hold-out validation approach to select tuning parameters for clustering problems (see, for instance, Tan and Witten, 2014; Chi, Allen and Baraniuk, 2014). In this section, we present an alternative approach for selecting λ using the unbiased estimators of the degrees of freedom derived in Section 3.3.

The Bayesian Information Criterion (BIC) developed in Schwarz (1978) has been used extensively for model selection. However, it is known that the BIC does not perform well unless the number of observations is far larger than the number of parameters (Chen and Chen, 2008, 2012). For convex clustering (3), the number of observations is equal to the number of parameters. Thus, we consider the extended BIC (Chen and Chen, 2008, 2012), defined as

| (19) |

where $\hat{u}_q$ is the convex clustering estimate for a given value of q and λ, γ ∈ [0, 1], and d̂fq is given in Section 3.3. Note that we suppress the dependence of ûq and d̂fq on λ for notational convenience. We see that when γ = 0, the extended BIC reduces to the classical BIC.

To evaluate the performance of the extended BIC in selecting the number of clusters, we generate Gaussian clusters with K = 2 and K = 3 as described in Section 4.1, with n = p = 20, and σ = 0.5. We perform convex clustering with q = 2 over a fine grid of λ, and select the value of λ for which the quantity eBICq,γ is minimized. We consider γ ∈ {0, 0.5, 0.75, 1}. Table 1 reports the proportion of datasets for which the correct number of clusters was identified, as well as the average Rand index.

Table 1.

Simulation study to evaluate the performance of the extended BIC for tuning parameter selection for convex clustering with q = 2. Results are reported over 100 simulated data sets. We report the proportion of data sets for which the correct number of clusters was identified, and the average Rand index.

| Setting | eBIC2,γ | Correct number of clusters | Rand index |
|---|---|---|---|
| Gaussian clusters, K = 2 | γ = 0 | 0.94 | 0.9896 |
| | γ = 0.5 | 0.98 | 0.9991 |
| | γ = 0.75 | 0.99 | 0.9995 |
| | γ = 1 | 0.99 | 0.9995 |
| Gaussian clusters, K = 3 | γ = 0 | 0.06 | 0.7616 |
| | γ = 0.5 | 0.59 | 0.9681 |
| | γ = 0.75 | 0.70 | 0.9768 |
| | γ = 1 | 0.84 | 0.9873 |

From Table 1, we see that the extended BIC is able to select the true number of clusters accurately for K = 2. When K = 3, the classical BIC (γ = 0) fails to select the true number of clusters. In contrast, the extended BIC with γ = 1 has the best performance.

5. Discussion

Convex clustering recasts the clustering problem into a penalized regression problem. By studying its dual problem, we show that there is a connection between convex clustering and single linkage hierarchical clustering. In addition, we establish a connection between convex clustering and k-means clustering. We also establish several statistical properties of convex clustering. Through some numerical studies, we illustrate that the performance of convex clustering may not be appealing relative to traditional clustering methods, especially when the signal-to-noise ratio is low.

Several authors have proposed a modification to the convex clustering problem (1),

$$\underset{U \in \mathbb{R}^{n\times p}}{\text{minimize}}\ \frac{1}{2}\|X - U\|_F^2 + \lambda \sum_{i<i'} w_{ii'} \|U_{i\cdot} - U_{i'\cdot}\|_q, \tag{20}$$

where W is an n × n symmetric matrix of positive weights with (i, i′)th element $w_{ii'}$ (Pelckmans et al., 2005; Hocking et al., 2011; Lindsten, Ohlsson and Ljung, 2011; Chi and Lange, 2014a). For instance, the weights can be defined as $w_{ii'} = \exp(-\phi\|X_{i\cdot} - X_{i'\cdot}\|_2^2)$ for some constant ϕ > 0. This yields better empirical performance than (1) (Hocking et al., 2011; Chi and Lange, 2014a). We leave an investigation of the properties of (20) to future work.

Acknowledgments

We thank Ashley Petersen, Ali Shojaie, and Noah Simon for helpful conversations on earlier drafts of this manuscript. We thank the editor and two reviewers for helpful comments that improved the quality of this manuscript. D. W. was partially supported by a Sloan Research Fellowship, NIH Grant DP5OD009145, and NSF CAREER DMS-1252624.

Appendix A: Proof of Lemmas 2–3

Proof of Lemma 2

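We rewrite problem (3) as

$$\underset{u,\ \eta_1}{\text{minimize}}\ \frac{1}{2}\|x - u\|_2^2 + \lambda P_q(\eta_1) \quad \text{subject to} \quad \eta_1 = Du,$$

with the Lagrangian function

$$L(u, \eta_1, \nu) = \frac{1}{2}\|x - u\|_2^2 + \lambda P_q(\eta_1) + \nu^T(Du - \eta_1), \tag{A-1}$$

where $\nu \in \mathbb{R}^{p\cdot n(n-1)/2}$ is the Lagrangian dual variable. In order to derive the dual problem, we need to minimize the Lagrangian function over the primal variables u and η1. Recall from (4) that $P_q^*(\cdot)$ is the dual norm of $P_q(\cdot)$. It can be shown that

$$\min_u \Big\{\frac{1}{2}\|x - u\|_2^2 + \nu^T Du\Big\} = \frac{1}{2}\|x\|_2^2 - \frac{1}{2}\|x - D^T\nu\|_2^2$$

and

$$\min_{\eta_1} \big\{\lambda P_q(\eta_1) - \nu^T\eta_1\big\} = \begin{cases} 0 & \text{if } P_q^*(\nu) \le \lambda, \\ -\infty & \text{otherwise.} \end{cases}$$

Therefore, the dual problem for (3) is

$$\underset{\nu}{\text{maximize}}\ \frac{1}{2}\|x\|_2^2 - \frac{1}{2}\|x - D^T\nu\|_2^2 \quad \text{subject to} \quad P_q^*(\nu) \le \lambda, \tag{A-2}$$

which is equivalent to (5).

We now establish an explicit relationship between the solution to convex clustering and its dual problem. Differentiating the Lagrangian function (A-1) with respect to u and setting it equal to zero, we obtain

$$\hat{u} = x - D^T\hat{\nu},$$

where $\hat{\nu}$ is the solution to the dual problem, which satisfies $P_q^*(\hat{\nu}) \le \lambda$ by (A-2). Multiplying both sides by D, we obtain the relationship (6).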

Proof of Lemma 3

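We rewrite (7) as

$$\underset{\gamma,\ \eta_2}{\text{minimize}}\ \frac{1}{2}\|Dx - \gamma\|_2^2 + \lambda P_q(\eta_2) \quad \text{subject to} \quad \eta_2 = \gamma,$$

with the Lagrangian function

$$L(\gamma, \eta_2, \nu) = \frac{1}{2}\|Dx - \gamma\|_2^2 + \lambda P_q(\eta_2) + \nu^T(\gamma - \eta_2), \tag{A-3}$$

where $\nu \in \mathbb{R}^{p\cdot n(n-1)/2}$ is the Lagrangian dual variable. In order to derive the dual problem, we minimize the Lagrangian function over the primal variables γ and η2. It can be shown that

$$\min_\gamma \Big\{\frac{1}{2}\|Dx - \gamma\|_2^2 + \nu^T\gamma\Big\} = \frac{1}{2}\|Dx\|_2^2 - \frac{1}{2}\|Dx - \nu\|_2^2$$

and

$$\min_{\eta_2} \big\{\lambda P_q(\eta_2) - \nu^T\eta_2\big\} = \begin{cases} 0 & \text{if } P_q^*(\nu) \le \lambda, \\ -\infty & \text{otherwise.} \end{cases}$$

Therefore, the dual problem for (7) is

$$\underset{\nu}{\text{maximize}}\ \frac{1}{2}\|Dx\|_2^2 - \frac{1}{2}\|Dx - \nu\|_2^2 \quad \text{subject to} \quad P_q^*(\nu) \le \lambda, \tag{A-4}$$

which is equivalent to (8).

We now establish an explicit relationship between the solution to (7) and its dual problem. Differentiating the Lagrangian function (A-3) with respect to γ and setting it equal to zero, we obtain

$$\hat{\gamma} = Dx - \hat{\nu},$$

where $\hat{\nu}$ is the solution to the dual problem, which we know from (A-4) satisfies $P_q^*(\hat{\nu}) \le \lambda$.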

Appendix B: Proof of Lemma 5

Proof of Lemma 5

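Since D is not of full rank by Lemma 1(i), the solution to (5) in the absence of the constraint is not unique, and takes the form

$$\hat{\nu} = (DD^T)^\dagger Dx + \big[I - (DD^T)^\dagger DD^T\big]w = (D^T)^\dagger x + \big[I - (D^T)^\dagger D^T\big]w = \frac{1}{n}Dx + \Big[I - \frac{1}{n}DD^T\Big]w \tag{B-1}$$

for $w \in \mathbb{R}^{p\cdot n(n-1)/2}$. The second equality follows from Lemma 1(iii) and the last equality follows from Lemma 1(ii).

Let û be the solution to (3). Substituting $\hat{\nu}$ given in (B-1) into (6), we obtain

$$D\hat{u} = Dx - \frac{1}{n}DD^TDx - DD^T\Big[I - \frac{1}{n}DD^T\Big]w = 0,$$

where the last equality uses $DD^TD = nD$, which follows from Lemma 1(ii) and the identity $DD^\dagger D = D$.

Recall from Definition 1 that all observations are estimated to belong to the same cluster if Dû = 0. For any $\hat{\nu}$ in (B-1), picking $\lambda \ge P_q^*(\hat{\nu})$ guarantees that the constraint on the dual problem (5) is inactive, and therefore that convex clustering has a trivial solution of Dû = 0.

Since w is arbitrary, $\hat{\nu}$ is not unique. In order to obtain the smallest tuning parameter λ such that Dû = 0, we take

$$\lambda_{\text{upper}} = \min_{w} P_q^*\Big(\frac{1}{n}Dx + \Big[I - \frac{1}{n}DD^T\Big]w\Big).$$

Any tuning parameter λ ≥ λupper results in an estimate for which all observations belong to a single cluster. The proof is completed by recalling the definition of the dual norm in (4).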

Appendix C: Proof of Lemmas 6–7

To prove Lemmas 6 and 7, we need a lemma on the tail bound for quadratic forms of independent sub-Gaussian random variables.

Lemma 10

(Hanson and Wright, 1971) Let z be a vector of independent sub-Gaussian random variables with mean zero and variance σ². Let M be a symmetric matrix. Then, there exist constants c1, c2 > 0 such that for any t > 0,

where ∥ · ∥F and ∥ · ∥sp are the Frobenius norm and spectral norm, respectively.

In order to simplify our analysis, we start by reformulating (3) as in Liu, Yuan and Ye (2013). Let be the singular value decomposition of D, where , and . Construct such that is an orthogonal matrix, that is, VTV = VVT = I. Note that .

Let and . Also, let . Optimization problem (3) then becomes

| (C-1) |

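where . Note that rank(Z) = p(n – 1) and therefore, there exists a pseudo-inverse $Z^\dagger$ such that Z†Z = I. Recall from Section 1 that the set $\mathcal{C}(i,i')$ contains the row indices of D such that $D_{\mathcal{C}(i,i')}u = U_{i\cdot} - U_{i'\cdot}$. Let the submatrices $Z_{\mathcal{C}(i,i')}$ and $Z^\dagger_{\mathcal{C}(i,i')}$ denote the rows of Z and the columns of Z†, respectively, corresponding to the indices in the set $\mathcal{C}(i,i')$. By Lemma 1(v),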

| (C-2) |

Let and denote the solution to (C-1).

Proof of Lemma 6

We establish a finite sample bound for the prediction error of convex clustering with q = 1 by analyzing (C-1). First, note that and . Thus, . Recall from (2) that P1(Zβ) = ∥Zβ∥1. By the definition of and , we have

implying

| (C-3) |

where . Recall that and . By the optimality condition of (C-1),

Therefore, substituting into , we obtain

We now establish bounds for and that hold with high probability.

Bound for

First, note that is a projection matrix of rank p, and therefore and . By Lemma 10 and taking z = ϵ and , we have that

where c1 and c2 are constants in Lemma 10. Picking , we have

| (C-4) |

Bound for

Let ej be a vector of length with a one in the jth entry and zeroes in the remaining entries. Let . Using the fact that Λmax(Vβ) = 1 and (C-2), we know that each vj is a sub-Gaussian random variable with zero mean and variance at most . Therefore, by the union bound,

Picking , we obtain

| (C-5) |
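The union-bound step has a simple closed form when each v_j satisfies P(|v_j| > t) ≤ 2 exp{−t²/(2s²)}, as a mean-zero sub-Gaussian variable with variance proxy s² does: choosing t = s√(2 log(2m/δ)) makes the union bound over m such variables equal to δ. The Python sketch computes this threshold and compares it with a Monte Carlo estimate for Gaussian v_j. The values of m, s and δ are illustrative placeholders, not the quantities used in (C-5), and both function names are hypothetical.

```python
import numpy as np


def union_bound_threshold(m, s, delta):
    """Smallest t with 2 * m * exp(-t^2 / (2 s^2)) <= delta.

    For m variables with P(|v_j| > t) <= 2 exp(-t^2 / (2 s^2)), the union bound
    then gives P(max_j |v_j| > t) <= delta.
    """
    return s * np.sqrt(2.0 * np.log(2.0 * m / delta))


def empirical_exceedance(m, s, t, n_rep=5000, seed=0):
    """Fraction of replications with max_j |v_j| > t, where v_j ~ N(0, s^2)."""
    rng = np.random.default_rng(seed)
    v = rng.normal(0.0, s, size=(n_rep, m))   # Gaussian: one sub-Gaussian case
    return np.mean(np.abs(v).max(axis=1) > t)


m, s, delta = 200, 0.5, 0.05
t = union_bound_threshold(m, s, delta)
```

The bound is conservative: the union bound ignores dependence among the v_j and the Gaussian tail bound is loose, so the observed exceedance rate typically falls well below δ.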

Combining the two upper bounds

Setting and combining the results from (C-4) and (C-5), we obtain

| (C-6) |

with probability at least . Substituting (C-6) into (C-3), we obtain

We get Lemma 6 by an application of the triangle inequality and by rearranging the terms.
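To make the objects in this proof concrete, the Python sketch below solves the q = 1 problem on a small example. It swaps in projected gradient on the dual, a standard technique for generalized-lasso-type problems, and is not necessarily the algorithm used elsewhere in the paper: minimize ½∥x − Dᵀν∥² over ∥ν∥∞ ≤ λ and set û = x − Dᵀν. The construction of D (u stacks the rows of U; one block per pair i < i′) and all function names are assumptions made for illustration.

```python
import itertools

import numpy as np


def difference_matrix(n, p):
    """Hypothetical helper: D = A kron I_p, one block of p rows per pair i < i'."""
    pairs = list(itertools.combinations(range(n), 2))
    A = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        A[l, i], A[l, j] = 1.0, -1.0
    return np.kron(A, np.eye(p))


def convex_cluster_l1(X, lam, n_iter=5000):
    """Sketch of q = 1 convex clustering via projected gradient on the dual.

    Minimizes 0.5 * ||x - D^T nu||_2^2 over the box ||nu||_inf <= lam and
    returns U_hat, whose stacked rows are x - D^T nu.
    """
    n, p = X.shape
    D = difference_matrix(n, p)
    x = X.reshape(-1)                          # stacks the rows of X
    step = 1.0 / np.linalg.norm(D, 2) ** 2     # 1 / ||D||_sp^2, which is 1/n here
    nu = np.zeros(D.shape[0])
    for _ in range(n_iter):
        # gradient step on the dual, then projection onto the l_inf ball
        nu = np.clip(nu + step * D @ (x - D.T @ nu), -lam, lam)
    return (x - D.T @ nu).reshape(n, p)


def objective_l1(X, U, lam):
    """0.5 * ||X - U||_F^2 + lam * sum over i < i' of ||U_i. - U_i'.||_1."""
    n, p = X.shape
    D = difference_matrix(n, p)
    return 0.5 * np.sum((X - U) ** 2) + lam * np.abs(D @ U.reshape(-1)).sum()
```

With λ = 0 the sketch returns X itself, and for very large λ every row collapses to the column means of X. The basic inequality that starts the proof says that the objective at Û is no larger than the objective at the true mean matrix, which objective_l1 can be used to inspect.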

Proof of Lemma 7

We establish a finite sample bound for the prediction error of convex clustering with q = 2 by analyzing (C-1). Recall from (2) that . By the definition of and , we have

implying

| (C-7) |

where . Again, by the optimality condition of (C-1), we have that . Substituting this into , we obtain

where the second inequality follows from the triangle inequality and the third from the Cauchy-Schwarz inequality. We now establish bounds for and that hold with high probability.
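In our reading, the Cauchy-Schwarz step amounts to the group-wise Hölder inequality |aᵀb| ≤ (Σ_l ∥a_{C_l}∥₂)(max_l ∥b_{C_l}∥₂), obtained by applying Cauchy-Schwarz within each index set and summing; C_l is our notation for the index sets. The Python sketch checks it on random vectors, assuming each C_l is a block of p consecutive coordinates; the helper names are hypothetical.

```python
import numpy as np


def group_norms(w, p):
    """Euclidean norms of consecutive blocks of length p (the assumed sets C_l)."""
    return np.linalg.norm(w.reshape(-1, p), axis=1)


def group_holder_sides(a, b, p):
    """Return (|a^T b|, sum_l ||a_Cl||_2 * max_l ||b_Cl||_2)."""
    lhs = abs(a @ b)
    rhs = group_norms(a, p).sum() * group_norms(b, p).max()
    return lhs, rhs
```

The sum-of-group-norms and max-of-group-norms pairing is the dual-norm pairing for the q = 2 penalty, which is why it appears in this step.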

Bound for

This is established in the proof of Lemma 6 in (C-4), i.e.,

Bound for

First, note that there are p indices in each set . Therefore,

Note that

| (C-8) |

Therefore, using (C-8),

| (C-9) |

where the last inequality follows from (C-5) in the proof of Lemma 6.
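Because each index set contains p indices, the Euclidean norm of any block is at most √p times its largest absolute entry, so max_l ∥w_{C_l}∥₂ ≤ √p ∥w∥∞ (C_l is our notation). This elementary fact is presumably what links the group-wise quantity to the entry-wise bound (C-5). A quick numerical check in Python, taking the blocks to be p consecutive coordinates (an assumption) and using hypothetical helper names:

```python
import numpy as np


def max_group_norm(w, p):
    """max_l ||w_{C_l}||_2, with C_l taken to be consecutive blocks of length p."""
    return np.linalg.norm(w.reshape(-1, p), axis=1).max()


def sqrt_p_bound(w, p):
    """Right-hand side sqrt(p) * ||w||_inf of the elementary bound."""
    return np.sqrt(p) * np.abs(w).max()
```

Equality holds when some block has all of its entries equal in absolute value to ∥w∥∞, so the factor √p cannot be improved in general.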

Therefore, for , we have with probability at most . Combining the results from (C-4) and (C-9), we have that

| (C-10) |

with probability at least . Substituting (C-10) into (C-7), we obtain

We get Lemma 7 by an application of the triangle inequality and by rearranging the terms.

Appendix D: Proof of Lemma 9

Proof of Lemma 9

Directly from the dual problem (5), is the projection of x onto the convex set . Using the primal-dual relationship , we see that û is the residual from projecting x onto the convex set K. By Lemma 1 of Tibshirani and Taylor (2012), û is continuous and almost differentiable with respect to x. Therefore, by Stein's formula, the degrees of freedom can be characterized as $\mathrm{df}(\hat{u}) = \mathbb{E}\left[\operatorname{tr}\left(\partial \hat{u} / \partial x\right)\right]$.
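For q = 2, the characterization of û as a projection residual can be checked numerically. The Python sketch below computes û as x minus its projection onto K = {Dᵀν : ∥ν_{C_l}∥₂ ≤ λ for every l}, our reading of the set in the dual problem (5), using projected gradient on the dual, and then estimates tr(∂û/∂x) by forward finite differences. D is built under the assumed convention that u stacks the rows of U, with one block of p rows per pair i < i′; all helper names are hypothetical. At the extremes the answer is known: λ = 0 gives û = x and a divergence of np, while a very large λ fuses every row to the column means and gives p.

```python
import itertools

import numpy as np


def difference_matrix(n, p):
    """Hypothetical helper: D = A kron I_p, one block of p rows per pair i < i'."""
    pairs = list(itertools.combinations(range(n), 2))
    A = np.zeros((len(pairs), n))
    for l, (i, j) in enumerate(pairs):
        A[l, i], A[l, j] = 1.0, -1.0
    return np.kron(A, np.eye(p))


def convex_cluster_l2(x, n, p, lam, n_iter=2000):
    """Sketch: q = 2 convex clustering as the residual of a projection onto K.

    Runs projected gradient on min_nu 0.5 * ||x - D^T nu||_2^2 subject to
    ||nu_{C_l}||_2 <= lam for every block l, and returns x - D^T nu.
    """
    D = difference_matrix(n, p)
    step = 1.0 / np.linalg.norm(D, 2) ** 2
    nu = np.zeros(D.shape[0])
    for _ in range(n_iter):
        G = (nu + step * D @ (x - D.T @ nu)).reshape(-1, p)  # one row per block
        norms = np.linalg.norm(G, axis=1)
        # shrink each block back onto the Euclidean ball of radius lam
        scale = np.where(norms > lam, lam / np.maximum(norms, 1e-300), 1.0)
        nu = (G * scale[:, None]).reshape(-1)
    return x - D.T @ nu


def divergence_fd(x, n, p, lam, h=1e-6):
    """Forward-difference estimate of tr(d u_hat / d x) at the observed x."""
    base = convex_cluster_l2(x, n, p, lam)
    total = 0.0
    for k in range(x.size):
        x_h = x.copy()
        x_h[k] += h
        total += (convex_cluster_l2(x_h, n, p, lam)[k] - base[k]) / h
    return total
```

This finite-difference divergence is only a sanity check: it can be inaccurate near points where û is not differentiable or when the solver has not converged, and it is not the closed-form estimator derived below.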

Recall that denotes the rows of D corresponding to the indices in the set . Let . By the optimality condition of (3) with q = 2, we obtain

| (D-1) |

where

We define the matrix by removing the rows of D that correspond to elements in . Let be the projection matrix onto the orthogonal complement of the space spanned by the rows of .

By the definition of , we obtain . Therefore, Pû = û. Multiplying both sides of (D-1) by P, we obtain

| (D-2) |

where the second equality follows from the fact that for any .

Vaiter et al. (2014) showed that there exists a neighborhood around almost every x such that the set is locally constant with respect to x. Therefore, the derivative of (D-2) with respect to x is

| (D-3) |

using the fact that for any matrix A with .

Solving (D-3) for , we have

| (D-4) |

Therefore, an unbiased estimator of the degrees of freedom is of the form

Contributor Information

Kean Ming Tan, Department of Biostatistics, University of Washington, Seattle, WA 98195, U.S.A., keanming@uw.edu.

Daniela Witten, Departments of Statistics and Biostatistics, University of Washington, Seattle, WA 98195, U.S.A., dwitten@uw.edu.

References

- Bach F, Jenatton R, Mairal J, Obozinski G. Convex optimization with sparsity-inducing norms. Optimization for Machine Learning. 2011:19–53.

- Boucheron S, Lugosi G, Massart P. Concentration Inequalities: A Nonasymptotic Theory of Independence. OUP Oxford; 2013.

- Boyd S, Vandenberghe L. Convex Optimization. Cambridge University Press; 2004. MR2061575.

- Chen J, Chen Z. Extended Bayesian information criteria for model selection with large model spaces. Biometrika. 2008;95:759–771. MR2443189.

- Chen J, Chen Z. Extended BIC for small-n-large-P sparse GLM. Statistica Sinica. 2012;22:555. MR2954352.

- Chi EC, Allen GI, Baraniuk RG. Convex biclustering. arXiv preprint arXiv:1408.0856. 2014.

- Chi E, Lange K. Splitting methods for convex clustering. Journal of Computational and Graphical Statistics. 2014a. In press. doi: 10.1080/10618600.2014.948181.

- Chi E, Lange K. cvxclustr: Splitting methods for convex clustering. R package version 1.1.1. 2014b. doi: 10.1080/10618600.2014.948181. http://cran.r-project.org/web/packages/cvxclustr.

- Duchi J, Singer Y. Efficient online and batch learning using forward backward splitting. The Journal of Machine Learning Research. 2009;10:2899–2934. MR2579916.

- Efron B. How biased is the apparent error rate of a prediction rule? Journal of the American Statistical Association. 1986;81:461–470. MR0845884.

- Hanson DL, Wright FT. A bound on tail probabilities for quadratic forms in independent random variables. The Annals of Mathematical Statistics. 1971;42:1079–1083. MR0279864.

- Haris A, Witten D, Simon N. Convex modeling of interactions with strong heredity. Journal of Computational and Graphical Statistics. 2015. In press. doi: 10.1080/10618600.2015.1067217.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer Verlag; New York: 2009. MR2722294.

- Hocking TD, Joulin A, Bach F, Vert J-P, et al. Clusterpath: an algorithm for clustering using convex fusion penalties. 28th International Conference on Machine Learning. 2011.

- Jain AK, Dubes RC. Algorithms for Clustering Data. Prentice-Hall; 1988. MR0999135.

- Lindsten F, Ohlsson H, Ljung L. Clustering using sum-of-norms regularization: with application to particle filter output computation. IEEE Statistical Signal Processing Workshop (SSP). 2011:201–204.

- Liu J, Yuan L, Ye J. Guaranteed sparse recovery under linear transformation. Proceedings of the 30th International Conference on Machine Learning (ICML-13). 2013:91–99.

- Lloyd S. Least squares quantization in PCM. IEEE Transactions on Information Theory. 1982;28:129–137. MR0651807.

- Ng AY, Jordan MI, Weiss Y. On spectral clustering: analysis and an algorithm. Advances in Neural Information Processing Systems. 2002.

- Pelckmans K, De Brabanter J, Suykens J, De Moor B. Convex clustering shrinkage. PASCAL Workshop on Statistics and Optimization of Clustering. 2005.

- Radchenko P, Mukherjee G. Consistent clustering using an ℓ1 fusion penalty. arXiv preprint arXiv:1412.0753. 2014.

- Rand WM. Objective criteria for the evaluation of clustering methods. Journal of the American Statistical Association. 1971;66:846–850.

- Schwarz G. Estimating the dimension of a model. The Annals of Statistics. 1978;6:461–464. MR0468014.

- Tan KM, Witten DM. Sparse biclustering of transposable data. Journal of Computational and Graphical Statistics. 2014;23:985–1008. doi: 10.1080/10618600.2013.852554. MR3270707.

- Tibshirani RJ, Taylor J. The solution path of the generalized lasso. The Annals of Statistics. 2011;39:1335–1371. MR2850205.

- Tibshirani RJ, Taylor J. Degrees of freedom in lasso problems. The Annals of Statistics. 2012;40:1198–1232. MR2985948.

- Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2005;67:91–108. MR2136641.

- Vaiter S, Deledalle C-A, Peyré G, Fadili JM, Dossal C. The degrees of freedom of partly smooth regularizers. arXiv preprint arXiv:1404.5557. 2014. MR3281282.

- Witten DM, Tibshirani R. A framework for feature selection in clustering. Journal of the American Statistical Association. 2010;105:713–726. doi: 10.1198/jasa.2010.tm09415. MR2724855.

- Zhu C, Xu H, Leng C, Yan S. Convex optimization procedure for clustering: theoretical revisit. Advances in Neural Information Processing Systems. 2014.