Summary

Lateral intraparietal (LIP) neurons encode a vast array of sensory and cognitive variables. Recently, we proposed that the flexibility of feature representations in LIP reflects the bottom-up integration of sensory signals, modulated by feature-based attention (FBA), from upstream feature-selective cortical neurons. LIP activity is also strongly modulated by the position of space-based attention (SBA). However, the mechanisms by which SBA and FBA interact to facilitate the representation of task-relevant spatial and non-spatial features in LIP remain unclear. We recorded from LIP neurons during performance of a task which required monkeys to detect specific conjunctions of color, motion-direction, and stimulus position. Here we show that FBA and SBA potentiate each other’s effect in a manner consistent with attention gating the flow of visual information along the cortical visual pathway. Our results suggest that linear bottom-up integrative mechanisms allow LIP neurons to emphasize task-relevant spatial and non-spatial features.

Introduction

Visual attention is a set of mechanisms for selectively prioritizing the neuronal processing of behaviorally relevant aspects of visual scenes (Carrasco, 2011). It is traditionally described as being either allocated toward a specific spatial location (space-based attention or SBA) or toward non-spatial visual features (feature-based attention or FBA) such as color, motion direction or orientation. For example, detecting someone in a crowd is facilitated by prior information about the color of her clothes, the direction of her movement or her spatial position.

SBA and FBA have both been shown to enhance encoding of task-relevant locations or features throughout the visual cortical hierarchy (Cohen and Maunsell, 2011; Connor et al., 1997; Ipata et al., 2012; Martinez-Trujillo and Treue, 2004; Maunsell and Treue, 2006) as well as higher order areas such as the Lateral Intraparietal (LIP) area (Bisley and Goldberg, 2010; Herrington and Assad, 2009; Ibos and Freedman, 2014), frontal-eye field (FEF) (Armstrong et al., 2009; Ibos et al., 2013; Zhou and Desimone, 2011) and prefrontal cortex (PFC) (Bichot et al., 2015; Hussar and Pasternak, 2013; Lennert and Martinez-Trujillo, 2011, 2013; Tremblay et al., 2015). Moreover, the impact of SBA and FBA on the response of visual cortical neurons suggests that both types of attention modulate neuronal processing in similar ways (Cohen and Maunsell, 2009, 2011; Hayden and Gallant, 2009; Leonard et al., 2015). However, no study has characterized their joint impact on the response of higher order cortical areas which are hypothesized to be more closely involved in mediating attentional control and decision-making (Bisley and Goldberg, 2010).

The main goal of this study was to test how SBA and FBA modulate visual selectivity in LIP in order to better understand its involvement in both types of attention. LIP is a core node in the network of brain areas mediating attention. For example, recordings from LIP during a wide range of tasks show strong modulations of neuronal activity due to both SBA (Bisley and Goldberg, 2010; Herrington and Assad, 2009; Saalmann et al., 2007) and FBA (Ibos and Freedman, 2014). However, the extent to which LIP plays a central role in generating attentional modulations, or receives attentional control signals from other brain areas, remains unclear. On one hand, SBA modulations of LIP activity precede attentional modulations in area MT (Herrington and Assad, 2009), consistent with LIP being a source for attentional modulations in extra-striate cortical visual areas (Herrington and Assad, 2009; Saalmann et al., 2007). On the other hand, we recently proposed that FBA modulations of LIP activity reflect the bottom-up integration of attentional modulations of upstream visual areas (e.g. V4 or MT) (Ibos and Freedman, 2014), in order to enhance the representation of task-relevant features and to facilitate decision-making. This apparent dichotomy between LIP being either an “emitter” of SBA signals or a “receiver” of FBA modulations raises a question about the precise role of LIP in attentional control. The ability to address this emitter/receiver question would benefit from characterizing the joint impact of SBA and FBA on visual selectivity of LIP neurons. This study directly examines LIP’s role in mediating performance of a visual matching task in which we independently manipulated both FBA and SBA.

We trained two monkeys to perform a delayed conjunction matching (DCM) task. Successions of visual stimuli (each composed of a conjunction of one color and one motion-direction) were presented simultaneously at two positions. One position was within the recorded neuron’s receptive field (RF), while the other position was in the opposite quadrant of the display. At the beginning of each trial, a sample stimulus cued the monkeys about which spatial location and which conjunction of color and direction were behaviorally relevant.

We show that both types of attention potentiate each other’s effects in LIP. FBA affects LIP neurons in a spatially global manner as feature tuning shifts toward the relevant features, consistent with our earlier report (Ibos and Freedman, 2014), were qualitatively independent of the spatial position of attention. However, SBA modulated FBA effects as the amplitudes of feature-tuning shifts were larger when behaviorally-relevant stimuli were located inside neurons’ RFs. Interestingly, the amplitude of SBA modulations—which consisted of both increases and decreases of neuronal responses—depended on the feature tuning properties of LIP neurons, with larger modulations when monkeys attended neurons’ preferred features. Finally, a feedforward two-layer integrative model suggests that the modulations of both spatial and feature selectivity observed in LIP can arise via linear integration of SBA and FBA-modulations of neurons from upstream visual areas such as MT and V4.

Results

Task and Behavior

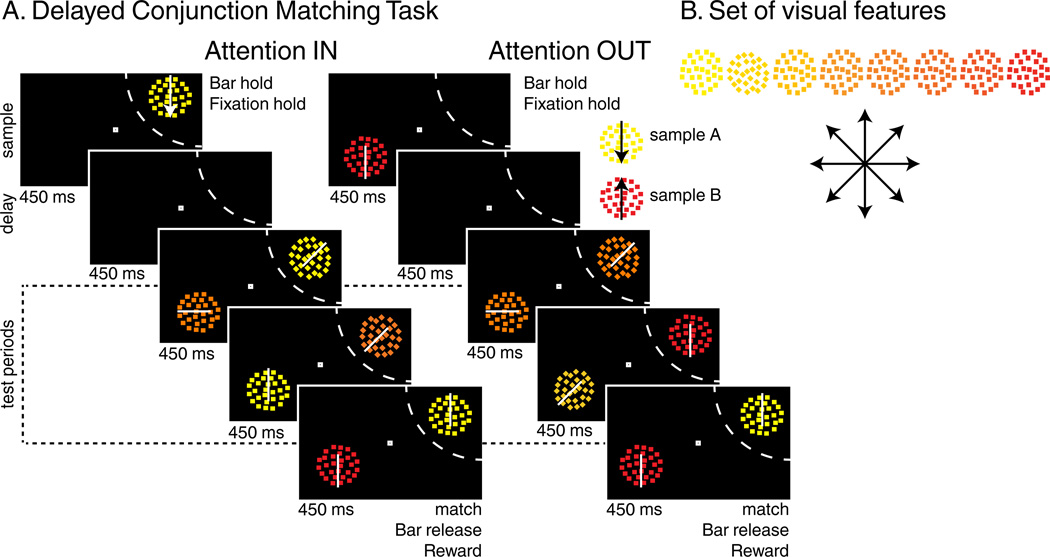

Two monkeys performed a modified version of a delayed conjunction matching (DCM) task used in a previous study (Ibos and Freedman, 2014). One sample (450 ms) was followed by a delay (450 ms) and 1 to 4 successive test stimuli (450 ms each) which were simultaneously presented with the same number of distractor stimuli located in the opposite hemifield (Figure 1A). Stimuli were conjunctions of one of eight directions and one of eight colors (for a total of 64 stimuli, Figure 1B). Monkeys were rewarded for releasing a touch bar when a “target” stimulus was presented that matched the sample in both color and direction. Although distractor stimuli could match both features of the sample, they were always irrelevant and monkeys had to ignore them. On 20% of trials, no target was presented and monkeys were rewarded for withholding their response until the end of the fourth test period. On each trial, either sample A (yellow dots moving downward) or sample B (red dots moving upward) was presented either inside (attention IN, AttIN) or outside (attention OUT, AttOUT) the RF of the recorded neuron. Each of the four test stimuli was pseudo-randomly picked from three types: (1) target stimuli, matching the sample in both color and direction, (2) the sample stimulus which was not presented during that trial (e.g. stimulus A during sample B trials), (3) any of the 62 remaining non-match stimuli. Distractors were pseudo-randomly picked among the entire set of 64 stimuli. By manipulating both the location and the identity of the sample stimulus, this task allows us to test how SBA and FBA modulate spatial, color and direction selectivity of individual neurons. Therefore, the following analyses will primarily focus on four conditions: sample A or B presented either inside (AIN or BIN trials) or outside (AOUT or BOUT trials) the RF.

Figure 1.

Behavioral Task: (A) Delayed conjunction matching task. Either sample A (yellow dots moving downwards) or sample B (red dots moving upwards) was presented at one of two positions. After a delay, one to four test stimuli were successively presented on the sample position while as many distractors were simultaneously presented in the opposite hemifield. During AttIN, sample and test stimuli were presented in the RF of the recorded neuron (dashed arc, not shown to monkeys). In AttOUT, sample and test stimuli were presented outside while distractors were located inside the RF. To receive a reward, monkeys had to release a lever when one of the test stimuli matched the sample in both features and to ignore distractors. (B) Stimulus features: 64 different test/distractor stimuli were generated using 8 colors and 8 directions.

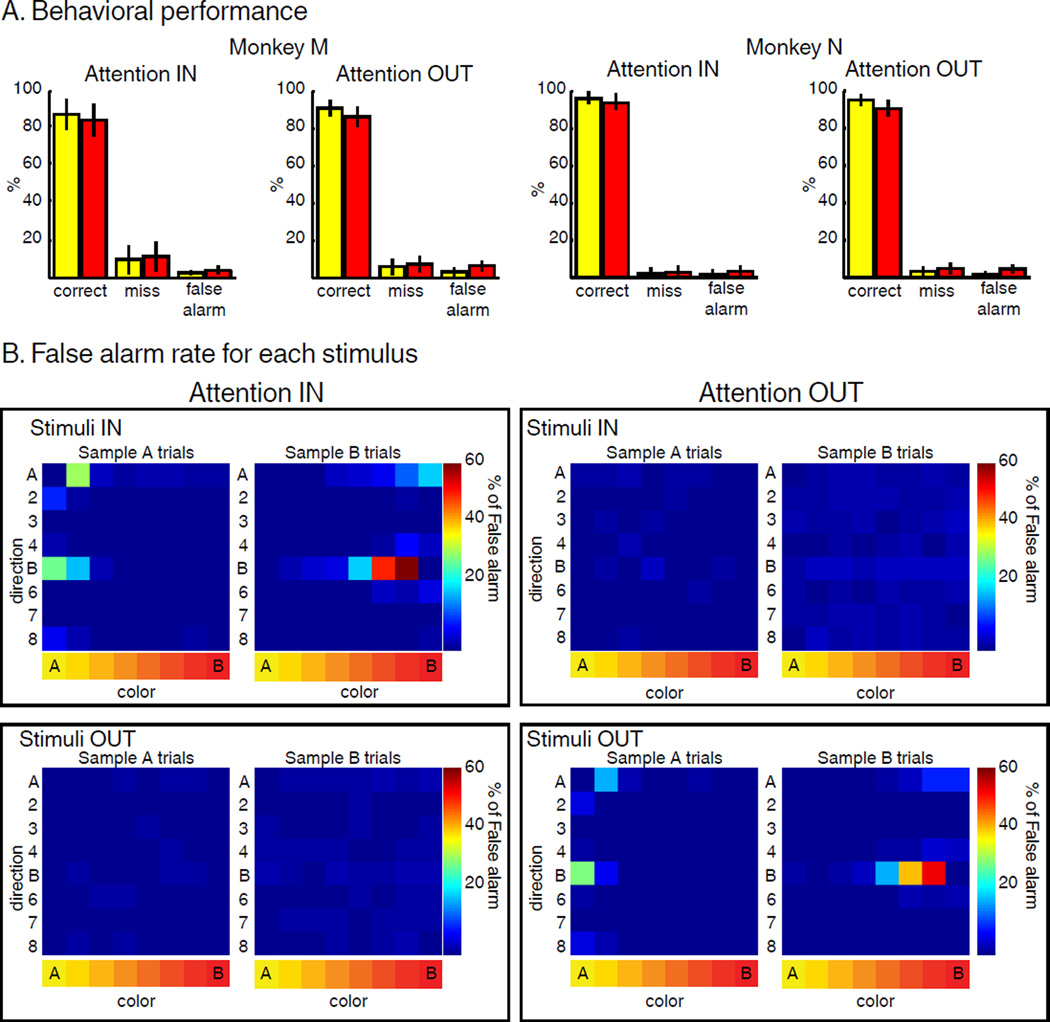

Both monkeys performed the task with >80% accuracy. Fewer than 15% of trials were “misses” (unreported target) and fewer than 5% of trials were “false alarms” (response to non-target stimuli, Figure 2A) during all four conditions. We verified that subjects successfully attended to test stimuli and ignored distractor stimuli. Monkeys had to release the lever only for test stimuli matching the sample in color and direction and ignore the same conjunction of features for distractor stimuli at the irrelevant position. This allowed us to maximize the effect of SBA, but prevented us from examining traditional behavioral markers of attention (e.g. difference in detection rate or reaction time when target stimuli were presented at the attended vs. unattended location). Instead, we examined the identity and position of stimuli that triggered false alarm responses (Figure 2B). This revealed that most false alarm errors were in response to stimuli that were visually similar to the sample at the relevant position only, and that monkeys correctly ignored stimuli at the irrelevant position. This confirms that the monkeys appropriately deployed their attention to task-relevant positions and features.

Figure 2.

Behavior. (A) Behavioral performance: both monkeys performed the task with high accuracy in both AttIN and AttOUT. (B) False alarm rate (averaged from both monkeys) for each of the 64 stimuli located inside (top) or outside (bottom) the RF of the recorded neuron during AttIN (left) and AttOUT (right) conditions. Each row represents one direction, and each column represents one color.

Neurophysiology

The central goal of this study was to understand how SBA and FBA enhance the representation of task-relevant stimuli in LIP. Given the small number of error trials, we analyzed exclusively correct trials. Because the monkeys were required to release a lever in response to target stimuli, we excluded from analysis activity which followed presentation of target stimuli (excluding neuronal activity directly related to the motor response). We only describe feature selectivity of LIP neurons to stimuli located inside neurons’ RFs (test stimuli during AttIN and distractor stimuli during AttOUT). In the following, we first characterize the effect of SBA and FBA on LIP selectivity. Second, we show how linear integrative mechanisms could account for the respective characteristics of SBA and FBA in LIP.

We recorded from 74 LIP neurons from two monkeys (Monkey M, N=27; Monkey N, N=47) performing the DCM task. A large fraction of the neuronal population was modulated by one or more task-related factors during test stimulus presentation (4-way ANOVA with sample position, sample identity, and the color and direction of in-RF stimuli as factors, p<0.01, see Methods). A majority of LIP neurons (65/74) were modulated by the position of the sample stimulus, indicating an impact of SBA on test-period activity. 28/74 neurons showed a main effect of sample identity, consistent with FBA modulating test-period responses. 62/74 neurons were selective for the color of test stimuli, and 61/74 neurons were selective for their motion direction.

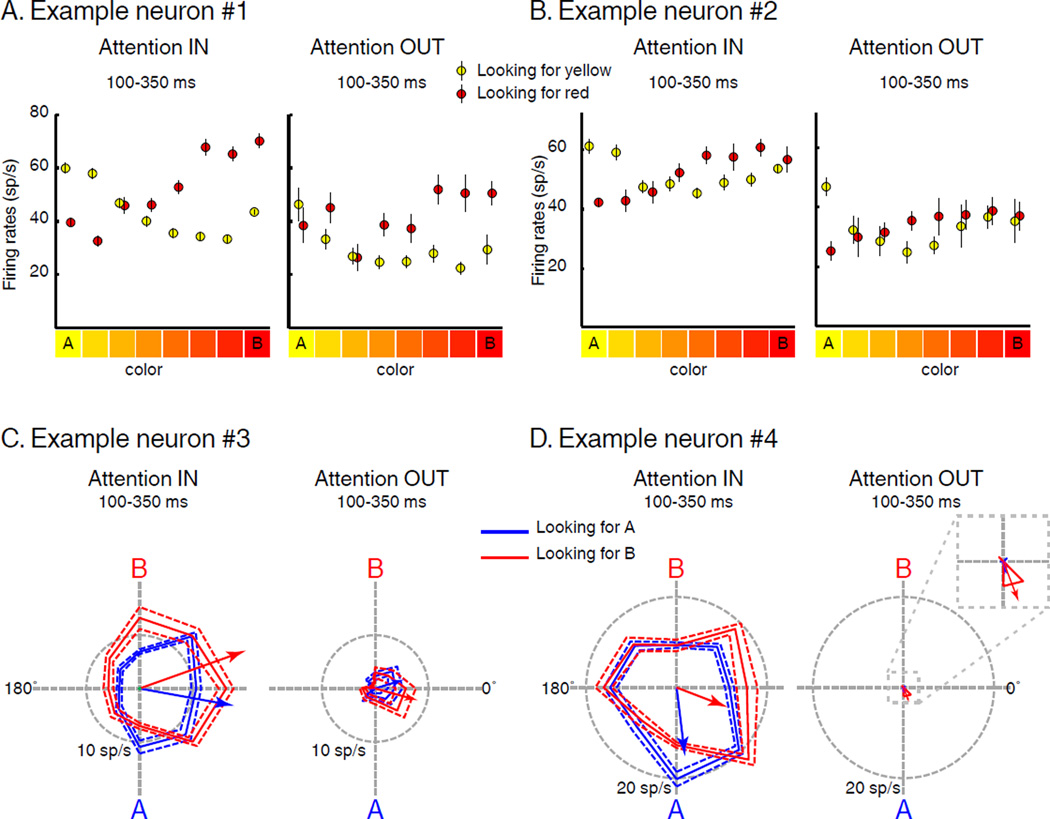

Figure 3 shows example tuning functions of two color selective (Figure 3A, B) and two direction selective (Figure 3C, D) neurons for stimuli located inside their RFs, separately during AttIN and AttOUT. Although there was always a stimulus shown in the RF, test-period neuronal activity was strongly modulated by both the location of the sample (i.e. SBA) and its identity (i.e. FBA). Each neuron showed greater activity during AttIN compared to AttOUT. This was particularly evident for the neuron in Figure 3D, which showed almost no response to distractor stimuli located inside its RF. In addition, feature tuning during the test period was also modified on a trial-to-trial basis according to the identity of the sample stimulus. Neuronal color tuning shifted depending on the color that was task relevant (e.g. Figure 3A and 3B). Both example neurons responded preferentially to yellow stimuli during AIN trials (yellow relevant) and red stimuli during BIN trials (red relevant). Interestingly, these neurons also showed qualitatively similar shifts of color tuning for distractors located inside their RF, with color tuning reflecting the task-relevant color even though monkeys ignored those stimuli. Both direction selective neurons (Figure 3C and 3D) showed modulations of direction tuning consistent with a shift of their preferred direction toward the relevant direction. However, these effects were only evident during AttIN: the preferred direction (see Methods) of the neuron in Figure 3C was 349.7° during AIN trials and 19.6° during BIN trials (angular distance of 29.8°, permutation test, p=0.021). When attention was directed outside the RF, the direction tuning of this neuron did not significantly vary according to the identity of the sample, in part because the response barely rose above baseline activity (17.1° and 344.5° during A and B trials, angular distance = −32°, permutation test, p=0.088). The preferred direction of the neuron in Figure 3D was 276.8° during AIN trials and 338.9° during BIN trials (angular distance = 62.1°, permutation test, p=0.042). When attention was directed outside the RF, the absence of response prevented a measure of direction selectivity.

Figure 3.

Examples of feature selectivity. (A) and (B) Color tuning. Average firing rate to the color of each stimulus located in the RF during AIN (yellow) and BIN (red) trials (Attention IN) and during AOUT (yellow) and BOUT (red) trials (Attention OUT). Error bars indicate standard error of the mean (SEM). (C) and (D) Direction tuning. Polar plots show average firing rate to the direction of each stimulus located inside RF during AIN (blue) and BIN (red) trials (Attention IN) and during AOUT (blue) and BOUT (red) trials (Attention OUT). Solid traces indicate mean firing rate, dotted traces indicate SEM. Blue and red oriented arrows correspond to each neuron’s direction vector.

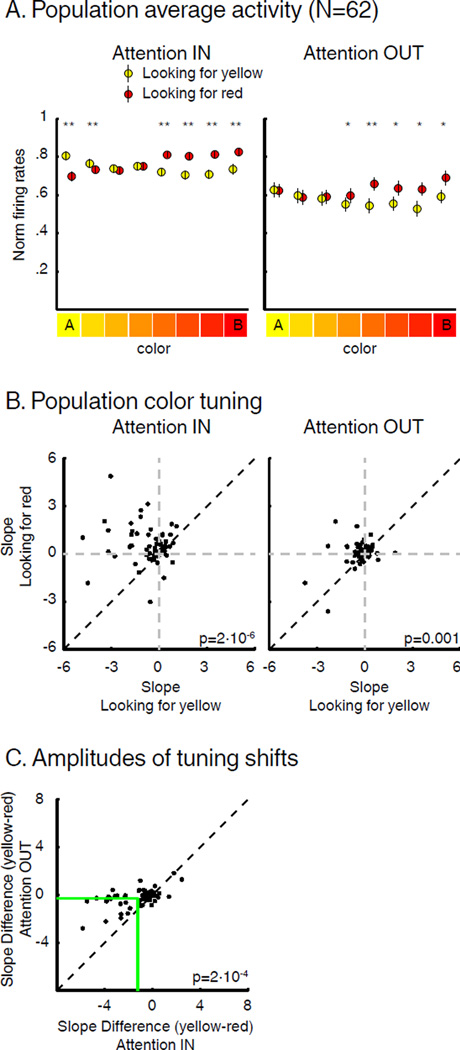

FBA modulates the representation of task-relevant features

Population analysis of color selectivity was performed using all color selective neurons (N=62; 4-way ANOVA, p<0.01). This revealed clear modulation of test-period color tuning (Figure 4A), with a shift of tuning toward the color that was task-relevant (yellow during AIN, red during BIN). The population color tuning for task-irrelevant distractors located inside neurons’ RFs also revealed a modulation of color selectivity, as the response to red stimuli was higher during BOUT than AOUT (paired T-Test, p<0.05). This indicated a spatially-global impact of FBA on population-level color tuning.

Figure 4.

Impact of SBA and FBA on color tuning. (A) Average test-period activity of color-selective neurons (N=62) when monkeys were looking for yellow (yellow) or for red (red) (paired T-Test, * p<0.05, ** p<0.001) during both AttIN and AttOUT. Error bars indicate SEM. (B) Each point represents the slope of the linear regression fit of each neuron’s color tuning curve during sample A (x-axis) vs. sample B (y-axis) trials. (C) Effect of SBA on the amplitude of color tuning shifts. Green lines represent the average slope difference in each condition.

The amplitude of color tuning shifts appeared to be modulated by the position of spatial attention. To test this, we compared the slope of a linear regression across the response to the 8 colors for each neuron during AIN, BIN, AOUT and BOUT trials. By convention, yellow was the first color while red was the eighth value on the X-axis, so that the slopes of yellow or red preferring neurons were negative or positive, respectively. As shown in Figure 4B, the slope was greater during sample B (red) than sample A (yellow) trials for a majority of neurons during both AttIN (paired T-Test, p=2×10⁻⁶) and AttOUT (paired T-Test, p=0.001). Specifically, slopes were positive during sample B trials and negative during sample A trials for a substantial number of neurons during both AttIN (N=30/62) and AttOUT (N=19/62), indicating dynamic encoding of the task-relevant color. The impact of FBA was larger during AttIN (mean slope difference=−1.4) than AttOUT (mean slope difference=−0.39, paired T-Test, p=2×10⁻⁴), consistent with larger color tuning shifts when SBA was located inside LIP neurons’ RF (Figure 4C).
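The slope comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the firing rates are hypothetical, the function name `tuning_slope` is ours, and the slope difference is taken as sample A minus sample B, which is one convention that would yield negative differences when slopes are greater on sample B trials.

```python
def tuning_slope(rates):
    """Least-squares slope of firing rate against color index (1 = yellow ... 8 = red)."""
    n = len(rates)
    xs = range(1, n + 1)
    mean_x = sum(xs) / n
    mean_y = sum(rates) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, rates))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

# Hypothetical mean firing rates (spikes/s) for the 8 colors, yellow first, red last.
rates_sample_a = [20.0, 18.0, 16.0, 14.0, 12.0, 10.0, 8.0, 6.0]  # yellow relevant
rates_sample_b = [6.0, 8.0, 10.0, 12.0, 14.0, 16.0, 18.0, 20.0]  # red relevant

slope_a = tuning_slope(rates_sample_a)  # negative: yellow-preferring tuning
slope_b = tuning_slope(rates_sample_b)  # positive: red-preferring tuning

# Assumed sign convention: sample A slope minus sample B slope.
slope_difference = slope_a - slope_b
```

In this toy example the neuron's tuning flips from yellow-preferring to red-preferring with the sample, so the slope changes sign between conditions, which is the pattern reported for a substantial number of neurons.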

We considered whether the differences in tuning shift amplitude between AttIN and AttOUT could be explained by the difference in mean spike rate between the two conditions. To do so, we equated spike rate by decimating each neuron’s activity in AttIN (see Methods) to match activity in AttOUT. This revealed greater amplitude of feature tuning shifts when SBA was directed inside LIP neurons’ RFs (paired T-Test, p=0.0075) and confirms that spatial attention impacts the magnitude of FBA-related tuning shifts.

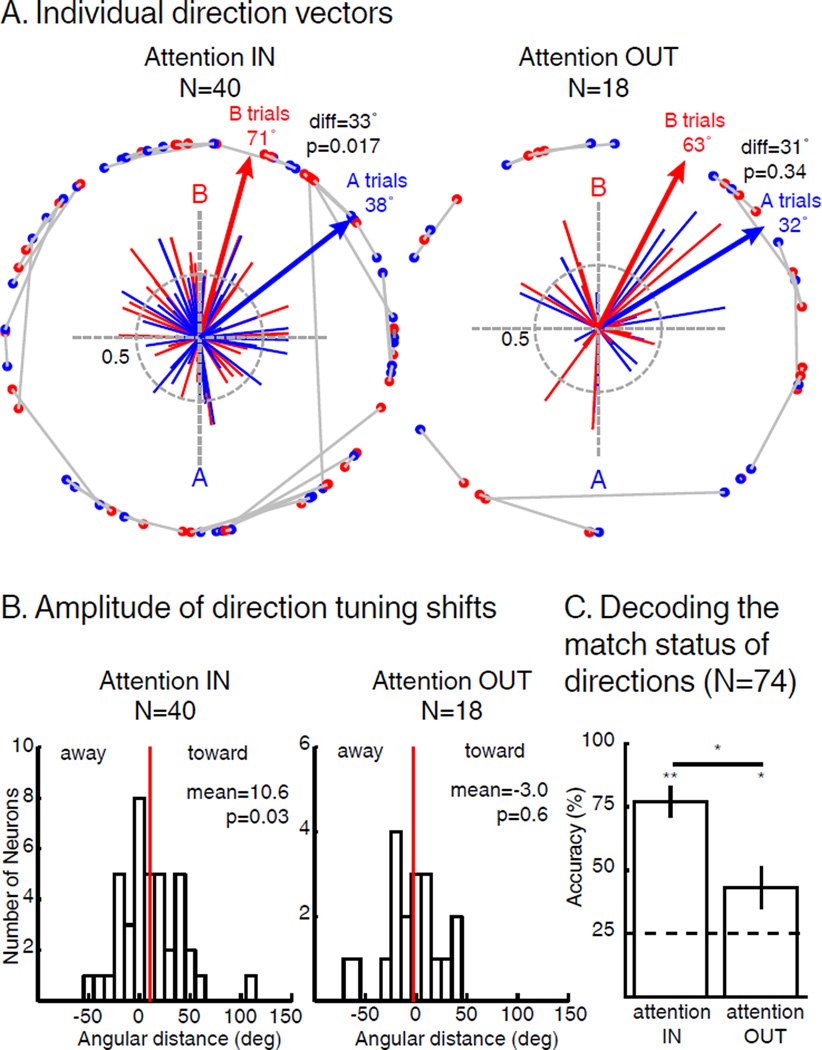

We examined the impact of SBA and FBA on direction selectivity among direction selective LIP neurons (determined separately in AttIN and AttOUT) by computing the angular distance between each neuron’s preferred direction during sample A and B trials and comparing these angular differences between AttIN (N=40, permutation test, p<0.05) and AttOUT (N=18). The population direction vector (the sum of all individual neuron vectors) during AIN trials (37.9°) was significantly shifted toward the direction of sample A compared to the population direction vector during BIN trials (70.8°, angular distance=32.9°, Hotelling test for paired circular data, p=0.017). Similarly, the average shift amplitude (average angular distance between AIN and BIN vectors, independent of each vector’s amplitude) was 10.6° toward the attended direction (Figure 5B, T-Test, p=0.029). In addition, 10/40 individual neurons showed a significant shift of their preferred direction (8 toward and 2 away from the attended direction; permutation test, p<0.05). Substantially fewer neurons were direction selective during AttOUT (N=18). Likewise, we did not observe a significant population-level shift in preferred directions during AttOUT (Figure 5A; Hotelling test for paired circular data, p=0.34) and the angular distance between preferred directions during AOUT and BOUT trials was not significantly different (mean distance=−3.0°, T-Test, p=0.63; Figure 5B). Moreover, only 2/19 neurons showed significant shifts of their preferred direction (1 toward and 1 away from the attended direction, permutation test, p<0.05). We then directly compared these effects for neurons that were direction tuned during both AttIN and AttOUT (N=18). This revealed larger tuning shifts during AttIN, whether the activity during AttIN was decimated to the level of AttOUT (paired T-Test, p=0.024) or not (paired T-Test, p=0.026). Finally, we show that the lack of significance for shifts of direction tuning during AttOUT cannot be explained by a difference in firing rates or by a difference in neuronal population size (see Supplementary Materials).
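For readers less familiar with circular measures, the following Python sketch illustrates one common way to obtain a preferred direction and a signed angular distance. It is an assumption that the preferred direction is the angle of the response-weighted vector sum across the eight directions (the actual definition is given in the Methods); the function names and the tuning curve are hypothetical.

```python
import math

DIRECTIONS_DEG = [0, 45, 90, 135, 180, 225, 270, 315]

def preferred_direction(rates, directions_deg=DIRECTIONS_DEG):
    """Angle (degrees, in [0, 360)) of the vector sum of responses across directions."""
    x = sum(r * math.cos(math.radians(d)) for r, d in zip(rates, directions_deg))
    y = sum(r * math.sin(math.radians(d)) for r, d in zip(rates, directions_deg))
    return math.degrees(math.atan2(y, x)) % 360.0

def signed_angular_distance(a_deg, b_deg):
    """Smallest signed rotation from a to b, in the range [-180, 180)."""
    return (b_deg - a_deg + 180.0) % 360.0 - 180.0

# Hypothetical direction tuning curve (spikes/s) peaking at 90 degrees.
example_rates = [5.0, 10.0, 20.0, 10.0, 5.0, 2.0, 1.0, 2.0]
example_pd = preferred_direction(example_rates)

# Wrap-around case using the preferred directions reported for the neuron in Figure 3C.
distance_3c = signed_angular_distance(349.7, 19.6)
```

The wrap-around handling matters for pairs such as 349.7° and 19.6°, where a naive subtraction would give about −330° rather than a rotation of roughly 30°.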

Figure 5.

Impact of SBA and FBA on direction tuning of direction selective neurons. (A) Individual direction vectors during AttIN (N=40, permutation test, p<0.05) and AttOUT (N=18, permutation test, p<0.05). Solid lines represent direction vectors of each neuron during either sample A (blue) or sample B (red) trials. Blue and red arrows represent the sum of blue and red direction vectors (respectively). Blue and red dots paired by grey lines represent unitary projections of each individual vector during A and B trials respectively. diff: angular distance between sample A and sample B vectors. (B) Angular distance between the preferred direction of each neuron during sample A and B trials. Sign of the angular distance has been normalized so that positive and negative values represent shifts toward and away from the attended direction, respectively. Red lines represent the mean of the distributions (T-Test). (C) Accuracy of an SVM classifier to decode the match status of direction A and B during both AttIN (left) and AttOUT (right) conditions (permutation test, * p<0.05, ** p<0.01). Dotted line: chance level. Error bars represent standard deviation to the mean.

Given the lack of significant modulation of direction tuning during AttOUT at the level of individual LIP neurons, we tested whether a population-decoding approach could reveal effects of SBA on FBA modulations of relevant motion directions. We used a pseudo-population of LIP neurons recorded during performance of the DCM task (N=74). First, we observed that an SVM classifier (see Methods) could decode with above-chance accuracy (chance=50%) the identity of the sample based on neuronal responses to test stimuli during AttIN (75.9% correct, p<0.001) and to distractor stimuli during AttOUT (73.3% correct, p=0.001; AttIN vs AttOUT, p=0.33). Second, we trained an SVM classifier to decode whether the test direction matched the sample direction during AttIN and AttOUT (Figure 5C). The classifiers were able to decode with greater than chance (25%, see Methods) accuracy the match status of test direction during AttIN (mean accuracy=78.0%, p<0.001) and of distractor stimuli during AttOUT (mean accuracy=43.2%, p=0.01). However, decoding performance was greater during AttIN than AttOUT (permutation test, p=5.5×10⁻⁴), suggesting that FBA modulates direction representations in a spatially global manner but that the amplitude of these modulations depends on the position of SBA.

In additional control analyses (see Supplementary Materials), we show that FBA modulations in LIP are not related to similarity encoding between sample and test stimuli.

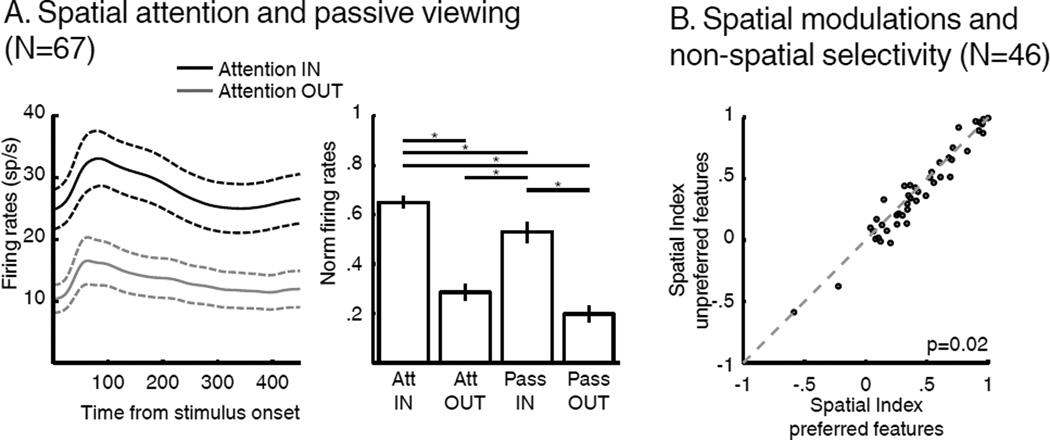

SBA and passive viewing

We next examined the impact of SBA and FBA on LIP neurons’ spatial selectivity. As evident in the example neurons and previous studies (Bisley and Goldberg, 2010; Herrington and Assad, 2009), LIP activity was modulated by the position of SBA. We focused on the population of neurons that were visually responsive relative to baseline for sample stimuli shown in their RF (N=67/74, paired T-Test, p<0.01). Among this population, neuronal activity was significantly greater for test stimuli compared to visually identical distractors shown within their RF (Figure 6, paired T-Test, p=5×10⁻¹⁰). On a subset of sessions (N=38/67 neurons) LIP activity was examined during both the DCM task and passive viewing (PV) of the same stimuli (see Methods and Figure 6A). This revealed that neuronal activity to task-irrelevant distractor stimuli within neurons’ RFs was reduced compared to the same stimuli shown in the RF during PV. Furthermore, LIP activity was more similar between AttOUT (with a task-irrelevant distractor shown in the RF) and PV with a stimulus shown outside the RF (two-way ANOVA, main effect of task identity, p=0.0019, main effect of position, p≪0.001, interaction, p=0.61; Figure 6A Tukey-Kramer post-hoc test). This indicates that SBA not only emphasized the response of LIP neurons with RFs overlapping the attended location, but also inhibited the response to task-irrelevant stimuli shown within their RFs.

Figure 6.

Effect of SBA and FBA on spatial selectivity. (A) Effect of SBA on the response of LIP neurons. Left: Time course of average firing rates to test stimuli (attention IN, black), and distractors (attention OUT, grey; N=67, neurons showing a significant response to the onset of the sample). Dashed lines represent SEM. Right: Comparison of neuronal responses during AttIN, AttOUT, passive-viewing IN and passive-viewing OUT (HSD post-hoc test, P<0.05). (B) Effect of FBA on the amplitude of SBA modulations (paired T-test).

We showed above that the amplitude of feature tuning shifts in LIP depended on the allocation of SBA. We tested whether SBA modulations were affected similarly by FBA (Figure 6B) by comparing the amplitude of SBA modulations when monkeys attended to each neuron’s preferred or non-preferred sample stimulus. We performed this analysis on the fraction of LIP neurons which showed selectivity to one sample stimulus over the other (N=46, 18 preferred sample A, 28 preferred sample B, T-Test, p<0.01; this apparent preferential encoding of sample B can be explained by the relatively small size of our data pool (N=74) and the unassessed feature selectivity of each LIP neuron prior to recording). We quantified the impact of SBA using a spatial modulation index which compared the response to test stimuli during AttIN to the response to distractor stimuli during AttOUT ((AttIN-AttOUT)/(AttIN+AttOUT)). This index was significantly greater when monkeys were attending to each neuron’s preferred sample stimulus (mean index=0.44, A trials for cells preferring sample A, B trials for cells preferring sample B) than non-preferred sample (mean index=0.41; B trials for cells preferring sample A, A trials for cells preferring sample B, paired T-Test, p=0.022). This shows that the effect of SBA on LIP neurons was modulated by non-spatial properties of each neuron.
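The spatial modulation index above is a simple contrast ratio, sketched here in Python. The function name and the firing rates are hypothetical; the rates were chosen only so that the resulting indices equal the reported population means (0.44 and 0.41) and do not correspond to any recorded neuron.

```python
def spatial_modulation_index(rate_att_in, rate_att_out):
    """(AttIN - AttOUT) / (AttIN + AttOUT); ranges from -1 to 1 for non-negative rates."""
    total = rate_att_in + rate_att_out
    if total == 0:
        raise ValueError("index is undefined when both firing rates are zero")
    return (rate_att_in - rate_att_out) / total

# Hypothetical mean firing rates (spikes/s) for one neuron.
index_preferred = spatial_modulation_index(36.0, 14.0)        # preferred sample attended
index_non_preferred = spatial_modulation_index(35.25, 14.75)  # non-preferred sample attended
```

An index of 0 indicates no effect of the attended position, positive values indicate stronger responses to attended test stimuli than to distractors in the RF, and negative values indicate the opposite.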

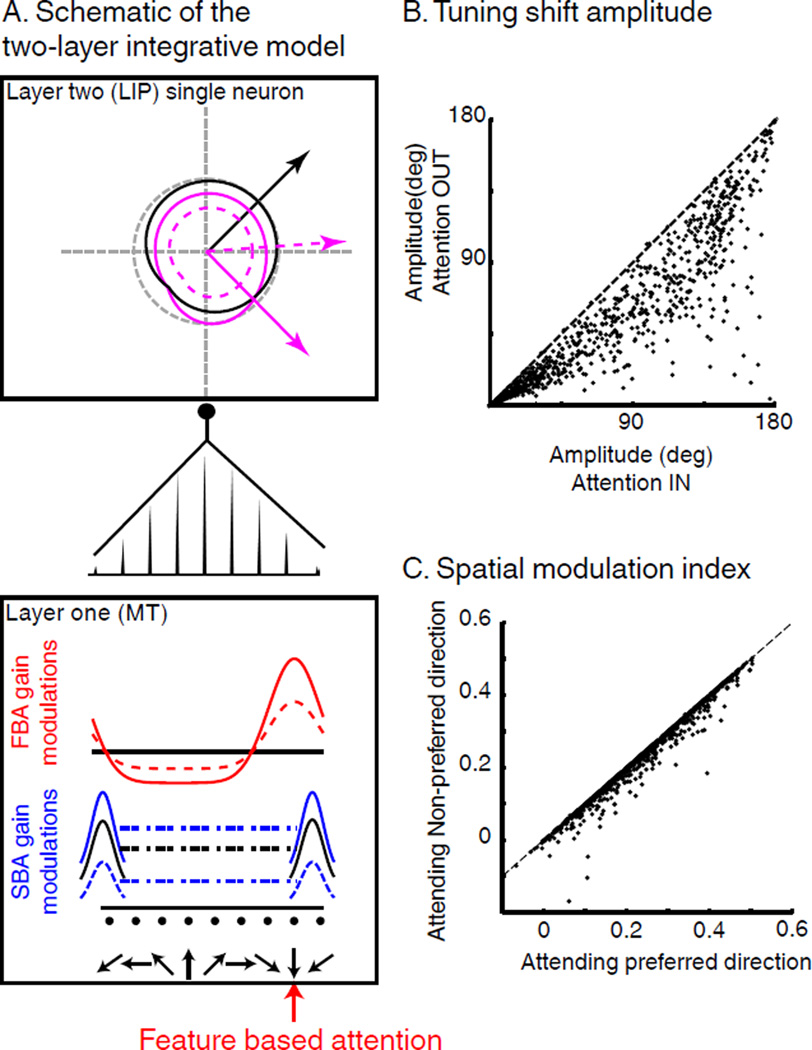

Interaction between SBA and FBA in a two-layer integration model

In a previous study, we proposed that feature tuning shifts observed in LIP (Ibos and Freedman, 2014) could arise from the linear integration of activity in upstream feature-selective cortical areas which are known to show modulations of response gain due to FBA (Maunsell and Treue, 2006). The model consists of two connected layers (L1 and L2). Each L2 neuron linearly integrates the activity of a pool of L1 neurons. The impact of L1 neurons on feature tuning of L2 neurons depends on the synaptic weights of each connection.

In the following, we propose that this model accounts for the effects of FBA and SBA on LIP spatial and non-spatial selectivity. We specifically address two main questions. First, do attentional modulations in LIP reflect interactions between SBA and FBA or independent multiplicative gain-modulations of L1 neurons? Thus, we tested whether SBA and FBA modulations of L1 could account for our results. SBA and FBA had either super-additive (i.e. they interacted) or additive (i.e. they were independent) effects on L1. Second, could FBA and SBA modulations directly targeting L2 neurons reproduce our results? Thus, we have tested the impact of independent SBA and FBA modulations applied on both L1 and L2 based on their respective spatial and feature selectivity.

Modulations of L1 neurons consisted of three functions. First, we applied SBA-related gain modulations to L1 neurons’ firing rates similar to those described in V4 and MT (Connor et al., 1997; Hayden and Gallant, 2009; Masse et al., 2012; Reynolds et al., 2000). Second, we implemented FBA-related multiplicative gain modulations of L1 neurons similar to those described in previous studies (Maunsell and Treue, 2006; McAdams and Maunsell, 2000). Third, SBA and FBA show super-additive effects in MT and V4 (Hayden and Gallant, 2009; Patzwahl and Treue, 2009) with larger FBA gain modulations when relevant stimuli were located inside each neuron’s RF. Thus, in a subset of simulations, we implemented such an interaction by increasing the amplitude of FBA gain modulations on L1 neurons during AttIN, and decreasing it during AttOUT (Figure 7A).

Figure 7.

Two-layer integration model. (A) Schematic of the model. Feature selectivity of L2 neurons (top box) comes from the linear integration of a population of L1 neurons (bottom box) and depends on the distribution of synaptic weights (middle). Black curves represent native tuning (no attention). For clarity, we only illustrate the tuning curves of the L1 neurons located on the extremities of the axis. Blue and red lines represent respective multiplicative gain factors of SBA and FBA on L1 neurons. Purple lines represent the joint effect of FBA and SBA on L2 neuron’s direction tuning. Full lines represent AttIN conditions. Dotted lines represent AttOUT. (B) Effect of SBA on the amplitude of feature-tuning shifts. (C) Effect of direction tuning of L2 neurons on the amplitude of SBA modulations.

We then tested the effect of each of these components on the spatial and feature selectivity of L2 neurons. Consistent with our previous report, linear integration of FBA-dependent gain-modulations resulted in shifts of the direction tuning of L2 neurons toward the attended direction. Moreover, the inclusion of modulations due to SBA in the model revealed that L2 responses were greater when task-relevant stimuli were located inside neurons’ RFs. Consistent with our observations, interactions between both types of attention in L1 resulted in larger direction tuning shifts during AttIN compared to AttOUT (Figure 7B) and larger SBA-modulations when the preferred direction of each L2 neuron was relevant (Figure 7C). We also tested whether apparent interaction between SBA and FBA in LIP could be explained by multiplicative gain-modulations, with independent FBA and SBA modulations of L1 neurons. Interestingly this condition produced strictly independent FBA and SBA modulations in L2 neurons (correlation coefficient=1, p=0) and failed to reproduce the observed neuronal results from LIP.
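The logic of these simulations can be illustrated with a toy Python sketch. It is not the model used in the study: the von Mises-shaped tuning, the cosine-shaped FBA gain, the weight profile, and every parameter value below are assumptions chosen for illustration. The sketch covers only the tuning-shift result, not the dependence of SBA modulations on feature preference.

```python
import math

DIRECTIONS = [i * 45.0 for i in range(8)]  # L1 preferred directions and test directions (deg)

def l1_rate(pref_deg, stim_deg, kappa=2.0):
    """Von Mises-shaped direction tuning of one L1 neuron (arbitrary units, assumed)."""
    return math.exp(kappa * (math.cos(math.radians(stim_deg - pref_deg)) - 1.0))

def fba_gain(pref_deg, attended_deg, amplitude):
    """Assumed FBA gain: largest for L1 neurons tuned to the attended direction."""
    return 1.0 + amplitude * math.cos(math.radians(pref_deg - attended_deg))

def l2_tuning(l2_pref_deg, attended_deg, sba_gain, fba_amplitude):
    """L2 response to each test direction: weighted linear sum of gain-modulated L1 rates."""
    weights = [l1_rate(l2_pref_deg, p, kappa=1.0) for p in DIRECTIONS]
    return [
        sum(w * sba_gain * fba_gain(p, attended_deg, fba_amplitude) * l1_rate(p, stim)
            for w, p in zip(weights, DIRECTIONS))
        for stim in DIRECTIONS
    ]

def preferred_direction(rates):
    """Angle of the response-weighted vector sum, in degrees within [0, 360)."""
    x = sum(r * math.cos(math.radians(d)) for r, d in zip(rates, DIRECTIONS))
    y = sum(r * math.sin(math.radians(d)) for r, d in zip(rates, DIRECTIONS))
    return math.degrees(math.atan2(y, x)) % 360.0

def tuning_shift(l2_pref_deg, sba_gain, fba_amplitude, dir_a=270.0, dir_b=90.0):
    """Absolute angular distance between L2 preferred directions on sample A vs. B trials."""
    pd_a = preferred_direction(l2_tuning(l2_pref_deg, dir_a, sba_gain, fba_amplitude))
    pd_b = preferred_direction(l2_tuning(l2_pref_deg, dir_b, sba_gain, fba_amplitude))
    return abs((pd_b - pd_a + 180.0) % 360.0 - 180.0)

L2_PREF = 0.0  # hypothetical L2 neuron tuned halfway between the two sample directions

# Independent gains: same FBA amplitude in AttIN and AttOUT, only the SBA gain differs.
shift_in_indep = tuning_shift(L2_PREF, sba_gain=1.5, fba_amplitude=0.2)
shift_out_indep = tuning_shift(L2_PREF, sba_gain=0.7, fba_amplitude=0.2)

# Interaction: FBA amplitude increased during AttIN and decreased during AttOUT.
shift_in_inter = tuning_shift(L2_PREF, sba_gain=1.5, fba_amplitude=0.3)
shift_out_inter = tuning_shift(L2_PREF, sba_gain=0.7, fba_amplitude=0.1)
```

With independent multiplicative gains, the SBA factor scales every L2 response by the same amount, so the preferred direction and hence the tuning shift are identical in AttIN and AttOUT. Only when the FBA amplitude itself depends on the position of attention does the toy L2 neuron show larger shifts during AttIN, in line with the simulation result described above.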

One concern is that FBA and SBA modulations of L2 neurons were indirect as they reflected integration of attention modulations of L1 neurons. It raises the possibility that independent top-down SBA and FBA directly targeting L2 neurons could contribute to either SBA-dependent feature-tuning shifts or FBA-dependent spatial attention modulations. Therefore, in a series of control simulations, we tested whether these properties could be explained by additional and independent FBA and SBA modulations applied directly on L1 and L2 neurons. This revealed similar L2 feature-tuning shifts during AttIN and AttOUT, and similar L2 spatial modulation indices during sample A and B trials (correlation coefficient=1, p=0). Together, these results suggest that the joint modulations of SBA and FBA observed in LIP reflect the linear integration of their interaction in upstream visual cortical areas.

Discussion

We trained two monkeys to identify specific conjunctions of color and motion-direction at a cued position, while ignoring similar, but task irrelevant, distractor stimuli located in the opposite visual hemifield. This task allowed us to simultaneously manipulate the voluntary allocation of FBA and SBA and consequently investigate their respective effects on LIP activity. FBA produced shifts of color and direction tuning of LIP neurons toward task-relevant features and SBA modulated neurons’ spatial selectivity, with higher firing rates when relevant stimuli were located inside each neuron’s RF. In addition, each type of attention potentiated the effect of the other; the amplitudes of FBA and SBA modulations were influenced by each neuron’s respective spatial and non-spatial selectivity. The amplitude of feature tuning shifts was larger when relevant stimuli were located inside each neuron’s RF. Similarly, the amplitude of SBA modulations depended on each neuron’s feature-selectivity, with larger effects observed when FBA was allocated to the neuron’s preferred conjunction of color and motion-direction.

The observed attentional modulations of LIP neurons are consistent with bottom-up integrative mechanisms implemented in a two-layer neural network model. In this model, L2 neurons (corresponding to area LIP) linearly integrate the activity of spatially-selective and feature-selective L1 neurons (corresponding to areas MT or V4). When attending to a specific feature and position, SBA and FBA simultaneously modulate the activity of L1 neurons, mimicking the observations of previous studies (Martinez-Trujillo and Treue, 2004; McAdams and Maunsell, 2000). The joint effects of SBA and FBA on L1 neurons result in changes in responses of L2 neurons similar to those observed in LIP in our study. Importantly, independent multiplicative SBA and FBA modulations of neurons from L1 or L2 in the model failed to reproduce our experimental observations. Together, these results suggest that FBA and SBA gate the bottom-up flow of visual information from the distributed network of upstream visual cortical areas, which are subsequently integrated by LIP neurons.

Interaction between SBA and FBA

Much previous work in LIP examined its role in attention (especially SBA) (Bisley and Goldberg, 2010; Herrington and Assad, 2009; Ibos et al., 2013; Saalmann et al., 2007), visual feature representation (Fanini and Assad, 2009; Toth and Assad, 2002) or encoding cognitive variables (Freedman and Assad, 2006; Sarma et al., 2015). Our previous study (Ibos and Freedman, 2014) was the first to parametrically characterize how FBA impacts feature tuning in LIP. To our knowledge, the current study is one of the few studies (Cohen and Maunsell, 2011; Hayden and Gallant, 2005, 2009; Patzwahl and Treue, 2009), and the only one in LIP, to directly compare the relative impact of SBA and FBA on the representation of spatial and non-spatial visual information. We show that joint modulations due to both types of attention in LIP reflect the integration of their interaction in cortical areas located one synapse upstream (such as MT or V4). This is consistent with bottom-up integrative mechanisms for both SBA and FBA, suggesting that LIP neurons act as generalized integrators of task-relevant visual inputs from upstream visual areas.

Despite the low level of neuronal response during AttOUT conditions, we were able to show that FBA modulates color selectivity of LIP neurons to irrelevant stimuli. A similar effect for direction appeared to be weaker, evident only at the population level. However, comparing the effect of attention on color and direction representation is difficult for at least two reasons. First, color and direction feature-tuning shifts were expressed in different units. Second, color space (ranging from yellow to red and therefore not covering the entire spectrum of visible colors) and direction space (8 directions evenly spaced in 360°) were highly dissimilar. Future work should focus on testing color selectivity of LIP neurons to a larger range of visible colors.

SBA in LIP

Our study suggests that endogenous SBA modulations in LIP are the result of bottom-up integration of upstream visually selective signals which show changes in response gain due to top-down SBA. This hypothesis is potentially at odds with the idea that LIP is a source of SBA modulations in upstream areas such as MT, as has been suggested by several recent studies. For example, in one task, monkeys were endogenously cued to orient SBA toward one position in space, while MT and LIP activity was examined. Modulations of LIP activity emerged ~60 ms prior to the modulations of MT neurons (Herrington and Assad, 2009). In another study, monkeys were trained to match both the position and orientation of visual stimuli (Saalmann et al., 2007). LIP and MT showed synchronized spiking activity, with LIP neuronal response leading LFP modulations in MT. Although the respective timing of attentional modulations is consistent with LIP driving attentional modulations in MT, these correlations do not provide direct evidence for a causal relationship. An alternative, and perhaps more likely, possibility is that attentional modulations in visual and parietal cortices are driven by top-down modulation from FEF (Astrand et al., 2015; Gregoriou et al., 2009; Ibos et al., 2013; Moore and Armstrong, 2003; Wardak et al., 2006) and PFC (Bichot et al., 2015; Hussar and Pasternak, 2013; Lennert and Martinez-Trujillo, 2011, 2013; Tremblay et al., 2015). For example, both electrical and pharmacological manipulation of FEF have been shown to produce attention-like modulation of activity in V4 (Armstrong and Moore, 2007; Noudoost and Moore, 2011; Schafer and Moore, 2011). Furthermore, functional anatomical studies reveal that FEF is more strongly connected to LIP than to MT (Ekstrom et al., 2008). Therefore, differences in timing of SBA modulations between LIP and MT could reflect this difference in functional connectivity with FEF.
Here we propose a parsimonious hypothesis which can account for the characteristics of attentional modulations observed in LIP. However, this hypothesis still needs to be directly validated by additional experiments testing the flow of information between FEF, PFC, LIP and cortical visual neurons during multifactor-attentional tasks.

Differences between SBA and FBA in LIP

Our model posits that FBA and SBA modulate the activity of sensory neurons (corresponding to L1 in the model) in similar ways, with FBA and SBA changing the response gain of neurons selective to the attended portion of space and stimulus feature respectively. Given the similarity of SBA and FBA modulations of L1 activity, the apparent difference between each type of attentional modulation on LIP neurons’ spatial and feature selectivity needs to be examined further. For instance, SBA appeared to produce changes in response gain of LIP neurons while FBA resulted in shifts of neurons’ feature-tuning, suggesting that space is preferentially processed by LIP neurons compared to non-spatial features. However, these effects could be due to one or more details of our task design. First, these effects were predicted by our model as described in a previous report (Ibos and Freedman, 2014). On the one hand, attending to one of L2 neurons’ non-preferred feature values induced feature-tuning shifts in L2. In our experiments, motion and color feature selectivity were never assessed prior to recording and monkeys always had to attend to the same two feature conjunctions which were not matched to each neuron’s preferred features, a condition which led to shifts of feature tuning in L2. On the other hand, our model predicts that attending to each L2 neuron’s preferred feature value would produce gain modulations of L2 neurons (Ibos and Freedman, 2014). In our experiment, monkeys always attended either to the preferred or the non-preferred location of each LIP neuron, and SBA created gain-modulations of each neuron’s response. Second, several studies have demonstrated that the spatial selectivity of LIP neurons can vary according to changing task demands (Ben Hamed et al., 2002) and motor-preparation (Duhamel et al., 1992).
However, our study was not designed to examine shifts of spatial selectivity and leaves open the possibility that LIP RFs could shift their position, size or shape as a result of SBA, as has been observed in V4 and MT (Connor et al., 1997; Womelsdorf et al., 2006). Interestingly, these modulations of V4 neurons’ spatial selectivity have been explained by the effects of recurrent inhibitions which surround the spotlight of SBA (Compte and Wang, 2006).

Limitations of the model

In this model, L1 neurons are targeted directly by attention, and L2 neurons (corresponding to LIP) are neither directly modulated by attention (except for control iterations), nor are they the source of attentional modulations in L1. However, top-down attention signals are presumed to originate in the FEF (Astrand et al., 2015; Gregoriou et al., 2009; Ibos et al., 2013; Moore and Armstrong, 2003; Wardak et al., 2006) or PFC (Bichot et al., 2015; Hussar and Pasternak, 2013; Lennert and Martinez-Trujillo, 2011, 2013; Tremblay et al., 2015), and are likely to target neurons from several areas simultaneously, including area V4, MT and also LIP (Ekstrom et al., 2008). Therefore, it is unlikely that the effects of attention on LIP activity exclusively result from the integration of the attention-modulated activity of upstream visual neurons. Instead, they might reflect the integration of both indirect (relayed by visual neurons) and direct top-down modulations (resulting from cortico-cortical connections between FEF, PFC and LIP). This hypothesis puts LIP at the interface between visual feature representation and attentional control, in a good position to integrate and multiplex (Meister et al., 2013; Rigotti et al., 2013; Rishel et al., 2013) task-relevant sensory and cognitive information in order to mediate task performance. However, whether LIP neurons are directly targeted by both FBA and SBA, and whether FEF or PFC is the primary source of those modulations, remain open questions. Future work should address these circuit mechanisms by examining cortical-layer-specific interactions between LIP and other areas involved in attentional control.

Finally, attention has been shown to modulate temporal aspects of neuronal spiking in addition to spike rate and to rely on a wide range of computations such as divisive-normalization (Carandini and Heeger, 2012). For example, attending to either a position or a specific feature can result in decorrelation of the response of populations of V4 neurons tuned to the relevant position or features (Cohen and Maunsell, 2011), potentially improving the encoding of task-relevant information at the population level (Averbeck et al., 2006). The impact of integrating such modulations by downstream neurons remains experimentally untested. Our model needs to be refined and expanded to account for the full range of attention-related effects described in previous studies (such as the structure of noise correlation between L1 neurons or divisive-normalization in LIP).

Methods

Behavioral task and stimulus display

Experimental procedures were similar to the ones described in a previous study (Ibos and Freedman, 2014). The same two male monkeys (Macaca mulatta; monkey M, ~10 kg; monkey N, ~11 kg) were seated head restrained in a primate chair inserted inside an isolation box (Crist Instrument), facing a 21 inch CRT monitor on which stimuli were presented (1280 × 1024 resolution, refresh rate 85 Hz, 57 cm viewing distance). Stimuli were 6° diameter circular patches of 476 random colored dots moving at a speed of 10°/s with 100% coherence. All stimuli were generated using the LAB color space (1976 CIE L*a*b) and all colors were measured as isoluminant under experimental conditions using a luminance meter (Minolta). All procedures were in accordance with the University of Chicago’s Animal Care and Use Committee and US National Institutes of Health guidelines.

Gaze position was measured with an optical eye tracker (SR Research) at 1.0 kHz sample rate. Reward delivery, stimulus presentation, behavioral signals and task events were controlled by MonkeyLogic software (Asaad et al., 2013), running under MATLAB on a Windows-based PC.

For a subset of recording sessions, after the DCM task, we tested neuronal response during a passive viewing task. Stimuli were located inside neurons’ RF for ~ 100 to 160 trials. If neuron stability allowed it, we then recorded neuronal response to stimuli located 180° away from the neuron’s RF, in the opposite hemifield. Monkeys were rewarded for fixating a central dot and holding a manual touch bar while a sequence of four stimuli (450 ms each), randomly picked among the set of 64 stimuli used for the DCM task, were sequentially presented.

Data analysis

We analyzed the response of LIP neurons during correct trials only and exclusively for stimuli located inside their RF (test stimuli during AttIN, distractor stimuli during AttOUT). Neuronal activity acquired while a target stimulus was presented (during AttIN or AttOUT) was excluded from analyses. Analyses were performed independently on four different conditions: sample A or B presented inside (AIN, BIN) or outside (AOUT, BOUT) RFs. Behavioral and neurophysiological results were similar in both monkeys. Thus both datasets were merged for population analyses.

For all the following analyses using permutation procedures, the number of trials for each direction was equated as follows: the number of trials ni used to analyze response to direction-i was defined as the lowest number of trials among the four conditions. For example, if 20, 30, 15 and 38 direction-1 trials were acquired during AIN, BIN, AOUT and BOUT respectively, 15 trials (randomly picked with replacement from each respective pool of direction-1 trials) were used to define the response to direction-1 during each of the 4 conditions. This ensured that the same number of trials was used for each condition. A similar procedure was used for color.
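The equating step can be illustrated with a minimal Python sketch. It is not the analysis code used in the study: the function name `equate_trials`, the dictionary layout and the simulated firing rates are assumptions introduced for illustration, and only the trial counts (20, 30, 15 and 38) come from the example above.

```python
import random

def equate_trials(trials_by_condition, rng):
    """Resample every condition to the smallest available trial count.

    trials_by_condition maps a condition name (e.g. "AIN") to the list of
    single-trial responses recorded for one direction (hypothetical layout).
    """
    n_i = min(len(trials) for trials in trials_by_condition.values())
    # Draw n_i trials with replacement from each condition's own pool.
    return {
        cond: [rng.choice(trials) for _ in range(n_i)]
        for cond, trials in trials_by_condition.items()
    }

rng = random.Random(0)
# Simulated firing rates (sp/s); only the counts follow the example in the text.
counts = {"AIN": 20, "BIN": 30, "AOUT": 15, "BOUT": 38}
pools = {cond: [rng.gauss(20.0, 5.0) for _ in range(n)] for cond, n in counts.items()}

equated = equate_trials(pools, rng)
print({cond: len(trials) for cond, trials in equated.items()})
# {'AIN': 15, 'BIN': 15, 'AOUT': 15, 'BOUT': 15}
```

Because sampling is done with replacement, a given trial can appear more than once in a resampled set, including in the condition that already has the lowest count.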

Color tuning

We assessed color selectivity of LIP neurons using a 4-way ANOVA, with sample position, sample identity, color of test-period stimuli and direction of test-period stimuli as factors. Color selective neurons showed significant modulations (p<0.01) of their activity (100 to 350 ms after stimulus onset) for at least one of the 3 following factors: 1) color of test stimuli during AttIN and distractor during AttOUT, 2) interaction between sample identity and color of test-period stimuli, or 3) interaction between sample position and color of test-period stimuli.

The slope of a linear regression fitting the neuronal response to each color quantified each neuron’s color tuning. Yellow and red corresponded to values 1 and 8 of the X-axis respectively. The amplitude of color tuning shifts was assessed by subtracting the slopes of linear regressions during AIN and BIN and during AOUT and BOUT.
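As an illustration of this slope-based measure, the sketch below fits an ordinary least-squares line to eight mean responses and subtracts the slopes obtained for two sample conditions. The response values are invented, the helper name `tuning_slope` is hypothetical, and the sign convention (AIN minus BIN) is an assumption, since the text does not state the order of the subtraction.

```python
def tuning_slope(responses):
    """Least-squares slope of mean response against color index 1..len(responses)."""
    n = len(responses)
    xs = range(1, n + 1)  # 1 = yellow, 8 = red when 8 colors are given
    mean_x = sum(xs) / n
    mean_y = sum(responses) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, responses))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

# Invented mean firing rates (sp/s) for the 8 colors, yellow (1) to red (8).
resp_ain = [10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0]  # slope of 1.0
resp_bin = [12.0, 12.5, 13.0, 13.5, 14.0, 14.5, 15.0, 15.5]  # slope of 0.5

# Assumed sign convention: slope during sample A minus slope during sample B.
shift_in = tuning_slope(resp_ain) - tuning_slope(resp_bin)
print(round(shift_in, 3))  # 0.5
```

The same computation applied to AOUT and BOUT responses would give the shift for the unattended location.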

Population color tuning curves shown in Figure 4A were built by averaging the normalized neuronal activity to each color. For each neuron, we divided its response to each of the 8 colors during AIN and BIN (100 to 350 ms time window) by the maximum response from these 16 conditions. Similarly, the response to each of the 8 colors during AOUT and BOUT was divided by the maximum response of these 16 conditions.

Direction selectivity

We tested two different methods to define direction selectivity. We first used the previously described 4-way ANOVA. Direction selective neurons showed significant modulations (100 to 350 ms after stimulus onset) (p<0.01) to at least one of the 3 following conditions: 1) direction of test-period stimuli, 2) interaction between sample identity and the direction of test-period stimuli, 3) interaction between sample position and the direction of test-period stimuli. The selection criteria permitted the following analyses on the same pool of neurons during AttIN and AttOUT but did not account for specificities of circular data.

Therefore, we also defined direction selectivity using direction vectors coupled with permutation tests. This method is a more accurate way to describe selectivity for circular data and also ensures a balanced number of presentations of each direction for each of the 4 conditions.

The preferred direction of each neuron during AIN, BIN, AOUT and BOUT were quantified independently by computing directional vectors defined by the following equation:

Where FR(i) is the mean firing rate of the neuron to the ith direction; [0 X] and [0 Y] are the Cartesian coordinates of the direction vector. For population vectors, individual vectors were not normalized by their firing rates, as is traditionally done in studies of the motor system. For each neuron, we compared the amplitudes of direction vectors and of a null direction vector. Direction vectors: we first computed each neuron’s direction vector during each condition using a bootstrap-based analysis similar to the one used in a previous study (Ibos and Freedman, 2014). From each pool of trials corresponding to each direction, we sampled with replacement ni trials for the ith direction. We computed the preferred direction during AIN, AOUT, BIN and BOUT trials (Pref-dirAIN, Pref-dirAOUT and Pref-dirBIN, Pref-dirBOUT) based on those re-sampled trials. This procedure, repeated 1000 times, resulted in 1000 vectors for each of the 4 conditions. Null direction vector: we computed each neuron’s null direction vector using permutations across directions. For each condition, trials from each direction were randomly picked with replacement and reassigned to any of the 8 directions. This resulted in homogeneous responses to each direction, which were used to compute 4 null direction vectors (null-dirAIN, null-dirAOUT and null-dirBIN, null-dirBOUT). The number of trials for each direction was balanced so that direction vectors and null direction vectors were constructed using the exact same number of trials. This procedure was performed 1000 times for each of the four conditions.

Both procedures resulted in 4 distributions of 1000 vectors each. The amplitude of each direction vector was compared to the amplitudes of all of the paired null direction vectors (e.g. amplitude of Pref-dirAIN minus amplitude of null-dirAIN, 10⁶ comparisons). If more than 97.5% of pref-dirAIN minus null-dirAIN were positive, or 97.5% of pref-dirBIN minus null-dirBIN were positive (p≤0.05), this neuron was considered direction selective during AttIN. Similarly, if more than 97.5% of pref-dirAOUT minus null-dirAOUT or 97.5% of pref-dirBOUT minus null-dirBOUT were positive (p≤0.05), this neuron was considered direction selective during AttOUT.
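The comparison between bootstrapped and null direction vectors can be sketched as follows. This is an illustrative reading of the procedure, not the original code. Several elements are assumptions: the vector is taken as the firing-rate-weighted sum of unit vectors (a standard form, used here because the equation is not reproduced in this text), the eight directions are placed at 0°, 45°, ..., 315°, the null is built by drawing from the pooled trials of all directions, and the simulated neuron and all function names are hypothetical. The example uses 200 iterations rather than 1000 to keep it fast.

```python
import math
import random

DIRECTIONS_DEG = [0, 45, 90, 135, 180, 225, 270, 315]  # assumed stimulus angles

def vector_amplitude(mean_rates):
    """Length of the sum of unit vectors weighted by mean firing rate (assumed form)."""
    x = sum(fr * math.cos(math.radians(d)) for fr, d in zip(mean_rates, DIRECTIONS_DEG))
    y = sum(fr * math.sin(math.radians(d)) for fr, d in zip(mean_rates, DIRECTIONS_DEG))
    return math.hypot(x, y)

def mean(values):
    return sum(values) / len(values)

def bootstrap_amplitudes(trials_per_dir, n_i, n_iter, rng):
    """Resample n_i trials with replacement within each direction."""
    out = []
    for _ in range(n_iter):
        means = [mean([rng.choice(pool) for _ in range(n_i)]) for pool in trials_per_dir]
        out.append(vector_amplitude(means))
    return out

def null_amplitudes(trials_per_dir, n_i, n_iter, rng):
    """Draw trials from the pooled data so that direction labels carry no information."""
    pooled = [rate for pool in trials_per_dir for rate in pool]
    out = []
    for _ in range(n_iter):
        means = [mean([rng.choice(pooled) for _ in range(n_i)]) for _ in trials_per_dir]
        out.append(vector_amplitude(means))
    return out

def fraction_positive(pref, null):
    """Fraction of all pref-minus-null pairings that are positive."""
    return sum(p > q for p in pref for q in null) / (len(pref) * len(null))

rng = random.Random(0)

def simulated_rate(direction):
    """Hypothetical neuron tuned to 90 deg with Gaussian trial-to-trial noise."""
    d = abs(direction - 90) % 360
    d = min(d, 360 - d)
    return 10.0 + 20.0 * math.exp(-0.5 * (d / 50.0) ** 2) + rng.gauss(0.0, 3.0)

trials = [[simulated_rate(d) for _ in range(15)] for d in DIRECTIONS_DEG]
pref = bootstrap_amplitudes(trials, n_i=15, n_iter=200, rng=rng)
null = null_amplitudes(trials, n_i=15, n_iter=200, rng=rng)

frac = fraction_positive(pref, null)
is_selective = frac > 0.975  # criterion described in the text
print(is_selective)
```

With 1000 iterations per distribution, `fraction_positive` evaluates the 10⁶ pairings mentioned above.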

Significance of feature-tuning shifts

Significance of direction tuning shifts was defined using the same permutation method as the one described in a previous study (Ibos and Freedman, 2014).

Conventional statistics such as the t-test or Wilcoxon test are not suited for circular data. Thus, equality of the means of circular data was tested using a parametric Hotelling test for paired circular data (Zar, 1984).

Decimating activity

We tested the impact of the overall difference in the level of neuronal activity between AttIN and AttOUT on the amplitude of FBA modulations. To do so, we decimated the response of neurons during AttIN to their level during AttOUT. For each neuron, we first computed a ratio (R) of the averaged response to all test stimuli during AttOUT over the averaged response to all test stimuli during AttIN (450 ms window). For each AttIN trial, we then randomly removed from the respective spike train a number of action potentials which corresponded to the rounded product of 1-R with the number of action potentials for this trial. For example, if one neuron had a mean firing rate to all test stimuli of 100 sp/s during AttIN and a mean firing rate of 75 sp/s during AttOUT, 1/4 of the action potentials of each AttIN trial were randomly removed.
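A minimal sketch of the decimation step is given below. The function name `decimate_trial` and the simulated spike train are assumptions; the firing rates (100 and 75 sp/s) and the 450 ms window follow the example in the text. Python's built-in `round` is used for the rounding step, which is an assumption about how ties were handled.

```python
import random

def decimate_trial(spike_times, ratio, rng):
    """Randomly drop round((1 - ratio) * n_spikes) spikes from one trial."""
    n_remove = round((1.0 - ratio) * len(spike_times))
    removed = set(rng.sample(range(len(spike_times)), n_remove))
    return [t for i, t in enumerate(spike_times) if i not in removed]

rng = random.Random(1)

mean_in, mean_out = 100.0, 75.0  # sp/s, from the example in the text
ratio = mean_out / mean_in       # R = 0.75, so 1 - R = 0.25

# Simulated AttIN trial: 45 spikes in a 450 ms window (100 sp/s).
trial = sorted(rng.uniform(0.0, 0.45) for _ in range(45))
kept = decimate_trial(trial, ratio, rng)
print(len(trial), len(kept))  # 45 34
```

Here 0.25 × 45 = 11.25 rounds to 11 removed spikes, leaving 34. Spike order is preserved because spikes are filtered in place rather than reshuffled.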

Support vector machine classifier

We trained and tested support vector machine classifiers (Chang and Lin, 2011) to decode 1) the identity of the sample stimulus based on the response to test stimuli and 2) the match status of the direction of stimuli located inside neurons’ RF. Analyses were performed independently for AttIN and AttOUT based on the activity of the entire pool of recorded LIP neurons (N=74). Global levels of activity during AttIN and AttOUT were equated using the decimating procedure described previously. Sample identity: Training sets of test-period data were built by randomly picking with replacement 70 trials from the pool of AIN trials, and 70 trials from the pool of BIN trials. Testing sets were built by picking with replacement 30 trials, different from training trials, for each of the same conditions. Match status: Training sets of trials were built by randomly picking with replacement 70 trials from the pool of direction-1 (direction A, excluding color A) during AIN trials (direction match), 70 trials from the pool of direction-5 (direction B, excluding color B) during BIN trials (direction match), 70 trials from the pool of direction-1 (direction A) during BIN trials (non-match) and 70 trials from the pool of direction-5 (direction B) during AIN trials (non-match). Testing sets were built by picking with replacement 30 trials, different from training trials, for each of the same conditions.

Each of these procedures was repeated 1,000 times, and classifiers were considered to perform above chance (50% for sample identity, 25% for match status of test stimuli’s direction) if the accuracy of the decoder was higher than chance level for more than 950/1,000 iterations (p<0.05). A similar approach was used during AttOUT. Significance of the difference of accuracy between AttIN and AttOUT was assessed by computing all the combinatory differences between the 1,000 accuracy values during AttIN and the 1,000 accuracy values during AttOUT (10⁶ comparisons). If more than 95% of these differences were positive, accuracy was considered significantly higher during AttIN compared to AttOUT (p<0.05).
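The two significance criteria described above can be sketched without the classifier itself. In the example below the accuracy values are simulated rather than decoded from neuronal data, and the function names are hypothetical; only the criteria (more than 950 of 1,000 iterations above chance, and more than 95% of the 10⁶ pairwise differences positive) come from the text.

```python
import random

def above_chance(accuracies, chance, criterion=0.95):
    """True if accuracy exceeds chance on more than `criterion` of iterations."""
    frac = sum(a > chance for a in accuracies) / len(accuracies)
    return frac > criterion

def fraction_positive_differences(acc_in, acc_out):
    """Fraction of all AttIN-minus-AttOUT pairings that are positive."""
    n_pos = sum(a > b for a in acc_in for b in acc_out)
    return n_pos / (len(acc_in) * len(acc_out))

rng = random.Random(2)

# Simulated decoder accuracies for 1,000 iterations per condition (invented values).
acc_in = [rng.gauss(0.80, 0.03) for _ in range(1000)]
acc_out = [rng.gauss(0.60, 0.03) for _ in range(1000)]

print(above_chance(acc_in, chance=0.5))
print(above_chance(acc_out, chance=0.5))

frac = fraction_positive_differences(acc_in, acc_out)  # 1,000 x 1,000 pairings
print(frac > 0.95)
```

Comparing every AttIN value with every AttOUT value avoids assuming any pairing between iterations of the two conditions.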

Two-layer integration model

The integration model consists of two neuronal layers whose structure has been described in a previous study (Ibos and Freedman, 2014). We test how interactions between SBA and FBA modulations of cortical visual areas like V4 and MT (simulated in this model by 360 L1 neurons) influence feature and spatial tuning of LIP neurons (L2 neurons).

Each of 1000 second-layer (L2) neurons received weighted inputs from 360 neurons of the first layer (L1). Each L1 neuron’s direction tuning function (Tun1i) (equation (1)) was a Gaussian distribution centered on its preferred direction µ with a 50° standard deviation (σ). Tun1i amplitudes were modulated by both SBA spacegain (two values randomly picked, independently during attIN (sp = 1) and attOUT (sp = 0), from uniform distributions (U) and held constant for each L1 neuron of the same iteration; equation (2)) and FBA featgain (360° wide Gaussian distribution centered on the relevant direction (θ; 90° or 270°) with a standard deviation of 45°; equation (3)). Lower and higher bounds of featgain (which modulates neurons tuned to the un-attended and attended direction on most of the 1000 iterations) were randomly picked between 0.85 and 1 (lower bound) and 0.95 and 1.25 (higher bound). Int (uniform distribution), which changed the amplitude of featgain, represents interactions between SBA and FBA. In the case SBA and FBA were independent, Int was set to 1 for each iteration. During passive viewing, spacegain was equal to 0 and featgain was equal to 1 for all layer one neurons.

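(1) [equation not recoverable from the source text]

Where:

- sp: position of attention (sp = 1 during attIN; sp = 0 during attOUT).
- Θ: attended direction (Θ = 270° during A trials; Θ = 90° during B trials).
- α: direction of the observed stimulus (ranged from 1 to 360°).

(2) [equation not recoverable from the source text]

(3) [equation not recoverable from the source text]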

Feature tuning of L2 neurons depended on the linear integration of L1 inputs and followed equation (4):

$$\mathrm{Tun2}(\alpha) = \sum_{i=1}^{360} W(i)\,\mathrm{Tun1}_i(\alpha) \qquad (4)$$

Tun2 is the direction tuning of L2 neurons after linear integration of the weighted inputs from the 360 neurons of L1. W(i) is the synaptic weight of the connection between the ith neuron of L1 and the L2 neuron.

For each of the 1000 L2 neurons, the standard deviation of the distribution of the synaptic weights (W) was randomly assigned between 1 and 360°. We also randomly defined the center of the distribution of the connection weights (between 1 and 360°), which consequently modulated the preferred direction of L2 neurons.
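The bottom-up integration described in this section can be illustrated for a single L2 neuron with the simplified sketch below. It is not the model used in the study. The description above fixes the number of L1 neurons, their Gaussian tuning (σ = 50°), the 45° width of the feature gain and the linear weighted sum. Everything else is an assumption made for illustration: the feature gain is applied multiplicatively with fixed bounds of 0.9 and 1.2 (values chosen inside the ranges given above), the weight profile is Gaussian with a fixed center (180°) and width (60°), and the spatial gain and the Int term are omitted.

```python
import math

N_L1 = 360        # L1 neurons with preferred directions 1..360 deg
SIGMA_L1 = 50.0   # standard deviation of L1 direction tuning (deg)
SIGMA_FBA = 45.0  # standard deviation of the feature-gain profile (deg)

def circ_dist(a, b):
    """Smallest angular distance between two directions, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

def gauss(dist, sigma):
    return math.exp(-0.5 * (dist / sigma) ** 2)

def feature_gain(pref, attended, low=0.9, high=1.2):
    """ASSUMED gain profile: `low` far from the attended direction, `high` at it."""
    if attended is None:  # passive viewing: gain of 1 for every L1 neuron
        return 1.0
    return low + (high - low) * gauss(circ_dist(pref, attended), SIGMA_FBA)

def l2_tuning(weights, attended=None):
    """Linear integration of weighted, gain-modulated L1 responses."""
    gains = [feature_gain(p, attended) for p in range(1, N_L1 + 1)]
    tuning = []
    for stim in range(1, 361):
        total = 0.0
        for pref in range(1, N_L1 + 1):
            l1 = gains[pref - 1] * gauss(circ_dist(pref, stim), SIGMA_L1)
            total += weights[pref - 1] * l1
        tuning.append(total)
    return tuning

def preferred_direction(tuning):
    """Direction of the response-weighted vector sum, in degrees (0..360)."""
    x = sum(r * math.cos(math.radians(s)) for s, r in enumerate(tuning, start=1))
    y = sum(r * math.sin(math.radians(s)) for s, r in enumerate(tuning, start=1))
    return math.degrees(math.atan2(y, x)) % 360.0

# Hypothetical L2 neuron: Gaussian weight profile centered on 180 deg, 60 deg wide.
weights = [gauss(circ_dist(p, 180.0), 60.0) for p in range(1, N_L1 + 1)]

pref_passive = preferred_direction(l2_tuning(weights))
pref_attend_270 = preferred_direction(l2_tuning(weights, attended=270.0))
print(round(pref_passive, 1), round(pref_attend_270, 1))
```

Under these assumptions the L2 neuron's preferred direction moves from the center of its weight profile toward the attended direction, although attention acts only on L1. This is the kind of feature-tuning shift the bottom-up account relies on.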

Additional controls

We tested whether direct modulations on L2 neurons could account for our results. In these simulations, the response of L2 neurons follows equation (5):

(5) [equation not recoverable from the source text]

Where Tun2c corresponds to direction tuning of L2 neurons during these controls; the value of featgain2 depends on the distance between the attended direction and the preferred direction of L2 neurons during passive viewing (when spacegain =0 ; featgain = 1; featgain2 = 1).

Acknowledgments

We thank N. Buerkle for animal training, N.Y. Masse for analysis advice, and the staff of the University of Chicago Animal Resources Center for expert veterinary assistance. We also thank N.Y. Masse, K. Mohan and B.E. Verhoef for comments and discussion on earlier versions of this manuscript. This work was supported by NIH R01 EY019041 and NSF CAREER award 0955640. Additional support was provided by The McKnight Endowment Fund for Neuroscience.

Author Contributions

Conceptualization, Methodology, Investigation, Formal Analysis: G.I.; Writing, Visualization, Project Administration: G.I. and D.J.F.; Supervision, Resources, Funding Acquisition: D.J.F.

References

- Armstrong KM, Moore T. Rapid enhancement of visual cortical response discriminability by microstimulation of the frontal eye field. Proc. Natl. Acad. Sci. U. S. A. 2007;104:9499–9504. doi: 10.1073/pnas.0701104104.

- Armstrong KM, Chang MH, Moore T. Selection and maintenance of spatial information by frontal eye field neurons. J. Neurosci. 2009;29:15621–15629. doi: 10.1523/JNEUROSCI.4465-09.2009.

- Asaad WF, Santhanam N, McClellan S, Freedman DJ. High-performance execution of psychophysical tasks with complex visual stimuli in MATLAB. J. Neurophysiol. 2013;109:249–260. doi: 10.1152/jn.00527.2012.

- Astrand E, Ibos G, Duhamel J-R, Ben Hamed S. Differential Dynamics of Spatial Attention, Position, and Color Coding within the Parietofrontal Network. J. Neurosci. 2015;35:3174–3189. doi: 10.1523/JNEUROSCI.2370-14.2015.

- Averbeck BB, Latham PE, Pouget A. Neural correlations, population coding and computation. Nat. Rev. Neurosci. 2006;7:358–366. doi: 10.1038/nrn1888.

- Bichot NP, Heard MT, DeGennaro EM, Desimone R. A Source for Feature-Based Attention in the Prefrontal Cortex. Neuron. 2015 doi: 10.1016/j.neuron.2015.10.001.

- Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annu. Rev. Neurosci. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823.

- Carandini M, Heeger DJ. Normalization as a canonical neural computation. Nat. Rev. Neurosci. 2012;13:51–62. doi: 10.1038/nrn3136.

- Carrasco M. Visual attention: the past 25 years. Vision Res. 2011;51:1484–1525. doi: 10.1016/j.visres.2011.04.012.

- Chang C-C, Lin C-J. LIBSVM: a Library for Support Vector Machines. ACM Trans. Intell. Syst. Technol. 2011;2:27:1–27:27.

- Cohen MR, Maunsell JHR. Attention improves performance primarily by reducing interneuronal correlations. Nat. Neurosci. 2009;12:1594–1600. doi: 10.1038/nn.2439. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen MR, Maunsell JHR. Using neuronal populations to study the mechanisms underlying spatial and feature attention. Neuron. 2011;70:1192–1204. doi: 10.1016/j.neuron.2011.04.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Compte A, Wang X-J. Tuning curve shift by attention modulation in cortical neurons: a computational study of its mechanisms. Cereb. Cortex. 2006;16:761–778. doi: 10.1093/cercor/bhj021. [DOI] [PubMed] [Google Scholar]

- Connor CE, Preddie DC, Gallant JL, Van Essen DC. Spatial Attention Effects in Macaque Area V4. J. Neurosci. 1997;17:3201–3214. doi: 10.1523/JNEUROSCI.17-09-03201.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science. 1992;255:90–92. doi: 10.1126/science.1553535. [DOI] [PubMed] [Google Scholar]

- Ekstrom LB, Roelfsema PR, Arsenault JT, Bonmassar G, Vanduffel W. Bottom-up dependent gating of frontal signals in early visual cortex. Science. 2008;321:414–417. doi: 10.1126/science.1153276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fanini A, Assad Ja. Direction selectivity of neurons in the macaque lateral intraparietal area. J. Neurophysiol. 2009;101:289–305. doi: 10.1152/jn.00400.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Freedman DJ, Assad JA. Experience-dependent representation of visual categories in parietal cortex. Nature. 2006;443:85–88. doi: 10.1038/nature05078. [DOI] [PubMed] [Google Scholar]

- Gregoriou GG, Gotts SJ, Zhou H, Desimone R. High-frequency, long-range coupling between prefrontal and visual cortex during attention. Science. 2009;324:1207–1210. doi: 10.1126/science.1171402. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ben Hamed S, Duhamel J-R, Bremmer F, Graf W. Visual receptive field modulation in the lateral intraparietal area during attentive fixation and free gaze. Cereb. Cortex. 2002;12:234–245. doi: 10.1093/cercor/12.3.234. [DOI] [PubMed] [Google Scholar]

- Hayden BY, Gallant JL. Time course of attention reveals different mechanisms for spatial and feature-based attention in area V4. Neuron. 2005;47:637–643. doi: 10.1016/j.neuron.2005.07.020. [DOI] [PubMed] [Google Scholar]

- Hayden BY, Gallant JL. Combined effects of spatial and feature-based attention on responses of V4 neurons. Vision Res. 2009;49:1182–1187. doi: 10.1016/j.visres.2008.06.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Herrington TM, Assad JA. Neural activity in the middle temporal area and lateral intraparietal area during endogenously cued shifts of attention. J. Neurosci. 2009;29:14160–14176. doi: 10.1523/JNEUROSCI.1916-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hussar CR, Pasternak T. Common rules guide comparisons of speed and direction of motion in the dorsolateral prefrontal cortex. J. Neurosci. 2013;33:972–986. doi: 10.1523/JNEUROSCI.4075-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ibos G, Freedman DJ. Dynamic Integration of Task-Relevant Visual Features in Posterior Parietal Cortex. Neuron. 2014;83:1468–1480. doi: 10.1016/j.neuron.2014.08.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ibos G, Duhamel J-R, Ben Hamed S. A Functional Hierarchy within the Parietofrontal Network in Stimulus Selection and Attention Control. J. Neurosci. 2013;33:8359–8369. doi: 10.1523/JNEUROSCI.4058-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ipata AE, Gee AL, Goldberg ME. Feature attention evokes task-specific pattern selectivity in V4 neurons. Proc. Natl. Acad. Sci. U. S. A. 2012;109:16778–16785. doi: 10.1073/pnas.1215402109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lennert T, Martinez-Trujillo J. Strength of response suppression to distracter stimuli determines attentional-filtering performance in primate prefrontal neurons. Neuron. 2011;70:141–152. doi: 10.1016/j.neuron.2011.02.041. [DOI] [PubMed] [Google Scholar]

- Lennert T, Martinez-Trujillo JC. Prefrontal neurons of opposite spatial preference display distinct target selection dynamics. J. Neurosci. 2013;33:9520–9529. doi: 10.1523/JNEUROSCI.5156-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leonard CJ, Balestreri A, Luck SJ. Interactions between space-based and feature-based attention. J. Exp. Psychol. Hum. Percept. Perform. 2015;41:11–16. doi: 10.1037/xhp0000011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martinez-Trujillo JC, Treue S. Feature-based attention increases the selectivity of population responses in primate visual cortex. Curr. Biol. 2004;14:744–751. doi: 10.1016/j.cub.2004.04.028.

- Masse NY, Herrington TM, Cook EP. Spatial attention enhances the selective integration of activity from area MT. J. Neurophysiol. 2012;108:1594–1606. doi: 10.1152/jn.00949.2011.

- Maunsell JHR, Treue S. Feature-based attention in visual cortex. Trends Neurosci. 2006;29:317–322. doi: 10.1016/j.tins.2006.04.001.

- McAdams CJ, Maunsell JH. Attention to both space and feature modulates neuronal responses in macaque area V4. J. Neurophysiol. 2000;83:1751–1755. doi: 10.1152/jn.2000.83.3.1751.

- Meister MLR, Hennig JA, Huk AC. Signal multiplexing and single-neuron computations in lateral intraparietal area during decision-making. J. Neurosci. 2013;33:2254–2267. doi: 10.1523/JNEUROSCI.2984-12.2013.

- Moore T, Armstrong KM. Selective gating of visual signals by microstimulation of frontal cortex. Nature. 2003;421:370–373. doi: 10.1038/nature01341.

- Noudoost B, Moore T. Control of visual cortical signals by prefrontal dopamine. Nature. 2011;474:372–375. doi: 10.1038/nature09995.

- Patzwahl DR, Treue S. Combining spatial and feature-based attention within the receptive field of MT neurons. Vision Res. 2009;49:1188–1193. doi: 10.1016/j.visres.2009.04.003.

- Reynolds JH, Pasternak T, Desimone R. Attention increases sensitivity of V4 neurons. Neuron. 2000;26:703–714. doi: 10.1016/s0896-6273(00)81206-4.

- Rigotti M, Barak O, Warden MR, Wang X-J, Daw ND, Miller EK, Fusi S. The importance of mixed selectivity in complex cognitive tasks. Nature. 2013. doi: 10.1038/nature12160.

- Rishel CA, Huang G, Freedman DJ. Independent Category and Spatial Encoding in Parietal Cortex. Neuron. 2013;77:969–979. doi: 10.1016/j.neuron.2013.01.007.

- Saalmann YB, Pigarev IN, Vidyasagar TR. Neural mechanisms of visual attention: how top-down feedback highlights relevant locations. Science. 2007;316:1612–1615. doi: 10.1126/science.1139140.

- Sarma A, Masse NY, Wang X-J, Freedman DJ. Task-specific versus generalized mnemonic representations in parietal and prefrontal cortices. Nat. Neurosci. 2015. doi: 10.1038/nn.4168.

- Schafer RJ, Moore T. Selective attention from voluntary control of neurons in prefrontal cortex. Science. 2011;332:1568–1571. doi: 10.1126/science.1199892.

- Toth LJ, Assad JA. Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature. 2002;415:165–168. doi: 10.1038/415165a.

- Tremblay S, Pieper F, Sachs A, Martinez-Trujillo J. Attentional filtering of visual information by neuronal ensembles in the primate lateral prefrontal cortex. Neuron. 2015;85:202–215. doi: 10.1016/j.neuron.2014.11.021.

- Wardak C, Ibos G, Duhamel J-R, Olivier E. Contribution of the monkey frontal eye field to covert visual attention. J. Neurosci. 2006;26:4228–4235. doi: 10.1523/JNEUROSCI.3336-05.2006.

- Womelsdorf T, Anton-Erxleben K, Pieper F, Treue S. Dynamic shifts of visual receptive fields in cortical area MT by spatial attention. Nat. Neurosci. 2006;9:1156–1160. doi: 10.1038/nn1748.

- Zar JH. Biostatistical analysis. Englewood Cliffs, NJ: Prentice-Hall; 1984.

- Zhou H, Desimone R. Feature-based attention in the frontal eye field and area V4 during visual search. Neuron. 2011;70:1205–1217. doi: 10.1016/j.neuron.2011.04.032.

Associated Data
