Abstract

This study examined three methodological approaches to defining the critical elements of Illness Management and Recovery (IMR), a curriculum-based approach to recovery. Sixty-seven IMR experts rated the criticality of 16 IMR elements on three dimensions: defining, essential, and impactful. Three elements (Recovery Orientation, Goal Setting and Follow-up, and IMR Curriculum) met all criteria for essential and defining and all but the most stringent criteria for impactful. Practitioners should consider competence in these areas as preeminent. The remaining 13 elements met varying criteria for essential and impactful. Findings suggest that criticality is a multifaceted construct, necessitating judgments about model elements across different criticality dimensions.

Keywords: critical elements, fidelity, Illness management and recovery, implementation, severe mental illness

Psychosocial interventions can ideally be distilled to a finite set of critical elements (Bickman, 1987; Bond, 1991) and implementation of a model is facilitated by well-defined elements that provide a clear picture of the ‘core components’ versus the ‘adaptable periphery’ (Damschroder et al., 2009). However, these elements are often not self-evident. Identification of critical elements is a precursor for measuring program fidelity (also known as treatment integrity; Carroll et al., 2007; Keith, Hopp, Subramanian, Wiitala, & Lowery, 2010; Kitson, Harvey, & McCormack, 1998; Mihalic, 2004; Perepletchikova, Treat, & Kazdin, 2007). Treatment fidelity is a key indicator of construct validity in research (Cook, Campbell, & Day, 1979), and higher fidelity has been associated with better outcomes across a variety of interventions (e.g., Baer et al., 2007; Durlak & DuPre, 2008; Dusenbury, Brannigan, Falco, & Hansen, 2003; Haddock et al., 2001; McGrew & Griss, 2005; however, see Bond & Salyers (2004) and Webb, Derubeis, and Barber (2010) for counterexamples).

Previous efforts have outlined and demonstrated the process of identifying critical elements. Bond, Williams, et al. (2000) provide a comprehensive review and step-by-step guide. These authors suggest that the identification of critical elements differs based on the degree to which the model has been previously articulated, with poorly defined models calling for inductive methods and well-articulated models calling for confirmatory methods. Regardless of the method of identification, criticality of elements should be validated. Some methods include model specification based on norms in the field, expert evaluation of the likelihood of success of various hypothetical descriptions of different treatment programs (Sechrest, West, Phillips, Redner, & Yeaton, 1979), and “component analysis,” in which fidelity data are examined to determine which elements directly impact outcomes (Carroll et al., 2007; McGrew, Bond, Dietzen, & Salyers, 1994). Another increasingly popular method is expert ratings of element criticality (Marty, Rapp, & Carlson, 2001; McEvoy, Scheifler, & Frances, 1999; McGrew & Bond, 1995; McGuire & Bond, 2011; Schaedle & Epstein, 2000). This method holds certain practical advantages. Expert opinions may be easier to collect than actual practice in the field, are not as burdensome for respondents as vignette ratings, and do not require data on element adherence paired with outcome data. Moreover, expert panels have built-in content validity and can be leveraged to integrate both research and clinical experience (based on the experts polled).

Although previous works (Bond, Evans, Salyers, Williams, & Kim, 2000; Carroll et al., 2007; Mowbray, Holder, Teague, & Bybee, 2003) provide substantial guidance, the authors of such works do not address methodological decisions encountered when specifying critical elements, most notably the basis on which an element should be considered “critical.” Bond and colleagues (Bond, Evans, et al., 2000) suggest defining model elements as critical if they are judged as “important, critical, or essential.” Similarly, Carroll and colleagues (2007) define critical elements as those that “are prerequisite if the intervention is to have its desired effect” (p. 5). However, predicating “criticality” of an element on its direct relationship with patient outcomes is problematic. Practically, it presupposes the identification of putative model elements and measurement of those elements in conjunction with patient outcomes, a scenario rarely existing in research (let alone practice). Moreover, these approaches to defining criticality fail to account for and assess other important factors that can have major impacts on judgments of criticality. For example, critical elements of a model may vary depending on the intended outcome (e.g., hospital use, quality of life, cost effectiveness), the intended client (e.g., physical or intellectual co-morbidities, ethnic or cultural factors), the setting (e.g., rural vs. urban, country), or the rater (e.g., administrators, clinicians, family members, clients). In addition, there may be cases in which an element should be considered critical to a program model even though it is not believed to directly affect outcomes.

Dimensions of Criticality

An element may be defining of the model, i.e., the element is intrinsic to the definition of the intervention, but may not be directly associated with improved consumer outcomes. Consider eye-movement desensitization and reprocessing (EMDR), an evidence-based treatment for trauma (Davidson & Parker, 2001). The hallmark of this intervention is the use of eye movements concurrent with trauma exposure. Initially, eye movement was believed to have a theoretical link to outcomes (Shapiro & Solomon, 1995); however, subsequent research (Davidson & Parker, 2001) has shown that the eye movement component is not directly linked to patient outcomes and that effects were reducible to exposure training. Nonetheless, eye movements are the hallmark of EMDR—this element is both defining of the intervention (i.e., “eye movement” is in the name) and is unique to EMDR. Despite the lack of link between eye movements and outcomes, eye movements should be considered a “critical” element of EMDR.

Other elements may be considered essential to the implementation of a model in that some threshold level must be present in order for the intervention to be considered successfully implemented. For instance, the element may accord with important values or support other elements which do impact outcomes. Regarding the former, the inclusion of peer specialists on assertive community treatment teams is considered critical because peer inclusion is consistent with recovery-oriented services; however, there is inconsistent evidence as to the impact of peer inclusion on outcomes (McGrew et al., 1994; Wright-Berryman, McGuire, & Salyers, 2011). As an example of a critical element with indirect impact, the inclusion of a credentialed employment specialist or registered nurse (versus a licensed practical nurse) may not be directly linked with consumer outcomes in assertive community treatment (McGrew et al., 1994), but these elements are still considered critical to the model, in part because they help guarantee quality staff who can address consumer needs.

Generally, elements that are impactful, i.e., directly affect consumer outcomes, are considered critical to the model. However, just because an element is linked with desired outcomes does not mean it is uniquely part of the model of interest. Consider the role of common factors in model specification. In the case of psychotherapy models, certain factors (e.g., therapeutic alliance) are common to most models and directly affect outcomes (Lambert & Barley, 2001); however, it is unclear whether these elements should be considered “critical” elements of each of these models. Alternatively, some elements are specifically prohibited in some models, despite their association with consumer outcomes in other programs. For instance, although rapid-job search is associated with better employment rates in supported employment (Bond, 2004), this element would be prohibited in other vocational rehabilitation models such as transitional employment (Koop et al., 2004).

Clinical Context and Intervention

IMR is a standardized psychosocial intervention developed to help people with severe mental illness acquire knowledge and skills to better manage their illness, as well as set and achieve personal recovery goals (Mueser & Gingerich, 2002). Created as part of the Substance Abuse and Mental Health Services Administration’s National Implementing Evidence-Based Practices (EBP) Project (Drake et al., 2001), IMR uses a manualized curriculum, workbook, and implementation toolkit. The third edition of IMR contains 11 modules: recovery strategies, mental illness practical facts, stress-vulnerability model, social support, medication management, relapse prevention, coping with stress, coping with problems and symptoms, getting needs met in the mental health system, drug and alcohol use, and physical health. Each module is covered over the course of several sessions using a combination of cognitive-behavioral techniques, motivation-based strategies, and interactive educational techniques. Substantial evidence, including three randomized-controlled trials (Färdig, Lewander, Melin, Folke, & Fredriksson, 2011; Hasson-Ohayon, Roe, & Kravetz, 2007; Levitt et al., 2009) and six other quasi-experimental trials (see McGuire et al. (2014) for a review), has supported the effectiveness of IMR in increasing illness self-management and reducing psychiatric symptoms.

Descriptions of IMR elements are available from several sources, including a review by the creators of evidence supporting model elements (Mueser, Corrigan, et al., 2002), a program-level fidelity scale (Mueser, Gingerich, Bond, Campbell, & Williams, 2002), and a clinician competency scale—the IMR treatment integrity scale (IT-IS, McGuire et al., 2012). The latter is the most recent enumeration of model elements and was the basis for the current study (see Table 1 for model elements). The scale incorporated elements listed in the previous two sources, was compiled following research on the full model (as opposed to the others, which were constructed prior to research on IMR as a package), and its reliability has been examined. As noted above, given the relatively clear articulation of the IMR model, the current study used a confirmatory method to establish expert agreement regarding critical elements.

Table 1.

IMR Model Elements with Corresponding Survey Description

| Model Element | Definition |
|---|---|
| Therapeutic Relationship | Practitioner(s)’ ability to develop rapport with client(s) and display warmth and empathy. |
| Recovery Orientation | Practitioner(s) displaying an attitude consistent with “a process of change through which individuals improve their health and wellness, live a self-directed life, and strive to reach their full potential” (SAMHSA, 2012). |
| Group Member Involvement | Practitioner(s) engaging all group members in the group’s activities. Note: This element is only relevant when IMR is administered in a group format. |
| Enlisting Mutual Support | Practitioner(s) encouraging positive interactions among group members that conveys emotional or instrumental support. Note: This element is only relevant when IMR is administered in a group format. |
| Involvement of Significant Other | Practitioner(s) eliciting participation of significant other(s) in the IMR process. Significant others are people the client views as an important person in her or his life (excluding direct-care staff members). Involvement is defined by either a) attending the IMR sessions or b) the consumer reporting that the person intentionally helped them work toward an IMR goal or reviewed the IMR materials. |
| Structure and Efficient Use of Time | Practitioner(s) following a standard structure for each IMR session, the ability to adequately cover all components of IMR sessions, and stay with the agenda planned for the session. |
| IMR Curriculum | Practitioner(s) basing the session on a structured curriculum that is related to one of the 10 IMR topics. Raters should independently consider two factors: |
| Goal Setting and Follow-up | The process by which clients conceptualize a desired future state and regularly assess progress toward that end state. This process includes initially establishing the goal, checking progress toward the goal, and evaluating the current relevance and importance of the goal. |
| Weekly Action Plan | Practitioner(s) regular collaboration with consumer(s) to develop explicit and intentional assignments. Assignments could include action steps: weekly activities aimed at progressing toward measurable benchmarks of goal progress. Weekly assignments could also include homework assignments: weekly activities aimed at learning and applying the information and skills presented during the session. In certain circumstances, action steps and homework may overlap. |
| Action Plan Review | Practitioner(s) regularly reviewing the last session’s assignment (could be an action step towards a goal or homework based on the curriculum). |
| Motivational Enhancement | Practitioner(s) regularly using clinical strategies designed to enhance client motivation for change. |
| Educational | Practitioner(s) regularly applying techniques that are effective for adult learning. |
| Cognitive-Behavioral | Practitioner(s) using therapeutic techniques aimed at helping the client change their thinking and/or behavior in order to reduce symptoms and/or the impact of symptoms. |
| Relapse Prevention | Relapse prevention training refers to: Identification of environmental triggers, identification of early warning signs, developing a plan to manage early warning signs, developing a plan for managing stress, and involving significant others in the plan. |
| Behavioral Tailoring | Practitioner(s) teaching client(s) how to modify their environment to help clients incorporate taking medication into their daily lives. |
| Coping Skills | Practitioner(s) helping client(s) identify and develop ways to reduce the frequency, intensity, and/or functional impact of their symptoms. |

The Current Study

The current study examined the critical elements of IMR through an expert survey to achieve three aims. First, the study aimed to explicate and solidify the critical elements of IMR. Second, in doing so, it evaluated the content validity of the IT-IS. Third, the study examined the implications of various methodological decisions inherent in an expert survey.

The identification and validation of the “critical” elements of an intervention can facilitate program implementation, guide responsible adaptation to local context, and serve as a necessary building block for fidelity assessment. Although previous work has provided guidance on the identification of critical elements, there are no concrete recommendations for selecting among the varying criteria by which criticality can be established. Therefore, we use the example of an established evidence-based practice and report on an expert survey of the critical elements of illness management and recovery (IMR). Our survey differed from previous efforts in its use of differing dimensions (e.g., essential, defining, impactful) by which experts were asked to rate “criticality,” thus allowing us to explore the conceptual impact of such methodological decisions on model formulation and to examine factors impacting expert agreement.

Methods

Sampling

IMR experts were identified via multiple sources in two stages. The first stage targeted research experts, defined as individuals who had published peer-reviewed articles or obtained research grants on IMR. These research experts were identified using several strategies. We identified 93 published experts using PsycINFO, Web of Science, Medline, Google Scholar, and the reference lists of identified IMR articles. Accurate contact information was available for 70 (75.3%) of the experts. Grant recipients were identified through the Research Portfolio Online Reporting Tools Expenditures and Results (RePORTER) tool from the National Institutes of Health, the ClinicalTrials.gov website, and the Research and Development list of funded studies from the Department of Veterans Affairs. After eliminating grant recipients who were co-investigators on the current study or previously identified, five additional experts were identified and contacted, for a total of 75 research experts with viable contact information.

The second stage targeted clinical experts, defined as individuals with at least two years of IMR experience in a setting serving individuals with severe mental illness. Research experts were asked to nominate up to 12 additional experts, three within each of the following categories: trainers/consultants, IMR supervisors, IMR clinicians, and IMR peer providers (i.e., individuals in recovery from a severe mental illness who are providing services). Responding clinical experts were also asked to nominate additional clinical experts. Altogether, survey respondents and IMR research groups known to the research team identified 46 trainers/consultants, 22 supervisors, 12 clinicians, and nine peer providers. Of those identified, we were able to contact 45 trainers/consultants (97.8%), 20 supervisors (90.9%), 10 clinicians (83.3%), and eight peer providers (88.9%). Only respondents agreeing or strongly agreeing with the statement “I am knowledgeable enough about Illness Management and Recovery to rate the criticality of IMR elements” were included in the final sample.

Of 158 research or clinical experts invited, 73 (46.2%) did not respond to multiple invitations, nine (5.7%) did not agree they were “knowledgeable enough about Illness Management and Recovery to rate the criticality of IMR elements,” and nine (5.7%) accessed the survey but completed less than 25% of the questions, resulting in a final sample of 67 respondents (42.4% of 158). Specifically, 33 out of 75 (44.0%) published experts, 21 out of 45 (46.7%) trainers/consultants, seven out of 20 (35.0%) supervisors, two out of 10 (20.0%) clinicians, and four out of eight (50.0%) peer providers were included. Of the total sample, 41 (61.2%) reported ever providing IMR services, while 26 (38.8%) identified as non-providers.
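The response-rate arithmetic in this paragraph can be re-derived from the reported counts. The following is a minimal sketch, not part of the original analysis; the dictionary and function names are illustrative, and only the counts themselves come from the text.

```python
# Counts as reported in the Sampling section: experts invited and
# experts included in the final sample, by expert type.
invited = {
    "published experts": 75,
    "trainers/consultants": 45,
    "supervisors": 20,
    "clinicians": 10,
    "peer providers": 8,
}
included = {
    "published experts": 33,
    "trainers/consultants": 21,
    "supervisors": 7,
    "clinicians": 2,
    "peer providers": 4,
}


def pct(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


total_invited = sum(invited.values())
total_included = sum(included.values())

# Inclusion rate within each expert type, and overall.
rates = {group: pct(included[group], invited[group]) for group in invited}
overall_rate = pct(total_included, total_invited)

for group, rate in rates.items():
    print(f"{group}: {included[group]}/{invited[group]} = {rate}%")
print(f"overall: {total_included}/{total_invited} = {overall_rate}%")
```

Recomputing from counts in this way is a quick consistency check on the group-level percentages and on the invited and included totals.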

Procedures

A research assistant sent identified experts an email with a hyperlink to the survey on SurveyMonkey.com, along with contact information for questions or comments. Emails also provided a brief rationale for why the expert was being contacted and a brief overview of the survey. Once participants clicked on the hyperlink, they were directed to an informed consent page that was required to proceed to the survey questions. Participants completed surveys in approximately one hour. One month after the initial email was sent, a study investigator sent a reminder email to any experts who had not completed the survey. Personalized reminder emails were also sent to any IMR experts personally known to the study investigators. The study took place from July 2012 to November 2012. All procedures for the study were approved by the Indiana University Institutional Review Board.

Measures

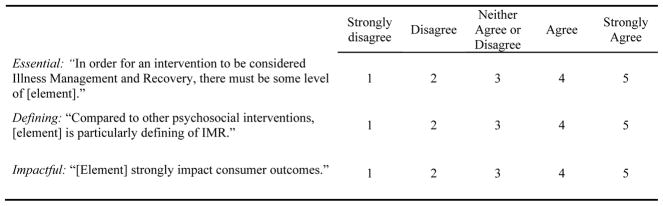

The survey consisted of screening and background questions, as well as questions regarding the criticality of 16 IMR elements. Potential IMR elements were taken from the IMR treatment integrity scale (IT-IS; McGuire et al., 2012). For each of the 16 potential IMR elements and one distractor item, experts rated criticality on three dimensions (Figure 1): essential (some level of the element is necessary in order for an intervention to be considered IMR), defining (in comparison with other interventions, the element defines IMR), and impactful (the element is thought to have a direct link to outcomes). The distractor item (“exploring childhood experiences”) was included in order to guard against positive response bias. Finally, participants were asked to indicate additional elements of IMR that may be critical.

Figure 1.

Survey Questions

Analyses

We calculated mean ratings, percent “agreeing” (rating of 4 or 5), and percent “strongly agreeing” (rating of 5) with each criticality question for each element across all expert types. As a minimum threshold for further consideration, we first examined whether each element received a mean rating greater than that of the distractor item. We then examined three more stringent criteria (mean rating ≥4.0; >75% agreeing [McGrew & Bond, 1995; McGuire & Bond, 2011]; and >50% strongly agreeing) within each criticality dimension (essential, defining, and impactful). To test gross differences between dimensions, we examined mean differences (averaged across elements) using a series of three paired t-tests. To examine the association between dimensions, we computed Pearson’s correlations between dimensions within each element. We also conducted exploratory analyses of differences between types of experts on each dimension (averaged across elements) using independent t-tests and within each element using repeated-measures MANOVAs. Because the analyses were exploratory, we used a criterion of p < .05. All analyses were conducted in SPSS version 21.
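The threshold logic described above can be illustrated with a short sketch. This is not the SPSS procedure used in the study. The function name, the example ratings, and the distractor mean of 2.0 are all hypothetical, and a 1 to 5 agreement scale is assumed from the definitions of “agreeing” and “strongly agreeing.”

```python
from statistics import mean


def criticality_flags(ratings, distractor_mean):
    """Apply the four criticality thresholds to one element on one dimension.

    ratings: list of integer ratings (assumed 1-5 scale), one per expert.
    distractor_mean: mean rating of the distractor item on the same dimension.
    """
    n = len(ratings)
    avg = mean(ratings)
    pct_agree = 100 * sum(r >= 4 for r in ratings) / n      # rating of 4 or 5
    pct_strongly = 100 * sum(r == 5 for r in ratings) / n   # rating of 5
    return {
        "above_distractor": avg > distractor_mean,  # most liberal threshold
        "mean_ge_4": avg >= 4.0,
        "agree_gt_75": pct_agree > 75,
        "strongly_agree_gt_50": pct_strongly > 50,  # most stringent threshold
    }


# Hypothetical ratings from ten experts (not study data).
example_ratings = [5, 5, 4, 4, 5, 3, 5, 4, 5, 5]
flags = criticality_flags(example_ratings, distractor_mean=2.0)
print(flags)
```

Returning one flag per threshold, rather than a single verdict, mirrors the study's approach of reporting which criteria each element meets instead of collapsing them into one decision.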

Results

Sample

Sixty-seven expert respondents from 10 countries, including 17 states in the US, completed the survey. Most respondents (80.3%) identified as providers/supervisors, while fewer (59.0%) reported experience with an IMR research project. Self-reported characteristics of the final sample can be found in Table 2.

Table 2.

Self-Reported Respondent Characteristics (n = 67)

| Variable | Frequency Endorsed | Percentage Endorsed |
|---|---|---|
| Role/Experience with IMR<sup>a,b</sup> | | |
| Provider/supervisor | 49 | 80.3 |
| Trainer/consultant | 38 | 62.3 |
| Research project | 36 | 59.0 |
| Experience with IMR (mean years, SD)<sup>c</sup> | 6.5 | 4.2 |
| IMR treatment setting<sup>a,d</sup> | | |
| Outpatient | 52 | 91.2 |
| Inpatient | 20 | 35.1 |
| Residential | 18 | 31.6 |

<sup>a</sup> Respondents were able to choose multiple responses.

<sup>b</sup> n = 61.

<sup>c</sup> n = 56.

<sup>d</sup> n = 57.

Methodological Considerations

Average ratings (across elements) were lower for defining (mean = 3.78, s.d. = .62) than for essential (mean = 4.25, s.d. = .33; t = 6.89, d.f. = 66, p < .001) and impactful (mean = 4.24, s.d. = .32; t = −7.06, d.f. = 66, p < .001). Average ratings for essential and impactful did not differ. We sought to explore the degree to which ratings of criticality dimensions (essential, defining, and impactful) were associated with one another. We examined Pearson’s correlations between each dimension within each of the 16 elements. The correlations between ratings within each element were significant (p < .05), with two exceptions: Item 8 (Goal Setting and Follow-up), where defining and impactful were correlated at a trend level (r = .21, p = .09); and Item 12 (Educational Techniques), where the impactful dimension was not significantly correlated with essential (r = .18, p = .18) or defining (r = .17, p = .21).
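For readers less familiar with the paired comparisons reported here, the statistic can be sketched as the mean of the within-respondent differences divided by its standard error, with n − 1 degrees of freedom (66 for 67 respondents). The sketch below uses the Python standard library rather than SPSS; the function name and the five pairs of scores are hypothetical, not study data.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for x versus y.

    x and y hold one score per respondent, in the same respondent order.
    """
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # sample SD of the differences
    return mean(diffs) / standard_error, n - 1


# Hypothetical per-respondent averages across elements (not study data).
essential_avg = [4.5, 4.0, 4.25, 4.75, 4.0]
defining_avg = [4.0, 3.5, 4.0, 4.0, 3.75]

t_stat, df = paired_t(essential_avg, defining_avg)
print(f"t = {t_stat:.2f}, d.f. = {df}")
```

The sign of t depends only on the order in which the two dimensions are subtracted, which is why a positive and a negative value can both indicate that defining ratings were the lower of the pair.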

We next sought to examine whether different thresholds for determining criticality impacted which elements would be considered critical. We first examined criticality as determined by the liberal threshold of a mean rating greater than the distractor item; all elements (except the distractor) met this threshold for each dimension of criticality. We considered three additional thresholds: a mean rating ≥4.0, >75% of experts “agreeing” (i.e., rating of 4 or 5), and >50% of experts “strongly agreeing” (i.e., rating of 5; Table 3). The mean rating ≥4.0 and >75% of experts “agreeing” thresholds yielded almost identical results; in only one case (impactful dimension of Involvement of Significant Others) did an element meeting one threshold not meet the other. The threshold of >50% of experts “strongly agreeing” was much more conservative; in cases where both other thresholds were met (30 dimension-element pairs), only 13 (43.3%) of those met this threshold.
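The tally of dimension-element pairs can be reproduced from the entries in Table 3. The sketch below is a hand transcription of which pairs have values listed under each threshold; the variable names are illustrative, and element names follow the table's row labels.

```python
# Elements meeting thresholds, transcribed from Table 3.
core = ["Recovery Orientation", "Goal Setting and Follow-up", "IMR Curriculum"]
shared = [
    "Relapse Prevention", "Motivational Enhancement", "Therapeutic Relationship",
    "Coping Skills", "Educational", "Cognitive-Behavioral",
    "Action Plan Review", "Weekly Action Planning", "Enlisting Mutual Support",
]

# Pairs meeting BOTH the mean >= 4.0 and the >75% agreeing thresholds.
both_met = {
    "essential": core + shared
    + ["Structure and Efficient Use of Time", "Group Member Involvement"],
    "defining": core,
    "impactful": core + shared + ["Behavioral Tailoring"],
}

# Pairs meeting the >50% strongly agreeing threshold.
strongly_met = {
    "essential": core + ["Relapse Prevention", "Motivational Enhancement"],
    "defining": core,
    "impactful": [
        "Recovery Orientation", "Goal Setting and Follow-up",
        "Relapse Prevention", "Motivational Enhancement",
        "Therapeutic Relationship",
    ],
}

pairs_both = {(dim, el) for dim, els in both_met.items() for el in els}
pairs_strong = {(dim, el) for dim, els in strongly_met.items() for el in els}

n_both = len(pairs_both)
n_strong = len(pairs_strong & pairs_both)  # stringent threshold among those pairs
share = round(100 * n_strong / n_both, 1)
print(f"{n_strong} of {n_both} pairs ({share}%) met the most stringent threshold")
```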

Table 3.

Critical Elements Based on Threshold and Criteria

| Element | N | Essential<sup>a</sup>: Mean (SD), ≥ 4.0 | Essential<sup>a</sup>: % Agree, > 75% | Essential<sup>a</sup>: % Strongly Agree, > 50% | Defining<sup>a</sup>: Mean (SD), ≥ 4.0 | Defining<sup>a</sup>: % Agree, > 75% | Defining<sup>a</sup>: % Strongly Agree, > 50% | Impactful<sup>a</sup>: Mean (SD), ≥ 4.0 | Impactful<sup>a</sup>: % Agree, > 75% | Impactful<sup>a</sup>: % Strongly Agree, > 50% |
|---|---|---|---|---|---|---|---|---|---|---|
| Essential, Defining, and Impactful Elements Across all Criteria | | | | | | | | | | |
| Recovery Orientation | 67 | 4.7 (0.5) | 100.0 | 71.6 | 4.3 (0.9) | 85.1 | 55.2 | 4.6 (0.5) | 98.5 | 56.7 |
| Goal Setting and Follow-up | 61 | 4.7 (0.4) | 100.0 | 73.8 | 4.4<sup>b</sup> (0.9) | 91.7<sup>b</sup> | 55.0 | 4.7 (0.5) | 100.0 | 65.6 |
| Essential and Defining Elements Across all Criteria | | | | | | | | | | |
| IMR Curriculum | 61 | 4.6 (0.5) | 100.0 | 62.3 | 4.5 (0.7) | 93.4 | 62.3 | 4.2 (0.7) | 86.9 | - |
| Essential and Impactful Elements Across all Criteria | | | | | | | | | | |
| Relapse Prevention | 59 | 4.5 (0.6) | 98.3 | 54.2 | - | - | - | 4.5 (0.5) | 98.3 | 55.9 |
| Motivational Enhancement | 61 | 4.4 (0.6) | 91.8 | 50.8 | - | - | - | 4.5 (0.6) | 95.1 | 50.8 |
| Impactful Elements Across all Criteria | | | | | | | | | | |
| Therapeutic Relationship | 67 | 4.4 (0.6) | 94.0 | - | - | - | - | 4.5 (0.7) | 95.5 | 56.7 |
| Elements that Met Some Essential and Impactful Criteria | | | | | | | | | | |
| Coping Skills | 59 | 4.5 (0.5) | 100.0 | - | - | - | - | 4.5 (0.5) | 98.3 | - |
| Educational | 60 | 4.4<sup>c</sup> (0.6) | 94.8<sup>c</sup> | - | - | - | - | 4.2 (0.6) | 91.7 | - |
| Cognitive-Behavioral | 59 | 4.4 (0.7) | 93.2 | - | - | - | - | 4.4 (0.6) | 96.6 | - |
| Action Plan Review | 61 | 4.3<sup>b</sup> (0.7) | 91.7<sup>b</sup> | - | - | - | - | 4.2 (0.7) | 90.2 | - |
| Weekly Action Planning | 61 | 4.1 (0.8) | 90.0 | - | - | - | - | 4.2 (0.8) | 88.5 | - |
| Enlisting Mutual Support | 67 | 4.0 (0.8) | 80.6 | - | - | - | - | 4.2 (0.6) | 89.6 | - |
| Elements that Met Some Essential Criteria | | | | | | | | | | |
| Structure and Efficient Use of Time | 62 | 4.3<sup>b</sup> (0.6) | 95.1<sup>b</sup> | - | - | - | - | - | - | - |
| Group Member Involvement | 67 | 4.0 (0.8) | 80.6 | - | - | - | - | - | - | - |
| Elements that Met Some Impactful Criteria | | | | | | | | | | |
| Behavioral Tailoring | 59 | - | - | - | - | - | - | 4.0 (0.6) | 84.7 | - |
| Involvement of Significant Other(s) | 63 | - | - | - | - | - | - | 4.0 (0.9) | - | - |
| Failed to Meet Any Criteria | | | | | | | | | | |
| Childhood Experiences<sup>d</sup> | 35 | - | - | - | - | - | - | - | - | - |

<sup>a</sup> Data are listed when an element met criteria.

<sup>b</sup> Missing one response.

<sup>c</sup> Missing two responses.

<sup>d</sup> Distractor item.

Critical Elements Analyses

Defining elements

Three elements were considered defining of IMR, as well as essential and impactful (by any of the thresholds). Recovery Orientation and Goal Setting and Follow-up met all three thresholds for all three dimensions. IMR Curriculum met all thresholds for all dimensions, except that only 32.8% strongly agreed it is impactful.

Essential and impactful elements

Two elements met all thresholds for essential and impactful: Relapse Prevention and Motivational Enhancement. Six additional items were considered essential and impactful based on all criteria except the most stringent (>50% Strongly Agree): Coping Skills, Educational Techniques, Cognitive-Behavioral Techniques, Action Plan Review, Weekly Action Planning, and Enlisting Mutual Support. One additional item, Therapeutic Relationship, met all thresholds for essential and impactful except only 47.8% strongly agreed that it was essential.

Essential elements

Two items were considered essential by most thresholds: Structure and Efficient Use of Time, and Group Member Involvement; however, most experts did not strongly agree that these items were essential, with only 34.4% and 26.9% strongly agreeing, respectively.

Impactful elements

Behavioral Tailoring met two thresholds for impactful and Involvement of Significant Others met one threshold for impactful.

Non-critical item

The distractor item did not meet any of the thresholds for any dimensions considered.

Differences between IMR Providers and Non-Providers

We next examined whether experts who self-reported as IMR providers differed from self-reported non-providers in their assessment of criticality. Providers and non-providers did not differ on their average ratings across elements on essential, defining, or impactful. Repeated-measures MANOVAs showed that providers rated Therapeutic Relationship (mean = 4.31, s.d. = .49) and IMR Curriculum (mean = 4.54, s.d. = .41) higher across dimensions than non-providers (mean = 3.83, s.d. = .61, F(1,65) = 11.49, p = .001 for Therapeutic Relationship; mean = 4.23, s.d. = .50, F(1,59) = 7.05, p = .01 for IMR Curriculum).

Additional Elements

Several additional elements were suggested. Most suggestions (n = 12) concerned additional clinical or teaching methods; however, there was no consensus regarding which to include. Some respondents (n = 10) suggested an element regarding whole health/physical wellness, others (n = 6) suggested an element regarding community/social integration, and a few (n = 3) suggested a new emphasis on an element already included.

Discussion

The current study had three purposes: to further solidify the critical elements of IMR, to demonstrate how certain methodological decisions may affect the determination of criticality, and to establish content validity for the IT-IS fidelity scale. Regarding the critical elements of IMR, three elements received clear and universal support: Recovery Orientation, Goal Setting and Follow-up, and IMR Curriculum. These elements were the only items to meet thresholds for defining and also received high ratings as essential and impactful. These results make logical and theoretical sense. Recovery is the guiding philosophy of IMR (Mueser et al., 2006); in the seminal work on IMR, Mueser and colleagues (2006) emphasize that IMR is intended to teach self-management skills in the service of forwarding recovery. Similarly, Goal Setting and Follow-up was highlighted as a unique aspect of IMR. Moreover, substantial research has indicated the importance of goal setting on outcomes (Locke & Latham, 2002; Michalak & Holtforth, 2006). Finally, while the use of a structured curriculum is not unique to IMR (e.g., social skills training; Bellack, 2004), the IMR curriculum is the most tangible manifestation of IMR. The curriculum provides psychoeducational content and worksheets, which facilitate the provision of other elements such as goal setting and coping skills training.

Regarding critical elements, the defining elements are the elements that set IMR apart from other psychosocial interventions. In clinical practice, if a clinician is utilizing these elements (i.e., is setting goals with consumers, using the IMR curriculum, and emphasizing a recovery philosophy), an informed observer would readily recognize this intervention as IMR. However, these elements are not sufficient for the successful implementation of IMR; they are supported by nine essential elements, which, although not defining of IMR (in comparison to other psychosocial interventions), are necessary at least at some level for the intervention to be considered IMR. To take one example, if the informed observer observed a group intervention that included goal setting and was recovery oriented, but there was no use of educational techniques, the observer would likely not consider IMR to have been truly implemented. This, of course, presupposes a dichotomous view of implementation, based on presence or absence of an element, rather than quality of an element, which may not be accurate. It may be more accurate to say that as more essential and impactful elements are implemented to threshold, the intervention cumulatively approximates IMR. Once the threshold level of educational techniques is met, though, does additional usage increase the “IMRness” of the program? The dose-response relationship between elements and fidelity (and/or outcomes) remains an unanswered question. It should be emphasized that this question is not purely academic. As practitioners in the field seek to implement elements of EBPs, they are often faced with restricted resources (e.g., session time, and time and money for training and other implementation supports). Therefore, empirical guidance regarding return on investment in supporting clinicians reaching threshold competency on an element versus exceeding threshold could assist stakeholders in allocation of these precious resources.

Two elements, behavioral tailoring and the involvement of significant others, are notable in that they were considered impactful on outcomes, but were not considered essential or defining of IMR. These two elements have strong empirical support demonstrating their effects on important outcomes such as hospitalization rate (Dixon et al., 2001), engagement in treatment (Kurtz, Rose, & Wexler, 2011), and medication adherence (Velligan et al., 2008). While these elements may lead to better consumer outcomes, experts appeared to believe that high-fidelity IMR can be implemented without these elements. This differentiation speaks directly to the definition of criticality and argues against an isolated definition of criticality based on direct impact on consumer outcomes. Our results indicate experts view involvement of significant others and behavioral tailoring for medication management to be important elements of psychosocial rehabilitation and positive outcomes, but not necessary for IMR fidelity.

IMR providers did not generally differ from non-providers in their assessment of element criticality averaged across items; that is, the two groups did not systematically vary in their overall rating of importance. Had the two groups differed on average across elements, it would have indicated that clinicians and researchers may respond differently to the questions as asked. Instead, our results support the notion that providers and non-providers can be queried with the same questions and that their responses can be aggregated. Providers rated only two items, therapeutic alliance and IMR curriculum, as more important than non-providers did, suggesting that for providers, IMR curriculum is differentially important and discernible from the other defining and essential IMR elements.

Regarding the content validity of the IT-IS, as noted above, most items on the IT-IS received a high level of support from experts using one or more of the criticality criteria. The only exceptions were the involvement of significant others and behavioral tailoring elements, which could be considered for exclusion. However, given that these elements were part of the original formulation of IMR (Mueser et al., 2006) and have a documented impact on recovery, caution is merited before the items are excluded from the scale.

Limitations

Several limitations of the current study should be noted. First, the study focused on one intervention, IMR, and methodological conclusions should be viewed with caution and replicated in the context of other interventions. Second, although we elicited opinions of experts, we did not engage in a consensus process in which experts engage in dialogue and produce one unified document that they all endorse. Such a process should take place before elements are added or removed from the model. Additionally, a portion of experts chose not to participate in our survey; therefore, important perspectives may have been missed.

Implications for Practice

Providers are often faced with competing clinical demands within a session. These results provide some guidance regarding relative priorities for IMR services. Namely, maintaining a recovery orientation, engaging in goal setting and follow-up, and using the IMR curriculum should be considered the touchstones of IMR practice. In our opinion, competence in other elements generally supports competence in these three core areas. For instance, goal setting is facilitated by skillful application of motivational enhancement strategies and coping skills training. However, when in doubt, IMR practitioners are well served by maintaining focus on these three elements.

Summary and Future Directions

Our results indicate that criticality is a multifaceted and complex construct which includes judgments regarding how emblematic an element is for a given program in comparison to other similar programs (defining), what elements must be present at least at some level (essential), as well as what elements are thought to directly impact outcomes (impactful). Direct impact on outcomes is not adequate, in isolation, to merit an element’s inclusion as a critical element—there must be some additional conceptual tie to the model. In the case of IMR, Involvement of Significant Others and Behavioral Tailoring should be reviewed. Experts did not consider these elements as essential or defining; however, they have a well-established empirical and theoretical relationship to consumer outcomes. The inclusion or exclusion of an element in a program’s critical elements, and resulting fidelity measurement, also depends on the intended usage of the scale. Scales intended to outline necessary components for successful implementation would likely be more comprehensive and include elements common across programs. In contrast, if the intent is to differentiate one program from another, perhaps measuring inclusion or absence of defining elements would be adequate.

Acknowledgments

This work was supported in part by a grant from the VA HSR&D: RRP 11-017 and the National Institute of Mental Health (R21 MH096835). Dr. McGuire is supported by a grant from VA RR&D: D0712-W and by the Department of Veterans Affairs, Veterans Health Administration, Health Services Research and Development Service (CIN 13-416).

Footnotes

Disclosures: The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs or NIMH.

Previous Presentation: Preliminary results from this project were presented at the 65th Institute on Psychiatric Services in Philadelphia, Pennsylvania.

Contributor Information

Alan B. McGuire, Research Health Scientist at the Roudebush VA Medical Center, Indianapolis, IN and Clinical Research Scientist at the ACT Center of Indiana, Indiana University–Purdue University Indianapolis, Indianapolis, IN

Lauren Luther, Doctoral graduate student in clinical psychology at Indiana University–Purdue University Indianapolis, Indianapolis, IN

Dominique White, Doctoral graduate student in clinical psychology at Indiana University–Purdue University Indianapolis, Indianapolis, IN

Laura M. White, Doctoral graduate student in clinical psychology at Indiana University–Purdue University Indianapolis, Indianapolis, IN

John McGrew, Professor at Indiana University–Purdue University Indianapolis, Indianapolis, IN

Michelle P. Salyers, Associate Professor of Psychology, Director of the Clinical Psychology Program, and Co-Director of the ACT Center, at Indiana University–Purdue University Indianapolis, Indianapolis, IN

References

- Baer JS, Ball SA, Campbell BK, Miele GM, Schoener EP, Tracy K. Training and fidelity monitoring of behavioral interventions in multi-site addictions research. Drug and Alcohol Dependence. 2007;87(2–3):107–118. doi: 10.1016/j.drugalcdep.2006.08.028.

- Bellack AS. Skills training for people with severe mental illness. Psychiatric Rehabilitation Journal. 2004;27(4):375. doi: 10.2975/27.2004.375.391.

- Bickman L. The functions of program theory. In: Bickman L, editor. Using Program Theory in Evaluation. San Francisco: Jossey-Bass; 1987. pp. 5–18.

- Bond GR. Variations in an assertive outreach model. New Directions for Mental Health Services. 1991;52:65–80. doi: 10.1002/yd.23319915207.

- Bond GR. Supported employment: evidence for an evidence-based practice. Psychiatric Rehabilitation Journal. 2004;27(4):345–359. doi: 10.2975/27.2004.345.359.

- Bond GR, Evans L, Salyers MP, Williams J, Kim HW. Measurement of fidelity in psychiatric rehabilitation. Mental Health Services Research. 2000;2(2):75–87. doi: 10.1023/a:1010153020697.

- Bond GR, Salyers MP. Prediction of outcome from the Dartmouth Assertive Community Treatment Fidelity Scale. CNS Spectrums. 2004;9(12):932–942. doi: 10.1017/s1092852900009792.

- Bond GR, Williams J, Evans L, Salyers M, Kim HW, Sharpe H, Leff HS. Psychiatric Rehabilitation Fidelity Toolkit. Cambridge, MA: Human Services Research Institute; 2000.

- Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implementation Science. 2007;2(40):1–9. doi: 10.1186/1748-5908-2-40.

- Cook TD, Campbell DT, Day A. Quasi-experimentation: Design & analysis issues for field settings. Boston, MA: Houghton Mifflin; 1979.

- Damschroder LJ, Aron DC, Keith RE, Kirsch SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4. doi: 10.1186/1748-5908-4-50.

- Davidson PR, Parker KC. Eye movement desensitization and reprocessing (EMDR): a meta-analysis. Journal of Consulting and Clinical Psychology. 2001;69(2):305. doi: 10.1037//0022-006x.69.2.305.

- Dixon L, McFarlane WR, Lefley H, Lucksted A, Cohen M, Falloon I, … Sondheimer D. Evidence-based practices for services to families of people with psychiatric disabilities. Psychiatric Services. 2001;52(7):903–910. doi: 10.1176/appi.ps.52.7.903.

- Drake RE, Goldman HH, Leff HS, Lehman AF, Dixon L, Mueser KT, Torrey WC. Implementing evidence-based practices in routine mental health service settings. Psychiatric Services. 2001;52(2):179–182. doi: 10.1176/appi.ps.52.2.179.

- Durlak JA, DuPre EP. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41(3–4):327–350. doi: 10.1007/s10464-008-9165-0.

- Dusenbury L, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Education Research. 2003;18(2):237–256. doi: 10.1093/her/18.2.237.

- Färdig R, Lewander T, Melin L, Folke F, Fredriksson A. A randomized controlled trial of the illness management and recovery program for persons with schizophrenia. Psychiatric Services. 2011;62(6):606–612. doi: 10.1176/appi.ps.62.6.606.

- Haddock G, Devane S, Bradshaw T, McGovern J, Tarrier N, Kinderman P, … Harris N. An investigation into the psychometric properties of the cognitive therapy scale for psychosis (CTS-Psy). Behavioural and Cognitive Psychotherapy. 2001;29:221–233.

- Hasson-Ohayon I, Roe D, Kravetz S. A randomized controlled trial of the effectiveness of the illness management and recovery program. Psychiatric Services. 2007;58(11):1461–1466. doi: 10.1176/appi.ps.58.11.1461.

- Keith RE, Hopp FP, Subramanian U, Wiitala W, Lowery JC. Fidelity of implementation: development and testing of a measure. Implementation Science. 2010;5(1):99. doi: 10.1186/1748-5908-5-99.

- Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence based practice: a conceptual framework. Quality in Health Care. 1998;7(3):149–158. doi: 10.1136/qshc.7.3.149.

- Koop JI, Rollins AL, Bond GR, Salyers MP, Dincin J, Kinley T, … Marcelle K. Development of the DPA Fidelity Scale: Using fidelity to define an existing vocational model. Psychiatric Rehabilitation Journal. 2004;28(1):16–24. doi: 10.2975/28.2004.16.24.

- Kurtz MM, Rose J, Wexler BE. Predictors of participation in community outpatient psychosocial rehabilitation in schizophrenia. Community Mental Health Journal. 2011;47(6):622–627. doi: 10.1007/s10597-010-9343-z.

- Lambert MJ, Barley DE. Research summary on the therapeutic relationship and psychotherapy outcome. Psychotherapy: Theory, Research, Practice, Training. 2001;38(4):357. doi: 10.1037/0033-3204.38.4.357.

- Levitt AJ, Mueser KT, DeGenova J, Lorenzo J, Bradford-Watt D, Barbosa A, … Chernick M. Randomized Controlled Trial of Illness Management and Recovery in Multiple-Unit Supportive Housing. Psychiatric Services. 2009;60(12):1629–1636. doi: 10.1176/appi.ps.60.12.1629.

- Locke EA, Latham GP. Building a practically useful theory of goal setting and task motivation: A 35-year odyssey. American Psychologist. 2002;57(9):705–717. doi: 10.1037//0003-066x.57.9.705.

- Marty D, Rapp CA, Carlson L. The experts speak: the critical ingredients of strengths model case management. Psychiatric Rehabilitation Journal. 2001;24(3):214. doi: 10.1037/h0095090.

- McEvoy JP, Scheifler PL, Frances A. The expert consensus guideline series: Treatment of schizophrenia 1999. Journal of Clinical Psychiatry. 1999;60(Supplement 11):1–80.

- McGrew JH, Bond GR. Critical ingredients of assertive community treatment: Judgments of the experts. Journal of Mental Health Administration. 1995;22:113–125. doi: 10.1007/BF02518752.

- McGrew JH, Bond GR, Dietzen LL, Salyers MP. Measuring the fidelity of implementation of a mental health program model. Journal of Consulting and Clinical Psychology. 1994;62(4):670–678. doi: 10.1037//0022-006x.62.4.670.

- McGrew JH, Griss ME. Concurrent and Predictive Validity of Two Scales to Assess the Fidelity of Implementation of Supported Employment. Psychiatric Rehabilitation Journal. 2005;29(1):41–47. doi: 10.2975/29.2005.41.47.

- McGuire AB, Bond GR. Critical elements of the crisis intervention team model of jail diversion: An expert survey. Behavioral Sciences & the Law. 2011;29:81–94. doi: 10.1002/bsl.941.

- McGuire AB, Stull LG, Mueser KT, Santos M, Mook A, Rose N, … Salyers MP. Development and reliability of a measure of clinician competence in providing illness management and recovery. Psychiatric Services. 2012;63(8):772–778. doi: 10.1176/appi.ps.201100144.

- McGuire AB, Kukla M, Green A, Gilbride D, Mueser KT, Salyers MP. Illness management and recovery: A review of the literature. Psychiatric Services. 2014;65(2):171–179. doi: 10.1176/appi.ps.201200274.

- Michalak J, Holtforth MG. Where Do We Go From Here? The Goal Perspective in Psychotherapy. Clinical Psychology: Science and Practice. 2006;13(4):346–365. doi: 10.1111/j.1468-2850.2006.00048.x.

- Mihalic S. The importance of implementation fidelity. Report on Emotional and Behavioral Disorders in Youth. 2004;4:83–86, 99–105.

- Mowbray CT, Holder M, Teague GB, Bybee D. Fidelity criteria: Development, measurement, and validation. American Journal of Evaluation. 2003;24:315–340. doi: 10.1177/109821400302400303.

- Mueser KT, Corrigan PW, Hilton DW, Tanzman B, Schaub A, Gingerich S, … Herz MI. Illness management and recovery: A review of the research. Psychiatric Services. 2002;53:1272–1284. doi: 10.1176/appi.ps.53.10.1272.

- Mueser KT, Gingerich S, Bond GR, Campbell K, Williams J. Illness Management and Recovery Fidelity Scale. In: Mueser KT, Gingerich S, editors. Illness Management and Recovery Implementation Resource Kit. Rockville, MD: Center for Mental Health Services, Substance Abuse and Mental Health Services Administration; 2002.

- Mueser KT, Gingerich S. Illness Management and Recovery Implementation Resource Kit. Rockville, MD: Center for Mental Health Services, Substance Abuse and Mental Health Services Administration; 2002.

- Mueser KT, Meyer PS, Penn DL, Clancy R, Clancy DM, Salyers MP. The Illness Management and Recovery program: Rationale, development, and preliminary findings. Schizophrenia Bulletin. 2006;32(1):32–43. doi: 10.1093/schbul/sbl022.

- Perepletchikova F, Treat TA, Kazdin AE. Treatment integrity in psychotherapy research: analysis of the studies and examination of the associated factors. Journal of Consulting and Clinical Psychology. 2007;75(6):829–841. doi: 10.1037/0022-006X.75.6.829.

- SAMHSA. SAMHSA’s working definition of recovery: 10 guiding principles of recovery. Rockville, MD: Substance Abuse and Mental Health Services Administration; 2012. (DHHS Publication No. PEP12-RECDEF)

- Schaedle R, Epstein I. Specifying intensive case management: A multiple stakeholder approach. Mental Health Services Research. 2000;2:95–105. doi: 10.1023/a:1010157121606.

- Sechrest L, West SG, Phillips MA, Redner R, Yeaton W. Some neglected problems in evaluation research: Strength and integrity of treatments. Evaluation Studies Review Annual. 1979;4:15–35.

- Shapiro F, Solomon RM. Eye movement desensitization and reprocessing. Wiley Online Library; 1995.

- Velligan DI, Diamond PM, Mintz J, Maples N, Li X, Zeber J, … Miller AL. The use of individually tailored environmental supports to improve medication adherence and outcomes in schizophrenia. Schizophrenia Bulletin. 2008;34(3):483–493. doi: 10.1093/schbul/sbm111.

- Webb CA, DeRubeis RJ, Barber JP. Therapist adherence/competence and treatment outcome: A meta-analytic review. Journal of Consulting and Clinical Psychology. 2010;78(2):200–211. doi: 10.1037/a0018912.

- Wright-Berryman J, McGuire AB, Salyers MP. A review of consumer-provided services on Assertive Community Treatment and intensive case management teams: Implications for future research and practice. Journal of the American Psychiatric Nurses Association. 2011;17(1):37–44. doi: 10.1177/1078390310393283.