Abstract

The aim of this study was to investigate the brain processes underlying emotions during natural music listening. To address this, we recorded high-density electroencephalography (EEG) from 22 subjects while presenting a set of individually matched whole musical excerpts varying in valence and arousal. Independent component analysis was applied to decompose the EEG data into functionally distinct brain processes. A k-means cluster analysis calculated on the basis of a combination of spatial (scalp topography and dipole location mapped onto the Montreal Neurological Institute brain template) and functional (spectra) characteristics revealed 10 clusters referring to brain areas typically involved in music and emotion processing, namely in the proximity of thalamic-limbic and orbitofrontal regions as well as at frontal, fronto-parietal, parietal, parieto-occipital, temporo-occipital and occipital areas. This analysis revealed that arousal was associated with a suppression of power in the alpha frequency range. On the other hand, valence was associated with an increase in theta frequency power in response to excerpts inducing happiness compared to sadness. These findings are partly compatible with the model proposed by Heller, arguing that the frontal lobe is involved in modulating valenced experiences (the left frontal hemisphere for positive emotions) whereas the right parieto-temporal region contributes to the emotional arousal.

Keywords: ICA, music-evoked emotions, theta, alpha, valence and arousal

Introduction

A considerable part of our everyday emotions is due to music listening (Juslin et al., 2008). Music, a cultural universal, serves social functions (Juslin and Laukka, 2003; Hagen and Bryant, 2003), and has the power to evoke emotions and influence moods (Goldstein, 1980; Sloboda, 1991; Sloboda et al., 2001; Baumgartner et al., 2006a,b). In fact, regulating these affective states is our main motivation for engaging with music (Panksepp, 1995; Juslin and Laukka, 2004; Thoma et al., 2011a,b). Affective research has already provided many valuable insights into the underlying mechanisms of music-evoked emotions. For example, there is consensus that specific limbic (e.g. nucleus accumbens and amygdala), paralimbic (e.g. insular and orbitofrontal cortex) and neocortical brain areas (e.g. fronto-temporal-parietal areas) contribute to music-evoked emotions that partly also underlie non-musical emotional experiences in everyday life (Blood et al., 1999; Koelsch, 2014). Pleasure experienced during music listening is associated with mesolimbic–striatal structures (Blood et al., 1999; Blood and Zatorre, 2001; Brown et al., 2004; Menon and Levitin, 2005; Salimpoor et al., 2011, 2013) also involved in experiencing pleasure in various reward-related behaviors such as sex (Pfaus et al., 1995; Aron et al., 2005; Komisaruk and Whipple, 2005), feeding (Hernandez and Hoebel, 1988; Berridge, 2003; Small et al., 2003) or even money handling (Knutson et al., 2001). In contrast, the amygdala (another limbic core structure) is mostly associated with negatively valenced emotions experienced during music listening (Koelsch et al., 2006; Mitterschiffthaler et al., 2007; Koelsch et al., 2008) as well as in response to a wide range of non-musical aversive stimuli (Phan et al., 2002).
However, these phylogenetically old circuits interact with neocortical areas (Zatorre et al., 2007; Salimpoor et al., 2013; Zatorre and Salimpoor, 2013), enabling the emergence of more complex and music-specific (so-called ‘aesthetic’; Scherer, 2004) emotions, such as the ones classified by the Geneva Emotional Music Scale (GEMS) (Zentner et al., 2008; Brattico and Jacobsen, 2009; Trost et al., 2012). Further agreement among researchers concerns the hemispheric lateralization of functions related to emotions, as provided by a great body of neuroimaging and clinical studies making frontal (Hughlings-Jackson, 1878; Davidson, 2004, 1998; Hagemann et al., 1998; Sutton and Davidson, 2000; Craig, 2005) or global lateralization a subject of discussion (Silberman and Weingartner, 1986; Henriques and Davidson, 1991; Meadows and Kaplan, 1994; Hagemann et al., 2003). In this context, it is important to remark that similar effects of lateralization also underlie music-evoked emotions. In fact, music-related studies using electroencephalography (EEG) have provided evidence indicating that the right frontal brain region preferentially contributes to arousal and negatively valenced emotions, whereas the left one contributes to positively valenced emotions (Schmidt and Trainor, 2001; Tsang et al., 2001; Altenmüller et al., 2002; Mikutta et al., 2012). Despite music’s effectiveness in evoking emotions and its closeness to everyday life, music is not the preferred stimulus material within affective research. To a certain extent, this reluctance is due to the idiosyncratic nature of musical experiences (Gowensmith and Bloom, 1997; Juslin and Laukka, 2004; Zatorre, 2005).
On the other hand, there is evidence indicating a certain stability of music-evoked emotional experiences across cultures (Peretz and Hébert, 2000; Trehub, 2003) in response to specific elementary musical structures such as the musical mode (major/minor) and tempo inducing happiness and sadness (Hevner, 1935, 1937; Peretz et al., 1998; Dalla Bella et al., 2001), or consonant (dissonant) music intervals inducing (un)pleasantness (Bigand et al., 1996; Trainor and Heinmiller, 1998; Zentner and Kagan, 1998). However, these physical features possess only negligible explanatory power considering the full variability of musical experiences among humans. Another crucial problem here refers to the fact that authentic music-evoked emotions unfold particularly over time (Koelsch et al., 2006; Sammler et al., 2007; Bachorik et al., 2009; Lehne et al., 2013; Jäncke et al., 2015), for example due to violation or confirmation of established expectancies (Meyer, 1956; Sloboda, 1991). Temporal characteristics and specific moments accounting for music-evoked emotions are not only reflected behaviorally (Grewe et al., 2007; Bachorik et al., 2009), but also in psychophysiological activity (Grewe et al., 2005; Grewe et al., 2007; Lundqvist et al., 2008; Grewe et al., 2009; Koelsch and Jäncke, 2015), and in brain activity (Koelsch et al., 2006; Lehne et al., 2013; Trost et al., 2015). Such temporal dynamics of emotional experiences require longer stimuli for experimental purposes, challenging research implementation especially in terms of classical event-related paradigms. Thus, alternative methods are indicated to more fully capture music-evoked emotions.

Independent component analysis (ICA) is a promising data-driven approach increasingly used to investigate brain states during real-world experiences. From complex brain activities, ICA allows one to ‘blindly’ determine distinct neural sources with independent time courses associated with features of interest while ensuring an optimal signal-to-noise ratio (Jutten and Herault, 1991; Makeig et al., 1996; Makeig et al., 1997; Makeig et al., 2000; Jung et al., 2001; Makeig et al., 2004; Lemm et al., 2006). So far, ICA has proven fruitful in gaining insights into natural music processing (Schmithorst, 2005; Sridharan et al., 2007; Lin et al., 2010; Cong et al., 2013; Cong et al., 2014; Lin et al., 2014), as well as in other real-world conditions such as resting state (Damoiseaux et al., 2006; Mantini et al., 2007; Jäncke and Alahmadi, 2015), natural film watching (Bartels and Zeki, 2004, 2005; Malinen et al., 2007) and the riddle of the cocktail party effect (Bell and Sejnowski, 1995).

By applying ICA in combination with high-density EEG, this study aims at examining the independent components (ICs) underlying music-evoked emotions. In particular, this study attempts to provide an ecologically valid setting for natural music listening by including whole music excerpts of sufficient length as experimental stimuli. Similar to previous music-related studies (Schubert, 1999; Schmidt and Trainor, 2001; Chapin et al., 2010; Lin et al., 2010), we analyzed music-evoked emotions in terms of two affective dimensions, namely scales representing valence and arousal. We manipulated musical experience by presenting different musical excerpts corresponding to different manifestations on these two dimensions. Subject-wise, we provided individual sets of stimuli in order to take into consideration the idiosyncratic nature of musical experiences. Despite the presentation of non-identical stimuli across subjects, we expected ICA to reveal functionally distinct EEG sources contributing to both affective dimensions.

Materials and methods

Participants

Twenty-two subjects (13 female, age range 19–30 years, M = 24.2, s.d. = 3.1) who generally enjoyed listening to music but were not actively engaged in making music for at least the past 5 years participated in this study; 29.4% of the subjects had never played a musical instrument. According to the Advanced Measures of Music Audition test (Gordon, 1989), the subjects ranked on average on the 56th percentile, indicating a musical aptitude corresponding to 56% of the non-musical population. At the time of the study as well as for the last 10 years, the subjects listened to music of various genres between 1 and 3 h per day. According to the Annett-Handedness-Questionnaire (Annett, 1970), all participants were consistently right-handed. Participants gave written consent in accordance with the Declaration of Helsinki and procedures approved by the local ethics committee and were paid for participation. None of the participants had any history of neurological, psychiatric or audiological disorders.

Stimuli

A pool of 40 musical excerpts was heuristically assembled by psychology students from our lab with the aim of equally covering each quadrant of the two-dimensional affective space. The musical excerpts came from different genres, namely soundtracks, classical music, ballet and opera, but did not contain any vocals. The pool of musical excerpts is listed in Table 1. Each musical excerpt was 60 s long, stored in MP3 format on hard disk, logarithmically smoothed with a rise and fall time of 2 s to avoid an abrupt decay, and normalized in amplitude to 100% (corresponding to 0 decibel full scale, i.e. dB FS) by using Adobe Audition 1.5 (Adobe Systems, Inc., San Jose, CA). This normalization is an automated process that changes the level of each sample in a digital audio signal by the same amount, such that the loudest sample reaches a specified level. Consequently, the volume was consistent throughout all musical pieces presented to the participants.
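The amplitude normalization described above can be sketched in a few lines. This is a minimal illustration of peak normalization in general, not a reproduction of the Adobe Audition routine; the function name `peak_normalize` and the toy sample values are hypothetical.

```python
def peak_normalize(samples, target_dbfs=0.0):
    """Scale every sample by the same gain so the loudest one reaches target_dbfs."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # pure silence: nothing to scale
    target_amplitude = 10 ** (target_dbfs / 20)  # 0 dB FS corresponds to 1.0
    gain = target_amplitude / peak
    return [s * gain for s in samples]

# Hypothetical audio samples in the range [-1, 1].
quiet = [0.0, 0.1, -0.25, 0.2]
normalized = peak_normalize(quiet)
```

Because a single gain is applied to all samples, the relative levels within an excerpt are preserved; only the loudest sample is moved to full scale.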

Table 1.

Musical excerpts

| Composer | Excerpt | Neg | Pos | High | Low |
|---|---|---|---|---|---|
| Albinoni, T. | Adagio, G Minor (7′40) | 0.64 | 0 | 0.27 | 0.36 |
| Alfvén, H. | Midsommarvaka (0′02) | 0 | 0.55 | 0.36 | 0.18 |
| Barber, S. | Adagio for Strings (1′00) | 0.64 | 0.05 | 0.45 | 0.23 |
| Barber, S. | Adagio for Strings (5′10) | 0.41 | 0 | 0.32 | 0.09 |
| Beethoven, L. | Symphony No. 6 ‘Pastoral’ 3rd Mvt. (2′30) | 0 | 0.77 | 0.68 | 0.09 |
| Beethoven, L. | Moonlight Sonata, 1st Mvt. (0′19) | 0.77 | 0 | 0.41 | 0.36 |
| Boccherini, L. | Minuetto (0′00) | 0 | 0.5 | 0.36 | 0.14 |
| Chopin, F. | Mazurka Op 7 No. 1, B flat Major (0′00) | 0 | 0.5 | 0.14 | 0.41 |
| Corelli, A. | Christmas Concerto–Vivace-Grave (0′20) | 0.55 | 0 | 0.09 | 0.45 |
| Galuppi, B. | Sonata No. 5, C Major (0′00) | 0 | 0.32 | 0 | 0.32 |
| Grieg, E. | Suite No. 1, Op. 46–Aase's Death (1′22) | 0.68 | 0.05 | 0.36 | 0.36 |
| Händel, G.F. | Water Music, Suite No. 2 D Major Alla Hornpipe (0′00) | 0 | 0.68 | 0.45 | 0.23 |
| Haydn, J. | Andante Cantabile from String Quartet Op. 3 No. 5 (0′00) | 0.09 | 0.32 | 0.09 | 0.32 |
| Mozart, A. | Clarinet Concerto, A Major, K 622 Adagio (0′00) | 0.09 | 0.32 | 0.19 | 0.23 |
| Mozart, A. | Eine kleine Nachtmusik–Allegro (2′04) | 0 | 0.73 | 0.59 | 0.14 |
| Mozart, A. | Eine kleine Nachtmusik–Rondo allegro (0′00) | 0 | 0.5 | 0.32 | 0.19 |
| Mozart, A. | Manuetto, Trio, KV 68 (0′00) | 0 | 0.36 | 0 | 0.36 |
| Mozart, A. | Piano Sonata No. 10, C Major, K. 330–Allegro moderato (0′00) | 0 | 0.5 | 0.18 | 0.36 |
| Mozart, A. | Rondo, D Major, K. 485 (0′00) | 0 | 0.59 | 0.19 | 0.4 |
| Mozart, A. | Violin Concerto No. 3, G Major, K. 216 1st Mvt. (0′00) | 0 | 0.5 | 0.27 | 0.23 |
| Murphy, J. | Sunshine–Adagio, D Minor (1′30) | 0.32 | 0.27 | 0.55 | 0.05 |
| Murphy, J. | 28 days later–Theme Soundtrack (0′25) | 0.64 | 0.05 | 0.41 | 0.27 |
| Ortega, M. | It’s hard to say goodbye (0′00) | 0.45 | 0 | 0.27 | 0.18 |
| Pyeong Keyon, J. | Sad romance (0′00) | 0.77 | 0 | 0.59 | 0.18 |
| Rodriguez, R. | Once upon a time in Mexico–Main Theme (0′00) | 0.45 | 0.05 | 0.18 | 0.32 |
| Rossini, G. | Die diebische Elster (la gazza ladra), Ouvertüre (3′47) | 0 | 0.45 | 0.27 | 0.18 |
| Scarlatti, D. | Sonata, E Major, K. 380–Andante comodo (0′30) | 0 | 0.55 | 0 | 0.55 |
| Schumann, R. | Kinderszenen–Von fremden Ländern und Menschen (0′00) | 0.05 | 0.36 | 0.05 | 0.36 |
| Shostakovich, D. | Prelude for Violin and Piano (0′00) | 0.68 | 0 | 0.41 | 0.27 |
| Strauss, J. | Pizzicato Polka (0′00) | 0 | 0.5 | 0.36 | 0.14 |
| Tiersen, Y. | I saw daddy today, Goodbye Lenin (0′25) | 0.86 | 0 | 0.32 | 0.55 |
| Tiersen, Y. | Sur le fil, Amélie (1′40) | 0.55 | 0 | 0.05 | 0.5 |
| Tschaikowsky, P. | Danse Espagnole (0′20) | 0 | 0.59 | 0.45 | 0.14 |
| Vagabond | One hour before the trip (1′39) | 0.14 | 0 | 0.05 | 0.09 |
| Vivaldi, A. | Concerto, A Major, p. 235, Allegro (0′00) | 0 | 0.59 | 0.41 | 0.18 |
| Vivaldi, A. | Concerto for 2 violins, D major RV 512 (1′15) | 0.45 | 0.05 | 0.05 | 0.45 |
| Vivaldi, A. | Spring: II. Largo (0′00) | 0.73 | 0 | 0.23 | 0.5 |
| Webber, J.L.P. Chowhan, P. | Return to paradise (0′05) | 0.64 | 0 | 0.45 | 0.18 |
| Yiruma | Kiss The Rain, Twilight (0′00) | 0.45 | 0.05 | 0.18 | 0.32 |
| Zimmer, H. | This Land, Lion King (0′45) | 0.45 | 0.18 | 0.64 | 0 |

Notes: Listed are all musical excerpts with occurrence frequency for each condition. Neg, negatively valenced; Pos, positively valenced; High, highly arousing; Low, lowly arousing. Excerpt onsets are indicated in brackets.

Experimental procedure

Online rating

Prior to the main experimental session, participants rated all 40 musical excerpts at home on the valence and arousal dimensions via an open-source platform called ‘Online Learning and Training’ (OLAT, provided by the University of Zurich, http://www.olat.org/). Seven-point scales were provided to assess the experienced emotions in response to each musical excerpt. The scale representing valence ranged from −3 (sad) to +3 (happy), whereas the scale representing arousal ranged from 0 (calm) to 6 (stimulating).

Experimental session

The sets of stimuli presented during EEG recording were assembled subject-wise based on median splits calculated for the individual online ratings, so that half of the stimuli represented each of the two opposite poles of the valence and the arousal dimension, respectively. These sets contained 24 musical excerpts, reflecting the most extreme values represented within this two-dimensional affective space. Table 1 shows the occurrence of each stimulus during EEG recording. For each musical excerpt, the tempo, tonal centroid and zero-crossing rate were extracted using the Music Information Retrieval toolbox (Lartillot and Toiviainen, 2007). Regarding these values, the subject-wise selected stimuli did not differ between the conditions, indicating overall comparability in the rhythmic [valence: t(21) = 0.996, P = 0.331; arousal: t(21) = −0.842, P = 0.409], tonal [valence: t(21) = 0.505, P = 0.619; arousal: t(21) = −1.141, P = 0.267] and timbral structure [valence: t(21) = 0.714, P = 0.482; arousal: t(21) = 1.968, P = 0.062].
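A median split of one participant's ratings might look as follows. This is a sketch under stated assumptions: the excerpt identifiers and ratings are hypothetical, the handling of ratings equal to the median (left unassigned here) is an assumption, and the further selection of the 24 most extreme excerpts is not reproduced because its exact rule is not specified in the text.

```python
from statistics import median

def median_split(ratings):
    """Split excerpt IDs into a low and a high half at the median rating."""
    cut = median(ratings.values())
    low = sorted(k for k, v in ratings.items() if v < cut)
    high = sorted(k for k, v in ratings.items() if v > cut)
    return low, high  # assumption: ratings equal to the median go to neither half

# Hypothetical valence ratings (-3 = sad ... +3 = happy) from one participant.
valence = {"exc01": -3, "exc02": -2, "exc03": -1,
           "exc04": 1, "exc05": 2, "exc06": 3}
negative, positive = median_split(valence)
```

The same function applied to the arousal ratings would yield the low- and high-arousal halves.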

During EEG measurements, the participants were seated in a comfortable chair in a dimmed and acoustically shielded room, at a distance of about 100 cm from a monitor. They were instructed to sit quietly, to relax and to look at the fixation mark on the screen to minimize muscle and eye movement artifacts. All musical excerpts were delivered binaurally with a sound pressure level of about 80 dB by using HiFi headphones (Sennheiser, HD 25-1, 70 Ω, Ireland). The participants were required to rate their experienced emotions after listening to each musical excerpt. Ratings were performed by presenting two 5-point Self-Assessment Manikin (SAM) scales (Bradley and Lang, 1994), reflecting valence and arousal. The SAM scales contain non-verbal graphical depictions; rating responses were also recorded between the depictions. The valence scale ranged from −10 to 10, whereas the arousal scale ranged from 0 to 10. After each stimulus rating, a baseline period of 30 s followed. The presentation of the stimuli and the recording of behavioral responses were controlled by the Presentation software (Neurobehavioral Systems, Albany, CA; version 17.0).

Data acquisition

The high-density EEG (128 channels) was recorded with a sampling rate of 500 Hz and a band pass filter from 0.3 to 100 Hz (Electrical Geodesics, Eugene, OR). Electrode Cz served as online reference, and impedances were kept below 30 kΩ. Before data pre-processing, the electrodes in the outermost circumference were removed, resulting in a standard 109-channel electrode array.

Data processing and analyses

Pre-processing

Raw EEG data were imported into EEGLAB v.13.2.1 (Delorme and Makeig, 2004; http://www.sccn.ucsd.edu/eeglab), an open source toolbox running under Matlab R2013b (MathWorks, Natick, MA, USA). Raw EEG data were band-pass filtered at 1–100 Hz and re-referenced to an average reference. Noisy channels exceeding averaged kurtosis and probability Z-scores of ±5 were removed. On average, 8.4% (s.d. = 3.4) of the channels were removed. Unsystematic artifacts were removed and reconstructed by using the Artifact Subspace Reconstruction method (Mullen et al., 2013; e.g. Jäncke et al., 2015; http://sccn.ucsd.edu/eeglab/plugins/ASR.pdf) and electrical line noise was removed by the CleanLine function (e.g. Brodie et al., 2014; http://www.nitrc.org/projects/cleanline).
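The re-referencing step can be illustrated with a minimal NumPy sketch. It is not the EEGLAB routine used in the study; the function name `average_reference` and the toy matrix are hypothetical, and data are assumed to be arranged as channels × samples.

```python
import numpy as np

def average_reference(data):
    """Subtract the instantaneous mean across channels (rows) from every channel."""
    return data - data.mean(axis=0, keepdims=True)

# Toy data: 3 channels, 4 samples, arbitrary units.
eeg = np.array([[1.0, 2.0, 3.0, 4.0],
                [0.0, 0.0, 0.0, 0.0],
                [2.0, 4.0, 6.0, 8.0]])
referenced = average_reference(eeg)
```

After re-referencing, the channel values sum to zero at every time point, which is the defining property of an average reference.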

For each musical excerpt, segments of 65 s duration were created, including a 5 s pre-stimulus period. Furthermore, a baseline correction relative to the −5 to 0 s pre-stimulus time period was applied.
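The segmentation and baseline correction can be sketched as follows, assuming channels × samples data at the 500 Hz sampling rate reported above. The function name `extract_epoch`, the onset index and the random toy recording are hypothetical.

```python
import numpy as np

FS = 500      # sampling rate in Hz (from the data acquisition section)
PRE_S = 5     # pre-stimulus period in seconds
POST_S = 60   # excerpt duration in seconds

def extract_epoch(data, onset_sample):
    """Cut a 65 s segment and subtract each channel's pre-stimulus mean."""
    start = onset_sample - PRE_S * FS
    stop = onset_sample + POST_S * FS
    if start < 0 or stop > data.shape[1]:
        raise ValueError("epoch extends beyond the recording")
    epoch = data[:, start:stop].astype(float)
    baseline = epoch[:, : PRE_S * FS].mean(axis=1, keepdims=True)
    return epoch - baseline

rng = np.random.default_rng(0)
recording = rng.normal(size=(4, 70 * FS)) + 3.0  # toy data with a DC offset
epoch = extract_epoch(recording, onset_sample=5 * FS)
```

Each resulting epoch holds 65 s × 500 Hz = 32 500 samples per channel, and the mean of the −5 to 0 s interval is zero by construction.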

Independent component analysis

The epoched EEG data were decomposed into maximally temporally independent signals using the extended infomax ICA algorithm (Lee et al., 1999). ICA determines the ‘unmixing’ matrix W with which it unmixes the multi-channel EEG data X into a matrix U whose rows are channel-weighted sums forming the statistically independent IC activity time courses. Thus, U = WX. For ICA, we used an iteration procedure based on the ‘binica’ algorithm with default parameters implemented in EEGLAB (stopping weight change = 10⁻⁷, maximal 1000 learning steps) (Makeig et al., 1997), yielding as many ICs as data channels. ICs corresponding to non-cortical sources such as eye blinks, lateral eye movements and cardiac artifacts were excluded from further analyses. Given that only ICs with dipolar scalp projections appear as biologically plausible brain sources (Makeig et al., 2002; Delorme et al., 2012), only such ICs were included in further analyses. Thus, for each IC, we estimated a single-equivalent current dipole model and fitted the corresponding dipole sources within a co-registered boundary element head model (BEM) by using the FieldTrip function DIPFIT 2.2 (http://sccn.ucsd.edu/wiki/A08:_DIPFIT). Furthermore, dipole localizations were mapped to the Montreal Neurological Institute brain template. Only ICs whose best-fitting single-equivalent dipole model accounted for more than 85% of the variance of their scalp projection were further processed (Onton and Makeig, 2006).
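The relation U = WX can be made concrete with a toy example. The sketch below does not implement extended infomax: it assumes a known, hypothetical 2 × 2 mixing matrix `A` and simply takes W as its inverse, whereas an ICA algorithm would have to estimate W from X alone. It only illustrates how activations and scalp projections (the columns of W⁻¹) relate to the channel data.

```python
import numpy as np

rng = np.random.default_rng(1)
n_samples = 1000

# Two toy sources with independent time courses (rows = components).
S = np.vstack([
    np.sin(2 * np.pi * 6 * np.arange(n_samples) / 500),  # 6 Hz oscillation
    rng.uniform(-1, 1, n_samples),                        # uniform noise
])

A = np.array([[1.0, 0.5],
              [0.3, 1.0]])     # hypothetical mixing matrix (channels x sources)
X = A @ S                      # simulated channel data

W = np.linalg.inv(A)           # assumption: W is known here, not estimated by ICA
U = W @ X                      # component activations, U = WX

scalp_maps = np.linalg.inv(W)  # columns of W^-1: projection of each IC to channels
```

With the true unmixing matrix, U reproduces the sources exactly; a real decomposition recovers them only up to scaling and ordering.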

Spectral analysis

A 512-point Fast Fourier transform with a 50% overlapping Hanning window of 1 s was applied to compute the IC spectrogram for each segment. The power of each segment was normalized by subtracting a mean baseline derived from the first 5 s of stimulus onset (Lin et al., 2010, 2014). The spectrogram was then divided into the five characteristic frequency bands, namely delta (1–4 Hz), theta (4–7 Hz), alpha-1 (8–10.5 Hz), alpha-2 (10.5–13 Hz) and beta (14–30 Hz).
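A windowed-FFT band-power computation of this kind can be sketched with NumPy. Several details are assumptions rather than the authors' implementation: the 1 s (500-sample) Hanning window is zero-padded to 512 points, periodograms are averaged over windows, band power is taken as the mean log power of the bins inside each band, and the baseline subtraction is omitted. The function name `band_log_power` and the toy signal are hypothetical.

```python
import numpy as np

FS = 500          # sampling rate (Hz)
WIN = FS          # 1 s Hanning window = 500 samples
NFFT = 512        # assumption: window zero-padded to 512 points
STEP = WIN // 2   # 50% overlap
BANDS = {"delta": (1, 4), "theta": (4, 7), "alpha-1": (8, 10.5),
         "alpha-2": (10.5, 13), "beta": (14, 30)}

def band_log_power(signal):
    """Average windowed periodograms, then return mean log power (dB) per band."""
    window = np.hanning(WIN)
    starts = range(0, len(signal) - WIN + 1, STEP)
    spectra = [np.abs(np.fft.rfft(signal[s:s + WIN] * window, n=NFFT)) ** 2
               for s in starts]
    psd = np.mean(spectra, axis=0)
    freqs = np.fft.rfftfreq(NFFT, d=1 / FS)
    return {name: float(np.mean(10 * np.log10(psd[(freqs >= lo) & (freqs < hi)])))
            for name, (lo, hi) in BANDS.items()}

# Toy IC activation: a 12 Hz oscillation plus weak noise, 10 s long.
t = np.arange(10 * FS) / FS
toy = np.sin(2 * np.pi * 12 * t) + 0.01 * np.random.default_rng(0).normal(size=t.size)
power = band_log_power(toy)
```

For this toy signal the alpha-2 band carries the most power, as expected for a 12 Hz oscillation.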

IC clustering

In order to capture functionally equivalent ICs across all participants and enable group-level analyses, we applied cluster analyses based on the k-means algorithm. All ICs from all participants were clustered on the basis of the combination of spatial (dipole location and scalp topography) as well as functional (spectra) characteristics (Onton and Makeig, 2006). The smallest exhibited number of ICs determined the number of clusters used for this calculation (Lenartowicz et al., 2014). Furthermore, we removed ICs whose centroids were 3 s.d. of Euclidean distance away from fitting into any of the other clusters (Wisniewski et al., 2012). After calculating the cluster analysis, we visually confirmed consistency of the ICs within each cluster in terms of spatial and functional characteristics.
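The clustering idea can be sketched with a plain implementation of Lloyd's k-means algorithm. This is an illustration, not the EEGLAB clustering routine: the feature rows are hypothetical and use only dipole coordinates, whereas the analysis described above combines dipole location, scalp topography and spectra. Outlier removal is not shown.

```python
import numpy as np

def kmeans(features, k, n_iter=100, seed=0):
    """Plain Lloyd's algorithm; returns (labels, centroids)."""
    rng = np.random.default_rng(seed)
    centroids = features[rng.choice(len(features), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each row to its nearest centroid (Euclidean distance).
        dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Recompute centroids; keep the old one if a cluster became empty.
        updated = np.array([features[labels == j].mean(axis=0)
                            if np.any(labels == j) else centroids[j]
                            for j in range(k)])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels, centroids

# Hypothetical dipole coordinates (x, y, z) for ICs around two distinct locations.
rng = np.random.default_rng(42)
frontal = rng.normal([-28, 30, 29], 2.0, size=(15, 3))
occipital = rng.normal([13, -86, -18], 2.0, size=(15, 3))
ics = np.vstack([frontal, occipital])
labels, centroids = kmeans(ics, k=2)
```

In this toy case the two groups of ICs are well separated, so each ends up in its own cluster; real IC features overlap far more, which is why visual confirmation of cluster consistency remains useful.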

Statistical analyses

Responses to all musical excerpts were analyzed regarding the valence and arousal dimension independently from each other. The excerpt ratings during EEG recording were subject-wise and condition-wise averaged. Paired t-tests were used to statistically compare averaged responses to positively with negatively valenced excerpts as well as to highly with lowly arousing ones, respectively.
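For reference, the paired t statistic is the mean of the within-subject differences divided by its standard error. The sketch below uses only the Python standard library; the ratings are hypothetical and the function name `paired_t` is illustrative. It returns the statistic and degrees of freedom but not the P value.

```python
import math
from statistics import mean, stdev

def paired_t(a, b):
    """Return (t, df) for a paired-samples t-test on equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Hypothetical per-participant mean valence ratings for the two conditions.
positive = [4.0, 5.5, 3.0, 6.0, 4.5]
negative = [-3.0, -4.5, -2.0, -5.0, -4.0]
t_value, df = paired_t(positive, negative)
```

With 22 participants, the same computation yields the 21 degrees of freedom reported for the behavioral comparisons.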

In order to determine the affective effects on brain activity regarding each IC cluster, we conducted analyses of variance (ANOVA) with two repeated-measures factors, one with five levels (frequency band: delta, theta, alpha-1, alpha-2 and beta) and another with two levels (high vs low arousal or positive vs negative valence). Statistical analyses were adjusted for non-sphericity using Greenhouse–Geisser Epsilon when equal variances could not be assumed. Significant interaction effects were further inspected by using post hoc t-tests. All post hoc t-tests were corrected for multiple comparisons by using the Holm procedure (Holm, 1979).
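The Holm procedure is a step-down method: the P values are sorted in ascending order and the i-th smallest (counting from zero) is compared against α / (m − i), stopping at the first non-significant test. A minimal sketch, with hypothetical P values and an illustrative function name:

```python
def holm_reject(p_values, alpha=0.05):
    """Return booleans: True where Holm's step-down procedure rejects H0."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # all remaining (larger) P values are retained
    return reject

# Hypothetical post hoc P values for four comparisons.
decisions = holm_reject([0.010, 0.040, 0.030, 0.005])
```

Here the two smallest P values pass their thresholds (0.0125 and 0.0167), while 0.030 exceeds 0.025, so it and the remaining comparison are not rejected.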

As it is important to report the strength of an effect independent of the sample size, we also calculated the effect size (ηp²) by dividing the sum of squares of the effect by the sum of squares of the effect plus its associated error sum of squares within the ANOVA computation. All statistical analyses were performed using the SPSS software (SPSS 19 for Windows; www.spss.com).
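Partial eta squared follows directly from this definition, and because F = (SS_effect / df_effect) / (SS_error / df_error), it can equivalently be obtained from an F value and its degrees of freedom. The sketch below shows both forms; the function names are illustrative, and the example inputs are the F statistics given in the Results section for clusters #3 and #10.

```python
def partial_eta_squared(ss_effect, ss_error):
    """Effect size from sums of squares: SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)

def partial_eta_squared_from_f(f_value, df_effect, df_error):
    """Equivalent form derived from F and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F statistics as reported for the two interaction effects.
eta_cluster3 = partial_eta_squared_from_f(5.96, 1, 10)
eta_cluster10 = partial_eta_squared_from_f(15.928, 1, 16)
```

Rounded to three decimals, these evaluate to 0.373 and 0.499, matching the reported effect sizes.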

Results

Behavioral data

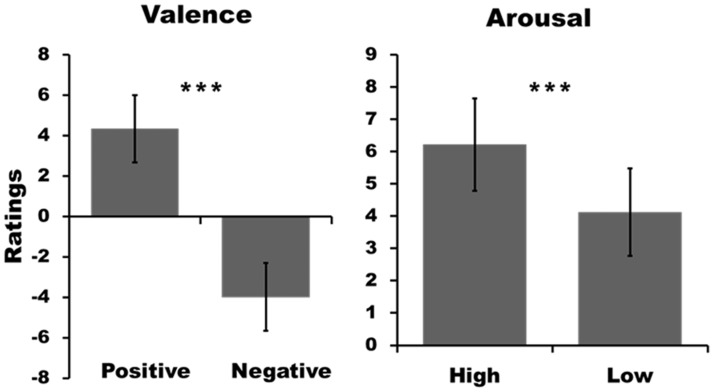

As confirmed by the ratings during EEG recording, the participants experienced the musical excerpts in accordance with the conditions they were previously assembled for. Ratings of the positively valenced (M = 4.4, s.d. = 1.7) and negatively valenced stimuli (M = −4.0, s.d. = 1.7) differed significantly from each other [t(21) = 14.2, P < 0.001]. Furthermore, the participants rated highly arousing stimuli (M = 6.2, s.d. = 1.4) as significantly more arousing than lowly arousing ones [M = 4.1, s.d. = 1.4; t(21) = 10.7, P < 0.001]. Behavioral results are depicted in Figure 1.

Fig. 1.

Mean ratings of the stimuli during the EEG session, separately for the valence (left) and arousal (right) dimensions. The bars depict standard deviations. The asterisks indicate the level of significant threshold (***P < 0.001).

Electrophysiological data

IC clusters

Our cluster analysis on the estimated single-equivalent current dipoles fitted within the BEM using the DIPFIT function revealed 10 IC clusters. Sample size and the number of the ICs contained by each cluster, the Talairach coordinates of the particular centroids as well as the residual variances (RV) of the fitted models are reported in Table 2.

Table 2.

IC clusters and the centroids of their dipole location

| # | Cluster | N | ICs | x | y | z | RV% |
|---|---|---|---|---|---|---|---|
| 1 | Limbic–thalamic | 11 | 16 | −3 (18) | −9 (12) | 10 (9) | 11 (3) |
| 2 | Orbitofrontal | 14 | 21 | −10 (18) | 25 (21) | −24 (6) | 9 (2) |
| 3 | L frontal | 11 | 15 | −28 (21) | 30 (15) | 29 (12) | 11 (3) |
| 4 | R frontal | 11 | 12 | 19 (12) | 38 (19) | 32 (12) | 12 (2) |
| 5 | Frontoparietal | 11 | 22 | 17 (13) | −18 (13) | 52 (14) | 10 (3) |
| 6 | Precuneus | 15 | 19 | 0 (12) | −49 (12) | 50 (13) | 9 (3) |
| 7 | Parieto-occipital | 17 | 31 | −4 (14) | −75 (13) | 22 (13) | 11 (3) |
| 8 | R temporal–occipital | 9 | 14 | 41 (18) | −49 (16) | −4 (15) | 10 (3) |
| 9 | L occipital | 15 | 22 | −24 (12) | −87 (10) | −16 (10) | 10 (3) |
| 10 | R occipital | 17 | 24 | 13 (10) | −86 (11) | −18 (10) | 10 (3) |

Notes: Listed are sample size, number of ICs, the means of the Talairach coordinates (x, y, z) and RVs. Standard deviations are reported in brackets. L, left; R, right.

Two of the centroids (#1 and #2) were modeled mainly within subcortical regions, exhibiting individual dipoles located in the thalamus, amygdala, parahippocampus, posterior cingulate and insular cortex as well as in the orbitofrontal cortex. Two of them were modeled near the frontal midline, namely left- (#3) and right-lateralized (#4), exhibiting dipoles distributed around the inferior, middle and superior frontal lobe. Four of them were modeled within ‘junction’ regions between lobes: cluster #5 covered regions from frontal (precentral gyrus, superior, middle and medial frontal gyrus) to parietal (postcentral gyrus) and around the posterior insular cortex. Cluster #6 was mainly located in the precuneus but additionally included other parietal regions (postcentral gyrus, superior parietal lobule). The individual dipoles of cluster #7 were distributed around the parietal–occipital junction (centralized around the cuneus) and cluster #8 was right-lateralized covering temporal–occipital regions (middle occipital lobe, superior-, middle- and inferior temporal lobe). Finally, the two remaining centroids were modeled within posterior regions, left- (#9) and right-lateralized (#10), exhibiting individual dipoles distributed around the occipital lobe (fusiform gyrus, lingual gyrus) and cerebellar structures. In addition, most of the clusters exhibited a few individual dipoles in the anterior and posterior cingulate cortex, namely in BA 24 (#5), BA 30 (#7, 8, 9), BA 31 (#5, 6, 7) and BA 32 (#3).

Scalp topographies, dipole locations and spectra of each IC cluster are depicted in Figure 2.

Fig. 2.

IC clusters: mean scalp maps showing distribution of relative projection strengths (W⁻¹; warm colors indicating positive and cold colors negative values); dipole source locations (red = centroid; blue = individual dipoles) and spectrogram (black = mean; gray = individual).

IC spectra

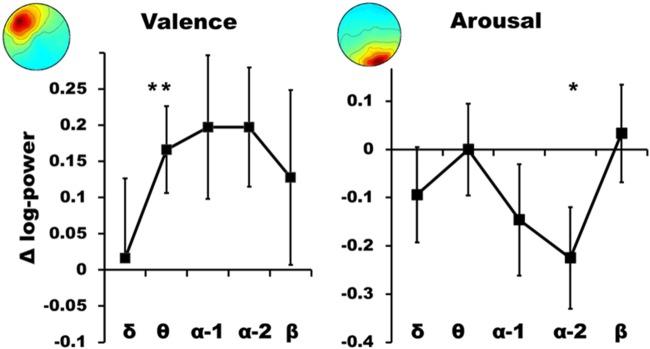

No cluster showed a significant main effect of valence or arousal, but all of them revealed significant main effects of frequency (P < 0.001, ηp² > 0.8). Only two clusters revealed significant interaction effects. Cluster #3 exhibited a significant valence × frequency effect [F(1,10) = 5.96, P = 0.035, ηp² = 0.373]. According to post hoc t-tests, this effect was due to theta power: positive valence was associated with a power increase in this frequency band [t(10) = −2.77, P < 0.01]. This accounted for 24.09% of EEG variance. Cluster #10 exhibited a significant arousal × frequency effect [F(1,16) = 15.928, P < 0.001, ηp² = 0.499]. This effect was due to alpha-2 activity: arousal was associated with a power suppression in this frequency band [t(16) = −2.133, P = 0.025]. This accounted for 34.85% of EEG variance.

Figure 3 illustrates these two interaction effects in terms of differences calculated between the two affective conditions.

Fig. 3.

Differences (in Δ log-power) plotted as a function of frequency range for cluster #3 (left: positive–negative) and cluster #10 (right: high–low). The bars depict standard errors. The asterisks indicate significant effects (*P < 0.05, **P < 0.01). Holm-corrected.

Discussion

The focus of this work was to examine the neurophysiological activations evoked during natural music-listening conditions. In order to get access to functionally distinct brain processes related to music-evoked emotions, we decomposed the EEG data by using ICA. The advantage in interpreting ICs lies in their unmasked quality, making it easier to disentangle and identify EEG patterns, which might have remained undetectable when using standard EEG techniques (Makeig et al., 2004; Onton and Makeig, 2006; Jung et al., 2001). ICA denoises and provides an EEG signal considerably less influenced by non-brain artifacts, making source analysis more precise. Thus, the EEG results we revealed here are closely related to neurophysiological processes. In this study, we revealed a valence–arousal distinction during music listening, which is clearer than has been reported in previous studies of this type. In the following, the main findings will be discussed in a broader context.

Brain sources underlying music-evoked emotions

Consistent with a great body of studies on music listening (e.g. Platel et al., 1997; Brown et al., 2004; Schmithorst, 2005), we found multiple neural sources contributing to the emergence of music-evoked emotions. In fact, the IC clusters we revealed here largely overlap with the ones found in a previous ICA study in which musical excerpts were manipulated in mode and tempo (Lin et al., 2014). Moreover, we revealed distinct subcortical sources, a finding supported by many functional imaging studies on music and emotions. Limbic as well as paralimbic structures are known to be involved in music listening (Brown et al., 2004), and are strongly related to pleasure and reward (Blood et al., 1999; Blood and Zatorre, 2001; Koelsch et al., 2006; Koelsch et al., 2008; Salimpoor et al., 2011; Salimpoor et al., 2013). In addition, the thalamus and anterior cingulate cortex (ACC) constitute a considerable part of the arousal system (Paus, 1999; Blood and Zatorre, 2001). Furthermore, valence has also frequently been ascribed to such subcortical structures, namely to the amygdala, parahippocampus, ACC, insular cortex and orbitofrontal cortex (Khalfa et al., 2005; Baumgartner et al., 2006b; Mitterschiffthaler et al., 2007; Green et al., 2008; Brattico et al., 2011; Liégeois-Chauvel et al., 2014; Omigie et al., 2014). Altogether, the mesolimbic reward network has recently been associated with valence during continuous music listening (Alluri et al., 2015). Notably, a recent study also using a data-driven approach, namely one based on inter-subject correlations, was able to identify specific moments during music listening and thereby associate valence and arousal with responses of subcortical regions, such as the amygdala, insula and the caudate nucleus (Trost et al., 2015).

In line with many music-related EEG studies (Schmidt and Trainor, 2001; Tsang et al., 2001; Altenmüller et al., 2002; Sammler et al., 2007; Lin et al., 2010; Mikutta et al., 2012; Tian et al., 2013; Lin et al., 2014), we identified important contributing sources in the frontal lobe. In fact, several frontal regions are known to be involved in music processing, such as the motor- and premotor cortex (BA 4/6) in rhythm processing (Popescu et al., 2004), and the middle frontal gyrus in musical mode and tempo processing (Khalfa et al., 2005). In general, the medial prefrontal cortex is strongly associated with emotional processing (Phan et al., 2002). However, although dipoles are frequently found around the frontal midline (Lin et al., 2010; Lin et al., 2014), here we revealed two frontal clusters slightly lateralized on either side. This finding has previously been reported in auditory processing and working memory studies (e.g. Lenartowicz et al., 2014; Rissling et al., 2014). In contrast, the clusters we revealed around the fronto-central region and the precuneus overlap with the ones previously reported in music-related EEG studies (Lin et al., 2010, 2014). According to functional imaging studies, the inferior parietal lobule (BA 7) also contributes to musical mode (major/minor) processing (Mizuno and Sugishita, 2007), and the precuneus has been associated with the processing of (dis)harmonic melodies (Blood et al., 1999; Schmithorst, 2005).

Finally, several contributing neural sources were identified in the posterior portion of the brain. Similar posterior scalp maps have previously been reported in many music-related EEG studies focusing on ICs (Cong et al., 2013; Lin et al., 2014), and even at the level of single channels (Baumgartner et al., 2006a; Elmer et al., 2012). This is not surprising, given the robust finding that occipital and cerebellar structures are active during music listening (Brown et al., 2004; Schmithorst, 2005; Baumgartner et al., 2006b; Chapin et al., 2010; Koelsch et al., 2013). The cerebellum is (together with sensorimotor regions) involved in rhythmic entrainment (Molinari et al., 2007; Chen et al., 2008; Alluri et al., 2012), whereas occipital regions and the precuneus/cuneus contribute to visual imagery (Fletcher et al., 1995; Platel et al., 1997). Both rhythmic entrainment and visual imagery are psychological mechanisms proposed to be partly responsible for giving rise to musical emotions, as conceptualized in the BRECVEM model proposed by Juslin (2013).

Arousal and posterior alpha

The right posterior area of the brain, including occipital and cerebellar structures, appeared to be crucial in mediating arousal during music listening, as indicated by a suppression of upper alpha power. In general, alpha power has frequently been related to affective processing (Aftanas et al., 1996; Aftanas and Golocheikine, 2001) and various aspects of music processing (Ruiz et al., 2009; Schaefer et al., 2011). Alpha power is inversely related to brain activity (Laufs et al., 2003a,b; Oakes et al., 2004), so a decrement reflects stronger cortical engagement. This suppression effect in connection with arousal has been reported in several studies (for a review see Foxe and Snyder, 2011), and is confirmed again by our findings (Figure 3). However, the alpha suppression effect we observed here was only apparent in the upper frequency range. A similar finding was reported in a recent EEG study employing graph-theoretical analyses, in which enhanced synchronization in the alpha-2 band was observed during music listening (Wu et al., 2013). In addition to this alpha suppression, there was also a (non-significant) suppression in delta activity. This is consistent with a previous ICA finding showing differential delta power in response to highly arousing music (Lin et al., 2010).

Alpha oscillations, especially those originating from parieto-occipital regions, drive an inhibitory process in brain areas that are not primarily involved in the task at hand (such as visual areas) (Fu et al., 2001; Klimesch et al., 2007; Jensen and Mazaheri, 2010; Sadaghiani and Kleinschmidt, 2013) and are related to internally directed attention, which characterizes mental states such as imagery (Cooper et al., 2003; Cooper et al., 2006) or a kind of being drawn ('roped') into the music, as proposed by Jäncke et al. (2015). Because posterior alpha power was higher for low-arousing than for high-arousing excerpts, low-arousing music appears to provide favorable conditions for visual imagery.

Valence and frontal theta

The left frontal lobe appeared to be crucial in mediating valence during music listening, as indicated by differential theta power. Happiness appeared to be associated with an increase in theta frequency power. In general, theta power has been linked not only to aspects of working memory and other mnemonic processes (Onton et al., 2005; Elmer et al., 2015) but also to emotional processing (Aftanas and Golocheikine, 2001), especially in the case of theta power originating from the ACC (Pizzagalli et al., 2003). In line with our results, increased frontal theta power has been reported in response to positively valenced music, such as music inducing pleasure or joy (Sammler et al., 2007; Lin et al., 2010). Even though we identified several dipoles along the midline, the effect in the theta frequency range was principally linked to a frontal cluster slightly lateralized to the left hemisphere. This left-sided hemispheric dominance is consistent with previously reported power asymmetry in frontal regions in connection with positively valenced music, at least in the alpha frequency range (Schmidt and Trainor, 2001; Tsang et al., 2001). Notably, there was also a trend in this area pointing to differences in the alpha frequency range (Figure 3). The involvement of alpha (together with theta) power in the processing of valenced stimuli has recently been shown in an intracranial EEG study (Omigie et al., 2014). However, the differences observed here did not remain statistically significant after correction for multiple comparisons (alpha-1: P = 0.075; alpha-2: P = 0.037). Furthermore, the increase in theta power was accompanied by a (non-significant) increase in beta activity. This is in line with the previous ICA study by Lin et al. (2014) relating differential beta activity over the medial frontal cortex to music in major mode.
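To make the multiple-comparison reasoning behind the alpha trend concrete, the following is a minimal sketch of a Holm step-down correction (Holm, 1979). It rests on several assumptions that are not stated in this section: that the two reported alpha P values are uncorrected, that Holm's procedure is the correction applied, and that the family consists of only these two tests. The function name `holm_reject` and the 0.05 level are illustrative choices, not the authors' analysis code.

```python
def holm_reject(p_values, alpha=0.05):
    """Return one boolean per test: True where Holm's step-down procedure rejects H0."""
    m = len(p_values)
    # Indices of the tests, ordered from smallest to largest P value.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest P value (k = rank + 1) is compared with alpha / (m - k + 1).
        threshold = alpha / (m - rank)
        if p_values[idx] <= threshold:
            reject[idx] = True
        else:
            # Step-down rule: once one test fails, all larger P values fail too.
            break
    return reject


# P values reported in the text for the left frontal cluster,
# ASSUMED here to be uncorrected and to form the whole test family.
alpha_band_p = {"alpha-1": 0.075, "alpha-2": 0.037}
decisions = dict(zip(alpha_band_p, holm_reject(list(alpha_band_p.values()))))
print(decisions)
```

Under these assumptions the smaller value (0.037) is compared with 0.05/2 = 0.025 and fails, so neither alpha sub-band is declared significant. A larger test family would only make the first threshold stricter, so the conclusion would not change.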

Lateralization effects and emotion models

In the past decades, emotions have principally been discussed on the basis of neurophysiological models postulating functional asymmetries of arousal and valence. Regarding the valence dimension, it has been proposed that the left frontal lobe contributes to the processing of positive (approach) emotions, while its right-hemisphere counterpart is involved in the processing of negative (avoidance) affective states (Davidson et al., 1990). In line with this model, our results also suggest an association between positive emotions and left-sided frontal areas. However, although our analyses also yielded a right-sided frontal cluster, our findings do not confirm an effect of negative emotion there. One reason for this discrepancy may be that sadness in the context of music is rather complex, involving moods, personality traits and situational factors (Vuoskoski et al., 2012; Taruffi and Koelsch, 2014). Music-induced sadness may therefore not lead to withdrawal in the same manner as sadness does in a non-musical context. In fact, sadness induced by music may be experienced as pleasurable (Sachs et al., 2015; Brattico et al., 2016), which is why some authors have argued that such emotions should be considered vicarious (Kawakami et al., 2013, 2014). Thus, the approach-withdrawal model, which was proposed on the basis of everyday emotions, does not seem to be entirely suitable for describing music-evoked emotions. Heller (1993) proposed a similar model that additionally incorporates the arousal dimension. In addition to assuming that the frontal lobes modulate valence depending on the hemisphere, this model assumes that arousal is modulated by the right parieto-temporal region, a brain region we also identified in our study as being associated with music-evoked arousal. Also in line with this model, our analyses revealed another right-lateralized cluster (R temporal–occipital) close to the area described in the model.

Limitations

Similar to many studies on emotions (Schubert, 1999; Schmidt and Trainor, 2001; Chapin et al., 2010; Lin et al., 2010), we investigated affective responses within a two-dimensional framework. Although our findings are to some extent transferable to more general non-musical emotions, our setting does not allow us to capture more differentiated emotions such as the aesthetic ones characterized by the GEMS (Zentner et al., 2008).

In order to take into account the idiosyncratic nature of music-listening behavior, our experimental conditions were directly manipulated on the affective level, entailing exposure to non-identical stimulus sets. Although the subject-wise selected stimuli were physically comparable across conditions, our experimental setting does not permit us to reasonably determine the impact of acoustic features on emotional processing.

Conclusion

By applying ICA, we decomposed EEG data recorded from subjects during music listening into functionally distinct brain processes. We revealed multiple contributing neural sources typically involved in music and emotion processing, namely around the thalamic–limbic and orbitofrontal domain as well as at frontal, frontal–parietal, parietal, parieto-occipital, temporo-occipital and occipital regions. Arousal appeared to be mediated by the right posterior portion of the brain, as indicated by alpha power suppression, and valence appeared to be mediated by the left frontal lobe, as indicated by differential theta power. These findings are partly in line with the model proposed by Heller (1993), arguing that the frontal lobe is involved in modulating valenced experiences (the left frontal hemisphere for positive emotions) whereas the right parieto-temporal region contributes to the emotional arousal. The exciting part of this study is that our results emerged ‘blindly’ from a set of musical excerpts selected on an idiosyncratic basis.

Funding

This work was supported by the Swiss National Foundation (grant no. 320030B_138668 granted to L.J.).

Conflict of interest. None declared.

References

- Aftanas L.I., Golocheikine S.A. (2001). Human anterior and frontal midline theta and lower alpha reflect emotionally positive state and internalized attention: high-resolution EEG investigation of meditation. Neuroscience Letters, 310, 57–60.

- Aftanas L.I., Koshkarov V.I., Pokrovskaja V.L., Lotova N.V., Mordvintsev Y.N. (1996). Pre- and post-stimulus processes in affective task and event-related desynchronization (ERD): do they discriminate anxiety coping styles? International Journal of Psychophysiology, 24, 197–212.

- Alluri V., Brattico E., Toiviainen P., Burunat I., Bogert B., Numminen J., et al. (2015). Musical expertise modulates functional connectivity of limbic regions during continuous music listening. Psychomusicology: Music, Mind, and Brain, 25, 443.

- Alluri V., Toiviainen P., Jääskeläinen I.P., Glerean E., Sams M., Brattico E. (2012). Large-scale brain networks emerge from dynamic processing of musical timbre, key and rhythm. Neuroimage, 59, 3677–89.

- Altenmüller E., Schürmann K., Lim V.K., Parlitz D. (2002). Hits to the left, flops to the right: different emotions during listening to music are reflected in cortical lateralisation patterns. Neuropsychologia, 40, 2242–56.

- Annett M. (1970). A classification of hand preference by association analysis. British Journal of Psychology, 61, 303–21.

- Aron A., Fisher H., Mashek D.J., Strong G., Li H., Brown L.L. (2005). Reward, motivation, and emotion systems associated with early-stage intense romantic love. Journal of Neurophysiology, 94, 327–37.

- Bachorik J.P., Bangert M., Loui P., Larke K., Berger J., Rowe R., et al. (2009). Emotion in motion: investigating the time-course of emotional judgments of musical stimuli. Music Perception: An Interdisciplinary Journal, 26(4), 355–64.

- Bartels A., Zeki S. (2004). The chronoarchitecture of the human brain—natural viewing conditions reveal a time-based anatomy of the brain. Neuroimage, 22, 419–33.

- Bartels A., Zeki S. (2005). Brain dynamics during natural viewing conditions—a new guide for mapping connectivity in vivo. Neuroimage, 24, 339–49.

- Baumgartner T., Esslen M., Jäncke L. (2006a). From emotion perception to emotion experience: emotions evoked by pictures and classical music. International Journal of Psychophysiology, 60, 34–43.

- Baumgartner T., Lutz K., Schmidt C.F., Jäncke L. (2006b). The emotional power of music: how music enhances the feeling of affective pictures. Brain Research, 1075, 151–64.

- Bell A.J., Sejnowski T.J. (1995). An information-maximization approach to blind separation and blind deconvolution. Neural Computation, 7, 1129–59.

- Berridge K.C. (2003). Pleasures of the brain. Brain and Cognition, 52, 106–28.

- Bigand E., Parncutt R., Lerdahl F. (1996). Perception of musical tension in short chord sequences: the influence of harmonic function, sensory dissonance, horizontal motion, and musical training. Perception and Psychophysics, 58, 125–41.

- Blood A.J., Zatorre R.J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proceedings of the National Academy of Sciences of the United States of America, 98, 11818–23.

- Blood A.J., Zatorre R.J., Bermudez P., Evans A.C. (1999). Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nature Neuroscience, 2, 382–7.

- Bradley M.M., Lang P.J. (1994). Measuring emotion: the self-assessment manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry, 25, 49–59.

- Brattico E., Alluri V., Bogert B., Jacobsen T., Vartiainen N., Nieminen S., et al. (2011). A functional MRI study of happy and sad emotions in music with and without lyrics. Frontiers in Psychology, 2, 308.

- Brattico E., Bogert B., Alluri V., Tervaniemi M., Eerola T., Jacobsen T. (2016). It’s sad but I like it: the neural dissociation between musical emotions and liking in experts and laypersons. Frontiers in Human Neuroscience, 9, doi:10.3389/fnhum.2015.00676.

- Brattico E., Jacobsen T. (2009). Subjective appraisal of music. Annals of the New York Academy of Sciences, 1169, 308–17.

- Brodie S.M., Villamayor A., Borich M.R., Boyd L.A. (2014). Exploring the specific time course of interhemispheric inhibition between the human primary sensory cortices. Journal of Neurophysiology, 112(6), 1470–6.

- Brown S., Martinez M.J., Parsons L.M. (2004). Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport, 15, 2033–7.

- Chapin H., Jantzen K., Kelso J.S., Steinberg F., Large E. (2010). Dynamic emotional and neural responses to music depend on performance expression and listener experience. PloS One, 5, e13812.

- Chen J.L., Penhune V.B., Zatorre R.J. (2008). Moving on time: brain network for auditory-motor synchronization is modulated by rhythm complexity and musical training. Journal of Cognitive Neuroscience, 20, 226–39.

- Cong F., Alluri V., Nandi A.K., Toiviainen P., Fa R., Abu-Jamous B., et al. (2013). Linking brain responses to naturalistic music through analysis of ongoing EEG and stimulus features. IEEE Transactions on Multimedia, 15, 1060–9.

- Cong F., Puoliväli T., Alluri V., Sipola T., Burunat I., Toiviainen P., et al. (2014). Key issues in decomposing fMRI during naturalistic and continuous music experience with independent component analysis. Journal of Neuroscience Methods, 223, 74–84.

- Cooper N.R., Burgess A.P., Croft R.J., Gruzelier J.H. (2006). Investigating evoked and induced electroencephalogram activity in task-related alpha power increases during an internally directed attention task. Neuroreport, 17, 205–8.

- Cooper N.R., Croft R.J., Dominey S.J.J., Burgess A.P., Gruzelier J.H. (2003). Paradox lost? Exploring the role of alpha oscillations during externally vs. internally directed attention and the implications for idling and inhibition hypotheses. International Journal of Psychophysiology, 47, 65–74.

- Craig A.D. (2005). Forebrain emotional asymmetry: a neuroanatomical basis? Trends in Cognitive Sciences, 9, 566–71.

- Dalla Bella S., Peretz I., Rousseau L., Gosselin N. (2001). A developmental study of the affective value of tempo and mode in music. Cognition, 80, B1–10.

- Damoiseaux J.S., Rombouts S., Barkhof F., Scheltens P., Stam C.J., Smith S.M., et al. (2006). Consistent resting-state networks across healthy subjects. Proceedings of the National Academy of Sciences of the United States of America, 103, 13848–53.

- Davidson R.J. (1998). Anterior electrophysiological asymmetries, emotion, and depression: conceptual and methodological conundrums. Psychophysiology, 35, 607–14.

- Davidson R.J. (2004). What does the prefrontal cortex “do” in affect: perspectives on frontal EEG asymmetry research. Biological Psychology, 67, 219–34.

- Davidson R.J., Ekman P., Saron C.D., Senulis J.A., Friesen W.V. (1990). Approach-withdrawal and cerebral asymmetry: emotional expression and brain physiology: I. Journal of Personality and Social Psychology, 58, 330.

- Delorme A., Makeig S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134, 9–21.

- Delorme A., Palmer J., Onton J., Oostenveld R., Makeig S. (2012). Independent EEG sources are dipolar. PloS One, 7, e30135.

- Elmer S., Meyer M., Jäncke L. (2012). The spatiotemporal characteristics of elementary audiovisual speech and music processing in musically untrained subjects. International Journal of Psychophysiology, 83, 259–68.

- Elmer S., Rogenmoser L., Kühnis J., Jäncke L. (2015). Bridging the gap between perceptual and cognitive perspectives on absolute pitch. The Journal of Neuroscience, 35, 366–71.

- Fletcher P.C., Frith C.D., Baker S.C., Shallice T., Frackowiak R.S., Dolan R.J. (1995). The mind's eye—precuneus activation in memory-related imagery. Neuroimage, 2, 195–200.

- Foxe J.J., Snyder A.C. (2011). The role of alpha-band brain oscillations as a sensory suppression mechanism during selective attention. Frontiers in Psychology, 2, 154.

- Fu K.M.G., Foxe J.J., Murray M.M., Higgins B.A., Javitt D.C., Schroeder C.E. (2001). Attention-dependent suppression of distracter visual input can be cross-modally cued as indexed by anticipatory parieto–occipital alpha-band oscillations. Cognitive Brain Research, 12, 145–52.

- Goldstein A. (1980). Thrills in response to music and other stimuli. Physiological Psychology, 8, 126–9.

- Gordon E. (1989). Manual for the Advanced Measures of Music Education. Chicago: G.I.A. Publications, Inc.

- Gowensmith W.N., Bloom L.J. (1997). The effects of heavy metal music on arousal and anger. Journal of Music Therapy, 34, 33–45.

- Green A.C., Bærentsen K.B., Stødkilde-Jørgensen H., Wallentin M., Roepstorff A., Vuust P. (2008). Music in minor activates limbic structures: a relationship with dissonance? Neuroreport, 19, 711–5.

- Grewe O., Kopiez R., Altenmüller E. (2009). Chills as an indicator of individual emotional peaks. Annals of the New York Academy of Sciences, 1169, 351–4.

- Grewe O., Nagel F., Kopiez R., Altenmüller E. (2005). How does music arouse “Chills”? Annals of the New York Academy of Sciences, 1060, 446–9.

- Grewe O., Nagel F., Kopiez R., Altenmüller E. (2007). Emotions over time: synchronicity and development of subjective, physiological, and facial affective reactions to music. Emotion, 7, 774.

- Hagemann D., Naumann E., Becker G., Maier S., Bartussek D. (1998). Frontal brain asymmetry and affective style: a conceptual replication. Psychophysiology, 35, 372–88.

- Hagemann D., Waldstein S.R., Thayer J.F. (2003). Central and autonomic nervous system integration in emotion. Brain and Cognition, 52, 79–87.

- Hagen E.H., Bryant G.A. (2003). Music and dance as a coalition signaling system. Human Nature, 14, 21–51.

- Heller W. (1993). Neuropsychological mechanisms of individual differences in emotion, personality, and arousal. Neuropsychology, 7, 476.

- Henriques J.B., Davidson R.J. (1991). Left frontal hypoactivation in depression. Journal of Abnormal Psychology, 100, 535.

- Hernandez L., Hoebel B.G. (1988). Food reward and cocaine increase extracellular dopamine in the nucleus accumbens as measured by microdialysis. Life Sciences, 42, 1705–12.

- Hevner K. (1935). The affective character of the major and minor modes in music. The American Journal of Psychology, 103–18.

- Hevner K. (1937). The affective value of pitch and tempo in music. The American Journal of Psychology, 621–30.

- Holm S. (1979). A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics, 6(2), 65–70.

- Hughlings-Jackson J. (1878). On affections of speech from disease of the brain. Brain, 1, 304–30.

- Jäncke L., Alahmadi N. (2015). Resting state EEG in children with learning disabilities: an independent component analysis approach. Clinical EEG and Neuroscience, 1550059415612622.

- Jäncke L., Kühnis J., Rogenmoser L., Elmer S. (2015). Time course of EEG oscillations during repeated listening of a well-known aria. Frontiers in Human Neuroscience, 9, doi:10.3389/fnhum.2015.00401.

- Jensen O., Mazaheri A. (2010). Shaping functional architecture by oscillatory alpha activity: gating by inhibition. Frontiers in Human Neuroscience, 4, doi:10.3389/fnhum.2010.00186.

- Jung T.P., Makeig S., McKeown M.J., Bell A.J., Lee T.W., Sejnowski T.J. (2001). Imaging brain dynamics using independent component analysis. Proceedings of the IEEE, 89, 1107–22.

- Juslin P.N. (2013). From everyday emotions to aesthetic emotions: towards a unified theory of musical emotions. Physics of Life Reviews, 10, 235–66.

- Juslin P.N., Laukka P. (2003). Communication of emotions in vocal expression and music performance: different channels, same code? Psychological Bulletin, 129, 770.

- Juslin P.N., Laukka P. (2004). Expression, perception, and induction of musical emotions: a review and a questionnaire study of everyday listening. Journal of New Music Research, 33, 217–38.

- Juslin P.N., Liljeström S., Västfjäll D., Barradas G., Silva A. (2008). An experience sampling study of emotional reactions to music: listener, music, and situation. Emotion, 8, 668.

- Jutten C., Herault J. (1991). Blind separation of sources, part I: an adaptive algorithm based on neuromimetic architecture. Signal Processing, 24, 1–10.

- Kawakami A., Furukawa K., Katahira K., Okanoya K. (2013). Sad music induces pleasant emotion. Frontiers in Psychology, 4.

- Kawakami A., Furukawa K., Okanoya K. (2014). Music evokes vicarious emotions in listeners. Frontiers in Psychology, 5, doi:10.3389/fpsyg.2014.00431.

- Khalfa S., Schon D., Anton J.L., Liégeois-Chauvel C. (2005). Brain regions involved in the recognition of happiness and sadness in music. Neuroreport, 16, 1981–4.

- Klimesch W., Sauseng P., Hanslmayr S. (2007). EEG alpha oscillations: the inhibition–timing hypothesis. Brain Research Reviews, 53, 63–88.

- Knutson B., Adams C.M., Fong G.W., Hommer D. (2001). Anticipation of increasing monetary reward selectively recruits nucleus accumbens. Journal of Neuroscience, 21, RC159.

- Koelsch S. (2014). Brain correlates of music-evoked emotions. Nature Reviews Neuroscience, 15, 170–80.

- Koelsch S., Fritz T., Müller K., Friederici A.D. (2006). Investigating emotion with music: an fMRI study. Human Brain Mapping, 27, 239–50.

- Koelsch S., Fritz T., Schlaug G. (2008). Amygdala activity can be modulated by unexpected chord functions during music listening. Neuroreport, 19, 1815–9.

- Koelsch S., Jäncke L. (2015). Music and the heart. European Heart Journal, 36, 3043–9.

- Koelsch S., Skouras S., Fritz T., Herrera P., Bonhage C., Küssner M.B., et al. (2013). The roles of superficial amygdala and auditory cortex in music-evoked fear and joy. Neuroimage, 81, 49–60.

- Komisaruk B.R., Whipple B. (2005). Functional MRI of the brain during orgasm in women. Annual Review of Sex Research, 16, 62–86.

- Lartillot P., Toiviainen P. (2007). A Matlab Toolbox for Musical Feature Extraction From Audio. In: International Conference on Digital Audio Effects (DAFx-07), Bordeaux, France.

- Laufs H., Kleinschmidt A., Beyerle A., Eger E., Salek-Haddadi A., Preibisch C., et al. (2003a). EEG-correlated fMRI of human alpha activity. Neuroimage, 19, 1463–76.

- Laufs H., Krakow K., Sterzer P., Eger E., Beyerle A., Salek-Haddadi A., et al. (2003b). Electroencephalographic signatures of attentional and cognitive default modes in spontaneous brain activity fluctuations at rest. Proceedings of the National Academy of Sciences of the United States of America, 100, 11053–8.

- Lee T.W., Girolami M., Sejnowski T.J. (1999). Independent component analysis using an extended infomax algorithm for mixed subgaussian and supergaussian sources. Neural Computation, 11, 417–41.

- Lehne M., Rohrmeier M., Koelsch S. (2013). Tension-related activity in the orbitofrontal cortex and amygdala: an fMRI study with music. Social Cognitive and Affective Neuroscience, nst141.

- Lemm S., Curio G., Hlushchuk Y., Muller K.R. (2006). Enhancing the signal-to-noise ratio of ICA-based extracted ERPs. IEEE Transactions on Biomedical Engineering, 53, 601–7.

- Lenartowicz A., Delorme A., Walshaw P.D., Cho A.L., Bilder R.M., McGough J.J., et al. (2014). Electroencephalography correlates of spatial working memory deficits in attention-deficit/hyperactivity disorder: vigilance, encoding, and maintenance. The Journal of Neuroscience: the Official Journal of the Society for Neuroscience, 34, 1171–82.

- Liégeois-Chauvel C., Bénar C., Krieg J., Delbé C., Chauvel P., Giusiano B., et al. (2014). How functional coupling between the auditory cortex and the amygdala induces musical emotion: a single case study. Cortex, 60, 82–93.

- Lin Y.P., Duann J.R., Chen J.H., Jung T.P. (2010). Electroencephalographic dynamics of musical emotion perception revealed by independent spectral components. Neuroreport, 21, 410–5.

- Lin Y.P., Duann J.R., Feng W., Chen J.H., Jung T.P. (2014). Revealing spatio-spectral electroencephalographic dynamics of musical mode and tempo perception by independent component analysis. Journal of NeuroEngineering and Rehabilitation, 11, 18.

- Lundqvist L.O., Carlsson F., Hilmersson P., Juslin P. (2008). Emotional responses to music: experience, expression, and physiology. Psychology of Music, 37(1), 61–90.

- Makeig S., Bell A.J., Jung T.P., Sejnowski T.J. (1996). Independent component analysis of electroencephalographic data. Advances in Neural Information Processing Systems, 8, 145–51.

- Makeig S., Debener S., Onton J., Delorme A. (2004). Mining event-related brain dynamics. Trends in Cognitive Sciences, 8, 204–10.

- Makeig S., Enghoff S., Jung T.P., Sejnowski T.J. (2000). A natural basis for efficient brain-actuated control. IEEE Transactions on Rehabilitation Engineering, 8, 208–11.

- Makeig S., Jung T.P., Bell A.J., Ghahremani D., Sejnowski T.J. (1997). Blind separation of auditory event-related brain responses into independent components. Proceedings of the National Academy of Sciences of the United States of America, 94, 10979–84.

- Makeig S., Westerfield M., Jung T.P., Enghoff S., Townsend J., Courchesne E., et al. (2002). Dynamic brain sources of visual evoked responses. Science, 295, 690–4.

- Malinen S., Hlushchuk Y., Hari R. (2007). Towards natural stimulation in fMRI—issues of data analysis. Neuroimage, 35, 131–9.

- Mantini D., Perrucci M.G., Del Gratta C., Romani G.L., Corbetta M. (2007). Electrophysiological signatures of resting state networks in the human brain. Proceedings of the National Academy of Sciences of the United States of America, 104, 13170–5.

- Meadows M.E., Kaplan R.F. (1994). Dissociation of autonomic and subjective responses to emotional slides in right hemisphere damaged patients. Neuropsychologia, 32, 847–56.

- Menon V., Levitin D.J. (2005). The rewards of music listening: response and physiological connectivity of the mesolimbic system. Neuroimage, 28, 175–84.

- Meyer L. (1956). Emotion and Meaning in Music. Chicago: University of Chicago Press.

- Mikutta C., Altorfer A., Strik W., Koenig T. (2012). Emotions, arousal, and frontal alpha rhythm asymmetry during Beethoven’s 5th symphony. Brain Topography, 25, 423–30. [DOI] [PubMed] [Google Scholar]

- Mitterschiffthaler M.T., Fu C.H.Y., Dalton J.A., Andrew C.M., Williams S.C.R. (2007). A functional MRI study of happy and sad affective states induced by classical music. Human Brain Mapping, 28, 1150–62. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mizuno T., Sugishita M. (2007). Neural correlates underlying perception of tonality-related emotional contents. Neuroreport, 18, 1651–5. [DOI] [PubMed] [Google Scholar]

- Molinari M., Leggio M., Thaut M. (2007). The cerebellum and neural networks for rhythmic sensorimotor synchronization in the human brain. The Cerebellum, 6, 18–23. doi:10.1080/14734220601142886. [DOI] [PubMed] [Google Scholar]

- Mullen T., Kothe C., Chi Y.M., Ojeda A., Kerth T., Makeig S, et al. (2013). Real-Time Modeling and 3D Visualization of Source Dynamics and Connectivity Using Wearable EEG. Conference proceedings: Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Conference, 2013, 2184–7, doi:10.1109/EMBC.2013.6609968. [DOI] [PMC free article] [PubMed]

- Oakes T.R., Pizzagalli D.A., Hendrick A.M., Horras K.A., Larson C.L., Abercrombie H.C, et al. (2004). Functional coupling of simultaneous electrical and metabolic activity in the human brain. Human Brain Mapping, 21, 257–70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Omigie D., Dellacherie D., Hasboun D., George N., Clement S., Baulac M, et al. (2014). An intracranial EEG study of the neural dynamics of musical valence processing. Cerebral Cortex, bhu118. [DOI] [PubMed] [Google Scholar]

- Onton J., Delorme A., Makeig S. (2005). Frontal midline EEG dynamics during working memory. Neuroimage, 27, 341–56.

- Onton J., Makeig S. (2006). Information-based modeling of event-related brain dynamics. Progress in Brain Research, 159, 99–120.

- Panksepp J. (1995). The emotional sources of “chills” induced by music. Music Perception, 171–207.

- Paus T. (1999). Functional anatomy of arousal and attention systems in the human brain. Progress in Brain Research, 126, 65–77.

- Peretz I., Gagnon L., Bouchard B. (1998). Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition, 68, 111–41.

- Peretz I., Hébert S. (2000). Toward a biological account of music experience. Brain and Cognition, 42, 131–4.

- Pfaus J.G., Damsma G., Wenkstern D., Fibiger H.C. (1995). Sexual activity increases dopamine transmission in the nucleus accumbens and striatum of female rats. Brain Research, 693, 21–30.

- Phan K.L., Wager T., Taylor S.F., Liberzon I. (2002). Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. Neuroimage, 16, 331–48.

- Pizzagalli D.A., Oakes T.R., Davidson R.J. (2003). Coupling of theta activity and glucose metabolism in the human rostral anterior cingulate cortex: an EEG/PET study of normal and depressed subjects. Psychophysiology, 40, 939–49.

- Platel H., Price C., Baron J.C., Wise R., Lambert J., Frackowiak R.S., et al. (1997). The structural components of music perception. Brain, 120, 229–43.

- Popescu M., Otsuka A., Ioannides A.A. (2004). Dynamics of brain activity in motor and frontal cortical areas during music listening: a magnetoencephalographic study. Neuroimage, 21, 1622–38.

- Rissling A.J., Miyakoshi M., Sugar C.A., Braff D.L., Makeig S., Light G.A. (2014). Cortical substrates and functional correlates of auditory deviance processing deficits in schizophrenia. Neuroimage: Clinical, 6, 424–37.

- Ruiz M.H., Koelsch S., Bhattacharya J. (2009). Decrease in early right alpha band phase synchronization and late gamma band oscillations in processing syntax in music. Human Brain Mapping, 30, 1207–25.

- Sachs M., Damasio A., Habibi A. (2015). The pleasures of sad music: a systematic review. Frontiers in Human Neuroscience, 9. doi:10.3389/fnhum.2015.00404.

- Sadaghiani S., Kleinschmidt A. (2013). Functional interactions between intrinsic brain activity and behavior. Neuroimage, 80, 379–86.

- Salimpoor V.N., Benovoy M., Larcher K., Dagher A., Zatorre R.J. (2011). Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nature Neuroscience, 14, 257–62.

- Salimpoor V.N., van den Bosch I., Kovacevic N., McIntosh A.R., Dagher A., Zatorre R.J. (2013). Interactions between the nucleus accumbens and auditory cortices predict music reward value. Science, 340, 216–9.

- Sammler D., Grigutsch M., Fritz T., Koelsch S. (2007). Music and emotion: electrophysiological correlates of the processing of pleasant and unpleasant music. Psychophysiology, 44, 293–304.

- Schaefer R.S., Vlek R.J., Desain P. (2011). Music perception and imagery in EEG: alpha band effects of task and stimulus. International Journal of Psychophysiology, 82, 254–9.

- Scherer K.R. (2004). Which emotions can be induced by music? What are the underlying mechanisms? And how can we measure them? Journal of New Music Research, 33, 239–51.

- Schmidt L.A., Trainor L.J. (2001). Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions. Cognition and Emotion, 15, 487–500.

- Schmithorst V.J. (2005). Separate cortical networks involved in music perception: preliminary functional MRI evidence for modularity of music processing. Neuroimage, 25, 444–51.

- Schubert E. (1999). Measuring emotion continuously: validity and reliability of the two-dimensional emotion-space. Australian Journal of Psychology, 51, 154–65.

- Silberman E.K., Weingartner H. (1986). Hemispheric lateralization of functions related to emotion. Brain and Cognition, 5, 322–53.

- Sloboda J.A. (1991). Music structure and emotional response: some empirical findings. Psychology of Music, 19, 110–20.

- Sloboda J.A., O'Neill S.A., Ivaldi A. (2001). Functions of music in everyday life: an exploratory study using the experience sampling method. Musicae Scientiae, 5, 9–32.

- Small D.M., Jones-Gotman M., Dagher A. (2003). Feeding-induced dopamine release in dorsal striatum correlates with meal pleasantness ratings in healthy human volunteers. Neuroimage, 19, 1709–15.

- Sridharan D., Levitin D.J., Chafe C.H., Berger J., Menon V. (2007). Neural dynamics of event segmentation in music: converging evidence for dissociable ventral and dorsal networks. Neuron, 55, 521–32.

- Sutton S.K., Davidson R.J. (2000). Prefrontal brain electrical asymmetry predicts the evaluation of affective stimuli. Neuropsychologia, 38, 1723–33.

- Taruffi L., Koelsch S. (2014). The paradox of music-evoked sadness: an online survey. doi:10.1371/journal.pone.0110490.

- Thoma M.V., Ryf S., Mohiyeddini C., Ehlert U., Nater U.M. (2011a). Emotion regulation through listening to music in everyday situations. Cognition and Emotion, 26, 550–60.

- Thoma M.V., Scholz U., Ehlert U., Nater U.M. (2011b). Listening to music and physiological and psychological functioning: the mediating role of emotion regulation and stress reactivity. Psychology and Health, 27, 227–41.

- Tian Y., Ma W., Tian C., Xu P., Yao D. (2013). Brain oscillations and electroencephalography scalp networks during tempo perception. Neuroscience Bulletin, 29, 731–6.

- Trainor L.J., Heinmiller B.M. (1998). The development of evaluative responses to music: infants prefer to listen to consonance over dissonance. Infant Behavior and Development, 21, 77–88.

- Trehub S.E. (2003). The developmental origins of musicality. Nature Neuroscience, 6, 669–73.

- Trost W., Ethofer T., Zentner M., Vuilleumier P. (2012). Mapping aesthetic musical emotions in the brain. Cerebral Cortex, 22, 2769–83.

- Trost W., Frühholz S., Cochrane T., Cojan Y., Vuilleumier P. (2015). Temporal dynamics of musical emotions examined through intersubject synchrony of brain activity. Social Cognitive and Affective Neuroscience, nsv060.

- Tsang C.D., Trainor L.J., Santesso D.L., Tasker S.L., Schmidt L.A. (2001). Frontal EEG responses as a function of affective musical features. Annals of the New York Academy of Sciences, 930, 439–42.

- Vuoskoski J.K., Thompson W.F., McIlwain D., Eerola T. (2012). Who enjoys listening to sad music and why? Music Perception, 29, 311–7.

- Wisniewski M.G., Mercado E., III, Gramann K., Makeig S. (2012). Familiarity with speech affects cortical processing of auditory distance cues and increases acuity. PloS One, 7, e41025.

- Wu J., Zhang J., Ding X., Li R., Zhou C. (2013). The effects of music on brain functional networks: a network analysis. Neuroscience, 250, 49–59.

- Zatorre R. (2005). Music, the food of neuroscience? Nature, 434, 312–5. doi:10.1038/434312a.

- Zatorre R.J., Chen J.L., Penhune V.B. (2007). When the brain plays music: auditory–motor interactions in music perception and production. Nature Reviews Neuroscience, 8, 547–58.

- Zatorre R.J., Salimpoor V.N. (2013). From perception to pleasure: music and its neural substrates. Proceedings of the National Academy of Sciences of the United States of America, 110, 10430–7.

- Zentner M., Grandjean D., Scherer K.R. (2008). Emotions evoked by the sound of music: characterization, classification, and measurement. Emotion, 8, 494.

- Zentner M.R., Kagan J. (1998). Infants' perception of consonance and dissonance in music. Infant Behavior and Development, 21, 483–92.