Abstract

Event-related potentials (ERPs) were recorded in 26 right-handed students while they detected pictures of animals intermixed with pictures of familiar objects, faces and faces-in-things (FITs). The face-specific N170 ERP component over the right hemisphere was larger in response to faces and FITs than to objects. The vertex positive potential (VPP) revealed a sex difference in FIT encoding at frontal sites: in men, FITs elicited a VPP of intermediate amplitude (between those elicited by faces and objects), whereas in women, VPP responses to faces and FITs did not differ, suggesting a marked anthropomorphization of objects in women. Source reconstructions (swLORETA), carried out to estimate the intracortical generators of ERPs in the 150–190 ms time window, showed that in the female brain, FIT perception was associated with the activation of brain areas involved in the affective processing of faces (right STS, BA22; posterior cingulate cortex, BA30; and orbitofrontal cortex, BA10) in addition to regions linked to shape processing (left cuneus, BA18/30). Conversely, in men, the activation of occipito/parietal regions was prevalent, with a considerably smaller activation of BA10. The data suggest that the female brain is more inclined than the male brain to anthropomorphize perfectly real objects.

Keywords: ERPs, perception, face processing, social cognition, sex differences

Introduction

The aim of the study was to compare the neural correlates of time-locked processes involved in the recognition of faces and face patterns as opposed to object stimuli. We were particularly interested in investigating the neural mechanism responsible for the recognition of human resemblances in things (anthropomorphization) and the existence of possible sex differences in this phenomenon.

Sometimes, while observing clouds in the sky, coffee foam or random decorative patterns, we may be struck by the impression of clearly perceiving a face that is so well defined and yet so illusory. This phenomenon is called face pareidolia, i.e. the illusory perception of non-existent faces. How is this process made possible in the brain?

Liu et al. (2014) performed an fMRI study in which participants were shown faces, letters and pure-noise images in which they reported illusorily seeing faces or letters. The right fusiform face area (rFFA) showed a specific response when participants ‘saw’ faces, as opposed to letters, in the pure-noise images, suggesting that the right FFA plays a specific role not only in the processing of real faces but also in illusory face perception. The MEG study by Hadjikhani et al. (2009) investigated the time course of illusory face perception in objects and found that objects incidentally perceived as faces evoked an early M165 activation in the ventral fusiform cortex (corresponding to the FFA); the timing and location of this activation were similar to those evoked by faces, whereas common objects did not evoke such activation. The authors concluded that face perception evoked by face-like objects is a relatively early process and not a late cognitive reinterpretation. Face pareidolia may be so perceptually salient as to trigger face-specific attentional processes. This was shown by Takahashi and Watanabe (2013), who examined whether gaze cueing by face-like objects would produce a shift in spatial attention. A robust cueing effect was observed when face-like objects were perceived as faces, and its magnitude was comparable to that produced by a cartoon face. However, the cueing effect was eliminated when the observer did not perceive the objects as faces.

Face pareidolia seems to be a universal phenomenon, and it has been observed even in autistic individuals who may show a deficit in face processing. Akechi et al. (2014) recorded the N170 event-related potential (ERP) in age-matched autistic (ASD) and control adolescents during the perception of objects and faces-in-objects. The results showed that both the ASD adolescents and the typically developing (TD) adolescents showed highly similar face-likeness ratings. Both groups also showed enhanced face-sensitive N170 amplitudes to face-like objects vs. objects. The authors concluded that both ASD and TD individuals exhibit perceptual and neural sensitivity to face-like features in objects.

Although previous studies of pareidolia were interesting and insightful, they only focused on the perceptual stage of face processing (without considering higher-order social processes that enable conscious representation and stimulus abstraction). Furthermore, they did not specifically investigate the existence of sex differences in this perceptual phenomenon, which concerns the processing of social information. According to the literature (Proverbio et al., 2008; Christov-Moore et al., 2014), the processing of social information may be subject to gender bias. This is of particular relevance because Pavlova et al. (2015a) recently showed a sex difference in the tendency to recognize a face in Arcimboldi-like food-plate images resembling faces. While it seems that women are better at seeing faces where there are none, the neural underpinnings of such a phenomenon have remained unexplored to date.

To shed some light on this matter, we compared the brain processing of perfectly real objects that stimulated face-devoted brain areas due to their face-likeness (also named ‘faces-in-things’, FITs) with that of objects and faces in age-matched male and female university students. We also obtained behavioral data related to face-likeness ratings of the objects as a function of the viewer’s sex.

We did not focus only on the N170 and FFA responses but also quantified the anterior vertex positive potential (VPP) and the frontal N250 component, and we performed source reconstruction to determine the complex neural circuits involved. Indeed, the available literature has identified the N170 and VPP as face-specific ERP responses (Bentin et al., 1996; Joyce & Rossion, 2005; Proverbio et al., 2006), whereas the anterior N250 seems, by contrast, to be an object-sensitive component or, at least, to reflect object processing as opposed to face processing (Proverbio et al., 2007, 2011a; Wheatley et al., 2011). Therefore, these ERP components seem to be the best candidates for indicating whether ‘faces-in-things’ are treated more as objects than as faces (or vice versa) by a given brain.

Materials and methods

Subjects

Twenty-six Italian university students (13 males and 13 females) participated in the present study. The male participants were aged between 20 and 26 years (mean = 23.54, SD = 1.76), with an average of 14 years of schooling. The female participants were aged between 19 and 29 years (mean = 24.08, SD = 2.87), with an average of 14.6 years of schooling.

All had normal or corrected-to-normal vision and reported no history of neurological illness or drug abuse. Handedness was assessed by a laterality preference inventory, while eye dominance was determined by two independent practical tests. The experiments were conducted with the understanding and written consent of each participant and in accordance with ethical standards (Helsinki, 1964) and were approved by the University of Milano-Bicocca ethical committee. The subjects earned academic credits for their participation.

Stimuli

The stimulus set consisted of 390 color pictures, 9.5 × 9.5 cm (250 × 250 pixels) in size, belonging to three distinct semantic classes: 130 faces, 130 objects and 130 faces-in-things (FITs). Examples are shown in Figure 1. Some faces and FITs expressed emotions (e.g. contentedness, rage, fear and bewilderment); emotional expression was matched across the face and FIT classes. The pictures were downloaded from Google Images. The faces were taken from a pre-existing face set used in a recent psychophysiological study (Proverbio et al., 2015). The faces were matched for sex and facial expression across three age classes (children: 13 M, 13 F; adults: 29 M, 27 F; elderly: 25 M, 23 F). The FIT stimuli were carefully matched with similar objects, as reported in Table 1.

Fig. 1.

Examples of stimuli used in the study, as a function of category: faces (upper row), faces-in-things (middle row) and objects (lower row).

Table 1.

Stimuli belonging to the object (N = 130) and FIT (N = 130) classes were carefully matched for semantic category and perceptual characteristics.

| Category | Objects (n) | FITs (n) |
|---|---|---|
| Alarm | 1 | 1 |
| Backpack | 3 | 3 |
| Bell tower | 1 | 1 |
| Belt | 1 | 1 |
| Bin | 1 | 1 |
| Binoculars | 1 | 1 |
| Biscuit | 1 | 1 |
| Boat | 1 | 1 |
| Bolt | 1 | 1 |
| Box | 7 | 7 |
| Burner | 1 | 1 |
| Can | 1 | 1 |
| Cane | 1 | 1 |
| Candle holder | 4 | 4 |
| Canoe | 1 | 1 |
| Cartel | 1 | 1 |
| Chair | 3 | 3 |
| Clamp | 1 | 1 |
| Church | 5 | 5 |
| Coconut | 1 | 1 |
| Coffee | 2 | 2 |
| Coffee machine | 1 | 1 |
| Covers | 2 | 2 |
| Drawer | 1 | 1 |
| Elevator | 1 | 1 |
| Exhaust pipe | 1 | 1 |
| Fan | 2 | 2 |
| Flowerbed | 1 | 1 |
| Gearshift | 1 | 1 |
| Grater | 2 | 2 |
| Guitar | 1 | 1 |
| Hat | 1 | 1 |
| House | 9 | 9 |
| Inflatable raft | 2 | 2 |
| Intercom | 2 | 2 |
| Knife | 1 | 1 |
| Lock | 1 | 1 |
| Log | 2 | 2 |
| Manhole | 1 | 1 |
| Microscope | 1 | 1 |
| Motorcycle | 1 | 1 |
| Mouse | 1 | 1 |
| Oil | 3 | 3 |
| Onion | 1 | 1 |
| Opener | 1 | 1 |
| Oven | 2 | 2 |
| Paper napkins | 1 | 1 |
| Parking meter | 1 | 1 |
| Pepper | 4 | 4 |
| Pasta | 2 | 2 |
| Plug | 3 | 3 |
| Pole | 3 | 3 |
| Postbox | 2 | 2 |
| Pot | 1 | 1 |
| Printer | 1 | 1 |
| Purse | 2 | 2 |
| Radio | 3 | 3 |
| Rake | 1 | 1 |
| Salt | 1 | 1 |
| Sink | 5 | 5 |
| Steering wheel | 1 | 1 |
| Suitcase | 2 | 2 |
| Switch | 2 | 2 |
| Tank | 1 | 1 |
| Thermometer | 1 | 1 |
| Toy | 2 | 2 |
| Tractor | 1 | 1 |
| Typewriter | 1 | 1 |
| USB | 3 | 3 |
| Wheel | 3 | 3 |
| Window cleaner | 1 | 1 |
| Wine | 1 | 1 |

To validate the FIT pictures as eliciting a strong illusion of a face, a larger initial sample of 400 pictures (200 FITs and 200 objects) was created and presented to a group of judges for evaluation. The judges were 10 university students or scholars (5 men, mean age 33.5 years; 5 women, mean age 28.6 years). They were asked to rate each object and FIT stimulus (presented randomly intermixed in a PowerPoint presentation) for face-likeness on a 3-point scale (2 = ‘object with a face’, 1 = ‘object with a weak face resemblance’, 0 = ‘object without a face’).

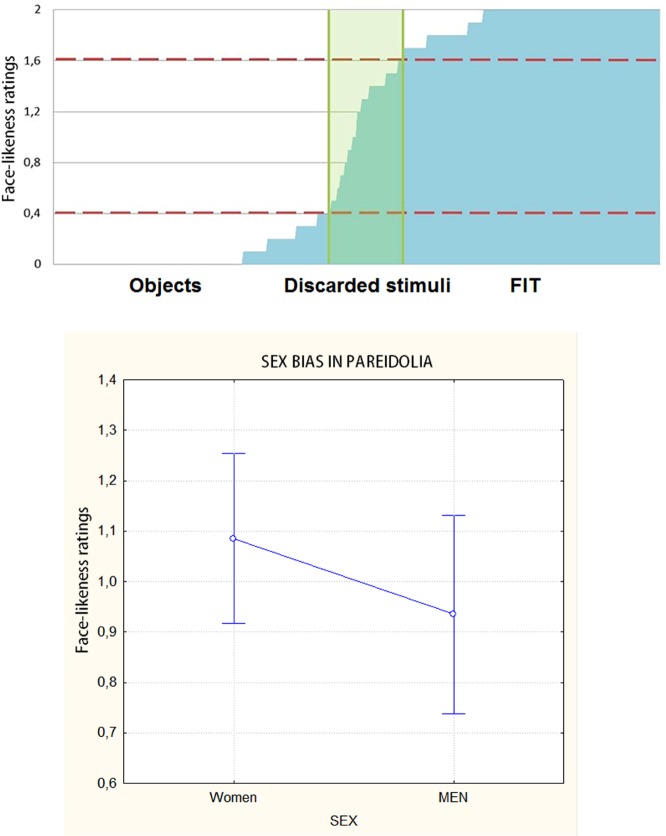

Pictures with a mean score between 0 and 0.4 were classified as standard objects, all stimuli with a mean score between 0.5 and 1.5 were discarded (Figure 2, top), and objects scoring between 1.6 and 2 were included in the FIT category. Based on this selection, 130 objects and 130 FITs were included in the final set of stimuli. A repeated-measures ANOVA performed on the validation scores produced by the male vs female judges showed a strong sex effect [F(1, 399) = 72.853, P < 0.00001], with women attributing higher face-likeness scores to objects (regardless of category) than men (women: 1.1, SE = 0.08; men: 0.935, SE = 0.1; Figure 2, bottom).
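The selection rule above can be expressed as a small classifier. The sketch below assumes that every picture was rated by all 10 judges with integer scores of 0, 1 or 2, so that mean scores are multiples of 0.1 and the three bands (0–0.4, 0.5–1.5, 1.6–2) cover every possible mean; the function name `classify_picture` is hypothetical. It does not model how the final 130 items per class were chosen from those that passed.

```python
from typing import Sequence

N_JUDGES = 10  # assumption: every picture was rated by all 10 judges


def classify_picture(ratings: Sequence[int]) -> str:
    """Assign one picture to 'object', 'FIT' or 'discard' from its ratings.

    Each rating is 0 (no face), 1 (weak face resemblance) or 2 (face).
    The rule is applied to the rating sum (0-20) rather than the mean, so
    a mean of 0.4 is a sum of 4 and a mean of 1.6 is a sum of 16; this
    avoids floating-point comparisons at the cut-offs.
    """
    if len(ratings) != N_JUDGES or any(r not in (0, 1, 2) for r in ratings):
        raise ValueError("expected one 0/1/2 rating per judge")
    total = sum(ratings)
    if total <= 4:      # mean 0.0-0.4: kept as a standard object
        return "object"
    if total >= 16:     # mean 1.6-2.0: kept as a face-in-thing
        return "FIT"
    return "discard"    # mean 0.5-1.5: ambiguous, excluded


if __name__ == "__main__":
    print(classify_picture([0] * 8 + [1] * 2))  # mean 0.2 -> object
    print(classify_picture([2] * 7 + [1] * 3))  # mean 1.7 -> FIT
    print(classify_picture([1] * 10))           # mean 1.0 -> discard
```

Working with integer sums is a design choice of this sketch; averaging first and comparing floats against 0.4 or 1.6 would give the same result only if rounding is handled carefully.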

Fig. 2.

(Top) Face-likeness ratings (along with standard deviations) obtained for a set of 400 pictures of real objects. Validation was performed to select the 130 objects most likely (FITs) and least likely (objects) to be perceived as faces. (Bottom) Validation data showing a clear bias toward face-likeness in women compared to men (0 = non-face; 2 = face).

Stimuli were equiluminant across classes, as determined through an ANOVA carried out on individual luminance levels measured via a Minolta luminance meter [F(2, 288) = 0.505, P = 0.6]. Mean luminance levels were as follows: faces = 16.78 FL; objects = 16.66 FL; and FITs = 16.0 FL. Forty-five additional pictures depicting familiar animals (250 × 250 pixels in size) were added as rare targets to be responded to.

Task and procedure

The participants were comfortably seated in a darkened test area that was acoustically and electrically shielded. A high-resolution screen was placed 85 cm in front of their eyes. The subjects were instructed to gaze at the center of the screen (where a small blue circle served as a fixation point) and to avoid any eye or body movements during the recording session. The stimuli were presented for 1000 ms in random order at the center of the screen (ISI = 800 ± 200 ms, random) in eight different, randomly mixed short runs, each lasting approximately 2 min. To keep the participants focused on the visual stimuli, the task consisted of responding as accurately and quickly as possible to photos depicting animals by pressing a response key with the index finger of the left or right hand; all other photos were to be viewed but ignored. The left and right hands were used alternately throughout the recording session, and the order of the hand and task conditions was counterbalanced across subjects. For each experimental run, the number of target stimuli varied between 3 and 7 (frequency of 12%), and the presentation order differed among subjects. The beginning of each trial was preceded by three visually presented warning signals (Attention! Set! Go!). The experimental session was preceded by two novel sequences for training.
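The run structure described above (randomly ordered pictures, rare animal targets, 1000 ms presentation and a jittered ISI) can be sketched as follows. This is a hypothetical illustration, not the presentation software actually used: it assumes the ‘800 ± 200 ms’ ISI is drawn uniformly in whole milliseconds from 600 to 1000 ms, and the stimulus identifiers and the names `Trial` and `build_run` are invented for the example.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Trial:
    stimulus: str     # picture identifier (hypothetical naming)
    is_target: bool   # True for animal pictures (the only ones requiring a response)
    duration_ms: int  # stimulus presentation time
    isi_ms: int       # blank interval before the next stimulus


def build_run(nontargets: Sequence[str], targets: Sequence[str],
              rng: random.Random, duration_ms: int = 1000,
              isi_mean_ms: int = 800, isi_jitter_ms: int = 200) -> List[Trial]:
    """Shuffle targets among non-targets and draw a jittered ISI per trial.

    Assumes the '800 +/- 200 ms' ISI is uniform (whole ms) over 600-1000 ms;
    the original distribution is not stated in the text.
    """
    items = [(s, False) for s in nontargets] + [(s, True) for s in targets]
    rng.shuffle(items)
    return [
        Trial(stim, is_target, duration_ms,
              rng.randint(isi_mean_ms - isi_jitter_ms, isi_mean_ms + isi_jitter_ms))
        for stim, is_target in items
    ]


if __name__ == "__main__":
    rng = random.Random(42)
    n_targets = rng.randint(3, 7)  # 3-7 animal targets per run, as reported
    run = build_run([f"picture_{i:03d}" for i in range(49)],
                    [f"animal_{i:02d}" for i in range(n_targets)], rng)
    print(len(run), sum(t.is_target for t in run))
```

With roughly 54 pictures per run and about 1.8 s per trial, a run lasts on the order of 100 s, which is consistent with the reported run length of approximately 2 min.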

EEG recordings

The EEG was continuously recorded from 128 scalp sites according to the 10–5 international system at a sampling rate of 512 Hz. Horizontal and vertical eye movements were also recorded. Averaged ears served as the reference. The EEG and electro-oculogram (EOG) were amplified with a half-amplitude band-pass of 0.016–70 Hz. Electrode impedance was kept below 5 kΩ. EEG epochs were synchronized with the onset of stimulus presentation and analyzed with ANT-EEProbe software. Computerized artifact rejection was performed before averaging to discard epochs in which eye movements, blinks, excessive muscle potentials or amplifier blocking occurred. EEG epochs associated with an incorrect behavioral response were also excluded. The artifact rejection criterion was a peak-to-peak amplitude exceeding 50 µV, and the rejection rate was ∼5%. The ERPs were averaged off-line from 100 ms before to 1000 ms after stimulus onset.
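The epoching, peak-to-peak rejection and averaging steps above can be illustrated with NumPy. This is a minimal sketch, not the ANT-EEProbe pipeline: it assumes the continuous data are already in microvolts, that onsets are given as sample indices for correctly answered trials only, that the pre-stimulus interval is used for baseline correction, and that the 50 µV criterion is applied to every channel; `epoch_and_average` is a hypothetical name.

```python
import numpy as np

FS_HZ = 512  # sampling rate reported above


def epoch_and_average(eeg_uv, onsets, fs=FS_HZ, tmin_s=-0.1, tmax_s=1.0,
                      reject_ptp_uv=50.0):
    """Cut stimulus-locked epochs, reject artifacts and average.

    eeg_uv : array (n_channels, n_samples) in microvolts.
    onsets : sample indices of stimulus onset (correct trials only).
    tmin_s must be negative so that a pre-stimulus baseline exists.
    Returns (erp, n_kept): erp has shape (n_channels, n_times).
    """
    start = int(round(tmin_s * fs))  # -51 samples at 512 Hz (about -100 ms)
    stop = int(round(tmax_s * fs))   # 512 samples (1000 ms)
    kept = []
    for onset in onsets:
        first, last = onset + start, onset + stop + 1
        if first < 0 or last > eeg_uv.shape[1]:
            continue  # epoch would extend beyond the recording
        epoch = eeg_uv[:, first:last]
        # Baseline correction: subtract the mean of the pre-stimulus samples.
        epoch = epoch - epoch[:, :-start].mean(axis=1, keepdims=True)
        # Reject the whole epoch if any channel exceeds the peak-to-peak limit.
        if np.ptp(epoch, axis=1).max() > reject_ptp_uv:
            continue
        kept.append(epoch)
    if not kept:
        raise ValueError("all epochs were rejected")
    return np.mean(kept, axis=0), len(kept)
```

Because the rejection criterion is peak-to-peak, it is unaffected by the baseline subtraction; the order of these two steps in the original software is not stated.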

Topographical voltage maps of the ERPs were made by plotting color-coded isopotentials obtained by interpolating voltage values between scalp electrodes at specific latencies. Low Resolution Electromagnetic Tomography (LORETA; Pascual-Marqui et al., 1994) was performed on the ERP difference waves of interest at various latencies using ASA4 software. LORETA, a discrete linear solution to the EEG inverse problem, estimates the 3D distribution of neuronal electrical activity that has maximum similarity (i.e. maximum synchronization), in terms of orientation and strength, between neighboring neuronal populations (represented by adjacent voxels). The source space properties were grid spacing = 5 mm and estimated SNR = 3. In this study, an improved version of standardized LORETA (sLORETA) was used; this version, swLORETA, incorporates a singular value decomposition-based lead field weighting.
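To make the idea of a discrete linear inverse solution concrete, the sketch below computes a plain regularized L2 minimum-norm estimate, J = Lᵀ(LLᵀ + λI)⁻¹V. It is explicitly not swLORETA: it omits the Laplacian smoothness constraint of LORETA, the standardization of sLORETA and the SVD-based lead field weighting of swLORETA. The regularization heuristic λ = trace(LLᵀ)/n_sensors/SNR² is an assumption of this sketch, and the lead field used in any test is random rather than a real head model.

```python
import numpy as np


def minimum_norm_estimate(leadfield, v, snr=3.0):
    """Regularized L2 minimum-norm source estimate (not swLORETA itself).

    leadfield : (n_sensors, n_sources) forward matrix L.
    v         : (n_sensors,) or (n_sensors, n_times) scalp potentials.
    Solves J = L^T (L L^T + lam * I)^-1 v with the assumed heuristic
    lam = trace(L L^T) / n_sensors / snr**2.
    """
    gram = leadfield @ leadfield.T
    n_sensors = gram.shape[0]
    lam = np.trace(gram) / n_sensors / snr ** 2
    # Solving the linear system is more stable than forming an explicit inverse.
    return leadfield.T @ np.linalg.solve(gram + lam * np.eye(n_sensors), v)
```

A lower assumed SNR gives a larger λ and therefore a smoother, lower-amplitude source estimate; as the SNR grows, the estimate approaches the exact minimum-norm solution that reproduces the scalp data.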

Data analysis

The peak amplitude and peak latency of the posterior N170, anterior VPP and frontal N250 were measured when and where the ERP components reached their maximum amplitude with respect to the baseline voltage. The occipito/temporal N170 was quantified at posterior sites (P9, P10, TPP9h and TPP10h) within the 160–190 ms time window. The frontal VPP was quantified at anterior sites (AFF1, AFF2, FC1 and FC2) within the 150–190 ms time window. The N250 was quantified at anterior sites (AF3, AF4, Fp1 and Fp2) within the 200–250 ms time window.
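The peak measurement described above can be sketched as a search for the most negative (N170, N250) or most positive (VPP) sample within each component's time window. The sketch assumes a baseline-corrected averaged waveform for a single electrode and a matching vector of sample latencies in milliseconds; averaging over the electrode clusters listed above is not shown, and `peak_in_window` and `WINDOWS_MS` are hypothetical names.

```python
import numpy as np

# Windows and polarities taken from the text above.
WINDOWS_MS = {
    "N170": (160.0, 190.0, "neg"),
    "VPP": (150.0, 190.0, "pos"),
    "N250": (200.0, 250.0, "neg"),
}


def peak_in_window(erp_uv, times_ms, component):
    """Return (peak amplitude in µV, peak latency in ms) for one waveform.

    erp_uv   : baseline-corrected averaged waveform for one electrode.
    times_ms : latency of each sample relative to stimulus onset.
    The most negative (N170, N250) or most positive (VPP) sample in the
    component's window is taken as the peak.
    """
    t0, t1, polarity = WINDOWS_MS[component]
    mask = (times_ms >= t0) & (times_ms <= t1)
    if not mask.any():
        raise ValueError("no samples fall inside the window")
    segment, seg_times = erp_uv[mask], times_ms[mask]
    idx = np.argmin(segment) if polarity == "neg" else np.argmax(segment)
    return float(segment[idx]), float(seg_times[idx])
```

At 512 Hz, successive samples are about 1.95 ms apart, so peak latencies obtained this way are quantized to roughly 2 ms steps.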

The ERP data were subjected to multifactorial repeated-measures ANOVA. The between-groups factor was ‘sex’ (male and female); the within-group factors were ‘stimulus type’ (faces, objects and FITs), ‘electrode’ (2 levels per hemisphere, depending on the ERP of interest: P9/P10 vs TPP9h/TPP10h for N170 amplitude and latency; AFF1/AFF2 vs FC1/FC2 for VPP amplitude and latency; AF3/AF4 vs Fp1/Fp2 for N250 amplitude and latency) and ‘hemisphere’ (left and right). Multiple comparisons of means were performed with post hoc Tukey tests.
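The core of this design, one between-subject factor (sex) crossed with one within-subject factor (stimulus type), can be illustrated with a split-plot ANOVA computed directly from sums of squares. This is a simplified sketch, not the full analysis: it assumes the electrode and hemisphere factors have already been averaged out, that the two groups are equal in size (13 vs 13), and it applies no sphericity correction or Tukey tests. With 26 subjects and 3 stimulus types it yields the F(2,48) degrees of freedom reported in the Results. `split_plot_anova` is a hypothetical name; p-values could be obtained from the F distribution (e.g. `scipy.stats.f.sf`), which is omitted here.

```python
import numpy as np


def split_plot_anova(data, groups):
    """Two-way mixed ANOVA: one between-subject factor, one within factor.

    data   : (n_subjects, n_conditions) array, e.g. peak amplitude per
             stimulus type (faces, objects, FITs), already averaged over
             electrodes and hemispheres (a simplification).
    groups : (n_subjects,) labels of the between-subject factor (sex).
    Assumes equal group sizes. Returns F ratios and their (df, df_error).
    """
    data = np.asarray(data, dtype=float)
    groups = np.asarray(groups)
    n_subj, n_cond = data.shape
    labels = np.unique(groups)
    sizes = {int((groups == g).sum()) for g in labels}
    if len(sizes) != 1:
        raise ValueError("this sketch assumes equally sized groups")

    grand = data.mean()
    cond_means = data.mean(axis=0)
    # Within-subject variability, partitioned into condition, interaction and error.
    ss_within = ((data - data.mean(axis=1, keepdims=True)) ** 2).sum()
    ss_cond = n_subj * ((cond_means - grand) ** 2).sum()
    ss_inter = 0.0
    for g in labels:
        sub = data[groups == g]
        cell_means = sub.mean(axis=0)
        ss_inter += len(sub) * ((cell_means - sub.mean() - cond_means + grand) ** 2).sum()
    ss_error = ss_within - ss_cond - ss_inter

    df_cond = n_cond - 1
    df_inter = (len(labels) - 1) * (n_cond - 1)
    df_error = (n_subj - len(labels)) * (n_cond - 1)
    ms_error = ss_error / df_error
    return {
        "F_stim": (ss_cond / df_cond) / ms_error,
        "df_stim": (df_cond, df_error),
        "F_stim_x_sex": (ss_inter / df_inter) / ms_error,
        "df_stim_x_sex": (df_inter, df_error),
    }
```

The error term here is the stimulus type × subjects-within-sex interaction, which is why both the main effect and the stimulus type × sex interaction share the same error degrees of freedom.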

Results

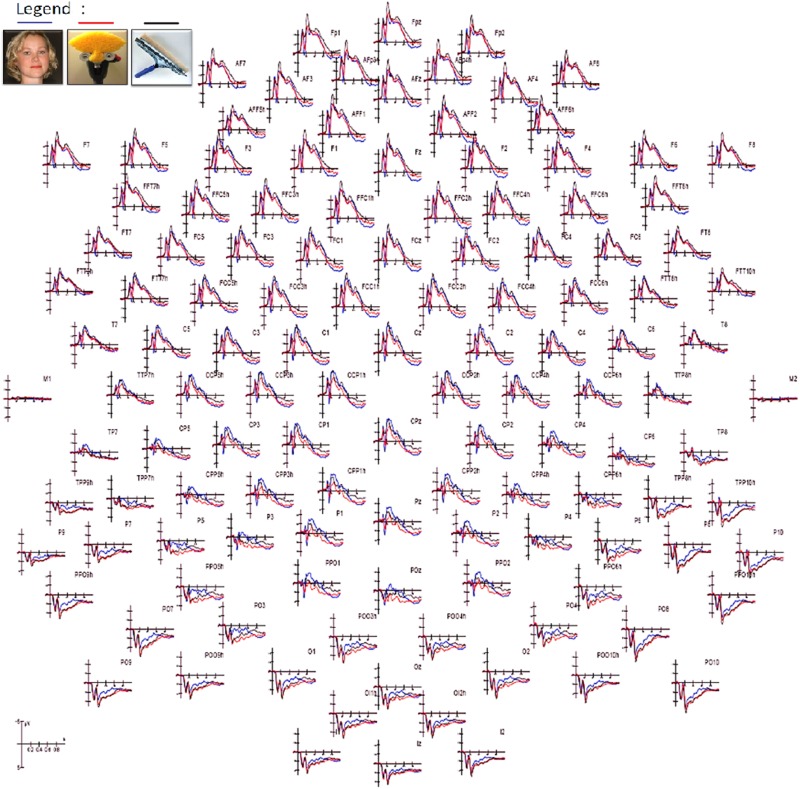

The mean response time to targets (pictures of animals) was 539.4 ms in women and 538.7 ms in men, with no hand or sex differences. Figure 3 shows grand-average waveforms recorded as a function of stimulus type. Several early and late latency differential effects are visible at posterior and anterior sites. This paper focuses on the analysis of brain activity recorded in the 100–250 ms time window.

Fig. 3.

Grand-average ERPs recorded from all scalp sites as a function of stimulus category.

N170 (160–190 ms)

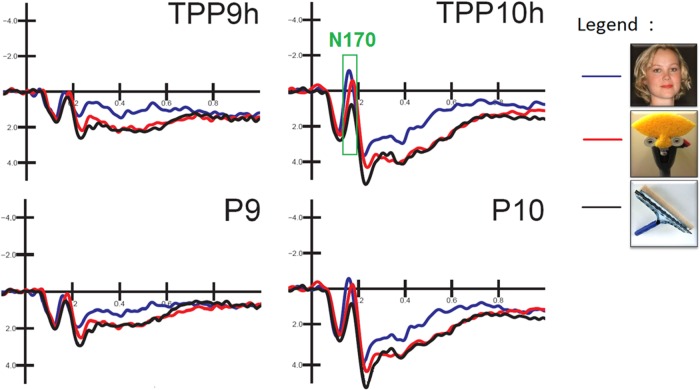

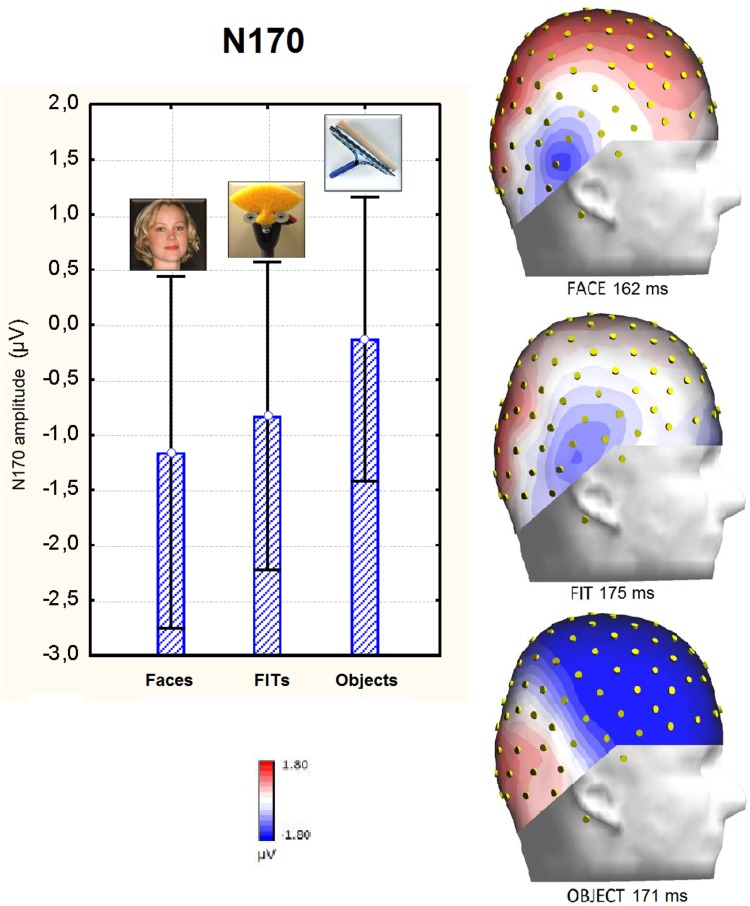

The ANOVA performed on the peak latency of the N170 measured at occipito/temporal sites (P9–P10–TPP9h–TPP10h) showed a significant effect of stimulus type [F(2,48) = 10.22, P < 0.001]. Post hoc comparisons provided evidence of a significantly earlier N170 response to faces than to FITs (P < 0.001) or objects (P < 0.04) and to objects than to FITs (P < 0.03), as shown in Figure 4. In detail, the N170 peaked 9 ms earlier to faces than to FITs (faces = 166 ms, SE = 0.003; FITs = 175 ms, SE = 0.002; objects = 170 ms, SE = 0.002). The ANOVA performed on peak amplitude yielded a significant effect of stimulus type [F(2,48) = 12.32, P < 0.01], with larger N170s to faces (−1.16 μV, SE = 0.39) than to objects (−0.13 μV, SE = 0.32; P < 0.01) and to FITs (−0.83 μV, SE = 0.34) than to objects (P < 0.01). Post hoc comparisons showed no difference between the N170s elicited by FITs and faces, as displayed in Figure 5. The factor electrode also had a significant effect [F(1,24) = 5.69, P < 0.05], with larger negativities over the posterior temporal sites TPP9h–TPP10h (−0.87 μV, SE = 0.35) than over the occipito/temporal sites P9–P10 (−0.55 μV, SE = 0.33). The significant stimulus type × hemisphere interaction [F(2,48) = 7.60, P < 0.01] and the relative post hoc comparisons indicated that stimulus type discrimination was focused over the right hemisphere (visible in the waveforms of Figure 4), where the face-related N170 (−1.74 μV, SE = 0.53) was larger (P < 0.001) than that elicited by objects (0.02 μV, SE = 0.42), which was in turn smaller (P < 0.002) than that elicited by FITs (−1.10 μV, SE = 0.43).

Fig. 4.

Close-up of ERP waveforms recorded at left and right posterior temporal sites as a function of stimulus category. The N170 response over the right hemisphere was larger to faces and faces-in-things.

Fig. 5.

Mean amplitude of the N170 response recorded as a function of stimulus type, and its scalp distribution. Isocolor topographical maps show the N170 surface voltage distribution over the right hemisphere.

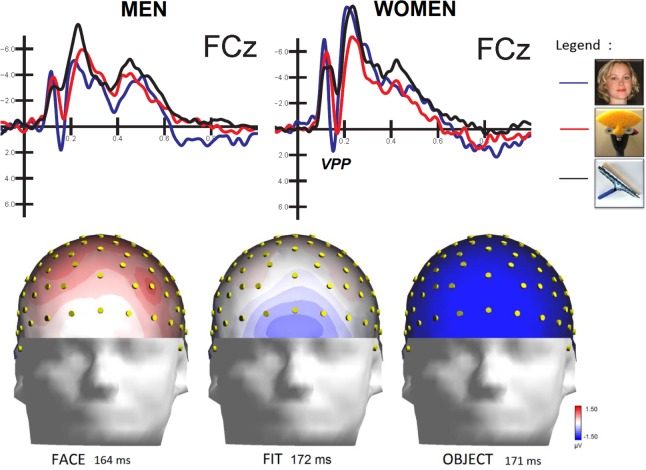

VPP (150–190 ms)

The ANOVA carried out on VPP latency at the AFF1–AFF2–FC1–FC2 electrode sites showed a significant effect of stimulus type [F(2,48) = 8.82, P < 0.001], with earlier peaks to faces (163 ms, SE = 0.002) than to FITs (172 ms, SE = 0.002) and objects (170 ms, SE = 0.003).

The analysis of VPP amplitude also showed a significant effect of stimulus type [F(2,48) = 47.59, P < 0.01]. Post hoc tests showed a significant difference between the VPPs elicited by faces and objects (P < 0.01), by FITs and objects (P < 0.01) and by faces and FITs (P < 0.05). Overall, the VPPs elicited by faces (1.57 μV, SE = 0.63) were of greater amplitude than those elicited by FITs (0.67 μV, SE = 0.58) or objects (−1.96 μV, SE = 0.59), as visible in the waveforms of Figure 6.

Fig. 6.

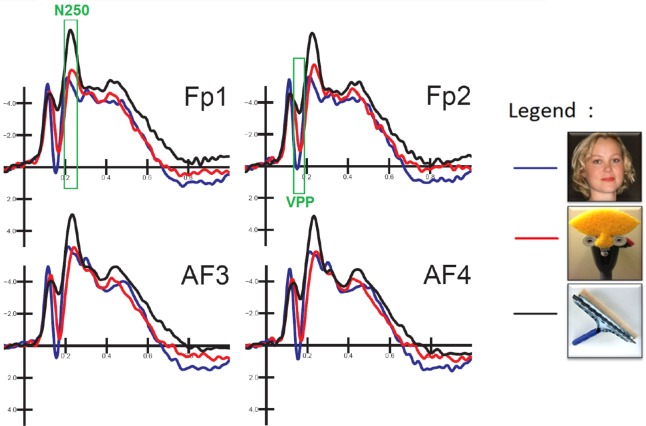

Close-up of ERP waveforms recorded at left and right prefrontal sites as a function of stimulus category. Overall, the VPP response was larger to faces than to objects, while the N250 was larger to objects than to faces or FITs.

However, the significant stimulus type × sex interaction [F(2,48) = 4.59, P < 0.01] revealed differential processing of FITs in men and women. In detail, post hoc comparisons showed that in men, VPPs to faces (2.58 μV, SE = 0.90) were larger (P < 0.001) than those to FITs (0.62 μV, SE = 0.82) and objects (−1.86 μV, SE = 0.83; P < 0.001), whereas in women, VPPs to faces (0.57 μV, SE = 0.90) and FITs (0.72 μV, SE = 0.82) did not differ in magnitude. In women, VPPs to both faces and FITs were nonetheless much larger (P < 0.001) than those to objects (−2.07 μV, SE = 0.83). Figure 7 shows the grand-average ERP waveforms recorded at midline frontocentral sites in the two sexes and clearly illustrates this sex difference: a strong face-specific response to FIT patterns was found in female but not in male participants. The electrode factor was also significant [F(1,24) = 7.64, P < 0.01], with larger positivities at frontocentral (FC1–FC2 = 0.27 μV, SE = 0.53) than at anterior frontal (AFF1–AFF2 = −0.09 μV, SE = 0.60) sites.

Fig. 7.

Sex difference in the amplitude of the VPP component recorded at midline frontocentral sites. Isocolor topographical maps show the VPP surface voltage distribution (top view) recorded regardless of the sex of the viewer. The number indicates the peak latency of the component.

N250 (200–250 ms)

The analysis of N250 latency at anterior sites (AF3–AF4–Fp1–Fp2) yielded an effect of stimulus type [F(2,48) = 4.51, P < 0.01], with earlier responses to faces (233 ms, SE = 0.007) than to objects (236 ms, SE = 0.005) or FITs (242 ms, SE = 0.005). Furthermore, the N250 at prefrontal sites peaked earlier in females (225.6 ms, SE = 0.003) than in males (232 ms, SE = 0.003) [F(1,24) = 4.31, P < 0.05]. The ANOVA performed on N250 amplitude showed a significant effect of stimulus type [F(2,48) = 9.02, P < 0.001], with larger negativities (P < 0.005) to objects (−8.45 μV, SE = 0.82) than to faces (−6.97 μV, SE = 0.69) or FITs (−6.53 μV, SE = 0.74), with no difference between the latter two (see waveforms in Figure 6).

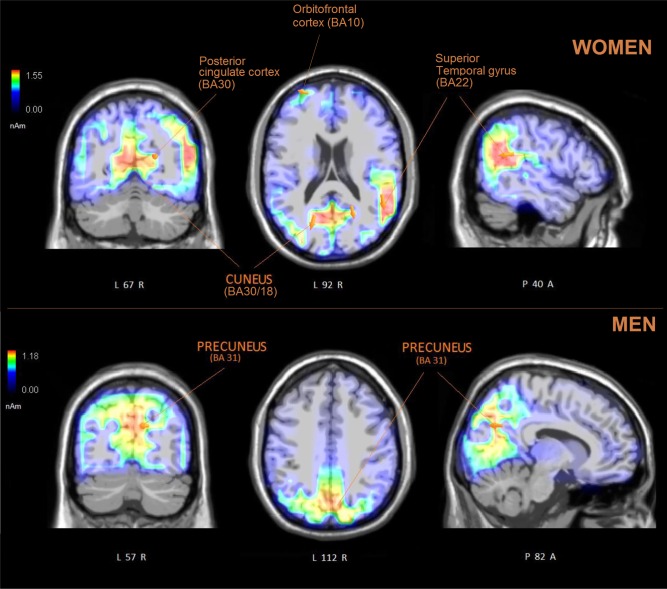

Source reconstruction

To identify the intracranial neural generators associated with FIT processing at the N170/VPP level, two swLORETA solutions were computed on the brain responses recorded between 150 and 190 ms in the two groups of participants (females vs males). Table 2 shows the active electromagnetic dipoles explaining the surface voltage that reflects the neural processing of FIT patterns. A strong difference between the sexes can be appreciated in the number, strength and localization of sources. In the female brain, FIT perception was associated with the activation of areas devoted to affective facial information, such as the right STS (BA22), the posterior cingulate cortex (BA30) and the orbitofrontal cortex (BA10), in addition to regions devoted to object shape processing (left cuneus, BA18/30). Conversely, in the male brain, the activation of occipito/parietal regions was prevalent, with a considerably smaller activation of BA10 (4.98 nAm in men vs 13.2 nAm in women). The swLORETA solutions for the two sexes are displayed in Figure 8.

Table 2.

Talairach coordinates (in mm) corresponding to the intracranial generators explaining the surface voltage recorded during the 150–190 ms time window during the processing of FIT patterns in the two sexes

| Magn. | T-x [mm] | T-y [mm] | T-z [mm] | Hem. | Lobe | Gyrus | BA |
|---|---|---|---|---|---|---|---|
| Women | | | | | | | |
| 17.1 | 50.8 | −48.7 | 15.3 | R | T | Superior Temporal (STS) | 22 |
| 16.0 | −18.5 | −69 | 13.6 | L | O | Cuneus | 30 |
| 15.9 | −18.5 | −90.3 | 20.8 | L | O | Cuneus | 18 |
| 14.7 | 21.2 | −58.9 | 14.5 | R | Limbic | Posterior Cingulate | 30 |
| 13.2 | −28.5 | 53.4 | 24.8 | L | F | Superior Frontal | 10 |
| 11.6 | −8.5 | 57.3 | −9 | L | F | Superior Frontal | 10 |
| Men | | | | | | | |
| 11.8 | 11.3 | −70 | 22.5 | R | P | Precuneus | 31 |
| 4.98 | 31 | 55.3 | 7 | R | F | Superior Frontal | 10 |

Magn. = magnitude in nAm; Hem. = hemisphere (L = left, R = right); Lobe: T = temporal, O = occipital, F = frontal, P = parietal; BA = Brodmann area. See the text for a discussion of studies related to brain functions.

Fig. 8.

Coronal, axial and sagittal views of active sources during the processing of FIT patterns (150–190 ms) in women and men. The different colors represent differences in the magnitude of the electromagnetic signal (in nAm). The electromagnetic dipoles are shown as arrows and indicate the position, orientation and magnitude of the dipole modeling solution applied to the ERP waveform within the specific time window. The numbers refer to the displayed brain slice in sagittal view: A = anterior, P = posterior. The images highlight the strong activation of social and affective areas (STS and cingulate and orbitofrontal cortices) in female participants, suggesting that they are more inclined to anthropomorphize objects.

Discussion

We compared the functional and temporal correlates of the neural processing of objects containing illusory faces with those of faces and non-face objects in the two sexes. The N170 response to faces was earlier than that to FITs and objects. The ERP data also showed a modulation of the occipito/temporal N170 as a function of stimulus type, with the largest peaks elicited by face stimuli. This finding strongly agrees with the available literature, according to which the N170 to faces is typically of greater amplitude than that to objects (Bentin et al., 1996; Carmel & Bentin, 2002; Proverbio et al., 2011b). Although the N170 to FITs tended to be of intermediate amplitude, the difference between the N170s to faces and FITs did not reach significance, consistent with previous electrophysiological (MEG) findings on face pareidolia (Hadjikhani et al., 2009). No sex difference was observed at the N170/FFA level, which agrees with previous studies (Hadjikhani et al., 2009; Takahashi & Watanabe, 2013; Akechi et al., 2014; Liu et al., 2014).

Conversely, the anterior VPP amplitude showed sexual dimorphism in face-likeness sensitivity: this response was larger to faces than to FITs in men, whereas in women, the anterior VPPs to faces and FITs were of equal amplitude. This finding can be interpreted in light of other evidence in the literature regarding women's greater interest in social information, and it is consistent with the behavioral data obtained in the validation session, which demonstrated a sex difference in face-likeness ratings. Although the judges in the validation session were not the same individuals who took part in the EEG recording session, they belonged to the same statistical cohort, all being fellow students at the same Faculty of the University of Milano-Bicocca. Overall, compared to men, women were significantly more inclined to perceive faces in photographs of perfectly real objects. In particular, the sex difference in face sensitivity fits with electrophysiological evidence (Proverbio et al., 2008) of greater electrocortical responsivity to faces and people than to inanimate displays in females. In that study, an enhanced frontal N2 component to persons was found in women; this finding was interpreted as an index of greater interest in, or attention to, this class of biologically relevant signals. The stronger responsivity to social stimuli in women may reflect a privileged automatic processing of images depicting the faces of conspecifics in the female brain (Pavlova et al., 2015a, b). Consistent with this hypothesis, numerous studies have demonstrated that women have a greater ability to decipher emotions from facial expressions (Proverbio et al., 2006; Hall et al., 2010; Thompson & Voyer, 2014).
Further evidence has demonstrated that women, as opposed to men, react more strongly to the sounds of infant crying and laughter (Seifritz et al., 2003; Sander et al., 2007) and have stronger emotional reactions upon viewing affective stimuli (such as IAPS pictures) involving human beings, suggesting a more general sex difference in empathy (Klein et al., 2003; Gard & Kring, 2007; Proverbio et al., 2009; Christov-Moore et al., 2014). In a very interesting study, Pavlova et al. (2015a) recently investigated face pareidolia by presenting healthy adult females and males with a set of food-plate images resembling faces (Arcimboldi style). In a spontaneous recognition task, the women not only recognized the images as a face more readily (they reported resemblance to a face for images that the males did not) but also gave more face responses overall.

Overall, the present data provide direct evidence of a sex difference in face pareidolia, as demonstrated by the lack of a difference in face-specific VPP responses to faces and FITs in women and a significant difference in men. Furthermore, a sex difference in the number, strength and localization of sources for the surface potentials recorded in response to FITs between 150 and 190 ms was observed. In detail, only in the women were brain regions involved in the affective processing of social information identified (right STG, pCC, left orbitofrontal cortex) during the processing of objects with illusory faces that were otherwise perfectly canonical and standard.

In men, posteriorly, the right precuneus, which is involved in the structural encoding of the stimulus and in visuospatial processing (Fink et al., 1997; Nagahama et al., 1999), was activated, which suggests a lack of processing of the socio-affective content of the incoming visual input in the male brain. Anteriorly, the perception of faces-in-things activated the orbitofrontal cortex (BA10), but the signal strength was considerably smaller than in women (4.98 nAm in men vs. 13.2 nAm in women). The orbitofrontal cortex is known to play a major role in different aspects of social cognition. It is less well known that it contains face-responsive neurons, which were described by Thorpe et al. (1983) and Rolls et al. (2006). These neurons are involved in the processing of facial expressions, especially anger (Hornak et al., 1996), but also of gestures and movement (Kringelbach & Rolls, 2004; Rolls & Grabenhorst, 2008). They are also very responsive to facial attractiveness (O’Doherty et al., 2003; Pegors et al., 2015). It may be assumed that this brain region abstractly represents the presence of a living entity with a face and feelings (Rolls et al., 2006).

Clear evidence has recently been provided that the orbitofrontal cortex (OFC) is deeply involved in the affective processing of faces (Xiu et al., 2015) and that it modulates the connectivity between the amygdala and hippocampus during the retrieval of emotional stimuli (Smith et al., 2006), besides being implicated in the representation of affective value and reward. Moreover, much evidence shows that the OFC is involved in the brain response to infant faces, or ‘baby schema’ response (Kringelbach et al., 2008; Glocker et al., 2009; Proverbio et al., 2011b; Luo et al., 2015). The OFC is also thought to be engaged in cognitive processes that integrate affective information with task-related information, and it has been proposed to provide top-down control signals that enforce category distinctions (such as facial expressions) in the STS (Said et al., 2010). It should be noted that, in the present study, decision-making processes did not directly involve a face/object discrimination, since both stimulus types were task-irrelevant and had to be ignored. Therefore, the ambiguity of FITs (i.e. being neither exactly a face nor exactly an object) was irrelevant to task performance. Moreover, objects and faces were easily distinguishable from animals, a semantic distinction that occurs at an early sensory stage, as documented by ERP studies (Zani & Proverbio, 2012). The rapid and automatic recognition of animals is also documented by the fast mean reaction times to targets (about 539 ms). We therefore favor the hypothesis that the OFC processes the affective value of FITs as animated entities, consistent with other studies on the role of the OFC in assessing the affective value of faces (Said et al., 2010, 2011).

The role of the STS, and especially the right STS, in coding facial emotional expressions has been clearly established in the neuroimaging literature (Puce et al., 1998; Lahnakoski et al., 2012; Baseler et al., 2014; Candidi et al., 2015; Flack et al., 2015). The fact that the rSTS was activated primarily in women (and not in men) during the perception of faces-in-things suggests a clear anthropomorphizing process, leading to the recognition of a face-endowed entity and the automatic extraction of affective information to represent its mental and emotional state at the perceptual level. In addition to social and affect-related brain areas, an occipital generator in the cuneus (BA18) was also found in the women. Occipital visual cortex, including the cuneus and the lateral occipital cortex, is presumably involved in the processing of object shape, as revealed by neuroimaging data (Stilla & Sathian, 2008; Lee Masson et al., 2015) and by clinical data from patients with visual agnosia (Ptak et al., 2014).

Overall, the present data also showed an anatomical and functional dissociation between the face-related N170 and the VPP, indicating that the two components do not necessarily reflect the intracortical activity of the same neural generators, contrary to what some authors have proposed (Joyce & Rossion, 2005). In the same vein, Jeffreys (1989) proposed that the VPP did not arise in face-selective areas of the occipito-temporal region (i.e. the FFA) but in the limbic system, a proposal that is partly consistent with the present source reconstruction data. It may therefore be speculated that the VPP originates in the limbic system rather than in generators located in face-selective regions such as the FFA. This could be linked to studies showing that emotion-related responses in the FFA partly result from feedback signals from the amygdala, which encodes the social and motivational meaning of the face, boosting attention and perceptual analysis (Vuilleumier et al., 2004; Pessoa, 2008; Todorov & Engell, 2008; Adolphs, 2010). Thus, the VPP might reflect the encoding of face relevance/salience, whereas the N170 (FFA) might sustain the perceptual encoding of face stimuli, as insightfully suggested by one of the reviewers of this paper.

As for the later ERP components, the data showed larger N250 responses to objects than to faces at anterior sites, a pattern that has been reported before. For example, Proverbio et al. (2007) found a larger anterior N2 (200–260 ms) to images of man-made artifacts than to images of animals. In another ERP investigation comparing the neural processing of pictures of technological objects with that of human faces of various ages and sexes, a much larger frontocentral negativity to objects than to faces was found in the 400–500 ms time window (Proverbio et al., 2011b). Similarly, Wheatley et al. (2011), contrasting the neural processing of human faces with that of dolls and objects, found a much larger anterior N250 to objects than to faces (of both humans and dolls). It may be hypothesized that the N250 indexes a prefrontal stimulus encoding stage (Cansino et al., 2002) and therefore reflects a higher workload and greater processing demands for encoding objects, which belonged to many different semantic categories (e.g. buildings, clothes, tools, vehicles, furniture, food, etc.), as opposed to faces, which were already clearly detected as such at the N170 and VPP level. However, this hypothesis is rather speculative and deserves further investigation.

In conclusion, the present data provide direct evidence of a sex difference in face pareidolia, with the female brain being more engaged in affective and social processes during the perception of objects (FIT stimuli). As suggested by Leibo et al. (2011), the visual cortex separates face processing from object processing, so that faces are automatically processed in ways that are inapplicable to objects (e.g. gaze detection, gender detection and facial expression coding). However, the present data revealed a sexual dimorphism: this dichotomy was much stricter in men than in women, which we attribute to an anthropomorphizing bias in the female brain.

Funding

This work was supported by the University of Milano-Bicocca 2014FAR grant.

Conflict of interest. None declared.

Acknowledgements

We wish to express our gratitude to Andrea Orlandi, Evelina Bianchi and Ezia Rizzi for their technical support and to Alberto Zani for his valuable advice.

References

- Adolphs R. (2010). What does the amygdala contribute to social cognition? Annals of the New York Academy of Sciences, 1191, 42–61.

- Akechi H., Kikuchi Y., Tojo Y., Osanai H., Hasegawa T. (2014). Neural and behavioural responses to face-likeness of objects in adolescents with autism spectrum disorder. Scientific Reports, 4, 3874.

- Baseler H.A., Harris R.J., Young A.W., Andrews T.J. (2014). Neural responses to expression and gaze in the posterior superior temporal sulcus interact with facial identity. Cerebral Cortex, 24(3), 737–44.

- Bentin S., McCarthy G., Perez E., Puce A., Allison T. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8, 551–65.

- Candidi M., Stienen B.M., Aglioti S.M., de Gelder B. (2015). Virtual lesion of right posterior superior temporal sulcus modulates conscious visual perception of fearful expressions in faces and bodies. Cortex, 65, 184–94.

- Cansino S., Maquet P., Dolan R.J., Rugg M.D. (2002). Brain activity underlying encoding and retrieval of source memory. Cerebral Cortex, 12(10), 1048–56.

- Carmel D., Bentin S. (2002). Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition, 83, 1–29.

- Christov-Moore L., Simpson E.A., Coudé G., Grigaityte K., Iacoboni M., Ferrari P.F. (2014). Empathy: gender effects in brain and behavior. Neuroscience and Biobehavioral Reviews, 46(4), 604–27.

- Fink G.R., Halligan P.W., Marshall J.C., Frith C.D., Frackowiak R.S.J., Dolan R.J. (1997). Neural mechanisms involved in the processing of global and local aspects of hierarchically organized visual stimuli. Brain, 120, 1779–91.

- Flack T., Andrews T.J., Hymers M., et al. (2015). Responses in the right posterior superior temporal sulcus show a feature-based response to facial expression. Cortex, 69, 14–23.

- Gard M.G., Kring A.M. (2007). Sex differences in the time course of emotion. Emotion, 7(2), 429–37.

- Glocker M.L., Langleben D.D., Ruparel K., et al. (2009). Baby schema modulates the brain reward system in nulliparous women. Proceedings of the National Academy of Sciences of the United States of America, 106, 9115–9.

- Hadjikhani N., Kveraga K., Naik P., Ahlfors S.P. (2009). Early (N170) activation of face-specific cortex by face-like objects. Neuroreport, 20(4), 403–7.

- Hall J.K., Hutton S.B., Morgan M.J. (2010). Sex differences in scanning faces: does attention to the eyes explain female superiority in facial expression recognition? Cognition and Emotion, 24, 629–37.

- Hornak J., Rolls E.T., Wade D. (1996). Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia, 34, 247–61.

- Jeffreys D.A. (1989). A face-responsive potential recorded from the human scalp. Experimental Brain Research, 78, 193–202.

- Joyce C.A., Rossion B. (2005). The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clinical Neurophysiology, 116, 2613–31.

- Klein S., Smolka M.N., Wrase J., et al. (2003). The influence of gender and emotional valence of visual cues on fMRI activation in humans. Pharmacopsychiatry, 36(Suppl. 3), S191–4.

- Kringelbach M.L., Rolls E.T. (2004). The functional neuroanatomy of the human orbitofrontal cortex: evidence from neuroimaging and neuropsychology. Progress in Neurobiology, 72, 341–72.

- Kringelbach M.L., Lehtonen A., Squire S., et al. (2008). A specific and rapid neural signature for parental instinct. PLoS One, 3, e1664.

- Lahnakoski J.M., Glerean E., Salmi J., et al. (2012). Naturalistic FMRI mapping reveals superior temporal sulcus as the hub for the distributed brain network for social perception. Frontiers in Human Neuroscience, 6, 233.

- Lee Masson H., Bulthé J., Op de Beeck H.P., Wallraven C. (2015). Visual and haptic shape processing in the human brain: unisensory processing, multisensory convergence, and top-down influences. Cerebral Cortex, 28, 70.

- Leibo J.Z., Mutch J., Poggio T. (2011). Why the brain separates face recognition from object recognition. Advances in Neural Information Processing Systems 24 (NIPS 2011), 711–9.

- Liu J., Li J., Feng L., Li L., Tian J., Lee K. (2014). Seeing Jesus in toast: neural and behavioral correlates of face pareidolia. Cortex, 53, 60–77.

- Luo L., Ma X., Zheng X., et al. (2015). Neural systems and hormones mediating attraction to infant and child faces. Frontiers in Psychology, 6, 970.

- Nagahama Y., Okada T., Katsumi Y., et al. (1999). Transient neural activity in the medial superior frontal gyrus and precuneus time locked with attention shift between object features. Neuroimage, 10, 193–9.

- O'Doherty J., Winston J., Critchley H., Perrett D., Burt D.M., Dolan R.J. (2003). Beauty in a smile: the role of medial orbitofrontal cortex in facial attractiveness. Neuropsychologia, 41(2), 147–55.

- Pascual-Marqui R.D., Michel C.M., Lehmann D. (1994). Low resolution electromagnetic tomography: a new method for localizing electrical activity in the brain. International Journal of Psychophysiology, 18(1), 49–65.

- Pavlova M.A., Scheffler K., Sokolov A.N. (2015a). Face-n-food: gender differences in tuning to faces. PLoS One, 10(7), e0130363.

- Pavlova M.A., Sokolov A.N., Bidet-Ildei C. (2015b). Sex differences in the neuromagnetic cortical response to biological motion. Cerebral Cortex, 25(10), 3468–74.

- Pegors T.K., Kable J.W., Chatterjee A., Epstein R.A. (2015). Common and unique representations in pFC for face and place attractiveness. Journal of Cognitive Neuroscience, 27(5), 959–73.

- Pessoa L. (2008). On the relationship between emotion and cognition. Nature Reviews Neuroscience, 9, 148–58.

- Proverbio A.M., Brignone V., Matarazzo S., Del Zotto M., Zani A. (2006). Gender and parental status affect the visual cortical response to infant facial expression. Neuropsychologia, 44(14), 2987–99.

- Proverbio A.M., Del Zotto M., Zani A. (2007). The emergence of semantic categorization in early visual processing: ERP indices of animal vs. artifact recognition. BMC Neuroscience, 8, 24.

- Proverbio A.M., Zani A., Adorni R. (2008). Neural markers of a greater female responsiveness to social stimuli. BMC Neuroscience, 9, 56.

- Proverbio A.M., Adorni R., Zani A., Trestianu L. (2009). Sex differences in the brain response to affective scenes with or without humans. Neuropsychologia, 47(12), 2374–88.

- Proverbio A.M., Adorni R., D'Aniello G.E. (2011a). 250 ms to code for action affordance during observation of manipulable objects. Neuropsychologia, 49(9), 2711–7.

- Proverbio A.M., Riva F., Zani A., Martin E. (2011b). Is it a baby? Perceived age affects brain processing of faces differently in women and men. Journal of Cognitive Neuroscience, 23(11), 3197–208.

- Proverbio A.M., Lozano Nasi V., Arcari L.A., et al. (2015). The effect of background music on episodic memory and autonomic responses: listening to emotionally touching music enhances facial memory capacity. Scientific Reports, 5, 15219.

- Ptak R., Lazeyras F., Di Pietro M., Schnider A., Simon S.R. (2014). Visual object agnosia is associated with a breakdown of object-selective responses in the lateral occipital cortex. Neuropsychologia, 60, 10–20.

- Puce A., Allison T., Bentin S., Gore J.C., McCarthy G. (1998). Temporal cortex activation in humans viewing eye and mouth movements. Journal of Neuroscience, 18(6), 2188–99.

- Rolls E.T., Critchley H.D., Browning A.S., Inoue K. (2006). Face-selective and auditory neurons in the primate orbitofrontal cortex. Experimental Brain Research, 170, 74–87.

- Rolls E.T., Grabenhorst F. (2008). The orbitofrontal cortex and beyond: from affect to decision-making. Progress in Neurobiology, 86(3), 216–44.

- Said C.P., Moore C.D., Engell A.D., Todorov A., Haxby J.V. (2010). Distributed representations of dynamic facial expressions in the superior temporal sulcus. Journal of Vision, 10(5), 11.

- Said C.P., Haxby J.V., Todorov A. (2011). Brain systems for assessing the affective value of faces. Philosophical Transactions of the Royal Society B: Biological Sciences, 366(1571), 1660–70.

- Sander K., Frome Y., Scheich H. (2007). FMRI activations of amygdala, cingulate cortex, and auditory cortex by infant laughing and crying. Human Brain Mapping, 28, 1007–22.

- Seifritz E., Esposito F., Neuhoff J.G., et al. (2003). Differential sex-independent amygdala response to infant crying and laughing in parents versus nonparents. Biological Psychiatry, 54, 1367.

- Smith A.P., Stephan K.E., Rugg M.D., Dolan R.J. (2006). Task and content modulate amygdala-hippocampal connectivity in emotional retrieval. Neuron, 49(4), 631–8.

- Stilla R., Sathian K. (2008). Selective visuo-haptic processing of shape and texture. Human Brain Mapping, 29(10), 1123–38.

- Takahashi K., Watanabe K. (2013). Gaze cueing by pareidolia faces. i-Perception, 4(8), 490–2.

- Thompson A.E., Voyer D. (2014). Sex differences in the ability to recognize non-verbal displays of emotion: a meta-analysis. Cognition and Emotion, 28(7), 1164–95.

- Thorpe S.J., Rolls E.T., Maddison S. (1983). Neuronal activity in the orbitofrontal cortex of the behaving monkey. Experimental Brain Research, 49, 93–115.

- Todorov A., Engell A.D. (2008). The role of the amygdala in implicit evaluation of emotionally neutral faces. Social Cognitive and Affective Neuroscience, 3, 303–12.

- Vuilleumier P., Richardson M.P., Armony J.L., Driver J., Dolan R.J. (2004). Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience, 7, 1271–8.

- Wheatley T., Weinberg A., Looser C., Moran T., Hajcak G. (2011). Mind perception: real but not artificial faces sustain neural activity beyond the N170/VPP. PLoS One, 6(3), e17960.

- Xiu D., Geiger M.J., Klaver P. (2015). Emotional face expression modulates occipital-frontal effective connectivity during memory formation in a bottom-up fashion. Frontiers in Behavioral Neuroscience, 9, 90.

- Zani A., Proverbio A.M. (2012). Is that a belt or a snake? Object attentional selection affects the early stages of visual sensory processing. Behavioral and Brain Functions, 8, 6.