Abstract

For most animals, survival depends on rapid detection of rewarding objects, but searching for an object surrounded by many others is known to be difficult and time consuming. However, there is neuronal evidence in primates for robust and rapid differentiation of objects based on their reward history (Hikosaka, Kim, Yasuda, & Yamamoto, 2014). We hypothesized that such robust coding should support efficient search for high-value objects, similar to a pop-out mechanism. To test this hypothesis, we let subjects (n = 4, macaque monkeys) view a large number of complex objects with consistently biased rewards over variable training durations (1, 5, or 30+ days). Following training, subjects searched for a high-value object (Good) among a variable number of low-value objects (Bad). Consistent with our hypothesis, we found that Good objects were accurately and quickly targeted, often by a single, direct saccade with a very short latency (<200 ms). The dependence of search time on display size decreased significantly with longer reward training, giving rise to more efficient search (from 40 ms/item to 16 ms/item). This object-finding skill showed a large capacity for value-biased objects and was maintained in long-term memory with no interference from reward learning with other objects. Such an object-finding skill, and in particular its large capacity and long-term retention, would be crucial for maximizing rewards and biological fitness throughout life, in which many objects are experienced continuously and/or intermittently.

Keywords: object search, reward, long-term memory, skill

Introduction

Surrounded by many objects, animals often need to quickly find valuable objects, such as food. In humans and monkeys, object identification is best done at the fovea and degrades in the periphery (Low, 1951; Rentschler & Treutwein, 1985; Strasburger, Rentschler, & Juettner, 2011). Due to these perceptual and/or attentional limitations (Xu & Chun, 2009), search for a target object can be difficult (Wolfe & Bennett, 1997) and may require multiple shifts of gaze (saccades) (Motter & Belky, 1998; Zelinsky & Sheinberg, 1997). Yet, based on some recent findings, we speculated that such difficulty may be overcome by certain ecological experiences. Studies from our laboratory suggest that the caudal part of the basal ganglia, which is involved in oculomotor and attentional control, responds differentially to high- and low-valued objects (Kim & Hikosaka, 2013; Yamamoto, Kim, & Hikosaka, 2013; Yasuda, Yamamoto, & Hikosaka, 2012). In particular, substantia nigra pars reticulata (SNr) neurons projecting to the superior colliculus automatically and rapidly (<200 ms) discriminate stably high- and low-valued objects (Yasuda et al., 2012). These neurons retain long-term memories of object values (>100 days) with a high capacity (>300 experienced objects). Behaviorally, monkeys exhibit a strong gaze bias toward objects with a memory of high reward when freely viewing multiple objects (Ghazizadeh, Griggs, & Hikosaka, 2016; Yasuda, Yamamoto, & Hikosaka, 2012). These findings are consistent with studies in human subjects showing that previously reward-associated objects can automatically capture attention (Anderson, Laurent, & Yantis, 2011; Chelazzi, Perlato, Santandrea, & Della Libera, 2012; Theeuwes & Belopolsky, 2012).

These results suggest that repeated reward experience should enable primates to efficiently locate Good objects when searching for reward. However, this hypothesis has not been directly tested, because in the previous studies, the subjects did not look for objects based on reward experience. Therefore, the following critical questions remain: How quickly and accurately do attention and/or gaze reach a Good object during search? How does reward history affect search efficiency when one is confronted with increasing numbers of distractors? To answer these questions, we used a visual search task. Instead of focusing on the effects of visual features, we examined how reward history of objects controls object search.

Materials and methods

General procedures

Four adult rhesus monkeys (Macaca mulatta) were used for the experiments (monkeys B, R, and D, male; monkey U, female). All animal care and experimental procedures were approved by the National Eye Institute Animal Care and Use Committee, complied with the Public Health Service Policy on Humane Care and Use of Laboratory Animals, and adhered to the ARVO Statement for the Use of Animals in Ophthalmic and Vision Research. Before training in the tasks, monkeys were implanted with a head-post for head fixation and with scleral search coils to monitor eye movements.

Stimuli

We created visual stimuli using fractal geometry (Miyashita, Higuchi, Sakai, & Masui, 1991; Yamamoto, Monosov, Yasuda, & Hikosaka, 2012). Each fractal was composed of four point-symmetrical polygons overlaid around a common center, with smaller polygons positioned more toward the front. The parameters that determined each polygon (size, edges, color, etc.) were chosen randomly. Fractals measured ∼8° × 8° on average but ranged from 5° to 10°. Each monkey saw three groups of 72 fractals that received 1, 5, and 30+ days of reward training (1-day, 5-day, and 30+ day training groups, respectively; Figure 1A). The order of training groups was randomized across monkeys.

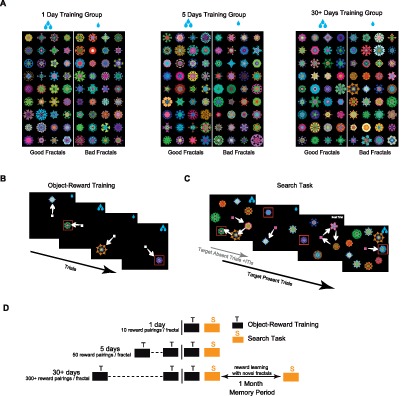

Figure 1.

Training and testing procedures. (A) Fractal objects for monkey R (n = 216), each of which was consistently associated with a large reward (Good objects) or a small reward (Bad objects). (B) Object-reward training: A large or small reward was delivered after the monkey made a saccade to a fractal object presented in one of eight peripheral directions (15°). (C) Search task (test): A large or small reward was delivered after the monkey chose one of multiple fractals. A choice was registered by gaze lasting longer than 400 ms, so the monkey could make multiple saccades with shorter gaze durations. If no Good fractal was found, the monkey could return to the center to proceed to the next trial. The number of simultaneously presented fractals varied across trials (15° eccentricity, display size = 3, 5, 7, 9). In half of the trials, exactly one of the fractals was a Good object; in the other half, no Good object was present (not shown). In (B) and (C), the red square indicates the Good object (not shown to the monkey). (D) Each monkey viewed three separate groups of reward-biased fractals (Figure 1A) with different training amounts (1, 5, or 30+ days; 72 fractals/group), followed on the last day (vertical line) by the search task (Figure 1C). Fractals in the “30+ days” group were tested again after 1 month (Mem in subsequent figures). During this time, monkeys never saw the 30+ day fractals but were engaged in object-reward training with a new set of fractals. Monkeys also saw 72 fractals with choice trials during training and another 72 fractals in the perceptual group (Figure 7), not shown in (A).

Additionally, each monkey saw another group of 48 fractals associated with large or small rewards (>5 days) during the 1-month memory period (see Figure 1D). This group was not used in the search task; it was included to ensure that the long-term memory results held despite novel reward learning experiences. Another group of 72 fractals was trained with four days of equal medium reward followed by one day of biased reward (perceptual training group) to test for effects of stimulus familiarity on the search task. A separate group of 72 fractals was used to test the monkeys' value learning after one day of training (see Object-reward training task, choice trials). No choice trials were used during training of the fractals used in the search task. Overall, each monkey saw a total of 408 fractals during this study.

Behavioral procedures

Behavioral tasks were controlled by custom-made C++-based software, “Blip” (www.simonhong.org). Data acquisition and output control were performed using a National Instruments NI-PCIe 6353 (National Instruments Corporation, Austin, TX). The monkeys sat in a primate chair with their heads fixed, facing a screen 30 cm in front of them. Stimuli generated by an active-matrix liquid crystal display projector (PJL3211, ViewSonic, Brea, CA) were rear-projected onto the screen. Diluted apple juice (33% for monkeys B and D; 66% for monkeys R and U) was used as reward. Reward amounts were either small (0.08 mL for monkeys B and D; 0.1 mL for monkeys R and U) or large (0.21 mL for monkeys B and D; 0.35 mL for monkeys R and U). For the perceptual group, the reward during unbiased reward training was the average of each monkey's small and large rewards (medium reward). Eye position was sampled at 1 kHz.

The behavioral procedure consisted of two phases: training (object-reward training task) and testing (search task). Monkeys were trained with separate groups of fractals that differed in the number of reward training sessions (see Stimuli). After the last training session, each search session tested 24 fractals from a group. With 72 fractals per group, this resulted in three search sessions per group per animal (12 sessions per training group across all four animals).

Object-reward training task

We used an object-directed saccade task to train object-value associations (Figure 1B). Each training session used a set of eight fractals (four Good/four Bad). After central fixation on a white dot, one object appeared at one of eight peripheral locations (eccentricity 15°). After an overlap period of 400 ms, the fixation dot disappeared and the animal was required to make a saccade to the fractal. After the animal had fixated the fractal for 500 ± 100 ms, a large or small reward was delivered (biased reward training). For the perceptual group, an equal medium reward was delivered for all fractals during unbiased reward training (first four days), and large and small rewards were delivered on the fifth day. To equate perceptual exposure between Good and Bad fractals, the displayed fractal was turned off only after the time required for large reward delivery had elapsed. This initiated an inter-trial interval (ITI) of 1 to 1.5 s with a blank screen. Each training session consisted of 80 trials, with each object presented 10 times in pseudo-random order. Errors (breaking fixation or making a premature saccade to the fractal) were signaled by an error tone and were infrequent (<7% of trials). A correct tone was played at the end of each correct trial.
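The structure of one training session can be sketched as follows. This is a minimal illustration, not the lab's “Blip” code: the plain shuffle standing in for pseudo-random ordering, the uniform draw of direction on each trial, and the function name `make_training_session` are all assumptions.

```python
import math
import random

ECCENTRICITY_DEG = 15.0    # target eccentricity (from the Methods)
N_DIRECTIONS = 8           # eight possible peripheral directions
REPEATS_PER_OBJECT = 10    # each object is shown 10 times per session


def make_training_session(good_ids, bad_ids, seed=None):
    """Hypothetical schedule for one object-reward training session.

    Assumption: 'pseudo-random' order is approximated by a plain shuffle,
    and each trial's direction is drawn uniformly from the eight options.
    """
    rng = random.Random(seed)
    objects = [(obj, "large") for obj in good_ids] + [(obj, "small") for obj in bad_ids]
    trials = objects * REPEATS_PER_OBJECT  # 8 objects x 10 repeats = 80 trials
    rng.shuffle(trials)

    schedule = []
    for obj, reward in trials:
        direction = rng.randrange(N_DIRECTIONS)
        angle = 2 * math.pi * direction / N_DIRECTIONS
        schedule.append({
            "object": obj,
            "reward": reward,  # consistently biased: Good -> large, Bad -> small
            "x_deg": ECCENTRICITY_DEG * math.cos(angle),
            "y_deg": ECCENTRICITY_DEG * math.sin(angle),
        })
    return schedule


session = make_training_session(["G1", "G2", "G3", "G4"], ["B1", "B2", "B3", "B4"], seed=1)
print(len(session))  # 80
```

Because reward size is tied to object identity rather than to location, every Good object is always paired with the large reward, which is the consistency the task relies on.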

The same task structure, with added choice trials, was used to test the monkeys' knowledge of object values after 1 day of training, using fractals different from those of the 1-day group. In choice trials, two fractals (one Good and one Bad) were presented at diametrically opposite locations, and the monkey chose one of them by making a saccade to it after the overlap period. Each monkey was trained for one day with each new set of eight fractals (nine sessions in total, 72 fractals per monkey). There were 16 choice trials (one randomly selected out of every five trials) in each 80-trial training session. Choice performance in the second half of each session (last eight choices) was averaged and used as an estimate of the monkey's knowledge of object values following one day of training.
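The sketch below reads “one randomly selected out of every five trials” as one random trial within each consecutive block of five, which yields exactly 16 choice trials in 80; that reading, and the helper names, are assumptions. It then computes the one-day value-knowledge estimate from the last eight choices.

```python
import random

TRIALS_PER_SESSION = 80
BLOCK_LENGTH = 5  # one choice trial per five trials -> 16 per session


def choice_trial_indices(n_trials=TRIALS_PER_SESSION, block=BLOCK_LENGTH, seed=None):
    """Pick one trial at random from each consecutive block of `block` trials."""
    rng = random.Random(seed)
    return [start + rng.randrange(block) for start in range(0, n_trials, block)]


def value_knowledge(chose_good):
    """Fraction of Good choices in the second half of the choice trials.

    `chose_good` lists choice outcomes in trial order (True = Good chosen).
    With 16 choice trials, this averages the last eight.
    """
    second_half = chose_good[len(chose_good) // 2:]
    return sum(second_half) / len(second_half)


indices = choice_trial_indices(seed=0)
print(len(indices))  # 16
```

Restricting the estimate to the second half discounts early choices made before any reward pairing had been experienced for a given fractal.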

Search task procedure

The search task consisted of target-present and target-absent trials intermixed with equal probability. A single Good object was present in target-present trials, whereas all objects were Bad in target-absent trials (not analyzed in this study). The task started with the appearance of a purple fixation dot (Figure 1C). After 400 ms of fixation, a display with 3, 5, 7, or 9 fractals was turned on and the fixation point was turned off. Fractals were arranged equidistant from each other on an imaginary circle of 15° radius. The location of the first fractal (arbitrary) was uniformly distributed around this circle. Fractals shown in a trial were chosen pseudo-randomly from a set of 24 (12 Good/12 Bad). If gaze left the fixation window (5°), the animal had up to 3 s to choose an object or reject the trial. If it did not find a Good object, the animal could reject a trial either by staying at the center (for 600 ms) or by returning to the center and staying there for 300 ms (the fixation dot turned back on once gaze left fixation). Rejection led to quick progression to the next trial (after 400 ms), which had a 50% chance of containing a Good object. The animal received a small reward after two to four consecutive rejections. This small reward guarantee was included to reward the animal for rejecting multiple target-absent trials in a row and to discourage selection of Bad fractals. The animal was free to make multiple saccades to objects before committing to one by fixating it for at least 400 ms (committing time). After committing, the animal was required to continue looking for another 100 ms (total gaze duration: 500 ms), after which the display was turned off and the reward corresponding to the chosen object was delivered. Reward delivery was followed by an ITI of 1–1.5 s. A session of the search task consisted of 240 correct trials. Errors included fixation breaks before display onset (not used in analysis) and fixation breaks after committing to an object (used in analysis). Animals almost never failed to reach a decision (detect or reject) within the 3-s window (0.08% of trials). Before being tested with the current fractals, all subjects had extensive training in the search task with fractals not used in this study.
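The display geometry described above can be sketched as follows: objects equally spaced on a 15° circle with a uniformly drawn starting angle, and either one Good plus Bad objects or only Bad objects. Uniform random sampling here stands in for the unspecified pseudo-random selection, and `make_search_display` is a hypothetical helper, not the task software.

```python
import math
import random

RADIUS_DEG = 15.0             # fractals lie on a 15-degree radius circle
DISPLAY_SIZES = (3, 5, 7, 9)


def make_search_display(good_set, bad_set, display_size, target_present, rng):
    """Hypothetical layout of one search display.

    Objects are equally spaced on a circle whose starting angle is drawn
    uniformly; uniform random sampling stands in for the unspecified
    pseudo-random selection from the 24-fractal set.
    """
    start = rng.uniform(0.0, 2 * math.pi)
    positions = []
    for i in range(display_size):
        angle = start + 2 * math.pi * i / display_size
        positions.append((RADIUS_DEG * math.cos(angle), RADIUS_DEG * math.sin(angle)))

    if target_present:
        # exactly one Good object among display_size - 1 Bad objects
        items = [rng.choice(good_set)] + rng.sample(bad_set, display_size - 1)
    else:
        # target-absent trial: all objects are Bad
        items = rng.sample(bad_set, display_size)
    rng.shuffle(items)  # the Good object lands at a random position
    return list(zip(items, positions))


rng = random.Random(0)
good = [f"G{i}" for i in range(12)]
bad = [f"B{i}" for i in range(12)]
display = make_search_display(good, bad, 9, True, rng)
```

Equal spacing keeps the distance between neighboring objects fixed for a given display size, so only the number of objects, not their crowding pattern, varies across trials of the same size.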

Data analysis

Data analysis, plotting, and statistical tests were performed with custom-written software in MATLAB 2014b (MathWorks, Natick, MA). Gaze traces were analyzed with an automated script that separated saccades (displacement > 0.5°, peak velocity > 50°/s) from stationary periods in each trial. An object was considered fixated when gaze was stationary and within a 5° window of its center. Target-present trials ending in rejection, in commitment to a Bad object, or in a fixation break after commitment to a Good object were counted as misses. Trials in which gaze stayed on the Good object until reward delivery were counted as hits. The Good object detection rate (e.g., Figure 2B) was hits/(hits + misses). First saccade percentages were computed by dividing the number of first saccades to each direction (Figure 2C), to Good and Bad objects (Figure 4), or to Good objects only (Figures 6B and 7C) by the total number of trials with saccades. Slopes of linear fits to search time (e.g., Figure 5A, B) and saccade number (e.g., Figure 5D, E) were obtained for each session using the MATLAB “regress” function.
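A minimal Python sketch of the saccade criteria and detection rate is given below. It is not the authors' MATLAB script: using the 50°/s peak-velocity criterion also as the threshold that segments each movement is a simplifying assumption, and the function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 1000.0        # eye position sampled at 1 kHz
VELOCITY_THRESH_DEG_S = 50.0   # peak velocity criterion (> 50 deg/s)
MIN_DISPLACEMENT_DEG = 0.5     # displacement criterion (> 0.5 deg)
FIXATION_WINDOW_DEG = 5.0      # object counts as fixated within 5 deg of its center


def detect_saccades(x, y):
    """Return (start, end) sample indices of candidate saccades.

    Assumption: a saccade is a contiguous run of samples whose speed exceeds
    the velocity threshold and whose start-to-end displacement exceeds 0.5 deg.
    """
    dt = 1.0 / SAMPLE_RATE_HZ
    speed = [math.hypot(x[i + 1] - x[i], y[i + 1] - y[i]) / dt for i in range(len(x) - 1)]
    saccades = []
    i = 0
    while i < len(speed):
        if speed[i] > VELOCITY_THRESH_DEG_S:
            start = i
            while i < len(speed) and speed[i] > VELOCITY_THRESH_DEG_S:
                i += 1
            end = i  # first sample after the high-velocity run (landing point)
            if math.hypot(x[end] - x[start], y[end] - y[start]) > MIN_DISPLACEMENT_DEG:
                saccades.append((start, end))
        else:
            i += 1
    return saccades


def fixated_object(gaze_x, gaze_y, object_centers):
    """Name of the object whose center lies within the fixation window, else None."""
    for name, (cx, cy) in object_centers.items():
        if math.hypot(gaze_x - cx, gaze_y - cy) <= FIXATION_WINDOW_DEG:
            return name
    return None


def detection_rate(hits, misses):
    """Good object detection rate: hits / (hits + misses)."""
    return hits / (hits + misses)
```

Applied to a trace that jumps from the center to a 15° target, `detect_saccades` returns a single movement, while a brief 0.3° jitter is rejected by the displacement criterion even though its speed exceeds the threshold.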

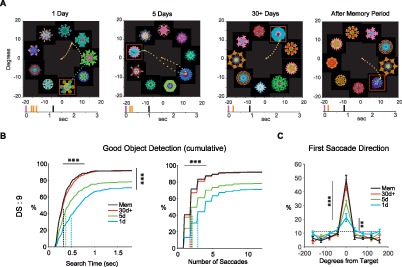

Figure 2.

Effects of the repeated object-reward association on the detection of Good objects (DS: 9). (A) Example search performance of monkey R after different training amounts and the memory period. Eye position is shown by time-dependent color-coded dots (2/ms dot, from orange to blue). The red square indicates the Good object (not shown to the monkey). Tick marks at the bottom show the timing of saccades (orange) and reward (black) relative to display onset (purple). (B) Search time and number of saccades (cumulative) for detecting the Good object, shown separately for different training amounts (left and right, respectively). Dotted lines: average of medians across search sessions. With longer reward training, detection of Good objects increased significantly, and median search time and saccade number decreased significantly. (C) Distribution of first saccade directions toward nine equally spaced objects relative to the Good object for different training amounts. Dotted line: chance level. First saccades toward the Good object were already above chance after 1-day training and increased significantly with longer reward training. Data in (B) and (C) are from all four monkeys. *p < 0.05, **p < 0.01, ***p < 0.001.

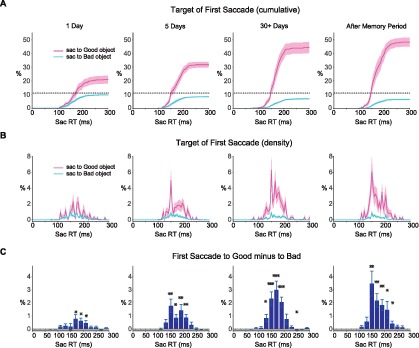

Figure 4.

The first saccade bias toward Good objects as a function of saccade reaction time (DS: 9). (A) Cumulative percentage of the first saccade to Good object (pink) and Bad object (blue) as a function of saccade reaction time. Dotted line: chance level. Preference for the target is evident even at short saccade latencies (<150 ms). Shading shows SEM. (B) Same as (A) but showing differential percentage of the first saccade to Good object (pink) and Bad object (blue). (C) Percentage of first saccade to Good minus Bad, binned over saccade reaction time (20-ms bins). Paired t test used for significance in each time bin (two-sided). *p < 0.05, **p < 0.01, ***p < 0.001.

Figure 6.

Effects of search trials on search performance. Data are divided into the early, middle, and late epochs (80 trials each) of the search task and are shown separately for different training amounts. (A) Percentage of target-present trials in which subjects detected Good objects (ordinate) against trial number in the search task (abscissa) for different training amounts and for memory. (B) Percentage of trials with the first saccade toward the Good object. (C) Time to find the Good object. (D) Number of saccades before finding the Good object. (E) Search time slopes by display size. (F) Saccade number slopes by display size. (=, \, ×: main effect of training amount, search trials, and interaction, respectively).

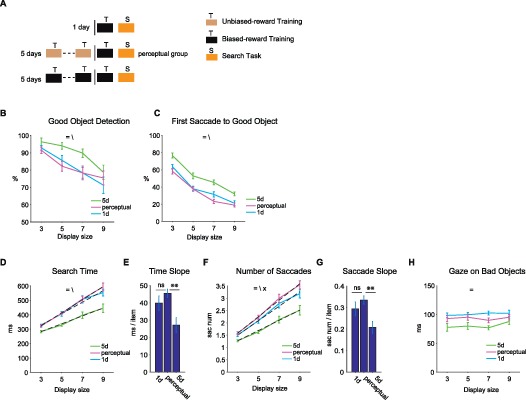

Figure 7.

Effects of perceptual exposure without reward bias on search performance. (A) Each monkey viewed a group of 72 fractals for 4 days without reward bias (medium reward). On the fifth day, the same fractals were associated with a large or small reward during training, which was followed by a test session (search task). The data were compared with those of the 1-day and 5-day training groups (same data as in Figures 2 through 5). (B) Percentage of target-present trials in which subjects detected Good objects (ordinate) against display size (abscissa) for the 1-day, perceptual, and 5-day training groups. (C) Same format as (B) but for the percentage of trials with the first saccade toward the Good object. (D) through (H): same format as Figure 5A, B, D, E, and F, respectively, but for the 1-day, perceptual, and 5-day training groups. (=, \, ×: main effect of training amount, display size, and interaction, respectively).

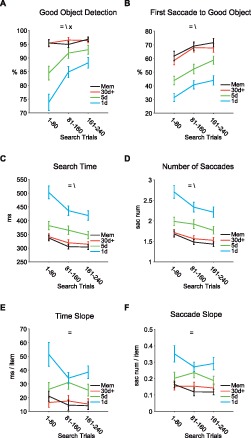

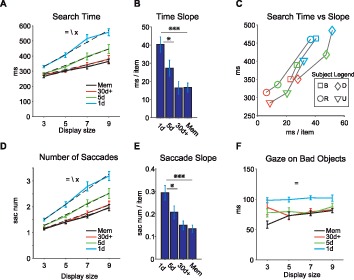

Figure 5.

Effects of display size (DS) on the detection of Good objects. (A) Time to find the Good object (search time, ordinate) against the number of presented objects (DS, abscissa) for different training amounts and for memory. Dashed lines: linear fits. (B) Changes in search time slope with training amount and for memory. Post hoc tests show significant changes in slope between groups. (C) Search time (ordinate) and search time slope (abscissa) for individual subjects (monkeys B, D, R, U) after different training amounts (blue: 1d, green: 5d, red: 30+ days; Mem not shown). (D) and (E) Number of saccades to find the Good object, shown in the same format as (A) and (B). (F) Duration of gaze on Bad objects for different training amounts. (=, \, ×: main effect of training amount, display size, and interaction, respectively). *p < 0.05, **p < 0.01, ***p < 0.001.

Statistical test and significance levels

One-way analysis of variance (ANOVA) with training amount as the factor was performed for changes in detection rate, median search time, and first saccades toward a Good object (Figure 2B, C). Three-way ANOVA (Training × Display size × Subject) was performed for search time and saccade number in the search task (Figures 5 and 7). Three-way ANOVA (Training × Search trial × Subject) was performed for the effect of search trials on search performance (Figure 6). For the effect of training on slopes (Figure 5B, E and Figure 7E, G), two-way ANOVA (Training × Subject) was performed. Significant ANOVAs were followed by Tukey's HSD (honestly significant difference) post hoc test when needed (e.g., Figure 5B, E). The assumption of normality was confirmed using the Lilliefors test in >94% of cases. Error bars show the standard error of the mean (SEM). The significance threshold and marking convention were *p < 0.05, **p < 0.01, ***p < 0.001 (two-sided).
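To make the reported degrees of freedom concrete, the sketch below computes a one-way ANOVA F statistic by hand. The F(3, 44) values in Results are consistent with four conditions (1 day, 5 day, 30+ day, Mem) × 12 search sessions each, giving df = (4 − 1, 48 − 4). This is only an illustration of the one-way case; it omits the Subject factor of the multi-way designs and does not compute p values, and `one_way_anova` is a hypothetical helper.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of values."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    means = [sum(g) / len(g) for g in groups]

    # between-group sum of squares: spread of group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # within-group sum of squares: spread of values around their own group mean
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

For two toy groups `[1, 2, 3]` and `[4, 5, 6]`, the between-group sum of squares is 13.5 and the within-group mean square is 1, so F(1, 4) = 13.5.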

Results

To study the effect of reward experience on visual search, we let monkeys (n = 4) view many fractal objects (Figure 1A), one in each trial (Figure 1B), across multiple sessions (Figure 1D). Each object was consistently associated with a large or small reward (Good and Bad fractals; Figure 1A). Following a given number of object-value learning sessions, we tested the monkeys' ability to find Good objects (targets) using a search task (Figure 1C, D). A Good object was present in half of the trials (see Materials and methods). Before the final choice, the monkey was allowed to make multiple saccades (Figures 1C and 2A).

To study search performance systematically, we varied two parameters: (a) reward learning duration (1 day, 5 days, or 30+ days; Figure 1D) and (b) search display size (DS: three, five, seven, or nine objects; Figure 1C). For the three learning durations, we used three separate groups of fractals (1-day, 5-day, and 30+ day training groups; Figure 1A). None of the fractal groups was used in the search task prior to testing. Overall, each monkey learned the values of 216 objects that were later tested in the search task. To test whether the learning effects were retained for a long time, we retested the search performance of the 30+ day group after one month (Figure 1D, memory period). During the memory period, monkeys never viewed any fractals in the 30+ day group but continued to engage in object-value learning with a new group of fractals (n = 48, not used in search).

We found that object-finding ability improved in two aspects concurrently: accuracy and speed. This learning effect was especially prominent when more objects were present (DS = 9), as shown in Figure 2. The average rate of finding the Good object (reflecting “accuracy”) grew significantly with more object-reward learning sessions: 71% (1 day), 78% (5 day), and 92% (30+ day), F(3, 44) = 8.4, p = 1.5 × 10−4 (Figure 2B left). Figure 2B also shows that the average median time to find the Good object (reflecting “speed”) across search sessions decreased significantly: 491 ms (1 day), 374 ms (5 day), and 326 ms (30+ day), F(3, 44) = 11, p = 1.5 × 10−5. This reduction in search time was accompanied by a reduction in the average median number of saccades needed to find the Good object, F(3, 44) = 9.6, p = 5.4 × 10−5 (Figure 2B right). These improvements in accuracy and speed were maintained after the 1-month memory period with no observable decrement in the 30+ day group (92% detection rate, p = 0.93; 324 ms search time, p = 0.79).

A typical way to find the Good object was to explore the presented objects by making multiple saccades (Figure 2A). However, after extended reward training, the Good object could be reached directly with a single saccade. This “direct choice” rate improved significantly from 21% in the 1-day group to 45% in the 30+ day group, F(3, 44) = 16.7, p = 2.1 × 10−7 (Figure 2C), and was significantly higher than chance even for the 1-day group (t(11) = 3.6, p = 4 × 10−3). Again, the direct choice rate was maintained after the 1-month memory period with no observable decrement in the 30+ day group (49% direct choice rate, p = 0.78). Similar improvements in search speed, accuracy, total saccade number, and direct choice rate were observed for the other display sizes as reward training duration increased, with long-term retention for the 30+ day training duration (Figure 3A–C, supplemental movies).
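The comparison with chance can be illustrated with a one-sample t statistic. With nine equally spaced objects, chance for a first saccade to the Good object is 1/9 (about 11%), and 12 sessions per group give t(11). The sketch below is an assumption about how such a test would be set up (per-session direct-choice rates tested against 1/DS); the p value itself would come from a t distribution table or a statistics library and is not computed here.

```python
import math
import statistics


def one_sample_t(values, chance):
    """t statistic and df for testing the mean of `values` against `chance`."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    t = (mean - chance) / (sd / math.sqrt(n))
    return t, n - 1


def chance_level(display_size):
    """Chance probability that the first saccade lands on the single Good object."""
    return 1.0 / display_size
```

For toy values `[2, 4, 6]` tested against 0, the mean is 4 and the SD is 2, giving t(2) = 2√3 ≈ 3.46.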

Figure 3.

Effects of the repeated object-reward association on the detection of Good objects (DS: 3, 5, 7). (A) Cumulative distributions of search time (left) and number of saccades (middle), and distribution of first saccade directions relative to the Good object (right), for DS: 7. Same format as Figure 2B and C. (B) and (C) Same format as (A) but for DS: 5 in (B) and DS: 3 in (C). Data are from all four monkeys. Significant increase in detection of Good objects for DS: 3, F(3, 44) = 4.7, p = 5 × 10−3; DS: 5, F(3, 44) = 6.5, p = 9.6 × 10−4; DS: 7, F(3, 44) = 13.5, p = 2.1 × 10−6. Significant decrease in search time for Good objects for DS: 3, F(3, 44) = 4.3, p = 9 × 10−3; DS: 5, F(3, 44) = 10.8, p = 1.8 × 10−5; DS: 7, F(3, 44) = 10.6, p = 2.1 × 10−5. Significant decrease in the number of saccades to find Good objects for DS: 5, F(3, 44) = 11, p = 1.5 × 10−5, and DS: 7, F(3, 44) = 10.6, p = 2.1 × 10−5, but not for DS: 3, F(3, 44) = 1, p = 0.4. Significant increase in first saccades toward the Good object for DS: 3, F(3, 44) = 12.8, p = 3.6 × 10−6; DS: 5, F(3, 44) = 21.7, p = 8.4 × 10−9; DS: 7, F(3, 44) = 17.5, p = 1.3 × 10−7. Significant difference from chance in the 1-day group for DS: 3, t(11) = 11.5, p = 1.8 × 10−7; DS: 5, t(11) = 5.9, p = 9.1 × 10−5; DS: 7, t(11) = 5.6, p = 1.5 × 10−4. *p < 0.05, **p < 0.01, ***p < 0.001.

Importantly, direct choice of the Good object started quickly after object presentation. As shown in Figure 4, the first saccade was more likely to be directed to the Good object than to a Bad object at all saccade latencies, even when the saccade occurred very quickly (<150 ms). The preferential saccade to the Good rather than a Bad object was enhanced (Figure 4A, B) and appeared to start earlier with longer reward training (significant difference as early as 130 ms for the 30+ day group compared with 170 ms for the 1-day group; Figure 4C).

Efficient search is characterized by a diminishing influence of display size on search time (Wolfe, 1994). Indeed, our results showed a significant interaction between display size and learning duration on search time (i.e., time before reaching the Good object), F(9, 173) = 3.5, p = 5.8 × 10−4 (Figure 5A). Analysis of search time slopes revealed a significant reduction with longer reward training (∼40 ms/item to ∼16 ms/item, F(3, 41) = 12.8, p = 4.78 × 10−6), consistent with enhanced search efficiency (Figure 5B). Individual subject performance further confirmed consistent and concurrent improvements in search times and slopes in all four monkeys (Figure 5C).
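The per-session slope is the coefficient of an ordinary least-squares line relating search time to display size. The authors used MATLAB's `regress`; the closed-form sketch below is a Python stand-in under that assumption, and the numbers in the usage line are synthetic, not data from this study.

```python
def search_slope(display_sizes, search_times_ms):
    """Least-squares slope (ms/item) and intercept (ms) of search time vs. display size."""
    n = len(display_sizes)
    mean_x = sum(display_sizes) / n
    mean_y = sum(search_times_ms) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(display_sizes, search_times_ms))
    sxx = sum((x - mean_x) ** 2 for x in display_sizes)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic example: a search that costs 16 ms per additional item
slope, intercept = search_slope([3, 5, 7, 9], [248, 280, 312, 344])
```

The same function applies unchanged to saccade counts, giving a slope in saccades/item; a smaller slope means display size has less influence on the measure, which is the sense in which search becomes more efficient.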

The number of saccades showed a trend similar to that of search times, with saccade slopes decreasing as a result of longer reward training (interaction between display size and learning duration, F(9, 173) = 3.5, p = 5.5 × 10−4; decrease in slope, F(3, 41) = 11.4, p = 1.4 × 10−5; Figure 5D, E). The similarity of trends in search time and number of saccades is consistent with an overt scanning strategy during search when free gaze is allowed, as suggested by previous reports (Motter & Belky, 1998; Zelinsky & Sheinberg, 1997). Notably, part of the gain in search efficiency came from shorter gaze durations on nontarget objects (Bad objects) after longer reward training (Figure 5F). Gaze duration on Bad objects was ∼80 ms after extended reward training, significantly shorter than ∼100 ms in the 1-day group, F(3, 171) = 23, p = 1.36 × 10−12. Such short inter-saccade intervals have been suggested to arise from “parallel programming” of saccades (McPeek, Skavenski, & Nakayama, 2000).

Importantly, the gain in search efficiency proved resistant both to the passage of time and to interference from new object-reward learning during the 1-month memory period. This can be verified by comparing the search time slopes and saccade slopes of the 30+ day group tested immediately and after the 1-month memory period (Figure 5B, time slopes: 30+ day 16.4 ms/item vs. Mem 16.6 ms/item; Figure 5E, saccade slopes: 30+ day 0.15 saccades/item vs. Mem 0.13 saccades/item; p > 0.95). These results further confirm the near-perfect retention of the object-finding skill after the 1-month memory period shown by measures such as search time and direct choice rate described previously (Figures 2 through 4).

We note that the impaired search performance in 1-day group was not due to incomplete knowledge of object values. This was confirmed by asking the monkeys to make a choice between diametrically presented Good and Bad objects by adding choice trials to object-reward training for another group of fractals that was not used in search task (n = 72 fractals/subject, see Materials and methods). Subjects were able to choose the Good objects almost perfectly (>97% in all four subjects) with a single day of reward training (10 reward pairings/fractal). Thus, search efficiency requires repeated and extended object-reward association beyond what is normally required for making simple value-driven object choice.

An important aspect of our study was that the fractals associated with biased rewards had never been used in the search task before the test session (Figure 1D). Thus, differences in search efficiency between groups of fractals can be attributed to differences in the duration of object-reward association. However, search performance within the test session could have improved as a result of practice, and such improvement might have contributed to the differential search efficiency between training groups (Figures 2 through 5). To test this possibility, we analyzed search performance separately for the early, middle, and late epochs of search trials (80 trials × 3 = 240 trials; Figure 6). As expected, search performance improved in all groups (significant main effect of search trials on Good object detection, first saccade to the Good object, search time, and number of saccades, F(2, 561) > 9.5; the main effect of search trials on time and saccade slopes did not reach significance, F(2, 129) < 2, p > 0.1). Notably, this improvement was larger in the 1-day group than in the 30+ day or memory group (Good object detection, interaction, F(6, 561) = 4.7, p < 0.001). In other words, the effect of long-term object-reward association was clearest at the beginning of the search test. Thus, the search trials themselves weakened the differential effects of object-reward association.

One possible confound in our paradigm was the different levels of familiarity between the three groups of fractals (Figure 1). Differences in stimulus familiarity, rather than reward association per se, could fully or partially explain the differences between training groups in the search task. To address this issue, we performed a control experiment using a new set of fractals (Figure 7A, perceptual group, 72 fractals/subject). The monkeys viewed these fractals for 4 days with no reward bias (with a medium reward amount) and, on the fifth day, with biased rewards (half with small and half with large rewards), followed by a test session (search task). Thus, the perceptual group fractals matched the 1-day group fractals in reward association but matched the 5-day group fractals in perceptual familiarity (Figure 7A). We found that search performance was similar between the perceptual group and the 1-day group, both of which performed worse than the 5-day group (Figure 7B through H, post hoc HSD, p < 0.001). These results suggest that search performance depends on object-reward association rather than on mere object familiarity. There were some differences between the perceptual group and the 1-day group in the number of saccades (Figure 7F, perceptual > 1 day, post hoc HSD, p < 0.001) and in gaze duration on Bad objects (Figure 7H, 1 day > perceptual, post hoc HSD, p < 0.001). These effects cancelled each other out, so that total search times did not differ between the two groups (Figure 7D, post hoc HSD, p = 0.47).

Discussion

Our results demonstrate that finding complex objects in the periphery becomes accurate and quick following long-term reward experience (Figures 2B, C, and 3). Search becomes more efficient, that is, less affected by display size (Figure 5A through E). The Good object is targeted more often by the very first saccade, as if popping out from the surrounding objects (Figure 2C).

Studies of visual search and attention have identified a series of visual guiding features (e.g., color) that can aid in discriminating a target object from its surroundings and create an easy search (Itti & Koch, 2000; Itti, Koch, & Niebur, 1998; Treisman & Gelade, 1980; Wolfe, 1994). However, it has been unclear whether nonvisual properties of objects can efficiently guide visual search (Wolfe & Horowitz, 2004). We show that after repeated object-reward associations, subjects often found a Good object quickly by making a single saccade to it while ignoring several Bad objects (Figure 4). The latency of these saccades was as short as that of saccades to a single target (Schiller, Sandell, & Maunsell, 1987), suggesting that the object-finding saccade occurred automatically rather than through active exploratory search. Thus, our findings suggest that long-term object value acts like a visual guiding feature.
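One way to make the pop-out interpretation concrete is to compare how often the first saccade lands on the Good object with the rate expected if the first saccade went to a randomly chosen object, which is 1/N for a display of N objects. The sketch below assumes a hypothetical per-trial record (`display_size`, `first_target_good`, `latency_ms`) and uses the <200 ms latency criterion mentioned in the Abstract to flag fast, direct saccades. The field names and example trials are illustrative only, not the study's data format or data.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    display_size: int        # total objects on screen (1 Good + N-1 Bad)
    first_target_good: bool  # did the first saccade land on the Good object?
    latency_ms: float        # latency of the first saccade


def first_saccade_summary(trials, latency_cutoff_ms=200.0):
    """Return (observed hit rate, chance hit rate, fraction fast and direct)."""
    if not trials:
        raise ValueError("no trials")
    n = len(trials)
    hits = sum(t.first_target_good for t in trials)
    # Under random selection, P(first saccade hits Good) = 1 / display_size.
    chance = sum(1.0 / t.display_size for t in trials) / n
    # "Fast and direct": first saccade on Good AND latency below the cutoff.
    fast_direct = sum(t.first_target_good and t.latency_ms < latency_cutoff_ms
                      for t in trials)
    return hits / n, chance, fast_direct / n


trials = [Trial(4, True, 180.0), Trial(4, True, 210.0),
          Trial(8, False, 190.0), Trial(8, True, 170.0)]
print(first_saccade_summary(trials))  # (0.75, 0.1875, 0.5)
```

An observed first-saccade hit rate well above the chance rate, combined with short latencies, is the behavioral signature described above as popping out.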

The speed of object finding is important in real life because we are surrounded by many objects, only a small portion of which are rewarding or beneficial. Such a behavior may be called an “object-finding skill.” This skill has been shown to bias monkeys' gaze even when there is no reward outcome (free viewing; Ghazizadeh, Griggs, & Hikosaka, 2016; Yamamoto et al., 2013). Without the object-finding skill, we would have to rely on active search, which requires time-consuming exploration, and we might miss Good objects (Hikosaka et al., 2013).

It has been shown that human subjects improve their ability to find target objects with learning (Chun & Jiang, 1998; Czerwinski, Lightfoot, & Shiffrin, 1992; Shiffrin & Schneider, 1977; Sigman & Gilbert, 2000). However, in previous studies, learning was the result of extended practice in visual search itself. In contrast, the subjects in our study experienced objects associated with different amounts of reward one at a time and before any search experience. Therefore, our results suggest that the object-finding skill can be created without active search experience. We also observed performance improvement during the search task itself (Figure 6), consistent with previous reports. Interestingly, this improvement was larger for objects with shorter reward training (e.g., 1 day) than for objects with longer training (e.g., 30+ days). Thus, experience in the search task reduced the difference in search performance between the training groups rather than creating it (Figures 2 through 5).

It is known that the familiarity of stimuli (target and distracters) can improve search performance (Mruczek & Sheinberg, 2005; Shen & Reingold, 2001; Wang, Cavanagh, & Green, 1994). To ensure that the search differences between training groups in our task did not simply reflect differences in stimulus familiarity, we used a group of objects that had the same perceptual familiarity as the 5-day group but the same biased reward training as the 1-day group (Figure 7, perceptual group). We found that performance for the perceptual group was almost indistinguishable from that for the 1-day group, but much worse than that for the 5-day group. These results suggest that the amount of reward-bias training, not familiarity per se, determined subsequent search performance.

Our results seem to differ from previous reports that showed familiarity benefits in visual search. This may be due to the presence of object-reward associations in our task. In the previous studies, familiarity was established by mere perceptual exposure, and search targets were defined by visual features alone rather than by biased reward association. In our experiment, each object became increasingly familiar while being repeatedly paired with a particular reward outcome. Such repeated reward pairing is known to create a stable value in long-term memory (Kim, Ghazizadeh, & Hikosaka, 2015). For the perceptual group, the preceding 4 days of unbiased (medium-reward) familiarization may then have impeded associability with the newly biased rewards on day 5, possibly due to reduced uncertainty about reward prediction (Dayan & Yu, 2003). Such an inhibitory influence may have counteracted the benefit of familiarity (e.g., shorter gaze duration on Bad objects, Figure 7H) by increasing the number of saccades (Figure 7F). To summarize, perceptual familiarity independent of reward bias was not sufficient to create the search efficiencies observed in our task.

Our data also address the underlying memory mechanism. Monkeys retained the object-finding skill for a long time (long-term memory) for many reward-associated objects (high-capacity memory). Importantly, this long-term memory seemed to be unaffected by continual reward learning with novel objects during the memory period. These memory features would be crucial because so many objects are often experienced throughout life and have to be detected in later encounters.

Recent studies in our laboratory suggest that the CDt-cdlSNr-SC circuit (CDt: caudate tail; cdlSNr: caudal-dorsal-lateral substantia nigra pars reticulata; SC: superior colliculus) contributes to oculomotor capture by Good objects by mediating long-term object-value memories (Hikosaka et al., 2014; Kim & Hikosaka, 2013; Yamamoto et al., 2013). A majority of cdlSNr neurons respond to visual objects in opposite ways depending on their long-term reward histories: they are inhibited by Good objects and excited by Bad objects (Yasuda et al., 2012). This leads to attention/gaze capture by Good objects (through disinhibition of SC neurons; Hikosaka & Wurtz, 1983) and attention/gaze repulsion from Bad objects (through enhanced inhibition of SC neurons). The value-differential responses of cdlSNr neurons remained virtually unchanged after long retention periods (>100 days), which may explain the complete retention of the object-finding skill over the 1-month period tested in this study. The long-retained object-value memories in the basal ganglia circuit seem to be created and/or supported by inputs from dopaminergic neurons located in the substantia nigra pars compacta adjacent to cdlSNr (Kim, Ghazizadeh, & Hikosaka, 2014; Kim et al., 2015).
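The disinhibition logic can be sketched as a toy rate model. It assumes, hypothetically, that each object's SC drive equals a common visual input minus an inhibitory term proportional to the cdlSNr firing evoked by that object, and that the first saccade goes to the object with the largest SC drive (winner-take-all). The functions `snr_rate`, `sc_drive` and `first_saccade_target` and all numeric values are illustrative inventions, not fitted to the recordings cited above.

```python
def snr_rate(value_label, baseline=60.0, modulation=20.0):
    """Hypothetical cdlSNr firing rate (spikes/s) evoked by an object."""
    if value_label == "Good":
        return baseline - modulation  # inhibited by Good objects
    if value_label == "Bad":
        return baseline + modulation  # excited by Bad objects
    raise ValueError(f"unknown value label: {value_label!r}")


def sc_drive(value_label, visual_input=100.0, weight=1.0):
    """SC drive = visual input minus SNr inhibition.

    Less SNr firing (Good) means disinhibition and a larger drive;
    more SNr firing (Bad) means stronger inhibition and a smaller drive.
    """
    return visual_input - weight * snr_rate(value_label)


def first_saccade_target(display):
    """Index of the object with the largest SC drive (winner-take-all)."""
    drives = [sc_drive(label) for label in display]
    return max(range(len(drives)), key=drives.__getitem__)


display = ["Bad", "Bad", "Good", "Bad", "Bad", "Bad"]
print(first_saccade_target(display))  # 2
```

Because this toy comparison is noise-free, the Good object wins regardless of display size; reproducing any display-size dependence would require added noise or competition between locations, which the sketch omits.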

However, these results do not indicate that the basal ganglia are the only brain region that controls the object-finding skill. In addition to SC, the value signals from the posterior basal ganglia circuit may influence cortical areas via thalamo-cortical circuitry (Deniau & Chevalier, 1992; Ilinsky, Jouandet, & Goldman-Rakic, 1985; Middleton & Strick, 2002). Such cortical areas include areas that are involved in visual processing (Middleton & Strick, 1996) and attention (Balan, Oristaglio, Schneider, & Gottlieb, 2008; Cohen, Heitz, Woodman, & Schall, 2009), which would contribute to the rapid search for high-valued objects (Jagadeesh, Chelazzi, Mishkin, & Desimone, 2001). Future studies will directly examine the neuronal activity in these subcortical and cortical circuits to provide a mechanistic explanation for the emergence of search efficiency observed in the current experiment.

Supplementary Material

Acknowledgments

This work was supported by the Intramural Research Program at the National Eye Institute. We thank members of Hikosaka lab for valuable discussions and Simon Hong for providing technical assistance. The authors do not have any financial or non-financial conflict of interest to declare.

Commercial relationships: none.

Corresponding author: Ali Ghazizadeh.

Email: alieghazizadeh@gmail.com.

Address: Laboratory of Sensorimotor Research, National Eye Institute, National Institutes of Health, Bethesda, MD, USA.

Contributor Information

Ali Ghazizadeh, Email: alieghazizadeh@gmail.com.

Whitney Griggs, Email: wsgriggs@gmail.com.

Okihide Hikosaka, Email: oh@lsr.nei.nih.gov.

References

- Anderson B. A., Laurent P. A., Yantis S. (2011). Value-driven attentional capture. Proceedings of the National Academy of Sciences, USA, 108 (25), 10367–10371.

- Balan P. F., Oristaglio J., Schneider D. M., Gottlieb J. (2008). Neuronal correlates of the set-size effect in monkey lateral intraparietal area. PLOS Biology, 6 (7), 1443–1458.

- Chelazzi L., Perlato A., Santandrea E., Della Libera C. (2012). Rewards teach visual selective attention. Vision Research, 85, 58–72.

- Chun M. M., Jiang Y. (1998). Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology, 36 (1), 28–71.

- Cohen J. Y., Heitz R. P., Woodman G. F., Schall J. D. (2009). Neural basis of the set-size effect in frontal eye field: Timing of attention during visual search. Journal of Neurophysiology, 101 (4), 1699–1704.

- Czerwinski M., Lightfoot N., Shiffrin R. M. (1992). Automatization and training in visual-search. American Journal of Psychology, 105 (2), 271–315.

- Dayan P., Yu A. J. (2003). Uncertainty and learning. IETE Journal of Research, 49 (2-3), 171–181.

- Deniau J. M., Chevalier G. (1992). The lamellar organization of the rat substantia-nigra pars reticulata: Distribution of projection neurons. Neuroscience, 46 (2), 361–377.

- Ghazizadeh A., Griggs W., Hikosaka O. (2016). Ecological origins of object salience: Reward, uncertainty, aversiveness, and novelty. Frontiers in Neuroscience, 10, 378, doi:10.3389/fnins.2016.00378.

- Hikosaka O., Kim H. F., Yasuda M., Yamamoto S. (2014). Basal ganglia circuits for reward value-guided behavior. Annual Review of Neuroscience, 37, 289–306.

- Hikosaka O., Wurtz R. H. (1983). Visual and oculomotor functions of monkey substantia nigra pars reticulata. IV. Relation of substantia nigra to superior colliculus. Journal of Neurophysiology, 49 (5), 1285–1301.

- Hikosaka O., Yamamoto S., Yasuda M., Kim H. F. (2013). Why skill matters. Trends in Cognitive Sciences, 17 (9), 434–441.

- Ilinsky I. A., Jouandet M. L., Goldman-Rakic P. S. (1985). Organization of the nigrothalamocortical system in the rhesus-monkey. Journal of Comparative Neurology, 236 (3), 315–330.

- Itti L., Koch C. (2000). A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Research, 40 (10-12), 1489–1506.

- Itti L., Koch C., Niebur E. (1998). A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20 (11), 1254–1259.

- Jagadeesh B., Chelazzi L., Mishkin M., Desimone R. (2001). Learning increases stimulus salience in anterior inferior temporal cortex of the macaque. Journal of Neurophysiology, 86 (1), 290–303.

- Kim H. F., Ghazizadeh A., Hikosaka O. (2014). Separate groups of dopamine neurons innervate caudate head and tail encoding flexible and stable value memories. Frontiers in Neuroanatomy, 8, 120.

- Kim H. F., Ghazizadeh A., Hikosaka O. (2015). Dopamine neurons encoding long-term memory of object value for habitual behavior. Cell, 163 (5), 1165–1175.

- Kim H. F., Hikosaka O. (2013). Distinct basal ganglia circuits controlling behaviors guided by flexible and stable values. Neuron, 79 (5), 1001–1010.

- Low F. N. (1951). Peripheral visual acuity. AMA Archives of Ophthalmology, 45 (1), 80–99.

- McPeek R. M., Skavenski A. A., Nakayama K. (2000). Concurrent processing of saccades in visual search. Vision Research, 40 (18), 2499–2516.

- Middleton F. A., Strick P. L. (1996). The temporal lobe is a target of output from the basal ganglia. Proceedings of the National Academy of Sciences, USA, 93 (16), 8683–8687.

- Middleton F. A., Strick P. L. (2002). Basal-ganglia ‘projections’ to the prefrontal cortex of the primate. Cerebral Cortex, 12 (9), 926–935.

- Miyashita Y., Higuchi S. I., Sakai K., Masui N. (1991). Generation of fractal patterns for probing the visual memory. Neuroscience Research, 12 (1), 307–311.

- Motter B. C., Belky E. J. (1998). The zone of focal attention during active visual search. Vision Research, 38 (7), 1007–1022.

- Mruczek R. E., Sheinberg D. L. (2005). Distractor familiarity leads to more efficient visual search for complex stimuli. Perception & Psychophysics, 67 (6), 1016–1031.

- Rentschler I., Treutwein B. (1985). Loss of spatial phase-relationships in extrafoveal vision. Nature, 313 (6000), 308–310.

- Schiller P. H., Sandell J. H., Maunsell J. H. R. (1987). The effect of frontal eye field and superior colliculus lesions on saccadic latencies in the rhesus-monkey. Journal of Neurophysiology, 57 (4), 1033–1049.

- Shen J., Reingold E. M. (2001). Visual search asymmetry: The influence of stimulus familiarity and low-level features. Perception & Psychophysics, 63 (3), 464–475.

- Shiffrin R. M., Schneider W. (1977). Controlled and automatic human information processing: II. Perceptual learning, automatic attending, and a general theory. Psychological Review, 84 (2), 127–190.

- Sigman M., Gilbert C. D. (2000). Learning to find a shape. Nature Neuroscience, 3 (3), 264–269.

- Strasburger H., Rentschler I., Juettner M. (2011). Peripheral vision and pattern recognition: A review. Journal of Vision, 11 (5): 13, 1–82, doi:10.1167/11.5.13.

- Theeuwes J., Belopolsky A. V. (2012). Reward grabs the eye: Oculomotor capture by rewarding stimuli. Vision Research, 74, 80–85.

- Treisman A. M., Gelade G. (1980). Feature-integration theory of attention. Cognitive Psychology, 12 (1), 97–136.

- Wang Q., Cavanagh P., Green M. (1994). Familiarity and pop-out in visual search. Perception & Psychophysics, 56 (5), 495–500.

- Wolfe J. M. (1994). Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin & Review, 1 (2), 202–238.

- Wolfe J. M., Bennett S. C. (1997). Preattentive object files: Shapeless bundles of basic features. Vision Research, 37 (1), 25–43.

- Wolfe J. M., Horowitz T. S. (2004). What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience, 5 (6), 495–501.

- Xu Y., Chun M. M. (2009). Selecting and perceiving multiple visual objects. Trends in Cognitive Sciences, 13 (4), 167–174.

- Yamamoto S., Kim H. F., Hikosaka O. (2013). Reward value-contingent changes of visual responses in the primate caudate tail associated with a visuomotor skill. Journal of Neuroscience, 33 (27), 11227–11238.

- Yamamoto S., Monosov I. E., Yasuda M., Hikosaka O. (2012). What and where information in the caudate tail guides saccades to visual objects. Journal of Neuroscience, 32 (32), 11005–11016.

- Yasuda M., Yamamoto S., Hikosaka O. (2012). Robust representation of stable object values in the oculomotor Basal Ganglia. Journal of Neuroscience, 32 (47), 16917–16932.

- Zelinsky G. J., Sheinberg D. L. (1997). Eye movements during parallel-serial visual search. Journal of Experimental Psychology: Human Perception and Performance, 23 (1), 244–262.
