Abstract

Cross-validation-type methods have been widely used to facilitate model estimation and variable selection. In this work, we suggest a new K-fold cross-validation procedure that selects a candidate ‘optimal’ model from each hold-out fold and averages the K candidate ‘optimal’ models to obtain the ultimate model. Due to the averaging effect, the variance of the proposed estimates can be significantly reduced. This new procedure results in more stable and efficient parameter estimation than the classical K-fold cross-validation procedure. In addition, we show the asymptotic equivalence between the proposed and classical cross-validation procedures in the linear regression setting. We also demonstrate the broad applicability of the proposed procedure via two examples: parameter sparsity regularization and quantile smoothing splines modeling. We illustrate the promise of the proposed method through simulations and a real data example.

Keywords: Cross-validation, Model Averaging, Model Selection

1 Introduction

Cross-validation (CV) has been widely used for model selection in regression problems. Its theoretical properties and empirical performance have been extensively discussed in the literature including the pioneering work of Stone (1974, 1977) and Geisser (1975). Burman (1989) studied the properties of leave-one-out CV and K-fold CV procedures. Shao (1993) and Rao and Wu (2005) provided theoretical insights and asymptotic theories of model selection with a fixed number of variables for linear models. Wong (1983) examined consistency of CV in kernel nonparametric regression. Zhang (1993) showed superiority of leave-K-out CV over leave-one-out CV in linear regression. Kohavi (1995) assessed the performance in terms of model estimation and variable selection at various values of K1 and K2 respectively in K1-fold CV and leave-K2-out CV. For robust model selection, Ronchetti et al. (1997) suggested a robust loss function to measure the prediction error. Substantial practical and theoretical results of traditional and newer CV approaches were summarized in a review paper by Arlot and Celisse (2010).

In a typical K-fold CV procedure for a linear model, the data set is randomly and evenly split into K parts (if possible). A candidate model is built based on K − 1 parts of the data set, called a training set. Prediction accuracy of this candidate model is then evaluated on a test set containing the data in the hold-out part. By using each of the K parts in turn as the test set and repeating the model building and evaluation procedure, we choose the model with the smallest cross-validation score (typically, the mean squared prediction error, MSPE) as the ‘optimal’ model. Given p independent variables, there are in total $2^p - 1$ possible models. In the K-fold CV procedure, each model is in fact evaluated K times. Therefore, a single ‘optimal’ model is selected via $K(2^p - 1)$ model evaluations.

In this work, we propose a new cross-validation procedure with the core idea of first choosing K candidate ‘optimal’ models to build the ultimate model. On each training set, the $2^p - 1$ models are fitted and the candidate ‘optimal’ model is selected as the one with the smallest cross-validation score obtained from the corresponding test set. This procedure is repeated on the K training and test sets to obtain K candidate ‘optimal’ models. Finally, the ultimate model is obtained with its parameter estimates as the average values across the K candidate ‘optimal’ models. Therefore, we call the proposed method K-fold averaging cross-validation (ACV). Due to the averaging effect, the efficiency of the final parameter estimates obtained by ACV improves over that of the traditional K-fold CV. We note that the parameter estimates of CV and ACV are identical when all the candidate ‘optimal’ models from ACV are identical to the model selected by the traditional CV.

The proposed ACV procedure is also applicable to high dimensional data to determine the amount of penalty imposed by a regularization method, e.g., LASSO (Tibshirani; 1996). In fact, the ACV procedure has broad applications as CV to problems wherein regularization parameters associated with a penalization method need to be determined. This will be demonstrated via an application to smoothing spline based nonparametric modeling (Nychka et al.; 1995).

The paper is organized as follows. We describe the explicit expression of the ACV estimator and its asymptotic property in linear regression models in Section 2. We discuss the connection and distinction between ACV and CV in the applications of LASSO and smoothing spline based modeling in Section 3. We illustrate the proposed method through simulation studies in Section 4 and through application to a real data set in Section 5. We provide some concluding remarks in Section 6.

2 K-fold averaging cross-validation in a linear model

2.1 Basic setting

Consider a linear model

$$Y = X\beta + \epsilon,$$

where Y = (y1, …, yn)′ is the response vector, X = {xij} is an n × p design matrix for the full model, and ∊ = (∊1, …, ∊n)′ is a vector of iid random variables with mean 0 and variance $\sigma^2$. We assume that p is fixed. All the observations are divided into K mutually exclusive parts. Throughout this paper, we assume that K is finite and fixed. We consider deterministic predictors; when X is random, the results remain valid almost surely, provided that the two assumptions in Section 2.3 and the assumption on ∊ are satisfied almost surely for given X.

We use nk to denote the sample size in the kth fold, where $\sum_{k=1}^{K} n_k = n$. We define Mk as the selected model based on the kth hold-out fold (test set), and let its corresponding design matrix be Xk with all observations. We further assume that Xk is full rank for any k.

When the kth fold is a test set, a design matrix in the kth fold is defined as Xnk, and the design matrix of the corresponding training set is denoted as X−nk. Yk and Y−k are the response observations corresponding to Xnk and X−nk, respectively.

The choice of a candidate ‘optimal’ model is made based on the mean squared prediction error in the hold-out fold. The Mk can be represented as a subset of {1, …, p}. Let |Mk| be the number of elements (or, equivalently the number of independent variables) in Mk. Further, let XMK,−nk be the design matrix composed of variables of Mk in the training set which has a size of n − nk by |Mk|. And we denote β˜k as an estimator of β with Mk. For example, the β˜k obtained from the least squares method can be expressed as , which is a length-|Mk| vector.

Since the β˜ks are averaged over k to obtain the ultimate parameter estimates, we need to ensure that each of them is of length p. For this purpose, we introduce the transformation matrix Tk, which is of size p × |Mk|. Each column vector of Tk is composed of a 1 and (p − 1) 0s. For example, if x2 and x4 are selected and p = 6, then Mk = {2,4}, and Tk contains two column vectors of (0, 1, 0, 0, 0, 0)′ and (0, 0, 0, 1, 0, 0)′, where the position of the 1 in the ith column is given by the ith element of Mk, and the rows corresponding to variables not contained in Mk are all 0. As a result, multiplying by Tk changes the length of β˜k to p. Going back to the example, β˜k = (1,2)′ yields Tkβ˜k = (0, 1, 0, 2, 0, 0)′. Tk also identifies the selected model through XTk = Xk.
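The worked example above (p = 6, Mk = {2,4}) can be checked numerically. The following is a minimal sketch; the helper name `transformation_matrix` and the toy matrix `X` are illustrative assumptions, not part of the original text.

```python
import numpy as np

def transformation_matrix(selected, p):
    """Build the p x |M_k| matrix T_k; `selected` holds 1-based variable indices."""
    T = np.zeros((p, len(selected)))
    for col, var in enumerate(selected):
        T[var - 1, col] = 1.0  # the single 1 in column `col` sits in row M_k[col]
    return T

T_k = transformation_matrix([2, 4], p=6)
beta_tilde_k = np.array([1.0, 2.0])

# Padding to length p: unselected variables receive coefficient 0.
padded = T_k @ beta_tilde_k          # (0, 1, 0, 2, 0, 0)

# T_k also picks the selected columns out of a full design matrix: X T_k = X_k.
X = np.arange(18.0).reshape(3, 6)    # hypothetical 3 x 6 design matrix
X_k = X @ T_k
```

Here `X_k` coincides with columns 2 and 4 of `X`, which is the sense in which Tk encodes the selected model.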

To measure the discrepancy between the observations and the predicted values, we use a squared error loss. The MSPE evaluated with the data Yk can be written as

$$\mathrm{MSPE}_k = \frac{1}{n_k}\left\| Y_k - X_{M_k,n_k}\hat\beta_{-n_k} \right\|^2, \qquad (1)$$

where $X_{M_k,n_k}$ consists of the columns of Xnk corresponding to Mk, and the least squares estimate is $\hat\beta_{-n_k} = (X_{M_k,-n_k}' X_{M_k,-n_k})^{-1} X_{M_k,-n_k}' Y_{-k}$. Note that β̂−nk depends on the variables contained in Mk. Because β̂−nk is not our final estimator, convergence of (1) to 0 does not necessarily imply consistency of the estimator defined in (2), whereas it does in the method of leave-nk-out cross-validation described in Zhang (1993) and Shao (1993). Rather, we choose Mk, or equivalently Xk, by minimizing (1), and use it to construct the ultimate estimator. The explicit form of the proposed parameter estimator based on the least squares method is provided below.

2.2 A new estimator

In the traditional K-fold CV, the selected model is the one that produces the smallest prediction error, where the number of predicted values is the same as the sample size. That is, K-fold CV selects the model that minimizes the prediction error aggregated over all K hold-out folds.

The fundamental distinction of our method is to choose a candidate ‘optimal’ model Xk based on the hold-out fold, and then iterate this procedure for k = 1, …, K. Note that the model selection at each iteration provides an opportunity to choose different (up to K) plausible models, which is not the case in K-fold CV. At last, the K candidate ‘optimal’ models are averaged to yield an ultimate model.

The regression coefficient estimator of the model optimally chosen by the kth fold is

$$\hat\beta_k = T_k (X_k' X_k)^{-1} X_k' Y,$$

where Xk is an n × |Mk| design matrix containing all the n values of the explanatory variables in Mk selected by the kth fold according to (1). Note again that Tk is the transformation matrix that ensures β̂k has length p. Then, the ultimate estimator of the regression coefficients in the K-fold ACV procedure is defined as

$$\hat\beta_{ACV} = \frac{1}{K}\sum_{k=1}^{K} \hat\beta_k. \qquad (2)$$
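The selection-then-averaging steps can be sketched in code. This is a minimal illustration under stated assumptions rather than the authors' implementation: it enumerates all non-empty subsets (feasible only for small p), fits by ordinary least squares without an intercept, refits each selected model on all n observations, and the function names `ols` and `acv_linear` are hypothetical.

```python
import itertools
import numpy as np

def ols(X, y):
    """Least squares coefficients (no intercept, as in the linear model above)."""
    return np.linalg.lstsq(X, y, rcond=None)[0]

def acv_linear(X, y, K=5, seed=0):
    """K-fold averaging CV over all non-empty column subsets of X."""
    n, p = X.shape
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), K)
    subsets = [list(s) for r in range(1, p + 1)
               for s in itertools.combinations(range(p), r)]
    beta_sum, chosen = np.zeros(p), []
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(n), test_idx)
        best, best_mspe = None, np.inf
        for s in subsets:
            # Fit on the training folds, score on the hold-out fold.
            b = ols(X[np.ix_(train_idx, s)], y[train_idx])
            resid = y[test_idx] - X[np.ix_(test_idx, s)] @ b
            mspe = np.mean(resid ** 2)
            if mspe < best_mspe:
                best, best_mspe = s, mspe
        # Refit the fold's selected model on all n rows; unselected entries stay 0.
        beta_k = np.zeros(p)
        beta_k[best] = ols(X[:, best], y)
        beta_sum += beta_k
        chosen.append(best)
    return beta_sum / K, chosen

# Hypothetical toy data: only the first variable matters.
rng = np.random.default_rng(42)
X = rng.standard_normal((80, 4))
y = 3.0 * X[:, 0] + rng.standard_normal(80)
beta_acv, chosen = acv_linear(X, y, K=5)
```

`chosen` lists the candidate model picked by each fold; when all K entries agree, the averaged estimate reduces to the single refit of that model.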

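Accordingly, the fitted value of Y by ACV is

$$\hat Y_{ACV} = X\hat\beta_{ACV} = \frac{1}{K}\sum_{k=1}^{K} X_k (X_k' X_k)^{-1} X_k' Y.$$

Let $P_k = X_k (X_k' X_k)^{-1} X_k'$ and $H_{ACV} = K^{-1}\sum_{k=1}^{K} P_k$, then $\hat Y_{ACV} = H_{ACV} Y$. Since X is deterministic, we have,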

| (3) |

Although each Pk is a projection matrix, HACV is not a projection matrix in general. This implies that β̂ACV is likely a biased estimator (Hoaglin and Welsch; 1978). However, substantial efficiency can be gained from averaging. To account for variability induced by randomly splitting the data, we repeat the splitting step several times in both CV and ACV procedures. Alternatively, we can use a balanced split. Note that there are n!/(n1!n2!⋯nK!) different ways of splitting the data. With a balanced split, all observations have equal probability of being included in the training set and in the test set, so no random variation arises from the splitting. In practice, the random variation from the split is negligible, and a few splits were observed to be enough in the simulation studies.
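A small numerical check illustrates why an average of projection matrices is generally not itself a projection. The sketch below treats two selected models as fixed (it ignores that selection depends on the data), and the design matrix is randomly generated purely for illustration.

```python
import numpy as np

def projection(X):
    """Orthogonal projection onto the column space of X."""
    return X @ np.linalg.solve(X.T @ X, X.T)

rng = np.random.default_rng(1)
X = rng.normal(size=(20, 3))

P1 = projection(X[:, [0]])       # candidate model {1}
P2 = projection(X[:, [0, 1]])    # candidate model {1, 2}
H = (P1 + P2) / 2                # averaged hat matrix for K = 2

p1_idempotent = np.allclose(P1 @ P1, P1)   # a projection satisfies P P = P
h_idempotent = np.allclose(H @ H, H)       # the average does not
trace_H = np.trace(H)                      # mean model size, here (1 + 2) / 2
```

The trace of the averaged matrix equals the mean number of selected variables, which is the quantity that appears in the proof of Theorem 1.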

Connection with Bagging

The bootstrap aggregating approach (Bagging) suggested by Breiman (1996) is similar to our proposed method in terms of the repeated parameter estimation and variable selection steps. The Bagging procedure first generates a bootstrap sample of size n. Based on the generated sample, it performs model selection according to a criterion such as K-fold CV and obtains the least squares estimator for the selected model. After repeating this process B times, the B resulting estimates are averaged to form a final estimate. The distinction is that the Bagging procedure requires generating bootstrap samples from the original data set, while the proposed ACV always uses the original data set and averages only the ‘optimal’ models obtained from each hold-out fold to form a final model.

Connection with BMA

The ACV method also shares some similarity with Bayesian model averaging (BMA) (Raftery et al.; 1997), where the posterior distribution of β given data D is

$$\Pr(\beta \mid D) = \sum_{\upsilon=1}^{V} \Pr(\beta \mid M_\upsilon, D)\,\Pr(M_\upsilon \mid D),$$

where $V = 2^p - 1$. This is a weighted average of the posterior distributions of β under each model, wherein the weights are the posterior model probabilities. Based on this formulation, we can view ACV as a special case of BMA by setting Pr(Mυ|D) = 1/K if Mυ ∈ {M1, …, MK} and Pr(Mυ|D) = 0 otherwise. Thus, we average the ‘optimally’ selected models based on the MSPEk defined in (1) with equal weights, instead of averaging across all $2^p - 1$ possible models as in BMA. In fact, ACV can be modified to take a form similar to BMA. We define $\hat\beta_k = \sum_{\upsilon=1}^{V} w_{k,\upsilon}\hat\beta_{k,\upsilon}$, where β̂k,υ is the estimate of the υth model and wk,υ is its corresponding weight. Because we are concerned with finding the smallest MSPEk among the $2^p - 1$ possible ones using data in the kth hold-out fold, we use the MSPEk value from the υth model, MSPEk,υ, to estimate wk,υ. For example, we can use weights based on MSPEk,υ that are normalized to sum to 1. Hence, we can build a new version of model averaging as $K^{-1}\sum_{k=1}^{K}\sum_{\upsilon=1}^{V} w_{k,\upsilon}\hat\beta_{k,\upsilon}$. The properties of this model averaging method will not be pursued in this work.

Remark: The ACV procedure can be integrated with robust model estimation. For example, instead of the mean, one may consider using a component-wise median among the candidate models. M-estimators (Huber and Ronchetti; 2009) or other types of robust estimators can also be used.
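As a small illustration of the remark, the sketch below contrasts the mean with the component-wise median across candidate estimates. The three coefficient vectors are made-up numbers, chosen so that one fold selects an extra variable.

```python
import numpy as np

# Hypothetical padded estimates from K = 3 folds (rows), p = 3 coefficients (columns).
candidates = np.array([
    [3.1, 0.0, 0.0],
    [2.9, 0.4, 0.0],   # this fold also selected the second variable
    [3.0, 0.0, 0.0],
])

beta_mean = candidates.mean(axis=0)          # the ACV average
beta_median = np.median(candidates, axis=0)  # component-wise median alternative
```

In this toy case the median sets the second coefficient to exactly 0 because only one of the three folds selected it, whereas the mean retains a small non-zero value.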

2.3 Theoretical Properties

We denote the true model as M*. A model M can be classified into two categories (Shao; 1993):

Category I: M ⊇ M*

Category II: At least one component of M* is not contained in M.

Now, we introduce the following assumptions:

Assumption A. n/nk → K for all k = 1, …, K.

Assumption B. For Mk in Category II,

Assumption B is adopted from Zhang (1993). Notice that bk is non-zero when Mk is in Category II, but becomes zero when Mk is in category I.

We define the mean squared error (MSE) of ACV as

| (4) |

Note that MSEACV is defined based on the ultimate parameter estimates and uses all the explanatory variables, which is different from MSPEk used to select the candidate ‘optimal’ model in the hold-out fold k. Using (3), (4) is expressed as

| (5) |

where In is an identity matrix of size n, and tr(·) is a trace.

Now, denote the model chosen by the traditional K-fold CV by Xo. Then, the MSE of the traditional K-fold CV (MSECV) is obtained by replacing HACV with the projection matrix of Xo.

| (6) |

where d = tr(PCV) ≤ p and . Then, it is readily seen that when Xo is in Category I, since the second term on the right-hand side (RHS) of equation (6) is 0. When Xo is in Category II, MSECV is dominated by , which is greater than 0 by Assumption B.

In Theorem 1, we show that the two estimates chosen by CV and ACV are asymptotically equivalent as the sample size increases.

Theorem 1

Let $M_f = \bigcup_{k=1}^{K} M_k$ be the union of Mk for k = 1, …, K. Under Assumptions A and B,

(a) MSEACV = MSECV + $O(n^{-1})$ if Mf and Xo are in Category I.

(b) MSEACV = MSECV + o(1) if Mf and Xo are in Category II.

Note that the rate of decrease in both MSEACV and MSECV is subject to inclusion of the true model. This implies that inclusion of the true model will determine the asymptotic behavior of ACV and CV, even though the two sets of parameter estimates are asymptotically equivalent.

Remark: Some existing work use a slightly different but more realistic definition of MSE, since β is unknown in practice, which can be written as and Using this definition, we can derive the same asymptotic equivalence of β̂ACV and β̂CV.

Now, we show the reduced variance of β̂ACV in finite samples.

Lemma 1

Under the setting in Section 2.1, we have

Equality holds when the β̂ks are all identical.

This implies that the variance of β̂ACV is smaller than that of β̂CV (as the determinant measures the volume of Ak) due to the averaging effect, except for the case when the β̂ks are all identical. In our empirical experiments in Section 4, the reduction in variance tends to be larger than the bias incurred, which results in more accurate model selection by ACV. This indicates that the proposed method can take advantage of the bias-variance tradeoff.

3 Integration of ACV with other methods

Lasso penalization

We introduce some applications of ACV beyond the traditional linear regression problem with a fairly small p as discussed in Section 2. When the number of variables p is large, it is impractical to fit all $2^p - 1$ models. Rather, we use a penalization method such as LASSO (Tibshirani; 1996), which is widely used for handling high-dimensional data. Such penalization methods convert a model selection problem into one of controlling the amount of regularization applied to the parameter estimates. Specifically, LASSO is defined as

$$\hat\beta^{LASSO}(\lambda) = \arg\min_{\beta}\left\{ \|Y - X\beta\|^2 + \lambda\sum_{j=1}^{p}|\beta_j| \right\}.$$

Because λ takes a real number in [0, ∞), we cannot consider all the possible models. We examine some candidate values of λ in a reasonable range. With K-fold CV, a λ̂CV that produces the smallest cross validated score is claimed as ‘optimal.’

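In this case, ACV selects K candidate ‘optimal’ values λ̂k based on the cross-validated score from hold-out fold k = 1, …, K. Let $\hat\beta^{LASSO}_{-n_k}(\lambda)$ be a LASSO estimate that is obtained based on all the samples excluding the ones in the kth fold. The ultimate estimate of λ follows as $\hat\lambda_{ACV} = K^{-1}\sum_{k=1}^{K}\hat\lambda_k$, where

$$\hat\lambda_k = \arg\min_{\lambda} \frac{1}{n_k}\left\| Y_k - X_{n_k}\hat\beta^{LASSO}_{-n_k}(\lambda) \right\|^2.$$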

This way of averaging yields a more stable estimate of the penalization parameter. Because the number of possible λ values is infinite, a manageable solution is to assess several values of λ on a discrete grid. Note that λ̂ACV is likely to be more accurate than the estimate of CV using the same grid, due to the averaging effect of ACV.

Moreover, the computational efficiency of ACV is similar to that of CV. In both methods, the major computation comes from fitting the model with the (K − 1) folds of training data and from evaluating the performance with the hold-out fold. For ACV, the additional steps of choosing K candidate ‘optimal’ values and averaging could significantly improve the performance at trivial additional computational cost. We make comparisons between β̂LASSO(λ̂CV) and β̂LASSO(λ̂ACV) via simulation studies that will be described later.
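The point that CV and ACV share the same fitting cost can be seen in a sketch: both read the same K × (grid size) table of hold-out scores and differ only in the final reduction. The code below is an illustration under assumptions, not the authors' implementation. It uses a plain coordinate-descent LASSO for the objective (1/(2n))‖y − Xb‖² + λ‖b‖₁ (this scaling is an assumption that only changes the scale of λ), and the function names are hypothetical.

```python
import numpy as np

def soft_threshold(z, t):
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def lasso_cd(X, y, lam, n_iter=100):
    """Coordinate descent for (1/(2n))||y - Xb||^2 + lam * ||b||_1."""
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    r = y.astype(float).copy()               # running residual y - Xb
    for _ in range(n_iter):
        for j in range(p):
            r += X[:, j] * b[j]              # remove coordinate j's contribution
            b[j] = soft_threshold(X[:, j] @ r / n, lam) / col_sq[j]
            r -= X[:, j] * b[j]              # add the updated contribution back
    return b

def lambda_cv_and_acv(X, y, grid, K=5, seed=0):
    """Return (lambda_CV, lambda_ACV) computed from one shared table of scores."""
    n = X.shape[0]
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), K)
    scores = np.empty((K, len(grid)))        # scores[k, g] = MSPE_k at grid[g]
    for k, test_idx in enumerate(folds):
        train_idx = np.setdiff1d(np.arange(n), test_idx)
        for g, lam in enumerate(grid):
            b = lasso_cd(X[train_idx], y[train_idx], lam)
            scores[k, g] = np.mean((y[test_idx] - X[test_idx] @ b) ** 2)
    lam_cv = grid[np.argmin(scores.mean(axis=0))]      # one minimizer of the pooled score
    lam_acv = grid[np.argmin(scores, axis=1)].mean()   # average of K per-fold minimizers
    return lam_cv, lam_acv

# Hypothetical toy data with two active variables.
rng = np.random.default_rng(0)
X = rng.standard_normal((60, 10))
beta = np.zeros(10)
beta[:2] = [2.0, -1.5]
y = X @ beta + rng.standard_normal(60)
grid = np.linspace(0.01, 0.5, 10)
lam_cv, lam_acv = lambda_cv_and_acv(X, y, grid)
beta_acv = lasso_cd(X, y, lam_acv)           # final fit on all data at the averaged penalty
```

Note that the averaged penalty need not lie on the grid, which is one way the averaging refines a coarse grid search.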

Quantile smoothing splines

The cross-validation method is also frequently used in many different versions of smoothing splines to control the roughness of the fitted function. Quantile smoothing splines were developed for targeting conditional quantiles in a nonparametric fashion. There are several different definitions of such smoothing splines; we use the method proposed by Bloomfield and Steiger (1983) and Nychka et al. (1995). Basically, a quantile smoothing spline is taken as the minimizer of

$$\sum_{i=1}^{n} \rho_q\{y_i - g(x_i)\} + \lambda \int \{g''(x)\}^2\,dx,$$

where ρq(u) = u{q − I(u < 0)} is the check function of Koenker and Bassett (1978) and 0 < q < 1. With a conditional probability density of fY|X(Y|X), the qth conditional quantile function gq(x) is a function of x such that $\int_{-\infty}^{g_q(x)} f_{Y|X}(y \mid x)\,dy = q$. Here, the roughness of the fitted curves is determined by the value of λ. We define λ̂CV as the minimizer of the cross-validated score where the check loss function is used for validation. Similar to the procedure of conducting ACV under LASSO, we can obtain the ACV version, λ̂ACV, for quantile smoothing splines. We use simulations to investigate the empirical properties of λ̂CV and λ̂ACV.
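The check function is easy to evaluate directly. The sketch below implements ρq(u) as defined above and illustrates, on a made-up sample of the integers 1 to 99, that the constant minimizing the total check loss is a sample quantile; the function name `check_loss` is an assumption for illustration.

```python
import numpy as np

def check_loss(u, q):
    """rho_q(u) = u * (q - I(u < 0)); positive residuals weigh q, negative ones 1 - q."""
    u = np.asarray(u, dtype=float)
    return u * (q - (u < 0))

# Asymmetry of the loss at q = 0.25.
loss_pos = check_loss(2.0, 0.25)    # 2 * 0.25 = 0.5
loss_neg = check_loss(-2.0, 0.25)   # -2 * (0.25 - 1) = 1.5

# The constant c minimizing sum_i rho_q(y_i - c) is a q-th sample quantile.
y = np.arange(1.0, 100.0)           # 1, 2, ..., 99
q = 0.25
total = [check_loss(y - c, q).sum() for c in y]
best_constant = y[int(np.argmin(total))]   # 25.0, the 25th smallest value
```

Using this loss as the validation score is what makes the cross-validated choice of λ target the qth conditional quantile rather than the conditional mean.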

4 Simulations

In empirical studies, it is desirable for K, the number of folds, to be reasonably large in order to receive the benefit of averaging. We consider K = 5 and 10 throughout the simulation studies. We investigate the performance of CV and ACV in three scenarios: the traditional linear regression model, LASSO regularization method, and quantile smoothing splines. For these scenarios, the corresponding ACV methods described in Section 2 and Section 3 are used. The classical CV procedure is described as follows.

The CV Procedure

(1) Randomly and evenly split the data set into K folds.

(2) Use K − 1 folds of data as a training data set to fit the linear model (or LASSO, or quantile smoothing splines).

(3) With the models fitted in (2), predict the value of the response variable in the hold-out fold.

(4) From the response variable in the hold-out fold (say, the kth fold), calculate the mean squared prediction error by $\mathrm{MSPE}_k = n_k^{-1}\sum_{i \in \aleph_k}(y_i - \hat y_i)^2$, where $\hat y_i$ is the predicted value for yi and ℵk is the data set in the kth fold.

(5) Repeat (2) through (4) K times so that each of the K folds is used as a hold-out fold, from which we obtain MSPE1, …, MSPEK.

(6) Each candidate model obtains a prediction performance measure $K^{-1}\sum_{k=1}^{K}\mathrm{MSPE}_k$. The ‘optimal’ model, which minimizes this measure, is selected.
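The classical procedure listed above can be sketched for the linear model as follows. This is a minimal illustration assuming exhaustive enumeration of subsets, least squares without an intercept, and the average of the K fold-wise MSPE values as the performance measure; the function name `kfold_cv_select` is hypothetical.

```python
import itertools
import numpy as np

def kfold_cv_select(X, y, K=5, seed=0):
    """Classical K-fold CV: pick the single subset with the smallest average MSPE."""
    n, p = X.shape
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), K)       # random, (nearly) even split
    subsets = [list(s) for r in range(1, p + 1)
               for s in itertools.combinations(range(p), r)]
    avg_mspe = []
    for s in subsets:
        fold_mspe = []
        for test_idx in folds:                          # each fold is held out once
            train_idx = np.setdiff1d(np.arange(n), test_idx)
            b = np.linalg.lstsq(X[np.ix_(train_idx, s)], y[train_idx], rcond=None)[0]
            pred = X[np.ix_(test_idx, s)] @ b           # predict the hold-out responses
            fold_mspe.append(np.mean((y[test_idx] - pred) ** 2))
        avg_mspe.append(np.mean(fold_mspe))             # one score per candidate model
    best = subsets[int(np.argmin(avg_mspe))]
    return best, len(subsets)

# Hypothetical toy data with two active variables out of four.
rng = np.random.default_rng(7)
X = rng.standard_normal((100, 4))
y = 3.0 * X[:, 0] + 2.0 * X[:, 2] + rng.standard_normal(100)
best, n_models = kfold_cv_select(X, y, K=5)
```

In contrast to ACV, the minimization here happens once, after the fold-wise scores have been pooled, so exactly one model is returned.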

4.1 Linear model

Assume a linear model Y = Xβ + ∊ as described in Section 2.1, with the ∊i's following an iid standard normal distribution. We consider the number of samples n = 200. The X is generated from a multivariate normal distribution with mean 0 and the correlation between the ith and jth covariates $\rho = 0.5^{|i-j|}$. We consider three cases of the true values of β: (i) β = (3, 0, 0, 0, 0, 0, 0, 0)′; (ii) β = (3, 1.5, 0, 0, 2, 0, 0, 0)′; and (iii) β = (2, 2, 2, 2, 2, 2, 2, 2)′, which represent sparse, intermediate, and dense parameter spaces, respectively.
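One Monte Carlo data set for this design could be generated as in the sketch below. It assumes unit variances for the covariates (the text specifies only the correlations), and the function name `simulate_linear` is hypothetical.

```python
import numpy as np

def simulate_linear(n, beta, rho=0.5, seed=0):
    """Draw (X, y) with corr(x_i, x_j) = rho^{|i-j|} and standard normal errors."""
    beta = np.asarray(beta, dtype=float)
    p = beta.size
    idx = np.arange(p)
    Sigma = rho ** np.abs(idx[:, None] - idx[None, :])   # assumed unit variances
    rng = np.random.default_rng(seed)
    X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
    y = X @ beta + rng.standard_normal(n)
    return X, y, Sigma

# Case (ii), the intermediate scenario.
X, y, Sigma = simulate_linear(200, [3, 1.5, 0, 0, 2, 0, 0, 0])
```

Repeating the call with different seeds gives independent Monte Carlo samples for the same β.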

To measure accuracy of the estimates, we use the mean squared error (MSE) defined as

| (7) |

With L Monte Carlo (MC) samples (L = 1000), the estimate can be computed as where β̂l is the estimate of β for the lth MC sample.

In addition, the Bagging procedure is applied to the same MC samples. Since CV and ACV choose a model from $2^8 - 1 = 255$ possible models, we let the Bagging procedure select from 255 data sets re-sampled (with replacement) from each MC sample. The size of each re-sampled data set is 200. The results are reported in Table 1. In Table 1, “reduction(%)” denotes the reduction of MSE achieved by ACV in comparison to CV. We see that the largest reduction is attained in case (i), which is the sparse scenario, while no reduction is made in the dense case (iii). In the dense case (iii), ACV selects the true model in all K folds, so the averaged model coincides with the model selected by CV and the MSE values are identical. The performance of Bagging is consistent over the three cases; it works relatively well in the dense case, but is outperformed by CV and ACV.

Table 1.

Estimate of MSE (multiplied by $10^2$) and its standard error (in parentheses, multiplied by $10^2$) based on 1000 MC samples for CV, ACV and Bagging.

| Method | β=(3,0,0,0,0,0,0,0)′ | β=(3,1.5,0,0,2,0,0,0)′ | β=(2,2,2,2,2,2,2,2)′ |
|---|---|---|---|
| CV, K = 5 | 516.4 (13.6) | 593.1 (13.3) | 806.3 (13.0) |
| ACV, K = 5 | 405.8 (8.7) | 521.1 (5.8) | 806.3 (13.0) |
| reduction(%) | 21.43 | 12.15 | 0 |
| CV, K = 10 | 509.3 (13.7) | 590.9 (13.3) | 806.3 (13.0) |
| ACV, K = 10 | 370.1 (7.6) | 553.4 (5.8) | 806.3 (13.0) |
| reduction(%) | 27.34 | 6.35 | 0 |
| Bagging | 811.4 (13.1) | 808.3 (13.1) | 810.6 (13.0) |

4.2 Lasso penalization

We also investigate the performance of CV and ACV in the case of large p with the use of sparsity penalization. We use a simulation set-up similar to that of the previous section, with n = 200, but increase p to 1000 and set ρ = 0. The β is set to have 50 non-zero values and 950 zeros. Two different sets of non-zero values are considered: (i) 25 values are randomly generated from Unif(1, 2), and the other 25 from Unif(−2, −1); and (ii) 25 values are taken to be 1 and the other 25 to be −1. We examine 200 equally spaced values of λ in (0.001, 0.4) with K = 10.

We compare CV and ACV in terms of parameter estimation and identification of true non-zero parameters. We again use MSE in (7) to measure the estimation accuracy. The mean and standard error of the 1000 MSE values obtained from 1000 MC samples in each case are reported in Table 2. We see that ACV reduces MSE by about 30% compared with CV in each case. To investigate the accuracy of detecting the 50 true non-zero parameters, we focus on the 50 variables with the largest absolute values of the parameter estimates, and record the number of true non-zero ones among them. In Table 3, we report the distribution of the number of detected true non-zero βs of ACV minus that of CV. In case (i), ACV and CV show identical detection accuracy in 352 out of 1000 runs; ACV performs better than CV in 426 runs, while CV outperforms ACV in 222 runs. In case (ii), ACV detects more true non-zero βs than CV in 466 runs, but fewer in 228 runs. It is also noticeable that ACV outperforms CV by as many as 15 correct detections, while CV outperforms ACV by no more than 6.
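The detection measure described above (count the true non-zero parameters among the variables with the largest absolute estimates) can be written as a short function. The sketch below uses a six-variable toy example with `top=3` in place of the 50 used in the simulations; the function name and the numbers are illustrative assumptions.

```python
import numpy as np

def detected_true_nonzeros(beta_hat, beta_true, top=50):
    """Count true non-zero parameters among the `top` largest |beta_hat| entries."""
    order = np.argsort(-np.abs(beta_hat))[:top]      # indices of the largest estimates
    return int(np.count_nonzero(beta_true[order]))

beta_true = np.array([1.0, -1.0, 0.0, 0.0, 2.0, 0.0])
beta_hat = np.array([0.9, 0.0, 0.3, 0.0, 1.5, -0.1])

# The three largest estimates are variables 5, 1 and 3; two of them are truly non-zero.
n_detected = detected_true_nonzeros(beta_hat, beta_true, top=3)
```

Computing this count for the CV and ACV estimates on the same Monte Carlo sample and taking the difference gives one entry of the distribution summarized in Table 3.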

Table 2.

Estimates of MSE and its standard error (in parentheses) based on 1000 MC samples for CV and ACV.

| Case | CV | ACV | reduction(%) |
|---|---|---|---|
| case (i) | 573.2 (17.8) | 381.3 (6.3) | 33.5 |
| case (ii) | 487.4 (13.3) | 342.8 (5.6) | 29.7 |

Table 3.

The distribution of the number of detected true non-zero βs of CV subtracted from that of ACV, based on 1000 MC samples.

| ACV − CV | −6 to −4 | −3 | −2 | −1 | 0 | 1 | 2 | 3 | 4 | 5 to 7 | 8 to 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| case (i) | 14 | 17 | 51 | 140 | 352 | 170 | 108 | 62 | 31 | 39 | 16 |
| case (ii) | 14 | 15 | 61 | 138 | 306 | 181 | 112 | 58 | 46 | 48 | 21 |

4.3 Quantile smoothing splines

In this section, we investigate applicability of the proposed method ACV in the problem of quantile smoothing splines. We consider the mean of the observations from a sinusoid curve with period 1, which is expressed as

where the $x_i$'s are iid from the standard uniform distribution with n = 200. We consider two distribution settings for the iid ∊i's: (i) $N(0, 0.2^2)$; and (ii) a shifted exponential distribution with median 0 and standard deviation 0.2. A fine grid search of the smoothing parameter is conducted to select the ‘optimal’ value by CV and ACV with L MC samples (L = 1000) and K = 5. We adopt the empirical estimate of MSE by MC samples as,

where g(xi) is the true underlying function, and ĝl(xi) indicates the fitted value at xi from the lth MC sample. The results at several quantiles are shown in Table 4, where reduction(%) stands for the percentage reduction in the average value of MSE achieved by ACV compared with CV. Under the normal error distribution in case (i), the results for q > 0.5 are not reported because of their similarity to those for q < 0.5. The considerable reductions achieved by ACV indicate that its averaging strategy results in better selection of the penalization parameter. In both cases, quantiles in the region of high conditional density of the errors lead to greater reduction (%) in MSE than those in the low density regions. The rationale is that fewer observations are available to estimate the targeted quantile in the low density regions, and thus the advantage of ACV is not as prominent. This can be seen at q = 0.9 in case (ii), where the performances of the two methods are very similar with n = 200. To verify this finding, we increase the sample size to 400 and observe a 5% reduction in MSE by using ACV. Thus, it is important to keep a sufficient number of observations in the hold-out fold to take advantage of ACV.

Table 4.

Estimate of MSE and its standard error (in parentheses) based on 1000 MC samples for CV and ACV. All numbers are multiplied by $10^4$.

| case (i) | q=0.1 | q=0.2 | q=0.3 | q=0.4 | q=0.5 |
|---|---|---|---|---|---|
| CV | 84.25 (1.8) | 63.39 (1.2) | 55.72 (1.1) | 51.63 (1.0) | 50.92 (1.0) |
| ACV | 83.13 (1.8) | 58.83 (1.2) | 50.55 (1.0) | 46.78 (0.9) | 45.10 (0.8) |
| reduction(%) | 1.32 | 7.21 | 9.28 | 9.4 | 11.42 |

| case (ii) | q=0.1 | q=0.25 | q=0.5 | q=0.75 | q=0.9 |
|---|---|---|---|---|---|
| CV | 2.21 (0.06) | 5.02 (0.12) | 13.14 (0.28) | 35.84 (0.08) | 89.67 (2.0) |
| ACV | 1.81 (0.04) | 4.28 (0.09) | 11.81 (0.23) | 33.68 (0.07) | 90.41 (2.3) |
| reduction(%) | 18.28 | 14.75 | 10.08 | 6.03 | −0.82 |

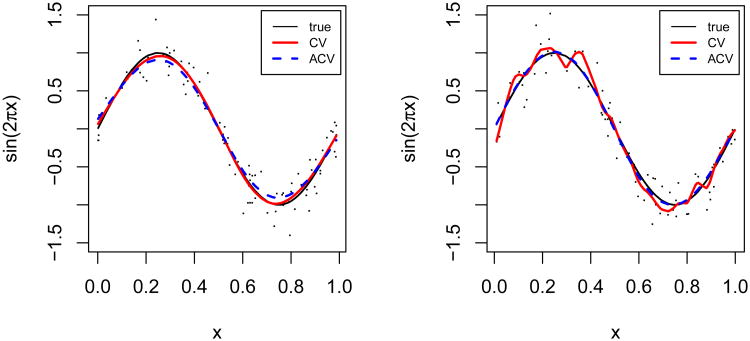

Another phenomenon often observed is data over-fitting by CV. In Figure 1, we demonstrate this point by two plots of the fitted curves that produce the maximum (left panel) and minimum (right panel) values of MSEACV/MSECV among 1000 MC samples, respectively. In the left panel, ACV in fact performs similarly to CV. In contrast, the right panel shows the obvious over-fitting by CV, manifested by its jagged curve.

Figure 1.

Under a normal error distribution, the fitted model selected by ACV performs relatively ‘worst’ compared to that selected by CV (left), and ACV performs relatively ‘best’ compared to CV (right) among 1000 simulated data sets.

5 A real example

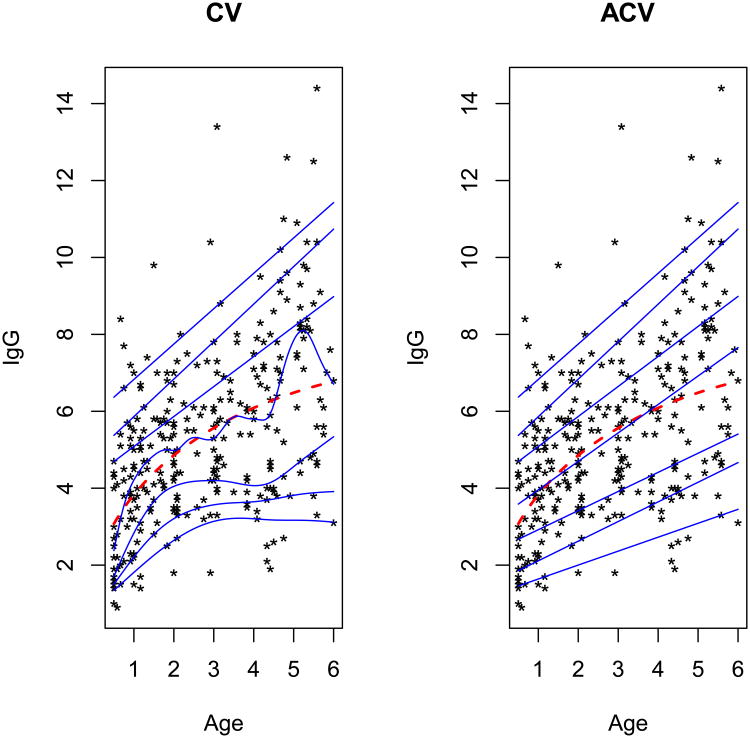

Isaacs et al. (1983) established the reference percentiles of children for the serum concentrations of immunoglobulin-G (IgG) in grams per liter, based on 298 children of ages 6 months to 6 years. They considered various polynomial regressions treating IgG as the response and age (in months) as an explanatory variable. The best-fit model provided by Isaacs et al. (1983) is for the 50th percentile, which is drawn as a dashed line in Figure 2. Royston and Altman (1994) revisited these data and used additive polynomial regressions with smoother fitted lines to eliminate the jaggedness near the boundary ages of 6 months and 6 years. Herein, we aim to select an appropriate smoothing penalty parameter for quantile smoothing splines to obtain well-behaved reference percentiles.

Figure 2.

Fitted quantile smoothing splines at q=0.05, 0.1, 0.25, 0.5, 0.75, 0.9, and 0.95, highlighted in blue from the bottom to the top, in the immunoglobulin-G data set. The dashed fitting line is obtained from the model given by Isaacs et al. (1983). The results of CV and ACV are shown in the left and right panels, respectively.

To find the ‘optimal’ λ̂, we examined 2000 log(λ) values within (−35, −0.2). First, the data is randomly divided into 5 (nearly) equal parts, among which one contains 58 observations and each other part contains 60 observations. To account for the variability due to random split, we iterate the procedure 200 times for both ACV and CV. The penalization parameter values, denoted by λ̂ACV and λ̂CV, are obtained by averaging their respective 200 estimates of λ. The fitted conditional quantile lines of the IgG data at q = 0.05, 0.1, 0.25, 0.5, 0.75, 0.9, and 0.95 are plotted in blue from the bottom to the top in Figure 2.

In the data set, we find mild heteroscedasticity, with variance increasing as age increases. This is reflected in the fits from both CV and ACV. The fitted line at q = 0.5 provided by CV is clearly less smooth than that of ACV. We notice that ACV shares more similarity with the model given by Isaacs et al. (1983), which is the dashed line. At q = 0.25, the non-monotonic fitted line of CV implies that the IgG level of 3-year-old children is higher than that of 4-year-old children, which seems implausible from the perspective of biology. Overall, ACV provides a smoother fit to the data than CV. We also summarize the logarithm-transformed λ̂CV and λ̂ACV at various quantiles in Table 5. We can see that λ̂CV changes considerably across the quantiles, whereas λ̂ACV is stable (around −1.5) due to the averaging effect.

Table 5.

Average values of log(λ̂CV) and log(λ̂ACV) at various quantiles over 200 different random splits.

| Method | q=0.05 | q=0.1 | q=0.25 | q=0.5 | q=0.75 | q=0.9 | q=0.95 |
|---|---|---|---|---|---|---|---|
| CV | −15.49 | −15.59 | −16.73 | −19.86 | −4.02 | −3.85 | −3.84 |
| ACV | −1.58 | −1.62 | −1.7 | −1.64 | −1.42 | −1.5 | −1.52 |

6 Conclusion

In this work, we propose a K-fold averaging cross-validation procedure for model selection and parameter estimation. We establish its theoretical property and show its promise via empirical investigation. Since cross-validation is actively employed in many areas of statistics, ACV can also be applied to a broad range of modeling procedures. For example, ACV can be easily used with penalized model selection methods, for which selection of penalty parameters is of interest. As demonstrated through simulations using LASSO, the ACV method outperforms the CV method in terms of mean squared error and selection accuracy. We also investigate its applicability in quantile smoothing splines, and demonstrate its capability of providing a smoother fit to the data than the traditional CV method.

One should pay attention to the size of the test set and the selection of K when implementing ACV. A large value of K combined with a small sample size may cause an insufficient fit to the data, since K-fold ACV performs model selection based on 1/K of the data. We recommend including a sufficient number of observations in a test set. Note that the desirable size of the test set also depends on the complexity of the underlying true model.

Acknowledgments

Hu's work was partially supported by the National Institutes of Health Grants R01GM080503, R01CA158113, CCSG P30 CA016672, and 5U24CA086368-15.

Appendix

Proof of Theorem 1

Proof

| (8) |

Since and tr(Pk) = | Mk|, we have,

| (9) |

where is the mean number of selected variables from ACV. Thus, |d − d*| ≤ p, which leads to . The second term on the RHS of (5) will disappear when Mf is in Category I, which completes the proof of (a).

When Mf is in Category II, the second term on the RHS of (5) will be

| (10) |

When it is subtracted by the second term on the RHS of equation (6), we have

| (11) |

By Assumption B, the RHS of equation (11) is o(1), which completes the proof of (b).

Proof of Lemma 1

Proof

First, define max_{1≤k≤K} Ak to be the matrix Ak with the largest determinant among k = 1, …, K, where each Ak is a positive definite matrix. Note that det(Ak) > 0 because every positive definite matrix has a positive determinant, and this is the case here since we assume that Xk has full column rank. From the facts that a correlation always lies between −1 and 1, and that det(AkAk′) = det(Ak)det(Ak′), we have

where the last inequality directly comes from the definition. Therefore, we have,

When the β̂k are identical for k = 1, …, K, the above inequality becomes an equality. Moreover, when the models selected from all folds, {β̂k}, are identical, the common model coincides with the model selected by the traditional K-fold CV. Thus, we have β̂ACV = β̂CV, which confirms the last equality.

Contributor Information

Yoonsuh Jung, Email: yoonsuh@waikato.ac.nz.

Jianhua Hu, Email: jhu@mdanderson.org.

References

- Arlot S, Celisse A. A survey of cross-validation procedures for model selection. Statistics Surveys. 2010;4:40–79.

- Bloomfield P, Steiger W. Least Absolute Deviations: Theory, Applications and Algorithms. 1. Birkhauser Boston; Boston: 1983.

- Breiman L. Bagging predictors. Machine Learning. 1996;24(2):123–140.

- Burman P. A comparative study of ordinary cross-validation, v-fold cross-validation and the repeated learning-testing methods. Biometrika. 1989;76(3):503–514.

- Geisser S. The predictive sample reuse method with applications. Journal of the American Statistical Association. 1975;70(350):320–328.

- Hoaglin DC, Welsch RE. The hat matrix in regression and ANOVA. The American Statistician. 1978;32(1):17–22.

- Huber P, Ronchetti EM. Robust Statistics. 2. Wiley; 2009. Probability and Statistics.

- Isaacs D, Altman D, Tidmarsh C, Valman H, Webster A. Serum immunoglobulin concentrations in preschool children measured by laser nephelometry: Reference ranges for IgG, IgA, IgM. Journal of Clinical Pathology. 1983;36:1193–1196. doi: 10.1136/jcp.36.10.1193.

- Koenker R, Bassett G. Regression quantiles. Econometrica. 1978;46(1):33–50.

- Kohavi R. Proceedings of the 14th international joint conference on Artificial intelligence. Vol. 2. Morgan Kaufmann Publishers Inc.; 1995. A study of cross-validation and bootstrap for accuracy estimation and model selection; pp. 1137–1143.

- Nychka D, Gray G, Haaland P, Martin D, O'Connell M. A nonparametric regression approach to syringe grading for quality improvement. Journal of the American Statistical Association. 1995;90(432):1171–1178.

- Raftery AE, Madigan D, Hoeting JA. Bayesian model averaging for linear regression models. Journal of the American Statistical Association. 1997;92(437):179–191.

- Rao CR, Wu YW. Linear model selection by cross-validation. Journal of Statistical Planning and Inference. 2005;128:231–240.

- Ronchetti E, Field C, Blanchard W. Robust linear model selection by cross-validation. Journal of the American Statistical Association. 1997;92(439):1017–1023.

- Royston P, Altman DG. Regression using fractional polynomials of continuous covariates: Parsimonious parametric modelling. Journal of the Royal Statistical Society Series C (Applied Statistics) 1994;43(3):429–467.

- Shao J. Linear model selection by cross-validation. Journal of the American Statistical Association. 1993;88(422):486–494.

- Stone M. Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society Series B (Methodological) 1974;36(2):111–147.

- Stone M. An asymptotic equivalence of choice of model by cross-validation and Akaike's criterion. Journal of the Royal Statistical Society, Series B. 1977;39(1):44–47.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society Series B (Methodological) 1996;58(1):267–288.

- Wong WH. On the consistency of cross-validation in kernel nonparametric regression. The Annals of Statistics. 1983;11(4):1136–1141.

- Zhang P. Model selection via multifold cross validation. The Annals of Statistics. 1993;21(1):299–313.