Abstract

Neuroscience has focused on the detailed implementation of computation, studying neural codes, dynamics and circuits. In machine learning, however, artificial neural networks tend to eschew precisely designed codes, dynamics or circuits in favor of brute force optimization of a cost function, often using simple and relatively uniform initial architectures. Two recent developments have emerged within machine learning that create an opportunity to connect these seemingly divergent perspectives. First, structured architectures are used, including dedicated systems for attention, recursion and various forms of short- and long-term memory storage. Second, cost functions and training procedures have become more complex and are varied across layers and over time. Here we think about the brain in terms of these ideas. We hypothesize that (1) the brain optimizes cost functions, (2) the cost functions are diverse and differ across brain locations and over development, and (3) optimization operates within a pre-structured architecture matched to the computational problems posed by behavior. In support of these hypotheses, we argue that a range of implementations of credit assignment through multiple layers of neurons are compatible with our current knowledge of neural circuitry, and that the brain's specialized systems can be interpreted as enabling efficient optimization for specific problem classes. Such a heterogeneously optimized system, enabled by a series of interacting cost functions, serves to make learning data-efficient and precisely targeted to the needs of the organism. We suggest directions by which neuroscience could seek to refine and test these hypotheses.

Keywords: cost functions, neural networks, neuroscience, cognitive architecture

1. Introduction

Machine learning and neuroscience speak different languages today. Brain science has discovered a dazzling array of brain areas (Solari and Stoner, 2011), cell types, molecules, cellular states, and mechanisms for computation and information storage. Machine learning, in contrast, has largely focused on instantiations of a single principle: function optimization. It has found that simple optimization objectives, like minimizing classification error, can lead to the formation of rich internal representations and powerful algorithmic capabilities in multilayer and recurrent networks (LeCun et al., 2015; Schmidhuber, 2015). Here we seek to connect these perspectives.

The artificial neural networks now prominent in machine learning were, of course, originally inspired by neuroscience (McCulloch and Pitts, 1943). While neuroscience has continued to play a role (Cox and Dean, 2014), many of the major developments were guided by insights into the mathematics of efficient optimization, rather than neuroscientific findings (Sutskever and Martens, 2013). The field has advanced from simple linear systems (Minsky and Papert, 1972), to nonlinear networks (Haykin, 1994), to deep and recurrent networks (LeCun et al., 2015; Schmidhuber, 2015). Backpropagation of error (Werbos, 1974, 1982; Rumelhart et al., 1986) enabled neural networks to be trained efficiently, by providing an efficient means to compute the gradient with respect to the weights of a multi-layer network. Methods of training have improved to include momentum terms, better weight initializations, conjugate gradients and so forth, evolving to the current breed of networks optimized using batch-wise stochastic gradient descent. These developments have little obvious connection to neuroscience.

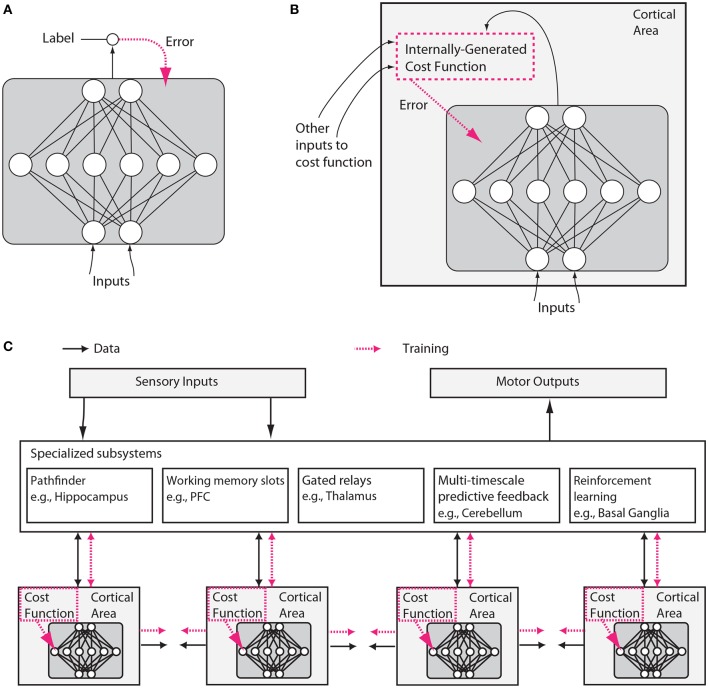

We will argue here, however, that neuroscience and machine learning are again ripe for convergence. Three aspects of machine learning are particularly important in the context of this paper. First, machine learning has focused on the optimization of cost functions (Figure 1A).

Figure 1.

Putative differences between conventional and brain-like neural network designs. (A) In conventional deep learning, supervised training is based on externally-supplied, labeled data. (B) In the brain, supervised training of networks can still occur via gradient descent on an error signal, but this error signal must arise from internally generated cost functions. These cost functions are themselves computed by neural modules specified by both genetics and learning. Internally generated cost functions create heuristics that are used to bootstrap more complex learning. For example, an area which recognizes faces might first be trained to detect faces using simple heuristics, like the presence of two dots above a line, and then further trained to discriminate salient facial expressions using representations arising from unsupervised learning and error signals from other brain areas related to social reward processing. (C) Internally generated cost functions and error-driven training of cortical deep networks form part of a larger architecture containing several specialized systems. Although the trainable cortical areas are schematized as feedforward neural networks here, LSTMs or other types of recurrent networks may be a more accurate analogy, and many neuronal and network properties such as spiking, dendritic computation, neuromodulation, adaptation and homeostatic plasticity, timing-dependent plasticity, direct electrical connections, transient synaptic dynamics, excitatory/inhibitory balance, spontaneous oscillatory activity, axonal conduction delays (Izhikevich, 2006) and others, will influence what and how such networks learn.

Second, recent work in machine learning has started to introduce complex cost functions, those that are not uniform across layers and time, and those that arise from interactions between different parts of a network. For example, introducing the objective of temporal coherence for lower layers (non-uniform cost function over space) improves feature learning (Sermanet and Kavukcuoglu, 2013), cost function schedules (non-uniform cost function over time) improve¹ generalization (Saxe et al., 2013; Goodfellow et al., 2014b; Gülçehre and Bengio, 2016), and adversarial networks, an example of a cost function arising from internal interactions, allow gradient-based training of generative models (Goodfellow et al., 2014a)². Networks that are easier to train are being used to provide “hints” to help bootstrap the training of more powerful networks (Romero et al., 2014).

Third, machine learning has also begun to diversify the architectures that are subject to optimization. It has introduced simple memory cells with multiple persistent states (Hochreiter and Schmidhuber, 1997; Chung et al., 2014), more complex elementary units such as “capsules” and other structures (Delalleau and Bengio, 2011; Hinton et al., 2011; Tang et al., 2012; Livni et al., 2013), content addressable (Graves et al., 2014; Weston et al., 2014) and location addressable memories (Graves et al., 2014), as well as pointers (Kurach et al., 2015) and hard-coded arithmetic operations (Neelakantan et al., 2015).

These three ideas have, so far, not received much attention in neuroscience. We thus formulate these ideas as three hypotheses about the brain, examine evidence for them, and sketch how experiments could test them. But first, let us state the hypotheses more precisely.

1.1. Hypothesis 1 – the brain optimizes cost functions

The central hypothesis for linking the two fields is that biological systems, like many machine-learning systems, are able to optimize cost functions. The idea of cost functions means that neurons in a brain area can somehow change their properties, e.g., the properties of their synapses, so that they get better at doing whatever the cost function defines as their role. Human behavior sometimes approaches optimality in a domain, e.g., during movement (Körding, 2007), which suggests that the brain may have learned optimal strategies. Subjects minimize energy consumption of their movement system (Taylor and Faisal, 2011), and minimize risk and damage to their body, while maximizing financial and movement gains. Computationally, we now know that optimization of trajectories gives rise to elegant solutions for very complex motor tasks (Harris and Wolpert, 1998; Todorov and Jordan, 2002; Mordatch et al., 2012). We suggest that cost function optimization occurs much more generally in shaping the internal representations and processes used by the brain. Importantly, we also suggest that this requires the brain to have mechanisms for efficient credit assignment in multilayer and recurrent networks.

1.2. Hypothesis 2 – cost functions are diverse across areas and change over development

A second realization is that cost functions need not be global. Neurons in different brain areas may optimize different things, e.g., the mean squared error of movements, surprise in a visual stimulus, or the allocation of attention. Importantly, such a cost function could be locally generated. For example, neurons could locally evaluate the quality of their statistical model of their inputs (Figure 1B). Alternatively, cost functions for one area could be generated by another area. Moreover, cost functions may change over time, e.g., guiding young humans to understand simple visual contrasts early on, and faces a bit later³. This could allow the developing brain to bootstrap more complex knowledge based on simpler knowledge. Cost functions in the brain are likely to be complex and to be arranged to vary across areas and over development.

1.3. Hypothesis 3 – specialized systems allow efficient solution of key computational problems

A third realization is that structure matters. The patterns of information flow seem fundamentally different across brain areas, suggesting that they solve distinct computational problems. Some brain areas are highly recurrent, perhaps making them predestined for short-term memory storage (Wang, 2012). Some areas contain cell types that can switch between qualitatively different states of activation, such as a persistent firing mode vs. a transient firing mode, in response to particular neurotransmitters (Hasselmo, 2006). Other areas, like the thalamus, appear to have information from other areas flowing through them, perhaps allowing them to determine information routing (Sherman, 2005). Areas like the basal ganglia are involved in reinforcement learning and gating of discrete decisions (Doya, 1999; Sejnowski and Poizner, 2014). As every programmer knows, specialized algorithms matter for efficient solutions to computational problems, and the brain is likely to make good use of such specialization (Figure 1C).

These ideas are inspired by recent advances in machine learning, but we also propose that the brain has major differences from any of today's machine learning techniques. In particular, the world gives us a relatively limited amount of information that we could use for supervised learning (Fodor and Crowther, 2002). There is a huge amount of information available for unsupervised learning, but there is no reason to assume that a generic unsupervised algorithm, no matter how powerful, would learn the precise things that humans need to know, in the order that they need to know it. The evolutionary challenge of making unsupervised learning solve the “right” problems is, therefore, to find a sequence of cost functions that will deterministically build circuits and behaviors according to prescribed developmental stages, so that in the end a relatively small amount of information suffices to produce the right behavior. For example, a developing duck imprints (Tinbergen, 1965) a template of its parent, and then uses that template to generate goal-targets that help it develop other skills like foraging.

Generalizing from this and from other studies (Minsky, 1977; Ullman et al., 2012), we propose that many of the brain's cost functions arise from such an internal bootstrapping process. Indeed, we propose that biological development and reinforcement learning can, in effect, program the emergence of a sequence of cost functions that precisely anticipates the future needs faced by the brain's internal subsystems, as well as by the organism as a whole. This type of developmentally programmed bootstrapping generates an internal infrastructure of cost functions which is diverse and complex, while simplifying the learning problems faced by the brain's internal processes. Beyond simple tasks like familial imprinting, this type of bootstrapping could extend to higher cognition, e.g., internally generated cost functions could train a developing brain to properly access its memory or to organize its actions in ways that will prove to be useful later on. The potential bootstrapping mechanisms that we will consider operate in the context of unsupervised and reinforcement learning, and go well beyond the types of curriculum learning ideas used in today's machine learning (Bengio et al., 2009).

In the rest of this paper, we will elaborate on these hypotheses. First, we will argue that both local and multi-layer optimization is, perhaps surprisingly, compatible with what we know about the brain. Second, we will argue that cost functions differ across brain areas and change over time and describe how cost functions interacting in an orchestrated way could allow bootstrapping of complex function. Third, we will list a broad set of specialized problems that need to be solved by neural computation, and the brain areas that have structure that seems to be matched to a particular computational problem. We then discuss some implications of the above hypotheses for research approaches in neuroscience and machine learning, and sketch a set of experiments to test these hypotheses. Finally, we discuss this architecture from the perspective of evolution.

2. The brain can optimize cost functions

Much of machine learning is based on efficiently optimizing functions, and, as we will detail below, the ability to use backpropagation of error (Werbos, 1974; Rumelhart et al., 1986) to calculate gradients of arbitrary parametrized functions has been a key breakthrough. In Hypothesis 1, we claim that the brain is also, at least in part⁴, an optimization machine. But what exactly does it mean to say that the brain can optimize cost functions? After all, many processes can be viewed as optimizations. For example, the laws of physics are often viewed as minimizing an action functional, while evolution optimizes the fitness of replicators over a long timescale. To be clear, our main claims are that (a) the brain has powerful mechanisms for credit assignment during learning that allow it to optimize global functions in multi-layer networks by adjusting the properties of each neuron to contribute to the global outcome, and that (b) the brain has mechanisms to specify exactly which cost functions it subjects its networks to, i.e., that the cost functions are highly tunable, shaped by evolution and matched to the animal's ethological needs. Thus, the brain uses cost functions as a key driving force of its development, much as modern machine learning systems do.

To understand the basis of these claims, we must now delve into the details of how the brain might efficiently perform credit assignment throughout large, multi-layered networks, in order to optimize complex functions. We argue that the brain uses several different types of optimization to solve distinct problems. In some structures, it may use genetic pre-specification of circuits for problems that require only limited learning based on data, or it may exploit local optimization to avoid the need to assign credit through many layers of neurons. It may also use a host of proposed circuit structures that would allow it to actually perform, in effect, backpropagation of errors through a multi-layer network, using biologically realistic mechanisms—a feat that had once been widely believed to be biologically implausible (Crick, 1989; Stork, 1989). Potential such mechanisms include circuits that literally backpropagate error derivatives in the manner of conventional backpropagation, as well as circuits that provide other efficient means of approximating the effects of backpropagation, i.e., of rapidly computing the approximate gradient of a cost function relative to any given connection weight in the network. Lastly, the brain may use algorithms that exploit specific aspects of neurophysiology—such as spike timing dependent plasticity, dendritic computation, local excitatory-inhibitory networks, or other properties—as well as the integrated nature of higher-level brain systems. Such mechanisms promise to allow learning capabilities that go even beyond those of current backpropagation networks.

2.1. Local self-organization and optimization without multi-layer credit assignment

Not all learning requires a general-purpose optimization mechanism like gradient descent⁵. Many theories of cortex (George and Hawkins, 2009; Kappel et al., 2014) emphasize potential self-organizing and unsupervised learning properties that may obviate the need for multi-layer backpropagation as such. Hebbian plasticity, which adjusts weights according to correlations in pre-synaptic and post-synaptic activity, is well established⁶. Various versions of Hebbian plasticity (Miller and MacKay, 1994), e.g., with nonlinearities (Brito and Gerstner, 2016), can give rise to different forms of correlation and competition between neurons, leading to the self-organized formation of ocular dominance columns, self-organizing maps and orientation columns (Miller et al., 1989; Ferster and Miller, 2000). Often these types of local self-organization can also be viewed as optimizing a cost function: for example, certain forms of Hebbian plasticity can be viewed as extracting the principal components of the input, which minimizes a reconstruction error (Pehlevan and Chklovskii, 2015).
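As a concrete illustration of a Hebbian rule that extracts a principal component, the sketch below uses Oja's rule, a normalized Hebbian update. This is a textbook rule chosen for illustration and is not the specific model of the works cited above; the function names, the toy data generator, the learning rate and the number of epochs are all assumptions.

```python
import numpy as np

def make_data(n=500, seed=1):
    """Hypothetical toy data: a 2-D Gaussian cloud stretched along (1, 1)/sqrt(2)."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=(n, 2)) * np.array([3.0, 0.5])
    R = np.array([[1.0, -1.0], [1.0, 1.0]]) / np.sqrt(2.0)
    X = z @ R.T
    return X - X.mean(axis=0)  # Oja's rule assumes zero-mean inputs

def oja_first_component(X, lr=0.005, epochs=20, seed=0):
    """Estimate the leading principal direction of X with Oja's rule."""
    rng = np.random.default_rng(seed)
    w = rng.normal(size=X.shape[1])
    w /= np.linalg.norm(w)
    for _ in range(epochs):
        for x in X[rng.permutation(len(X))]:
            y = w @ x                   # post-synaptic activity
            w += lr * y * (x - y * w)   # Hebbian term y*x with a local decay y^2*w
    return w / np.linalg.norm(w)
```

The update uses only quantities available at the synapse (input `x`, output `y`, and the current weight), yet for a small enough learning rate the weight vector should settle close to the top eigenvector of the input covariance, which is the direction that minimizes the reconstruction error of a one-dimensional linear code.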

To generate complex temporal patterns, the brain may also implement other forms of learning that do not require any equivalent of full backpropagation through a multilayer network. For example, “liquid-” (Maass et al., 2002) or “echo-state machines” (Jaeger and Haas, 2004) are randomly connected recurrent networks that form a basis set (also known as a “reservoir”) of random filters, which can be harnessed for learning with tunable readout weights. Variants exhibiting chaotic, spontaneous dynamics can even be trained by feeding back readouts into the network and suppressing the chaotic activity (Sussillo and Abbott, 2009). Learning only the readout layer makes the optimization problem much simpler (indeed, equivalent to regression for supervised learning). Additionally, echo state networks can be trained by reinforcement learning as well as supervised learning (Bush, 2007; Hoerzer et al., 2014). Reservoirs of random nonlinear filters are one interpretation of the diverse, high-dimensional, mixed-selectivity tuning properties of many neurons, e.g., in the prefrontal cortex (Enel et al., 2016). Other variants of learning rules that modify only a fraction of the synapses inside a random network are being developed as models of biological working memory and sequence generation (Rajan et al., 2016).
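The claim that learning only the readout reduces to regression can be sketched as follows. This is a minimal echo-state-style example under assumed settings (reservoir size, spectral radius, input scaling, ridge penalty and the sine-prediction task are all illustrative choices, not taken from the cited papers).

```python
import numpy as np

def run_reservoir(u, n_res=200, spectral_radius=0.9, seed=0):
    """Drive a fixed random recurrent network with the scalar input sequence u."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_res, n_res))
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))  # rescale recurrent weights
    w_in = rng.uniform(-0.5, 0.5, size=n_res)
    x = np.zeros(n_res)
    states = np.empty((len(u), n_res))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + w_in * u_t)   # the recurrent weights are never trained
        states[t] = x
    return states

def fit_readout(states, targets, ridge=1e-6):
    """Train only the linear readout, by ridge regression."""
    A = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(A, states.T @ targets)

def sine_prediction_error(n_steps=1200, washout=100, n_train=800):
    """Fit a one-step-ahead predictor of a sine wave; return held-out mean squared error."""
    signal = np.sin(0.2 * np.arange(n_steps + 1))
    u, target = signal[:-1], signal[1:]
    states = run_reservoir(u)
    train = slice(washout, washout + n_train)
    test = slice(washout + n_train, n_steps)
    w_out = fit_readout(states[train], target[train])
    return np.mean((states[test] @ w_out - target[test]) ** 2)
```

All of the learning happens in `fit_readout`, a single linear solve; the reservoir itself only supplies a fixed set of nonlinear filters of the input history.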

2.2. Biological implementation of optimization

We argue that the above mechanisms of local self-organization are likely insufficient to account for the brain's powerful learning performance (Brea and Gerstner, 2016). To elaborate on the need for an efficient means of gradient computation in the brain, we will first place backpropagation into its computational context (Hinton, 1989; Baldi and Sadowski, 2015). Then we will explain how the brain could plausibly implement approximations of gradient descent.

2.2.1. The need for efficient gradient descent in multi-layer networks

The simplest mechanism to perform cost function optimization is sometimes known as the “twiddle” algorithm or, more technically, as “serial perturbation.” This mechanism works by perturbing (i.e., “twiddling”), with a small increment, a single weight in the network, and verifying improvement by measuring whether the cost function has decreased compared to the network's performance with the weight unperturbed. If improvement is noticeable, the perturbation is used as a direction of change to the weight; otherwise, the weight is changed in the opposite direction (or not changed at all). Serial perturbation is therefore a method of “coordinate descent” on the cost, but it is slow and requires global coordination: each synapse in turn is perturbed while others remain fixed.
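A minimal sketch of serial perturbation on a toy linear regression cost is given below. The problem, the step size and the choice to accept only perturbations that lower the cost (the "not changed at all" variant, extended to try both directions) are assumptions made for illustration.

```python
import numpy as np

def make_problem(n=100, d=5, seed=0):
    """Hypothetical toy task: recover the weights of a noiseless linear map."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, d))
    w_true = rng.normal(size=d)
    return X, X @ w_true

def mse(w, X, y):
    return np.mean((X @ w - y) ** 2)

def serial_perturbation(w, X, y, step=0.02, sweeps=400):
    """Twiddle one weight at a time, keeping a change only if the cost drops."""
    w = w.copy()
    for _ in range(sweeps):
        for i in range(len(w)):              # all other weights stay fixed
            base = mse(w, X, y)
            for direction in (+step, -step):
                w[i] += direction
                if mse(w, X, y) < base:      # improvement: keep the perturbation
                    break
                w[i] -= direction            # no improvement: undo it
    return w
```

Each weight update costs at least one extra evaluation of the whole network, and the weights must take turns, which is the global coordination and slowness described above.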

Weight perturbation (or parallel perturbation) perturbs all of the weights in the network at once. It is able to optimize small networks to perform tasks but generally suffers from high variance. That is, the measurement of the gradient direction is noisy and changes drastically from perturbation to perturbation because a weight's influence on the cost is masked by the changes of all other weights, and there is only one scalar feedback signal indicating the change in the cost⁷. Weight perturbation is dramatically inefficient for large networks. In fact, parallel and serial perturbation learn at approximately the same rate if the time measure counts the number of times the network propagates information from input to output (Werfel et al., 2005).

Some efficiency gain can be achieved by perturbing neural activities instead of synaptic weights, acknowledging the fact that any long-range effect of a synapse is mediated through a neuron. Like weight perturbation and unlike serial perturbation, minimal global coordination is needed: each neuron only needs to receive a feedback signal indicating the global cost. The variance of node perturbation's gradient estimate is far smaller than that of weight perturbation under the assumptions that either all neurons or all weights, respectively, are perturbed and that they are perturbed at the same frequency. In this case, node perturbation's variance is proportional to the number of cells in the network, not the number of synapses.
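The difference in variance between weight perturbation and node perturbation can be checked numerically. The sketch below assumes a single linear layer with a squared-error cost on one input pattern and Gaussian perturbations; the layer sizes, noise scale and number of trials are illustrative choices.

```python
import numpy as np

def gradient_estimates(n_in=50, n_out=5, sigma=1e-4, trials=2000, seed=0):
    """Return the relative squared error of weight- and node-perturbation
    gradient estimates, each averaged over `trials` single perturbations."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_out, n_in)) / np.sqrt(n_in)
    x = rng.normal(size=n_in)
    t = rng.normal(size=n_out)

    def cost_from_output(y):
        return 0.5 * np.sum((y - t) ** 2)

    y = W @ x
    base = cost_from_output(y)
    true_grad = np.outer(y - t, x)           # exact gradient, for reference only

    err_weight, err_node = 0.0, 0.0
    for _ in range(trials):
        # Weight perturbation: jitter every synapse, observe one scalar cost change.
        xi = rng.normal(size=W.shape)
        d_cost = cost_from_output((W + sigma * xi) @ x) - base
        g_weight = (d_cost / sigma) * xi
        # Node perturbation: jitter each output unit, then credit its synapses via x.
        zeta = rng.normal(size=n_out)
        d_cost = cost_from_output(y + sigma * zeta) - base
        g_node = np.outer((d_cost / sigma) * zeta, x)
        err_weight += np.sum((g_weight - true_grad) ** 2)
        err_node += np.sum((g_node - true_grad) ** 2)
    scale = trials * np.sum(true_grad ** 2)
    return err_weight / scale, err_node / scale
```

For Gaussian perturbations and small `sigma`, a first-order calculation suggests relative errors of roughly `n_in * n_out + 1` for weight perturbation and `n_out + 1` for node perturbation, in line with the statement that the variance scales with the number of synapses in one case and the number of cells in the other.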

All of these approaches are slow either due to the time needed for serial iteration over all weights or the time needed for averaging over low signal-to-noise ratio gradient estimates. To their credit however, none of these approaches requires more than knowledge of local activities and the single global cost signal. Real neural circuits in the brain have mechanisms (e.g., diffusible neuromodulators) that appear to code the signals relevant to implementing those algorithms. In many cases, for example in reinforcement learning, the cost function, which is computed based on interaction with an unknown environment, cannot be differentiated directly, and an agent has no choice but to deploy clever twiddling to explore at some level of the system (Williams, 1992).

Backpropagation, in contrast, works by computing the sensitivity of the cost function to each weight based on the layered structure of the system. The derivatives of the cost function with respect to the last layer can be used to compute the derivatives of the cost function with respect to the penultimate layer, and so on, all the way down to the earliest layers⁸. Backpropagation can be computed rapidly, and for a single input-output pattern, it exhibits no variance in its gradient estimate. The backpropagated gradient has no more noise for a large system than for a small system, so deep and wide architectures with great computational power can be trained efficiently.
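The layer-by-layer recursion can be written out for a small two-layer network and checked against a finite-difference estimate, which is effectively serial perturbation applied to every weight. The network shape, the tanh nonlinearity and the squared-error cost are assumptions chosen for illustration.

```python
import numpy as np

def forward(params, x):
    W1, W2 = params
    h = np.tanh(W1 @ x)      # hidden layer
    y = W2 @ h               # linear output layer
    return h, y

def cost(params, x, t):
    _, y = forward(params, x)
    return 0.5 * np.sum((y - t) ** 2)

def backprop(params, x, t):
    """Exact gradient from one forward and one backward pass."""
    W1, W2 = params
    h, y = forward(params, x)
    delta_out = y - t                                  # dC/dy at the last layer
    grad_W2 = np.outer(delta_out, h)
    delta_hid = (W2.T @ delta_out) * (1.0 - h ** 2)    # passed back through W2 and tanh
    grad_W1 = np.outer(delta_hid, x)
    return grad_W1, grad_W2

def finite_difference(params, x, t, eps=1e-6):
    """Reference gradient: two cost evaluations per weight."""
    grads = []
    for W in params:
        g = np.zeros_like(W)
        for idx in np.ndindex(W.shape):
            old = W[idx]
            W[idx] = old + eps
            c_plus = cost(params, x, t)
            W[idx] = old - eps
            c_minus = cost(params, x, t)
            W[idx] = old                               # restore the weight
            g[idx] = (c_plus - c_minus) / (2 * eps)
        grads.append(g)
    return grads
```

`backprop` touches each weight once, whereas `finite_difference` needs two full forward passes per weight; the gap grows with network size, which is the efficiency argument made above.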

2.2.2. Biologically plausible approximations of gradient descent

To permit biological learning with efficiency approaching that of machine learning methods, some provision for more sophisticated gradient propagation may be suspected. Contrary to what was once a common assumption, there are now many proposed “biologically plausible” mechanisms by which a neural circuit could implement optimization algorithms that, like backpropagation, can efficiently make use of the gradient. These include Generalized Recirculation (O'Reilly, 1996), Contrastive Hebbian Learning (Xie and Seung, 2003), random feedback weights together with synaptic homeostasis (Lillicrap et al., 2014; Liao et al., 2015), spike timing dependent plasticity (STDP) with iterative inference and target propagation (Bengio et al., 2015a; Scellier and Bengio, 2016), complex neurons with backpropagating action-potentials (Körding and König, 2000), and others (Balduzzi et al., 2014). While these mechanisms differ in detail, they all invoke feedback connections that carry error phasically. Learning occurs by comparing a prediction with a target, and the prediction error is used to drive top-down changes in bottom-up activity.

As an example, consider O'Reilly's temporally eXtended Contrastive Attractor Learning (XCAL) algorithm (O'Reilly et al., 2012, 2014b). Suppose we have a multilayer neural network with an input layer, an output layer, and a set of hidden layers in between. O'Reilly showed that the same functionality as backpropagation can be implemented by a bidirectional network with the same weights but symmetric connections. After computing the outputs using the forward connections only, we set the output neurons to the values they should have. The dynamics of the network then cause the hidden layers' activities to evolve toward a stable attractor state linking input to output. The XCAL algorithm performs a type of local modified Hebbian learning at each synapse in the network during this process (O'Reilly et al., 2012). The XCAL Hebbian learning rule compares the local synaptic activity (pre x post) during the early phase of this settling (before the attractor state is reached) to the final phase (once the attractor state has been reached), and adjusts the weights in a way that should make the early phase reflect the later phase more closely. These contrastive Hebbian learning methods even work when the connection weights are not precisely symmetric (O'Reilly, 1996). XCAL has been implemented in biologically plausible conductance-based neurons and basically implements the backpropagation of error approach.

Approximations to backpropagation could also be enabled by the millisecond-scale timing of neural activities (O'Reilly et al., 2014b). Spike timing dependent plasticity (STDP) (Markram et al., 1997), for example, is a feature of some neurons in which the sign of the synaptic weight change depends on the precise millisecond-scale relative timing of pre-synaptic and post-synaptic spikes. This is conventionally interpreted as Hebbian plasticity that measures the potential for a causal relationship between the pre-synaptic and post-synaptic spikes: a pre-synaptic spike could have contributed to causing a post-synaptic spike only if it occurs shortly beforehand⁹. To enable a backpropagation mechanism, Hinton has suggested an alternative interpretation: that neurons could encode the types of error derivatives needed for backpropagation in the temporal derivatives of their firing rates (Hinton, 2007, 2016). STDP then corresponds to a learning rule that is sensitive to these error derivatives (Xie and Seung, 2000; Bengio et al., 2015b). In other words, in an appropriate network context, STDP learning could give rise to a biological implementation of backpropagation¹⁰.
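The timing dependence of STDP is often summarized by a pair-based exponential window. The sketch below uses that common phenomenological form; the amplitudes and the time constant are placeholder values assumed for illustration, not measurements from the cited studies.

```python
import numpy as np

def stdp_weight_change(dt_ms, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Weight change for a spike pair with dt_ms = t_post - t_pre (milliseconds).

    Pre-before-post (dt_ms > 0) potentiates; post-before-pre (dt_ms < 0) depresses.
    Parameter values are illustrative assumptions.
    """
    dt_ms = np.asarray(dt_ms, dtype=float)
    magnitude = np.exp(-np.abs(dt_ms) / tau_ms)   # effect decays with the timing gap
    return np.where(dt_ms > 0, a_plus * magnitude,
                    np.where(dt_ms < 0, -a_minus * magnitude, 0.0))
```

Because the sign of the change flips with the order of the spikes, a rule of this shape is sensitive to whether post-synaptic activity is rising or falling around the time of pre-synaptic input, which is the property the rate-derivative interpretation relies on.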

Another possible mechanism, by which biological neural networks could approximate backpropagation, is “feedback alignment” (Lillicrap et al., 2014; Liao et al., 2015). There, the feedback pathway in backpropagation, by which error derivatives at a layer are computed from error derivatives at the subsequent layer, is replaced by a set of random feedback connections, with no dependence on the forward weights. Subject to the existence of a synaptic normalization mechanism and approximate sign-concordance between the feedforward and feedback connections (Liao et al., 2015), this mechanism of computing error derivatives works nearly as well as backpropagation on a variety of tasks. In effect, the forward weights are able to adapt to bring the network into a regime in which the random backwards weights actually carry the information that is useful for approximating the gradient. This is a remarkable and surprising finding, and is indicative of the fact that our understanding of gradient descent optimization, and specifically of the mechanisms by which backpropagation itself functions, is still incomplete. In neuroscience, meanwhile, we find feedback connections almost wherever we find feed-forward connections, and their role is the subject of diverse theories (Callaway, 2004; Maass et al., 2007). It should be noted that feedback alignment as such does not specify exactly how neurons represent and make use of the error signals; it only relaxes a constraint on the transport of the error signals. Thus, feedback alignment is more a primitive that can be used in fully biological (approximate) implementations of backpropagation, than a fully biological implementation in its own right. As such, it may be possible to incorporate it into several of the other schemes discussed here.
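The core substitution in feedback alignment, a fixed random matrix in place of the transposed forward weights, can be sketched in a few lines. This is a bare-bones version on an assumed toy regression task; it omits the synaptic normalization and sign-concordance conditions mentioned above, and the task, layer sizes, initialization and learning rate are all illustrative assumptions.

```python
import numpy as np

def train_feedback_alignment(steps=3000, lr=0.05, seed=0):
    """Train a two-layer network using random feedback weights B instead of W2.T."""
    rng = np.random.default_rng(seed)
    n_in, n_hid, n_out, n_samples = 10, 20, 5, 200
    X = rng.normal(size=(n_samples, n_in))
    teacher = rng.normal(size=(n_in, n_out)) / np.sqrt(n_in)
    T = X @ teacher                              # targets from a hypothetical linear teacher
    W1 = 0.1 * rng.normal(size=(n_in, n_hid))    # forward weights, layer 1
    W2 = np.zeros((n_hid, n_out))                # forward weights, layer 2
    B = 0.1 * rng.normal(size=(n_out, n_hid))    # fixed random feedback, never updated
    losses = []
    for _ in range(steps):
        H = np.tanh(X @ W1)
        E = H @ W2 - T                           # output error
        losses.append(0.5 * np.mean(np.sum(E ** 2, axis=1)))
        delta_hid = (E @ B) * (1.0 - H ** 2)     # backpropagation would use E @ W2.T here
        W2 -= lr * H.T @ E / n_samples
        W1 -= lr * X.T @ delta_hid / n_samples
    # Compare the feedback signal with the one backpropagation would have sent,
    # using the error from the last iteration.
    fa_signal = E @ B
    bp_signal = E @ W2.T
    cosine = np.sum(fa_signal * bp_signal) / (
        np.linalg.norm(fa_signal) * np.linalg.norm(bp_signal))
    return np.array(losses), cosine
```

The returned cosine gives a rough measure of how well the random feedback signal agrees with the true backpropagated signal after training; a positive value would correspond to the alignment effect described above, though this sketch makes no guarantee about its size.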

The above “biological” implementations of backpropagation still lack some key aspects of biological realism. For example, in the brain, neurons tend to be either excitatory or inhibitory but not both, whereas in artificial neural networks a single neuron may send both excitatory and inhibitory signals to its downstream neurons. Fortunately, this constraint is unlikely to limit the functions that can be learned (Parisien et al., 2008; Tripp and Eliasmith, 2016). Other biological considerations, however, need to be looked at in more detail: the highly recurrent nature of biological neural networks, which show rich dynamics in time, and the fact that most neurons in mammalian brains communicate via spikes. We now consider these two issues in turn.

2.2.2.1. Temporal credit assignment:

The biological implementations of backpropagation proposed above, while applicable to feedforward networks, do not give a natural implementation of “backpropagation through time” (BPTT) (Werbos, 1990) for recurrent networks, which is widely used in machine learning for training recurrent networks on sequential processing tasks. BPTT “unfolds” a recurrent network across multiple discrete time steps and then runs backpropagation on the unfolded network to assign credit to particular units at particular time steps¹¹. While the network unfolding procedure of BPTT itself does not seem biologically plausible, to our intuition, it is unclear to what extent temporal credit assignment is truly needed (Ollivier and Charpiat, 2015) for learning particular temporally extended tasks.
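The unfolding step can be made explicit for a small recurrent network. The sketch below assumes a vanilla tanh recurrence with a squared-error cost on the final hidden state only; these choices, and all names, are illustrative.

```python
import numpy as np

def rnn_cost(W, U, xs, target):
    """Run the recurrence h_t = tanh(W h_{t-1} + U x_t) and score the final state."""
    h = np.zeros(W.shape[0])
    for x in xs:
        h = np.tanh(W @ h + U @ x)
    return 0.5 * np.sum((h - target) ** 2)

def bptt(W, U, xs, target):
    """Gradients of rnn_cost with respect to W and U via backpropagation through time."""
    # Forward pass: store every hidden state of the unfolded network.
    hs = [np.zeros(W.shape[0])]
    for x in xs:
        hs.append(np.tanh(W @ hs[-1] + U @ x))
    grad_W = np.zeros_like(W)
    grad_U = np.zeros_like(U)
    dh = hs[-1] - target                       # dC/dh at the final time step
    # Backward pass: walk the unfolded copies from last to first.
    for step in reversed(range(len(xs))):
        da = dh * (1.0 - hs[step + 1] ** 2)    # through the tanh at this step
        grad_W += np.outer(da, hs[step])       # the same W is shared by every copy
        grad_U += np.outer(da, xs[step])
        dh = W.T @ da                          # credit passed to the previous time step
    return grad_W, grad_U
```

The backward loop needs the full stored history `hs` and runs in reverse time order, which are the two features that make a literal neural implementation of BPTT hard to picture.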

If the system is given access to appropriate memory stores and representations (Buonomano and Merzenich, 1995; Gershman et al., 2012, 2014) of temporal context, this could potentially mitigate the need for temporal credit assignment as such; in effect, memory systems could “spatialize” the problem of temporal credit assignment¹². For example, memory networks (Weston et al., 2014) store everything by default up to a certain buffer size, eliminating the need to perform credit assignment over the write-to-memory events, such that the network only needs to perform credit assignment over the read-from-memory events. In another example, certain network architectures that are superficially very deep, but which possess particular types of “skip connections,” can actually be seen as ensembles of comparatively shallow networks (Veit et al., 2016); applied in the time domain, this could limit the need to propagate errors far backwards in time. Other, similar specializations or higher levels of structure could, potentially, further ease the burden on credit assignment.

Can generic recurrent networks perform temporal credit assignment in a way that is more biologically plausible than BPTT? Indeed, new discoveries are being made about the capacity for supervised learning in continuous-time recurrent networks with more realistic synapses and neural integration properties. In internal FORCE learning (Sussillo and Abbott, 2009), internally generated random fluctuations inside a chaotic recurrent network are adjusted to provide feedback signals that drive weight changes internal to the network while the outputs are clamped to desired patterns. This is made possible by a learning procedure that rapidly adjusts the network output to a state where it is close to the clamped values, and exerts continuous control to keep this difference small throughout the learning process¹³. This procedure is able to control and exploit the chaotic dynamical patterns that are spontaneously generated by the network.

Werbos has proposed in his “error critic” that an online approximation to BPTT can be achieved by learning to predict the backward-through-time gradient signal (costate) in a manner analogous to the prediction of value functions in reinforcement learning (Werbos and Si, 2004). This kind of idea was recently applied in (Jaderberg et al., 2016) to allow decoupling of different parts of a network during training and to facilitate backpropagation through time. Broadly, we are only beginning to understand how neural activity can itself represent the time variable (Xu et al., 2014; Finnerty et al., 2015)14, and how recurrent networks can learn to generate trajectories of population activity over time (Liu and Buonomano, 2009). Moreover, as we discuss below, a number of cortical models also propose means, other than BPTT, by which networks could be trained on sequential prediction tasks, even in an online fashion (O'Reilly et al., 2014b; Cui et al., 2015; Brea et al., 2016). A broad range of ideas can be used to approximate BPTT in more realistic ways.

2.2.2.2. Spiking networks:

It has been difficult to apply gradient descent learning directly to spiking neural networks15,16, although there do exist learning rules for doing so in specific representational contexts and network structures (Bekolay et al., 2013). A number of optimization procedures have been used to generate, indirectly, spiking networks which can perform complex tasks, by performing optimization on a continuous representation of the network dynamics and embedding variables into high-dimensional spaces with many spiking neurons representing each variable (Thalmeier et al., 2015; Abbott et al., 2016; DePasquale et al., 2016; Komer and Eliasmith, 2016). The use of recurrent connections with multiple timescales can remove the need for backpropagation in the direct training of spiking recurrent networks (Bourdoukan and Denève, 2015). Fast connections maintain the network in a state where slow connections have local access to a global error signal. While the biological realism of these methods is still unknown, they all allow connection weights to be learned in spiking networks.

These and other novel learning procedures illustrate the fact that we are only beginning to understand the connections between the temporal dynamics of biologically realistic networks, and mechanisms of temporal and spatial credit assignment. Nevertheless, we argue here that existing evidence suggests that biologically plausible neural networks can solve these problems—in other words, it is possible to efficiently optimize complex functions of temporal history in the context of spiking networks of biologically realistic neurons. In any case, there is little doubt that spiking recurrent networks using realistic population coding schemes can, with an appropriate choice of connection weights, compute complicated, cognitively relevant functions17. The question is how the developing brain efficiently learns such complex functions.

2.3. Other principles for biological learning

The brain has mechanisms and structures that could support learning mechanisms different from typical gradient-based optimization algorithms employed in artificial neural networks.

2.3.1. Exploiting biological neural mechanisms

The complex physiology of individual biological neurons may not only help explain how some form of efficient gradient descent could be implemented within the brain, but also could provide mechanisms for learning that go beyond backpropagation. This suggests that the brain may have discovered mechanisms of credit assignment quite different from those dreamt up by machine learning.

One such biological primitive is dendritic computation, which could impact prospects for learning algorithms in several ways. First, real neurons are highly nonlinear (Antic et al., 2010), with the dendrites of each single neuron implementing18 something computationally similar to a three-layer neural network (Mel, 1992)19. Individual neurons thus should not be regarded as single “nodes” but as multi-component sub-networks. Second, when a neuron spikes, its action potential propagates back from the soma into the dendritic tree. However, it propagates more strongly into the branches of the dendritic tree that have been active (Williams and Stuart, 2000), potentially simplifying the problem of credit assignment (Körding and König, 2000). Third, neurons can have multiple somewhat independent dendritic compartments, as well as a somewhat independent somatic compartment, which means that the neuron should be thought of as storing more than one variable. Thus, there is the possibility for a neuron to store both its activation itself, and the error derivative of a cost function with respect to its activation, as required in backpropagation, and biological implementations of backpropagation based on this principle have been proposed (Körding and König, 2001; Schiess et al., 2016)20. Overall, the implications of dendritic computation for credit assignment in deep networks are only beginning to be considered21. But it is clear that the types of bi-directional, non-linear, multi-variate interactions that are possible inside a single neuron could support gradient descent learning or other powerful optimization mechanisms.

Beyond dendritic computation, diverse mechanisms (Marblestone and Boyden, 2014) like retrograde (post-synaptic to pre-synaptic) signals using cannabinoids (Wilson and Nicoll, 2001), or rapidly-diffusing gases such as nitric oxide (Arancio et al., 1996), are among many that could enable learning rules that go beyond conventional conceptions of backpropagation. Harris has suggested (Harris, 2008; Lewis and Harris, 2014) how slow, retroaxonal (i.e., from the outgoing synapses back to the parent cell body) transport of molecules like neurotrophins could allow neural networks to implement an analog of an exchangeable currency in economics, allowing networks to self-organize to efficiently provide information to downstream “consumer” neurons that are trained via faster and more direct error signals. The existence of these diverse mechanisms may call into question traditional, intuitive notions of “biological plausibility” for learning algorithms.

Another potentially important biological primitive is neuromodulation. The same neuron or circuit can exhibit different input-output responses and plasticity depending on a global circuit state, as reflected by the concentrations of various neuromodulators like dopamine, serotonin, norepinephrine, acetylcholine, and hundreds of different neuropeptides such as opioids (Bargmann, 2012; Bargmann and Marder, 2013). These modulators interact in complex and cell-type-specific ways to influence circuit function. Interactions with glial cells also play a role in neural signaling and neuromodulation, leading to the concept of “tripartite” synapses that include a glial contribution (Perea et al., 2009). Modulation could have many implications for learning. First, modulators can be used to gate synaptic plasticity on and off selectively in different areas and at different times, allowing precise, rapidly updated orchestration of where and when cost functions are applied. Furthermore, it has been argued that a single neural circuit can be thought of as multiple overlapping circuits with modulation switching between them (Bargmann, 2012; Bargmann and Marder, 2013). In a learning context, this could potentially allow sharing of synaptic weight information between overlapping circuits. Dayan (2012) discusses further computational aspects of neuromodulation. Overall, neuromodulation seems to expand the range of possible algorithms that could be used for optimization.

2.3.2. Learning in the cortical sheet

A number of models attempt to explain cortical learning on the basis of specific architectural features of the 6-layered cortical sheet. These models generally agree that a primary function of the cortex is some form of unsupervised learning via prediction (O'Reilly et al., 2014b; Brea et al., 2016)22. Some cortical learning models are explicit attempts to map cortical structure onto the framework of message-passing algorithms for Bayesian inference (Lee and Mumford, 2003; Dean, 2005; George and Hawkins, 2009), while others start with particular aspects of cortical neurophysiology and seek to explain those in terms of a learning function, or in terms of a computational function, e.g., hierarchical clustering (Rodriguez et al., 2004). For example, the nonlinear and dynamical properties of cortical pyramidal neurons—the principal excitatory neuron type in cortex (Shepherd, 2014)—are of particular interest here, especially because these neurons have multiple dendritic zones that are targeted by different kinds of projections, which may allow the pyramidal neuron to make comparisons of top-down and bottom-up inputs23.

Other aspects of the laminar cortical architecture could be crucial to how the brain implements learning. Local inhibitory neurons targeting particular dendritic compartments of the L5 pyramidal neurons could be used to exert precise control over when and how the relevant feedback signals and associative mechanisms are utilized. Notably, local inhibitory networks could also give rise to competition (Petrov et al., 2010) between different representations in the cortex, perhaps allowing one cortical column to suppress others nearby, or perhaps even to send more sophisticated messages to gate the state transitions of its neighbors (Bach and Herger, 2015). Moreover, recurrent connectivity with the thalamus, structured bursts of spiking, and cortical oscillations (not to mention other mechanisms like neuromodulation) could control the storage of information over time, to facilitate learning based on temporal prediction. These concepts begin to suggest preliminary, exploratory models for how the detailed anatomy and physiology of the cortex could be interpreted within a machine-learning framework that goes beyond backpropagation. But these are early days: we still lack detailed structural/molecular and functional maps of even a single local cortical microcircuit.

2.3.3. One-shot learning

Human learning is often one-shot: it can take just a single exposure to a stimulus to never forget it, as well as to generalize from it to new examples. One way of allowing networks to have such properties is what is described by I-theory, in the context of learning invariant representations for object recognition (Anselmi et al., 2015). Instead of training via gradient descent, image templates are stored in the weights of simple-complex cell networks while objects undergo transformations, similar to the use of stored templates in HMAX (Serre et al., 2007). The theory then aims to show that objects can be represented invariantly and discriminatively using a single sample, even of a new class (Anselmi et al., 2015)24.

Additionally, the nervous system may have a way of quickly storing and replaying sequences of events. This would allow the brain to move an item from episodic memory into a long-term memory stored in the weights of a cortical network (Ji and Wilson, 2007), by replaying the memory over and over. This solution effectively uses many iterations of weight updating to fully learn a single item, even if one has only been exposed to it once. Alternatively, the brain could rapidly store an episodic memory and then retrieve it later without the need to perform slow gradient updates, which has proven to be useful for fast reinforcement learning in scenarios with limited available data (Blundell et al., 2016).
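The second alternative, storing an episode once and retrieving it later without gradient updates, can be illustrated with a minimal sketch. It is only loosely inspired by episodic control and is not the method of Blundell et al. (2016); the class name `EpisodicMemory`, its methods, and the state and action labels are hypothetical names introduced here for illustration.

```python
class EpisodicMemory:
    """Toy lookup table: one write per experience, no gradient steps."""

    def __init__(self):
        self.table = {}  # maps (state, action) to the best return seen so far

    def write(self, state, action, episode_return):
        # A single exposure is enough to store the experience.
        key = (state, action)
        previous = self.table.get(key, float("-inf"))
        self.table[key] = max(previous, episode_return)

    def best_action(self, state, actions):
        # Retrieval is a direct lookup; None means nothing is remembered.
        known = [(self.table[(state, a)], a)
                 for a in actions if (state, a) in self.table]
        return max(known)[1] if known else None


memory = EpisodicMemory()
memory.write("s0", "left", 1.0)
memory.write("s0", "right", 3.0)
memory.write("s0", "right", 2.0)   # a worse outcome does not overwrite the best one
choice = memory.best_action("s0", ["left", "right"])
```

In this sketch a single high-return experience immediately changes behavior, whereas a slowly trained network would need many replays of the same item to reach the same result.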

Finally, higher-level systems in the brain may be able to implement Bayesian learning of sequential programs, which is a powerful means of one-shot learning (Lake et al., 2015). This type of cognition likely relies on an interaction between multiple brain areas such as the prefrontal cortex and basal ganglia.

These potential substrates of one-shot learning rely on mechanisms other than simple gradient descent. It should be noted, though, that recent architectural advances, including specialized spatial attention and feedback mechanisms (Rezende et al., 2016), as well as specialized memory mechanisms (Santoro et al., 2016), do allow some types of one-shot generalization to be driven by backpropagation-based learning.

2.3.4. Active learning

Human learning is often active and deliberate. It seems likely that, in human learning, actions are chosen so as to generate interesting training examples, and sometimes also to test specific hypotheses. Such ideas of active learning and “child as scientist” go back to Piaget and have been elaborated more recently (Gopnik et al., 2000). We want our learning to be based on maximally informative samples, and active querying of the environment (or of internal subsystems) provides a route to this.

At some level of organization, of course, it would seem useful for a learning system to develop explicit representations of its uncertainty, since this can be used to guide the system to actively seek the information that would reduce its uncertainty most quickly. Moreover, there are population coding mechanisms that could support explicit probabilistic computations (Zemel and Dayan, 1997; Sahani and Dayan, 2003; Rao, 2004; Ma et al., 2006; Eliasmith and Martens, 2011; Gershman and Beck, 2016). Yet it is unclear to what extent and at what levels the brain uses an explicitly probabilistic framework, or to what extent probabilistic computations are emergent from other learning processes (Orhan and Ma, 2016)25,26.

Standard gradient descent does not incorporate any such adaptive sampling mechanism, e.g., it does not deliberately sample data so as to maximally reduce its uncertainty. Interestingly, however, stochastic gradient descent can be used to generate a system that samples adaptively (Alain et al., 2015; Bouchard et al., 2015). In other words, a system can learn, by gradient descent, how to choose its own input data samples in order to learn most quickly from them by gradient descent.
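The benefit of sampling where uncertainty is greatest can be seen in a toy problem. The sketch below is hand-coded rather than learned by gradient descent, so it illustrates the goal of adaptive sampling and not the cited methods. The task (locating an unknown threshold on the unit interval from yes/no answers) and all names in it are assumptions made for illustration.

```python
import random


def learn_threshold(oracle, choose_query, n_queries):
    """Shrink the interval [lo, hi] known to contain the threshold."""
    lo, hi = 0.0, 1.0
    for _ in range(n_queries):
        x = choose_query(lo, hi)
        if oracle(x):          # x is at or above the threshold
            hi = min(hi, x)
        else:                  # x is below the threshold
            lo = max(lo, x)
    return hi - lo             # remaining uncertainty


true_threshold = 0.37          # hypothetical value hidden from the learner


def oracle(x):
    return x >= true_threshold


rng = random.Random(0)

# Active learner: always query the midpoint of the current uncertainty interval.
active_width = learn_threshold(oracle, lambda lo, hi: (lo + hi) / 2, 10)

# Passive learner: queries arrive at random, regardless of what is already known.
passive_width = learn_threshold(oracle, lambda lo, hi: rng.random(), 10)
```

With ten queries the active learner halves its uncertainty at every step, while the passive learner wastes most of its samples on regions it has already resolved.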

Ideally, the learner learns to choose actions that will lead to the largest improvements in its prediction or data compression performance (Schmidhuber, 2010). In Schmidhuber (2010), this is done in the framework of reinforcement learning, and incorporates a mechanism for the system to measure its own rate of learning. In other words, it is possible to reinforcement-learn a policy for selecting the most interesting inputs to drive learning. Adaptive sampling methods are also known in reinforcement learning that can achieve optimal Bayesian exploration of Markov Decision Process environments (Sun et al., 2011; Guez et al., 2012).

These approaches achieve optimality in an arbitrary, abstract environment. But of course, evolution may also encode its implicit knowledge of the organism's natural environment, the behavioral goals of the organism, and the developmental stages and processes which occur inside the organism, as priors or heuristics27 which would further constrain the types of adaptive sampling that are optimal in practice. For example, simple heuristics like seeking certain perceptual signatures of novelty, or more complex heuristics like monitoring situations that other people seem to find interesting, might be good ways to bias sampling of the environment so as to learn more quickly. Other such heuristics might be used to give internal brain systems the types of training data that will be most useful to those particular systems at any given developmental stage.

We are only beginning to understand how active learning might be implemented in the brain. We speculate that multiple mechanisms, specialized to different brain systems and spatio-temporal scales, could be involved. The above examples suggest that at least some such mechanisms could be understood from the perspective of optimizing cost functions.

2.4. Differing biological requirements for supervised and reinforcement learning

We have suggested ways in which the brain could implement learning mechanisms of comparable power to backpropagation. But in many cases, the system may be more limited by the available training signals than by the optimization process itself. In machine learning, one distinguishes supervised learning, reinforcement learning and unsupervised learning, and the training data limitation manifests differently in each case.

Both supervised and reinforcement learning require some form of teaching signal, but the nature of the teaching signal in supervised learning is different from that in reinforcement learning. In supervised learning, the trainer provides the entire vector of errors for the output layer and these are back-propagated to compute the gradient: a locally optimal direction in which to update all of the weights of a potentially multi-layer and/or recurrent network. In reinforcement learning, however, the trainer provides a scalar evaluation signal, but this is not sufficient to derive a low-variance gradient. Hence, some form of trial and error twiddling must be used to discover how to increase the evaluation signal. Consequently, reinforcement learning is generally much less efficient than supervised learning.
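The difference between the two teaching signals can be made concrete for a single linear unit. In the sketch below, which is an illustration under our own assumptions and not a model of any specific circuit, the supervised learner sees the output error and computes the gradient directly, while the reinforcement-like learner sees only scalar loss values and must estimate the gradient by randomly perturbing the weights. The weights, input, perturbation size `sigma`, and trial count are arbitrary illustrative choices.

```python
import math
import random


def loss(w, x, target):
    """Squared error of a linear unit; plays the role of the scalar evaluation."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return 0.5 * (y - target) ** 2


def exact_gradient(w, x, target):
    """Supervised case: the output error is available, so the gradient is exact."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [(y - target) * xi for xi in x]


def perturbation_gradient(w, x, target, rng, sigma=1e-3, n_trials=5000):
    """Reinforcement-like case: only scalar losses are observed."""
    base = loss(w, x, target)
    estimate = [0.0] * len(w)
    for _ in range(n_trials):
        noise = [rng.gauss(0.0, 1.0) for _ in w]
        perturbed = [wi + sigma * ni for wi, ni in zip(w, noise)]
        change = (loss(perturbed, x, target) - base) / sigma
        # Correlate the change in the scalar signal with the perturbation.
        for i, ni in enumerate(noise):
            estimate[i] += change * ni / n_trials
    return estimate


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


w, x, target = [0.2, -0.1, 0.4], [1.0, 2.0, -1.0], 1.0
g_exact = exact_gradient(w, x, target)
g_estimated = perturbation_gradient(w, x, target, random.Random(0))
alignment = cosine(g_exact, g_estimated)
```

The perturbation estimate aligns with the true gradient only after averaging over many trials, and the number of trials needed grows with the number of weights, which is one way to see why learning from a scalar signal is less efficient.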

Reinforcement learning in shallow networks is simple to implement biologically. For reinforcement learning of a deep network to be biologically plausible, however, we need a more powerful learning mechanism, since we are learning based on a more limited evaluation signal than in the supervised case: we do not have the full target pattern to train toward. Nevertheless, approximations of gradient descent can be achieved in this case, and there are cases in which the scalar evaluation signal of reinforcement learning can be used to efficiently update a multi-layer network by gradient descent. The “attention-gated reinforcement learning” (AGREL) networks of Stanisor et al. (2013), Brosch et al. (2015), and Roelfsema and van Ooyen (2005), and variants like KickBack (Balduzzi, 2014), give a way to compute an approximation to the full gradient in a reinforcement learning context using a feedback-based attention mechanism for credit assignment within the multi-layer network. The feedback pathway and a diffusible reward signal together gate plasticity. For networks with more than three layers, this gives rise to a model based on columns containing parallel feedforward and feedback pathways (Roelfsema and van Ooyen, 2005), and for recurrent networks that settle into attractor states it gives a reinforcement-trained version (Brosch et al., 2015) of the Almeida/Pineda recurrent backpropagation algorithm (Pineda, 1987). The process is still not as efficient or generic as backpropagation, but it seems that this form of feedback can make reinforcement learning in multi-layer networks more efficient than a naive node perturbation or weight perturbation approach.

The machine-learning field has recently been tackling the question of credit assignment in deep reinforcement learning. Deep Q-learning (Mnih et al., 2015) demonstrates reinforcement learning in a deep network, wherein most of the network is trained via backpropagation. In regular Q learning, we define a function Q, which estimates the best possible sum of future rewards (the return) if we are in a given state and take a given action. In deep Q learning, this function is approximated by a neural network that, in effect, estimates action-dependent returns in a given state. The network is trained using backpropagation of local errors in Q estimation, using the fact that the return decomposes into the current reward plus the discounted estimate of future return at the next moment. During training, as the agent acts in the environment, a series of loss functions is generated at each step, defining target patterns that can be used as the supervision signal for backpropagation. As Q is a highly nonlinear function of the state, tricks are needed to make deep Q learning efficient and stable, including experience replay and a particular type of mini-batch training. It is also necessary to store the outputs from the previous iteration (or clone the entire network) in evaluating the loss function for the subsequent iteration28.
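The way the return decomposition turns a scalar reward into a local learning target can be sketched with a lookup table standing in for the deep network. This is a tabular illustration, not the algorithm of Mnih et al. (2015): it omits experience replay and mini-batches, and the chain environment, learning rate, discount factor, and synchronization interval are assumptions chosen for the example. It does keep a frozen copy of the estimates for computing targets, analogous to storing the outputs of the previous iteration.

```python
import random


def q_learning_chain(n_states=5, gamma=0.9, alpha=0.5,
                     episodes=200, sync_every=10, seed=0):
    """Q-learning on a hypothetical chain; reward 1 on reaching the last state."""
    rng = random.Random(seed)
    moves = (-1, +1)                               # action 0: left, action 1: right
    q = [[0.0, 0.0] for _ in range(n_states)]
    q_frozen = [row[:] for row in q]               # copy used only for targets
    step = 0
    for _ in range(episodes):
        s = 0
        while s != n_states - 1:
            a = rng.randrange(2)                   # random exploration (off-policy)
            s_next = min(max(s + moves[a], 0), n_states - 1)
            terminal = s_next == n_states - 1
            r = 1.0 if terminal else 0.0
            # Target: current reward plus discounted estimate of future return.
            target = r if terminal else r + gamma * max(q_frozen[s_next])
            # Local error in the Q estimate drives the update.
            q[s][a] += alpha * (target - q[s][a])
            step += 1
            if step % sync_every == 0:
                q_frozen = [row[:] for row in q]   # refresh the frozen copy
    return q


q = q_learning_chain()
```

In this deterministic chain the learned value of moving right from the start state approaches `gamma ** 3`, since the reward lies four steps away, and each update uses only the current transition.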

This process for generating learning targets provides a kind of bridge between reinforcement learning and efficient backpropagation-based gradient descent learning29. Importantly, only temporally local information is needed, making the approach relatively compatible with what we know about the nervous system.

Even given these advances, a key remaining issue in reinforcement learning is the problem of long timescales, e.g., learning the many small steps needed to navigate from London to Chicago. Many of the formal guarantees of reinforcement learning (Williams and Baird, 1993), for example, suggest that the bound on the difference between an optimal policy and the learned policy becomes increasingly loose as the discount factor shifts to take into account reward at longer timescales. Although the degree of optimality of human behavior is unknown, people routinely engage in adaptive behaviors that can take hours or longer to carry out, by using specialized processes like prospective memory to “remember to remember” relevant variables at the right times, permitting extremely long timescales of coherent action. Machine learning has not yet developed methods to deal with such a wide range of timescales and scopes of hierarchical action. Below we discuss ideas of hierarchical reinforcement learning that may make use of callable procedures and sub-routines, rather than operating explicitly in a time domain.

As we will discuss below, some form of deep reinforcement learning may be used by the brain for purposes beyond optimizing global rewards, including the training of local networks based on diverse internally generated cost functions. Scalar reinforcement-like signals are easy to compute, and easy to deliver to other areas, making them attractive mechanistically. If the brain does employ internally computed scalar reward-like signals as a basis for cost functions, it seems likely that it will have found an efficient means of reinforcement-based training of deep networks, but it is an open question whether an analog of deep Q networks, AGREL, or some other mechanism entirely, is used in the brain for this purpose. Moreover, as we will discuss further below, it is possible that reinforcement-type learning is made more efficient in the context of specialized brain systems like short term memories, replay mechanisms, and hierarchically organized control systems. These specialized systems could reduce reliance on a need for powerful credit assignment mechanisms for reinforcement learning. Finally, if the brain uses a diversity of scalar reward-like signals to implement different cost functions, then it may need to mediate delivery of those signals via a comparable diversity of molecular substrates. The great diversity of neuromodulatory signals, e.g., neuropeptides, in the brain (Bargmann, 2012; Bargmann and Marder, 2013) makes such diversity quite plausible, and moreover, the brain may have found other, as yet unknown, mechanisms of diversifying reward-like signaling pathways and enabling them to act independently of one another.

3. The cost functions are diverse across brain areas and time

In the last section, we argued that the brain can optimize functions. This raises the question of what functions it optimizes. Of course, in the brain, a cost function will itself be created (explicitly or implicitly) by a neural network shaped by the genome. Thus, the cost function used to train a given sub-network in the brain is a key innate property that can be built into the system by evolution. It may be much cheaper in biological terms to specify a cost function that allows the rapid learning of the solution to a problem than to specify the solution itself.

In Hypothesis 2, we proposed that the brain optimizes not a single “end-to-end” cost function, but rather a diversity of internally generated cost functions specific to particular brain functions30. To understand how and why the brain may use a diversity of cost functions, it is important to distinguish the differing types of cost functions that would be needed for supervised, unsupervised and reinforcement learning. We can also seek to identify types of cost functions that the brain may need to generate from a functional perspective, and how each may be implemented as supervised, unsupervised, reinforcement-based or hybrid systems.

3.1. How cost functions may be represented and applied

What additional circuitry is required to actually impose a cost function on an optimizing network? In the most familiar case, supervised learning may rely on computing a vector of errors at the output of a network, which will rely on some comparator circuitry31 to compute the difference between the network outputs and the target values. This difference could then be backpropagated to earlier layers. An alternative way to impose a cost function is to “clamp” the output of the network, forcing it to occupy a desired target state. Such clamping is actually assumed in some of the putative biological implementations of backpropagation described above, such as XCAL and target propagation. Alternatively, as described above, scalar reinforcement signals are attractive as internally-computed cost functions, but using them in deep networks requires special mechanisms for credit assignment.

In unsupervised learning, cost functions may not take the form of externally supplied training or error signals, but rather can be built into the dynamics inherent to the network itself, i.e., there may be no need for a separate circuit to compute and impose a cost function on the network. For example, specific spike-timing-dependent and homeostatic plasticity rules have been shown to give rise to gradient descent on a prediction error in recurrent neural networks (Galtier and Wainrib, 2013). Thus, specific unsupervised objectives could be implemented implicitly through specific local network dynamics32 and plasticity rules inside a network without explicit computation of a cost function or explicit propagation of error derivatives.

Alternatively, explicit cost functions could be computed, delivered to an optimizing network, and used for unsupervised learning, following a variety of principles being discovered in machine learning (e.g., Radford et al., 2015; Lotter et al., 2015). These networks rely on backpropagation as the sole learning rule, and typically find a way to encode the desired cost function into the error derivatives which are backpropagated. For example, prediction errors naturally give rise to error signals for unsupervised learning, as do reconstruction errors in autoencoders, and these error signals can also be augmented with additional penalty or regularization terms that enforce objectives like sparsity or continuity, as described below. Then these error derivatives can be propagated throughout the network via standard backpropagation. In such systems, the objective function and the optimization mechanism can thus be mixed and matched modularly. In the next sections, we elaborate on these and other means of specifying and delivering cost functions in different learning contexts.

3.2. Cost functions for unsupervised learning

There are many objectives that can be optimized in an unsupervised context, to accomplish different kinds of functions or guide a network to form particular kinds of representations.

3.2.1. Matching the statistics of the input data using generative models

In one common form of unsupervised learning, higher brain areas attempt to produce samples that are statistically similar to those actually seen in lower layers. For example, the wake-sleep algorithm (Hinton et al., 1995) requires the sleep mode to sample potential data points whose distribution should then match the observed distribution. Unsupervised pre-training of deep networks is an instance of this (Erhan and Manzagol, 2009), typically making use of a stacked auto-encoder framework. Similarly, in target propagation (Bengio, 2014), a top-down circuit, together with lateral information, has to produce data that directs the local learning of a bottom-up circuit and vice-versa. Ladder autoencoders make use of lateral connections and local noise injection to introduce an unsupervised cost function, based on internal reconstructions, that can be readily combined with supervised cost functions defined on the network's top-layer outputs (Valpola, 2015). Compositional generative models generate a scene from discrete combinations of template parts and their transformations (Wang and Yuille, 2014), in effect performing a rendering of a scene based on its structural description. Hinton and colleagues have also proposed cortical “capsules” (Hinton et al., 2011; Tang et al., 2012, 2013) for compositional inverse rendering. The network can thus implement a statistical goal that embodies some understanding of the way that the world produces samples33.

Learning rules for generative models have historically involved local message passing of a form quite different from backpropagation, e.g., in a multi-stage process that first learns one layer at a time and then fine-tunes via the wake-sleep algorithm (Hinton et al., 2006). Message-passing implementations of probabilistic inference have also been proposed as an explanation and generalization of deep convolutional networks (Chen et al., 2014; Patel et al., 2015). Various mappings of such processes onto neural circuitry have been attempted (George and Hawkins, 2009; Lee and Yuille, 2011; Sountsov and Miller, 2015), and related models (Makin et al., 2013, 2016) have been used to account for optimal multi-sensory integration in the brain. Feedback connections tend to terminate in distinct layers of cortex relative to the feedforward ones (Felleman and Van Essen, 1991; Callaway, 2004) making the idea of separate but interacting networks for recognition and generation potentially attractive34. Interestingly, such sub-networks might even be part of the same neuron and map onto “apical” vs. “basal” parts of the dendritic tree (Körding and König, 2001; Urbanczik and Senn, 2014).

Generative models can also be trained via backpropagation. Recent advances have shown how to perform variational approximations to Bayesian inference inside backpropagation-based neural networks (Kingma and Welling, 2013), and how to exploit this to create generative models (Goodfellow et al., 2014a; Gregor et al., 2015; Radford et al., 2015; Eslami et al., 2016). Through either explicitly statistical or gradient descent based learning, the brain can thus obtain a probabilistic model that simulates features of the world.
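For concreteness, the variational approach can be summarized by the standard evidence lower bound, written here in notation of our own choosing: $x$ is an observation, $z$ a latent variable, $p_\theta$ the generative model, and $q_\phi$ an approximate posterior computed by a recognition network.

```latex
\log p_\theta(x) \;\ge\;
\mathbb{E}_{q_\phi(z \mid x)}\!\left[ \log p_\theta(x \mid z) \right]
\;-\; D_{\mathrm{KL}}\!\left( q_\phi(z \mid x) \,\|\, p(z) \right)
```

The negative of the right-hand side can serve as a cost function: the first term rewards accurate reconstruction of the input, and the second keeps the inferred latent distribution close to the prior. Both terms are differentiable in the parameters, so they can be optimized by backpropagation.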

3.2.2. Cost functions that approximate properties of the world

A perceiving system should exploit statistical regularities in the world that are not present in an arbitrary dataset or input distribution. For example, objects are sparse, at least in certain representations: there are far fewer objects than there are potential places in the world, and of all possible objects there is only a small subset visible at any given time. As such, we know that the output of an object recognition system must have sparse activations. Building the assumption of sparseness into simulated systems replicates a number of representational properties of the early visual system (Olshausen and Field, 1997; Rozell et al., 2008), and indeed the original paper on sparse coding obtained sparsity by gradient descent optimization of a cost function (Olshausen and Field, 1996). A range of unsupervised machine learning techniques, such as the sparse autoencoders (Le et al., 2012) used to discover cats in YouTube videos, build sparseness into neural networks. Building in such spatio-temporal sparseness priors should serve as an “inductive bias” (Mitchell, 1980) that can accelerate learning.
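A sparse-coding cost function of the general kind referred to above can be written as follows. The notation is an assumption made here for illustration: $x$ is an input patch, $\Phi$ a dictionary of basis functions, $a$ the vector of coefficients, $S$ a sparsity-promoting penalty such as $S(a_i) = |a_i|$, and $\lambda$ a weight trading off the two terms.

```latex
E(a, \Phi) \;=\; \left\| x - \Phi a \right\|_2^2 \;+\; \lambda \sum_i S(a_i)
```

The first term penalizes reconstruction error and the second penalizes the use of many active coefficients, so minimizing $E$ over both $a$ and $\Phi$ favors representations in which each input is explained by only a few basis functions.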

But we know much more about the regularities of objects. As young babies, we already know (Bremner et al., 2015) that objects tend to persist over time. The emergence or disappearance of an object from a region of space is a rare event. Moreover, object locations and configurations tend to be coherent in time. We can formulate this prior knowledge as a cost function, for example by penalizing representations which are not temporally continuous. This idea of continuity is used in a great number of artificial neural networks and related models (Wiskott and Sejnowski, 2002; Földiák, 2008; Mobahi et al., 2009). Imposing continuity within certain models gives rise to aspects of the visual system including complex cells (Körding et al., 2004), specific properties of visual invariance (Isik et al., 2012), and even other representational properties such as the existence of place cells (Wyss et al., 2006; Franzius et al., 2007). Unsupervised learning mechanisms that maximize temporal coherence or slowness are increasingly used in machine learning35.
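
One simple way to write a temporal continuity penalty is the mean squared change between consecutive representation vectors. The function below is a minimal sketch under that assumption; the name `slowness_penalty` and the list-of-vectors input format are illustrative.

```python
def slowness_penalty(codes):
    """Mean squared change between consecutive representation vectors.

    codes: a time-ordered list of equal-length vectors (at least two).
    """
    total = 0.0
    for prev, curr in zip(codes, codes[1:]):
        total += sum((c - p) ** 2 for p, c in zip(prev, curr))
    return total / (len(codes) - 1)
```

Taken alone, this penalty is minimized by a constant representation, so methods such as slow feature analysis pair it with constraints (for example unit variance and decorrelated outputs) that rule out the trivial solution.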

We also know that objects tend to undergo predictable sequences of transformations, and it is possible to build this assumption into unsupervised neural learning systems (George and Hawkins, 2009). The minimization of prediction error explains a number of properties of the nervous system (Friston and Stephan, 2007; Huang and Rao, 2011), and biologically plausible theories are available for how cortex could learn using prediction errors by exploiting temporal differences (O'Reilly et al., 2014b) or top-down feedback (George and Hawkins, 2009). In one implementation, a system can simply predict the next input delivered to the system and can then use the difference between the actual next input and the predicted next input as a full vectorial error signal for supervised gradient descent. Thus, rather than optimization of prediction error being implicitly implemented by the network dynamics, the prediction error is used as an explicit cost function in the manner of supervised learning, leading to error derivatives which can be back-propagated. Then, no special learning rules beyond simple backpropagation are needed. This approach has recently been advanced within machine learning (Lotter et al., 2015, 2016). Recently, combining such prediction-based learning with a specific gating mechanism has been shown to lead to unsupervised learning of disentangled representations (Whitney et al., 2016). Neural networks can also be designed to learn to invert spatial transformations (Jaderberg et al., 2015). Statistically describing transformations or sequences is thus an unsupervised way of learning representations.
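
The use of next-input prediction error as an explicit cost can be illustrated with a toy linear predictor. This is a sketch under simplifying assumptions: a single weight matrix `W` (hypothetical), a squared-error cost, and plain stochastic gradient descent. It is far simpler than the deep predictive networks cited above.

```python
def train_next_step_predictor(sequence, lr=0.1, epochs=200):
    """Fit W so that W applied to x_t approximates x_{t+1}, by gradient
    descent on the squared prediction error."""
    n = len(sequence[0])
    W = [[0.0] * n for _ in range(n)]
    for _ in range(epochs):
        for x_t, x_next in zip(sequence, sequence[1:]):
            pred = [sum(W[i][j] * x_t[j] for j in range(n)) for i in range(n)]
            # Vector error signal: predicted next input minus actual next input.
            err = [pred[i] - x_next[i] for i in range(n)]
            for i in range(n):
                for j in range(n):
                    # Gradient of 0.5 * ||err||^2 with respect to W[i][j].
                    W[i][j] -= lr * err[i] * x_t[j]
    return W
```

No labels are supplied from outside: the input stream itself provides the target at every time step, so the same supervised update can be applied without an external teacher.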

Furthermore, there are multiple modalities of input to the brain. Each sensory modality is primarily connected to one part of the brain36. But higher levels of cortex in each modality are heavily connected to the other modalities. This can enable forms of self-supervised learning: with a developing visual understanding of the world we can predict its sounds, and then test those predictions with the auditory input, and vice versa. The same is true about multiple parts of the same modality: if we understand the left half of the visual field, it tells us an awful lot about the right. Indeed, we can use observations of one part of a visual scene to predict the contents of other parts (Noroozi and Favaro, 2016; van den Oord et al., 2016), and optimize a cost function that reflects the discrepancy. Maximizing mutual information is a natural way of improving learning (Becker and Hinton, 1992; Mohamed and Rezende, 2015), and there are many other ways in which multiple modalities or processing streams could mutually train one another. This way, each modality effectively produces training signals for the others37. Evidence from psychophysics suggests that some kind of training via detection of sensory conflicts may be occurring in children (Nardini et al., 2010).

3.3. Cost functions for supervised learning

In what cases might the brain use supervised learning, given that it requires the system to “already know” the exact target pattern to train toward? One possibility is that the brain can store records of states that led to good outcomes. For example, if a baby reaches for a target and misses, and then tries again and successfully hits the target, then the difference in the neural representations of these two tries reflects the direction in which the system should change. The brain could potentially use a comparator circuit to directly compute this vectorial difference in the neural population codes and then apply this difference vector as an error signal.

Another possibility is that the brain uses supervised learning to implement a form of “chunking,” i.e., a consolidation of something the brain already knows how to do: routines that are initially learned as multi-step, deliberative procedures could be compiled down to more rapid and automatic functions by using supervised learning to train a network to mimic the overall input-output behavior of the original multi-step process. Such a process is assumed to occur in cognitive models like ACT-R (Servan-Schreiber and Anderson, 1990), and methods for compressing the knowledge in neural networks into smaller networks are also being developed (Ba and Caruana, 2014). Thus supervised learning can be used to train a network to do in “one step” what would otherwise require long-range routing and sequential recruitment of multiple systems.
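
The idea of compiling a multi-step routine into a single-step function can be sketched with a toy example. Everything here is an illustrative assumption: `multi_step_routine` stands in for a slow, sequential procedure, and the one-step "mimic" is a linear model fit by batch gradient descent on the routine's own outputs.

```python
def multi_step_routine(x, steps=3):
    """Stand-in for a slow, deliberative procedure: the same simple
    operation applied several times in sequence."""
    for _ in range(steps):
        x = 0.5 * x + 1.0
    return x


def train_one_step_mimic(inputs, lr=0.1, iterations=2000):
    """Fit y = a * x + b to the routine's input-output behavior by
    batch gradient descent on the mean squared error."""
    a, b = 0.0, 0.0
    # The routine itself supplies the supervised targets.
    targets = [multi_step_routine(x) for x in inputs]
    for _ in range(iterations):
        grad_a = grad_b = 0.0
        for x, y in zip(inputs, targets):
            err = (a * x + b) - y
            grad_a += err * x / len(inputs)
            grad_b += err / len(inputs)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b
```

In this toy case the three-step routine is exactly linear, so the mimic can reproduce it perfectly. In general the compiled network would only approximate the original process.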

3.4. Repurposing reinforcement learning for diverse internal cost functions

Certain generalized forms of reinforcement learning may be ubiquitous throughout the brain. Such reinforcement signals may be repurposed to optimize diverse internal cost functions. These internal cost functions could be specified at least in part by genetics.

Some brain systems, such as the striatum, appear to learn via some form of temporal difference reinforcement learning (Tesauro, 1995; Foster et al., 2000). This is reinforcement learning based on a global value function (O'Reilly et al., 2014a) that predicts total future reward or utility for the agent. Reward-driven signaling is not restricted to the striatum, and is present even in primary visual cortex (Chubykin et al., 2013; Stanisor et al., 2013). Remarkably, the reward signaling in primary visual cortex is mediated in part by glial cells (Takata et al., 2011), rather than neurons, and involves the neurotransmitter acetylcholine (Chubykin et al., 2013; Hangya et al., 2015). On the other hand, some studies have suggested that visual cortex learns the basics of invariant object recognition in the absence of reward (Li and Dicarlo, 2012), perhaps using reinforcement only for more refined perceptual learning (Roelfsema et al., 2010).
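
For readers unfamiliar with temporal difference learning, the tabular TD(0) value update is sketched below. This is the textbook form of the algorithm and is not a model of striatal circuitry. The episode format, state indexing, learning rate `alpha` and discount `gamma` are illustrative assumptions.

```python
def td0_values(episodes, n_states, alpha=0.1, gamma=0.9):
    """Estimate state values with tabular TD(0).

    episodes: list of episodes, each a list of
    (state, reward, next_state) transitions; next_state is None
    when the episode terminates.
    """
    V = [0.0] * n_states
    for episode in episodes:
        for state, reward, next_state in episode:
            future = gamma * V[next_state] if next_state is not None else 0.0
            # TD error: reward plus discounted next value, minus current estimate.
            delta = reward + future - V[state]
            V[state] += alpha * delta
    return V
```

The quantity `delta` is a single scalar that reports whether outcomes were better or worse than predicted, which is what makes a value-based signal of this kind suitable for broadcast as a global teaching signal.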

But beyond these well-known global reward signals, we argue that the basic mechanisms of reinforcement learning may be widely re-purposed to train local networks using a variety of internally generated error signals. These internally generated signals may allow a learning system to go beyond what can be learned via standard unsupervised methods, effectively guiding or steering the system to learn specific features or computations (Ullman et al., 2012).

3.4.1. Cost functions for bootstrapping learning in the human environment

Special, internally-generated signals are needed specifically for learning problems where standard unsupervised methods—based purely on matching the statistics of the world, or on optimizing simple mathematical objectives like temporal continuity or sparsity—will fail to discover properties of the world which are statistically weak in an objective sense but nevertheless have special significance to the organism (Ullman et al., 2012). Indigo bunting birds, for example, learn a template for the constellations of the night sky long before ever leaving the nest to engage in navigation-dependent tasks (Emlen, 1967). This memory template is directly used to determine the direction of flight during migratory periods, a process that is modulated hormonally so that winter and summer flights are reversed. Learning is therefore a multi-phase process in which navigational cues are memorized prior to the acquisition of motor control.

In humans, we suspect that similar multi-stage bootstrapping processes are arranged to occur. Humans have innate specializations for social learning. We need to be able to read one another's expressions as indicated with hands and faces. Hands are important because they allow us to learn about the set of actions that can be produced by agents (Ullman et al., 2012). Faces are important because they give us insight into what others are thinking. People have intentions and personalities that differ from one another, and their feelings are important. How could we hack together cost functions, built on simple genetically specifiable mechanisms, to make it easier for a learning system to discover such behaviorally relevant variables?

Some preliminary studies are beginning to suggest specific mechanisms and heuristics that humans may be using to bootstrap more sophisticated knowledge. In a groundbreaking study, Ullman et al. (2012) asked how one could cheaply explain hands to a system that does not already know about them, without the need for labeled training examples. Hands are common in our visual space and have special roles in the scene: they move objects, collect objects, and caress babies. Building these biases into an area specialized to detect hands could guide the right kind of learning, by providing a downstream learning system with many likely positive examples of hands on the basis of innately-stored, heuristic signatures about how hands tend to look or behave (Ullman et al., 2012). Indeed, an internally supervised learning algorithm containing specialized, hard-coded biases to detect hands, on the basis of their typical motion properties, can be used to bootstrap the training of an image recognition module that learns to recognize hands based on their appearance. Thus, a simple, hard-coded module bootstraps the training of a much more complex algorithm for visual recognition of hands.
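
The bootstrapping logic can be illustrated with a deliberately small toy, which is not the algorithm of Ullman et al. (2012). Here a hypothetical hard-coded motion cue (`mover_heuristic`) supplies labels, and a perceptron learns to recognize the same items from two made-up appearance features. The threshold, features and data format are all assumptions for illustration.

```python
def mover_heuristic(motion):
    """Hard-coded, innate-style cue: flag patches with strong local motion."""
    return 1 if motion > 0.5 else 0


def train_appearance_classifier(samples, epochs=20, lr=0.1):
    """Perceptron on appearance features, using the heuristic's output
    as the label instead of any external annotation.

    samples: list of (motion, (feature_1, feature_2)) pairs.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for motion, appearance in samples:
            label = mover_heuristic(motion)
            score = w[0] * appearance[0] + w[1] * appearance[1] + b
            pred = 1 if score > 0 else 0
            err = label - pred
            w[0] += lr * err * appearance[0]
            w[1] += lr * err * appearance[1]
            b += lr * err
    return w, b


def looks_like_hand(w, b, appearance):
    """Appearance-only decision; no motion cue is needed after training."""
    return w[0] * appearance[0] + w[1] * appearance[1] + b > 0
```

Once trained, the appearance classifier can respond to a stationary item that the motion heuristic alone would miss, which is the sense in which the simple module bootstraps the more capable one.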

Ullman et al. (2012) then further exploit a combination of hand and face detection to bootstrap a predictor for gaze direction, based on the heuristic that faces tend to be looking toward hands. Of course, given a hand detector, it also becomes much easier to train a system for reaching, crawling, and so forth. Efforts are underway in psychology to determine whether the heuristics discovered to be useful computationally are, in fact, being used by human children during learning (Yu and Smith, 2013; Fausey et al., 2016).

Ullman et al. refer to such primitive, inbuilt detectors as innate “proto-concepts” (Ullman et al., 2012). Their broader claim is that such pre-specification of mutual supervision signals can make learning the relevant features of the world far easier, by giving an otherwise unsupervised learner the right kinds of hints or heuristic biases at the right times. Here we call these approximate, heuristic cost functions “bootstrap cost functions.” The purpose of the bootstrap cost functions is to reduce the amount of data required to learn a specific feature or task, but at the same time to avoid a need for fully unsupervised learning.

Could the neural circuitry for such a bootstrap hand-detector be pre-specified genetically? The precedent from other organisms is strong: for example, it is well known that the frog retina contains circuitry sufficient to implement a kind of “bug detector” (Lettvin et al., 1959). Ullman's hand detector, in fact, operates via a simple local optical flow calculation to detect “mover” events. This type of simple, local calculation could potentially be implemented in genetically-specified and/or spontaneously self-organized neural circuitry in the retina or early dorsal visual areas (Bülthoff et al., 1989), perhaps similarly to the frog's “bug detector.”

How could we explain faces without any training data? Faces tend to have two dark dots in their upper half, a line in the lower half and tend to be symmetric about a vertical axis. Indeed, we know that babies are very much attracted to things with these generic features of upright faces starting from birth, and that they will acquire face-specific cortical areas38 in their first few years of life if not earlier (McKone et al., 2009). It is easy to define a local rule that produces a kind of crude face detector (e.g., detecting two dots on top of a horizontal line), and indeed some evidence suggests that the brain can rapidly detect faces without even a single feed-forward pass through the ventral visual stream (Crouzet and Thorpe, 2011). The crude detection of human faces used together with statistical learning should be analogous to semi-supervised learning (Sukhbaatar et al., 2014) and could allow identifying faces with high certainty.
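
A crude "two dots above a line" rule can be written in a few lines. The sketch below is purely illustrative: the 3x3 binary patch, the template and the match threshold are assumptions chosen for brevity and make no claim about how such a detector is realized in the brain.

```python
FACE_TEMPLATE = [
    [1, 0, 1],  # two dark dots in the upper half
    [0, 0, 0],
    [1, 1, 1],  # a horizontal line in the lower half
]


def face_score(patch):
    """Fraction of pixels in a binary 3x3 patch (1 = dark) that match
    the template."""
    matches = sum(
        patch[r][c] == FACE_TEMPLATE[r][c]
        for r in range(3)
        for c in range(3)
    )
    return matches / 9


def is_face_like(patch, threshold=0.85):
    """Crude detector: accept patches that nearly match the template."""
    return face_score(patch) >= threshold
```

A detector this crude produces many errors, but its outputs could still serve as noisy labels for a statistical learner, in the spirit of the semi-supervised scheme described above.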

Humans have areas devoted to emotional processing, and the brain seems to embody prior knowledge about the structure of emotional expressions and how they relate to causes in the world: emotions should have specific types of strong couplings to various other higher-level variables such as goal-satisfaction, should be expressed through the face, and so on (Phillips et al., 2002; Skerry and Spelke, 2014; Baillargeon et al., 2016; Lyons and Cheries, 2016). What about agency? When dealing with high-level thinking, it makes sense to describe other beings as optimizers of their own goal functions. It appears that heuristically specified notions of goals and agency are infused into human psychological development from early infancy and that notions of agency are used to bootstrap heuristics for ethical evaluation (Hamlin et al., 2007; Skerry and Spelke, 2014). Algorithms for establishing more complex, innately-important social relationships such as joint attention are under study (Gao et al., 2014), building upon more primitive proto-concepts like face detectors and Ullman's hand detectors (Ullman et al., 2012). The brain can thus use innate detectors to create cost functions and training procedures to train the next stages of learning. This prior knowledge, encoded into brain structure via evolution, could allow learning signals to come from the right places and to appear developmentally at the right times.