Abstract

Background

Despite substantial resources devoted to cancer screening nationally, the availability of clinical practice-based systems to support screening guidelines is not known.

Objective

To characterize the prevalence and correlates of practice-based systems to support breast and cervical cancer screening, with a focus on the patient-centered medical home (PCMH).

Design

Web and mail survey of primary care providers conducted in 2014. The survey assessed provider (gender, training) and facility (size, specialty training, physician report of National Committee for Quality Assurance (NCQA) PCMH recognition, and practice affiliation) characteristics. A hierarchical multivariate analysis clustered by clinical practice was conducted to evaluate characteristics associated with the adoption of practice-based systems and technology to support guideline-adherent screening.

Participants

Primary care physicians in family medicine, general internal medicine, and obstetrics and gynecology, and nurse practitioners or physician assistants from four clinical care networks affiliated with PROSPR (Population-based Research Optimizing Screening through Personalized Regimens) consortium research centers.

Main Measures

The prevalence of routine breast cancer risk assessment, electronic health record (EHR) decision support, comparative performance reports, and panel reports of patients due for routine screening and follow-up.

Key Results

There were 385 participants (57.6 % of eligible). Forty-seven percent (47.0 %) of providers reported NCQA recognition as a PCMH. Fewer than half reported EHR decision support for breast (48.8 %) or cervical cancer (46.2 %) screening. A minority received comparative performance reports for breast (26.2 %) or cervical (19.7 %) cancer screening, automated reports of patients overdue for breast (18.7 %) or cervical (16.4 %) cancer screening, or follow-up of abnormal breast (18.1 %) or cervical (17.6 %) cancer screening tests. In multivariate analysis, reported NCQA recognition as a PCMH was associated with greater use of comparative performance reports of guideline-adherent breast (OR 3.23, 95 % CI 1.58–6.61) or cervical (OR 2.56, 95 % CI 1.32–4.96) cancer screening and automated reports of patients overdue for breast (OR 2.19, 95 % CI 1.15–4.18) or cervical (OR 2.56, 95 % CI 1.26–5.20) cancer screening.

Conclusions

Providers lack systems to support breast and cervical cancer screening. Practice transformation toward a PCMH may support the adoption of systems to achieve guideline-adherent cancer screening in primary care settings.

Electronic supplementary material

The online version of this article (doi:10.1007/s11606-016-3726-y) contains supplementary material, which is available to authorized users.

KEY WORDS: breast cancer screening, cervical cancer screening, patient-centered medical home

INTRODUCTION

Guidelines for breast and cervical cancer screening continue to increase in complexity as new screening technologies develop and the evidence base from clinical trial and comparative effectiveness research grows. National guidelines for breast and cervical cancer screening underwent major revisions in 2009 and 2012, respectively, and new American Cancer Society guidelines were issued in 2015.1 – 3 Guidelines now include a range of acceptable approaches that may be tailored to personal risk and patient preference. Conceptual models of cancer screening identify a role for risk assessment and communication between providers and patients as important aspects of effective screening processes.4 – 7 Prior studies indicate that the adoption of system-based strategies such as health information technology (HIT) and decision support improves the quality of primary care delivery, including uptake of cancer screening.8 – 10 However, they also report a lack of HIT and practice-based systems to support screening in primary care.8 Given the complexity of emerging guidelines, the need for practice-based systems and HIT to support guideline adherence is likely to increase.

Implementation of cancer screening is a commonly used quality indicator for primary care practice.11 – 14 As HIT and practice management are incorporated into clinical practice, often as part of establishing a patient-centered medical home (PCMH), recent studies have evaluated the relationship of practice factors and system design to cancer screening quality indicators.12 , 14 – 16 Characteristics such as the number of providers in the practice have been associated with adoption of systems and HIT in support of cancer screening.17 However, adoption of HIT systems at the practice level does not ensure that physicians will make use of them. As one study reports, one in five physicians did not use prompts or reminders even when the technology was available in their practice.18 Assessments of the impact of system processes associated with the PCMH on cancer screening quality measures have shown mixed results. Some studies have reported improvement in cancer screening rates following the adoption of a PCMH model.12 , 14 , 15 However, others have reported no change in cancer screening quality outcomes.11 , 13 , 16 Although adoption of the PCMH has been most prevalent in the primary care specialties of general internal medicine, family medicine, and pediatrics, other fields such as obstetrics and gynecology (OB/GYN) may consider primary care as part of their scope of practice and may benefit from similar systems.19 The objective of this study is to describe the adoption and use of practice-based systems and HIT to support guideline-adherent breast and cervical cancer screening among a diverse group of primary care practices associated with the PROSPR consortium. We further evaluate the association of provider- and practice-level factors, including provider-reported NCQA recognition as a PCMH, with adoption and use of these systems.

METHODS

Overview

This study was conducted as part of the NCI-funded consortium, Population-based Research Optimizing Screening through Personalized Regimens (PROSPR). The overall aim of PROSPR is to conduct multi-site, coordinated, transdisciplinary research to evaluate and improve cancer screening processes. The ten PROSPR research centers reflect the diversity of US delivery system organizations. We administered a confidential Web and mail survey to women’s health care providers affiliated with the four clinical care networks within the three PROSPR breast cancer research centers. The survey included questions on systems in place for managing patient panels pertaining to breast and cervical cancer screening.

Setting, Participants, and Recruitment Protocol

Study methods have been described previously.20 In brief, women’s primary health care providers who practiced in the clinical care networks affiliated with Brigham and Women’s Hospital (BWH), Boston, MA; Dartmouth-Hitchcock health system (DH), Lebanon, NH; the University of Pennsylvania (PENN), Philadelphia, PA; and those practicing in the state of Vermont (VT) were the target population. We included currently practicing providers [physicians (both MDs and DOs), physician assistants (PAs), and nurse practitioners (NPs)] with a designated specialty of general internal medicine, family medicine, or OB/GYN. Providers in residency training were excluded.

The survey was fielded among 668 primary care providers from September through December of 2014 using a combination of email with a link to a Web-based survey and mailed versions, with multiple follow-up contacts with non-respondents. At the time of first contact, providers received a code for a $50 gift card to an online retailer as an incentive.21 The study protocol was approved by the institutional review boards of the participating institutions.

Survey Content and Measures

The questionnaire content was adapted from the NCI-sponsored National Survey of Primary Care Physicians’ Cancer Screening Recommendations and Practices, last fielded in 2007 (online Appendix).22 – 24 Provider characteristics assessed were age, gender, provider type (MD/DO or PA/NP practicing family medicine, general internal medicine, or OB/GYN), medical school affiliation, and number of office visits during a typical week. We asked providers about the characteristics of their main practice site including achievement of NCQA recognition as a PCMH, practice type (e.g., non-hospital-based office, hospital-based office, or community health center), and the number of full- or part-time physicians.

Definition of Primary Outcomes

We defined breast cancer risk assessment as occurring if the provider, someone else in their practice, or another physician to whom they referred patients performed a risk assessment at the time of annual or preventive visits. We defined EHR decision support as occurring if the provider affirmed that their main practice had an EHR that included decision support for cancer screening. We considered comparative performance reports to be available if providers had received reports within the previous 12 months that compared their completion of recommended breast or cervical cancer screening to the performance of other practitioners. We defined automated reports for overdue screening as available if reports of overdue examinations in the patient panel were reviewed by the provider or another member of the team. A similar definition was used for automated reports for follow-up of an abnormal screening test. We determined PCMH status by provider reports that their main practice had received NCQA recognition as a medical home. Although other accreditation bodies exist, our survey only ascertained awareness of NCQA recognition as a PCMH. Questions were asked separately for breast and cervical cancer screening.

Data Analysis

We examined overall rates of practice-based systems and bivariate associations between NCQA recognition as a PCMH and availability of practice-based systems. In bivariate analysis, we considered an association with a p-value <0.01 to be statistically significant. We conducted hierarchical multivariable logistic regression analyses clustered by primary care practice to evaluate the association between PCMH status and our primary outcomes. In regression analyses, we controlled for provider gender and type, practice type, and practice size, and calculated odds ratios (ORs) and 95 % confidence intervals (CIs).
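One common way to write down a model of this kind is a random-intercept logistic regression. The form below is a sketch under that assumption; the text states only that the regressions were hierarchical and clustered by practice, so the random-intercept structure and all symbols here are illustrative rather than the authors' stated specification.

$$
\operatorname{logit}\,\Pr(Y_{ij}=1) \;=\; \beta_0 + \beta_1\,\mathrm{PCMH}_{ij} + \boldsymbol{\beta}_2^{\top}\mathbf{x}_{ij} + u_j,
\qquad u_j \sim \mathcal{N}\!\left(0,\ \sigma_u^{2}\right)
$$

Here $Y_{ij}$ indicates whether provider $i$ in practice $j$ reports a given system, $\mathbf{x}_{ij}$ collects the covariates named above (provider gender and type, practice type, and practice size), and $u_j$ is a practice-level intercept. Under this form the adjusted odds ratio for PCMH status is $\exp(\beta_1)$, with a Wald-type 95 % CI of $\exp\!\left(\hat\beta_1 \pm 1.96\,\mathrm{SE}(\hat\beta_1)\right)$.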

RESULTS

Study Population

Of 668 eligible providers, 385 (57.6 %), distributed among 133 practices, completed the survey. There were no significant differences in response rates by PROSPR site (p = 0.10) or provider type (p = 0.10). Women were more likely than men to respond (62.5 vs. 48.7 %, p < 0.001). There were more general internists than family physicians, OB/GYNs, or PAs/NPs. Approximately one-half of providers reported practicing in a PCMH. Characteristics associated with practicing in a PCMH were provider type (p < 0.0001) and practice type (p = 0.0010) (Table 1).

Table 1.

Survey Participants

| Provider and practice characteristics | Total cohort (n = 385) | PCMH* (n = 181) No. (%) | Not a PCMH (n = 204) No. (%) | p-value† |
|---|---|---|---|---|
| Age | | | | 0.6163 |
| <40 years | 96 (25.8) | 49 (27.2) | 47 (24.5) | |
| 40–49 years | 115 (30.9) | 57 (31.7) | 58 (30.2) | |
| 50–59 years | 93 (25.0) | 46 (25.6) | 47 (24.5) | |
| 60+ years | 68 (18.3) | 28 (15.6) | 40 (20.8) | |
| Gender | | | | 0.0147 |
| Female | 270 (70.1) | 116 (64.1) | 154 (75.5) | |
| Male | 115 (29.9) | 65 (35.9) | 50 (24.5) | |
| Provider type | | | | <0.0001 |
| Family medicine/general practice (MD/DO) | 78 (21.0) | 66 (36.5) | 12 (6.3) | |
| General internal medicine (MD/DO) | 171 (46.0) | 96 (53.0) | 75 (39.3) | |
| Obstetrics and gynecology (MD/DO) | 77 (20.7) | 2 (1.1) | 75 (39.3) | |
| Physician assistant, nurse practitioner | 46 (12.4) | 17 (9.4) | 29 (15.2) | |
| Medical school affiliation | | | | 0.2754 |
| Yes | 308 (82.8) | 153 (85.0) | 155 (80.7) | |
| No | 64 (17.2) | 27 (15.0) | 37 (19.3) | |
| Typical weekly no. of office visits | | | | 0.1130 |
| ≤ 25 | 65 (17.5) | 24 (13.3) | 41 (21.5) | |
| 26–50 | 109 (29.4) | 51 (28.3) | 58 (30.4) | |
| 51–75 | 121 (32.6) | 67 (37.2) | 54 (28.3) | |
| 76+ | 76 (20.5) | 38 (21.1) | 38 (19.9) | |
| Practice type | | | | 0.0010 |
| Non-hospital-based office | 207 (55.8) | 118 (65.6) | 89 (46.6) | |
| Hospital-based office | 138 (37.2) | 51 (28.3) | 87 (45.6) | |
| Community health center | 26 (7.0) | 11 (6.1) | 15 (7.9) | |
| Number of full- or part-time physicians in practice | | | | 0.0143 |
| <5 | 76 (20.4) | 40 (22.1) | 36 (18.8) | |
| 5–10 | 141 (37.8) | 66 (36.5) | 75 (39.1) | |
| 11–20 | 96 (25.7) | 48 (26.5) | 48 (25.0) | |
| 21–50 | 37 (9.9) | 23 (12.7) | 14 (7.3) | |
| 50+ | 23 (6.2) | 4 (2.2) | 19 (9.9) | |

* PCMH determined by provider report of NCQA recognition as medical home. † p-value for chi-square test of difference between PCMH and No PCMH practice
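As a worked illustration of the chi-square comparison described in the table footnote, the sketch below recomputes the p-value for the gender rows of Table 1 from the published counts. It assumes a Pearson chi-square test without continuity correction; the footnote does not say which variant was used, and the function name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (statistic, p_value) for 1 degree of freedom.
    """
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Gender rows of Table 1: (PCMH, not a PCMH) counts for female, then male providers.
stat, p_value = chi_square_2x2(116, 154, 65, 50)
print(f"chi-square = {stat:.2f}, p = {p_value:.4f}")
```

Under these assumptions the result agrees with the p-value of 0.0147 shown for gender in Table 1. Rows with more than two categories would need the general chi-square distribution rather than this one-degree-of-freedom shortcut.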

Systems to Support Breast and Cervical Cancer Screening Guideline Adherence

Among providers, 60.5 % responded that breast cancer risk was routinely assessed at the time of an annual or preventive visit. Only 21 % reported the use of a formal breast cancer risk calculator, with the Gail model most commonly used (17.9 % of total cohort; Table, Supplemental Materials). Approximately half reported that their practice had an EHR with decision support for breast (48.8 %) or cervical (46.2 %) cancer screening (Table, Supplemental Materials). Among those who affirmed availability of EHR decision support, a majority reported using it only some of the time or not at all (75 % for breast and 76.7 % for cervical cancer). A minority of providers had received a comparative performance report within the previous 12 months for breast (26.2 %) or cervical (19.7 %) cancer screening. Among those who received comparative performance reports, income adjustment based on performance was more common for breast (43.6 %) than cervical (21.1 %) cancer screening. More than half of providers reported EHR prompts at the time of a visit reminding them of overdue cancer screening (55.8 % for breast and 52.7 % for cervical), but fewer than one in five reported prompts for overdue follow-up of an abnormal screening test (19.0 % for breast and 17.7 % for cervical) (Tables 2 and 3).

Table 2.

Provider and Facility Association with Practice-Based Systems for Breast Cancer Screening

| Characteristic | Practice-based system* | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | Breast cancer risk assessment | | EHR decision support | | Comparative performance report | | Automated report routine screening | | Automated report follow-up screening | |
| | % | OR (95 % CI) | % | OR (95 % CI) | % | OR (95 % CI) | % | OR (95 % CI) | % | OR (95 % CI) |
| Total cohort | 60.5 | – | 48.8 | – | 26.2 | – | 18.7 | – | 18.1 | – |
| Gender | | | | | | | | | | |
| Female | 65.9 | Ref | 47.4 | Ref | 23.4 | Ref | 17.8 | Ref | 16.8 | Ref |
| Male | 47.8 | 0.45 (0.30–0.67) | 52.2 | 0.76 (0.44–1.31) | 32.7 | 0.97 (0.55–1.73) | 20.9 | 0.79 (0.44–1.43) | 21.3 | 0.95 (0.50–1.82) |
| Provider type | | | | | | | | | | |
| GIM | 55.6 | Ref | 62.6 | Ref | 35.7 | Ref | 20.5 | Ref | 18.9 | Ref |
| FP | 65.4 | 1.50 (0.87–2.60) | 53.9 | 0.93 (0.49–1.77) | 35.1 | 0.80 (0.45–1.43) | 32.1 | 1.42 (0.73–2.77) | 30.6 | 1.66 (0.91–3.04) |
| OB/GYN | 68.8 | 1.84 (1.07–3.17) | 20.8 | 0.25 (0.12–0.54) | 6.7 | 0.26 (0.10–0.69) | 11.7 | 0.84 (0.34–2.04) | 10.8 | 0.67 (0.23–1.97) |
| NP/PA | 65.2 | 1.18 (0.54–2.56) | 41.3 | 0.45 (0.23–0.92) | 9.1 | 0.20 (0.07–0.63) | 6.5 | 0.30 (0.10–0.95) | 13.0 | 0.63 (0.24–1.66) |
| PCMH | | | | | | | | | | |
| Yes | 63.0 | 1.33 (0.73–2.43) | 51.9 | 0.95 (0.53–1.72) | 41.9 | 3.23 (1.58–6.61) | 28.2 | 2.19 (1.15–4.18) | 24.7 | 1.43 (0.75–2.72) |
| No/unknown | 58.3 | Ref | 46.1 | Ref | 11.2 | Ref | 10.3 | Ref | 12.6 | Ref |
| Practice type | | | | | | | | | | |
| Office | 64.3 | Ref | 50.2 | Ref | 30.7 | Ref | 25.1 | Ref | 22.4 | Ref |
| Hospital | 54.4 | 0.73 (0.46–1.15) | 44.9 | 0.68 (0.41–1.10) | 19.0 | 0.89 (0.44–1.80) | 10.9 | 0.47 (0.21–1.06) | 12.1 | 0.55 (0.31–0.98) |
| CHC | 73.1 | 1.81 (0.70–4.68) | 73.1 | 2.01 (0.68–5.94) | 33.3 | 1.82 (0.55–6.07) | 19.2 | 1.1 (0.39–3.27) | 29.6 | 1.68 (0.58–4.92) |
| Practice size | | | | | | | | | | |
| 1–5 | 65.8 | Ref | 38.2 | Ref | 27.8 | Ref | 21.1 | Ref | 19.4 | Ref |
| 5–10 | 65.3 | 1.05 (0.60–1.84) | 46.8 | 1.41 (0.67–2.93) | 27.5 | 1.05 (0.51–2.16) | 19.9 | 0.94 (0.45–1.20) | 18.8 | 1.05 (0.51–2.15) |
| 11–20 | 53.1 | 0.73 (0.40–1.32) | 55.2 | 2.06 (0.94–4.54) | 29.2 | 1.20 (0.55–2.59) | 19.8 | 1.13 (0.53–2.41) | 22.0 | 1.51 (0.72–3.18) |
| 21–50 | 64.9 | 1.25 (0.52–2.99) | 51.4 | 1.97 (0.89–4.38) | 16.7 | 0.59 (0.25–1.41) | 13.5 | 0.74 (0.22–2.42) | 11.4 | 0.64 (0.15–2.75) |
| 50+ | 52.2 | 1.11 (0.49–2.51) | 82.6 | 2.85 (0.73–11.13) | 17.4 | 1.08 (0.15–7.57) | 17.4 | 2.24 (0.40–12.67) | 14.3 | 1.27 (0.22–7.40) |

*Multivariable regression models for each of the five practice systems, controlling for all other variables in the table, including provider gender, provider type, PCMH, practice type, and practice size

Table 3.

Provider and Facility Association with Practice Systems for Cervical Cancer Screening

| Characteristic | Practice-based system* | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | EHR decision support | | Comparative performance report | | Automated report routine screening | | Automated report follow-up screening | |
| | % | OR (95 % CI) | % | OR (95 % CI) | % | OR (95 % CI) | % | OR (95 % CI) |
| Total cohort | 46.2 | – | 19.7 | – | 16.4 | – | 17.6 | – |
| Gender | | | | | | | | |
| Female | 43.7 | Ref | 17.9 | Ref | 15.9 | Ref | 17.0 | Ref |
| Male | 52.2 | 0.96 (0.56–1.64) | 23.9 | 0.96 (0.55–1.67) | 17.4 | 0.79 (0.43–1.45) | 19.1 | 0.78 (0.43–1.40) |
| Provider type | | | | | | | | |
| GIM | 59.1 | Ref | 25.6 | Ref | 16.4 | Ref | 19.0 | Ref |
| FP | 50.0 | 1.20 (0.62–2.29) | 25.0 | 0.85 (0.46–1.58) | 29.5 | 1.60 (0.81–3.14) | 29.2 | 1.68 (0.94–2.98) |
| OB/GYN | 19.5 | 0.28 (0.13–0.61) | 8.0 | 0.43 (0.18–1.02) | 11.7 | 1.24 (0.49–3.10) | 10.7 | 0.61 (0.24–1.55) |
| NP/PA | 41.3 | 0.55 (0.26–1.14) | 8.9 | 0.35 (0.12–0.98) | 6.5 | 0.42 (0.13–1.32) | 10.9 | 0.46 (0.17–1.23) |
| PCMH | | | | | | | | |
| Yes | 47.0 | 0.88 (0.51–1.51) | 30.2 | 2.56 (1.32–4.96) | 24.9 | 2.56 (1.26–5.20) | 23.2 | 1.30 (0.69–2.47) |
| No/Unknown | 45.6 | Ref | 9.6 | Ref | 8.8 | Ref | 12.9 | Ref |
| Practice type | | | | | | | | |
| Office | 47.3 | Ref | 22.3 | Ref | 22.2 | Ref | 21.0 | Ref |
| Hospital | 42.8 | 0.75 (0.45–1.25) | 16.8 | 1.13 (0.60–2.10) | 9.4 | 0.52 (0.23–1.18) | 13.4 | 0.65 (0.32–1.33) |
| CHC | 69.2 | 1.88 (0.65–5.40) | 16.7 | 1.02 (0.28–3.68) | 15.4 | 0.99 (0.31–3.23) | 23.1 | 1.41 (0.47–4.19) |
| Practice size | | | | | | | | |
| 1–5 | 35.5 | Ref | 19.2 | Ref | 22.4 | Ref | 20.8 | Ref |
| 5–10 | 46.1 | 1.59 (0.75–3.34) | 21.0 | 1.07 (0.52–2.18) | 17.0 | 0.70 (0.34–1.47) | 16.3 | 0.77 (0.37–1.61) |
| 11–20 | 54.2 | 2.21 (0.98–4.96) | 22.9 | 1.26 (0.60–2.64) | 13.5 | 0.60 (0.27–1.33) | 21.5 | 1.25 (0.63–2.50) |
| 21–50 | 37.8 | 1.36 (0.55–3.36) | 13.9 | 0.78 (0.33–1.86) | 13.5 | 0.66 (0.21–2.11) | 11.4 | 0.55 (0.13–2.33) |
| 50+ | 78.3 | 1.76 (0.46–6.81) | 9.1 | 0.64 (0.09–4.59) | 17.4 | 2.12 (0.36–12.47) | 18.2 | 1.37 (0.30–6.22) |

*Multivariable regression models for each of the four practice systems, controlling for all other variables in the table, including provider gender, provider type, PCMH, practice type, and practice size

GIM: General Internal Medicine

FP: Family Practice

OB/GYN: Obstetrics & Gynecology

NP/PA: Nurse Practitioner and Physician Assistant

PCMH: Patient Centered Medical Home

CHC: Community Health Center

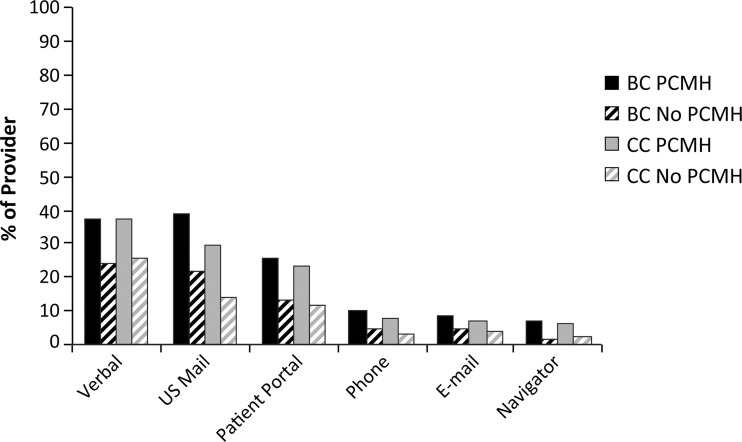

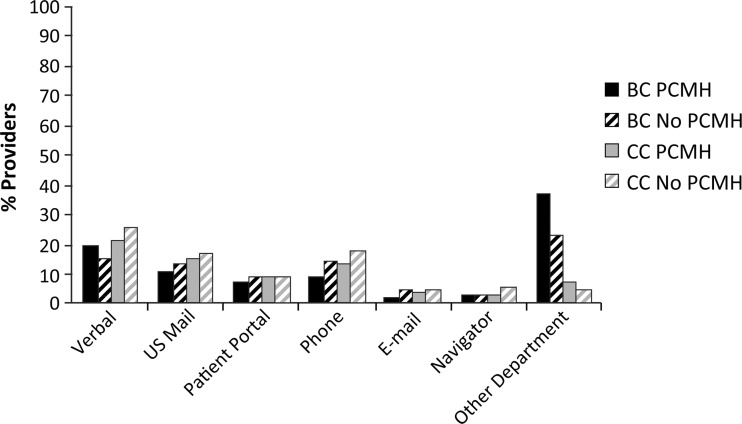

Patient Reminder Systems

A variety of systems were used to remind patients that they were overdue for routine screening or follow-up of an abnormal screening test. Providers working in PCMH practices were more likely than others to have systems in place for patient reminders about routine screening (Figs. 1 and 2).

Figure 1.

System for patient reminders of routine screening due. Providers in a PCMH reported higher use than others of BC patient reminders using verbal prompts (p = 0.008), US Mail (p < 0.001), patient portal (p = 0.003), phone (p < 0.04), and use of a navigator (p = 0.013). Providers in a PCMH also reported higher use of CC patient reminders using verbal prompts (p = 0.015), US Mail (p < 0.001), patient portal (p = 0.003), and phone (p = 0.04). PCMH patient-centered medical home, BC breast cancer, CC cervical cancer.

Figure 2.

Patient reminders for overdue follow-up. There were no significant differences in the use of patient reminders between providers in a PCMH and others for overdue follow-up of abnormal screening tests. Providers in a PCMH were more likely to report that another department was responsible for the patient reminders for follow-up of abnormal BC screening tests (p = 0.004). PCMH patient-centered medical home, BC breast cancer, CC cervical cancer.

Association between the PCMH and System Outcomes

In bivariate analyses, there were no differences by reported PCMH designation in the use of breast cancer risk assessment or availability of EHR decision support for breast or cervical cancer screening. However, providers working in a PCMH were more often able to customize the interval used in EHR decision support for breast (21.5 vs. 5.4 %, p = 0.0001) or cervical (33.3 vs. 10.0 %, p = 0.002) cancer screening. Providers in a PCMH more often endorsed a stopping age of 75 for breast cancer screening in EHR decision support (Table, Supplemental Materials). Providers in a PCMH also more often received comparative performance reports for breast (41.9 vs. 11.2 %, p < 0.001) and cervical (30.2 vs. 9.6 %, p < 0.0001) cancer screening, automated reports of patients in their panel overdue for routine breast (28.2 vs. 10.3 %, p < 0.0001) and cervical (24.9 vs. 8.8 %, p < 0.001) cancer screening, and follow-up of abnormal breast (24.7 vs. 12.6 %, p < 0.003) or cervical (23.2 vs. 12.9 %, p < 0.003) cancer screening tests.
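To show how a bivariate contrast of this kind relates to an odds ratio, the sketch below converts the two reported percentages for breast cancer comparative performance reports (41.9 vs. 11.2 %) into an unadjusted odds ratio. This is an illustration only: it works from rounded percentages rather than the underlying counts, the function names are hypothetical, and the resulting figure is not one reported by the authors.

```python
def odds(proportion):
    """Convert a proportion in (0, 1) to odds."""
    return proportion / (1.0 - proportion)


def crude_odds_ratio(pct_group_a, pct_group_b):
    """Unadjusted odds ratio of group A versus group B, from percentages."""
    return odds(pct_group_a / 100.0) / odds(pct_group_b / 100.0)


# Comparative performance reports for breast cancer screening:
# 41.9 % of PCMH providers versus 11.2 % of other providers.
crude_or = crude_odds_ratio(41.9, 11.2)
print(f"crude OR = {crude_or:.2f}")
```

The crude value is larger than the adjusted odds ratio of 3.23 in Table 2, which is what one would expect if part of the raw difference is explained by the covariates in the model, such as provider type.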

Multivariate Analyses

In multivariate analyses, controlling for provider gender, provider type, and practice type and size, providers in a PCMH were more likely than others to receive comparative performance reports for breast (OR 3.23, 95 % CI 1.58–6.61) or cervical (OR 2.56, 95 % CI 1.32–4.96) cancer screening and to receive automated reports of patients in their panel overdue for breast (OR 2.19, 95 % CI 1.15–4.18) or cervical (OR 2.56, 95 % CI 1.26–5.20) cancer screening (Tables 2 and 3). Male providers were less likely than female providers (OR 0.45, 95 % CI 0.30–0.67) to use breast cancer risk assessment at an annual or preventive visit. Providers trained in OB/GYN were more likely than general internists (OR 1.84, 95 % CI 1.07–3.17) to use breast cancer risk assessment at an annual or preventive visit. In addition, OB/GYN providers were less likely than general internists to have EHR decision support for breast cancer screening or to receive comparative performance reports. Providers who were NPs/PAs were less likely than general internists to receive comparative performance reports or automated reports of patients overdue for breast cancer screening. Finally, providers working in hospital-based offices were less likely than non-hospital-based providers to receive automated reports of patients overdue for follow-up of abnormal breast cancer screening tests. A secondary analysis that excluded OB/GYN providers showed similar results.
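A quick consistency check on reported odds ratios is possible if the intervals are Wald-type, that is, symmetric on the log-odds scale. That is an assumption here, although it is the usual form for logistic regression output. In that case the point estimate should sit at the geometric mean of the interval bounds, and the standard error of the log odds ratio can be recovered from the interval width. The sketch below applies this to the four PCMH estimates quoted above; the function name `log_scale_summary` is hypothetical.

```python
import math


def log_scale_summary(lower, upper, z=1.96):
    """Return (geometric midpoint, SE of log OR) implied by a Wald-type 95 % CI."""
    midpoint = math.sqrt(lower * upper)
    se_log_or = (math.log(upper) - math.log(lower)) / (2 * z)
    return midpoint, se_log_or


# (reported OR, lower bound, upper bound) for PCMH versus no/unknown PCMH.
reported = {
    "breast, comparative performance report": (3.23, 1.58, 6.61),
    "cervical, comparative performance report": (2.56, 1.32, 4.96),
    "breast, automated overdue report": (2.19, 1.15, 4.18),
    "cervical, automated overdue report": (2.56, 1.26, 5.20),
}

for label, (odds_ratio, lower, upper) in reported.items():
    midpoint, se_log_or = log_scale_summary(lower, upper)
    print(f"{label}: reported {odds_ratio:.2f}, "
          f"implied {midpoint:.2f}, SE(log OR) {se_log_or:.2f}")
```

For all four estimates the implied midpoint matches the reported odds ratio to two decimal places, so the intervals are internally consistent under the Wald assumption. A large mismatch in a table cell would usually point to a transcription error in one of the bounds.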

DISCUSSION

In this study, we describe provider use of systems to support guideline-recommended strategies for breast and cervical cancer screening in a broad range of primary care practices. We also evaluate provider- and practice-level associations with the use of systems including breast cancer risk assessment, patient notification of routine or follow-up screening due, and the use of automated reports reviewed outside the context of the patient visit. In general, we observed decreased use of systems as the patient moved through the screening process, from routine screening to follow-up of abnormal screening tests. Although EHR reminders were available in the chart for review during patient visits for approximately 50 % of providers surveyed, far fewer (<20 %) reported having systems in place to review screening processes across patient panels. Our findings highlight vulnerable points in the screening process where systems are lacking, including notification of or about patients who are overdue for follow-up of screening abnormalities.

Previous studies have examined the adoption of system-based strategies to support cancer screening. In a national survey of 2475 primary care physicians in 2007, Yabroff and colleagues reported that less than 10 % of physicians used a comprehensive set of system-based strategies.8 In adjusted analyses, the adoption of performance reports, in-practice guidelines, and type of medical record system (electronic vs. paper) were associated with the use of patient and physician screening reminders for screening mammography, Pap testing, and colorectal cancer screening. Of note, 60 % of providers surveyed in the Yabroff study worked with paper charts. Our study indicates significant progress in the adoption of the EHR and EHR-based decision support over the intervening years. However, we still observed gaps in adoption of systems such as reminders, tracking, and automated reports.

In our study, 60.5 % of providers reported that breast cancer risk assessment was part of an annual or preventive visit. Far fewer used a formal risk calculator, despite the fact that risk assessment is a recognized component of the screening process.6 Among those using a risk calculator, use was selective and was most often determined based on a family history of breast or ovarian cancer. Furthermore, multivariate analyses found that male providers were less likely than female providers to conduct an annual breast cancer risk assessment. This gender difference is consistent with previous studies reporting higher levels of preventive services provided by female providers.25 – 27 Possible reasons for this finding include knowledge, attitudes, or beliefs regarding the importance of individual risk assessment in the breast cancer screening process.

We also report provider-level differences by specialty and level of training. OB/GYN and NP/PA providers were less likely than their peers in family medicine and internal medicine to affirm the use of population-based reporting. This may be attributed to the relatively low uptake of PCMH strategies in OB/GYN practices. Primary care NPs are less likely than primary care physicians to be reimbursed based on productivity or quality indicators, a difference that may limit the adoption of systems that provide feedback to this group of providers on a population level.28 Our findings highlight the importance of implementing systems to support primary care in all clinical settings that incorporate primary care goals such as guideline-adherent cancer screening.

We showed that reported NCQA recognition as a PCMH was associated with having systems in place, targeted to both the provider and patient, to support breast and cervical cancer screening. Providers working in a PCMH reported more systems in place for patient reminders, including higher rates of reminders sent by mail or patient portals, for both breast and cervical cancer screening. Patient reminders have been shown to be effective in increasing breast and cervical cancer screening rates.29 – 32 Reported NCQA recognition as a PCMH also was associated with increased use of comparative performance reports and automated reports to alert providers of patients due for cancer screening outside the context of the clinical visit. Theoretical and empirical evidence supports the efficacy of physician audits and feedback in improving cancer screening.29 , 33 – 37 These findings are expected, given that NCQA-PCMH certification requires practices to use population management strategies to improve patient care.

In prior studies, the association between reported NCQA recognition and improvement in screening rates has been unclear, with some showing a positive association between PCMH practice redesign and higher screening rates,12 , 14 , 15 while other studies have reported no change in breast or cervical cancer screening quality outcomes with medical home interventions.11 , 13 , 16 One reason for these inconsistent findings may be a lack of uniformity in system changes required for NCQA recognition.38 Practices may achieve NCQA recognition through a range of system interventions and may not focus their system design and preventive or chronic care process changes specifically on cancer screening. There may also be variation in provider use of systems even when the technology is in place within the practice. In a recent study, McClellan and colleagues found that one in five physicians did not use prompts or reminders even when these technologies were available in their practices.18 Downs et al. also identified a lack of coordination between nurses and providers, reminders outside routine workflow, and difficulty obtaining data as factors contributing to lower rates of adherence to reminders.39 Other factors including time constraints, difficulty of use, or lack of perceived value may limit technology adoption.40 Our study indicates that providers practicing in a PCMH adopt systems specifically to support adherence to cancer screening guidelines, including the use of comparative performance reports and automated reminders of patients overdue for routine screening.

Limitations

Our study has several limitations. First, the providers surveyed were associated with four clinical networks, all located in eastern US regions, and were more likely to include physicians with a medical school affiliation. Therefore, our data could in part reflect practice transformation that occurred outside the PCMH approach. However, the sites represented 133 practices of varying size in five states, both urban and rural settings, and a range of primary care practitioners (general internal medicine, family medicine, OB/GYN, and NP/PAs). Second, the study used a cross-sectional design. Therefore, the causality of effect between NCQA recognition as a PCMH and the adoption of systems to support cancer screening cannot be determined. Third, the study relied on self-report. There may be misclassification of practices with respect to NCQA-PCMH recognition as well as under- or over-reporting of available systems to support cancer screening. However, provider awareness of recognition as a PCMH is a necessary step toward adoption and incorporation of such systems in practice.

CONCLUSIONS

We found generally low levels of practice-based systems to support breast and cervical cancer screening in primary care. Our multilevel analyses suggest that provider factors including provider gender and specialty, as well as practice factors such as reported NCQA recognition as a PCMH, were associated with the adoption of systems to support guideline-adherent screening. Strategies for promoting guideline-adherent cancer screening in practice are increasingly needed given the growing complexity of and individualized approach to implementing cancer screening guidelines. Multilevel interventions that consider provider-level factors such as training and practice-level factors including system design are needed to optimize individualized and population guideline-adherent screening in primary care.


Acknowledgments

The authors thank the participating PROSPR research centers for the data they have provided for this study. A list of the PROSPR investigators and contributing research staff is provided at: http://healthcaredelivery.cancer.gov/prospr/

Contributors

We appreciate the input of Alan Waxman, MD, on the development of the survey.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Funders

This study was conducted as part of the National Cancer Institute-funded consortium, Population-based Research Optimizing Screening through Personalized Regimens (PROSPR) (grant numbers U54CA163307, U54CA163313, U54CA163303, and U01CA163304).

Footnotes

Prior Presentations

This work was presented at the AcademyHealth Annual Research Meeting, June 2015, Minneapolis, MN.

PROSPR grant numbers

University of Pennsylvania (U54CA163313); University of Vermont (U54CA163303); Geisel School of Medicine at Dartmouth and Brigham and Women’s Hospital (U54CA163307); Fred Hutchinson Cancer Research Center (U01CA163304)

Electronic supplementary material

The online version of this article (doi:10.1007/s11606-016-3726-y) contains supplementary material, which is available to authorized users.
