Abstract

Background

In addition to training future members of the profession, medical schools perform the critical role of identifying students who are failing to meet minimum standards in core competencies.

Objective

To better understand reasons for failure in an internal medicine clerkship.

Design

A qualitative content analysis of letters describing reasons for students’ failure.

Participants

Forty-three students (31 men) who failed the internal medicine clerkship at the University of Minnesota Medical School, 2002–2013.

Approach

We conducted a qualitative content analysis of the 43 letters describing reasons for students’ failure. We coded critical deficiencies, mapped them to the Physician Competency Reference Set (PCRS) competency domains, and classified them into two categories: conduct (unprofessional behaviors) and knowledge and skills specific to the practice of medicine. We then calculated the frequency of each critical deficiency and statistically tested for relationships between gender and critical deficiencies in each competency domain.

Key Results

We coded 50 critical deficiencies, all of which mapped to at least one PCRS competency domain. The most frequently cited deficiencies were “insufficient knowledge” (79 % of students) and “inadequate patient presentation skills” (74 %). Students exhibited critical deficiencies in all eight competency domains, with the highest concentrations in Knowledge for Practice (98 %) and Interpersonal and Communication Skills (91 %). All students demonstrated deficiencies in multiple competency domains, and 98 % had deficiencies in three or more. All 43 students demonstrated deficits in the knowledge and skills category, and 81 % had concurrent conduct issues. There were no statistically significant relationships between gender and critical deficiencies in any competency domain.

Conclusions

This study highlights both the diversity and commonality of reasons that students fail a clinical clerkship. Knowing the range of areas where students struggle, as well as the most likely areas of difficulty, may aid faculty in identifying students who are failing and in developing remediation strategies.

Electronic supplementary material

The online version of this article (doi:10.1007/s11606-016-3758-3) contains supplementary material, which is available to authorized users.

KEY WORDS: medical education, clerkship, failure, assessment

INTRODUCTION

Most students, upon matriculation into medical school, have the capacity to meet all competency standards and will successfully complete medical school.1 A national survey of internal medicine clerkship directors found that only 0.9 % of students failed the internal medicine clerkship.2 However, one-third of these same clerkship directors acknowledge passing students who should have failed.3 Clerkship directors cite poor documentation of areas of deficiency, concern about a possible appeal, and lack of remediation opportunities as reasons for passing students who may have otherwise failed.4

Practicing physicians who have been disciplined by a medical board are twice as likely to have had performance issues during their clinical clerkships as physicians who have not been disciplined.5 It is essential, therefore, that clinical clerkships have a well-established process for the high-stakes, low-frequency event of identifying the failing student. Common reasons for struggling in medical school include deficits in the competencies of medical knowledge, clinical reasoning, and practice-based learning and improvement.6 These struggling learners are most likely to be identified on inpatient rotations, but little has been written about the specific behaviors exhibited by students that lead to their failure.7,8

A better understanding of the types of deficits leading to failure and the development of robust remediation protocols for these areas may improve the success of learner remediation. Unfortunately, failing performance is often identified late,9 and remediation often involves simply having the student do more of the same types of experiences.10 Earlier intervention and remediation targeted to specific areas of deficit may be more successful.6,11,12

The purpose of our study was to better understand the reasons that students failed the Medicine Clerkship at the University of Minnesota Medical School. We reviewed letters written by the clerkship site director to the Committee on Student Scholastic Standing (COSSS), describing in detail the specific reasons for student failure. These letters were comprehensively analyzed to identify and quantify the specific areas of critical deficiency. As unprofessional behaviors in medical school are difficult to remediate,6 and are associated with future disciplinary action by medical boards,5 we also sought to better understand the frequency with which these types of behavior occurred. In addition, given prior studies suggesting differences between male and female learners with difficulties,6,9 we also examined the areas of critical deficiency by gender.

Our research questions included the following: 1) What are the specific critical deficiencies described in the COSSS letters? 2) What is the frequency of these critical deficiencies across students? 3) To what extent are the critical deficiencies that led to failure issues of conduct (unprofessional behaviors) versus deficits in knowledge and skills specific to the practice of medicine? 4) To what extent do students who fail have critical deficiencies across multiple competency domains? 5) To what extent is gender associated with critical deficiencies in each competency domain?

METHODS

Context of Study

At the time of the study, the required internal medicine core clerkships were Medicine I and Medicine II. Each 6-week inpatient clinical rotation included clinical experience on general medicine wards or subspecialty services at one of five affiliated hospitals, along with didactic instruction through a mandatory conference series. To graduate from the University of Minnesota Medical School, students were required to pass both clerkships. Both used the same grading scale: unsatisfactory (fail), satisfactory, excellent, and honors. The grade was based on clinical performance (evaluated by supervising residents and attending physicians using a standard clerkship evaluation form) and performance on end-of-clerkship exams (the National Board of Medical Examiners Medicine subject exam13 and an EKG/laboratory exam for Medicine I, and an in-house multiple-choice question exam developed for Medicine II).

The medicine clerkship committee (consisting of each clinical site director and clerkship director) reviewed overall student performance for any student whose performance was rated “below expectations” in any competency area on any evaluation. During this performance review, the standard clerkship evaluations, verbal reports of performance from other clinical faculty, reports from lecturers, and input from administrative support staff were taken into account. The committee then determined the final grade. In addition to unsatisfactory performance in any competency domain, a failing grade could also be assigned based on failing the written exam(s).

COSSS Process and Letters

When the clerkship committee determined that a student had failed, the clerkship or site director wrote a letter to COSSS summarizing the identified deficiencies. This letter synthesized the evaluations of the student’s performance on the clerkship and described specific situations that occurred during the clerkship, as well as any discussions with the student about the substandard performance. COSSS then met with the student to determine whether the student would be permitted to repeat the rotation or would be dismissed from medical school.

Analysis

During the period from 2002 to 2013, 43 students failed the Medicine I or Medicine II clerkship.

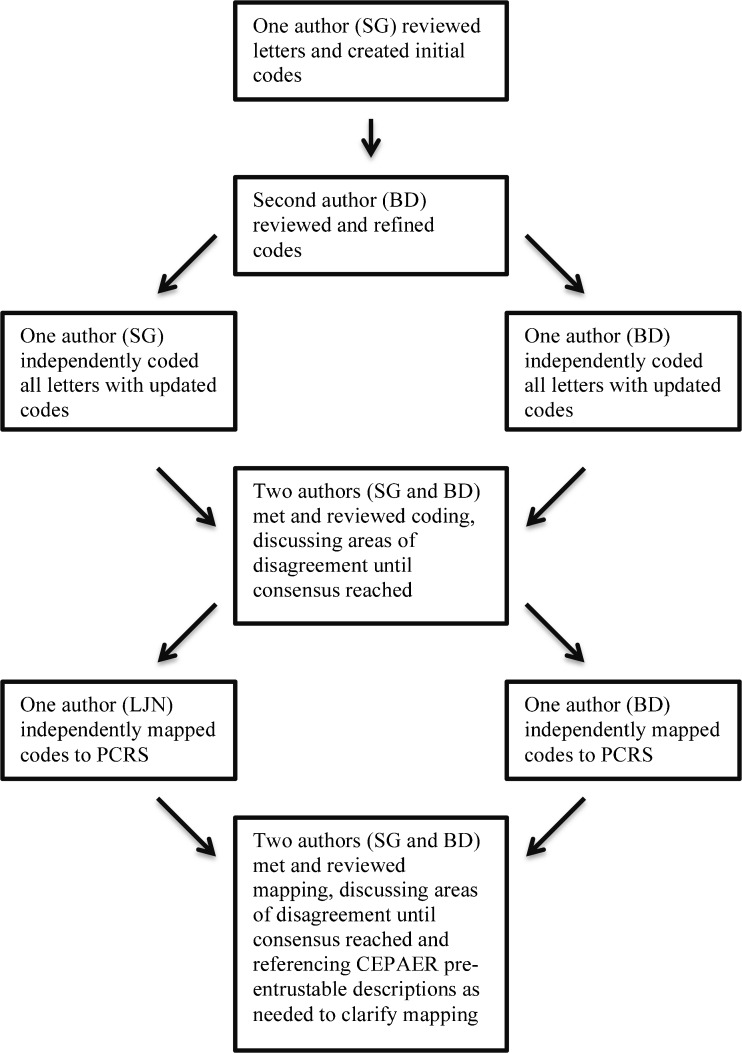

We conducted a qualitative content analysis of the 43 letters to COSSS using NVivo Software (version 9.0; QSR International, Melbourne, Australia).14 Content analysis is a qualitative research method used to interpret the content of text data through a systematic classification process of coding and identifying themes.15 One author (S.G.), who was not directly involved in the clerkship, read the de-identified letters multiple times, identifying and coding the critical deficiencies. These codes were reviewed and refined by a second author (B.D.), who was the clerkship director. The same two authors then independently coded all of the letters using the updated codes, after which they met and compared their coding for each letter, discussing areas of disagreement until consensus was reached.16 Final coding was then reviewed for clarity by a third author (L.N.) (see online Appendix A for representative examples for each critical deficiency from the COSSS letters).

The next step in content analysis is to identify themes by clustering related codes into broader categories.15 Rather than create a new set of themes, we chose to use one of the existing taxonomies that organize the knowledge, skills, and attitudes of medical trainees into competency domains. After considering the Internal Medicine Milestone sub-competencies developed jointly by the Accreditation Council for Graduate Medical Education (ACGME) and the American Board of Internal Medicine (ABIM),17 we instead selected the Physician Competency Reference Set (PCRS) developed by the Association of American Medical Colleges (AAMC),18 because it was designed specifically to describe the knowledge, skills, and attitudes needed by medical students.

Two authors (L.N. and B.D.) then independently mapped the codes to the PCRS competency domains. When the mapping was unclear based on the competency and sub-competency descriptions, the authors referenced the document “Core Entrustable Professional Activities for Entering Residency”, as the descriptions of pre-entrustable behaviors for each PCRS competency domain19 often closely matched what was described as unsatisfactory performance on the clerkship. The two authors met and compared their mapping, discussing areas of disagreement until consensus was reached (Fig. 1).

Fig. 1.

Flowchart of qualitative content analysis of COSSS letters and mapping of critical deficiencies to PCRS competency domains.

To better understand the frequency with which unprofessional behaviors occurred, the three authors reviewed the codes and associated text from the letters and classified the critical deficiencies into two broad categories: 1) conduct and 2) knowledge and skills specific to the practice of medicine (KS). We defined conduct as including 1) all of the unprofessional behaviors described in the PCRS professionalism domain, 2) behaviors described as unprofessional in previous studies of unprofessional behavior,20 and 3) behaviors associated with future disciplinary action.5,21 Using this more inclusive definition, we identified conduct-related behaviors in multiple PCRS competency domains. For example, we classified the critical deficiency “resistance to feedback,” which we mapped to the Practice-Based Learning and Improvement domain, as conduct because it was identified as a type of unprofessional behavior (“diminished capacity for self-improvement”) in an earlier professionalism study.5 We defined the KS category as all other knowledge and skills needed for the practice of medicine.

We calculated the percentage of letters containing each coded critical deficiency and the percentage of letters with critical deficiencies mapped to each PCRS competency domain. We then counted the number of competency domains with critical deficiencies in each letter and calculated the percentage of letters with deficiencies in one, two, and up to all eight domains. We also calculated the percentage of letters with critical deficiencies categorized as conduct and as KS. Finally, we determined the percentage of letters with critical deficiencies in each competency domain by gender and used chi-square or Fisher exact tests, as appropriate, to determine whether there was a relationship between gender and critical deficiencies in each competency domain and in overall KS and conduct. Because each letter represented a different student, we report all percentages as percentages of students rather than of letters. Data analysis was performed using SPSS 19.0 software (IBM Corp., Armonk, NY, USA). Statistical significance was set at p < 0.05 for all tests.
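The tests themselves were run in SPSS. As a hedged illustration of what they compute, the following stdlib-only Python sketch (hypothetical function names, not the authors' code) implements a two-sided Fisher exact test and an uncorrected Pearson chi-square test for a 2×2 gender-by-deficiency table. The paper states only that each test was used "as appropriate"; the selection rule below, Fisher whenever any expected cell count is below 5, is an assumption, although a common convention.

```python
from math import comb, erfc, sqrt


def expected_counts(a, b, c, d):
    """Expected cell counts under independence for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    return [[r * k / n for k in (a + c, b + d)] for r in (a + b, c + d)]


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p value for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, cell a follows a hypergeometric distribution.
    The p value sums the probabilities of every table that is no more
    likely than the observed one. Integer weights keep that comparison exact.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    row2 = n - row1

    def weight(x):
        # Number of ways to obtain a table whose top-left cell equals x.
        return comb(row1, x) * comb(row2, col1 - x)

    observed = weight(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    extreme = sum(w for w in map(weight, range(lo, hi + 1)) if w <= observed)
    return extreme / comb(n, col1)


def pearson_chi2(a, b, c, d):
    """Pearson chi-square statistic (1 df, no continuity correction) and p value."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, P(X > stat) = erfc(sqrt(stat / 2)).
    return stat, erfc(sqrt(stat / 2))


def gender_test(a, b, c, d):
    """Pick a test with an assumed rule: Fisher if any expected count is below 5."""
    if min(min(row) for row in expected_counts(a, b, c, d)) < 5:
        return "Fisher", fisher_exact_two_sided(a, b, c, d)
    return "chi-square", pearson_chi2(a, b, c, d)[1]


if __name__ == "__main__":
    # (men with, men without, women with, women without) deficiency, from Table 3.
    table_3_rows = {
        "Professionalism": (22, 9, 6, 6),
        "Practice-Based Learning and Improvement": (16, 15, 6, 6),
        "Interprofessional Collaboration": (15, 16, 4, 8),
        "Systems-Based Practice": (10, 21, 5, 7),
    }
    for domain, counts in table_3_rows.items():
        test, p = gender_test(*counts)
        print(f"{domain}: {test}, p = {p:.2f}")
```

For the four Table 3 rows shown, this rule selects the same test that the table reports and prints p values of 0.29, 0.92, 0.37, and 0.72, matching the published values. The uncorrected chi-square is used because the two chi-square rows match it; the constant overall KS row has no meaningful test, as Table 3 notes.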

IRB

This study was reviewed and approved by the University of Minnesota Institutional Review Board.

RESULTS

Forty-three students (1.5 % of the total student population of 2852) failed the medicine clerkships during the study period (2002–2013). Thirty-one (72 %) were male, compared with 50.8 % of all enrolled students. No other demographic data were accessed.

Critical Deficiencies Contributing to Failure

We identified and coded 50 different critical deficiencies contributing to failure in the COSSS letters of the 43 students. All 50 codes mapped to at least one of the eight PCRS competency domains; 13 codes mapped to two competencies, and one code mapped to three competencies. The mapping and frequency of critical deficiencies are reported in Table 1.
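Because several codes were mapped to more than one domain, the domain-level code lists in Table 1 overlap. The minimal Python sketch below (the dictionary layout is an illustrative choice, not the authors' NVivo data) encodes those lists and shows how the counts reported above follow from them: the per-domain lists contain 65 code-to-domain assignments, which collapse to 50 distinct codes.

```python
from collections import Counter

# Critical-deficiency codes listed under each PCRS domain in Table 1.
TABLE_1 = {
    "Knowledge for Practice": [
        "Insufficient knowledge", "Inadequate differential diagnosis",
        "Inadequate synthesis", "Exam failure (shelf)",
        "Inadequate application of knowledge",
    ],
    "Interpersonal and Communication Skills": [
        "Inadequate patient presentation skills", "Inadequate documentation",
        "Poor communication with patients", "Poor communication with team",
        "Anger", "Poor teamwork",
    ],
    "Patient Care": [
        "Inadequate ability to create a plan", "Inadequate assessment",
        "Inadequate clinical reasoning", "Exam failure (lab/EKG)",
        "Poor communication with patients", "Inadequate history taking skills",
        "Failure to update clinical information", "Inefficiency",
        "Inadequate physical exam skills", "Lack of comprehension",
        "Inadequate data interpretation", "Inadequate clinical judgment",
        "Inability to write orders", "Inability to manage expected patient volume",
        "Inadequate data gathering", "Inability to distinguish sick/not sick",
    ],
    "Professionalism": [
        "Disinterested", "Late", "Failure to complete coursework", "Distracted",
        "Absences", "Lying", "Excuses", "Sleepy", "Ethical lapses",
        "Inadequate basic student skills", "Non-response to pages/email",
        "Copying", "Disrespectful", "Unprofessional attire", "Blaming others",
        "Lack of patient ownership", "Cheating", "Impairment",
    ],
    "Practice-Based Learning and Improvement": [
        "Inability to incorporate coaching", "Resistant to feedback",
        "Inadequate independent learning", "Lack of insight", "Lack of EBM skills",
    ],
    "Interprofessional Collaboration": [
        "Poor communication with team", "Non-response to pages/email",
        "Poor teamwork", "Disrespectful", "Unprofessional attire",
    ],
    "Personal and Professional Development": [
        "Late", "Absences", "Lying", "Non-response to pages/email",
        "Lack of insight", "Cheating", "Impairment",
    ],
    "Systems-Based Practice": [
        "Failure to complete coursework", "Lack of participation",
        "Inadequate basic student skills",
    ],
}

# How many domains each distinct code was mapped to.
domains_per_code = Counter(code for codes in TABLE_1.values() for code in codes)
# How many codes were mapped to exactly 1, 2, 3, ... domains.
codes_by_domain_count = Counter(domains_per_code.values())

print(len(domains_per_code))
print(sorted(codes_by_domain_count.items()))
```

Running it prints 50 and [(1, 36), (2, 13), (3, 1)]; the single code mapped to three domains is “Non-response to pages/email.”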

Table 1.

Number and Percentage of Students with Critical Deficiencies Mapped to Physician Competency Reference Set (PCRS) Competency Domains and to the Knowledge and Skills for the Practice of Medicine (KS) and Conduct (C) Categories (N = 43 students)

| Competency domain | Critical deficiency | Knowledge and Skills (KS)/Conduct (C) | No. (%) of students |
|---|---|---|---|
| Knowledge for Practice | | | 42 (98) |
| Demonstrate knowledge of established and evolving biomedical, clinical, epidemiological, and social-behavioral sciences, as well as the application of this knowledge to patient care | Insufficient knowledge | KS | 34 (79) |
| | Inadequate differential diagnosis | KS | 25 (58) |
| | Inadequate synthesis | KS | 19 (44) |
| | Exam failure (shelf) | KS | 18 (42) |
| | Inadequate application of knowledge | KS | 6 (14) |
| Interpersonal and Communication Skills | | | 39 (91) |
| Demonstrate interpersonal and communication skills that result in the effective exchange of information and collaboration with patients, their families, and health professions | Inadequate patient presentation skills | KS | 32 (74) |
| | Inadequate documentation | KS | 20 (47) |
| | Poor communication with patients | C | 11 (26) |
| | Poor communication with team | C | 7 (16) |
| | Anger | C | 4 (9) |
| | Poor teamwork | C | 3 (7) |
| Patient Care | | | 38 (88) |
| Provide patient-centered care that is compassionate, appropriate, and effective for the treatment of health problems and the promotion of health | Inadequate ability to create a plan | KS | 26 (60) |
| | Inadequate assessment | KS | 13 (30) |
| | Inadequate clinical reasoning | KS | 12 (28) |
| | Exam failure (lab/EKG) | KS | 12 (28) |
| | Poor communication with patients | C | 11 (26) |
| | Inadequate history taking skills | KS | 9 (21) |
| | Failure to update clinical information | KS | 9 (21) |
| | Inefficiency | KS | 8 (19) |
| | Inadequate physical exam skills | KS | 6 (14) |
| | Lack of comprehension | KS | 5 (12) |
| | Inadequate data interpretation | KS | 4 (9) |
| | Inadequate clinical judgment | KS | 2 (5) |
| | Inability to write orders | KS | 2 (5) |
| | Inability to manage expected patient volume | KS | 2 (5) |
| | Inadequate data gathering | KS | 1 (2) |
| | Inability to distinguish sick/not sick | KS | 1 (2) |
| Professionalism | | | 28 (65) |
| Demonstrate a commitment to carrying out professional responsibilities and an adherence to ethical principles | Disinterested | C | 8 (19) |
| | Late | C | 8 (19) |
| | Failure to complete coursework | C | 8 (19) |
| | Distracted | C | 7 (16) |
| | Absences | C | 7 (16) |
| | Lying | C | 6 (14) |
| | Excuses | C | 5 (12) |
| | Sleepy | C | 4 (9) |
| | Ethical lapses | C | 4 (9) |
| | Inadequate basic student skills | C | 4 (9) |
| | Non-response to pages/email | C | 4 (9) |
| | Copying | C | 3 (7) |
| | Disrespectful | C | 3 (7) |
| | Unprofessional attire | C | 2 (5) |
| | Blaming others | C | 2 (5) |
| | Lack of patient ownership | C | 2 (5) |
| | Cheating | C | 1 (2) |
| | Impairment | C | 1 (2) |
| Practice-Based Learning and Improvement | | | 22 (51) |
| Demonstrate the ability to investigate and evaluate one’s care of patients, to appraise and assimilate scientific evidence, and to continuously improve patient care based on constant self-evaluation and lifelong learning | Inability to incorporate coaching | C | 13 (30) |
| | Resistant to feedback | C | 10 (23) |
| | Inadequate independent learning | C | 7 (16) |
| | Lack of insight | C | 4 (9) |
| | Lack of EBM skills | KS | 1 (2) |
| Interprofessional Collaboration | | | 19 (44) |
| Demonstrate the ability to engage in an interprofessional team in a manner that optimizes safe, effective patient- and population-centered care | Poor communication with team | C | 7 (16) |
| | Non-response to pages/email | C | 4 (9) |
| | Poor teamwork | C | 3 (7) |
| | Disrespectful | C | 3 (7) |
| | Unprofessional attire | C | 2 (5) |
| Personal and Professional Development | | | 19 (44) |
| Demonstrate the qualities required to sustain lifelong personal and professional growth | Late | C | 8 (19) |
| | Absences | C | 7 (16) |
| | Lying | C | 6 (14) |
| | Non-response to pages/email | C | 4 (9) |
| | Lack of insight | C | 4 (9) |
| | Cheating | C | 1 (2) |
| | Impairment | C | 1 (2) |
| Systems-Based Practice | | | 15 (35) |
| Demonstrate an awareness of and responsiveness to the larger context and system of health care, as well as the ability to effectively call upon other resources in the system to provide optimal health care | Failure to complete coursework | C | 8 (19) |
| | Lack of participation | C | 5 (12) |
| | Inadequate basic student skills | C | 4 (9) |
| Overall Knowledge and Skills | | KS | 43 (100) |
| Overall Conduct | | C | 35 (81) |

EBM evidence-based medicine

Critical Deficiencies

The most frequently cited critical deficiencies were “insufficient knowledge” (34/43, 79 % of students), “inadequate patient presentation skills” (32, 74 %), and “inadequate ability to create a plan” (26, 60 %).

PCRS Competency Domains

The number of failing students with critical deficiencies in each competency domain ranged from 42 of the 43 students (98 %) in Knowledge for Practice (KP) to 15 (35 %) in Systems-Based Practice (SBP). After KP, the most frequently affected domain was Interpersonal and Communication Skills (ICS), with 39 students (91 %).

Multiple Competency Domains

All failing students had deficiencies in at least two competency domains. Forty-two of the 43 students (98 %) had deficiencies in three or more domains, 27 (63 %) had deficiencies in five or more domains, and 4 (9 %) had deficiencies in all eight domains (Table 2).

Table 2.

Number and Percentage of Students with Critical Deficiencies in Multiple PCRS Competency Domains (N = 43 students)

| No. of competency domains | No. (%) of students |
|---|---|
| 1 | 0 (0) |
| 2 | 1 (2) |
| 3 | 8 (19) |
| 4 | 7 (16) |
| 5 | 9 (21) |
| 6 | 7 (16) |
| 7 | 7 (16) |
| 8 | 4 (9) |
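The cumulative figures quoted in the text (three or more domains, five or more, all eight) are running sums over the rows of Table 2. A small Python sketch of that arithmetic, using only the counts in the table (the helper name is hypothetical):

```python
# Students per number of PCRS domains with critical deficiencies, from Table 2.
students_by_domain_count = {1: 0, 2: 1, 3: 8, 4: 7, 5: 9, 6: 7, 7: 7, 8: 4}
n_students = sum(students_by_domain_count.values())  # 43 failing students


def at_least(k):
    """Number and whole-number percentage of students with deficiencies in >= k domains."""
    count = sum(n for domains, n in students_by_domain_count.items() if domains >= k)
    return count, round(100 * count / n_students)


for k in (2, 3, 5, 8):
    count, pct = at_least(k)
    print(f">= {k} domains: {count} ({pct} %)")
```

This reproduces the reported values: 43 (100 %) with two or more domains, 42 (98 %) with three or more, 27 (63 %) with five or more, and 4 (9 %) with all eight.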

Knowledge and Skill Versus Conduct

All 43 failing students had deficits in the KS category. Thirty-five (81 %) had concurrent conduct issues. None failed solely due to conduct issues.

Gender

Although men comprised 50.8 % of the overall student body, they accounted for 72 % (31/43) of the failing students. The largest gender differences were in the conduct category, in which 27 of 31 (87 %) failing male students had critical deficiencies compared with 8 of 12 (67 %) failing female students, and in professionalism, in which 22 (71 %) men had critical deficiencies compared with 6 (50 %) women. However, chi-square and Fisher exact tests indicated no statistically significant relationship between gender and critical deficiencies in any competency domain or in overall KS or conduct (Table 3).

Table 3.

Comparison of Number and Percentage of Male (n = 31) and Female (n = 12) Students with Critical Deficiencies in Each PCRS Competency Domain and in Knowledge and Skills for the Practice of Medicine and Conduct Categories

| PCRS competency domain | No. (%) male | No. (%) female | p value |
|---|---|---|---|
| Knowledge for Practice | 30 (97) | 12 (100) | 1.0* |
| Interpersonal and Communication Skills | 28 (90) | 11 (92) | 1.0* |
| Patient Care | 27 (87) | 11 (92) | 1.0* |
| Professionalism | 22 (71) | 6 (50) | 0.29* |
| Practice-Based Learning and Improvement | 16 (52) | 6 (50) | 0.92† |
| Interprofessional Collaboration | 15 (48) | 4 (33) | 0.37† |
| Personal and Professional Development | 15 (48) | 4 (33) | 0.37† |
| Systems-Based Practice | 10 (32) | 5 (42) | 0.72* |
| Overall Knowledge and Skills | 31 (100) | 12 (100) | ND‡ |
| Overall Conduct | 27 (87) | 8 (67) | 0.18* |

PCRS Physician Competency Reference Set

*As assessed using Fisher exact test

†As assessed using chi-square test

‡No statistics are computed because the KS variable is a constant

DISCUSSION

The 43 failing students represented in the letters to COSSS constitute 1.5 % of the students who completed the internal medicine clerkship during the 11-year period of this study. This failure rate is similar to clerkship failure rates reported previously.3,20 Identifying these failing students is a complex task, made more difficult by the low frequency of this event. The COSSS letters provide the most comprehensive description of failing students at our institution. Through analysis of these letters, we sought to understand in greater depth the reasons for students’ failure. We hope that by providing a more detailed description of the failing student, we may help improve rater sensitivity for detecting these students within the broader population of competent students and provide guidance on where to focus remediation efforts.

Our population of failing students demonstrated critical deficiencies in all eight PCRS competency domains, suggesting that students can struggle in most, if not all, areas. Deficiencies were most concentrated in KP (98 % of students), ICS (91 %), and Patient Care (PC; 88 %), which is consistent with previous studies of struggling internal medicine residents and medical students.6,7,22 The most common critical deficiencies were “insufficient knowledge” (79 %), “inadequate patient presentation skills” (74 %), and “inadequate ability to create a plan” (60 %). This suggests that failing students are struggling with the core areas of fund of knowledge, the ability to synthesize and apply their knowledge, and the ability to clearly communicate their knowledge and synthesis during rounds. These findings are not unexpected, as these are essential skills and activities within the medicine clerkship that are frequently observed by preceptors during rounds.

Given the frequency with which these core areas (synthesis, knowledge application, and clear communication of knowledge and synthesis during rounds) contribute to student failure, it may be appropriate to develop remediation programs targeting these core skills. Additionally, more rigorous assessment of these critical areas earlier in the medical school curriculum may aid in earlier detection and intervention. Earlier remediation of deficiencies in these critical competencies is important, as it has the potential to stop the cycle of underperformance,12 leading to greater success in clinical clerkships and helping to avoid the consequences associated with clerkship failure, including difficulty matching into residency.

We further found that all failing students had critical deficiencies in more than one competency domain, and 98 % had deficiencies in three or more domains. This may, in part, reflect the overlap in skills required across these domains. Indeed, we coded 14 of the 50 critical deficiencies to multiple domains, indicating that the same behavior can bear on more than one competency. In addition, skills are connected across domains; for example, a student struggling with synthesis of information (KP) would also be expected to struggle with documentation (ICS) and to appear inefficient (PC).

Our finding that failing students had deficits across multiple PCRS competency domains is similar to that of a prior study of learners with difficulties (not limited to failing learners), which evaluated learners using a different competency framework.6 Many factors likely contribute to this finding. Evaluators may have difficulty sorting out the specific reason for poor performance and may attribute it to multiple reasons. It may also be an artifact of the competency construct itself, in which poor performance in one area leads to poor performance in related domains. Finally, poor performance in some areas may result directly from deficits in foundational domains: without a strong foundation, it is likely difficult to develop competency in associated higher-level skills. For example, a poor knowledge base is likely to lead to difficulty with synthesis.

We suggest that identifying a student as failing to meet standards in one area should prompt timely investigation across all other competency domains. For example, if a student is having difficulty with presentations, it may be useful to assess the student’s knowledge and clinical reasoning and to review the student’s notes to determine the specific sources of difficulty. When designing remediation, it may also be helpful to consider the overlap in critical deficiencies across domains to ensure that all potential problems are addressed.

Before this study, we had anticipated that conduct and KS problems might occur in different populations of students. We were therefore surprised that none of our students failed for isolated conduct problems and that concurrent conduct and KS issues were so frequent (81 % of students had deficits in both). It is possible that isolated conduct problems occurred but that preceptors gave passing marks unless they also found concurrent KS deficiencies. Most failing students had multiple examples of conduct problems described in their COSSS letters, suggesting that preceptors may be reluctant to record an isolated instance of unprofessional behavior and may look for corroborating evidence before assigning a failing grade.4 Therefore, providing opportunities for longitudinal observation by a single attending physician or for detailed learner handoffs would likely help in identifying and documenting students with conduct problems. Longitudinal integrated clerkships may be one model enabling earlier identification of failing students.23

Similar to previous research, our cohort of failing students included more men than women.6 A greater percentage of male than female students had deficiencies in the professionalism domain and in the conduct category, although neither relationship was statistically significant. Prior studies have reported varying gender proportions in cohorts with professionalism problems.5,6,20 There were also no significant relationships between gender and critical deficiencies in the other competency domains.

Limitations

Our study has several limitations. First, this is a single clerkship within a single institution, which may limit the generalizability of our findings. Additionally, the clerkship is exclusively inpatient, which may affect the critical deficiencies observed and documented, further limiting generalizability. Second, it is possible that some students who did not meet minimum standards were not identified by our evaluation system; our analysis therefore may not fully represent the population of students failing to meet minimum standards. Furthermore, because our qualitative analysis was based on a letter summarizing student performance, which drew upon verbal and written reports of student behavior, we cannot say with certainty that the letters capture the full spectrum of failing student behaviors. The frequency with which a deficiency is documented reflects both how often the deficit is present and how often it is observed and subsequently documented. A further limitation is that, although our categorization of critical deficiencies as KS or conduct was based on specific definitions, a deficit in KS may actually be rooted in a deficit in conduct, or vice versa. This highlights the difficulty of understanding the underlying causes of critical deficiencies and underscores the need for careful investigation when a critical deficiency is observed. In addition, while we observed differences in the frequency of critical deficiencies between male and female students, most notably in professionalism and conduct, our sample size may have been too small to detect significant relationships between gender and critical deficiencies in the competency domains. Lastly, our analysis mapped the critical deficiencies identified in the letters only to the PCRS competency domains. We attempted to map them to the PCRS sub-competencies but found the sub-competencies too specific, relative to the critical deficiency descriptions in the COSSS letters, to allow accurate mapping. In the future, the PCRS sub-competencies will likely be more fully incorporated into evaluation forms, allowing more specific mapping.

CONCLUSIONS

The findings from our study suggest several practical recommendations for the identification, assessment, and remediation of failure among learners in a medicine clerkship. Direct observation and careful assessment in all competency domains are essential, as learners can struggle in all areas. Knowing the range of areas where students may struggle, as well as the most likely areas of difficulty, may aid faculty in developing strategies for identifying a failing student. Faculty development should include examples of common scenarios of poor student performance, with the opportunity to practice delivering feedback in these cases. Knowledge of common areas of student underperformance may also aid in earlier identification of the at-risk student and subsequent targeted investigation to determine the full spectrum of a student’s deficiencies.

In our study, KP, ICS, and PC were the most common areas of deficiency among failing students, which suggests a need for robust evaluation and remediation in these foundational areas. Schools should consider having remediation plans ready for these common areas, as well as early detection strategies such as objective structured clinical examinations. In addition, our finding that all failing students had deficiencies in multiple domains suggests that identification of a single critical deficiency should prompt further investigation to determine the full scope of learner difficulties at the earliest stage possible.


Compliance with Ethical Standards

Funding

None.

Prior Presentations

Oral presentation, "Diagnosing Failure", at the AMEE 2014 conference of the Association for Medical Education in Europe, August 30 to September 3, 2014, in Milan, Italy.

Poster, "Describing failure in a clinical clerkship: implications for identifying, assessing and remediating struggling learners", at the Academic Internal Medicine Week 2015 of the Alliance for Academic Internal Medicine, October 8–10, 2015, in Atlanta, GA.

Conflict of Interest

The authors declare that they have no conflict of interest.

Footnotes

Electronic supplementary material

The online version of this article (doi:10.1007/s11606-016-3758-3) contains supplementary material, which is available to authorized users.

References

- 1. Caulfield M, Redden G, Sondheimer H. Graduation rates and attrition factors for U.S. medical school students. AAMC Analysis in Brief. Available at: https://www.aamc.org/download/379220/data/may2014aib-graduationratesandattritionfactorsforusmedschools.pdf. Accessed May 5, 2016.

- 2. Data presented at the Alliance for Academic Internal Medicine Meeting in 2011. Available at: http://www.im.org/d/do/4344. Accessed February 9, 2015.

- 3. Fazio SB, Papp KK, Torre DM, DeFer TM. Grade Inflation in the Internal Medicine Clerkship: A National Survey. Teach Learn Med. 2013;25:71–76. doi: 10.1080/10401334.2012.741541.

- 4. Dudek NL, Marks MB, Regehr G. Failure to Fail: The Perspectives of Clinical Supervisors. Acad Med. 2005;80(10 suppl):S84–S87. doi: 10.1097/00001888-200510001-00023.

- 5. Papadakis MA, Teherani A, Banach MA, et al. Disciplinary action by medical boards and prior behavior in medical school. N Engl J Med. 2005;353:2673–2682. doi: 10.1056/NEJMsa052596.

- 6. Guerrasio J, Garrity MJ, Aagaard EM. Learner Deficits and Academic Outcomes of Medical Students, Residents, Fellows, and Attending Physicians Referred to a Remediation Program, 2006–2012. Acad Med. 2014;89:352–358. doi: 10.1097/ACM.0000000000000122.

- 7. Dupras DM, Edson RS, Halvorsen AJ, Hopkins RH, McDonald FS. “Problem Residents”: Prevalence, Problems and Remediation in the Era of Core Competencies. Am J Med. 2012;125:421–425. doi: 10.1016/j.amjmed.2011.12.008.

- 8. Hemmer PA, Hawkins R, Jackson JL, Pangaro LN. Assessing how well three evaluation methods detect deficiencies in medical students' professionalism in two settings of an internal medicine clerkship. Acad Med. 2000;75:167–173. doi: 10.1097/00001888-200002000-00016.

- 9. Guerrasio J, Brooks E, Rumack CM, Christensen A, Aagaard EM. Association of Characteristics, Deficits, and Outcomes of Residents Placed on Probation at One Institution, 2002–2012. Acad Med. 2016;91:382–387. doi: 10.1097/ACM.0000000000000879.

- 10. Audétat M-C, Laurin S, Dory V. Remediation for struggling learners: putting an end to ‘more of the same’. Med Educ. 2013;47:224–231. doi: 10.1111/medu.12120.

- 11. Hauer KE, Ciccone A, Henzel TR, Katsufrakis P, Miller SH, Norcross WA, Papadakis MA, Irby DM. Remediation of the Deficiencies of Physicians Across the Continuum From Medical School to Practice: A Thematic Review of the Literature. Acad Med. 2009;84:1822–1832. doi: 10.1097/ACM.0b013e3181bf3170.

- 12. Cleland J, Leggett H, Sandars J, Costa MJ, Patel R, Moffatt M. The remediation challenge: theoretical and methodological insights from a systematic review. Med Educ. 2013;47:242–251. doi: 10.1111/medu.12052.

- 13. NBME Subject Exams. Available at: http://www.nbme.org/schools/subject-exams/subjects/exams.html. Accessed May 5, 2016.

- 14. NVivo qualitative data analysis software; QSR International Pty Ltd. Version 9, 2010.

- 15. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15:1277–1288. doi: 10.1177/1049732305276687.

- 16. Denzin NK, Lincoln YS. Collecting and interpreting qualitative materials. Thousand Oaks, Calif.: Sage Publications; 1998.

- 17. The Internal Medicine Milestone Project. Available at: https://www.acgme.org/acgmeweb/Portals/0/PDFs/Milestones/InternalMedicineMilestones.pdf. Accessed May 5, 2016.

- 18. Englander R, Cameron T, Ballard AJ, Dodge J, Bull J, Aschenbrener CA. Toward a common taxonomy of competency domains for health professions and competencies for physicians. Acad Med. 2013;88:1088–1094. doi: 10.1097/ACM.0b013e31829a3b2b.

- 19. Drafting Panel. Core entrustable professional activities for entering residency. AAMC 2014. Available at: http://members.aamc.org/eweb/upload/Core%20EPA%20Curriculum%20Dev%20Guide.pdf. Accessed May 5, 2016.

- 20. Papadakis MA, Osborn EHS, Cooke M, Healy K. A strategy for the detection and evaluation of unprofessional behavior in medical students. Acad Med. 1999;74:980–990. doi: 10.1097/00001888-199909000-00009.

- 21. Papadakis MA, Hodgson CS, Teherani A, Kohatsu ND. Unprofessional behavior in medical school is associated with subsequent disciplinary action by a state medical board. Acad Med. 2004;79:244–249. doi: 10.1097/00001888-200403000-00011.

- 22. Yao DC, Wright SM. National survey of internal medicine residency program directors regarding problem residents. JAMA. 2000;284:1099–1104. doi: 10.1001/jama.284.9.1099.

- 23. Mazotti L, O’Brien B, Tong L, Hauer KE. Perceptions of evaluation in longitudinal versus traditional clerkships. Med Educ. 2011;45(5):464–470. doi: 10.1111/j.1365-2923.2010.03904.x.