Abstract

Background

Soma localization is an important step in computational neuroscience to map neuronal circuits. However, locating somas from large-scale and complicated datasets is challenging. The challenges primarily originate from the dense distribution of somas, the diversity of soma sizes and the inhomogeneity of image contrast.

Results

We proposed a novel localization method based on density-peak clustering. In this method, we introduced two quantities (the local density ρ of each voxel and its minimum distance δ from voxels of higher density) to describe the soma imaging signal, and developed an automatic algorithm to identify the soma positions from the feature space (ρ, δ). Compared with other methods focused on high local density, our method allowed the soma center to be characterized by high local density and large minimum distance. The simulation results indicated that our method had a strong ability to locate the densely positioned somas and strong robustness of the key parameter for the localization. From the analysis of the experimental datasets, we demonstrated that our method was effective at locating somas from large-scale and complicated datasets, and was superior to current state-of-the-art methods for the localization of densely positioned somas.

Conclusions

Our method effectively located somas from large-scale and complicated datasets. Furthermore, we demonstrated the strong robustness of the key parameter for the localization and its effectiveness at a low signal-to-noise ratio (SNR) level. Thus, the method provides an effective tool for the neuroscience community to quantify the spatial distribution of neurons and the morphologies of somas.

Electronic supplementary material

The online version of this article (doi:10.1186/s12859-016-1252-x) contains supplementary material, which is available to authorized users.

Keywords: Touching soma localization, Density-peak clustering, Optical microscopic image

Background

Reconstructing brain-wide wiring networks at single-neuron resolution is the key to understanding how neuronal circuits orchestrate complex behaviors [1], and represents a major engineer challenge [2–4]. To achieve this goal, many computational techniques are required such as the localization and segmentation of neuronal somas, which is the first step in digital neuronal circuit reconstruction. Soma segmentation can provide the spatial distribution and morphometrics of somas, which are quantitative aspects of some brain disease diagnosis [5, 6]. For example, in Alzheimer's disease we can sometimes observe selective loss of nigral neurons [7], whereas in the cortices of patients with Huntington disease there is an increase in the density of large glia and a reduction in the neuronal size and density [8]. Recent advances in molecular labeling [9, 10] and imaging techniques [11–16] have enabled imaging of the whole mouse brain at a micron spatial resolution, and have provided a database for the mapping of neuronal circuits. However, the localization and segmentation of neuronal somas from this type of dataset is challenging. These challenges primarily originate from the following three aspects: the dense distribution of somas (touching somas), the diversity of soma sizes, and the inhomogeneity of image contrast.

Many methods have been proposed for the automatic localization of touching cells, such as watershed algorithms [17–20], graph-based methods [21–25], energy functional-based models [26–31] and machine learning approaches [32–34]. Additionally, some special methods are available for splitting touching cells [35–42], such as distance transform based cell detection [35], concave point-based segmentation methods [36, 37] and the gradient flow tracking method [38, 39]. These methods have their own advantages and behave well for some specific applications. However, most require enhancement for wider applications. For example, watershed algorithms often suffer from over-segmentation of cells when the image contrast is inhomogeneous; initial localizations are not easy to set using energy functional-based models to locate touching cells; distance transform based cell detection method faces challenges for the case that multiple cells touching each other; and gradient flow tracking often results in under-segmentation in locating touching cells. Therefore, these methods experience difficulties in locating touching somas from large-scale 3D images in which a dense distribution of somas, diversity of soma sizes and inhomogeneity of image contrast are common. Recently, two methods have been proposed for the large-scale localization of neuronal somas. The first is our previous method named NeuroGPS [43], which introduces the regularization item in the sphere fitting model to eliminate the influence of the thick neurites on soma localization. However, when using NeuroGPS to locate closely positioned somas, the accuracy depends on the reasonable assignment of initial positions (seeds). Assigning too many initial positions usually generates false positive positions. The second method use mean-shift clustering to search soma positions (i.e., the center point of the cluster) [44], which generates high recall and precision rates for the analysis of specific datasets. However, the number of clusters in mean-shift clustering is determined by the key parameter, kernel width. A big kernel width usually leads to a small number of clusters, and conversely a large number of clusters is for small kernel width. Thus, it may be difficult to find a reasonable kernel width that is suitable for the diversity of soma sizes.

Here, we proposed a method for the automatic large-scale localization of neuronal somas. This method was based on density-peak clustering [45], in which two quantities (the local density ρ of each voxel and its minimum distance δ from voxels of higher density) were introduced and formed the feature space (ρ, δ). From the feature space, we developed an automatic algorithm to find the clusters. Each cluster corresponds to the morphology of a soma, which achieves the localization of a soma. We demonstrated the validity of the proposed method for the large-scale localization of somas. We also demonstrated its strong anti-noise ability, the robustness of the key parameter for localization, and the high efficiency in the analysis. Furthermore, we tested our method on two image stacks. From one dataset, our method achieved the localization with a F1-measure of 0.93, which was far superior to some state-of-the-art algorithms. From the other large scale dataset (4.3 × 2.4 × 2.7 mm3, 3.26 GB), our method located approximately 35,000 somas and achieved F1-measures of 0.93 and 0.98 from the analysis of two sub image stacks.

Methods

Data acquisition

The experimental datasets were obtained by imaging a mouse brain with the two-photon fluorescence micro-optical sectioning tomography system (2p-fMOST) [16]. All experiments were performed in compliance with the guidance of the Experimental Animal Ethics Committee at Huazhong University of Science and Technology. The original size of the volume pixel was 0.5 × 0.5 × 2 μm3; it was merged to 2 × 2 × 2 μm3 for our analysis. We used three experimental image stacks in Figs. 4c, 5 and 6. The synthetic data consisted of 28 image stacks with different signal-to-noise ratios (SNR = 1, 2, 4 and 6). Each image stack contained only one pair of somas. The synthetic data were used in Fig. 3 and 4a, b. In addition, we tested the proposed method on Nissl staining datasets [46] and structured illumination microscopy datasets [47] (Table 1).

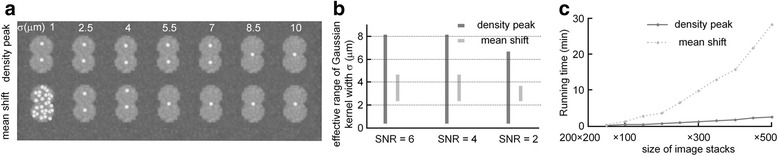

Fig. 4.

Comparison of density-peak clustering and mean shift in parameter robustness and computation complexities when segmenting touching somas. a Localization results of simulated touching somas with different values for σ in the condition of SNR = 3, d = 14 μm. b Effective range of σ for the two methods with SNR = 2, 4 and 6. c Running time (excluding the time of image preprocessing) of the two approaches on mouse hippocampal image stacks of different sizes

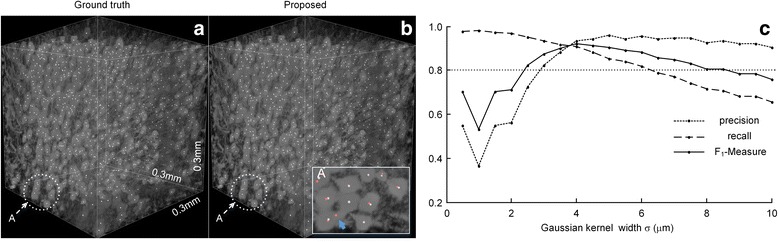

Fig. 5.

Soma localization results derived by the proposed method. a The manual localizations from the image stack with the total size of 150 × 150 × 150 voxels and with the voxel size of 2 × 2 × 2 μm3. b Shows the localization of the somas derived by the proposed approach. The detailed results can be found in the enlargement of the region (circles). White dots indicate manually located positions of somas; red dots are the positions located by our method. Arrows and triangles indicate the false positive positions and the missing positions respectively. c Robustness of Gaussian kernel width on the localization. The evaluation indexes, including localization precision, recall and F 1-measure, are obtained using our method to analyze this dataset and are plotted against kernel width

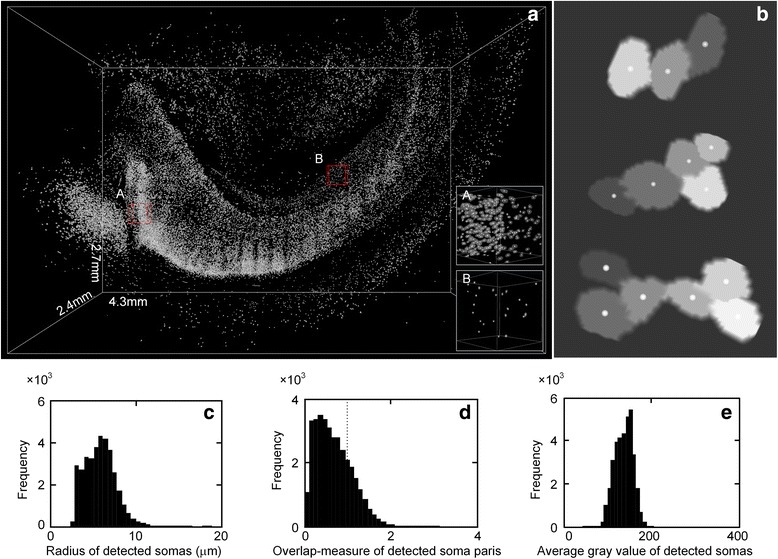

Fig. 6.

Localization of somas from large-scale dataset. a The max-intensity projection of an mouse hippocampal image stack with a volume of 4.3 × 2.7 × 2.4 mm3 and the detected soma centers (white dots). Sub-figures A and B indicate the localizations of densely positioned somas and sparsely positioned somas. b The localization and segmentation of touching somas in three connected components. Note that different gray level here represents different somas. c-e The frequency histograms of radius, overlap-measure and average gray value of detected somas. (radius: average distance between perimeter points of a soma and its center; overlap-measure, for a soma pair that consists of a soma and its closest soma, let s and d denote the sum of radius of these two somas and the distance between the two soma’s centers, overlap-measure is defined as the ratio of s to d; average gray value: average gray value of all points in a soma.)

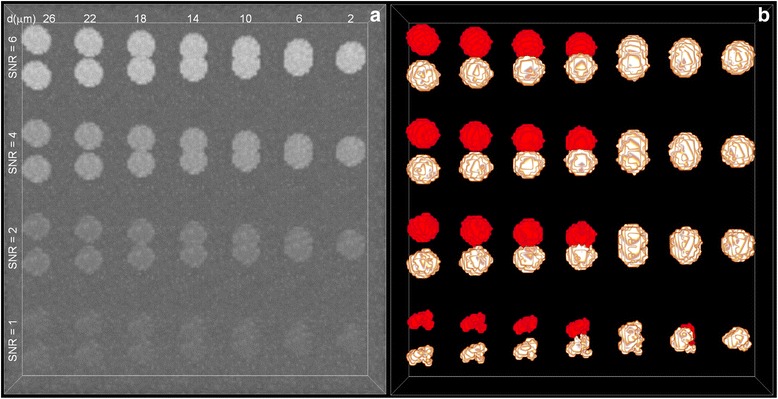

Fig. 3.

Segmentation of touching somas at different levels of SNR. a The simulation datasets that contain 28 pairs of somas. All somas had the fixed radius of 10 μm and the distance of a pair of somas, denoted by d, ranged from 2 μm to 26 μm. b The segmented results on the simulated datasets derived from our method

Table 1.

Performance comparison of FarSight, NeuroGPS, HeY’s method, GFT and the proposed algorithm in different datasets

| Dataset | Type | Volume | Ground truth | Proposed | FarSight | NeuroGPS | HeY’s method | GFT |

|---|---|---|---|---|---|---|---|---|

| Data 1 | 2p-fMOST | 150 × 150 × 150 | 788 | 764/0.92/ | 805/0.85/ | 767/0.78/ | 620/0.70/ | 490/0.60/ |

| 2 × 2 × 2 μm3 | 0.95/0.93a | 0.83/0.84 | 0.80/0.79 | 0.89/0.78 | 0.97/0.74 | |||

| Data 2 | 2p-fMOST | 100 × 100 × 100 | 288 | 280/0.92/ | 268/0.75/ | 265/0.84/ | 246/0.77/ | 231/0.74/ |

| 2 × 2 × 2 μm3 | 0.95/0.93 | 0.81/0.78 | 0.92/0.88 | 0.90/0.83 | 0.92/0.82 | |||

| Data 3 | 2p-fMOST | 100 × 100 × 100 | 25 | 24/0.96/ | 24/0.88/ | 24/0.96/ | 24/0.96/ | 24/0.96/ |

| 2 × 2 × 2 μm3 | 1.00/0.98 | 0.92/0.90 | 1.00/0.98 | 1.00/0.98 | 1.00/0.98 | |||

| Data 4 | 2p-fMOST | 100 × 100 × 100 | 164 | 170/0.94/ | 179/0.76/ | 172/0.88/ | 165/0.82/ | 144/0.73/ |

| 2 × 2 × 2 μm3 | 0.91/0.92 | 0.70/0.73 | 0.84/0.86 | 0.81/0.81 | 0.83/0.77 | |||

| Data 5 | 2p-fMOST | 100 × 100 × 100 | 148 | 147/0.96/ | 173/0.86/ | 146/0.94/ | 147/0.84/ | 135/0.86/ |

| 2 × 2 × 2 μm3 | 0.97/0.96 | 0.74/0.80 | 0.95/0.95 | 0.85/0.85 | 0.94/0.90 | |||

| Data 6 | SIMb | 100 × 100 × 100 | 496 | 486/0.95/ | 528/0.82/ | 407/0.82/ | 399/0.79/ | 452/0.89/ |

| 1 × 1 × 2 μm3 | 0.97/0.96 | 0.77/0.80 | 0.99/0.90 | 0.99/0.88 | 0.98/0.93 | |||

| Data 7 | SIM | 100 × 100 × 100 | 93 | 89/0.96/ | 97/0.88/ | 84/0.90/ | 87/0.90/ | 83/0.88/ |

| 1 × 1 × 2 μm3 | 1.00/0.98 | 0.85/0.86 | 1.00/0.95 | 0.97/0.93 | 0.99/0.93 | |||

| Data 8 | Nissl staining | 100 × 100 × 100 | 695 | 616/0.85/ | 680/0.86/ | 551/0.77/ | 502/0.66/ | 501/0.71/ |

| 1 × 1 × 1 μm3 | 0.95/0.90 | 0.84/0.85 | 0.97/0.86 | 0.91/0.77 | 0.98/0.82 | |||

| Data 9 | Nissl staining | 100 × 100 × 50 | 287 | 266/0.90/ | 263/0.79/ | 216/0.74/ | 197/0.67/ | 187/0.64/ |

| 1 × 1 × 1 μm3 | 0.97/0.93 | 0.86/0.82 | 0.98/0.84 | 0.97/0.79 | 0.99/0.78 | |||

| Mean ± SD | /recall | 0.93 ± 0.04 | 0.83 ± 0.05 | 0.85 ± 0.08 | 0.79 ± 0.10 | 0.78 ± 0.12 | ||

| /precision | 0.96 ± 0.03 | 0.81 ± 0.07 | 0.94 ± 0.07 | 0.92 ± 0.07 | 0.96 ± 0.05 | |||

| /F 1 | 0.94 ± 0.03 | 0.82 ± 0.05 | 0.89 ± 0.06 | 0.85 ± 0.07 | 0.85 ± 0.08 | |||

| two-side p-value of Mann–Whitney test | /recall | 0.001** | 0.021ns | 0.005** | 0.008** | |||

| compared with the proposed method | /precision | 0.000** | 0.893ns | 0.209ns | 0.789ns | |||

| /F 1 | 0.000** | 0.051ns | 0.010** | 0.025* | ||||

ns not significant

*p ≤ 0.05, ** p ≤ 0.01

aNumber of detected cells/recall/precision/F 1-measure

bStructured illumination microscopy

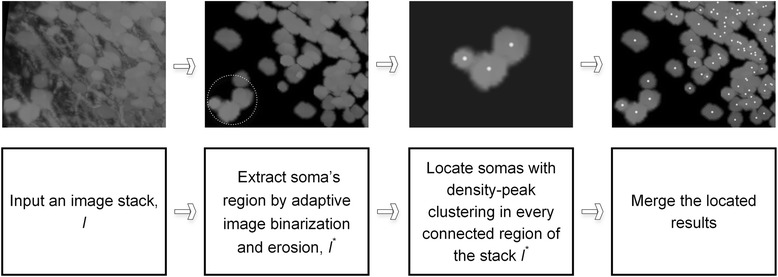

Our proposed method for the location of the neuronal somas consists of the following three steps: 1) extract the soma’s region by using an adaptive image binarization and erosion procedure; 2) locate somas with modified density-peak clustering; and 3) merge the located results. Detailed descriptions of each step are provided in the following sections. We also depict the entire routine of the proposed method in Fig. 1. Notably, our method can also segment the soma’s shape, and we present the related segmentation procedure.

Fig. 1.

The flow chart of the proposed method for the localization of neuronal somas

Estimation of the soma’s region

The procedure for estimating the soma’s region consists of the following steps: 1) split the image stack into sub-blocks; 2) binarize each sub-block and erode each binarized sub-block; 3) merge all eroded sub-blocks into a single image stack; and 4) extract the connected region from the merged image stack. We regard the extracted region as the soma’s region in which one or several somas may be included.

Generally, the signal intensities of somas vastly change in large-scale image stacks. This phenomenon increases the task difficulty in distinguishing between the foreground and background. Considering this point, we split a large-scale image stack into sub-blocks and analyze these sub blocks instead of the whole image stack. Using this procedure, we reduce the range of the signal intensity. When splitting the image stack, the size of a sub-block is set to approximately 200 × 200 × 200 voxels. Any two neighboring regions have overlapping regions to eliminate the boundary effects. The overlapped width is set to 12 fold of the size of a voxel (24 μm). This setting can eliminate boundary effects and avoids the extra calculations required for the overlapped regions.

For each sub-block, let I(x, y, zk) be its kth slice. We binarize I(x, y, zk) for all k, with the following formula

| 1 |

Here, C(x, y, zk) represents the background image, generated as follows: the image min (I(x, y, zk), threOTSU) is convolved 10 times with averaging template of 3 × 3 × 1 pixels. Here, 3 × 3 × 1 and 10 are empirical values that can ensure that the convolved images are sufficiently smooth and approach the background. threOTSU is a binarization threshold estimated by Otsu’s method [48]. threbinarization in Eq. (1) is the predetermined binarization parameter. The above binarization procedure is based on the assumption that C(x, y, zk) can be approached with the Poisson background model, which is suitable for most images collected with optical microscopy. The selection principle of threbinarization should assure that the soma regions can be completely identified, and allows the identified region to contain a small part of the background points. Generally, threbinarization is set to larger values 5 – 8 if the signal intensity of the soma regions is more than double the intensity of the background; otherwise it is set to 2 – 4. Based on this principle, threbinarization is set to 2 for the simulation dataset in our analysis. This value is low and ensure that all soma regions can be identified (even for SNR = 1) and that the identified region contain only about 3 % of the background points, estimated with the Poisson background model. For experimental signals with high SNRs but with soma artifacts, threbinarization is set to 6 to eliminate the soma artifacts. Notably, the soma regions consist of voxel points with B(x, y, zk) = 1.

The binarized image stack B also contains artifacts and noise points. Therefore, we eliminate these unnecessary points using the erosion operation [49]. For each volume pixel with a value of 1, we set its value to zero when the sum value of this volume pixel and its 26-connected volume pixels is less than the erosion threshold T; otherwise, there is no change in its value. We perform this operation for all this type of volume pixels in image stack B to complete one erosion operation. We iteratively repeat this erosion operation until the eroded image stack reaches steady state. We set the erosion threshold T to 9 in the first erosion operation and continuously increase this value with a step of 0.027 for the subsequent erosions. The maximum value of T is less than 11. The specific stability condition of erosion refers to that the relative change rates of two indices, generated with the erosion operation, are less than the given threshold threerosion (0.1 % in our experiment). In these two indices, one is the number of voxels with value 1 and the other is the number of connected regions in the eroded image. Here, threerosion and T are used to control the intensity of erosion. The reasonable number of erosion operations should ensure that the soma morphologies cannot be damaged and is determined by the voxel size and the minimum radius of the cells. We verified that the settings in our experiment are suitable for voxel sizes ranging from 1 × 1 × 1 μm3 to 2 × 2 × 2 μm3 and soma radii ranging from 3 to 10 μm.

We merge all eroded image stacks into a single image stack using the following method. We detect the overlapped region of two neighboring sub-blocks according to the label information from the splitting of the image stack. The overlapped region contacts two un-overlapped regions and is equally divided into two regions denoted by Ra and Rb. This partition leads to one sub-block denoted by A with Ra and Rb indicating the interior and boundary regions, respectively, and the other denoted by B with Rb and Ra indicating the interior and boundary regions, respectively. We assign the signal of A in region Ra and the signal of B in region Rb to the overlapped region. We merge all neighboring sub-blocks in x-axis direction, thereby making the size of the merged sub-blocks the same as the size of the original image stack in the x-axis direction. By continuously using the same operation in the y- and z-axis directions, all sub-blocks can finally be merged into a single image stack.

Finally, we extract the connected region (which refers to the estimated soma region) from the merged image stack using region growing. During the extraction, two volume pixels with values of one are regarded as connected if their positions appear in the sphere region with the radius of the square root of 3. Next, we locate somas in each connected region.

Localization and segmentation of somas based on fast search of density peaks

For each connected region, we use the density peak clustering method [45] to locate and segment somas. Briefly, we locate somas by finding density peaks of the signal (cluster center) and segment somas via the cluster assignment.

The density peak clustering is recently proposed clustering method. It depends on two quantities: the local density ρi of each point, and its minimum distance δi from points of higher density. The two quantities construct the 2D feature space (ρ, δ). The feature space, combined with the hypothesis “cluster center is characterized by a higher density ρ than their neighbors and by a relatively large distance δ” [45], provides the effective information for data clustering. However, the original density-peak clustering method has a disadvantage in that it requires the manual selection of cluster centers. It is infeasible to select cluster centers manually when we use the method to locate thousands of cells from large-scale images. Therefore, we developed a new method to automatically select cluster centers.

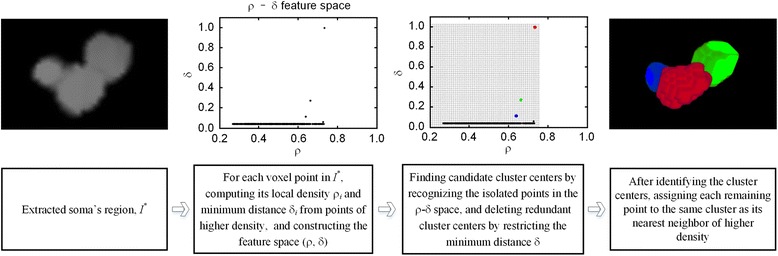

The modified density peak clustering method applied for soma localization and segmentation consists of three parts (Fig. 2):

computing the local density ρi of each voxel point, and its minimum distance δi from points of higher density, and constructing the feature space (ρ, δ);

finding candidate cluster centers by recognizing the isolated points in the ρ-δ space, and deleting redundant cluster centers by restricting the minimum distance δ;

assigning cluster for the points except the identified cluster centers.

Fig. 2.

The steps for the localization and segmentation of somas

Computing ρ, δ

For the voxel point pi (i = 1, 2, …, m) in the estimated soma region, the local density is defined as

| 2 |

Here, I(pi) represents the signal value of volume point pi, K(pi, pj) is a Gaussian kernel function with a kernel width σ, Z is a normalization constant, R is the window radius of the kernel function (R = 2σ), and ||.||2 is 2-norm. In our experimental dataset analysis, the kernel width σ is set to 4 μm, which is slightly more than half of the average value of the soma’s radius.

After obtaining the local density of each voxel point, we calculate the minimum distance δ of a voxel point using the following formula

| 3 |

Identifying cluster centers

Cluster centers are characterized by a higher density ρ than their neighbors and by a relatively large distance δ, and act as isolated points in the ρ-δ space. Therefore, the possible cluster center points can be selected according to the feature density Λ (the density computed in the ρ-δ space). The redundant cluster centers can be removed by restricting the minimum distance δ. Below are the specific steps.

-

Step 1)

Discretize the feature space. We equally divide the intervals (0, max ρi) and (0, max δi) into the [1000max ρi] + 1 and [1000max δi] + 1 subintervals, respectively. Here, [.] is a rounding operation. We count the number of feature points (ρ, δ) that drop into the grids to generate a feature image. We convolve the generated image with a two-dimensional Gaussian window, and obtain the filtered image that contains the information of the density of the feature points. Here, the size of the two-dimensional Gaussian window is 11 × 11 grids and the kernel widths for x- and y-coordinates are both set to 3-fold the size of the grid.

-

Step 2)

Estimate the density of the feature points. For each feature point, we estimate its density by using the value of the pixel, whose region contains the feature point, in the filtered image. This density is named the feature density, denoted by Λ.

-

Step 3)Generate the candidate cluster center points. We obtain the cluster center points by using the following formula

4 Here, threselective is a predetermined parameter and is set to 10-2 in our analysis. Rmin is the minimum value of the estimated soma radius, and is approximately equal to 3 μm in our dataset. Notably, we usually set threselective to a small value to ensure that the candidate points contain all soma positions in the estimated region. The redundant points generated here can be deleted in Step 4.

-

Step 4)

Delete redundant cluster centers. We sort the candidate center points generated in Step 3 in the order of the descending signal density ρ, obtained with Eq. (2). We traverse the points in this order using the following method. For the current point, we search its nearest point among the points that have not been traversed, and calculate the distance between the point and its nearest point. If the distance is less than the minimum value of the estimated soma radius, we label this nearest point. The above procedure is repeated until all candidate points are traversed. We regard the unlabeled candidate points as the final cluster centers, i.e., the positions of somas.

Assigning cluster

After identifying the cluster centers, we assign each remaining point to the same cluster as its nearest neighbor of higher density. The detailed steps are described below.

-

Step 1)

Label the cluster centers. We assign a unique sequence number to each cluster center.

-

Step 2)

Sort the points in the estimated region. We sort the points in the estimated region in the order of the descending local density ρ. The sorted points exclude cluster centers.

-

Step 3)

Assign the points to their clusters. For the current sorted point, we search its nearest point among the points that have higher local densities ρ than the current point. If the nearest point has been assigned to a sequence number, we assign this number to the current point; otherwise, no operation is performed on this point. Using this way, we traverse all sorted points and the unassigned points form a new point series.

-

Step 4)

Repeat Step 2 for the new point series until all points are assigned to their clusters. Points with the same labels are placed into the same cluster.

Performance evaluation

We use the precision rate, recall rate and F1-measure to evaluate the localization results derived by the algorithms. We regard the manually localized positions as true positions. We define an automatically localized position as a true positive position provided that the distance between the automated localized and true positions is less than the fixed threshold, which is set to 8 μm in our experiment. The precision rate is defined as the ratio of the true positive positions to the automatically localized positions. The recall rate is defined as the ratio of the true positive positions to the manually localized positions. The F1-measure is defined as . Note that these three evaluation indices are influenced by the threshold used to identify the true positive positions. The reasonable value should ensure that the evaluation indices change slowly when increasing or decreasing this threshold around the pre-set value.

Results

Segmentation of simulated touching somas at different levels of SNR

A simulation test was performed to validate the effectiveness of our method for soma localization. The synthetic data consisted of 28 image stacks with different signal-to-noise ratios (SNR = 1, 2, 4 and 6). Each image stack contained only one pair of somas. At each SNR level, there were 7 image stacks with different levels of overlap. All somas had a fixed radius of 10 μm, and the distance between a pair of somas (denoted by d) ranges from 2 to 26 μm. When generating the simulation dataset, let the sphere represent a soma. The signal in the inner and out region of a soma is a Poisson signal with mean value of Io + Ib and Ib. SNR is defined as the ratio of Io to the square root of Io + Ib. Ib was fixed and set to 100 in our analysis. In this case, the SNR was determined by Io. All simulation datasets are shown in Fig. 3a, and their localization results are shown in Fig. 3b. From Fig. 3b, we see that our method effectively separated the severely-touching somas at low SNR levels (d = 14 for SNR = 1). Additionally, the separated somas, the slightly-touching somas, and the severely-touching somas were well located and segmented with our method if the SNR was equal to 2 or greater than 2. These results indicate the effectiveness of our method.

Robustness of clustering parameter on soma localization

Our method used density-peak clustering for the localization of a soma. Compared with published localization methods [44, 50, 51] that employ mean-shift clustering [52] for this purpose, our method had a stronger robustness of the clustering parameter on soma localization. We used the simulated datasets, generated with the above-described procedure, to verify this point. These two cluster methods both have a common parameter (kernel width) that influences the localization results. Generally, a large kernel width results in a smooth signal point density curve, but loses the soma boundary information and thus easily confuses densely positioned somas. A small kernel width retains most of the soma boundary information but leads to more than one density peak in the inner region of a soma. Therefore, we regarded kernel width as the key cluster parameter, and we quantified the influence of kernel width on the localization results (Fig. 4a, b). We compared the localization results derived using these two methods on one dataset (Fig. 4a). The results indicated that the reasonable value of kernel width for density-peak clustering ranged from 1 to 7 μm, which was far larger than the range (2.5 - 4 μm) used for mean-shift clustering. We used more datasets to verify this conclusion and obtained the similar results (Fig. 4b), although the reasonable range narrowed as the SNR decreased.

Additionally, we compared the computation efficiencies of these two methods. We used an experimental image block from the hippocampal region with a 200 × 200 × 500 size to generate the testing datasets, which consisted of the first 50, 100, …, 500 slices of this image stack. The results indicated that our method had linear time complexity and was approximately 10-foldfaster than mean-shift based localization method when analyzing a large scale dataset with more than 400 slices (Fig. 4c). The localization part of our method included three steps: computing the local density ρ; searching the minimum distance δ; and identifying cluster center points. The bottleneck was the search for the minimum distance. We reduced the searching space to speed up our method using two strategies: locating somas in every connected region rather than in the whole image stack and using local search for the minimum distance in each connected region. We demonstrated that the complexity of our method is about proportional to the volume of the image stack. The detailed demonstration of the algorithm complexity is provided in Additional file 1.

Localization of the touching somas from an experimental dataset

We used a dataset with closely positioned somas to validate the effectiveness of our method for touching soma localization. The dataset was from a hippocampal region with a volume of 150 × 150 × 150 (2 × 2 × 2 μm3). From this dataset, 788 somas were manually detected (Fig. 5a) and many somas touched one another. We presented the soma localizations derived by the manual method and our method in Figs. 5a and b. Our method located 764 somas. The recall and precision rates were 0.92 and 0.95, respectively. The somas on the boundary were neglected in the quantifications, which could explain why some somas were not manually labeled. Furthermore, our method also behaved well when the key parameter, kernel width, vastly changed. Figure 5c showed that our method provided the segmented results with F1 scores greater than 0.8 for kernel width ranging from 2.5 to 8.0 μm. These two boundary values, 2.5 and 8 μm, vastly deviated from the optimal parameter of 4.0 μm, representing slightly more than half of the average soma radius. This result indirectly verified that our method was suitable for dealing with the diversity of soma sizes. From the above results, we conclude that our method is effective at locating touching somas.

Large-scale soma localization

Our method also effectively located somas from huge and complicated datasets. We used a dataset in which somas exhibited diversity in their spatial distributions and sizes to test our method. The size of this dataset was 2124 × 1200 × 1370 and the voxel size was 2 × 2 × 2 μm3. A total of 35274 somas were detected when our method was used to analyze this dataset. To quantify the segmentation, we selected two typical subregions with the same size of 100 × 100 × 100, labeled with A and B. Region A contained densely positioned somas and region B contained sparsely positioned somas, as shown in Fig. 6a- a and b. The quantified results were as follows: for region A, the recall rate, precision rate, and F1-measure were 0.95, 0.92, and 0.93, respectively, whereas for region B, these three quintiles were 1.00, 0.96, and 0.98, respectively. This result indicates the validity of our methods on this dataset. Figure 6b shows the touching soma segmentation results of three connected regions. Note that the different gray levels here represent different somas. We also illustrated the complexity of this dataset by quantifying related quantities. In Fig. 6c, we calculated the radii of the detected somas and the corresponding radius distribution with a mean of 5.9 μm and a standard deviation of 1.8 μm. Figure 6c showed that the radii of the detected somas were in a wide range (primarily 3 to 10 μm), indicating the diversity of soma sizes. In Fig. 6d, we calculated the distribution of the overlap measure of the detected somas. The overlap measure refers to the ratio of the total radii of a pair of detected somas to the distance between this pair of somas. A detected soma and its closest detected soma form a pair of detected somas. From this definition, an overlap measure of more than 1 corresponds to the two somas touching one another. In Fig. 6d, this value of more than 1 accounted for 23 % of the somas, indicating a certain number of touching measures. Figure 6e showed the different levels of signal intensity of the detected somas, which primarily ranged from 80 to 200. These statistical results verify the complexity of the dataset, which may be challenging for the previous methods.

Comparison of the proposed method with other methods in different datasets

We compared our method with four other methods (FarSight [42], our previously proposed method NeuroGPS [43], HeY’s concave point-based method [37] and the gradient flow tracking (GFT) [38]) and quantified the localizations obtained with these five methods on nine different experimental datasets. These datasets were from three types of images: 2p-fMOST [16], Nissl staining [46], structured illumination microscopy [47]. We made Mann-Whitney [53] test of evaluation indexes (precision, recall, F1-measure), since they were not normal distributions, and analyzed the test results.

The localization evaluation indexes of these methods on the nine datasets were listed in Table 1. The last two rows at the table were values of mean, standard deviation and the results of Mann-Whitney test. For the recall index, the p-values were all less than 0.05, indicating the proposed method is superior to the other approaches in recall. This was because the other methods easily generated under-segmentation for densely positioned cells (Data 1, Data 2, Data 4, Data 6, Data 8, Data 9), resulting in lower values of recall. For sparsely positioned cells (Data 3, Data 5, Data 7), almost all methods could get fine results. Though the proposed method was not significantly better than the other approaches in precision (except FarSight, p-value, 0.000), it still kept high accuracy (mean value, 0.96) and was more stable than other methods (standard deviation, 0.03 vs. ~0.07). The test results of F1-measure also indicated that the proposed method behaved more accurately and stably. We showed the localization results of these datasets in Additional file 2: Figure S1-S9. Notably, for better localization, all the datasets were preprocessed by the method in “Estimation of the soma’s region”, and we used the preprocessed datasets as the input of these methods.

Discussion

Methods [44, 50, 51] using the typical clustering method mean-shift [52] have been proposed to locate cells. Recently, Frasconi et al. [44] proposed a method for the large-scale localization of somas in which mean-shift was employed to estimate the initial soma positions. As indicated in Fig. 4, it is difficult to find a reasonable parameter when using mean-shift for estimating the positions of somas with a diversity of sizes. Therefore, this method considers the structure of the mouse brain region and regards it as prior information for the identification of the final soma positions. This operation significantly enhances the localization accuracy but constrains its application range. In contrast to this method, our method uses density-peak clustering rather than mean-shift to locate somas, and does not require prior structure information for soma identification. Thus, our method may have a wider application than this method and maintain a high level of localization accuracy.

Generally, the localization of cells consists of three steps: image preprocessing; initial cell localization; and identification of the real initial positions. For example, Frasconi’s method uses supervised semantic deconvolution for image preprocessing, mean-shift to estimate the initial cell positions, and structure information from the brain region to identify the real somas. Methods based on model fitting, including our previous NeuroGPS [43], use the threshold value to extract the soma’s region, search for positions corresponding to signal peaks that are regarded as the initial positions, and model fitting to screen the real soma positions. FarShight method [42] is not an exception, and employs a multi-scale Laplacian of Gaussian (LoG) filter to initially estimate the soma positions. The estimation of the initial positions is an important factor to determine the soma localization accuracy. In contrast to the above methods that use the feature (i.e., the soma center featured by a signal peak), our method introduced density-peak clustering, which allowed the soma center to be featured by a signal peak and a large distance δ between the signal peak point and its closest point corresponding to the higher signal peak. Thus, our method used more features, which might make the estimation of the initial soma positions be more accurate.

Image preprocessing is required for soma localization. The goal of image preprocessing is to increases the signal difference between the soma’s region and the background region, and to decrease the difficulties in extracting the soma’s region. Considering the diversity of images, it is difficult to find one commonly used image preprocessing method that is suitable for most soma images. Our method employs binarization and erosion to extract the soma’s region. The corresponding parameter settings are used to accurately extract the soma’s region based on the following assumptions: 1) the estimated radius of somas ranges from 3 to 10 μm; 2) the background noise is Poisson noise; and 3) the voxel size ranges from 1 × 1 × 1 μm3 to 2 × 2 × 2 μm3. With the exception of the assumption 3, the assumptions are suitable for the most cell imaging. We also note that the binarization parameter is not relevant to the voxel size, and that the estimation of the soma’s region for a voxel size far smaller than 1 × 1 × 1 μm3 can be obtained with high accuracy by increasing the erosion intensity. The above analysis indicate that our image processing method can be applied to most image stacks.

The proposed method contains five main parameters: threbinarization; threerosion; the maximum value of the erosion threshold T; Gaussian kernel width σ; and the threshold for selecting candidate cluster center points threselective. The preprocessing parameters, threbinarization, threerosion and the maximum value of the erosion threshold T are used to accurately extract the soma regions. The threbinarization setting is used to ensure that the extracted foreground can completely cover the soma regions. The erosion operation is used to eliminate the artifacts and noise points in the binarized image stack. threerosion and T are used to control the intensity of the erosion, and their settings are determined by the voxel size and the soma radii. We verified that these settings were suitable for voxel sizes ranging from 1 × 1 × 1 μm3 to 2 × 2 × 2 μm3 and soma radii ranging from 3 μm to 10 μm, indicating that these settings were suitable for most cell images. The Gaussian kernel width σ is a scale parameter used to compute the local density and is related to the soma radii. Too larger values of σ usually lead to under-segmentation, whereas too small values may result in over-segmentation. A suitable σ value is about half of the average value of the soma radius (3 – 4 μm). The threshold threselective is used to select candidate cluster center points. Cluster centers are characterized by a higher density ρ than their neighbors and by a relatively large distance δ, and act as isolated points in the ρ-δ feature space. Therefore, the possible cluster center points can be selected according to the feature density Λ (the density computed in the ρ-δ feature space). The threselective setting should ensure that all soma positions are included in the candidate cluster center points. Therefore we set it to a small value of 10-2 in our experiment. This setting is suitable for diverse datasets, including two-photon fluorescence datasets [16], Nissl staining datasets [46], structured illumination microscopy datasets [47], and wide field fluorescence datasets [54]. In short, for different datasets, clustering parameters are easily set, and we usually need to set suitable preprocessing parameters for accurately extracting soma regions according to the image contrast, the influence of neurites. For datasets with many neurites (such as Data 1 – 5 in Table 1), we need to fine tune the preprocessing parameters to eliminate the neurites and keep soma regions. In this case, the proposed method can be categorized as a semi-automated approach. For datasets without neurites (such as Data 6 – 9 in Table 1), we only need to set the binarization parameter according to the image contrast and the complex erosion operation is not necessary. Therefore, the proposed method can work relatively automatically.

Conclusions

In conclusion, we propose a novel method for the localization of touching somas based on modified density-peak clustering. This method can effectively locate somas from large-scale and complicated datasets. Furthermore, we have demonstrated the strong robustness of the key parameter for the localization and its effectiveness at a low SNR level. Thus, the method provides an effective tool for the neuroscience community to quantify the spatial distribution of neurons and the morphologies of somas.

Acknowledgements

We thank the members of the Britton Chance Center for Biomedical Photonics for the data acquisition and valuable suggestions.

Funding

This work was supported by the National Nature Science Foundation of China (91232306, 61205196) and Natural Science Foundation of Hubei Province (2014CFB564). The funding bodies had no role in the design of the study, the collection, analysis and interpretation of data, and in writing the manuscript.

Availability of data and materials

All the 2p-fMOST image datasets and the synthetic datasets supporting the conclusions of the article are available, and can be obtained via contacting the corresponding author (liuxiaomao2001@outlook.com) or visiting https://github.com/ShenghuaCheng/Touching-Cell-Localization. The Matlab package of this method is available via contacting the corresponding author or visiting https://github.com/ShenghuaCheng/Touching-Cell-Localization.

Authors’ contributions

The project was conceived by XL and SZ. SC and TQ designed the algorithms and wrote the software. SC, TQ and XL wrote the paper. SC performed image analysis and processing. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

All experiments were performed in compliance with the guidance of the Experimental Animal Ethics Committee at Huazhong University of Science and Technology.

Abbreviations

- 2p-fMOST

Two-photon fluorescence micro-optical sectioning tomography system

- GFT

Gradient flow tracking

- SNR

Signal-to-noise ratio

Additional files

Detailed derivation of the proposed method complexity. (PDF 104 kb)

The localization result comparison of the proposed method and other methods on nine different datasets. (PDF 7671 kb)

Contributor Information

Shenghua Cheng, Email: chengshcc@outlook.com.

Tingwei Quan, Email: qtw1003@163.com.

Xiaomao Liu, Phone: 0086-18963950708, Email: liuxiaomao2001@outlook.com.

Shaoqun Zeng, Email: sqzeng@mail.hust.edu.cn.

References

- 1.Chiang AS, Lin CY, Chuang CC, Chang HM, Hsieh CH, Yeh CW, et al. Three-dimensional reconstruction of brain-wide wiring networks in drosophila at single-cell resolution. Curr Biol. 2011;21(1):1–11. doi: 10.1016/j.cub.2010.11.056. [DOI] [PubMed] [Google Scholar]

- 2.Perry W, Broers A, El-Baz F, Harris W, Healy B, Hillis WD. Grand Challenges for Engineering. Washington, DC: National Academy of Engineering; 2008. [Google Scholar]

- 3.Roysam B, Shain W, Ascoli GA. The central role of neuroinformatics in the National Academy of Engineering's grandest challenge: reverse engineer the brain. Neuroinformatics. 2009;7(1):1–5. doi: 10.1007/s12021-008-9043-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Peng H, Ruan Z, Atasoy D, Sternson S. Automatic reconstruction of 3d neuron structures using a graph-augmented deformable model. Bioinformatics. 2010;26(12):i38–46. doi: 10.1093/bioinformatics/btq212. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Gómez-Isla T, Price JL, Jr MKD, Morris JC, Growdon JH, Hyman BT. Profound loss of layer II entorhinal cortex neurons occurs in very mild Alzheimer's disease. J Neurosci. 1996;16(14):4491–500. doi: 10.1523/JNEUROSCI.16-14-04491.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Bundgaard MJ, Regeur L, Gundersen HJG, Pakkenberg B. Size Of Neocortical Neurons In Control Subjects And In Alzheimer's Disease. J Anat. 2002;198(4):481–9. doi: 10.1046/j.1469-7580.2001.19840481.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Uchihara T, Kondo H, Kosaka K, Tsukagoshi H. Selective loss of nigral neurons in Alzheimer's disease: a morphometric study. Acta Neuropathol. 1992;83(3):271–6. doi: 10.1007/BF00296789. [DOI] [PubMed] [Google Scholar]

- 8.Rajkowska G, Selemon LD, Goldman-Rakic PS. Neuronal and glial somal size in the prefrontal cortex: A postmortem morphometric study of schizophrenia and Huntington disease. Arch Gen Psychiatry. 1998;55(3):215–24. doi: 10.1001/archpsyc.55.3.215. [DOI] [PubMed] [Google Scholar]

- 9.Feng G, Mellor RH, Bernstein M, Keller-Peck C, Nguyen QT, Wallace M, et al. Imaging neuronal subsets in transgenic mice expressing multiple spectral variants of GFP. Neuron. 2000;28(1):41–51. doi: 10.1016/S0896-6273(00)00084-2. [DOI] [PubMed] [Google Scholar]

- 10.Li A, Gong H, Zhang B, Wang Q, Yan C, Wu J, et al. Micro-optical sectioning tomography to obtain a high-resolution atlas of the mouse brain. Science. 2010;330(6009):1404–8. doi: 10.1126/science.1191776. [DOI] [PubMed] [Google Scholar]

- 11.Ragan T, Kadiri LR, Venkataraju KU, Bahlmann K, Sutin J, Taranda J, et al. Serial two-photon tomography for automated ex vivo mouse brain imaging. Nat Methods. 2012;9(3):255–8. doi: 10.1038/nmeth.1854. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Silvestri L, Bria A, Sacconi L, Iannello G, Pavone F. Confocal light sheet microscopy: micron-scale neuroanatomy of the entire mouse brain. Opt Express. 2012;20(18):20582–98. doi: 10.1364/OE.20.020582. [DOI] [PubMed] [Google Scholar]

- 13.Chung K, Wallace J, Kim SY, Kalyanasundaram S, Andalman AS, Davidson TJ, et al. Structural and molecular interrogation of intact biological systems. Nature. 2013;497(7449):332–7. doi: 10.1038/nature12107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Osten P, Margrie TW. Mapping brain circuitry with a light microscope. Nat Methods. 2013;10(6):515–23. doi: 10.1038/nmeth.2477. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Gong H, Zeng S, Yan C, Lv X, Yang Z, Xu T, et al. Continuously tracing brain-wide long-distance axonal projections in mice at a one-micron voxel resolution. Neuroimage. 2013;74(7):87–98. doi: 10.1016/j.neuroimage.2013.02.005. [DOI] [PubMed] [Google Scholar]

- 16.Zheng T, Yang Z, Li A, Lv X, Zhou Z, Wang X, et al. Visualization of brain circuits using two-photon fluorescence micro-optical sectioning tomography. Opt Express. 2013;21(8):9839–50. doi: 10.1364/OE.21.009839. [DOI] [PubMed] [Google Scholar]

- 17.Malpica N, De Solórzano CO, Vaquero JJ, Santos A, Vallcorba I, Del Pozo F. Applying watershed algorithms to the segmentation of clustered nuclei. Cytometry. 1997;28(4):289–97. doi: 10.1002/(SICI)1097-0320(19970801)28:4<289::AID-CYTO3>3.0.CO;2-7. [DOI] [PubMed] [Google Scholar]

- 18.Park J, Keller JM. Snakes on the Watershed. IEEE Trans Pattern Anal Mach Intell. 2001;23(10):1201–5. doi: 10.1109/34.954609. [DOI] [Google Scholar]

- 19.Yang X, Li H, Zhou X. Nuclei Segmentation Using Marker-Controlled Watershed, Tracking Using Mean-Shift, and Kalman Filter in Time-Lapse Microscopy. IEEE Trans Circuits Syst I Reg Papers. 2006;53(11):2405–14. doi: 10.1109/TCSI.2006.884469. [DOI] [Google Scholar]

- 20.Navlakha S, Ahammad P, Myers EW. Unsupervised segmentation of noisy electron microscopy images using salient watersheds and region merging. BMC Bioinformatics. 2013;14(1):294. doi: 10.1186/1471-2105-14-294. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Nath SK, Palaniappan K, Bunyak F. Cell segmentation using coupled level sets and graph-vertex coloring. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2006. Berlin Heidelberg: Springer; 2006. pp. 101–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Nasr-Isfahani S, Mirsafian A, Masoudi-Nejad A. A new approach for touching cells segmentation. BioMedical Engineering and Informatics, 2008. BMEI 2008. Int Conf IEEE. 2008;2008(1):816–20. [Google Scholar]

- 23.Chen C, Li H, Zhou X, Wong ST. Constraint factor graph cut-based active contour method for automated cellular image segmentation in RNAi screening. J Microsc. 2008;230(2):177–91. doi: 10.1111/j.1365-2818.2008.01974.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Daněk O, Matula P, Ortiz-de-Solórzano C, Muñoz-Barrutia A, Maška M, Kozubek M. Segmentation of touching cell nuclei using a two-stage graph cut model. Segmentation of touching cell nuclei using a two-stage graph cut model. Image Analysis. Berlin Heidelberg: Springer; 2009. pp. 410–9. [Google Scholar]

- 25.Faustino GM, Gattass M, Rehen S, De Lucena C. Biomedical Imaging: From Nano to Macro, 2009. ISBI'09. IEEE International Symposium on. IEEE. 2009. Automatic embryonic stem cells detection and counting method in fluorescence microscopy images; pp. 799–802. [Google Scholar]

- 26.Jierong C, Rajapakse JC. Segmentation of clustered nuclei with shape markers and marking function. IEEE Trans Biomed Eng. 2009;56(3):741–8. doi: 10.1109/TBME.2008.2008635. [DOI] [PubMed] [Google Scholar]

- 27.Plissiti ME, Nikou C. Overlapping cell nuclei segmentation using a spatially adaptive active physical model. IEEE Trans Image Process. 2012;21(11):4568–80. doi: 10.1109/TIP.2012.2206041. [DOI] [PubMed] [Google Scholar]

- 28.Qi X, Xing F, Foran DJ, Yang L. Robust Segmentation of Overlapping Cells in Histopathology Specimens Using Parallel Seed Detection and Repulsive Level Set. IEEE Trans Biomed Eng. 2012;59(3):754–65. doi: 10.1109/TBME.2011.2179298. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Li K, Lu Z, Liu W, Yin J. Cytoplasm And Nucleus Segmentation In Cervical Smear Images Using Radiating Gvf Snake. Pattern Recognit. 2012;45(4):1255–64. doi: 10.1016/j.patcog.2011.09.018. [DOI] [Google Scholar]

- 30.Chinta R, Wasser M. Three-dimensional segmentation of nuclei and mitotic chromosomes for the study of cell divisions in live Drosophila embryos. Cytom Part A. 2012;81(1):52–64. doi: 10.1002/cyto.a.21164. [DOI] [PubMed] [Google Scholar]

- 31.Lu Z, Carneiro G, Bradley AP. An improved joint optimization of multiple level set functions for the segmentation of overlapping cervical cells. IEEE Trans Image Process. 2015;24(4):1261–72. doi: 10.1109/TIP.2015.2389619. [DOI] [PubMed] [Google Scholar]

- 32.Turaga S, Murray J, Jain V, Roth F, Helmstaedter M, Briggman K, et al. Convolutional networks can learn to generate affinity graphs for image segmentation. Neural Comput. 2012;22(2):511–38. doi: 10.1162/neco.2009.10-08-881. [DOI] [PubMed] [Google Scholar]

- 33.Arteta C, Lempitsky V, Noble JA, Zisserman A. Learning to detect cells using non-overlapping extremal regions. Medical image computing and computer-assisted intervention–MICCAI 2012. Berlin Heidelberg: Springer; 2012. pp. 348–56. [DOI] [PubMed] [Google Scholar]

- 34.Chen C, Wang W, Ozolek JA, Rohde GK. A flexible and robust approach for segmenting cell nuclei from 2d microscopy images using supervised learning and template matching. Cytom Part A. 2013;83(5):495–507. doi: 10.1002/cyto.a.22280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Xiao H, Peng H. APP2: automatic tracing of 3D neuron morphology based on hierarchical pruning of a gray-weighted image distance-tree. Bioinformatics. 2013;29(11):1448–54. doi: 10.1093/bioinformatics/btt170. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Bai X, Sun C, Zhou F. Splitting touching cells based on concave points and ellipse fitting. Pattern Recognit. 2009;42(11):2434–46. doi: 10.1016/j.patcog.2009.04.003. [DOI] [Google Scholar]

- 37.He Y, Meng Y, Gong H, Chen S, Zhang B, Ding W, et al. An automated three-dimensional detection and segmentation method for touching cells by integrating concave points clustering and random walker algorithm. PLoS One. 2014;9(8):e104437. doi: 10.1371/journal.pone.0104437. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Li G, Liu T, Tarokh A, Nie J, Guo L, Mara A, et al. 3D cell nuclei segmentation based on gradient flow tracking. BMC Cell Biol. 2007;8(1):1. doi: 10.1186/1471-2121-8-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Li G, Liu T, Nie J, Guo L, Chen J, Zhu J, et al. Segmentation of touching cell nuclei using gradient flow tracking. J Microsc. 2008;231(1):47–58. doi: 10.1111/j.1365-2818.2008.02016.x. [DOI] [PubMed] [Google Scholar]

- 40.Wählby C, Sintorn IM, Erlandsson F, Borgefors G, Bengtsson E. Combining intensity, edge and shape information for 2d and 3d segmentation of cell nuclei in tissue sections. J Microsc. 2004;215(1):67–76. doi: 10.1111/j.0022-2720.2004.01338.x. [DOI] [PubMed] [Google Scholar]

- 41.Buggenthin F, Marr C, Schwarzfischer M, Hoppe PS, Hilsenbeck O, Schroeder T, et al. An automatic method for robust and fast cell detection in bright field images from high-throughput microscopy. BMC Bioinformatics. 2013;14(1):297. doi: 10.1186/1471-2105-14-297. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Yousef AK, Wiem L, William L, Badrinath R. Improved automatic detection and segmentation of cell nuclei in histopathology images. IEEE Trans Biomed Eng. 2010;57(4):841–52. doi: 10.1109/TBME.2009.2035102. [DOI] [PubMed] [Google Scholar]

- 43.Quan T, Zheng T, Yang Z, Ding W, Li S, Li J, et al. NeuroGPS: automated localization of neurons for brain circuits using l1 minimization model. Sci Rep. 2013;3:1414. doi: 10.1038/srep01414. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Frasconi P, Silvestri L, Soda P, Cortini R, Pavone FS, Iannello G. Large-scale automated identification of mouse brain cells in confocal light sheet microscopy images. Bioinformatics. 2014;30(17):i587–93. doi: 10.1093/bioinformatics/btu469. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Rodriguez A, Laio A. Clustering by fast search and find of density peaks. Science. 2014;344(6191):1492–6. doi: 10.1126/science.1242072. [DOI] [PubMed] [Google Scholar]

- 46.Wu JP, Guo CD, Chen SB, Jiang T, He Y, Ding WX, et al. Direct 3D Analyses Reveal Barrel-Specific Vascular Distribution and Cross-Barrel Branching in the Mouse Barrel Cortex. Cereb Cortex. 2014;26(1):23–31. doi: 10.1093/cercor/bhu166. [DOI] [PubMed] [Google Scholar]

- 47.Xu DL, Jiang T, Li AA, Hu BH, Feng Z, Gong H, et al. Fast optical sectioning obtained by structured illumination microscopy using a digital mirror device. J Biomed Opt. 2013;18(6):060503. doi: 10.1117/1.JBO.18.6.060503. [DOI] [PubMed] [Google Scholar]

- 48.Otsu N. A threshold selection method from gray-level histograms. Automatica. 1975;11(285-296):23–7. [Google Scholar]

- 49.Gonzales RC. Digital image processing. India: Pearson Education; 2009. [Google Scholar]

- 50.Wu G, Zhao X, Luo S, Shi H. Histological image segmentation using fast mean shift clustering method. Biomed Eng Online. 2015;14(1):24. doi: 10.1186/s12938-015-0020-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Cheewatanon J, Leauhatong T, Airpaiboon S, Sangwarasilp M. A new white blood cell segmentation using mean shift filter and region growing algorithm. Int J Appl Biomed Eng. 2011;4(1):31. [Google Scholar]

- 52.Comaniciu D, Meer P. Mean shift: A robust approach toward feature space analysis. IEEE Trans Pattern Anal Mach Intell. 2002;24(5):603–19. doi: 10.1109/34.1000236. [DOI] [Google Scholar]

- 53.Mann HB, Whitney DR. On a test of whether one of two random variables is stochastically larger than the other. Ann Math Stat. 1947;18(1):50–60. doi: 10.1214/aoms/1177730491. [DOI] [Google Scholar]

- 54.Xiong HQ, Zhou ZQ, Zhu MQ, Lv XH, Li AA, Li SW, et al. Chemical Reactivation of Quenched Fluorescent Protein Molecules Enables Resin-embedded Fluorescence Microimaging. Nat Commun. 2014;5:3992. doi: 10.1038/ncomms4992. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

All the 2p-fMOST image datasets and the synthetic datasets supporting the conclusions of the article are available, and can be obtained via contacting the corresponding author (liuxiaomao2001@outlook.com) or visiting https://github.com/ShenghuaCheng/Touching-Cell-Localization. The Matlab package of this method is available via contacting the corresponding author or visiting https://github.com/ShenghuaCheng/Touching-Cell-Localization.