Abstract

This study demonstrates real-time scan plane control dependent on three-dimensional needle bending, as measured from magnetic resonance imaging (MRI)-compatible optical strain sensors. A biopsy needle with embedded fiber Bragg grating (FBG) sensors to measure surface strains is used to estimate its full 3-D shape and control the imaging plane of an MR scanner in real-time, based on the needle’s estimated profile. The needle and scanner coordinate frames are registered to each other via miniature radio-frequency (RF) tracking coils, and the scan planes autonomously track the needle as it is deflected, keeping its tip in view. A 3-D needle annotation is superimposed over MR-images presented in a 3-D environment with the scanner’s frame of reference. Scan planes calculated based on the FBG sensors successfully follow the tip of the needle. Experiments using the FBG sensors and RF coils to track the needle shape and location in real-time had an average root mean square error of 4.2 mm when comparing the estimated shape to the needle profile as seen in high resolution MR images. This positional variance is less than the image artifact caused by the needle in high resolution SPGR (spoiled gradient recalled) images. Optical fiber strain sensors can estimate a needle’s profile in real-time and be used for MRI scan plane control to potentially enable faster and more accurate physician response.

Keywords: Bragg gratings, magnetic resonance imaging (MRI), needle interventions, surgical guidance/navigation

I. Introduction

Magnetic resonance imaging (MRI) is an emerging modality for image-guided interventions, and increasing availability of the technology is making such procedures more feasible [1], [2]. Due to recent advances in T2-weighted, diffusion-weighted (DW) MRI and dynamic contrast-enhanced (DCE) MRI, the selective identification of clinically significant prostate cancer has also significantly improved [3]–[5]. Current needle-driven MRI-guided interventions include applications in neurosurgery; biopsy and tumor ablation (breast, prostate, kidney, liver); and radiation therapy (prostate, kidney).

One motivation for real-time imaging is organ and target motion. In neurosurgery, entering the cranial cavity to remove tumors can cause a change in pressure leading to significant brain shift [6]. Hartkens et al. showed up to 20 mm shift in actual lesion position compared to preoperative scans [7]. Real-time imaging can help locate the most current position of tumors, leading to more effective therapies. Although CT (X-ray computed tomography) can be used for guidance, it exposes the patient and surgical team to ionizing radiation and is therefore not preferred for interventional procedures [8]. The neuroArm is an MRI-compatible robot for neurosurgery that includes real-time imaging software and haptic feedback to relay interaction forces and delineate “no-go” zones [9]. This system enables MRI-guided neurosurgery; however, it cannot determine the exact shape of tools without relying on imaging. Moreover, the real-time imaging is interactive but not autonomous, in the sense that scan planes are not automatically prescribed to follow the tools.

Although interactive scan plane control has been used for cardiac procedures [10]–[12], dynamic tool tracking and automatic scan plane control are not widely implemented in practice, and current hardware and software capabilities of MRI systems result in iterative processes of moving the patient in and out of the scanner for imaging and intervention [13], [14]. Furthermore, clear visualization of the entire minimally invasive tool and its intended trajectory is not always available intraoperatively through MR images.

In oncological interventions, including biopsy, cryoablation, and brachytherapy seed placement, needles are used to reach targets such as tumors in the prostate. These procedures are often complicated by needle deflection due to prostate motion and interactions with surrounding tissues of varying stiffness during insertion [15]. It has been shown that the success rate for intended radioactive seed dosage reaching a target in the prostate is 20%–30% due to tissue deformation and gland motion [16]. Furthermore, most dosimetry planning systems assume a straight needle path [17], even though this is not the case in reality. Stabilizing needles have been used to attempt to mitigate missed trajectories due to prostate motion, yet have been found ineffective [16]. Blumenfeld et al. found that the most significant cause of placement error was attributed to needle deflection, especially for needles with an asymmetrical bevel [18]. Various in vitro and simulated studies have characterized needle deflection as a function of insertion depth, needle gauge, and insertion force [17], [19]. When steering around obstacles, tip deflections can be up to 2 cm for a 20-ga 15-cm biopsy needle [20]. These deflections may necessitate reinserting the needle to reach a desired target.

Although real-time MR images can provide visual feedback, magnetic susceptibility artifacts make it difficult to identify the exact tool profiles and tip deflections. Furthermore, gathering volumetric or multi-slice data is time consuming; hence, it is advantageous to image directly at the tool location. A major need in the interventional prostate therapy and diagnostic fields is a method to estimate needle deflections to allow for immediate compensation of the needle’s anticipated trajectory, to improve treatment time and efficacy while avoiding increased risks to the patient.

Previously, we presented a shape sensing biopsy needle with embedded fiber optic sensors [Fig. 1(A)] [21]. With the sensors, we estimated the three dimensional shape of the entire profile of the needle in real-time. In this paper, we use the needle shape information to automatically control the scan plane of an MRI scanner.

Fig. 1.

(A) Design of the 18 ga MRI compatible stylet with embedded FBG sensors. (B) Shape sensing needle in a live canine model as seen in MR images with 3-D plot of the needle shape according to fiber optic sensor data. At this instance, tip deflections of 2.0 mm and 2.5 mm along the XN and YN axes, respectively, were measured. Plot is scaled to highlight bending.

Methods in active tracking of devices in MRI environments [22]–[24] are increasingly fast and accurate, yet these techniques have limitations in regard to line-of-sight, heating, sensitive tuning, complex calibration, and expense. The use of electromagnetic trackers [25] for position tracking is limited to a small region fixed around a magnetic source and, furthermore, may be ineffective in the MR environment. Optical tracking methods such as the Polaris system (Northern Digital Inc., Waterloo, ON, Canada) may be used in the MR suite; however, their reliance on line-of-sight makes them more suitable for out-of-bore and uncluttered environments.

Other methods to track tools in MRI-guided interventions include rapid MRI [26], MR-tracking with radio-frequency (RF) coils [23], [27], [28], and gradient-based tracking (such as the Endoscout by Robin Medical Inc.) [24], [29]. These methods are limited because they require the device to be within the homogeneous volume of the gradient fields used for imaging. Most of these tracking methods also require integration of electronic components with the interventional devices, which further increases complexity, including the need for appropriate patient isolation. In addition, even MR-safe metallic parts may cause artifacts [30] on the MR images and lead to poor signal and/or inaccurate position information. Methods in passive device tracking have been introduced [13] in order to determine the position of interventional devices and change the scanning plane accordingly. The drawbacks to such methods include continual use of MRI scanning and bulky stereotactic frames or fiducials [14], [31], [32] that are attached to the interventional device. These methods generally only give point measurements of position, and are poor in determining orientation; hence, they cannot be used to estimate a tool’s full 3-D profile. Furthermore, these technologies are typically too large to incorporate into a minimally invasive tool [18]. However, we show that RF coils can be useful in the registration of rigid frames of the tool external to the patient.

Current tracking methods cannot detect the bending shape of the tools in real-time, and/or assume a straight tool. Optical shape sensing overcomes some of the limitations associated with other approaches: the sensors can be integrated into sub-millimeter size tools, are free of electromagnetic interference, and do not rely on the MR imager itself, allowing for accurate real-time tool shape detection.

In almost all currently performed needle-driven procedures, the planning, adjustment and initiation of MR scans are performed manually. An autonomous method for scan plane control could enable interventionalists to perform procedures more quickly, more accurately and with less risk to the patient. Time is saved when the physician no longer needs to manually image and re-image an area in an attempt to search for the tool and target. In this paper, we demonstrate the feasibility of automatically controlling an imaging plane based on a 3-D shape-sensing needle, and quantitatively show that the accuracy of the estimated needle position is comparable to that found from the needle artifact in MR images.

II. Methods and Materials

A. System Components

Image registration to anatomical landmarks is an important step in MR-guided interventions; however, there exist limited methods to register images to interventional tools [33]. Most registration methods employ rigid-body point based techniques to align pre-operative image data with patient anatomy and surgical tools [34]. Our approach is to register the rigid tool base to MR images relative to the scanner frame of reference. The registration and imaging are updated in real-time. Then, we visually display the nonrigid data including the profile of the shape-sensing biopsy needle and the patient anatomy. Based on the tool tip position, we display specific planes through the needle tip. Because volumetric data is computationally expensive and time-consuming to acquire, we display only select planes of interest around the target anatomy in 3-D scanner space.

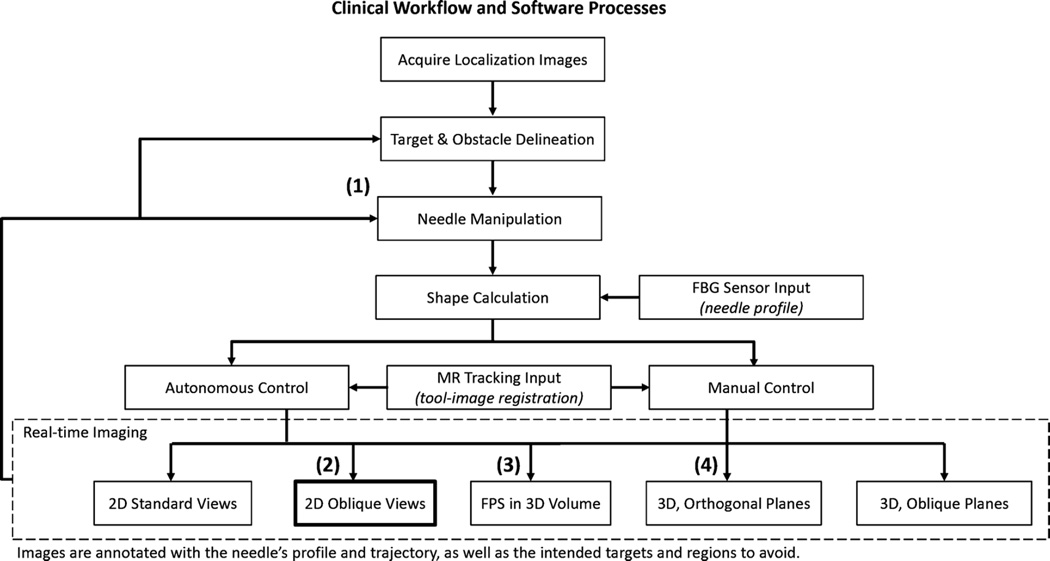

We used three MR tracking coils to define the needle coordinate system in relation to the scanner coordinate system [23]. Thus, the needle base position and orientation are always known. The fiber optic sensors provide the full profile of the needle shape and, using the MR tracking coils’ data, the needle profile is transformed into scanner coordinates. Subsequently, annotations of the needle are overlaid on MR images, and scan planes are prescribed to image through the needle tip. Fig. 2 illustrates the processes involved in the current registration and scan plane control method, as well as the clinical work flow in the ideal implementation of the real-time image guided system.

Fig. 2.

Process flow for tool-image registration and scan plane control. (1) Needle is manipulated either directly by hand or via a steering mechanism. Tool base is tracked by means of miniature MR tracking coils, eliminating the need for a separate calibration step. (2) These 2-D images represent the oblique views through the needle tip in the current implementation. (3) FPS = first person shooter. Mode in which the image is presented as looking down the barrel of the needle. (4) Presentation in 3-D space, where standard coronal, axial, and sagittal or other orthogonal views can be viewed together.

In the presented experiments, the scan plane moved so as to find the nearest oblique sagittal and oblique coronal planes through the needle tip, the advantage being that the tip is always in view and any changes in trajectory in relation to surrounding target tissue are easily noted. However, further investigation and user testing will be performed to determine physicians’ preferred methods of viewing the planes. In the current implementation, we use a custom graphical user interface (GUI) to present the prescribed planes in 3-D based on the scanner’s coordinate frame.

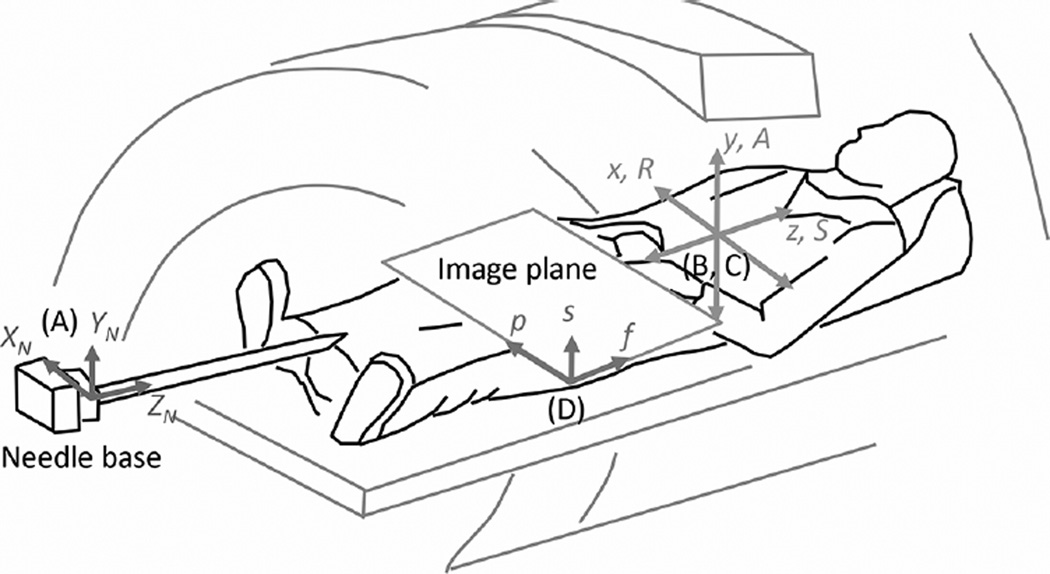

Fig. 3 illustrates the needle inside the scanner and the relevant coordinate frames: the needle frame (XN, YN, ZN), the patient frame (position dependent—R/L,A/P,S/I, with right, anterior, and superior being positive directions by convention), and the scanner frame (x,y,z). We describe the frequency (f) and phase (p) encoding directions as the axes that define the 2-D plane of the image, with the section or slice (s) direction being equal to the cross product of f × p.

Fig. 3.

Relevant coordinate frames, fixed to the (A) needle, (B) scanner, (C) patient, and (D) image. Needle is enlarged to show detail. Origins of the scanner, patient, and image frames are at the magnet’s isocenter. Origin of the needle frame is at its base (proximal) end.

The needle shape is estimated in reference to its base, in a coordinate frame with origin at the base [Fig. 3(A)]. In order to change the scan plane of the MR imager based on the needle shape, first, one needs to find the transformation matrix between the needle and scanner coordinate frames [Fig. 3(B)]. We track the needle base position and orientation using MR tracking coils, without the need for initial calibration.

B. Shape Sensing Needle

We developed an MRI-compatible shape-sensing needle that utilizes optical fiber Bragg grating (FBG) sensors [21]. FBG sensors work by reflecting specific wavelengths from an input broadband light source. The wavelengths shift in proportion to the mechanical and thermal strain to which the gratings are subjected. These sensors have applications in force sensing [35] and structural health monitoring [36]. Medical applications have incorporated FBGs on biopsy needles, catheters and other minimally invasive tools for shape detection and force sensing [37]–[43].
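For reference, the proportionality between wavelength shift and strain is commonly written in the following textbook form. The symbols are not taken from this paper and are introduced here only for illustration: $\lambda_B$ is the Bragg wavelength, $p_e$ the effective photo-elastic coefficient of the fiber, $\varepsilon$ the axial strain at the grating, $\alpha_\Lambda$ and $\alpha_n$ the thermal expansion and thermo-optic coefficients, and $\Delta T$ the temperature change.

```latex
\frac{\Delta\lambda_B}{\lambda_B} = (1 - p_e)\,\varepsilon + (\alpha_\Lambda + \alpha_n)\,\Delta T
```

Because the thermal term appears equally in every grating at the same location, it can be separated from the bending strain when several fibers are read together.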

FBG sensors were embedded in a modified off-the-shelf MRI-compatible 18 ga biopsy needle.1 Three optical fibers with each fiber containing two FBGs were embedded 120° apart [Fig. 1(A)] to measure 3-D local curvatures along the needle and compensate for thermal effects. Using simple beam theory and modeling the needle as a cantilever beam [44], a deflection profile is estimated based on the local curvatures and assumed boundary conditions.
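The following Python sketch illustrates the two ideas in this paragraph under simplifying assumptions; it is not the calibrated procedure of [21]. It assumes an idealized geometry (three fibers at a common radius, 120° apart), an arbitrary sign convention, a curvature that varies linearly between the two sensor stations, and small deflections. The function names, station locations, and fiber radius are hypothetical.

```python
import math

# Fiber angles around the needle axis: three fibers 120 degrees apart.
FIBER_ANGLES = (0.0, 2.0 * math.pi / 3.0, 4.0 * math.pi / 3.0)


def curvature_from_strains(strains, radius):
    """Split three fiber strains at one station into bending and common parts.

    Assumed model (the sign convention is an assumption):
        strain_i = common + radius * (kx * cos(a_i) + ky * sin(a_i))
    The common part (thermal plus axial strain) is the mean of the three
    readings, because the cosines and the sines each sum to zero.
    """
    common = sum(strains) / 3.0
    scale = 2.0 / (3.0 * radius)
    kx = scale * sum(e * math.cos(a) for e, a in zip(strains, FIBER_ANGLES))
    ky = scale * sum(e * math.sin(a) for e, a in zip(strains, FIBER_ANGLES))
    return kx, ky, common


def deflection_profile(stations, curvatures, length, n=151):
    """Integrate curvature twice along a cantilever (small deflections).

    Curvature is assumed linear in z through the two sensor stations.
    Boundary conditions: zero deflection and zero slope at the base (z = 0).
    Returns a list of (z, deflection) pairs for one bending axis.
    """
    (z1, z2), (k1, k2) = stations, curvatures
    rate = (k2 - k1) / (z2 - z1)

    def kappa(z):
        return k1 + rate * (z - z1)

    dz = length / (n - 1)
    slope, deflection, prev_k = 0.0, 0.0, kappa(0.0)
    profile = [(0.0, 0.0)]
    for i in range(1, n):
        k = kappa(i * dz)
        new_slope = slope + 0.5 * (prev_k + k) * dz   # trapezoid rule
        deflection += 0.5 * (slope + new_slope) * dz  # trapezoid rule
        slope, prev_k = new_slope, k
        profile.append((i * dz, deflection))
    return profile
```

Running `deflection_profile` once with the `kx` values and once with the `ky` values of the two stations gives the two bending components of the profile in the needle frame. Averaging the three strains is what removes the temperature contribution in this idealized model.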

Preliminary in-scanner tests were performed to ascertain that no imaging artifact was produced by the optical sensors and that the sensor signal was not affected by the MRI scanner. There was no statistical difference in image artifact between unmodified and modified needles, and the positional accuracy was not compromised. Details on the fabrication, calibration, and positional accuracy of the needle can be found in [21].

A 3-D shape-sensing needle prototype was inserted in a canine model, during a study of MRI methods for transperineal prostate biopsy and cryosurgery, performed in a 3.0T MR 750 scanner (GE Healthcare, Waukesha, WI, USA). The objective of the test was to demonstrate the 3-D shape sensing ability of the needle in vivo2. 2-D multiplanar reformatted images along the plane of the needle were used to qualitatively compare with the deflection data calculated from the wavelength readings of the FBG sensors. Fig. 1(B) shows oblique coronal and sagittal reformatted MR-images of the prostate of the test subject with the needle prototype inserted. The deflection and bend shape were estimated using the FBG sensor signals and reconstructed graphically.

Our previous work tested the benchtop accuracy and MRI-compatibility of the shape-sensing needle. In this paper, we use the 3-D shape sensing needle for autonomous scan plane control to constantly image through the needle tip. Also, we provide real-time 3-D annotation of the needle shape overlaid on MR images.

C. Tool-to-Image Registration: MR Tracking

The transformation matrix between the needle and scanner frames of reference was obtained using three MR tracking coils embedded in a holder at the needle base. As three points define a plane, the minimum number of coils necessary to determine the needle base frame is three.

We prototyped several coil loop sizes, wire gauges, number of loops, and signal sources for the MR tracking coils, based on techniques used in literature [45]. The optimal wire size was robust yet flexible. Smaller signal sources lead to higher positional resolution. The experiments presented here used 5-mm-diameter single looped 22 ga magnet wire coils, with a 3 mm spherical water gel bead3 as the signal source centered inside the coil.

The three tracking coils and two custom made surface imaging coils were connected to a 5-channel coil receiver box which allowed for simultaneous imaging and tracking via the GE scanner. The custom 5-channel coil receiver box had a Port A-type connector and pre-amplification circuits from GE to boost the received signal. Each coil had a protection diode and LC circuit to block current from flowing through the coils when the body coil was being used for RF transmit. The coils had BNC-type connections to the receiver box. Specific configuration files made for the 5-channel receiver allowed the scanner to use the box for research studies.

We employed an algorithm to find the best-fit plane through the three coils, while forcing the directions of the needle frame’s unit vectors. In the initial, unbent state, the oblique coronal plane of best fit is described as the plane in which the entire needle is in view, with ZN collinear with the positive frequency encoding direction, and XN collinear with the phase encoding direction. This plane consequently describes the needle coordinate system in terms of scanner coordinates. This type of data-fitting to orthogonal vectors is a variation of the orthogonal Procrustes problem, a subset of the absolute orientation problem, and similar methods have been demonstrated in [46] and [47].

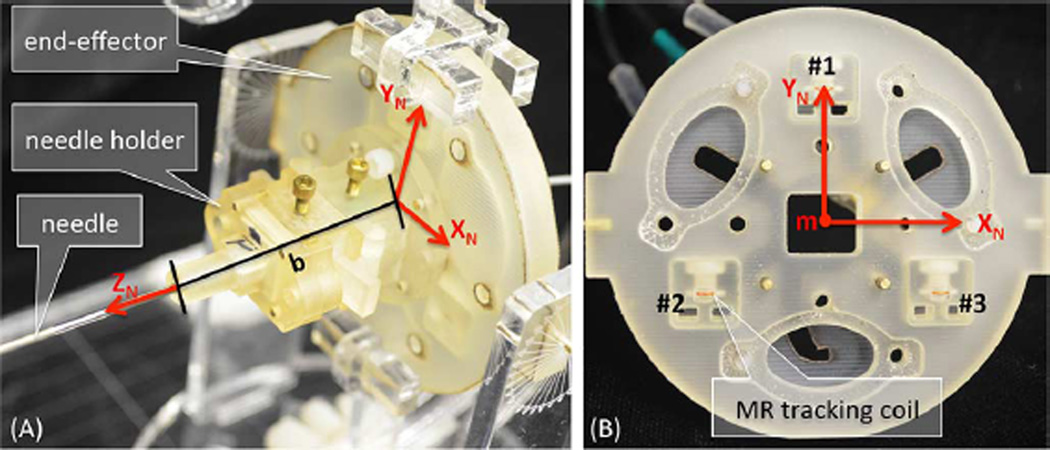

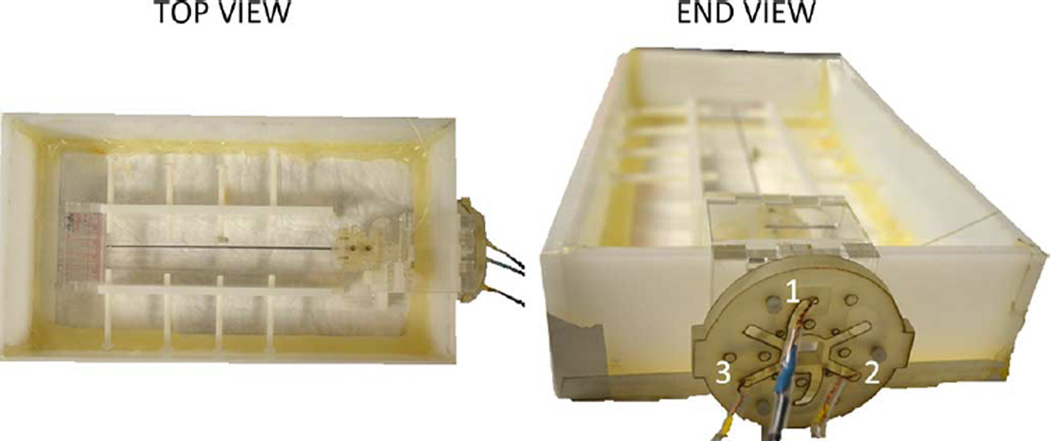

Fig. 4 shows the end-effector, which holds the embedded coils and is affixed to the needle base. The coils are numbered clockwise from 1 to 3 looking at the feet end. Using (1)–(4), we force the direction of XN to be from coil 2 to coil 3, and YN to point from the triangle base formed by coils 2 and 3 up to coil 1. Note that the final size of the end-effector was chosen to be large enough for accurate tracking of the needle frame, yet small enough for hand-held use.

Fig. 4.

Miniature tracking coils for needle base tracking in R, A, S coordinates. Coordinate frames are attached to the end-effector holding the needle base as defined by the triad coil locations. (A) End-effector to needle base distance “b” shown along ZN. (B) End-effector with face plate removed such that tracking coils can be seen.

The transformation matrix between the needle and scanner frame of reference was found using Hadamard encoded MR tracking of the three coils with phase-field dithering [23], [45], implemented with RTHawk (HeartVista Inc., Menlo Park, CA, USA). A custom server program and plugins for RTHawk made it possible to calculate the MR tracked coil positions, take in the FBG sensor data, and display 3-D images and the needle shape annotation in real-time. In the software, we controlled the center of the tracking slice to follow the center of the previously found coil positions. This way, we could decrease the tracking FOV and increase the resolution and positional accuracy of the tracked points.

The optimal pulse sequence parameters for accurate MR tracking of the needle base were set using the custom GUI, including: FOV = 40 cm, size 512 × 512, flip angle = 30°, and TR = 20 ms. Dithering gradients (1.4 cycles/cm) along six orthogonal directions were used to dephase bulk signal originating from outside the tracking coils [45]. The tracking speed and annotation update rate depend on the tracking sequence parameters and optical interrogator speed. In this case, needle shape information was sent at about 4 Hz and needle orientation was updated at about 0.6 Hz. A maximum signal algorithm was used to determine the Hadamard positions.

From the Hadamard algorithm, we know each MR tracked coil’s estimated location in (R, A, S) coordinates. Then, following a single iteration method to fix a coordinate frame to three points [48], the needle base orientation is described in scanner coordinates. Given the coil positions (for i = 1, 2, 3)

| (1) |

The needle frame’s unit axes in scanner coordinates are

| (2) |

| (3) |

| (4) |

Hence, the needle x-axis points from coil 2 to coil 3, and the y-axis is towards coil 1 radially from the needle at the center of the triad (Fig. 4). The needle frame origin is given by the mean point (m) of coils 1, 2, and 3.

Next, the rotation from the needle to scanner frames is given by the unit vectors just described

| (5) |

The translation from the needle to the scanner origin is given by the coils’ mean point (known as the triangle center), m, and the base offset, b, which is the distance along ZN between the needle base and plane of the coils

| (6) |

Therefore, the full transformation from needle to scanner frame is

| (7) |

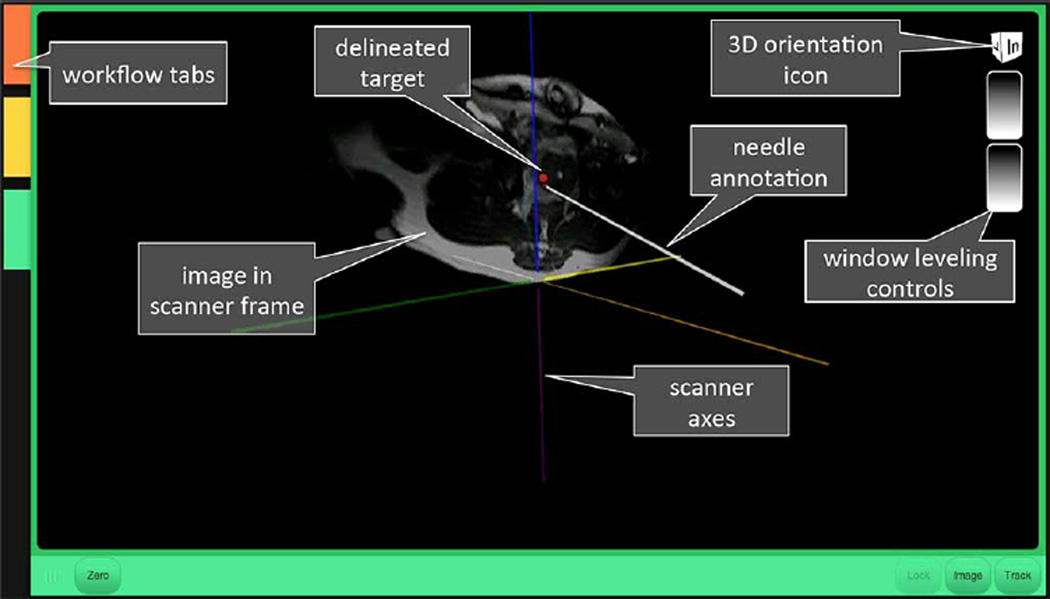

Points along the needle, known in the needle frame from the FBG sensors, are multiplied by the transformation in (7) to get the needle points in R, A, S. Then, an annotation of the needle is overlaid on the MR images in 3-D scanner space, as shown in Fig. 5.
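As an illustration of the registration steps described above, the Python sketch below builds a needle-to-scanner transform from three coil positions and applies it to a needle point. It follows the verbal description (XN from coil 2 to coil 3, YN toward coil 1, origin offset from the coil centroid along ZN), but the exact form of (1)–(7) is not reproduced here. The function names and the sign of the base offset along ZN are assumptions.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def scale(a, k):
    return tuple(k * x for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def needle_to_scanner(c1, c2, c3, base_offset):
    """Return a 4x4 homogeneous transform (list of rows) from three coil positions.

    c1, c2, c3: coil positions in scanner (R, A, S) coordinates.
    base_offset: distance b along ZN from the coil plane to the needle base
                 (the positive direction is an assumption of this sketch).
    """
    x = unit(sub(c3, c2))                          # XN: coil 2 -> coil 3
    y_raw = sub(c1, scale(add(c2, c3), 0.5))       # from the 2-3 midpoint to coil 1
    y = unit(sub(y_raw, scale(x, dot(y_raw, x))))  # YN: remove any XN component
    z = cross(x, y)                                # ZN completes a right-handed frame
    m = scale(add(add(c1, c2), c3), 1.0 / 3.0)     # mean point of the coil triad
    origin = add(m, scale(z, base_offset))
    rows = [[x[i], y[i], z[i], origin[i]] for i in range(3)]
    rows.append([0.0, 0.0, 0.0, 1.0])
    return rows


def transform_point(T, p):
    """Map a point from the needle frame into scanner coordinates."""
    return tuple(T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3]
                 for i in range(3))
```

Applying `transform_point` to every point of the FBG-derived profile yields the annotation coordinates in R, A, S. Because the frame is rebuilt from each new set of coil positions, no separate calibration step is needed in this scheme.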

Fig. 5.

Graphical user interface presenting MR image and needle annotation in the scanner frame of reference.

D. Scan Plane Control

As mentioned, the MR tracking software describes the position and orientation of the needle base in the scanner frame, and the FBG sensors and our custom software give the needle shape with respect to its base position and orientation. Then, as the needle base is manipulated during an interventional procedure, a new transformation (needle to scanner coordinates) is known from the MR tracking coils, without the need for any calibration or initial image registration step.

The scan plane algorithm uses the one-centimeter segment at the tip of the needle, assumed to be a straight segment, to determine the new oblique coronal and oblique sagittal planes to view the needle. The assumption that the tip segment is straight was justified by observing that the orientation does not differ in this region by a significant amount unless there is a concentrated moment at the tip, which is unlikely in practice. It was determined that this tip region will experience less than 0.5% change in slope, based on beam loading calculations to cause up to 2 cm tip deflection.

For the presented scan plane method, we took the plane which contains the tip 1-cm segment that is closest to the standard coronal and sagittal planes. Furthermore, the tip segment of the needle was kept at the center of the image during needle manipulation. The algorithm to find the oblique planes through the needle is as follows.

The needle points p1 and p2 are at the needle tip and one centimeter along the needle away from the tip, respectively. These points are known in R, A, S coordinates. Then, mp is the midpoint

| (8) |

A temporary frequency encoding vector is found, that points from p2 to p1

| (9) |

The midpoint is projected onto the coronal and sagittal planes

| (10) |

| (11) |

The new phase encoding vectors point from the projected midpoints on standard planes to the midpoint along the needle tip section

| (12) |

| (13) |

If the needle tip is more right or anterior to the needle tip, the terms in (12) or (13) are reversed, and the phase direction is from the midpoint to the respective plane.

Then, the slice vectors are found using the frequency vector defined by the needle tip

| (14) |

| (15) |

Finally, the new frequency encoding vectors are found

| (16) |

| (17) |

In this method, the new oblique planes keep the needle tip in view. Scan planes are chosen that cut through the needle tip and are closest to the standard sagittal and coronal planes. During manipulation, the needle will appear to move towards a target in subsequent images, and the oblique planes will not necessarily be orthogonal to each other. An alternative method which excludes (16) and (17), defines the needle tip section as the frequency encoding direction for both planes, and the closest oblique coronal and oblique sagittal planes are found, while keeping these planes orthogonal to each other. In the latter method, the needle may appear still, with objects moving towards it in subsequent images during manipulation. One can think of the encoding direction vectors for a given imaging plane, f, p, s as the orthonormal coordinate frame to describe the needle tip orientation.
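The alternative, mutually orthogonal variant can be sketched in Python as follows. This is one possible realization under stated assumptions, not a reproduction of (8)–(17): the tip segment defines the frequency direction, the oblique coronal normal is taken as the direction closest to the anterior axis that is perpendicular to the needle, and the oblique sagittal plane is then the perpendicular plane that also contains the needle. The function name, the coronal-first ordering, and the (R, A, S) axis assignment are assumptions.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a):
    n = math.sqrt(dot(a, a))
    return tuple(x / n for x in a)


def oblique_planes(tip, back):
    """Return the image center and (f, p, s) triads for two orthogonal planes.

    tip, back: needle tip and the point one centimeter behind it, in (R, A, S).
    Each triad satisfies s = f x p, as for the image frame in Fig. 3.
    Raises ZeroDivisionError if the tip segment is parallel to the A axis.
    """
    center = tuple(0.5 * (a + b) for a, b in zip(tip, back))
    f = unit(tuple(a - b for a, b in zip(tip, back)))

    # Oblique coronal: slice normal closest to +A while perpendicular to f.
    a_axis = (0.0, 1.0, 0.0)
    k = dot(a_axis, f)
    s_cor = unit(tuple(a - k * fi for a, fi in zip(a_axis, f)))
    p_cor = cross(s_cor, f)

    # Oblique sagittal: perpendicular to the coronal plane, also containing f.
    s_sag = p_cor
    if s_sag[0] < 0.0:  # keep the normal on the +R side
        s_sag = tuple(-x for x in s_sag)
    p_sag = cross(s_sag, f)

    return {"center": center,
            "coronal": (f, p_cor, s_cor),
            "sagittal": (f, p_sag, s_sag)}
```

Centering both images on the midpoint of the tip segment keeps the tip in view, and sharing `f` between the two planes is what makes the needle appear stationary while the anatomy moves relative to it.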

E. Experimental Methods

A series of in-scanner experiments was performed to demonstrate the feasibility of controlling an imaging plane based on the 3-D shape-sensing needle, and to quantitatively compare the accuracy of the estimated needle position with that found from MR images. Experiments included characterizing the accuracy of the MR tracking coils in the scanner, at various positions and orientations relative to the magnet isocenter. Scan plane control is then demonstrated while bending the needle in a water bath, to clearly show the chosen image planes. Finally, we demonstrate scan plane control in an ex vivo animal model.

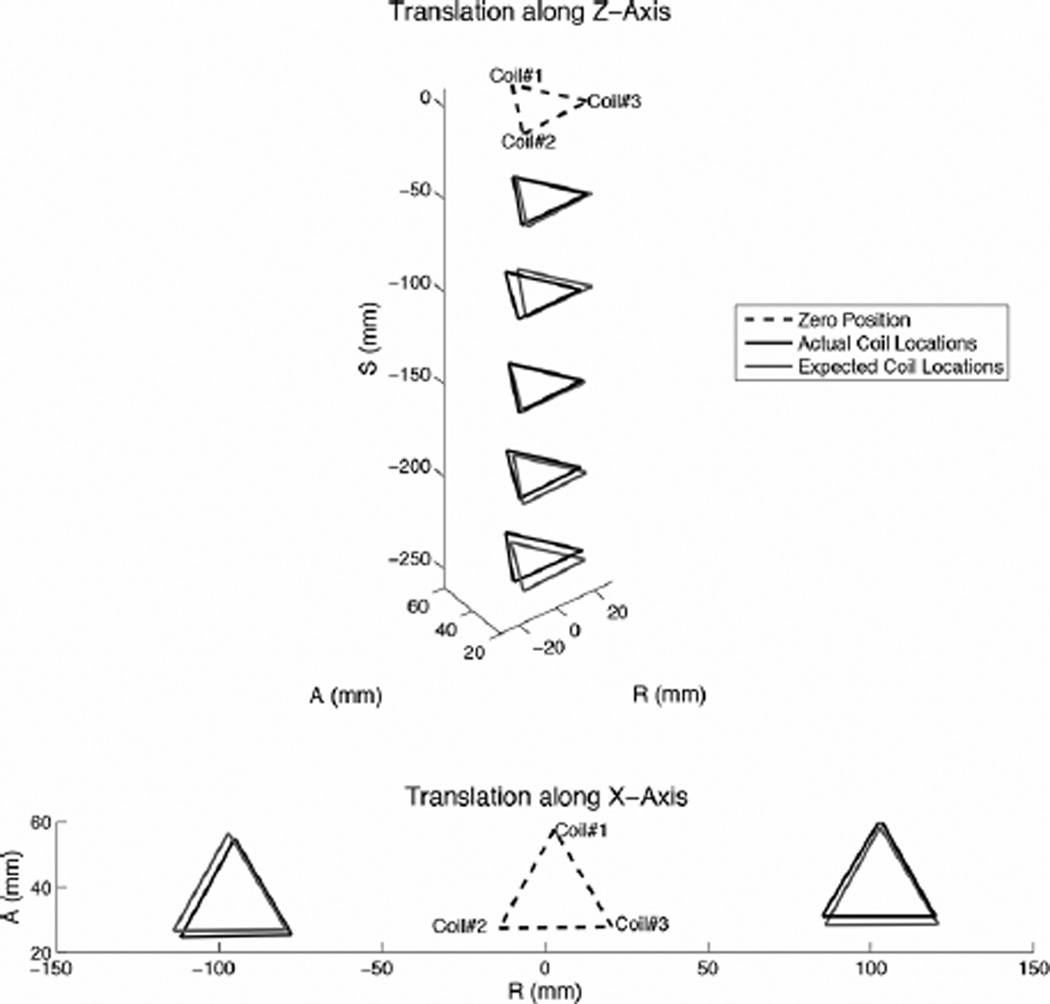

1) MR Tracking Accuracy due to Orientation

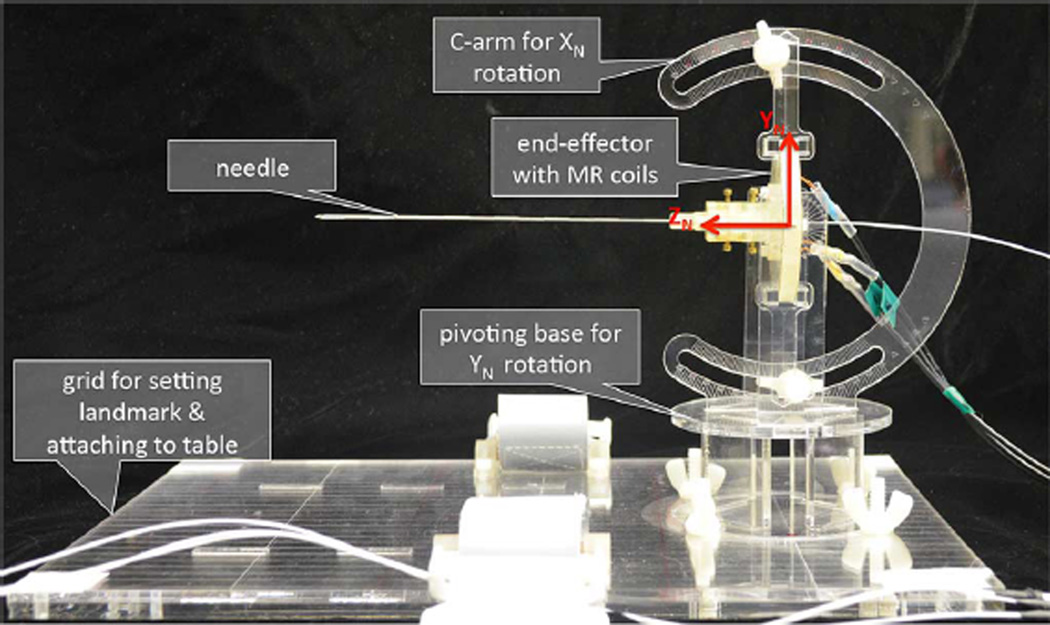

The end-effector with embedded MR tracking coils was installed on a rotating apparatus as shown in Fig. 6. The needle was attached to the end-effector, and a prostate phantom4 was placed under the needle to simulate a nearby signal source. For tracking and imaging, the apparatus was initially landmarked at the end-effector center. The apparatus had angular markings every degree to set rotations. The coils’ initial position (0° rotation) was measured with the end-effector aligned in the scanner XY plane, such that all coils were visible in a standard axial slice. Independent rotations about XN and YN were tested from −30° to +30° in 15° increments, and compared to the expected coil positions given the rotation about the initial position.

Fig. 6.

Apparatus to rotate the MR tracking plane about XN and YN.

2) MR Tracking Accuracy due to Translation From Isocenter

Next, we tested the accuracy of the tracking coils at positions farther from the isocenter, the location at which the main magnetic field is most homogeneous. The end-effector was fixed parallel to a standard axial plane, and moved from the center landmarked location 25 cm along ZN in 5 cm increments and ±10 cm along XN. The apparatus base had grid lines every 1 cm along XN and every 5 cm along ZN for translation testing.

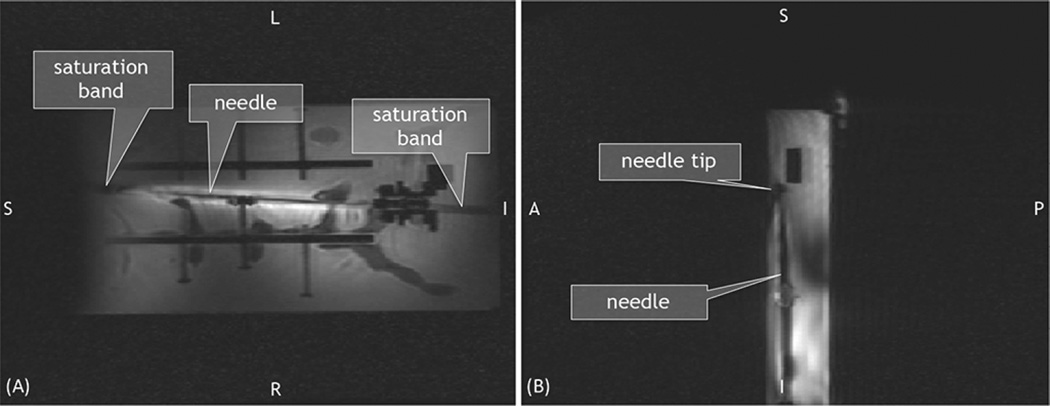

3) Scan Plane Control in Water Bath

The autonomous scan plane method was demonstrated in a water bath inside the MRI scanner (3T Signa MR750w5), so that the entire needle could be seen in the MR images. The needle base was secured in the water bath, with the MR tracking coils fixed a known distance from the needle base. The coils were outside the water bath to prevent damage from the water (Fig. 7). The needle was first imaged while straight, then bent via plastic screws and positioning nuts inside the water bath. For each position, the needle annotation coordinates, obtained using the FBG sensors and MR tracking coil data, were collected and compared to coordinates obtained from high resolution SPGR (FOV = 22 cm × 5.5 cm, size 512 × 128, TR = 5.5 ms, TE = 1.7 ms, thickness = 3 mm, spacing = 1 mm) axial images that were taken every 4 mm along the needle length using the GE scanner software. A coronal plane with a clear view of the needle was manually prescribed and later interleaved with an autonomously prescribed plane in order to demonstrate that the prescribed plane qualitatively agreed with the location of the needle. The two imaging planes were set to interleave as quickly as possible so that the spins in each plane would not have enough time to recover, and hence would generate dark signal bands, known as saturation bands, in the coronal image. Finally, the image obtained from the autonomously prescribed plane shows a clear artifact from the needle, indicating whether the FBG and tracking coil data accurately describe the location of the needle.
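The comparison between annotation coordinates and image-derived coordinates can be summarized as a root mean square error. The Python sketch below shows one way to compute it, assuming the two point sets have already been paired station by station along the needle; how the points were paired in the experiments is not specified here, and the function name is hypothetical.

```python
import math


def rms_error(estimated, reference):
    """Root mean square of the 3-D distances between paired points.

    estimated: needle annotation points in (R, A, S), e.g. from FBG + coil data.
    reference: matching points picked from the MR images, in the same order.
    """
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("point lists must be non-empty and of equal length")
    squared = [sum((e - r) ** 2 for e, r in zip(pe, pr))
               for pe, pr in zip(estimated, reference)]
    return math.sqrt(sum(squared) / len(squared))
```

With axial reference images every 4 mm along the needle, each reference point would pair with the annotation point at the same position along the needle length.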

Fig. 7.

Apparatus used in scan plane experiments to bend the needle while visualizing its entire length in MRI. MR tracking coils were fixed a known distance from the needle base.

4) Scan Plane Control in an Ex Vivo Model

In order to show clinical relevance of the system, we demonstrated scan plane control in a ventilated ex vivo porcine model. The shape sensing needle was placed inside the model’s liver. The animal was intubated and placed on a respirator such that the rib cage and internal organs moved as if in normal respiration. Due to the ventilation, the diaphragmatic excursion caused the liver to slide in the cranial-caudal direction, similar to the physiological stresses that would be on the needle during respiration, which resulted in periodic needle flexing. Scan planes were automatically prescribed to follow the needle throughout the flexing cycle. Data were gathered for two cases: release and breath hold. At breath hold, the internal pressure in the model’s lung was 30 cm H2O, which resulted in a bent needle profile. At release, air in the lungs was emptied (5 cm H2O), resulting in a straighter needle. High resolution FSPGR images (FOV = 32 cm, size 512 × 512, flip angle = 10°, TR = 2.8 ms, TE = 1.5 ms, thickness = 0.5 mm, spacing = 0 mm) through the model for the two breathing cases were taken to compare the needle position as seen in the MR images to the estimated needle profile based on the optical sensors and MR tracking coils. Imaging parameters such as higher bandwidth and lower echo time were chosen so as to minimize needle artifact while providing a clear image of the liver and internal organs. The FSPGR images were reformatted into 3-D multi-planar reconstructions (3D MPR) in OsiriX6, and points along the center of the needle artifact were chosen and compared to the needle annotation points. A supplementary video of this procedure is provided with the online article.

III. Results

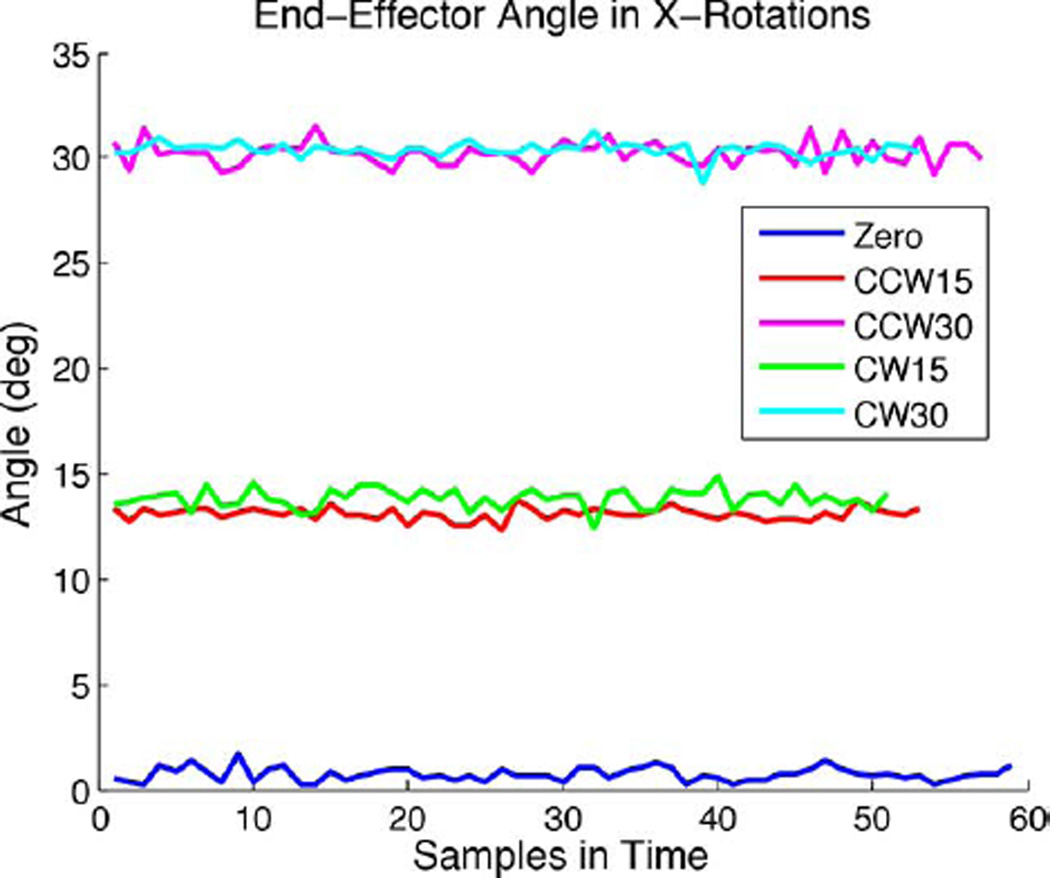

The end-effector with the needle attached was rotated and translated as described in the Methods and Materials section. At least 50 tracked points were collected per data set (approximately 30 s). During the acquisition, each component (R, A, S coordinate) of the coil positions has some uncorrelated noise. This is illustrated in Fig. 8, which shows the trace of the actual end-effector angle as measured at each sampled point for the rotations about XN.

Fig. 8.

Measured end-effector angle based on mean initial position during data acquisition for all rotation data about the X-axis.

In the rotation and translation tests, the positional error was calculated with respect to the expected coil position based on a given rotation or translation about the initial (zero) position. Positional error was defined as the distance between the measured and expected coil position.
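The error definition above can be sketched in Python as follows. The expected position is obtained by rotating the initial coil position about an axis through the landmarked isocenter, and the error is the Euclidean distance to the measured position. The percent error shown here divides by the distance of the expected position from isocenter, which is an interpretation of the percentages reported below rather than a formula stated in the text. The function names, and the alignment of the rotation axis with the scanner X-axis, are assumptions.

```python
import math


def rotate_about_x(point, angle_deg):
    """Rotate a point about the X-axis through the origin (isocenter)."""
    a = math.radians(angle_deg)
    x, y, z = point
    return (x,
            y * math.cos(a) - z * math.sin(a),
            y * math.sin(a) + z * math.cos(a))


def positional_error(measured, expected):
    """Distance between the measured and expected coil positions."""
    return math.dist(measured, expected)


def percent_error(measured, expected):
    """Positional error as a percentage of the expected distance from isocenter."""
    return 100.0 * positional_error(measured, expected) / math.dist(expected, (0.0, 0.0, 0.0))
```

For a translation test, the expected position is simply the initial position plus the commanded offset, and the same two error functions apply.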

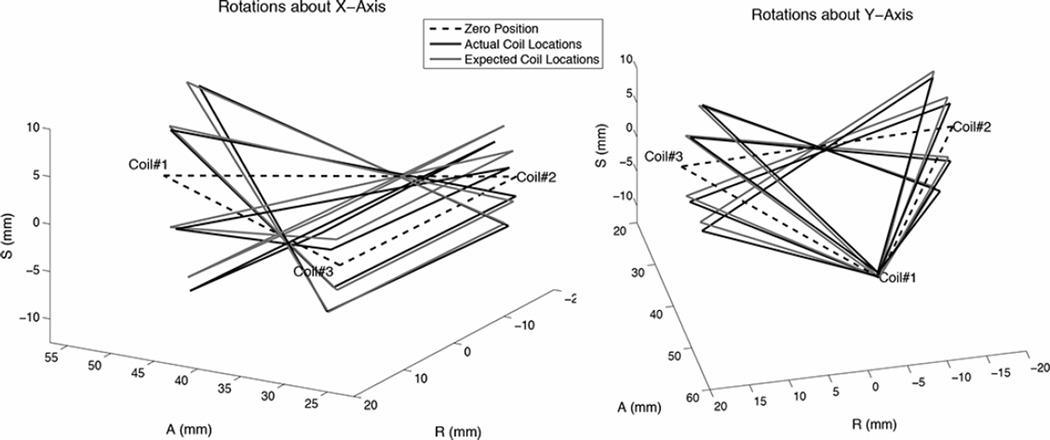

Fig. 9 shows the coil positions from the rotational tests as measured and expected using the mean coil position during data acquisition. The expected rotation was calculated based on the initial plane of the coils before rotation about the apparatus’ axes. Table I summarizes the average standard deviation and range of the measured coil positions’ (R, A, S) components for the rotation cases. The average standard deviation was 0.17 mm and the maximum standard deviation was 0.49 mm for all coils in the rotation tests. The average positional error for all coils in the rotation cases was 0.91 mm between the measured and expected positions; this corresponded to 1.20% of the expected coil positions relative to the isocenter. The maximum error was 1.92 mm or 3.09% for the rotation cases. The median difference in expected and actual angle was 0.4° and the maximum angular difference was 1.9°.

Fig. 9.

Measured coil positions and expected positions in rotation tests, in which the landmarked isocenter was through the end-effector center.

TABLE I.

Standard Deviations and Range of the Coil Positions in Rotation Tests. Units in Millimeters

| | | Coil 1 | Coil 2 | Coil 3 |
|---|---|---|---|---|
| Y Rotations | Avg. Std. Dev. | 0.13 | 0.07 | 0.29 |
| | Range | 0.43 | 0.29 | 1.22 |
| X Rotations | Avg. Std. Dev. | 0.11 | 0.05 | 0.34 |
| | Range | 0.48 | 0.15 | 1.43 |

Fig. 10 shows the translation results as measured and expected. Table II shows the average positional error for each set of coils in all translation cases. Coordinates are reported relative to isocenter, and as mentioned, the end-effector initial (zero) position was set such that all coils could be seen in a standard axial plane. For the translation tests, the average and maximum positional errors between each coil’s measured and expected coordinates were 3.50 mm (1.33%) and 5.72 mm (4.06%).

Fig. 10.

Measured coil positions and expected positions in translation tests from landmarked isocenter.

TABLE II.

Average Positional Errors Between Expected and Measured Coil Triad Positions and Average Percent Errors Relative to Isocenter for Translation Cases

| Expected Translation (cm) | Position Error (mm) | Percent Error (%) |
|---|---|---|
| −5Z | 2.83 | 3.50 |
| −10Z | 4.96 | 0.49 |
| −15Z | 1.24 | 0.29 |
| −20Z | 3.60 | 1.15 |
| −25Z | 4.98 | 1.29 |
| −10X | 2.79 | 0.42 |
| +10X | 4.07 | 2.21 |

It was hypothesized that the positional standard deviations would be smaller when a coil was less tilted from its initial alignment with the Y-axis. Therefore, under rotations about Y, coils 2 and 3 would have relatively consistent signals, and coil 1 might be slightly better due to its proximity to isocenter. For X rotations, it was hypothesized that the positional standard deviations would be greater for all coil positions at larger angular deflections. Instead, in several cases (−30° about Y, and +30°, −15°, and −30° about X), coil 2 readings were very stable, with zero measurable standard deviation, whereas coil 3 position readings generally had a higher variance than those of coils 1 and 2. This suggests that the signal-to-noise ratio (SNR) depended on individual factors regarding each coil, diode, and receiver channel circuit. Within the range tested, rotations of the coils around isocenter made little difference in the measured positions. Translations away from isocenter resulted in a larger difference between expected and measured coil locations. During testing, in order to increase SNR for the cases of Z-translation of 20 and 25 cm from isocenter, the tracking FOV was increased from 40 to 60 cm. With this adjustment, the coils were successfully tracked in all translations tested, up to 25 cm along Z and 10 cm along X away from isocenter.

The intrinsic resolution of the MR images taken during scan plane control was directly calculated from the scan parameters (field-of-view, matrix acquisition size, and slice thickness). The positional uncertainty is ± half the resolution. The uncertainties of image points in the GRE images acquired through the real-time GUI were ±0.39 mm, ±0.39 mm, and ±2.5 mm in the frequency, phase, and section directions, respectively, when FOV was 40 cm and ± (0.59, 0.59, 2.5) mm in (f, p, s) when FOV was 60 cm. The uncertainties of the positions in the high-resolution SPGR axial images were ±0.39 mm, ±0.39 mm, and ±1.5 mm in the frequency, phase, and section directions, respectively.
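
The arithmetic behind these uncertainties can be sketched as follows. The 512-point matrix and the 5 mm section thickness are assumptions: they are not stated in this section and are chosen only because they reproduce the ±0.39 mm, ±0.59 mm, and ±2.5 mm values quoted above. The function names are illustrative.

```python
def in_plane_uncertainty_mm(fov_mm, matrix_size):
    """Half of the in-plane pixel size (FOV divided by matrix size)."""
    return 0.5 * fov_mm / matrix_size

def section_uncertainty_mm(slice_thickness_mm):
    """Half of the slice thickness."""
    return 0.5 * slice_thickness_mm

# Assumed acquisition values (not given in this section of the paper).
MATRIX = 512        # assumed matrix size along frequency and phase
THICKNESS_MM = 5.0  # assumed real-time GRE section thickness

u_40 = in_plane_uncertainty_mm(400.0, MATRIX)  # FOV = 40 cm
u_60 = in_plane_uncertainty_mm(600.0, MATRIX)  # FOV = 60 cm
u_s = section_uncertainty_mm(THICKNESS_MM)

print(f"FOV 40 cm: +/-{u_40:.2f} mm in-plane, +/-{u_s:.1f} mm through-plane")
print(f"FOV 60 cm: +/-{u_60:.2f} mm in-plane, +/-{u_s:.1f} mm through-plane")
```

Under these assumptions, enlarging the tracking FOV from 40 to 60 cm coarsens the in-plane uncertainty by a factor of 1.5 while the through-plane term is unchanged.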

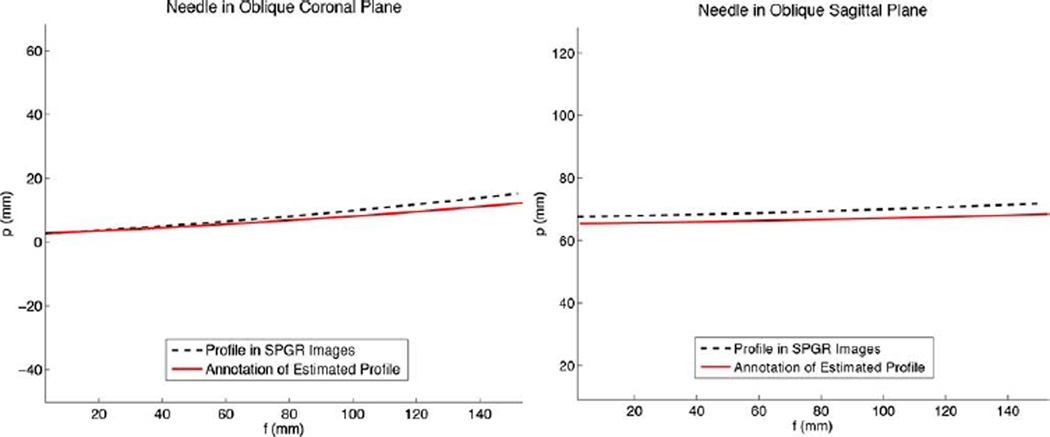

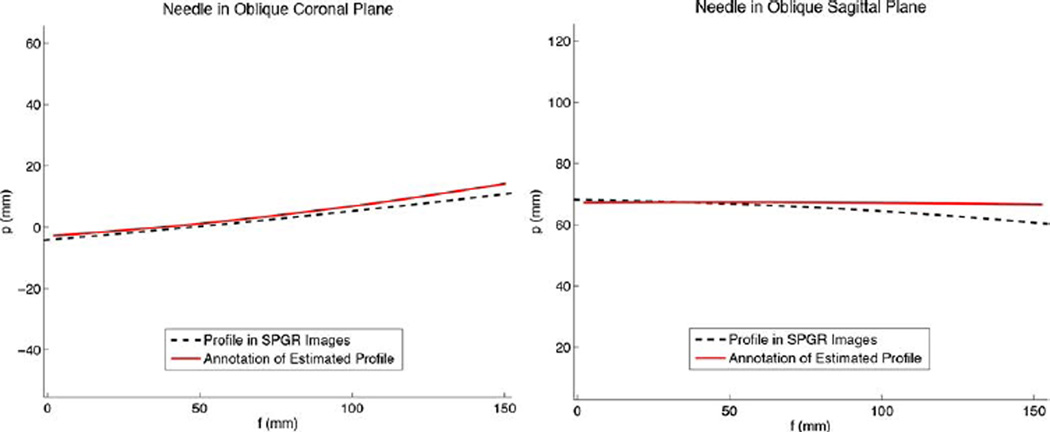

We successfully found oblique planes adjacent to the needle based on its deflected shape as estimated from the FBG sensors. Knowing the needle’s deflection, we were able to assign encoding vectors and the image center to move the scan plane as desired. For this preliminary test, half a centimeter from the tip was kept at the center of the image as a means to always keep the tool tip in view. The new scan planes and offsets were found based on the FBG sensor measurements (that estimated the needle profile) and the MR tracking coils (that estimated the needle orientation and base location). Since we used the last one centimeter of the needle tip to determine the oblique image planes, in the images acquired, this segment always appeared in the calculated oblique coronal and oblique sagittal planes.
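
One way such a prescription could be computed is sketched below. This is not the implementation used in the study: it assumes the estimated profile is available as an ordered list of points (base to tip) already expressed in scanner (R, A, S) coordinates, and it uses the scanner anterior axis as a reference to separate the two oblique planes. All names are hypothetical.

```python
import math

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def unit(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def point_from_tip(points, back_mm):
    """Walk back along the polyline from the tip by back_mm (arc length)."""
    remaining = back_mm
    for i in range(len(points) - 1, 0, -1):
        seg = sub(points[i - 1], points[i])
        length = math.sqrt(dot(seg, seg))
        if length > 0 and length >= remaining:
            return add(points[i], scale(seg, remaining / length))
        remaining -= length
    return points[0]  # profile shorter than back_mm: fall back to the base

def tip_planes(points, segment_mm=10.0, center_mm=5.0,
               reference=(0.0, 1.0, 0.0)):
    """Image center and plane normals from the distal needle segment.

    reference is the assumed anterior axis; it must not be parallel to the
    tip direction, otherwise the projection below degenerates.
    """
    tip = points[-1]
    direction = unit(sub(tip, point_from_tip(points, segment_mm)))
    center = point_from_tip(points, center_mm)
    # Gram-Schmidt: remove the part of the reference axis along the needle.
    coronal_normal = unit(sub(reference, scale(direction, dot(reference, direction))))
    sagittal_normal = cross(direction, coronal_normal)
    return {"center": center, "direction": direction,
            "coronal_normal": coronal_normal, "sagittal_normal": sagittal_normal}

# Hypothetical bent profile in mm (base at the origin, tip deflected in R).
needle = [(0.0, 0.0, 0.0), (0.0, 0.0, 50.0), (3.0, 0.0, 80.0), (8.0, 0.0, 100.0)]
planes = tip_planes(needle)
print(planes["center"], planes["direction"])
```

Both planes contain the tip direction by construction, so the distal segment lies in each image, and centering 5 mm behind the tip keeps the tip inside the field of view.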

In the water bath, the needle was bent via screws in varying amounts in both the XN ZN and YN ZN planes simultaneously, with tip deflections varying from 8 to 20 mm. Figs. 11 and 12 show the estimated needle profile compared to the profile as measured from the axial SPGR images for two different bending cases. The needle profile from the axial SPGR images was reconstructed using an image segmentation and Gaussian filter algorithm to automatically find the needle cross-section from the images of the water bath. In the smaller bend case (Fig. 11), rms error between the estimated and imaged profiles was 4.1 mm, and the tip position varied by 4.0 mm. In the larger bend case (Fig. 12), rms error between the estimated and imaged profiles was 7.1 mm, and the tip position varied by 6.3 mm. In the case of a straight needle, the rms error along the profile was 2.3 mm and tip position error was 2.7 mm.
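
For reference, the two error measures quoted above can be computed as in the following sketch. It assumes that the estimated and imaged profiles have already been resampled at corresponding stations along the needle (for example, at the same axial slice positions); how the correspondence was established in the study is not described in this section. The sample coordinates are hypothetical.

```python
import math

def rms_error(estimated, imaged):
    """Root mean square of point-to-point distances between two profiles."""
    if not estimated or len(estimated) != len(imaged):
        raise ValueError("profiles must be non-empty and of equal length")
    squared = [math.dist(e, m) ** 2 for e, m in zip(estimated, imaged)]
    return math.sqrt(sum(squared) / len(squared))

def tip_error(estimated, imaged):
    """Distance between the last (tip) points of the two profiles."""
    return math.dist(estimated[-1], imaged[-1])

# Hypothetical profiles in mm, ordered from base to tip.
estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 50.0), (4.0, 0.0, 100.0)]
imaged = [(0.0, 0.0, 0.0), (1.0, 3.0, 50.0), (4.0, 4.0, 100.0)]

profile_rms = rms_error(estimated, imaged)
tip_err = tip_error(estimated, imaged)
print(f"rms = {profile_rms:.2f} mm, tip error = {tip_err:.2f} mm")
```

Because the rms value averages over the whole profile, it can be smaller than the tip error when the base is well registered and the discrepancy grows toward the tip.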

Fig. 11.

Needle profile as estimated in real-time from the FBG sensors and MR tracking coils, compared to the needle profile as seen in high-resolution SPGR images. In this case, the needle tip was deflected approximately +10 mm primarily in the XN ZN plane.

Fig. 12.

Needle profile as estimated in real-time from the FBG sensors and MR tracking coils, compared to the needle profile as seen in high resolution SPGR images. In this case, the needle tip was deflected approximately −15 mm in the XN ZN plane and −10 mm in the YN ZN plane.

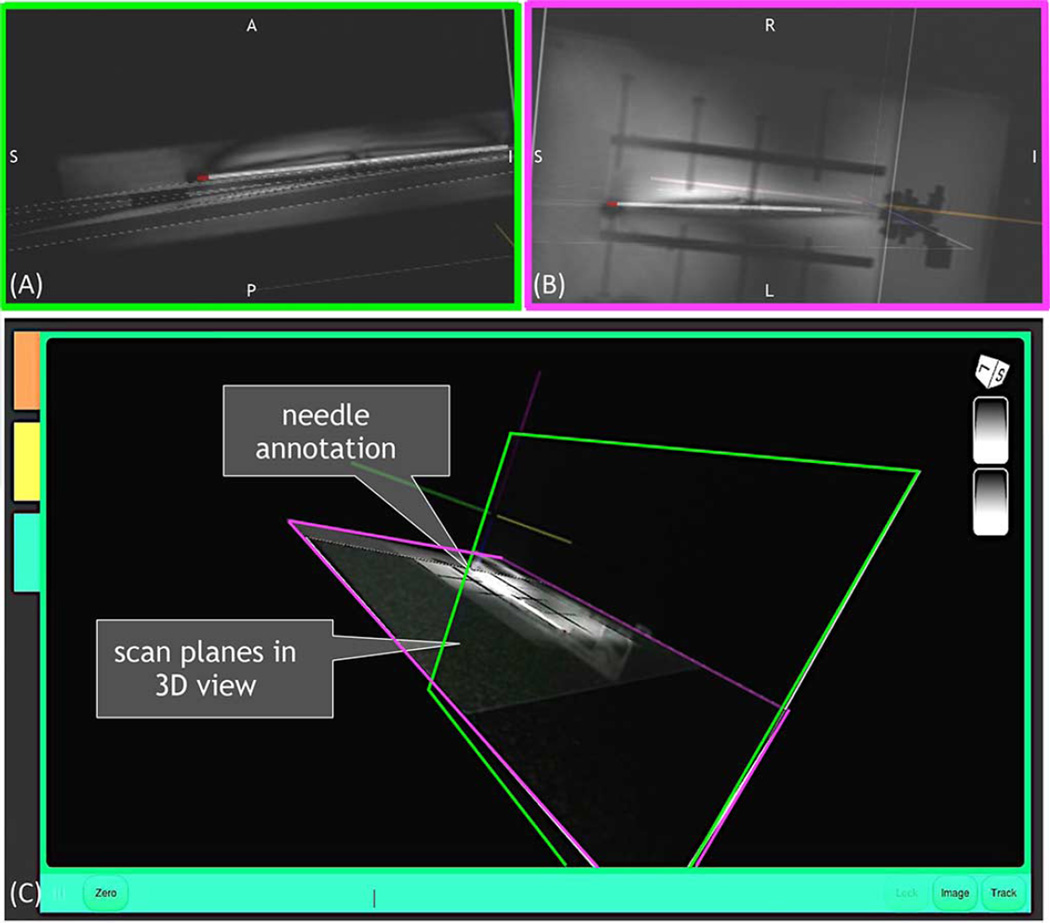

The annotated needle shape in the real-time GUI also gives insight as to how the scan plane followed the bent needle’s tip segment as expected (Fig. 13). In the 2-D images acquired from the RTHawk embedded GUI, the saturation band in the oblique coronal image can be seen, indicating the position of the interleaved oblique sagittal slice (Fig. 14).

Fig. 13.

Needle profile annotated in select views prescribed through the needle tip as displayed in 3-D on the real-time GUI: (A) oblique sagittal (green), (B) oblique coronal (purple), and (C) both oblique sagittal and oblique coronal views together.

Fig. 14.

2-D images saved from the real-time GUI showing the (A) oblique coronal and (B) oblique sagittal views automatically prescribed based on needle tip deflection. Saturation band through image (A) is caused by the interleaved image (B).

In the ex vivo test, in the breath release case, the tip position error between the needle as seen in the high resolution 3-D MPR images and the estimated needle annotation profile was 4.6 mm. In the breath hold case, the tip position error was 5.9 mm.

The average needle artifact (measured as a width across the center of the needle) in the real-time GRE and high resolution SPGR images was 4.7 mm and 4.1 mm, respectively. The average artifact at the needle tip was 5.2 mm and 6.3 mm in the real-time GRE and high resolution SPGR images. In the reformatted 3-D MPR images from the ex vivo test, the average artifact across the needle diameter was 8.8 mm. We did not attempt to estimate errors that could potentially be introduced by nonlinear gradients.

IV. Discussion

To our knowledge, this is the first effort to integrate strain sensors on a biopsy needle to monitor its bent shape in real-time, such that tool shape detection and tracking is decoupled from imaging. This is also the first report of the use of optical FBG sensors to measure mechanical strains of a needle during MR imaging for autonomous scan plane control. Our approach uses miniaturized sensing elements embedded into the interventional tool itself and does not necessitate continual scanning for tracking the needle shape.

FBG sensors are flexible, small, and light, making them ideal for integration in minimally invasive devices such as needles, probes, and catheters. In addition, the glass-fiber technology is intrinsically MRI compatible. FBGs can be further miniaturized (as small as 40 μm diameter), and can be used in interventional devices for tracking in conjunction with other imaging modalities such as ultrasound (US) and computed tomography (CT). Costs of FBGs and increasingly small optical fiber cables are dropping, enabling the use of the technology in disposable medical devices [49]. Due to the robustness of the fibers, these sensors are also easily sterilizable either by autoclave, ethylene oxide, ultra-violet radiation, or other methods [50].

The algorithms used to calculate the real-time views, based on strain measurements from the FBG sensors and tracking measurements from the RF coils, produced the expected images in planes adjacent to the needle. Visually, the images are centered as expected in the water bath tests (Fig. 13). The average rms error between the needle profile as seen in the MR images and as estimated by the sensors was 4.2 mm for bending cases up to 20 mm tip deflection; this is less than the artifact at the needle tip measured in the high-resolution SPGR images (6.3 mm). Also, in the ex vivo tests, the average tip error (5.2 mm) is much less than the needle artifact in the high-resolution reformatted images (8.8 mm). Although the high resolution images were obtained as soon as possible after the ventilator was held, some change in pressure during image acquisition may have led to larger tip position errors when comparing image data to tracking and shape-sensing data. More accurate measurement of the needle position from artifact data may be obtained from criteria established by prior studies [51]. As reported in [21], the error in shape estimation relative to the needle base calculated from the FBG sensors is submillimeter (up to 0.38 mm error for deflections up to 15 mm). Therefore, the MR tracking coils are the major source of positional error. Since the needle appears in the controlled planes as expected, and the estimated needle shape is within the needle artifact as seen in the images, this accuracy appears to be sufficient for interventional procedures.

The FBG interrogator⁷ used in these tests sampled at 4 Hz, allowing for fast updates of needle shape information. Images were obtained every 0.96 s per slice without updated orientation tracking, or 2.52 s per slice when interleaved with MR tracking. This speed seems sufficient for use in real-time interventions. However, we have the ability to shape-sense at much faster speeds (1+ kHz) with different interrogators, and play back shape-sensing data at video frame rates (60 fps). Feedback from clinicians using the prototype for phantom, ex vivo, and in vivo target tests will help inform whether the tracking update rate is sufficient.

When tracking without imaging, the sampling frequency was selected to be as quick as possible to allow for an appropriate spatial resolution (<1 mm) variation in our tracking position. When combined with imaging, the tracking sequence had to be further slowed down to allow for steady-state conditions to be reached in the imaging and tracking sequences to prevent imaging artifacts. Hence, the sequence is currently optimized for accuracy in tracking and undisturbed image quality. Future work includes optimizing the sequence for the ideal update rate for specific procedures as recommended by clinicians.

If tracking speed needs to be improved, a lower readout bandwidth could be used to compensate for the reduction in SNR that a reduced TR could cause. Our use of four Hadamard encoding directions should be sufficient to account for any off-resonance effects that can be associated with the lower bandwidth readout. Possible improvements to the peak detection algorithm include curve fitting to the expected Hadamard positions.

A possible contribution to the positional error due to the needle shape estimation is sensor drift of the nominal wavelengths (under no mechanical strain) of the FBGs. A drift in the nominal center wavelength would lead to incorrect strain measurements, and consequently, incorrect 3-D profile estimation. The amount and time period of drift are interrogator dependent and need to be further characterized. Automated passive tracking of the needle tip position has been described based on detection of the needle artifact in MR images [52], [53]. While speed and accuracy are limited by this intrinsic MR-only approach, it is not sensitive to drift. However, purely MRI-based navigation methods can introduce much larger placement errors, due to the size of the needle artifact [54]. An on-the-fly calibration method to account for drift may be useful in the future.
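
To give a sense of scale, the sketch below converts a wavelength drift into the apparent strain it would produce, using the standard FBG relation Δλ/λ0 = (1 − p_e)·ε with temperature effects ignored. The photo-elastic coefficient (0.22, a typical value for silica fiber), the 1550 nm nominal wavelength, and the 10 pm drift are assumed illustrative values, not measurements from this study.

```python
def apparent_strain(drift_nm, nominal_nm, photoelastic_coeff=0.22):
    """Strain that an unstrained grating would appear to report if its
    nominal Bragg wavelength drifted by drift_nm (temperature ignored)."""
    return drift_nm / (nominal_nm * (1.0 - photoelastic_coeff))

# Assumed values for illustration only.
NOMINAL_NM = 1550.0  # hypothetical nominal Bragg wavelength
DRIFT_NM = 0.010     # hypothetical drift of 10 pm

strain = apparent_strain(DRIFT_NM, NOMINAL_NM)
print(f"apparent strain = {strain * 1e6:.2f} microstrain")
```

Because the profile is obtained by integrating curvature derived from strain along the needle, a constant strain offset of this kind would accumulate toward the tip, which is why a recalibration step may be worthwhile.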

Errors in the MR tracking positions can be attributed to the actual setup in the rotation and translational apparatus, and to varied SNR for each coil circuit. In our experiments, the FOV for tracking is fixed to the center of the triangle formed by the three tracking coils. A smaller FOV with the same number of readouts will give better resolution of the tracked points. However, SNR is worse when the FOV is small. When the coils were more than 20 cm from isocenter, we had to increase the tracking FOV. However, this did not seem to affect accuracy of the tracked points for the translations tested. It can be assumed that the needle tip will not be more than 10 cm away from the target when tracking is desired, so the translations tested are relevant for clinical applications. During a procedure, in order to minimize large deviations in the needle annotations due to noise from the MR tracking coils (Fig. 8), filtering can be used to smooth and average several gathered coil points before calculation of the needle base position and orientation.
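
A minimal sketch of such filtering is a moving average over the most recent samples of each coil, shown below. The window length and class name are hypothetical, and other filters (for example, a median or an exponential filter) could be substituted.

```python
from collections import deque

class CoilAverager:
    """Moving average of the most recent tracked positions of one coil."""

    def __init__(self, window=5):
        # Oldest samples are discarded automatically once the window is full.
        self.samples = deque(maxlen=window)

    def update(self, position):
        """Add a new (R, A, S) sample in mm and return the current average."""
        self.samples.append(tuple(position))
        n = len(self.samples)
        return tuple(sum(p[i] for p in self.samples) / n for i in range(3))

# Hypothetical noisy samples for one coil.
averager = CoilAverager(window=2)
averager.update((0.0, 0.0, 0.0))
averager.update((2.0, 0.0, 0.0))
smoothed = averager.update((4.0, 0.0, 0.0))  # average of the last two samples
print(smoothed)
```

A longer window suppresses more of the uncorrelated noise but adds lag, so the window length would need to be balanced against the tracking update rate.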

In theory, tracking coils that are spaced further apart lead to better base position and orientation accuracy. As [55] shows, lower target registration error results from fiducial markers that surround the target. Such configurations would be difficult to achieve, as the positions of additional tracking coils would need to be known relative to the scanner bed, or the coils would need to be extended from the rigid tool base in some manner. Furthermore, there is a practical limit to spreading the coils in order to keep them in an accurate region for tracking around the scanner isocenter. Although we have constraints on the end-effector size and weight, there is room for additional MR tracking coils on the current end-effector for improved tool-to-image registration. Our current hardware setup was limited to five receiver channels (three for tracking coils and two for surface imaging coils). However, to increase tracking accuracy, a receiver box with more channels for tracking coils could be used.

It remains to be seen whether the accuracy of the needle tip estimation compared to the needle artifact is clinically beneficial. A potential experiment could be performed in phantoms with different lesion sizes to see how small a lesion we can target. Measurements of the final location of the needle can be obtained from CT scans to avoid the inherent needle tip artifacts present in MR images.

V. Conclusion

Automatic scan plane control with a sensorized needle has potential for application in many areas of needle-based minimally invasive procedures including biopsy, brachytherapy, tumor ablation with injectables (e.g., alcohol), interstitial laser thermotherapy, and cryosurgery. The sensing technology can augment current work in MRI-compatible robots with kinematic position and orientation sensing capabilities [9], [14], [32], [56] in order to provide more spatial information about the interventional tool itself. We have developed and validated a system that allows independent and accurate tracking of a flexible needle to directly drive the scanner’s imaging plane during MRI-guided interventions. The average positional error of the estimated needle as compared to high resolution MR images in the water bath tests was 4.2 mm, which is comparable to the needle artifact and within the size of clinically significant tumors to be expected during a procedure.

We will investigate whether there is beneficial synergy in using needle artifact data [52] to re-calibrate the optical position model without removing the needle from the patient. An extended Kalman filter (EKF) approach may be used to take the measured needle base position and orientation (from an instrumented holder), the estimated needle profile (from the FBG data), and the needle as it appears in the MR images, to update the optical model and maintain its accuracy during the procedure. User interface testing will confirm design assumptions on the preferred method to follow and display the tool in the MR images.

Supplementary Material

Acknowledgments

The authors would like to thank J. H. Bae for help running experiments with the shape-sensing needle; Dr. P. W. Worters for assistance in initial software methods for scan plane control; Dr. M. Alley for consultation on the GE Signa system and transforms concerning scanner and image coordinate frames; Dr. R. Black and Dr. B. Moslehi (IFOS) for sharing their expertise in optical sensing technologies; and Dr. P. Renaud for technical assistance in the writing of this paper.

This work was supported in part by the National Institutes of Health under Grant P01 CA159992 and in part by the National Science Foundation Graduate Research Fellowship Program. Asterisk indicates corresponding author.

Footnotes

1. Model MR1815, Bracco Diagnostics, Princeton, NJ, USA.

2. The protocol was approved by our Institutional Animal Care and Use Committee.

3. AquaGems, LLC, Idaho Falls, ID, USA.

4. Model 053-MM, Computerized Imaging Reference Systems, Inc., Norfolk, VA, USA.

5. GE Healthcare, Waukesha, WI, USA.

6. Pixmeo, Geneva, Switzerland.

7. DSense, IFOS, Santa Clara, CA, USA.

This paper has supplementary downloadable material available at http://ieeexplore.ieee.org, provided by the authors.

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org

Contributor Information

Santhi Elayaperumal, Center for Design Research, Department of Mechanical Engineering, Stanford University, Stanford, CA 94305 USA, santhie@stanford.edu.

Juan Camilo Plata, Department of Radiology, Stanford University, Stanford, CA 94305 USA, jplata@stanford.edu.

Andrew B. Holbrook, Department of Radiology, Stanford University, Stanford, CA 94305 USA, aholbrook@stanford.edu

Yong-Lae Park, Robotics Institute, Carnegie Mellon University, Pittsburgh, PA 15213 USA, ylpark@cs.cmu.edu.

Kim Butts Pauly, Department of Radiology, Stanford University, Stanford, CA, 94305 USA, kbpauly@stanford.edu.

Bruce L. Daniel, Department of Radiology, Stanford University, Stanford, CA 94305 USA, bdaniel@stanford.edu

Mark R. Cutkosky, Center for Design Research, Department of Mechanical Engineering, Stanford University, Stanford, CA 94305 USA, cutkosky@stanford.edu.

References

- 1. Smith-Bindman R, Miglioretti DL, Larson EB. Rising use of diagnostic medical imaging in a large integrated health system. Health Affairs (Project Hope) 2008 Jan;27(6):1491–1502. doi: 10.1377/hlthaff.27.6.1491.

- 2. Semelka RC, Armao DM, Elias J, Huda W. Imaging strategies to reduce the risk of radiation in CT studies, including selective substitution with MRI. J. Magn. Reson. Imag. 2007 May;25(5):900–909. doi: 10.1002/jmri.20895.

- 3. Haider MA, van der Kwast TH, Tanguay J, Evans AJ, Hashmi A-T, Lockwood G, Trachtenberg J. Combined T2-weighted and diffusion-weighted MRI for localization of prostate cancer. Am. J. Roentgenol. 2007 Aug;189(2):323–328. doi: 10.2214/AJR.07.2211.

- 4. Kozlowski P, Chang SD, Jones EC, Berean KW, Chen H, Goldenberg SL. Combined diffusion-weighted and dynamic contrast-enhanced MRI for prostate cancer diagnosis-correlation with biopsy and histopathology. J. Magn. Reson. Imag. 2006 Jul;24(1):108–113. doi: 10.1002/jmri.20626.

- 5. Ahmed HU, Kirkham A, Arya M, Illing R, Freeman A, Allen C, Emberton M. Is it time to consider a role for MRI before prostate biopsy? Nature Rev. Clin. Oncol. 2009;6(4):197–206. doi: 10.1038/nrclinonc.2009.18.

- 6. Comeau RM, Sadikot AF, Fenster A, Peters TM. Intraoperative ultrasound for guidance and tissue shift correction in image-guided neurosurgery. Med. Phys. 2000 Apr;27(4):787. doi: 10.1118/1.598942.

- 7. Hartkens T, Hill DLG, Castellano-Smith AD, Hawkes DJ, Maurer CR, Martin AJ, Hall WA, Liu H, Truwit CL. Measurement and analysis of brain deformation during neurosurgery. IEEE Trans. Med. Imag. 2003 Jan;22(1):82–92. doi: 10.1109/TMI.2002.806596.

- 8. Hausleiter J, Meyer T, Hermann F, Hadamitzky M, Krebs M, Gerber TC, McCollough C, Martinoff S, Kastrati A, Schömig A, Achenbach S. Estimated radiation dose associated with cardiac CT angiography. J. Am. Med. Assoc. 2009 Feb;301(5):500–507. doi: 10.1001/jama.2009.54.

- 9. Sutherland GR, Latour I, Greer AD. Integrating an image-guided robot with intraoperative MRI: A review of the design and construction of neuroArm. IEEE Eng. Med. Biol. Mag. 2008 Jan;27(3):59–65. doi: 10.1109/EMB.2007.910272.

- 10. Santos JM, Wright GA, Pauly JM. Flexible real-time magnetic resonance imaging framework. Proc. Annu. Int. Conf. IEEE EMBS. 2004 Jan;(2):1048–1051. doi: 10.1109/IEMBS.2004.1403343.

- 11. Rickers C, et al. Applications of magnetic resonance imaging for cardiac stem cell therapy. J. Intervent. Cardiol. 2004 Feb;17(1):37–46. doi: 10.1111/j.1540-8183.2004.01712.x.

- 12. Guttman MA, Ozturk C, Raval AN, Raman VK, Dick AJ, DeSilva R, Karmarkar P, Lederman RJ, McVeigh ER. Interventional cardiovascular procedures guided by real-time MR imaging: An interactive interface using multiple slices, adaptive projection modes and live 3-D renderings. J. Magn. Reson. Imag. 2007 Dec;26(6):1429–1435. doi: 10.1002/jmri.21199.

- 13. Dimaio S, Samset E, Fischer G, Iordachita I, Fichtinger G, Jolesz F, Tempany C. Dynamic MRI scan plane control for passive tracking of instruments and devices. Proc. MICCAI. 2007:50–58. doi: 10.1007/978-3-540-75759-7_7.

- 14. Krieger A, Metzger G, Fichtinger G, Atalar E, Whitcomb LL. A hybrid method for 6-DOF tracking of MRI-compatible robotic interventional devices. Proc. Int. Conf. Robot. Automat. 2006:3844–3849.

- 15. Abolhassani N, Patel R, Moallem M. Needle insertion into soft tissue: A survey. Med. Eng. Phys. 2007 May;29(4):413–431. doi: 10.1016/j.medengphy.2006.07.003.

- 16. Taschereau R, Pouliot J, Roy J, Tremblay D. Seed misplacement and stabilizing needles in transperineal permanent prostate implants. Radiother. Oncol. 2000 Apr;55(1):59–63. doi: 10.1016/s0167-8140(00)00162-6.

- 17. Abolhassani N, Patel RV. Deflection of a flexible needle during insertion into soft tissue. Proc. 28th Annu. Int. Conf. IEEE EMBS. 2006 Jan:3858–3861.

- 18. Blumenfeld P, Hata N, DiMaio S, Zou K, Haker S, Fichtinger G, Tempany C. Transperineal prostate biopsy under magnetic resonance image guidance: A needle placement accuracy study. J. Magn. Reson. Imag. 2007;26:688–694. doi: 10.1002/jmri.21067.

- 19. Kataoka H, Washio T, Audette M, Mizuhara K. A model for relations between needle deflection, force, and thickness on needle penetration. Proc. MICCAI. 2001:966–974.

- 20. DiMaio SP, Salcudean SE. Interactive simulation of needle insertion models. IEEE Trans. Biomed. Eng. 2005 Jul;52(7):1167–1179. doi: 10.1109/TBME.2005.847548.

- 21. Park Y-L, Elayaperumal S, Daniel B, Ryu SC, Shin M, Savall J, Black RJ, Moslehi B, Cutkosky MR. Real-time estimation of 3-D needle shape and deflection for MRI-guided interventions. IEEE-ASME Trans. Mech. 2010 Dec;15(6):906–915. doi: 10.1109/TMECH.2010.2080360.

- 22. Derbyshire JA, Wright GA, Henkelman RM, Hinks RS. Dynamic scan-plane tracking using MR position monitoring. J. Magn. Reson. Imag. 1998;8(4):924–932. doi: 10.1002/jmri.1880080423.

- 23. Dumoulin CL, Souza SP, Darrow RD. Real-time position monitoring of invasive devices using magnetic resonance. Magn. Reson. Med. 1993;29:411–415. doi: 10.1002/mrm.1910290322.

- 24. Hushek SG, Fetics B, Moser RM, Hoerter NF, Russel LJ, Roth A, Polenur D, Nevo E. Initial clinical experience with a passive electromagnetic 3-D locator system. Proc. 5th Intervent. MRI Symp. 2004:73–74.

- 25. Wilson E, Yaniv Z, Lindisch D, Cleary K. A buyer’s guide to electromagnetic tracking systems for clinical applications. Proc. SPIE. 2008;6918:69182B-1–69182B-12.

- 26. Kochavi E, Goldsher D, Azhari H. Method for rapid MRI needle tracking. Magn. Reson. Med. 2004;51:1083–1087. doi: 10.1002/mrm.20065.

- 27. Leung DA, Debatin JF, Wildermuth S, Mckinnon GC, Holtz D, Dumoulin CL, Darrow RD, Hofmann E, von Schulthess GK. Intravascular MR tracking catheter: Preliminary experimental evaluation. Am. J. Roentgenol. 1995 May;164:1265–1270. doi: 10.2214/ajr.164.5.7717244.

- 28. Wacker FK, Elgort D, Hillenbrand CM, Duerk JL, Lewin JS. The catheter-driven MRI scanner: A new approach to intravascular catheter tracking and imaging-parameter adjustment for interventional MRI. Am. J. Roentgenol. 2004 Aug;183:391–395. doi: 10.2214/ajr.183.2.1830391.

- 29. Qing K, Pan L, Fetics B, Wacker FK, Valdeig S, Philip M, Roth A, Nevo E, Kraitchman DL, van der Kouwe AJ, Lorenz CH. A multi-slice interactive real-time sequence integrated with the EndoScout tracking system for interventional MR guidance. Proc. Int. Soc. Mag. Reson. Med. 2010.

- 30. Schenck JF. The role of magnetic susceptibility in magnetic resonance imaging: MRI magnetic compatibility of the first and second kinds. Med. Phys. 1996;23:815–850. doi: 10.1118/1.597854.

- 31. Kazanzides P, Fichtinger G, Hager GD, Okamura AM, Whitcomb LL, Taylor RH. Surgical and interventional robotics: Core concepts, technology, and design. IEEE Robot. Automat. Mag. 2008;15:122–130. doi: 10.1109/MRA.2008.926390.

- 32. Fischer GS, Iordachita I, Csoma C, Tokuda J, Dimaio SP, Tempany CM, Hata N, Fichtinger G. MRI-compatible pneumatic robot for transperineal prostate needle placement. IEEE-ASME Trans. Mech. 2008;13:295–305. doi: 10.1109/TMECH.2008.924044.

- 33. Fei B, Duerk JL, Boll DT, Lewin JS, Wilson DL. Slice-to-volume registration and its potential application to interventional MRI-guided radio-frequency thermal ablation of prostate cancer. IEEE Trans. Med. Imag. 2003 Apr;22(4):515–525. doi: 10.1109/TMI.2003.809078.

- 34. Cleary K, Peters TM. Image-guided interventions: Technology review and clinical applications. Annu. Rev. Biomed. Eng. 2010 Aug;12:119–142. doi: 10.1146/annurev-bioeng-070909-105249.

- 35. Park Y-L, Ryu SC, Black RJ, Chau KK, Moslehi B, Cutkosky MR. Exoskeletal force-sensing end-effectors with embedded optical fiber-Bragg-grating sensors. IEEE Trans. Robot. 2009 Dec;25(6):1319–1331.

- 36. Amano M, Okabe Y, Takeda N, Ozaki T. Structural health monitoring of an advanced grid structure with embedded fiber Bragg grating sensors. Structural Health Monitor. 2007;6(4):309–324.

- 37. Iordachita I, Sun Z, Balicki M, Kang JU, Phee SJ, Handa J, Gehlbach P, Taylor R. A sub-millimetric, 0.25 mN resolution fully integrated fiber-optic force-sensing tool for retinal microsurgery. Int. J. CARS. 2009;4(4):383–390. doi: 10.1007/s11548-009-0301-6.

- 38. Ledermann C, Hergenhan J, Weede O, Woern H. Combining shape sensor and haptic sensors for highly flexible single port system using Fiber Bragg sensor technology. IEEE/ASME Int. Conf. Mechatron. Embed. Syst. Appl. 2012 Jul:196–201.

- 39. Mishra V, Singh N, Tiwari U, Kapur P. Fiber grating sensors in medicine: Current and emerging applications. Sensor Actuat A-Phys. 2011 Jun;167(2):279–290.

- 40. Park Y-L, Black RJ, Moslehi B, Cutkosky MR, Elayaperumal S, Daniel B, Yeung A, Sotoudeh V, inventors. Steerable shape sensing biopsy needle and catheter. U.S. Patent 8 649 847. 2014 Feb 11.

- 41. Elayaperumal S, Bae JH, Christensen D, Cutkosky MR, Daniel BL, Black RJ, Costa JM. MR-compatible biopsy needle with enhanced tip force sensing. Proc. IEEE World Haptics Conf. 2013:109–114. doi: 10.1109/WHC.2013.6548393.

- 42. Sun Z, Balicki M, Kang J, Handa J, Taylor R, Iordachita I. Development and preliminary data of novel integrated optical micro-force sensing tools for retinal microsurgery. Proc. ICRA. 2009:1897–1902.

- 43. Zhang L, Qian J, Zhang Y, Shen L. On SDM/WDM FBG sensor net for shape detection of endoscope. Proc. IEEE Int. Conf. Mechatron. Automat. 2005;4:1986–1991.

- 44. Timoshenko S, Young D. Engineering Mechanics. 4th ed. New York: McGraw-Hill; 1956.

- 45. Dumoulin CL, Mallozzi RP, Darrow RD, Schmidt EJ. Phase-field dithering for active catheter tracking. Magn. Reson. Med. 2010 May;63(5):1398–1403. doi: 10.1002/mrm.22297.

- 46. Arun KS, Huang TS, Blostein SD. Least-squares fitting of two 3-D point sets. IEEE Trans. Pattern Anal. Mach. Intell. 1987 Sep;9(5):698–700. doi: 10.1109/tpami.1987.4767965.

- 47. Horn BKP, Hilden HM, Negahdaripour S. Closed-form solution of absolute orientation using orthonormal matrices. J. Opt. Soc. Am. A. 1988;5(7):1127–1135.

- 48. Horn BKP. Closed-form solution of absolute orientation using unit quaternions. J. Opt. Soc. Am. A. 1987 Apr;4:629–642.

- 49. Méndez A. Fiber Bragg grating sensors: A market overview. Proc. SPIE. 2007;6619:661905-1–661905-6.

- 50. Zen Karam L, Franco AP, Tomazinho P, Kalinowski HJ. Validation of a sterilization methods in FBG sensors for in vivo experiments. Proc. Latin Am. Opt. Photon. Conf. Washington, D.C.: 2012. LT2A.7.

- 51. DiMaio SP, Kacher D, Ellis R, Fichtinger G, Hata N, Zientara G, Panych LP, Kikinis R, Jolesz FA. Needle artifact localization in 3T MR images. Med. Meets Virt. Real. 14: Accelerat. Change Healthcare: Next Medical Toolkit. 2005:120–125.

- 52. De Oliveira A, Rauschenberg J, Beyersdorff D, Semmler W, Bock M. Automatic passive tracking of an endorectal prostate biopsy device using phase-only cross-correlation. Magn. Reson. Med. 2008;59(5):1043–1050. doi: 10.1002/mrm.21430.

- 53. Krafft AJ, Zamecnik P, Maier F, de Oliveira A, Hallscheidt P, Schlemmer H-P, Bock M. Passive marker tracking via phase-only cross correlation (POCC) for MR-guided needle interventions: Initial in vivo experience. Physica Medica. 2013 Nov;29(6):607–614. doi: 10.1016/j.ejmp.2012.09.002.

- 54. Dimaio SP, Fischer GS, Maker SJ, Hata N, Iordachita I, Tempany CM, Kikinis R, Fichtinger G. A system for MRI-guided prostate interventions. Proc. BioRob. 2006:68–73.

- 55. Fitzpatrick JM, West JB, Maurer CR. Predicting error in rigid-body point-based registration. IEEE Trans. Med. Imag. 1998 Oct;17(5):694–702. doi: 10.1109/42.736021.

- 56. Song D, Petrisor D, Muntener M, Mozer P, Vigaru B, Patriciu A, Schar M, Stoianovici D. MRI-compatible pneumatic robot (MRBot) for prostate brachytherapy: Preclinical evaluation of feasibility and accuracy. Brachytherapy. 2008;7(2):177–178.
