Abstract

In the past few decades, prevention scientists have developed and tested a range of interventions with demonstrated benefits for child and adolescent cognitive, affective, and behavioral health. These evidence-based interventions offer the promise of population-level benefit if they are accompanied by findings from implementation science that facilitate adoption, widespread implementation, and sustainment. Though there have been notable examples of successful efforts to scale up interventions, more work is needed to optimize benefit. The traditional pathway from intervention development and testing to implementation has served the research community well, allowing for a systematic advance of evidence-based interventions that appear ready for implementation. However, progress has been limited by the assumption that evidence generation must be complete prior to implementation. This assumption sets up a challenging dichotomy between fidelity and adaptation, and limits the science of adaptation to findings from randomized trials of adapted interventions. The field can do better. This paper argues for the development of strategies to advance the science of adaptation in the context of implementation, strategies that would more comprehensively describe the needed fit between interventions and their settings and embrace opportunities for ongoing learning about optimal intervention delivery over time. Efforts to build the resulting adaptome (pronounced “adapt-ohm”) will include the construction of a common data platform that houses systematically captured information about variations in the delivery of evidence-based interventions across multiple populations and contexts, and that provides feedback to intervention developers as well as to the implementation research and practice communities. Finally, the article identifies next steps to jumpstart development of the adaptome data platform.

Introduction

Current Progress on Advancing Prevention Science

Since the landmark IOM report on prevention in 1994,1 a tremendous amount of progress has been made in building the evidence base for effective approaches to health promotion and disease prevention that improve child and adolescent behavioral health. Many evidence-based practices have been developed, tested, and chronicled in repositories such as the National Registry of Evidence-based Programs and Practices (www.nrepp.samhsa.gov), reviewed in the Community Guide (www.thecommunityguide.org), and supported through targeted funding in federal, state, and local healthcare and education systems.2 Although many of these intervention repositories include materials, tools, and resources, information about how such interventions have been used and adapted in real-world settings is scarce.

In the 2010 Patient Protection and Affordable Care Act, prevention was prioritized amid large-scale health system reform, and calls to fully fund prevention services within insurance plans suggest greater access to prevention and health promotion than in the past. As a follow-up to the 1994 report,1 the IOM convened an expert panel3 to develop recommendations that would push prevention science, policy, and practice into a new phase, one focused on implementing the evidence-based interventions developed in the decade and a half since the original report.

In the intervening 15 years, the field benefited from a number of landmark implementation studies, particularly those that tested models of community-level decision making about integrating effective prevention interventions within education, social service, and health-oriented agencies. Hawkins et al. (2009)4 developed and tested the Communities That Care intervention, which mapped community-level needs for prevention and health promotion to a variety of evidence-based interventions and offered support for implementing interventions that met local needs. Spoth and colleagues’ (2011)5 PROmoting School-community-university Partnerships to Enhance Resilience (PROSPER) model made similar connections through state extension agencies, resulting in a systematic approach to implementing an evidence-based system of care. Additional studies examined the implementation and scale-up of individual-level prevention interventions, such as the scale-up of The Incredible Years® by Webster-Stratton and McCoy6 and the spread of the Good Behavior Game by Kellam and colleagues.7

Despite this notable progress, the 2009 IOM report3 called for increased action on the implementation and sustainability of evidence-based prevention and health promotion interventions for children and adolescents, and growth in the capacity of the research community to conduct the next generation of research at the intersection of implementation and prevention sciences.

Current Limitations on Implementation of Evidence-Based Interventions

Although progress has been made in advancing the science of implementation, the scientific community too often follows a linear, static, and simplified model of translating research into practice, one that overlooks the complexity of the pathways that better characterize research-to-practice processes.8 Under this traditional model of intervention development, the optimal path from research to practice proceeds linearly from intervention development to efficacy to effectiveness to implementation. As a result, the field reifies a set of assumptions that limit what is learned from implementing evidence-based approaches to prevention and limit the degree to which the field seeks to enhance the fit between evidence-based interventions and delivery settings.9 Below, the authors spotlight three key assumptions that have not served prevention and implementation sciences well, and offer them as the rationale for the alternative concept and associated data platform proposed herein.

Program Drift

The first assumption is that deviation from a manualized intervention (i.e., program drift) will result in lower impact on patient- or client-level outcomes.9 This assumption arises from a related belief that, after efficacy and effectiveness trials, an evidence-based prevention intervention is sufficiently understood and codified to have reached its optimal level of impact. Following this logic, each component of the intervention is deemed necessary for all recipients, and any adjustment in what is delivered or how it is delivered is feared to have negative consequences. Although the field recognizes that this view is overly simplistic, and that it may be uncertainty rather than adverse effects that the field is trying to avoid, the designation of manualized interventions as evidence-based practices ultimately encourages a rigid view of fidelity. This designation reduces opportunities to learn from adaptations of evidence-based interventions that produce improvements beyond what is expected. There has been recent discussion of identifying “exceptional responders,” individuals whose outcomes far exceed those of the average recipient of a specific intervention.10 Perhaps an equivalent drive toward identifying “exceptional adaptations” might usefully refine the conceptualization of evidence-based practices.

Permanence of Evidence Base

The second assumption is that once evidence is established for a given intervention, the field has as much knowledge as it needs about that intervention and can safely proceed to implementation. The problem, however, is that individuals who participate in trials are a small (and likely unrepresentative) sample of the full population of individuals who could benefit from the intervention. For example, even if the field has a total of 30,000 participants across 30 prevention trials, full implementation of the intervention might reach hundreds of thousands or even millions of people. Given the methodologic limitations inherent in intervention trials, including selection bias and over-reliance on convenience rather than random sampling, those people may respond quite differently than the 30,000 trial participants. The field should assume that the evidence base is ever evolving, and that exposure to the intervention following implementation can refine understanding of how well the intervention works.

In addition, the field needs to anticipate that new, better, and more cost-effective interventions will be developed and should replace existing evidence-based practices. For example, given the long lag between the development of an evidence-based intervention and its implementation, modes of intervention delivery will change and need to be accounted for over time. Riley et al. (2015)11 capture this dynamism with regard to technologic platforms, and raise the concern that interventions may become obsolete before they are ready to be scaled up (e.g., interventions delivered via PDAs or pagers versus text messaging and smartphones; interventions delivered via CD-ROM versus a secure Internet site or portal). Similarly, Mohr and colleagues (2015)12 propose trials of intervention principles rather than “locked-down” interventions. Researchers can better design for the long-term viability of prevention interventions if they expect that adaptations will need to occur and plan for them as part of development, testing, and implementation.

Dissemination and Implementation “Comes After Everything Else”

The third and final assumption worth challenging is that dissemination and implementation come at the tail end of the pathway from intervention development to evidence. There is certainly merit in the argument that the field should establish the evidence base for a prevention intervention before advocating its widespread use. The challenge lies in over-interpreting this maxim, so that the field does not consider dissemination and implementation until efficacy and effectiveness have been established, and fails to develop interventions that build on existing resources and capture local knowledge to enhance intervention-setting fit.13 When this assumption is reified, the result is interventions developed without early consideration of whether they can be broadly delivered, interventions that do not meet the needs of individuals excluded from the original efficacy or effectiveness trials, and interventions that do not fit the settings in which they could reach the target populations.

The consequence of these assumptions is a conceptual divide between fidelity and adaptation. Indeed, one of the key outcomes of implementation is that of fidelity, the degree to which an intervention is delivered as designed.14,15 Fidelity measures are developed for the purpose of monitoring and maintaining integrity to the intervention, often focusing predominantly on the “core components” or “active ingredients” of the intervention—elements that are assumed to be necessary to lead to positive patient- or client-level outcomes.16 Intervention developers often designate components as “core” based on the underlying behavior change theory of the intervention,16 given challenges with conducting fully factorial trials to test the relative impact of each intervention component. Questions remain as to whether near-perfect fidelity will always result in optimal patient-level outcomes, but conventional wisdom still encourages the “one-size-fits-all” approach to evidence-based practice delivery, rather than allowing—or even encouraging—adaptation within the context of implementation.
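Fidelity can be operationalized in many ways. As a minimal sketch, assuming (beyond anything stated here) that fidelity is scored as the share of designated core components delivered as designed, with every core component weighted equally, a session-level score might be computed as follows. The function name and component names are hypothetical.

```python
def fidelity_score(core_components, delivered_as_designed):
    """Return the share of core components delivered as designed (0.0 to 1.0).

    Hypothetical operationalization: each core component counts equally,
    and non-core (adaptable) elements are ignored entirely.
    """
    core = set(core_components)
    if not core:
        raise ValueError("at least one core component is required")
    # Only components designated as core contribute to the score.
    delivered_core = core & set(delivered_as_designed)
    return len(delivered_core) / len(core)


# Hypothetical core components for an illustrative prevention program.
CORE = ["goal_setting", "skills_practice", "parent_session", "booster_session"]

# A resource-limited site that dropped the booster session and added a
# locally developed icebreaker (a non-core addition, so it is not scored).
session = ["goal_setting", "skills_practice", "parent_session", "icebreaker"]
print(fidelity_score(CORE, session))
```

Note that the locally added icebreaker does not move the score at all: a measure built only around core components records what was dropped but not what was added, and says nothing about whether either change affected outcomes.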

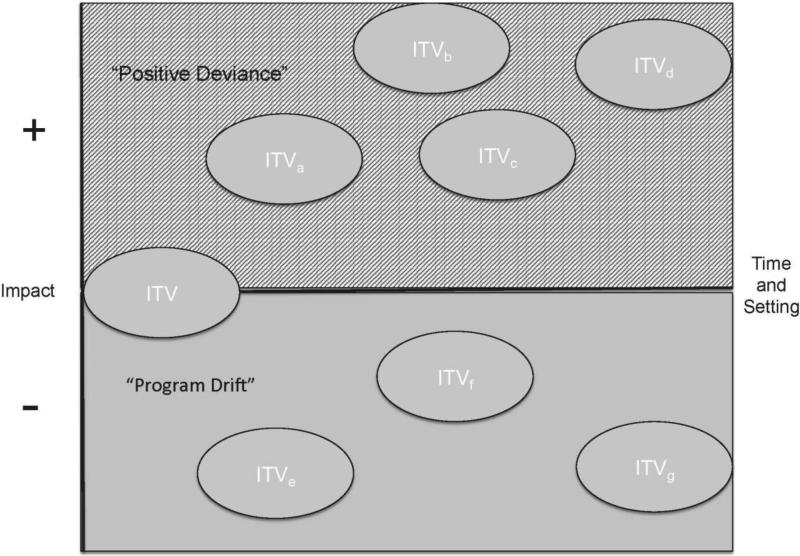

From these three assumptions, the field loses the opportunity to fully explore the range of ways in which adaptation can affect patient outcomes, both positive and negative (Figure 1), and usually limits adaptation during implementation, fearing that adaptation will have a deleterious effect on patient outcomes.

Figure 1.

Association between intervention adaptation and impact.

Note: ITV, intervention; Time and Setting = variability of intervention characteristics over time and setting. The subscript refers to an adapted version of the same base intervention.

Advancing the Science of Intervention Adaptation

There is ample documentation of mismatches among interventions, the populations they target, the communities they serve, and the service systems where they are delivered. Such mismatches can arise from multiple ways in which the context and target population differ from those of the original intervention testing,17 including age, race, ethnicity, culture, organization, language, accessibility, dosage, intensity of intervention, staffing, and resource limitations.

Frequently, the scientific response is to start with a given intervention, identify discordance between that intervention and the context or population being targeted, and create an adapted version of that intervention that is hypothesized to fit the new circumstances better. The adapted intervention is then studied in a new effectiveness trial, compared either with the original intervention, an alternative intervention, or care as usual. Although these adapted intervention studies add to the evidence base and expand the reach of evidence-based practices, they do little to address a more complex reality: evidence-based practices are being adapted all the time,17–20 and understanding of the impact of those ongoing adaptations on implementation and individual outcomes remains minimal.

The field has an enormous opportunity within the context of dissemination and implementation research to elucidate a full science of intervention adaptation. Rather than assuming that adaptation of a manualized intervention is at odds with good implementation, the field can systematically collect information on the impact of adaptation on individuals, organizations, and communities, and use this information both to extend knowledge about implementing evidence-based practices and to improve the evidence-based practices themselves on an ongoing basis. For example, a recent Robert Wood Johnson Foundation initiative has awarded several grants to researchers to systematically study local adaptation of evidence-based practices.21

This approach may be especially appropriate for multicomponent prevention and health promotion interventions, scores of which have been implemented in thousands of settings. The Blueprints for Violence Prevention,22 the National Registry of Evidence-based Programs and Practices (www.nrepp.samhsa.gov), CDC's Effective Interventions: HIV Prevention that Works (www.effectiveinterventions.org; Collins et al.23), and the National Cancer Institute's Research-Tested Intervention Programs (http://rtips.cancer.gov/rtips/index.do) are repositories of evidence-based preventive interventions available to settings and populations with relevant behavioral health needs. How can the field harness all of this local implementation (and likely adaptation) to learn more about the effectiveness and optimization of evidence-based practices than would ever be possible through efficacy and effectiveness trials?

Developing the Concept of an Adaptome

The NIH's program announcements on dissemination and implementation research (e.g.,24) call for research that assesses meaningful components of intervention fidelity, allowing for examination of the multiple features through which interventions can be adapted over time. More studies examining how interventions are adapted to improve their fit with contexts9 would go a long way toward informing strategies for adaptation within the context of implementation. In many cases, this practice-based evidence25 would dwarf the volume of evidence on evidence-based practices gathered through clinical trials, leading to a more robust understanding of how to optimize effective interventions over time.

Following the nomenclature of the -omics fields, the authors propose the creation and aggregation of a systematic and robust body of knowledge that chronicles the many types of adaptations to interventions and their impacts on implementation, service, and health outcomes.14 The proposed adaptome (pronounced “adapt-ohm”) extends the work of several implementation scientists (e.g.,26,27) to capture positive deviance (e.g., where adaptation leads to better outcomes than those observed in the original trials) as well as circumstances in which program drift was deleterious to intervention effectiveness.

The adaptome takes a long-term perspective on an intervention's need to evolve within and across contexts, consistent with the Dynamic Sustainability Framework.9 This framework views the intervention across a life cycle, in which emergent evidence and changing contexts and needs alter its identity over time. The adaptome supports this evolutionary approach by providing information on how different versions of the intervention may exist, how each version may provide benefits and disadvantages to the systems and populations that receive it, and how, over time, evidence on adaptation can help inform the optimal design and implementation of future interventions.

Characterizing Types of Adaptation

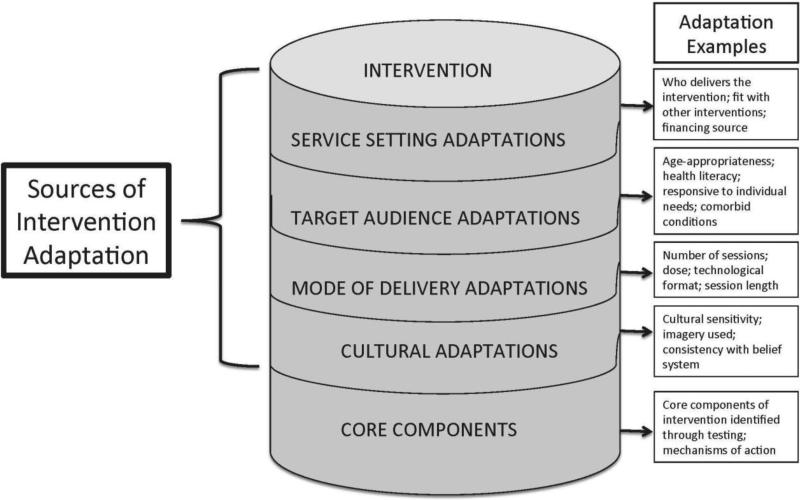

Many interventions are supported by fidelity measures, which help to track whether active ingredients of the intervention are delivered as designed or, as is often the case in resource-limited settings, if intervention components are dropped altogether.28,29 Few efforts have been made to go beyond fidelity to understand how interventions routinely used in practice are adapted. Below, the authors highlight three specific models that help one to think more consistently about the sources and types of intervention adaptations.

The first, proposed by Wiltsey-Stirman and colleagues (2013),27 organizes types of adaptations into five categories:

by whom modifications are made;

what is modified;

at what level of delivery;

context modifications; and

the nature of the content modification.

A second effort, characterized by Aarons et al. (2012)26 as part of the Dynamic Adaptation Process model, identifies core components and adaptable characteristics, and then lays out five stimuli for adaptations: client-emergent issues, provider knowledge, provider skills and abilities, available resources, and organization adaptation. This echoes concepts from the Consolidated Framework for Implementation Research.30 The third initiative pointing toward more research on adaptation is the Managing and Adapting Practice approach to evidence-based practice (www.practicewise.com/community/map). This approach, based on prior work on common factors and elements of evidence-based practices, disaggregates evidence-based practices into their core components and allows the components to be matched to need. The result is a potentially unlimited number of adaptations of evidence-based practices, along with the ability to track the impact of various combinations of components on client outcomes. The adaptome can build on these and other models to identify a taxonomy of adaptations that can be measured and aggregated across multiple clinical and community settings.
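One way to make such a taxonomy measurable is to code each reported adaptation as a structured record. The sketch below is a hypothetical schema, not one published by either group: it stores the five Wiltsey-Stirman dimensions as free-text fields, the five Dynamic Adaptation Process stimuli as an enumeration, and a flag for whether a designated core component was altered. All field names and example values are illustrative assumptions.

```python
from dataclasses import asdict, dataclass
from enum import Enum


class Stimulus(Enum):
    """Stimuli for adaptation as listed for the Dynamic Adaptation Process model."""

    CLIENT_EMERGENT_ISSUES = "client-emergent issues"
    PROVIDER_KNOWLEDGE = "provider knowledge"
    PROVIDER_SKILLS_AND_ABILITIES = "provider skills and abilities"
    AVAILABLE_RESOURCES = "available resources"
    ORGANIZATION_ADAPTATION = "organization adaptation"


@dataclass(frozen=True)
class AdaptationRecord:
    """One reported adaptation (hypothetical schema, illustrative field names)."""

    intervention: str
    modified_by: str              # by whom modifications are made
    what_is_modified: str         # what is modified
    delivery_level: str           # at what level of delivery
    context_change: str           # context modifications ("none" if unchanged)
    content_change: str           # the nature of the content modification
    stimulus: Stimulus            # why the adaptation was made
    alters_core_component: bool   # does it change a designated core component?

    def to_row(self) -> dict:
        """Flatten to a plain dict, storing the stimulus as its text label."""
        row = asdict(self)
        row["stimulus"] = self.stimulus.value
        return row


# Hypothetical example: a facilitator shortens sessions because of limited resources.
record = AdaptationRecord(
    intervention="example-parenting-program",
    modified_by="facilitator",
    what_is_modified="session length",
    delivery_level="group",
    context_change="none",
    content_change="shortening",
    stimulus=Stimulus.AVAILABLE_RESOURCES,
    alters_core_component=False,
)
print(record.to_row())
```

Flattening each record to a plain dictionary with `to_row` is one simple choice that would let records from many sites be pooled as rows in a shared table.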

Standardized Measures

Inherent in the concept of the adaptome is the need for standardized measures to capture types of adaptation, as well as the individual and contextual influences that prompt those adaptations. Fundamentally, such a knowledge base would include the core components of the intervention and variations in which components are delivered and how they are delivered. It would require capturing the impact of the adapted intervention on each individual exposed to it, to determine whether any adaptations were beneficial or harmful, and, importantly, would include a feedback mechanism to inform implementers' future use of the intervention (e.g., the Strategic Prevention Framework; www.samhsa.gov/spf). Data resources developed from this concept would include standardized measures of relevant individual and contextual characteristics that could be used to explain the case for adaptation and the impact of those adaptations on the target population. Much of this could draw on existing work to standardize measures, including work on intervention adaptations,27 implementation science,31,32 and contextual characteristics (e.g.,15).

Three Streams of Data for the Adaptome

Intervention Trials

The science of adaptation already benefits from a range of trials testing adapted interventions and their impact on intervention outcomes.32 As noted earlier, these trials typically build on an adaptation process that is exogenous to the trial. Imagine, instead, if each of these trials systematically captured data on the nature of the adaptation, the characteristics of the population and settings, and the outcomes of the intervention. Further, if a trial allowed for flexible delivery of the intervention, capturing the variation in how the intervention was delivered would yield further evidence on the impact of intervention adaptation.

Implementation Trials

As stated above, the modal implementation trial emphasizes level of fidelity as a targeted implementation outcome. In some cases, high levels of fidelity are associated with better outcomes,33,34 but often this detailed information is lacking. Because there is ample evidence that effective practices are adapted over time,18,20 and that fidelity varies by site across multiple settings and practices, there is great opportunity to augment knowledge of adaptation impact within implementation trials (Brown and colleagues35 review research and evaluation designs for implementation trials that may measure intervention adaptations). If implementation studies can more completely capture types of local adaptations, individual outcomes, and contextual characteristics, the adaptome will benefit from hundreds if not thousands of adaptation instances.

Practice-Based Evidence

Perhaps the largest source of data to advance the science of adaptation will arise if the field can consistently and systematically capture how various organizations and communities are integrating the full complement of evidence-based practices. With a number of states in recent years turning to mandates for the delivery of evidence-based practices, researchers have extensive opportunities to learn “where the rubber meets the road.” The current scientific enterprise is largely missing the opportunity to learn from these local implementation efforts, both to understand where adaptations lead to negative outcomes and to identify positive deviants. This is consistent with the notion of a learning healthcare system,36 and with efforts in some areas of medicine to view guideline implementation as evolving and improving (e.g.,37).

Complexities in Moving From Concept to Data Platform

Although this article is primarily intended to be conceptual, the authors recognize that moving toward a tangible adaptome data platform poses challenges that, though not insurmountable, will require dedicated time and resources. Existing knowledge management platforms and software tools may provide useful examples of how to build and manage the proposed adaptome platform; specific examples in the health sector (e.g., the National Database for Autism Research, https://ndar.nih.gov) may be particularly instructive. Nonetheless, noteworthy challenges are expected, including:

Data privacy, security, and access. What are the ethical issues associated with pulling data on intervention adaptations and outcomes across settings? Who would have access to these data and how would such access be provided?

Reporting adaptations. What eligibility criteria would be used to determine relevant studies and associated data? How should adaptations be reported and by whom? What additional information may be needed to validate reported adaptations?

Maintaining the platform. Who would house the adaptome platform and how would it be maintained? What incentives would encourage appropriate, continuous, and meaningful contribution to and use of the adaptome platform?

Adaptations versus new interventions. At what point does an intervention become distinct from the original, given the potential number and magnitude of adaptations, and how would the system classify or capture this information? When might an efficacy or effectiveness trial of the adapted intervention be warranted?

Next Steps

An adaptome data platform could serve as a mechanism for expanding the science of adaptation in the context of implementation. It may require the development of capacity to capture contextual characteristics, intervention characteristics (including core components and types of adaptations), and client outcomes within the sites where interventions are delivered; centralize data into a repository; develop analytic strategies for interpreting the wealth of data that will emerge; and then create a set of adaptation decision-support tools that will aid with more thoughtful adaptation and improvement over time.

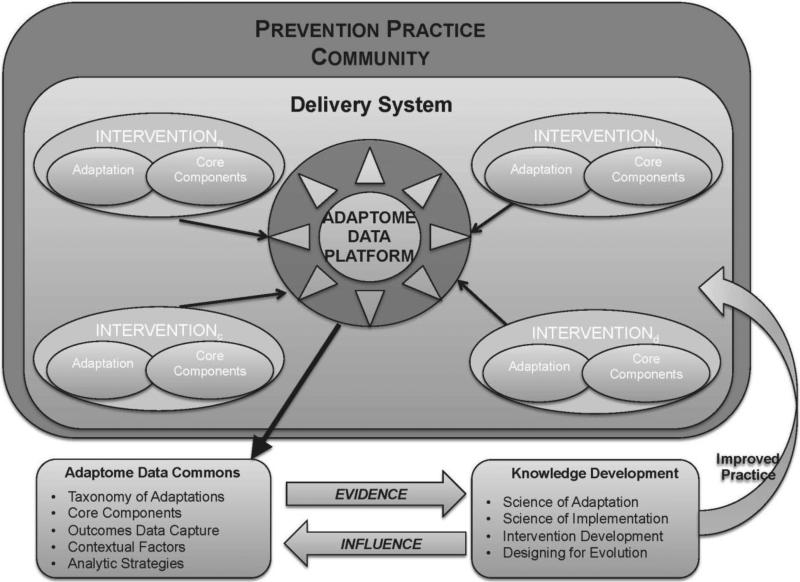

As Figure 3 depicts, a multidisciplinary team of researchers, practitioners, implementers, and consumers would leverage the adaptome data platform to pull common data from across systems implementing a range of evidence-based interventions, along with detailed data on contextual factors, client outcomes, and type(s) of adaptation. The data would flow into the adaptome data commons, where they could be analyzed to better understand the impact of a range of adaptations. Ideally, such information would not only inform practice but also highlight areas in need of additional research or knowledge development, advancing the science of adaptation and implementation and ultimately guiding the development of better interventions designed to evolve over time.

Figure 3.

The adaptome model and data platform.

Note: This figure depicts data from multiple efforts to implement a preventive intervention (shown here within one delivery system) captured by a data platform, aggregated and analyzed within a data commons to build a knowledge base that fuels ongoing research and supports improved practice.
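To illustrate the kind of analysis the data commons might support, the sketch below pools hypothetical adaptation reports from several sites and compares the mean outcome for each adaptation type with that of as-designed delivery, flagging candidate positive deviance and candidate deleterious drift. The record format, the `min_n` and `margin` thresholds, and the example data are all assumptions for illustration. The comparison is unadjusted and descriptive; it does not account for confounding by site or population, which is the kind of methodologic problem that dedicated analytic strategies would need to address.

```python
from collections import defaultdict
from statistics import mean

AS_DESIGNED = "none"  # label for deliveries with no reported adaptation


def summarize(records, min_n=3, margin=0.5):
    """Compare each adaptation type's mean outcome with as-designed delivery.

    records: iterable of (site, adaptation_type, outcome) tuples, where a higher
    outcome is assumed to be better. Returns {adaptation_type: (n, diff, flag)}.
    This is an unadjusted descriptive screen, not a causal estimate.
    """
    by_type = defaultdict(list)
    for _site, adaptation, outcome in records:
        by_type[adaptation].append(outcome)
    if AS_DESIGNED not in by_type:
        raise ValueError("as-designed deliveries are needed as a comparison group")
    baseline = mean(by_type[AS_DESIGNED])

    summary = {}
    for adaptation, outcomes in by_type.items():
        if adaptation == AS_DESIGNED:
            continue
        diff = mean(outcomes) - baseline
        if len(outcomes) < min_n:
            flag = "too few reports"
        elif diff >= margin:
            flag = "candidate exceptional adaptation"
        elif diff <= -margin:
            flag = "candidate deleterious drift"
        else:
            flag = "no clear difference"
        summary[adaptation] = (len(outcomes), round(diff, 2), flag)
    return summary


# Hypothetical pooled reports: (site, adaptation type, outcome score).
records = [
    ("site_a", "none", 5.0), ("site_b", "none", 6.0), ("site_c", "none", 7.0),
    ("site_a", "added_text_reminders", 7.0),
    ("site_d", "added_text_reminders", 7.5),
    ("site_e", "added_text_reminders", 6.5),
    ("site_b", "dropped_booster", 4.0),
    ("site_c", "dropped_booster", 5.0),
    ("site_f", "dropped_booster", 4.5),
    ("site_g", "translated_materials", 6.5),
]

for adaptation, (n, diff, flag) in summarize(records).items():
    print(f"{adaptation}: n={n}, diff={diff:+.2f}, {flag}")
```

Requiring a minimum number of reports before flagging anything is one simple guard against over-reading a single site's experience; a flagged adaptation is a prompt for further study, not a finding.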

Although much of this vision may require longer-term investment, there are a few proximal things that prevention and implementation scientists could do to build toward the adaptome:

Choosing one specific evidence-based intervention, capturing its range of adaptations (e.g., using the classification system of Wiltsey-Stirman et al.27), enhancing a data repository (data systems are already in use to support technical assistance and fidelity monitoring for select evidence-based practices), and completing an analysis of those adaptations.

Developing and piloting an adaptome measurement battery across existing prevention and health promotion implementation initiatives.

Providing standardized reporting, within existing studies of adapted interventions, of the adaptation processes used to refine prevention interventions.

Employing methodologic and statistical expertise to design analytic strategies to generalize outcomes produced from local adaptations of evidence-based practices.

As healthcare policies have created more fertile ground for implementing prevention interventions, and a range of community and clinical settings are incentivized to provide high-quality services, there is no better time to advance understanding of adaptations to, and delivery of, evidence-based interventions. Setting aside preconceived notions of where evidence on interventions is generated, and moving to a system in which all evidence informs practice, is more feasible in the current technological age than ever before. Though some of the steps needed to use these data will require significant advances, the adaptome is proposed as one conceptual piece of the drive toward a learning healthcare system: where adaptation and learning are iterative and mutually beneficial, research evidence will be most beneficial to practice.

Figure 2.

Sources of intervention adaptations.

Acknowledgments

The authors would like to thank Drs. C. Hendricks Brown and William Beardslee for their helpful comments on an earlier draft of the manuscript.

The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the National Cancer Institute.

Footnotes

No financial disclosures were reported by the authors of this paper.

References

- 1.Mrazek PJ, Haggerty RJ. Reducing Risks for Mental Disorders: Frontiers for Preventive Intervention Research. National Academies Press; 1994. [PubMed] [Google Scholar]

- 2.Chambers DA, Ringeisen H, Hickman EE. Federal, state, and foundation initiatives around evidence-based practices for child and adolescent mental health. Child Adolesc Psychiatr Clin N Am. 2005;14(2):307–327. doi: 10.1016/j.chc.2004.04.006. http://dx.doi.org/10.1016/j.chc.2004.04.006. [DOI] [PubMed] [Google Scholar]

- 3.O'Connell ME, Boat T, Warner KE. Preventing Mental, Emotional, and Behavioral Disorders Among Young People: Progress and Possibilities. National Academies Press; 2009. [PubMed] [Google Scholar]

- 4.Hawkins JD, Oesterle S, Brown EC, et al. Results of a type 2 translational research trial to prevent adolescent drug use and delinquency: a test of Communities That Care. Arch Pediatr Adolesc Med. 2009;163(9):789–798. doi: 10.1001/archpediatrics.2009.141. http://dx.doi.org/10.1001/archpediatrics.2009.141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Spoth R, Guyll M, Redmond C, Greenberg M, Feinberg M. Six-year sustainability of evidence-based intervention implementation quality by community-university partnerships: the PROSPER study. Am J Community Psychol. 2011;48(3-4):412–425. doi: 10.1007/s10464-011-9430-5. http://dx.doi.org/10.1007/s10464-011-9430-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Webster-Stratton C, McCoy KP. Bringing The Incredible Years® Programs to Scale. New Dir Child Adolesc Dev. 2015;2015(149):81–95. doi: 10.1002/cad.20115. http://dx.doi.org/10.1002/cad.20115. [DOI] [PubMed] [Google Scholar]

- 7.Kellam SG, Mackenzie AC, Brown CH, et al. The good behavior game and the future of prevention and treatment. Addict Sci Clin Pract. 2011;6:73–84. [PMC free article] [PubMed] [Google Scholar]

- 8.Westfall JM, Mold J, Fagnan L. Practice-based research--“Blue Highways” on the NIH roadmap. JAMA. 2007;297(4):403–406. doi: 10.1001/jama.297.4.403. http://dx.doi.org/10.1001/jama.297.4.403. [DOI] [PubMed] [Google Scholar]

- 9.Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8:117. doi: 10.1186/1748-5908-8-117. http://dx.doi.org/10.1186/1748-5908-8-117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.U.S. DHHS, NIH . NIH exceptional responders to cancer therapy study launched. news release; 2014. [May 5, 2016]. www.nih.gov/news-events/news-releases/nih-exceptional-responders-cancer-therapy-study-launched. [Google Scholar]

- 11.Riley WT, Serrano KJ, Nilsen W, Atienza AA. Mobile and Wireless Technologies in Health Behavior and the Potential for Intensively Adaptive Interventions. Curr Opin Psychol. 2015;5:67–71. doi: 10.1016/j.copsyc.2015.03.024. http://dx.doi.org/10.1016/j.copsyc.2015.03.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Mohr DC, Schueller SM, Riley WT, et al. Trials of Intervention Principles: Evaluation Methods for Evolving Behavioral Intervention Technologies. J Med Internet Res. 2015;17(7):e166. doi: 10.2196/jmir.4391. http://dx.doi.org/10.2196/jmir.4391. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Atkins MS, Rusch D, Mehta TG, Lakind D. Future Directions for Dissemination and Implementation Science: Aligning Ecological Theory and Public Health to Close the Research to Practice Gap. J Clin Child Adolesc Psychol. 2016;45(2):215–226. doi: 10.1080/15374416.2015.1050724.

- 14. Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health. 2009;36(1):24–34. doi: 10.1007/s10488-008-0197-4.

- 15. Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. 2015;10:155. doi: 10.1186/s13012-015-0342-x.

- 16. Blase K, Fixsen D. Core Intervention Components: Identifying and Operationalizing What Makes Programs Work. ASPE Research Brief. U.S. DHHS; 2013.

- 17. Bernal GE, Domenech Rodríguez MM. Cultural adaptations: Tools for evidence-based practice with diverse populations. American Psychological Association; 2012. doi: 10.1037/13752-000.

- 18. Rohrbach LA, Dent CW, Skara S, Sun P, Sussman S. Fidelity of implementation in Project Towards No Drug Abuse (TND): a comparison of classroom teachers and program specialists. Prev Sci. 2007;8:125–132. doi: 10.1007/s11121-006-0056-z.

- 19. Castro FG, Barrera M Jr, Martinez CR Jr. The cultural adaptation of prevention interventions: Resolving tensions between fidelity and fit. Prev Sci. 2004;5(1):41–45. doi: 10.1023/B:PREV.0000013980.12412.cd.

- 20. Rohrbach LA, Grana R, Sussman S, Valente TW. Type II translation: transporting prevention interventions from research to real-world settings. Eval Health Prof. 2006;29(3):302–333. doi: 10.1177/0163278706290408.

- 21. Leviton L, Henry B. Better information for generalizable knowledge: Systematic study of local adaptation. Washington, D.C.: American Public Health Association; 2011.

- 22. Mihalic S, Irwin K, Elliott D, Fagan A, Hansen D. Blueprints for violence prevention. Center for the Study and Prevention of Violence, University of Colorado; 2004. doi: 10.1037/e302992005-001.

- 23. Collins CB, Sapiano TN. Lessons learned from dissemination of evidence-based intervention for HIV prevention. Am J Prev Med. 2016. In press. doi: 10.1016/j.amepre.2016.05.017.

- 24. National Institutes of Health. Dissemination and Implementation Research in Health (R01). PAR-16-238. http://grants.nih.gov/grants/guide/pa-files/PAR-16-238.html.

- 25. Green LW. Making research relevant: if it is an evidence-based practice, where's the practice-based evidence? Fam Pract. 2008;25(Suppl 1):i20–24. doi: 10.1093/fampra/cmn055.

- 26. Aarons GA, Green AE, Palinkas LA, et al. Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implement Sci. 2012;7:32. doi: 10.1186/1748-5908-7-32.

- 27. Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65. doi: 10.1186/1748-5908-8-65.

- 28. Kalichman SC, Hudd K, Diberto G. Operational fidelity to an evidence-based HIV prevention intervention for people living with HIV/AIDS. J Prim Prev. 2010;31(4):235–245. doi: 10.1007/s10935-010-0217-5.

- 29. Rebchook GM, Kegeles SM, Huebner D. Translating research into practice: the dissemination and initial implementation of an evidence-based HIV prevention program. AIDS Educ Prev. 2006;18(Suppl):119–136. doi: 10.1521/aeap.2006.18.supp.119.

- 30. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 31. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139. doi: 10.1186/1748-5908-8-139.

- 32. Rabin BA, Purcell P, Naveed S, et al. Advancing the application, quality and harmonization of implementation science measures. Implement Sci. 2012;7:119. doi: 10.1186/1748-5908-7-119.

- 33. Schoenwald SK, Chapman JE, Sheidow AJ, Carter RE. Long-term youth criminal outcomes in MST transport: The impact of therapist adherence and organizational climate and structure. J Clin Child Adolesc Psychol. 2009;38(1):91–105. doi: 10.1080/15374410802575388.

- 34. Schoenwald SK, Carter RE, Chapman JE, Sheidow AJ. Therapist adherence and organizational effects on change in youth behavior problems one year after multisystemic therapy. Adm Policy Ment Health. 2008;35:379–394. doi: 10.1007/s10488-008-0181-z.

- 35. Brown CH, Curran G, Palinkas L, et al. An overview of research and evaluation designs for dissemination and implementation. Annu Rev Public Health. In press. doi: 10.1146/annurev-publhealth-031816-044215. http://dx.doi.org/10.18131/G35W23.

- 36. Smith M, Saunders R, Stuckhardt L, McGinnis JM. Best care at lower cost: the path to continuously learning health care in America. National Academies Press; 2013.

- 37. Farias M, Jenkins K, Lock J, et al. Standardized Clinical Assessment And Management Plans (SCAMPs) provide a better alternative to clinical practice guidelines. Health Aff (Millwood). 2013;32(5):911–920. doi: 10.1377/hlthaff.2012.0667.