Abstract

Objective. There are many smartphone-based applications (apps) for cardiopulmonary resuscitation (CPR) training. We investigated the conformity and the learnability/usability of these apps for CPR training and real-life support. Methods. We conducted a mixed-method, sequential explanatory study to assess CPR training apps downloaded from two app stores in South Korea. Apps were included if they offered Korean-language instruction, training features, and emergency support for real-life incidents. They were analyzed with two tests: in the conformity test, 15 medical experts evaluated the apps' content against current Basic Life Support (BLS) guidelines, and in the learnability/usability test, 15 nonmedical individuals examined the apps using the System Usability Scale (SUS). Results. Of the 79 apps retrieved after screening, five were included and analyzed. For conformity (ICC, 0.95; p < 0.001), the mean scores of all apps were greater than 12 of 20 points, indicating that they were well designed according to current guidelines. Three of the five apps reached an acceptable level of learnability/usability (greater than 68 of 100 points). Conclusion. All the included apps followed current BLS guidelines, and a majority offered acceptable learnability/usability for laypersons. Current and developmental smartphone-based CPR training apps should include accurate CPR information and be easy to use for laypersons, who are potential rescuers in real-life incidents. For Clinical Trials. This clinical trial is registered at the Clinical Research Information Service (CRIS, cris.nih.go.kr), number KCT0001840.

1. Introduction

Sudden cardiac arrest (SCA) remains a leading cause of death in developed countries, including South Korea, despite efforts devoted to its prevention [1–3]. Although many factors determine the outcomes of SCA, survival rates are known to be up to three times higher when cardiopulmonary resuscitation (CPR) is performed immediately after SCA [4, 5]. Various methods of teaching high-quality CPR and prompt recognition of cardiac arrest, including face-to-face training and video-based instruction for bystanders, have improved bystander participation during incidents of SCA [6, 7]. However, only 12–42% of out-of-hospital cardiac arrest (OHCA) patients witnessed by laypersons received CPR [8–10]. This low rate of layperson intervention may be due to a failure to recognize cardiac arrest or to a lack of confidence resulting from insufficient CPR training and education [11].

Recently, many medical and healthcare applications (apps) have been developed and registered in online mobile app stores, because apps can be used without limitations of time and place [12, 13]. In particular, public institutions and companies have developed a number of smartphone-based apps to enhance CPR education [14–17]. Smartphone-based apps could be an important new medium, as they overcome the limitations of traditional CPR training and remind users, particularly laypersons, of CPR. However, one flaw in app-based CPR training and education is that some apps may not adequately reflect current guidelines and may therefore transmit incorrect information. Even apps that adhere to current guidelines may not be useful for laypersons if they are difficult to operate or if users have little interest in them. Kalz et al. [14] reported that very few apps reflected current Basic Life Support (BLS) guidelines and offered an acceptable level of usability for lay rescue.

As of January 2016, 85.2% of the South Korean population owned smartphones, and this proportion is steadily rising [18]. Many smartphone-based CPR training apps have also been downloaded in South Korea, yet no study has systematically investigated them. We therefore assessed the conformity of smartphone-based CPR training apps to current CPR guidelines and evaluated their learnability and usability for incidents of SCA.

2. Materials and Methods

2.1. Setting and Participants

This mixed-method, sequential explanatory study was approved by the Institutional Review Board of Hanyang University Hospital (Seoul, South Korea) (IRB HYUH2015-08-012-001) and was conducted in September 2015. The sequential design consisted of identification of smartphone-based CPR training apps, examination of the apps' conformity to the 2010 American Heart Association Basic Life Support (AHA BLS) guidelines, and learnability and usability testing. Fifteen AHA BLS-certified healthcare providers and fifteen laypersons with no CPR training were recruited for the conformity test and the learnability/usability test, respectively. Participants were recruited voluntarily through a bulletin board notice from September 21, 2015, to September 30, 2015. Each potential participant received written information regarding the purpose of the study, and all participants provided written informed consent.

2.2. Materials and Experimental Methods

A Galaxy S4 smartphone (Samsung Electronics Co., Seoul, South Korea) running Android (Google's mobile operating system) and an iPhone 5 (Apple Inc., Cupertino, CA, USA) running iOS (Apple's mobile operating system) were used for our investigation. Together, these two operating systems account for 99.8% of the market in Korea (Android 76.7% and iOS 23.1%) [19]. We therefore searched for mobile apps in the Google Play Store and the Apple App Store, the two largest online stores for mobile apps (as of September 2015). The search terms were “cardiopulmonary resuscitation” OR “CPR” OR “chest compression” OR “basic life support”, in both English and Korean. Native Koreans make up 97.8% of the South Korean population [20], and almost everyone speaks Korean, with low linguistic diversity [21]. We therefore excluded apps without Korean-language content during screening. For apps available in both stores, we selected the Google Play Store version. From the selected apps, we excluded apps that did not contain CPR-related content or that produced errors during operation. Finally, we included apps that contained both of the following features: (1) training features and (2) emergency support for real-life incidents. “Emergency support for real-life incidents” means that the app gives laypersons guidance or accurate CPR information during an actual cardiac arrest; we treated this as a mandatory feature. The identification and selection of apps followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [22].

First Test: Conformity Test of Smartphone-Based CPR Apps to 2010 AHA BLS Guidelines Checklist. The authors developed the conformity checklist from the AHA BLS education checklist. It contained 10 items: (1) how to check a patient's response and abnormal breathing, (2) how to activate the emergency medical system, (3) how to get someone to bring an automated external defibrillator (AED), (4) correct CPR sequence (chest compression, airway, breathing, C-A-B), (5) existence of hands-only CPR for lay rescue, (6) how to begin CPR rapidly, (7) proper compression position on the chest (i.e., lower half of the sternum), (8) adequate chest compression depth (i.e., at least 5 cm or 5–6 cm), (9) proper chest compression rate (i.e., at least 100 or 100–120 compressions/min), and (10) mention of complete chest decompression. Each item was scored on a three-point scale (0, nonexistent or incorrect information; 1, insufficient information; 2, sufficient information), for a maximum possible score of 20 points.
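The checklist total is a plain sum of ten item scores of 0, 1, or 2. As a minimal sketch (the function name `conformity_total` and the short item labels are illustrative choices, not part of the study's materials), one rater's scoring of one app could be validated and tallied like this:

```python
from typing import Sequence

# Hypothetical short labels for checklist items Q1-Q10 (paraphrased from the text).
CHECKLIST_ITEMS = (
    "check response and abnormal breathing",
    "activate the emergency medical system",
    "get someone to bring an AED",
    "C-A-B sequence",
    "hands-only CPR for lay rescuers",
    "begin CPR rapidly",
    "compression position (lower half of sternum)",
    "compression depth",
    "compression rate",
    "complete chest decompression",
)

# 0 = nonexistent or incorrect, 1 = insufficient, 2 = sufficient information
ALLOWED_ITEM_SCORES = (0, 1, 2)


def conformity_total(item_scores: Sequence[int]) -> int:
    """Return one rater's 0-20 conformity total for one app."""
    if len(item_scores) != len(CHECKLIST_ITEMS):
        raise ValueError(
            f"expected {len(CHECKLIST_ITEMS)} item scores, got {len(item_scores)}"
        )
    for label, score in zip(CHECKLIST_ITEMS, item_scores):
        if score not in ALLOWED_ITEM_SCORES:
            raise ValueError(f"{label!r}: score must be 0, 1, or 2, got {score}")
    return sum(item_scores)


# Usage with made-up scores (not study data): Q3 insufficient, Q10 missing.
print(conformity_total([2, 2, 1, 2, 2, 2, 2, 2, 2, 0]))  # 17
```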

Second Test: Learnability and Usability Test of Smartphone-Based CPR Training Apps Using the System Usability Scale (SUS). For learnability and usability evaluations, we used the modified System Usability Scale (SUS), a simple but reliable method for evaluating the usability of a technological product or service [23–25]. The SUS consists of 10 questions: five positively worded questions (odd-numbered items) and five negatively worded questions (even-numbered items), as follows:

Modified System Usability Scale (SUS) Questions

(1) I think that I would like to use this product frequently.

(2) I found the product unnecessarily complex.

(3) I thought the product was easy to use.

(4) I think that I would need the support of a technical person to be able to use this product.

(5) I found the various functions in this product were well integrated.

(6) I thought there was too much inconsistency in this product.

(7) I would imagine that most people would learn to use this product very quickly.

(8) I found the system very awkward to use.

(9) I felt very confident using the product.

(10) I needed to learn a lot of things before I could get going with the product.

Questions (4) and (10) measure learnability for laypersons, and the other eight questions measure usability. Each question is rated on a five-point scale from 1 (strongly disagree) to 5 (strongly agree). The score is calculated as follows:

Positively worded (odd-numbered) questions contribute (score – 1).

Negatively worded (even-numbered) questions contribute (5 – score).

The ten contributions are summed and multiplied by 2.5 to give the total SUS score (range, 0–100).
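The scoring rule above can be written as a short function. This is a minimal sketch with hypothetical function names. The split into a learnability part (items 4 and 10) and a usability part (the other eight items) is an assumption about how the subscores were scaled: the text does not give a subscore formula, so here both parts simply reuse the ×2.5 factor, giving ranges of 0–20 and 0–80 that add up to the total SUS score.

```python
from typing import List, Sequence, Tuple

LEARNABILITY_ITEMS = frozenset({4, 10})  # items 4 and 10, per the text


def item_contributions(responses: Sequence[int]) -> List[int]:
    """Convert ten 1-5 responses (items 1..10 in order) into 0-4 contributions."""
    if len(responses) != 10:
        raise ValueError(f"SUS needs exactly 10 responses, got {len(responses)}")
    contributions = []
    for item, score in enumerate(responses, start=1):
        if not 1 <= score <= 5:
            raise ValueError(f"item {item}: response must be 1-5, got {score}")
        # Odd items are positively worded, even items negatively worded.
        contributions.append(score - 1 if item % 2 == 1 else 5 - score)
    return contributions


def sus_score(responses: Sequence[int]) -> float:
    """Total SUS score on the 0-100 scale."""
    return sum(item_contributions(responses)) * 2.5


def sus_split(responses: Sequence[int]) -> Tuple[float, float]:
    """ASSUMPTION: (learnability, usability) = items {4, 10} vs. the rest, each x2.5."""
    contributions = item_contributions(responses)
    learn = sum(
        c for item, c in enumerate(contributions, start=1) if item in LEARNABILITY_ITEMS
    )
    usab = sum(contributions) - learn
    return learn * 2.5, usab * 2.5


# Usage with one made-up participant (not study data).
answers = [4, 2, 4, 1, 4, 2, 5, 2, 4, 2]
print(sus_score(answers))  # 80.0
print(sus_split(answers))  # (17.5, 62.5)
```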

2.3. Data Collection

We recorded background information pertinent to the apps included in this investigation. Basic information consisted of (1) manufacturer, (2) number of downloads, (3) purchase cost, (4) last update, (5) type of content (video instructions, text instructions, audio instructions, video simulation, animation, and graphics), (6) purpose of the app, (7) underlying guideline, (8) target user (including pediatric), (9) detection of AED location, (10) auditory guidance, (11) feedback system (compression rate and/or depth), and (12) direct connection to the Emergency Medical Service (EMS).

In the conformity test, each participant had 10 minutes to evaluate each app and five minutes of rest before each evaluation. In the SUS learnability and usability test, each layperson had 30 minutes to evaluate each app and 10 minutes of rest before each evaluation, in a quiet room with one observer. If a layperson was unfamiliar with the device or had problems operating it, the observer provided guidance. The order in which apps were evaluated was randomized for each participant.

2.4. Statistical Analysis

Data were compiled using a standard spreadsheet program (Excel; Microsoft, Redmond, WA, USA) and were analyzed using the Statistical Package for the Social Sciences (SPSS) 18.0 for Windows (SPSS Inc., Chicago, IL, USA). We generated descriptive statistics, and data are presented as the mean ± standard deviation (SD). We calculated the intraclass correlation coefficient (ICC) for all questions in both tests. p values < 0.05 were considered statistically significant. In the conformity test, we classified scores into five levels: (1) very high, 20 ≥ score ≥ 16 points; (2) high, 16 > score ≥ 12; (3) moderate, 12 > score ≥ 8; (4) low, 8 > score ≥ 4; and (5) very low, 4 > score ≥ 0. Based on the current literature, a mean SUS score > 68 was considered to indicate acceptable learnability and usability [14, 26].
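The two decision rules above (the five conformity levels and the SUS > 68 cut-off) can be expressed as small functions. A minimal sketch; the function names are hypothetical, and the example means are the values reported in Table 2, keyed by manufacturer for brevity.

```python
SUS_ACCEPTABLE_CUTOFF = 68.0  # acceptability threshold used in the text


def conformity_level(score: float) -> str:
    """Map a 0-20 conformity score to the five levels defined in the text."""
    if not 0 <= score <= 20:
        raise ValueError(f"conformity score must be within 0-20, got {score}")
    # Lower bounds are inclusive: 20 >= s >= 16 is "very high", 16 > s >= 12 is "high", ...
    for lower_bound, level in ((16, "very high"), (12, "high"), (8, "moderate"), (4, "low")):
        if score >= lower_bound:
            return level
    return "very low"


def sus_acceptable(mean_sus: float) -> bool:
    """True when the mean SUS score is strictly greater than the cut-off."""
    return mean_sus > SUS_ACCEPTABLE_CUTOFF


# Usage with the mean scores reported in Table 2: (conformity, SUS).
table2 = {
    "Academica": (16.40, 56.67),
    "INOVIEW network": (14.73, 78.17),
    "Ministry of Health and Welfare": (16.13, 81.17),
    "Fantalog": (13.47, 61.50),
    "MELab": (17.80, 70.50),
}
for maker, (conformity, sus) in table2.items():
    print(f"{maker}: {conformity_level(conformity)}, SUS acceptable={sus_acceptable(sus)}")
```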

3. Results

3.1. Apps Selection

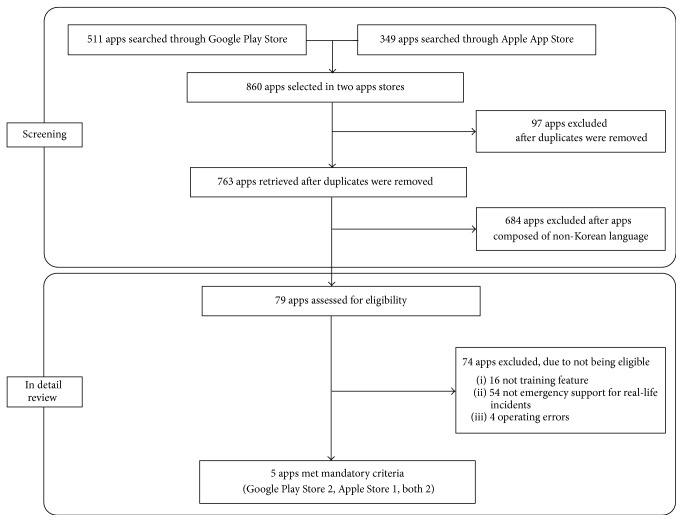

A total of 511 and 349 apps were identified through the Google Play Store and the Apple App Store, respectively. After removing duplicates, we selected apps that provided CPR training in Korean. Seventy-nine apps were retrieved after screening, and we then operated and evaluated these apps in detail. Sixteen apps had no CPR training feature, 54 apps had no emergency support for real-life incidents, and 4 apps produced errors during operation. Finally, five apps met our mandatory criteria (Figure 1). Two apps were registered in the Google Play Store, one in the Apple App Store, and two in both stores. Notable attributes of these apps, including basic app information, mandatory features, and feedback systems, are presented in Table 1. Three apps provided auditory guidance for the compression rate (by metronome), and only the “UCPR” app had a feedback system for both compression rate and depth (by accelerometer). No app supported pediatric BLS.
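The counts in this paragraph can be cross-checked with simple arithmetic. A minimal sketch; the variable names are illustrative, and every number is taken from the paragraph above.

```python
# Apps identified per store (from the text).
identified = {"Google Play Store": 511, "Apple App Store": 349}

# Apps left after de-duplication and language/content screening.
screened = 79

# Exclusions applied during hands-on evaluation of the screened apps.
excluded = {
    "no CPR training feature": 16,
    "no emergency support for real-life incidents": 54,
    "errors during operation": 4,
}

included = screened - sum(excluded.values())
print(sum(identified.values()), included)  # 860 5

# Store availability of the five included apps (from the text).
google_only, apple_only, both_stores = 2, 1, 2
assert google_only + apple_only + both_stores == included
```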

Figure 1.

Flowchart of apps screening and selection.

Table 1.

Characteristics of included apps.

| Title | Cardiopulmonary resuscitation (CPR) | Cardiopulmonary resuscitation | Information for emergency medicine | Management for medical emergencies | UCPR |
|---|---|---|---|---|---|
| App information | | | | | |
| Manufacturer | Academica | INOVIEW network | Ministry of Health and Welfare | Fantalog Interactive Co., Ltd. | MELab |
| Number of downloads | Unknown | 10,000–50,000 (Android) | 500,000–1,000,000 (Android) | 500,000–1,000,000 | 1,000–5,000 |
| Purchase cost | Free | Free | Free | Free | Free |
| Last update | March 5, 2013 | April 24, 2013 | July 7, 2015 | June 8, 2011 | December 3, 2014 |
| Platform | iOS | Android, iOS | Android, iOS | Android | Android |
| Language | Korean | Korean | Korean | Korean | Korean |
| Mandatory features | | | | | |
| Training feature | | | | | |
| Educational video or animations | — | O | O | — | O |
| Real-incident animation instructions | — | O | — | — | — |
| Real-incident picture instructions | O | O | O | O | O |
| Real-incident audio instructions | — | O | O | — | O |
| Emergency support for real incidents | O | O | O | O | O |
| Special features | | | | | |
| AED location | — | — | O | — | O |
| Auditory guidance | — | O | O | — | O |
| Feedback | | | | | |
| Compression rate | — | — | — | — | O |
| Compression depth | — | — | — | — | O |
| Pediatric BLS | — | — | — | — | — |
| Direct connection to Emergency Medical Service (EMS) | O | O | O | O | O |

3.2. Results of Conformity to CPR Guidelines

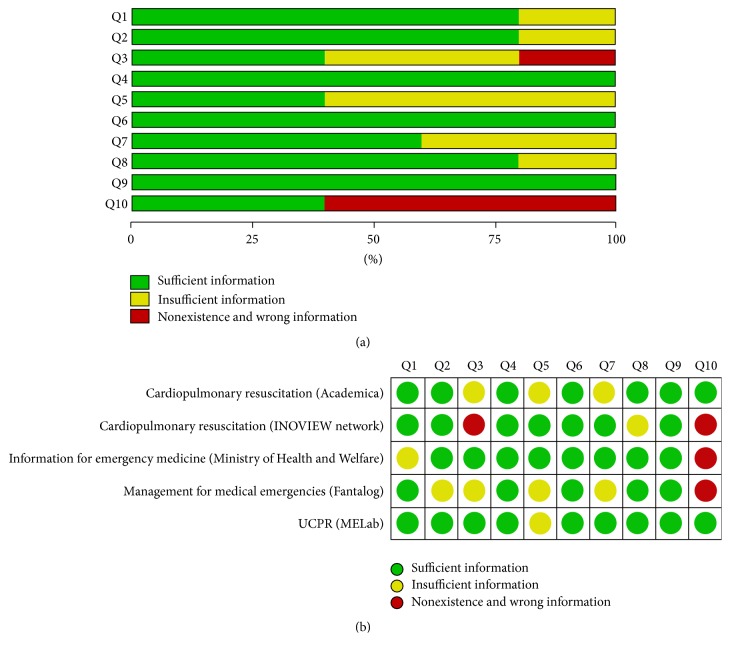

The intraclass correlation coefficient (ICC) for the conformity checklist was 0.95 (p < 0.001; 95% confidence interval [CI], 0.93–0.97). The results of the conformity test against the 2010 AHA BLS guidelines are shown in Table 2. The mean conformity scores of the apps were as follows: “UCPR” (MELab), 17.80 ± 1.01; “cardiopulmonary resuscitation” (Academica), 16.40 ± 1.88; “information for emergency medicine” (Ministry of Health and Welfare), 16.13 ± 1.24; “cardiopulmonary resuscitation” (INOVIEW network), 14.73 ± 1.09; and “management for medical emergencies” (Fantalog), 13.47 ± 2.94. The conformity scores for each question are analyzed in Figure 2. All five apps fulfilled three questions (Q4, Q6, and Q9), whereas three apps did not fulfill “mention of complete chest decompression” (Q10).

Table 2.

Mean, standard deviation, and rank of conformity checklist score to the AHA 2010 BLS guidelines and modified System Usability Scale (SUS) score.

| Title (manufacturer) | Conformity, mean ± SD | Conformity rank | Learnability and usability (SUS), mean ± SD | SUS rank |
|---|---|---|---|---|
| Cardiopulmonary resuscitation (Academica) | 16.40 ± 1.88 | 2 | 56.67 ± 23.58 | 5 |
| Cardiopulmonary resuscitation (INOVIEW network) | 14.73 ± 1.09 | 4 | 78.17 ± 20.49 | 2 |
| Information for emergency medicine (Ministry of Health and Welfare) | 16.13 ± 1.24 | 3 | 81.17 ± 19.01 | 1 |
| Management for medical emergencies (Fantalog) | 13.47 ± 2.94 | 5 | 61.50 ± 19.54 | 4 |
| UCPR (MELab) | 17.80 ± 1.00 | 1 | 70.50 ± 24.33 | 3 |

SD: standard deviation.
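Each rank in Table 2 follows from sorting the corresponding means in descending order (rank 1 = highest mean). A minimal sketch, assuming no ties (the text does not say how ties would be handled); `rank_descending` is a hypothetical helper, and apps are keyed by manufacturer for brevity.

```python
from typing import Dict


def rank_descending(means: Dict[str, float]) -> Dict[str, int]:
    """Assign rank 1 to the highest mean, 2 to the next, and so on (no tie handling)."""
    ordered = sorted(means, key=means.__getitem__, reverse=True)
    return {title: position for position, title in enumerate(ordered, start=1)}


# Means copied from Table 2.
conformity_means = {
    "Academica": 16.40,
    "INOVIEW network": 14.73,
    "Ministry of Health and Welfare": 16.13,
    "Fantalog": 13.47,
    "MELab": 17.80,
}
sus_means = {
    "Academica": 56.67,
    "INOVIEW network": 78.17,
    "Ministry of Health and Welfare": 81.17,
    "Fantalog": 61.50,
    "MELab": 70.50,
}

print(rank_descending(conformity_means))
print(rank_descending(sus_means))
```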

Figure 2.

Analysis of information fulfillment for the conformity checklist. Fulfillment of sufficient information (a) for each question and (b) for each app. Q1, how to check the patient's response and abnormal breathing; Q2, how to activate the emergency medical system; Q3, how to get someone to bring an automated external defibrillator (AED); Q4, correct cardiopulmonary resuscitation (CPR) sequence [chest compression, airway, breathing, C-A-B]; Q5, existence of hands-only CPR for lay rescue; Q6, how to begin CPR rapidly; Q7, proper compression position on the chest (i.e., lower half of the sternum); Q8, adequate chest compression depth (i.e., at least 5 cm or 5–6 cm); Q9, proper chest compression rate (i.e., at least 100 or 100–120 compressions/min); Q10, mention of complete chest decompression.

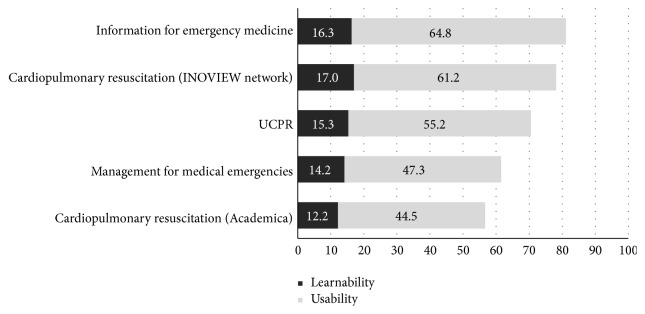

3.3. Results of Learnability and Usability Evaluation Using SUS

Three apps scored more than 68 points in learnability and usability testing, and the “information for emergency medicine” app had the highest score (81.17 points) (Table 2). For learnability, the “cardiopulmonary resuscitation” (INOVIEW network) app scored 17.00 points, 0.70 points more than the “information for emergency medicine” app (16.30 points) (Figure 3).

Figure 3.

Mean learnability and usability testing scores of five apps using the System Usability Scale (SUS).

4. Discussion

Although there are various methods for CPR training, most are not comprehensive [27]. The effectiveness and accuracy of CPR training are important, and retention of skills and knowledge is essential [28]. A reminder CPR video clip on a mobile phone improved retention among trainees three months after initial training [29]. CPR animation instructions on a mobile phone were also effective in checklist assessment and time-interval compliance among trainees [30]. Smartphones are readily accessible to the public, and smartphone-based apps can provide both text and video clips for CPR. CPR training apps could therefore be used both for initial CPR training and for retention after training. In this study, five of 79 smartphone-based CPR training apps met our mandatory criteria. Individual apps had been downloaded roughly 1,000 to 1,000,000 times, and the four apps with reported download counts had been downloaded more than one million times in total. CPR training apps with incorrect or insufficient CPR information could lead laypersons to unintentionally harm victims in real incidents. Thus, these apps should be reviewed by experts before public release.
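The combined download count can be bounded from the ranges in Table 1. A minimal sketch: it omits the Academica app, whose count is listed as unknown, and it should be read as a lower-end estimate, since two of the ranges cover Android only even though those apps are also on iOS.

```python
# (lower, upper) download ranges from Table 1; the Academica app ("Unknown") is omitted.
download_ranges = {
    "INOVIEW network (Android only)": (10_000, 50_000),
    "Ministry of Health and Welfare (Android only)": (500_000, 1_000_000),
    "Fantalog": (500_000, 1_000_000),
    "MELab": (1_000, 5_000),
}

total_low = sum(low for low, _ in download_ranges.values())
total_high = sum(high for _, high in download_ranges.values())
print(f"{total_low:,} to {total_high:,} downloads")  # 1,011,000 to 2,055,000 downloads
```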

Smartphone-based CPR training apps can provide auditory guidance through speakers, feedback for high-quality chest compression using accelerometers, and the location of the nearest AED using global positioning system (GPS) sensors [15–17, 31]. Three of the five apps we examined incorporated auditory guidance for the chest compression rate using a metronome, but only one app had an audiovisual feedback system for both chest compression rate and depth (using an accelerometer). Several simulation studies have demonstrated that smartphones and smartwatches with accelerometers could be good alternative chest compression feedback devices [32–34]. Two of the five apps were able to locate the nearest AED. Adding audiovisual feedback to smartphone-based CPR training apps is advisable.

We included support for pediatric BLS as a special feature in this study. However, none of the apps supported pediatric BLS. Although cardiac arrest is less common in children than in adults, CPR training apps should also explain pediatric BLS.

All five apps analyzed in this study were well designed, scoring more than 12 points in conformity testing. For the tenth question, however, only two apps sufficiently explained complete chest decompression, which is as important as chest compression [35, 36]. In October 2015, the international CPR guidelines were updated, and CPR training apps should be revised accordingly [36, 37]. High SUS scores indicate that a product or service is easy for users to learn and handle. Three of the five apps we examined yielded SUS scores greater than 68 points. Some apps with high conformity scores did not score highly in learnability and usability testing; for example, the “cardiopulmonary resuscitation” (Academica) app ranked second in conformity but last in SUS. In the future, CPR training apps that are both accurate and easy to use should be developed.

There are several limitations to this study. First, user interest in CPR training apps improves educational transmission [14], but we did not assess user interest in this study. Second, according to the South Korean Census, the resident foreign population in Korea is growing every year. Not all CPR training apps evaluated in this study offered training in other languages, so CPR training apps in various languages should be examined in further studies. Finally, we conducted this study with two types of smartphones, and an individual's skill with or familiarity with a particular type of smartphone might have biased learnability and usability scores.

5. Conclusion

In conclusion, all five included CPR training apps followed current BLS guidelines, and three offered an acceptable level of learnability and usability for laypersons. Current and developmental smartphone-based CPR training apps should include accurate CPR information (reflecting the new international guidelines) and should be easy to use for laypersons, who are potential rescuers in real-life incidents of SCA.

Acknowledgments

This work was supported by the Research Fund of Hanyang University (HY-2015).

Competing Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Authors' Contributions

Chiwon Ahn and Jaehoon Oh conceived the study, designed the trial, and obtained the research funding. Yeongtak Song, Juncheol Lee, Hyunggoo Kang, and Tae Ho Lim supervised the trial conduct and data collection. Yeongtak Song, Juncheol Lee, Hyunggoo Kang, and Tae Ho Lim analyzed the data statistically. Chiwon Ahn and Yongtak Cho drafted the manuscript, and all authors contributed substantially to its revision. Jaehoon Oh takes responsibility for the paper as a whole. Chiwon Ahn and Yongtak Cho contributed equally to this work.

References

1. Marijon E., Bougouin W., Tafflet M., et al. Population movement and sudden cardiac arrest location. Circulation. 2015;131(18):1546–1554. doi: 10.1161/CIRCULATIONAHA.114.010498.

2. Mehra R. Global public health problem of sudden cardiac death. Journal of Electrocardiology. 2007;40(6):S118–S122. doi: 10.1016/j.jelectrocard.2007.06.023.

3. Ro Y. S., Shin S. D., Song K. J., et al. A trend in epidemiology and outcomes of out-of-hospital cardiac arrest by urbanization level: a nationwide observational study from 2006 to 2010 in South Korea. Resuscitation. 2013;84(5):547–557. doi: 10.1016/j.resuscitation.2012.12.020.

4. Travers A. H., Rea T. D., Bobrow B. J., et al. Part 4: CPR overview: 2010 American Heart Association guidelines for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. 2010;122(18, supplement 3):S676–S684. doi: 10.1161/circulationaha.110.970913.

5. Waalewijn R. A., Tijssen J. G. P., Koster R. W. Bystander initiated actions in out-of-hospital cardiopulmonary resuscitation: results from the Amsterdam Resuscitation Study (ARRESUST). Resuscitation. 2001;50(3):273–279. doi: 10.1016/s0300-9572(01)00354-9.

6. Vance K., Howe W., Dellavalle R. P. Social internet sites as a source of public health information. Dermatologic Clinics. 2009;27(2):133–136. doi: 10.1016/j.det.2008.11.010.

7. Murugiah K., Vallakati A., Rajput K., Sood A., Challa N. R. YouTube as a source of information on cardiopulmonary resuscitation. Resuscitation. 2011;82(3):332–334. doi: 10.1016/j.resuscitation.2010.11.015.

8. Sasson C., Magid D. J., Chan P., et al. Association of neighborhood characteristics with bystander-initiated CPR. The New England Journal of Medicine. 2012;367(17):1607–1615. doi: 10.1056/nejmoa1110700.

9. Kitamura T., Kiyohara K., Matsuyama T., et al. Is survival after out-of-hospital cardiac arrests worse during days of national academic meetings in Japan? A population-based study. Journal of Epidemiology. 2016;26(3):155–162. doi: 10.2188/jea.je20150100.

10. Ro Y. S., Shin S. D., Kitamura T., et al. Temporal trends in out-of-hospital cardiac arrest survival outcomes between two metropolitan communities: Seoul-Osaka resuscitation study. BMJ Open. 2015;5(6):e007626. doi: 10.1136/bmjopen-2015-007626.

11. Koster R. W., Baubin M. A., Bossaert L. L., et al. European resuscitation council guidelines for resuscitation 2010 section 2 adult basic life support and use of automated external defibrillators. Resuscitation. 2010;81(10):1277–1292. doi: 10.1016/j.resuscitation.2010.08.009.

12. Payne K. F. B., Wharrad H., Watts K. Smartphone and medical related App use among medical students and junior doctors in the United Kingdom (UK): a regional survey. BMC Medical Informatics and Decision Making. 2012;12:121. doi: 10.1186/1472-6947-12-121.

13. Könings K. D., Gijselaers W. H. Bringing learning to the workplace: a smartphone app for reflection and increased authenticity of learning. In: Dailey-Hebert A., Dennis K. S., editors. Transformative Perspectives and Processes in Higher Education. Vol. 6. Berlin, Germany: Springer; 2015. pp. 117–135. (Advances in Business Education and Training).

14. Kalz M., Lenssen N., Felzen M., et al. Smartphone apps for cardiopulmonary resuscitation training and real incident support: a mixed-methods evaluation study. Journal of Medical Internet Research. 2014;16(3):e89. doi: 10.2196/jmir.2951.

15. Chan T., Wan K., Chan J., et al. New era of CPR: application of i-technology in resuscitation. Hong Kong Journal of Emergency Medicine. 2012;19(5):305.

16. Song Y., Oh J., Chee Y. A new chest compression depth feedback algorithm for high-quality CPR based on smartphone. Telemedicine and e-Health. 2015;21(1):36–41. doi: 10.1089/tmj.2014.0051.

17. Semeraro F., Taggi F., Tammaro G., Imbriaco G., Marchetti L., Cerchiari E. L. ICPR: a new application of high-quality cardiopulmonary resuscitation training. Resuscitation. 2011;82(4):436–441. doi: 10.1016/j.resuscitation.2010.11.023.

18. Ministry of Science. Korean Fixed and Mobile Telecommunication Service Statistics. http://www.itstat.go.kr/stat.it?no=1149.

19. Korea Internet & Security Agency (KISA). Investigation of domestic internet environment, 2015. http://www.koreahtml5.kr/

20. Statistics Korea. 100 indexes of Korean Statistical Information Service (KOSIS). http://kosis.kr/nsportalStats/nsportalStats_0101Body.jsp?menuId=all.

21. Ethnologue. Ethnologue: Languages of the World, Statistics. http://www.ethnologue.com/statistics/country.

22. Liberati A., Altman D. G., Tetzlaff J., et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Annals of Internal Medicine. 2009;151(4):W65–W94. doi: 10.7326/0003-4819-151-4-200908180-00136.

23. Brooke J. SUS: a quick and dirty usability scale. In: Usability Evaluation in Industry. London, UK: Taylor & Francis; 1996. pp. 189–194.

24. McLellan S., Muddimer A., Peres S. C. The effect of experience on System Usability Scale ratings. Journal of Usability Studies. 2012;7(2):56–67.

25. Bangor A., Kortum P., Miller J. Determining what individual SUS scores mean: adding an adjective rating scale. Journal of Usability Studies. 2009;4(3):114–123.

26. Bangor A., Kortum P. T., Miller J. T. An empirical evaluation of the system usability scale. International Journal of Human-Computer Interaction. 2008;24(6):574–594. doi: 10.1080/10447310802205776.

27. Baskett P. J. F., Nolan J. P., Handley A., Soar J., Biarent D., Richmond S. European resuscitation council guidelines for resuscitation 2005: section 9 principles of training in resuscitation. Resuscitation. 2005;67(supplement 1):S181–S189. doi: 10.1016/j.resuscitation.2005.10.006.

28. Chamberlain D. A., Hazinski M. F. Education in resuscitation. Resuscitation. 2003;59(1):11–43. doi: 10.1016/j.resuscitation.2003.08.011.

29. Ahn J. Y., Cho G. C., Shon Y. D., Park S. M., Kang K. H. Effect of a reminder video using a mobile phone on the retention of CPR and AED skills in lay responders. Resuscitation. 2011;82(12):1543–1547. doi: 10.1016/j.resuscitation.2011.08.029.

30. Choa M., Park I., Chung H. S., Yoo S. K., Shim H., Kim S. The effectiveness of cardiopulmonary resuscitation instruction: animation versus dispatcher through a cellular phone. Resuscitation. 2008;77(1):87–94. doi: 10.1016/j.resuscitation.2007.10.023.

31. Yonekawa C., Suzukawa M., Yamashita K., et al. Development of a first-responder dispatch system using a smartphone. Journal of Telemedicine and Telecare. 2014;20(2):75–81. doi: 10.1177/1357633X14524152.

32. Lee S., Oh J., Kang H., et al. Proper target depth of an accelerometer-based feedback device during CPR performed on a hospital bed: a randomized simulation study. The American Journal of Emergency Medicine. 2015;33(10):1425–1429. doi: 10.1016/j.ajem.2015.07.010.

33. Song Y., Oh J., Chee Y., Cho Y., Lee S., Lim T. H. Effectiveness of chest compression feedback during cardiopulmonary resuscitation in lateral tilted and semirecumbent positions: a randomised controlled simulation study. Anaesthesia. 2015;70(11):1235–1241. doi: 10.1111/anae.13222.

34. Song Y., Chee Y., Oh J., Ahn C., Lim T. H. Smartwatches as chest compression feedback devices: a feasibility study. Resuscitation. 2016;103:20–23. doi: 10.1016/j.resuscitation.2016.03.014.

35. Bellamy R. F., DeGuzman L. R., Pedersen D. C. Coronary blood flow during cardiopulmonary resuscitation in swine. Circulation. 1984;69(1):174–180. doi: 10.1161/01.CIR.69.1.174.

36. Kleinman M. E., Brennan E. E., Goldberger Z. D., et al. Part 5: adult basic life support and cardiopulmonary resuscitation quality: 2015 American Heart Association guidelines update for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. 2015;132(18):S414–S435. doi: 10.1161/cir.0000000000000259.

37. Monsieurs K. G., Nolan J. P., Bossaert L. L., et al. European Resuscitation Council guidelines for resuscitation 2015. Resuscitation. 2015;95:1–80. doi: 10.1016/j.resuscitation.2015.07.038.