Abstract

Remaining undetected is often key to survival, and camouflage is a widespread solution. However, a factor extrinsic to the animal itself, the complexity of the background, may also be important. This has been shown in laboratory experiments using artificially patterned prey and backgrounds, but the mechanism remains obscure (not least because ‘complexity’ is a multifaceted concept). In this study, we determined the best predictors of detection by wild birds and human participants searching for the same cryptic targets on trees in the field. We compared detection success to metrics of background complexity and ‘visual clutter’ adapted from the human visual salience literature. For both birds and humans, the factor that explained most of the variation in detectability was the textural complexity of the tree bark as measured by a metric of feature congestion (specifically, many nearby edges in the background). For birds, this swamped any effects of colour match to the local surroundings, although for humans, local luminance disparities between the target and tree became important. For both taxa, a more abstract measure of complexity, entropy, was a poorer predictor. Our results point to the common features of background complexity that affect visual search in birds and humans, and how to quantify them.

Keywords: camouflage, background complexity, visual search, clutter metrics

1. Introduction

Avoiding detection is frequently important, whether for prey avoiding predators, predators approaching prey, males seeking mating opportunities, or subordinate individuals avoiding harassment by dominants. Camouflage is one of the most widespread adaptations for concealment, although it is perhaps more usefully thought of as, not one process, but a collection of mechanisms that interfere with detection, recognition, and successful attack [1–4].

Various factors have been proposed to affect the detectability of an animal: the similarity in colour and pattern between prey and background (the degree of background matching) both in terms of local contrast with the immediate background and coarse-grained similarity to the features of the habitat as a whole [5–9], the coherence of shape and outline (as opposed to disruptive colouration) [10–12], distraction of attention from salient features ([13,14], but see [15,16]), salience of distinctive body parts such as eyes [17], symmetry or repetition of features [18–20], and also as a factor extrinsic to the prey, the complexity of the background [7]. The latter has been investigated in laboratory experiments using artificially patterned prey and backgrounds, with birds [14,21,22] and fish [23]. There is value in replicating experiments on the effects of background complexity under more natural conditions; natural textures differ from the types of artificial textures used in these experiments in many ways, such as the contrast range and how deterministic or periodic the pattern is [24]. Importantly, although these studies have demonstrated effects of background ‘complexity’ (‘high variability or complexity in shapes of the elements constituting the background’ [21]), it is not clear which perceptual aspects of complexity interfere with visual search, nor how one could translate this, intuitively reasonable, verbal description into a numerical measure of complexity. With camouflaged targets and complex natural textures, this is a challenge because the targets (and any objects or features in the background that they are similar to) have not been segmented (visually separated) from the background and the features that might be used to discriminate between target and background are not pre-specified by the experimenter.

In this study, we tested the effect of background complexity on the detection success of wild birds preying on artificial prey on various trees in natural woodland. All prey were identical, allowing us to attribute differences in detection success to features of the background and the relationship between the target and the background. We used prey of a single colour, the mean of the background, because introducing patterning on the prey would greatly increase the number of possible dimensions of difference from the different tree backgrounds. The same experiment was replicated, using the exact same targets in the exact same locations, using human subjects. This allowed us to identify similarities and differences between humans and birds searching for the same targets against the same backgrounds under the same lighting conditions. To our knowledge, this is the first time this has been done. Photographs were taken of all targets with a calibrated digital camera, so that human and avian detection could be related to visual features of the prey and their immediate background in situ. These were measures of background complexity, plus species-specific estimates of local colour contrast.

We used two measures of background complexity: entropy, an information theoretic metric popular in signal processing, and feature congestion [25]. The latter, based on the variation in features encoded in the early stages of vision (luminance, colour, orientation of edges), has proved successful at predicting interference with human visual search [26], with applications in contexts such as the detection of warning signals in complex visual displays. Other approaches, for example, those based on spatial sampling [27], are conceptually similar to feature congestion, but we take the approach based on low-level vision. We adapted the model of Rosenholtz et al. [25,26] for avian colour vision.
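To give a concrete sense of what an entropy-based complexity measure captures, the following is a minimal sketch that computes the Shannon entropy of the grey-level distribution of an image patch. It is a deliberate simplification and not the sub-band entropy used in this study, whose computation is described in the electronic supplementary material. The function name `shannon_entropy` and the two toy patches are assumptions made purely for illustration.

```python
import math
from collections import Counter

def shannon_entropy(pixels):
    """Shannon entropy (in bits) of the grey-level distribution of a flat pixel list."""
    counts = Counter(pixels)
    n = len(pixels)
    # Sum of -p * log2(p) over every grey level that occurs in the patch.
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())

# Hypothetical 8-pixel patches, not data from the study.
uniform_patch = [120] * 8                             # a single grey level
mixed_patch = [10, 10, 80, 80, 150, 150, 220, 220]    # four equally common levels

print(shannon_entropy(uniform_patch))  # 0.0
print(shannon_entropy(mixed_patch))    # 2.0
```

A perfectly uniform patch carries no information (0 bits), whereas four equally frequent grey levels give 2 bits. Note that this histogram-based measure ignores spatial arrangement entirely, which is one reason why feature-based clutter metrics may behave differently.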

2. Material and methods

The experimental design was similar to Cuthill et al. [10]; both human and bird field predation experiments were conducted using the same general techniques described for this and similar experiments, but with a different target colour. Targets were triangular in shape (42 mm wide × 20 mm high) in order to resemble a non-specific Lepidopteran. Only one colour was used (see the electronic supplementary material), with 130 replicates: the average colour of tree bark taken from calibrated photos of 100 trees in exactly the same area of the woods as the experiment (Leigh Woods National Nature Reserve, North Somerset, UK, 2°38.6′ W, 51°27.8′ N). The targets were printed so as to match the average bark colour as viewed by a passerine bird, the blue tit (Cyanistes caeruleus), as determined by single and double cone photon catches (i.e. photon catch paper = photon catch bark). This and other passerine species have been seen predating the artificial prey in previous experiments. The colour was quantified using spectrophotometry and avian colour space modelling (following [28]).

This experiment was conducted from November 2011 to January 2012. For avian predation, a dead mealworm (Tenebrio molitor larva, frozen overnight at −80°C, then thawed) was attached underneath the artificial ‘moth wings’, then pinned on a tree with approximately 5 mm protruding. Trees were selected according to constraints important for the human experiment, as follows. All targets had to be in open view of the path, potentially visible from at least 20 m distance but passed no further than 2 m distant, and between 1.5 and 1.8 m height on the tree trunk (about the average eye level of an adult). Not every tree was used, so participants could not guess whether a target was present. Trees were not initially selected for the experiment with respect to species, but five were retrospectively identified as having been used: ash (Fraxinus excelsior) N = 9, beech (Fagus sylvatica) N = 32, cherry (Prunus avium) N = 3, oak (Quercus robur) N = 84, and yew (Taxus baccata) N = 2. With the highly unbalanced and, for some species, low sample sizes, effects of tree species per se cannot be determined with great reliability but the analysis did consider possible species effects because they may be confounded with other predictors (e.g. oak bark is markedly more patterned than that of beech). Targets were checked at 24, 48, and 72 h; disappearance of the mealworm was scored as ‘predation’, predation by invertebrates (spiders, ants, and slugs) and survival to 72 h were scored as ‘censored’ and results were analysed using Cox proportional hazards regression [29]. This is a semi-parametric form of survival analysis that allows analysis of the effects of risk factors on survival; we used the survival package [30] in R v. 2.14.0 (R Development Core Team 2011).

In the human detection experiment, run immediately after the bird experiment, the same targets on exactly the same trees were used. The total transect, about 1 km long, took participants from 40 to 60 min to walk. Twenty human participants, none colour-blind and all with normal or corrected-to-normal vision, were shown an example target and instructed to walk slowly along the path, with an experimenter following behind, and to stop when they had detected a target. Participants used a laser range finder (Leica Disto D5; Leica Geosystems GmbH, Munich, Germany) to measure detection distance if the target was detected. The experimenter walked just behind the participant, so as not to influence them, and recorded the distances and any targets missed. The use of the laser rangefinder also dissuaded participants from guessing, as the laser dot confirmed correct target location (there were no false positives in any trials). Human detection was estimated by two response variables: detection (binary) and, if it was detected, the detection distance to the nearest centimetre.

Calibrated photos of the targets were taken in the field at a distance of approximately 1 m at 1 : 1 size reproduction using a Nikon D70 digital camera (Nikon Corporation, Tokyo, Japan). These were used to derive measures of target-background contrast at the immediate target boundary and with a broader area of bark around the target (both based on the difference between the mean for the targets’ pixels and the mean of the pixels of the respective bark area); measures of visual ‘clutter’, both entropy and feature congestion, were also calculated. The computation of these metrics is described in the electronic supplementary material.
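The contrast measures described above can be illustrated with a minimal sketch that takes the difference between the mean of the target's pixels and the mean of the pixels in a bark region, at two spatial scales. The use of an absolute difference, the function name `mean_contrast`, and all pixel values are assumptions for illustration; the actual computation, including the species-specific colour modelling, is given in the electronic supplementary material.

```python
from statistics import mean

def mean_contrast(target_pixels, bark_pixels):
    """Absolute difference between the mean target value and the mean bark value."""
    return abs(mean(target_pixels) - mean(bark_pixels))

# Hypothetical luminance values (arbitrary units), not data from the study.
target = [100, 102, 98, 100]
border_bark = [110, 115, 105, 110]          # bark at the immediate target boundary
wider_bark = [90, 130, 70, 120, 95, 125]    # broader area of bark around the target

border_contrast = mean_contrast(target, border_bark)
wider_contrast = mean_contrast(target, wider_bark)
print(border_contrast, wider_contrast)
```

In this toy case, the target differs more from the bark at its border than from the broader bark area, even though the broader area is far more variable. This shows why a mean-based contrast and a clutter metric can carry different information.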

Relationships between the bird predation data (survival time, but measured at only three time points so not a continuous variable) and human detection data (binary detect/miss and continuous detection distance) were analysed using non-parametric correlation (Kendall's tau using the R function cor.test and the large sample size normal approximation of the test statistic). Results for the human experiment, in terms of the proportion of subjects detecting a given target, and the mean detection distance were analysed using generalized linear mixed models (GLMMs) with the R package lme4 [31]. Binomial error was used for the detect/miss data and normal error for log-transformed distance. For each predictor, two models were fitted using maximum-likelihood (both with participant as random intercepts), the first with the predictor in question, the second without the predictor, then these two models were compared using the change in deviance, for the binomial models tested against a χ2 distribution or F test for the normal models [32]. Model assumptions (e.g. normality and homogeneity of variance), convergence and fit (residual deviance < degrees of freedom) were all validated, following [31,32]. Because of the relatively large number of candidate predictors to be investigated, with concomitant risk of elevated type I errors and over-fitting, model selection (‘training’) and model evaluation (‘testing’) were carried out on different data [33]. This is a better approach than simply controlling type I error, because the outcome of any exploratory data analysis is best considered a ‘hypothesis’ to be tested with independent data. The 130 targets/cases were therefore randomly split into two sets of 65 using R's sample function. The first, training, set was used to build a model starting with all candidate predictors and then eliminating non-significant (p > 0.05) predictors in a stepwise fashion [32]. The candidate model was then tested using the other 65 cases.
We present the results of the latter tests in the results section; the statistics associated with the training phase are provided in the electronic supplementary material. Effect sizes are presented as odds ratios for the Cox regressions (bird experiment) or standardized regression coefficients (β, the change in the response variable for a change of 1 s.d. in the predictor) for the GLMMs, both with 95% confidence intervals (abbreviated CI).
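The split of cases into training and testing sets described above can be sketched as follows. The analysis itself was run in R with the sample function; this Python version, the function name `split_cases`, the target numbering, and the seed are assumptions used only to illustrate the logic of a random, non-overlapping 65/65 split.

```python
import random

def split_cases(case_ids, seed=None):
    """Randomly split cases into two equal, non-overlapping halves."""
    rng = random.Random(seed)
    # Sampling all items without replacement gives a random permutation.
    shuffled = rng.sample(case_ids, len(case_ids))
    half = len(shuffled) // 2
    return sorted(shuffled[:half]), sorted(shuffled[half:])

targets = list(range(1, 131))             # 130 targets, numbered 1..130 for illustration
train, test = split_cases(targets, seed=1)

print(len(train), len(test))  # 65 65
```

Model selection would then use only the cases in `train`, and the resulting candidate model would be evaluated only on the cases in `test`, so that the evaluation is not contaminated by the exploratory selection step.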

3. Results

(a). Correlations between bird and human detection performance

In this analysis, if birds and humans found the same targets easy/hard to detect, we expected survival time under bird predation to be negatively correlated with the two human measures of detectability (the proportion of participants detecting a target, and detection distance): cryptic targets that survived longer under bird predation would be detected less often by humans and, if detected, at a shorter distance (i.e. closer to the tree on which they were placed). A modest negative association was indeed apparent for both probability of detection (τ = −0.18, z = −2.53, d.f. = 129, p = 0.0115) and distance (τ = −0.22, z = −3.27, d.f. = 129, p = 0.0011).
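For readers unfamiliar with rank correlation on heavily tied data, here is a minimal sketch of Kendall's tau in its tie-corrected form (tau-b), together with the conversion of a normal test statistic into a two-sided p-value. The choice of tau-b is an assumption motivated by the many ties in survival times recorded at only three checks. The tie-corrected variance needed to obtain z from tau is omitted, and the data are invented.

```python
import math

def kendall_tau_b(x, y):
    """Kendall's tau-b, which corrects for tied values in either variable."""
    concordant = discordant = ties_x = ties_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue                  # tied on both variables: ignored
            elif dx == 0:
                ties_x += 1               # tied on x only
            elif dy == 0:
                ties_y += 1               # tied on y only
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    denom = math.sqrt((concordant + discordant + ties_x)
                      * (concordant + discordant + ties_y))
    return (concordant - discordant) / denom

def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical data: survival time (h) and proportion of humans detecting each target.
survival = [24, 24, 48, 72, 72, 72]
detected = [0.9, 0.8, 0.6, 0.5, 0.3, 0.4]

tau = kendall_tau_b(survival, detected)
print(tau)                 # negative: longer survival goes with lower detection
print(two_sided_p(-1.96))  # approximately 0.05
```

In this toy example every untied pair is discordant, so tau is strongly negative, which is the direction of association expected if birds and humans find the same targets hard to detect.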

(b). Analysing predictors of bird predation

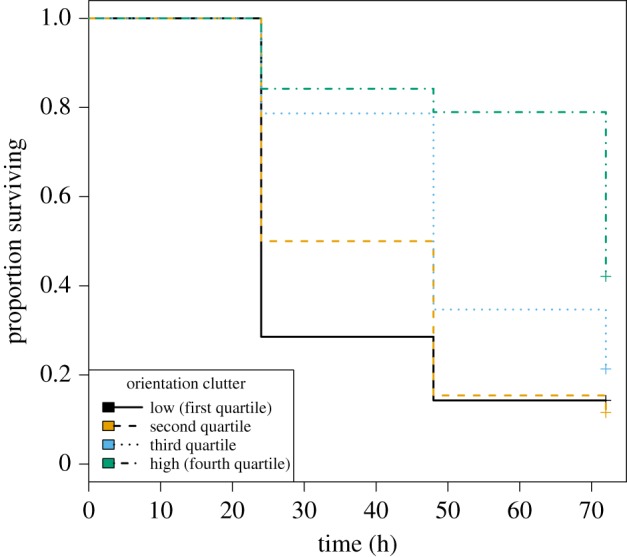

Candidate predictors of detectability by birds were the luminance and chromatic contrast between the target (a constant, as all were the same colour) and the surrounding bark at both its boundary and the broader tree background (so two contrast measures each at two spatial scales), measures of bark complexity based on three aspects of feature congestion (luminance, chroma, and edge orientation) and sub-band entropy, plus the number of correct triangle edges detected by the Hough transform. Orientation clutter and sub-band entropy were the only significant predictors left in the model training phase, but only orientation clutter was significant when tested on the independent dataset (odds ratio = 0.62 (95% CI 0.48, 0.81), χ2 = 10.4, d.f. = 1, p = 0.0013); mortality was lower on trees with more orientation clutter (figure 1).
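The p-values attached to χ2 statistics with one degree of freedom can be checked by hand. The sketch below assumes that the reported statistic is a likelihood-ratio (change in deviance) statistic referred to a χ2 distribution with 1 d.f.; the function name `chi2_p_1df` is hypothetical. It relies on the fact that a χ2 variable with 1 d.f. is the square of a standard normal variable.

```python
import math

def chi2_p_1df(statistic):
    """Upper-tail p-value of a chi-squared statistic with 1 degree of freedom."""
    # For 1 d.f., P(X > s) = P(|Z| > sqrt(s)) = erfc(sqrt(s / 2)),
    # where Z is a standard normal variable.
    return math.erfc(math.sqrt(statistic / 2.0))

p = chi2_p_1df(10.4)   # statistic reported above for orientation clutter
print(f"{p:.4f}")      # 0.0013
```

This only reproduces the final step from test statistic to p-value; fitting the Cox model that yields the statistic requires dedicated survival analysis software.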

Figure 1.

Positive effect of bark orientation clutter on survival under bird predation. To plot the graph, orientation clutter was divided into four categories, from low to high, with approximately equal sample sizes in each (using the cut2 function in the Hmisc package in R, [34]). (Online version in colour.)

As mentioned before, if birds foraged more on some species than others, then the apparent effect of feature congestion could be an artefact of differences between tree species unrelated to bark texture. Certainly, the species differed significantly in bark complexity, with oak the highest on all metrics (electronic supplementary material). However, in a series of models with both tree species and orientation congestion, and using all data (training and test) to maximize power, only orientation clutter was significant (electronic supplementary material).

For comparison with Stevens & Cuthill [28], edge detection analysis was carried out using a Laplacian-of-Gaussian edge detector followed by the Hough transform as a straight line (and thus triangle outline) detector. Mortality was higher the greater the number of correct triangle sides detected by the Hough transform (odds ratio = 1.22 (95% CI 1.00–1.48), χ2 = 3.88, d.f. = 1, p = 0.0488). However, this edge salience measure had no significant predictive power in a model that included orientation clutter (lines: χ2 = 2.10, d.f. = 1, p = 0.1477; orientation: χ2 = 10.99, d.f. = 1, p = 0.0009).
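The principle of the Hough transform as a straight-line detector can be sketched in a few lines. This is not the implementation used in the analysis: the Laplacian-of-Gaussian edge detection stage is omitted (edge pixels are supplied directly), and the function name `hough_lines`, the angular resolution, and the edge coordinates are all assumptions for illustration. Each edge pixel votes for every line that could pass through it, parametrized as rho = x cos(theta) + y sin(theta), and collinear pixels pile their votes into a single bin.

```python
import math
from collections import Counter

def hough_lines(edge_points, n_theta=36, rho_step=1.0):
    """Accumulate votes in (theta bin, rho bin) space for a set of edge pixels."""
    votes = Counter()
    for x, y in edge_points:
        for t in range(n_theta):
            theta = math.pi * t / n_theta          # angle of the line's normal
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(t, round(rho / rho_step))] += 1
    return votes

# Hypothetical edge map: ten collinear pixels on the line y = x (a "target edge"),
# plus three isolated edge pixels standing in for background texture.
n_theta = 36
edge_points = [(i, i) for i in range(10)] + [(0, 7), (8, 1), (3, 9)]

votes = hough_lines(edge_points, n_theta=n_theta)
(theta_bin, rho_bin), n_votes = votes.most_common(1)[0]
angle_deg = 180 * theta_bin / n_theta
print(angle_deg, rho_bin, n_votes)
```

The strongest peak corresponds to the ten collinear pixels. If the background contained a longer or higher-contrast straight edge than the target's outline, that edge would collect more votes and be detected preferentially.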

(c). Analysing predictors of human detection

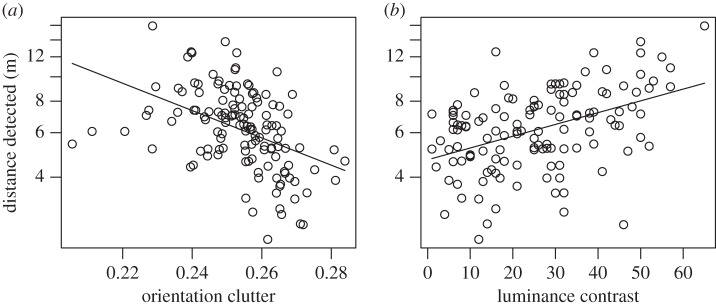

All predictors used in the analysis of bird predation were also used in training models for both detection distance and probability of detection. However, the calculations of these metrics were based on human, not bird, colour vision. For detection distance, orientation clutter and border luminance contrast were the only significant predictors left in the model training phase (electronic supplementary material). Both were also significant when tested on the independent data: targets were detected at a greater distance when the luminance contrast at their border with the bark was higher (figure 2; β = 0.10 (95% CI 0.02, 0.19), F1,62 = 6.02, p = 0.0170) and orientation clutter was lower (figure 2; β = −0.10 (95% CI −0.02, −0.19), F1,62 = 5.69, p = 0.0201). For detection probability (0.84 of the prey were detected), the final model in the training phase contained orientation clutter, chromatic contrast, and Hough lines (electronic supplementary material, table S4). However, when testing these predictors on the other 50% of the data, none were significant (orientation clutter: χ2 = 1.77, p = 0.1832, chromatic contrast: χ2 = 3.42, p = 0.0646, Hough lines: χ2 = 0.85, p = 0.3565). However, it is worth noting that the non-significant patterns were consistent with those observed for detection distance (electronic supplementary material).

Figure 2.

The distance at which targets were detected by humans was greater when orientation clutter was lower and luminance contrast between target and tree was higher. Distance is plotted on a log scale and so the lines represent linear regressions of log(distance) on the predictors.

Edge detection analysis was also carried out. The result shows that detection distance and detection probability were both higher the greater the number of correct triangle sides detected by the Hough transform (distance: β = 0.08 (95% CI 0.05, 0.11), F1,128 = 6.55, p = 0.0117; detection probability: β = 0.08 (95% CI 0.05, 0.11), χ2 = 18.183, d.f. = 1, p < 0.0001). However, for detection distance, this edge salience measure ceased to be significant when the significant predictors from the main analysis, orientation clutter and border luminance contrast, were included in the same model (single term deletions: Hough lines: F1,126 = 0.16, p = 0.6920; orientation clutter: F1,126 = 20.30, p < 0.0001; border luminance contrast: F1,127 = 19.70, p < 0.0001). For detection probability, there were no significant predictors in the main analysis and so no formal justification for including any of these possible alternative predictors for the relationship between detection probability and Hough lines. However, for interest's sake, if one fits the same model as for detection distance, the result is similar: Hough lines cease to be a significant predictor when orientation clutter and border luminance contrast are included in the same model (single term deletions: Hough lines: χ2 = 1.68, d.f. = 1, p = 0.1944; orientation clutter: χ2 = 6.97, d.f. = 1, p = 0.0083; border luminance contrast: χ2 = 21.70, d.f. = 1, p < 0.0001).

4. Discussion

The results show that it is more difficult to find objects against a complex background, and that detectability is both correlated between humans and birds and explained by similar (although not identical) factors. Furthermore, we have demonstrated that we can quantify complexity in a meaningful way using metrics from computational vision. While previous studies on non-humans (e.g. [20–22]) show that search time increases when searching against a complex background, or that animals (killifish Heterandria formosa) [23] have a preference for hiding in visually heterogeneous habitats, these studies lack any quantification of ‘complexity’, any analysis of which features of the presumed distractors make search difficult, or any test of whether the visual system codes different feature dimensions independently. In this experiment, we have shown that the application of metrics from machine vision, particularly feature congestion, can provide insights into these questions. It is perhaps notable that the more abstract, information-theory based, measure of ‘complexity’, sub-band entropy, is not as good a predictor of search performance, in either birds or humans, as the metrics based on features in low-level vision (as also shown previously in humans in [26]). This would suggest that it is important for biologists to define what they mean by ‘complexity’ of a background. Indeed, we prefer not to use that term for the effects observed in our study, preferring Rosenholtz's term of feature congestion (see also Endler's similar metrics [27]). It is not the general complexity of the visual scene that affects visual search; it is higher density or variance in some or all of the features of the object that is being sought. This parallels the conclusions Duncan & Humphreys [35] drew when reviewing the literature on human search for targets among discrete distractors, as opposed to the sort of continuous textures that bark, in our study, represents. It is also consistent with target-distractor search results with birds, showing that density and distractor shape complexity affect search [21,22].

Our results also show that contrasts in luminance between target and background increase their detectability by humans, i.e. as the similarity between target and the immediately adjacent bark decreases, the targets can be detected from further away. We had anticipated that this would be the main effect for both humans and birds in the experiment because the similarity in colour (luminance and chroma) of the targets to the background adds more noise in object segmentation at an early stage, hampers the detection of boundaries [28], and makes feature grouping even more difficult [36,37]. Indeed, considering the textures of targets and bark, as opposed to colour, the results we obtained are opposite to the predictions of background pattern matching: targets survived longer (birds) or were detected less easily (humans) on bark with greater levels of feature congestion, particularly oriented lines (edges). The targets, being a homogeneous colour, have a ‘feature congestion’ of close to zero and so are most different from the backgrounds on which they are least detectable. Complexity of the background is more important than precise matching of the background in the situation studied: relatively simple targets and, at least in terms of pattern if not colour, complex backgrounds. We would expect this to reverse when targets are a very different colour from the background (e.g. bright white or yellow targets would stand out regardless of background complexity) or when backgrounds are simple and visually uncluttered.

Why was target-bark border contrast only significant for human detection distance and not for bird predation? While it is the case that the targets were designed to match the average bark as perceived by a bird, not a human, this is unlikely to be a major factor as luminance contrasts for bird and human measures were highly correlated (Pearson's r128 = 0.9937). One possible factor is lower sensitivity to luminance contrast differences in birds [38]. However, the main factor is probably that our measure of bird detection (predation checked at 24, 48, and 72 h) is less precise than the human measure, detection distance to the nearest centimetre. Another factor is that human participants had one task (spotting triangles), whereas foraging birds were searching for multiple prey and had other behaviours competing for attention.

In both our bird and human experiments, the detectability of a target's edge, as measured by the Hough transform's performance, did predict target detection, but not independently of the contrast and congestion metrics. This is because the Hough transform is designed to detect linear edges of high contrast in luminance (or chroma), so edges in the background ‘compete’ with those on the triangular target's edges for the Hough transform's signal (if a straight line in the background is stronger than on the triangle's boundary, then the Hough transform will detect it preferentially). Therefore, the Hough transform's predictive success is completely specified by both target-bark boundary contrast and the number of strong edges in the background.

In summary, humans and birds were shown to be affected by similar visual properties of the background when searching for cryptic targets. However, contrast between target and background seemed less important to birds on these backgrounds. That said, ‘survival’ is a crude measure compared to detection distance (as used for humans). Clutter metrics are related to saliency models of human vision, saliency being the attributes of an object that attract attention. Therefore, this approach also offers an opportunity to understand the predictors of human and avian attention in the same framework, so that we can have further understanding of what components of visual scenes are important in driving visual search in different species.

Acknowledgements

We are grateful to Sami Merilaita, an anonymous referee, and everyone in CamoLab for critical comments and valuable advice. We also thank all the participants involved; they were not financially remunerated.

Ethics

The human research had ethical approval from the University of Bristol, Faculty of Science Research Ethics Committee and the bird experiments from the Animal Welfare and Ethical Review Body.

Data accessibility

All data are available from Dryad doi:10.5061/dryad.gm0cb [39].

Authors' contributions

F.X. and I.C.C. had equal roles in experimental design, execution, analysis, and manuscript writing.

Competing interests

The authors declare no competing interests.

Funding

The research was funded by the University of Bristol. I.C.C. also thanks the Wissenschaftskolleg zu Berlin for support during part of the study.

References

- 1. Endler JA. 1981. An overview of the relationships between mimicry and crypsis. Biol. J. Linn. Soc. 16, 25–31. (doi:10.1111/j.1095-8312.1981.tb01840.x)

- 2. Stevens M. 2007. Predator perception and the interrelation between different forms of protective coloration. Proc. R. Soc. B 274, 1457–1464. (doi:10.1098/rspb.2007.0220)

- 3. Stevens M, Merilaita S. 2011. Animal camouflage: mechanisms and function. Cambridge, UK: Cambridge University Press.

- 4. Osorio D, Cuthill IC. 2015. Camouflage and perceptual organization in the animal kingdom. In The Oxford handbook of perceptual organization (ed. Wagemans J.). Oxford, UK: Oxford University Press.

- 5. Bond AB, Kamil AC. 2006. Spatial heterogeneity, predator cognition, and the evolution of color polymorphism in virtual prey. Proc. Natl Acad. Sci. USA 103, 3214–3219. (doi:10.1073/pnas.0509963103)

- 6. Merilaita S. 2001. Habitat heterogeneity, predation and gene flow: colour polymorphism in the isopod, Idotea baltica. Evol. Biol. 15, 103–116. (doi:10.1023/a:1013814623311)

- 7. Merilaita S. 2003. Visual background complexity facilitates the evolution of camouflage. Evolution 57, 1248–1254. (doi:10.1111/j.0014-3820.2003.tb00333.x)

- 8. Merilaita S, Stevens M. 2011. Crypsis through background matching. In Animal camouflage (eds Stevens M, Merilaita S), pp. 17–33. Cambridge, UK: Cambridge University Press.

- 9. Merilaita S, Tuomi J, Jormalainen V. 1999. Optimization of cryptic coloration in heterogeneous habitats. Biol. J. Linn. Soc. 67, 151–161. (doi:10.1111/j.1095-8312.1999.tb01858.x)

- 10. Cuthill IC, Stevens M, Sheppard J, Maddocks T, Párraga CA, Troscianko TS. 2005. Disruptive coloration and background pattern matching. Nature 434, 72–74. (doi:10.1038/nature03312)

- 11. Merilaita S. 1998. Crypsis through disruptive coloration in an isopod. Proc. R. Soc. Lond. B 265, 1059–1064. (doi:10.1098/rspb.1998.0399)

- 12. Webster RJ, Hassall C, Herdman CM, Godin J-G, Sherratt TN. 2013. Disruptive camouflage impairs object recognition. Biol. Lett. 9, 20130501. (doi:10.1098/rsbl.2013.0501)

- 13. Cott HB. 1940. Adaptive coloration in animals. London, UK: Methuen & Co. Ltd.

- 14. Dimitrova M, Stobbe N, Schaefer HM, Merilaita S. 2009. Concealed by conspicuousness: distractive prey markings and backgrounds. Proc. R. Soc. B 276, 1905–1910. (doi:10.1098/rspb.2009.0052)

- 15. Stevens M, Graham J, Winney IS, Cantor A. 2008. Testing Thayer's hypothesis: can camouflage work by distraction? Biol. Lett. 4, 648–650. (doi:10.1098/rsbl.2008.0486)

- 16. Stevens M, Marshall KLA, Troscianko J, Finlay S, Burnand D, Chadwick SL. 2013. Revealed by conspicuousness: distractive markings reduce camouflage. Behav. Ecol. 24, 213–222. (doi:10.1093/beheco/ars156)

- 17. Cuthill IC, Szekely A. 2009. Coincident disruptive coloration. Phil. Trans. R. Soc. B 364, 489–496. (doi:10.1098/rstb.2008.0266)

- 18. Cuthill IC, Hiby E, Lloyd E. 2006. The predation costs of symmetrical cryptic coloration. Proc. R. Soc. B 273, 1267–1271. (doi:10.1098/rspb.2005.3438)

- 19. Cuthill IC, Stevens M, Windsor AMM, Walker HJ. 2006. The effects of pattern symmetry on detection of disruptive and background-matching coloration. Behav. Ecol. 17, 828–832. (doi:10.1093/beheco/arl015)

- 20. Dimitrova M, Merilaita S. 2012. Prey pattern regularity and background complexity affect detectability of background-matching prey. Behav. Ecol. 23, 384–390. (doi:10.1093/beheco/arr201)

- 21. Dimitrova M, Merilaita S. 2010. Prey concealment: visual background complexity and prey contrast distribution. Behav. Ecol. 21, 176–181. (doi:10.1093/beheco/arp174)

- 22. Dimitrova M, Merilaita S. 2014. Hide and seek: properties of prey and background patterns affect prey detection by blue tits. Behav. Ecol. 25, 402–408. (doi:10.1093/beheco/art130)

- 23. Kjernsmo K, Merilaita S. 2012. Background choice as an anti-predator strategy: the roles of background matching and visual complexity in the habitat choice of the least killifish. Proc. R. Soc. B 279, 4192–4198. (doi:10.1098/rspb.2012.1547)

- 24. Aviram G, Rotman SR. 2000. Evaluating human detection performance of targets and false alarms, using a statistical texture image metric. Opt. Eng. 39, 2285–2295. (doi:10.1117/1.1304925)

- 25. Rosenholtz R, Li Y, Mansfield J, Jin Z. 2005. Feature congestion: a measure of display clutter. In Proc. of the SIGCHI Conference on Human Factors in Computing Systems, ACM, pp. 761–770.

- 26. Rosenholtz R, Li Y-Z, Nakano L. 2007. Measuring visual clutter. J. Vis. 7, 1–22. (doi:10.1167/7.2.17)

- 27. Endler JA. 2012. A framework for analysing colour pattern geometry: adjacent colours. Biol. J. Linn. Soc. 107, 233–253. (doi:10.1111/j.1095-8312.2012.01937.x)

- 28. Stevens M, Cuthill IC. 2006. Disruptive coloration, crypsis and edge detection in early visual processing. Proc. R. Soc. B 273, 2141–2147. (doi:10.1098/rspb.2006.3556)

- 29. Cox DR. 1972. Regression models and life-tables. J. R. Stat. Soc. B 34, 187–220.

- 30. Therneau T. 2014. A Package for Survival Analysis in S. R package version 2.37-7. See http://CRAN.R-project.org/package=survival.

- 31. Bates D, Maechler M, Bolker B, Walker S. 2014. lme4: linear mixed-effects models using ‘Eigen’ and S4. R package version 1.1-7. See http://CRAN.R-project.org/package=lme4.

- 32. Crawley MJ. 2007. The R book. Chichester, UK: John Wiley & Sons.

- 33. Lantz B. 2013. Machine learning with R. Birmingham, UK: Packt Publishing Ltd.

- 34. Harrell FEJ. 2015. Hmisc: Harrell Miscellaneous. R package version 3.16-0. See http://CRAN.R-project.org/package=Hmisc.

- 35. Duncan J, Humphreys GW. 1989. Visual search and stimulus similarity. Psych. Rev. 96, 433–458. (doi:10.1037/0033-295X.96.3.433)

- 36. Farmer EW, Taylor RM. 1980. Visual search through color displays: effects of target-background similarity and background uniformity. Percept. Psychophys. 27, 267–272. (doi:10.3758/BF03204265)

- 37. Espinosa I, Cuthill IC. 2014. Disruptive colouration and perceptual grouping. PLoS ONE 9, e87153. (doi:10.1371/journal.pone.0087153)

- 38. Ghim M, Hodos W. 2006. Spatial contrast sensitivity of birds. J. Comp. Physiol. A 192, 523–534. (doi:10.1007/s00359-005-0090-5)

- 39. Xiao F, Cuthill I. 2016. Data from: Background complexity and the detectability of camouflaged targets by birds and humans. Dryad Digital Repository. (doi:10.5061/dryad.gm0cb)
