Abstract

Premise of the study:

Low-elevation surveys with small aerial drones (micro–unmanned aerial vehicles [UAVs]) may be used for a wide variety of applications in plant ecology, including mapping vegetation over small- to medium-sized regions. We provide an overview of methods and procedures for conducting surveys and illustrate some of these applications.

Methods:

Aerial images were obtained by flying a small drone along transects over the area of interest. Images were used to create a composite image (orthomosaic) and a digital surface model (DSM). Vegetation classification was conducted both manually and with an automated routine. Coverage of an individual species was estimated from aerial images.

Results:

We created a vegetation map for the entire region from the orthomosaic and DSM, and mapped the density of one species. Comparison of our manual and automated habitat classification confirmed that our mapping methods were accurate. A species with high contrast to the background matrix allowed an adequate estimate of its coverage.

Discussion:

The example surveys demonstrate that small aerial drones are capable of gathering large amounts of information on the distribution of vegetation and individual species with minimal impact to sensitive habitats. Low-elevation aerial surveys have potential for a wide range of applications in plant ecology.

Keywords: aerial drone (micro-UAV, UAS); aerial survey; digital elevation model (DEM); digital surface model (DSM); orthomosaic; vegetation mapping

Quantifying the distribution and abundance of plants is of fundamental importance to plant ecology. Our ability to estimate plant distributions over large areas (i.e., several hectares) using traditional approaches (transect or quadrat methods) is generally limited because of the time and expense required. Intensive plant surveys may also result in unacceptable levels of disturbance to sensitive ecosystems due to soil compaction, disruption of soil organic layers, trampling, and vegetation damage. While remote sensing via satellites provides information on landforms and the general distribution of vegetation types over large areas, it is unlikely to provide adequate spatial or temporal resolution for determining the distributions of individual species or fine-scale differentiation among landscape features and vegetation types. Moreover, available satellite images may not represent optimal phenological stages for the identification of different species and vegetation types. Manned aircraft and large drone surveys can provide higher resolution than satellite imagery, but they are prohibitively expensive for most investigations and generally still do not provide a high enough resolution to assess the distributions and compositions of plant communities. Using micro–unmanned aerial vehicles (UAVs, unmanned aerial systems [UAS], small aerial drones) may provide adequate levels of image detail to estimate the distribution of individual plant species or vegetation types over several hectares at a relatively low cost (Anderson and Gaston, 2013). Our goal in this article is to describe the advantages and limitations of small aerial drone surveys for estimating the distributions of individual plant species and vegetation types at fine spatial scales. We describe a number of factors researchers should consider for planning aerial drone surveys.
An example is provided to illustrate the application of micro-UAV surveys for the estimation of the distribution and abundances of vegetation types and plant species across a 16-ha area of vernal pool habitat in southern Oregon, USA.

METHODS

General considerations for data collection with UAVs

The prospect of using drones for vegetation sampling is exciting because of the large amount of information that can be collected with minimal effort. On the other hand, there are many limitations to this approach that should be considered before investing in the equipment necessary to conduct micro-UAV surveys. Researchers should begin by carefully considering whether their goals are a good match for data acquired from aerial drone images. Suitable applications include surveys of the distribution and abundance of individual species or vegetation types, and aerial sampling of sensitive habitats or terrain that is difficult to access. These methods are particularly suitable in cases where researchers wish to develop accurate vegetation maps over moderately large areas (i.e., up to 40 ha). Conducting aerial surveys using small drones greatly expands the size of the area that can be assessed with minimal disruption of sensitive plants and vegetation. By obtaining a high density of images, researchers can construct composite images (orthomosaics) and digital elevation models (DEMs). A DEM that represents the elevation of the vegetation and other surface features across a geographic area, rather than of the bare ground alone, is referred to as a digital surface model (DSM).

Before engaging in drone usage, researchers should be careful to obtain permission from land owners and managers. Regulations for drone use differ among nations and are constantly in flux in response to new technologies and applications. Researchers should check with local and federal agencies before engaging in research activities with UAVs. In the following sections, we discuss some general advantages and limitations of using small drones in ecological research.

Equipment considerations

The diversity of small drones available for recreational use has increased dramatically since 2013, and many of these are suitable for research. Of these, we specifically focus on hovering UAVs with four (quadcopter), six (hexacopter), or eight (octocopter) propellers because they are the easiest to fly and have a number of features that are advantageous over fixed-wing aircraft for conducting aerial surveys. These aircraft are extremely stable in flight. They are relatively safe and easy to pilot and can be maneuvered for aerial surveys with a minimal amount of training and experience. More expensive models have GPS tracking systems that allow them to maintain position at a specific location and altitude. The drone’s flight time on a single charge will set an upper limit to the area that can be sampled during a flight. Quadcopters tend to be the most efficient, and at the time of this writing some models can fly up to 30 min on a single battery.

At a minimum, you will need a drone that is capable of carrying a small camera that can be programmed to take photographs every few seconds. The camera also needs to be capable of taking photos directly below the drone’s position. The cameras on many inexpensive drones have limited angle rotation and are not suitable for vegetation surveys because of their inability to point downward at 90 degrees from horizontal. More sophisticated UAVs have cameras mounted on gimbal systems that stabilize the camera as the craft pitches during flight. These more expensive UAVs also provide video feeds from the onboard camera to the pilot along with information on altitude, flight speed, and distance from the point of origin (home) for the current flight; some also have automatic object avoidance systems to reduce the chance of collisions. Other features that can be useful include automatic homing when the battery charge falls too low or when the signal from the controller is lost. In some models, the home location can either be set as a fixed point or can move with the location of the controller. Some manufacturers implement additional safety features in the drone’s firmware such as “no-fly” zones within 8 km of airports and federal buildings. A list of necessary and desirable features can be found in Appendix 1.

The second major consideration for equipment is the camera. Many ready-to-fly drones have integrated high-resolution cameras that are very suitable for research. These systems have the advantage of constant video feeds to the pilot and provide manual control of the camera position and image capture during flight. Cameras need to be as light as possible because small drones have a limited payload capacity and additional weight will reduce flight time. A wide-angle lens provides coverage of a large area at a moderate elevation and provides side views of peripheral features that are useful for generating accurate DSMs. The images captured from wide-angle lenses can be corrected for distortion with standard software applications. Some cameras may be customized to remove internal filters to allow detection of a broader spectral range, which is particularly important for some types of vegetation classification methods that use ratios of reflectance in the visible (red) and near-infrared ranges (e.g., normalized difference vegetation index [NDVI]; Bannari et al., 1995; Xie et al., 2008). Cameras capable of capturing images in a number of different formats (e.g., compressed JPEG, uncompressed TIFF [Tagged Image File Format], and raw formats such as DNG [Digital NeGative]) as well as video capture are the most suitable for research applications. For construction of orthomosaic images, it is imperative that the camera is capable of storing metadata with each image, including GPS coordinates and flight elevation. Considerations of image resolution along with elevation and limitations on flight duration are discussed below.
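As a sketch of the band-ratio idea, NDVI is computed per pixel as (NIR − Red) / (NIR + Red). The example below assumes that co-registered near-infrared and red bands are already available as arrays (for instance, from a modified or multispectral camera); the function name and the 2 × 2 reflectance values are hypothetical illustrations, not data from this study.

```python
import numpy as np

def ndvi(nir, red, eps=1e-9):
    """Normalized difference vegetation index, (NIR - Red) / (NIR + Red).

    nir, red: co-registered reflectance (or digital number) arrays of equal shape.
    eps guards against division by zero where both bands are 0.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    return (nir - red) / (nir + red + eps)

# Hypothetical 2 x 2 patch: left column vegetated (high NIR), right column bare soil
nir = np.array([[0.50, 0.30], [0.45, 0.28]])
red = np.array([[0.08, 0.25], [0.10, 0.24]])
print(np.round(ndvi(nir, red), 2))
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so vegetated pixels take values closer to 1 than bare ground does.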

Geographic scale

The most important factor to consider is whether the geographic scale of your study is suitable for micro-UAV surveys. The majority of small aerial drones are currently limited to a flight time of 15 to 30 min for each battery. Depending on the flight elevation and density of photographs, several batteries may be required to perform surveys. Furthermore, depending on local and federal regulations, the flight distance from the pilot is limited because the vehicle usually must be maintained within line-of-sight at all times. Depending on the characteristics of the drone used, line-of-sight flight distances will generally be limited to less than 200 m from the pilot. This distance could be extended if the pilot could move in the direction of the flight path, but this will generally not be advisable on foot, as walking in rough terrain while trying to track the drone would be difficult and dangerous. Depending on the number, spacing, and elevation of photographs (see below), and under current regulations in the United States, the feasible limit to ecological surveys using micro-UAVs is probably less than 40 ha at the upper limit, and more reasonably would be within 5 to 20 ha. Surveys of larger areas are feasible with the use of mini-UAVs, which are commonly used in agricultural applications. These vehicles are considerably more expensive than micro-UAVs and in the United States require special waivers from federal agencies. In this article we focus on applications of small copter drones, but many of the principles we discuss can be extended for the use of larger vehicles.

Identification of organisms and objects from aerial images

There is a large amount of literature discussing the analysis of remote imaging data from satellites and manned aircraft (Nagendra, 2001; Kerr and Ostrovsky, 2003; Rango et al., 2009; Anderson and Gaston, 2013). The typical goal of these applications is vegetation and landform classification over relatively large geographic areas. The ability to discriminate among plant species and habitats may depend on the phenological stage of plants, which may require images captured at finer spatial and temporal scales (Richardson et al., 2009). Researchers can take advantage of the distinctive spectral values for leaves, flowers, and fruiting structures by conducting multiple surveys over the growing season. Incorporating spectra outside the visible range may require using specialized photographic equipment, but may also be achieved by simple modifications of standard cameras as described above. Testing spectral profiles of the vegetation and plant species of interest with hand-held cameras is advisable prior to investing in micro-UAV technologies. Species identification that requires morphological measurement or nonspectral characters such as leaf shape or growth form may be less suited to aerial surveys.

Strategies for image collection

One of the primary considerations for ecological surveys with small UAVs is the density and elevation of images over the region of interest. Reconstruction of orthomosaics and DSMs requires a high density of images that provide overlapping views of landscape features from multiple angles. The typical sampling strategy establishes a grid of aerial transects. The UAV is either flown at a constant speed along transects in automatic image capture mode, or is flown manually between imaging grid points. The former strategy can be facilitated by software that allows programming flights based on multiple GPS coordinates. Manual flights may be necessary if the time required to store large images is greater than a few seconds. This is particularly true of raw image formats, which may require five seconds or more to process. JPEG images use lossy compression to reduce file size and consequently retain less fine detail. Whether compressed formats such as JPEG can be used will depend on the flight elevation and the level of detail needed to identify objects from images. Some small UAVs may not allow the association of the metadata needed for orthomosaic construction (i.e., GPS coordinates and flight elevation) with all image formats, so it may be necessary to use raw images.

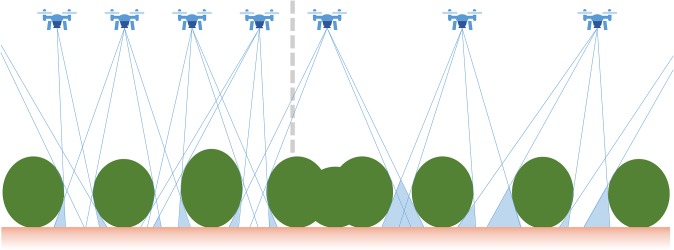

Minimum imaging densities will depend on the flight elevation along with the topographic relief and the complexity of vegetation height. Higher densities of images may be needed to ensure adequate image overlap in portions of the landscape that lie between large bushes or trees (Fig. 1). In this depiction, larger gaps in the orthomosaic are generated when the density of aerial images is lower. The problem becomes more acute when openings in the vegetation are narrower than the height of the surrounding shrubs.

Fig. 1.

Considerations for using small aerial drones for vegetation surveys. A schematic of the predicted effects of aerial imaging density on shadowing (gaps) due to trees and shrubs for the orthomosaic and digital surface model (DSM) generated from aerial surveys. Lower densities of aerial images result in larger areas of image shadows around closely spaced shrubs or trees.

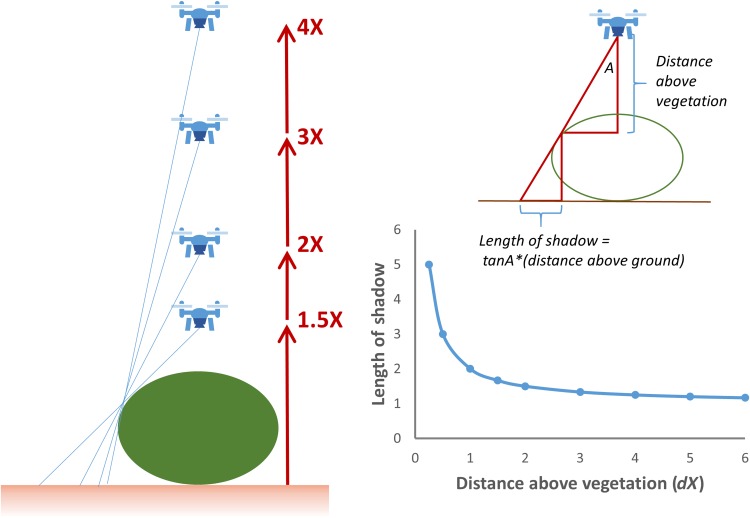

The potential for taller trees and shrubs to block the view of vegetation features within gaps can be minimized by flying at an elevation that is at least three times the average height of the canopy (Fig. 2). While larger areas are covered by images as the elevation increases, there is also a loss of resolution of smaller objects on the ground. The loss of acuity at higher elevations can be compensated for by capturing images with higher pixel densities; however, image size is limited by the characteristics of the charge-coupled device (CCD) image sensor within the camera, and larger images will require longer times for processing and storage. The optimal flight elevation for aerial surveys will necessarily be a compromise between maximum coverage, image acuity for the identification of vegetation and landform features, and the image overlap requirements of the software used to process and generate orthomosaics and DSMs.

Fig. 2.

Considerations for using small aerial drones for vegetation surveys. The effects of the distance above shrub or tree elevation (dX, where d is the multiplier of canopy height, X) on shadowing for aerial images. Diminishing returns in shadow reduction are obtained for elevations greater than 2X.
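The diminishing returns in Fig. 2 follow from similar triangles. Under a deliberately simplified geometry (flat ground, a point camera at elevation dX, and a crown of height X with a vertical edge at horizontal distance x from the camera's nadir), the strip of ground hidden behind the crown has length s = X·x / (dX − X) = x / (d − 1). The sketch below is our illustration of that idealized model, not a calculation reported for this study.

```python
def hidden_length(x, canopy_height, d):
    """Length (m) of ground hidden behind a vertical-sided crown, idealized geometry.

    x: horizontal distance (m) from the camera nadir to the near edge of the crown
    canopy_height: crown height X (m)
    d: flight elevation as a multiple of canopy height (elevation = d * X), d > 1
    By similar triangles, s = X * x / (d*X - X), which simplifies to x / (d - 1).
    """
    if d <= 1:
        raise ValueError("camera must fly above the canopy (d > 1)")
    elevation = d * canopy_height
    return canopy_height * x / (elevation - canopy_height)

# A 3-m shrub whose edge lies 10 m from the camera nadir
for d in (2, 3, 4, 6):
    print(d, round(hidden_length(x=10.0, canopy_height=3.0, d=d), 2))
# prints hidden lengths of 10.0, 5.0, 3.33, and 2.0 m
```

Raising the elevation from 2X to 3X halves the hidden strip, but each further increase buys progressively less, while ground resolution keeps declining.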

The combination of flight elevation, spacing between images along transects, and limitations of processing times for large images need to be considered to determine optimal sampling strategies. The distance between images on the sampling grid should be half the flight elevation or less, and depending on the complexity of the vegetation topography, it may be necessary to use higher densities of sampling points. Researchers should also consider the fact that coverage is necessarily lower at the edge of the sampling grid, so the area sampled should exceed the geographic region of interest. Some of these factors may not need to be considered if low-resolution images with rapid capture times can be used. The image capture and data storage characteristics of the UAV and resolution of small objects at different elevations and image capture modes should be tested before designing the sampling grid. In any case, it is better to err on the side of higher-density sampling grids and higher-resolution images to ensure adequate coverage and identification of vegetation and landform features within the geographic region of interest.
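To relate flight elevation, image spacing, and resolution, a pinhole-camera approximation is often adequate for planning: a nadir image covers a ground width of elevation × sensor width / focal length, consecutive images spaced D apart share 1 − D / width of their footprint, and the ground sampling distance is the footprint width divided by the number of pixels across the image. The camera values below (6.2-mm sensor width, 3.1-mm focal length, 4000 pixels across) are hypothetical and are not the specifications of the camera used in our example.

```python
def footprint_width_m(elevation_m, sensor_width_mm, focal_length_mm):
    """Ground width (m) of one nadir image: ground width / elevation = sensor width / focal length."""
    return elevation_m * sensor_width_mm / focal_length_mm

def overlap_fraction(spacing_m, elevation_m, sensor_width_mm, focal_length_mm):
    """Fraction of one image's footprint shared with the next image taken spacing_m away."""
    width = footprint_width_m(elevation_m, sensor_width_mm, focal_length_mm)
    return max(0.0, 1.0 - spacing_m / width)

def ground_sampling_distance_cm(elevation_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Ground distance (cm) spanned by one pixel."""
    width = footprint_width_m(elevation_m, sensor_width_mm, focal_length_mm)
    return 100.0 * width / image_width_px

# Hypothetical camera flown at 40 m with images every 15 m
elevation, spacing = 40.0, 15.0
print(footprint_width_m(elevation, 6.2, 3.1))                      # ground width of one image (m)
print(overlap_fraction(spacing, elevation, 6.2, 3.1))              # overlap between successive images
print(ground_sampling_distance_cm(elevation, 6.2, 3.1, 4000))      # cm of ground per pixel
```

With these assumed values, spacing images at half the flight elevation (20 m at 40 m) still leaves 75% overlap; a narrower field of view would reduce overlap at the same spacing.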

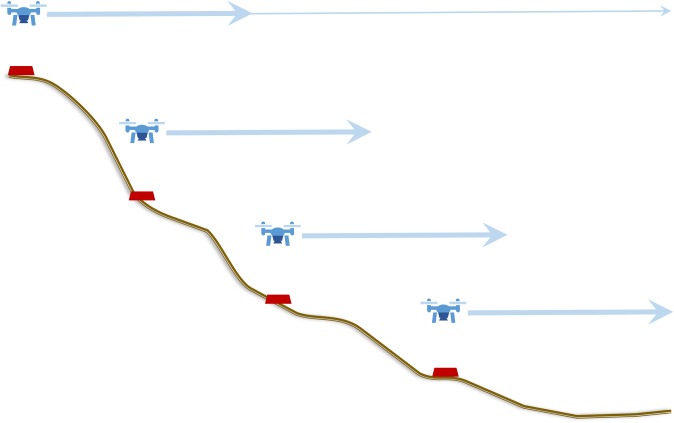

Aerial imaging grid designs will also depend on the topography of the terrain being sampled. Relatively flat areas are the easiest to sample because a single-elevation grid can be established, particularly when using UAVs with GPS positioning capabilities, which can hold a relatively constant elevation above sea level. For sloping terrains, it may be necessary to conduct stratified sampling at different elevations along the slope (Fig. 3). Each sampling grid should have good overlap with others to facilitate orthomosaic image and DSM construction. The goal is to keep the distance between the camera and the ground within a range that allows adequate resolution of vegetation features, so steeper slopes will require more overlapping grids. More complicated terrains such as valleys and curved slopes may require larger numbers of sampling grids to provide adequate coverage and resolution of objects on the ground.

Fig. 3.

Strategies for aerial surveys using small drones in rough terrain. Starting from the highest elevation, the entire area should be imaged at low density (thin arrow). Stratified surveys at each elevation are indicated by thicker arrows. Note that the elevation of each survey is constrained and that there is considerable overlap among surveys.
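One simple way to decide how many stratified grids a slope needs is sketched below. It assumes that the drone holds a constant altitude on each grid and that the camera-to-ground distance must stay between a chosen minimum and maximum; each flight level then covers a band of ground elevations as wide as that allowed range. The function and the elevations in the example are hypothetical, and the sketch omits the initial low-density overview flight shown in Fig. 3.

```python
import math

def stratum_altitudes(ground_min_m, ground_max_m, agl_min_m, agl_max_m):
    """Flight altitudes (same datum as the ground elevations) for stratified grids on a slope.

    Each level keeps the camera-to-ground distance within [agl_min_m, agl_max_m]
    over a band of ground elevations of width (agl_max_m - agl_min_m).
    """
    band = agl_max_m - agl_min_m
    if band <= 0:
        raise ValueError("agl_max_m must exceed agl_min_m")
    relief = ground_max_m - ground_min_m
    n_levels = max(1, math.ceil(relief / band))
    altitudes = []
    for i in range(n_levels):
        band_bottom = ground_min_m + i * band      # lowest ground elevation in this band
        altitudes.append(band_bottom + agl_max_m)  # farthest allowed distance above that ground
    return altitudes

# Hypothetical slope rising from 100 to 160 m, camera kept 30-50 m above the ground
print(stratum_altitudes(100, 160, 30, 50))
```

Each resulting level is flown as its own grid; in practice, the bands should overlap somewhat so that adjacent grids share enough images for stitching.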

When developing sampling strategies, researchers should keep in mind the limits imposed by the flight duration of the aircraft on a single battery charge and the maximum distance that can be flown while maintaining visual contact. For example, a small drone such as the DJI Phantom models (DJI, Shenzhen, China) flying under 50 m elevation can be visually tracked up to 200 m distance from the operator under most weather conditions. By flying in both directions away from the controller, transects that are 400 m in length can be sampled. Using programmed flight plans at slow speeds (e.g., 3 m per second), low-resolution images can be captured at intervals of 10 m or less. Capturing of high-resolution images will require more time for processing and positioning of the aircraft, although it is not necessary to sample exact positions and grid locations can be approximate. Transect sampling is facilitated by using a drone with GPS positioning capabilities, as the distance between the aircraft and the operator is provided during flight. Manual sampling can also be made more efficient by capturing images at alternate grid locations during flight along transects in two directions. Taking into account the amount of time required to position the aircraft at the proper elevation at the initiation of sampling and for landing upon return, a single battery charge may be enough to sample two to three 400-m transects in automatic mode and perhaps one to two transects when manually capturing high-resolution images at a spacing of 10 to 20 m. Batteries generally require several hours to charge, so several batteries and flights over several days may be required to sample larger areas.
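The arithmetic in the preceding paragraph can be turned into a quick planning check. The sketch below counts the parallel transects needed to span a square area and the batteries needed to fly them, and computes the exposure interval that automatic capture would require; the function names are hypothetical, and the calculation ignores the extra margin beyond the region of interest recommended above.

```python
import math

def plan_transects(area_width_m, transect_spacing_m, transects_per_battery):
    """Parallel transects needed to span area_width_m (both edges included) and batteries to fly them."""
    n_transects = math.floor(area_width_m / transect_spacing_m) + 1
    n_batteries = math.ceil(n_transects / transects_per_battery)
    return n_transects, n_batteries

def capture_interval_s(image_spacing_m, speed_m_s):
    """Seconds between exposures needed to capture an image every image_spacing_m at constant speed."""
    return image_spacing_m / speed_m_s

# A 400-m-wide area with transects every 15 m, flown manually at one or two transects per battery
print(plan_transects(400, 15, 2))
print(plan_transects(400, 15, 1))
# Automatic capture every 10 m at 3 m/s leaves about 3.3 s to store each image
print(capture_interval_s(10, 3))
```

If the camera needs longer than this interval to store each image (as with raw formats requiring five seconds or more), automatic capture at these settings is not feasible and manual hovering between grid points is needed.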

Sampling procedures

Prior to data collection, the UAV should be flown to test its imaging and flight capabilities. The drone should only be flown in dry weather and calm wind conditions in areas that are clear of buildings and large concentrations of people. Operators should practice flying the drone to test the camera’s image resolution at different elevations. Drones with GPS and integrated cameras generally provide a manual flight controller and are capable of video/data feeds to a digital device during flight. At least two people will be required to safely fly the UAV; one person should act as pilot and maintain visual contact with the aircraft and the second should use the digital device to monitor the video feed, operate the camera, and track the drone’s position. Under some circumstances, a third person may be required to help maintain visual contact with the drone, to watch for potential hazards, and to ensure that the drone remains clear of other aircraft, buildings, tall trees, and people.

Once a preliminary sampling strategy has been developed, it is best to image a portion of your area of interest to determine whether the imaging density provides adequate coverage for construction of orthomosaics and DSMs. Researchers should also record GPS coordinates and elevation of major features and of representative plants or vegetation cover to allow for ground-truthing of these points with the aerial images. These reference points can provide valuable confirmation of the appearance of objects, plant species, and vegetation types on the orthomosaic and DSM images.
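One way to use these reference points is to compare their field GPS coordinates with the positions of the same features located on the orthomosaic. The sketch below computes the horizontal root-mean-square error (RMSE) between paired coordinates; the function name and the projected coordinates in the example are hypothetical.

```python
import math

def horizontal_rmse(field_xy, map_xy):
    """Root-mean-square horizontal error (map units) between paired (x, y) coordinates."""
    if len(field_xy) != len(map_xy) or not field_xy:
        raise ValueError("need equal-length, non-empty coordinate lists")
    squared = [(fx - mx) ** 2 + (fy - my) ** 2
               for (fx, fy), (mx, my) in zip(field_xy, map_xy)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical projected coordinates (m) of three reference plants
field = [(500010.0, 4700020.0), (500110.0, 4700080.0), (500200.0, 4700150.0)]
ortho = [(500011.5, 4700019.0), (500109.0, 4700081.0), (500202.0, 4700150.5)]
print(round(horizontal_rmse(field, ortho), 2))
```

Hand-held GPS units typically have errors of a few meters, so an RMSE of that magnitude may reflect the field coordinates as much as the orthomosaic.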

Processing and analysis of image data

The analysis of drone photography can be separated into two stages: (1) orthomosaic and DSM generation, and (2) habitat classification and estimation of species distributions and densities. Most UAVs with integrated camera systems return images that are sequentially numbered and tagged with spatial and temporal data. The goal is to turn these images into a single stitched map known as an orthomosaic and to estimate the DSM. Individual images are first corrected for lens distortion and then stitched together with a computer vision technique known as structure-from-motion to create an orthomosaic (Dandois and Ellis, 2010). Point features are extracted from each image, and the algorithm searches for the greatest number of overlaps between images for each of the point features. More extensive overlap in images will lead to a greater number of matching features and create a more complete final orthomosaic. Insufficient overlap will lead to a grainy or ragged orthomosaic with holes where the algorithm was unable to find a match among the pool of supplied images. In the example provided below, we used Agisoft PhotoScan software (Agisoft LLC, St. Petersburg, Russia) to create the orthomosaic and DSM of a region of a prairie and vernal pool landscape in southern Oregon.
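The dependence on overlap can be illustrated with a much simpler registration method than structure-from-motion. The sketch below uses phase correlation, a Fourier-based technique that recovers only a pure translation between two overlapping nadir frames; it is a stand-in chosen for brevity, not the feature-matching algorithm used by PhotoScan. When two frames share content, the correlation surface has a sharp peak at their offset; without shared content, there is nothing to match.

```python
import numpy as np

def phase_correlation_shift(a, b):
    """Estimate the integer (row, col) shift such that b is approximately np.roll(a, shift).

    a, b: 2-D grayscale arrays of equal shape. Only translation is modeled
    (no rotation, scale, or perspective change).
    """
    A = np.fft.fft2(a)
    B = np.fft.fft2(b)
    cross = np.conj(A) * B
    cross /= np.abs(cross) + 1e-12          # keep phase only (normalized cross-power spectrum)
    corr = np.fft.ifft2(cross).real         # sharp peak at the offset between frames
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Indices past the midpoint correspond to negative (wrapped-around) shifts
    return tuple(int(p) if p <= n // 2 else int(p - n) for p, n in zip(peak, corr.shape))

# Synthetic "frame" and a copy displaced by 5 rows and -7 columns
rng = np.random.default_rng(0)
frame = rng.random((64, 64))
shifted = np.roll(frame, (5, -7), axis=(0, 1))
print(phase_correlation_shift(frame, shifted))
```

Real drone frames also differ in viewing angle and relief displacement, which is why photogrammetry software matches many local point features and solves for camera positions rather than a single shift.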

Once the orthomosaic is created, it can be used to extract information on the distribution of species and habitats. Habitat classification can be conducted manually or can be automated. For manual classification, a number of different GIS and image applications can be used to draw shape borders using differences in vegetation color, vegetation height (based on a DSM), or a combination of these. For automated habitat classification, cells in the spectral raster are analyzed individually. There are two main approaches for automated landscape classification: unsupervised and supervised methods. Unsupervised methods use multivariate clustering algorithms to group similar cells based on spectral values. These clustering approaches, such as k-means classification, depend on defining a preset number of groups. In the example described below, we were interested in delineating tree, shrub, swale, and hummock vegetation types. The k-means algorithm iteratively searches for the grouping of pixels into k classes that minimizes the variation within classes (the sum of squared distances between each pixel and its class mean). This method requires no training data, but it will fail when confronted with highly overlapping or nonsymmetrical classes (Pielou, 1984). Alternatively, supervised classification methods are a family of machine-learning techniques that use training data to learn about classes. Supervised methods, such as neural networks, are gaining popularity due to their flexibility and greater accuracy (Guisan and Zimmermann, 2000; Elith et al., 2008). In a supervised classification, a researcher collects GPS points from the field for each of the classes. The algorithm is trained on these data points, and then classifies the spectral raster based on the characteristics of those training sets (Papes et al., 2013). Although supervised methods are often more accurate than unsupervised methods, obtaining adequate training data can be difficult in sparsely sampled ecological landscapes.
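To make the supervised workflow concrete, the sketch below uses one of the simplest supervised classifiers, a minimum-distance (nearest-centroid) rule, rather than the neural networks mentioned above: the mean color of each class is learned from field-labeled training pixels, and every other pixel is assigned to the class with the nearest mean. The class names and RGB values are hypothetical.

```python
import numpy as np

def train_centroids(samples, labels):
    """Mean spectral vector for each class, from field-labeled training pixels."""
    samples = np.asarray(samples, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    centroids = np.array([samples[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def classify(pixels, classes, centroids):
    """Assign each pixel (one row of band values per pixel) to the class with the nearest centroid."""
    pixels = np.asarray(pixels, dtype=float)
    # Squared Euclidean distance from every pixel to every centroid: shape (n_pixels, n_classes)
    d2 = ((pixels[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(d2, axis=1)]

# Hypothetical RGB training pixels sampled at GPS-marked field points
train = [[20, 120, 30], [25, 110, 35], [160, 150, 90], [170, 140, 100]]
labels = ["swale", "swale", "hummock", "hummock"]
classes, centroids = train_centroids(train, labels)
print(classify([[10, 100, 10], [150, 140, 80]], classes, centroids).tolist())
```

More flexible supervised methods replace the nearest-mean rule with decision boundaries learned from the training data, but the train-then-classify structure is the same.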

The orthomosaic and DSM may provide adequate information to estimate the distribution and cover of individual species. The success of this approach will depend on whether one species can be adequately discriminated from others based on a combination of spectral and elevation data. For species that present an adequate contrast, the automated classification methods described above may be successfully implemented. Grouping of pixels could then be used to generate raster data containing percent cover, which can then be displayed as heat maps or contour maps using standard GIS tools. For example, below we describe the distribution of shrub habitat, which is equivalent to the distribution of Ceanothus cuneatus (Hook.) Nutt., and the coverage and distribution of the vernal pool annual, Lasthenia californica DC. ex Lindl. Accuracy of the resulting species distribution maps will depend on the degree to which reliable nonoverlapping classification methods for individual pixels or groups of pixels can be established.
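Converting a per-pixel classification into percent cover amounts to averaging a presence mask over coarser cells. The sketch below does this with non-overlapping square blocks; the function name and the block size are hypothetical choices, and partial blocks at the right and bottom edges are simply dropped.

```python
import numpy as np

def percent_cover(mask, block):
    """Percent of True pixels in each non-overlapping block x block window of a boolean mask.

    Rows and columns that do not fill a complete block at the bottom/right edges are dropped.
    """
    mask = np.asarray(mask, dtype=bool)
    n_rows, n_cols = mask.shape[0] // block, mask.shape[1] // block
    trimmed = mask[: n_rows * block, : n_cols * block]
    # Reshape to (block_row, row_in_block, block_col, col_in_block), then average within blocks
    blocks = trimmed.reshape(n_rows, block, n_cols, block)
    return 100.0 * blocks.mean(axis=(1, 3))

# Hypothetical 4 x 4 species mask aggregated into 2 x 2 cells
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :2] = True     # fully covered cell
mask[0, 2:] = True      # half-covered cell
print(percent_cover(mask, 2))
```

The resulting coarse raster is what would then be exported to a GIS for display as a heat map.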

Example: Creating a vegetation map of the Whetstone Savanna

Methods

To illustrate some of the procedures for conducting vegetation mapping with small aerial drones, we obtained aerial images that surveyed the Whetstone Savanna Preserve, which is an upland prairie vernal pool site in southern Oregon that is managed by The Nature Conservancy. We used images that provided coverage of a 16-ha (400 × 400 m) region of savanna that consists of a mosaic of vernal pools (swales) that are separated by hummocks (higher-elevation areas that are not routinely inundated and not covered by shrubs), hedges of buckbrush (Ceanothus cuneatus) with an understory of a nonnative herbaceous umbel (Anthriscus caucalis M. Bieb.), and scattered Oregon oaks (Quercus garryana Douglas ex Hook.). Hummock habitats support a diverse flora including Plagiobothrys nothofulvus (A. Gray) A. Gray, Lomatium utriculatum (Nutt. ex Torr. & A. Gray) J. M. Coult. & Rose, Lupinus bicolor Lindl., Lithophragma parviflorum (Hook.) Nutt., and Saxifraga integrifolia Hook. Swale (vernal pool) floras had little species overlap with hummocks and were characterized by Lasthenia californica, Downingia yina Applegate, Navarretia leucocephala Benth., Plagiobothrys bracteatus (Howell) I. M. Johnst., and Limnanthes pumila Howell. Our goal was to generate a vegetation map of the region for the analysis of seed- and pollen-mediated gene flow within species using landscape genetic methods.

A small aerial drone equipped with an integrated camera (DJI Phantom 2 Vision+ with a high-definition camera mounted on a motion-stabilized gimbal) was flown at 40-m elevation along 400-m transects that were spaced every 15 m. The camera mode was set to single capture with 14.4-megapixel image size (Appendix 2), and images were captured manually using the DJI application installed on a Samsung Galaxy Tab 4 (Samsung Electronics, Seoul, Korea). The center point of each transect was located using a hand-held GPS and used as the home location for each flight. From this point, the drone was flown in a straight line using a distant landmark for orientation and images were captured every 15 m along transects. For each photo, the drone was flown to a measured distance from the home base, hovered in place using the DJI controller, and a raw image in DNG format was captured using the tablet application. The capture and processing of each image required five to 10 seconds. Consequently, sampling the entire area required multiple flights and used eight to 10 batteries for each of two 4-h sampling sessions that were conducted over a two-day period. All flights were conducted during the morning on partly cloudy days with calm winds.

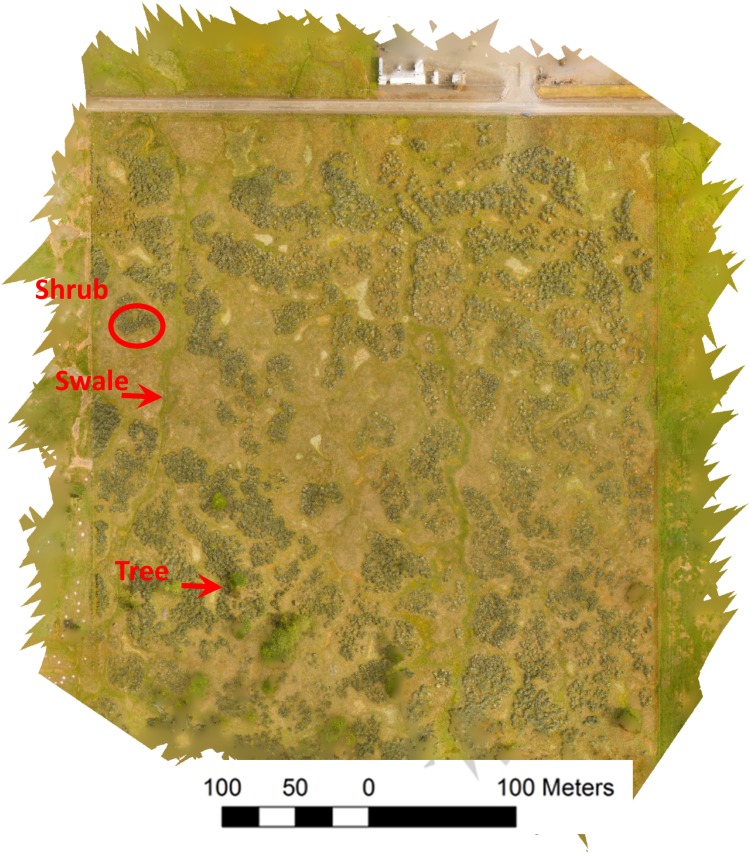

We created an orthomosaic and DSM using a total of 457 aerial images with the Agisoft PhotoScan software (Agisoft LLC; Appendix 2). The DNG images were converted to TIFF using Adobe Photoshop (Adobe Systems, San Jose, California, USA) prior to processing. PhotoScan corrected each image for fisheye (barrel) distortion prior to stitching them together (Appendix 3, Fig. A1) to create the orthomosaic (Fig. 4) and DSM (Fig. 5). These layers were reprojected to the North American Datum 1983 (NAD83) Universal Transverse Mercator (UTM) Zone 10N projection. A geodatabase file (.gdb) was created to classify the three distinct habitat types as polygons. These geodatabase features were used to draw polygons following perceived habitat boundaries. By combining the orthomosaic and DSM layers, the habitat type could be classified with greater confidence than using either the aerial orthomosaic or the DSM on its own. Polygons were created using the Editor tool in ArcGIS (release 10; Esri [Environmental Systems Research Institute], Redlands, California, USA), with a streaming tolerance of 1 map unit (1 m). A true topology of adjoining polygons was achieved using the Snapping feature in the Editor tool. For the manual classification, we pooled shrub and tree habitat, and discriminated this vegetation type from hummock and swale (Fig. 6).
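Barrel distortion is commonly described with a polynomial radial model in which a point at normalized radius r from the image center is displaced by a factor 1 + k1·r² + k2·r⁴ (negative k1 gives barrel distortion). The sketch below applies that two-term model and inverts it by fixed-point iteration. The coefficients are hypothetical, and this is a generic illustration of radial correction, not PhotoScan's calibration model or our fitted parameters.

```python
def distort(x, y, k1, k2):
    """Apply two-term radial distortion to normalized image coordinates (x, y)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor

def undistort(xd, yd, k1, k2, iterations=20):
    """Invert distort() by fixed-point iteration; adequate for moderate distortion near the center."""
    xu, yu = xd, yd                      # start from the distorted position
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        xu, yu = xd / factor, yd / factor
    return xu, yu

# Hypothetical barrel-distortion coefficients
k1, k2 = -0.2, 0.02
xd, yd = distort(0.5, 0.3, k1, k2)
print((xd, yd), undistort(xd, yd, k1, k2))
```

Correcting every pixel this way before matching keeps straight features straight across overlapping images, which improves the fit of the stitched orthomosaic.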

Fig. 4.

An orthomosaic of the vernal pool region of the Whetstone Savanna Preserve in southern Oregon generated from aerial images collected using a small drone (DJI Phantom 2 Vision+). To the north is a rural road and light industrial complex. There is a fence line along the west side that is evident as a linear disruption in the vegetation. Along the east side is an agricultural field, and to the south is oak savanna. Examples of shrub, swale (vernal pools), and trees are indicated. Hummocks are regions that generally lie between swales and shrubs.

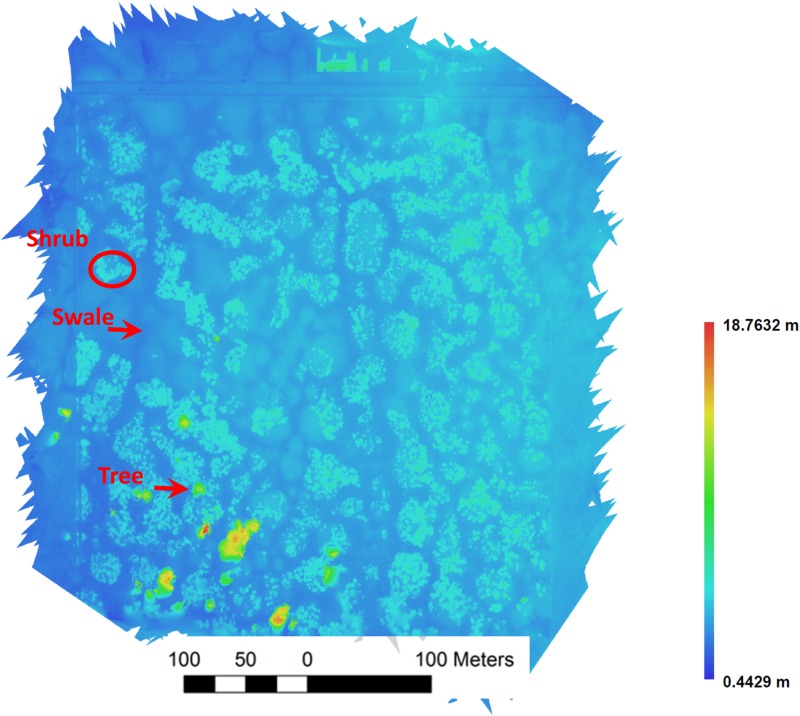

Fig. 5.

A DSM of the vernal pool region of the Whetstone Savanna Preserve in southern Oregon generated from aerial images collected using a small drone. The same examples of shrub, swale, and trees used in Fig. 4 are indicated. The heat map represents elevation of vegetation above the land surface across the prairie.

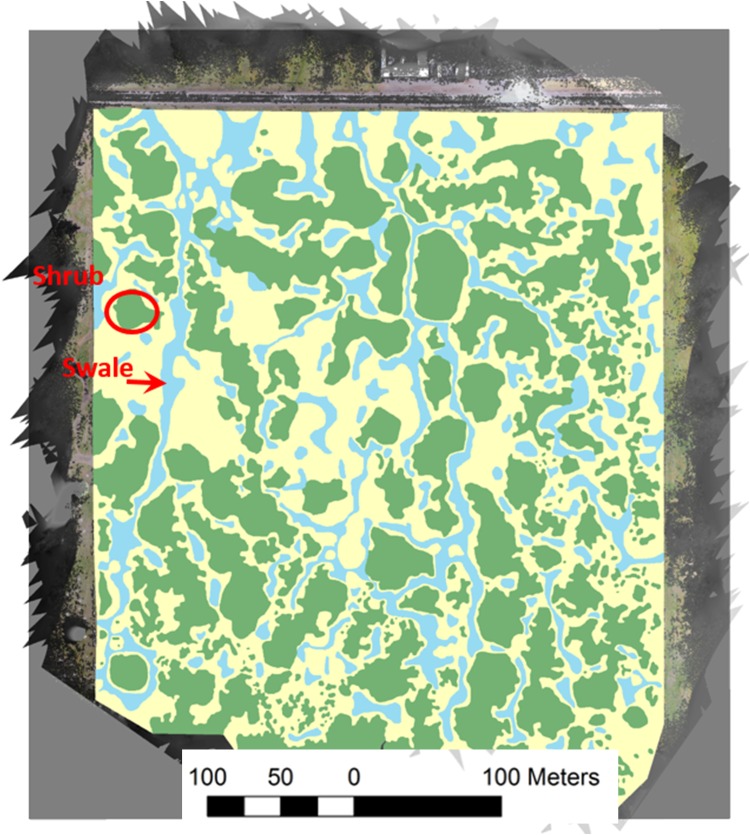

Fig. 6.

Results of a manual habitat classification based on the orthomosaic and DSM (Figs. 4 and 5, respectively). The same examples of shrub and swale used in Fig. 4 are indicated.

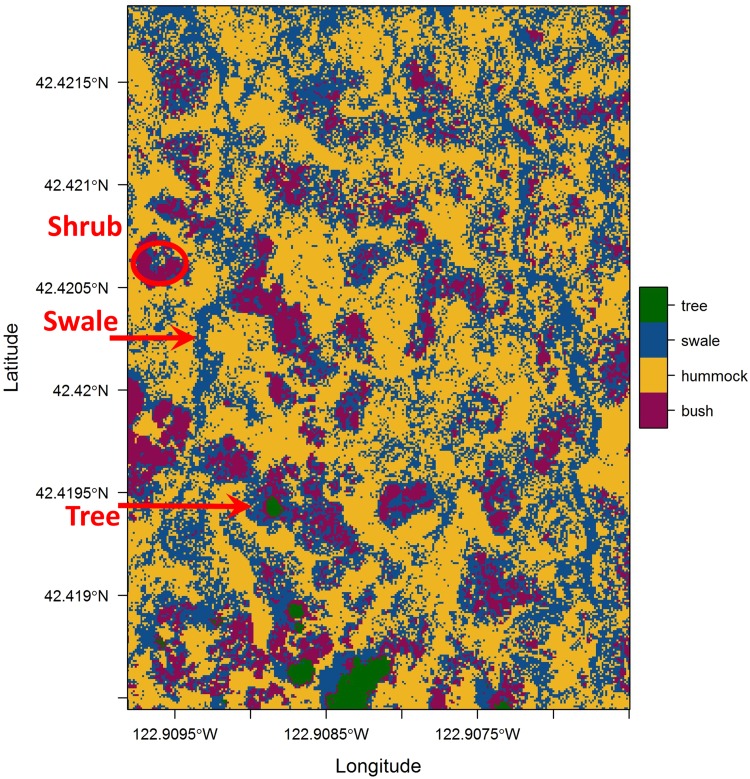

The automated procedure described above was applied to determine the distribution of habitats within the region of interest using a k-means classification based on a combination of the DSM and spectral values to delineate four classes (trees, shrubs, swale, and hummock; Fig. 7). We first separated the DSM into three classes (tree, shrub, swale/hummock), and then separated the areas classified as swale/hummock based on the spectral values. The resulting representation has four classes and provides a high-resolution habitat map based on the drone-collected images. The R scripts used for automated habitat delineation are available on GitHub (https://github.com/bw4sz/Drone/blob/master/Kmean.md).

Fig. 7.

Results of an automated habitat classification based on the orthomosaic and DSM (Figs. 4 and 5, respectively). The same examples of shrub, swale, and trees used in Fig. 4 are indicated.
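The two-stage procedure can be sketched in Python as follows; this is an independent illustration, not a translation of the R scripts linked above. It implements Lloyd's k-means with a deterministic farthest-point initialization (our simplification of k-means++ seeding), splits DSM heights into three clusters, and then splits the lowest-height pixels into two clusters by color. Which of the two low clusters is swale and which is hummock has to be decided by ground-truthing, so the sketch returns neutral codes.

```python
import numpy as np

def farthest_point_init(X, k):
    """Deterministic seeding: start at the first row, then add the row farthest from chosen centers."""
    centers = [X[0]]
    for _ in range(1, k):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d2)])
    return np.array(centers)

def kmeans(X, k, n_iter=100):
    """Lloyd's k-means; X has one row per pixel. Returns (labels, centers)."""
    X = np.asarray(X, dtype=float).reshape(len(X), -1)
    centers = farthest_point_init(X, k)
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)                      # assign each pixel to its nearest center
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                                for j in range(k)])     # move each center to its cluster mean
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def two_stage_habitats(dsm, rgb):
    """Codes: 0/1 = two low-vegetation color clusters (swale vs. hummock set by ground-truthing),
    2 = shrub (mid-height cluster), 3 = tree (tallest cluster).

    dsm: (rows, cols) vegetation heights; rgb: (rows, cols, 3) orthomosaic values.
    """
    h_labels, h_centers = kmeans(dsm.ravel(), 3)
    rank = np.empty(3, dtype=int)
    rank[np.argsort(h_centers[:, 0])] = np.arange(3)    # rank[cluster]: 0 lowest ... 2 tallest
    height_rank = rank[h_labels]
    codes = np.where(height_rank == 2, 3, 2)            # provisional: tree or shrub
    low = height_rank == 0
    c_labels, _ = kmeans(rgb.reshape(-1, 3)[low], 2)    # stage 2: color split of low pixels
    codes[low] = c_labels
    return codes.reshape(dsm.shape)

# Hypothetical 10 x 10 scene: trees (rows 0-1), shrubs (2-3), two ground colors (4-6, 7-9)
rng = np.random.default_rng(1)
dsm = rng.uniform(0.0, 0.2, size=(10, 10))
dsm[0:2] = rng.uniform(7.5, 8.5, size=(2, 10))
dsm[2:4] = rng.uniform(1.8, 2.2, size=(2, 10))
rgb = np.zeros((10, 10, 3))
rgb[0:4], rgb[4:7], rgb[7:10] = [60, 80, 50], [40, 120, 40], [150, 130, 90]
print(two_stage_habitats(dsm, rgb))
```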

To illustrate the use of micro-UAVs to estimate the cover of individual species, we evaluated the distribution and percent coverage of L. californica at peak flowering based on the presence of flowers in a single aerial image (Appendix 3, Fig. A1). Flowers of this species are easily identified by their distinctive yellow color. We first segregated flower pixels using image manipulation functions in Python (v3.5.1; Appendix 3). The converted image (Appendix 3, Fig. A2) was then imported into ArcGIS to generate a heat map representing the density of pixels within the reflectance range of L. californica flowers (Appendix 3, Fig. A3).

RESULTS AND DISCUSSION

We surveyed the 16-ha vernal pool region of the Whetstone Savanna Preserve for one phenological period using images collected with a small aerial drone flown over two consecutive days. The orthomosaic and DSM created from this survey contained a high level of detail and provided accurate information for delineating habitats in this prairie using both automated and manual methods. The manually mapped habitats corresponded strongly with high concentrations of image cells that the automated method assigned to the same habitat types.
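This correspondence is described qualitatively. One conventional way to quantify it, sketched below under the assumption that both maps are rasters on the same grid with the same (hypothetical) integer class codes, is a confusion matrix that treats the manual map as the reference; the diagonal counts the cells on which the two maps agree. The function names are illustrative only.

```python
import numpy as np


def confusion_matrix(manual, auto, n_classes):
    """Counts of grid cells: rows = manual class, columns = automated class."""
    m = np.asarray(manual).ravel()
    a = np.asarray(auto).ravel()
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (m, a), 1)  # unbuffered add, so repeated (m, a) pairs all count
    return cm


def overall_agreement(cm):
    """Fraction of cells given the same class by both maps."""
    return np.trace(cm) / cm.sum()


def per_class_agreement(cm):
    """For each manual class, the fraction of its cells the automated map matched.

    A class absent from the manual map gives nan (0 / 0).
    """
    return np.diag(cm) / cm.sum(axis=1)


# Toy 2 x 3 maps with hypothetical codes 0 = swale, 1 = hummock, 2 = shrub, 3 = tree.
manual = np.array([[0, 0, 1], [2, 2, 3]])
auto = np.array([[0, 1, 1], [2, 2, 3]])
cm = confusion_matrix(manual, auto, 4)
print(cm)
print(overall_agreement(cm))    # 5 of 6 cells agree
print(per_class_agreement(cm))
```

Off-diagonal cells show which habitats are confused with each other; in this setting the hummock/swale and hummock/shrub cells would be the ones to inspect.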

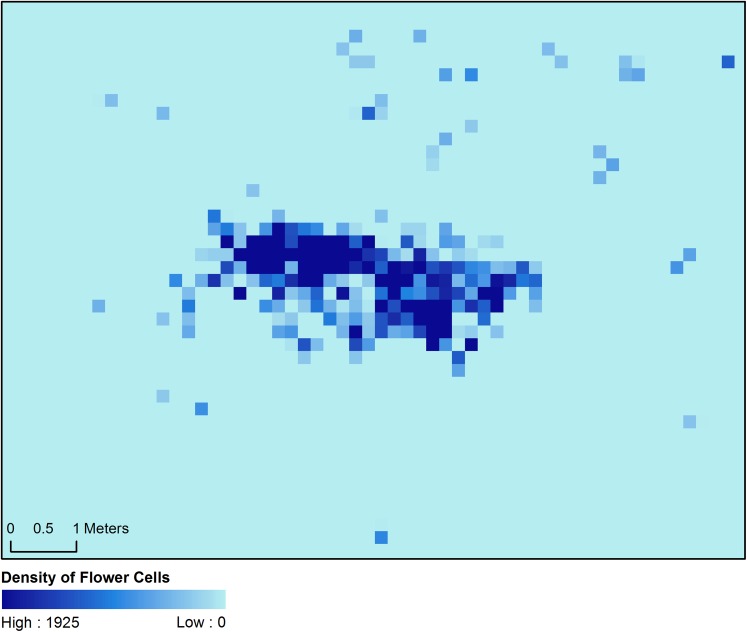

The estimates of the distribution and density of L. californica for one sample area were adequate, as the large majority of filtered pixels fell within this species’ swale habitat. A low density of pixels classified as L. californica flowers appeared in the adjacent hummock and hedge habitats (Appendix 3, Figs. A1, A2, A3). Because these pixels were outside of the swale habitat, they may represent artifacts or other yellow-flowered species. They could be removed to produce a cleaner heat map by filtering on habitat classification or vegetation elevation.
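A minimal sketch of such a filter, assuming the flower mask, a habitat raster, and a vegetation-height raster have already been resampled to a common grid (the single 8-m image and the orthomosaic differ in resolution, so this alignment step is not trivial). The swale code `SWALE`, the height threshold, and the function name are hypothetical.

```python
import numpy as np

SWALE = 0  # hypothetical habitat code for swale


def filter_flower_pixels(flower, habitat=None, dsm=None, max_height=None):
    """Keep flower pixels only inside swale habitat and/or below a height limit.

    flower:  boolean (H, W) mask of pixels classified as L. californica flowers.
    habitat: optional integer (H, W) habitat codes on the same grid.
    dsm:     optional (H, W) vegetation heights on the same grid.
    """
    keep = np.asarray(flower, dtype=bool).copy()
    if habitat is not None:
        keep &= np.asarray(habitat) == SWALE
    if dsm is not None and max_height is not None:
        keep &= np.asarray(dsm) <= max_height
    return keep


flower = np.array([[1, 1, 0], [1, 0, 1]], dtype=bool)
habitat = np.array([[0, 0, 0], [1, 1, 0]])  # bottom-left pixels are hummock
dsm = np.array([[0.1, 0.5, 0.1], [0.1, 0.1, 0.1]])
print(filter_flower_pixels(flower, habitat).sum())                       # habitat filter only
print(filter_flower_pixels(flower, habitat, dsm, max_height=0.3).sum())  # plus height filter
```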

Our results indicate that low-elevation aerial surveys with small drones can be a highly efficient and relatively accurate method for conducting vegetation surveys over moderately large areas. This approach was particularly useful for surveying the vernal pool habitat, as traditional mapping methods using GPS and transects would have required many hundreds of hours, caused more substantial habitat disturbance, and produced lower-accuracy maps. The resulting habitat maps should prove useful for subsequent landscape genetic analyses.

Aerial surveys with a micro-UAV worked well for habitat mapping in the Whetstone Savanna Preserve and for estimating the density of L. californica in one sample area. Our habitat classification was aided by the distinctive elevation and spectral characteristics of each vegetation type. The spectral difference between hummock and swale is visible in the automated classification of image cells (Fig. 7), although misclassified cells were also common relative to the manual classification (Fig. 6). The manual classification is probably more accurate here because it could take advantage of the elevation difference between these habitats, whereas the automated procedure separated hummock from swale on spectral values alone; incorporating the elevation data into that step could improve the automated classification.
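One way to let the second stage use elevation as well as color, offered here as an assumption rather than as the authors' procedure, is to cluster the swale/hummock pixels on a stacked feature vector of height plus the three color bands. Because heights in meters and 8-bit color values differ in scale, each column is standardized first; otherwise the color bands would dominate the Euclidean distances that k-means minimizes. The function name and the `height_weight` parameter are hypothetical.

```python
import numpy as np


def stacked_features(dsm, rgb, height_weight=1.0):
    """Per-pixel feature matrix [height, R, G, B] with each column z-scored.

    height_weight (hypothetical) rescales the standardized height column so
    elevation can be given more or less influence than color.
    """
    feats = np.column_stack([np.asarray(dsm, dtype=float).ravel(),
                             np.asarray(rgb, dtype=float).reshape(-1, 3)])
    std = feats.std(axis=0)
    std[std == 0] = 1.0  # a constant band carries no information; avoid 0 / 0
    z = (feats - feats.mean(axis=0)) / std
    z[:, 0] *= height_weight
    return z


# Toy 2 x 2 patch of swale/hummock pixels.
dsm = np.array([[0.0, 0.2], [0.4, 0.6]])
rgb = np.arange(12).reshape(2, 2, 3)
X = stacked_features(dsm, rgb)
print(X.shape)
print(X.mean(axis=0).round(6), X.std(axis=0).round(6))
```

The returned matrix can be passed to any k-means routine (for example, `kmeans` in R's stats package) with two clusters.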

Discriminating between hummock and shrub habitats was more difficult because vegetation characteristic of hummocks often occurred in small gaps between shrubs. These gaps were difficult to identify from the orthomosaic and DSM because the shrub canopy height was uneven and the density of aerial images was not always high enough to detect them. Such gaps did not appear in the orthomosaic and DSM when the interpolation option was enabled in the PhotoScan software (Appendix 2). During manual classification, small islands of hummock habitat were difficult to discern from the images and were often ignored. The automated habitat mapping probably identified hummock islands within hedges more accurately in many cases, but it may still have misclassified vegetation where the shrub canopy was low or had been interpolated. A more accurate classification could probably be derived by using the automated classification map together with the orthomosaic and the DSM to delineate habitats manually. An orthomosaic created with the interpolation option disabled might also have provided additional information for locating the smaller gaps between shrubs.

CONCLUSIONS

Small aerial drones have considerable potential for a number of applications in plant ecology. In the example described above, relatively accurate habitat maps were generated with minimal effort and habitat disruption. Although a single phenological stage provided adequate contrast for accurate habitat discrimination and for mapping the distribution of some species, it would generally be desirable to conduct aerial surveys at several times over the growing season. For example, the vernal pools may have been easier to discriminate earlier in the season, when they were inundated with water. These habitat-mapping methods should be feasible in many other vegetation types, but only where the associated floras and landforms differ strongly in spectral or elevation characteristics.

Small drone surveys provide a mechanism for plant ecologists to collect large amounts of information with minimal effort. As illustrated above, the distribution and density of individual species can be derived from aerial images if their foliage, flowers, or fruiting structures can be adequately distinguished from those of other species in the area. Aerial surveys conducted at different times during flowering could provide valuable information on differences in phenology across the survey region. A number of other applications may also be possible, including surveys of plant diseases using infrared spectra (e.g., Mutka and Bart, 2014), surveys of damage to vegetation from natural and human-mediated disturbance, and monitoring of restoration efforts and other management practices (Morgan et al., 2010). Many of the methodologies used in manned aerial surveys and satellite imaging can be adapted for use with small drone surveys (Rango et al., 2009; Anderson and Gaston, 2013).

Appendix 1.

Recommendations for features of aerial drones used in plant ecology.

| Feature* | Necessary | Desirable |
| --- | --- | --- |
| Enough power to carry a camera | Yes | – |
| High-resolution camera that can be oriented straight down | Yes | – |
| Flight time greater than 10 min | Probably | Yes |
| Onboard GPS for hovering in place and flight stabilization | Probably | Yes |
| Camera capable of adding GPS and altitude metadata to images | Probably | Yes |
| Camera capable of saving images in raw formats | Probably | Yes |
| Gimbal with camera stabilization | No | Yes |
| Mobile app with camera controls and video feed from the drone | No | Yes |
| Flight information on altitude, speed, and distance from origin | No | Yes |
| Programmable to fly to multiple GPS waypoints | No | Yes |
| Automatic homing on low battery and control signal loss | No | Yes |
| Automatic no-fly zone avoidance and altitude limit | No | Yes |
| Ground detection for landing assistance | No | Yes |
| Object proximity detection and avoidance | No | Yes |

At the time of this publication, the Phantom series from DJI (Phantom 2 Vision+, and Phantom 3 and 4 models; DJI, Shenzhen, China) and the Solo micro-UAV from 3D Robotics (Berkeley, California, USA) meet all of the necessary and, depending on the specific model, most of the desirable criteria listed here.

Appendix 2.

Tables of the camera settings used for aerial surveys and the PhotoScan software options used for generation of the orthomosaic and DSM in Figs. 4 and 5, respectively.

Camera specifications (DJI Phantom 2 Vision+ integrated camera):

| Specification | Value |
| --- | --- |
| Sensor size | 1/2.3″ |
| Pixels | 14 MP |
| Resolution | 4384 × 3288 |
| HD recording | 1080p30/1080i60/720p60 |
| Recording FOV | 110/85 degrees |

Note: FOV = field of view; HD = high definition.

Camera settings:

| Setting | Value |
| --- | --- |
| Capture mode | Single capture |
| Photo size | Large: 4384 × 3288, 4:3, 14.4 MP |
| Photo format | RAW |
| ISO | 400 |
| White balance | Cloudy |
| Exposure metering | Center |
| Exposure compensation | 0 |
| Sharpness | Standard |
| Antiflicker | 60 Hz |

Agisoft PhotoScan orthomosaic creation settings:

| Parameter | Location | Setting |
| --- | --- | --- |
| Camera type | Tools > Camera calibration | Fisheye |
| Align photos: Accuracy | Workflow > Align photos | High |
| Align photos: Pair preselection | Workflow > Align photos | Disabled |
| Align photos: Key point limit | Workflow > Align photos | 400,000 |
| Align photos: Tie point limit | Workflow > Align photos | 0 |
| Build dense cloud: Quality | Workflow > Build dense cloud | High |
| Build dense cloud: Depth filtering | Workflow > Build dense cloud | Mild |
| Build mesh: Surface type | Workflow > Build mesh | Height field |
| Build mesh: Source | Workflow > Build mesh | Dense cloud |
| Build mesh: Face count | Workflow > Build mesh | Medium |
| Build mesh: Interpolation | Workflow > Build mesh | Enabled |

Appendix 3.

Method used to convert aerial images into plant density.

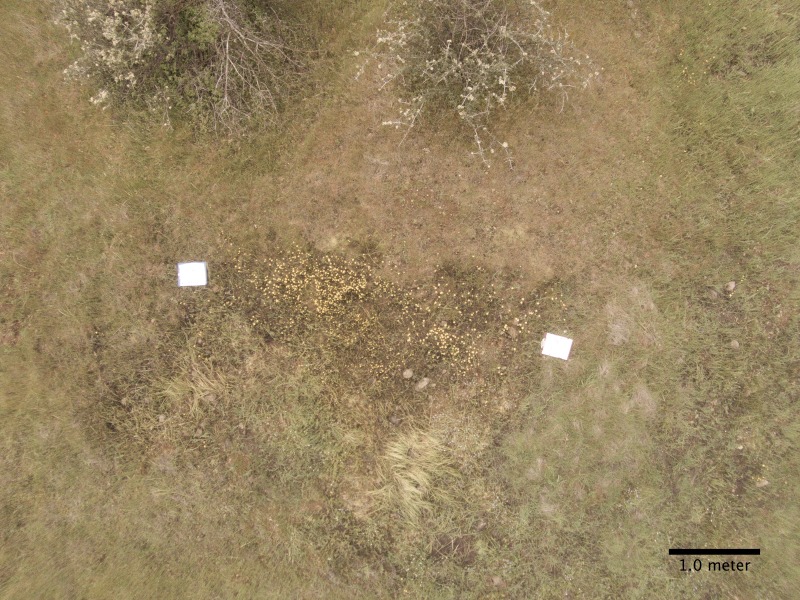

We started with a single aerial image of a patch of Lasthenia californica captured at 8-m elevation (Fig. A1). Adobe Photoshop Elements (v14; Adobe Systems, San Jose, California, USA) was used to convert the raw image (DNG) into a TIFF file and to correct it for barrel distortion. Percent coverage estimates and contours around each flower were generated in Python (v3.5.1; www.python.org) with the Anaconda (v4.0.0) platform (Continuum Analytics, Austin, Texas, USA) using the following packages: scikit-image (skimage v0.12.3), NumPy (v1.10.4), and OpenCV (v3.1.0) (Bradski, 2000; van der Walt et al., 2011, 2014).

Fig. A1.

A lens distortion–corrected image taken at 8-m elevation using the DJI Phantom 2 Vision+ aerial drone.

After importing the image with scikit-image, we adjusted the gamma to a value of 10, leaving the gain at a value of 1. These values segregated L. californica from the surrounding vegetation in this image; they can be adjusted to best accentuate the color of the flower in question. We then separated the color channels to further isolate L. californica (Fig. A2). In the adjusted image, both the flowers and the reference clipboards appeared in the red channel, whereas only the clipboards appeared in the blue channel. Because analyzing L. californica using the red channel alone would count the clipboards as flowers, we subtracted the blue channel from the red channel in the final image to isolate flower pixels.
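The gamma and channel-subtraction steps can be sketched with NumPy alone. `gamma_adjust` is a hypothetical stand-in for `skimage.exposure.adjust_gamma(image, gamma=10, gain=1)` that applies the same power law to 8-bit values (the library's rounding may differ slightly), and `red_minus_blue` is likewise an illustrative name. The subtraction is done in a signed type and clipped at 0 because direct `uint8` subtraction would wrap around.

```python
import numpy as np


def gamma_adjust(img, gamma=10.0, gain=1.0):
    """Power-law adjustment of an 8-bit image: out = 255 * gain * (in / 255) ** gamma.

    With gamma = 10 only very bright values survive; mid and dark values go to 0.
    """
    scaled = 255.0 * gain * (np.asarray(img, dtype=float) / 255.0) ** gamma
    return np.clip(scaled, 0, 255).astype(np.uint8)


def red_minus_blue(rgb, gamma=10.0):
    """Gamma-adjust an (H, W, 3) RGB uint8 image, then subtract blue from red.

    Subtraction is done in a signed type and clipped at 0, because uint8
    subtraction would wrap around (e.g. 0 - 1 -> 255).
    """
    adj = gamma_adjust(rgb, gamma)
    diff = adj[..., 0].astype(np.int16) - adj[..., 2].astype(np.int16)
    return np.clip(diff, 0, 255).astype(np.uint8)


# Toy pixels: yellow flower, white clipboard, green grass, brown soil.
img = np.array([[[250, 230, 40], [255, 255, 255]],
                [[90, 120, 60], [120, 100, 80]]], dtype=np.uint8)
print(red_minus_blue(img))
```

Only the yellow pixel survives: the clipboard is bright in both channels and cancels out, and the darker vegetation and soil are pushed to 0 by the large gamma.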

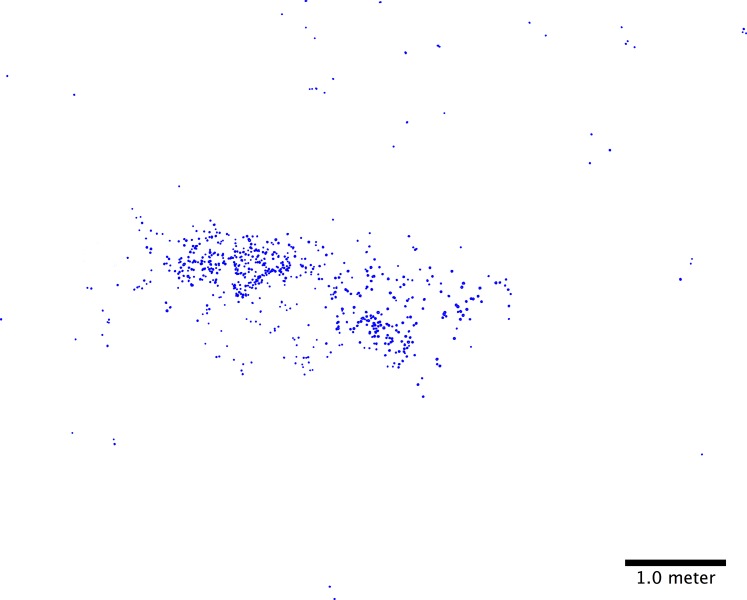

Fig. A2.

The same image as Fig. A1 after pixels representing the distribution of Lasthenia californica flowers have been segregated.

To estimate the percent coverage of flowers in the image, we counted the non-black pixels in the red and blue channels separately. The number of flower pixels was taken to be the count in the red channel minus the count in the blue channel, and this number was divided by the total number of pixels to give the percent coverage for the entire image.
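The counting rule can be written directly. The sketch assumes the gamma-adjusted image is an (H, W, 3) uint8 array in RGB order (scikit-image reads images as RGB, whereas OpenCV's `imread` returns BGR) and, as the text does, that every non-black blue pixel belongs to a clipboard that is also non-black in red. The function name is hypothetical.

```python
import numpy as np


def percent_flower_cover(adj_rgb):
    """Percent cover from channel counts, following the rule described above.

    adj_rgb: gamma-adjusted (H, W, 3) uint8 image in RGB order.
    Flower pixels = non-black red pixels - non-black blue pixels.
    """
    n_red = np.count_nonzero(adj_rgb[..., 0])
    n_blue = np.count_nonzero(adj_rgb[..., 2])
    n_pixels = adj_rgb.shape[0] * adj_rgb.shape[1]
    return 100.0 * (n_red - n_blue) / n_pixels


# Toy 4 x 5 image: 3 flower pixels (red only) and 2 clipboard pixels (red and blue).
adj = np.zeros((4, 5, 3), dtype=np.uint8)
adj[0, :3, 0] = 200
adj[3, 3:] = 255
print(percent_flower_cover(adj))
```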

Drawing contours around the flowers on the image allows other programs, such as ArcGIS (ArcGIS Desktop, release 10; Esri [Environmental Systems Research Institute], Redlands, California, USA), to use the percent coverage information. To reduce noise before drawing the contours, we applied OpenCV's morphological opening operation, and OpenCV's threshold function was then used to further delineate the flowers. Next, we determined the contours using OpenCV with the tree contour retrieval mode and the simple contour approximation method. Finally, the contours were drawn on a blank image generated with NumPy, which was exported as a TIFF file (Fig. A3).
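In OpenCV these steps correspond to `cv2.morphologyEx(img, cv2.MORPH_OPEN, kernel)` and `cv2.threshold(img, thresh, maxval, cv2.THRESH_BINARY)`, followed by `cv2.findContours` (with `cv2.RETR_TREE` and `cv2.CHAIN_APPROX_SIMPLE`) and `cv2.drawContours`. To make the noise-reduction logic visible without OpenCV, the sketch below reimplements only the opening and threshold in NumPy; the 3 × 3 square kernel, the threshold of 0, and the function names are assumptions rather than settings reported by the authors, and contour tracing is not reimplemented.

```python
import numpy as np


def _neighborhoods(img, fill):
    """Stack the 3 x 3 neighborhood of every pixel; borders padded with `fill`."""
    h, w = img.shape
    p = np.pad(img, 1, mode="constant", constant_values=fill)
    return np.stack([p[i:i + h, j:j + w] for i in range(3) for j in range(3)])


def grey_opening(img):
    """Opening with a 3 x 3 square: erosion (local min) then dilation (local max).

    Borders are padded so they never win the min (255) or the max (0).
    """
    eroded = _neighborhoods(img, 255).min(axis=0)
    return _neighborhoods(eroded, 0).max(axis=0)


def binary_threshold(img, thresh=0, maxval=255):
    """Binary threshold: maxval where img > thresh, else 0."""
    return np.where(img > thresh, maxval, 0).astype(np.uint8)


# Toy red-minus-blue image: a 3 x 3 flower and one isolated noisy pixel.
diff = np.zeros((7, 7), dtype=np.uint8)
diff[1:4, 1:4] = 200
diff[5, 5] = 200
mask = binary_threshold(grey_opening(diff))
print(mask)
```

The opening removes any bright region smaller than the kernel (here the single noisy pixel) while restoring the shape of larger regions, which is why it is applied before contours are traced.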

Fig. A3.

A heat map of plant density generated in ArcGIS using the image shown in Fig. A2. Values represent the number of pixels classified as Lasthenia californica flowers out of the 5625 pixels (75 × 75) in each grid cell.

We converted the RGB channel data in the TIFF image to indicate flower presence or absence (1 or 0) for each pixel using the Raster Calculator in ArcMap (Esri). To visualize the percent cover of plants based on the presence of flowers, we used Esri’s Aggregate tool to sum the number of flower pixels within each block of 75 × 75 cells. This sum, out of a possible 5625, reflects the percent cover of flowers and serves as a proxy for the likelihood that plants occur in each aggregated cell.
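The Raster Calculator and Aggregate steps amount to a presence/absence test followed by a block sum, which can be sketched in NumPy. The presence threshold of 0, the handling of partial edge blocks (dropped here), and the function name `aggregate_sum` are assumptions; dividing each block sum by 75 × 75 = 5625 converts it to percent cover.

```python
import numpy as np


def aggregate_sum(presence, cell=75):
    """Sum a 0/1 raster over non-overlapping cell x cell blocks.

    Rows/columns that do not fill a whole block are dropped (an assumption;
    the ArcGIS tool has its own extent-handling options).
    """
    h, w = presence.shape
    nh, nw = h // cell, w // cell
    trimmed = np.asarray(presence, dtype=np.int64)[:nh * cell, :nw * cell]
    return trimmed.reshape(nh, cell, nw, cell).sum(axis=(1, 3))


# Presence/absence from the red-minus-blue image (1 = flower pixel).
diff = np.zeros((150, 225), dtype=np.uint8)  # 2 x 3 blocks of 75 x 75
diff[:75, :75] = 200                         # a block fully covered by flowers
diff[75:85, 150] = 200                       # 10 flower pixels in another block
presence = (diff > 0).astype(np.uint8)

counts = aggregate_sum(presence)  # flower pixels per block (max 5625)
percent = 100.0 * counts / 75 ** 2
print(counts)
print(percent)
```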

LITERATURE CITED

- Anderson K., Gaston K. J. 2013. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Frontiers in Ecology and the Environment 11: 138–146.

- Bannari A., Morin D., Bonn F., Huete A. R. 1995. A review of vegetation indices. Remote Sensing Reviews 13: 95–120.

- Bradski G. 2000. The OpenCV Library. Dr. Dobb’s Journal of Software Tools for the Professional Programmer 25: 120–125.

- Dandois J. P., Ellis E. C. 2010. Remote sensing of vegetation structure using computer vision. Remote Sensing 2: 1157–1176.

- Elith J., Leathwick J. R., Hastie T. 2008. A working guide to boosted regression trees. Journal of Animal Ecology 77: 802–813.

- Guisan A., Zimmermann N. E. 2000. Predictive habitat distribution models in ecology. Ecological Modelling 135: 147–186.

- Kerr J. T., Ostrovsky M. 2003. From space to species: Ecological applications for remote sensing. Trends in Ecology & Evolution 18: 299–305.

- Morgan J. L., Gergel S. E., Coops N. C. 2010. Aerial photography: A rapidly evolving tool for ecological management. BioScience 60: 47–59.

- Mutka A. M., Bart R. S. 2014. Image-based phenotyping of plant disease symptoms. Frontiers in Plant Science 5: 734.

- Nagendra H. 2001. Using remote sensing to assess biodiversity. International Journal of Remote Sensing 22: 2377–2400.

- Papes M., Tupayachi R., Martínez P., Peterson A. T., Asner G. P., Powell G. V. N. 2013. Seasonal variation in spectral signatures of five genera of rainforest trees. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 6: 339–350.

- Pielou E. C. 1984. The interpretation of ecological data: A primer on classification and ordination. Wiley-Interscience, New York, New York, USA.

- Rango A., Laliberte A., Herrick J. E., Winters C., Havstad K., Steele C., Browning D. 2009. Unmanned aerial vehicle-based remote sensing for rangeland assessment, monitoring, and management. Journal of Applied Remote Sensing 3: 033542–033515.

- Richardson A. D., Braswell B. H., Hollinger D. Y., Jenkins J. P., Ollinger S. V. 2009. Near-surface remote sensing of spatial and temporal variation in canopy phenology. Ecological Applications 19: 1417–1428.

- van der Walt S., Colbert S. C., Varoquaux G. 2011. The NumPy Array: A structure for efficient numerical computation. Computing in Science & Engineering 13: 22–30.

- van der Walt S., Schönberger J. L., Nunez-Iglesias J., Boulogne F., Warner J. D., Yager N., Yu T. 2014. scikit-image: Image processing in Python. PeerJ 2: e453.

- Xie Y., Sha Z., Yu M. 2008. Remote sensing imagery in vegetation mapping: A review. Journal of Plant Ecology 1: 9–23.