Abstract

Purpose

The use of electronic tools in teaching is growing rapidly in all fields, and there are many options to choose from. We present one such platform, Learning Catalytics™ (LC) (Pearson, New York, NY, USA), which we utilized in our oral and maxillofacial radiology course for second-year dental students.

Materials and Methods

The aim of our study was to assess the correlation between students' performance on course exams and self-assessment LC quizzes. The performance of 354 predoctoral dental students from 2 consecutive classes on the course exams and LC quizzes was assessed to identify correlations using the Spearman rank correlation test. The first class was given in-class LC quizzes that were graded for accuracy. The second class was given out-of-class quizzes that were treated as online self-assessment exercises. The grading in the self-assessment exercises was for participation only and not accuracy. All quizzes were scheduled 1-2 weeks before the course examinations.

Results

A positive but weak correlation was found between the overall quiz scores and exam scores when the two classes were combined (P<0.0001). A positive but weak correlation was likewise found between students' performance on exams and on in-class LC quizzes (class of 2016) (P<0.0001) as well as on exams and online LC quizzes (class of 2017) (P<0.0001).

Conclusion

It is not just the introduction of technological tools that impacts learning, but also their use in enabling an interactive learning environment. The LC platform provides an excellent technological tool for enhancing learning by improving bidirectional communication in a learning environment.

Keywords: Assessments, Educational; Education, Dental; Radiology; Computer-Assisted Instruction

Introduction

Evolving methods of student learning necessitate the evolution of teaching methods. Computers have become an integral part of both our personal and professional lives, and an obvious result of this is the introduction of computers and personal electronic devices into the domains of teaching and learning. The body of literature discussing technology in education is expanding, including studies on the use of technology in education in the health fields.1,2 Currently, much attention is paid to guided learning, rather than simply presenting information in the traditional 'sage on the stage' method. Knowles3 based his proposed learning theory on the premise that learning is most likely to occur when learners are self-directed and motivated, with a perceived need to acquire information relevant to their own area of experience. A blended learning approach is a combination of traditional lecture-format classroom teaching with the use of self-directed online and technology-enabled learning tools. The use of technologically based learning tools enables interaction and informal self-testing in a non-threatening way.4,5,6

Tools that allow the learner to actively engage in their own learning, such as quizzes with feedback, have the advantage of being perceived as low-stakes assessments, rather than high-stakes assessments that use pass/fail criteria.4,7,8,9 Classroom response systems are a set of technology tools that can enhance student participation and learning while enabling educators to ascertain what their students are learning.10 With the use of classroom response systems such as clickers or other web-based systems, continuous feedback can be obtained on student understanding. One such technology is Learning Catalytics™ (LC) (Pearson, New York, NY, USA), which is a 'bring-your-own-device' student engagement, assessment, and classroom intelligence system. LC allows faculty to obtain real-time or out-of-class responses to open-ended or critical thinking questions, in order to determine which areas require further discussion and clarification. The comprehensive analytics help faculty better understand student performance.

At our institution, we adopted LC in the pre-doctoral oral and maxillofacial radiology (OMFR) curriculum for dental students in academic year 2013-2014. We have utilized this technology tool for the last two years in this course for the DMD classes of 2016 and 2017. The OMFR course is taught in the second year of the dental school curriculum. It spans 8 months and the class meets once a week for an hour. The course is divided into 3 sections. The first section addresses concepts in radiation physics, biology and protection, and radiographic techniques. The second section addresses the identification of normal radiographic anatomy, the detection of dental caries, and periodontal pathology. The third section introduces the radiographic interpretation of pathology, culminating in the generation of differential diagnoses for various entities spanning developmental anomalies and benign, malignant, systemic, and dysplastic lesions. Since this is the first time the dental students are introduced to radiography and radiographic interpretation, it is typically a challenging experience. The introduction of LC in this class had the primary goal of evaluating students' understanding of the material on an ongoing basis and providing feedback or clarification as needed. Since the inclusion of any additional teaching tool necessitates curriculum revision with time allotment for these exercises, this study aimed to evaluate the effectiveness of LC as a specific learning enhancement tool in the field of the diagnostic sciences. The objective of this study was to assess the correlation between students' performance on course exams and LC quizzes. The hypothesis was that the students' performance in course exams and LC quizzes in the second-year predoctoral OMFR course would not vary.

Materials and Methods

This study was a retrospective analysis of the OMFR grades obtained by the dental classes (DMD) of 2016 and 2017 in their second year of study. The OMFR course taught in academic years 2013-2014 and 2014-2015 utilized the LC program to administer quizzes during the course. In academic year 2013-2014 (class of 2016), in-class LC quizzes were graded for the accuracy of the answers and were administered 1-2 weeks before the course exams. The material included in the quiz was the same as the topics included in the following course exam. The course contained a total of 3 examinations and 3 quizzes.

In academic year 2014-2015 (class of 2017), the LC quizzes were given outside of class as online self-assessment exercises. The grading was based on participation only and not accuracy. The online LC quizzes were likewise made available 1-2 weeks before the course examinations, and the course contained 3 examinations and 5 quizzes. The maximum of the allotted points for each quiz for both classes was 5. The number of students in the classes of 2016 and 2017 who completed all quizzes and exams was 171 and 183, respectively. This study was a retrospective analysis of the correlation of the students' grades obtained on examinations 1-3 and the LC quiz grades in the OMFR course in both academic years (classes of 2016 and 2017). Institutional Review Board approval was obtained for this study.

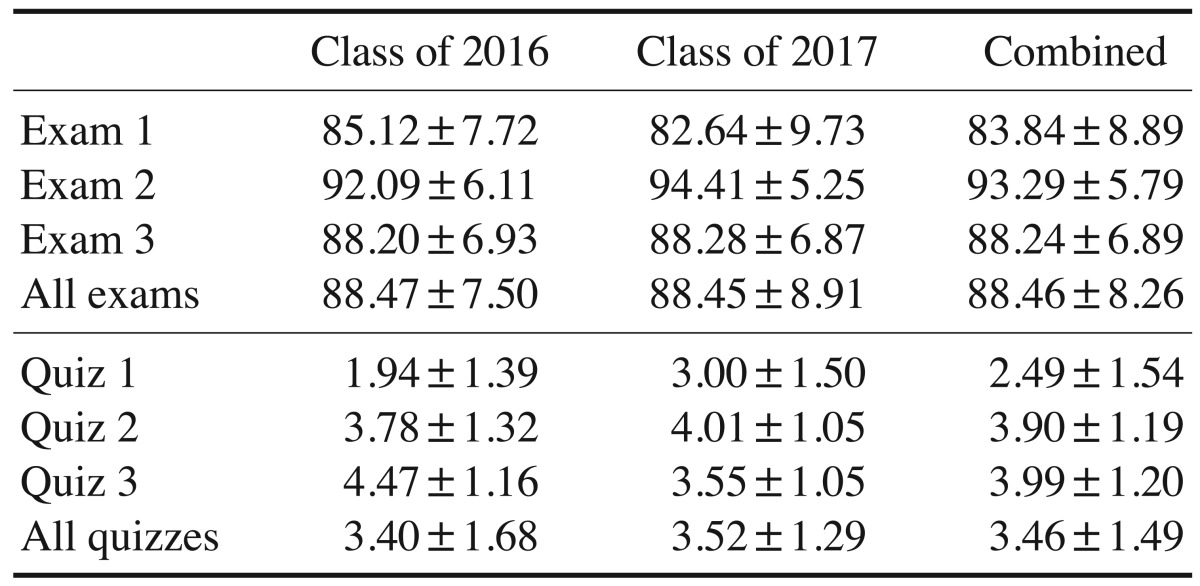

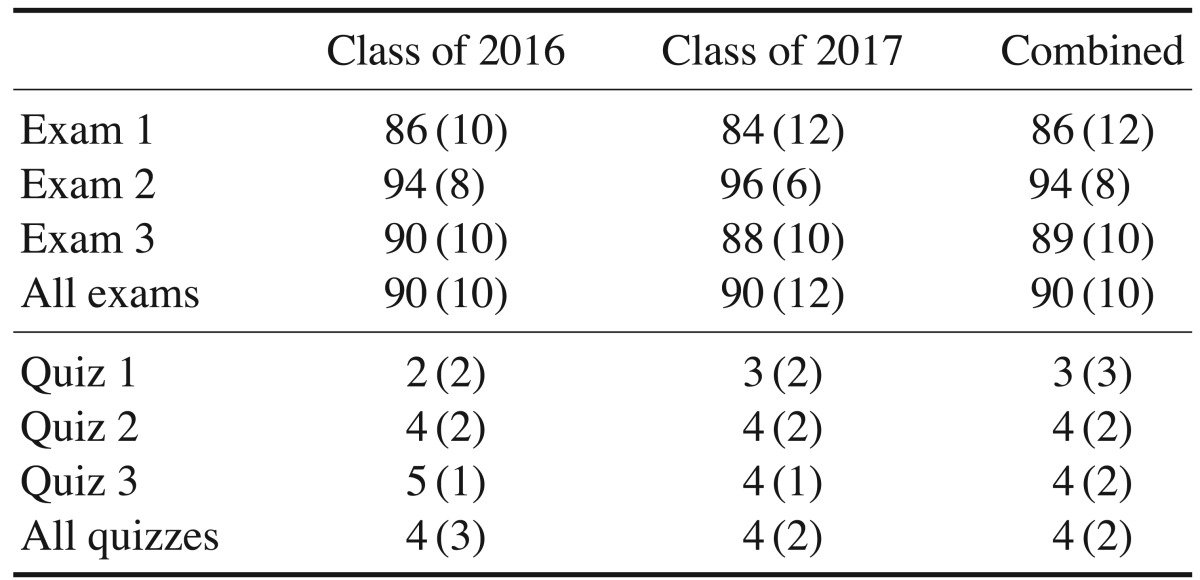

A convenience sample of 354 subjects was obtained from the DMD students in the classes of 2016 and 2017. Descriptive statistics (means, standard deviations, and medians for categorical variables) are presented in Tables 1 and 2. Statistical significance for the association between LC quiz scores and exam scores was assessed using the Spearman rank correlation test, as the data were not normally distributed. The assumption of normality was examined graphically and by the Shapiro-Wilk test. P-values <.05 were considered to indicate statistical significance. Stata version 13.1 (StataCorp LP, College Station, TX, USA) was used for the analysis. The class of 2016 had 3 LC quizzes and the class of 2017 had 5 LC quizzes. For the purposes of our analysis, in light of the topics covered and the timing of the quizzes, the average scores of quizzes 2 and 3 and the average scores of quizzes 4 and 5 were used as single items for the class of 2017.
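The analysis in this study was run in Stata; the following Python sketch is only an illustration of the two steps described above, namely collapsing the 5 quizzes of the class of 2017 into 3 items and computing a Spearman rank correlation between average quiz and average exam scores. The function names and all scores are hypothetical and are not study data. Ties receive the mean of their ranks, and the P-value and the Shapiro-Wilk check are intentionally omitted.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        tied_rank = (start + end) / 2 + 1  # mean of 1-based positions in the run
        for k in range(start, end + 1):
            ranks[order[k]] = tied_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def combine_2017_quizzes(q1, q2, q3, q4, q5):
    """Collapse 5 quiz scores into 3 items: q1, mean(q2, q3), mean(q4, q5)."""
    return [q1, (q2 + q3) / 2, (q4 + q5) / 2]


# Hypothetical scores for five students (quizzes out of 5, exams out of 100).
quiz_rows = [(5, 4, 5, 3, 4), (3, 3, 2, 4, 3), (4, 5, 5, 5, 4),
             (2, 3, 3, 2, 2), (5, 5, 4, 4, 5)]
exam_avg = [88.0, 74.5, 91.0, 70.0, 85.5]

quiz_avg = [mean(combine_2017_quizzes(*row)) for row in quiz_rows]
rho = spearman_rho(quiz_avg, exam_avg)
print(f"Spearman rho = {rho:.3f}")
```

Because the coefficient depends only on ranks, it does not require normally distributed scores, which is the reason given above for choosing this test.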

Table 1. Descriptive statistics comparing the classes of 2016 and 2017 (mean±standard deviation).

Table 2. Descriptive statistics comparing the classes of 2016 and 2017 (median and interquartile range).
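As an illustration of the summaries reported in Tables 1 and 2, the following Python sketch computes the mean, standard deviation, median, and interquartile range for one list of scores. The scores are hypothetical, the `describe` helper is our own naming, and the quartile convention (`method="inclusive"`) is an assumption that may differ from the one applied by Stata.

```python
from statistics import mean, median, quantiles, stdev


def describe(scores):
    """Return the mean, sample SD, median, and quartile bounds of one score list."""
    # method="inclusive" interpolates quartiles treating the data as the
    # full population; this convention is an assumption for the sketch.
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return {"mean": mean(scores), "sd": stdev(scores),
            "median": median(scores), "iqr": (q1, q3)}


# Hypothetical quiz scores (maximum 5 points each); not study data.
scores_by_class = {
    "2016": [1, 2, 3, 4, 5],
    "2017": [2, 3, 3, 4, 5, 5],
}

for label, scores in scores_by_class.items():
    s = describe(scores)
    print(f"Class of {label}: {s['mean']:.2f}±{s['sd']:.2f}, "
          f"median {s['median']} (IQR {s['iqr'][0]}-{s['iqr'][1]})")
```

Reporting the median and interquartile range alongside the mean is useful here because the scores were not normally distributed.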

Results

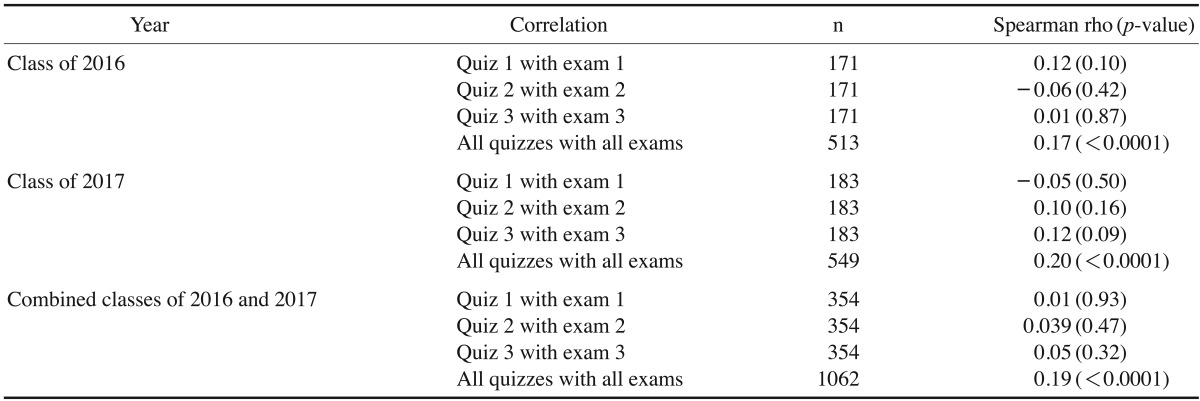

A positive but weak correlation was found between overall quiz scores and exam scores when the 2 classes were combined; that is, higher quiz scores were associated with higher exam scores. A positive but weak correlation was also found between students' performance on examinations and in-class LC quizzes (class of 2016), as well as between students' performance on examinations and online LC quizzes (class of 2017). No correlation was found between the exam and quiz scores for individual exams and quizzes; the correlations were only found for aggregated exam and quiz scores. These data are presented in Table 3.

Table 3. Correlation between the exams and the Learning Catalytics™ quizzes using the Spearman rank correlation.

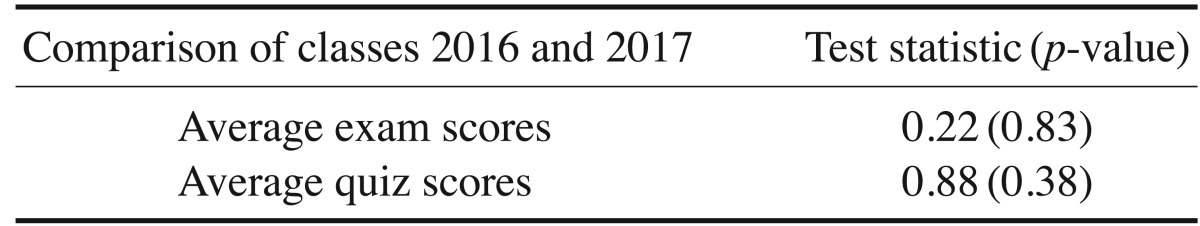

A comparison of the average exam score per student per class with the average quiz score found no significant differences in the median average exam score or median average quiz score between the classes of 2016 and 2017 (Table 4).

Table 4. Comparison of average exam and quiz scores for the classes of 2016 and 2017.

Discussion

The integration of technological tools into everyday life has increased the availability and use of such tools in education as well. The second-year predoctoral students in the OMFR course used their own smartphones or devices to log in to the LC program and access the quiz modules. The primary goal of introducing the quizzes before each exam in the course was to encourage earlier preparation for exams and to provide an opportunity for self-assessment and clarifying the subject matter with the instructors, leading to better understanding of the material. Our objective in this study was to evaluate the correlation between students' performance on exams and the LC quizzes. We found a direct overall correlation between quiz and exam performance, indicating that LC quizzes served as predictors of performance on the course exams. Our results are in agreement with those of Nayak and Erinjeri,11 who compared the use of the Audience Response System (ARS) and end-of-course examinations and reported that no significant differences were observed between the ARS results and those of end-of-course examinations (2.38 versus 2.04, P<.165).

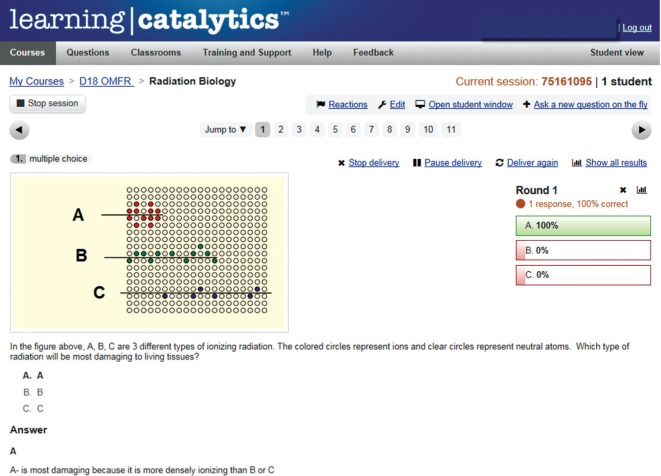

These quizzes also served as feedback to the students, as they could perform self-assessment and improve their understanding. Feedback has been listed as a major influence in learning. Figure 1 presents a screenshot of an LC window showing a question, the student's response and its accuracy, the number of students who responded to this question, and an explanation of why option A is the correct answer. Hattie and Timperley12 defined feedback as a "consequence" of performance and as information provided by an agent (e.g., a teacher, a book, a parent, oneself, or experience) regarding aspects of one's performance or understanding. To quote Kulhavy's13 1977 formulation, "Feedback has no effect in a vacuum; to be powerful in its effect, there must be a learning context to which feedback is addressed. It is but part of the teaching process and is that which happens second, after a student has responded to initial instruction, when information is provided regarding some aspect(s) of the student's task performance." The feedback model that we adopted for both the classes of 2016 and 2017 was aligned with this philosophy. For the class of 2016, immediate in-class feedback was directed at clarifying concepts relating to the incorrect responses and reinforcing the correct responses.

Fig. 1. The Learning Catalytics™ module shows the student view with the correct response and explanation.

For the class of 2017, students were allowed to respond to each question multiple times as they completed the quiz outside of class; students received feedback on each attempt, but only the last response was recorded. This allowed for continuous feedback as they completed the quiz. For both years, the students had access to the LC quiz module throughout the course, including during preparation for exams. Since the LC quiz grades and exam grades were directly correlated, albeit weakly, we are unsure whether the feedback had a direct impact on exam performance. The evaluation we performed in this study did not allow us to determine whether students' performance improved between the quiz and exam, but only found a correlation between these 2 parameters. However, the students indicated that the quizzes and feedback they received were excellent learning experiences. Similar to this study, Nayak and Erinjeri11 found that when comparing the use of ARS and end-of-course examinations to recognize students' mastery of course material, students reported no significant difference (1.77 versus 1.85, P<.712). In the same study, in terms of how to study for a course, students reported significantly increased insights from the use of an ARS rather than hearing verbal responses to questions asked by the lecturer (2.38 versus 3.27, P<.002).11

The LC platform can be used to obtain real-time feedback during class. In our study, for the class of 2016, the quizzes were scheduled and administered in class, followed by a brief feedback session. In contrast, for the class of 2017, the quizzes were completed online, the quizzes could be attempted more than once, and the students had a week to complete them. Despite these differences in the LC quiz formats, no statistically significant difference was found in the correlation of the quizzes with exam scores between the 2 classes. It is interesting that the class of 2016 was graded on accuracy while the class of 2017 was only graded for participation. Despite this seemingly major discrepancy, no significant difference was observed in the quiz grades for these two classes. The accuracy of the quiz grades for the class of 2017 was obtained and compared to the accuracy of the class of 2016 for the purposes of the study. The longer time frame to complete the quiz, ability to take the quiz at their own convenience, and the potentially lower anxiety related to grading only for participation and not accuracy did not seem to have made any difference in how the LC quiz grades related to exam grades. This leads to the question of whether it is necessary to grade self-assessment quizzes, especially if immediate feedback is provided; however, how seriously the quizzes would be taken by students if they are not graded is a question worth considering. The reason for our switch to online quizzes for the class of 2017 was that management of the LC module in class took away from classroom instruction time allotted to traditional lectures. This has been observed as an issue by other authors.14 Since no difference was found in student performance and learning between the in-class and online quiz models, class time may be structured to include more interactive or case-based education if quizzes do not take up class time.

ARSs have been in use for almost half a century, and various surveys have found that medical residents enjoy ARS lectures more than other didactic techniques. Many authors have shown that ARSs not only boost attentiveness, but also improve the short- and long-term retention of lecture material. While most studies relating to ARSs and other technological tools have focused on medical education,15,16,17,18 our study focused on dental education, and specifically oral and maxillofacial radiology. Our students also had positive comments regarding the use of LC modules in our course. Meckfessel et al.19 assessed student perspectives on the use of e-learning tools in dental radiology and received favorable feedback. The authors also reported a positive correlation between the 'e-program' and improved test scores. Their e-program provided an independent interactive learning tool for the students. The LC tool that we used was more of an extension of what the lecturers taught in class and allowed us to continuously monitor how the students were learning, which enabled continuous modifications in our teaching. One of the biggest advantages of using any audience response technology is the anonymity it provides students. It allows for a bidirectional flow of questions between the instructor and students. Students may feel uncomfortable exposing their lack of knowledge when answering or asking questions in the presence of their peers. LC is useful in this regard, as the instructor can send out spontaneous, on-the-fly questions to the class and vice versa, fairly quickly. In a large class, such as in our course with more than 170 students, reading and responding to all questions posted in class did require a substantial time investment outside of class for the instructors. Nonetheless, it did open up a channel of effective communication between the class as a whole and the instructors.

In the final course evaluations, both the classes of 2016 and 2017 had positive feedback about the use of LC in the radiology course. With only 3 exams in the course, the additional quizzes in LC were perceived as aids to understanding the material. Many students in the class of 2017 commented positively on the online LC quizzes. Two of the assessment statements in the evaluation survey used for all didactic courses at the school that specifically addressed learning in the course are: "This course effectively promoted learning" and "The course material enhanced previous learning." The students used a 6-point scale in the evaluation (1, strongly agree; 2, agree; 3, neutral; 4, disagree; 5, strongly disagree; 6, not applicable). The mean scores of the class of 2016 for these 2 statements were 1.59 and 1.64, respectively, while the mean scores of the class of 2017 for these 2 statements were 1.56 and 1.57, respectively. The difference in the format of the LC quizzes did not impact student-perceived learning in the course. Of course, the LC modules were a small part of the entire course and these student assessments dealt with the entire course structure. Despite the large class size, every attempt was made by the course instructors to make the course as interactive as possible by encouraging class participation and discussion. These student evaluations refer to this interactive course model, which also includes the LC modules. We are in agreement with Richardson15 in our strong belief that learning cannot be affected by the introduction of a technological tool alone, but the impact that technological tools can have in enabling an interactive learning environment is impressive. The LC platform provides an excellent technological tool for enhancing learning by improving bidirectional communication in a learning environment.

References

- 1. Petty J. Interactive, technology-enhanced self-regulated learning tools in healthcare education: a literature review. Nurse Educ Today. 2013;33:53–59. doi: 10.1016/j.nedt.2012.06.008.

- 2. Ray K, Berger B. Challenges in healthcare education: a correlation study of outcomes using two learning techniques. J Nurses Staff Dev. 2010;26:49–53. doi: 10.1097/NND.0b013e3181d4782c.

- 3. Knowles MS. The modern practice of adult education: from pedagogy to andragogy. New York: Cambridge Book Co; 1989.

- 4. Nicol D. E-assessment by design: using multiple-choice tests to good effect. J Further High Educ. 2007;31:53–64.

- 5. Schneiderman J, Corbridge S, Zerwic JJ. Demonstrating the effectiveness of an online, computer-based learning module for arterial blood gas analysis. Clin Nurse Spec. 2009;23:151–155. doi: 10.1097/NUR.0b013e3181a075bc.

- 6. Dennison HA. Creating a computer-assisted learning module for the non-expert nephrology nurse. Nephrol Nurs J. 2011;38:41–53.

- 7. Nicol DJ, Macfarlane-Dick D. Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud High Educ. 2006;31:199–218.

- 8. Sandahl SS. Collaborative testing as a learning strategy in nursing education. Nurs Educ Perspect. 2010;31:142–147.

- 9. JISC. Effective practice with e-assessment - an overview of technologies, policies and practice in further and higher education [Internet] JISC; 2007. [cited 2016 Mar 15]. Available from http://www.webarchive.org.uk/wayback/archive/20140615085433/http://www.jisc.ac.uk/media/documents/themes/elearning/effpraceassess.pdf.

- 10. Bruff D. Teaching with classroom response systems: creating active learning environments. San Francisco: Jossey-Bass; 2009.

- 11. Nayak L, Erinjeri JP. Audience response systems in medical student education benefit learners and presenters. Acad Radiol. 2008;15:383–389. doi: 10.1016/j.acra.2007.09.021.

- 12. Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77:81–112.

- 13. Kulhavy RW. Feedback in written instruction. Rev Educ Res. 1977;47:211–232.

- 14. Tsai FS, Yuen C, Cheung NM. Interactive learning in preuniversity mathematics; IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE) 2012 (TALE2012); 2012 Aug 20-23; Hong Kong. IEEE: 2012. p. T2D-8-13.

- 15. Richardson ML. Audience response techniques for 21st century radiology education. Acad Radiol. 2014;21:834–841. doi: 10.1016/j.acra.2013.09.026.

- 16. Bhargava P, Lackey AE, Dhand S, Moshiri M, Jambhekar K, Pandey T. Radiology education 2.0 - on the cusp of change: part 1. Tablet computers, online curriculums, remote meeting tools and audience response systems. Acad Radiol. 2013;20:364–372. doi: 10.1016/j.acra.2012.11.002.

- 17. Schackow TE, Chavez M, Loya L, Friedman M. Audience response system: effect on learning in family medicine residents. Fam Med. 2004;36:496–504.

- 18. Latessa R, Mouw D. Use of an audience response system to augment interactive learning. Fam Med. 2005;37:12–14.

- 19. Meckfessel S, Stühmer C, Bormann KH, Kupka T, Behrends M, Matthies H, et al. Introduction of e-learning in dental radiology reveals significantly improved results in final examination. J Craniomaxillofac Surg. 2011;39:40–48. doi: 10.1016/j.jcms.2010.03.008.