Significance

A core objective of trials comparing alternative medical treatments is to inform treatment choice in clinical practice, and yet conventional practice in designing trials has been to choose a sample size that yields specified statistical power. Power, a concept in the theory of hypothesis testing, is at most loosely connected to effective treatment choice. This paper develops an alternative principle for trial design that aims to directly benefit medical decision making. We propose choosing a sample size that enables implementation of near-optimal treatment rules. Near optimality means that treatment choices are suitably close to the best that could be achieved if clinicians were to know with certainty mean treatment response in their patient populations.

Keywords: clinical trials, sample size, medical decision making, near optimality

Abstract

Medical research has evolved conventions for choosing sample size in randomized clinical trials that rest on the theory of hypothesis testing. Bayesian statisticians have argued that trials should be designed to maximize subjective expected utility in settings of clinical interest. This perspective is compelling given a credible prior distribution on treatment response, but there is rarely consensus on what the subjective prior beliefs should be. We use Wald’s frequentist statistical decision theory to study design of trials under ambiguity. We show that ε-optimal rules exist when trials have large enough sample size. An ε-optimal rule has expected welfare within ε of the welfare of the best treatment in every state of nature. Equivalently, it has maximum regret no larger than ε. We consider trials that draw predetermined numbers of subjects at random within groups stratified by covariates and treatments. We report exact results for the special case of two treatments and binary outcomes. We give simple sufficient conditions on sample sizes that ensure existence of ε-optimal treatment rules when there are multiple treatments and outcomes are bounded. These conditions are obtained by application of Hoeffding large deviations inequalities to evaluate the performance of empirical success rules.

A core objective of randomized clinical trials (RCTs) comparing alternative medical treatments is to inform treatment choice in clinical practice. However, the conventional practice in designing trials has been to choose a sample size that yields specified statistical power. Power, a concept in the statistical theory of hypothesis testing, is at most loosely connected to effective treatment choice.

This paper develops an alternative principle for trial design that aims to directly benefit medical decision making. We propose choosing a sample size that enables implementation of near-optimal treatment rules. Near optimality means that treatment choices are suitably close to the best that could be achieved if clinicians were to know with certainty mean treatment response in their patient populations. We report exact results for the case of two treatments and binary outcomes. We derive simple formulas to compute sufficient sample sizes in clinical trials with multiple treatments.

Whereas our immediate concern is to improve the design of RCTs, our work contributes more broadly by adding to the reasons why scientists and the general public should question the hegemony of hypothesis testing as a methodology used to collect and analyze sample data. It has become common for scientists to express concern that evaluation of empirical research by the outcome of statistical hypothesis tests generates publication bias and diminishes the reproducibility of findings. See, for example, ref. 1 and the recent statement by the American Statistical Association (2). We call attention to a further deficiency of testing. In addition to providing an unsatisfactory basis for evaluation of research that uses sample data, testing also is deficient as a basis for the design of data collection.

Background

The Conventional Practice.

The conventional use of statistical power calculations to set sample size in RCTs derives from the presumption that data on outcomes in a classical trial with perfect validity will be used to test a specified null hypothesis against an alternative. A common practice is to use the outcome of a hypothesis test to recommend whether a patient population should receive a status quo treatment or an innovation. The usual null hypothesis is that the innovation is no better than the status quo and the alternative is that the innovation is better. If the null hypothesis is not rejected, it is recommended that the status quo treatment should continue to be used. If the null is rejected, it is recommended that the innovation should replace the status quo as the treatment of choice.

The standard practice has been to perform a test that fixes the probability of rejecting the null hypothesis when it is correct, called the probability of a type I error. Sample size then determines the probability of failing to reject the null hypothesis when the alternative is correct, called the probability of a type II error. The power of a test is defined as one minus the probability of a type II error. The convention has been to choose a sample size that yields specified power at some value of the effect size deemed clinically important.

The US Food and Drug Administration (FDA) uses such a test to approve new treatments. A pharmaceutical firm wanting approval of a new drug (the innovation) performs RCTs that compare the new drug with an approved drug or placebo (the status quo). An FDA document providing guidance for the design of RCTs evaluating new medical devices states that the probability of a type I error is conventionally set to 0.05 and that the probability of a type II error depends on the claim for the device but should not exceed 0.20 (3). The International Conference on Harmonisation has provided similar guidance for the design of RCTs evaluating pharmaceuticals, stating (ref. 4, p. 1923) “Conventionally the probability of type I error is set at 5% or less or as dictated by any adjustments made necessary for multiplicity considerations; the precise choice may be influenced by the prior plausibility of the hypothesis under test and the desired impact of the results. The probability of type II error is conventionally set at 10% to 20%.”

Trials with samples too small to achieve conventional error probabilities are called “underpowered” and are regularly criticized as scientifically useless and medically unethical. For example, Halpern et al. (ref. 5, p. 358) write “Because such studies may not adequately test the underlying hypotheses, they have been considered ‘scientifically useless’ and therefore unethical in their exposure of participants to the risks and burdens of human research.” Trials with samples larger than needed to achieve conventional error probabilities are called “overpowered” and are sometimes criticized as unethical. For example, Altman (ref. 6, p. 1336) writes “A study with an overlarge sample may be deemed unethical through the unnecessary involvement of extra subjects and the correspondingly increased costs.”

Deficiencies of Using Statistical Power to Choose Sample Size.

There are multiple reasons why choosing sample size to achieve specified statistical power may yield unsatisfactory results for medical decisions. These include the following:

i) Use of conventional asymmetric error probabilities: As discussed above, it has been standard to fix the probability of type I error at 5% and the probability of type II error for a clinically important alternative at 10–20%, which implies that the probability of type II error approaches 95% for alternatives close to the null. The theory of hypothesis testing gives no rationale for selection of these conventional error probabilities. In particular, it gives no reason why a clinician concerned with patient welfare should find it reasonable to make treatment choices that have a substantially greater probability of type II than type I error.

ii) Inattention to magnitudes of losses when errors occur: A clinician should care about more than the probabilities of types I and II error. He should care as well about the magnitudes of the losses to patient welfare that arise when errors occur. A given error probability should be less acceptable when the welfare difference between treatments is larger, but the theory of hypothesis testing does not take this welfare difference into account.

iii) Limitation to settings with two treatments: A clinician often chooses among several treatments and many clinical trials compare more than two treatments. However, the standard theory of hypothesis testing contemplates only choice between two treatments. Statisticians have struggled to extend it to deal sensibly with comparisons of multiple treatments (7, 8).

Bayesian Trial Design and Treatment Choice.

With these deficiencies in mind, Bayesian statisticians have long criticized the use of hypothesis testing to design trials and make treatment decisions. The literature on Bayesian statistical inference rejects the frequentist foundations of hypothesis testing, arguing for superiority of the Bayesian practice of using sample data to transform a subjective prior distribution on treatment response into a subjective posterior distribution. See, for example, refs. 9 and 10.

The literature on Bayesian statistical decision theory additionally argues that the purpose of trials is to improve medical decision making and concludes that trials should be designed to maximize subjective expected utility in decision problems of clinical interest. The usefulness of performing a trial is expressed by the expected value of information (11), defined succinctly in Meltzer (ref. 12, p. 119) as “the change in expected utility with the collection of information.” The Bayesian value of information provided by a trial crucially depends on the subjective prior distribution. The sample sizes selected in Bayesian trials may differ from those motivated by testing theory. See, for example, refs. 13 and 14.

The Bayesian perspective is compelling when a decision maker feels able to place a credible subjective prior distribution on treatment response. However, Bayesian statisticians have long struggled to provide guidance on specification of priors and the matter continues to be controversial. See, for example, the spectrum of views expressed by the authors and discussants of ref. 9. The controversy suggests that inability to express a credible prior is common in actual decision settings.

Uniformly Satisfactory Trial Design and Treatment Choice with the Minimax-Regret Criterion.

When it is difficult to place a credible subjective distribution on treatment response, a reasonable way to make treatment choices is to use a decision rule that achieves uniformly satisfactory results, whatever the true distribution of treatment response may be. There are multiple ways to formalize the idea of uniformly satisfactory results. One prominent idea motivates the minimax-regret (MMR) criterion.

Minimax regret was first suggested as a general principle for decision making under uncertainty by Savage (15) within an essay commenting on the seminal Wald (16) development of statistical decision theory. Wald considered the broad problem of using sample data to make decisions when one has incomplete knowledge of the choice environment, called the state of nature. He recommended evaluation of decision rules as procedures, specifying how a decision maker would use whatever data may be realized. In particular, he proposed measurement of the mean performance of decision rules across repetitions of the sampling process. This method grounds the Wald theory in frequentist rather than Bayesian statistical thinking. See refs. 17 and 18 for comprehensive expositions.

Considering the Wald framework, Savage defined the regret associated with choice of a decision rule in a particular state of nature to be the mean loss in welfare that would occur across repeated samples if one were to choose this rule rather than the one that is best in this state of nature. The actual decision problem requires choice of a decision rule without knowing the true state of nature. The decision maker can evaluate a rule by the maximum regret that it may yield across all possible states of nature. He can then choose a rule that minimizes the value of maximum regret. Doing so yields a rule that is uniformly satisfactory in the sense of yielding the best possible upper bound on regret, whatever the true state of nature may be.

It is important to understand that maximum regret as defined by Savage is computed ex ante, before one chooses an action. It should not be confused with the familiar psychological notion of regret, which a person may perceive ex post after choosing an action and observing the true state of nature.

A decision made by the MMR criterion is invariant with respect to increasing affine transformations of welfare, but it may vary when welfare is transformed nonlinearly. The MMR criterion shares this property with expected utility maximization.

The MMR criterion is sometimes confused with the maximin criterion. A decision maker using the maximin criterion chooses an action that maximizes the minimum welfare that might possibly occur. Someone using the MMR criterion chooses an action that minimizes the maximum loss to welfare that can possibly result from not knowing the welfare function. Whereas the maximin criterion considers only the worst outcome that an action may yield, MMR considers the worst outcome relative to what is achievable in a given state of nature. Savage (15), when introducing the MMR criterion, distinguished it sharply from maximin, writing that the latter criterion is “ultrapessimistic” whereas the former is not.
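The contrast between the two criteria can be seen in a small sketch. The welfare table below is hypothetical (two actions, two states of nature) and is chosen only so that maximin and MMR disagree; the function names are our own.

```python
# Hypothetical welfare table: rows are actions, columns are states of nature.
WELFARE = {
    "A": [0.5, 0.5],
    "B": [0.4, 1.0],
}

def maximin_choice(welfare):
    """Choose the action whose worst-case welfare is largest."""
    return max(welfare, key=lambda a: min(welfare[a]))

def mmr_choice(welfare):
    """Choose the action whose worst-case regret is smallest.

    Regret in a state is the best welfare achievable in that state
    minus the welfare the action yields there.
    """
    n_states = len(next(iter(welfare.values())))
    best = [max(w[s] for w in welfare.values()) for s in range(n_states)]

    def max_regret(a):
        return max(best[s] - welfare[a][s] for s in range(n_states))

    return min(welfare, key=max_regret)

print(maximin_choice(WELFARE))  # A
print(mmr_choice(WELFARE))      # B
```

Action A guarantees the best worst-case outcome, but in the second state it forgoes 0.5 relative to B; action B is never more than about 0.1 below the best achievable action in either state, which is what MMR rewards.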

Since the early 2000s, various authors have used the MMR criterion to study how a decision maker might use RCT data to subsequently choose treatments for the members of a population (19–27). In these studies, the decision maker’s objective has been expressed as maximization of a welfare function that sums treatment outcomes across the population. For example, the objective may be to maximize the 5-y survival rate of a population of patients with cancer or the average number of quality-adjusted life years of a population with a chronic disease.

The MMR criterion is applicable in general settings with multiple treatments. Regret is easiest to explain when there are two treatments, say A and B. If treatment A is better, regret is the probability of a type I error (choosing B) times the magnitude of the resulting loss in population welfare due to assigning the inferior treatment. Symmetrically, if treatment B is better, regret is the probability of a type II error (choosing A) times the magnitude of the resulting loss in population welfare due to foregoing the superior treatment. In contrast to the use of hypothesis testing to choose a treatment, the MMR criterion views types I and II error probabilities symmetrically and it assesses the magnitudes of the losses that errors produce.

Whereas the work cited above has used the MMR criterion to guide treatment choice after a trial has been performed, the present paper uses it to guide the design of RCTs. We focus on classical trials possessing perfect validity that compare alternative treatments relevant to clinical practice. Treatments may include placebo if it is a relevant clinical option or if it is considered equivalent to prescribing no treatment (28, 29). In particular, we study trials that draw subjects at random within groups of predetermined size stratified by covariates and treatments. Trials Enabling Near-Optimal Treatment Rules summarizes the major findings. Supporting Information provides underlying technical analysis.

Trials Enabling Near-Optimal Treatment Rules

General Ideas.

An ideal objective for trial design would be to collect data that enable subsequent implementation of an optimal treatment rule in a population of interest—one that always selects the best treatment, with no chance of error. Optimality is too strong a property to be achievable with trials having finite sample size, but near-optimal rules exist when classical trials with perfect validity have large enough size.

Given a specified ε > 0, an ε-optimal rule is one whose mean performance across samples is within ε of the welfare of the best treatment, whatever the true state of nature may be. Equivalently, an ε-optimal rule has maximum regret no larger than ε. Thus, an ε-optimal rule exists if and only if the MMR rule has maximum regret no larger than ε.
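A compact statement of these definitions, in notation we introduce here for illustration only (no covariates; μ_{ts} is the mean outcome of treatment t in state s, and E_s[W(δ)] is the mean welfare that rule δ achieves across repeated samples in state s), assuming a minimax-regret rule exists so that the minimum below is attained:

$$
\begin{aligned}
R_s(\delta) &= \max_{t \in T} \mu_{ts} - \mathbb{E}_s[W(\delta)] && \text{(regret of } \delta \text{ in state } s\text{)} \\
\delta \text{ is } \varepsilon\text{-optimal} &\iff \sup_{s \in S} R_s(\delta) \le \varepsilon \\
\text{an } \varepsilon\text{-optimal rule exists} &\iff \min_{\delta} \sup_{s \in S} R_s(\delta) \le \varepsilon
\end{aligned}
$$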

Choosing sample size to enable existence of ε-optimal treatment rules provides an appealing criterion for design of trials that aim to inform treatment choice. Implementation of the idea requires specification of a value for ε. The need to choose an effect size of interest when designing trials already arises in conventional practice, where the trial planner must specify the alternative hypothesis to be compared with the null. A possible way to specify ε is to make it equal the minimum clinically important difference (MCID) in the average treatment effect comparing alternative treatments.

Medical research has long distinguished between the statistical and the clinical significance of treatment effects (30). Although the idea of clinical significance has been interpreted in various ways, many writers call an average treatment effect clinically significant if its magnitude is greater than a specified value deemed minimally consequential in clinical practice. The International Conference on Harmonisation (ICH) put it this way (ref. 4, p. 1923): “The treatment difference to be detected may be based on a judgment concerning the minimal effect which has clinical relevance in the management of patients.”

Research articles reporting trial findings sometimes pose particular values of MCIDs when comparing alternative treatments for specific diseases. For example, in a study comparing drug treatments for hypertension, Materson et al. (31) defined the outcome of interest to be the fraction of subjects who achieve a specified threshold for blood pressure. The authors took the MCID to be the fraction 0.15, stating that this fraction is “the difference specified in the study design to be clinically important,” and reported groups of drugs “whose effects do not differ from each other by more than 15 percent” (ref. 31, p. 916).

Findings with Binary Outcomes, Two Treatments, and Balanced Designs.

Determination of sample sizes that enable near-optimal treatment is simple in settings with binary outcomes (coded 0 and 1 for simplicity), two treatments, and a balanced design that assigns the same number of subjects to each treatment group. Table 1 provides exact computations of the minimum sample size that enables ε optimality when a clinician uses one of three different treatment rules, for various values of ε.

Table 1.

Minimum sample sizes per treatment enabling ε-optimal treatment choice: binary outcomes, two treatments, balanced designs

| ε | ES rule | One-sided 5% z test | One-sided 1% z test |
|---|---|---|---|
| 0.01 | 145 | 3,488 | 7,963 |
| 0.03 | 17 | 382 | 879 |
| 0.05 | 6 | 138 | 310 |
| 0.10 | 2 | 33 | 79 |
| 0.15 | 1 | 16 | 35 |

The first column in Table 1 shows the minimum sample size (per treatment arm) that yields ε optimality when a clinician uses the empirical success (ES) rule to make a treatment decision. The ES rule chooses the treatment with the better average outcome in the trial. The rule assigns half the population to each treatment if there is a tie. It is known that the ES rule is the minimax-regret rule in settings with binary outcomes, two treatments, and balanced designs (25).
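The ES column can be approximated with a short Python sketch. It evaluates regret, the effect size times the probability that the ES rule assigns patients to the inferior arm (ties counted with weight 1/2), over a grid of success probabilities. The function names and grid resolution are our own choices; because the grid only samples the state space, it can slightly understate maximum regret, so it is a check on, not a substitute for, the exact values in Table 1.

```python
from math import comb

def binom_pmf(n, p):
    """Binomial(n, p) probabilities of 0, 1, ..., n successes."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

def wrong_choice_prob(f_better, f_worse):
    """Probability that the ES rule assigns a patient to the worse arm.

    f_better, f_worse: success-count pmfs of the two arms (same n).
    A tie splits the population in half, so ties carry weight 1/2.
    """
    n = len(f_better) - 1
    # tail[k] = P(worse arm has more than k successes), built right to left
    tail = [0.0] * (n + 1)
    for k in range(n - 1, -1, -1):
        tail[k] = tail[k + 1] + f_worse[k + 1]
    return sum(f_better[k] * (tail[k] + 0.5 * f_worse[k]) for k in range(n + 1))

def es_max_regret(n, grid=100):
    """Largest ES-rule regret over success probabilities on a 1/grid lattice."""
    pts = [i / grid for i in range(grid + 1)]
    pmfs = [binom_pmf(n, p) for p in pts]
    worst = 0.0
    # The ES rule treats arms symmetrically, so let arm i be the better one.
    for i in range(len(pts)):
        for j in range(i):
            regret = (pts[i] - pts[j]) * wrong_choice_prob(pmfs[i], pmfs[j])
            worst = max(worst, regret)
    return worst

def es_min_sample_size(eps, grid=100, n_max=500):
    """Smallest n per arm whose grid maximum regret is at most eps."""
    for n in range(1, n_max + 1):
        if es_max_regret(n, grid) <= eps:
            return n
    return None

print(round(es_max_regret(1), 6))  # 0.125
print(es_min_sample_size(0.10))    # 2
```

For n = 1 the error probability is 1/2 − d/2 at effect size d, so regret d(1 − d)/2 peaks at 0.125 when d = 0.5; the search therefore first reaches ε = 0.10 at n = 2. Larger n, such as the 145 needed for ε = 0.01, works the same way but is slow in pure Python.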

The second and third columns in Table 1 display the minimum sample sizes that yield ε optimality of rules based on one-sided 5% and 1% hypothesis tests. There is no consensus on what hypothesis test should be used to compare two proportions. We report results based on the widely used one-sided two-sample z test, which is based on an asymptotic normal approximation (32).

The findings are remarkable. A sample as small as 2 observations per treatment arm makes the ES rule ε optimal when ε = 0.1 and a sample of size 145 suffices when ε = 0.01. The minimum sample sizes required for ε optimality of the test rules are orders of magnitude larger. If the z test of size 0.05 is used, a sample of size 33 is required when ε = 0.1 and 3,488 when ε = 0.01. The sample sizes have to be more than double these values if the z test of size 0.01 is used.
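The test-based columns can be explored in the same way. The sketch below assumes a pooled-variance one-sided z statistic that adopts the innovation only when the statistic exceeds the 1 − α standard normal quantile, and keeps the status quo when all outcomes are identical (so the statistic is undefined). These conventions are our assumptions, so its output need not reproduce Table 1 exactly. Regret counts a missed improvement when the innovation is better and a wrongful adoption when it is worse.

```python
from math import comb, sqrt
from statistics import NormalDist

def binom_pmf(n, p):
    """Binomial(n, p) probabilities of 0, 1, ..., n successes."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

def z_rejects(x_new, x_sq, n, z_crit):
    """Pooled one-sided two-sample z test of H0: innovation is no better."""
    pooled = (x_new + x_sq) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0.0:  # all outcomes identical: keep the status quo
        return False
    return (x_new - x_sq) / n / se > z_crit

def z_max_regret(n, alpha=0.05, grid=20):
    """Largest regret of the test-based rule over a grid of success rates."""
    z_crit = NormalDist().inv_cdf(1 - alpha)
    reject = [[z_rejects(a, b, n, z_crit) for b in range(n + 1)]
              for a in range(n + 1)]
    pts = [i / grid for i in range(grid + 1)]
    pmfs = [binom_pmf(n, p) for p in pts]
    worst = 0.0
    for i, p_new in enumerate(pts):
        for j, p_sq in enumerate(pts):
            p_rej = sum(pmfs[i][a] * pmfs[j][b]
                        for a in range(n + 1) for b in range(n + 1)
                        if reject[a][b])
            if p_new > p_sq:
                regret = (p_new - p_sq) * (1 - p_rej)  # innovation wrongly rejected
            else:
                regret = (p_sq - p_new) * p_rej        # innovation wrongly adopted
            worst = max(worst, regret)
    return worst

print(round(z_max_regret(10), 3))
```

With n = 10 per arm the test rule's maximum regret on this grid exceeds 0.1, whereas Table 1 indicates that six subjects per arm already bring the ES rule within 0.05. Most of that regret comes from effective innovations that the test fails to adopt.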

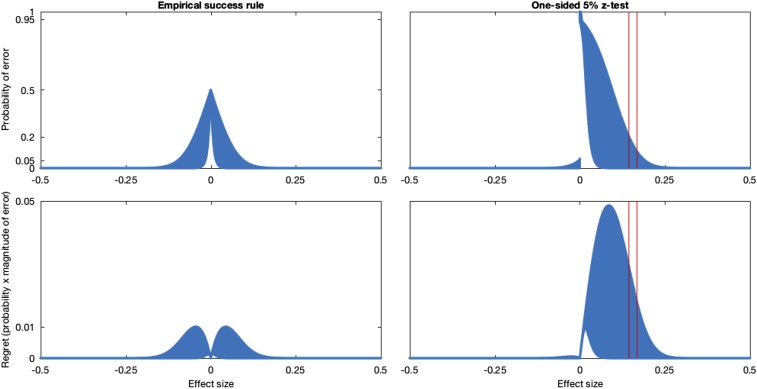

Fig. 1 illustrates the difference between error probabilities and regret incurred by the ES rule and the 5% z-test rule for a sample size of 145 per arm, the minimum sample size yielding ε optimality when ε = 0.01. Fig. 1, Upper shows how the probability of error varies with the effect size for all possible distributions of treatment response with effect sizes in the range [−0.5, 0.5]. Fig. 1, Lower displays the regret (probability of error times the effect size) of the same treatment rules. Maximum regret occurs at intermediate effect sizes. For small effect sizes, regret is small because choosing the wrong treatment entails little loss of welfare. Regret is also small for large effect sizes, because the probability of error declines rapidly as the effect size grows. Traditional power calculations are not informative about the maximum regret of a test-based rule. Two red vertical lines in Fig. 1 mark effect sizes at which the z test has at least 80% and 90% power. Neither of these effect sizes corresponds to the effect size where regret is maximal.

Fig. 1.

Error probabilities and regret for empirical success and one-sided 5% z-test rules.

Findings with Bounded Outcomes and Multiple Treatments.

In principle, the existence of ε-optimal treatment rules under any design can be determined by computing the maximum regret of the minimax-regret rule. In practice, determination of the minimax-regret rule and its maximum regret may be burdensome. To date, exact minimax-regret decision rules have been derived only for the case of two treatments with equal or nearly equal sample sizes (24–26). Hence, it is useful to have simple sufficient conditions that ensure existence of ε-optimal rules more generally. The conditions we derive below hold in all settings where outcomes are bounded. Our findings apply to situations in which there are multiple treatments, not just two. They also apply when trials stratify patients into groups with different observable covariates, such as demographic attributes and risk factors.

To show that a specified trial design enables ε-optimal treatment rules, it suffices to consider a particular rule and to show that this rule is ε optimal when used with this design. We focus on empirical success rules for both practical and analytical reasons. Choosing a treatment with the highest reported mean outcome is a simple and plausible way in which a clinician may use the results of an RCT. Two analytical reasons further motivate interest in ES rules when outcomes are bounded. First, these rules either exactly or approximately minimize maximum regret in various settings with two treatments when sample size is moderate (25, 26) and asymptotic (23). Second, large-deviations inequalities derived in ref. 33 allow us to obtain informative and easily computable upper bounds on the maximum regret of ES rules applied with any number of treatments. These upper bounds on maximum regret immediately yield sample sizes that ensure an ES rule is ε optimal.

Propositions 1 and 2 (Supporting Information) present two alternative upper bounds on the maximum regret of an ES rule. Proposition 1 extends findings of Manski (19) from two to multiple treatments whereas Proposition 2 derives a new large-deviations bound for multiple treatments. When the design is balanced, these bounds are

$$(K-1)(2e)^{-1/2}\, M\, n^{-1/2}, \qquad [1]$$

$$(\ln K)^{1/2}\, M\, n^{-1/2}, \qquad [2]$$

where n is the sample size per arm, K is the number of treatment arms, and M is the width of the range of possible outcomes. Proposition 3 (Supporting Information) shows that the bounds on maximum regret derived in Propositions 1 and 2 are minimized by balanced designs. Table S1 gives numerical calculations for K ≤ 7. Trials Stratified by Observed Covariates extends these findings to settings where patients have observable covariates.

Table S1.

Bounds in Propositions 1 and 2 for balanced designs with n subjects per treatment

Propositions 1 and 2 imply sufficient conditions on sample sizes for ε optimality of ES rules. Proposition 1 implies that an ES rule is ε optimal if the sample size per treatment arm is at least

$$(K-1)^2 (2e)^{-1} M^2 \varepsilon^{-2}. \qquad [3]$$

Proposition 2 implies that an ES rule is ε optimal if the sample size per treatment arm is at least

$$(\ln K)\, M^2 \varepsilon^{-2}. \qquad [4]$$

We find that when the design is balanced, Proposition 1 provides a tighter bound than Proposition 2 for two or three treatments. Proposition 2 gives a tighter bound for four or more treatments.
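The crossover between the two bounds is easy to check numerically. This sketch assumes that bound [1] takes the form (K − 1)(2e)^{−1/2} M n^{−1/2} and bound [2] the form (ln K)^{1/2} M n^{−1/2}, with the sufficient sample sizes [3] and [4] obtained by setting each bound equal to ε and solving for n. Because both bounds scale with M n^{−1/2}, comparing their values at M = n = 1 is enough.

```python
from math import e, log, sqrt

def bound_prop1(K, n, M=1.0):
    """Assumed form of bound [1]: (K - 1)(2e)^(-1/2) M n^(-1/2)."""
    return (K - 1) * M / sqrt(2 * e * n)

def bound_prop2(K, n, M=1.0):
    """Assumed form of bound [2]: (ln K)^(1/2) M n^(-1/2)."""
    return M * sqrt(log(K) / n)

def n_prop1(K, eps, M=1.0):
    """Sufficient n per arm from [3]: solve bound [1] = eps for n."""
    return (K - 1) ** 2 * M**2 / (2 * e * eps**2)

def n_prop2(K, eps, M=1.0):
    """Sufficient n per arm from [4]: solve bound [2] = eps for n."""
    return log(K) * M**2 / eps**2

for K in range(2, 8):
    c1, c2 = bound_prop1(K, 1), bound_prop2(K, 1)
    print(K, round(c1, 3), round(c2, 3), "Prop. 1" if c1 < c2 else "Prop. 2")
```

For two treatments and binary outcomes (M = 1), [3] asks for about 1,840 subjects per arm at ε = 0.01, against the exact 145 in Table 1, which shows how conservative the Hoeffding-based conditions can be.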

To illustrate the findings, consider the Materson et al. (31) study of treatment for hypertension. The outcome is binary with the range of possible outcomes M = 1. The study compared seven drug treatments and specified 0.15 as the MCID. We cannot know how the authors of the study, who reported results of traditional hypothesis tests, would have specified ε had they sought to achieve ε optimality. If they were to set ε = 0.15, application of bound 4 shows that an ES rule is ε optimal if the number of subjects per treatment arm is at least (ln 7) ⋅ (0.15)^{−2} ≈ 86.5. The actual study has an approximately balanced design, with between 178 and 188 subjects in each treatment arm. Application of bound 2 shows that a study with at least 178 subjects per arm is ε optimal for ε = (ln 7)^{1/2} ⋅ (178)^{−1/2} ≈ 0.105.
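The arithmetic of this example can be reproduced in a few lines, assuming bounds [2] and [4] take the forms (ln K)^{1/2} M n^{−1/2} and (ln K) M² ε^{−2}, which match the numbers quoted above.

```python
from math import ceil, log, sqrt

K, M, mcid = 7, 1.0, 0.15   # seven drugs, binary outcome, MCID of 0.15

# Bound [4]: n >= (ln K) M^2 eps^(-2) subjects per arm
n_needed = log(K) * M**2 / mcid**2
print(round(n_needed, 1), ceil(n_needed))  # 86.5 87

# Bound [2] solved for eps, given the smallest arm in the actual study
n_actual = 178
eps_achieved = M * sqrt(log(K) / n_actual)
print(round(eps_achieved, 3))  # 0.105
```

Since sample sizes are integers, bound 4 translates into at least 87 subjects per arm.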

It is important to bear in mind that Propositions 1 and 2 imply only simple sufficient conditions on sample sizes for ε optimality of ES rules, not necessary ones. These sufficient conditions use only the weak assumption that outcomes are bounded and they rely on Hoeffding large-deviations inequalities for bounded outcomes. In the special case with binary outcomes and two treatments and a balanced design, the sufficient sample sizes provided by Proposition 1 are roughly 10 times the size of the exact minimum sample sizes, depending on the value of ε. This result strongly suggests that it is worthwhile to compute exact minimum sample sizes whenever it is tractable to do so.

Trials Stratified by Observed Covariates.

Clinical trials often stratify participants by observable covariates, such as demographic attributes and risk factors, and report trial results separately for each group. We consider ε optimality of the ES rule that assigns individuals with covariates ξ to the treatment that yielded the highest average outcome among trial participants with covariates ξ.

There are at least two reasonable ways that a planner may wish to evaluate ε optimality in this setting. First, he may want to achieve ε optimality within each covariate group. This interpretation requires no new analysis. The planner should simply define each covariate group to be a separate population of interest and then apply the analysis of Findings with Binary Outcomes, Two Treatments, and Balanced Designs and Findings with Bounded Outcomes and Multiple Treatments to each group. The design that achieves group-specific ε optimality with minimum total sample size equalizes sample sizes across groups.

Alternatively, the planner may want to achieve ε optimality within the overall population, without requiring that it be achieved within each covariate group. Bounds 1 and 2 extend to the setting with covariates. With a balanced design assigning nξ individuals from covariate group ξ to each treatment, the maximum regret of an ES rule is bounded above by

$$(K-1)(2e)^{-1/2}\, M \sum_{\xi \in X} P(x = \xi)\, n_\xi^{-1/2}, \qquad [5]$$

$$(\ln K)^{1/2}\, M \sum_{\xi \in X} P(x = \xi)\, n_\xi^{-1/2}. \qquad [6]$$

The design that minimizes bound 5 or 6 for a given total sample size generally neither equalizes sample sizes across groups nor makes them proportional to the covariate distribution P(x = ξ). Instead, the relative sample sizes for any pair (ξ, ξ′) of covariate values have the approximate ratio

$$\frac{n_\xi}{n_{\xi'}} \approx \left[\frac{P(x = \xi)}{P(x = \xi')}\right]^{2/3}. \qquad [7]$$

Such trial designs make the covariate-specific sample size increase with the prevalence of the covariate group in the population, but less than proportionately. Covariate-specific maximum regret commensurately decreases with the prevalence of the covariate group.
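The 2/3 power can be checked with a small sketch. Assuming bounds [5] and [6] are constants times Σ_ξ P(x = ξ) n_ξ^{−1/2}, the design enters only through that sum; minimizing it subject to a fixed Σ_ξ n_ξ with a Lagrange multiplier gives n_ξ ∝ P(x = ξ)^{2/3}. The prevalences below are hypothetical, and the allocation is left unrounded.

```python
from math import sqrt

def design_factor(prevalence, n_per_group):
    """Sum over groups of P(x = xi) * n_xi^(-1/2), shared by bounds [5] and [6]."""
    return sum(p / sqrt(n) for p, n in zip(prevalence, n_per_group))

def optimal_allocation(prevalence, total_per_arm):
    """Continuous minimizer of design_factor given sum(n_xi) = total_per_arm.

    The Lagrange condition -p / (2 n^(3/2)) + lam = 0 gives n_xi proportional
    to P(x = xi)^(2/3); rounding to integers is left to the planner.
    """
    weights = [p ** (2 / 3) for p in prevalence]
    scale = total_per_arm / sum(weights)
    return [w * scale for w in weights]

prevalence = [0.7, 0.2, 0.1]          # hypothetical covariate distribution
alloc = optimal_allocation(prevalence, 300)
print([round(n, 1) for n in alloc])
print(design_factor(prevalence, alloc),
      design_factor(prevalence, [100, 100, 100]),   # equal group sizes
      design_factor(prevalence, [210, 60, 30]))     # proportional group sizes
```

With prevalences 0.7, 0.2, and 0.1 and 300 subjects per treatment arm, the sketch allocates roughly 176, 76, and 48 subjects to the three groups, which lowers the design factor relative to both equal and proportional allocations.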

Conclusion

Choosing sample sizes in clinical trials to enable near-optimal treatment rules would align trial design directly with the objective of informing treatment choice. In contrast, the conventional practice of choosing sample size to achieve specified statistical power in hypothesis testing is only loosely related to treatment choice. Our work adds to the growing concern of scientists that hypothesis testing provides an unsuitable methodology for collection and analysis of sample data.

We share with Bayesian statisticians who have written on trial design the objective of informing treatment choice. We differ in our application of the frequentist statistical decision theory developed by Wald, which does not require that one place a subjective probability distribution on treatment response. We use the concept of ε optimality, which is equivalent to having maximum regret no larger than ε.

There are numerous potentially fruitful directions for further research of the type initiated here. One is analysis of other types of trials. We have focused on trials that draw subjects at random within groups of predetermined size stratified by covariates and treatments. With further work, the ideas developed here should be applicable to trials where the numbers of subjects who have particular covariates and receive specific treatments are ex ante random rather than predetermined.

Our analysis assumed no prior knowledge restricting the variation of response across treatments and covariates. This assumption, which has been traditional in frequentist study of clinical trials, is advantageous in the sense that it yields generally applicable findings. Nevertheless, it is unduly conservative in circumstances where some credible knowledge of treatment response is available. One may, for example, think it credible to maintain some assumptions on the degree to which treatment response may vary across treatments or covariate groups. When such assumptions are warranted, it may be valuable to impose them.

We mentioned at the outset that medical conventions for choosing sample size pertain to classical trials possessing perfect validity. However, practical trials usually have only partial validity. For example, the experimental sample may be representative only of a part of the target treatment population, because experimental subjects typically are persons who meet specified criteria and who consent to participate in the trial. For this and other reasons, experimental data may only partially identify treatment response in the target treatment population. The concept of ε optimality extends to such situations.

Finally, we remark that our analysis followed the longstanding practice in medical research of evaluating trial designs by their informativeness about treatment response, without consideration of the cost of conducting trials. The concept of ε optimality can be extended to recognize trial cost as a determinant of welfare.

Principles for Evaluation of Trial Designs and Treatment Rules

The Decision Problem.

The setup is as in refs. 19 and 22. A planner must assign one of K treatments to each member of a treatment population, denoted J. Let T denote the finite set of K feasible treatments. Each j ∈ J has a response function u_j(⋅): T → R mapping treatments t ∈ T into individual welfare outcomes u_j(t) ∈ R. Treatment is individualistic; that is, a person’s outcome may depend on the treatment he is assigned but not on the treatments assigned to others. The population is a probability space (J, Ω, P), and the probability distribution P[u(⋅)] of the random function u(⋅): T → R describes treatment response across the population. The population is “large”; formally, J is uncountable and P(j) = 0 for all j ∈ J.

Let person j have observable covariates xj taking a value in a covariate space X; thus, x: J → X is the random variable mapping persons into their covariates. We suppose that X is finite with P(x = ξ) > 0, ∀ ξ ∈ X. We also suppose that the covariate distribution P(x) is known. The planner can systematically differentiate persons with different observed covariates, but he cannot distinguish among persons with the same observed covariates.

A statistical treatment rule (STR) maps sample data into a treatment allocation. Let Q denote the sampling distribution generating the available data and let Ψ denote the sample space; that is, Ψ is the set of data samples that may be drawn under Q. A feasible treatment rule is a function that assigns all persons with the same observed covariates to one treatment or, more generally, a function that randomly allocates such persons across the different treatments. Let Δ now denote the space of functions that map T × X × Ψ into the unit interval and that satisfy the adding-up conditions: δ ∈ Δ ⇒ ∑t∈Tδ(t, ξ, ψ) = 1, ∀ (ξ, ψ) ∈ X × Ψ. Then each function δ ∈ Δ defines a statistical treatment rule.

The planner wants to maximize population welfare, which adds welfare outcomes across persons. Given data ψ, the population welfare that would be realized if the planner were to choose rule δ is

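$$U(\delta, P, \psi) \equiv \sum_{\xi \in X} P(x = \xi) \sum_{t \in T} \delta(t, \xi, \psi)\, \mu_{t\xi}, \tag{S1}$$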

where μtξ ≡ E[u(t)|x = ξ] is the mean outcome of treatment t among individuals with covariates ξ. Inspection of [S1] shows that, whatever value ψ may take, it is optimal to set δ(t, ξ, ψ) = 0 if $\mu_{t\xi} < \max_{t' \in T} \mu_{t'\xi}$.
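As an illustration of [S1], the minimal Python sketch below evaluates population welfare for a fixed allocation, holding the data ψ fixed. It assumes covariate values and treatments are labeled by strings, and the hypothetical dictionaries `p_x`, `mu`, and `delta` hold P(x = ξ), μtξ, and δ(t, ξ, ψ); the example numbers are invented. It also shows that putting each covariate group entirely on a treatment with the largest μtξ attains the maximum.

```python
from typing import Dict, Tuple

Cell = Tuple[str, str]  # (treatment t, covariate value xi)

def population_welfare(p_x: Dict[str, float], mu: Dict[Cell, float],
                       delta: Dict[Cell, float]) -> float:
    """U(delta, P, psi) = sum_xi P(x = xi) sum_t delta(t, xi, psi) mu_{t xi}, data psi fixed."""
    treatments = sorted({t for (t, _) in mu})
    total = 0.0
    for xi, weight in p_x.items():
        shares = [delta.get((t, xi), 0.0) for t in treatments]
        if abs(sum(shares) - 1.0) > 1e-9:  # adding-up condition on delta
            raise ValueError(f"allocation for covariate {xi!r} does not add up to 1")
        total += weight * sum(s * mu[(t, xi)] for s, t in zip(shares, treatments))
    return total

def optimal_allocation(p_x: Dict[str, float], mu: Dict[Cell, float]) -> Dict[Cell, float]:
    """Assign each covariate group entirely to a treatment maximizing mu_{t xi}."""
    treatments = sorted({t for (t, _) in mu})
    delta = {}
    for xi in p_x:
        best = max(treatments, key=lambda t: mu[(t, xi)])
        for t in treatments:
            delta[(t, xi)] = 1.0 if t == best else 0.0
    return delta

p_x = {"young": 0.6, "old": 0.4}                  # hypothetical P(x = xi)
mu = {("a", "young"): 0.7, ("b", "young"): 0.5,   # hypothetical mean outcomes
      ("a", "old"): 0.3, ("b", "old"): 0.6}
print(population_welfare(p_x, mu, optimal_allocation(p_x, mu)))  # about 0.66
```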

The problem of interest is treatment choice when one does not have enough knowledge of P[u(⋅)|x] to determine the optimal treatment that maximizes μtξ for each ξ ∈ X.

Evaluating Treatment Rules by Their State-Dependent Welfare Distributions.

The starting point for development of implementable criteria for treatment choice under uncertainty is specification of a state space, say S. Thus, let {(Ps, Qs), s ∈ S} be the set of (P, Q) pairs that the planner deems possible.

Considered as a function of ψ, U(δ, Ps, ψ) is a random variable with state-dependent sampling distribution Qs[U(δ, Ps, ψ)]. Following Wald’s view of statistical decision functions as procedures, we use the vector {Qs[U(δ, Ps, ψ)], s ∈ S} of state-dependent welfare distributions to evaluate rule δ. In principle this vector is computable, whatever the state space and sampling process may be. Hence, in principle, a planner can compare the vectors of state-dependent welfare distributions yielded by different STRs and base treatment choice on this comparison.

How might a planner compare the state-dependent welfare distributions yielded by different STRs? The planner wants to maximize welfare, so it seems self-evident that he should weakly prefer rule δ to an alternative rule δ′ if, in every s ∈ S, Qs[U(δ, Ps, ψ)] equals or stochastically dominates Qs[U(δ′, Ps, ψ)]. It is less obvious how one should compare rules whose state-dependent welfare distributions are not uniformly ordered in this manner, as is typically the case.

Wald evaluated statistical decision functions by their mean performance across realizations of the sampling process and this has become the standard practice in the subsequent literature. The expected welfare yielded by rule δ in state s, denoted W(δ, Ps, Qs), is

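$$W(\delta, P_s, Q_s) \equiv \int_\Psi U(\delta, P_s, \psi)\, dQ_s(\psi) = \sum_{\xi \in X} P(x = \xi) \sum_{t \in T} E_s[\delta(t, \xi, \psi)]\, \mu_{st\xi}. \tag{S2}$$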

Here Es[δ(t, ξ, ψ)] ≡ ∫Ψδ(t, ξ, ψ)dQs(ψ) is the expected (across potential samples) fraction of persons with covariates ξ who are assigned to treatment t. We add subscript s to μstξ because mean treatment response varies across s ∈ S.
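The expectation Es[δ(t, ξ, ψ)] can be approximated by simulating the sampling process. The Python sketch below assumes a population without covariates, Bernoulli outcomes, n subjects drawn for each treatment, and an empirical success rule that splits ties equally (one admissible choice; the rule is defined formally later). The function names and the state `{"a": 0.6, "b": 0.4}` are hypothetical.

```python
import random
from typing import Dict

def es_shares(means: Dict[str, float]) -> Dict[str, float]:
    """ES allocation for one group: split equally among treatments with the top sample mean."""
    top = max(means.values())
    winners = [t for t, m in means.items() if m == top]
    return {t: (1.0 / len(winners) if t in winners else 0.0) for t in means}

def expected_welfare_mc(mu: Dict[str, float], n: int, reps: int = 10_000, seed: int = 0):
    """Monte Carlo estimate of W = sum_t E_s[delta(t, psi)] mu_st with n Bernoulli(mu[t])
    subjects per treatment. Returns (W, {t: estimated E_s[delta(t, psi)]})."""
    rng = random.Random(seed)
    share = {t: 0.0 for t in mu}
    for _ in range(reps):
        means = {t: sum(rng.random() < p for _ in range(n)) / n for t, p in mu.items()}
        for t, s in es_shares(means).items():
            share[t] += s / reps  # running average of the allocated fraction
    return sum(share[t] * mu[t] for t in mu), share

W, share = expected_welfare_mc({"a": 0.6, "b": 0.4}, n=20)
print(W, share)  # W lies between 0.4 and 0.6; regret in this state is 0.6 - W
```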

Optimality and ε Optimality of Treatment Rules.

A planner must confront the fact that the true state of nature is unknown. The maximum welfare achievable in each state s is

$$U^*(P_s) \equiv \sum_{\xi \in X} P(x = \xi) \max_{t \in T} \mu_{st\xi}. \tag{S3}$$

We define rule δ to be mean optimal if W(δ, Ps, Qs) = U*(Ps) for all s ∈ S. Mean optimality is desirable, but it is too strong to be achievable in general. The concept of mean ε optimality relaxes mean optimality, yielding a property that may be achievable in practice.

We define rule δ to be mean ε optimal for a specified ε > 0 if W(δ, Ps, Qs) ≥ U*(Ps) − ε for all s ∈ S. Randomized Trials with Sample Sizes Enabling ε-Optimal Treatment shows that mean ε-optimal treatment rules exist when treatment outcomes are bounded and classical trials have sufficient finite size, whatever the state space may be. This finding makes ε optimality a practical criterion for trial design.

Stating that an STR is ε optimal is equivalent to stating that it has maximum regret no larger than ε. By definition, the regret of rule δ in state s is U*(Ps) − W(δ, Ps, Qs). The maximum regret of δ across all states is $\max_{s \in S}[U^*(P_s) - W(\delta, P_s, Q_s)]$. Thus, maximum regret is less than or equal to ε if and only if U*(Ps) − W(δ, Ps, Qs) ≤ ε for all s ∈ S. Because the minimax-regret (MMR) rule minimizes maximum regret among all STRs, it follows that mean ε-optimal STRs exist with a specified design if and only if the maximum regret of the MMR rule is less than or equal to ε.

Randomized Trials with Sample Sizes Enabling ε-Optimal Treatment

We now investigate the existence of ε-optimal treatment rules when the data are generated by classical randomized trials. We specifically consider trials that draw subjects at random within groups stratified by covariates and treatments. Thus, for (t, ξ) ∈ T × X, the experimenter draws ntξ subjects at random from the subpopulation with covariates ξ and assigns them to treatment t. The set nTX ≡ [ntξ, (t, ξ) ∈ T × X] of stratum sample sizes defines the design. Let N(t, ξ) be the realized sample of subjects with covariates ξ who are assigned to treatment t. The data are the sample outcomes ψ = [uj, j ∈ N(t, ξ); (t, ξ) ∈ T × X]. We suppose throughout that the state space S contains all distributions of treatment response. Thus, the planner has no prior knowledge restricting the variation of response across treatments and covariates.

In principle, the existence of ε-optimal STRs under any design can be determined by computing the maximum regret of the MMR rule. As noted earlier, ε-optimal rules exist if and only if the MMR rule has maximum regret less than or equal to ε. In practice, determination of the MMR rule and computation of its maximum regret may be burdensome. Hence, it is useful to have simple sufficient conditions that ensure existence of ε-optimal rules. This section provides such conditions in settings where outcomes are bounded.

Sufficient Conditions for ε Optimality of Empirical Success Rules.

To show that a specified trial design enables ε-optimal STRs, it suffices to consider a particular STR and to show that this rule is ε optimal when used with this design. We focus on empirical success (ES) rules, which use the empirical distribution of the sample data to estimate the population distribution of treatment response. Formally, let mtξ(ψ) be the average outcome in treatment-covariate subsample N(t, ξ); that is, $m_{t\xi}(\psi) \equiv (1/n_{t\xi}) \sum_{j \in N(t,\xi)} u_j$. An ES rule δ assigns all persons with covariates ξ to treatments that maximize mtξ(ψ) over T. Thus, δ(t, ξ, ψ) = 0 if $m_{t\xi}(\psi) < \max_{t' \in T} m_{t'\xi}(\psi)$.
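A minimal Python sketch of an ES rule follows. It assumes the data ψ are stored as a dictionary from (t, ξ) cells to lists of observed outcomes (a hypothetical layout) and that ties in mtξ(ψ) are split equally across the tied treatments, which is one way to satisfy δ(t, ξ, ψ) = 0 off the maximizers.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def empirical_success_rule(data: Dict[Tuple[str, str], List[float]]) -> Dict[Tuple[str, str], float]:
    """Return delta[(t, xi)] for data psi = {(t, xi): outcomes in subsample N(t, xi)}.

    Persons with covariates xi go to treatments maximizing the subsample mean
    m_{t xi}(psi); ties are split equally (an assumption of this sketch)."""
    means = {cell: sum(vals) / len(vals) for cell, vals in data.items()}
    by_group = defaultdict(dict)
    for (t, xi), m in means.items():
        by_group[xi][t] = m
    delta = {}
    for xi, group in by_group.items():
        top = max(group.values())
        winners = [t for t, m in group.items() if m == top]
        for t in group:
            delta[(t, xi)] = 1.0 / len(winners) if t in winners else 0.0
    return delta

psi = {("a", "young"): [1, 0, 1, 1], ("b", "young"): [0, 1, 0, 1],   # hypothetical data
       ("a", "old"): [0, 0, 1, 0], ("b", "old"): [1, 0, 0, 0]}
print(empirical_success_rule(psi))  # "young" goes to a; "old" is a tie, split 50/50
```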

The case of two treatments has been studied previously in Manski (19), who exploited the large-deviations result of Hoeffding (33) to derive an upper bound on the maximum regret of a class of ES rules that condition treatment on alternative subsets of the observable covariates of population members. The bound takes a particularly simple form when one conditions on all observable covariates and the state space includes all distributions of treatment response.

Let outcomes lie in the bounded range [ul, uh], whose width we denote by M ≡ uh − ul. Label the two treatments t = a and t = b, and let S index all distributions of treatment response. Equation 23 in Manski (19) showed that the maximum regret of an ES rule δ is bounded from above as follows:

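$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le \tfrac{1}{2} e^{-1/2} M \sum_{\xi \in X} P(x = \xi) \left(n_{a\xi}^{-1} + n_{b\xi}^{-1}\right)^{1/2}. \tag{S4}$$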

Hence, an ES rule is ε optimal if the trial sample sizes satisfy the inequality

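$$\tfrac{1}{2} e^{-1/2} M \sum_{\xi \in X} P(x = \xi) \left(n_{a\xi}^{-1} + n_{b\xi}^{-1}\right)^{1/2} \le \varepsilon. \tag{S5}$$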

When the design is balanced, with ntξ = n for all (t, ξ), inequality S5 reduces to $(2e)^{-1/2} M n^{-1/2} \le \varepsilon$. Hence, an ES rule with a balanced design is ε optimal if $n \ge (2e)^{-1}(M/\varepsilon)^2$.
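The balanced two-treatment condition becomes a one-line sample size calculator. This Python sketch rounds (2e)^{-1}(M/ε)² up to an integer; M = 1 (binary outcomes) and ε = 0.05 are illustrative choices, and n is the number of subjects per (treatment, covariate) stratum.

```python
import math

def balanced_two_arm_n(M: float, eps: float) -> int:
    """Smallest n with (2e)^(-1/2) M n^(-1/2) <= eps, i.e. n >= (2e)^(-1) (M/eps)^2."""
    return math.ceil((M / eps) ** 2 / (2 * math.e))

n = balanced_two_arm_n(M=1.0, eps=0.05)
print(n)  # 74 subjects per (treatment, covariate) stratum
```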

In what follows we present findings that hold with any finite number K of treatments. Randomized Trials with Sample Sizes Enabling ε-Optimal Treatment, Large Deviation Bounds on Maximum Regret with Multiple Treatments considers trial design when members of the population have no observable covariates. Randomized Trials with Sample Sizes Enabling ε-Optimal Treatment, ε Optimality of Empirical Success Rules with Observable Covariates extends the analysis to settings with covariates.

Large Deviation Bounds on Maximum Regret with Multiple Treatments.

Propositions 1 and 2 present two alternative upper bounds on the maximum regret of an ES rule. Proposition 1 extends inequality S4 to multiple treatments whereas Proposition 2 derives a different type of bound. We find that when the design is balanced, with nt = n for all t, Proposition 1 provides a tighter bound than Proposition 2 when there are two or three treatments. Proposition 2 gives a tighter bound when there are four or more treatments. Proposition 3 shows that, for any given total sample size that is an integer multiple of K, the bounds on maximum regret derived in Propositions 1 and 2 are minimized by balanced designs.

In all propositions, a design is a vector of sample sizes (nt, t ∈ T) and $t^* \in \arg\min_{t \in T} n_t$ denotes a treatment with the smallest sample size. In the proofs we let t(s) designate any one of the optimal treatments in state s; that is, μst(s) ≥ μst for all t ∈ T.

Proposition 1.

The maximum regret of an empirical success rule δ is bounded above as follows:

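$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le \tfrac{1}{2} e^{-1/2} M \sum_{t \neq t^*} \left(n_t^{-1} + n_{t^*}^{-1}\right)^{1/2}. \tag{S6}$$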

When the design is balanced, with nt = n for all t, the bound is $(2e)^{-1/2} M (K - 1) n^{-1/2}$.□
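Proposition 1's bound depends on the design only through the sample sizes, so it is easy to evaluate for any vector (nt, t ∈ T). The sketch below assumes the bound has the form (1/2)e^{-1/2} M ∑_{t≠t*}(nt^{-1} + nt*^{-1})^{1/2}, which reduces to the balanced expression (2e)^{-1/2} M (K − 1) n^{-1/2} stated above; the function name is hypothetical.

```python
import math
from typing import Sequence

def prop1_bound(n: Sequence[int], M: float = 1.0) -> float:
    """Bound S6: (1/2) e^(-1/2) M * sum_{t != t*} (1/n_t + 1/n_{t*})^(1/2),
    where t* is a treatment with the smallest sample size."""
    if len(n) < 2 or min(n) < 1:
        raise ValueError("need at least two treatments with positive sample sizes")
    star = min(range(len(n)), key=lambda t: n[t])
    return 0.5 * math.exp(-0.5) * M * sum(
        math.sqrt(1.0 / n[t] + 1.0 / n[star]) for t in range(len(n)) if t != star)

print(prop1_bound([50, 50, 50]))  # balanced: (2e)^(-1/2) * 2 / sqrt(50)
print(prop1_bound([30, 60, 60]))  # same total, unbalanced: larger bound
```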

Proof:

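Given that δ is an empirical success rule, δ(t, ψ) ≤ 1[mt ≥ mt′] for all (t, t′) in T and all ψ ∈ Ψ. Therefore, Es[δ(t, ψ)] ≤ Ps(mt ≥ mt′) in each state s. Hence, Es[δ(t, ψ)] ≤ Ps(mt ≥ mt(s)). The best achievable welfare in state s is U*(Ps) = μst(s). Hence, the regret of δ in state s is

$$U^*(P_s) - W(\delta, P_s, Q_s) = \sum_{t \neq t(s)} (\mu_{st(s)} - \mu_{st})\, E_s[\delta(t, \psi)] \le \sum_{t \neq t(s)} (\mu_{st(s)} - \mu_{st})\, P_s(m_t \ge m_{t(s)}).$$

Adaptation of the argument used by Manski (19) to obtain inequality S4 from Hoeffding’s theorem 2 (33) shows that

$$(\mu_{st(s)} - \mu_{st})\, P_s(m_t \ge m_{t(s)}) \le \tfrac{1}{2} e^{-1/2} M \left(n_t^{-1} + n_{t(s)}^{-1}\right)^{1/2}, \quad t \neq t(s).$$

It follows that

$$U^*(P_s) - W(\delta, P_s, Q_s) \le \tfrac{1}{2} e^{-1/2} M \sum_{t \neq t(s)} \left(n_t^{-1} + n_{t(s)}^{-1}\right)^{1/2}.$$

Hence, maximum regret is bounded above as follows:

$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le \tfrac{1}{2} e^{-1/2} M \max_{s \in S} \sum_{t \neq t(s)} \left(n_t^{-1} + n_{t(s)}^{-1}\right)^{1/2}.$$

Finally, the summation $\sum_{t \neq t(s)} (n_t^{-1} + n_{t(s)}^{-1})^{1/2}$ is maximized in a state s such that t(s) = t*, where t* is a treatment with the smallest sample size. This result holds because $n_{t^{**}} \ge n_{t^*}$ for any t** ≠ t*: replacing t(s) = t** by t(s) = t* leaves the term that pairs t* with t** unchanged and weakly increases every other term. Hence,

$$\max_{s \in S} \sum_{t \neq t(s)} \left(n_t^{-1} + n_{t(s)}^{-1}\right)^{1/2} = \sum_{t \neq t^*} \left(n_t^{-1} + n_{t^*}^{-1}\right)^{1/2}.$$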

Thus, [S6] holds.

Q.E.D.

Proposition 2.

The maximum regret of an empirical success rule δ is bounded above by

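$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le M N^{-1/2} \min_{d > 0} d^{-1} \ln\Big\{1 + \sum_{t \neq t^*} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t^*}^{-1}\big)\Big]\Big\}, \tag{S7}$$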

where N ≡ ∑t∈T nt is total sample size and pt ≡ nt/N. When the design is balanced, with nt = n for all t, [S7] implies the bound

$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le M (\ln K)^{1/2} n^{-1/2}. \tag{S8}$$

□
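Bound S7 involves a one-dimensional minimization over d > 0. The sketch below assumes S7 has the form M N^{-1/2} min_{d>0} d^{-1} ln{1 + ∑_{t≠t*} exp[d²(pt^{-1} + pt*^{-1})/8]}, consistent with the proof that follows, and approximates the minimum on a log-spaced grid of d values. The grid search is an implementation choice for this sketch, not part of the proposition; a dedicated one-dimensional optimizer could be substituted. It also evaluates the balanced closed form S8, taken to be M (ln K)^{1/2} n^{-1/2}.

```python
import math
from typing import Iterable, Sequence

def log1p_sum_exp(xs: Iterable[float]) -> float:
    """ln(1 + sum_i exp(x_i)), computed stably by factoring out the largest exponent."""
    terms = [0.0] + list(xs)
    top = max(terms)
    return top + math.log(sum(math.exp(x - top) for x in terms))

def prop2_bound(n: Sequence[int], M: float = 1.0, grid: int = 4000) -> float:
    """Bound S7 with the minimum over d > 0 approximated on a log-spaced grid."""
    N = sum(n)
    p = [nt / N for nt in n]
    star = min(range(len(n)), key=lambda t: n[t])
    a = [1 / p[t] + 1 / p[star] for t in range(len(n)) if t != star]
    best = math.inf
    for i in range(grid + 1):
        d = 10 ** (-3 + 5 * i / grid)  # d runs over [1e-3, 1e2]
        best = min(best, log1p_sum_exp(d * d * ai / 8 for ai in a) / d)
    return M * best / math.sqrt(N)

def prop2_balanced_simple(K: int, n: int, M: float = 1.0) -> float:
    """Bound S8 for a balanced design: M (ln K)^(1/2) n^(-1/2)."""
    return M * math.sqrt(math.log(K) / n)

for K in (2, 4, 7):
    print(K, prop2_bound([40] * K), prop2_balanced_simple(K, 40))
```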

Proof:

The proof of [S7] is in four parts. Result S8 is then proved in part v.

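i)

Fix state s and consider a treatment t(s) that is optimal in this state. Fix the sample data ψ. Let

$$D_s[t, t(s)](\psi) \equiv [m_t(\psi) - m_{t(s)}(\psi)] - (\mu_{st} - \mu_{st(s)})$$

denote the amount by which mt(ψ) – mt(s)(ψ) overestimates μst – μst(s). Note that Ds[t(s),t(s)](ψ) = 0. We first show that the welfare loss U*(Ps) – U(δ, Ps, ψ) is bounded above by

$$\max_{t \in T} D_s[t, t(s)](\psi).$$

To prove this inequality, let t be any treatment and observe that a necessary condition for δ(t, ψ) > 0 is that mt(ψ) ≥ mt(s)(ψ). For any t such that δ(t, ψ) > 0,

$$\mu_{st(s)} - \mu_{st} \le [m_t(\psi) - m_{t(s)}(\psi)] - (\mu_{st} - \mu_{st(s)}) = D_s[t, t(s)](\psi) \le \max_{t' \in T} D_s[t', t(s)](\psi).$$

Given that δ(t, ψ) ≥ 0 for all t and that ∑t∈Tδ(t, ψ) = 1, it follows that

$$U^*(P_s) - U(\delta, P_s, \psi) = \sum_{t \in T} \delta(t, \psi)\, (\mu_{st(s)} - \mu_{st}) \le \max_{t \in T} D_s[t, t(s)](\psi).$$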

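ii)

Each variable Ds[t,t(s)](ψ) with t ≠ t(s) is a sum of independent mean zero variables:

$$D_s[t, t(s)](\psi) = \sum_{j \in N(t)} \frac{u_j - \mu_{st}}{n_t} - \sum_{j \in N(t(s))} \frac{u_j - \mu_{st(s)}}{n_{t(s)}},$$

where N(t) is the subsample assigned to treatment t. Inequality 4.16 of Hoeffding (33) applies to each element of both sums on the right-hand side. This inequality shows that, for any c > 0,

$$E_s\Big\{\exp\Big[c\, \frac{u_j - \mu_{st}}{n_t}\Big]\Big\} \le \exp\Big[\frac{c^2 M^2}{8 n_t^2}\Big]$$

for each element of the first sum and

$$E_s\Big\{\exp\Big[-c\, \frac{u_j - \mu_{st(s)}}{n_{t(s)}}\Big]\Big\} \le \exp\Big[\frac{c^2 M^2}{8 n_{t(s)}^2}\Big]$$

for each element of the second sum. The statistical independence of these elements implies that

$$E_s\big\{\exp\big[c\, D_s[t, t(s)](\psi)\big]\big\} \le \exp\Big[\frac{c^2 M^2}{8}\big(n_t^{-1} + n_{t(s)}^{-1}\big)\Big].$$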

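iii)

The conclusion to part i implies that the regret of δ in state s is bounded above as follows:

$$U^*(P_s) - W(\delta, P_s, Q_s) \le E_s\Big[\max_{t \in T} D_s[t, t(s)](\psi)\Big].$$

We use the conclusion to part ii and a proof similar to lemma 1.3 of Lugosi (34) to obtain an upper bound on Es[maxt∈T Ds[t,t(s)](ψ)]. For any c > 0, by Jensen's inequality,

$$\exp\Big(c\, E_s\Big[\max_{t \in T} D_s[t, t(s)](\psi)\Big]\Big) \le E_s\Big[\max_{t \in T} \exp\big(c\, D_s[t, t(s)](\psi)\big)\Big] \le \sum_{t \in T} E_s\big[\exp\big(c\, D_s[t, t(s)](\psi)\big)\big] \le 1 + \sum_{t \neq t(s)} \exp\Big[\frac{c^2 M^2}{8}\big(n_t^{-1} + n_{t(s)}^{-1}\big)\Big],$$

where the last inequality follows from the conclusion to part ii. Taking the logarithm of both sides and dividing by c yields

$$E_s\Big[\max_{t \in T} D_s[t, t(s)](\psi)\Big] \le \frac{1}{c} \ln\Big\{1 + \sum_{t \neq t(s)} \exp\Big[\frac{c^2 M^2}{8}\big(n_t^{-1} + n_{t(s)}^{-1}\big)\Big]\Big\} = \frac{M N^{-1/2}}{d} \ln\Big\{1 + \sum_{t \neq t(s)} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t(s)}^{-1}\big)\Big]\Big\},$$

where $d = N^{-1/2} M c$.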

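iv)

The conclusion to iii holds in every state s. Hence, the maximum regret of δ is bounded above by

$$\max_{s \in S} \frac{M N^{-1/2}}{d} \ln\Big\{1 + \sum_{t \neq t(s)} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t(s)}^{-1}\big)\Big]\Big\}.$$

The summation $\sum_{t \neq t(s)} \exp[d^2(p_t^{-1} + p_{t(s)}^{-1})/8]$ is maximized in a state s such that t(s) = t*, where t* is a treatment with the smallest sample size. This holds because $p_{t^{**}} \ge p_{t^*}$ for any t** ≠ t*. Hence,

$$\max_{s \in S} \sum_{t \neq t(s)} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t(s)}^{-1}\big)\Big] = \sum_{t \neq t^*} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t^*}^{-1}\big)\Big].$$

The above shows that

$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le \frac{M N^{-1/2}}{d} \ln\Big\{1 + \sum_{t \neq t^*} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t^*}^{-1}\big)\Big]\Big\}.$$

Finally, observe that the above inequality holds for all d > 0. This yields result S7.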

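v)

If nt = n for all t, then pt = n/N for all t. It follows that

$$\sum_{t \neq t^*} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t^*}^{-1}\big)\Big] = (K - 1) \exp\Big[\frac{d^2 N}{4n}\Big].$$

Hence, [S7] implies that

$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le M n^{-1/2} \min_{h > 0} h^{-1} \ln\big[1 + (K - 1) e^{h^2/4}\big] \le M n^{-1/2} \min_{h > 0} \Big[\frac{\ln K}{h} + \frac{h}{4}\Big],$$

where $h = (n/N)^{-1/2} d$, and the second inequality uses $1 + (K - 1) e^{h^2/4} \le K e^{h^2/4}$. The minimum is obtained at $h = 2(\ln K)^{1/2}$. This implies result S8.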

Q.E.D.
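The final minimization in part v is elementary calculus. Assuming, as the stated minimizer h = 2(ln K)^{1/2} indicates, that part v minimizes g(h) = h^{-1} ln K + h/4 (obtained from the bound ln[1 + (K − 1)e^{h²/4}] ≤ ln K + h²/4), the computation runs as follows:

$$
\begin{aligned}
g(h) &= \frac{\ln K}{h} + \frac{h}{4}, \qquad
g'(h) = -\frac{\ln K}{h^{2}} + \frac{1}{4} = 0
\;\Longrightarrow\; h = 2(\ln K)^{1/2},\\
g''(h) &= \frac{2 \ln K}{h^{3}} > 0 \quad (K \ge 2),\\
g\big(2(\ln K)^{1/2}\big) &= \frac{(\ln K)^{1/2}}{2} + \frac{(\ln K)^{1/2}}{2} = (\ln K)^{1/2}.
\end{aligned}
$$

The resulting bound M (ln K)^{1/2} n^{-1/2} is the one that yields the threshold n ≥ ln K·(M/ε)² used later for balanced designs.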

Proposition 3.

Consider any positive integer n. Among all designs with total sample size K⋅n:

i)

Bound S6 in Proposition 1 is minimized by a balanced design with nt = n for all t.

ii)

Bound S7 in Proposition 2 is minimized by a balanced design with pt = 1/K for all t.

□

Proof:

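i)

Bound S6 of Proposition 1 established that maximum regret is no larger than

$$\tfrac{1}{2} e^{-1/2} M \sum_{t \neq t^*} \left(n_t^{-1} + n_{t^*}^{-1}\right)^{1/2}.$$

For a balanced design, the sum in the bound equals

$$(K - 1)\,(2/n)^{1/2}.$$

For any design with ∑t∈T nt = K⋅n, the minimum sample size is nt* ≤ n. This and the fact that K ≥ 2 imply that

$$\frac{1}{K - 1} \sum_{t \neq t^*} (n_t + n_{t^*}) = \frac{K n + (K - 2)\, n_{t^*}}{K - 1} \le \frac{K n + (K - 2)\, n}{K - 1} = 2n. \tag{S9}$$

Applying Jensen’s inequality to $f(x) = x^{-1}$, which is convex for x > 0, yields $n_t^{-1} + n_{t^*}^{-1} \ge 4(n_t + n_{t^*})^{-1}$. In the derivation below, we apply this inequality to the sum in bound S6; then apply Jensen’s inequality to the function $f(x) = x^{-1/2}$, which is convex for x > 0; and then combine inequality S9 with the fact that $f(x) = x^{-1/2}$ is a decreasing function:

$$\sum_{t \neq t^*} \left(n_t^{-1} + n_{t^*}^{-1}\right)^{1/2} \ge 2 \sum_{t \neq t^*} (n_t + n_{t^*})^{-1/2} \ge 2(K - 1)\Big[\frac{1}{K - 1} \sum_{t \neq t^*} (n_t + n_{t^*})\Big]^{-1/2} \ge 2(K - 1)(2n)^{-1/2} = (K - 1)(2/n)^{1/2}.$$

This shows that the bound for any design with total sample size K⋅n is no smaller than the bound with a balanced design.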

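ii)

Bound S7 of Proposition 2 established that maximum regret is no larger than

$$M N^{-1/2} \min_{d > 0} d^{-1} \ln\Big\{1 + \sum_{t \neq t^*} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t^*}^{-1}\big)\Big]\Big\}.$$

For a balanced design and any d > 0, the sum in the bound equals

$$(K - 1) \exp\Big[\frac{d^2 K}{4}\Big].$$

We show that for any (pt, t ∈ T) such that ∑t∈T pt = 1,

$$\sum_{t \neq t^*} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t^*}^{-1}\big)\Big] \ge (K - 1) \exp\Big[\frac{d^2 K}{4}\Big].$$

This result, which holds for all d > 0, and the fact that ln(⋅) is an increasing function show that the bound for any design with total sample size K⋅n is no smaller than the bound with a balanced design.

Applying Jensen’s inequality to $f(x) = x^{-1}$, which is convex for x > 0, yields $p_t^{-1} + p_{t^*}^{-1} \ge 4(p_t + p_{t^*})^{-1}$. Given that exp(⋅) is increasing, it follows that

$$\sum_{t \neq t^*} \exp\Big[\frac{d^2}{8}\big(p_t^{-1} + p_{t^*}^{-1}\big)\Big] \ge \sum_{t \neq t^*} \exp\Big[\frac{d^2}{2} (p_t + p_{t^*})^{-1}\Big]. \tag{S10}$$

Applying Jensen’s inequality to the convex function f(x) = exp(x) yields

$$\sum_{t \neq t^*} \exp\Big[\frac{d^2}{2} (p_t + p_{t^*})^{-1}\Big] \ge (K - 1) \exp\Big[\frac{d^2}{2} \cdot \frac{1}{K - 1} \sum_{t \neq t^*} (p_t + p_{t^*})^{-1}\Big]. \tag{S11}$$

Applying Jensen’s inequality to $f(x) = x^{-1}$, which is convex for x > 0, yields

$$\frac{1}{K - 1} \sum_{t \neq t^*} (p_t + p_{t^*})^{-1} \ge \Big[\frac{1}{K - 1} \sum_{t \neq t^*} (p_t + p_{t^*})\Big]^{-1} = \frac{K - 1}{1 + (K - 2)\, p_{t^*}}.$$

Given that K – 2 ≥ 0 and pt* ≤ 1/K, it follows that $1 + (K - 2)\, p_{t^*} \le 2(K - 1)/K$. Given that $f(x) = x^{-1}$ is a decreasing function, it follows that

$$\frac{K - 1}{1 + (K - 2)\, p_{t^*}} \ge \frac{K}{2}. \tag{S12}$$

Combining [S10], [S11], and [S12], and using the fact that exp(⋅) is increasing, yields the inequality stated above.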

Q.E.D.
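Part i of Proposition 3 can be spot-checked by brute force on small designs. The Python sketch below enumerates every design with positive sample sizes and total K·n for K = 3 and n = 6, evaluates bound S6 (taken to be (1/2)e^{-1/2} M ∑_{t≠t*}(nt^{-1} + nt*^{-1})^{1/2}), and confirms that no design improves on the balanced one. It is a numerical illustration for one small case, not a substitute for the proof.

```python
import math
from itertools import product

def prop1_bound(n, M=1.0):
    """Bound S6 for design n = (n_t): (1/2) e^(-1/2) M sum_{t != t*} (1/n_t + 1/n_t*)^(1/2)."""
    star = min(range(len(n)), key=lambda t: n[t])
    return 0.5 * math.exp(-0.5) * M * sum(
        math.sqrt(1 / n[t] + 1 / n[star]) for t in range(len(n)) if t != star)

def designs_with_total(K, total):
    """Yield every design (n_1, ..., n_K) of positive integers summing to total."""
    for head in product(range(1, total), repeat=K - 1):
        last = total - sum(head)
        if last >= 1:
            yield head + (last,)

K, n = 3, 6
balanced = prop1_bound((n,) * K)
smallest_gap = min(prop1_bound(d) - balanced for d in designs_with_total(K, K * n))
print(smallest_gap >= 0)  # True: no design with total K*n has a smaller S6 bound
```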

Table S1 presents the values of bounds S6, S7, and S8 for balanced designs. The three bounds vary identically with $Mn^{-1/2}$ but differently with the number of treatments. Proposition 1 provides a better bound for K ≤ 3, whereas Proposition 2 provides a better bound for K ≥ 4. Bound S8 of Proposition 2 is simpler to compute than bound S7 and is only marginally larger.
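The balanced-design constants can be recomputed directly. In a balanced design each bound equals a constant times M n^{-1/2}; this sketch assumes the constants are (K − 1)(2e)^{-1/2} for S6, min_{h>0} h^{-1} ln[1 + (K − 1)e^{h²/4}] for S7, and (ln K)^{1/2} for S8, and computes the S7 minimum by a grid search. If Table S1 reports these constants, the output should match it up to rounding and grid error; it does reproduce the K ≤ 3 versus K ≥ 4 ordering stated above.

```python
import math

def const_s6(K: int) -> float:
    """Balanced-design constant of bound S6: (K - 1)(2e)^(-1/2)."""
    return (K - 1) / math.sqrt(2 * math.e)

def const_s7(K: int, grid: int = 20000, h_max: float = 10.0) -> float:
    """Balanced-design constant of bound S7: min_{h>0} ln(1 + (K-1) e^{h^2/4}) / h,
    approximated by a grid search over (0, h_max]."""
    best = math.inf
    for i in range(1, grid + 1):
        h = h_max * i / grid
        x = h * h / 4
        # ln(1 + (K-1) e^x) rewritten as x + ln(e^{-x} + K - 1) to avoid overflow
        best = min(best, (x + math.log(math.exp(-x) + K - 1)) / h)
    return best

def const_s8(K: int) -> float:
    """Balanced-design constant of bound S8: (ln K)^(1/2)."""
    return math.sqrt(math.log(K))

print(" K    S6     S7     S8")
for K in range(2, 8):
    print(f"{K:2d}  {const_s6(K):.3f}  {const_s7(K):.3f}  {const_s8(K):.3f}")
```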

We have also computed bounds S6 and S7 for various unbalanced designs. We again find that bound S6 is better for K ≤ 3 and bound S7 is better for K ≥ 4. These results are not shown in Table S1.

Propositions 1 and 2 imply sufficient conditions on sample sizes for ε optimality of ES rules. If the upper bound on maximum regret with a specified trial design is less than or equal to ε, then ES rules are ε optimal with this design.

The findings are particularly simple with balanced designs. Then bound S6 of Proposition 1 implies that an ES rule is ε optimal if $n \ge (2e)^{-1}(K - 1)^2(M/\varepsilon)^2$. Bound S8 of Proposition 2 implies that an ES rule is ε optimal if $n \ge \ln K \cdot (M/\varepsilon)^2$. For bound S7 of Proposition 2, the threshold sample size is $(M/\varepsilon)^2$ times the square of the relevant constant shown in Table S1, which covers K ≤ 7.
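These thresholds translate directly into code. The sketch below takes M = 1 and ε = 0.05 purely for illustration and returns the per-treatment sample size n, so total trial size is K·n; since each condition is sufficient on its own, the smaller of the two thresholds can be used. The helper `n_threshold_from_constant` (a hypothetical name) applies the generic rule n ≥ c²(M/ε)² for any constant c, such as an S7 entry read from Table S1.

```python
import math

def n_threshold_prop1(K: int, M: float, eps: float) -> int:
    """Balanced design, bound S6: smallest n with n >= (2e)^(-1) (K-1)^2 (M/eps)^2."""
    return math.ceil((K - 1) ** 2 * (M / eps) ** 2 / (2 * math.e))

def n_threshold_prop2_simple(K: int, M: float, eps: float) -> int:
    """Balanced design, bound S8: smallest n with n >= ln(K) (M/eps)^2."""
    return math.ceil(math.log(K) * (M / eps) ** 2)

def n_threshold_from_constant(c: float, M: float, eps: float) -> int:
    """If max regret <= c M n^(-1/2), any n >= c^2 (M/eps)^2 suffices."""
    return math.ceil((c * M / eps) ** 2)

for K in (2, 3, 4, 6):
    n1 = n_threshold_prop1(K, 1.0, 0.05)
    n2 = n_threshold_prop2_simple(K, 1.0, 0.05)
    print(f"K={K}: S6 gives n={n1}, S8 gives n={n2}, total {K * min(n1, n2)}")
```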

It is important to bear in mind that Propositions 1 and 2 imply only simple sufficient conditions on sample sizes for ε optimality of ES rules, not necessary ones. Proposition 1, for example, could be sharpened for balanced designs by replacing Hoeffding’s inequality by theorem 1.2 of Bentkus (35) and further improvements should be possible. The Bentkus inequality is expressed in terms of a tail probability of a binomial distribution and the resulting regret bound has to be evaluated numerically for each n. For large values of n, the regret bound could be up to 23.5% smaller than [S6].

In general it is difficult to compute the exact maximum regret of ES rules and hence difficult to determine how conservative the propositions are. An exception occurs when there are two treatments and outcomes are binary. Then the maximum regret of various decision rules can be computed numerically without large-deviations bounds.
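For two treatments with binary outcomes, the exact regret of an ES rule can be computed from binomial probabilities, which shows how conservative the Hoeffding-based bound is. The Python sketch below assumes a balanced design with n subjects per treatment, no covariates, and ties split equally. It evaluates regret on a grid of states (μa, μb), so the grid maximum is a lower bound on the exact maximum regret, and compares it with (2e)^{-1/2} n^{-1/2}, the balanced two-treatment bound with M = 1.

```python
from math import comb, e, sqrt

def binom_pmf(n, p):
    """Binomial(n, p) probabilities for k = 0, ..., n."""
    return [comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)]

def es_regret(n, mu_a, mu_b):
    """Exact regret of the ES rule (ties split equally) in the state (mu_a, mu_b),
    with n subjects per treatment and binary outcomes, so that M = 1."""
    if mu_a < mu_b:
        mu_a, mu_b = mu_b, mu_a  # balanced design: relabel so that a is better
    gap = mu_a - mu_b
    if gap == 0:
        return 0.0
    pa, pb = binom_pmf(n, mu_a), binom_pmf(n, mu_b)
    tail_b = [0.0] * (n + 2)  # tail_b[k] = P(successes on b >= k)
    for k in range(n, -1, -1):
        tail_b[k] = tail_b[k + 1] + pb[k]
    # Probability that inferior treatment b is assigned: P(S_b > S_a) + 0.5 P(S_b = S_a)
    p_wrong = sum(pa[k] * (tail_b[k + 1] + 0.5 * pb[k]) for k in range(n + 1))
    return gap * p_wrong

def max_regret_on_grid(n, steps=50):
    """Maximum of es_regret over states with mu_a > mu_b on a grid of step 1/steps."""
    grid = [i / steps for i in range(steps + 1)]
    return max(es_regret(n, a, b) for i, a in enumerate(grid) for b in grid[:i])

for n in (10, 20, 40):
    print(n, round(max_regret_on_grid(n), 4), round((2 * e) ** -0.5 / sqrt(n), 4))
```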

ε Optimality of Empirical Success Rules with Observable Covariates.

The above analysis has assumed that members of the population have no observable covariates that may be used to condition treatment choice. Suppose now that persons have observable covariates taking values in a finite set X and that the planner can execute a trial with (treatment, covariate)-specific sample sizes [ntξ, (t, ξ) ∈ T × X]. We consider the ES rule defined in Randomized Trials with Sample Sizes Enabling ε-Optimal Treatment, Sufficient Conditions for ε Optimality of Empirical Success Rules, which assigns all persons with covariates ξ to treatments that maximize mtξ(ψ) over T, mtξ(ψ) being the average outcome in subsample N(t, ξ).

There are at least two reasonable ways that a planner may wish to evaluate ε optimality in this setting. First, he may want to achieve ε optimality within each covariate group. This interpretation requires no new analysis. The planner should simply define each covariate group to be a separate population of interest and then apply the analysis of Randomized Trials with Sample Sizes Enabling ε-Optimal Treatment, Large Deviation Bounds on Maximum Regret with Multiple Treatments to each group. The design that achieves group-specific ε optimality with minimum total sample size equalizes sample sizes across groups.

Alternatively, the planner may want to achieve ε optimality within the overall population, without requiring that it be achieved within each covariate group. This is the interpretation given in Principles for Evaluation of Trial Designs and Treatment Rules, Optimality and ε Optimality of Treatment Rules, when we defined rule δ to be ε optimal if W(δ, Ps, Qs) ≥ U*(Ps) − ε for all s ∈ S. In this case, the design that achieves ε optimality with minimum total sample size does not equalize sample sizes across groups.

Propositions 1 and 2 easily extend to provide sample sizes sufficiently large to yield the latter interpretation of ε optimality. Applying Proposition 1 to each group and aggregating the bounds across groups imply that the maximum regret of ES rules is bounded above by

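$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le \tfrac{1}{2} e^{-1/2} M \sum_{\xi \in X} P(x = \xi) \sum_{t \neq t^*(\xi)} \left(n_{t\xi}^{-1} + n_{t^*(\xi)\xi}^{-1}\right)^{1/2}, \tag{S6'}$$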

where $t^*(\xi) \in \arg\min_{t \in T} n_{t\xi}$ denotes a treatment with the smallest sample size among individuals with covariate value ξ. When the design is balanced across treatments for each covariate, with ntξ = nξ for all t, the bound is

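$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le (2e)^{-1/2} M (K - 1) \sum_{\xi \in X} P(x = \xi)\, n_\xi^{-1/2}. \tag{S13}$$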

The analogous extension of Proposition 2 yields

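$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le M \sum_{\xi \in X} P(x = \xi)\, N_\xi^{-1/2} \min_{d > 0} d^{-1} \ln\Big\{1 + \sum_{t \neq t^*(\xi)} \exp\Big[\frac{d^2}{8}\big(p_{t\xi}^{-1} + p_{t^*(\xi)\xi}^{-1}\big)\Big]\Big\}, \tag{S7'}$$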

where $N_\xi \equiv \sum_{t \in T} n_{t\xi}$ is total sample size for individuals with covariate value ξ and $p_{t\xi} \equiv n_{t\xi}/N_\xi$. When the design is balanced across treatments for each covariate, $N_\xi^{-1/2} = K^{-1/2} n_\xi^{-1/2}$, $p_{t\xi} = 1/K$ for all (t, ξ), and the bound in [S7′] simplifies to

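$$\max_{s \in S}\,[U^*(P_s) - W(\delta, P_s, Q_s)] \le M K^{-1/2} \Big\{\min_{d > 0} d^{-1} \ln\big[1 + (K - 1) \exp(d^2 K/4)\big]\Big\} \sum_{\xi \in X} P(x = \xi)\, n_\xi^{-1/2}. \tag{S14}$$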

Bounds S13 and S14 can easily be evaluated for any candidate treatment-balanced design to verify whether it suffices to enable ε-optimal treatment rules. The constants preceding $\sum_{\xi \in X} P(x = \xi)\, n_\xi^{-1/2}$ in these bounds are given in Table S1 for K ≤ 7.
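Checking a candidate covariate design against S13 and S14 requires only the covariate distribution and the per-treatment sample sizes nξ. In the sketch below, the S13 constant is (K − 1)(2e)^{-1/2}, and the S14 constant is assumed to be min_{h>0} h^{-1} ln[1 + (K − 1)e^{h²/4}], which is what the Proposition 2 bound gives when ptξ = 1/K; the minimum is approximated by a grid search. The covariate distribution and sample sizes in the example are hypothetical.

```python
import math

def s14_constant(K: int, grid: int = 20000, h_max: float = 10.0) -> float:
    """Grid approximation of min_{h>0} ln(1 + (K-1) e^{h^2/4}) / h (assumed S14 constant)."""
    best = math.inf
    for i in range(1, grid + 1):
        h = h_max * i / grid
        x = h * h / 4
        best = min(best, (x + math.log(math.exp(-x) + K - 1)) / h)  # overflow-safe form
    return best

def covariate_bounds(p_x, n_xi, K, M=1.0):
    """Return (S13, S14) for a treatment-balanced design with n_xi subjects per
    treatment in covariate group xi."""
    weighted = sum(p_x[xi] / math.sqrt(n_xi[xi]) for xi in p_x)
    s13 = (K - 1) / math.sqrt(2 * math.e) * M * weighted
    s14 = s14_constant(K) * M * weighted
    return s13, s14

p_x = {"A": 0.7, "B": 0.3}    # hypothetical covariate distribution
n_xi = {"A": 300, "B": 150}   # hypothetical per-treatment sample sizes
eps = 0.05
s13, s14 = covariate_bounds(p_x, n_xi, K=2)
print(s13, s14, min(s13, s14) <= eps)  # either bound <= eps certifies eps optimality
```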

Given a predetermined maximum total sample size N, minimizing bounds S13 and S14 is achieved by choosing (nξ, ξ ∈ X) to minimize $\sum_{\xi \in X} P(x = \xi)\, n_\xi^{-1/2}$ subject to the constraint $\sum_{\xi \in X} n_\xi \le N/K$. Given that the objective function is decreasing in each nξ, the constraint binds. The Lagrangian expression of the constrained minimization problem is

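$$L\big((n_\xi, \xi \in X), \lambda\big) = \sum_{\xi \in X} P(x = \xi)\, n_\xi^{-1/2} + \lambda \Big(\sum_{\xi \in X} n_\xi - N/K\Big). \tag{S15}$$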

A simple approximation to the minimization problem results if one treats (nξ, ξ ∈ X) as continuous variables rather than as integer sample sizes. Then the first-order conditions for minimization of L(⋅, ⋅) yield

$$-\tfrac{1}{2} P(x = \xi)\, n_\xi^{-3/2} + \lambda = 0, \quad \xi \in X. \tag{S16}$$

This implies that $n_\xi = (2\lambda)^{-2/3} P(x = \xi)^{2/3}$. It follows that, at the solution of the minimization problem, the relative sample sizes for any pair (ξ, ξ′) of covariate values have the approximate ratio

$$\frac{n_\xi}{n_{\xi'}} \approx \Big[\frac{P(x = \xi)}{P(x = \xi')}\Big]^{2/3}. \tag{S17}$$

For the case when the covariate takes two values, a similar result is obtained by Schlag (24).

A planner who uses [S17] to choose the trial design makes the covariate-specific sample size increase with the prevalence of the covariate group in the population, albeit less than proportionately. Covariate-specific maximum regret commensurately decreases with the prevalence of the covariate group.
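The approximate allocation can be computed directly. This Python sketch treats sample sizes as continuous, as in the approximation above, sets nξ proportional to P(x = ξ)^{2/3} scaled so that ∑ξ nξ = N/K, and leaves integer rounding to the user; the two-group example and N = 1000 are hypothetical. It also confirms that the allocation gives a smaller value of ∑ξ P(x = ξ) nξ^{-1/2} than an equal split across groups.

```python
import math

def allocate_by_prevalence(p_x, N, K):
    """Continuous solution: minimize sum_xi P(x = xi) n_xi^(-1/2) subject to
    sum_xi n_xi = N / K, giving n_xi proportional to P(x = xi)^(2/3)."""
    weights = {xi: p ** (2 / 3) for xi, p in p_x.items()}
    scale = (N / K) / sum(weights.values())
    return {xi: scale * w for xi, w in weights.items()}

def objective(p_x, n_xi):
    """sum_xi P(x = xi) n_xi^(-1/2), the covariate-weighted term in S13 and S14."""
    return sum(p_x[xi] / math.sqrt(n_xi[xi]) for xi in p_x)

p_x = {"common": 0.8, "rare": 0.2}  # hypothetical covariate distribution
N, K = 1000, 2                      # hypothetical total sample size and treatments
n_opt = allocate_by_prevalence(p_x, N, K)
n_equal = {xi: N / K / len(p_x) for xi in p_x}
print(n_opt["common"] / n_opt["rare"])                  # (0.8 / 0.2)^(2/3), about 2.52
print(objective(p_x, n_opt) < objective(p_x, n_equal))  # True
```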

Acknowledgments

We have benefited from the comments of Joerg Stoye and from the opportunity to present this work in a seminar at Cornell University.

Footnotes

The authors declare no conflict of interest.

This work was presented in part on September 15, 2015 at the Econometrics Seminar of the Department of Economics, Cornell University, Ithaca, NY.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1612174113/-/DCSupplemental.

References

1. Ioannidis JP. Why most published research findings are false. PLoS Med. 2005;2(8):e124. doi: 10.1371/journal.pmed.0020124.

2. Wasserstein R, Lazar N. The ASA’s statement on p-values: Context, process, and purpose. Am Stat. 2016;70(2):129–133.

3. US Food and Drug Administration (1996) Statistical Guidance for Clinical Trials of Nondiagnostic Medical Devices. Available at www.fda.gov/MedicalDevices/DeviceRegulationandGuidance/GuidanceDocuments/ucm106757.htm. Accessed August 24, 2016.

4. International Conference on Harmonisation ICH E9 Expert Working Group. Statistical principles for clinical trials: ICH harmonized tripartite guideline. Stat Med. 1999;18(15):1905–1942.

5. Halpern SD, Karlawish JH, Berlin JA. The continuing unethical conduct of underpowered clinical trials. JAMA. 2002;288(3):358–362. doi: 10.1001/jama.288.3.358.

6. Altman DG. Statistics and ethics in medical research: III. How large a sample? BMJ. 1980;281(6251):1336–1338. doi: 10.1136/bmj.281.6251.1336.

7. Dunnett C. A multiple comparison procedure for comparing several treatments with a control. JASA. 1955;50(272):1096–1121.

8. Cook R, Farewell V. Multiplicity considerations in the design and analysis of clinical trials. J R Stat Soc Ser A. 1996;159(1):93–110.

9. Spiegelhalter D, Freedman L, Parmar M. Bayesian approaches to randomized trials (with discussion). J R Stat Soc Ser A. 1994;157(3):357–416.

10. Spiegelhalter D. Incorporating Bayesian ideas into health-care evaluation. Stat Sci. 2004;19(1):156–174.

11. Claxton K, Posnett J. An economic approach to clinical trial design and research priority-setting. Health Econ. 1996;5(6):513–524. doi: 10.1002/(SICI)1099-1050(199611)5:6<513::AID-HEC237>3.0.CO;2-9.

12. Meltzer D. Addressing uncertainty in medical cost-effectiveness analysis: Implications of expected utility maximization for methods to perform sensitivity analysis and the use of cost-effectiveness analysis to set priorities for medical research. J Health Econ. 2001;20(1):109–129. doi: 10.1016/s0167-6296(00)00071-0.

13. Cheng Y, Su F, Berry D. Choosing sample size for a clinical trial using decision analysis. Biometrika. 2003;90(4):923–936.

14. Berry D. Bayesian statistics and the efficiency and ethics of clinical trials. Stat Sci. 2004;19(1):175–187.

15. Savage L. The theory of statistical decision. JASA. 1951;46(253):55–67.

16. Wald A. Statistical Decision Functions. Wiley; New York: 1950.

17. Ferguson T. Mathematical Statistics: A Decision Theoretic Approach. Academic; New York: 1967.

18. Berger J. Statistical Decision Theory and Bayesian Analysis. 2nd Ed. Springer; New York: 1985.

19. Manski C. Statistical treatment rules for heterogeneous populations. Econometrica. 2004;72(4):1221–1246.

20. Manski C. Social Choice with Partial Knowledge of Treatment Response. Princeton Univ Press; Princeton: 2005.

21. Manski C. Minimax-regret treatment choice with missing outcome data. J Econom. 2007;139(1):105–115.

22. Manski C, Tetenov A. Admissible treatment rules for a risk-averse planner with experimental data on an innovation. J Stat Plan Inference. 2007;137(6):1998–2010.

23. Hirano K, Porter J. Asymptotics for statistical treatment rules. Econometrica. 2009;77(5):1683–1701.

24. Schlag K (2006) ELEVEN – Tests needed for a recommendation. EUI Working Paper ECO no. 2006/2 (European University Institute, Florence, Italy). Available at cadmus.eui.eu//handle/1814/3937. Accessed August 24, 2016.

25. Stoye J. Minimax regret treatment choice with finite samples. J Econom. 2009;151(1):70–81.

26. Stoye J. Minimax regret treatment choice with covariates or with limited validity of experiments. J Econom. 2012;166(1):138–156.

27. Tetenov A. Statistical treatment choice based on asymmetric minimax regret criteria. J Econom. 2012;166(1):157–165.

28. Hróbjartsson A, Gøtzsche PC. Is the placebo powerless? An analysis of clinical trials comparing placebo with no treatment. N Engl J Med. 2001;344(21):1594–1602. doi: 10.1056/NEJM200105243442106.

29. Lichtenberg P, Heresco-Levy U, Nitzan U. The ethics of the placebo in clinical practice. J Med Ethics. 2004;30(6):551–554. doi: 10.1136/jme.2002.002832.

30. Sedgwick P. Clinical significance versus statistical significance. BMJ. 2014;348:g2130.

31. Materson BJ, et al. The Department of Veterans Affairs Cooperative Study Group on Antihypertensive Agents. Single-drug therapy for hypertension in men. A comparison of six antihypertensive agents with placebo. N Engl J Med. 1993;328(13):914–921. doi: 10.1056/NEJM199304013281303.

32. Fleiss J. Statistical Methods for Rates and Proportions. Wiley; New York: 1973.

33. Hoeffding W. Probability inequalities for sums of bounded random variables. JASA. 1963;58(301):13–30.

34. Lugosi G. Pattern classification and learning theory. In: Gyorfi L, editor. Principles of Nonparametric Learning. Springer; Vienna: 2002. pp. 1–56.

35. Bentkus V. On Hoeffding’s inequalities. Ann Probab. 2004;32(2):1650–1673.