Abstract

Importance

Instituting widespread measurement of outcomes for cancer hospitals using administrative data is difficult due to the lack of cancer specific information such as disease stage.

Objective

To evaluate the performance of hospitals that treat cancer patients using Medicare data for outcome ascertainment and risk adjustment, and to assess whether hospital rankings based on these measures are influenced by the addition of cancer-specific information.

Design

Risk adjusted cumulative mortality of patients with cancer captured in Medicare claims from 2005–2009 nationally was assessed at the hospital level. Similar analyses were conducted in the Surveillance, Epidemiology and End Results (SEER)-Medicare data for the subset of the US covered by the SEER program to determine whether the exclusion of cancer specific information (only available in cancer registries) from risk adjustment altered measured hospital performance.

Setting

Administrative claims data and SEER cancer registry data

Participants

Sample of 729,279 fee-for-service Medicare beneficiaries treated for cancer in 2006 at hospitals treating 10+ patients with each of the following cancers, according to Medicare claims: lung, prostate, breast, colon. An additional sample of 18,677 similar patients in SEER-Medicare administrative data.

Main Outcomes and Measures

Risk-adjusted mortality overall and by cancer type, stratified by type of hospital; measures of correlation and agreement between hospital-level outcomes risk adjusted using Medicare data alone and Medicare data with SEER data.

Results

There were large outcome differences between different types of hospitals that treat Medicare patients with cancer. At one year, cumulative mortality for Medicare-prospective-payment-system exempt hospitals was 10 percentage points lower than at community hospitals (18% versus 28%) across all cancers, and the pattern persisted through five years of follow-up and within specific cancer types. Performance ranking of hospitals was consistent with or without SEER-Medicare disease stage information (weighted kappas of at least 0.81).

Conclusions and Relevance

Potentially important outcome differences exist between different types of hospitals that treat cancer patients after risk adjustment using information in Medicare administrative data. This type of risk adjustment may be adequate for evaluating hospital performance, as the additional adjustment for data only available in cancer registries does not seem to appreciably alter measures of performance.

INTRODUCTION

Cancer is a leading cause of mortality.1 Decades of research have demonstrated that outcomes of cancer treatment vary widely in relation to where patients receive their care, and there are widespread concerns about cancer care costs.2–5 As a result, a number of initiatives are underway. The Center for Medicare and Medicaid Innovation (CMMI) has published its plans to bundle reimbursements in oncology.6 United HealthCare has tied payment changes in cancer to quality measurement in a pilot program that is now being expanded.7 Anthem has implemented programs where oncologists are paid a bonus for following some treatment approaches, but not others.8

Quality measures suitable for these initiatives, although rising in number, have shortcomings.9, 10 In the list of 60 quality measures relevant to cancer endorsed by the National Quality Forum (NQF), the majority (82%) describe processes of care. Of these, a sizable fraction (14%) are purely retrospective, focusing on care received by patients prior to their death, rather than on health outcomes.11–13 In the NQF database, 93% of the measures related to cancer require chart review to ascertain detailed information about patients’ diagnosis, such as cancer stage.14

Although survival is perhaps the most important outcome to cancer patients, and can be readily ascertained from administrative data, there is hesitation regarding the use of administratively-derived data for risk-adjustment, and thus concerns that comparisons between hospitals will be confounded by underlying differences in treated populations. In large part, these concerns emanate from the fact that potentially critical information about a patient’s cancer, such as stage and timing of a cancer diagnosis, is generally unavailable in administrative data. While it is true that this information predicts outcomes at the individual level, it is not known whether this information would have a large influence on risk-adjusted performance at a more aggregate level, such as at the level of the treating hospital. If hospitals varied systematically in the stage distributions of their cancer patient populations, this may hold true; however, if either observable factors or true differences in performance have larger effects it may not. Our objective was to evaluate risk adjusted performance (as measured by survival) of different types of hospitals that treat cancer patients using information from health insurance claims for risk-adjustment, and then assess in a parallel dataset whether the risk adjusted estimated performance of hospitals is robust to the inclusion and exclusion of patient level information on cancer stage and date of diagnosis.

METHODS

Data Source

We analyzed two parallel datasets: a national dataset of fee-for-service Medicare claims, which covers 100% of the United States population enrolled in the program, and the Surveillance, Epidemiology and End Results (SEER)-Medicare database, which links the National Cancer Institute (NCI)-sponsored consortium of population-based cancer registries, covering almost 26% of the US population, to Medicare claims and enrollment information.15 The two analyses were run in parallel using identical methods, and we assume that findings in the SEER-Medicare program regarding the robustness of hospital-level evaluation are generalizable to the analyses of the nationwide Medicare program.

Cohort Selection and Provider Assignment

Both analytic cohorts are comprised of individuals who appeared to be beginning cancer treatment or beginning management of recurrent disease in 2006, indicated by an absence of claims for cancer in 2005, and either: inpatient or outpatient claims with a primary or secondary diagnosis code for cancer on a claim for a cancer-related service in 2006, or a primary diagnosis code of cancer on a claim for a service not clearly cancer related in 2006. Cancer services were identified by Healthcare Common Procedure Coding System (HCPCS) codes. The type of cancer was determined from hierarchical algorithms evaluating listed diagnoses that prioritized inpatient claims, then outpatient hospital claims, then physician claims, where the predominant diagnosis was selected within each category. The type of cancer identified by this algorithm was nearly always identical to the cancer diagnosis recorded in SEER (eTable 1) in that analysis. We limited our analysis to subjects with only one type of cancer listed.

Patients who were not continuously enrolled in Part A and B Medicare in 2005 were excluded, as were those not continuously enrolled from 2006 to either their death or December 31, 2009, whichever came first. Patients were assigned to a single hospital provider based on claims within the 180 days following the first claim for cancer treatment in 2006. We used a hierarchical approach to assignment (eTable 2). Primary assignment to a hospital that delivered some portion of the patient’s care was possible in 89% of patients; 8% were assigned to the hospital where their physician accrued the greatest amount of Medicare reimbursement; 3% were assigned through physicians who shared patients with the study subject’s physician. We then eliminated hospitals (and those subjects assigned to them) if they had fewer than 10 patients treated for any of the four major cancer diagnoses (lung, breast, colorectal, prostate), yielding a study cohort of 18,677 patients. For the SEER-Medicare analyses we searched the ‘non-cancer’ file, which is a representative subset of patients without a cancer diagnosis recorded in the SEER registry, for patients with a Medicare claim containing a diagnosis code for cancer. We found very few such subjects (for 94% of the hospitals, the count of additional patients found was 1 or zero), so they would not have influenced our findings. We did not include them, both because of the very small numbers and because the essence of our analysis was to incorporate the cancer-specific data on patients, which these subjects by definition lacked because they had no record of cancer diagnosis in SEER.

Risk Adjustment

Analyses were risk adjusted based on information available in claims using the 3M™ Clinical Risk Group (CRG) software. The CRG classification system is used by payers and health authorities for risk adjustment in quality reporting, rate setting and utilization review. The software has been used by Medicare and various state Medicaid programs for purposes ranging from quality reporting to payment policy.16, 17 The CRG algorithm assigns individuals to one of 1080 mutually exclusive groups that reflect overall health status and the presence and severity of specific diseases and health conditions using diagnostic and procedure codes, beneficiary age and sex. In addition to this risk adjustment, we controlled for median household income in the ZIP code of residence, classified in tertiles of the national household income distribution from US census data. We empirically selected the period of time over which we would capture risk adjustment data, settling on a 180-day period after the first cancer treatment claim, a stopping point that assured that 99% of patients sampled were no longer assigned CRG Status 1 (healthy).

In the SEER-Medicare data, we additionally stratified for the stage of the person’s cancer, and whether their date of cancer diagnosis was around the time of their initial claim for treatment. Cancer stage was divided into five categories (stages I, II, III, IV and Unknown) based on the American Joint Committee on Cancer (AJCC) stage classification (6th revision).18 We created a binary indicator to distinguish cancers as ‘incident’ if the date of diagnosis occurred within four months prior to the first claim we found for cancer treatment in 2006; otherwise they were ‘prevalent’.
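The incident/prevalent indicator described above can be illustrated with a minimal sketch. The function name and the 120-day approximation of "four months" are assumptions made for illustration; the paper does not state an exact day count or how diagnoses dated after the first claim were handled.

```python
from datetime import date

# Assumption: "four months" is approximated as 120 days (not specified in the paper).
INCIDENT_WINDOW_DAYS = 120


def classify_timing(diagnosis_date: date, first_claim_date: date) -> str:
    """Label a cancer 'incident' if the registry diagnosis date falls within
    the window before the first 2006 treatment claim, else 'prevalent'.

    Literal reading of the rule: a diagnosis dated after the first claim is
    not "prior" to it, so it falls into the 'prevalent' (otherwise) branch.
    """
    days_before_claim = (first_claim_date - diagnosis_date).days
    if 0 <= days_before_claim <= INCIDENT_WINDOW_DAYS:
        return "incident"
    return "prevalent"


# Hypothetical patients
recent = classify_timing(date(2006, 1, 1), date(2006, 3, 1))    # 59 days before claim
remote = classify_timing(date(2004, 6, 1), date(2006, 3, 1))    # well over four months
```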

Analysis

Expected and observed overall survival were compared from the date of the first cancer treatment claim in 2006 to end of follow-up (12/31/2009) or the date of death from any cause. In Medicare analyses we compared survival between mutually exclusive categories of hospital type: free-standing cancer hospitals that are exempt from Medicare’s prospective payment system (n=11); the remaining NCI-designated cancer centers that had adequate numbers of patients (n=32); other academic teaching hospitals (n=252); and remaining hospitals, labeled ‘community hospitals’ (n=4,873). In SEER-Medicare analyses we compared observed to expected overall survival outcomes that were “Medicare risk adjusted” to those that were “SEER-Medicare risk adjusted”. The expected rates of survival were determined for each individual patient based upon the average for patients like that subject stratified by cancer type, CRG category, sociodemographic characteristics, three age groupings (66 to 69, 70 to 79, and 80 or older at time of initial treatment), sex, and median income tertile. Individual level and grouped hospital performance was then evaluated by taking the quotient of the sum of the actual deaths and the sum of the expected deaths for each hospital or hospital category.
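The observed-to-expected quotient described above can be sketched as follows. This is an illustrative simplification, not the study's code: the record layout (`hospital`, `stratum`, `died`) is hypothetical, each stratum stands in for one combination of cancer type, CRG category, age group, sex and income tertile, and death is treated as a single binary outcome at a fixed follow-up point.

```python
from collections import defaultdict


def expected_death_rates(patients):
    """Pooled death rate within each risk stratum, across all hospitals."""
    deaths = defaultdict(int)
    counts = defaultdict(int)
    for p in patients:
        deaths[p["stratum"]] += p["died"]
        counts[p["stratum"]] += 1
    return {s: deaths[s] / counts[s] for s in counts}


def observed_to_expected(patients):
    """Per-hospital ratio: sum of actual deaths / sum of expected deaths."""
    rates = expected_death_rates(patients)
    observed = defaultdict(float)
    expected = defaultdict(float)
    for p in patients:
        observed[p["hospital"]] += p["died"]
        # Each patient contributes the average risk of patients like them.
        expected[p["hospital"]] += rates[p["stratum"]]
    # Hospitals with zero expected deaths have no defined ratio and are skipped.
    return {h: observed[h] / expected[h] for h in observed if expected[h] > 0}


# Hypothetical toy data: one stratum, two hospitals
toy = [
    {"hospital": "A", "stratum": ("lung", "crg_x", "70-79", "F", 2), "died": 1},
    {"hospital": "A", "stratum": ("lung", "crg_x", "70-79", "F", 2), "died": 0},
    {"hospital": "B", "stratum": ("lung", "crg_x", "70-79", "F", 2), "died": 0},
    {"hospital": "B", "stratum": ("lung", "crg_x", "70-79", "F", 2), "died": 0},
]
ratios = observed_to_expected(toy)
```

A ratio above 1 indicates more deaths than expected given the hospital's case mix, and a ratio below 1 indicates fewer. Passing hospital category instead of hospital identifier in the `hospital` field gives the grouped version.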

To assess the impact of cancer-specific information on risk-adjusted hospital performance, we evaluated the correlation of hospital rankings within the SEER-Medicare data between their Medicare and SEER-Medicare risk adjusted outcomes, divided into quintiles of survival at three years and five years. We then assessed the stability of ranking by determining the proportion of hospitals that moved either one or two quintiles in rank between the two risk adjustment approaches, and measured overall agreement in ranks using Cohen’s weighted Kappa statistic, with weights proportional to the square of the distance from the diagonal (i.e. quadratic weighting). Kappa equals 1 for perfect agreement and zero for agreement no better than expected by chance, with higher values reflecting higher correlation.19, 20 Authors vary on exactly how high kappas need to be to reflect acceptable correlation. Landis and Koch argued that values of 0.81 or higher constitute ‘near perfect agreement’; Fleiss characterized values greater than 0.75 as ‘excellent.’21, 22 We also examined the proportion of between-hospital variation explained and the linear correlation of rankings,23, 24 all displayed in the appendix. We compared ranking overall and within each of four common cancer sites, and in an ‘other’ category, to parallel our analyses of performance by different cancer hospital types.
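For readers unfamiliar with the statistic, a minimal sketch of Cohen’s weighted kappa with quadratic weights is given below. It follows the standard textbook definition rather than any code from the study; the function name and the 0-to-4 coding of quintiles are assumptions for illustration.

```python
def quadratic_weighted_kappa(ranks_a, ranks_b, k=5):
    """Cohen's weighted kappa for two ratings of the same hospitals.

    ranks_a, ranks_b: sequences of category indices in 0..k-1 (here, quintiles
    under the two risk adjustment approaches), aligned hospital by hospital.
    Disagreement weights grow with the squared distance from the diagonal.
    Undefined (division by zero) if the expected disagreement is zero, e.g.
    when both ratings place every hospital in a single category.
    """
    n = len(ranks_a)
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(ranks_a, ranks_b):
        observed[a][b] += 1

    row_totals = [sum(observed[i]) for i in range(k)]
    col_totals = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            weighted_observed += weight * observed[i][j]
            # Expected count under independence of the two ratings
            weighted_expected += weight * row_totals[i] * col_totals[j] / n

    return 1.0 - weighted_observed / weighted_expected


# Hypothetical examples: identical rankings and fully reversed rankings
same = quadratic_weighted_kappa([0, 1, 2, 3, 4], [0, 1, 2, 3, 4])
reversed_ranks = quadratic_weighted_kappa([0, 1, 2, 3, 4], [4, 3, 2, 1, 0])
```

With quadratic weights, a hospital that moves two quintiles counts four times as heavily against agreement as one that moves a single quintile, which is why the statistic suits ordered categories such as rank quintiles.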

The study was deemed exempt research by the Institutional Review Board at Memorial Sloan Kettering Cancer Center, and the SEER-Medicare files were used in accordance with a data use agreement from the NCI.

RESULTS

Medicare analysis of different types of cancer hospitals

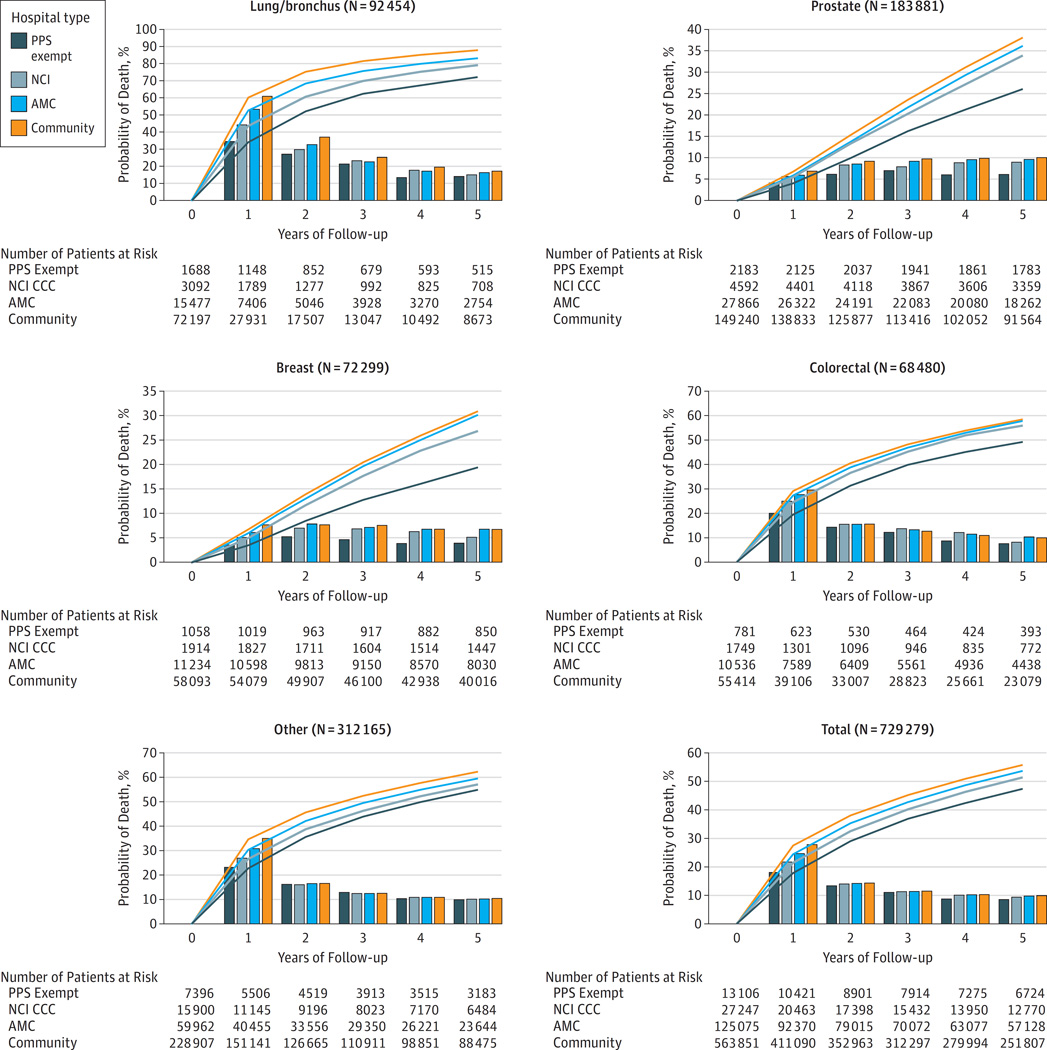

Figure 1 shows the sample size and the outcomes of risk-adjusted death over five years across the cancer diagnoses included in the analysis, for each of the four major cancer types, and for the ‘other cancer’ category, both cumulatively and annually according to the type of hospital providing cancer care. In general, both the annual probability of death and the cumulative probabilities of death line up with the category of hospital in a direction consistent with the expectation of prior outcome studies, both within each cancer type and overall.4, 25, 26 The risk-adjusted probability of death at one year for all patients under the care of cancer hospitals that are exempt from prospective payment is 10 percentage points lower than that at community hospitals (18% versus 28%), with other types of hospitals falling between the two extremes. Successive years follow a similar pattern, with the overall survival gap persisting and the conditional probabilities of death in each year being lowest among patients cared for at the PPS-exempt hospitals and highest in community hospitals. A similar pattern is seen in each of the cancer-specific analyses.

Figure 1.

Risk of death each year (bars) and over time (curve) under the care of different categories of hospitals that treat patients with cancer after Medicare risk adjustment.* Note y-axes are scaled to improve visibility. (PPS = prospective payment system exempt; NCI = hospitals designated by the National Cancer Institute that are not PPS; AMC = academic hospitals that are not PPS or NCI; Comm = other types of hospitals.) *Adjusted for age, sex, median income in the ZIP code of residence and for comorbidity using the 3M CRG software as explained in Methods.

Comparison of Medicare risk-adjusted and SEER-Medicare risk-adjusted outcomes

For these analyses there were 18,677 patients who met eligibility criteria, received treatment for cancer in 2006, had no claim for care associated with cancer in 2005, could be assigned to a hospital or physician provider, and whose cancer stage was known. Of them, 36% had cancer of the lung, 33% prostate, 23% breast, 6% colorectal and 2% other sites (Table 1).

Table 1.

Characteristics and mode of assignment of Medicare patients included in SEER-Medicare analysis.

| | Lung/Bronchus | | Prostate | | Breast | | Colorectal | | Other | | Total | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | N | % | N | % | N | % | N | % | N | % | N | % |
| 66–74 | 2,853 | 42% | 3,366 | 55% | 1,939 | 45% | 268 | 26% | 166 | 42% | 8,592 | 46% |
| 75+ | 3,958 | 58% | 2,725 | 45% | 2,388 | 55% | 781 | 74% | 233 | 58% | 10,085 | 54% |
| White | 5,902 | 87% | 5,034 | 83% | 3,868 | 89% | 907 | 86% | 344 | 86% | 16,055 | 86% |
| Black | 559 | 8% | 590 | 10% | 230 | 5% | 84 | 8% | 27 | 7% | 1,490 | 8% |
| Other | 350 | 5% | 467 | 8% | 229 | 5% | 58 | 6% | 28 | 7% | 1,132 | 6% |
| Married | 3,305 | 49% | 4,285 | 70% | 2,018 | 47% | 461 | 44% | 200 | 50% | 10,269 | 55% |
| Not Married | 3,506 | 51% | 1,806 | 30% | 2,309 | 53% | 588 | 56% | 199 | 50% | 8,408 | 45% |
| Region | | | | | | | | | | | | |
| Midwest | 1,049 | 15% | 794 | 13% | 523 | 12% | 237 | 23% | 52 | 13% | 2,655 | 14% |
| Northeast | 1,727 | 25% | 1,461 | 24% | 1,155 | 27% | 391 | 37% | 142 | 36% | 4,876 | 26% |
| South | 1,579 | 23% | 1,075 | 18% | 710 | 16% | 217 | 21% | 67 | 17% | 3,648 | 20% |
| West | 2,456 | 36% | 2,761 | 45% | 1,939 | 45% | 204 | 19% | 138 | 35% | 7,498 | 40% |
| Stage | | | | | | | | | | | | |
| I | 1,653 | 24% | 491 | 8% | 2,435 | 56% | 248 | 24% | 205 | 51% | 5,032 | 27% |
| II | 214 | 3% | 5,216 | 86% | 1,128 | 26% | 340 | 32% | 53 | 13% | 6,951 | 37% |
| III | 1,821 | 27% | 139 | 2% | 225 | 5% | 288 | 27% | 52 | 13% | 2,525 | 14% |
| IV | 2,988 | 44% | 220 | 4% | 89 | 2% | 169 | 16% | 82 | 21% | 3,548 | 19% |
| Unknown | 135 | 2% | 25 | 0% | 450 | 10% | 4 | 0% | 7 | 2% | 621 | 3% |

Note: “Other” category includes: Corpus Uteri, Kidney/Renal Pelvis/Ureter, Liver/Intrahepatic Bile Duct/Gall Bladder, Ovary and Other Female Genital, Pancreas/Biliary Tract

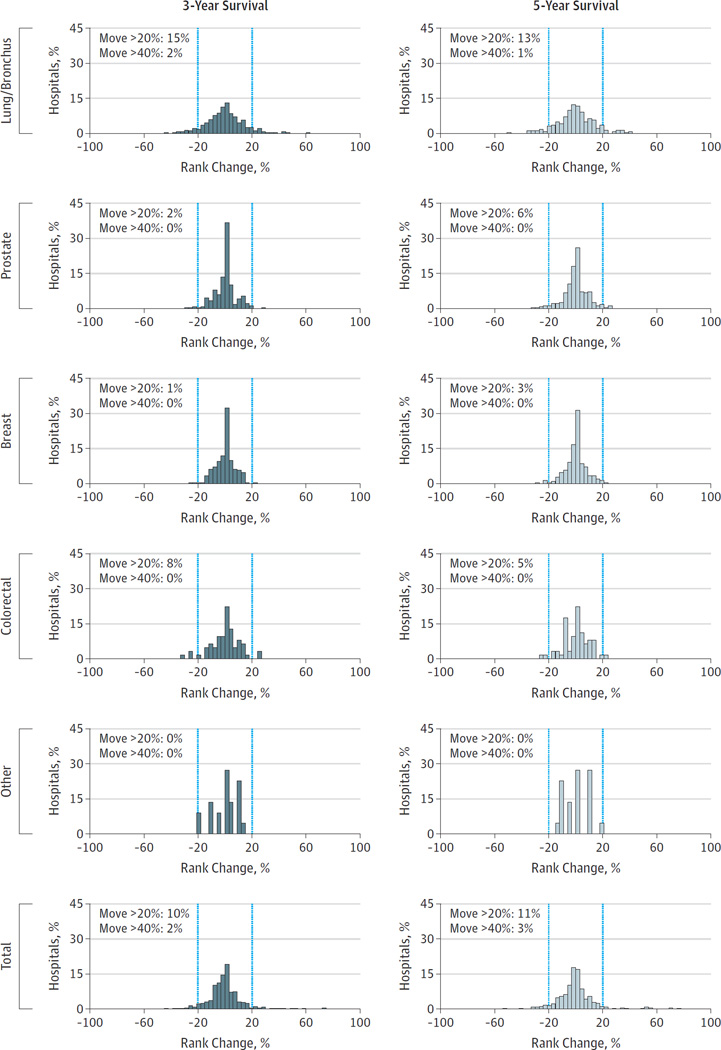

When comparing risk-adjusted hospital performance for these patients, we found that for each cancer, and for all cancers combined, correlation in quintiles of rank between Medicare adjusted and SEER-Medicare adjusted outcomes had very high kappa statistics for three year and five year survival (Table 2). All met or exceeded the 0.81 cutoff proposed by Landis and Koch for ‘near perfect’ correlation. Consistent with this finding, empiric shifts in rank of hospitals under the alternative methods of risk adjustment were uncommon (Figure 2). For instance, with respect to five-year survival, overall only 3% of hospitals moved 2 quintiles or more, and 11% moved by at least one quintile. In the four major cancer types, in no case did more than 2% of hospitals move two or more quintiles in ranking, and between 1% and 15% moved at least one quintile.
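The rank-shift tabulation reported above can be sketched as follows. This is an illustrative reconstruction under stated assumptions: the function names are hypothetical, hospitals are ranked on a single score per risk adjustment approach, quintiles are cut by rank position, and ties are broken by sort order.

```python
def assign_quintiles(scores):
    """Map each hospital to a quintile 0..4 by ascending score.

    scores: dict of hospital id -> performance score under one approach.
    """
    ordered = sorted(scores, key=scores.get)
    n = len(ordered)
    return {hospital: (rank * 5) // n for rank, hospital in enumerate(ordered)}


def quintile_shift_shares(scores_a, scores_b):
    """Share of hospitals whose quintile differs between two approaches."""
    q_a = assign_quintiles(scores_a)
    q_b = assign_quintiles(scores_b)
    shifts = [abs(q_a[h] - q_b[h]) for h in q_a]
    n = len(shifts)
    return {
        "moved_1_or_more": sum(s >= 1 for s in shifts) / n,
        "moved_2_or_more": sum(s >= 2 for s in shifts) / n,
    }


# Hypothetical scores for ten hospitals; the second approach swaps the
# best and worst hospital and leaves the rest unchanged.
medicare_scores = {f"h{i}": 0.1 * i for i in range(10)}
seer_scores = dict(medicare_scores)
seer_scores["h0"], seer_scores["h9"] = medicare_scores["h9"], medicare_scores["h0"]

shares = quintile_shift_shares(medicare_scores, seer_scores)
```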

Table 2.

Weighted kappa statistics for the comparison of the rankings of hospitals treating cancer patients based on their patients’ three and five year survival, alternatively adjusted based on “Medicare risk adjustment” and “SEER-Medicare risk adjustment”. Kappa reflects the correlation across quintiles of rankings: Landis and Koch cite Kappa statistics of 0.81 and greater as ‘near perfect’ correlation; Fleiss has labeled Kappa statistics greater than 0.75 as ‘excellent’.21, 22

| | Three-Year Mortality | Five-Year Mortality |
|---|---|---|
| Lung/Bronchus | 0.93 | 0.90 |
| Prostate | 0.92 | 0.92 |
| Breast | 0.84 | 0.86 |
| Colorectal | 0.86 | 0.81 |
| Other | 0.91 | 0.93 |
| Total | 0.89 | 0.87 |

Figure 2.

Comparison of ranks of hospitals with Medicare risk adjustment and SEER risk adjustment. The vertical bars delineate rank changes of more than 20% and more than 40%.

Other metrics, such as the extent of between-hospital variation explained and the correlation of performance measures, also showed agreement (eFigures 1 and 2). For instance, for all cancers combined, there was an unadjusted gap between 25th and 75th percentile survival probabilities of 24%. Risk adjustment reduced the magnitude of variation to a similar degree with either approach, with 25th to 75th percentile gaps of 10% after Medicare risk-adjustment and 8% after SEER-Medicare risk-adjustment. In terms of absolute correlation, all R-squared values were 0.80 or greater.

Sensitivity analysis

Excluding the 11% of patients who were assigned to a hospital indirectly in the SEER-Medicare data, through association with a doctor who treated other patients in the assigned hospital, or through the admitting patterns of that doctor’s other patients, did not change our findings meaningfully (data not shown).

DISCUSSION

A recent report from the Institute of Medicine (IOM) raised far reaching questions about the quality of cancer care available to patients.27 The scrutiny is appropriate. Measures such as hospital-specific volume for particular types of cancer care have regularly been associated with variations in both short- and long-term outcomes, as have other umbrella designations, such as hospital teaching status or NCI designation.2, 4, 26 In these prior analyses, risk-adjustment generally included cancer-specific data on stage and timing of diagnosis, and so the geographic scope of these analyses is limited to the few parts of the country where such information has been routinely linked to Medicare claims. The SEER-Medicare dataset is the most widely used resource for these types of studies, and it currently covers about 26% of the US population.

In our analysis, we also show large and persistent risk-adjusted differences in outcomes of cancer patients associated with the type of treating hospital. The findings suggest that when compared to care at community hospitals, there appears to be superior survival for patients treated at those cancer hospitals that are exempt from the prospective payment system, at NCI designated cancer centers and at academic teaching hospitals, all findings consistent with prior reports. But because these analyses only use Medicare data for risk adjustment, a critical question is whether the lack of cancer-specific data on each treated patient, which can only be obtained readily from cancer registries, matters for performance assessment at the hospital level.

Our findings examining SEER-Medicare data strongly suggest that the disease specific information available in cancer registries, although undoubtedly influential on individual patient outcomes, may not be routinely needed for risk adjustment of performance measures at the hospital level. Comparing outcomes adjusted for only Medicare data and then with both Medicare and SEER data, we found that hospital ranks were stable, weighted kappa statistics signaled very high agreement, the explanatory power for overall variation was similar, and linear measures of performance scores were highly correlated.

In other words, using Medicare claims alone to identify, risk adjust, and evaluate outcomes may be an adequate way of understanding cancer care at the hospital level. If true, this would bring cancer care outcome evaluation alongside many measures for other conditions that rely on administrative data alone and have been endorsed by the National Quality Forum and incorporated by the Centers for Medicare and Medicaid Services in programs for assessing quality and calculating value-based payment rates.14, 28 Some cover cardiovascular disease outcomes, others in development focus on orthopedic care and pneumonia.29, 30 Each relies on administrative claims to both capture events and to risk adjust.31 Comorbidities are usually taken directly from ICD-9 codes, or alternatively from a pre-specified clustering such as the CMS hierarchical classification coding system. Some adjust for socio-economic variables as we do, others do not.

An early validation step of all of these approved and widely used measures parallels what we have done in this analysis. Risk-adjusted outcomes based on administrative data alone were compared with outcomes that were risk adjusted additionally with more granular data, such as that found in medical records.32 Demonstration of high correlation of measures with and without the additional medical records based information was one of the critical steps in validating the administrative data based measures. Using parallel logic, our analyses suggest that survival can be assessed at the hospital level with risk adjustment that lacks information on individual patients’ cancer timing and stage. At a minimum, our analyses suggest that the blanket assumption that patient level cancer information is required for risk adjustment of performance measures in cancer may be incorrect.

Some limitations should be noted. SEER-Medicare data are not perfectly representative of the US population of cancer patients, but this should not have biased the findings.15 Our method depends both on the ability to assign patients to a particular hospital, and then to ascertain their risk-adjusted outcomes. Using a method analogous to that employed by the Dartmouth Atlas of Healthcare, we found that the vast majority of patients can be assigned to a dominant provider or hospital unambiguously, and that findings at the hospital level were robust to the exclusion or inclusion of patients who could only be assigned inferentially.33

Comorbidity and other types of risk-adjustment are intrinsically controversial. Perhaps the most common rebuke to any outcome measure is the concern that patients are sicker than the method could capture. The comorbidity grouper used here relies on commercial software from 3M that is designed for gauging outcomes and forecasting expenditures, the purposes for which we are using it here. Although it is proprietary, its highly detailed documentation is in the public domain, and, as previously mentioned, the software is already in use by the Medicare program and several state Medicaid programs for various purposes.16, 17, 34

Performance measurement and ranking itself are also subjects of controversy. Critics of the approach might argue that even a reclassification of a handful of hospitals under alternative risk adjustment approaches undermines the credibility of the endeavor. We believe that instead our findings support cautious movement towards profiling hospitals for their performance, and perhaps using those assessments for quality assessment and payment initiatives. We believe this because we gauge the impact on cancer patients of the outcome differences we report to be of much more importance than the possible small impact of a very occasional misclassification of a hospital, particularly given that the hospital differences shown in our analyses involve the death of patients, while the anticipated impact of hospital misclassification would be modest and economic. A focus on risk adjusted outcomes would also direct more attention to care processes that might affect outcomes even if they are not currently measured or subject to public reporting.

In our analyses we include factors that adjust for a patient's socioeconomic status while CMS risk adjustment in some of its programs does not. This divergence from their methods reflects a philosophical difference of opinion. Ours is that economic status predicts outcomes and thus should be considered. We also capture comorbidities after diagnosis, while other approaches only look to the year prior to the hospitalization for risk adjustment.29, 30, 32, 35 We do so based on the desire to assign CRGs to all patients, and an a priori expectation that comorbidities and other predictors of outcome specific to cancer are likely ascertained in a more accurate fashion during the process of initial management of a patient’s cancer rather than before their diagnosis.36

Our findings suggest an opportunity to use administrative data to assess the quality of cancer care provided by US hospitals. The major impediment to doing so has been a long held belief that adequate risk-adjustment could not be accomplished without information regarding stage of disease and other details included in cancer registries and medical records. We find no support for that belief. Rather, information on cancer stage and timing of diagnosis adds little to insights garnered from administrative data on hospital performance.

That there are very sizable differences in outcomes between hospitals may explain why stage data seem not to be important – the actual differences are of much greater magnitude than small differences in stage mix could explain. These large differences reinforce the conclusion drawn by the IOM that the quality of cancer care in the United States is inconsistent and should be improved. In order to do so, we must first be able to observe, measure and compare it both reliably and efficiently. The methodology we describe provides a possible starting point.

Supplementary Material

Acknowledgments

Funding/Support: This study was funded by internal Memorial Sloan Kettering Cancer Center funds and by Memorial Sloan Kettering Cancer Center Support Grant/Core Grant P30 CA 008748.

Role of the Funder/Sponsor: The funder had no role in the design and conduct of the study; collection, management, analysis and interpretation of the data; preparation, review or approval of the manuscript; or decision to submit the manuscript for publication.

Additional Contributions: We would like to thank Geoffrey Schnorr, BS (Memorial Sloan Kettering Cancer Center), for research assistance, and Norbert Goldfield, MD (3M), for his insight and guidance with respect to the 3M CRG software, neither of whom were compensated for their contributions.

Footnotes

Author Contributions: Mr Rubin had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Study concept and design: Pfister, Rubin, Neill, Duck, Radzyner, Bach.

Acquisition, analysis, or interpretation of data: Pfister, Rubin, Elkin, Neill, Duck, Radzyner, Bach.

Drafting of the manuscript: Pfister, Rubin, Elkin, Neill, Duck, Radzyner, Bach.

Critical revision of the manuscript for important intellectual content: Pfister, Rubin, Elkin, Neill, Duck, Radzyner, Bach.

Statistical analysis: Rubin, Duck, Radzyner.

Administrative, technical, or material support: Pfister, Rubin, Neill, Duck, Radzyner, Bach.

Study supervision: Pfister, Radzyner, Bach.

Conflict of Interest Disclosures: All authors are employed by a PPS-exempt cancer hospital, Memorial Sloan Kettering Cancer Center. No other conflicts are reported.

References

- 1.Murphy SL, Xu J, Kochanek KD. Deaths: final data for 2010. National vital statistics reports. 2013;61(4):1–118. [PubMed] [Google Scholar]

- 2.Birkmeyer NJ, Goodney PP, Stukel TA, Hillner BE, Birkmeyer JD. Do cancer centers designated by the National Cancer Institute have better surgical outcomes? Cancer. 2005 Feb 1;103(3):435–441. doi: 10.1002/cncr.20785. [DOI] [PubMed] [Google Scholar]

- 3.Cheung MC, Hamilton K, Sherman R, et al. Impact of teaching facility status and high-volume centers on outcomes for lung cancer resection: an examination of 13,469 surgical patients. Ann Surg Oncol. 2009 Jan;16(1):3–13. doi: 10.1245/s10434-008-0025-9. [DOI] [PubMed] [Google Scholar]

- 4.Petitti D, Hewitt M. Interpreting the volume-outcome relationship in the context of cancer care. National Academies Press; 2001. [PubMed] [Google Scholar]

- 5.Clough JD, Patel K, Riley GF, Rajkumar R, Conway PH, Bach PB. Wide variation in payments for medicare beneficiary oncology services suggests room for practice-level improvement. Health Aff (Millwood) 2015 Apr 1;34(4):601–608. doi: 10.1377/hlthaff.2014.0964. [DOI] [PubMed] [Google Scholar]

- 6.Centers for Medicare & Medicaid Services CfMMI. Preliminary design for an oncology-focused model. 2014 [Google Scholar]

- 7.Newcomer LN, Gould B, Page RD, Donelan SA, Perkins M. Changing Physician Incentives for Affordable, Quality Cancer Care: Results of an Episode Payment Model. J Oncol Pract. 2014 Jul 8; doi: 10.1200/JOP.2014.001488. [DOI] [PubMed] [Google Scholar]

- 8.Matthews AW. Insurers Push to Rein In Spending on Cancer Care. [Accessed 4/13/2015]; http://www.wsj.com/articles/insurer-to-reward-cancer-doctors-for-adhering-to-regimens-1401220033. [Google Scholar]

- 9.Consumer Reports. Hospital Rankings by State. [Accessed 8/27/2014]; http://www.consumerreports.org/health/doctors-hospitals/hospital-ratings.htm. [Google Scholar]

- 10.U.S. News & World Report. Top-Ranked Hospitals for Cancer. [Accessed 8/27/2014]; http://health.usnews.com/best-hospitals/rankings/cancer. [Google Scholar]

- 11. Cassel CK. Health Care Quality: The Path Forward. Testimony prepared for the Senate Finance Committee. 2013 Jun 26. [Accessed 8/29/2014]; http://www.qualityforum.org/News_And_Resources/Press_Releases/2013/New_National_Quality_Forum_%28NQF%29_CEO_Dr__Christine_Cassel_Testifies_at_U_S__Senate_Finance_Committee_Hearing_on_June_26th.aspx.

- 12. Bach PB, Schrag D, Begg CB. Resurrecting treatment histories of dead patients: a study design that should be laid to rest. JAMA. 2004 Dec 8;292(22):2765–2770. doi: 10.1001/jama.292.22.2765.

- 13. Werner RM, Bradlow ET. Relationship between Medicare's hospital compare performance measures and mortality rates. JAMA. 2006 Dec 13;296(22):2694–2702. doi: 10.1001/jama.296.22.2694.

- 14. National Quality Forum. Quality Positioning System. [Accessed 8/22/2014]; http://www.qualityforum.org/QPS/QPSTool.aspx.

- 15. Warren JL, Klabunde CN, Schrag D, Bach PB, Riley GF. Overview of the SEER-Medicare data: content, research applications, and generalizability to the United States elderly population. Med Care. 2002 Aug;40(8 Suppl):IV-3–IV-18. doi: 10.1097/01.MLR.0000020942.47004.03.

- 16. Hughes JS, Averill RF, Eisenhandler J, et al. Clinical Risk Groups (CRGs): a classification system for risk-adjusted capitation-based payment and health care management. Med Care. 2004 Jan;42(1):81–90. doi: 10.1097/01.mlr.0000102367.93252.70.

- 17. 3M Health Information Systems. 3M Clinical Risk Grouping Software. [Accessed 2/24/2015]; http://solutions.3m.com/wps/portal/3M/en_US/Health-Information-Systems/HIS/Products-and-Services/Products-List-A-Z/Clinical-Risk-Grouping-Software/

- 18. Greene FL. AJCC cancer staging manual. Vol. 1. Springer; 2002.

- 19. Brenner H, Kliebsch U. Dependence of weighted kappa coefficients on the number of categories. Epidemiology. 1996 Mar;7(2):199–202. doi: 10.1097/00001648-199603000-00016.

- 20. Cohen J. Weighted kappa: nominal scale agreement with provision for scaled disagreement or partial credit. Psychol Bull. 1968 Oct;70(4):213–220. doi: 10.1037/h0026256.

- 21. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977 Mar;33(1):159–174.

- 22. Fleiss JL, Levin B, Paik MC. Statistical methods for rates and proportions. John Wiley & Sons; 2013.

- 23. Fisher ES, Wennberg DE, Stukel TA, Gottlieb DJ, Lucas FL, Pinder EL. The implications of regional variations in Medicare spending. Part 1: the content, quality, and accessibility of care. Ann Intern Med. 2003 Feb 18;138(4):273–287. doi: 10.7326/0003-4819-138-4-200302180-00006.

- 24. Fisher ES, Wennberg DE, Stukel TA, Gottlieb DJ, Lucas FL, Pinder EL. The implications of regional variations in Medicare spending. Part 2: health outcomes and satisfaction with care. Ann Intern Med. 2003 Feb 18;138(4):288–298. doi: 10.7326/0003-4819-138-4-200302180-00007.

- 25. Begg CB, Riedel ER, Bach PB, et al. Variations in morbidity after radical prostatectomy. N Engl J Med. 2002 Apr 11;346(15):1138–1144. doi: 10.1056/NEJMsa011788.

- 26. Ayanian JZ, Weissman JS. Teaching hospitals and quality of care: a review of the literature. Milbank Q. 2002;80(3):569–593, v. doi: 10.1111/1468-0009.00023.

- 27. Levit L, Balogh E, Nass S, Ganz PA. Delivering High-Quality Cancer Care: Charting a New Course for a System in Crisis. National Academies Press; 2013.

- 28. Centers for Medicare & Medicaid Services. Claims-Based Measures. [Accessed 10/1/2014]; http://www.qualitynet.org/dcs/ContentServer?c=Page&pagename=QnetPublic%2FPage%2FQnetTier2&cid=1228763452133.

- 29. Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006 Apr 4;113(13):1683–1692. doi: 10.1161/CIRCULATIONAHA.105.611186.

- 30. Bratzler DW, Normand SL, Wang Y, et al. An administrative claims model for profiling hospital 30-day mortality rates for pneumonia patients. PLoS One. 2011;6(4):e17401. doi: 10.1371/journal.pone.0017401.

- 31. Bach PB. A map to bad policy--hospital efficiency measures in the Dartmouth Atlas. N Engl J Med. 2010 Feb 18;362(7):569–573; discussion 574. doi: 10.1056/NEJMp0909947.

- 32. Grosso L, Schreiner G, Wang Y. 2009 Measures Maintenance Technical Report: Acute Myocardial Infarction, Heart Failure, and Pneumonia 30-Day Risk-Standardized Mortality Measures. 2009.

- 33. Morden NE, Chang CH, Jacobson JO, et al. End-of-life care for Medicare beneficiaries with cancer is highly intensive overall and varies widely. Health Aff (Millwood). 2012 Apr;31(4):786–796. doi: 10.1377/hlthaff.2011.0650.

- 34. Averill RF, Goldfield N, Eisenhandler J, et al. Development and evaluation of clinical risk groups (CRGs). Wallingford, CT: 3M Health Information Systems; 1999.

- 35. Horwitz L, Partovian C, Lin Z, et al. Hospital-wide (all-condition) 30-day risk-standardized readmission measure. [Retrieved September 2011]; Yale New Haven Health Services Corporation/Center for Outcomes Research & Evaluation. 2012 10.

- 36. Song Y, Skinner J, Bynum J, Sutherland J, Wennberg JE, Fisher ES. Regional variations in diagnostic practices. N Engl J Med. 2010;363(1):45–53. doi: 10.1056/NEJMsa0910881.
