Abstract

Visual motion responses in the brain are shaped by two distinct sources: the physical movement of objects in the environment and motion resulting from one's own actions. The latter source, termed visual reafference, stems from movements of the head and body, and in primates from the frequent saccadic eye movements that mark natural vision. To study the relative contribution of reafferent and stimulus motion during natural vision, we measured fMRI activity in the brains of two macaques as they freely viewed >50 hours of naturalistic video footage depicting dynamic social interactions. We used eye movements obtained during scanning to estimate the level of reafferent retinal motion at each moment in time. We also estimated the net stimulus motion by analyzing the video content during the same time periods. Mapping the responses to these distinct sources of retinal motion, we found a striking dissociation in the distribution of visual responses throughout the brain. Reafferent motion drove fMRI activity in the early retinotopic areas V1, V2, V3, and V4, particularly in their central visual field representations, as well as lateral aspects of the caudal inferotemporal cortex (area TEO). However, stimulus motion dominated fMRI responses in the superior temporal sulcus, including areas MT, MST, and FST as well as more rostral areas. We discuss this pronounced separation of motion processing in the context of natural vision, saccadic suppression, and the brain's utilization of corollary discharge signals.

SIGNIFICANCE STATEMENT Visual motion arises not only from events in the external world, but also from the movements of the observer. For example, even if objects are stationary in the world, the act of walking through a room or shifting one's eyes causes motion on the retina. This “reafferent” motion propagates into the brain as signals that must be interpreted in the context of real object motion. The delineation of whole-brain responses to stimulus versus self-generated retinal motion signals is critical for understanding visual perception and is of pragmatic importance given the increasing use of naturalistic viewing paradigms. The present study uses fMRI to demonstrate that the brain exhibits a fundamentally different pattern of responses to these two sources of retinal motion.

Keywords: free viewing, macaque, MT, reafference, stimulus motion, V1

Introduction

When animals use their vision to perceive and act upon their surroundings, their visual cortex draws upon retinal signals whose structure is strongly shaped by self-generated actions. For example, each time a human or nonhuman primate makes a saccadic eye movement, which typically occurs 2–3 times per second, the image is abruptly swept across the retinal surface, with velocities that overlap with the movement of real objects (Westheimer, 1954; Zuber et al., 1965). Self-induced retinal stimulation caused by saccadic, head, or bodily movements, collectively termed visual reafference, has been shown to elicit neural responses in the retina and the brain (Wurtz, 1968; Noda and Adey, 1974; Toyama et al., 1984; Galletti et al., 1990; Thier and Erickson, 1992; Bair and O'Keefe, 1998; Leopold and Logothetis, 1998; Vinje and Gallant, 2000). How the brain treats these self-generated signals is a long-standing, though often ignored, question in visual neuroscience. In some areas of the primate visual cortex, reafferent neural responses are known to closely resemble those elicited by external stimuli (Wurtz, 1968; Galletti et al., 1984, 1988; Toyama et al., 1984; Bair and O'Keefe, 1998; Leopold and Logothetis, 1998; Vinje and Gallant, 2000). Moreover, reafferent events often occur in conjunction with real stimulus motion, as when an agent shifts their gaze over a dynamic scene. A fundamental challenge of the brain is to compensate for, or ignore, self-induced visual activity to accurately perceive the movement of objects in the external world (Holst and Mittelstaedt, 1950; Moeller et al., 2007; Ibbotson et al., 2008; Bremmer et al., 2009; Inaba and Kawano, 2014).

In contrast to conventional visual testing paradigms, where a subject typically fixates a small spot while well-defined visual patterns are briefly flashed onto a screen, natural visual input entails a rich and dynamic mixture of external stimuli combined with self-determined reafferent events. In recent years, visual neuroscience has increasingly recognized the need to address aspects of vision that are not captured by conventional testing (Einhäuser and König, 2010). Several laboratories have begun to use free viewing paradigms, often in combination with dynamic video stimuli (DiCarlo and Maunsell, 2000; Vinje and Gallant, 2000; Hasson et al., 2004, 2010; Hanson et al., 2007; Bartels et al., 2008; Berg et al., 2009; Huth et al., 2012; Marsman et al., 2013; McMahon et al., 2015; Russ and Leopold, 2015). These approaches complement more traditional fixation paradigms and offer new perspectives on the brain's integration of visual information during active vision. In a previous study, we investigated the brain's processing of multiple visual features that were simultaneously present in videos depicting a range of social interactions (Russ and Leopold, 2015). We found that the motion content of the video drove fMRI responses in the extrastriate visual cortex more strongly than any other identified stimulus feature. The predominance of motion extended even to face patches, which were identified by tracking the face content of the movies, but were more strongly driven by the motion content. In that study, we briefly acknowledged the potentially important contribution of eye movements.

Here we turn our attention to the basic question of how reafferent input from eye movements affects brain activity during natural vision. Using a large amount of free viewing data from the scanner (Russ and Leopold, 2015), we compare brain responses due to reafferent motion with those due to stimulus motion. In contrast to the previous study, where the analyses were performed on the mean fMRI response over a large number of presentations, the current study evaluates each presentation separately to assess the unique contribution of stimulus versus self-generated motion. The results show that reafferent motion generates a fundamentally different, and complementary, pattern of fMRI responses compared with the motion inherent in the stimulus. Reafferent responses were pronounced in the early retinotopic visual cortex, where stimulus motion responses were minimal. Stimulus motion was much stronger in known motion-sensitive areas in and around area MT. We discuss these findings in the context of natural vision and potential mechanisms for the active modulation of visual processing by eye movements.

Materials and Methods

Subjects.

Two adult female rhesus monkeys (ages 5–6 years at the time of data collection) participated in the current study. All procedures were approved by the Animal Care and Use Committee of the United States National Institutes of Health, National Institute of Mental Health, and followed National Institutes of Health guidelines. Monkeys were pair housed when possible and kept on a 12 hour light/dark cycle with access to food 24 hours/d. After collar and chair training, custom-designed fiberglass headposts were implanted on the animals' skulls to immobilize their heads during data acquisition. Animals were gradually acclimated to head immobilization using positive reinforcement with juice and treats. During periods of experimental testing, access to water was restricted before testing 5 d/week, such that the animals were motivated to earn liquid reward for free viewing of the video stimuli. Weights and hydration levels were continuously monitored to ensure the animals' health and well-being.

Video stimuli.

Each 5 min movie was edited together from a set of commercially produced nature documentaries. In our primary analysis, we used data from movies that depicted other animals, primarily macaques, engaged in natural behaviors including, but not limited to, grooming, aggression, feeding, sleeping, copulating, and climbing. A total of 15 such movies were presented over the course of the experimental sessions. In addition to these “social” movies featuring animals, the monkeys also viewed “nonsocial” movies, which we evaluated as part of control analyses. These nonsocial movies contained scenes that also featured visual motion, including, but not limited to, storms, rivers, and natural disasters, but no animals. All movies were encoded at 30 frames/s and a frame resolution of 640 × 480 pixels. Further details on movie content are given by Russ and Leopold (2015).

Free viewing task.

Eye position was monitored and recorded using a 60 Hz MR-compatible infrared camera (MRC Systems) and transmitted to an eye tracking system (SensoMotoric Instruments). Animals were first calibrated through serial fixation to small spots of light at predetermined points on the screen that encompassed the movies' field of view. Eye position was calibrated multiple times throughout the experimental session, minimally after every second movie presentation, to ensure accurate eye movement data. After calibration, subjects engaged in the free-viewing task, in which they were permitted to direct their eyes to features of interest while watching 5 min videos. The animals were rewarded every 2 s for maintaining their gaze within the 10° × 8° area subtended by the video stimulus. The amount of liquid per reward instance grew steadily during periods of continuous movie watching. The animals were permitted to blink normally without penalty. However, breaks in movie viewing lasting >128 ms, in the form of prolonged eye closure or direction of gaze away from the stimulus, led to an automatic resetting of the reward volume to the initial amount. We (McMahon et al., 2015) and others (e.g., Mosher et al., 2011) have previously reported that the animals often attend to movies without reward, especially when presented with novel movies. However, as the current paradigm involved the repeated presentation of the same movies, we found that this reward scheme helped to maintain the animals' consistent motivation while having no discernible effect on gaze behavior. During an fMRI session lasting on average 2.5 h, each subject typically viewed three different movies between three and four times each, in pseudorandom order. The two monkeys participated in a total of 602 viewings of 15 five-min videos (347 in Monkey M1, 255 in Monkey M2, average 20 viewings per video). The subjects' gaze was directed consistently toward the video stimulus (Subject M1, 87%; Subject M2, 87%).

fMRI scanning.

Structural and functional magnetic resonance images were collected using a 4.7 tesla, 60 cm vertical scanner (Bruker Biospec) equipped with a Bruker S380 gradient coil. Functional EPIs were acquired using an 8 channel transmit and receive RF coil system (Rapid MR International). During each session (M1, 50 sessions; M2, 44 sessions), whole-brain images were acquired as 40 sagittal slices, with isotropic voxels of 1.5 mm and a TR of 2.4 s. Monocrystalline iron oxide nanoparticles (8–10 mg/kg), which act as a T2* contrast agent, were administered intravenously before the start of each scanning session (Leite et al., 2002).

fMRI analysis.

fMRI data were analyzed using custom-written MATLAB (The MathWorks) programs as well as the AFNI/SUMA software package developed at the National Institutes of Health (Cox, 1996). Raw images were first converted from Bruker into AFNI data file format. Motion correction algorithms were applied to each EPI time course using the AFNI function 3dvolreg, followed by correction for static magnetic field inhomogeneities using the PLACE algorithm (Xiang and Ye, 2007). Each session was then registered to a template session, allowing for the combination of data across multiple testing days. The first 7 TRs (16.8 s) of each movie were not considered in the analysis so as to eliminate the hemodynamic onset response associated with the initial presentation of each video. Surface and flat maps were generated using a combination of AFNI (Cox, 1996) and CARET (Van Essen et al., 2001) software packages. The high-resolution anatomy volume was skull stripped and normalized using AFNI and then imported into CARET. Within CARET, surface and flattened maps were generated from a white matter mask, and exported to SUMA for viewing.

Models of self-induced motion and stimulus motion.

For each viewing, we created a set of time-varying models to investigate the influence of self-induced and stimulus motion from each presentation of a movie using programs custom written in MATLAB (The MathWorks). We based the model of self-induced (reafferent) motion on the eye movements expressed on each trial. Following extensive pilot experimentation with different reafference models, we settled on a straightforward estimation of reafference based on the moment-to-moment velocity of the eyes. Specifically, we calculated the time-varying 2D velocity vector during each 5 min viewing period. Because reafferent sweeps of the stimulus are coherent across the retina during eye movements, this velocity measure served as a good approximation of the mean reafferent motion speed, similar to earlier approximations of reafferent stimulation using eye position parameters (Thiele et al., 2002). Across all viewings of the social movie set, the median saccade amplitude was 3.1° and the median peak velocity was 82.4°/s, consistent with previous findings (Berg et al., 2009). Averaged over time, including periods of fixation, the mean speed of this self-induced motion across all movies was 10.5°/s. Because eye movements differed across viewings of the same movie clip, each data session was associated with its unique model of self-induced motion.
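As a rough illustration of the eye velocity measure described above, the following sketch computes instantaneous eye speed from gaze position samples. It is not the authors' MATLAB code: the function name `eye_speed`, the finite-difference scheme, and the toy gaze trace are assumptions made for illustration; only the 60 Hz sampling rate comes from the text.

```python
import numpy as np

def eye_speed(x_deg, y_deg, fs_hz=60.0):
    """Return instantaneous eye speed (deg/s) from gaze position samples (deg)."""
    x = np.asarray(x_deg, dtype=float)
    y = np.asarray(y_deg, dtype=float)
    # Finite-difference velocity components, scaled from deg/sample to deg/s.
    vx = np.gradient(x) * fs_hz
    vy = np.gradient(y) * fs_hz
    # Speed is the magnitude of the 2D velocity vector.
    return np.hypot(vx, vy)

# Toy trace (hypothetical): fixation, a 3 deg horizontal saccade, fixation.
x = np.concatenate([np.zeros(30), np.linspace(0.0, 3.0, 4), np.full(30, 3.0)])
y = np.zeros_like(x)
speed = eye_speed(x, y)
```

Averaging `speed` over a whole viewing, fixations included, would give a time-averaged value of the kind reported in the text.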

To estimate stimulus motion, we computed the mean instantaneous speed of motions in the video on the image plane (Elias et al., 2006; Russ and Leopold, 2015) for each frame. To minimize the contributions of small luminance changes, movies were first down-sampled to ¼ their original image size and to 10 frames per second. We then computed an image intensity differential at each pixel within a frame (horizontally and vertically) and across consecutive frames of the movies. These differentials were combined, using the sum of their squares, to calculate the velocity of change at each pixel, with the magnitude of the velocities averaged to compute the mean speed (in degrees per second) for each frame. For repeated presentations of the same movie, the time course stimulus-motion models were identical.
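The text does not fully specify how the spatial and temporal differentials were combined, so the sketch below shows only one plausible reading: a gradient-based "normal flow" estimate, in which the speed at each pixel is the temporal differential divided by the magnitude of the spatial gradient (the square root of the sum of squared horizontal and vertical differentials). The function name `mean_stimulus_speed`, the `deg_per_px` conversion factor, and the `eps` threshold are hypothetical and are not taken from the cited method.

```python
import numpy as np

def mean_stimulus_speed(prev_frame, next_frame, fps=10.0, deg_per_px=1.0, eps=1e-6):
    """Mean image speed (deg/s) between two grayscale frames (assumed reading)."""
    a = np.asarray(prev_frame, dtype=float)
    b = np.asarray(next_frame, dtype=float)
    # Spatial differentials of the frame average (rows = vertical, cols = horizontal).
    iy, ix = np.gradient(0.5 * (a + b))
    # Temporal differential across consecutive frames.
    it = b - a
    grad_sq = ix ** 2 + iy ** 2
    # Skip flat regions, where a gradient-based speed is undefined.
    valid = grad_sq > eps
    if not valid.any():
        return 0.0
    speed_px_per_frame = np.abs(it[valid]) / np.sqrt(grad_sq[valid])
    return float(speed_px_per_frame.mean() * fps * deg_per_px)

# Toy input (hypothetical): a horizontal luminance ramp shifted right by one pixel.
frame0 = np.tile(np.arange(32, dtype=float), (24, 1))
frame1 = frame0 - 1.0
moving = mean_stimulus_speed(frame0, frame1, fps=10.0, deg_per_px=0.05)
static = mean_stimulus_speed(frame0, frame0, fps=10.0, deg_per_px=0.05)
```

Applying the function to every pair of consecutive down-sampled frames would yield one speed value per frame, which is the form the stimulus motion model takes in the text.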

Each model (self-induced motion and stimulus motion) was subsequently down-sampled and low-pass filtered using the decimate function in MATLAB to match the temporal resolution of the fMRI data (i.e., 1 sample per 2.4 s), convolved with an estimate of the hemodynamic response function for monocrystalline iron oxide nanoparticles, and concatenated across all presentations for comparison with the fMRI data (see Fig. 1). It is important to note that such convolution acts as a low-pass filter and, together with the hemodynamic constraints of the fMRI signal, naturally places emphasis on slow changes. In the case of self-induced motion, changes in eye velocity correlated relatively strongly with slow changes in saccade frequency (r ≈ 0.75). However, as eye velocity is more directly related to visual reafference, we used this as the basis of our reafference model.
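The resampling and convolution steps can be sketched as follows. This is a simplified stand-in, not the authors' pipeline: block averaging replaces MATLAB's `decimate`, and the gamma-shaped kernel with its `shape` and `scale_s` parameters is a placeholder rather than the response function estimated in the study. The factor of 144 simply reflects 60 Hz samples per 2.4 s TR.

```python
import numpy as np

def block_downsample(signal, factor):
    """Low-pass and downsample by averaging non-overlapping blocks."""
    s = np.asarray(signal, dtype=float)
    n = (len(s) // factor) * factor
    return s[:n].reshape(-1, factor).mean(axis=1)

def gamma_kernel(tr_s=2.4, n_taps=15, shape=2.0, scale_s=4.0):
    """Placeholder gamma-shaped response kernel sampled at the TR, unit sum."""
    t = np.arange(n_taps) * tr_s
    k = t ** (shape - 1.0) * np.exp(-t / scale_s)
    return k / k.sum()

def make_regressor(model, factor, kernel):
    """Downsample a motion model to the TR and convolve it with the kernel."""
    down = block_downsample(model, factor)
    # Truncating the full convolution to the input length keeps the filter causal.
    return np.convolve(down, kernel)[: len(down)]

# Toy input (hypothetical): a constant-speed model sampled at 60 Hz for 5 min.
model = np.ones(18000)
regressor = make_regressor(model, factor=144, kernel=gamma_kernel())
```

Regressors built this way for each viewing could then be concatenated across presentations, as described above.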

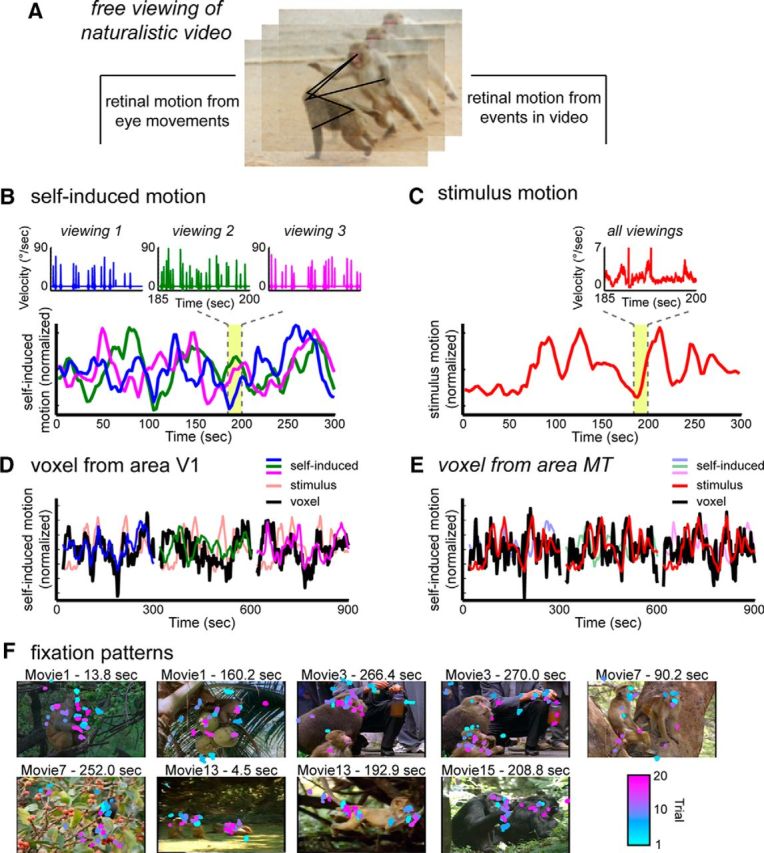

Figure 1.

Creation of time course models for self-induced motion and stimulus motion from the eye movement traces and video stimulus, respectively. A, Subjects freely viewed the events in dynamic movies; thus, two types of visual motion events were present on the retina. These two motion components were decomposed into time courses to create functional maps using the simultaneously recorded fMRI data. B, Self-induced (reafferent) motion was approximated using the measured eye velocity. Eye traces differed for different viewings. Inset, Detailed example. Consequently, a unique self-induced motion function was applied to each dataset (different colored traces). C, Stimulus motion was computed based on the optic flow within the video. For each frame, the mean speed of optic flow was calculated across the entire screen. For all viewings, the self-induced motion and stimulus motion time courses were convolved with a hemodynamic response function and then decimated to match the sampling rate of the fMRI scans. D, Example of self-induced motion responses in area V1 over a 15 min period. The V1 voxel time course closely follows that of the self-induced motion derived from the eye movements. E, Example of stimulus motion responses over the same 15 min period in MT. The MT voxel time course closely follows that of the stimulus motion derived from the content of the video stimulus. F, Representative still frames from the presented movies overlaid with the eye position from between 12 and 20 viewings of each movie. The color of each fixation represents the trial from which it came. Fixations were taken within a 200 ms window overlapping the exhibited frame.

Creation of functional maps.

To create functional maps of the relative contribution of reafferent and stimulus motion, we computed the partial correlation for each of the two motion models for all voxels in the brain. In contrast to our previous study (Russ and Leopold, 2015), this procedure was performed on raw fMRI data for each viewing, rather than on data that was averaged over multiple viewings. Analyzing each viewing independently is necessary because of the unique pattern of eye movements that contribute to the fMRI responses. In our present analysis, the fMRI data from the large number of viewings for each movie were concatenated rather than averaged, and then matched with the corresponding reafference model and stimulus motion model (in the case of the stimulus motion, the model was repeated for multiple viewings of the same movie). Together, we analyzed concatenated time courses of 102,600 s in Monkey M1 and 77,100 s for Monkey M2. The partial correlation method allowed us to map the unique variance attributed to each of the motion models, by first regressing out the variance explained by the other model. Thus, the analysis presented here emphasized how each variable uniquely contributes to a given voxel's response. The resulting partial correlation r values served as the basis for functional activity maps, revealing voxels that were more strongly associated with one or the other motion model.
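A minimal sketch of a partial correlation for one voxel is given below, following the residual-based definition: the other model is regressed out of both the voxel time course and the model of interest, and the residuals are correlated. The function names and the synthetic sinusoidal signals are assumptions for illustration, not the authors' code or data.

```python
import numpy as np

def residualize(y, x):
    """Residuals of y after least-squares regression on x plus an intercept."""
    design = np.column_stack([np.ones(len(x)), x])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta

def partial_corr(voxel, model, other):
    """Correlation between voxel and model after regressing `other` out of both."""
    rv = residualize(voxel, other)
    rm = residualize(model, other)
    return float(np.corrcoef(rv, rm)[0, 1])

# Synthetic example (hypothetical): a voxel driven by the reafferent model only.
t = np.arange(1000) / 1000.0
reafferent = np.sin(2 * np.pi * 3 * t)
stimulus = np.sin(2 * np.pi * 5 * t)
noise = np.sin(2 * np.pi * 7 * t)
voxel = 2.0 * reafferent + noise

r_reafferent = partial_corr(voxel, reafferent, stimulus)
r_stimulus = partial_corr(voxel, stimulus, reafferent)
```

In this toy case the voxel has a high partial correlation with the reafferent model and essentially none with the stimulus model. Repeating the computation for every voxel would produce two maps of the kind described above.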

Determination of fMRI voxel speed tuning.

To assess the stimulus-based speed tuning of fMRI responses for individual voxels, we computed the percentage signal change during movie viewing as a function of the mean speed at each point in time. The stimulus motion model was binned into 10 different velocity ranges. For each velocity range, the average fMRI percentage signal change was calculated independently for each voxel. This analysis was applied to the main movies analyzed in this study, which were diverse in content but rich in social interaction (“social”), as well as to a dataset collected during the viewing of an additional set of movies, in which there was considerable motion but no animals were present (“nonsocial”).
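The binning procedure can be sketched as below, assuming equally spaced bins spanning the observed speed range and a percentage signal change array with one row per time point and one column per voxel. The function name `speed_tuning` and the toy data are hypothetical.

```python
import numpy as np

def speed_tuning(speed, pct_signal, n_bins=10):
    """Average percentage signal change per voxel within equally spaced speed bins."""
    speed = np.asarray(speed, dtype=float)
    pct = np.asarray(pct_signal, dtype=float)
    edges = np.linspace(speed.min(), speed.max(), n_bins + 1)
    # Digitizing against the interior edges yields bin indices 0..n_bins-1.
    idx = np.digitize(speed, edges[1:-1])
    tuning = np.full((n_bins,) + pct.shape[1:], np.nan)
    for b in range(n_bins):
        in_bin = idx == b
        if in_bin.any():
            tuning[b] = pct[in_bin].mean(axis=0)
    return edges, tuning

# Toy data (hypothetical): one voxel rises with speed, the other falls.
speed = np.linspace(0.0, 5.0, 100)
pct_signal = np.column_stack([speed, -speed])
edges, tuning = speed_tuning(speed, pct_signal)
```

Bins that contain no time points are left as NaN rather than zero, so empty speed ranges are not mistaken for an absent response.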

Results

Extracting separate time courses for self-induced and stimulus motion

Two monkey subjects viewed 15 different 5 min videos, on average 20 times per video, for a total of >50 hours of video viewing in the MRI scanner (see Materials and Methods). The videos were composed of multiple, spliced segments taken from nature documentaries. The segments depicted animals, primarily macaque monkeys, exhibiting a range of social behaviors. Previous reports have shown that macaque gaze patterns show consistency across viewings related to movie content and that rhesus monkeys' viewing patterns tend to fall on regions of social interest, such as faces (Shepherd et al., 2010; Mosher et al., 2011; McMahon et al., 2015). The specific gaze trajectories differed across presentations; however, within a given scene, the eyes were often directed to particular features of interest, and most often to animals and social interactions (Fig. 1F). Thus, each presentation's unique combination of self-directed eye movements and fixed video content allowed us to investigate how these two determinants of retinal motion simultaneously affected fMRI responses throughout the brain (Fig. 1A).

For self-induced motion, we created a model time course using the recorded eye velocity on each trial as a surrogate (Fig. 1B). This provided a good approximation of the induced image velocity because each eye movement sweeps the entire image coherently across the retina. This phase of the analysis ignored the motion content of the movie itself in an attempt to isolate the reafferent component of the input. By contrast, for stimulus motion, we created a model time course based on the analysis of the video content, ignoring trial-specific patterns of eye movements (Fig. 1C). As in our previous study, we subjected each video stream to an optic flow algorithm (Elias et al., 2006), from which we computed the mean speed on the screen over time (Russ and Leopold, 2015) (see Materials and Methods).

Following the independent extraction of the two models (self-induced motion and stimulus motion) for each of the sessions, the time course of each was convolved with the hemodynamic response function for comparison with fMRI voxel time courses. Examples of the relationships between these motion models and the fMRI activity of single voxels are shown in Figure 1, D and E. Here, the raw time courses of voxels from cortical areas V1 and MT are shown with the motion time course models for the viewing of three different 5 min video clips. These examples highlight the correspondence between the temporal fluctuations of voxel time courses in V1 (Fig. 1D) and MT (Fig. 1E), and the self-induced (eye movement-based) motion model and the stimulus (video-based) motion models. We next investigate how these two determinants of fMRI responses are expressed in voxels across the brain in the form of whole-brain functional maps.

Distinct areas showing fMRI responses to self-induced and stimulus motion

The comparison of fMRI responses associated with the self-induced and stimulus motion time courses revealed a striking dissociation in the spatial patterns of responses (Fig. 2A,B; shown for Subject M2). Analysis of self-induced motion demonstrated prominent fMRI correlation in a large swath of the occipital cortex, corresponding to early retinotopic areas serving central vision (more detailed description below). Weak positive correlations were also observed in the caudal superior temporal sulcus (STS), in caudal aspects of the lateral inferotemporal cortex, and in parietal and prefrontal structures. The pattern of strong stimulation of early visual cortex resembled our previous findings related to the contrast and luminance of these naturalistic videos (Russ and Leopold, 2015), raising the possibility that the eye movement-based reafference might indeed relate to those low-level features. However, further analysis revealed that the eye movement-based reafference model was not appreciably correlated with either feature (Table 1), suggesting that the model captured independent self-induced motion signals that robustly activated early visual areas.

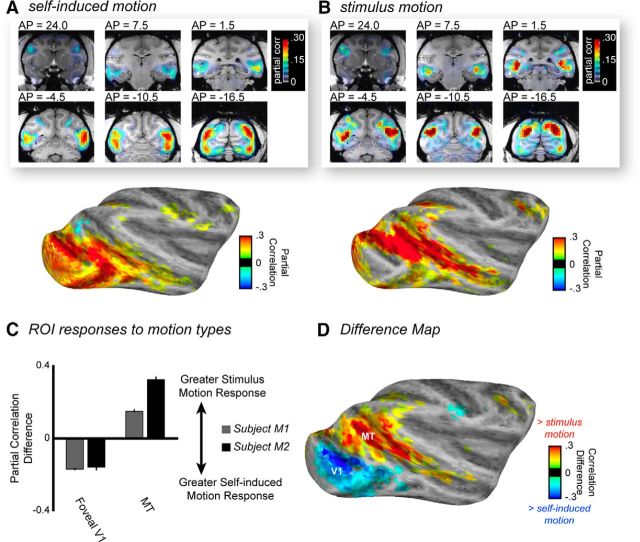

Figure 2.

Comparison of functional responses to self-induced motion versus stimulus motion throughout the brain. A, fMRI responses, from Subject M2, to self-induced motion, expressed as the partial correlation of voxel time courses throughout the brain over all sessions, are largely restricted to the occipital visual areas and caudolateral aspects of the inferotemporal cortex. B, fMRI responses to stimulus motion were nearly absent on the lateral surfaces of the occipital and temporal cortex but were strong throughout the STS. C, Quantitative comparison of the partial correlation of V1 and MT time courses with both motion types over all data sessions for both subjects. Error bars indicate SEM across voxels. D, fMRI map of the z-transformed difference in partial correlations, computed over all sessions for M2. There are distinct foci in the occipital and STS regions with opposite preferences for self-induced motion versus stimulus motion.

Table 1.

Self-induced motion's correlation with stimulus features

| Self-induced motion | Stimulus motion | Stimulus contrast | Stimulus luminance | Stimulus faces |
|---|---|---|---|---|
| Monkey 1 | 0.18 | 0.04 | −0.00 | 0.00 |
| Monkey 2 | 0.00 | 0.10 | −0.00 | −0.00 |

Independence of self-induced motion and other stimulus attributes in the videos. Values indicate, for each subject, the Pearson correlation coefficients between the self-induced (reafferent) motion model and stimulus motion, as well as three additional stimulus features (contrast, luminance, and faces) that gave rise to prominent fMRI responses in our previous work (Russ and Leopold, 2015). Importantly for the present study, the self-induced motion model is not strongly correlated with any of these stimulus parameters, suggesting that the measured functional maps reflect reafferent input rather than a physical movie feature.

Stimulus motion gave rise to a very different functional correlation map. Stimulus motion contributed minimally to fMRI responses in the central representation of the retinotopic visual areas and instead was the principal determinant of activity within the motion areas of the STS, consistent with our previous natural viewing results (Russ and Leopold, 2015). In addition, moderate correlation was observed in regions of the intraparietal sulcus (area LIP) and arcuate sulcus (area F5). Importantly, the maps for the stimulus motion analysis and the self-induced motion analysis were derived from the same datasets, demonstrating the brain's separate processing of the two types of input despite their co-occurrence in time. Further analysis revealed a fundamental difference in the nature of motion responses in two regions of interest (ROIs), namely, foveal V1 and MT (Fig. 2C). The V1 ROI responded significantly more to self-induced motion, whereas the MT ROI responded significantly more to stimulus motion (two-way ANOVA: interaction between cortical area and motion type, M1: F(1,390) = 117.7, p < 0.01; M2: F(1,186) = 176.1, p < 0.01; main effect of cortical area, with MT showing stronger responses than V1, M1: F(1,390) = 355.5, p < 0.01; M2: F(1,186) = 140.0, p < 0.01).

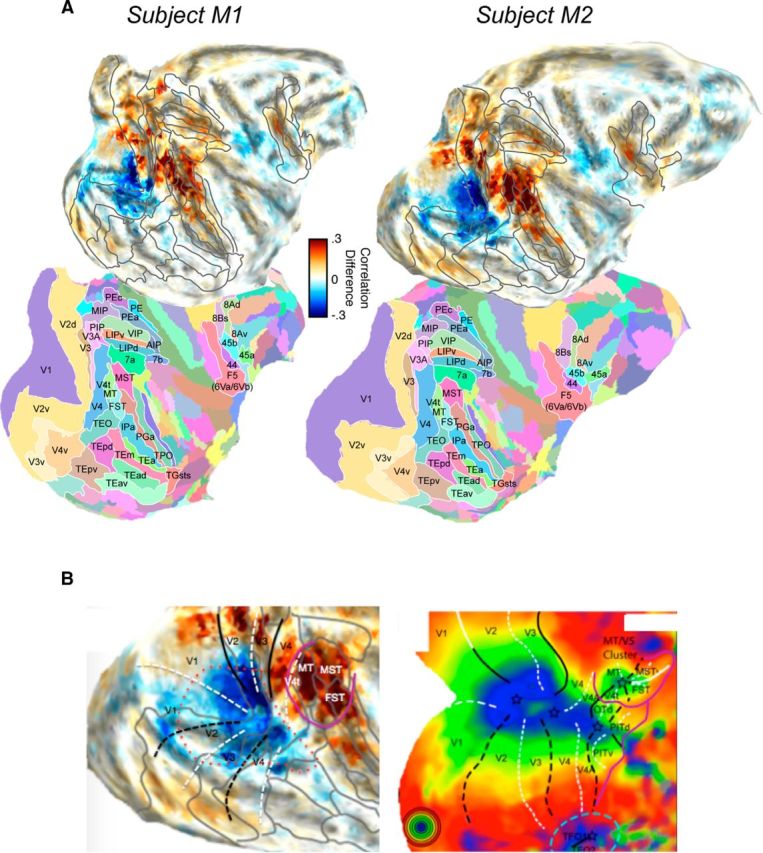

Across the brain, the relative preference for the two motion components is illustrated by the difference between the correlation maps (Fig. 2D), which shows reafference-dominated responses to be concentrated in a posterior ventral region and stimulus motion responses to be concentrated in an anterior dorsal region. The maps represent the difference of the Fisher z-transformed partial correlations on the inflated (Fig. 2D) or flattened (Fig. 3) cortical surface, with yellow/red areas representing regions more responsive to the stimulus motion component and cyan/blue areas representing regions more responsive to the reafferent motion component. In the case of the flattened maps, the Saleem and Logothetis histological atlas (Saleem and Logothetis, 2012; Reveley et al., 2016) was registered to the brain of each subject for further examination of the cortical areas differentially affected by the two types of motion. Approximations of areal boundaries from the atlas are superimposed on the unthresholded difference scores, allowing for area-by-area evaluation of the relative contribution of stimulus motion and self-induced motion during free viewing of the video.
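The difference score can be sketched as below using the standard Fisher transform, z = arctanh(r). The sign convention (stimulus minus reafferent, so that positive values correspond to the warm colors in the maps) is an assumption inferred from the color description, and the function name and example values are hypothetical.

```python
import numpy as np

def fisher_z_difference(r_stimulus, r_reafferent):
    """Difference of Fisher z-transformed partial correlations, per voxel.

    Assumed convention: positive favors stimulus motion, negative favors reafference.
    """
    z_stimulus = np.arctanh(np.asarray(r_stimulus, dtype=float))
    z_reafferent = np.arctanh(np.asarray(r_reafferent, dtype=float))
    return z_stimulus - z_reafferent

# Hypothetical partial correlations for two voxels with opposite preferences.
diff = fisher_z_difference([0.30, 0.05], [0.05, 0.30])
```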

Figure 3.

Detailed areal description of preference for self-induced motion versus stimulus motion for the two monkey subjects. A, Raw (unthresholded) differential activity is shown atop the corresponding areal atlas boundaries from the Saleem and Logothetis atlas (Saleem and Logothetis, 2012), registered independently to the two monkeys through warping of the 3D digital atlas volume (Reveley et al., 2016). Cyan/blue represent more responsivity to self-induced motion. Orange/red represent more responsivity to stimulus motion. B, Diagram comparison showing opposite biases for self-induced versus stimulus motion processing in two broad cortical representations of central vision. Left, The difference map from monkey M2, overlaid with coarse approximate meridian boundaries based on the atlas transitions. Right, fMRI activity map in macaque showing the visual eccentricity for the same regions (adapted from Orban et al., 2014). There are two foci for central vision, corresponding to the continuous foveal representation of the early retinotopic areas (red dotted line) and the caudal STS regions (surrounded by purple solid line). White dashed lines indicate the horizontal meridian. Black dashed lines indicate the vertical meridian.

Overall, the pattern of activity across the two subjects is markedly similar. The maps in Figure 3A reveal three potentially important aspects of the data that were observed in both animals. First, the activity associated with the two types of motion highlights the central visual representation of two distinct cortical foci (Kolster et al., 2009, 2014; Orban et al., 2014): one corresponding to the early retinotopic areas and one corresponding to caudal STS areas. While self-induced motion dominated the foveal representations that form a continuous chain from V1 to V4, stimulus motion dominated MT and its satellite areas in the STS (MST and FST), although as can be seen in Figure 2, self-induced motion did also activate these latter regions. A comparison of these two foci to the two visual representations of central vision emphasizes their opposite motion preferences (Fig. 3B). Second, other rostral areas within the STS responded to stimulus motion, including subregions of areas PGa, IPa, and TPO (Fig. 3A), which is consistent with high motion sensitivity demonstrated in face- and body-selective regions located in these areas (Polosecki et al., 2013; Fisher and Freiwald, 2015; Russ and Leopold, 2015). Third, the difference maps for both animals demonstrate a preference for stimulus motion in frontal cortex, localized to area F5, a multimodal region implicated in the processing of dynamic social stimuli and action observation (Rizzolatti et al., 2001; Nelissen et al., 2005). Together, these findings suggest consistent differentiation between self-induced and stimulus motion in cortical regions that contribute to the processing of socially relevant motion signals.

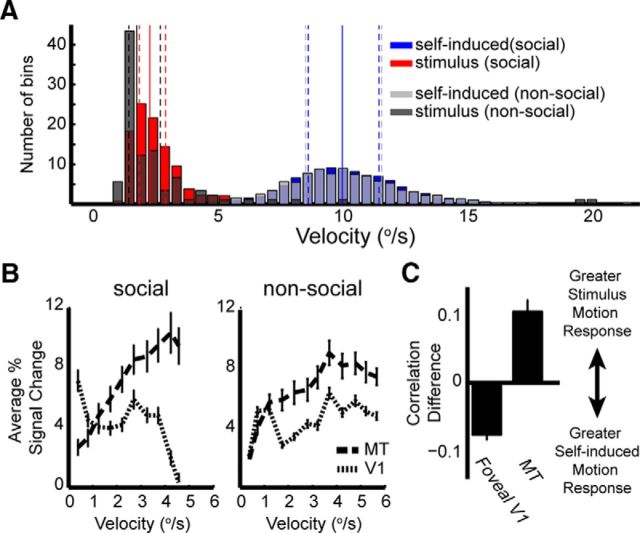

To further investigate the difference between V1 and MT, we asked whether the observed preferences for reafferent versus stimulus motion, respectively, might arise simply from the inherent speed tuning of voxels in the two areas. We reasoned that such speed preferences could act as a natural filter for the saccade- versus stimulus-related motion if the two motion types exhibit speeds over very different ranges. We thus computed the distribution of mean self-induced and stimulus velocities within 10 s epochs (Fig. 4A). This analysis revealed that the velocities of stimulus motion (0.20–5.4°/s) were, on average, considerably lower than those of self-induced motion (0.05–26.28°/s). We performed these analyses for the main movies in the study, here termed “social” to reflect their rich social content, as well as for an additional set of movies with motion content but no animals present (“nonsocial”). The results showed clearly that speed tuning could not account for the observed response differences between V1 and MT. Voxels in area MT responded preferentially to higher speeds than those in V1 (Fig. 4B), consistent with previous electrophysiological and fMRI findings (Mikami et al., 1986; Chawla et al., 1999), but incompatible with the preference of V1 for self-generated motion and the preference of MT for stimulus motion (Figs. 2C, 4C). Together, these results indicate that mechanisms other than straightforward speed tuning must account for the observed distribution of responses to reafferent versus stimulus motion during natural vision.
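The epoch-based velocity distribution can be sketched as follows, assuming a speed time course sampled at a known rate, non-overlapping 10 s epochs, and 0.5°/s histogram bins. The function names, the `max_speed` histogram limit, and the toy time course are hypothetical.

```python
import numpy as np

def epoch_means(speed, fs_hz, epoch_s=10.0):
    """Mean speed within consecutive non-overlapping epochs."""
    n = int(round(fs_hz * epoch_s))
    s = np.asarray(speed, dtype=float)
    usable = (len(s) // n) * n
    return s[:usable].reshape(-1, n).mean(axis=1)

def epoch_proportions(means, bin_width=0.5, max_speed=30.0):
    """Proportion of epochs falling in each speed bin."""
    edges = np.arange(0.0, max_speed + bin_width, bin_width)
    counts, _ = np.histogram(means, bins=edges)
    return edges, counts / counts.sum()

# Toy time course (hypothetical): 5 min at 10 Hz, slow first half, faster second half.
speed = np.concatenate([np.full(1500, 1.2), np.full(1500, 4.7)])
means = epoch_means(speed, fs_hz=10.0)
edges, proportions = epoch_proportions(means)
```

Running the same two functions on the self-induced and stimulus motion models would give the two distributions being compared.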

Figure 4.

ROI speed tuning. A, The mean velocities for each 10-s-long epoch of the two motion models for two sets of movies. The proportion of epochs (y-axis) for each motion type is shown for 0.5°/s bins (x-axis). Solid vertical lines indicate the mean velocity across all bins. Dashed lines indicate the associated quartiles for each motion model. B, ROI motion velocity tuning for MT and V1, from Subject 2, based on the stimulus motion model. Stimulus motion velocities were grouped into 10 equally spaced bins (x-axis). The average percentage signal change across all voxels in the ROIs is plotted for each bin. Left, Data from the original 15 social movies presented to the subjects. Right, Same analysis for an additional set of three movies that contained no animals but had a larger range of motions. C, Quantitative comparison of the correlation of V1 and MT time courses during the nonsocial movies with both motion types. Error bars indicate SEM across voxels.

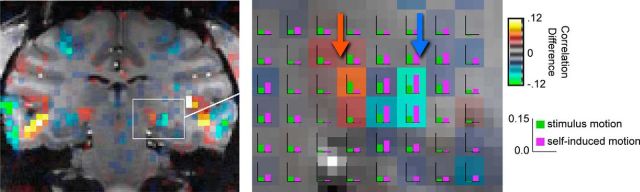

Finally, we investigated the results of the current analysis within the visual thalamus, in particular in the lateral geniculate nucleus (LGN) and the pulvinar. Both nuclei showed significant correlation with the two motion components. However, in the pulvinar, stimulus motion produced a stronger relative contribution than did reafferent motion in both subjects. In the LGN, the contribution of the two motion components differed between the two subjects. Whereas the two components led to similar LGN stimulation in Subject M2, the activity related to self-induced motion was stronger in Subject M1, revealing a double dissociation between the two thalamic nuclei that resembled the pattern in the cortex (Fig. 5).
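To make the notion of a relative contribution concrete, the sketch below computes first-order partial correlations of a voxel time course with each motion regressor while controlling for the other, and then takes their difference. It is an illustration only: the function names, the sign convention of the difference score (self-induced minus stimulus), and the toy data are assumptions, and the regressors are assumed to have already been convolved with a hemodynamic response and resampled to the fMRI sampling rate.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_corr(y, x, z):
    """Correlation between y and x after removing the linear effect of z."""
    r_yx, r_yz, r_xz = pearson(y, x), pearson(y, z), pearson(x, z)
    return (r_yx - r_yz * r_xz) / sqrt((1 - r_yz ** 2) * (1 - r_xz ** 2))


def motion_preference(voxel, self_motion, stim_motion):
    """Partial correlations with each motion model and their difference.

    A positive difference indicates a stronger relation to self-induced
    motion; a negative one indicates a stronger relation to stimulus
    motion (sign convention assumed here for illustration).
    """
    r_self = partial_corr(voxel, self_motion, stim_motion)
    r_stim = partial_corr(voxel, stim_motion, self_motion)
    return r_self, r_stim, r_self - r_stim


# Toy usage: a hypothetical voxel driven mostly by self-induced motion.
self_reg = [1, -1, 1, -1, 1, -1, 1, -1]
stim_reg = [1, 1, -1, -1, 1, 1, -1, -1]
other = [1, 1, 1, 1, -1, -1, -1, -1]  # variance unrelated to either model
voxel = [2 * s + 0.5 * m + o for s, m, o in zip(self_reg, stim_reg, other)]
r_self, r_stim, diff = motion_preference(voxel, self_reg, stim_reg)
```

Applied voxel by voxel, a difference score of this kind yields a map in which the sign indicates which motion model relates more strongly to the local signal.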

Figure 5.

Thalamic responses to stimulus and self-induced visual motion in Subject M1. Left, The coronal image is overlaid with difference scores of the partial correlation analysis, including the pulvinar and LGN in each hemisphere. White box represents the region depicted in greater detail on the right. Bar plots in each voxel represent the partial correlation values for the self-induced motion (purple) versus stimulus motion (green). The pulvinar (orange arrow) has the opposite motion preference of the LGN (cyan arrow).

Discussion

In this study, we found a strong segregation of cortical areas responding preferentially to reafferent versus stimulus motion during free viewing. Preferred responses for self-induced motion were strongest in the central visual field representation of the early retinotopic areas and some portions of the lateral temporal cortex, whereas preferred responses for stimulus motion were strongest throughout most of the STS. These two foci with opposite preferences for the two types of motion are notably similar to the two principal central visual field representations in the visual cortex (Fig. 3B) (Kolster et al., 2009, 2014; Orban et al., 2014), indicating that they may be specialized differently in their treatment of self-generated motion signals during natural vision.

It has long been known that moving visual stimuli elicit responses throughout the brain, with areas in the STS showing particular selectivity for simple (Bruce et al., 1981; Maunsell and Van Essen, 1983) or complex (Nelissen et al., 2006; Bartels et al., 2008; Jastorff et al., 2012) movement. Although motion sensitivity is traditionally associated with the dorsal processing stream, anatomical and functional studies indicate the existence of multiple parallel pathways within the ventral stream, including a motion-sensitive ventral pathway originating in the caudal motion-sensitive areas (Boussaoud et al., 1990; Kravitz et al., 2013; Fisher and Freiwald, 2015). Supporting the view that motion is a central feature of ventral stream processing, our previous fMRI investigation of free viewing of naturalistic videos identified motion as the primary driver of activity throughout the inferior temporal cortex, beyond the early retinotopic areas (Russ and Leopold, 2015). In the present study, we found that the self-induced motion derived from eye movements is approximately complementary in its spatial distribution, primarily influencing responses in central visual representations of the occipital cortex. In the next section, we address possible reasons for this difference in motion processing.

One possible interpretation of the current findings relates to the active compensation of self-induced motion signals in certain areas ascribed to extraretinal modulation, such as saccadic suppression, in which sensitivity to visual stimuli is diminished around the time of saccades. Evidence from several studies suggests that such compensation would be more prominent in the STS motion areas than elsewhere in early retinotopic areas or the ventral visual pathway. Such modulation, which likely originates in oculomotor structures, such as the superior colliculus, and is passed to the cortex via pathways through the thalamus, is thought to diminish the effects of self-induced motion during saccades and contribute to the perceptual stability during shifts of gaze (Sommer and Wurtz, 2002; Crapse and Sommer, 2008; Berman and Wurtz, 2011; Ibbotson and Krekelberg, 2011; Wurtz et al., 2011; Krock and Moore, 2014). Notably, neurons in several of the areas we found to be driven by stimulus motion exhibit presaccadic modulation, a hallmark of extraretinal input. For example, electrophysiological studies of areas MT and MST (Ibbotson et al., 2008; Bremmer et al., 2009; Inaba and Kawano, 2014) have demonstrated that neuronal activity can be suppressed just before saccade onset, suggesting sensitivity to internal signals related to eye movement generation. Similarly, the contribution of extraretinal oculomotor signals to these stimulus-driven areas has also been suggested by a 2-deoxyglucose study, where memory-guided saccades in complete darkness led to increased glucose utilization in MT, MST, and V4t (Bakola et al., 2007). By contrast, perisaccadic neural activity in early retinotopic areas is primarily postsaccadic and likely to be reafferent in nature (Wurtz, 1969; Moeller et al., 2007). While there is some evidence of extraretinal input to V1 (Leopold and Logothetis, 1998; Sylvester and Rees, 2006; McFarland et al., 2015), modulation is less widely reported, and has been shown to be weaker, than in the STS areas. Thus, one interpretation of the present results is that the STS regions incorporate extraretinal signals that make them less responsive to reafference, whereas the occipital region is driven more strongly by such input.

Our fMRI results in early visual cortex are also broadly consistent with neurophysiological studies investigating responses in V1, V2, and V3a to motion signals (Galletti et al., 1984, 1988, 1990). In those studies, which focused on stimulus- versus self-generated signals in the context of smooth pursuit eye movement, stronger responses for stimulus motion were uncommon in areas V1 and V2 (<15% of neurons) (Galletti et al., 1984, 1988), and were more frequently observed in area V3a (∼50% of neurons) (Galletti et al., 1990). We similarly found V3a to be more responsive to stimulus motion than self-induced motion in both animals, whereas this was not the case in V1 and V2 (Fig. 3A). These findings, together with a study showing a high level of perisaccadic receptive field remapping in V3a but not in V1 (Nakamura and Colby, 2002), further indicate an increasing extraretinal influence on retinal motion signals beyond primary visual cortex.

Our analysis placed most emphasis on the two foci showing maximal differences to the two motion components, located in the occipital cortex and motion-sensitive regions of the STS. It is worth mentioning that other structures, such as the LIP and FEF regions known to be involved in eye movements, showed responses to both self-induced and stimulus motion, albeit stronger responses to stimulus motion (Fig. 2B,D). The dominance of stimulus motion in these areas may be somewhat surprising, given that these regions are often associated with saccade generation and planning (Bruce et al., 1985; Schiller and Tehovnik, 2005; Ipata et al., 2006; Bisley and Goldberg, 2010). However, it is important to reiterate the fundamental distinction between self-induced motion that we studied here (i.e., reafferent visual stimulation of the retina) and signals associated with saccade generation arising from oculomotor structures, such as efference copy, which we did not study. Cortical areas involved in saccade generation also show sensory responses to stimulus motion (Shadlen and Newsome, 1996; Shadlen et al., 1996; Eskandar and Assad, 2002; Fanini and Assad, 2009), which may account for the strong motion responses we observed. It is also possible that the dominance of stimulus motion in areas such as LIP and FEF arises for reasons similar to those in the STS, as these areas have also been shown to exhibit significant saccadic suppression that has been attributed to corollary discharge signals (Bremmer et al., 2009; Joiner et al., 2013). Further investigation will be necessary to discern potential similarities and differences among these cortical regions during natural vision.

fMRI is well suited for questions involving large-scale spatial mapping, such as in the present study, as it allows whole-brain responses to be investigated. However, its reliance on hemodynamic signals limits its capacity to follow fast neural events; thus, functional mapping requires certain assumptions. It is therefore important to consider other interpretations of the present results as well. We have already addressed one possibility, namely, that the putative reafferent responses in posterior cortex are in fact driven by some feature of the video. This was of particular concern because the spatial pattern of contrast and luminance responses we observed in our previous study resembled the pattern seen here for self-induced motion (Russ and Leopold, 2015). However, we showed that this is unlikely because the eye velocity time course was nearly uncorrelated with the contrast and luminance models (Table 1).

Another possibility is that differential responsiveness to the two motion types arises not from extraretinal compensation, but from the basic receptive field sensitivity of neurons in the different areas. Our analysis of inherent speed tuning (Fig. 4) is inconsistent with the idea that this property could readily explain the observed differences between V1 and MT. However, it is also possible that the differences in receptive field size among areas might act as a type of selective filter, although with predictions that are less clear than those offered by speed tuning. In this scenario, the effective temporal frequency of contrast sweeping through a receptive field might differentially stimulate areas based on the receptive field size alone. The precise manner in which speed, receptive field size, and temporal frequency might determine the overall fMRI response is difficult to estimate. However, our results indicate that receptive field size alone cannot account for the differences we observe throughout the brain. For example, neurons in areas TEO and ventral V4 have receptive fields that are larger than those in central MT (Maguire and Baizer, 1984; Gattass et al., 1988; Boussaoud et al., 1991), but like foveal V1, they responded more to reafferent motion than to stimulus motion. Although we cannot exclude that the receptive field size has an influence on responses in these areas during natural vision, there is no straightforward explanation of the observed results based on receptive field size alone. Thus, at present, we tentatively favor the explanation that extraretinal signals serve to minimize the contribution of self-induced motion in the STS, with the acknowledgment that future studies are clearly needed.

In conclusion, by applying two fundamentally different modes of analysis to the same, extensive free-viewing datasets, we found that the visual motion caused by eye movements impacts the brain in a manner that is fundamentally different from visual motion that is physically present in a viewed stimulus. The two foci showing opposite preference for self-induced motion versus stimulus motion correspond to the two circumscribed central-vision retinotopic representations in the visual cortex, suggesting that they may be specialized for different aspects of motion processing. Whether these results arise from the distribution of extraretinal influences, the selective processing of motion velocity, or an entirely different mechanism, our findings draw attention to the importance of accounting for eye movements when interpreting visual responses during naturalistic viewing paradigms. Future studies that use both naturalistic and more standard visual paradigms will be important for attaining a deeper understanding of the mechanistic basis of the observed activity dissociation, as well as how this apparent division of labor in motion processing contributes to visual perception.

Footnotes

This work was supported by the Intramural Research Program of the National Institute of Mental Health Grants ZIA-MH002898 and ZIA-MH002838. Functional and anatomical MRI scanning was performed in the Neurophysiology Imaging Facility Core (National Institute of Mental Health, National Institute of Neurological Disorders and Stroke, National Eye Institute). We thank Dr. Daniel Glen for assistance with creating flat maps; George Dold and David Ide for design and machining related to data collection; and Dr. Frank Ye, Charles Zhu, David Yu, and Alex Clark for technical assistance.

The authors declare no competing financial interests.

References

- Bair W, O'Keefe LP. The influence of fixational eye movements on the response of neurons in area MT of the macaque. Vis Neurosci. 1998;15:779–786. doi: 10.1017/s0952523898154160.

- Bakola S, Gregoriou GG, Moschovakis AK, Raos V, Savaki HE. Saccade-related information in the superior temporal motion complex: quantitative functional mapping in the monkey. J Neurosci. 2007;27:2224–2229. doi: 10.1523/JNEUROSCI.4224-06.2007.

- Bartels A, Zeki S, Logothetis NK. Natural vision reveals regional specialization to local motion and to contrast-invariant, global flow in the human brain. Cereb Cortex. 2008;18:705–717. doi: 10.1093/cercor/bhm107.

- Berg DJ, Boehnke SE, Marino RA, Munoz DP, Itti L. Free viewing of dynamic stimuli by humans and monkeys. J Vis. 2009;9(19):1–15. doi: 10.1167/9.5.19.

- Berman RA, Wurtz RH. Signals conveyed in the pulvinar pathway from superior colliculus to cortical area MT. J Neurosci. 2011;31:373–384. doi: 10.1523/JNEUROSCI.4738-10.2011.

- Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annu Rev Neurosci. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823.

- Boussaoud D, Ungerleider LG, Desimone R. Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J Comp Neurol. 1990;296:462–495. doi: 10.1002/cne.902960311.

- Boussaoud D, Desimone R, Ungerleider LG. Visual topography of area TEO in the macaque. J Comp Neurol. 1991;306:554–575. doi: 10.1002/cne.903060403.

- Bremmer F, Kubischik M, Hoffmann KP, Krekelberg B. Neural dynamics of saccadic suppression. J Neurosci. 2009;29:12374–12383. doi: 10.1523/JNEUROSCI.2908-09.2009.

- Bruce CJ, Goldberg ME, Bushnell MC, Stanton GB. Primate frontal eye fields: II. Physiological and anatomical correlates of electrically evoked eye movements. J Neurophysiol. 1985;54:714–734. doi: 10.1152/jn.1985.54.3.714.

- Bruce C, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J Neurophysiol. 1981;46:369–384. doi: 10.1152/jn.1981.46.2.369.

- Chawla D, Buechel C, Edwards R, Howseman A, Josephs O, Ashburner J, Friston KJ. Speed-dependent responses in V5: A replication study. Neuroimage. 1999;9:508–515. doi: 10.1006/nimg.1999.0432.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Crapse TB, Sommer MA. Corollary discharge circuits in the primate brain. Curr Opin Neurobiol. 2008;18:552–557. doi: 10.1016/j.conb.2008.09.017.

- DiCarlo JJ, Maunsell JH. Form representation in monkey inferotemporal cortex is virtually unaltered by free viewing. Nat Neurosci. 2000;3:814–821. doi: 10.1038/77722.

- Einhäuser W, König P. Getting real-sensory processing of natural stimuli. Curr Opin Neurobiol. 2010;20:389–395. doi: 10.1016/j.conb.2010.03.010.

- Elias DO, Land BR, Mason AC, Hoy RR. Measuring and quantifying dynamic visual signals in jumping spiders. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 2006;192:785–797. doi: 10.1007/s00359-006-0116-7.

- Eskandar EN, Assad JA. Distinct nature of directional signals among parietal cortical areas during visual guidance. J Neurophysiol. 2002;88:1777–1790. doi: 10.1152/jn.2002.88.4.1777.

- Fanini A, Assad JA. Direction selectivity of neurons in the macaque lateral intraparietal area. J Neurophysiol. 2009;101:289–305. doi: 10.1152/jn.00400.2007.

- Fisher C, Freiwald WA. Contrasting specializations for facial motion within the macaque face-processing system. Curr Biol. 2015;25:261–266. doi: 10.1016/j.cub.2014.11.038.

- Galletti C, Squatrito S, Battaglini PP, Grazia Maioli M. “Real-motion” cells in the primary visual cortex of macaque monkeys. Brain Res. 1984;301:95–110. doi: 10.1016/0006-8993(84)90406-2.

- Galletti C, Battaglini PP, Aicardi G. “Real-motion” cells in visual area V2 of behaving macaque monkeys. Exp Brain Res. 1988;69:279–288. doi: 10.1007/BF00247573.

- Galletti C, Battaglini PP, Fattori P. “Real-motion” cells in area V3A of macaque visual cortex. Exp Brain Res. 1990;82:67–76. doi: 10.1016/S0079-6123(08)62591-1.

- Gattass R, Sousa AP, Gross CG. Visuotopic organization and extent of V3 and V4 of the macaque. J Neurosci. 1988;8:1831–1845. doi: 10.1523/JNEUROSCI.08-06-01831.1988.

- Hanson SJ, Hanson C, Halchenko Y, Matsuka T, Zaimi A. Bottom-up and top-down brain functional connectivity underlying comprehension of everyday visual action. Brain Struct Funct. 2007;212:231–244. doi: 10.1007/s00429-007-0160-2.

- Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject synchronization of cortical activity during natural vision. Science. 2004;303:1634–1640. doi: 10.1126/science.1089506.

- Hasson U, Malach R, Heeger DJ. Reliability of cortical activity during natural stimulation. Trends Cogn Sci. 2010;14:40–48. doi: 10.1016/j.tics.2009.10.011.

- Holst Von E, Mittelstaedt H. The principle of reafference: interactions between the central nervous system and the peripheral organs [Translated] Die Naturwissenschaften. 1950;37:464–476. doi: 10.1007/BF00622503.

- Huth AG, Nishimoto S, Vu AT, Gallant JL. A continuous semantic space describes the representation of thousands of object and action categories across the human brain. Neuron. 2012;76:1210–1224. doi: 10.1016/j.neuron.2012.10.014.

- Ibbotson M, Krekelberg B. Visual perception and saccadic eye movements. Curr Opin Neurobiol. 2011;21:553–558. doi: 10.1016/j.conb.2011.05.012.

- Ibbotson MR, Crowder NA, Cloherty SL, Price NS, Mustari MJ. Saccadic modulation of neural responses: possible roles in saccadic suppression, enhancement, and time compression. J Neurosci. 2008;28:10952–10960. doi: 10.1523/JNEUROSCI.3950-08.2008.

- Inaba N, Kawano K. Neurons in cortical area MST remap the memory trace of visual motion across saccadic eye movements. Proc Natl Acad Sci U S A. 2014;111:7825–7830. doi: 10.1073/pnas.1401370111.

- Ipata AE, Gee AL, Goldberg ME, Bisley JW. Activity in the lateral intraparietal area predicts the goal and latency of saccades in a free-viewing visual search task. J Neurosci. 2006;26:3656–3661. doi: 10.1523/JNEUROSCI.5074-05.2006.

- Jastorff J, Popivanov ID, Vogels R, Vanduffel W, Orban GA. Integration of shape and motion cues in biological motion processing in the monkey STS. Neuroimage. 2012;60:911–921. doi: 10.1016/j.neuroimage.2011.12.087.

- Joiner WM, Cavanaugh J, Wurtz RH. Compression and suppression of shifting receptive field activity in frontal eye field neurons. J Neurosci. 2013;33:18259–18269. doi: 10.1523/JNEUROSCI.2964-13.2013.

- Kolster H, Mandeville JB, Arsenault JT, Ekstrom LB, Wald LL, Vanduffel W. Visual field map clusters in macaque extrastriate visual cortex. J Neurosci. 2009;29:7031–7039. doi: 10.1523/JNEUROSCI.0518-09.2009.

- Kolster H, Janssens T, Orban GA, Vanduffel W. The retinotopic organization of macaque occipitotemporal cortex anterior to V4 and caudoventral to the middle temporal (MT) cluster. J Neurosci. 2014;34:10168–10191. doi: 10.1523/JNEUROSCI.3288-13.2014.

- Kravitz DJ, Saleem KS, Baker CI, Ungerleider LG, Mishkin M. The ventral visual pathway: an expanded neural framework for the processing of object quality. Trends Cogn Sci. 2013;17:26–49. doi: 10.1016/j.tics.2012.10.011.

- Krock RM, Moore T. The influence of gaze control on visual perception: eye movements and visual stability. Cold Spring Harb Symp Quant Biol. 2014;79:123–130. doi: 10.1101/sqb.2014.79.024836.

- Leite FP, Tsao D, Vanduffel W, Fize D, Sasaki Y, Wald LL, Dale AM, Kwong KK, Orban GA, Rosen BR, Tootell RB, Mandeville JB. Repeated fMRI using iron oxide contrast agent in awake, behaving macaques at 3 Tesla. Neuroimage. 2002;16:283–294. doi: 10.1006/nimg.2002.1110.

- Leopold DA, Logothetis NK. Microsaccades differentially modulate neural activity in the striate and extrastriate visual cortex. Exp Brain Res. 1998;123:341–345. doi: 10.1007/s002210050577.

- Maguire WM, Baizer JS. Visuotopic organization of the prelunate gyrus in rhesus monkey. J Neurosci. 1984;4:1690–1704. doi: 10.1523/JNEUROSCI.04-07-01690.1984.

- Marsman JB, Renken R, Haak KV, Cornelissen FW. Linking cortical visual processing to viewing behavior using fMRI. Front Syst Neurosci. 2013;7:109. doi: 10.3389/fnsys.2013.00109.

- Maunsell JH, Van Essen DC. Functional properties of neurons in middle temporal visual area of the macaque monkey: I. Selectivity for stimulus direction, speed, and orientation. J Neurophysiol. 1983;49:1127–1147. doi: 10.1152/jn.1983.49.5.1127.

- McFarland JM, Bondy AG, Saunders RC, Cumming BG, Butts DA. Saccadic modulation of stimulus processing in primary visual cortex. Nat Commun. 2015;6:8110. doi: 10.1038/ncomms9110.

- McMahon DB, Russ BE, Elnaiem HD, Kurnikova AI, Leopold DA. Single-unit activity during natural vision: diversity, consistency, and spatial sensitivity among AF face patch neurons. J Neurosci. 2015;35:5537–5548. doi: 10.1523/JNEUROSCI.3825-14.2015.

- Mikami A, Newsome WT, Wurtz RH. Motion selectivity in macaque visual-cortex: 1. Mechanisms of direction and speed selectivity in extrastriate area MT. J Neurophysiol. 1986;55:1308–1327. doi: 10.1152/jn.1986.55.6.1308.

- Moeller GU, Kayser C, König P. Saccade-related activity in areas 18 and 21a of cats freely viewing complex scenes. Neuroreport. 2007;18:401–404. doi: 10.1097/WNR.0b013e3280125686.

- Mosher CP, Zimmerman PE, Gothard KM. Videos of conspecifics elicit interactive looking patterns and facial expressions in monkeys. Behav Neurosci. 2011;125:639–652. doi: 10.1037/a0024264.

- Nakamura K, Colby CL. Updating of the visual representation in monkey striate and extrastriate cortex during saccades. Proc Natl Acad Sci U S A. 2002;99:4026–4031. doi: 10.1073/pnas.052379899.

- Nelissen K, Luppino G, Vanduffel W, Rizzolatti G, Orban GA. Observing others: multiple action representation in the frontal lobe. Science. 2005;310:332–336. doi: 10.1126/science.1115593.

- Nelissen K, Vanduffel W, Orban GA. Charting the lower superior temporal region, a new motion-sensitive region in monkey superior temporal sulcus. J Neurosci. 2006;26:5929–5947. doi: 10.1523/JNEUROSCI.0824-06.2006.

- Noda H, Adey WR. Retinal ganglion cells of the cat transfer information on saccadic eye movement and quick target motion. Brain Res. 1974;70:340–345. doi: 10.1016/0006-8993(74)90323-0.

- Orban GA, Zhu Q, Vanduffel W. The transition in the ventral stream from feature to real-world entity representations. Front Psychol. 2014;5:695. doi: 10.3389/fpsyg.2014.00695.

- Polosecki P, Moeller S, Schweers N, Romanski LM, Tsao DY, Freiwald WA. Faces in motion: selectivity of macaque and human face processing areas for dynamic stimuli. J Neurosci. 2013;33:11768–11773. doi: 10.1523/JNEUROSCI.5402-11.2013.

- Reveley C, Gruslys A, Ye FQ, Glen D, Samaha J, Russ BE, Saad Z, Seth A, Leopold DA, Saleem KS. Three-dimensional digital template atlas of the macaque brain. Cerebral Cortex. 2016 doi: 10.1093/cercor/bhw248. (in press)

- Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci. 2001;2:661–670. doi: 10.1038/35090060.

- Russ BE, Leopold DA. Functional MRI mapping of dynamic visual features during natural viewing in the macaque. Neuroimage. 2015;109:84–94. doi: 10.1016/j.neuroimage.2015.01.012.

- Saleem KS, Logothetis NK. A combined MRI and histology atlas of the rhesus monkey brain in stereotaxic coordinates. San Diego: Academic; 2012.

- Schiller PH, Tehovnik EJ. Neural mechanisms underlying target selection with saccadic eye movements. Prog Brain Res. 2005;149:157–171. doi: 10.1016/S0079-6123(05)49012-3.

- Shadlen MN, Newsome WT. Motion perception: seeing and deciding. Proc Natl Acad Sci U S A. 1996;93:628–633. doi: 10.1073/pnas.93.2.628.

- Shadlen MN, Britten KH, Newsome WT, Movshon JA. A computational analysis of the relationship between neuronal and behavioral responses to visual motion. J Neurosci. 1996;16:1486–1510. doi: 10.1523/JNEUROSCI.16-04-01486.1996.

- Shepherd SV, Steckenfinger SA, Hasson U, Ghazanfar AA. Human-monkey gaze correlations reveal convergent and divergent patterns of movie viewing. Curr Biol. 2010;20:649–656. doi: 10.1016/j.cub.2010.02.032.

- Sommer MA, Wurtz RH. A pathway in primate brain for internal monitoring of movements. Science. 2002;296:1480–1482. doi: 10.1126/science.1069590.

- Sylvester R, Rees G. Extraretinal saccadic signals in human LGN and early retinotopic cortex. Neuroimage. 2006;30:214–219. doi: 10.1016/j.neuroimage.2005.09.014.

- Thiele A, Henning P, Kubischik M, Hoffmann KP. Neural mechanisms of saccadic suppression. Science. 2002;295:2460–2462. doi: 10.1126/science.1068788.

- Thier P, Erickson RG. Responses of visual-tracking neurons from cortical area MST-I to visual, eye and head motion. Eur J Neurosci. 1992;4:539–553. doi: 10.1111/j.1460-9568.1992.tb00904.x.

- Toyama K, Komatsu Y, Shibuki K. Integration of retinal and motor signals of eye movements in striate cortex cells of the alert cat. J Neurophysiol. 1984;51:649–665. doi: 10.1152/jn.1984.51.4.649.

- Van Essen DC, Drury HA, Dickson J, Harwell J, Hanlon D, Anderson CH. An integrated software suite for surface-based analyses of cerebral cortex. J Am Med Inform Assoc. 2001;8:443–459. doi: 10.1136/jamia.2001.0080443.

- Vinje WE, Gallant JL. Sparse coding and decorrelation in primary visual cortex during natural vision. Science. 2000;287:1273–1276. doi: 10.1126/science.287.5456.1273.

- Westheimer G. Mechanism of saccadic eye movements. Arch Ophthalmol. 1954;52:710–724. doi: 10.1001/archopht.1954.00920050716006.

- Wurtz RH. Visual cortex neurons: response to stimuli during rapid eye movements. Science. 1968;162:1148–1150. doi: 10.1126/science.162.3858.1148.

- Wurtz RH. Comparison of effects of eye movements and stimulus movements on striate cortex neurons of the monkey. J Neurophysiol. 1969;32:987–994. doi: 10.1152/jn.1969.32.6.987.

- Wurtz RH, McAlonan K, Cavanaugh J, Berman RA. Thalamic pathways for active vision. Trends Cogn Sci. 2011;15:177–184. doi: 10.1016/j.tics.2011.02.004.

- Xiang QS, Ye FQ. Correction for geometric distortion and N/2 ghosting in EPI by phase labeling for additional coordinate encoding (PLACE). Magn Reson Med. 2007;57:731–741. doi: 10.1002/mrm.21187.

- Zuber BL, Stark L, Cook G. Microsaccades and the velocity-amplitude relationship for saccadic eye movements. Science. 1965;150:1459–1460. doi: 10.1126/science.150.3702.1459.