Abstract

People look at what they are interested in, and their emotional expressions tend to indicate how they feel about the objects at which they look. The combination of gaze direction and emotional expression can therefore convey important information about people’s evaluations of the objects in their environment, and can even influence how a third party subsequently evaluates those objects, a phenomenon known as the emotional gaze effect. The present study extended research into this effect by investigating whether emotional gaze cues affect evaluations of the most important aspect of our social environment, other people, and whether the presence of multiple gaze cues enhances the effect. Across four experiments using a factorial within-subjects design, analysed with both null hypothesis significance testing and Bayesian statistics, we replicated previous work showing an emotional gaze effect for objects, but found strong evidence that emotional gaze cues do not affect evaluations of other people, and that multiple, simultaneously presented gaze cues do not enhance the emotional gaze effect for evaluations of either objects or people. Overall, our results suggest that emotional gaze cues have a relatively weak influence on affective evaluations, especially of those aspects of our environment that automatically elicit affectively valenced reactions, including other humans.

Introduction

Humans are experts at interpreting the non-verbal signals produced by others [1, 2]. Among the many ways in which we signal with our bodies, the direction of our gaze, which indicates the object, person or location that currently interests us, appears to be a particularly salient cue [3, 4]. Recent research suggests that paying attention to others’ gaze cues can do more than simply alert us to objects in our environment that we have previously overlooked; it can also affect how much we like those objects [3, 5–8].

The importance of gaze cues and the ability to interpret them is reflected in a wide range of evidence. The ability to detect and follow another’s gaze is present from infancy and contributes to the development of joint attention, in which the infant (or adult) following the other’s gaze is aware that both are focussing on the same object in the environment [9–11]. Both humans and non-human primates appear to have specialised brain areas for the processing of gaze information, including the superior temporal sulcus [10, 12–14]. Recent studies indicate that adult patients with cortical blindness (e.g., due to destruction of the primary visual cortex) can detect both gaze direction and emotional expressions through subcortical structures including the amygdala, suggesting a prominent evolutionary role for processing emotion and gaze cues [15–17].

In combination, this evidence suggests that the ability to follow and decode others’ gaze cues is a crucial aspect of social life [18]. Despite this, there is little direct evidence of how gaze cues affect our evaluations of the most important aspect of our social environment: other people. The present study aimed to address this gap in the literature by examining how gaze cues and the emotional expressions that sometimes accompany them influence our initial impressions of others.

Gaze cues orient attention and influence affective evaluations of objects

Early work on gaze cues examined their effects on the orientation of attention. Friesen and Kingstone [19] found that participants were quicker to detect a target (a letter) when it was presented on the side of the screen at which a nonpredictive (valid 50% of the time), emotionally neutral, centrally presented cue face had gazed. This finding has proved to be highly robust, and there is now a large body of evidence that people orient attention automatically in response to gaze cues, both overtly (e.g., measured by saccades [20–24]) and covertly (e.g., measured using reaction time data [3, 5, 25–27]). Participants appear to be unable to suppress their responses to gaze cues even when they are instructed that the cues are counterpredictive (i.e., when the target will appear in the non-cued location more often than in the cued location) [22, 28]. Galfano et al. [29] demonstrated this inability to suppress gaze-driven orienting particularly clearly, observing a gaze cueing effect even after participants were told with 100% certainty where the target would appear before the presentation of a gaze or arrow cue.

Interestingly, while one might expect gaze direction to be a particularly salient cue given its biological significance, evidence from the gaze cueing literature indicates that symbolic cues such as arrows orient attention in a very similar fashion, including when they are counterpredictive [22, 23, 29–31] (though cf. [28]). Results using neuroimaging techniques are also equivocal: while some studies report evidence that gaze and arrow cues are processed by distinct networks [32], others have found substantial overlap [33]. Birmingham, Bischof and Kingstone [34] suggest that one way to distinguish between the effects of gaze and arrow cues is to examine which form of spatial cue participants attend to when both are embedded in a complex visual scene. The authors had participants freely view street scenes that included both people and arrows, and found a strong tendency for participants to orient to people’s eye regions rather than arrows.

Another extension of the gaze cueing paradigm which suggests that people might process gaze cues differently than symbolic cues comes from Bayliss et al. [3], in which participants had to classify laterally presented common household objects (e.g., a mug, a pair of pliers). A photograph of an emotionally neutral face served as a central, nonpredictive cue. Bayliss et al. [3] observed the standard gaze cueing effect; participants were quicker to classify those objects that had been gazed at by the cue face. In addition, they asked participants to indicate how much they liked the objects, and found that those objects that were consistently looked at by the cue face received higher ratings than uncued objects. Arrow cues, on the other hand, produced a cueing effect on reaction times, but had no effect on object ratings. This “liking effect” has since been replicated in a number of similar experiments [6–8]. Together, these findings suggest that we might seek out and orient ourselves in response to the gaze of others in part because gaze cues help us “evaluate the potential value of objects in the world” (p. 1065) [3].

The role of emotional expressions

The superior temporal sulcus, which is thought to be involved in processing both gaze direction [12, 35, 36] and emotional expression [37, 38], is highly interconnected with the amygdala, which is also involved in processing both emotions and gaze direction [17, 35, 39, 40]. Behavioural evidence for a possible link between processing of gaze cues and emotional expressions comes from studies using Garner’s [41] dimensional filtering task. A number of studies have shown that in certain circumstances (e.g., depending on how difficult each dimension is to discriminate), processing of gaze direction and emotional expression interfere with each other [40, 42–44].

Despite the foregoing, studies investigating the interaction between gaze cues and emotional expressions in the attention cueing paradigm have generated mixed evidence. In a comprehensive series of experiments, Hietanen and Leppanen [27] tested whether cue faces expressing different emotions (cue faces were photographs of neutral, happy, angry, or fearful faces) would lead to differences in attentional orienting. They found that attentional cueing effects were similar regardless of emotional expression. A number of other studies have also failed to find any modulation of reaction times by the interaction of gaze cue and emotional expression (see, e.g., Bayliss et al. [5], who found no difference in cueing comparing happy and disgusted cue faces; Galfano et al. [45], who used fearful, disgusted and neutral cues; and Holmes, Mogg, Garcia, & Bradley [46] and Rigato et al. [47], who used neutral, fearful and happy cues). The failure to observe any significant influence of emotion on gaze cueing effects is particularly puzzling in relation to fearful expressions, because both theory and some empirical findings suggest that people should be especially responsive to stimuli that signal a potential threat in the environment (the behavioural urgency hypothesis [48–50]). Strengthening the evidence against the application of the behavioural urgency hypothesis to the gaze cueing paradigm, both Galfano et al. [45] and Holmes et al. [46] reported no significant enhancement of cueing by fearful gaze even among participants scoring higher in trait anxiety.

However, other studies have found enhanced cueing effects for fearful gaze cues (compared to happy or neutral cues) among subsets of participants high in trait fearfulness and anxiety [51–53]; still others have shown that participants are more responsive to fearful gaze cues in certain experimental contexts. For example, Kuhn et al. [49] showed that when fearful cue faces appear only rarely (in this experiment, on two trials out of every 97), they do enhance attentional orienting compared with (equally rare) happy cue faces. The nature of the stimuli and the evaluative context of the task also appear to be important. There is evidence that people orient more quickly in response to fearful cues when target stimuli include threatening items, like snarling dogs [54, 55]. Pecchinenda, Pes, Ferlazzo and Zoccolotti [56] reported stronger cueing effects of fearful and disgusted (compared to neutral and happy) cue faces when participants were asked to rate target words as positive or negative; however, when the task was simply to determine whether the letters of the target words were upper- or lower-case, the cue face’s emotion had no impact on gaze cueing effects.

Further evidence that experimental context affects how participants process emotional gaze cues comes from Bayliss et al. [5]. In this extension of Bayliss et al. [3], participants were asked to rate kitchen and garage items that had been consistently cued or gazed away from by emotionally expressive cue faces. The authors did not observe any difference in cueing effects (measured by reaction time) for happy versus disgusted cue faces; there was, however, an interaction when it came to object ratings, with objects cued with a happy expression receiving the highest ratings, objects cued with a disgusted expression receiving the lowest ratings, and uncued objects being rated in between regardless of the cue face’s emotion. This interaction indicates that participants integrated gaze cues with emotional expressions when they were evaluating target objects. Bayliss et al. [5] reported a larger liking effect than Bayliss et al. [3], suggesting that emotionally expressive gaze cues enhanced the liking effect compared with neutral gaze cues. This makes intuitive sense; for example, one would expect a happy gaze towards an object to be a stronger signal of liking than a neutral gaze.

Together, the findings outlined above suggest that the human response to gaze cues is sophisticated and complex, and that careful experimental design is necessary to uncover the subtleties of the process. If a cue face’s emotional expressions are meaningless in an experimental paradigm, one should not necessarily expect them to have any effect; likewise, if an experiment is devoid of any social context, arrow cues appear to orient attention just as strongly as gaze cues [34, 54]. While researchers have begun to elucidate how contextual details such as the nature of stimuli and the meaningfulness of emotion influence orientation of attention in response to gaze cues, there is still much room for exploration of how similar contextual details might affect the way in which gaze cues influence evaluations.

The effect of gaze cues on evaluations of other people

As noted above, a number of studies have replicated Bayliss and colleagues’ findings that gaze cues can influence participants’ affective evaluations of objects. However, the majority of this work has employed emotionally neutral target stimuli: for example, common household objects [3, 5, 57], paintings specifically chosen for their neutrality [58], alphanumeric characters [7], and unknown brands of bottled water [8]. Moreover, with the exception of Bayliss et al. [5], each of these studies used emotionally neutral cue faces. In the present study, we sought to extend this work by examining the influence of gaze cues on evaluations of other people; that is, we were interested in testing whether seeing a cue face gaze towards a target face with a positive expression would result in that target face being considered more likeable than a target face gazed at with a negative expression.

There is reason to think that faces might be less susceptible to a liking effect than the neutral stimuli discussed above. Unlike mugs and bottled water, faces evoke strong, affectively valenced evaluations automatically. Willis and Todorov [59] have shown that stable inferences about traits such as attractiveness, likeability, trustworthiness and competence are made after as little as 100 milliseconds of exposure to an unfamiliar face. In these circumstances, any effect of gaze cues might be undetectable unless it is quite large. However, there is evidence to suggest that evaluations of affectively valenced items and other people can be influenced by gaze cues. Soussignan et al. [60] found that gaze cues from emotionally expressive cue faces (joyful, neutral, and disgusted) had a small effect on ratings of familiar food items. Like faces, food automatically triggers valenced evaluations; the “pleasantness” of food products is automatically processed and is linked to autonomic processes such as mouth-watering and lip-sucking [61, 62]. Jones et al. [63] reported that evaluations of other people are influenced by emotional gaze cues in the context of mate selection. In that study, two male target faces were presented in each trial; a female cue face gazed towards one of them with a positive expression, and ignored the other. Participants were then asked to indicate which of the two target faces they found more attractive. Female participants rated a man who had been smiled at by a female cue face as more attractive than a man who had been ignored; male participants showed the opposite effect. Jones et al. [63] suggested that female participants were exhibiting “mate choice copying effects,” while males were responding to within-sex competition (p. 899).

There are also theoretical reasons to expect that emotional gaze cues affect evaluations. Accurately evaluating other people is very important; aligning ourselves with others who are uncooperative is costly, as is the failure to identify likely collaborators [64, 65]. It would therefore make sense for people to use all potential sources of information–including emotional gaze cues–when evaluating other people so as to make the best possible decisions. Evidence from the gossip literature shows that people do indeed use information about others to update their evaluations and guide behaviour [66–68].

For these reasons, we predicted that emotional gaze cues would affect participants’ evaluations of novel others in the present study. That is, we expected to observe an interaction between the cue face’s emotional expression and gaze direction, such that target faces looked at with a positive expression would be liked more than target faces looked at with a negative expression, and target faces that were looked away from would receive intermediate ratings regardless of the cue face’s emotional expression (Hypothesis 1). Our method was largely similar to that of Bayliss et al. [5], with the exception of a multiple cue face condition (discussed below) and the expressions of our cue faces. Because the stimuli we used were not threatening or disgusting, fearful and disgusted cue faces were not appropriate. Most relevant to the evaluative context of our experiment were expressions of liking and disliking. For the positive expression, cue face models were instructed to pretend that they were looking at someone they liked and were happy to see; for the negative expression, they were instructed to pretend that they were looking at someone they disliked and mistrusted. Unlike in Jones et al. [63], our target faces were diverse in gender and age, and participants rated how much they liked each target face individually rather than comparing the faces’ attractiveness.

Given our experimental context (i.e., no threatening or aversive stimuli, no fearful or disgusted cue faces), we did not expect to see an effect of emotion on reaction times; however, reaction times were recorded and tested for a standard gaze cueing effect (i.e., faster reaction times at cued locations) as a manipulation check.

The effect of multiple cue faces

We also integrated recent developments in the gaze cueing literature by including a multiple cue face condition. As Capozzi et al. noted [57], a single person’s positive attitude towards an object conveys information of somewhat limited value. The object might indeed be generally desirable, or it might be desirable only to the individual observing it for idiosyncratic reasons. When a number of people react in the same way, however, their consensus is more likely to provide valuable information. To test this, Capozzi et al. [57] modified the gaze cueing paradigm of Bayliss et al. [3] such that some objects were consistently cued by the same emotionally neutral face (the single cue condition), while other objects were cued by a different cue face in each of the task’s seven blocks (the multiple cue condition). Capozzi et al. [57] found that gaze cues exerted a stronger effect on evaluations in the multiple cue condition.

In the present study, we extended the work of Capozzi et al. [57] in two ways. First, we examined the effect of gaze cues using emotionally expressive rather than neutral cue faces. Second, to reduce the memory burden on participants and enable them to distinguish more clearly between the single and multiple cue conditions, our multiple cue face condition presented the multiple cue faces simultaneously rather than individually in separate blocks. In line with Capozzi et al. [57], we expected the emotional gaze effect to be stronger when there were multiple cue faces (Hypothesis 2).

Experiment 1

Method

This research was approved by the Psychological Sciences Human Ethics Advisory Group (HEAG) at the University of Melbourne (Ethics ID: 1543939). All participants gave written consent to participate in the experiment after reading a 'Plain Language Statement' outlining the nature of the experiment in a manner approved by the HEAG. Participants were tested for normal or corrected-to-normal vision and received course credit for participating.

Participants were first year undergraduate students in the School of Psychological Sciences at the University of Melbourne, some of whom may not have turned 18. These students were considered competent to give informed consent given that the experiments were simple with no known risks. This procedure was approved by the HEAG. Participants for all subsequent experiments were recruited in the same way.

Participants

Thirty-six participants (32 females) with a mean age of 18.8 years (SD = 1.12, range = 17–22 years) were recruited for this experiment.

Apparatus and stimuli

Stimulus presentation and data collection took place in a lab containing 12 PCs. Participants were seated approximately 60 cm from the screen, and the monitor refresh rate was set at 70 Hz.

Photographs of three males aged 21 to 24 (9.8° x 10.2° of visual angle) were used as cue faces. There were five versions of each cue face: looking straight ahead with a neutral expression; looking left and right with a positive expression; and looking left and right with a negative expression (Fig 1).

Fig 1. Cue face emotional expressions.

Cue face exhibiting a positive (left) and negative (right) expression. All individuals whose images are published in this paper gave written informed consent (as outlined in PLOS consent form) to the publication of their image.

Where cue faces directed their gaze to one side, the whole head was turned (i.e., both head orientation and eye gaze indicated the direction of attention). This was to ensure that there was no ambiguity about where the cue face’s attention was directed [63]. Only male cue faces were used, for consistency. While there is evidence that females respond more strongly to gaze cues than males, no studies that we are aware of indicate that the gender of the cue face modulates the gaze cueing effect [69–71].

Target faces (14.9° x 10.6°) were taken from a database of facial photographs compiled by Bainbridge, Isola, and Oliva [72]. Sixty-eight male and 68 female faces that had received average ratings (from 4 to 6 on a 9-point Likert-type scale) of attractiveness and trustworthiness in Bainbridge et al.’s [72] study were selected as target faces. Attractiveness and trustworthiness are particularly highly correlated with judgments of likeability [73, 74]; we therefore selected faces with average ratings on these traits to avoid floor and ceiling effects on likeability and to maximise the chance of observing a gaze cueing effect. All target faces had a neutral expression and were gazing at the camera. Ages of target faces ranged from 20 to 60 years. To facilitate categorisation of the target faces, a letter (either “x” or “c” in 14-point lowercase font) was superimposed between the eyes using the image manipulation program GIMP. We chose this method of categorisation because categorising by an inherent characteristic such as sex, age, or race might prime in-group/out-group biases that would introduce additional noise into the data, making any effect of gaze cueing more difficult to detect [75, 76].
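Stimulus sizes are reported in degrees of visual angle. For readers reproducing the display, the sketch below converts them to approximate on-screen sizes at the stated viewing distance of about 60 cm using size = 2·d·tan(θ/2). It assumes each reported value is the full extent of the stimulus along one dimension; the formula is exact for a stimulus centred on the line of sight and only approximate for the laterally displaced target faces. The function names are illustrative, not taken from the authors' materials.

```python
import math

VIEWING_DISTANCE_CM = 60.0  # "approximately 60 cm" in the Method

def visual_angle_to_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """On-screen extent (cm) subtending angle_deg at distance_cm (stimulus centred on the line of sight)."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

def cm_to_visual_angle(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Inverse conversion: visual angle (degrees) subtended by size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Reported stimulus dimensions, in degrees of visual angle.
STIMULI_DEG = {"cue face": (9.8, 10.2), "target face": (14.9, 10.6)}

# Approximate physical sizes in cm, rounded to one decimal place.
STIMULI_CM = {
    name: tuple(round(visual_angle_to_cm(angle), 1) for angle in dims)
    for name, dims in STIMULI_DEG.items()
}
```

Under these assumptions the cue faces come out at roughly 10.3 x 10.7 cm and the target faces at roughly 15.7 x 11.1 cm.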

Design

There were three within-subjects factors, each with two levels. The gaze cue factor manipulated the cue face’s gaze direction: in the cued condition, the cue face looked toward the target face, while in the uncued condition it looked away from the target face, toward the empty side of the screen. The emotion factor manipulated the cue face’s emotional expression (positive or negative). The number of cues factor manipulated whether one cue face (single cue condition) or all three cue faces (multiple cue condition) were presented. The primary dependent variable was participants’ affective evaluations of the target faces on a nine-point scale. Reaction times were also measured to ensure that participants were completing the task as instructed.
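Because every factor has two levels, each main effect and interaction in this 2 x 2 x 2 design is a single-degree-of-freedom contrast over the eight cells. The sketch below is an illustration rather than the authors' analysis code, and the factor and level labels are our own. It enumerates the cells and builds effect-coded (+1/-1) weights, with interaction weights formed as products of main-effect weights; all seven contrasts sum to zero and are mutually orthogonal.

```python
from itertools import combinations, product
from math import prod

# Illustrative factor and level labels for the 2 x 2 x 2 within-subjects design.
FACTORS = {
    "gaze_cue": ("cued", "uncued"),
    "emotion": ("positive", "negative"),
    "number": ("single", "multiple"),
}
NAMES = list(FACTORS)
CELLS = list(product(*FACTORS.values()))  # the 8 cells of the design

# All effects: 3 main effects, 3 two-way interactions, 1 three-way interaction.
EFFECTS = [combo for k in range(1, len(NAMES) + 1) for combo in combinations(NAMES, k)]

def contrast_weights(effect):
    """Effect-coded weights over CELLS: +1 for a factor's first level, -1 for its second,
    multiplied across the factors named in `effect` (products give interaction weights)."""
    weights = []
    for cell in CELLS:
        signs = [1 if cell[NAMES.index(f)] == FACTORS[f][0] else -1 for f in effect]
        weights.append(prod(signs))
    return weights
```

For example, `contrast_weights(("emotion", "gaze_cue"))` gives the weights for the emotion x gaze cue interaction tested by Hypothesis 1.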

Procedure

Participants were instructed to ignore the nonpredictive cue face and indicate (by pressing the “x” or “c” key on the keyboard) as quickly as possible whether the target face had an “x” or “c” on it. Framing the task as a measure of reaction time was intended to obscure the study’s hypotheses from participants [3, 5].

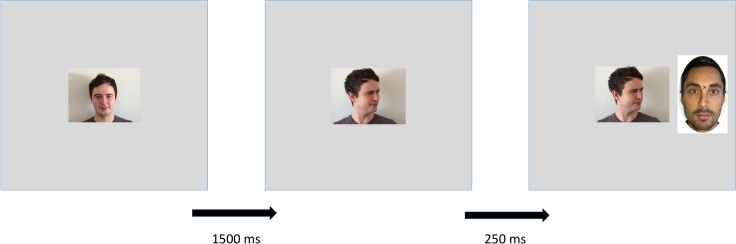

For each trial of the categorisation task, the cue face first appeared in the centre of the screen gazing straight ahead with a neutral expression for 1500 ms. It then turned to the left or right with either a positive or negative emotional expression for 250 ms before the target face appeared to one side of the screen. The cue and target faces then remained on screen until the participant’s response (Fig 2). After response, participants were given feedback as to the correctness of their answer, and asked to press any key to begin the next trial. Participants were informed of the number of trials remaining in each block.

Fig 2. Categorisation trial.

Example of a categorisation trial in which a single cue face gazes at a target face with a negative expression.
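At the 70 Hz refresh rate given above, one frame lasts 1000/70 ≈ 14.29 ms, so on-screen durations are realised as whole numbers of frames. The sketch below is only arithmetic: 1500 ms corresponds to exactly 105 frames, whereas 250 ms corresponds to 17.5 frames, so on such a display it could in practice be shown for 17 frames (about 243 ms) or 18 frames (about 257 ms). The Method does not state how this was handled, and the helper name `frame_options` is hypothetical.

```python
import math
from fractions import Fraction

REFRESH_HZ = 70  # refresh rate reported in the Method

def frame_options(duration_ms: int, refresh_hz: int = REFRESH_HZ):
    """Exact frame count for a nominal duration, plus the nearest whole-frame
    durations (frames, achieved ms) that the display can actually produce."""
    exact = Fraction(duration_ms * refresh_hz, 1000)  # exact arithmetic, no float rounding
    counts = sorted({math.floor(exact), math.ceil(exact)})
    return exact, [(n, float(Fraction(n * 1000, refresh_hz))) for n in counts]
```

Using `Fraction` avoids ambiguity about whether 17.5 frames rounds up or down under floating-point arithmetic.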

After receiving instructions, participants completed a practice block of four trials, which were not included in the analysis. They then completed two blocks of 64 categorisation trials, in each of which all 64 target faces not used in the practice block were displayed once in randomised order. Each target face was displayed under the same cueing, emotion, and number of cues condition on each of the three occasions it appeared (in the two categorisation blocks and the rating block) to ensure robust encoding of target faces and cueing conditions [5]. The same cue face was used for every single cue face trial throughout the task, and selection of this “main” cue face was counterbalanced across participants.
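The allocation described above can be sketched as follows. This is a hypothetical reconstruction, because the Method does not say how targets were assigned to conditions. The sketch assumes a random, balanced assignment per participant (8 of the 64 targets in each of the eight cells). It keeps each target's condition fixed across the two categorisation blocks and the rating block, reshuffles presentation order within each block, and counterbalances the "main" single cue face by rotating through three placeholder labels.

```python
import random
from itertools import product

# (gaze cue, emotion, number of cues) for each of the 8 cells.
CONDITIONS = list(product(("cued", "uncued"), ("positive", "negative"), ("single", "multiple")))
CUE_FACES = ("A", "B", "C")  # hypothetical labels for the three cue-face models

def build_session(target_ids, participant_index, seed=None):
    """Return (main cue face, [block rows]) where each row is (block, target, gaze, emotion, number)."""
    rng = random.Random(seed)
    if len(target_ids) % len(CONDITIONS):
        raise ValueError("targets must divide evenly across the 8 conditions")
    per_cell = len(target_ids) // len(CONDITIONS)

    # Fixed mapping: a target keeps the same condition in every block.
    shuffled = list(target_ids)
    rng.shuffle(shuffled)
    assignment = {t: CONDITIONS[i // per_cell] for i, t in enumerate(shuffled)}

    # Counterbalance the main single cue face across participants.
    main_cue_face = CUE_FACES[participant_index % len(CUE_FACES)]

    blocks = []
    for block in ("categorise_1", "categorise_2", "rating"):
        order = list(target_ids)
        rng.shuffle(order)  # fresh random order in each block
        blocks.append([(block, t, *assignment[t]) for t in order])
    return main_cue_face, blocks
```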

The rating block followed the two categorisation blocks. In this block, after categorising each target face, participants were presented with a column of numbers from 9 (at the top of the screen) to 1 (at the bottom of the screen), with the message “How much did you like that person?” at the top of the screen. The prompt “Like very much” was just above the 9, and the prompt “Didn’t like at all” was just below the 1. Reaction times were not collected in the rating block. The entire experiment took approximately 30 minutes to complete.

Results

As hypotheses were clearly directional and based on previous research, and there was no theory to suggest that effects in the unpredicted direction might be observed, one-tailed tests were used [77–79]. Two-tailed tests were used for all effects not pertaining to the hypotheses. Although the F distribution is asymmetrical, this does not prevent the use of a one-tailed test; it simply requires adjusting the p value to reflect the probability of correctly predicting the direction of an effect [79–81].
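Because every effect in this design has one numerator degree of freedom, F(1, df) = t(df)², and the one-tailed p value is half the two-tailed p when the observed effect lies in the predicted direction (and 1 - p/2 otherwise). The sketch below shows this conversion without external dependencies. To avoid requiring SciPy, it evaluates the two-tailed Student's t probability with the classical closed-form series for integer degrees of freedom. Direction must still be checked against the cell means, since F itself carries no sign. The function names are our own.

```python
import math

def t_two_tailed_p(t: float, df: int) -> float:
    """Two-tailed p value of Student's t for integer df, via the exact finite series."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    theta = math.atan(abs(t) / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    c2 = c * c
    if df == 1:
        a = 2 * theta / math.pi  # Cauchy case
    elif df % 2 == 0:
        term = total = 1.0
        for k in range(1, df // 2):  # terms up to cos^(df - 2)
            term *= c2 * (2 * k - 1) / (2 * k)
            total += term
        a = s * total
    else:
        term = total = 1.0
        for k in range(1, (df - 1) // 2):  # terms up to cos^(df - 3)
            term *= c2 * (2 * k) / (2 * k + 1)
            total += term
        a = 2 / math.pi * (theta + s * c * total)
    return 1.0 - a  # a = P(|T| < |t|)

def one_tailed_p_from_f(f_value: float, df_error: int, in_predicted_direction: bool = True) -> float:
    """One-tailed p for an F(1, df_error) test, using F = t**2."""
    p_two = t_two_tailed_p(math.sqrt(f_value), df_error)
    return p_two / 2 if in_predicted_direction else 1 - p_two / 2
```

For instance, `one_tailed_p_from_f(1.97, 33)` gives a value close to the one-tailed .085 reported for the gaze cue effect in Table 3.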

Raw data for this experiment can be found in supporting information file S1 Experiment 1 Dataset.

Reaction times

Reaction times were analysed using a within-subjects ANOVA. There was evidence of moderate positive skew in the data (maximum ratio of skewness to standard error = 5.1). However, ANOVA is generally robust to skew when means come from distributions with similar shapes [82, 83]. As this was the case here, no transformation was undertaken. This mirrors the approach taken in previous studies in the gaze cueing literature [3, 5, 19, 27]. Average reaction times were calculated using only data from trials in which the correct classification decision was made. Participants were generally accurate (error rate was 5.9%), and there was no effect of the within-subjects factors on error rates.
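The skew criterion above is a ratio of a skewness statistic to its standard error. The Method does not name the software or estimator, so the sketch below assumes the adjusted Fisher-Pearson coefficient G1 and its usual standard error, √(6n(n - 1)/((n - 2)(n + 1)(n + 3))), the convention used by, for example, SPSS. It also assumes, as an illustration only, that the ratio is computed per condition over participants' mean RTs, with the largest value being the one reported.

```python
import math
from statistics import fmean

def skewness_se(n: int) -> float:
    """Standard error of the adjusted skewness coefficient G1 for a sample of size n."""
    return math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))

def skewness_ratio(values) -> float:
    """Adjusted Fisher-Pearson skewness G1 divided by its standard error."""
    x = list(values)
    n = len(x)
    if n < 3:
        raise ValueError("need at least three observations")
    m = fmean(x)
    m2 = sum((v - m) ** 2 for v in x) / n  # second central moment
    m3 = sum((v - m) ** 3 for v in x) / n  # third central moment
    g1 = m3 / m2 ** 1.5  # population (biased) skewness
    big_g1 = g1 * math.sqrt(n * (n - 1)) / (n - 2)  # small-sample adjustment
    return big_g1 / skewness_se(n)
```

With 36 participants the standard error is about 0.39, so a ratio of 5.1 corresponds to a G1 of roughly 2.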

Results of a within-subjects ANOVA with reaction time as the dependent measure are shown in Table 1. As expected, there was a main effect of gaze cue, but no evidence of a main effect of emotion or an emotion x gaze cue interaction. Cued target faces (M = 650 ms, SE = 14) were classified more quickly than uncued target faces (M = 695 ms, SE = 14) regardless of the cue face’s emotional expression. There was also a main effect of the number of cues, with slower reaction times in the multiple cue face condition (M = 677 ms, SE = 14) than in the single cue condition (M = 667 ms, SE = 13); however, because this effect did not interact with the gaze cue factor, it simply indicated a general tendency for participants to respond more slowly when multiple cue faces were present, regardless of whether the target was cued.
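The gaze cueing effect described above is the difference between mean RTs for uncued and cued targets, computed from correct trials only. The sketch below assumes trial-level records with hypothetical field names (`participant`, `gaze_cue`, `rt_ms`, `correct`). It pools trials across the other factors, which matches averaging cell means only when cells contain equal numbers of correct trials.

```python
from collections import defaultdict
from statistics import fmean

def condition_means(trials):
    """Mean RT of correct trials for each (participant, gaze_cue) pair."""
    buckets = defaultdict(list)
    for trial in trials:
        if trial["correct"]:  # error trials are excluded, as in the Results
            buckets[(trial["participant"], trial["gaze_cue"])].append(trial["rt_ms"])
    return {key: fmean(rts) for key, rts in buckets.items()}

def cueing_effects(trials):
    """Per-participant gaze cueing effect: uncued mean RT minus cued mean RT (ms)."""
    means = condition_means(trials)
    participants = sorted({p for p, _ in means})
    return {p: means[(p, "uncued")] - means[(p, "cued")] for p in participants}
```

A positive value indicates faster responses to cued targets, the pattern reflected in the main effect of gaze cue in Table 1.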

Table 1. Results of within-subjects ANOVA on reaction times.

| Effect | F(1, 35) | p | ηp² |
|---|---|---|---|
| Gaze cue | 73.25 | < .001#*** | .68 |
| Emotion | 0.02 | .88 | < .01 |
| Number of cues (“Number”) | 7.82 | .008** | .18 |
| Emotion x Gaze cue | 0.67 | .42 | .02 |
| Emotion x Number | 0.05 | .82 | < .01 |
| Gaze cue x Number | 0.08 | .78 | < .01 |
| Gaze cue x Emotion x Number | 0.57 | .46 | .02 |

# = one-tailed test.

** = significant at alpha = .01.

*** = significant at alpha = .001.

Evaluations

Across all cueing conditions, faces received ratings very close to the mid-point of the scale (M = 5.12, SD = 0.80) and data were approximately normal. A within-subjects ANOVA on ratings showed a significant main effect of emotion, with target faces appearing alongside positive cue faces receiving higher ratings than target faces alongside negative cue faces, M = 5.20 (SE = 0.11) versus M = 5.05 (SE = 0.11) (Table 2). There was no main effect of gaze cue or the number of cue faces. The hypothesised emotion x gaze cue interaction was not observed, nor was the emotion x gaze cue x number of cues interaction.

Table 2. Results of within-subjects ANOVA on target face ratings.

| Effect | F(1, 35) | p | ηp² |
|---|---|---|---|
| Emotion | 5.53 | .01* | .14 |
| Gaze cue | 1.0 | .32 | .03 |
| Number of cues (“Number”) | 0.71 | .41 | .02 |
| Gaze cue x Number | 2.08 | .16 | .06 |
| Emotion x Number | 1.60 | .22 | .04 |
| Emotion x Gaze cue (H1) | 0.59 | .45# | .02 |
| Emotion x Gaze cue x Number (H2) | 0.07 | .80# | < .01 |

# = one-tailed test.

* = significant at alpha = .05. “H1” and “H2” indicate the effects that are the subjects of Hypotheses 1 and 2, respectively.

Discussion

Neither of our hypotheses was supported. While emotion had a main effect on ratings, as has previously been observed [5], it did not interact with the cue face’s gaze direction in the expected manner, nor did the number of cue faces enhance the emotion x gaze cue interaction.

The fact that target faces generally received ratings very close to the mid-point of the scale confirmed that our set of target faces was suitable for the task and that floor and/or ceiling effects were unlikely to be the reason for the failure to observe the hypothesised effects. Likewise, the reasonably low error rate and the strong effect of gaze cues on reaction times indicated that participants were attending to the task and orienting in response to the gaze cues in line with previous research.

In response to these results, a direct replication of Bayliss et al. [5] was undertaken. We reasoned that a successful replication would provide evidence that the null results in Experiment 1 were due to the nature of the target stimuli rather than a more general issue with the replicability of the gaze cueing effect reported by Bayliss et al. [5].

Experiment 2

Method

Participants

Thirty-six participants (26 females) with a mean age of 19.6 years (SD = 1.07, range = 17–23 years) were recruited.

Apparatus, stimuli, design and procedure

The method for Experiment 2 was the same as that for Experiment 1 with minor differences. First, pictures of objects rather than faces were the target stimuli. Following Bayliss et al. [5], thirty-four objects commonly found in a household garage and 34 objects commonly found in the kitchen were used as target stimuli. Pictures of the objects were sourced from the internet (Fig 3). Participants classified the objects as kitchen or garage items by pressing the “k” or “g” key on the keyboard. Because it was not necessary for the categorisation task and because this experiment was intended to be a direct replication of Bayliss et al. [5], letters were not superimposed on the objects as was done with the target faces in Experiment 1.

Fig 3. Object stimuli.

Examples of a kitchen item (left) and a garage item (right) used as target stimuli in Experiment 2.

Results

Data from two participants whose average reaction times were more than three standard deviations slower than the mean were excluded. Excluding these data did not change the statistical significance of any of the results reported below.
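The exclusion rule above can be sketched as follows. The text does not specify whether the mean and standard deviation were computed over participants' mean RTs or whether the sample (n - 1) or population SD was used. The sketch assumes participant-level mean RTs and the sample SD, and the function name is illustrative.

```python
from statistics import fmean, stdev

def slow_outliers(mean_rt_by_participant: dict, k: float = 3.0) -> list:
    """IDs of participants whose mean RT exceeds the sample mean by more than k SDs."""
    values = list(mean_rt_by_participant.values())
    cutoff = fmean(values) + k * stdev(values)  # sample SD (n - 1); an assumption
    return sorted(p for p, rt in mean_rt_by_participant.items() if rt > cutoff)
```

Only the slow tail is checked, matching the text's description of participants who were "slower than the mean".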

The approach to data analysis in this experiment and the two that followed was the same as that in Experiment 1. Hypotheses remained the same for all four experiments (though in Experiments 2 and 3 objects were the target stimuli rather than faces). All effects relating to hypotheses were tested with one-tailed tests, while effects not pertaining to the specific hypotheses were tested with two-tailed tests. Skew in reaction time data was similar in all four experiments; transformations were not undertaken for the reasons provided above. Finally, error rates in Experiments 2 to 4 were low (from 6.7% to 7.7%) and, as in Experiment 1, unrelated to the independent variables.

Raw data for this experiment can be found in supporting information file S2 Experiment 2 Dataset.

Reaction times

Although objects looked at by the cue face were classified more quickly (M = 699 ms, SE = 18) than those the cue face looked away from (M = 711 ms, SE = 19), the main effect of gaze cue was not significant (one-tailed p = .085; see Table 3).

Table 3. Results of within-subjects ANOVA for reaction times.

| Effect | F(1, 33) | p | ηp2 |
|---|---|---|---|
| Gaze cue | 1.97 | .085# | .06 |
| Emotion | 0.52 | .48 | .02 |
| Number of cues (“Number”) | 0.38 | .54 | .01 |
| Emotion x Gaze cue | 3.24 | .08 | .09 |
| Emotion x Number | 0.45 | .51 | .01 |
| Gaze cue x Number | 0.09 | .76 | < .01 |
| Emotion x Gaze cue x Number | 0.77 | .39 | .02 |

# = one-tailed test
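The ηp2 values in the ANOVA tables can be checked against the reported F ratios, because for any effect ηp2 = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). A minimal sketch (the function name is ours, not from any analysis package):

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom.

    eta_p^2 = SS_effect / (SS_effect + SS_error)
            = F * df_effect / (F * df_effect + df_error)
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Gaze cue main effect in Table 3: F(1, 33) = 1.97
print(round(partial_eta_squared(1.97, 1, 33), 2))  # 0.06
```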

Evaluations

There was a significant main effect of emotion; objects alongside positive cue faces were rated higher (M = 5.29, SE = 0.19) than objects alongside negative cue faces (M = 4.90, SE = 0.18). This was qualified by the predicted two-way interaction between emotion and gaze cue. However, there was no evidence of a three-way interaction between emotion, gaze, and number of cue faces (Table 4).

Table 4. Results of Within-Subjects ANOVA on Object Ratings.

| Effect | F(1, 33) | p | ηp2 |
|---|---|---|---|
| Emotion | 5.08 | .03* | .13 |
| Gaze cue | 0.03 | .87 | < .01 |
| Number of cues (“Number”) | 0.43 | .52 | .01 |
| Gaze cue x Number | 0.04 | .85 | < .01 |
| Emotion x Number | 0.07 | .79 | < .01 |
| Emotion x Gaze cue (H1) | 3.44 | .04#* | .09 |
| Emotion x Gaze cue x Number (H2) | 0.01 | .94# | < .01 |

# = one-tailed test.

* = significant at alpha = .05.

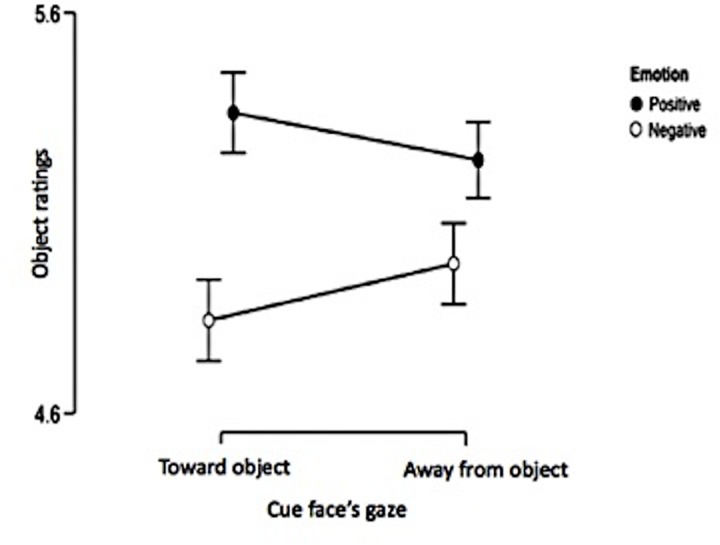

Emotion x gaze cue interaction

Inspection of sample means showed that the emotion x gaze cue interaction was in the expected direction (Fig 4). As expected, ratings following positive and negative cue faces differed significantly for cued objects (t(33) = 2.71, p = .005, one-tailed, Cohen’s d = 0.47) but not for uncued objects (t(33) = 1.43, p = .16, Cohen’s d = 0.25).
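Each of these simple-effects comparisons is a paired t test on per-participant difference scores (rating after a positive cue face minus rating after a negative cue face). The sketch below assumes the reported Cohen’s d is the paired-samples variant d_z (mean difference divided by the standard deviation of the differences), which satisfies t = d_z·√n; the source does not state which variant of d was used, and the example data are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_and_dz(differences):
    """Paired-samples t statistic, its df, and Cohen's d_z from difference scores.

    differences: one value per participant, e.g. the rating of cued objects
    after a positive cue face minus the rating after a negative cue face.
    d_z = mean / SD of the differences, and t = d_z * sqrt(n).
    """
    n = len(differences)
    if n < 2:
        raise ValueError("need at least two participants")
    d_z = mean(differences) / stdev(differences)
    t = d_z * sqrt(n)
    return t, n - 1, d_z


# Hypothetical difference scores for five participants.
t, df, d_z = paired_t_and_dz([1, 2, 3, 4, 5])
print(f"t({df}) = {t:.2f}, d_z = {d_z:.2f}")  # t(4) = 4.24, d_z = 1.90
```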

Fig 4. Emotion x gaze cue interaction.

Points represent marginal means, bars represent standard errors.

Discussion

The results replicated those of Bayliss et al. [5] with respect to evaluations; participants’ evaluations of the objects were in line with cue faces’ emotionally expressive gaze cues. Interestingly (and unlike Bayliss et al. [5]), this effect of gaze cues on evaluations was seen despite the lack of any significant effect of gaze cues on reaction times. However, counter to Hypothesis 2, there was no evidence that the evaluation effect was strengthened in the multiple cue condition. The successful replication of Bayliss et al.’s [5] finding suggested that the failure to observe an effect of gaze cues on evaluations in Experiment 1 might have been due to the nature of the stimuli. This may have been because stimuli were faces rather than objects. However, it may also have been because target stimuli had letters superimposed on them. Participants in Experiment 1 may have selectively attended to the letters (and not the faces they were superimposed upon) because only the letters were relevant to the categorisation task [84, 85]. Limited processing of target faces might have resulted in the faces being rated more or less at random, or meant that additional information, such as gaze cues, was not integrated when participants encoded the target faces [86]. In order to investigate this possibility, a further experiment was run in which letters were superimposed on objects.

As the effect size of the emotion x gaze cue interaction in Experiment 2 was smaller than that reported by Bayliss et al. [5] (ηp2 = .09 compared with .19), the sample size was increased to 48 participants in Experiments 3 and 4; this was a substantially larger sample size than the 26 recruited by Bayliss et al. [5] or the 28 recruited by Jones et al. [63].
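The source does not report a formal power analysis. Purely to illustrate why a smaller effect calls for a larger sample, the sketch below converts ηp2 for a one-degree-of-freedom within-subjects effect into d_z (via F = ηp2·(n − 1)/(1 − ηp2), t = √F, d_z = t/√n) and approximates the power of a one-tailed test with a normal approximation to the noncentral t distribution. Both steps are our assumptions; an exact calculation would use the noncentral t distribution (for example G*Power or `scipy.stats.nct`), and the normal approximation is slightly optimistic at small n.

```python
from math import sqrt
from statistics import NormalDist


def dz_from_partial_eta_squared(eta_p2, n):
    """Convert eta_p^2 of a 1-df effect in a fully within-subjects design to d_z.

    Assumes df_effect = 1 and df_error = n - 1, so that
    F = eta_p2 * (n - 1) / (1 - eta_p2), t = sqrt(F) and d_z = t / sqrt(n).
    """
    f_value = eta_p2 * (n - 1) / (1.0 - eta_p2)
    return sqrt(f_value) / sqrt(n)


def approx_power_one_tailed(d_z, n, alpha=0.05):
    """Normal approximation to the power of a one-tailed paired t test."""
    z_crit = NormalDist().inv_cdf(1.0 - alpha)
    return NormalDist().cdf(d_z * sqrt(n) - z_crit)


# Experiment 2: eta_p^2 = .09 with n = 34 participants after exclusions.
d_z = dz_from_partial_eta_squared(0.09, 34)
for n in (26, 34, 48):
    print(n, round(approx_power_one_tailed(d_z, n), 2))
```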

Experiment 3

Method

Participants

Forty-eight participants (37 females) with a mean age of 20.0 years (SD = 5.46, range = 17–45 years) were recruited.

Apparatus, stimuli, design and procedure

The method for Experiment 3 was the same as that for Experiment 2 with one change: objects had letters superimposed on them using the image manipulation program GIMP. As in Experiment 1, the letters “x” and “c” in size 14 lowercase font were used (Fig 5). Half of the kitchen objects were marked with an “x” and the other half with a “c”; the same approach was taken with garage objects.

Fig 5. Examples of target objects used in Experiment 3.

Results

Reaction times

Participants were significantly quicker to classify cued objects (M = 637 ms, SE = 17) than uncued objects (M = 678 ms, SE = 17). No other main effects or interactions were significant (see Table 5).

Table 5. Results of within-subjects ANOVA on reaction times.

| Effect | F(1, 47) | p | ηp2 |
|---|---|---|---|
| Gaze cue | 44.65 | < .001#*** | .49 |
| Emotion | 0.12 | .73 | < .01 |
| Number of cues (“Number”) | 0.14 | .71 | < .01 |
| Emotion x Gaze cue | 1.30 | .26 | .03 |
| Emotion x Number | 0.23 | .63 | < .01 |
| Gaze cue x Number | 2.87 | .10 | .06 |
| Emotion x Gaze cue x Number | 0.76 | .39 | .02 |

# = one-tailed test.

*** = significant at alpha = .001.

Evaluations

There was a main effect of emotional expression, with positive cue faces eliciting higher ratings (M = 5.36, SE = 0.11) than negative cue faces (M = 5.18, SE = 0.13), but no other significant main effects or interactions (see Table 6).

Table 6. Results of Within-Subjects ANOVA on Object Ratings.

| Effect | F(1, 47) | p | ηp2 |
|---|---|---|---|
| Emotion | 5.54 | .02* | .11 |
| Gaze cue | 0.42 | .52 | < .01 |
| Number of cues (“Number”) | 2.23 | .14 | .05 |
| Gaze cue x Number | 0.46 | .50 | .01 |
| Emotion x Number | < 0.01 | .97 | < .01 |
| Emotion x Gaze cue (H1) | 0.15 | .70# | < .01 |
| Emotion x Gaze cue x Number (H2) | 0.54 | .22# | .01 |

# = one-tailed test.

* = significant at alpha = .05.

To determine whether the superimposed letters influenced the results, Experiment (2 vs. 3) was included as a between-subjects factor in a mixed ANOVA, following Nieuwenhuis et al. [87]. The emotion x gaze cue interaction did not differ significantly across experiments (F(1, 80) = 2.37, p = .13, ηp2 = .03), nor did the emotion x gaze cue x number of cues interaction (F(1, 80) = 0.31, p = .58, ηp2 < .01).
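For a within-subjects effect with one degree of freedom, the Experiment x effect interaction in a mixed ANOVA is equivalent to a pooled-variance independent-samples t test on each participant’s contrast score, with F = t² and df = n1 + n2 − 2 (here 34 + 48 − 2 = 80). A minimal sketch for the emotion x gaze cue interaction, assuming ratings have first been averaged over the number-of-cues factor; the cell labels, function names and example values are hypothetical.

```python
from statistics import mean, variance


def interaction_contrast(cells):
    """Per-participant emotion x gaze cue contrast from four cell means.

    cells maps (emotion, gaze) -> mean rating, with emotion in {"pos", "neg"}
    and gaze in {"cued", "uncued"}. Positive values mean the emotion effect
    is larger for cued than for uncued targets.
    """
    cued_effect = cells[("pos", "cued")] - cells[("neg", "cued")]
    uncued_effect = cells[("pos", "uncued")] - cells[("neg", "uncued")]
    return cued_effect - uncued_effect


def between_groups_f(contrasts_a, contrasts_b):
    """F(1, n_a + n_b - 2) comparing mean contrasts of two independent groups.

    Equals the group x within-effect interaction of a mixed ANOVA when the
    within-subjects effect has one degree of freedom (F = t squared).
    """
    n_a, n_b = len(contrasts_a), len(contrasts_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(contrasts_a)
                  + (n_b - 1) * variance(contrasts_b)) / df
    se = (pooled_var * (1 / n_a + 1 / n_b)) ** 0.5
    t = (mean(contrasts_a) - mean(contrasts_b)) / se
    return t ** 2, df


one_participant = {("pos", "cued"): 5.6, ("neg", "cued"): 4.8,
                   ("pos", "uncued"): 5.1, ("neg", "uncued"): 4.9}
print(round(interaction_contrast(one_participant), 2))  # 0.6
f_value, df = between_groups_f([1, 2, 3], [2, 3, 4])
print(f"F(1, {df}) = {f_value:.2f}")  # F(1, 4) = 1.50
```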

Raw data for this experiment can be found in supporting information file S3 Experiment 3 Dataset.

Discussion

The primary aim of this experiment was to determine whether the letters superimposed on target stimuli might have interfered with the way in which participants processed target stimuli, and thereby nullified the effect of cue faces’ gaze cues. Although the emotion x gaze cue interaction was significant in Experiment 2 and non-significant in Experiment 3, the difference between these two interaction effects was itself not statistically significant [87, 88]. As such, the impact of the superimposed letters on the results of Experiment 1 remains ambiguous. There was also no evidence that the emotion x gaze cue x number of cues interaction was affected by the superimposed letters; however, this was of less interest because that interaction had not been significant in any of the three experiments conducted so far. Despite the lack of clear evidence about the effect of the superimposed letters, we adopted a conservative approach and repeated Experiment 1 with the potentially problematic letters removed from the target faces.

Experiment 4

Method

Participants

Forty-eight participants (38 females) with a mean age of 20.3 years (SD = 5.72, range = 18–47 years) were recruited.

Apparatus, stimuli, design and procedure

The method for Experiment 4 was the same as that for Experiment 1 with one change: target faces did not have letters superimposed on them. Participants classified target faces by sex using the “m” and “f” keys. Sex was chosen as the characteristic for classification because there is less potential for ambiguity about sex than there is about age or race.

Results

One participant’s data were excluded due to mean reaction times more than three standard deviations slower than the mean. Exclusion of these data did not change the results of any significance tests.

Reaction times

Once again, participants were significantly quicker to react to cued faces (M = 590 ms, SE = 14) than uncued faces (M = 607 ms, SE = 14). There was also a main effect of the number of gaze cues, with participants faster to classify faces in the multiple cue face condition (M = 591 ms, SE = 14 compared with M = 606 ms, SE = 14 in the single cue face condition). No other main effects or interactions were significant (see Table 7).

Table 7. Results of within-subjects ANOVA on reaction times.

| Effect | F(1, 46) | p | ηp2 |
|---|---|---|---|
| Gaze cue | 12.87 | < .001#*** | .22 |
| Emotion | 0.05 | .82 | < .01 |
| Number of cues (“Number”) | 11.23 | .002** | .20 |
| Emotion x Gaze cue | 0.09 | .77 | < .01 |
| Emotion x Number | 0.07 | .79 | < .01 |
| Gaze cue x Number | 0.24 | .63 | < .01 |
| Emotion x Gaze cue x Number | 0.19 | .67 | < .01 |

# = one-tailed test.

** = significant at alpha = .01.

*** = significant at alpha = .001.

Raw data for this experiment can be found in supporting information file S4 Experiment 4 Dataset.

Evaluations

There was a main effect of emotional expression, with positive cue faces eliciting higher ratings (M = 4.93, SE = 0.17) than negative cue faces (M = 4.73, SE = 0.17), but no other significant main effects or interactions (see Table 8). The emotion x gaze cue interaction was in the expected direction but did not reach statistical significance.

Table 8. Results of Within-Subjects ANOVA on Ratings of Target Faces.

| Effect | F(1, 46) | p | ηp2 |
|---|---|---|---|
| Emotion | 14.00 | < .001*** | .23 |
| Gaze cue | 2.29 | .14 | .05 |
| Number of cues (“Number”) | 0.17 | .68 | < .01 |
| Gaze cue x Number | 0.39 | .54 | < .01 |
| Emotion x Number | 0.29 | .59 | < .01 |
| Emotion x Gaze cue (H1) | 1.53 | .11# | .03 |
| Emotion x Gaze cue x Number (H2) | < 0.01 | .94# | < .01 |

# = one-tailed test.

*** = significant at alpha = .001.

A between-subjects comparison across Experiments 1 and 4 was undertaken to determine whether removing the superimposed letters made a difference to the emotion x gaze cue interaction effect when faces were the target stimuli. As with objects, there was no significant difference across experiments, F(1, 82) = 2.07, p = .15, ηp2 = .03. On this basis, we combined the Experiment 1 and 4 data sets. In the combined data set, there was still no evidence for either an emotion x gaze cue interaction (F(1, 83) = 0.138, p = .71, ηp2 = .002) or an emotion x gaze cue x number interaction (F(1, 83) = 0.008, p = .93, ηp2 < .001).

Discussion

There was no evidence to suggest that facial evaluations were affected by the gaze cues and emotional expressions of the cue faces. Although the emotion x gaze cue interaction was in the expected direction, it was not significantly different from the interaction observed in Experiment 1; as such, there was once again no clear evidence that the superimposed letters interfered with the effect of gaze cues on evaluations. There was also no evidence that the emotion x gaze cue interaction was stronger in the multiple cue face condition than in the single cue face condition.

Bayesian Analysis of Null Results

A limitation of null hypothesis significance testing (NHST) is that it does not permit inference about the strength of evidence in favour of the null hypothesis. Bayesian inference does not suffer from this limitation [89, 90]. Given the large number of null findings in the experiments reported here (see Table 9), additional analysis using Bayesian statistics was undertaken in order to quantify the strength of evidence for the null hypothesis. The Bayesian null hypothesis examined here is one of no effect in either direction since we wished to evaluate the level of evidence that there is no effect at all, not just no effect in a particular direction. All null findings were analysed with Bayesian repeated measures ANOVAs using the software platform JASP [91]. A conservative approach was taken by adopting JASP’s uninformative default prior in all analyses [90, 92].

Table 9. Summary of Results Across All Four Experiments.

| Experiment | Hypothesis 1 | Hypothesis 2 |
|---|---|---|
| 1: Faces with letters | N | N |
| 2: Objects | Y | N |
| 3: Objects with letters | N | N |
| 4: Faces | N | N |

Y = Hypothesis supported by significant result at alpha = .05 (one-tailed); N = Hypothesis not supported. Hypothesis 1: There will be a gaze x emotion interaction. Hypothesis 2: There will be a gaze x emotion x number interaction.

Bayes factors for inclusion (BFInc) were computed to compare the evidence that a hypothesised effect was non-zero with the evidence that the effect was zero (i.e., the null hypothesis). A BFInc therefore represents the odds ratio in support of the alternative hypothesis relative to the null hypothesis [93]; conversely, a large 1/BFInc represents the odds ratio in support of the null hypothesis relative to the alternative hypothesis. As shown in Table 10, for the combined data sets of Experiments 1 and 4, the odds ratio for the null hypothesis relative to the alternative hypothesis was 34.5:1, which represents “very strong” support for the null hypothesis [91]. This suggests that, at least in the present paradigm, the emotional gaze effect does not occur for face stimuli. In other words, the likeability of a face is not influenced by the gaze direction and emotional expression of a third party.
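An inclusion Bayes factor compares the set of models that contain an effect with the set that does not: it is the ratio of posterior inclusion odds to prior inclusion odds. A minimal sketch of the “across all models” version, assuming the prior and posterior model probabilities are already available (for example from a model comparison table); the model set and probabilities below are hypothetical and simplified, and JASP also offers an “across matched models” variant that this sketch does not implement.

```python
def inclusion_bayes_factor(models, effect):
    """BF_inclusion for `effect`, computed across all models.

    models: list of (terms, prior_prob, posterior_prob) tuples, where terms is
    the set of effect names in that model.
    BF_inc = posterior inclusion odds / prior inclusion odds.
    """
    prior_in = sum(prior for terms, prior, _ in models if effect in terms)
    prior_out = sum(prior for terms, prior, _ in models if effect not in terms)
    post_in = sum(post for terms, _, post in models if effect in terms)
    post_out = sum(post for terms, _, post in models if effect not in terms)
    return (post_in / post_out) / (prior_in / prior_out)


# Hypothetical four-model comparison with equal prior model probabilities.
models = [
    ({"emotion"}, 0.25, 0.40),
    ({"gaze"}, 0.25, 0.30),
    ({"emotion", "gaze"}, 0.25, 0.20),
    ({"emotion", "gaze", "emotion:gaze"}, 0.25, 0.10),
]
bf_inc = inclusion_bayes_factor(models, "emotion:gaze")
print(round(bf_inc, 3), round(1 / bf_inc, 1))  # 0.333 3.0
```

Here the interaction model’s prior inclusion odds are 1:3 and its posterior inclusion odds are 1:9, so the data shift the odds by a factor of 1/3, i.e. 3:1 in favour of excluding the interaction.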

Table 10. Bayesian analysis of null results in relation to hypothesized gaze x emotion interaction.

* = experiment in which targets had letters superimposed.

Values of 1/BFInc indicate support for the null hypothesis.

In relation to Hypothesis 2 (that the gaze x emotion interaction will be larger when there are more onlookers), 1/BFInc values indicate “extreme” [91] evidence in favour of the null hypothesis that the number of gaze cues had no effect on the emotional gaze effect, regardless of whether the target stimuli were faces or objects (Table 11). Across all four experiments, the minimum odds ratio was 323:1 in favour of the null hypothesis.

Table 11. Bayesian analysis of null results in relation to the hypothesized gaze x emotion x number interaction.

| Experiment | BFInc | 1/BFInc |
|---|---|---|
| 1* | 0.0031 | 323 |
| 2 | 9.9e-4 | 1,014 |
| 3* | 4.3e-4 | 2,352 |
| 4 | 0.0012 | 833 |
| 1* & 4 | 1.6e-4 | 6,398 |

* = experiment in which targets had letters superimposed.

Values of 1/BFInc indicate support for the null hypothesis.

General Discussion

Evaluations

The impact of emotionally expressive gaze cues on the affective evaluations of target stimuli was investigated over four experiments. Although Bayliss et al.’s [5] finding that the affective evaluations of common household objects could be modulated by emotionally expressive gaze cues was replicated in Experiment 2, this effect was not seen when faces were the target stimuli. A follow-up Bayesian analysis of the results from Experiments 1 and 4 found an odds ratio of 34.5:1 in favour of the null hypothesis, indicating that in our experiments the emotional gaze effect did not occur for faces. Similarly, our Bayesian analysis showed that increasing the number of onlookers did not increase the emotional gaze effect for evaluations of either face or object stimuli. Analysis of reaction times suggested that these null results were not due to a failure of the gaze cues to manipulate participants’ attention. Strong gaze cueing effects were observed in three of the four experiments, and the one experiment in which gaze cueing effects were marginal (Experiment 2) was the one in which the evaluation effect was significant.

The pattern of results seen both here and in other work suggests that gaze cues, whether or not they are accompanied by emotional expressions, are most likely to affect evaluations of mundane, everyday objects that do not automatically elicit valenced reactions. Small- to medium-sized effects of gaze cueing have generally been observed when target stimuli are affectively neutral objects (e.g., this study’s Experiment 2; see also [3, 5, 8]; but cf. this study’s Experiment 3 for no effect and Treinen et al. [58] for a larger effect). When stimuli are affectively valenced, however, the effect of gaze cues appears to be weaker. For example, the effect of gaze cues on evaluations of food in Soussignan et al. [60] was smaller than any of the effect sizes reported with neutral stimuli, and the present study failed to demonstrate evidence of a gaze cueing effect on faces. The exception to this trend is Jones et al. [63], in which participants’ evaluations of the attractiveness of target faces were influenced by emotionally expressive gaze cues, with effect sizes similar to those seen with neutral objects.

There are important procedural differences between Jones et al. [63] and the broader gaze cueing literature (the present study included). Firstly, Jones et al. [63] investigated the effects of gaze cues in the context of mate selection. A number of authors have suggested that social transmission of mate preferences is a sophisticated process that may differ from transmission of preferences more generally [94, 95]; as such, the results of Jones et al. [63] may not generalise beyond that context.

Secondly, participants in Jones et al. [63] were asked to rate how much more attractive they found one target face compared with another, rather than indicate how attractive they found each target face individually. This may have prompted participants to think more carefully about their ratings and integrate additional sources of information–such as gaze cues–into the decision-making process. Kahneman [96] has suggested that “System 2” thinking, which involves slow, effortful, and deliberate thought processes, is more likely to be engaged when it is necessary to compare alternatives and make deliberate choices between options. Evaluation of individual faces in a context like the present study’s, on the other hand, has been characterised as a “System 1” process, involving rapid, effortless judgments that occur without conscious deliberation [59, 97].

Viewing the results described above through this theoretical lens can reconcile the apparently contradictory findings. When stimuli are neutral objects, gaze cues do not compete with an initial impression and are thus more likely to influence how those objects are evaluated. However, when stimuli are affectively valenced, like food or faces, people may rely largely on their initial impressions, so that the effect of third parties’ emotional gaze cues is limited. Human faces may evoke even stronger automatic evaluations than food, given humans’ highly social nature [98]. The exception may occur when people are prompted, by a specific context or by a need to choose between options, to update those impressions with additional information.

One further possible reason for our failure to observe an effect of emotional gaze cues on face evaluations is the emotional expressions we used. Bayliss et al. [5] compared the effect of happy and disgusted expressions; in this study, our cue face models were asked to express liking and disliking. While this was arguably a more ecologically valid approach given that there was nothing inherently disgusting about our target stimuli (we acknowledge, of course, that one can feel disgust for another person without that person actually having a disgusting appearance), it is possible that our cue faces’ emotional expressions were somewhat ambiguous or otherwise weaker than those used by Bayliss et al. [5]. However, the replication of Bayliss et al.’s [5] central finding in Experiment 2 (albeit with a smaller effect size) suggests that our stimuli were unlikely to have been particularly problematic.

Our findings in relation to the effect of multiple cues contrast with those reported by Capozzi et al. [57]. Again, there were important procedural differences between the present study and Capozzi et al. [57] that may have contributed to the divergent results. The first is that Capozzi et al.’s [57] multiple cue condition involved seven different cues, compared with three in this study. The second is the way in which the multiple cues were presented. In Capozzi et al. [57], different cue faces were presented individually over seven different trials. Here, all three cue faces were presented at once. This simultaneous presentation of multiple cue faces may have led participants to infer that the cue faces were not independent sources of information, which may have reduced their net influence. A third difference was that in Capozzi et al. [57] all the cue faces had relatively neutral expressions, with the result that the emotional expression of a single cue face may have appeared ambiguous to participants. Multiple cue faces would therefore have been needed to provide an unambiguous signal. Conversely, in our study the expression of each cue face was deliberately chosen to be unambiguous, which may have obviated the benefit of having multiple cue faces.

Because gender differences were not a focus of this study, we did not vary the gender of cue faces or recruit a balanced sample of participants. We note that the use of exclusively male cue faces and mostly female participants (the proportion of female participants ranged from a low of 72% in Experiment 2 to a high of 89% in Experiment 1) across all four experiments may have contributed to our findings. However, it is not entirely clear what role gender might have played. A number of studies have shown that women respond more strongly to gaze cues than men when the dependent measure is reaction time, but there is no suggestion in the literature that this is modulated by the sex of the cue face. Bayliss et al. [70] investigated differences in gaze cueing as a function of both participant and cue face gender. In that study, female participants displayed stronger gaze cueing effects than males; however, there was no modulation of gaze cueing by the gender of the cue face. Alwall et al. [69] observed larger gaze cueing effects in female participants in a study in which only a female cue face was used. Deaner et al. [71] used only male cue faces and once again found that women showed larger gaze cueing effects than men, with the effect being particularly pronounced when the female participants were familiar with the male cue faces. Our findings with respect to gaze cueing of attention are largely in agreement with this research. Using mostly female participants, we observed strong effects of gaze cueing on reaction times in three of our four studies, and the one study in which this effect was marginal was the study with the smallest proportion of female participants (Experiment 2). It is of course possible that while gaze cues exert a stronger influence on the orientation of attention in women than in men, the same relationship does not hold for evaluations. To our knowledge there is no research addressing this question, and it may be worth pursuing in future work.

It is also important to acknowledge the difficulty of interpreting null results, even with (or, perhaps, because of) the added flexibility offered by Bayesian statistics [99]. While our Bayesian analyses suggest that the evaluations of faces are not susceptible to the influence of gaze cues, and that multiple, simultaneous gaze cues do not enhance the effect of gaze cues on evaluations, further evidence is needed to firm up these conclusions. Our results may also be specific to the present paradigm and may not generalise to others.

Reaction times

Results of reaction time analyses were broadly consistent with the literature. With the exception of Experiment 2, participants were quicker to classify cued objects and target faces even though they knew that the gaze cues did not predict the location of target stimuli. Given the weight of evidence in both this study and the literature more broadly, the most plausible explanation for the non-significant effect of gaze cues on reaction times in Experiment 2 would appear to be a Type II error. As in Bayliss et al. [5] and a number of other studies [27, 45, 46], the emotion of the cue face (or faces) did not appear to play a role in this gaze cueing effect. This was not a surprise given that cue faces did not display either of the emotions that have led to stronger gaze cueing effects in previous research (disgust and fear) [54–56].

Conclusion

Previous research and theory suggest that gaze cues can affect how we evaluate both everyday objects and more significant aspects of our environment, such as other people. In the present study, however, there was no evidence that emotionally expressive gaze cues influenced evaluations of unfamiliar faces, nor was there evidence that the effect of gaze cues became more pronounced as the number of sources increased. Although our hypotheses were not supported, this study’s results are nonetheless important. Firstly, they identify the need for direct replication and systematic extension of previously reported effects in order to better understand their strength and boundary conditions. Secondly, the suggestion that gaze cues might have a stronger effect on affective evaluations when circumstances encourage System 2 thinking generates clear predictions that can be tested by modifying this study’s procedure. For example, the effect of gaze cues should be stronger when participants are required to compare stimuli rather than rate them individually. Finally, our reaction time data add further support to a growing consensus in the literature that only certain emotional expressions in certain experimental contexts lead to enhanced effects of gaze cues on the orientation of attention.

Supporting Information

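S1 Experiment 1 Dataset.

(XLSX)

S2 Experiment 2 Dataset.

(XLSX)

S3 Experiment 3 Dataset.

(XLSX)

S4 Experiment 4 Dataset.

(XLSX)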

Acknowledgments

The authors acknowledge the helpful comments of two anonymous reviewers and the editor.

Data Availability

All relevant data are within the paper and its Supporting information files.

Funding Statement

The authors received no specific funding for this work.

References

- 1.Ambady N, Weisbuch M. (2010). Nonverbal behavior In Fiske S. T., Gilbert D. T., & Lindzey G. (Eds.), Handbook of social psychology (pp. 464–497). Hoboken, NJ: John Wiley & Sons; 10.1002/9780470561119.socpsy001013 [DOI] [Google Scholar]

- 2.Frith CD, Frith U. (2007). Social cognition in humans. Current Biology, 17(16), R724–R732. 10.1016/j.cub.2007.05.068 [DOI] [PubMed] [Google Scholar]

- 3.Bayliss AP, Paul MA, Cannon PR, Tipper SP. (2006). Gaze cuing and affective judgments of objects: I like what you look at. Psychonomic Bulletin & Review, 13(6), 1061–1066. 10.3758/BF03213926 [DOI] [PubMed] [Google Scholar]

- 4.Ulloa JL, George N. (2013). A Cognitive Neuroscience View on Pointing: What Is Special About Pointing with the Eyes and Hands? Humanamente. Journal of Philosophical Studies, 24, 203–228. [Google Scholar]

- 5.Bayliss AP, Frischen A, Fenske MJ, Tipper SP. (2007). Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition, 104(3), 644–653. 10.1080/02724980443000124 [DOI] [PubMed] [Google Scholar]

- 6.Manera V, Elena MR, Bayliss AP, Becchio C. (2014). When seeing is more than looking: Intentional gaze modulates object desirability. Emotion, 14(14), 824–832. 10.1037/a0036258 [DOI] [PubMed] [Google Scholar]

- 7.Ulloa JL, Marchetti C, Taffou M, George N. (2015). Only your eyes tell me what you like: Exploring the liking effect induced by other's gaze. Cognition and Emotion, 29(3), 460–470. 10.1080/02699931.2014.919899 [DOI] [PubMed] [Google Scholar]

- 8.van der Weiden A, Veling H, Aarts H. (2010). When observing gaze shifts of others enhances object desirability. Emotion, 10(6), 939–943. 10.1037/a0020501 [DOI] [PubMed] [Google Scholar]

- 9.Baron-Cohen S. (1995). The eye direction detector (EDD) and the shared attention mechanism (SAM): Two cases for evolutionary psychology In Moore C. & Dunham P. J. (Eds.), Joint attention: Its origins and role in development (pp. 41–59). Hillsdale, NJ: Erlbaum; 10.1111/j.2044-835x.1997.tb00726.x [DOI] [Google Scholar]

- 10.Emery NJ. (2000). The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience & Biobehavioral Reviews, 24(6), 581–604. 10.1016/S0149-7634(00)00025-7 [DOI] [PubMed] [Google Scholar]

- 11.Pfeiffer UJ, Schilbach L, Jording M, Timmermans B, Bente G, Vogeley K. (2012). Eyes on the mind: investigating the influence of gaze dynamics on the perception of others in real-time social interaction. Frontiers in Psychology, 3, 537 10.3389/fpsyg.2012.00537 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Hoffman EA, Haxby JV. (2000). Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience, 3(1), 80–84. [DOI] [PubMed] [Google Scholar]

- 13.Jenkins R, Beaver JD, Calder AJ. (2006). I thought you were looking at me: Direction-specific aftereffects in gaze perception. Psychological Science, 17, 506–513. [DOI] [PubMed] [Google Scholar]

- 14.Frischen A, Bayliss AP, Tipper SP. (2007). Gaze cueing of attention: visual attention, social cognition, and individual differences. Psychological Bulletin, 133(4), 694 10.1037/0033-2909.133.4.694 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Burra N, Hervais-Adelman A, Kerzel D, Tamietto M, De Gelder B, Pegna AJ. (2013). Amygdala activation for eye contact despite complete cortical blindness. The Journal of Neuroscience, 33(25), 10483–10489. 10.1523/JNEUROSCI.3994-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Celeghin A, de Gelder B, Tamietto M. (2015). From affective blindsight to emotional consciousness. Consciousness and Cognition, 36, 414–425. 10.1016/j.concog.2015.05.007 [DOI] [PubMed] [Google Scholar]

- 17.Adams RB, Gordon HL, Baird AA, Ambady N, Kleck RE. (2003). Effects of gaze on amygdala sensitivity to anger and fear faces. Science, 300, 1536 [DOI] [PubMed] [Google Scholar]

- 18.Shepherd S, Cappuccio M. (2011). Sociality, Attention, and the Mind’s Eyes In Seemann A. (Ed.), Joint attention: New developments in psychology, philosophy of mind, and social neuroscience (pp. 205–235). Boston: MIT Press; 10.4135/9781452276052.n190 [DOI] [Google Scholar]

- 19.Friesen CK, Kingstone A. (1998). The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review, 5(3), 490–495. 10.3758/BF03208827 [DOI] [Google Scholar]

- 20.Hermens F, Walker R. (2010). Gaze and arrow dis- tractors influence saccade trajectories similarly. Quarterly Journal of Experimental Psychology, 63, 2120–2140. [DOI] [PubMed] [Google Scholar]

- 21.Kuhn G, Benson V. (2007). The influence of eye-gaze and arrow pointing distractor cues on voluntary eye movements. Perception & Psychophysics, 69, 966–971. [DOI] [PubMed] [Google Scholar]

- 22.Kuhn G, Kingstone A. (2009). Look away! Eyes and arrow engage oculomotor responses automatically. Attention, Perception & Psychophysics, 71(2), 314–327. 10.3758/APP [DOI] [PubMed] [Google Scholar]

- 23.Kuhn G, Benson V, Fletcher-Watson S, Kovshoff H, McCormick CA, Kirkby J, et al. (2010). Eye movements affirm: Automatic overt gaze and arrow cueing for typical adults and adults with autism spectrum disorder. Experimental Brain Research, 201(2), 155–165. 10.1007/s00221-009-2019-7 10.1007/s00221-009-2019-7 [DOI] [PubMed] [Google Scholar]

- 24.Nummenmaa L, Hietanen JK. (2006). Gaze distractors influence saccadic curvature: Evidence for the role of the oculomotor system in gaze-cued orienting. Vision Research, 46(21), 3674–3680. [DOI] [PubMed] [Google Scholar]

- 25. Downing P, Dodds C, Bray D. (2004). Why does the gaze of others direct visual attention? Visual Cognition, 11(1), 71–79. 10.1080/13506280344000220

- 26. Driver J, Davis G, Ricciardelli P, Kidd P, Maxwell E, Baron-Cohen S. (1999). Gaze perception triggers reflexive visuospatial orienting. Visual Cognition, 6, 509–540. 10.1080/135062899394920

- 27. Hietanen JK, Leppänen JM. (2003). Does facial expression affect attention orienting by gaze direction cues? Journal of Experimental Psychology: Human Perception and Performance, 29(6), 1228–1243. 10.1037/0096-1523.29.6.1228

- 28. Friesen CK, Ristic J, Kingstone A. (2004). Attentional effects of counterpredictive gaze and arrow cues. Journal of Experimental Psychology: Human Perception and Performance, 30(2), 319. 10.1037/0096-1523.30.2.319

- 29. Galfano G, Dalmaso M, Marzoli D, Pavan G, Coricelli C, Castelli L. (2012). Eye gaze cannot be ignored (but neither can arrows). The Quarterly Journal of Experimental Psychology, 65(10), 1895–1910. 10.1080/17470218.2012.663765

- 30. Tipples J. (2002). Eye gaze is not unique: Automatic orienting in response to uninformative arrows. Psychonomic Bulletin & Review, 9(2), 314–318. 10.3758/BF03196287

- 31. Tipples J. (2008). Orienting to counterpredictive gaze and arrow cues. Perception & Psychophysics, 70(1), 77–87. 10.3758/PP

- 32. Hietanen JK, Nummenmaa L, Nyman MJ, Parkkola R, Hämäläinen H. (2006). Automatic attention orienting by social and symbolic cues activates different neural networks: An fMRI study. NeuroImage, 33(1), 406–413. 10.1016/j.neuroimage.2006.06.048

- 33. Tipper CM, Handy TC, Giesbrecht B, Kingstone A. (2008). Brain responses to biological relevance. Journal of Cognitive Neuroscience, 20(5), 879–891. 10.1162/jocn.2008.20510

- 34. Birmingham E, Bischof WF, Kingstone A. (2009). Get real! Resolving the debate about equivalent social stimuli. Visual Cognition, 17(6–7), 904–924. 10.1080/13506280902758044

- 35. Hadjikhani N, Hoge R, Snyder J, de Gelder B. (2008). Pointing with the eyes: The role of gaze in communicating danger. Brain and Cognition, 68, 1–8. 10.1016/j.bandc.2008.01.008

- 36. Hoffman KL, Gothard KM, Schmid MC, Logothetis NK. (2007). Facial-expression and gaze-selective responses in the monkey amygdala. Current Biology, 17, 766–772.

- 37. Engell AD, Haxby JV. (2007). Facial expression and gaze-direction in human superior temporal sulcus. Neuropsychologia, 45, 3234–3241.

- 38. Furl N, van Rijsbergen NJ, Treves A, Friston KJ, Dolan RJ. (2007). Experience-dependent coding of facial expression in superior temporal sulcus. Proceedings of the National Academy of Sciences, 104, 13485–13489.

- 39. Sato W, Kochiyama T, Uono S, Yoshikawa S. (2010). Amygdala integrates emotional expression and gaze direction in response to dynamic facial expressions. Neuroimage, 50, 1658–1665. 10.1016/j.neuroimage.2010.01.049

- 40. Graham R, LaBar KS. (2012). Neurocognitive mechanisms of gaze-expression interactions in face processing and social attention. Neuropsychologia, 50(5), 553–566. 10.1016/j.neuropsychologia.2012.01.019

- 41. Garner WR. (1974). The processing of information and structure. Potomac, MD: Lawrence Erlbaum.

- 42. Ganel T. (2011). Revisiting the relationship between the processing of gaze direction and the processing of facial expression. Journal of Experimental Psychology: Human Perception and Performance, 37(1), 48–57. 10.1037/a0019962

- 43. Ganel T, Goshen-Gottstein Y, Goodale MA. (2005). Interactions between the processing of gaze direction and facial expression. Vision Research, 45, 1191–1200.

- 44. Graham R, LaBar KS. (2007). The Garner selective attention paradigm reveals dependencies between emotional expression and gaze direction in face perception. Emotion, 7, 296–313.

- 45. Galfano G, Sarlo M, Sassi F, Munafò M, Fuentes LJ, Umiltà C. (2011). Reorienting of spatial attention in gaze cuing is reflected in N2pc. Social Neuroscience, 6(3), 257–269. 10.1080/17470919.2010.515722

- 46. Holmes A, Mogg K, Garcia LM, Bradley BP. (2010). Neural activity associated with attention orienting triggered by gaze cues: A study of lateralized ERPs. Social Neuroscience, 5(3), 285–295. 10.1080/17470910903422819

- 47. Rigato S, Menon E, Gangi VD, George N, Farroni T. (2013). The role of facial expressions in attention-orienting in adults and infants. International Journal of Behavioral Development, 37(2), 154–159. 10.1177/0165025412472410

- 48. Franconeri SL, Simons DJ. (2003). Moving and looming stimuli capture attention. Perception & Psychophysics, 65, 999–1010. 10.3758/BF03194829

- 49. Kuhn G, Pickering A, Cole GG. (2015). “Rare” Emotive Faces and Attentional Orienting. Emotion, 16(1), 1–5. 10.1037/emo0000050

- 50. Lin JY, Franconeri S, Enns JT. (2008). Objects on a collision path with the observer demand attention. Psychological Science, 19, 686–692. 10.1111/j.1467-9280.2008.02143.x

- 51. Fox E, Mathews A, Calder AJ, Yiend J. (2007). Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion, 7, 478–486. 10.1037/1528-3542.7.3.478

- 52. Mathews A, Fox E, Yiend J, Calder A. (2003). The face of fear: Effects of eye gaze and emotion on visual attention. Visual Cognition, 10(7), 823–835. 10.1080/13506280344000095

- 53. Tipples J. (2006). Fear and fearfulness potentiate automatic orienting to eye gaze. Cognition & Emotion, 20(2), 309–320. 10.1080/02699930500405550

- 54. Friesen CK, Halvorson KM, Graham R. (2011). Emotionally meaningful targets enhance orienting triggered by a fearful gazing face. Cognition & Emotion, 25(1), 73–88. 10.1080/02699931003672381

- 55. Kuhn G, Tipples J. (2011). Increased gaze following for fearful faces. It depends on what you’re looking for! Psychonomic Bulletin & Review, 18, 89–95. 10.3758/s13423-010-0033-1

- 56. Pecchinenda A, Pes M, Ferlazzo F, Zoccolotti P. (2008). The combined effect of gaze direction and facial expression on cueing spatial attention. Emotion, 8(5), 628. 10.1037/a0013437

- 57. Capozzi F, Bayliss AP, Elena MR, Becchio C. (2015). One is not enough: Group size modulates social gaze-induced object desirability effects. Psychonomic Bulletin & Review, 22(3), 850–855. 10.3758/s13423-014-0717-z

- 58. Treinen E, Corneille O, Luypaert G. (2012). L-eye to me: The combined role of Need for Cognition and facial trustworthiness in mimetic desires. Cognition, 122(2), 247–251. 10.1016/j.cognition.2011.10.006

- 59. Willis J, Todorov A. (2006). First impressions: Making up your mind after a 100-ms exposure to a face. Psychological Science, 17(7), 592–598. 10.1111/j.1467-9280.2006.01750.x

- 60. Soussignan R, Schaal B, Boulanger V, Garcia S, Jiang T. (2015). Emotional communication in the context of joint attention for food stimuli: Effects on attentional and affective processing. Biological Psychology, 104, 173–183. 10.1016/j.biopsycho.2014.12.006

- 61. Soussignan R, Schaal B, Boulanger V, Gaillet M, Jiang T. (2012). Orofacial reactivity to the sight and smell of food stimuli. Evidence for anticipatory liking related to food reward cues in overweight children. Appetite, 58(2), 508–516. 10.1016/j.appet.2011.12.018

- 62. Soussignan R, Schaal B, Rigaud D, Royet JP, Jiang T. (2011). Hedonic reactivity to visual and olfactory cues: rapid facial electromyographic reactions are altered in anorexia nervosa. Biological Psychology, 86(3), 265–272. 10.1016/j.biopsycho.2010.12.007

- 63. Jones BC, DeBruine LM, Little AC, Burriss RP, Feinberg DR. (2007). Social transmission of face preferences among humans. Proceedings of the Royal Society of London B: Biological Sciences, 274(1611), 899–903. 10.1098/rspb.2006.0205

- 64. Boone RT, Buck R. (2003). Emotional expressivity and trustworthiness: The role of nonverbal behavior in the evolution of cooperation. Journal of Nonverbal Behavior, 27(3), 163–182. 10.1023/A:1025341931128

- 65. Cosmides L, Tooby J. (2000). The cognitive neuroscience of social reasoning. In Gazzaniga MS (Ed.), The new cognitive neuroscience (pp. 1259–1276). Cambridge, Massachusetts: MIT Press.

- 66. Anderson E, Siegel EH, Bliss-Moreau E, Barrett LF. (2011). The visual impact of gossip. Science, 332(6036), 1446–1448. 10.1126/science.1201574

- 67. Laidre ME, Lamb A, Shultz S, Olsen M. (2013). Making sense of information in noisy networks: Human communication, gossip, and distortion. Journal of Theoretical Biology, 317, 152–160. 10.1016/j.jtbi.2012.09.009

- 68. Sommerfeld RD, Krambeck HJ, Semmann D, Milinski M. (2007). Gossip as an alternative for direct observation in games of indirect reciprocity. Proceedings of the National Academy of Sciences, 104(44), 17435–17440.

- 69. Alwall N, Johansson D, Hansen S. (2010). The gender difference in gaze-cueing: Associations with empathizing and systemizing. Personality and Individual Differences, 49(7), 729–732. 10.1016/j.paid.2010.06.016

- 70. Bayliss AP, di Pellegrino G, Tipper SP. (2005). Sex differences in eye gaze and symbolic cueing of attention. The Quarterly Journal of Experimental Psychology, 58(4), 631–650. 10.1080/02724980443000124

- 71. Deaner RO, Shepherd SV, Platt ML. (2007). Familiarity accentuates gaze cuing in women but not men. Biology Letters, 3(1), 64–67. 10.1098/rsbl.2006.0564

- 72. Bainbridge WA, Isola P, Oliva A. (2013). The intrinsic memorability of face photographs. Journal of Experimental Psychology: General, 142(4), 1323. 10.1037/a0033872

- 73. Fiske ST, Cuddy AJ, Glick P. (2007). Universal dimensions of social cognition: Warmth and competence. Trends in Cognitive Sciences, 11(2), 77–83. 10.1016/j.tics.2006.11.005

- 74. Oosterhof NN, Todorov A. (2008). The functional basis of face evaluation. Proceedings of the National Academy of Sciences, 105(32), 11087–11092. 10.1073/pnas.0805664105

- 75. Macrae CN, Bodenhausen GV, Milne AB, Jetten J. (1994). Out of mind but back in sight: Stereotypes on the rebound. Journal of Personality and Social Psychology, 67(5), 808. 10.1037//0022-3514.67.5.808

- 76. Rudman LA, Goodwin SA. (2004). Gender differences in automatic in-group bias: Why do women like women more than men like men? Journal of Personality and Social Psychology, 87(4), 494. 10.1037/0022-3514.87.4.494

- 77. Dijksterhuis A. (2004). Think different: The merits of unconscious thought in preference development and decision making. Journal of Personality and Social Psychology, 87(5), 586. 10.1037/0022-3514.87.5.586

- 78. Kimmel HD. (1957). Three criteria for the use of one-tailed tests. Psychological Bulletin, 54(4), 351–353.

- 79. Walther E. (2002). Guilty by mere association: Evaluative conditioning and the spreading attitude effect. Journal of Personality and Social Psychology, 82(6), 919. 10.1037//0022-3514.82.6.919

- 80. Howell DC. (2002). Statistical methods for psychology (5th ed.). Belmont, CA: Wadsworth.

- 81. Rice WR, Gaines SD. (1994). ‘Heads I win, tails you lose’: Testing directional alternative hypotheses in ecological and evolutionary research. Trends in Ecology and Evolution, 9(6), 235–236. 10.1016/0169-5347(94)90258-5

- 82. Baguley T. (2012). Serious stats: A guide to advanced statistics for the behavioral sciences. Palgrave Macmillan.

- 83. Wilcox RR, Keselman HJ. (2003). Modern robust data analysis methods: Measures of central tendency. Psychological Methods, 8(3), 254.

- 84. Rock I, Gutman D. (1981). The effect of inattention on form perception. Journal of Experimental Psychology: Human Perception and Performance, 7(2), 275. 10.1037/0096-1523.7.2.275

- 85. Scholl BJ. (2001). Objects and attention: The state of the art. Cognition, 80(1), 1–46. 10.1016/S0010-0277(00)00152-9