Keywords: predictive coding, audiovisual speech, neural synchrony, multisensory processing, oscillatory activity

Abstract

Our brain generates predictions about forthcoming stimuli and compares predicted with incoming input. Failures in predicting events might contribute to hallucinations and delusions in schizophrenia (SZ). When a stimulus violates prediction, neural activity that reflects prediction error (PE) processing is found. While PE processing deficits have been reported in unisensory paradigms, it is unknown whether SZ patients (SZP) show altered crossmodal PE processing. We measured high-density electroencephalography and applied source estimation approaches to investigate crossmodal PE processing generated by audiovisual speech. In SZP and healthy control participants (HC), we used an established paradigm in which high- and low-predictive visual syllables were paired with congruent or incongruent auditory syllables. We examined crossmodal PE processing in SZP and HC by comparing differences in event-related potentials and neural oscillations between incongruent and congruent high- and low-predictive audiovisual syllables. In both groups event-related potentials between 206 and 250 ms were larger in high- compared with low-predictive syllables, suggesting intact audiovisual incongruence detection in the auditory cortex of SZP. The analysis of oscillatory responses revealed theta-band (4–7 Hz) power enhancement in high- compared with low-predictive syllables between 230 and 370 ms in the frontal cortex of HC but not SZP. Thus aberrant frontal theta-band oscillations reflect crossmodal PE processing deficits in SZ. The present study suggests a top-down multisensory processing deficit and highlights the role of dysfunctional frontal oscillations for the SZ psychopathology.

NEW & NOTEWORTHY

We examined the neural correlates of crossmodal prediction error in audiovisual speech in schizophrenia. Interestingly, we found intact audiovisual incongruence detection in the auditory cortex for patients with schizophrenia (SZP) and healthy matched control participants (HC). However, we found that the enhanced frontal theta-band power, which reflects crossmodal prediction error processing in HC, was absent in SZP.

Learning stimulus regularities allows us to correctly predict future events. In case of a mismatch between predicted and actual events, neural activity that relates to the processing of the prediction error (PE) is observed (Rao and Ballard 1999). Failures in correctly predicting future events likely contribute to the schizophrenia (SZ) psychopathology, including delusions and hallucinations (Fletcher and Frith 2009).

Previous studies using unisensory stimuli, such as auditory speech, have shown altered PE processing in SZ (Ford et al. 2014; Ford and Mathalon 2012). In our environment, however, stimuli occur in different sensory modalities and predictions need to be generated across the senses. Whether SZ patients (SZP) show alterations in crossmodal PE processing is unknown. Given that an increasing number of studies report abnormal multisensory processing in SZP, the investigation of crossmodal PE processing will further our understanding of the neural mechanisms underlying impaired multisensory integration in this patient group. Overall, relatively few studies have thus far investigated multisensory processing in SZP (Tseng et al. 2015). One behavioral study in SZP revealed a diminished benefit of viewing lip movements for the recognition of auditory speech that is presented at an intermediate noise level (Ross et al. 2007). Moreover, an electroencephalography (EEG) study suggested altered audiovisual processing, as reflected in event-related potentials (ERPs), using nonspeech stimuli (Stekelenburg et al. 2013). Together, these findings suggest a multisensory integration deficit in SZP (Ross et al. 2007; Stekelenburg et al. 2013; but see Martin et al. 2013).

A neural mechanism that has been linked to multisensory processing in healthy participants is synchronized oscillatory activity (Van Atteveldt et al. 2014; Senkowski et al. 2008). Neural oscillations are involved in perceptual processing (Engel and Fries 2010) and contribute to the computation of crossmodal PE in audiovisual speech (Arnal et al. 2011; Arnal and Giraud 2012). Using magnetoencephalography (MEG), Arnal et al. (2011) observed an enhancement of theta-band (i.e., 4–7 Hz) phase synchrony in higher speech processing areas during the presentation of congruent audiovisual speech in healthy participants. The same study revealed elevated beta-band (i.e., 14–15 Hz) phase synchrony and gamma power (i.e., 60–80 Hz) in higher multisensory areas when crossmodal predictions were violated. Recently, Lange et al. (2013) found increased theta-band power in the auditory cortex during the processing of incongruent compared with congruent audiovisual speech. Interestingly, altered oscillatory responses in unisensory paradigms have been reported previously in SZP (Grützner et al. 2013; Popov et al. 2014, 2015; Uhlhaas and Singer 2010). For example, SZP show altered frontal theta-band power during the processing of color-word incongruence (Popov et al. 2015). This finding is in line with the proposed crucial role of dysfunctional oscillations in the frontal cortex for the SZ psychopathology (Senkowski and Gallinat 2015). Notably, a recent study indicated that altered neural oscillations also contribute to multisensory processing deficits in SZ (Stone et al. 2014). Hence, dysfunctional neural oscillations might underlie altered crossmodal PE processing in SZ.

In this high-density EEG study we investigated crossmodal PE processing in SZP and healthy control participants (HC). We adapted an audiovisual speech paradigm for which robust crossmodal PE has previously been reported in healthy participants (Arnal et al. 2011). Exploiting the temporal delay between visual and auditory information in natural audiovisual speech enables the formation of phonological predictions based on visual information before the auditory onset. The scaling of PE is reflected by predictability. High predictability induces strong expectations, and low predictability induces weak expectations. The expectation is violated in the incongruent condition and results in a stronger or weaker PE. This allows examination of the neural responses elicited by visually induced predictions and PEs emerging during audio-visual integration when predictions are violated. In agreement with Arnal et al. (2011) our main hypothesis was that oscillatory activity in low and high frequencies contributes to different aspects of crossmodal PE processing. Moreover, we expected crossmodal PE processing, as reflected in oscillatory activity, to be disturbed in SZP.

In the present study we investigated crossmodal PE processing in ERPs and oscillatory power in SZP and HC. With respect to oscillatory activity, we investigated power in lower (i.e., 4–30 Hz) and higher (i.e., 30–100 Hz) frequencies and explored whether any deviances in SZP relate to the psychopathology. The present study revealed similar detection of audiovisual incongruence in the auditory cortex of SZP and HC in ERPs. In addition, we found group differences in theta-band oscillations that were source localized in the frontal cortex and linked to the SZ psychopathology.

METHODS

Participants.

Twenty-two patients with SZ were recruited from outpatient units of the Charité-Universitätsmedizin Berlin. All patients fulfilled the DSM-IV and ICD-10 criteria for SZ and no other psychiatric axis I disorder. Severity of symptoms was obtained by the Positive and Negative Syndrome Scale (PANSS) (Kay et al. 1987). In accordance with the five-factor model, symptoms were grouped into factors “positive,” “negative,” “depression,” “excitement,” and “disorganization” (Wallwork et al. 2012). Because of an insufficient number of trials in EEG data (i.e., fewer than the required 30 trials per condition), five patients were excluded from the analysis. Data from 17 patients (5 women, 12 men; 35.24 ± 7.73 yr) and 17 education-, handedness-, sex-, and age-matched HC (4 women, 13 men; 36.00 ± 8.29 yr), who were screened for psychopathology with the German version of the Structured Clinical Interview for DSM-4-R Non-Patient Edition (SCID), were subjected to the analysis (Table 1). In all participants the Brief Assessment of Cognition in Schizophrenia (BACS) was performed (Keefe et al. 2004). All participants gave written informed consent and had normal hearing as well as normal or corrected-to-normal vision and no record of neurological disorders. None of them met criteria for alcohol or substance abuse. A random sample of 45% of participants underwent a multidrug-screening test. The study was performed in accordance with the Declaration of Helsinki, and the local ethics commission of the Charité-Universitätsmedizin Berlin approved the study.

Table 1.

Overview of demographic data

| | Patients: Mean | Patients: SD | Patients: n | Control Participants: Mean | Control Participants: SD | Control Participants: n | t-Values | χ²-Values | P values |
|---|---|---|---|---|---|---|---|---|---|
| Age, yr | 35.24 | 7.73 | | 36.00 | 8.29 | | −0.278 | | 0.783 |
| Education, yr | 11.00 | 1.66 | | 11.18 | 1.47 | | 0.329 | | 0.745 |
| Illness duration, yr | 8.24 | 4.47 | | | | | | | |
| Chlorpromazine Eq. (daily dosage), mg | 381.12 | 191.65 | | | | | | | |
| Sex (M/F) | | | 12/5 | | | 13/4 | | 0.15 | 0.70 |
| Handedness (R/L) | | | 14/3 | | | 16/1 | | 1.13 | 0.29 |
| Antipsychotic med.* | | | 17 | | | | | | |
| Comedication† | | | 3 | | | | | | |
| **BACS** | | | | | | | | | |
| Verbal memory | 41.24 | 13.80 | | 48.06 | 10.63 | | −1.615 | | 0.116 |
| Digit | 19.76 | 4.47 | | 20.29 | 4.55 | | −0.342 | | 0.734 |
| Motor | 65.53 | 12.33 | | 75.71 | 9.41 | | −2.705 | | 0.011 |
| Fluency | 47.18 | 13.87 | | 53.24 | 14.06 | | −1.265 | | 0.215 |
| Symbol coding | 54.82 | 15.00 | | 58.35 | 14.05 | | −0.708 | | 0.484 |
| ToL | 17.12 | 3.04 | | 17.82 | 2.60 | | −0.727 | | 0.472 |
| Total score | 245.65 | 43.31 | | 273.47 | 37.37 | | −2.005 | | 0.053 |
| **PANSS** | | | | | | | | | |
| Negative | 18.65 | 3.12 | | | | | | | |
| Positive | 16.59 | 1.97 | | | | | | | |
| General | 37.18 | 2.83 | | | | | | | |
| Total score | 72.41 | 5.80 | | | | | | | |

*Only 2 SZP took clozapine.

†Comedication of antipsychotics and mood stabilizers.

Interestingly, there were no substantial differences in cognitive performances between SZP and HC, except for the motor token test. Nevertheless, the BACS Total score indicated a trend toward a reduced cognitive performance in patients.

Stimuli.

Video clips of an actress uttering the syllables /Pa/, /La/, /Ta/, /Ga/, and /Fa/ were recorded with a digital camera (Canon 60D, 50 frames/s, 1,280 × 720 pixels, 44.1 kHz stereo audio) and exported with 30 frames/s (Apple Quicktime Player, version 7). The clips of the syllables were converted to grayscale and equalized in their luminance with the SHINE toolbox (Willenbockel et al. 2010). Visual stimuli were presented on a 21-in. CRT screen at a distance of 120 cm, and auditory stimuli were presented via a single, centrally positioned speaker (Bose Companion 2). To minimize eye movements, a small white fixation cross above the mouth at the philtrum was added to all clips. The video clips were presented with a frontal view displaying the face (visual angle = 5.95° × 7.36°) on a black background. All syllables were presented with the real audiovisual onset asynchrony at 65 dB (SPL) (Table 2).

Table 2.

Overview of physical features of presented syllables

| | /Pa/ | /La/ | /Ga/ | /Ta/ | /Fa/ |
|---|---|---|---|---|---|
| POA | Bilabial | Alveolar | Velar | Alveolar | Labiodental |
| Visual duration, ms | 858 | 1,089 | 891 | 891 | 702 |
| Auditory duration, ms | 329 | 460 | 348 | 360 | 448 |
| AO − MO, ms | 337 | 461 | 427 | 336 | 100 |

The different places of articulation (POA) are indicated in the top row. Differences in latency between visual and auditory onset (AO − MO) are indicated in the bottom row.

Experimental design.

The experiment consisted of 640 trials that were presented in 16 blocks. Each block had a duration of ∼3 min. Stimuli were controlled via Psychtoolbox (Brainard 1997). Different types of congruent and incongruent audiovisual syllable combinations were presented (Table 3). Congruent syllable trials contained matching audiovisual syllables (e.g., visual /Pa/ and auditory /Pa/), whereas incongruent syllable trials contained nonmatching audiovisual syllables (e.g., visual /Pa/ and auditory /Ta/). Pilot data verified that syllables differed with respect to the predictive power of visual on auditory stimuli. In visual speech the concept of predictability refers to the lip movements and speech gestures, which depend on the place of articulation in the vocal tract. Hence, lip movements convey phonological predictions, which differ in their degree of specificity across different syllables. For instance, syllables including consonants formed at the front of the mouth (i.e., /Pa/) convey more specific predictions than those including consonants formed at the back of the mouth (i.e., /Ga/). In accordance with a previous study (Arnal et al. 2011) and in line with our pilot data, we selected syllables in which the visual input was high (e.g., /Pa/ and /La/) or low (e.g., /Ga/ and /Ta/) predictive for the auditory input (Fig. 1). Four types of audiovisual stimuli were presented: high-predictive congruent (e.g., /Pa/ + /Pa/); high-predictive incongruent (e.g., /Pa/ + /La/); low-predictive congruent (e.g., /Ga/ + /Ga/); low-predictive incongruent (e.g., /Ga/ + /Ta/). The neural correlates of the PE are expressed in the difference between congruent and incongruent trials within each level of stimulus prediction, i.e., low and high. Thus the factor Predictability reflects the scaling of the PE, which was expected to be larger in high-predictive compared with low-predictive trials. The strongest PE results from incongruent and high-predictive syllables. 
A weaker PE results from the presentation of incongruent and low-predictive syllables. We included audiovisual and visual-only catch trials to warrant sustained auditory and visual attention during the experimental blocks. Audiovisual catch trials comprised 96 trials in which the visual syllables /Fa/ (n = 48), /Pa/ (n = 12), /La/ (n = 12), /Ga/ (n = 12), and /Ta/ (n = 12) were combined with the auditory syllable /Fa/. Visual-only catch trials (n = 64) consisted of a white circle that appeared randomly for 270 ms during audiovisual syllable presentation at the position of the fixation cross. Participants had to press a button with their right index finger when they heard the syllable /Fa/ or when they detected the white circle. The EEG data of catch trials were not analyzed.

Table 3.

Overview of presented congruent and incongruent, high- and low-predictive trials

| Condition | Visual | Audio | n |
|---|---|---|---|
| Congruent HP | Pa | Pa | 80 |
| Congruent HP | La | La | 80 |
| Congruent LP | Ga | Ga | 80 |
| Congruent LP | Ta | Ta | 80 |
| Incongruent HP | Pa | La | 80 |
| Incongruent HP | La | Pa | 80 |
| Incongruent LP | Ga | Ta | 80 |
| Incongruent LP | Ta | Ga | 80 |
| Congruent /Fa/ | Fa | Fa | 48 |
| Incongruent /Fa/ | Pa | Fa | 12 |
| Incongruent /Fa/ | La | Fa | 12 |
| Incongruent /Fa/ | Ga | Fa | 12 |
| Incongruent /Fa/ | Ta | Fa | 12 |

The center columns show the visual and auditory syllables. The audiovisual Fa catch trials comprised 96 trials. Additionally, 64 visual-only catch trials were presented. In total, 640 trials were presented. HP, high predictive; LP, low predictive.

Fig. 1.

Trial sequence of high- and low-predictive incongruent audiovisual syllables. Each trial started with the presentation of the first static frame of a video clip that was presented for a random interval ranging from 500 to 800 ms. After this frame the video clip, in which a female speaker uttered a syllable, was presented for 2,000 ms. ISI, interstimulus interval. The participants' tasks were to respond to the occasional auditory syllable /fa/ and to an occasional change of the fixation cross, which turned into a white circle. The tasks ensured that participants were attending to the sensory inputs.

Acquisition and processing of EEG data.

Data were recorded with a 128-channel active EEG system (EasyCap, Herrsching, Germany), including one horizontal and one vertical EOG electrode (online recording: 1,000-Hz sampling rate with a 0.016- to 250-Hz band-pass filter; offline filtering: downsampling to 500 Hz, 1- to 125-Hz FIR band-pass filtering and 49.1- to 50.2-Hz, 4th-order Butterworth notch filtering). The 128-electrode montage covered electrodes in the usual 10–20 System space. No electrode was placed below the horizontal plane. To correct for EOG and ECG artifacts, independent component analyses were conducted (Lee et al. 1999). After visual inspection, 14 ± 0.6 (SE) independent components for SZP and 15 ± 0.9 components for HC were rejected. Remaining noisy channels (SZP = 16 ± 1.2; HC = 15 ± 1.4) were interpolated with spherical interpolation. Extracted epochs of −1,000 ms to 1,000 ms around auditory onset were rereferenced to common average. For ERP analysis epochs were filtered (2 Hz high pass, 2nd order, 35 Hz low pass, 12th order 2-pass Butterworth filter) and baseline corrected using an interval from −500 ms to −100 ms prior to sound onset. In the analysis of oscillatory power we explored a range from 4 to 100 Hz. Since we did not find robust modulations relative to baseline of high-frequency (i.e., >30 Hz) responses, we focused the analysis on 4–30 Hz. For the analysis of oscillations, a multitaper convolution transformation with frequency-dependent Hanning windows was computed in 1-Hz steps (time window: Δt = 3/f; spectral smoothing: Δf = 1/Δt). Averaged oscillatory power was baseline corrected (relative change) from −500 to −100 ms prior to sound onset.
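To make the time-frequency parameters concrete, the following Python sketch computes the window length and the spectral smoothing implied by a three-cycle window, and applies a relative-change baseline correction. It is a minimal illustration rather than the pipeline used in the study: the function names are hypothetical, and the relative change is assumed to be (power − baseline) / baseline, a common convention that the text does not spell out.

```python
def hanning_window_params(freq_hz, n_cycles=3.0):
    """Return (window length in s, spectral smoothing in Hz) for one frequency.

    Window length follows the three-cycle rule from the text (3 / f);
    the smoothing is taken as the reciprocal of the window length.
    """
    window_s = n_cycles / freq_hz
    smoothing_hz = 1.0 / window_s
    return window_s, smoothing_hz


def relative_change(power, times_ms, baseline=(-500.0, -100.0)):
    """Baseline-correct a power time course as (P - B) / B.

    B is the mean power over all samples whose time stamp lies inside the
    baseline interval (assumed convention, see lead-in).
    """
    base_vals = [p for p, t in zip(power, times_ms)
                 if baseline[0] <= t <= baseline[1]]
    if not base_vals:
        raise ValueError("no samples fall inside the baseline interval")
    base = sum(base_vals) / len(base_vals)
    return [(p - base) / base for p in power]


if __name__ == "__main__":
    # Window parameters at the 7.5-Hz center frequency reported in the results.
    print(hanning_window_params(7.5))
```

Under these assumptions, a 7.5-Hz component is estimated from a 400-ms window, which is one reason a longer time stability criterion is sensible for time-frequency data than for ERPs.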

Analysis of behavioral data.

For the analysis of reaction times (RTs) to audiovisual catch trials, a repeated-measures ANOVA with factors Group (SZP vs. HC) and Condition (congruent vs. incongruent) was conducted. The factor Predictability was not investigated, because of a lack of systematic variation in audiovisual catch trials. For the analysis of RTs to visual catch trials, a t-test was conducted between groups. Since hit rates (HRs) for audiovisual and visual catch trials were at ceiling level, nonparametric Mann-Whitney U-tests were calculated to compare between groups. Wilcoxon signed-rank tests were computed to compare congruent and incongruent HRs within groups. The significance level in Wilcoxon signed-rank tests was set to a Bonferroni-corrected P value of 0.0125.

Analysis of EEG data.

In line with previous work (Johnson et al. 2005; Schneider et al. 2008, 2011), our analysis of event-related activity focused on global field power (GFP; Esser et al. 2006). The reason for examining GFP instead of a priori-defined ERP components was that we did not have specific hypotheses on when and where differences between groups would occur. A more global measure, such as GFP, only reflects strong and global effects, i.e., effects that are concurrently present at a large number of electrodes. The analysis of oscillatory responses focused on oscillatory power to high- and low-predictive congruent and incongruent trials. For each condition 84 ± 10 trials for SZP and 88 ± 12 trials for HC were entered into the analysis. For the statistical analysis, GFP and oscillatory responses to incongruent and congruent conditions for SZP and HC were subtracted (e.g., responses to high-predictive congruent syllables were subtracted from responses to high-predictive incongruent syllables). We focused the analysis on group differences with respect to the PE, which was expressed as the difference between neural responses to incongruent vs. congruent trials. Importantly, within each condition (high predictability, low predictability) the auditory and visual syllables were identical across incongruent and congruent trials. The analysis of differences between incongruent and congruent trials for the high- and low-predictive conditions also ensured that physical differences between syllables used for the high- and low-predictive conditions could not account for the findings. The differences between congruent and incongruent syllables were entered into running repeated-measures ANOVAs with the factors Group (SZP vs. HC) and Predictability (low vs. high). GFP was calculated across all scalp electrodes as a measure of electric field strength separately for all conditions. 
Similar to previous studies (Kissler and Herbert 2013; Kissler and Koessler 2011), we applied a running ANOVA approach. Based on the analysis of Arnal et al. (2011), running ANOVAs for GFP data were computed for each sample point in a 0 to 500 ms interval following auditory syllable onset. To account for multiple testing, a time stability criterion of at least 20 ms was applied (Guthrie and Buchwald 1991; Picton et al. 2000). Cortical sources of main effects or interactions in the analysis were visualized by a local autoregressive average (LAURA) analysis using Cartool (Brunet et al. 2011). To this end, grand average EEG data were fitted into a realistic head model comprising 4,024 nodes. Source space was restricted to gray matter of the Montreal Neurological Institute (MNI) average brain, divided into a regular source grid with 6-mm spacing. For each group and predictability level the source solutions of the congruent condition were subtracted from the incongruent condition.
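The two core steps of this analysis, computing GFP and applying a time stability criterion to a running test, can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' code: GFP is taken as the spatial standard deviation across electrodes (a common definition), the per-sample threshold of α = 0.05 is an assumption, the 500-Hz sampling rate follows the offline downsampling described above, and all function names are hypothetical.

```python
import math


def global_field_power(sample):
    """GFP of one time sample: spatial standard deviation across electrodes."""
    mean = sum(sample) / len(sample)
    return math.sqrt(sum((v - mean) ** 2 for v in sample) / len(sample))


def stable_windows(p_values, sfreq_hz=500.0, alpha=0.05, min_ms=20.0):
    """Find runs of consecutive significant samples that last at least min_ms.

    Returns a list of (start, stop) sample indices, with stop exclusive.
    """
    min_samples = math.ceil(min_ms * sfreq_hz / 1000.0)
    windows, start = [], None
    # A trailing sentinel of 1.0 closes a run that reaches the last sample.
    for i, p in enumerate(list(p_values) + [1.0]):
        if p < alpha and start is None:
            start = i
        elif p >= alpha and start is not None:
            if i - start >= min_samples:
                windows.append((start, i))
            start = None
    return windows


if __name__ == "__main__":
    # Ten significant samples (20 ms at 500 Hz) pass; four samples do not.
    p_vals = [0.5] * 3 + [0.01] * 10 + [0.5] * 2 + [0.01] * 4
    print(stable_windows(p_vals))
```

In this sketch the p-values would come from an ANOVA computed separately at every sample on the incongruent-minus-congruent differences; only runs that survive the duration criterion are reported as effects.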

To examine effects in neural oscillations, we followed the experimental protocol of our recent audiovisual speech processing study (Roa Romero et al. 2015). Oscillatory power (4–30 Hz) was analyzed for a region of interest (ROI) encompassing 10 fronto-central electrodes. This fronto-central ROI was selected in agreement with previous studies, which have shown that activity at fronto-central electrodes reflects auditory processing in specific auditory paradigms (Giard et al. 1994; Hermann et al. 2014; Potts et al. 1998). Running ANOVAs with the same factors as in the GFP analysis were computed for each sample point and frequency in a 0 to 1,000 ms interval following auditory syllable onset (Schurger et al. 2008). Because of low temporal resolution of the time-frequency transformation, a time stability criterion of at least 100 ms was applied. For illustrative purpose, we calculated the sources of this scalp-level effect. First we applied a fast Fourier transform to the significant time-frequency windows obtained in the ANOVA (i.e., 7.5 ± 2 Hz). Then, source estimations of oscillatory responses were computed for the center of this frequency window (7.5 Hz). For each participant oscillatory power was projected into source space with a dynamic imaging of coherent sources (DICS) algorithm (Gross et al. 2001). To this end, all conditions were combined and sensor-level cross-spectral density was calculated for an interval between 100 and 500 ms after auditory stimulus onset and a baseline interval between −500 and −100 ms prior to auditory syllable onset. A common spatial filter was computed on the combined data, and boundary element models based on individual magnetic resonance images (MRIs) were calculated. Each condition was projected into source space through the common filter. Finally, source activity was interpolated onto individual anatomical MRIs, normalized to the MNI brain and averaged over subjects. 
Pearson correlations were computed between PANSS scores, antipsychotic medication, and EEG data in SZP. To examine the influence of antipsychotic medication, medication dosage was converted to chlorpromazine equivalent level (Gardner et al. 2010) and entered as covariate into partial correlation analyses. For the correlation analyses Bonferroni correction was applied to control for multiple comparisons (Bonferroni-corrected α = 0.05/5 = 0.01).
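A partial correlation that controls for a single covariate, such as chlorpromazine-equivalent dosage, can be derived from three pairwise Pearson correlations. The Python sketch below illustrates this together with the Bonferroni-corrected threshold; it is a hedged illustration with hypothetical function names and toy data, not the statistical software used in the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    rxy, rxz, ryz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests


if __name__ == "__main__":
    # Toy data: z is uncorrelated with x and y, so partial r equals plain r.
    x, y, z = [1, 2, 3, 4], [1, 3, 2, 4], [1, -1, -1, 1]
    print(partial_r(x, y, z))
    # Five PANSS factors give the corrected threshold stated in the text.
    print(bonferroni_alpha(0.05, 5))
```

With five PANSS factors the corrected threshold is 0.01, so a correlation with an uncorrected P value between 0.01 and 0.05 would count only as a trend.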

RESULTS

Behavioral results of visual catch trials.

The analysis of RTs to visual-only catch trials revealed no significant differences between SZP (1,020 ms) and HC (1,239 ms) [t(32) = −1.387, P = 0.175]. The HRs to visual-only catch trials (average across conditions: SZP = 96.54%, HC = 98.82%) were at ceiling level. Finally, HRs for visual catch trials did not significantly differ between groups (U = 94.5, z = −1.81, P = 0.07), indicating that both groups similarly attended to visual inputs.

Behavioral results of audiovisual catch trials.

The ANOVA for RTs to audiovisual catch trials revealed a significant main effect of Group [F(1,32) = 11.24, P = 0.002] due to faster responses in HC compared with SZP. Furthermore, there was a significant main effect of Condition [F(1,32) = 22.40, P < 0.001], indicating faster RTs for congruent compared with incongruent syllables [t(32) = −4.80, P < 0.001].

The HRs to audiovisual catch trials (average across conditions: SZP = 93.48%, HC = 96.90%) were at ceiling level. The analysis of HRs to audiovisual trials revealed significantly lower HRs in SZP compared with HC for congruent stimuli (U = 53.5, z = −3.29, P = 0.001). No significant group difference was found for incongruent stimuli (U = 134.5, z = −0.35, P = 0.726). Within the HC group HRs were larger in congruent compared with incongruent audiovisual trials (z = −2.878, P = 0.004). No differences in HRs between congruent and incongruent trials were found in the SZP (z = −0.535, P = 0.593).

Global field power.

The running ANOVA with the factors Group (SZP vs. HC) and Condition (low vs. high predictability) revealed a significant main effect of Condition between 206 and 250 ms after auditory onset [F(1,32) = 17.25, P < 0.001; Fig. 2]. This effect, which was observed in both groups, was due to larger GFP for high (average across groups = 0.70 μV²)- compared with low (0.65 μV²)-predictive syllables. Additionally, post hoc tests between high- and low-predictive syllables for congruent and incongruent trials were computed within each group. The tests revealed higher GFP in incongruent trials for high (0.70 μV²)- compared with low (0.58 μV²)-predictive syllables in SZP [t(1,16) = 3.52, P = 0.003]. Similarly, matched HC showed higher GFP in response to incongruent high (0.41 μV²)- compared with incongruent low (0.33 μV²)-predictive syllables [t(1,16) = 2.65, P = 0.018]. However, after correction for multiple comparisons this difference did not remain significant (Bonferroni-corrected threshold P < 0.00625). For congruent trials the results revealed lower GFP for high (0.57 μV²)- compared with low (0.66 μV²)-predictive syllables in SZP [t(1,16) = 3.52, P = 0.030]. Again, after correction for multiple comparisons this difference did not remain significant (Bonferroni-corrected threshold P < 0.00625). Finally, matched HC showed no significant differences for GFP in response to congruent high (0.37 μV²)- compared with low (0.33 μV²)-predictive syllables [t(1,16) = 1.32, P = 0.204].
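As a worked illustration of the PE contrast, the short Python sketch below subtracts congruent from incongruent GFP using only the group means reported in the paragraph above. It is a descriptive check on rounded means with hypothetical variable names; the statistical analysis itself operated on per-participant differences, which are not reproduced here.

```python
# Group-mean GFP in the 206-250 ms window, copied from the reported values.
gfp = {
    "SZP": {"high": {"incongruent": 0.70, "congruent": 0.57},
            "low": {"incongruent": 0.58, "congruent": 0.66}},
    "HC": {"high": {"incongruent": 0.41, "congruent": 0.37},
           "low": {"incongruent": 0.33, "congruent": 0.33}},
}


def pe_difference(cell):
    """Incongruent minus congruent GFP, rounded to the reported precision."""
    return round(cell["incongruent"] - cell["congruent"], 2)


# PE contrast per group and predictability level.
pe = {group: {level: pe_difference(cell) for level, cell in levels.items()}
      for group, levels in gfp.items()}

if __name__ == "__main__":
    for group, levels in pe.items():
        print(group, levels)
```

On these rounded means the difference is 0.13 (high) and −0.08 (low) in SZP, and 0.04 (high) and 0.00 (low) in HC, so the contrast is larger for high- than for low-predictive syllables in both groups.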

Fig. 2.

Global field power (GFP) and event-related potentials (ERPs) at fronto-central scalp electrodes. A: traces of GFP in HC (left) and SZP (right) for congruent (red lines) and incongruent (blue lines) trials. Dashed lines represent low- and solid lines represent high-predictive trials. Time 0 denotes the onset of the auditory syllable. The analysis of GFP revealed a significant condition effect in the 206–250 ms interval (highlighted in gray). B: traces of ERPs in HC (left) and SZP (right) for congruent (red lines) and incongruent (blue lines) trials. Dashed lines represent low- and solid lines high-predictive trials. ERPs revealed a typical N1-P2 amplitude pattern to the auditory syllable onset, with smaller amplitudes in SZP compared with HC.

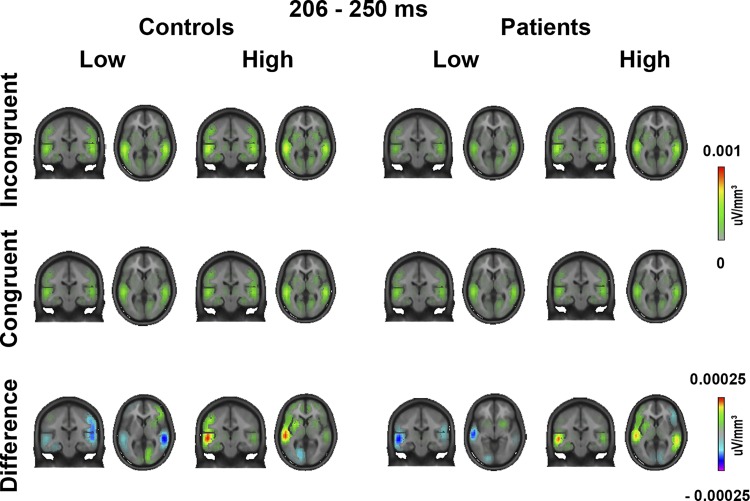

ERP topographies for the time window of the significant GFP main effect indicate a stronger fronto-central activation during high- compared with low-predictive trials (Fig. 3). LAURA analysis identified the sources of this effect in a region encompassing the auditory cortex (Fig. 4). No other main effects of Group [F(1,32), all F values < 2.05, all P values > 0.16] or interactions [F(1,32), all F values < 3.34, all P values > 0.08] were found at the same latency.

Fig. 3.

Topographies of event-related potentials to the 4 experimental stimulus types, as well as for the difference between incongruent and congruent trials during the 206–250 ms interval.

Fig. 4.

Local autoregressive average source estimation of ERPs for the 4 experimental stimulus types and the difference between incongruent and congruent trials during the 206–250 ms interval. Figure shows similar incongruence detection in SZP and HC that was localized in the auditory cortex.

Oscillatory activity.

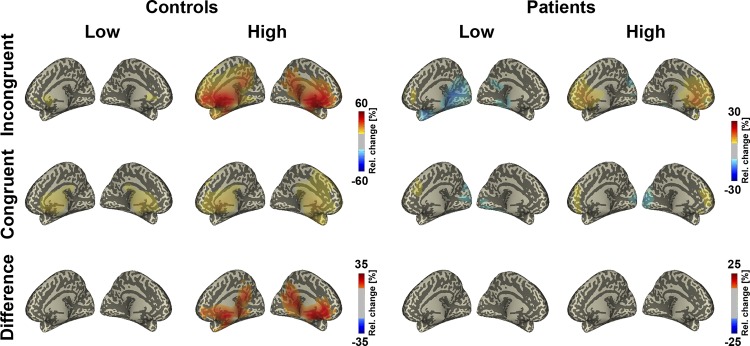

The running ANOVA with the factors Group (SZP vs. HC) and Condition (low vs. high predictability) revealed a significant main effect of Group between 230 and 370 ms in the theta band, centered at 7.5 Hz [F(1,32) = 7.66, P = 0.015]. Across conditions, theta-band power was stronger in HC compared with SZP. The ANOVA revealed an additional main effect of Condition between 210 and 380 ms, centered at 7.5 Hz [F(1,32) = 13.73, P = 0.008]. This effect was due to a stronger theta-band power in high- compared with low-predictive trials. Most relevant, the ANOVA revealed a Group × Condition interaction between 230 and 370 ms, centered at 7.5 Hz [F(1,32) = 7.42, P = 0.011; Fig. 5 and Fig. 6]. Follow-up analyses, which were conducted separately for each group, revealed stronger theta-band power in high- compared with low-predictive trials, particularly in HC [t(16) = −4.06, P < 0.001]. No such effect was found in SZP [t(16) = −0.67, P = 0.507]. Further analyses revealed that frontal theta-band power was larger in HC compared with SZP in high-predictive [t(32) = 3.21, P = 0.003] but not in low-predictive [t(32) = 0.26, P = 0.796] trials. Within SZP the comparison between incongruent low- and incongruent high-predictive trials revealed significant differences [t(16) = −3.50, P = 0.003]. In contrast, the comparison between congruent low- and congruent high-predictive trials showed no significant differences [t(16) = −1.24, P = 0.233]. Similarly, within matched HC, the analysis revealed significant differences between incongruent low- and incongruent high-predictive trials [t(16) = −5.10, P < 0.001]. For congruent trials the comparison between low- and high-predictive trials revealed no significant differences [t(16) = −1.06, P = 0.307].

Fig. 5.

Time-frequency responses and topographies of oscillatory power. A: time-frequency responses of 4- to 30-Hz power to the 4 experimental stimulus types and the difference between incongruent and congruent trials at fronto-central scalp electrodes. Time 0 denotes the onset of the auditory syllable. The differences between incongruent and congruent trials (bottom) revealed enhanced theta-band power (i.e., 7 Hz) around 300 ms, particularly in the control group. B: topographies of fronto-central theta-band power. Bold dots at bottom (differences) denote the electrode group in which a significant Group × Condition interaction was found in the 230–370 ms interval. Theta-band power was enhanced in the control group, especially in the incongruent high condition.

Fig. 6.

Traces of fronto-central theta-band power: traces of theta-band power in HC (left) and SZP (right) for congruent (red lines) and incongruent (blue lines) trials at fronto-central electrodes. Dashed lines represent low- and solid lines high-predictive trials. The time course of theta-band power was similar in HC and SZP, while the amplitude was substantially reduced in SZP, most strongly in the incongruent high condition.

Additionally, we tested whether baseline theta-band power differed between SZP and matched HC. The running ANOVA with the factors Group and Condition revealed no significant main effect of Group on baseline (−500 to −100 ms; 7–8 Hz) theta-band power (all P values > 0.522). Moreover, no main effects of Condition (all P values > 0.185) or Group × Condition interactions (all P values > 0.103) were found in baseline theta-band power.

Finally, DICS source analysis revealed that the theta-band power effects are localized in frontal cortex, encompassing anterior cingulate cortex (ACC) and medial frontal cortex (MFC) (Fig. 7). Frontal theta-band power between high- and low-predictive trials differed in HC but not in SZP. Figure 8 provides an overview of the effects obtained in GFP and oscillatory responses.

Fig. 7.

DICS source analysis of oscillatory power in the theta band. The source of theta-band power was localized in the medial frontal gyrus and anterior cingulate cortex. In HC crossmodal PE processing was found in frontal areas, particularly in the high-predictive condition. No such effect was observed in SZP.

Fig. 8.

Summary of main results. Amplitudes of GFP (left) and 7.5-Hz power (right) at fronto-central electrodes. Amplitudes of GFP are depicted for the interval of 206–250 ms. GFP differences (incongruent minus congruent trials) were positive in high- and negative in low-predictive trials. The pattern of effects did not differ between groups (bottom left). Amplitudes of 7.5-Hz power are depicted for the 230–370 ms interval. Power differences between incongruent and congruent trials were stronger in high- compared with low-predictive trials, particularly in the HC group (bottom right).

Relationships between EEG data, medication, and clinical symptoms.

The role of the frontal theta-band power deficit for the SZ psychopathology was investigated by calculating partial correlations between the difference (i.e., incongruent minus congruent) of frontal theta-band power in the high-predictive condition, medication dosage, and PANSS scores. We used the average frontal theta-band power (7.5 Hz), which was calculated across the 10 electrodes of the central ROI. Medication dosage did not correlate with frontal theta-band power (P > 0.33). Importantly, partial correlation analyses between the theta-band power difference and PANSS scores revealed a trend toward a negative relationship with the factor Excitement (r = −0.593, P = 0.015). No other significant relationships were found (all P values > 0.21).
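A partial correlation of this kind can be sketched as follows. The sketch assumes that medication dosage is the single covariate partialled out of the relationship between the theta-band power difference and a PANSS factor score, which the text does not state explicitly. The function names are hypothetical and the data are simulated, so the output has no bearing on the reported values.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x, y, z):
    """First-order partial correlation of x and y, controlling for z:
    r_xy.z = (r_xy - r_xz * r_yz) / sqrt((1 - r_xz^2) * (1 - r_yz^2))
    """
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def t_from_partial_r(r, n, n_covariates=1):
    """t statistic and degrees of freedom for a partial correlation."""
    df = n - 2 - n_covariates
    return r * math.sqrt(df / (1 - r ** 2)), df


# Simulated values for 17 patients (illustrative only, not the study data).
rng = random.Random(1)
medication = [rng.gauss(300.0, 100.0) for _ in range(17)]    # assumed dosage
theta_diff = [rng.gauss(0.0, 1.0) for _ in range(17)]        # incongruent minus congruent
excitement = [8.0 - 0.5 * t + rng.gauss(0.0, 1.0) for t in theta_diff]

r = partial_r(theta_diff, excitement, medication)
t_value, df = t_from_partial_r(r, len(theta_diff))
print(f"partial r = {r:.3f}, t({df}) = {t_value:.2f}")
```

With one covariate and 17 patients, the test of the partial correlation has 14 degrees of freedom.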

DISCUSSION

In this study we examined the neural signatures of crossmodal PE processing in SZP and HC. In both groups we observed effects of incongruence detection in GFP, which were localized in the auditory cortex. Moreover, we obtained group differences in frontal theta-band power. Theta-band power was larger for PE processing of high- compared with low-predictive audiovisual syllables in HC, whereas no such effect was found in SZP.

Audiovisual stimulus congruence modulates behavioral performance in patients and control subjects.

RTs to audiovisual target syllables were longer in SZP than in HC. This is in line with previous audiovisual processing studies using simple (e.g., beeps and flashes) and complex (e.g., natural speech) audiovisual stimuli, showing prolonged RTs (Williams et al. 2010) and decreased HRs (de Gelder et al. 2005; de Jong et al. 2009) in SZP, indicating a general slowing in this patient group. This is in accordance with the observation of prolonged RTs in SZP compared with HC during motor-planning tasks (Grootens et al. 2009; Jogems-Kosterman et al. 2001). Additionally, we observed longer RTs to incongruent compared with congruent audiovisual targets in both groups. Previous studies in healthy participants have also reported facilitation effects for congruent compared with incongruent audiovisual speech stimuli (Grant and Seitz 2000). Notably, only HC showed a higher HR to congruent compared with incongruent audiovisual targets. The HRs in SZP did not differ between conditions. This indicates that stimulus incongruence had a stronger impact on behavioral performance in HC than in SZP, which is in line with the previous finding that SZP do not benefit from congruent audiovisual information in audiovisual speech perception (Martin et al. 2013). Finally, the HR in visual catch trials revealed no differences between SZP and HC. Consequently, we assume that both groups showed similar visual attention in the present task, which argues against a general sensory processing deficit in SZP.

Intact audiovisual incongruence detection in auditory cortex of schizophrenia patients.

The analysis of GFP revealed differences between high- and low-predictive audiovisual syllables at 206–250 ms after auditory onset. In both groups GFP amplitude differences between incongruent and congruent trials were larger in high- compared with low-predictive stimuli. Source estimation revealed that the effect of visual predictability on audiovisual speech processing is localized in the auditory cortex. Recent functional MRI (fMRI) research has suggested that PEs in primary sensory areas relate to temporal predictions (Lee and Noppeney 2011, 2014). With respect to predictive coding, Lee and Noppeney hypothesized that the brain predicts the temporal evolution of audiovisual inputs and their temporal relationship. In addition, the authors predicted that the place of PE generation depends on the directionality of the audiovisual asynchrony. In our study, we used natural speech stimuli where the visual precedes the auditory signal. Hence, the leading visual signal generates the temporal prediction and the delayed auditory input is expected to generate the PE signal in the auditory cortex when the prediction is invalid. Similar to our effects in the auditory cortex, Lee and Noppeney (2014) reported that visual leading asynchrony elicits a PE signal in auditory cortices. According to the predictive coding framework, our results suggest that natural audiovisual speech stimuli evolve based on temporal regularities and enable crossmodal temporal predictions, which first become manifest in the auditory cortex. Interestingly, the pattern of effects was similar in SZP and HC. More specifically, the strength of visually induced prediction influences the processing of audiovisual speech in auditory cortex similarly in both groups. This observation is in contrast with a previous study showing differences in early processing of congruent and incongruent audiovisual speech in HC and SZP (Magnée et al. 2009). However, in this previous study the strength of the visual prediction upon the auditory signal was not systematically varied. Similar to the present GFP effect, Stekelenburg et al. (2013) found no differences in ERPs to congruent and incongruent audiovisual speech around 200 ms after auditory onset between HC and SZP. Hence, the present finding indicates an intact detection of audiovisual stimulus incongruence in the auditory cortex of SZP.

Previously, Klucharev et al. (2003) examined late phonetic audiovisual interactions in healthy participants and found larger ERPs for incongruent compared with congruent audiovisual speech stimuli at 235–325 ms. Further studies revealed that sources of this late phonetic audiovisual interaction are localized in the auditory cortex (Möttönen et al. 2002, 2004). The present findings also correspond with evidence from an ERP study on audiovisual speech processing in SZP and HC (Stekelenburg et al. 2013). This study found no group differences in P2 amplitudes in response to congruent and incongruent audiovisual speech stimuli.

Taken together, the present findings suggest that high- and low-predictive visual speech information influences stimulus processing in the auditory cortex similarly in SZP and HC. However, the degree of crossmodal prediction induced by visual speech information, i.e., high vs. low predictive, differentially modulates audiovisual processing in the auditory cortex. High-predictive visual information leads to stronger auditory evoked responses to incongruent stimuli than low-predictive visual information, indicating that predictive visual information facilitates audiovisual mismatch detection. The present study suggests that this process is widely intact in SZP.

Theta oscillations reflect impaired crossmodal prediction error processing in schizophrenia.

Frontal theta-band power between 230 ms and 370 ms differed significantly between SZP and HC. We observed stronger theta-band power in high- compared with low-predictive trials in HC, whereas no such effect was found in SZP. Specifically, SZP lacked an enhanced frontal PE processing in the high-predictive condition. The effect in HC was source localized in the frontal cortex, encompassing MFC and ACC. This observation is interesting considering the results of previous fMRI studies, which focused on the anatomical structures underlying crossmodal PE processing in healthy participants (Noppeney et al. 2007, 2010). Noppeney et al. (2007) proposed that crossmodal PE processing in audiovisual speech is expressed at multiple hierarchical levels, including the STG at a first phonological level, the angular gyrus at a semantic level, and the MFC reflecting a higher conceptual level. Similar to Noppeney et al. (2007), our study shows that the audiovisual PE is found at different hierarchical levels, e.g., in the auditory cortex, presumably reflecting a first phonological level, and later in the frontal cortex, likely reflecting a higher conceptual level. In line with Noppeney et al. (2007), we suggest that the PE signal propagates through multiple hierarchical levels during various consecutive processing stages.

Frontal areas including ACC and MFC play an important role in top-down control of attention, which is presumably reflected in modulations of theta-band oscillations (Cavanagh and Frank 2014). Frontal theta-band oscillations have been related to cognitive control induced by conflicting and erroneous information that required behavioral adaptation (Cohen and van Gaal 2013; Hanslmayr et al. 2008). In this regard, it is important to differentiate the concepts of cognitive control and PE. The former relates to the inhibition of a prepotent behavioral response to adapt behavior. The latter process refers to the internal update of sensory prediction in order to optimize upcoming PE but does not necessarily involve behavioral adaptation. Hence, the absence of enhanced frontal theta-band effects during crossmodal PE processing in the present study points toward altered top-down processing, i.e., insufficient update and resolution of violated predictions, in the frontal cortex of SZP.

We also observed a trend-level negative relationship between the PE-induced frontal theta-band enhancements and the SZ psychopathology, a finding that requires validation in a larger sample. Nevertheless, the present data indicate a link between frontal theta-band oscillation deficits and SZ psychopathology. To date, the results of EEG studies with respect to correlations between positive symptoms and EEG measures are mixed. As we recently discussed in a study relating sensory gating in gamma-band power to positive symptoms (Keil et al. 2016), the mixed results might be due to the various different approaches that have been applied in the analysis of EEG data. It could be that less temporal stability in stimulus-related EEG signals in SZP results in noisier ERP measures. The power of induced oscillatory activity is less affected by temporal variability and might thus be better suited to relate EEG activity to clinical symptoms.

Numerous fMRI studies in SZP have found relationships between altered frontal cortex activity and impairments in executive functions (Goghari et al. 2010). With emphasis on audiovisual speech processing, visual lip movements not only provide information about the content, i.e., “what,” but also about the onset, i.e., “when,” of the upcoming auditory input (Arnal and Giraud 2012). A recent study compared the McGurk fusion rate in SZP and HC at varying onset asynchronies between visual and auditory inputs (Martin et al. 2013). The study showed that SZP compared with HC have a larger temporal window in which asynchronous audiovisual speech stimuli are perceived as synchronous. This is in agreement with the finding of enhanced simultaneity perception rates in SZP obtained in simultaneity judgment tasks using basic, i.e., semantically meaningless, unisensory (Giersch et al. 2009, 2013) and multisensory (Foucher et al. 2007) stimuli. Thus the lack of frontal theta-band power enhancement in the present study might reflect, at least in part, temporal processing deficits at later processing stages in SZP. The mere detection of audiovisual incongruence, as reflected in auditory cortex activity, seems to be much less affected. Across conditions frontal theta-band power was reduced in SZP. Recently, Popov et al. (2015) found reduced frontal theta-band oscillations in SZP during the processing of color-word incongruence. Another study revealed diminished frontal theta-band power in SZP during the processing of visual motion and top-down perceptual switching (Mathes et al. 2016). This suggests that altered theta-band oscillations play an important role in top-down processing deficits in SZP.

Interestingly, an MEG study in healthy participants revealed that violations of crossmodal predictions were expressed in stronger coupling of beta-band (14–15 Hz) and gamma-band (60–80 Hz) oscillations (Arnal et al. 2011). In contrast, in this study we obtained oscillatory responses in the EEG and did not find clear modulations in gamma-band oscillations. MEG compared with EEG has a higher sensitivity in the measurement of high-frequency oscillations (Muthukumaraswamy and Singh 2013). Additionally, numerous studies have revealed reduced gamma-band oscillations in SZP (Gallinat et al. 2004; Leicht et al. 2010; Uhlhaas and Singer 2010). These factors might have contributed to the absence of gamma-band modulations in the present study. It would be interesting to use MEG to examine gamma-band oscillations during crossmodal PE processing in SZP.

Summary and conclusions.

The present study demonstrates a crossmodal PE processing deficit in SZP. In auditory areas we observed similar processing of audiovisual stimulus incongruence in SZP and HC. The increase of GFP in high- compared with low-predictive syllables suggests that the crossmodal prediction strength modulates audiovisual incongruence detection in the auditory cortex. The key novel finding is that SZP lack a crossmodal PE processing-related enhancement of frontal theta-band oscillations. The reduced frontal theta-band power presumably reflects a top-down crossmodal processing deficit. Aberrant frontal theta-band oscillations are conducive to dysfunctional crossmodal PE processing in SZ and might contribute to the recently proposed multisensory processing deficits in this patient group.

GRANTS

This work was supported by the European Union (ERC-2010-StG-20091209 to D. Senkowski) and the German Research Foundation (SE1859/4-1 to D. Senkowski and KE1828/2-1 to J. Keil).

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Y.R.R., J.K., and D.S. conception and design of research; Y.R.R. and J.B. performed experiments; Y.R.R. and J.K. analyzed data; Y.R.R., J.K., and D.S. interpreted results of experiments; Y.R.R. prepared figures; Y.R.R., J.K., and D.S. drafted manuscript; Y.R.R., J.K., and D.S. edited and revised manuscript; Y.R.R., J.B., J.G., and D.S. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Markus Koch, Paulina Schulz, and Christiane Montag for their assistance in data collection.

REFERENCES

- Arnal LH, Giraud AL. Cortical oscillations and sensory predictions. Trends Cogn Sci 16: 390–398, 2012.

- Arnal LH, Wyart V, Giraud AL. Transitions in neural oscillations reflect prediction errors generated in audiovisual speech. Nat Neurosci 14: 797–801, 2011.

- Van Atteveldt N, Murray M, Thut G, Schroeder CE. Multisensory integration: flexible use of general operations. Neuron 81: 1240–1253, 2014.

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997.

- Brunet D, Murray MM, Michel CM. Spatiotemporal analysis of multichannel EEG: CARTOOL. Comput Intell Neurosci 2011: 813870, 2011.

- Cavanagh JF, Frank MJ. Frontal theta as a mechanism for cognitive control. Trends Cogn Sci 18: 414–421, 2014.

- Cohen MX, van Gaal S. Dynamic interactions between large-scale brain networks predict behavioral adaptation after perceptual errors. Cereb Cortex 23: 1061–1072, 2013.

- Engel AK, Fries P. Beta-band oscillations–signalling the status quo? Curr Opin Neurobiol 20: 156–65, 2010.

- Esser SK, Huber R, Massimini M, Peterson MJ, Ferrarelli F, Tononi G. A direct demonstration of cortical LTP in humans: a combined TMS/EEG study. Brain Res Bull 69: 86–94, 2006.

- Fletcher PC, Frith CD. Perceiving is believing: a Bayesian approach to explaining the positive symptoms of schizophrenia. Nat Rev Neurosci 10: 48–58, 2009.

- Ford JM, Mathalon DH. Anticipating the future: automatic prediction failures in schizophrenia. Int J Psychophysiol 83: 232–239, 2012.

- Ford JM, Palzes VA, Roach BJ, Mathalon DH. Did I do that? Abnormal predictive processes in schizophrenia when button pressing to deliver a tone. Schizophr Bull 40: 804–812, 2014.

- Foucher JR, Lacambre M, Pham B, Giersch A, Elliott MA. Low time resolution in schizophrenia. Lengthened windows of simultaneity for visual, auditory and bimodal stimuli. Schizophr Res 97: 118–127, 2007.

- Gallinat J, Winterer G, Herrmann CS, Senkowski D. Reduced oscillatory gamma-band responses in unmedicated schizophrenic patients indicate impaired frontal network processing. Clin Neurophysiol 115: 1863–1874, 2004.

- Gardner DM, Murphy AL, O'Donnell H, Centorrino F, Baldessarini RJ. International consensus study of antipsychotic dosing. Am J Psychiatry 167: 686–693, 2010.

- de Gelder B, Vroomen J, de Jong SJ, Masthoff ED, Trompenaars FJ, Hodiamont P. Multisensory integration of emotional faces and voices in schizophrenics. Schizophr Res 72: 195–203, 2005.

- Giard MH, Perrin F, Echallier JF, Thévenet M, Froment JC, Pernier J. Dissociation of temporal and frontal components in the human auditory N1 wave: a scalp current density and dipole model analysis. Electroencephalogr Clin Neurophysiol 92: 238–252, 1994.

- Giersch A, Lalanne L, van Assche M, Elliott MA. On disturbed time continuity in schizophrenia: an elementary impairment in visual perception? Front Psychol 4: 281, 2013.

- Giersch A, Lalanne L, Corves C, Seubert J, Shi Z, Foucher J, Elliott MA. Extended visual simultaneity thresholds in patients with schizophrenia. Schizophr Bull 35: 816–825, 2009.

- Goghari V, Sponheim S, MacDonald W. The functional neuroanatomy of symptom dimensions in schizophrenia: a qualitative and quantitative review of a persistent question. Neurosci Biobehav Rev 34: 468–486, 2010.

- Grant KW, Seitz PF. The use of visual speech cues for improving auditory detection of spoken sentences. J Acoust Soc Am 108: 1197–1208, 2000.

- Grootens KP, Vermeeren L, Verkes RJ, Buitelaar JK, Sabbe BG, van Veelen N, Kahn RS, Hulstijn W. Psychomotor planning is deficient in recent-onset schizophrenia. Schizophr Res 107: 294–302, 2009.

- Gross J, Kujala J, Hämäläinen M, Timmermann L, Schnitzler A, Salmelin R. Dynamic imaging of coherent sources: studying neural interactions in the human brain. Proc Natl Acad Sci USA 98: 694–699, 2001.

- Grützner C, Wibral M, Sun L, Rivolta D, Singer W, Maurer K, Uhlhaas PJ. Deficits in high- (>60 Hz) gamma-band oscillations during visual processing in schizophrenia. Front Hum Neurosci 7: 88, 2013.

- Guthrie D, Buchwald JS. Significance testing of difference potentials. Psychophysiology 28: 240–244, 1991.

- Hanslmayr S, Pastötter B, Bäuml KH, Gruber S, Wimber M, Klimesch W. The electrophysiological dynamics of interference during the Stroop task. J Cogn Neurosci 20: 215–225, 2008.

- Hermann B, Schlichting N, Obleser J. Dynamic range adaptation to spectral stimulus statistics in human auditory cortex. J Neurosci 34: 327–331, 2014.

- Jogems-Kosterman BJ, Zitman FG, van Hoof JJ, Hulstijn W. Psychomotor slowing and planning deficits in schizophrenia. Schizophr Res 48: 317–333, 2001.

- Johnson SC, Lowery N, Kohler C, Turetsky BI. Global-local visual processing in schizophrenia: evidence for an early visual processing deficit. Biol Psychiatry 58: 937–946, 2005.

- de Jong JJ, Hodiamont PP, Van den Stock J, de Gelder B. Audiovisual emotion recognition in schizophrenia: reduced integration of facial and vocal affect. Schizophr Res 107: 286–293, 2009.

- Kay SR, Fiszbein A, Opler LA. The Positive and Negative Syndrome Scale (PANSS) for Schizophrenia. Schizophr Bull 13: 261–276, 1987.

- Keefe RS, Goldberg TE, Harvey PD, Gold JM, Poe MP, Coughenour L. The Brief Assessment of Cognition in Schizophrenia: reliability, sensitivity, and comparison with a standard neurocognitive battery. Schizophr Res 68: 283–297, 2004.

- Keil J, Roa Romero Y, Balz J, Henjes M, Senkowski D. Positive and negative symptoms in schizophrenia relate to distinct oscillatory signatures of sensory gating. Front Hum Neurosci 10: 104, 2016.

- Kissler J, Herbert C. Emotion, Etmnooi, or Emitoon? Faster lexical access to emotional than to neutral words during reading. Biol Psychol 92: 464–479, 2013.

- Kissler J, Koessler S. Emotionally positive stimuli facilitate lexical decisions—an ERP study. Biol Psychol 86: 254–264, 2011.

- Klucharev V, Möttönen R, Sams M. Electrophysiological indicators of phonetic and non-phonetic multisensory interactions during audiovisual speech perception. Cogn Brain Res 18: 65–75, 2003.

- Lange J, Christian N, Schnitzler A. Audio-visual congruency alters power and coherence of oscillatory activity within and between cortical areas. Neuroimage 79: 111–120, 2013.

- Lee H, Noppeney U. Long-term music training tunes how the brain temporally binds signals from multiple senses. Proc Natl Acad Sci USA 108: 1441–1450, 2011.

- Lee H, Noppeney U. Temporal prediction errors in visual and auditory cortices. Curr Biol 24: R309–R310, 2014.

- Lee TW, Girolami M, Sejnowski TJ. Independent component analysis using an extended infomax algorithm for mixed subgaussian and supergaussian sources. Neural Comput 11: 417–41, 1999.

- Leicht G, Kirsch V, Giegling I, Karch S, Hantschk I, Möller H, Pogarell O, Hegerl U, Rujescu D, Mulert C. Reduced early auditory evoked gamma-band response in patients with schizophrenia. Biol Psychiatry 67: 224–231, 2010.

- Magnée MJ, Oranje B, van Engeland H, Kahn RS, Kemner C. Cross-sensory gating in schizophrenia and autism spectrum disorder: EEG evidence for impaired brain connectivity? Neuropsychologia 47: 1728–1732, 2009.

- Martin B, Giersch A, Huron C, van Wassenhove V. Temporal event structure and timing in schizophrenia: Preserved binding in a longer “now.” Neuropsychologia 51: 358–371, 2013.

- Mathes B, Schmiedt-Fehr C, Kedilaya S, Strüber D, Brand A, Basar-Eroglu C. Theta response in schizophrenia is indifferent to perceptual illusion. Clin Neurophysiol 127: 419–430, 2016.

- Möttönen R, Krause CM, Tiippana K, Sams M. Processing of changes in visual speech in the human auditory cortex. Brain Res Cogn Brain Res 13: 417–425, 2002.

- Möttönen R, Schürmann M, Sams M. Time course of multisensory interactions during audiovisual speech perception in humans: a magnetoencephalographic study. Neurosci Lett 363: 112–115, 2004.

- Muthukumaraswamy SD, Singh KD. Visual gamma oscillations: the effects of stimulus type, visual field coverage and stimulus motion on MEG and EEG recordings. Neuroimage 69: 223–230, 2013.

- Noppeney U, Josephs O, Hocking J, Price CJ, Friston KJ. The effect of prior visual information on recognition of speech and sounds. Cereb Cortex 18: 598–609, 2007.

- Noppeney U, Ostwald D, Werner S. Perceptual decisions formed by accumulation of audiovisual evidence in prefrontal cortex. J Neurosci 30: 7434–7446, 2010.

- Picton TW, Bentin S, Berg P, Donchin E, Hillyard SA, Johnson R, Miller GA, Ritter W, Ruchkin DS, Rugg MD, Taylor MJ. Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology 37: 127–152, 2000.

- Popov T, Rockstroh B, Popova P, Carolus A, Miller G. Dynamics of alpha oscillations elucidate facial affect recognition in schizophrenia. Cogn Affect Behav Neurosci 14: 364–377, 2014.

- Popov T, Wienbruch C, Meissner S, Miller GA, Rockstroh B. A mechanism of deficient interregional neural communication in schizophrenia. Psychophysiology 52: 648–656, 2015.

- Potts GF, Dien J, Hartry-Speiser AL, McDougal LM, Tucker DM. Dense sensor array topography of the event-related potential to task-relevant auditory stimuli. Electroencephalogr Clin Neurophysiol 106: 444–456, 1998.

- Rao RP, Ballard DH. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat Neurosci 2: 79–87, 1999.

- Roa Romero Y, Senkowski D, Keil J. Early and late beta-band power reflect audiovisual perception in the McGurk illusion. J Neurophysiol 113: 2342–2350, 2015.

- Ross LA, Saint-Amour D, Leavitt VM, Molholm S, Javitt DC, Foxe JJ. Impaired multisensory processing in schizophrenia: deficits in the visual enhancement of speech comprehension under noisy environmental conditions. Schizophr Res 97: 173–183, 2007.

- Schneider TR, Debener S, Oostenveld R, Engel AK. Enhanced EEG gamma-band activity reflects multisensory semantic matching in visual-to-auditory object priming. Neuroimage 42: 1244–1254, 2008.

- Schneider TR, Lorenz S, Senkowski D, Engel AK. Gamma-band activity as a signature for cross-modal priming of auditory object recognition by active haptic exploration. J Neurosci 31: 2502–2510, 2011.

- Schurger A, Cowey A, Cohen JD, Treisman A, Tallon-Baudry C. Distinct and independent correlates of attention and awareness in a hemianopic patient. Neuropsychologia 46: 2189–2197, 2008.

- Senkowski D, Gallinat J. Dysfunctional prefrontal gamma-band oscillations reflect working memory and other cognitive deficits in schizophrenia. Biol Psychiatry 77: 1010–1019, 2015.

- Senkowski D, Schneider TR, Foxe JJ, Engel AK. Crossmodal binding through neural coherence: implications for multisensory processing. Trends Neurosci 31: 401–409, 2008.

- Stekelenburg JJ, Pieter J, Van Gool AR, Sitskoorn M, Vroomen J, Maes JP. Deficient multisensory integration in schizophrenia: an event-related potential study. Schizophr Res 147: 253–261, 2013.

- Stone DB, Coffman BA, Bustillo JR, Aine CJ, Stephen JM, Moran RJ. Multisensory stimuli elicit altered oscillatory brain responses at gamma frequencies in patients with schizophrenia. Front Hum Neurosci 8: 788, 2014.

- Tseng H, Bossong MG, Modinos G, Chen K, Mcguire P, Allen P. A systematic review of multisensory cognitive-affective integration in schizophrenia. Neurosci Biobehav Rev 55: 444–452, 2015.

- Uhlhaas PJ, Singer W. Abnormal neural oscillations and synchrony in schizophrenia. Nat Rev Neurosci 11: 100–113, 2010.

- Wallwork RS, Fortgang R, Hashimoto R, Weinberger DR, Dickinson D. Searching for a consensus five-factor model of the Positive and Negative Syndrome Scale for schizophrenia. Schizophr Res 137: 246–250, 2012.

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, Tanaka JW. Controlling low-level image properties: the SHINE toolbox. Behav Res Methods 42: 671–684, 2010.

- Williams LE, Light GA, Braff DL, Ramachandran VS. Reduced multisensory integration in patients with schizophrenia on a target detection task. Neuropsychologia 48: 3128–3136, 2010.