Abstract

Rapid eye movements (REMs) are a peculiar and intriguing aspect of REM sleep, although their physiological function remains unclear. In this work, a new automatic tool was developed, aimed at a complete description of REM activity during the night, both in terms of timing of occurrence and in terms of directional properties. To this end, a stage that classifies each individual movement detected during the night according to its main direction was added to our procedure for REM detection and ocular artifact removal. A supervised classifier was built, using as training and validation set EOG data recorded during voluntary saccades of five healthy volunteers. Different classification methods were tested and compared. The additional information on REM directional characteristics provided by the procedure could be a valuable tool for a deeper investigation into the physiological origin and functional meaning of REMs.

I. Introduction

Rapid eye movements (REMs) represent a peculiar feature of REM sleep. They occur episodically, mostly grouped in bursts, and correspond to rapid saccades similar to those made in the awake state when visual scenes are imagined in the absence of visual input [1].

In normal subjects, REM timing seems to be governed by a nonlinear deterministic process [2]; moreover, REM density increases from early to late REM sleep episodes and shows a cyclical pattern within each REM episode, with periodic peaks, the first occurring 5-10 minutes after REM sleep onset. Since the discovery of REM sleep, REMs have been associated with the scanning of dream scenes. Since then, many authors have raised several issues concerning this hypothesis, and many conjectures on their functional meaning have been proposed. However, the physiological function of REMs remains controversial and poorly understood, which makes these ocular movements and their particular organization during the night an intriguing aspect of sleep to analyze. Several studies in humans have shown that some REM parameters, such as REM density and the tendency of REMs to cluster into bursts, may have clinical relevance [3].

For this reason, we have developed a fully automated procedure aimed at identifying each individual REM [4], even within bursts, in order to characterize the whole REM activity in terms of global aspects (number of REMs, REM density, etc.) and of temporal organization (number and characteristics of bursts). In [4], the detection procedure was used to develop a new adaptive-filter method for REM ocular artefact correction: this method modifies the signal only during the EEG epochs in which a movement is detected, and produces better reconstructed EEG signals than state-of-the-art methods.

To complete the REM analysis, we present a classification stage aimed at discriminating the main spatial direction of each REM. To this end, an ad hoc dataset was populated using electrooculographic (EOG) recordings. A list of candidate classification features was drawn up. Different classification methods were then applied, and for each one the optimal subset of features was extracted using Pudil’s sequential forward floating selection method (PSFFS) [5]. The dataset and the supervised classifiers are described in Section II. The performance of the classifiers obtained during a cross-validation stage is illustrated and compared in Section III.

II. Methods

A. Experimental Settings

The dataset for training and validation of the supervised classifiers was obtained experimentally from EOG recordings of five healthy volunteers (24-35 years old), who were instructed to perform eye movements in response to a trigger sound. To make the kinematic parameters as similar as possible to those of REMs occurring during sleep [1], volunteers were kept in the dark, lying on a bed with their eyes closed. Each participant was asked to start and end each eye movement at the centre of the visual field, as expected during REM sleep, and to perform eye movements in four main directions (vertical, horizontal, oblique and circular), which define the four classes of movements. A 128-electrode HydroCel Geodesic Sensor Net (HCGSN) and a Geodesic Net Amps 300 (GES300) system were used for signal recording, keeping electrode impedance below 50 kΩ. The HCGSN included electrodes for recording the electroencephalogram, eye movements and face/neck muscle contractions. Data were collected at a sampling rate of 500 Hz, with a resolution of 24 bits and a precision of 70 nV/bit (0.07 μV), using the vertex Cz (in the international 10/20 system) as recording reference electrode. Two bipolar EOG derivations were extracted, representing the horizontal (HEOG) and vertical (VEOG) components, respectively, of the signal generated by eyeball rotation. After acquisition, all data were band-pass filtered between 0.1 and 35 Hz.

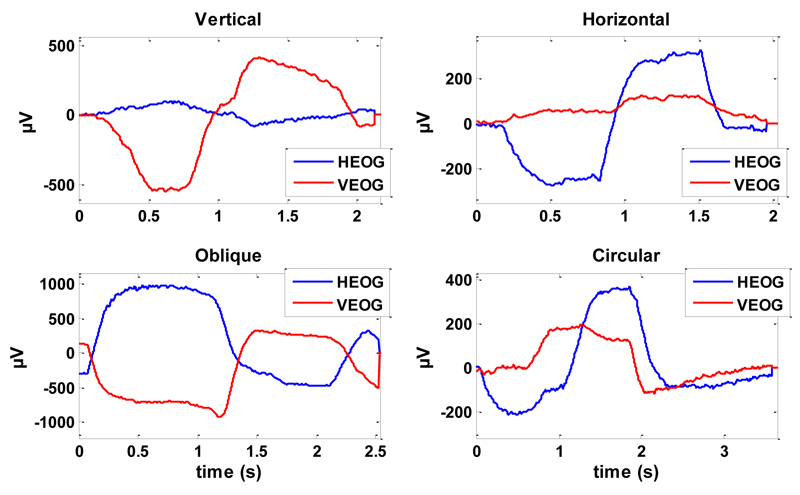

The time location of each trigger was used to identify a series of reference points on the EOG signals marking the onset and end of each movement. In this way, the signals related to individual eye movements (trials) could be extracted and inserted into the classification dataset. Fig. 1 shows an example of the VEOG and HEOG trials for an eye movement of each class.
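A minimal sketch of the trial extraction, assuming fixed windows around each trigger. The window bounds `pre_s` and `post_s` are hypothetical placeholders: the paper marks onset and end of each movement from reference points whose exact definition is not given here.

```python
import numpy as np


def extract_trials(heog, veog, triggers, fs=500.0, pre_s=0.1, post_s=1.0):
    """Cut one (2, n) HEOG/VEOG segment per trigger sample index.

    pre_s/post_s are hypothetical window bounds in seconds.
    Triggers whose window falls outside the recording are skipped.
    """
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    trials = []
    for t in triggers:
        start, stop = t - pre, t + post
        if start < 0 or stop > len(heog):
            continue  # incomplete window at the edges of the recording
        # row 0 = HEOG, row 1 = VEOG
        trials.append(np.vstack([heog[start:stop], veog[start:stop]]))
    return trials
```

Each returned trial is a 2-row array, which is the representation assumed by the feature sketches later in this section.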

Figure 1.

VEOG and HEOG signals for an eye movement of each class (vertical, horizontal, oblique and circular).

B. Classification methods

Three different classification methods were tested and compared. The first is the K-nearest neighbor (KNN) algorithm: each new object is assigned to a class based on the classes of the k objects closest to it in the feature space. We adopted k = 5 and the Euclidean distance as metric [6].
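The KNN rule described above (k = 5, Euclidean distance) can be sketched in a few lines of NumPy. The tie-breaking rule (prefer the tied class whose member is nearest) is an assumption, since the paper does not say how ties among the 5 neighbours were resolved.

```python
import numpy as np
from collections import Counter


def knn_predict(X_train, y_train, X_test, k=5):
    """Majority vote among the k nearest training rows (Euclidean distance)."""
    X_train = np.asarray(X_train, float)
    X_test = np.asarray(X_test, float)
    y_train = np.asarray(y_train)
    # pairwise Euclidean distances, shape (n_test, n_train)
    d = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    preds = []
    for row in d:
        labels = y_train[np.argsort(row, kind="stable")[:k]].tolist()  # nearest first
        votes = Counter(labels)
        top = max(votes.values())
        # assumed tie-break: first (nearest) label that reaches the top count
        preds.append(next(lab for lab in labels if votes[lab] == top))
    return np.array(preds)
```

Because KNN relies directly on distances, features with larger numeric ranges dominate the vote; this is one reason why feature choice (Section II-D) matters for this classifier.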

The other two methods are linear and quadratic discriminant analysis (LDA and QDA): they define a projection of the multi-dimensional feature space in which the separation between the different classes is maximized. This is done under the assumption that each class has a multivariate normal distribution, whose statistical parameters are estimated from the dataset. Discriminant analysis is referred to as linear when the covariance matrices of the classes are assumed to be identical, and as quadratic when a separate covariance matrix is estimated for each class [7].
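The only difference between LDA and QDA described above is whether the class covariance matrices are pooled. The sketch below illustrates this with the Gaussian-likelihood (posterior) formulation of the two models rather than an explicit projection; it assumes at least two features (so that each covariance is a matrix) and class priors estimated from class frequencies, neither of which is stated in the paper.

```python
import numpy as np


def fit_gda(X, y, pooled):
    """Class means, priors and covariances; pooled=True -> LDA, False -> QDA."""
    X, y = np.asarray(X, float), np.asarray(y)
    classes = np.unique(y)
    means = {c: X[y == c].mean(axis=0) for c in classes}
    priors = {c: np.mean(y == c) for c in classes}  # assumed: empirical priors
    covs = {c: np.cov(X[y == c], rowvar=False) for c in classes}
    if pooled:
        # LDA: one within-class covariance shared by all classes
        n, n_cls = len(y), len(classes)
        S = sum((np.sum(y == c) - 1) * covs[c] for c in classes) / (n - n_cls)
        covs = {c: S for c in classes}
    return classes, means, priors, covs


def predict_gda(model, X):
    """Assign each row to the class with the largest Gaussian log-posterior."""
    classes, means, priors, covs = model
    X = np.asarray(X, float)
    scores = []
    for c in classes:
        diff = X - means[c]
        inv = np.linalg.inv(covs[c])
        _, logdet = np.linalg.slogdet(covs[c])
        maha = np.einsum("ij,jk,ik->i", diff, inv, diff)  # squared Mahalanobis distance
        scores.append(-0.5 * maha - 0.5 * logdet + np.log(priors[c]))
    return classes[np.argmax(np.vstack(scores), axis=0)]
```

With a pooled covariance the quadratic terms cancel between classes and the decision boundaries become linear; with per-class covariances they are quadratic, hence the two names.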

C. Features definition

As candidate features for the classifiers, we considered two main sets of features. The first set groups the features derived from spectral analysis of the horizontal and vertical EOG signals. Spectral features make it possible to account for the high-frequency activity associated with REMs, such as correlates of muscle contraction. The second set groups the features describing the trajectory of the eye movement in the frontal plane (VEOG/HEOG plane). Describing the time course of each movement as a point moving through this plane is very effective, since it gives a direct picture of the directional properties of the movement.

For each eye movement in the dataset, the features derived from EOG spectral analysis consisted of: 1) the power of the VEOG and HEOG signals in five frequency bands, δ (0.5-4 Hz), θ (4-8 Hz), α (8-12 Hz), σ (12-15 Hz) and β (15-25 Hz), and over the whole band of interest (broadband, 0.5-25 Hz); 2) the ratio between the vertical and horizontal power evaluated in the same frequency bands. This ratio was used because the power evaluated on a single derivation (HEOG or VEOG) depends strongly on the amplitude of the movement, which varies even within the same subject. Power values were expressed on a logarithmic scale.
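The spectral feature set above (log band powers of HEOG and VEOG plus the log V/H ratio for each of the six bands, 18 features per movement) could be computed as sketched below. The plain periodogram estimator, the half-open band edges and the small `eps` guard are assumptions not taken from the paper. On a log scale the V/H ratio is simply the difference of the two log powers; the feature listed later as "H/V β Power Ratio" differs from it only in sign.

```python
import numpy as np

BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 12),
         "sigma": (12, 15), "beta": (15, 25), "broad": (0.5, 25)}


def band_powers(x, fs=500.0):
    """Periodogram power of x summed over each band in BANDS (Hz).

    The periodogram estimator and half-open band edges are assumptions.
    """
    x = np.asarray(x, float) - np.mean(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * len(x))
    return {name: psd[(freqs >= lo) & (freqs < hi)].sum()
            for name, (lo, hi) in BANDS.items()}


def spectral_features(heog, veog, fs=500.0, eps=1e-12):
    """log10 band powers of HEOG and VEOG and log10 of the V/H power ratio."""
    ph, pv = band_powers(heog, fs), band_powers(veog, fs)
    feats = {}
    for name in BANDS:
        feats[f"H_{name}"] = np.log10(ph[name] + eps)
        feats[f"V_{name}"] = np.log10(pv[name] + eps)
        feats[f"VH_{name}"] = np.log10((pv[name] + eps) / (ph[name] + eps))
    return feats
```

Single saccades are short, so the frequency resolution (fs divided by the number of samples) limits how well the narrow δ and σ bands are resolved; a longer window or a different estimator may be preferable in practice.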

For each individual eye movement in the dataset, the features derived from EOG trajectory analysis consisted of:

-

1)

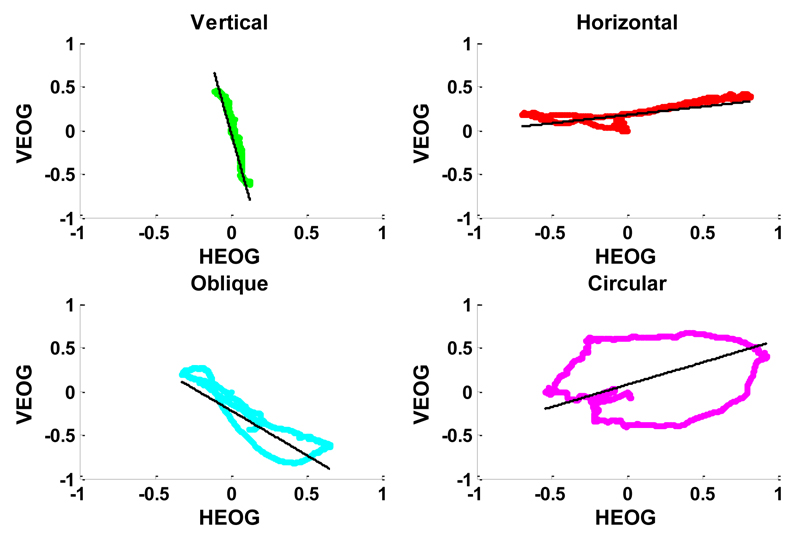

The area within each trajectory defined from the onset to the end point of the movement (the trajectory is close since it starts from the centre of the visual field and come back to the centre). The area within the trajectory was normalized by the maximum absolute distance of the trajectory from the origin: the normalization was introduced in order to make the area independent from the movement amplitude. As intuition suggests, circular movements draw trajectories very similar to a circumference, even if irregular, because of cerebral activity volume propagation and different location of EOG electrodes from the ocular dipole. Circular trajectories always show a wider area compared to the more flattened ones generated by the other classes of movements, as can be noted in Fig.2.

2) The analysis of trajectory variance by principal component analysis (PCA), applied to the HEOG and VEOG time sequences of each movement:

$$\begin{bmatrix} pc_1 \\ pc_2 \end{bmatrix} = \begin{bmatrix} a_{11} & a_{21} \\ a_{12} & a_{22} \end{bmatrix} \begin{bmatrix} \mathrm{HEOG} \\ \mathrm{VEOG} \end{bmatrix} \tag{1}$$

where pc1 and pc2 represent the first and second principal components, ranked by decreasing variance, and the coefficients $a_{ij}$ are the loadings that map the original space into the new orthogonal one.

Figure 2.

The four eye movements of Fig. 1 represented in the normalized VEOG/HEOG plane. The black line represents the direction of maximum variance evaluated by PCA. The corresponding values of the features derived from the EOG trajectory of each movement are reported in Table I.

We considered as classification features both the ratio of the variances explained by the two principal components (var(pc1)/var(pc2)) and the slope of the direction of maximum variance. The ratio of variances makes it possible to discriminate whether the data in the VEOG/HEOG plane are concentrated mainly along a single direction, or whether both orthogonal axes described by the principal components account for similar portions of the data variance.

The slope of the maximum variance axis is defined as the ratio between the two loadings of the first principal component (a21/a11) and determines the main direction of each movement. Preliminary analysis showed that this variable is very useful for discriminating between non-circular eye movements (horizontal, vertical and oblique). Fig. 2 shows the direction of maximum variance for a sample of each class of eye movement, while Table I reports the corresponding numerical values of the slope, area and ratio of variances.
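The three trajectory features (normalized enclosed area, slope of the maximum-variance axis, ratio of PC variances) can be sketched together, with HEOG on the horizontal axis and VEOG on the vertical axis so that the slope a21/a11 follows the loading convention of Eq. (1). Two readings are assumptions: "normalized by the maximum absolute distance from the origin" is taken to mean rescaling the trajectory so its farthest point lies on the unit circle before computing the area (shoelace formula); and, because var(pc1) ≥ var(pc2), the values in Table I (all below 1, largest for circular movements) are only consistent with var(pc2)/var(pc1), so the sketch returns that form.

```python
import numpy as np


def trajectory_features(heog, veog):
    """Area, PCA slope and PCA variance ratio of one movement in the
    HEOG (x axis) / VEOG (y axis) plane."""
    xy = np.column_stack([heog, veog]).astype(float)
    # assumed reading of the normalization: farthest point -> unit circle
    xy = xy / np.max(np.hypot(xy[:, 0], xy[:, 1]))
    x, y = xy[:, 0], xy[:, 1]
    # shoelace formula; np.roll closes the polygon from end point back to onset
    area = 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))
    # PCA via eigen-decomposition of the 2x2 covariance (eigenvalues ascending)
    evals, evecs = np.linalg.eigh(np.cov(xy, rowvar=False))
    a11, a21 = evecs[:, 1]  # pc1 loadings on HEOG and VEOG
    slope = a21 / a11 if a11 != 0 else np.inf  # invariant to eigenvector sign
    var_ratio = evals[0] / evals[1]  # var(pc2)/var(pc1), between 0 and 1
    return {"area": area, "slope": slope, "var_ratio": var_ratio}
```

A closed circle of any radius gives an area close to π and a variance ratio close to 1, while a straight out-and-back oblique movement gives zero area, a slope of 1 and a ratio close to 0, mirroring the qualitative pattern in Table I.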

Table I.

Features from EOG Trajectory

| Movement class | Slope of Maximum Variance | Within Trajectory Area | Ratio of PC Variances |
|---|---|---|---|
| Vertical | 3.7303 | 0.0529 | 0.0096 |
| Horizontal | 0.1892 | 0.0571 | 0.0575 |
| Oblique | 1.0178 | 0.2145 | 0.0239 |
| Circular | 0.5095 | 1.0686 | 0.3131 |

The main idea behind these classification features is that the relative amplitude of the VEOG and HEOG signals may reflect the corresponding geometrical vertical and horizontal components of each eye movement. However, the HEOG and VEOG electrodes are placed differently around the eyes, which could cause a different proportionality between the recorded electrical potential and the real projection of the movement in the corresponding direction. To overcome this problem, before computing the classification features, each HEOG and VEOG trial was normalized by the maximum HEOG and VEOG value, respectively, recorded across all movements (regardless of class, separately for each subject).
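A sketch of the per-subject, per-channel normalization described above, assuming that "maximum value" means the maximum absolute amplitude and that each trial is a 2-row array (row 0 = HEOG, row 1 = VEOG).

```python
import numpy as np


def normalize_per_subject(trials, subjects):
    """Divide HEOG (row 0) and VEOG (row 1) of every trial by the largest
    absolute value of that channel over all trials of the same subject."""
    subjects = np.asarray(subjects)
    out = [None] * len(trials)
    for s in np.unique(subjects):
        idx = np.flatnonzero(subjects == s)
        # per-channel maxima over all of this subject's trials, any class
        peak = np.max([np.max(np.abs(trials[i]), axis=1) for i in idx], axis=0)
        for i in idx:
            out[i] = trials[i] / peak[:, None]
    return out
```

Scaling each channel separately equalizes the HEOG and VEOG ranges within a subject, so that ratios and slopes computed afterwards are not biased by the different electrode geometry.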

D. Features selection

The performance of any classifier strongly depends on the number and the specific set of features; it does not necessarily increase with the number of features, and redundancy among features can reduce it.

To cope with this issue, an optimal subset of the candidate features was derived for each classification method (KNN, LDA and QDA) using a suboptimal search method, since the number of candidate features was too high for an exhaustive search. Among feature selection algorithms, Pudil’s Sequential Forward Floating Selection (PSFFS) method [5] was chosen, mainly because it handles the nesting problem, namely the fact that in standard sequential methods a feature, once added or discarded, cannot later be excluded or reselected, respectively. By contrast, PSFFS is a bottom-up search procedure that includes new features by applying rules similar to those of the plus-l take-away-r algorithm, but with the number of forward and backward steps controlled dynamically instead of being fixed as in the original plus-l take-away-r approach [7].
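A generic sketch of sequential forward floating selection, following the textbook inclusion / conditional-exclusion scheme of [5]. The criterion function is left abstract (for example, cross-validated accuracy of the classifier on the candidate subset); the criterion and stopping size actually used in the paper are not specified here, so `criterion` and `max_size` are assumptions.

```python
def sffs(n_features, criterion, max_size=None):
    """Sequential forward floating selection.

    criterion(subset) -> float, higher is better.
    Returns {size: (score, subset)}: the best subset found for each size.
    """
    max_size = n_features if max_size is None else max_size
    selected, best = [], {}
    while len(selected) < max_size:
        # inclusion: add the most significant remaining feature
        remaining = [f for f in range(n_features) if f not in selected]
        f_add = max(remaining, key=lambda f: criterion(selected + [f]))
        selected = selected + [f_add]
        score = criterion(selected)
        k = len(selected)
        if k not in best or score > best[k][0]:
            best[k] = (score, list(selected))
        # conditional exclusion: keep removing the least significant feature
        # while the reduced set beats the best one recorded for its size
        while len(selected) > 2:
            f_rm = max(selected,
                       key=lambda f: criterion([g for g in selected if g != f]))
            reduced = [g for g in selected if g != f_rm]
            r_score = criterion(reduced)
            if r_score > best[len(reduced)][0]:
                selected = reduced
                best[len(reduced)] = (r_score, list(reduced))
            else:
                break
    return best
```

The backtracking step is what lets the search recover from an early greedy choice: a feature added first can be dropped later if a better subset of the same size appears, which a plain forward search cannot do.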

E. Classifiers comparison

To objectively measure and compare the performance of each classifier, a stratified k-fold cross-validation was performed. The dataset was randomly partitioned into six folds of equal size, each containing the same class proportions as the original dataset. The misclassification error was computed as the mean of the errors obtained on each fold, using the remaining folds as training set. However, this procedure does not test the ability of the classifiers to cope with variability between subjects. The validation was therefore repeated with the dataset divided into five folds, each containing the signals of a single subject.
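The two validation schemes above differ only in how observations are assigned to folds. The sketch below builds stratified folds (classes dealt round-robin after shuffling) and subject-wise folds (one fold per subject), and averages the per-fold misclassification error. The round-robin assignment and the fixed random seed are illustrative choices, and `fit_predict` stands for any of the classifiers.

```python
import numpy as np


def stratified_folds(y, n_folds=6, seed=0):
    """Fold index per observation; each fold keeps the class proportions."""
    y = np.asarray(y)
    rng = np.random.default_rng(seed)
    fold = np.empty(len(y), dtype=int)
    for c in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == c))
        fold[idx] = np.arange(len(idx)) % n_folds  # deal round-robin
    return fold


def subject_folds(subjects):
    """One fold per subject (leave-one-subject-out)."""
    _, fold = np.unique(np.asarray(subjects), return_inverse=True)
    return fold


def cv_error(X, y, fold, fit_predict):
    """Mean misclassification error over folds.

    fit_predict(X_train, y_train, X_test) -> predicted labels for X_test.
    """
    X, y = np.asarray(X), np.asarray(y)
    errs = []
    for k in np.unique(fold):
        te = fold == k
        pred = fit_predict(X[~te], y[~te], X[te])
        errs.append(np.mean(pred != y[te]))
    return float(np.mean(errs))
```

With subject-wise folds, every test fold contains a subject never seen in training, which is why this scheme exposes inter-subject variability that stratified folds hide.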

III. Results

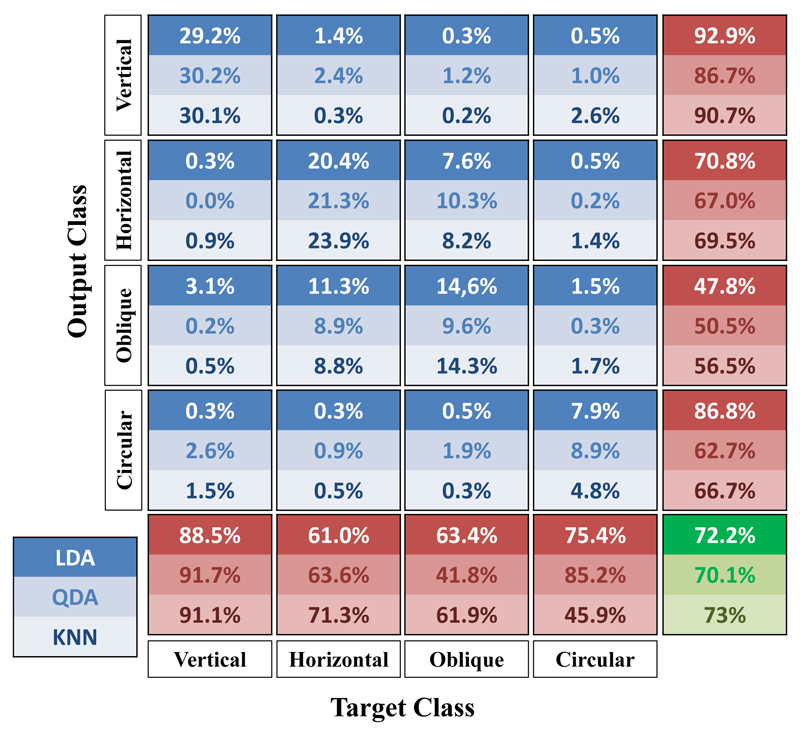

The results of the stratified k-fold cross-validation revealed no significant difference in the performance of the classifiers, since all of them reached a total percentage of correct classifications close to 100%, independently of the movement class. However, this result does not take inter-subject variability into account: performing the inter-subject cross-validation with disjoint training and test sets (different subjects in the two sets) yielded markedly lower global performance, as shown in Table II.

Table II.

Inter-Subject Cross-Validation

| | KNN | LDA | QDA |
|---|---|---|---|
| Misclassification Error | 27% | 27.8% | 29.9% |

The best global performance was obtained with the KNN method; however, QDA showed the best ability to identify circular eye movements. This is shown in detail in Fig. 3, which reports the confusion matrices of the inter-subject validation for the three classification methods. Each matrix shows the original class of the movement along the columns and the class assigned by the classifier along the rows. Irrespective of the classification method, the best results were obtained in identifying vertical movements, whereas the largest classification errors concerned oblique movements. Based on the global performance, we adopted KNN as the best classification method.
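For reference, a confusion matrix with the layout used in Fig. 3 (rows = class assigned by the classifier, columns = original class) and the global misclassification error reported in Table II can be computed as in this small sketch; the function names are illustrative.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, classes):
    """Counts with rows = assigned class and columns = original class."""
    pos = {c: i for i, c in enumerate(classes)}
    m = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(y_true, y_pred):
        m[pos[p], pos[t]] += 1
    return m


def misclassification_error(m):
    """Fraction of off-diagonal (wrongly assigned) movements."""
    return 1.0 - np.trace(m) / m.sum()
```

With this layout, dividing each diagonal element by its column sum gives the fraction of movements of that original class that were correctly recognized, which is how per-class strengths such as QDA's performance on circular movements can be read from the matrices.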

Figure 3.

Confusion matrices obtained by inter-subject cross-validation for LDA, QDA and KNN. The columns of the matrix indicate the original class of the movements, while rows show the output class assigned by the classifier.

For the KNN classifier, the features selected by the PSFFS method were: 1) Horizontal Broadband Power, 2) Vertical Broadband Power, 3) Horizontal δ Power, 4) Horizontal θ Power, 5) Horizontal σ Power, 6) Vertical δ Power, 7) H/V β Power Ratio, 8) Slope of Maximum Variance, 9) Within Trajectory Area. Four of these nine features (1, 3, 4 and 5) describe the power distribution of the HEOG, whereas the VEOG contributes only its broadband and δ power (2 and 6). The highest frequency band contributes to the classification through the H/V β Power Ratio. The analysis of the movement trajectory in the VEOG/HEOG plane also provided an important contribution to the classification, through two features included in the optimal subset (Slope of Maximum Variance and Within Trajectory Area). The Within Trajectory Area and the Ratio of principal component variances describe a similar aspect of the movement, and for this reason only one of them was selected in the optimal subset.

IV. Conclusion

The combination of the detection algorithm [4] and the present procedure for automatic eye movement classification represents a new tool for the complete analysis of REM activity during sleep, from the time series of REM occurrence to their directional properties.

To fine-tune the classification algorithm, we compared different classification methods and adopted KNN as the best solution, because it provided the best global classification performance and the best classification performance for horizontal movements, which are the most frequent during REM sleep.

The proposed classification method could be a valuable tool for research on the physiological origin and functional significance of REMs. Indeed, many studies have analyzed the directional aspect of REMs, for example by retrospectively associating the direction of REMs with dream recall [8], or by showing how ocular activity during wakefulness can affect the directional properties of REMs during the following night [9].

Moreover, the new tool could have clinical relevance, since some neurodegenerative disorders are associated with impairment or alteration of the temporal pattern and directional properties of REMs [10].

Acknowledgment

The work was partially supported by University of Pisa and by ECSPLAIN-FP7-IDEAS-ERC-ref.338866.

References

- [1] Sprenger A, Lappe-Osthege M, Talamo S, Gais S, Kimmig H, Helmchen C. Eye movements during REM sleep and imagination of visual scenes. Neuroreport. 2010 Jan;21(1):45–9. doi: 10.1097/WNR.0b013e32833370b2.

- [2] Trammell J, Ktonas P. A simple nonlinear deterministic process may generate the timing of rapid eye movements during human REM sleep. First International IEEE EMBS Conference on Neural Engineering, 2003. Conference Proceedings; 2003. pp. 324–327.

- [3] Buysse DJ, Hall M, Begley A, Cherry CR, Houck PR, Land S, Ombao H, Kupfer DJ, Frank E. Sleep and treatment response in depression: new findings using power spectral analysis. Psychiatry Res. 2001;103(1):51–67. doi: 10.1016/s0165-1781(01)00270-0.

- [4] Betta M, Gemignani A, Landi A, Laurino M, Piaggi P, Menicucci D. Detection and removal of ocular artifacts from EEG signals for an automated REM sleep analysis. Conf Proc … Annu Int Conf IEEE Eng Med Biol Soc IEEE Eng Med Biol Soc Annu Conf. 2013 Jan;2013:5079–82. doi: 10.1109/EMBC.2013.6610690.

- [5] Pudil P, Novovičová J, Kittler J. Floating search methods in feature selection. Pattern Recognit Lett. 1994 Nov;15(11):1119–1125.

- [6] Laurino M, Piarulli A, Bedini R, Gemignani A, Pingitore A, L’Abbate A, Landi A, Piaggi P, Menicucci D. Comparative study of morphological ECG features classificators: An application on athletes undergone to acute physical stress. 2011 11th International Conference on Intelligent Systems Design and Applications; 2011. pp. 242–246.

- [7] Webb AR. Statistical Pattern Recognition. Vol. 9 John Wiley & Sons, Ltd; Chichester, UK: 2002.

- [8] Leclair-Visonneau L, Oudiette D, Gaymard B, Leu-Semenescu S, Arnulf I. Do the eyes scan dream images during rapid eye movement sleep? Evidence from the rapid eye movement sleep behaviour disorder model. Brain. 2010 Jun;133(Pt 6):1737–46. doi: 10.1093/brain/awq110.

- [9] De Gennaro L, Ferrara M. Effect of a presleep optokinetic stimulation on rapid eye movements during REM sleep. Physiol Behav. 2000 Jun;69(4–5):471–475. doi: 10.1016/s0031-9384(99)00263-2.

- [10] Autret A, Lucas B, Mondon K, Hommet C, Corcia P, Saudeau D, de Toffol B. Sleep and brain lesions: a critical review of the literature and additional new cases. Neurophysiol Clin. 2001 Dec;31(6):356–75. doi: 10.1016/s0987-7053(01)00282-9.