Abstract

Introduction:

Health literacy has been shown to be an important determinant of outcomes in numerous disease states. In an effort to improve health literacy, the Canadian Urological Association (CUA) publishes freely accessible patient information materials (PIMs) on common urological conditions. We sought to evaluate the readability of the CUA’s PIMs.

Methods:

All PIMs were accessed through the CUA website. The Flesch Reading Ease Score (FRES), the Flesch-Kincaid Grade Level (FKGL), and the number of educational graphics were determined for each PIM. Low FRES scores and high FKGL scores indicate more difficult-to-read text. Average readability values were calculated for each PIM category based on the CUA-defined subject categories. The five pamphlets with the highest FKGL scores were revised by substituting simpler words for complex multisyllabic words and then reanalyzed. The Kruskal-Wallis test was used to identify readability differences between PIM categories, and paired t-tests were used to compare FKGL scores before and after revision.

Results:

Across all PIMs, FRES values were low (mean 47.5, standard deviation [SD] 7.47), and the average FKGL was 10.5 (range 8.1–12.0). Among PIM categories, the infertility and sexual function PIMs had the highest average FKGL (mean 11.6); however, differences in FKGL between categories were not statistically significant (p=0.38). The average number of words per sentence was also highest in the infertility and sexual function PIMs and was significantly higher than in other categories (mean 17.2; p=0.01). On average, 1.4 graphics were displayed per PIM (range 0–4), and this did not vary significantly by disease state (p=0.928). Simple word substitutions improved the readability of the five most difficult-to-read PIMs by an average of 3.1 grade levels (p<0.01).

Conclusions:

Current patient information materials published by the CUA compare favourably to those produced by other organizations, but may be difficult to read for low-literacy patients. Readability must be balanced against patients' informational needs, which may be intrinsically complex.

Introduction

Health literacy is defined by the Canadian Expert Panel on Health Literacy as “the ability to access, understand, evaluate, and communicate information as a way to promote, maintain, and improve health in a variety of settings across the life-course.”1 Health literacy inherently requires patients to use prose literacy, document literacy, numeracy, and problem-solving skills simultaneously.2 A patient’s health literacy is therefore closely related to their prose literacy. Both prose literacy and health literacy have been linked to patient outcomes in numerous disease states.3–6

Recent multinational studies have established that 42% of Canadian adults aged 16–65 function below a Level III prose literacy level, with Level III representing the internationally accepted literacy level required to function in a modern society.7 Urology patients, who are frequently elderly, may have even lower literacy levels. Based on the International Adult Literacy and Skills Survey, it is estimated that 60% of Canadians lack the capacity to obtain, understand, and act upon health information and services and to make appropriate health decisions without help.8 Similar results from the same surveys in the U.S. have prompted several organizations to publish readability guidelines for patient information materials based on prose literacy levels. The American Medical Association, the National Institutes of Health, and the United States Department of Health and Human Services all recommend that patient information materials (PIMs) be written at a fourth- to sixth-grade reading level.

In an effort to improve health literacy among Canadian urology patients, the Canadian Urological Association (CUA) publishes freely accessible PIMs on a broad range of urological conditions and procedures. In the 2014–15 fiscal year, following a redesign of the CUA PIMs, there was a large increase in the number of pamphlets distributed to CUA members, as well as increased costs associated with the redesign: over 320 000 pamphlets were distributed at a total cost to the CUA of over $68 000. To date, there has been no formal evaluation of the readability of these materials. We sought to evaluate the readability of the CUA PIMs to determine whether they meet current readability guidelines. In addition, we sought to determine whether their readability varied by disease state and whether it could be improved.

Methods

All CUA PIMs were accessed through the CUA website (http://www.cua.org/en/patient-information). The informational content of each PIM was copied and pasted directly into a word processing application (Microsoft Word, Redmond, U.S.). Readability was determined using the Flesch Reading Ease Score (FRES) and the Flesch-Kincaid Grade Level (FKGL). The FKGL is a well-established and validated measure of prose literacy that was derived for the U.S. Navy to assess text-based training materials.9 It estimates the U.S. school grade level required to read a text from two quantities: the average number of words per sentence and the average number of syllables per word. The FRES is calculated from the same two quantities, with higher scores indicating easier text. Low FRES scores and high FKGL scores therefore indicate more difficult-to-read text.
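Both scores come from the standard Flesch formulas, which combine average sentence length and average syllables per word. The sketch below restates those published formulas in Python as an illustration only; the study itself used Microsoft Word's built-in statistics. The helper names (`fres`, `fkgl`, `count_syllables`, `readability`) are hypothetical, and the vowel-group syllable counter is a rough assumption that will not reproduce Word's counts exactly.

```python
import re


def fres(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease Score: higher values mean easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def fkgl(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level: approximate U.S. school grade required."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def count_syllables(word: str) -> int:
    """Hypothetical heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # "prostate" has three vowel groups but two syllables; "table" keeps its "le".
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def readability(text: str) -> tuple[float, float]:
    """Return (FRES, FKGL) for plain text using the heuristics above."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return (
        fres(len(words), len(sentences), syllables),
        fkgl(len(words), len(sentences), syllables),
    )
```

For example, a passage with 100 words, 5 sentences, and 150 syllables gives `fkgl(100, 5, 150)` ≈ 9.91 and `fres(100, 5, 150)` ≈ 59.6. Keeping the formulas separate from the text parser makes it easy to swap in a better syllable counter.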

Graphics have been shown to improve patient understanding and retention of medical information.10–12 Therefore, the number of graphics in each PIM was tabulated. PIMs were categorized by anatomical disease site and subject area, as displayed on the CUA website. A Kruskal-Wallis test was used to determine whether average readability level, sentence structure, and average number of displayed graphics differed among categories.
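The Kruskal-Wallis test compares groups using ranks rather than raw values, so it does not assume normally distributed scores. A minimal pure-Python sketch of the H statistic, with average ranks for ties and the standard tie correction, is shown below; the function name and the toy input are illustrative assumptions, not the study data or the authors' software. The p value is then read from a chi-square distribution with k − 1 degrees of freedom (for example, with `scipy.stats.chi2.sf(h, df)`), or the whole test can be run with `scipy.stats.kruskal`.

```python
from collections import Counter


def kruskal_wallis_h(*groups: list[float]) -> tuple[float, int]:
    """Return (tie-corrected H statistic, degrees of freedom) for k groups."""
    pooled = sorted(v for g in groups for v in g)
    n_total = len(pooled)

    # Assign each distinct value the average of the 1-based ranks it occupies.
    rank_of = {}
    i = 0
    while i < n_total:
        j = i
        while j + 1 < n_total and pooled[j + 1] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + j) / 2 + 1
        i = j + 1

    # H = 12 / (N(N+1)) * sum(R_i^2 / n_i) - 3(N+1), with R_i the rank sum of group i.
    h = 12 / (n_total * (n_total + 1)) * sum(
        sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups
    ) - 3 * (n_total + 1)

    # Tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N) over tied runs of size t.
    correction = 1 - sum(t**3 - t for t in Counter(pooled).values()) / (
        n_total**3 - n_total
    )
    if correction == 0:
        raise ValueError("all values are identical; H is undefined")
    return h / correction, len(groups) - 1
```

With the toy groups `[1, 2, 3]`, `[4, 5, 6]`, and `[7, 8, 9]` (not study data), H = 7.2 with 2 degrees of freedom. For the seven PIM categories in this study the reference distribution would have 6 degrees of freedom.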

We then performed an exploratory analysis of the five PIMs with the highest FKGL scores. The text of each document was manually examined, complex words were individually identified, and less complex words were substituted for them in an attempt to improve readability scores. The readability of each revised document was then reanalyzed, and a paired t-test was used to determine whether the revised PIMs had significantly lower FKGL scores.
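As an illustration of the paired comparison, the sketch below applies a paired t-test to the original and revised FKGL scores reported in Table 2. Because those published scores are rounded to one decimal place, the result is an approximation and may differ slightly from the authors' own calculation. The function name `paired_t` is hypothetical, and the critical value 4.604 (two-sided, α = 0.01, 4 degrees of freedom) is a standard t-table value used here in place of an exact p value.

```python
import math
import statistics

# FKGL scores from Table 2 (original vs. revised), in the same PIM order.
original = [12.0, 12.0, 12.0, 12.0, 12.0]
revised = [9.6, 8.7, 8.0, 8.4, 9.9]


def paired_t(before: list[float], after: list[float]) -> tuple[float, float, int]:
    """Return (mean difference, t statistic, degrees of freedom)."""
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(n)  # sample SD of differences
    return mean_diff, mean_diff / std_err, n - 1


mean_diff, t_stat, df = paired_t(original, revised)
T_CRIT_01_DF4 = 4.604  # two-sided critical t for alpha = 0.01 with df = 4 only
print(f"mean drop = {mean_diff:.2f} grades, t = {t_stat:.2f}, df = {df}")
print("p < 0.01" if abs(t_stat) > T_CRIT_01_DF4 else "p >= 0.01")
```

With these inputs the mean drop is about 3.08 grade levels (reported as 3.1) and t ≈ 8.56, well above 4.604, which is consistent with the reported p<0.01. In practice, `scipy.stats.ttest_rel` returns an exact p value for the same test.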

Results

The average readability of the CUA PIMs corresponded to an FKGL of 10.5. Table 1 summarizes sentence-structure characteristics for each of the seven PIM categories. When stratified by subject matter, readability did not differ significantly among PIM categories (p=0.38). The infertility and sexual function PIMs had the highest average reading grade level (11.6), and this category also had significantly more words per sentence than the other categories (p=0.01). An average of 1.4 graphics was used per PIM (range 0–4), and this did not vary by subject category (p=0.928).

Table 1.

Analysis of readability of CUA PIMs grouped by anatomic disease site

| Characteristic | General | Pediatric | Bladder | Prostate | Infertility/sexual function | Kidney-ureter | Genitals | p value |
|---|---|---|---|---|---|---|---|---|
| Sentences per PIM | 49.9 | 46.6 | 52.6 | 55.9 | 51.7 | 53.7 | 50.1 | 0.83 |
| Passive sentences (%) | 33.8 | 30.6 | 26.7 | 31.9 | 26.7 | 34.6 | 29.0 | 0.67 |
| Graphics per PIM | 1.6 | 1.3 | 1.4 | 1.2 | 1.3 | 1.3 | 1.7 | 0.93 |
| Words/sentence | 16.0 | 15.2 | 15.1 | 16.9 | 17.2 | 16.3 | 15.0 | 0.01 |
| Characters/word | 5.0 | 5.0 | 5.2 | 5.0 | 5.3 | 5.0 | 5.1 | 0.33 |
| FRES | 47.9 | 49.8 | 44.8 | 48.4 | 40.1 | 48.8 | 47.5 | 0.55 |
| FKGL | 10.3 | 10.0 | 10.7 | 10.6 | 11.6 | 10.4 | 10.2 | 0.38 |

CUA: Canadian Urological Association; FKGL: Flesch Kincaid Grade Level; FRES: Flesch Reading Ease Score; PIM: patient information material.

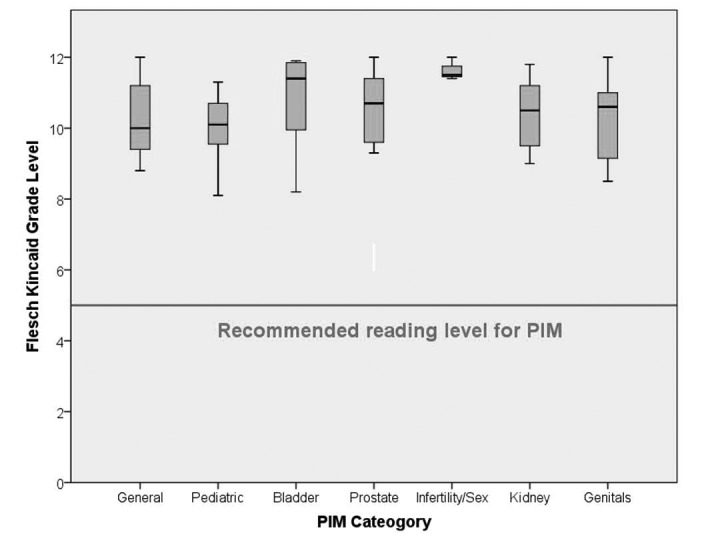

Fig. 1 displays a box-and-whisker plot of the readability of the CUA PIMs by anatomical disease site. No individual PIM was written below an eighth-grade reading level (range 8.1–12.0).

Fig. 1.

Box and whisker plot of the readability of Canadian Urological Association’s patient information materials based on anatomical disease site.

The five PIMs with the highest grade-level readability scores are displayed in Table 2. All five original PIMs required a 12th-grade reading level for interpretation. After these PIMs were revised to use less complex vocabulary, their readability improved by an average of 3.1 grade levels (p<0.01).

Table 2.

Analysis of the readability of select CUA PIMs after revision of complex vocabulary

| PIM title | Readability of original version (FKGL) | Readability of revised version (FKGL) | p value |
|---|---|---|---|
| Laparoscopic surgery | 12.0 | 9.6 | <0.01 |
| Radiation therapy for prostate cancer | 12.0 | 8.7 | |
| Active surveillance for prostate cancer | 12.0 | 8.0 | |
| Male hormone supplementation | 12.0 | 8.4 | |
| Scrotal pain | 12.0 | 9.9 | |

CUA: Canadian Urological Association; FKGL: Flesch Kincaid Grade Level; PIM: patient information material. The p value is from a single paired t-test comparing original and revised FKGL scores across all five PIMs.

Discussion

This study had three principal findings. Our first principal finding was that the reading level required to interpret the CUA PIMs is higher than that recommended by guidelines. The average reading grade level of the text was 10.5, and all of the PIMs were written above an eighth-grade reading level. This is consistent with previous reports examining the readability of PIMs published by other institutions and organizations. Colaco et al analyzed the readability of online patient education materials from the American Urological Association and from 17 academic urology departments located in the northeastern U.S.13 Their results were similar to our own: none of the online resources had mean readability levels consistent with guideline recommendations, and most required at least a university-level education to interpret. In contrast, although the CUA PIMs are written above guideline recommendations, their mean grade-level readability is lower than that of any of the online resources analyzed by Colaco et al (FKGL range 10.7–17.7).

This phenomenon is not isolated to urology and appears to be consistent across surgical specialties. Hansberry et al analyzed PIMs published on the websites of 14 major surgical subspecialty organizations within the U.S.14 None of the subspecialties had an average grade-level readability that met guideline recommendations; averages ranged from 8.7 to 19.0. Consistently high grade-level scores may reflect intrinsically complex medical information that cannot easily be simplified to guideline readability levels. This possibility was the rationale for our exploratory analysis of the five PIMs with the highest reading grade levels.

Our second principal finding is that the readability of these PIMs can be improved. Although readability is likely influenced by the complexity of the information, we hypothesized that it also reflects the complexity of the sentence structure and vocabulary. The results of our exploratory analysis support this hypothesis. Sentence structures were not altered during this analysis; instead, we examined the content of each PIM and individually substituted single-syllable words for complex multisyllabic words. These simple substitutions improved readability by an average of 3.1 grade levels. We believe that this reduction, achieved through word changes alone, provides a proof of concept that the medical content of these PIMs is not the sole factor limiting their readability. We hypothesize that further reductions in grade level would be possible by also altering sentence structure; this is supported by the existing literature.15,16
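Why word substitution alone can shift the grade level this much follows from the standard Flesch-Kincaid formula, restated here rather than taken from the study. Writing W for words, S for sentences, and Y for syllables, and assuming substitutions were one-for-one so that W/S stayed constant (an assumption; the revised word counts are not reported), only the syllables-per-word term changes:

$$
\begin{aligned}
\mathrm{FKGL} &= 0.39\,\frac{W}{S} + 11.8\,\frac{Y}{W} - 15.59\\
\Delta\mathrm{FKGL} &= 11.8\,\Delta\!\left(\frac{Y}{W}\right) \quad \text{(with } W/S \text{ fixed)}\\
\Delta\!\left(\frac{Y}{W}\right) &\approx \frac{-3.1}{11.8} \approx -0.26
\end{aligned}
$$

Under that assumption, the average 3.1-grade reduction corresponds to roughly 0.26 fewer syllables per word, or about one syllable removed for every four words.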

Horner et al developed a framework for revising PIMs that targets specific obstructions to comprehension.15 Their framework is a four-step process: improving the ease of reading, revising the language content, improving comprehension, and formally evaluating the revised text. Using this framework, they reduced the reading level of their PIMs from the 10th grade to the fifth- to sixth-grade level. Our exploratory analysis relied solely on substituting simpler words for multisyllabic words, so we would expect further reductions in reading grade level to be possible with additional changes to the text.

Our third principal finding is that the readability of the CUA PIMs did not vary significantly by subject matter. This has several implications. First, it further supports our hypothesis that the complexity of the subject matter is not the primary determinant of the readability of these materials. The wide variation in readability within the same subject area implies that differences in prose format may be more responsible for readability levels than differences in medical information. Second, it suggests that authors may introduce variability into the readability of these materials through their own writing style. Third, it implies that prospective evaluation of readability when these PIMs are revised may help account for variability in authorship.

This study has several limitations. Our PIM categories were based on anatomical disease site and subject matter as displayed on the CUA website, which may not be the most appropriate grouping for assessing the complexity of subject matter; alternative groupings may have shown significant variations in readability between topics. Readability measures determine only the prose literacy required for interpretation. Our analysis did not examine the appropriateness of the medical content, cultural appropriateness, influence on self-efficacy, or content presentation and layout. Although our exploratory analysis significantly improved the readability of select PIMs, we did not demonstrate that such refinements are possible for all PIMs, nor did the revised PIMs reach guideline-recommended reading levels. However, we believe that this study provides a proof of concept on which further work to improve the readability of these PIMs can build. Finally, the CUA PIMs exist in both English and French versions; this analysis was limited to the English-language versions.

Despite these limitations, we believe this study is a valuable addition to the literature. We have established that the CUA PIMs are written at a level that may be too complex for many urology patients to comprehend. However, these PIMs score favourably compared with PIMs on the same topics produced by other institutions. Further work will explore additional methods to improve readability and to formally assess and revise informational content with patients in an iterative fashion.

Conclusion

The current CUA PIMs compare favourably to PIMs written by other organizations, but may be written at a level that is difficult for many patients to interpret. Revision of this material in a patient-centered iterative process may improve readability, but must be balanced against the informational needs of patients, which may be intrinsically complex.

Footnotes

Competing interests: Dr. Dalziel declares no competing personal or financial interests. Dr. Leveridge has served as an Advisory Board member for Astellas and Janssen. Dr. Steele has served as an Advisory Board member for Allergan, Astellas, Ferring, Merck, and Pfizer; has received grants/honoraria from Astellas; and has participated in clinical trials for Allergan, Astellas, and Pfizer. Dr. Izard has served as an Advisory Board member for Astellas, Janssen, and Sanofi.

This paper has been peer-reviewed.

References

1. Rootman I, Gordon-El-Bihbety D. A vision for a health literate Canada: Report of the Expert Panel on Health Literacy. Ottawa, ON: Canadian Public Health Association; 2008. http://www.cpha.ca/uploads/portals/h-l/report_e.pdf. Accessed April 26, 2016.

2. Murray S. Health literacy in Canada: Initial results from the international adult literacy and skills survey. Ottawa, ON: Canadian Council on Learning; 2007.

3. Bennett CL, Ferreira MR, Davis TC, et al. Relation between literacy, race, and stage of presentation among low-income patients with prostate cancer. J Clin Oncol. 1998;16:3101–4. doi: 10.1200/JCO.1998.16.9.3101.

4. Wolf MS, Knight SJ, Lyons EA, et al. Literacy, race, and PSA level among low-income men newly diagnosed with prostate cancer. Urology. 2006;68:89–93. doi: 10.1016/j.urology.2006.01.064.

5. Berkman ND, Sheridan SL, Donahue KE, et al. Low health literacy and health outcomes: An updated systematic review. Ann Intern Med. 2011;155:97–107. doi: 10.7326/0003-4819-155-2-201107190-00005.

6. Smith AL, Nissim HA, Le TX, et al. Misconceptions and miscommunication among aging women with overactive bladder symptoms. Urology. 2011;77:55–9. doi: 10.1016/j.urology.2010.07.460.

7. Murray S, Jones S, Willms D, et al. Reading the future: A portrait of literacy in Canada. Ottawa, ON: Statistics Canada; 2008. http://www.literacy.ca/content/uploads/2012/02/LiteracyReadingFutureReportE.pdf. Accessed April 26, 2016.

8. Murray T, Hagey J, Willms D, et al. Health literacy in Canada: A healthy understanding 2008. Ottawa, ON: Canadian Council on Learning; 2008. http://www.bth.se/hal/halsoteknik.nsf/bilagor/HealthLiteracyReportFeb2008E_pdf/$file/HealthLiteracyReportFeb2008E.pdf. Accessed April 26, 2016.

9. Kincaid JP, Fishburne RP Jr, Rogers RL, et al. Derivation of new readability formulas (automated readability index, fog count and flesch reading ease formula) for navy enlisted personnel. DTIC Document; 1975.

10. Houts PS, Witmer JT, Egeth HE, et al. Using pictographs to enhance recall of spoken medical instructions II. Patient Educ Couns. 2001;43:231–42. doi: 10.1016/S0738-3991(00)00171-3.

11. Houts PS, Bachrach R, Witmer JT, et al. Using pictographs to enhance recall of spoken medical instructions. Patient Educ Couns. 1998;35:83–8. doi: 10.1016/S0738-3991(98)00065-2.

12. Mansoor LE, Dowse R. Effect of pictograms on readability of patient information materials. Ann Pharmacother. 2003;37:1003–9. doi: 10.1345/aph.1C449.

13. Colaco M, Svider PF, Agarwal N, et al. Readability assessment of online urology patient education materials. J Urol. 2013;189:1048–52. doi: 10.1016/j.juro.2012.08.255.

14. Hansberry DR, Agarwal N, Shah R, et al. Analysis of the readability of patient education materials from surgical subspecialties. Laryngoscope. 2014;124:405–12. doi: 10.1002/lary.24261.

15. Horner SD, Surratt D, Juliusson S. Improving readability of patient education materials. J Community Health Nurs. 2000;17:15–23. doi: 10.1207/S15327655JCHN1701_02.

16. Sheppard ED, Hyde Z, Florence MN, et al. Improving the readability of online foot and ankle patient education materials. Foot Ankle Int. 2014;35:1282–6. doi: 10.1177/1071100714550650.