Abstract

Background

The Hospital Value-Based Purchasing Program measures the value of care provided by participating Medicare hospitals while creating financial incentives for quality improvement and fostering increased transparency. Limited information is available comparing hospital performance across healthcare business models.

Study Design

Fiscal year 2015 Hospital Value-Based Purchasing Program results were used to examine hospital performance by business model. General linear modeling assessed differences in mean Total Performance Score and in mean hospital case mix index, and then differences in Total Performance Score after adjustment for hospital case mix index.

Results

Of 3089 hospitals with Total Performance Scores (TPS), categories of representative healthcare business models included 104 Physician-owned Surgical Hospitals (POSH), 111 University HealthSystem Consortium (UHC) members, 14 US News & World Report Honor Roll (USNWR) hospitals, 33 Kaiser Permanente hospitals, and 124 Pioneer Accountable Care Organization affiliated hospitals. Estimated mean TPS for POSH (64.11, 95% CI 61.83, 66.38) and Kaiser (60.79, 95% CI 56.56, 65.03) were significantly higher than for all remaining hospitals, while UHC members (36.8, 95% CI 34.51, 39.17) performed below the mean (p < 0.0001). Mean hospital case mix index was significantly higher for POSH (mean = 2.32, p < 0.0001), USNWR honorees (mean = 2.24, p = 0.0140), and UHC members (mean = 1.99, p < 0.0001), while Kaiser Permanente hospitals had a lower case mix index (mean = 1.54, p < 0.0001). Re-estimation of TPS after adjustment for differences in hospital case mix index did not change the original results.

Conclusions

The Hospital Value-Based Purchasing Program revealed superior hospital performance associated with business model. Closer inspection of high-value hospitals may guide value improvement and policy-making decisions for all Medicare Value-Based Purchasing Program Hospitals.

Keywords: Value-based Purchasing Program, Healthcare business model, Total Performance Score, Centers for Medicare and Medicaid Services

Introduction

Escalating costs, uncertain quality and efficiency, and a desire for transparency in healthcare led to the Patient Protection and Affordable Care Act of 2010 (ACA). Section 3001 of the ACA established the Hospital Value-Based Purchasing Program (VBPP), further defined in Section 1886(o) of the Social Security Act.1-2 The VBPP defines the value of healthcare provided by Medicare-participating acute care hospitals as patient outcomes per dollar expended and establishes a pay-for-performance program to promote quality improvement and efficiency. For fiscal year (FY) 2015, the Centers for Medicare & Medicaid Services (CMS) withheld 1.5% of base-operating diagnosis-related group annual payments to participating hospitals (approximately $1.4 billion) to create the VBPP’s financial framework and keep the mandate budget neutral.3

Recent approaches to healthcare reform involve alignment of payment incentives to drive the efficient and appropriate adoption of technological advances, transition to patient-centered delivery models, and the incorporation of outcome measures in care valuation.4-5 Christensen outlined the innovative disruption necessary among healthcare business models, based on how patients access care.5 Careful examination of the application of definitions of value across healthcare business models may provide insight into how this alignment might impact acute care hospitals. We hypothesized that the methodology utilized by the CMS VBPP program to assign Total Performance Score (TPS), developed as a proxy for value of care provided, would result in the stratification of participating hospitals based on business model.6

Methods

Value-Based Purchasing Program Methodology

CMS publishes the outcomes of the FY2015 Hospital Value-Based Purchasing Program on the CMS Hospital Compare Website.3 Publicly available data includes hospital name, address, unadjusted and adjusted process measures, unadjusted and adjusted outcome measures, patient satisfaction, cost, and total performance scores. The CMS Hospital Compare Website describes the quality indicators comprising four normalized, annually revised domains: processes, outcomes, patient satisfaction, and efficiency.3,7-11 The baseline and performance time periods for the reported measures vary by domain as well as by clinical indicator. For FY2015, the baseline period ranged from October 2010 to December 2011 and the performance period from October 2012 to December 2013.

The definitions of each clinical indicator specify minimum requirements with regard to number of cases treated, surveys, claims, or episodes of care. The number of clinical indicators and weights are as follows: 12 Clinical Process of Care measures (20%), 8 Patient Experience of Care measures (30%), 5 Outcome measures (30%), and 1 Efficiency measure (20%), for a maximum TPS of 100.3 The Efficiency domain scores each hospital’s risk-adjusted per-episode spending level in one of two ways: the hospital’s performance period is compared either with its own baseline period or with the baseline period across all Medicare hospitals. Thus, TPS reflects the hospital’s performance relative to other Medicare hospitals as well as its improvement over time. The TPS produces a value-based incentive payment adjustment factor for each eligible hospital,3 which is then multiplied by the withheld amount of the estimated annual CMS payment12 for redistribution to the corresponding Medicare-participating hospital.
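As a rough illustration of the weighting described above, the following sketch computes a TPS as the weighted sum of four domain scores. It assumes each domain score has already been normalized to a 0-100 scale; the function name, dictionary keys, and example scores are hypothetical and are not taken from CMS documentation.

```python
# FY2015 domain weights as stated in the text (they sum to 1.0).
FY2015_WEIGHTS = {
    "process": 0.20,
    "patient_experience": 0.30,
    "outcome": 0.30,
    "efficiency": 0.20,
}


def total_performance_score(domain_scores, weights=FY2015_WEIGHTS):
    """Return the weighted sum of domain scores, each assumed to be on a 0-100 scale."""
    return sum(weights[domain] * domain_scores[domain] for domain in weights)


# Hypothetical hospital, for illustration only.
example_scores = {
    "process": 50.0,
    "patient_experience": 40.0,
    "outcome": 60.0,
    "efficiency": 10.0,
}
print(total_performance_score(example_scores))
```

Because the weights sum to 1.0, a hospital scoring 100 in every domain reaches the maximum TPS of 100.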

Exclusions from the program include hospitals subject to payment reductions under the Hospital Inpatient Quality Reporting (IQR) Program, hospitals excluded from the Inpatient Prospective Payment System (IPPS), hospitals paid under Section 1814(b)(3) and exempted from the VBPP by the Secretary of the Department of Health and Human Services (HHS), hospitals cited by the HHS Secretary for deficiencies during the applicable fiscal year, and hospitals not meeting the minimum number of cases, measures, or surveys, as determined by the HHS Secretary.13

The Quality Net and Hospital Compare Websites provide additional information regarding CMS methodology.3,13 CMS Hospital Compare representatives provided further comments (10/2015, email communication).

Healthcare business models

Hospitals were grouped for comparison as readily identifiable types based on business model. General hospitals are characterized as “solution shops,” employing multi-disciplinary teams and the latest technology for characterization and treatment of complex diagnoses. Most general hospitals blend business models. Christensen suggests that lack of distinction of healthcare business model is a significant source of inefficiency.

“Value-adding process (VAP) businesses” are specialty centers concentrating on delivery of defined services with standardized procedure lines. “Facilitated networks” serve a finite membership within a mostly singular insurance plan and focus on prevention and management of chronic illnesses. They provide coordinated ambulatory services, rehabilitation, long-term care facilities, home services including nursing and equipment support, as well as online or institutional support groups uniting patients with similar conditions.5

Among participating hospitals, the solution shop was best approximated by University HealthSystem Consortium (UHC) members, which are comprehensive multi-disciplinary teaching institutions. Some general hospitals have moved to systematize specialty service lines, creating “hospitals within hospitals” to compete for patients and reduce costs.14 The effectiveness of this approach is not well studied.15 US News and World Report “Honor Roll” (USNWR) honorees were included to assess the success of this type of reengineering within the solution shop group based on the hospital VBPP’s TPS.14,16

The VAP approach is represented by Physician-Owned Surgical Hospitals (POSH). A comprehensive POSH list is not available though estimates propose greater than 230 POSH members nationwide.17 POSH members were identified using the Physician Hospital Association’s directory and online searches.18 Each POSH was cross-referenced with online resources or direct contact to confirm physician-ownership, status of mergers, and closures. Additional information collected includes type of specialty services, number of beds, and Emergency Room availability.

Finally, Kaiser Permanente hospitals (Kaiser) function within a facilitated network in a limited geographical distribution and fixed patient membership. Kaiser’s advantage is its long-term establishment as a healthcare provider and largely single payer system. Accountable Care Organizations (ACO) represent the federal effort to develop facilitated networks, with the early adopter Pioneer ACOs serving as the most highly developed example of this business model. To date, mixed evidence is available evaluating their effectiveness.19 Pioneer ACO data was obtained for Performance Year 2013 ACO affiliated hospitals to reflect the FY2015 VBPP performance periods.

Statistical Analysis

Hospitals were grouped by business model and were ranked by TPS. General linear modeling (SAS v9.2) estimated the mean TPS reported for each hospital category compared to all remaining Medicare-participating hospitals. Inclusion into a hospital category was not exclusive and hospitals were grouped with all applicable models. The F-test statistic determined statistical significance between TPS and hospital category. Hospital Case Mix Index (CMI) served as a control and the F-test statistic assessed differences in mean hospital CMI by hospital category. 3 Finally, mean TPS differences for each hospital category were estimated after concurrent adjustments for differences in the hospital CMI. A p-value of < 0.05 determined statistical significance.
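The analysis above was run in SAS; the following pure-Python sketch only illustrates the two kinds of estimate involved, under simplifying assumptions. `two_group_f` computes the unadjusted mean difference and F statistic for a single binary category indicator, and `fit_adjusted` regresses TPS on the indicator plus CMI by ordinary least squares, so that the indicator coefficient is the CMI-adjusted difference. All function names and the toy numbers are hypothetical, and no study data are reproduced.

```python
def two_group_f(in_group, others):
    """Mean difference and F statistic for a model with one binary indicator."""
    n1, n2 = len(in_group), len(others)
    m1, m2 = sum(in_group) / n1, sum(others) / n2
    grand = (sum(in_group) + sum(others)) / (n1 + n2)
    ss_between = n1 * (m1 - grand) ** 2 + n2 * (m2 - grand) ** 2
    ss_within = sum((x - m1) ** 2 for x in in_group) + sum((x - m2) ** 2 for x in others)
    f_stat = ss_between / (ss_within / (n1 + n2 - 2))  # 1 numerator degree of freedom
    return m1 - m2, f_stat


def solve(a, b):
    """Solve a small linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_adjusted(tps, in_group, cmi):
    """OLS fit of tps = b0 + b1*in_group + b2*cmi; b1 is the CMI-adjusted difference."""
    rows = [[1.0, float(g), float(c)] for g, c in zip(in_group, cmi)]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, tps)) for i in range(3)]
    return solve(xtx, xty)


# Toy data (hypothetical): 3 hospitals in the category, 4 outside it.
toy_group = [1, 1, 1, 0, 0, 0, 0]
toy_cmi = [2.0, 2.5, 2.2, 1.4, 1.6, 1.5, 1.8]
toy_tps = [10 + 5 * g + 3 * c for g, c in zip(toy_group, toy_cmi)]
print(two_group_f(toy_tps[:3], toy_tps[3:]))
print(fit_adjusted(toy_tps, toy_group, toy_cmi))
```

In the toy data the unadjusted difference mixes the category effect with the CMI effect, while the adjusted coefficient isolates the category effect. A p-value would additionally require the F distribution, which is omitted here to keep the sketch dependency-free.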

Results

For FY2015, Total Performance Scores were available for 3,089 eligible Medicare-participating hospitals (range = 6.6-92.9). The hospital categories included 104 POSH members, 111 UHC affiliated hospitals, 33 Kaiser Permanente hospitals, 14 USNWR leading institutions with “hospital within hospital” restructuring, and 124 ACO affiliated hospitals.

Table 1 summarizes the differences in mean TPS by hospital category compared to all remaining hospitals not in the respective group. The estimated mean TPS for POSH hospitals (64.11, 95% CI 61.83, 66.38) and Kaiser hospitals (60.79, 95% CI 56.56, 65.03) were significantly higher, with estimated mean differences of 23.18 points (p < 0.0001) and 19.30 points (p < 0.0001), respectively. Meanwhile, the UHC hospitals’ mean TPS (36.84, 95% CI 34.51, 39.17) was significantly lower, with a mean difference of 5.03 points (p < 0.0001). The estimated mean TPS for USNWR honorees was 3.47 points higher (p = 0.30) and for ACO affiliated hospitals 0.38 points lower (p = 0.74), suggesting that USNWR honorees and ACO affiliated hospitals performed similarly to all remaining hospitals.

Table 1.

Difference in Total Performance Score by Hospital Category

| Hospital Category | Mean TPS | Standard Deviation | 95% CI for Mean TPS | F-test statistic p-value | Mean TPS Difference between healthcare delivery models (95% CI) |
|---|---|---|---|---|---|
| POSH | | | | < 0.0001 | |
| Yes | 64.11 | 14.13 | 61.83 to 66.38 | | 23.18 (20.87 to 25.50) |
| No | 40.92 | 11.74 | 40.49 to 41.34 | | −23.18 (−25.50 to −20.87) |
| Kaiser Owned | | | | < 0.0001 | |
| Yes | 60.79 | 10.51 | 56.56 to 65.03 | | 19.30 (15.04 to 23.55) |
| No | 41.49 | 12.41 | 41.06 to 41.93 | | −19.30 (−23.55 to −15.04) |
| USNWR | | | | 0.3007 | |
| Yes | 45.16 | 6.56 | 38.59 to 51.74 | | 3.47 (−3.11 to 10.07) |
| No | 41.68 | 12.56 | 41.24 to 42.13 | | −3.47 (−10.07 to 3.11) |
| UHC affiliated | | | | < 0.0001 | |
| Yes | 36.84 | 8.33 | 34.51 to 39.17 | | −5.03 (−7.40 to −2.66) |
| No | 41.88 | 12.64 | 41.43 to 42.33 | | 5.03 (2.66 to 7.40) |
| ACO Hospital | | | | 0.7350 | |
| Yes | 41.33 | 12.92 | 39.12 to 43.54 | | −0.38 (−2.64 to 1.86) |
| No | 41.72 | 12.53 | 41.27 to 42.17 | | 0.38 (−1.86 to 2.64) |

TPS- Total Performance Score; CI- Confidence interval; POSH- Physician-owned surgical hospital; USNWR- US News and World Report; UHC- University HealthSystem Consortium; ACO-Accountable Care Organization

POSH members had the following characteristics: 33 beds and 7 operating rooms on average, urban location, and 50% had Emergency Departments. Specialty services provided varied from one surgical specialty area up to five. The most commonly marketed services were “Other” (83.7% including Radiology, Interventional Radiology, Cardiology, or Gastrointestinal procedures) followed by Orthopedic Surgery (80.8%), Spine (68.3%), General (51.9%), OBGYN (40.4%), Otolaryngology (37.5%) and lastly, Cardiac Surgery (17.3%).20,21

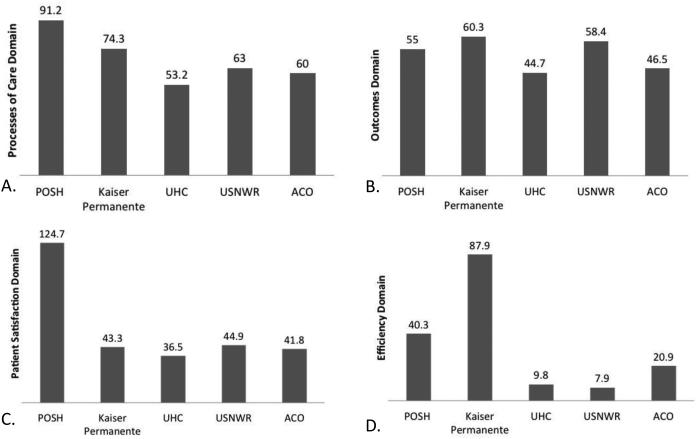

Figure 1(a-d) depicts the adjusted score per domain for each hospital category. Approximately eighty-five percent of POSH members did not meet eligibility criteria for the Outcomes Domain, leading to redistribution of 30% of the TPS for these cases and resulting in an inflated Patient Satisfaction Domain score of greater than 100%. Meanwhile, scores from 95.9% of the remaining three domains were available. The Efficiency domain received a “0” score in 35/104 (33.7%). In these cases, the percentage score was not redistributed and the maximum TPS for that Medicare hospital was decreased to either 70 or 80 of 100 depending on the domain-assigned weight.

Figure 1.

Domain Scores by Hospital Category. Average hospital category score is shown on top of the corresponding bar graph. A: Processes of Care Domain B: Outcomes Domain C: Patient Satisfaction Domain D: Efficiency Domain.

Forty-three Kaiser Permanente and affiliate hospitals were not included in the CMS hospital VBPP program. Reasons for exclusion were status as a children’s hospital, a rehabilitation or long-term care facility, or a Maryland hospital. Domain scores were available in 96.2%. Kaiser Permanente had the highest Outcome and Efficiency domain scores, 60.3% and 87.9% respectively.

Hospital VBPP data was available for 111 of 116 UHC affiliated hospitals. Data was available for 96.2% of this hospital category. Of the Efficiency scores, 52/111 (46.8%) were “0.” The VBPP requires that hospitals meet the 50th percentile national benchmark in this domain to be awarded a score, which disproportionately penalizes UHC hospitals.

The mean TPS of USNWR honorees did not differ significantly from the mean TPS of all remaining hospitals but was significantly different compared to UHC hospitals (p = 0.0004). Again, 6/14 hospitals received an Efficiency score of “0”; the remaining Efficiency scores were low (range 2-6). Data was available for only 124 of a possible 209 ACO affiliated hospitals, with 97.6% of the domains receiving a score.

Table 2 demonstrates significant differences in the mean hospital CMI by hospital category. POSH had the highest case mix value (mean =2.32 95% CI 1.39, 3.85) compared to other hospitals (p<0.0001), followed by USNWR honorees (mean =2.24 95% CI 2.16, 2.33 p =0.0140) and UHC affiliated hospitals (mean =1.99 95% CI 1.94, 2.05 p<0.0001). Kaiser Permanente hospitals had significantly lower CMI (mean =1.54 95% CI 1.53, 1.56 p<0.0001). Meanwhile, ACO affiliated hospitals had an equivalent CMI (mean =1.58, p=0.22).

Table 2.

Difference in Case Mix Index (CMI) by Hospital Category

| Hospital Category | Mean CMI | Standard Deviation | 95% CI for Mean CMI | F-test statistic p-value | Mean Difference in CMI between healthcare delivery models (95% CI) |
|---|---|---|---|---|---|
| POSH | | | | <0.0001 | |
| Yes | 2.32 | 0.37 | 1.39 to 3.85 | | 0.80 (0.74 to 0.86) |
| No | 1.52 | 0.28 | 0.79 to 2.89 | | −0.80 (−0.86 to −0.74) |
| Kaiser Owned | | | | 0.0140 | |
| Yes | 1.54 | 0.32 | 1.53 to 1.56 | | −0.14 (−0.25 to −0.03) |
| No | 1.68 | 0.20 | 1.61 to 1.76 | | 0.14 (0.03 to 0.25) |
| USNWR | | | | <0.0001 | |
| Yes | 2.24 | 0.15 | 2.16 to 2.33 | | 0.70 (0.53 to 0.87) |
| No | 1.54 | 0.31 | 1.53 to 1.55 | | −0.70 (−0.87 to −0.54) |
| UHC | | | | <0.0001 | |
| Yes | 1.99 | 0.24 | 1.94 to 2.05 | | 0.46 (0.40 to 0.52) |
| No | 1.53 | 0.31 | 1.52 to 1.54 | | −0.46 (−0.52 to −0.40) |
| ACO Hospital | | | | 0.2236 | |
| Yes | 1.58 | 0.25 | 1.53 to 1.62 | | 0.03 (−0.02 to 0.09) |
| No | 1.54 | 0.32 | 1.53 to 1.55 | | −0.03 (−0.09 to 0.02) |

CI- Confidence interval; POSH- Physician-owned surgical hospital; USNWR- US News and World Report; UHC- University HealthSystem Consortium; ACO- Accountable Care Organization

Table 3 presents the differences in TPS by hospital category after adjustments for differences in hospital CMI. Re-estimation of the differences with concurrent adjustments for case mix did not change the original results. The adjusted mean TPS was 6.42 points lower for UHC affiliated hospitals (mean = 30.84, 95% CI 27.15, 34.54, p < 0.0001), 27.19 points higher for POSH members (mean = 75.72, 95% CI 71.65, 79.79, p < 0.0001), and 19.05 points higher for Kaiser hospitals (mean = 57.90, 95% CI 53.02, 62.78, p < 0.0001). The difference in adjusted mean TPS was 2.14 (p = 0.5274) for USNWR hospitals and −0.45 (p = 0.6935) for ACO affiliated hospitals.

Table 3.

Difference in Total Performance Score by Hospital Category, Adjusted for Case Mix Index

| Hospital Category | Adjusted Mean TPS | Standard Deviation | 95% CI for Mean TPS | F-test statistic p-value | Adjusted Mean TPS Difference between healthcare delivery models (95% CI) |
|---|---|---|---|---|---|
| POSH | | | | < 0.0001 | |
| Yes | 75.72 | 2.07 | 71.65 to 79.79 | | 27.19 (24.62 to 29.78) |
| No | 48.53 | 1.15 | 46.27 to 50.79 | | −27.19 (−29.77 to −24.62) |
| CMI adjustment | | | | 0.0147 | |
| Kaiser Owned | | | | <0.0001 | |
| Yes | 57.90 | 2.20 | 53.02 to 62.78 | | 19.05 (14.73 to 23.37) |
| No | 38.85 | 1.11 | 36.68 to 41.01 | | −19.05 (−23.37 to −14.73) |
| CMI adjustment | | | | 0.0076 | |
| USNWR | | | | 0.5274 | |
| Yes | 40.87 | 3.39 | 33.58 to 48.16 | | 2.14 (−4.51 to 8.80) |
| No | 38.73 | 1.13 | 36.51 to 40.94 | | −2.14 (−8.80 to 4.51) |
| CMI adjustment | | | | <0.0001 | |
| UHC | | | | < 0.0001 | |
| Yes | 30.84 | 1.88 | 27.15 to 34.54 | | −6.42 (−8.88 to −3.97) |
| No | 37.27 | 1.14 | 35.02 to 39.52 | | 6.42 (3.97 to 8.88) |
| CMI adjustment | | | | < 0.0001 | |
| ACO Hospital | | | | 0.6935 | |
| Yes | 38.21 | 1.58 | 35.09 to 41.32 | | −0.45 (−2.70 to 1.80) |
| No | 38.66 | 1.11 | 36.46 to 40.85 | | 0.45 (−1.80 to 2.70) |
| CMI adjustment | | | | 0.0054 | |

TPS- Total Performance Score; CI- Confidence interval; POSH- Physician-owned surgical hospital; CMI- case mix index; USNWR- US News and World Report; UHC- University HealthSystem Consortium; ACO- Accountable Care Organization

Discussion

Growing emphasis on patient outcomes and quality measurements created a foundation for change within health care.22 Ranking systems of hospitals including USNWR, HealthGrades, Leapfrog, and Consumer Reports, incorporate quality indicators into annual reports for guiding consumer choices. The government responded with various CMS initiatives including the hospital VBPP as the first large-scale budget-neutral attempt comparing Medicare-participating hospitals fairly by applying the same measures to all hospitals and neutralizing patient-specific factors to achieve meaningful comparisons.

Care Delivery Models

Certain models consistently outperformed others based on the VBPP methodology. In general, smaller physician-owned hospitals outscored larger tertiary centers, teaching hospitals, and safety-net providers.

Information from ACO and Kaiser-associated facilities, including ambulatory clinics, long-term facilities, and home health services, is not included in the CMS VBPP. The goal of facilitated networks is to keep patients healthy. Thus, lower hospital CMI and high TPS may reflect the health of their patient population and success in utilizing healthcare services outside of the acute-care setting. However, the VBPP only captures acute care hospitals, limiting the generalizability and interpretation of the categories’ performance.

Private industry efforts to explore other healthcare delivery models have resulted in government policies that impede further development. An example is lack of expansion of VAP businesses secondary to Certificate of Need clauses, Stark laws, ACA, and powerful lobbyists such as the American Hospital Association despite no evidence supporting initial claims. 20-21,23-24 POSH demonstrate better patient satisfaction related to specialized training of providers and staff, similar or better patient outcomes, and lower costs hypothesized to occur as a result of aligning manager and leadership salaries with growth and profit of the organization. 17,20

A CMS study showed patient severity levels varied significantly among POSH hospitals. The CMS VBPP data shows POSH hospitals have significantly higher mean CMI similar to UHC and USNWR hospitals, questioning the notion of “cherry picking.” 17,25 With the rise of POSH members, general hospitals have not experienced differences in their patient population. Leavitt et al found no difference in patient transfer patterns between POSH hospitals and general hospitals compared to between general hospitals.17 Instead, competition increased restructuring initiatives to streamline profitable services, expanded available treatment options, and reduced cost while improving outcomes.14

Efficiency was the most variable domain for FY2015. Accounting for cost remains a challenge in the healthcare system, particularly in multi-payer systems and hospitals with blended business models. As such, Kaiser Permanente appears best equipped to link monetary value to specific services provided and is likely the most capable of containing cost (Figure 1d).

The hospital categories included have contrasting visions of patient care, affecting provider recruitment, resource allocation, target patient population, and services provided. This highlights another possible limitation: differences in interdisciplinary team dynamics and organizational culture are probably present across the various hospital categories. Part of the success of Kaiser Permanente and POSH members reflected within the VBPP may be attributable to cultural variance. Also missing from the hospital VBPP are the demands of biomedical research, provider training, and indigent care. Institutions carrying these demands must balance the provision of high-quality care and cost containment with the inefficiencies the additional demands introduce. UHC hospitals may benefit from restructuring and streamlining operations more in line with Kaiser Permanente and POSH hospitals, as well as from studying their cultural cohesiveness.

VBPP Methodological Limitations

The VBPP’s methodology has several limitations: reallocation of ineligible domain weights, reporting time delay, CMI risk-adjustment, claims data, payment incentive size, and clinical indicator overlap within CMS initiatives. These limitations are not initially evident, as methodological details are fragmented across fact sheets, Excel files, and websites,13 compromising transparency.

For domain inclusion, a minimum number of clinical indicators (or discharges, in the case of the Efficiency domain) must be reported. The Outcome domain has five measures: three 30-day Mortality metrics (acute myocardial infarction, heart failure, and pneumonia), the AHRQ PSI-90 Composite, and Healthcare-Associated Infections rates. At least 10 cases for two of the five Outcome metrics are required.12 When a hospital did not meet minimum requirements, the domain points were proportionally reallocated among the remaining eligible domains. In the case of an ineligible Outcomes domain, the redistributed weighting is Processes of Care 28.5%, Patient Satisfaction 43%, and Efficiency 28.5%.13 The resulting effect places undue emphasis on Patient Satisfaction and Process measures, creating an advantage for POSH members while tending to disadvantage UHC members.
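The proportional reallocation described above can be sketched as follows. This is a minimal illustration that assumes each remaining domain keeps its share relative to the other eligible domains; the function name and dictionary keys are hypothetical and do not come from CMS materials.

```python
# FY2015 domain weights as stated in the Methods.
FY2015_WEIGHTS = {
    "process": 0.20,
    "patient_experience": 0.30,
    "outcome": 0.30,
    "efficiency": 0.20,
}


def reallocate_weights(weights, ineligible):
    """Rescale the weights of eligible domains so that they sum to 1.0."""
    eligible = {d: w for d, w in weights.items() if d not in ineligible}
    total = sum(eligible.values())
    return {d: w / total for d, w in eligible.items()}


# Hospital without an eligible Outcomes domain (assumed scenario from the text).
no_outcome = reallocate_weights(FY2015_WEIGHTS, {"outcome"})
for domain, weight in no_outcome.items():
    print(f"{domain}: {weight:.1%}")
```

Under this assumption the rescaled weights are 2/7, 3/7, and 2/7, which agree with the reported 28.5%, 43%, and 28.5% to within rounding.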

Substantial lag time occurs between the baseline and performance periods and the point at which the data become actionable. The lag was built in to facilitate within- and between-hospital comparisons and to permit revision for accuracy. An unintended consequence of the two-year interval between the performance period and the financial impact of the results is that the effect of any improvement initiative takes at least two years to appear in the database, by which time the quality measures will have changed. Already for FY2016, the domains and weights will be Clinical Process of Care 10%, Patient Experience of Care 25%, Outcome 40%, and Efficiency 25%. Two additional outcome measures and one process of care measure will be added, while five outdated process measures will be removed.13

Outcome and Efficiency measurements are risk-adjusted based on hospital CMI, a relative value of the complexity and resource utilization of the hospital’s patient population. The specialty-adjusted CMI takes into account severity of illness, age, and significant beneficiary comorbidities. However, it does not adjust for sex, race, ethnicity, education, transfer status, or socioeconomic status.3,13,26 The Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) surveys adjust for well-known biases associated with completing subjective experience surveys retrospectively.27-28 Peng et al showed a disproportionate increase in patient severity scores in surgical patients within the CMS VBPP. They found significant variation in HCAHPS responses based on service line (obstetric, surgical, and medical) that could influence patient selection.29-31

Prior studies examined administrative versus clinical registry data in ability to provide the most relevant and accurate information to the consumer. 32 As the VBPP data comes from administrative claims, it contains these shortcomings and limitations in the interpretation of the findings presented. Claims reported to the hospital VBPP include only patients greater than 65 years old enrolled in Medicare Parts A and B. These reportable claims exclude dual Medicare and Medicaid beneficiaries, Medicare Advantage members, or patients with Medicare as a secondary payer. 3,13,26 Thus, generalizability of the hospital VBPP’s selected clinical indicators and results reported here may not be representative of all Medicare patients or the US patient population at acute care hospitals.

Significant overlap between the Hospital Acquired Conditions Reduction Program, Hospital VBPP, and Hospital Readmission Reduction Program results in double or triple penalties for poor performance on a single measure. Equally disheartening, the overlap in the CMS public reporting programs makes it difficult to discern the individual effect of the hospital VBPP in incentivizing improvement and improving transparency of performance results.

The size of the VBPP payment incentive is small compared to other CMS programs. This has the potential effect of detracting attention from the hospital VBPP towards other competing programs with more financial stake.

Defining Value

Specific clinical measures allow for targeted improvement efforts; however, they may have the unintended effect of causing other clinically relevant outcomes to be neglected. Current quality measures in the CMS VBPP fail to correlate with improved outcomes.11 Meanwhile, many important quality measures remain absent from popular ranking systems including the CMS VBPP.33 The HCAHPS survey measures domains important to patients and correlates with surgical quality.34 However, patient experience is neither a surrogate for patient-centered outcomes nor a substitute measure of clinical outcomes. The question becomes whether the hospital VBPP captures meaningful improvement in value of participating hospitals. Measuring value requires more than the combination of outdated processes of care, biased patient satisfaction responses, and outcomes not widely applicable across hospitals.

Conclusions

The CMS VBPP represents the first national effort to measure hospital value using scientific and statistical rigor. The CMS VBPP will continue to influence hospitals to focus on selected outcome measures, cost reduction, and patient-centered assessments of quality. Over time, the selection and revision of clinical and patient-centered quality indicators will serve as a key driver of organizational evolution. The impact of healthcare business models on performance across these domains is significant and should be considered as payment reform is further refined.

Acknowledgements

Dr. Jones and Dr. Ramirez had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Dr. Ramirez was supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health [T32HL007849]. The funder had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; or decision to submit the manuscript for publication. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Abbreviations

- ACA: Patient Protection and Affordable Care Act

- VBPP: Hospital Value-based Purchasing Program

- FY: fiscal year

- CMS: Centers for Medicare & Medicaid Services

- TPS: Total Performance Score

- IQR: Hospital Inpatient Quality Reporting

- IPPS: Inpatient Prospective Payment System

- HHS: Department of Health and Human Services

- VAP: value-adding process

- UHC: University HealthSystem Consortium

- USNWR: US News and World Report “Honor Roll”

- POSH: Physician-owned Surgical Hospitals

- Kaiser: Kaiser Permanente hospitals

- ACO: Accountable Care Organization

- CMI: Case Mix Index

- HCAHPS: Hospital Consumer Assessment of Healthcare Providers and Systems

Footnotes

Partial research results were presented at the 11th annual Academic Surgical Congress hosted by the Society of University Surgeons and the Association of Academic Surgery, at Jacksonville, Florida in February 2016.

Study concept and design: Ramirez, Turrentine, Jones.

Acquisition, analysis, or interpretation of data: All authors.

Drafting of the manuscript: Ramirez, Jones.

Critical revision of the manuscript for important intellectual content: All authors.

Statistical analysis: Ramirez, Stukenborg.

Obtained Funding: Ramirez.

Administrative, technical, or material support: Ramirez, Turrentine, Jones.

Study supervision: Jones.

References

- 1.Patient Protection and Affordable Care Act In: Congress . US Public Law 111-148. Washington DC: 2010. [Google Scholar]

- 2.Title XVIII of the Social Security Act In: Congress . US Public Law 114-115. Washington DC: 2015. (Section 1886) Payment to hospitals for inpatient hospital services. Amended and enacted. [Google Scholar]

- 3.US Department of Health and Human Services Hospital Compare. Available at: https://www.medicare.gov/hospitalcompare/data/hospital-vbp.html. Accessed August 8, 2015.

- 4.Porter ME. A Strategy for Health Care Reform — Toward a Value-Based System. N Engl J Med. 2009;361(2):109–112. doi: 10.1056/NEJMp0904131. [DOI] [PubMed] [Google Scholar]

- 5.Christensen CM, Grossman J, Hwang J. The Innovator's Prescription. McGraw Hill; 2009. [Google Scholar]

- 6.Porter ME. What Is Value in Health Care? N Engl J Med. 2010;363(26):2477–2481. doi: 10.1056/NEJMp1011024. [DOI] [PubMed] [Google Scholar]

- 7.Stulberg JJ, Delaney CP, Neuhauser DV, et al. Adherence to Surgical Care Improvement Project Measures and the Association With Postoperative Infections. JAMA. 2010;303(24):2479–2485. doi: 10.1001/jama.2010.841. [DOI] [PubMed] [Google Scholar]

- 8.Mabry CD. "Say it ain't so, Joe": Comment on "Hospital process compliance and surgical outcomes in medicare beneficiaries". Arch Surg. 2010;145(10):1004–1005. doi: 10.1001/archsurg.2010.189. [DOI] [PubMed] [Google Scholar]

- 9. Jones P, Shepherd M, Wells S, et al. Review article: what makes a good healthcare quality indicator? A systematic review and validation study. Emerg Med Australas. 2014;26(2):113–124. doi: 10.1111/1742-6723.12195.

- 10. Hernandez AF, Fonarow GC, Liang L, et al. The need for multiple measures of hospital quality: results from the Get with the Guidelines-Heart Failure Registry of the American Heart Association. Circulation. 2011;124(6):712–719. doi: 10.1161/CIRCULATIONAHA.111.026088.

- 11. Nicholas LH, Osborne NH, Birkmeyer JD, Dimick JB. Hospital process compliance and surgical outcomes in medicare beneficiaries. Arch Surg. 2010;145(10):999–1004. doi: 10.1001/archsurg.2010.191.

- 12. Telligen. Centers for Medicare & Medicaid Services. U.S. Department of Health and Human Services. How to Read Your FY 2015 Percentage Payment Summary Report. Quality Net. Available at: https://www.qualitynet.org. Published July 2013. Accessed November 2015.

- 13. Centers for Medicare & Medicaid Services. Quality Net. Available at: http://www.qualitynet.org. Accessed August 8, 2015.

- 14. Walston SL, Burns LR, Kimberly JR. Does reengineering really work? An examination of the context and outcomes of hospital reengineering initiatives. Health Serv Res. 2000;34(6):1363–1388.

- 15. Locock L. Redesigning health care: new wine from old bottles? J Health Serv Res Policy. 2003;8(2):120–122. doi: 10.1258/135581903321466102.

- 16. Olmsted MG, Geisen E, Murphy J, et al. Methodology: US News & World Report Best Hospitals 2014-15. Available at: http://www.usnews.com/pubfiles/BH_2014_Methodology_Report_Final_Jul14.pdf. Accessed August 2015.

- 17. Leavitt MO. Study of Physician-owned Specialty Hospitals. Available at: https://www.cms.gov/Medicare/Fraud-and-Abuse/PhysicianSelfReferral/Downloads/RTC-StudyofPhysOwnedSpecHosp.pdf. Published May 2005. Accessed August 8, 2015.

- 18. Physician Hospitals of America. Available at: http://www.physicianhospitals.org. Accessed August 24, 2015.

- 19. Adashi EY. A “doc fix” for the ages: the value proposition of tomorrow's Medicare. JAMA Surg. 2014;149(11):1101–1102. doi: 10.1001/jamasurg.2014.395.

- 20. Babu MA, Rosenow JM, Nahed BV. Physician-owned hospitals, neurosurgeons, and disclosure: lessons from law and the literature. Neurosurgery. 2011;68(6):1724–1732; discussion 1732. doi: 10.1227/NEU.0b013e31821144ff.

- 21. Baum N. Physician Ownership in Hospitals and Outpatient Facilities. Available at: http://www.chrt.org/publication/physician-ownership-hospitals-outpatient-facilities/. Published July 2013. Accessed August 2015.

- 22. Institute of Medicine (US) Committee on Quality of Health Care in America. To Err Is Human: Building a Safer Health System. Kohn LT, Corrigan JM, Donaldson MS, editors. Washington (DC): National Academies Press (US); 2000.

- 23. Trybou J, De Regge M, Gemmel P, et al. Effects of physician-owned specialized facilities in health care: a systematic review. Health Policy. 2014;118(3):316–340. doi: 10.1016/j.healthpol.2014.09.012.

- 24. Iglehart JK. The emergence of physician-owned specialty hospitals. N Engl J Med. 2005;352(1):78–84. doi: 10.1056/NEJMhpr043631.

- 25. Chang DC, Anderson JE, Yu PT, et al. Can hospitals “game the system” by avoiding high-risk patients? J Am Coll Surg. 2012;215(1):80–86; discussion 87. doi: 10.1016/j.jamcollsurg.2012.05.005.

- 26. Centers for Medicare & Medicaid Services. Hospital Value-Based Purchasing (VBP). Available at: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/hospital-value-based-purchasing. Accessed August 8, 2015.

- 27. O'Malley AJ, Zaslavsky AM, Elliott MN, et al. Case-Mix Adjustment of the CAHPS® Hospital Survey. Health Serv Res. 2005;40(6 Pt 2):2162–2181. doi: 10.1111/j.1475-6773.2005.00470.x.

- 28. Elliott MN, Zaslavsky AM, Goldstein E, et al. Effects of survey mode, patient mix, and nonresponse on CAHPS hospital survey scores. Health Serv Res. 2009;44(2 Pt 1):501–518. doi: 10.1111/j.1475-6773.2008.00914.x.

- 29. Peng L. Patient Selection Under Incomplete Case Mix Adjustment: Evidence from the Hospital Value-based Purchasing Program. Available at: http://www.lehigh.edu/~lip210/Lizhong_Peng_JMP.pdf. Published October 2014. Accessed December 2015.

- 30. Werner RM, Asch DA. The unintended consequences of publicly reporting quality information. JAMA. 2005;293(10):1239–1244. doi: 10.1001/jama.293.10.1239.

- 31. Evans MA, Pope GC, Kautter J, Ingber MJ. Evaluation of the CMS-HCC Risk Adjustment Model. Centers for Medicare & Medicaid Services; 2011.

- 32. Lawson EH, Zingmond DS, Hall BL, et al. Comparison between clinical registry and medicare claims data on the classification of hospital quality of surgical care. Ann Surg. 2015;261(2):290–296. doi: 10.1097/SLA.0000000000000707.

- 33. Sofaer S, Crofton C, Goldstein E, et al. What do consumers want to know about the quality of care in hospitals? Health Serv Res. 2005;40(6 Pt 2):2018–2036. doi: 10.1111/j.1475-6773.2005.00473.x.

- 34. Sacks GD, Lawson EH, Dawes AJ, et al. Relationship Between Hospital Performance on a Patient Satisfaction Survey and Surgical Quality. JAMA Surg. 2015;150(9):858–864. doi: 10.1001/jamasurg.2015.1108.