Abstract

Evidence suggests that dental emergencies are likely to occur when preferred care is less accessible. Communication barriers often cause patients to receive suboptimal treatment or remain in discomfort for extended periods. Furthermore, limitations in the conventional approach to managing dental emergencies prevent dentists from receiving critical information before patient visits. We developed a mobile application to reduce the uncertainty surrounding dental emergencies. Patient-provided information accompanied by high-resolution images may significantly help dentists predict urgency or prepare the necessary treatment resources.

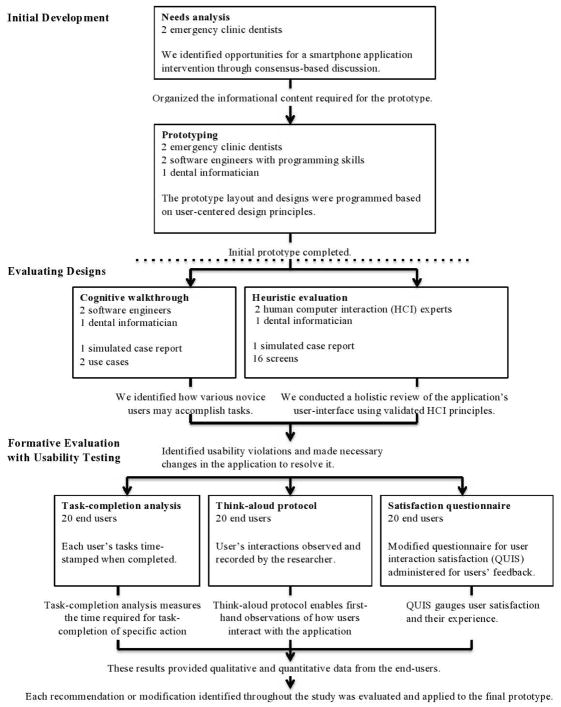

The development and study consisted of a needs analysis and quality assessment of intraoral images captured by smartphones; prototype development; refining the prototype through usability inspection methods; and formative evaluation through usability testing with prospective users.

The application guided all users through a series of questions designed to capture clinically meaningful data using familiar smartphone functions. All participants completed a report within 4 minutes, and all clinical information was comprehensible to the users.

Our results illustrate the feasibility of patients utilizing smartphone applications to report dental emergencies. This technology allows dentists to remotely assess care when direct patient contact is less practical.

This study demonstrates that patients can use mobile applications to transmit clinical data to their dentists and suggests the possibility of expanding their use to enhance access to routine and emergency dental care. Here, we address how to enable patients to communicate emergent needs directly to a dentist while sparing them emergency room visits.

Introduction

The American Dental Association’s Principles of Ethics states “dentists shall be obliged to make reasonable arrangements for the emergency care of their patients”.[1] Immediate assessments are vital for patients experiencing oral trauma, orofacial pain, infections, or similar conditions; however, access to care is often limited by distance and time.[2] Many dental emergencies occur during weekend and evening hours, when dental offices are usually closed.[3–7] With few options, many patients seek initial treatment at medical emergency clinics, where physicians provide symptomatic treatment and may not address the cause.[5,8] Emergency departments have experienced a surge in visits for dental emergencies, the majority of which remain untreated and are referred to a dentist for proper care.[2,9–12] As a result, unnecessary hospital costs accrue while patients remain in discomfort for extended periods. In dental practices, dentists must deviate from their routine workflow when caring for patients with emergencies. To prepare for these situations, many dental practices employ telephone-based services to manage out-of-office emergencies. Although effective for notifying dentists of a problem, these services generally convey neither the urgency of a patient’s needs nor the information required to estimate the time and resources needed for emergency care.[13,14]

Teleconsultations using mobile technologies have proven to be a strong candidate for enhancing inadequate patient-provider communications in medical fields.[15,16] Ubiquitous smartphone usage among most demographics has created opportunities for clinicians to deliver various health-related interventions in real-time using universally available capabilities.[17,18] Since emergencies are often subject to delays in care, the smartphone may provide the ability to remotely triage dental patients prior to chairside assessments. For example, the high-resolution camera on smartphones permits visual interpretations of patient cases and avoids misleading verbal accounts native to telephone-based systems. The functions of smartphone cameras (autofocus, anti-movement, and white-balance) permit fine detail and color visualization in dental images.[19] Transmitting these images appended with patients’ symptoms to a dentist could improve patient-provider communication in real-time and enable dentists to make more timely treatment decisions.

Dentistry has recently begun to explore mobile technologies for a variety of purposes[20] as smartphones’ capabilities for information capture and transmission continue to improve. To date, dental mobile applications have included functions such as data collection,[21] symptom checking,[22] and photographing and transmitting high-quality dental images.[19,23] Additionally, Namakian[24] has shown that dentists can assess patients remotely as well as they can in person when accurate information is available. Therefore, with proper information captured remotely, clinical decisions such as treatment planning and resource allocation could be supported. Here, we describe the development of a smartphone application and how it can enable patients to communicate emergent needs directly to a dentist, with the goal of avoiding prolonged treatment and emergency room visits. For a health application to be effective, we must first determine whether patients can feasibly use the application before determining how a dentist may choose to use the data.

Study goals

Our goal was to explore the feasibility of prospective patients using a smartphone to capture clinical information that helps dentists make fundamental clinical decisions when they cannot examine the patients directly. We explored potential users’ experiences with the camera and other means of data entry (voice/text) to develop a prototype application for exchanging information about dental emergencies between patients and dentists. We evaluated the prototype to determine the extent to which the application supported information capture by potential users in a simulated emergency.

Methods

The study consisted of four phases: 1) needs analysis and quality assessment of intraoral images captured by smartphones; 2) prototype development; 3) refining the prototype through usability inspection methods; and 4) formative evaluation through usability testing with 20 prospective users. Figure 1 provides a detailed description of these phases, which are described in the following sections.

Figure 1.

Prototype design process

Initial development

Needs Analysis

Prior to starting development, we spoke to dentists to understand their needs when patients present with emergencies. Two dentists who worked in the emergency clinic at the University of Pittsburgh School of Dental Medicine identified the common conditions for which their patients typically seek urgent care. For each condition, they compiled a list of the information they typically review when providing care for these patients. Because the dentists requested visual aids, members of the research team independently evaluated images from different smartphone models, including the device used in the study, and reached consensus that image quality was sufficient for displaying and identifying oral conditions across manufacturers.

Prototype Development

We first developed a paper prototype to design how information would flow through the application and incorporated all the necessary information identified by the dentists during the needs analysis. We included clinical questions intentionally written at an elementary reading level. Data entry was designed around conventional smartphone input methods such as text, radio button selections, voice recording, and capturing or appending photographs. For instance, the prototype application permits users to type additional comments about their symptoms and to leave a voice message for dentists to respond to. The free-speech audio input provides an additional method of reporting the incident to a provider. In contrast to traditional telephone services, transmitting images through mobile devices allows visual inspection of high-definition images and increases the ability to evaluate care remotely.

Design Evaluation

We refined and developed a high-fidelity prototype and evaluated it using two usability inspection methods[25]: 1) cognitive walkthrough to identify potential usability problems in the application’s functionality[25]; and 2) heuristic evaluation, to evaluate the extent to which the application’s screen design conformed with established design principles.[26] We then revised the prototype interface based on the results of these inspections.

The cognitive walkthrough was applied to determine how a novice user would navigate through the application.[22] In this method, the developer creates and evaluates the application’s instructions for completing a task to identify any difficulty that a novice could experience. Following the instructions provided by the application, we compared the assumed novice user experience with the intended action sequence, as outlined in Table 2. Prior to every action, we asked the questions proposed by Wharton et al[27] to analyze the success of task completion. Each question focused on a description of the appropriate action and of the other available actions, how the action is executed, and any modified goals while completing a task. Any ‘no’ answer represented a failure in the sequence and identified a potential problem with completing the task.

Table 1.

Information needs identified as being critical to dentists

| | |
|---|---|
| • Chief complaint | • Sensations |
| • Anatomic location | • Presence of swelling |
| • Onset of discomfort | • Presence of bleeding |
| • Pain intensity | • Difficulty eating/drinking |
| • Pain frequency | • Medication taken |
| • Presence of fever | • Medication effect |
| • Triggers of pain | • Photograph |
| • Pain reliefs | • Miscellaneous comments |

While the cognitive walkthrough identifies potential usability problems, heuristic evaluation assesses the user interface’s compliance with established design principles.[28–31] Typically, 2–3 evaluators with knowledge of human-computer interaction examine the interface layout and navigation to assess whether they comply with the ten heuristics defined by Nielsen[32] (Appendix A). Three evaluators (TKS, TPT, CDS) independently inspected the application’s user interface to identify heuristic violations and compiled all violations into a single list. The three evaluators then removed redundant findings through consensus-driven discussion and rated the severity of violations as described below:

Low – Minor usability or cosmetic problem, given low priority.

Medium – Usability or cosmetic problem causing exhaustive thought or assumptions by the user, given medium priority.

High – Major usability or cosmetic problem that may result in catastrophic task errors, given high priority.

Formative Evaluation with Usability Testing

Once the high-fidelity prototype was finalized, we performed formative evaluations with usability testing. An IRB exemption was obtained through the University of Pittsburgh, and we recruited a convenience sample of 20 prospective users for the usability testing.[25] The inclusion criteria required participants to be at least 18 years old, be literate in English, and have used a smartphone in the last six months. No participant had any dental education background.

To observe how users would navigate the application, a researcher (CDS) provided verbal instructions only on how to access the application from the home screen. Additionally, the researcher presented a ‘broken tooth’ scenario to the participant for which they would need to contact a dentist urgently. The researcher then asked the participant to try contacting the dentist using this application. No additional instructions regarding navigation through the application were provided as the application included all further instructions for them to complete the task of submitting a case-report.

Dental emergencies are time-sensitive needs and accordingly, it is essential to assess the time a potential patient may spend while using the application described.[33] We began the evaluation with a timed task-completion analysis, which examined the time required for completing a case report. [34] After initial instructions, participants were asked to create and submit a case report without interruption or assistance. All the participants completed the case report and each sub-task was time-stamped as they completed it.

Once the participant completed the timed task, we conducted usability testing using a retrospective think-aloud (RTA) protocol[35] to examine their experiences and uncover usability problems.[36,37] The RTA is described by Balatsoukas et al[38] as a “sensitive protocol for detecting unique usability problems related to users' cognitive behavior” applying both user verbalization and facilitator observation while users interact with a system interface.

Each participant revisited the screens from the timed-task exercise and recalled their experiences while navigating through the application.[39] We asked them to describe their thought processes for solving tasks and encouraged them to verbalize any positive or negative experiences with the application.[37,40] For instance, users described the comprehensibility of the user interface, as well as the difficulty or ease of completing tasks. We recorded detailed notes and subsequently analyzed the transcripts for usability problems experienced by the participants.

At the conclusion of usability testing, each participant completed the validated Questionnaire for User Interaction Satisfaction (QUIS)[41] on their experience with the application. The survey contains 39 questions that assess user satisfaction with the screen and layout, terminology and system information, learnability, system capabilities, and overall reactions to the application, and includes an additional section for users to list any positive or negative aspects they perceived. Based on participant feedback during the pilot phase, we modified the language of some QUIS questions and reduced the Likert response scale from nine points to six to reduce confusion.

Results

Needs Analysis

Table 1 lists the information we identified that dentists typically gather from patients who report urgent needs. We incorporated this information into the application to elicit responses concerning the four main areas of emergency dental care: teeth, gums, jaws, and mouth.
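As an illustration only, the information needs in Table 1 could be represented as a single report record. The Python sketch below is not the study's implementation (the prototype was an Android application); the class name `EmergencyReport`, the field names, the value types, and the example values are all assumptions chosen to mirror the sixteen items in Table 1.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


# Hypothetical record mirroring the information needs in Table 1.
# Field names and types are illustrative assumptions, not the study's data model.
@dataclass
class EmergencyReport:
    chief_complaint: str
    anatomic_location: str
    onset_of_discomfort: str
    pain_intensity: str
    pain_frequency: str
    presence_of_fever: bool
    triggers_of_pain: List[str] = field(default_factory=list)
    pain_reliefs: List[str] = field(default_factory=list)
    sensations: List[str] = field(default_factory=list)
    presence_of_swelling: bool = False
    presence_of_bleeding: bool = False
    difficulty_eating_drinking: bool = False
    medication_taken: Optional[str] = None
    medication_effect: Optional[str] = None
    photograph_path: Optional[str] = None  # location of the appended image, if any
    miscellaneous_comments: str = ""


# Example values are invented for the 'broken tooth' scenario used in testing.
report = EmergencyReport(
    chief_complaint="broken tooth",
    anatomic_location="upper right",
    onset_of_discomfort="today",
    pain_intensity="mild pain",
    pain_frequency="intermittent",
    presence_of_fever=False,
    triggers_of_pain=["cold drinks"],
)

# One field per information need listed in Table 1.
print(len(fields(EmergencyReport)))
```

Grouping the required items first and giving the optional ones defaults reflects the idea that some questions are mandatory while comments, medication details, and photographs may be left empty; which items the application actually required is not stated here.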

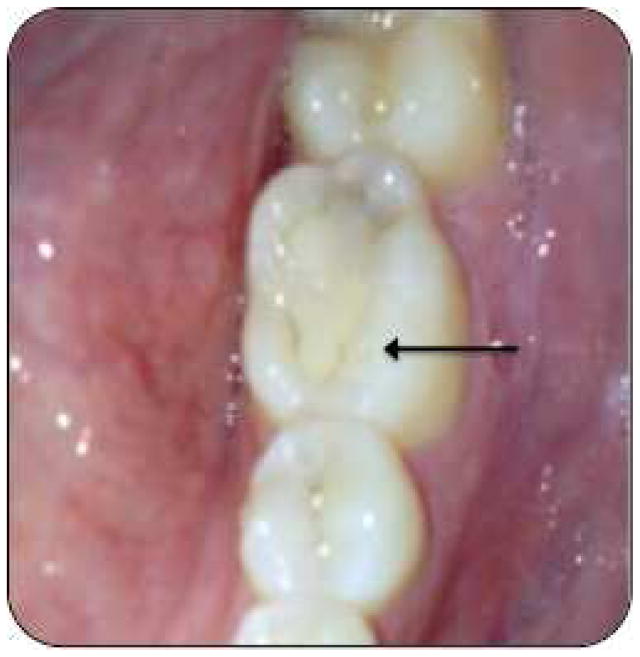

We also reached consensus that the quality of smartphone images for displaying oral conditions was sufficient. As displayed in Figure 2, the photographs captured with different smartphone devices clearly show the fine details of color/shade and shape of various tooth and gum conditions. This finding further supported the smartphone as a potential instrument to triage dental emergencies.

Figure 2.

Smartphone image of recurrent decay under composite restoration (photographed with Motorola RAZR)

Prototype

The prototype application was developed on a Motorola Droid X smartphone with an Android operating system because of the convenience of programming on its open-source platform. At the time of the study, the specifications of the smartphone used included an 8 mega-pixel camera and a 480 x 854 pixel resolution (228 ppi) screen. Image quality continues to increase with each generation, with some current smartphone specifications reaching beyond 41 mega-pixels of image resolution and screen resolution greater than 600 x 1024 pixels. A 2015 study by Boissin et al evaluated different smartphone camera images and concluded that Apple, Blackberry, and Android platforms could all replace digital cameras for the purposes of medical teleconsultation.[42]

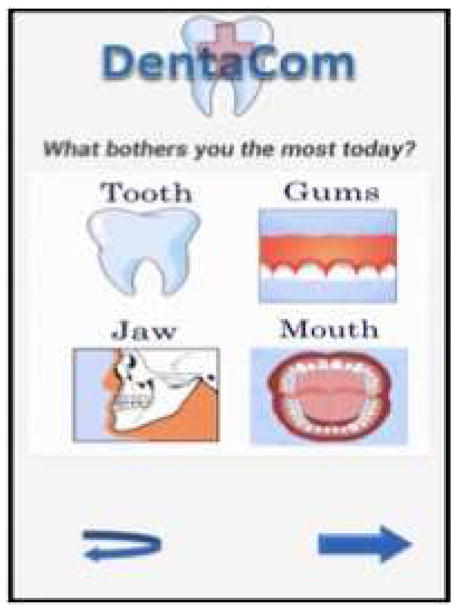

Upon opening the application, completing a single report requires navigating through approximately 13 individual screens (outlined in Table 2). Users begin at a standard login screen where they enter a username and a personal identification number. Next, the user selects “create a new report” and is prompted to select the dentist to whom the report will be transmitted. A title screen then asks the user “What bothers you the most today?” and the user selects a general area of concern: tooth, jaw, gums, or mouth (Figure 3). The subsequent screen asks the user to identify the scenario that best describes their discomfort. For example, under ‘Tooth’, the user can select ‘broken tooth’ or ‘displaced/lost dental work’.

Table 2.

Action sequence of completing a case report

| Screen | Main Task | Screen | Main Task |
|---|---|---|---|
| 1 | Select 'Start New Report' | 9 | Select general tooth region |
| 2 | Select receiving dentist | 10 | Select specific tooth location |
| 3 | Select general concern | 11 | Choose to photograph an image |
| 4 | Select scenario | 11 | Photograph an image |
| 5 | Answer Primary Evaluation 1 | 12 | Insert text comment |
| 6 | Answer Primary Evaluation 2 | 12 | Record a comment |
| 7 | Answer Primary Evaluation 3 | 12 | Enter contact information |
| 8 | Answer Other Indicators | 13 | Submit report |
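The action sequence in Table 2 can also be written down as plain data, which makes it easy to confirm that the sixteen listed tasks fall on thirteen screens. The Python sketch below is only an illustration: the variable names are assumptions, and the task labels are copied from Table 2.

```python
# (screen number, main task) pairs copied from Table 2.
ACTION_SEQUENCE = [
    (1, "Select 'Start New Report'"),
    (2, "Select receiving dentist"),
    (3, "Select general concern"),
    (4, "Select scenario"),
    (5, "Answer Primary Evaluation 1"),
    (6, "Answer Primary Evaluation 2"),
    (7, "Answer Primary Evaluation 3"),
    (8, "Answer Other Indicators"),
    (9, "Select general tooth region"),
    (10, "Select specific tooth location"),
    (11, "Choose to photograph an image"),
    (11, "Photograph an image"),
    (12, "Insert text comment"),
    (12, "Record a comment"),
    (12, "Enter contact information"),
    (13, "Submit report"),
]

# Group tasks by the screen on which they occur.
tasks_by_screen = {}
for screen, task in ACTION_SEQUENCE:
    tasks_by_screen.setdefault(screen, []).append(task)

# Screens that ask the user to do more than one thing.
multi_task_screens = [s for s, tasks in tasks_by_screen.items() if len(tasks) > 1]

print(len(ACTION_SEQUENCE), "tasks on", len(tasks_by_screen), "screens")
print("Screens with several tasks:", multi_task_screens)
```

Screens 11 and 12 are the only ones carrying more than one task in this listing.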

Figure 3.

Screen 3: Select General Concern

In the following four screens, users enter their symptoms and physical conditions using slide indicators, radio buttons, and checkboxes. The user is then asked to upload or photograph two images of their present condition. The user also has the option to enter free text in the comments box, specify contact preferences (email, telephone, text message), and record a voice message. On the final screen, the user can submit the report and view a summary of their inputs to confirm the completed submission.

Usability Inspection Results

Cognitive Walkthrough

The cognitive walkthrough identified two potential usability problems that could interfere with submitting a report. Two separate screens where users select the regional and proximal location of their discomfort were identified as being too far apart given their similarity. These two screens were later relocated to create a more natural progression of tasks. The second observation prompted a revision to the ‘Likert scale faces’ to reduce ambiguous or indefinite responses. In screens where the user is instructed to select their level of pain, the six Likert-face options displayed were reduced to three (no pain, mild pain, severe pain).

Heuristic Evaluation

Of the twenty-four heuristic violations identified by the three evaluators, the most violated heuristics were ‘Consistency and standards’ and ‘Match between system and the real world’ with six violations for each heuristic. The evaluators rated four violations as high severity (described below) and the remaining as medium or low severity. Most of the medium and low severity violations included texts that required rephrasing and design issues that were reasonably simple to revise.

Consistency and Standards

The evaluators identified inconsistent use of checkboxes and radio buttons on two screens. These violations were rated as high severity because they could confuse users who are unaware that they can enter more than one response to a question. Conventionally, checkboxes indicate that multiple responses may be selected, while radio buttons are used for single-choice responses. The answer choices on these screens were subsequently revised to reflect this standard.

Match Between System and the Real World

Previously, the question, ‘When did the pain begin?’ displayed intervals of time that overlapped each other. We rated this finding a High Severity violation because it is critical to have an accurate response on pain onset. The responses provided were not inclusive of all ranges of time and were inherently confusing.
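One way to guard against this kind of problem is to check answer options programmatically. The Python sketch below is a hypothetical illustration and not part of the study's application. It assumes each option can be expressed as a half-open interval of hours since onset, and the example intervals are invented to show the overlap and gap cases, since the original answer choices are not listed here.

```python
from typing import List, Optional, Tuple

# (label, start in hours inclusive, end in hours exclusive; None means open-ended)
Option = Tuple[str, float, Optional[float]]


def find_problems(options: List[Option]) -> List[str]:
    """Report overlaps and gaps between consecutive options, ordered by start."""
    problems = []
    ordered = sorted(options, key=lambda o: o[1])
    if ordered and ordered[0][1] > 0:
        problems.append(f"gap before '{ordered[0][0]}'")
    for (name_a, _, end_a), (name_b, start_b, _) in zip(ordered, ordered[1:]):
        if end_a is None or end_a > start_b:
            problems.append(f"overlap: '{name_a}' and '{name_b}'")
        elif end_a < start_b:
            problems.append(f"gap between '{name_a}' and '{name_b}'")
    if ordered and ordered[-1][2] is not None:
        problems.append(f"no option after '{ordered[-1][0]}'")
    return problems


# Invented example with one overlap and one uncovered range.
flawed = [
    ("Today", 0, 24),
    ("1 to 3 days ago", 12, 72),
    ("More than a week ago", 168, None),
]

# Invented example that covers every onset time exactly once.
revised = [
    ("Less than 1 day ago", 0, 24),
    ("1 to 7 days ago", 24, 168),
    ("More than 7 days ago", 168, None),
]

for issue in find_problems(flawed):
    print(issue)
print(find_problems(revised))
```

Half-open intervals are used so that a boundary value such as exactly 24 hours belongs to one option only.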

Error Prevention

The user, when asked to choose a dentist to contact, had the option to select “none”. This option is counterintuitive because the user needs to send the report to a dentist. It was therefore rated a high severity violation, and the “none” option was subsequently removed. Evaluators also recommended marking mandatory questions with asterisks to prevent errors caused by incomplete answers.

Visibility of System Status

The application did not provide any feedback or visual cue to acknowledge that the user submitted a case report to the selected dentist or dental practice. Once this violation was discovered, a final confirmation screen was added into the interface to prevent future confusion.

Assessing End User Usability

We recruited twenty participants (nine male, eleven female) between 18 and 61 years of age for the usability study. The average age of the participants was 32.4 years (standard deviation [SD]: 15.5 years), with 70% between 18 and 29 years of age. The participants had an average of 4.6 years of experience (SD: 1.6 years) using smartphones. Only one participant was a novice user with one year or less of smartphone experience.

Timed task-completion analysis

All participants successfully completed the simulation of a case report in less than 4 minutes. The mean time for users to complete the report was 3.18 minutes (SD: 34.9 seconds), with a range from 2.28 to 3.88 minutes. Participants took the most time (average 73 seconds, SD: 6.4 seconds) completing information on four screens (screens 5–8) that required 50% of the total data entry. The average completion times for each screen are displayed in Table 3. The screen for ‘applying comments and contact information’ took the most time of any individual screen (30.75 seconds, SD: 11.17 seconds) and required the user to input four separate data elements. On this screen, users could enter data both as free text and by voice.

Table 3.

Timed task-completion

| Task | Elements (n) | Item | Mean | Min | Max | SD |
|---|---|---|---|---|---|---|
| | | Years of participant smartphone experience | 4.60 | 3 | 7 | 1.50 |
| | | Participant age (years) | 32.40 | 18 | 60 | 15.48 |
| | | Simulated tasks to complete 1 case report: time till completion (seconds), non-think-aloud | | | | |
| 1 | 1 | Initiate new report | 4.40 | 2 | 6 | 1.82 |
| 2 | 1 | Select receiving dentist | 9.35 | 3 | 17 | 5.10 |
| 3 | 1 | Select regional concern | 9.95 | 3 | 25 | 4.61 |
| 4 | 1 | Select proximal scenario | 7.65 | 3 | 16 | 4.02 |
| 5 | 2 | Answer Pain Severity Questionnaire | 14.35 | 7 | 25 | 4.99 |
| 6 | 2 | Answer Onset and Fever Questionnaire | 19.80 | 10 | 37 | 7.92 |
| 7 | 3 | Answer Pain Description Questionnaire | 22.00 | 8 | 32 | 7.41 |
| 8 | 5 | Answer Other Indicators | 16.45 | 11 | 32 | 5.28 |
| 9 | 1 | Select regional location | 13.35 | 5 | 19 | 5.30 |
| 10 | 1 | Select proximal location | 12.35 | 3 | 20 | 6.54 |
| 11 | 1 | Append photograph | 24.05 | 11 | 35 | 6.17 |
| 12 | 4 | Apply comments and contact info | 30.75 | 14 | 58 | 11.17 |
| 13 | 1 | Submit report | 6.65 | 3 | 20 | 4.51 |
| | | Total report completion time (seconds) | 191.10 | 137 | 233 | 34.90 |
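The summary figures reported for the timed task can be cross-checked against the per-screen means in Table 3. The short Python sketch below is only a worked check with assumed variable names. It sums the thirteen screen means, which gives the reported total of 191.10 seconds (consistent with the mean of 3.18 minutes), and confirms that screens 5–8 account for about 73 seconds and for 12 of the 24 data elements.

```python
# (screen, data elements, mean completion time in seconds), copied from Table 3.
SCREENS = [
    (1, 1, 4.40), (2, 1, 9.35), (3, 1, 9.95), (4, 1, 7.65),
    (5, 2, 14.35), (6, 2, 19.80), (7, 3, 22.00), (8, 5, 16.45),
    (9, 1, 13.35), (10, 1, 12.35), (11, 1, 24.05), (12, 4, 30.75),
    (13, 1, 6.65),
]

total_seconds = sum(mean for _, _, mean in SCREENS)
total_elements = sum(n for _, n, _ in SCREENS)

# Screens 5-8 hold the symptom questionnaires.
questionnaire = [row for row in SCREENS if 5 <= row[0] <= 8]
questionnaire_seconds = sum(mean for _, _, mean in questionnaire)
questionnaire_elements = sum(n for _, n, _ in questionnaire)

print(f"Total mean time: {total_seconds:.2f} s")
print(f"Screens 5-8: {questionnaire_seconds:.2f} s")
print(f"Share of data elements on screens 5-8: {questionnaire_elements / total_elements:.0%}")
```

Summing the per-screen means reproduces the mean total because every participant completed every screen.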

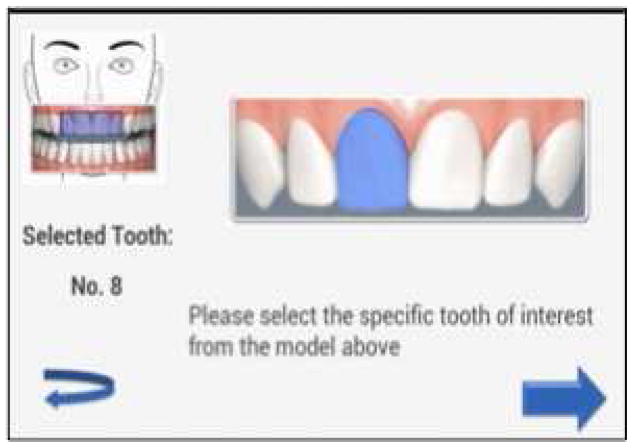

Retrospective think-aloud protocol

Of the thirteen screens outlined in Table 2, participants experienced the most difficulty with Screens 9 and 10, which required the user to document the location of their problem (Screen 10 is shown in Figure 4). We identified ten usability problems in these two screens. Here, users first selected the regional location of the simulated discomfort and were then shown a larger image on which to select a more precise location on a mouth model. Seven users considered this task redundant because they had already selected the exact location on the previous screen. The participants recommended using a single screen with a magnification function to record the exact location of their concern.

Figure 4.

Screen 10: Select specific tooth location

Participants reported unfamiliarity with the slider indicators on Screens 5 and 6 in ten separate instances. Four users also expressed difficulty with the record-function button on the ‘Apply comments and contact information’ screen. These users were able to record their voice input, but they required multiple attempts to complete the task. Users requested that the words ‘record’, ‘play’, and ‘stop’ be included with the button images. The complete list of observed usability problems is provided in Appendix B.

User satisfaction

The QUIS assessed users’ satisfaction with the application’s screen layout, terminology and system information, learnability, capability, and their overall reaction. The participants rated each of these categories on a scale of 1–6, with six indicating most satisfied. Participants indicated satisfaction with the application’s usability: every category received a mean score greater than 5.3, with an overall average of 5.59. Table 4 shows the average responses of the twenty participants for each category.

Table 4.

User satisfaction scores of each QUIS component (Scale: 1–6, least to most satisfied)

| Component | Number of questions | Mean satisfaction score (scale: 1–6, least to most satisfied) | Average standard deviation |
|---|---|---|---|
| Screen and layout | 5 | 5.58 | 0.67 |
| Terminology and system information | 11 | 5.56 | 0.78 |
| System learnability | 8 | 5.77 | 0.48 |
| System capabilities | 8 | 5.68 | 0.65 |
| Overall reaction to the software | 5 | 5.36 | 0.87 |
| Total | 37 | 5.59 | 0.32 |
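The totals in Table 4 can be reproduced from the component rows. The Python sketch below is a worked check with assumed variable names. The observation that the reported 5.59 matches the simple average of the five component means is an inference from the table values, not something stated in the text; weighting each component by its number of questions would give a slightly different figure.

```python
# (component, number of questions, mean satisfaction score), copied from Table 4.
QUIS = [
    ("Screen and layout", 5, 5.58),
    ("Terminology and system information", 11, 5.56),
    ("System learnability", 8, 5.77),
    ("System capabilities", 8, 5.68),
    ("Overall reaction to the software", 5, 5.36),
]

n_questions = sum(n for _, n, _ in QUIS)

# Simple average of the five component means.
unweighted = sum(score for _, _, score in QUIS) / len(QUIS)

# Average weighted by the number of questions in each component.
weighted = sum(n * score for _, n, score in QUIS) / n_questions

print(n_questions, round(unweighted, 2), round(weighted, 2))
```

The 37 rated questions plus the two open-ended questions account for the 39 questions in the survey.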

The last two QUIS questions permitted participants to provide open-ended responses about their positive or negative perceptions of the application. Only 11 participants (55%) responded to one or both questions. Five of the eleven (45%) stated that the application was simple to use and easy to navigate. Other positive comments noted the application’s easy-to-follow directions and its efficiency. In contrast, four participants commented that the application was at times slow or not smooth when transitioning between screens. Three users listed repetitive or redundant questions as a drawback of the application.

Discussion

The study results indicate the feasibility of patients using mobile applications to transmit relevant clinical data to their dental providers during an emergency. The results also highlight the ease of using the mobile application among individuals of various ages and with minimal smartphone experience. The results are significant because they suggest the possibility of expanding mobile applications to enhance patient follow-up after initial dental appointments, improve patient understanding of seeking dental care, and improve access to care for dental emergencies outside office hours. The participants’ high user satisfaction ratings underscore the importance of performing a needs analysis and incorporating user-centered design methods early in the development of the technology rather than later. Previous studies of mobile applications have explored their use by providers to contact patients and to remotely monitor patients with various health conditions.[15–18] To our knowledge, this is the first study in the United States to explore the development process and potential use of a mobile application that allows prospective patients to contact their dental providers.

Mobile applications have the potential to make a difference where dentistry is striving to make progress. Broken appointments are a major problem in dentistry, especially among socioeconomically disadvantaged populations. Lack of transportation, limited finances, and inflexible work schedules are major reasons for broken appointments among Medicaid-enrolled patients.[43–45] Moreover, dental practices are concerned about the high attrition rate of patients following their initial treatments and patients’ failure to comply with follow-up visits.[46] Establishing teleconsultations and patient education modules through mobile applications may alleviate these issues to some extent.[47,48] A Pew Research Center survey found that 50% of low-income American adults own a mobile phone and that 62% of all mobile phone owners have used their phone to look up health information, and these numbers continue to increase. An additional 7% of Americans are considered ‘smartphone dependent’, with no other means of accessing broadband Internet.[49]

In terms of health care communication, the paradigm is shifting toward clinicians receiving clinical data on smartphones through e-mails, calls, and text messages.[50] For patients, this landscape is rapidly changing with the use of secure messaging through patient health portals that allow patients to access and exchange personal health information. However, dental patients are rarely afforded the opportunity to communicate their health information directly to their providers. The clinical relevance of this study is that we provided a platform for patients to communicate directly with their dentist rather than going through other medical or emergency room avenues and not receiving appropriate treatment.

We designed the application using user-centered design methods and by leveraging the capability of a smartphone to take high-quality images. The study results indicate the usefulness of our mobile application for contacting dentists and conveying relevant information to them during dental emergencies and after office hours. The range of participants’ ages (18–61 years) and smartphone experience (1–7 years) further demonstrated its versatility in accommodating users with varying levels of smartphone experience.

The drawbacks of integrating mobile applications with patient care have previously been acknowledged, due to lack of evaluations that impact both the user’s experience and well-being.[51,52] Implementation of poorly designed software can have costly repercussions for both providers and patients and valid assessments are necessary prior to clinical use.[41,53] Furthermore, many have detailed the value of robust design and evaluation techniques that we utilize here.[54] Specifically, inclusion of end-users is essential while developing patient-centric technologies.[55,56] Our study was an initial validation of the application’s usefulness with prospective dental patients. As a result of this evaluation, additional tests should assess users’ capabilities to select all oral anatomical locations supported by the application, as well as other approaches for obtaining the same clinical data. Furthermore, the significance of the anatomical data should be evaluated with dentists prior to any interface revisions.

Though smartphones may be useful for supporting the efficacy of remote examinations in dentistry, there is no definitive substitute for a chairside exam. The application described here only intends to exchange clinical information when other means of dental evaluations are less ideal. Our results warrant a clinical study to validate the utility of the clinical data captured, as well as the application’s true implementation in clinical practice. Hypothetically, the application would allow dentists to evaluate how urgent a patient’s conditions are and manage emergent situations in a more efficient manner than conventional methods. Smartphone use as a patient self-help tool may very well augment the current approach for receiving care when access is limited. Exploiting the utility of these mobile applications could relieve unwarranted patient visits, and expedite appropriate patient care.[3,4,10,22,57]

Limitations

The study reports an assessment of the technical feasibility of utilizing smartphones between dentists and patients and does not address the clinical implications. The twenty participants studied here only simulated a patient experiencing dental trauma and were in no state of dental emergency. Actual patients experiencing an emergency scenario may therefore significantly deviate from the reported completion times for navigating through the application.

Furthermore, because we report only potential users’ interactions, the application’s clinical utility must still be investigated to determine the efficacy of implementing a remote triaging service for patients. Further investigation should also assess how successfully and accurately users self-report information, including the images taken of dental discomfort. This would determine the significance and value of the various data elements included in this application for triaging emergency dental care. Eventual real-world implementation must also address reimbursement, legal, regulatory, and policy issues, including appropriate clinical guidelines and treatment protocols.

Conclusion

We designed and implemented a test mobile application to facilitate information exchange between dentists and patients with the goal of expediting emergency care. The application successfully supported clinical information capture by patients using ubiquitous smartphone inputs such as text and radio buttons, voice recording, and high-resolution photography. All participants successfully completed the instructed clinical tasks, and they commended the application for being instructional and simple to use. The results of this study illustrate the feasibility of patients utilizing a smartphone application to self-report emergency dental conditions. The results also support the ability of dentists to remotely assess care when direct patient contact is less practical.

Acknowledgments

We gratefully acknowledge the study participants for their valuable time and feedback. We would like to acknowledge Yuxin Liu and Jingtao Wang for their contribution while developing this application. We would also like to acknowledge Dr. Steven Handler for his feedback on the manuscript. This study was supported in part by the National Institutes of Health grant 5K08DE018957-02 (Thankam Thyvalikakath), the Lilly Endowment, Inc. Physician Scientist Initiative (Titus Schleyer), and by the National Library of Medicine training grant 5 T15 LM007059-24 awarded to the Department of Biomedical Informatics, University of Pittsburgh. The content of this manuscript along with the intellectual property of the application developed is solely the responsibility of the authors.

Appendix A. Nielsen's ten usability heuristics for evaluating user interfaces

| Heuristic | Explanation |
|---|---|
| 1. Visibility of system status | The system should always keep users informed about what is going on, through appropriate feedback within reasonable time. |
| 2. Match between system and the real world | The system should speak the users' language, with words, phrases and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order. |
| 3. User control and freedom | The system should support undo and redo. |
| 4. Consistency and standards | Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions. |
| 5. Error prevention | Even better than good error messages is a careful design, which prevents a problem from occurring in the first place. |
| 6. Recognition rather than recall | Make objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. |
| 7. Flexibility and efficiency of use | Allow users to tailor frequent actions. |
| 8. Aesthetic and minimalist design | Dialogues should not contain information that is irrelevant or rarely needed. |
| 9. Help users recognize, diagnose, and recover from errors | Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution. |
| 10. Help and documentation | Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user's task, list concrete steps to be carried out, and not be too large. |

Appendix B. Observations identified by users through the retrospective think-aloud protocol

| Location | Issue | Frequency |
|---|---|---|
| Initiate new report | - | - |
| Select receiving dentist | Expected 'next' to be automatic | 1 |
| Select regional concern | Expected 'next' to be automatic | 1 |
| Select proximal concern | - | - |
| Evaluation metrics 1 | Missed slider | 3 |
| | Issue with using slider | 2 |
| | Selected face (image) | 1 |
| | Missed question | 1 |
| Evaluation metrics 2 | Issue with using slider | 5 |
| | Required second look | 1 |
| Evaluation metrics 3 | - | - |
| Evaluation metrics 4 | Confused by question | 1 |
| Select regional location | Required second look | 1 |
| Select proximal location | Initially chose correct tooth | 7 |
| | Chose incorrect tooth | 2 |
| Append photograph | - | - |
| Apply comments and contact info | Required two tries | 2 |
| | Selected 'record button' to stop | 2 |
| | Did not select record | 2 |
| Submit report | - | - |

Footnotes

Disclosure. None of the authors reported any disclosures.


References

- 1. American Dental Association. Principles of Ethics and Code of Professional Conduct. 2012.

- 2. Seu K, Hall KK, Moy E. Healthcare Cost and Utilization Project (HCUP) Statistical 2012. Agency for Health Care Policy and Research; US: 2006–2012. Nov, Emergency Department Visits for Dental-Related Conditions, 2009: Statistical Brief #143.

- 3. Bae J, Kim Y, Choi Y. Clinical characteristics of dental emergencies and prevalence of dental trauma at a university hospital emergency center in Korea. Dent Traumatol. 2011;27(5):374–378. doi: 10.1111/j.1600-9657.2011.01013.x.

- 4. Bai J, Ji A, Yu D. Clinical analysis of dental trauma patients in the emergency room. Beijing Da Xue Xue Bao. 2010;42(1):90–93.

- 5. Portman-Lewis S. An analysis of the out-of-hours demand and treatment provided by a general dental practice rotation over a five-year period. Prim Dent Care. 2007;14(3):98–104. doi: 10.1308/135576107781327043.

- 6. Allareddy V, Nalliah R, Haque M, Johnson H, Rampa S, Lee M. Hospital-based Emergency Department Visits with Dental Conditions among Children in the United States: Nationwide Epidemiological Data. Pediatr Dent. 2014;36(5):393–399.

- 7. Walker A, Probst J, Martin A, Bellinger J, Merchant A. Analysis of hospital-based emergency department visits for dental caries in the United States in 2008. J Public Health Dent. 2014;74(3):188–194. doi: 10.1111/jphd.12045.

- 8. Trivedy C, Kodate N, Ross A, et al. The attitudes and awareness of emergency department (ED) physicians towards the management of common dentofacial emergencies. Dent Traumatol. 2012;28(2):121–126. doi: 10.1111/j.1600-9657.2011.01050.x.

- 9. Cohen L, Bonito A, Eicheldinger C, et al. Comparison of patient visits to emergency departments, physician offices, and dental offices for dental problems and injuries. J Public Health Dent. 2011;71(1):13–22. doi: 10.1111/j.1752-7325.2010.00195.x.

- 10. Cohen LA, Manski RJ, Magder LS, Mullins CD. EDs grapple with surging demand from patients with dental problems. ED Manag. 2012;24(5):56–59.

- 11. Wall T, Nasseh K. American Dental Association 2013;Health Policy Resources Center, Research Brief. May, 2013. Dental-Related Emergency Department Visits on the Increase in the United States.

- 12. cdc.gov. Kim JE. QuickStats: Percentage of Emergency Department (ED) Visits That Were Dental-Related* Among Persons Aged <65 Years, by Age Group — National Hospital Ambulatory Care Survey, 1999–2000 to 2009–2010. National Hospital Ambulatory Care Survey. 2013;62(38):803.

- 13. Jeavons I. Triage protocols. [Comment on Out-of-hours dental care] Br Dent J. 2006;200(4):183–184. doi: 10.1038/sj.bdj.4813275.

- 14. Anderson L. Availability of emergency dental treatment - a question of organization. Dent Traumatol. 2010;26(211) doi: 10.1111/j.1600-9657.2010.00901.x.

- 15. Ebner C, Wurm EM, Binder B, Kittler H, Lozzi G, Massone C. Mobile teledermatology: a feasibility study of 58 subjects using mobile phones. J Telemed Telecare. 2008;14:2–7. doi: 10.1258/jtt.2007.070302.

- 16. Yamada M, Watarai H, Andou T, Sakai N. Emergency image transfer system through a mobile telephone in Japan: technical note. Neurosurgery. 2003;52:986–990. doi: 10.1227/01.neu.0000053152.45258.74.

- 17. Ozdalga E, Ozdalga A, Ahuja N. The Smartphone in Medicine: A Review of Current and Potential Use Among Physicians and Students. J Med Internet Res. 2012;14(5) doi: 10.2196/jmir.1994.

- 18. Saleh A, Mosa M, Yoo I, Sheets L. A systematic review of healthcare applications for smartphones. BMC Medical Informatics and Decision Making. 2012;12(67) doi: 10.1186/1472-6947-12-67.

- 19. Park W, Lee H, Jeong J, Kwon G, Kim K. Optimal protocol for teleconsultation with a cellular phone for dentoalveolar trauma: an in-vitro study. Imaging Sci Dent. 2012;42(2):71–75. doi: 10.5624/isd.2012.42.2.71.

- 20. Stein C, Walker L. Top 15 Mobile Applications for Dental & Oral Health. 2012 http://www.medscape.com/features/slideshow/dentalapps.

- 21. Aziz S, Ziccardi V. Telemedicine using smartphones for oral and maxillofacial surgery consultation, communication, and treatment planning. J Oral Maxillofac Surg. 2009;67:2505–2509. doi: 10.1016/j.joms.2009.03.015.

- 22. Favero L, Paven L, Arreghini A. Communication through telemedicine: home teleassistance in orthodontics. Eur J Paediatr Dent. 2009;10(4):163–167.

- 23. Park W, Kim D, Kim J, Yoo S. A portable dental image viewer using a mobile network to provide a tele-dental service. J Telemed Telecare. 2009;15(3):145–149. doi: 10.1258/jtt.2009.003013.

- 24. Namakian M, Subar P, Glassman P, Quade R, Harrington M. In-person versus “virtual” dental examination: congruence between decision-making modalities. J Calif Dent Assoc. 2012;40(7):587–595.

- 25. Nielsen J, Landauer T. A mathematical model of the finding of usability problems. Proc. ACM INTERCHI'93 Conf; 1993; pp. 206–213.

- 26. Nielsen J. Summary of Usability Inspection Methods. Evidence-Based User Experience Research, Training, and Consulting. 1995 Jan 1; http://www.nngroup.com/articles/summary-of-usability-inspection-methods/

- 27. Wharton C, Rieman J, Lewis C, Polson P. The cognitive walkthrough method: A practitioner’s guide. Usability inspection methods. 1994:105–140.

- 28. Nielsen J. Enhancing the explanatory power of usability heuristics. Proc. ACM CHI'94 Conf; 1994; pp. 152–158.

- 29. Nielsen J, Molich R. Heuristic evaluation of user interfaces. CHI '90 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; 1990; pp. 249–256.

- 30. Nielsen J. The usability engineering life cycle. IEEE Computer. 1992;25(3):12–22.

- 31. Thyvalikakath TP, Schleyer TK, Monaco V. Heuristic evaluation of clinical functions in four practice management systems: a pilot study. J Am Dent Assoc. 2007;138(2):209–210, 212–218. doi: 10.14219/jada.archive.2007.0138.

- 32. Nielsen J. Ten usability heuristics. 2005:2008.

- 33. Miller R. Task description and analysis. New York, NY: Holt, Rinehart & Winston; 1962.

- 34. Jonassen DH, Tessmer M, Hannum VH. Task Analysis Methods for Instructional Design. Mahwah, NJ: Lawrence Erlbaum Associates, Inc; 1999.

- 35. Guan Z, Lee S, Cuddihy E, Ramey J. The Validity of the Stimulated Retrospective Think-Aloud Method as Measured by Eye Tracking. CHI '06 Proceedings of the ACM. 2006:1253–1262.

- 36. Van den Haak M, de Jong MDT, Schellens PJ. Retrospective vs. concurrent think-aloud protocols: Testing the usability of an online library catalogue. Behaviour & Information Technology. 2003;22(5):339–351.

- 37. van Someren MW, Barnard YF, Sandberg JAC. The Think Aloud Method. A practical guide to modelling cognitive processes. London: 1994.

- 38. Balatsoukas P, Ainsworth J, Williams R, et al. Verbal Protocols for Assessing the Usability of Clinical Decision Support: The Retrospective Sense Making Protocol. Studies in Health Technology and Informatics. 2013:192.

- 39. Jaspers M, Steen T, van den Bos B, Geenen M. The think aloud method: a guide to user interface design. International Journal of Medical Informatics. 2004;73(11–12):781–795. doi: 10.1016/j.ijmedinf.2004.08.003.

- 40. Van den Haak MJ, de Jong MDT, Schellens PJ. Employing think-aloud protocols and constructive interaction to test the usability of online library catalogues: a methodological comparison. Interact Comput. 2004;16(6):1153–1170.

- 41. Donahue G. Usability and the bottom line. Software IEEE. 2001;18(1):31–37.

- 42. Boissin C, Fleming J, Wallis L, Hasselberg M, Laflamme L. Can We Trust the Use of Smartphone Cameras in Clinical Practice? Laypeople Assessment of Their Image Quality. Telemed J E Health. 2015;21(11):887–892. doi: 10.1089/tmj.2014.0221.

- 43. Horsley B, Lindauer S, Shroff B, et al. Appointment keeping behavior of Medicaid vs non-Medicaid orthodontic patients. Am J Orthod Dentofacial Orthop. 2007;132(1):49–53. doi: 10.1016/j.ajodo.2005.08.042.

- 44. Iben P, Kanellis M, Warren J. Appointment-keeping behavior of Medicaid-enrolled pediatric dental patients in eastern Iowa. Pediatr Dent. 2000;22(4):325–329.

- 45. Makarem S, Coe J. Patient retention at dental school clinics: a marketing perspective. J Dent Educ. 2014;78(11):1513–1520.

- 46. Levin R. Developing lifetime relationships with patients: strategies to improve patient care and build your practice. J Contemp Dent Pract. 2008;9(1):105–112.

- 47. Glassman P. Interprofessional Practice in the Era of Accountability. CDA Journal. 2014;2(9):645.

- 48. McCormick A, Abubaker A, Laskin D, Gonzales M, Garland S. Reducing the burden of dental patients on the busy hospital emergency department. J Oral Maxillofac Surg. 2013;71(3):475–478. doi: 10.1016/j.joms.2012.08.023.

- 49. Smith A. U.S. Smartphone Use in 2015. Pew Research Center; Internet, Science, & Tech. Apr 1, 2015.

- 50. Tran K, Morra D, Lo V, Quan S, Wu R. The use of smartphones on General Internal Medicine wards: a mixed methods study. Appl Clin Inform. 2014;5(3):814–823. doi: 10.4338/ACI-2014-02-RA-0011.

- 51. Choi J, Yi B, Park J, et al. The uses of the smartphone for doctors: an empirical study from samsung medical center. Healthc Inform Res. 2011;17(2):131–138. doi: 10.4258/hir.2011.17.2.131.

- 52. Wolf J, Moreau J, Akilov O, et al. Diagnostic Inaccuracy of Smartphone Applications for Melanoma Detection. JAMA Dermatol. 2013;2013:1–4. doi: 10.1001/jamadermatol.2013.2382.

- 53. Fairbanks R, Caplan S. Poor Interface Design and Lack of Usability Testing Facilitate Medical Error. Joint Commission Journal on Quality and Patient Safety. 2004;30(10) doi: 10.1016/s1549-3741(04)30068-7.

- 54. Beyer H, Holtzblatt K. Contextual Design: Defining Customer-Centered Systems. Vol. 1. Morgan Kaufmann; 1997.

- 55. Wac K. Smartphone as a personal, pervasive health informatics services platform: literature review. Yearb Med Inform. 2012;7(1):83–93.

- 56. Årsand E, Demiris G. User-centered methods for designing patient-centric self-help tools. Informatics for Health and Social Care. 2008;33(3):158–169. doi: 10.1080/17538150802457562.

- 57. Anderson R. Patient expectations of emergency dental services: a qualitative interview study. British Dental Journal. 2004;197:331–334. doi: 10.1038/sj.bdj.4811652.