Abstract

Background:

Evidence-based indicators of quality of care have been developed to improve care and performance in Canadian emergency departments. The feasibility of measuring these indicators has been assessed mainly in urban and academic emergency departments. We sought to assess the feasibility of measuring quality-of-care indicators in rural emergency departments in Quebec.

Methods:

We previously identified rural emergency departments in Quebec that offered medical coverage with hospital beds 24 hours a day, 7 days a week and were located in rural areas or small towns as defined by Statistics Canada. A standardized protocol was sent to each emergency department to collect data on 27 validated quality-of-care indicators in 8 categories: duration of stay, patient safety, pain management, pediatrics, cardiology, respiratory care, stroke and sepsis/infection. Data were collected by local professional medical archivists between June and December 2013.

Results:

Fifteen (58%) of the 26 emergency departments invited to participate completed data collection. The ability to measure the 27 quality-of-care indicators with the use of databases varied across departments. Centres 2, 5, 6 and 13 used databases for at least 21 of the indicators (78%-92%), whereas centres 3, 8, 9, 11, 12 and 15 used databases for 5 (18%) or fewer of the indicators. On average, the centres were able to measure only 41% of the indicators using heterogeneous databases and manual extraction. The 15 centres collected data from 15 different databases or combinations of databases. The average data collection time for each quality-of-care indicator varied from 5 to 88.5 minutes. The median data collection time was 15 minutes or less for most indicators.

Interpretation:

Quality-of-care indicators were not easily captured with the use of existing databases in rural emergency departments in Quebec. Further work is warranted to improve standardized measurement of these indicators in rural emergency departments in the province and to generalize the information gathered in this study to other health care environments.

Providing equitable quality emergency care to rural citizens in a vast country with limited financial and human resources is a great challenge. Twenty percent of Quebec's population lives in rural regions,1 and rural emergency departments in the province receive an average of 19 000 visits per year.2-6 Given the limited access to diagnostic services, family doctors and other specialists in rural areas, rural emergency departments constitute an essential safety net for the rural population.2-4,7 Furthermore, in an effort to limit the inherent costs related to emergency departments in rural regions, several Canadian provinces have reduced or regionalized these services.8-10 As a result, numerous hospitals have been forced to reduce services or to close altogether.11 The impact of this situation on the quality of care is not well known. Timely attempts to measure and monitor quality of care in rural emergency departments are thus warranted.

The publication Development of a Consensus on Evidence-Based Quality of Care Indicators for Canadian Emergency Departments12 takes us a step closer to this objective. This document, which was published in 2010, was created by a panel of 24 Canadian experts including managers, clinicians, emergency medicine researchers, health information specialists and government representatives. The selected indicators are related to interventions for life-threatening conditions often treated in emergency departments, including myocardial infarction, stroke, sepsis, asthma and several pediatric problems related to infection (Appendix 1, available at www.cmajopen.ca/content/4/3/E398/suppl/DC1). These indicators were developed through an extensive modified Delphi process and are now considered the reference standard in Canada for evaluating quality of care in emergency departments.

It is expected that quality-of-care indicators will allow clinical staff, administrators and researchers to identify areas where improvement in clinical care is most needed, establish benchmarks and compare care across emergency departments in a valid and reliable way.13 These indicators could have a substantial impact on the quality of care provided to rural citizens.12,13 The implementation and regular follow-up of indicators could help standardize access to quality care in rural areas, identify the needs of the population and improve organization of care. The end goal is for rural patients to receive the standard treatment for their medical condition, rather than care that simply reflects the resources available in the area.

However, there are practical limitations related to measuring quality-of-care indicators in rural emergency departments. First, information on indicators may not be available in clinical databases in every rural emergency department. Second, collection of data on certain priority indicators could be difficult in rural establishments owing to lack of resources.14

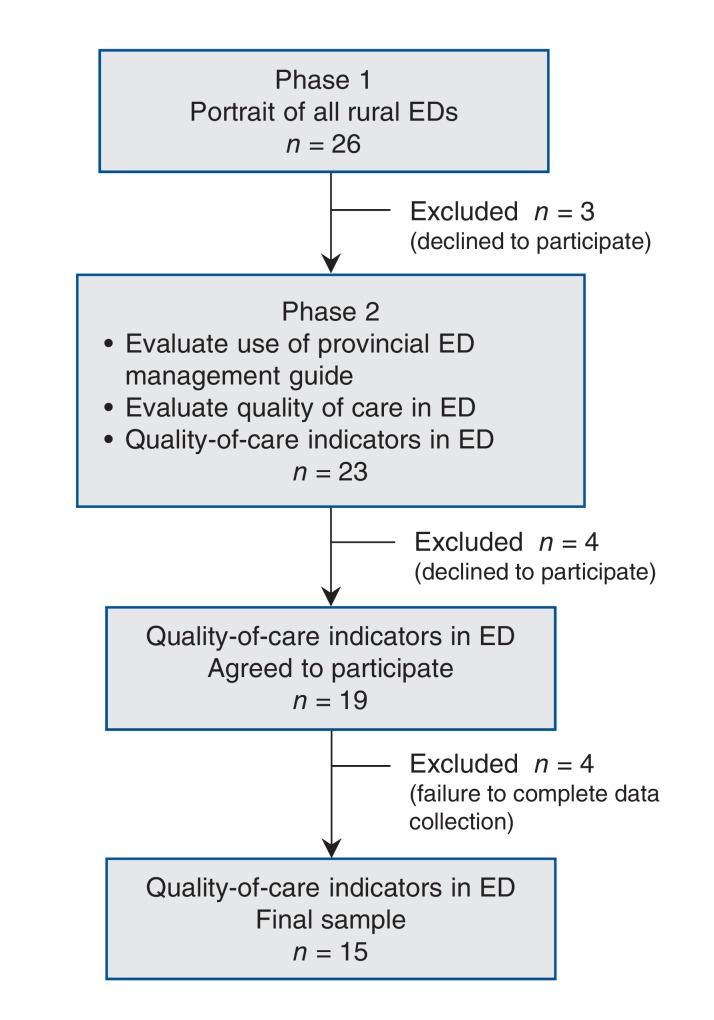

The primary objective of this study was to investigate the feasibility of measuring the quality-of-care indicators defined by Schull and colleagues12 in rural emergency departments in Quebec and to identify potential barriers to implementing the indicators. The study is a substudy of a larger cross-sectional multicentre research project4,15 (Figure 1).

Figure 1.

Flow chart of centres participating in project on rural emergency departments (EDs) in Quebec and use of provincial emergency department management guide.4,15,16

Setting and study design

Rural emergency departments in Quebec were previously identified.17,18 Emergency departments were selected if they had medical coverage with hospital beds 24 hours a day, 7 days a week and were situated in a rural region as per the Statistics Canada definition.17 Using these criteria, we identified 26 rural emergency departments in the province.

Source of data

Data were collected via email from June to December 2013. We described and explained the 27 indicators in a Microsoft Word document (Appendix 2, available at www.cmajopen.ca/content/4/3/E398/suppl/DC1) and created an Excel spreadsheet to standardize data collection from the emergency departments' patient databases. To ensure standardized measurement of the indicators, our institution's archivists pretested the protocol. Conference calls were held with the head of medical archives in each of the participating emergency departments to introduce the study and identify the person in charge of data collection at each emergency department. The data collection protocols were emailed to the medical archives specialists at each of the invited centres. Data were collected from databases and patient medical files by the person in charge at each participating emergency department. A graduate student/physician (G.L.) and a research nurse followed up weekly via telephone or email to ensure that proper procedures were followed (as described in the Word document).

Statistical analysis

The emergency departments were coded with a number from 1 to 15 as per protocol. We analyzed the data using descriptive statistics (mean, median, proportion). All analyses were conducted with SAS software.

The study was approved by the research ethics committee at the research centre of the Hôtel-Dieu de Lévis, a university-affiliated hospital in Lévis, Quebec.

Results

Sample size and participation rate

Seven of the 26 previously identified emergency departments declined to participate in this phase of the project, mainly owing to lack of human resources. Of the remaining 19 emergency departments, 4 were excluded for failure to complete data collection owing to lack of time and personnel. The final sample included 15 emergency departments, for a participation rate of 58% (Figure 1).
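The participation figures above can be retraced with a few lines of arithmetic. The following is only a sketch: the counts come from the text, while the variable names are illustrative and not part of the study protocol.

```python
# Centre counts as reported in the text; variable names are illustrative.
invited = 26      # rural emergency departments previously identified
declined = 7      # declined to participate in this phase
incomplete = 4    # excluded for failure to complete data collection

completed = invited - declined - incomplete
participation_rate = completed / invited

print(completed)                     # final sample size
print(f"{participation_rate:.0%}")   # participation rate, rounded to a whole percent
```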

Measurement of quality-of-care indicators

All the indicators in the duration of stay, patient safety, cardiology and sepsis/infection categories were measurable in 7 or more (47%-73%) of the emergency departments (Table 1). For the remaining categories, the indicators were measurable in 6 (40%) or fewer emergency departments. One indicator in the respiratory care category, QCI 11-1 (number of patients with asthma [by age group] who received corticosteroids at the emergency department and at release [if released]), was not measurable in any of the emergency departments.

Table 1: Measurability of individual quality-of-care indicators12.

| Quality-of-care indicator | No. (%) of emergency departments (n = 15) |
|---|---|
| Duration of stay | |
| QCI 1 Mean duration of stay in the ED (patients on stretcher and ambulatory) (all categories combined) | 10 (67) |
| QCI 2-1 Triage level P1 | 9 (60) |
| QCI 2-2 Triage level P2 | 9 (60) |
| QCI 2-3 Triage level P3 | 10 (67) |
| QCI 2-4 Triage level P4 | 10 (67) |
| QCI 2-5 Triage level P5 | 10 (67) |
| Patient safety | |
| QCI 3-1 Number of pediatric patients released from the ED who returned unexpectedly and were admitted within 48-72 h of initial release | 10 (67) |
| QCI 3-2 Number of pediatric patients released from the ED who returned unexpectedly within 48-72 h of initial release | 9 (60) |
| QCI 4-1 Number of adult patients released from the ED who returned unexpectedly and were admitted within 48-72 h of initial release | 8 (53) |
| QCI 4-2 Number of adult patients released from the ED who returned unexpectedly within 48-72 h of initial release | 7 (47) |
| QCI 5 Percentage of headache patients released from the ED and admitted to the hospital for subarachnoid hemorrhage in the subsequent 14 d | 11 (73) |
| Pain management | |
| QCI 6 Delay before receiving first dose of analgesic for all pain conditions requiring analgesic | 4 (27) |
| Pediatrics | |
| QCI 7 Percentage of pediatric patients (0-28 d) with fever who received a complete sepsis investigation | 6 (40) |
| QCI 8 Percentage of pediatric patients (0-28 d) who received broad-spectrum antibiotics intravenously | 5 (33) |
| QCI 9 Percentage of pediatric patients (3 mo to 3 yr) with croup who were treated with steroids | 5 (33) |
| Cardiology | |
| QCI 10 Percentage of eligible patients with acute myocardial infarction who received thrombolytic therapy or interventional angioplasty | 9 (60) |
| Respiratory care | |
| QCI 11-1 Number of patients with asthma (by age group) who received corticosteroids at the ED and at release (if released) | 0 (0) |
| QCI 11-2 Number of patients with asthma (0-3 yr) who received corticosteroids at the ED and at release (if released) | 6 (40) |
| QCI 11-3 Number of patients with asthma (4-10 yr) who received corticosteroids at the ED and at release (if released) | 6 (40) |
| QCI 11-4 Number of patients with asthma (11-17 yr) who received corticosteroids at the ED and at release (if released) | 6 (40) |
| QCI 11-5 Number of patients with asthma (18-39 yr) who received corticosteroids at the ED and at release (if released) | 6 (40) |
| QCI 11-6 Number of patients with asthma (40-59 yr) who received corticosteroids at the ED and at release (if released) | 6 (40) |
| QCI 11-7 Number of patients with asthma (60-79 yr) who received corticosteroids at the ED and at release (if released) | 6 (40) |
| QCI 11-8 Number of patients with asthma (≥ 80 yr) who received corticosteroids at the ED and at release (if released) | 6 (40) |
| Stroke | |
| QCI 12 Percentage of eligible patients with acute stroke who received thrombolytic therapy | 6 (40) |
| Sepsis/infection | |
| QCI 13 Delay of antibiotic administration for patients with bacterial meningitis | 8 (53) |
| QCI 14 Percentage of patients with severe sepsis or septic shock who received broad-spectrum antibiotics within 4 h of arrival at the ED | 7 (47) |

Note: ED = emergency department.

Database use

The ability to measure the 27 quality-of-care indicators with the use of databases varied across emergency departments. Centres 2, 5, 6 and 13 used databases for at least 21 of the indicators (78%-92%), whereas centres 3, 8, 9, 11, 12 and 15 used databases for 5 (18%) or fewer of the indicators (Table 2). The average number of indicators that could be measured was 11.2 (41%). The centres searched 15 different databases and 15 combinations of databases (in cases in which the archivist was willing to use 2 or more databases for 1 or more indicators) (Appendix 3, available at www.cmajopen.ca/content/4/3/E398/suppl/DC1).

Table 2: Frequency of database use to measure quality-of-care indicators.

| Emergency department no. | Database use, no. (%) of quality-of-care indicators (n = 27) |
|---|---|
| 1 | 12 (44) |
| 2 | 23 (85) |
| 3 | 1 (4) |
| 4 | 15 (56) |
| 5 | 21 (78) |
| 6 | 24 (89) |
| 7 | 12 (44) |
| 8 | 5 (18) |
| 9 | 2 (7) |
| 10 | 13 (48) |
| 11 | 0 (0) |
| 12 | 0 (0) |
| 13 | 25 (92) |
| 14 | 12 (44) |
| 15 | 3 (11) |
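The summary figures reported for database use (an average of 11.2 indicators, or 41%, and the two groups of centres) can be recomputed from the counts in Table 2. This is a minimal sketch and not the authors' SAS analysis; the dictionary layout and variable names are assumptions made for illustration.

```python
from statistics import mean

TOTAL_INDICATORS = 27

# Number of indicators measured with databases, keyed by centre number (Table 2).
database_use = {
    1: 12, 2: 23, 3: 1, 4: 15, 5: 21,
    6: 24, 7: 12, 8: 5, 9: 2, 10: 13,
    11: 0, 12: 0, 13: 25, 14: 12, 15: 3,
}

average = mean(database_use.values())
share = average / TOTAL_INDICATORS

# Centres at either end of the range described in the text.
high_use = sorted(c for c, n in database_use.items() if n >= 21)
low_use = sorted(c for c, n in database_use.items() if n <= 5)

print(f"average indicators per centre: {average:.1f} ({share:.0%})")
print("centres with at least 21 indicators:", high_use)
print("centres with 5 or fewer indicators:", low_use)
```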

Time required for measurement

The average data collection time per indicator ranged from 5 to 88.5 minutes (data not shown). The median data collection time for each indicator was 15 minutes or less in most cases. However, for QCI 6, 9, 11-5 and 11-6, the median time was 30 minutes or more (Table 3).

Table 3: Median time to measure quality-of-care indicators.

| Quality-of-care indicator | Median time (and interquartile range), min |
|---|---|
| Duration of stay | |
| QCI 1 (n = 9) | 4 (3-5) |
| QCI 2-1 (n = 7) | 3 (1-6) |
| QCI 2-2 (n = 4) | 4 (2.5-6) |
| QCI 2-3 (n = 1) | 15 (15-15) |
| QCI 2-4 (n = 1) | 1 (1-1) |
| QCI 2-5 (n = 1) | 1 (1-1) |
| Patient safety | |
| QCI 3-1 (n = 7) | 9 (3-24) |
| QCI 3-2 (n = 5) | 4 (3-13) |
| QCI 4-1 (n = 5) | 6 (4-11) |
| QCI 4-2 (n = 4) | 3.5 (2-69.5) |
| QCI 5 (n = 8) | 13.5 (12-35) |
| Pain management | |
| QCI 6 (n = 2) | 78 (72-84) |
| Pediatrics | |
| QCI 7 (n = 4) | 12 (4.5-21) |
| QCI 8 (n = 2) | 4.5 (3-6) |
| QCI 9 (n = 2) | 115.5 (6-225) |
| Cardiology | |
| QCI 10 (n = 7) | 15 (12-25) |
| Respiratory care | |
| QCI 11-1 (n = 3) | 18 (6-54) |
| QCI 11-2 (n = 3) | 18 (6-54) |
| QCI 11-3 (n = 2) | 15 (6-24) |
| QCI 11-4 (n = 2) | 12 (6-18) |
| QCI 11-5 (n = 2) | 30 (6-54) |
| QCI 11-6 (n = 2) | 30 (6-54) |
| QCI 11-7 (n = 2) | 12 (6-18) |
| QCI 11-8 (n = 2) | 6 (6-6) |
| Stroke | |
| QCI 12 (n = 4) | 10 (4.5-23.5) |
| Sepsis/infection | |
| QCI 13 (n = 7) | 5 (1-12) |
| QCI 14 (n = 4) | 13.5 (6.5-16.5) |
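To make the phrase "15 minutes or less in most cases" concrete, the medians in Table 3 can be tallied against the 15-minute and 30-minute thresholds used in the text. This is an illustrative sketch only: the values are transcribed from Table 3, and the dictionary and variable names are assumptions rather than part of the study's analysis.

```python
# Median data collection time in minutes, transcribed from Table 3.
median_minutes = {
    "QCI 1": 4, "QCI 2-1": 3, "QCI 2-2": 4, "QCI 2-3": 15,
    "QCI 2-4": 1, "QCI 2-5": 1, "QCI 3-1": 9, "QCI 3-2": 4,
    "QCI 4-1": 6, "QCI 4-2": 3.5, "QCI 5": 13.5, "QCI 6": 78,
    "QCI 7": 12, "QCI 8": 4.5, "QCI 9": 115.5, "QCI 10": 15,
    "QCI 11-1": 18, "QCI 11-2": 18, "QCI 11-3": 15, "QCI 11-4": 12,
    "QCI 11-5": 30, "QCI 11-6": 30, "QCI 11-7": 12, "QCI 11-8": 6,
    "QCI 12": 10, "QCI 13": 5, "QCI 14": 13.5,
}

# Indicators at or below the 15-minute threshold, and those at 30 minutes or more.
quick = [q for q, m in median_minutes.items() if m <= 15]
slow = [q for q, m in median_minutes.items() if m >= 30]

print(f"{len(quick)} of {len(median_minutes)} indicators had a median of 15 min or less")
print("median of 30 min or more:", ", ".join(slow))
```

Note that several rows in Table 3 rest on only 1 or 2 centres, so the medians for those indicators should be read with caution.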

Interpretation

We found that the existing emergency department databases did not permit measurement of several established evidence-based quality-of-care indicators. Specifically, the centres were able to measure only 41% of the indicators using heterogeneous databases and manual extraction. The 15 participating centres collected data from 15 different databases or combinations of databases, and the process of extracting the data was time-consuming. Our results suggest that the quality of databases and access to them are the most important feasibility considerations. Information on several different indicators was inaccessible, and archivists were obliged to conduct manual searches of paper patient files to extract data on indicators. Manual consultation of paper files is resource-intensive and in our study required the use of several intermediaries, which discouraged some participants, resulting in failure to measure several indicators. Furthermore, even for centres with access to databases, the information the databases contained was not useful for measuring the quality-of-care indicators, and the archivists had to resort to considerable data manipulation to measure the indicators.

Our results are consistent with those of Schull and colleagues,14 whose feasibility assessment in urban centres showed that 13 (27%) of 48 quality-of-care indicators could be measured with the use of current data elements in the Canadian Institute for Health Information's National Ambulatory Care Reporting System (NACRS) or the NACRS plus linkage with other administrative databases such as the Discharge Abstracts Database or death records. The 13 indicators did, however, include some higher-priority indicator categories for emergency department operations, such as patient safety and sepsis/infection.12 In an earlier study, Lindsay and colleagues13 found limited feasibility of calculating quality-of-care indicators using a routinely collected data set: of 29 indicators, only 8 were captured owing to lack of sufficient specificity within the NACRS and International Classification of Diseases, 9th revision coding systems to satisfy the operational definitions and owing to the need to link the emergency department visit to inpatient databases. While the Ontario health care system is recognized for the quality of its databases, such as those of the Institute for Clinical Evaluative Sciences, Toronto, other health care systems face challenges in measuring well-established quality-of-care indicators, especially those in small rural settings with limited resources/databases.

Limitations

Because of limited resources, we did not plan or conduct interrater reliability assessments of the capture of quality-of-care indicators conducted by the emergency department medical archivists. We initially thought this work would be straightforward with existing databases and provided a protocol to capture the indicators. This assumption was incorrect and is hence a major limitation of this study. Furthermore, because of the difficulties in collecting data experienced by archivists, the data collected were incomplete, which limited the use of multivariate statistical analyses. We could not calculate any correlations to determine whether a relation existed between the databases used and the quality of the data collected.

Conclusion

As presently defined, quality-of-care indicators were not easily captured with the use of existing databases in rural emergency departments in Quebec. Indicators concerning pediatrics, respiratory care and stroke were the most difficult to measure. Further work is warranted to improve standardized measurement of quality indicators in rural emergency departments in the province and to generalize the information gathered in this study to other health care environments.

Supplemental information

For reviewer comments and the original submission of this manuscript, please see www.cmajopen.ca/content/4/3/E398/suppl/DC1


Acknowledgements

The authors thank the rural emergency department staff across Quebec who participated in the study. The authors also thank Louisa Blair for editing assistance and Luc Lapointe for help formatting the manuscript.

Footnotes

Funding: This research project was supported by the Fonds de Recherche du Québec - Santé (grant number 22481) and the Public Health and the Agricultural Rural Ecosystem scholarship program. Géraldine Layani received a master's award from the University of Saskatchewan.

Disclaimer: To promote transparency, CMAJ Open publishes the names of public institutions in research papers that compare their performance or quality of care with that of other institutions. The authors of this paper have not linked individual institutions to outcome data. We decided to publish this paper without this information, as we felt that it was more important to communicate the finding that variation exists between institutions in the ability to measure quality-of-care indicators than to insist on transparency. -Editor, CMAJ Open

References

- 1. Ottawa: Statistics Canada. Population, urban and rural, by province and territory (Quebec). 2011. [accessed 2014 Nov. 18]. Available: www.statcan.gc.ca/tables-tableaux/sum-som/l01/cst01/demo62f-eng.htm.

- 2. Fleet R, Poitras J, Maltais-Giguère J, et al. A descriptive study of access to services in a random sample of Canadian rural emergency departments. BMJ Open. 2013;3:e003876. doi: 10.1136/bmjopen-2013-003876.

- 3. Fleet R, Pelletier C, Marcoux J, et al. Differences in access to services in rural emergency departments of Quebec and Ontario. PLoS One. 2015;10:e0123746. doi: 10.1371/journal.pone.0123746.

- 4. Fleet R, Poitras J, Archambault P, et al. Portrait of rural emergency departments in Québec and utilization of the provincial emergency department management guide: cross sectional survey. BMC Health Serv Res. 2015;15:572. doi: 10.1186/s12913-015-1242-0.

- 5. Fleet R, Archambault P, Plant J, et al. Access to emergency care in rural Canada: Should we be concerned? CJEM. 2013;15:191–3. doi: 10.2310/8000.121008.

- 6. Adams GL, Campbell PT, Adams JM, et al. Effectiveness of prehospital wireless transmission of electrocardiograms to a cardiologist via hand-held device for patients with acute myocardial infarction (from the Timely Intervention in Myocardial Emergency, NorthEast Experience [TIME-NE]). Am J Cardiol. 2006;98:1160–4. doi: 10.1016/j.amjcard.2006.05.042.

- 7. Fatovich DM, Phillips M, Langford SA, et al. A comparison of metropolitan vs rural major trauma in Western Australia. Resuscitation. 2011;82:886–90. doi: 10.1016/j.resuscitation.2011.02.040.

- 8. Fleet R, Plant J, Ness R, et al. Patient advocacy by rural emergency physicians after major service cuts: the case of Nelson, BC. Can J Rural Med. 2013;18:56–61.

- 9. Thompson JM, McNair NL. Health care reform and emergency outpatient use of rural hospitals in Alberta, Canada. J Emerg Med. 1995;13:415–21. doi: 10.1016/0736-4679(95)00014-2.

- 10. Victoria: Ministries of Health Services and Health Planning. Standards of accessibility and guidelines for provision of sustainable acute care services by health authorities. 2002.

- 11. Collier R. Is regionalization working? CMAJ. 2010;182:331–2. doi: 10.1503/cmaj.109-3167.

- 12. Schull MJ, Hatcher CM, Guttmann A, et al. Toronto: Institute for Clinical Evaluative Sciences. Development of a consensus on evidence-based quality of care indicators for Canadian emergency departments: ICES investigative report. 2010.

- 13. Lindsay P, Schull M, Bronskill S, et al. The development of indicators to measure the quality of clinical care in emergency departments following a modified-Delphi approach. Acad Emerg Med. 2002;9:1131–9. doi: 10.1111/j.1553-2712.2002.tb01567.x.

- 14. Schull MJ, Guttmann A, Leaver CA, et al. Prioritizing performance measurement for emergency department care: consensus on evidence-based quality of care indicators. CJEM. 2011;13:300–9. E28–43. doi: 10.2310/8000.2011.110334.

- 15. Fleet R, Archambault P, Légaré F, et al. Portrait of rural emergency departments in Quebec and utilisation of the Quebec Emergency Department Management Guide: a study protocol. BMJ Open. 2013;3:e002961. doi: 10.1136/bmjopen-2013-002961.

- 16. Ministère de la Santé et des Services sociaux du Québec. Québec: Gouvernement du Québec. Guide de gestion de l'urgence. 2006.

- 17. du Plessis V, Beshiri R, Bollman RD, et al. Definitions of rural. Rural Small Town Can Anal Bull. 2001;3:1–17.

- 18. Ottawa: Association canadienne des soins de santé. Guide des établissements de soins de santé du Canada 2009/2010. 2009.
