Abstract

We evaluated the research productivity of 74 graduate programs in the USA that offer Behavior Analyst Certification Board (BACB)-approved course sequences in behavior analysis. When the faculty of each program are considered as a unit, only about half of the programs have faculty who have collectively published ten or more articles in our field’s primary empirical journals. Among individual faculty members, a sizeable number have not published an article in any of the field’s top journals. To recognize major scholarly contributors, we provide top 10 lists of training programs and individual faculty members. We conclude by discussing the importance of research in an increasingly practice-driven marketplace.

Keywords: Research productivity, Behavior analysis, Behavior Analyst Certification Board

Applied behavior analysis (ABA) has grown substantially as a discipline over the past 10 years (Dorsey et al. 2009). In 2011, the Behavior Analyst Certification Board (BACB) reported that there were over 7419 certified behavior analysts providing ABA services (“Recent Developments,” n.d.). By 2013, this number had reached 13,026 (Behavior Analysis Certification 2013). Not surprisingly, increased demand for behavior analytic services has been accompanied by an increase in university programs providing the requisite training for individuals to practice as board certified behavior analysts (BCBAs). Currently, over 200 colleges and universities worldwide offer an approved BACB course sequence (“About the BACB,” n.d.) that allows graduates to sit for the certification examination after completing sufficient supervised practice.

As the number of programs increases, so too does the need for indices of quality that can distinguish among them. Consumers, who include prospective graduate students and those who hire graduates, have a right to know which programs train students well, but unfortunately, there is no universally accepted metric of training quality in behavior analysis. In certain other disciplines, ranking metrics for training programs exist and are freely available to prospective students, employers, and individual consumers. For example, each year, U.S. News and World Report provides a ranking of psychology graduate programs in the USA based on surveys completed by peer institutions. These rankings have been criticized because they usually include little information about teaching and other program elements that matter to students (e.g., availability of financial aid) and because they are susceptible to bias from incorrect information that an institution has provided, intentionally or unintentionally (Altbach 2010). Nevertheless, a prospective student, employer, or consumer need only consult these rankings to learn that universities like Stanford and Harvard have a reputation for offering quality training for psychologists. Another website, TopUniversities.com, provides information and rankings for academic and applied programs across several fields of study. A search for the field of “biology,” for example, yields a description of the field and a list of top programs ranked by a metric based on academic reputation, employer reputation, and citations per article published by program faculty.

Importantly, U.S. News and World Report and TopUniversities.com do not provide rankings of behavior analysis programs, and only limited alternative information is available to consumers who may wish to assess the quality of training provided by various programs.

One key source of information is the BACB, which was established in 1998 (“About the BACB,” n.d.). The BACB seeks to “protect consumers of behavior analysis services worldwide by systematically establishing, promoting, and disseminating professional standards” (“About the BACB,” n.d., para. 5). It does this in part by approving course sequences that are deemed to provide adequate instruction in core behavior analytic practices. In addition, the certification board requires that students who go on to become BCBAs complete supervised experience delivering behavior analytic services, and it publishes the percentage of each academic program’s students who pass the certification examination, which covers many of the field’s traditional content areas. Passing the examination is required to become board certified. According to the BACB (2013), the average pass rate across programs was 58 % in 2013, with a range from under 25 to 100 %.

Another source of information about quality is program accreditation through the Association for Behavior Analysis International (ABAI), which was established in 1974. ABAI is the world’s largest behavior analysis membership organization and accredits programs that “support exemplary training of behavior scientists and scientist practitioners” (ABAI, “Guidelines for the Accreditation and Reaccreditation of Programs in Behavior Analysis”, 2015). Whereas the BACB emphasizes quality control of clinical practice and competence, ABAI is concerned with the “growth and vitality of the science of behavior analysis through research, education, and practice” (“Guidelines for the Accreditation and Reaccreditation of Programs in Behavior Analysis”). Its accreditation process considers a broader range of factors than that of the BACB, including curriculum, student progress through the program, employment success of graduates, and faculty curriculum vitae. Twenty-four graduate programs have been accredited by ABAI (“Guidelines”).

Endorsement by BACB or ABAI is informative in that it indicates that a graduate program has met minimal standards established by those bodies, and consumers presumably will prefer training programs that have been approved by an external body over ones that have not. Yet this is a limited form of guidance because details of BACB’s course-sequence reviews and ABAI program reviews are not made public and neither body ranks or otherwise publicly compares training opportunities (except through BACB’s list of passing rates on the BACB examination). Consumers may, therefore, profit from additional means of evaluating and comparing training opportunities.

Research Productivity as a Metric of Program Quality

One potentially informative metric is the research productivity of academic programs and the professors who work in them. ABA is an example of what has been called a knowledge-based industry “in which the primary concern is knowledge creation” (Williamson and Cable 2003, p. 25). Although the proximal goals of ABA involve changing socially important behavior, seminal descriptions of the field, such as that by Baer et al. (1968), clearly emphasize ABA’s grounding within a technological, analytic, and conceptually systematic framework in which “knowledge creation” is defined by empirical research. Across many disciplines, it is recognized that the competitiveness, reputation, and progress of a knowledge-based industry depend on the ability of its workers to create and disseminate quality research (Williamson and Cable 2003).

It should be obvious, then, that research is critical to ABA as a source of new ideas, with service delivery often advanced through a process of translation in which scientific insights are harnessed to create clinical innovations. This process has fueled many past advances in ABA (Mace and Critchfield 2010), and studies in other disciplines suggest that it is in fact the most prolific engine of clinical innovation (see Critchfield et al. 2015). A field like ABA thus requires workers who can create new research, and a longitudinal study by Williamson and Cable (2003) suggests that a professional’s research productivity is strongly predicted by his or her pre-appointment research productivity and by the research productivity and academic placement of his or her academic mentor.

In a practical field like ABA, not all professionals will conduct research, but a training program’s capacity to train its students in research should nevertheless interest prospective students who hope to someday advance clinical practice, as well as employers who hope to hire graduates to do the same. Clinicians who are research savvy are not bound to the state of knowledge that exists at the time they leave graduate school, because they can assimilate others’ innovations in clinical science throughout a career. Moreover, research-savvy clinicians may themselves innovate clinically by grasping the practical implications of scientific advances that others have not yet thought to extend to the clinical realm (Critchfield et al. 2015). At issue is the means by which clinicians become research savvy, and it is reasonable to assume that graduate program faculty who are skilled in the research process can help students develop the needed expertise.

Exposure to research during graduate training may also have a direct impact on the subsequent routine delivery of behavior analysis services. Behavior analytic research and application are tightly intertwined (Sidman 2011) in a scientist-practitioner model whereby the interventionist must be data driven and scientific in hypothesis formation and clinically effective in implementation. The strategies for evaluating an intervention in practice are largely the same as those used in evaluating research effects (i.e., single-subject experiments with repeated measures based on direct observation; Bailey and Burch 2002).

Overall, there are several reasons to believe that exposure to research benefits future ABA practitioners, and faculty who conduct research are well positioned to provide this exposure. Faculty research productivity thus may constitute an important measure of training program quality. Some convergent support for this assumption comes from other fields. Faculty research productivity has, for example, been shown to be a major predictor of the academic ranking of traditional psychology programs, and according to TopUniversities.com rankings, the highest publishing psychology graduate programs also receive the highest satisfaction ratings from employers of their graduates. We propose, therefore, that research productivity ought to be considered when comparing academic programs in ABA.

To provide a starting point for a disciplinary conversation about relative program quality on this dimension, we sought to evaluate where ABA training programs currently stand with respect to faculty research productivity. We determined the number of publications in major behavior analytic journals that were authored by faculty in ABA training programs in the USA that have achieved approval by the BACB. To be clear about the purpose of our analyses, we recognize that research productivity is only one metric among many that consumers may wish to consider when comparing programs (other metrics include affordability, quality of teaching, student access to fieldwork opportunities with expert supervision, student graduation rates, and postgraduation career success). Although intelligent people are likely to disagree about the relative importance of various quality metrics, it would be unwise to ignore the role of research in supporting our knowledge-based industry and its scientist-practitioner model.

Methods

Selection of Programs and Faculty

Analyses focused on graduate-level university training programs in behavior analysis, located in the USA, that offer a BACB-approved course sequence. To ensure that the assessed programs were behavior analytic, rather than primarily based in some other discipline that also offers BACB-approved courses, we applied several selection criteria. First, all programs that listed “behavior analysis” or “applied behavior analysis” as their academic department on the BACB-Approved Course Sequence webpage (http://bacb.com/index.php?page=100358) were included in the analysis, along with all faculty from that program or department. Second, because several prominent behavior analysis programs are housed within other academic departments, programs included in the BACB Pass Rate Analysis (2013) were also included, because there is precedent for using these programs in a quality assessment metric. Finally, programs accredited by ABAI prior to January 2015 were included, which allowed us to compare the research productivity of programs that were and were not ABAI accredited. For programs included under the latter two criteria, only faculty involved in teaching or research in behavior analysis were included. As an additional control, programs were excluded if their webpage did not indicate which faculty were involved in teaching or research in behavior analysis. Several of the programs that met the criteria above were embedded within other departments (e.g., psychology and education), so inclusion required that the faculty specifically involved in behavior analytic teaching and research be identified publicly on the program webpage.
A program could identify these faculty by having a separate page for behavior analysis faculty, by listing behavior analysis as a primary faculty research interest, or by indicating faculty members’ BACB status (e.g., John Smith, BCBA-D). Behavior analysis graduate students entered the resulting data into a Microsoft Excel® database and also coded whether each program offered BACB-supervised experience hours and whether it was also accredited by ABAI.

Quantification of Research Productivity

To quantify research productivity, we conducted computerized searches using the Google Scholar search engine (https://scholar.google.com), which was selected after pilot analyses suggested that it produced more comprehensive results than the alternative tools Web of Science and PsycINFO. We counted publications that appeared in journals published by two major behavior analysis publishers. The first was the Society for the Experimental Analysis of Behavior, which publishes Journal of the Experimental Analysis of Behavior (JEAB) and Journal of Applied Behavior Analysis (JABA). The second was ABAI, which publishes Behavior Analysis in Practice (BAP), The Behavior Analyst (TBA), The Psychological Record (TPR), and The Analysis of Verbal Behavior (AVB).

The name of each relevant faculty member, as it appeared on a program web page, was entered, within parentheses, into Google Scholar’s “Return articles authored by:” search box. This approach ensured that Google Scholar searched for the entirety of the name. For faculty with hyphenated last names, we also searched separately using each of the individual surnames.
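The name-handling rule above can be sketched as follows. This is a minimal illustration, not the procedure actually used: it assumes the surname is the last space-separated token of the name as listed on the program page, and both `search_name_variants` and the example name are hypothetical.

```python
def search_name_variants(full_name: str) -> list[str]:
    """Return every author string to search for one faculty member.

    Assumption: the surname is the last whitespace-separated token. A
    hyphenated surname also yields one variant per component surname.
    """
    parts = full_name.split()
    given, surname = parts[:-1], parts[-1]
    variants = [full_name]
    if "-" in surname:
        for piece in surname.split("-"):
            variants.append(" ".join(given + [piece]))
    return variants


# Hypothetical faculty member with a hyphenated surname.
print(search_name_variants("Jane Q Smith-Jones"))
# ['Jane Q Smith-Jones', 'Jane Q Smith', 'Jane Q Jones']
```

Names with an unhyphenated double surname would not be split by this rule and would need to be handled by hand.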

Each faculty member’s name was searched in combination with each target journal name, which was entered (e.g., “Behavior Analysis in Practice”) into the “Return articles published in:” search box. All searches covered all available years of publication up to and including 2013, using the “Return articles dated between:” search box. The total number of articles found for each faculty member in each journal was then entered into the Excel database. In cases of multiple authorship, each contributing author was credited with one published article.
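Under the crediting rule above, each faculty member has one count per target journal, and a program total can be formed by summing over its faculty. The sketch below assumes that program totals are simple sums of faculty counts (so an article co-authored by two faculty in the same program is counted twice) and that the “publications per professor” figure in Table 2 is the program total divided by the number of included faculty. Neither assumption is stated explicitly in the text, and the data and names are hypothetical.

```python
JOURNALS = ("JABA", "JEAB", "TBA", "BAP", "TPR", "AVB")


def program_summary(faculty_counts: dict[str, dict[str, int]]) -> tuple[int, float]:
    """Sum each faculty member's per-journal counts (full counting) and
    return (program total, publications per professor)."""
    total = sum(
        counts.get(journal, 0)
        for counts in faculty_counts.values()
        for journal in JOURNALS
    )
    per_professor = total / len(faculty_counts) if faculty_counts else 0.0
    return total, per_professor


# Hypothetical program: each inner dict holds one faculty member's cells.
program = {
    "Faculty A": {"JABA": 3, "BAP": 1},
    "Faculty B": {"JABA": 2},  # may include articles also credited to A
    "Faculty C": {},           # no articles in the target journals
}
print(program_summary(program))  # (6, 2.0)
```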

Two independent coders, using the methods described above, determined publication counts for 41.7 % of faculty, and interobserver reliability (IOR) was calculated in two ways. We first calculated the proportion of database cells with perfect agreement (equivalent to trial-by-trial IOR), where each cell contained one faculty member’s publication count for one target journal. Thus, for each faculty member, agreement was scored as perfect or imperfect in each of the six cells representing the target journals. The proportion of cells with perfect agreement was .90. We then assessed partial agreement within faculty (equivalent to partial agreement-within-intervals IOR) by dividing, for each faculty member, the smaller of the two coders’ total publication counts (across all target journals) by the larger, and averaging these proportions across faculty. Partial-agreement-within-faculty IOR was .82. For every cell with imperfect agreement, we repeated the search using the methods described above and replaced the initial values before data analysis.
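The two agreement indices can be sketched as follows. This is an illustration under stated assumptions, not the authors’ spreadsheet: the `ior` helper and the counts are hypothetical, and the text does not say how a faculty member with zero articles from both coders was scored, so the sketch treats that case as perfect agreement.

```python
from statistics import mean


def ior(coder1: dict[str, list[int]], coder2: dict[str, list[int]]) -> tuple[float, float]:
    """Return (exact cell agreement, mean partial agreement within faculty).

    Each dict maps a faculty member to six per-journal counts, listed in the
    same journal order for both coders.
    """
    # Exact agreement: proportion of faculty-by-journal cells that match.
    cells = [(a, b) for name in coder1 for a, b in zip(coder1[name], coder2[name])]
    exact = sum(a == b for a, b in cells) / len(cells)

    # Partial agreement: smaller total / larger total, per faculty member.
    ratios = []
    for name in coder1:
        t1, t2 = sum(coder1[name]), sum(coder2[name])
        # Assumption: if both coders found no articles, agreement is 1.0.
        ratios.append(1.0 if max(t1, t2) == 0 else min(t1, t2) / max(t1, t2))
    return exact, mean(ratios)


# Hypothetical counts for two faculty (JABA, JEAB, TBA, BAP, TPR, AVB).
coder1 = {"A": [4, 0, 1, 0, 0, 0], "B": [0, 2, 0, 0, 0, 0]}
coder2 = {"A": [6, 0, 1, 0, 0, 0], "B": [0, 2, 0, 0, 0, 0]}
exact, partial = ior(coder1, coder2)
print(round(exact, 3), round(partial, 3))  # 0.917 0.857
```

Because many cells are zero for both coders, exact cell agreement can exceed partial agreement on totals, as in the values reported above.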

Results

A total of 353 faculty from 74 behavior analysis programs were included in the study. The number of programs was lower than the number of BACB-approved course sequences because programs located outside the USA were omitted and because some programs offer multiple approved course sequences. Table 1 lists the programs that were included. A comprehensive list of the faculty included in the analyses is not provided here, but interested readers may consult individual program websites for this information.

Table 1.

Behavior analysis programs that were included in the analysis

| | | | | | |
|---|---|---|---|---|---|
| Antioch University | Columbia University Teachers College | Montana State University—Billings | St. Cloud University | University of Florida | University of South Florida |
| Auburn University | Florida Institute of Technology | National University | Salve Regina University | University of Georgia | University of St. Joseph |
| Aurora University | Florida International University | Northern Arizona University | San Diego State University | University of Houston—Clearlake | University of Texas—Austin |
| Ball State University | Florida State University | Ohio State University | Simmons College | University of Kansas | University of the Pacific |
| Bay Path College | Forest Institute of Professional Psychology | Pennsylvania State University | Southern Illinois University | University of Maryland—Baltimore County | University of Washington |
| Caldwell College | Fresno Pacific University | Pennsylvania State University—Harrisburg | Spalding University | University of Massachusetts—Boston | University of Wisconsin—Milwaukee |
| California State University—Los Angeles | Georgian Court University | Queen’s College | Temple University | University of Massachusetts—Lowell | Utah State University |
| California State University—Northridge | Gwynedd—Mercy College | Regis College | Texas A&M University | University of Memphis | West Virginia University |
| California State University—Sacramento | Hunter College | Rider University | Texas Technological University | University of Minnesota | Western Michigan University |
| California State University—Stanislaus | Jacksonville State University | Rowan University | University of Arizona | University of Nevada—Reno | Western New England University |
| Cambridge College | McNeese State University | Rutgers University | University of Cincinnati | University of North Carolina—Wilmington | Westfield State University |
| Chicago School of Professional Psychology | Mercy College | Sage Colleges | University of Colorado—Denver | University of North Texas | Youngstown State University |
| Clemson University | Mercyhurst University | | | | |

Below, we present three analyses. First, we determined the total number of publications in each target behavior analysis journal authored by faculty in a given program. The purpose of this analysis was to identify the journals in which faculty in ABA programs tend to publish and to highlight programs whose faculty collectively have made noteworthy research contributions. Second, we determined the total number of publications authored by individual faculty members, to identify normative trends in individual research productivity and to highlight individuals who have made noteworthy research contributions. Third, we examined two possible correlates of program-level research productivity: whether a program was also ABAI accredited and whether it offered supervised field experience that contributes to graduates’ eligibility to sit for the BACB certification examination.

Publications by Program

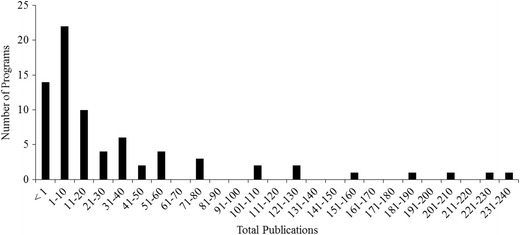

The mean number of faculty per program was 4.7 (Mdn = 4.0, SD = 3.7), and the mean number of publications per program, for all faculty combined, was 34.4 (Mdn = 11.5, SD = 54.3). Figure 1 displays a frequency distribution of program-level publication totals in intervals of ten articles (0, 1–10, 11–20, etc.). Program-level research productivity varied considerably. For example, in nine programs (12.1 % of the total), faculty were collectively responsible for 100 or more articles in the target behavior analysis journals, whereas in roughly half of the programs, faculty collectively published fewer than ten articles (N = 36, 48.6 %). In 14 programs (18.9 %), faculty had published no articles in the target journals.
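The binning scheme and summary statistics above can be sketched as follows. The helper names and counts are hypothetical, and the sketch assumes the reported SDs are sample standard deviations (n − 1 denominator), which the text does not state.

```python
from collections import Counter
from statistics import mean, median, stdev


def bin_label(n: int, width: int = 10) -> str:
    """Label a publication count: a separate 0 bin, then 1-width, width+1 to 2*width, ..."""
    if n == 0:
        return "0"
    low = ((n - 1) // width) * width + 1
    return f"{low}-{low + width - 1}"


def describe(counts: list[int], width: int = 10):
    """Return bin frequencies plus mean, median, and sample SD of the counts."""
    freq = Counter(bin_label(n, width) for n in counts)
    return freq, mean(counts), median(counts), stdev(counts)


# Hypothetical program totals, right-skewed like the reported distribution.
freq, m, mdn, sd = describe([0, 0, 3, 12, 150])
print(dict(freq))  # {'0': 2, '1-10': 1, '11-20': 1, '141-150': 1}
print(m > mdn)     # True: one prolific program pulls the mean above the median
```

With `width=5`, the same helper yields the 0, 1–5, 6–10 intervals used for individual faculty in Figure 2. The gap between mean and median in the toy data mirrors the reported M = 34.4 versus Mdn = 11.5.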

Fig. 1.

Histogram showing the distribution across behavior analysis programs (X) in the number of publications in major behavior analytic journals (Y)

Table 2 lists the programs contributing the most publications overall, per professor, and in each target journal. Across all journals, the ten most prolific programs accounted for 60.4 % of the total publications.

Table 2.

Top 10 publishing programs by total publications across journals

| Total | Publications per professor | JABA | JEAB | TBA | BAP | TPR | AVB |
|---|---|---|---|---|---|---|---|
| University of Maryland—Baltimore County | University of Florida | University of Florida | West Virginia University | University of Kansas | University of Kansas | University of Nevada—Reno | Western Michigan University |
| University of Florida | California State University—Los Angeles | Western New England University | University of Maryland—Baltimore County | Western Michigan University | Western New England University | Southern Illinois University | University of Nevada—Reno |
| Western New England University | Southern Illinois University | University of Maryland—Baltimore County | Western Michigan University | West Virginia University | University of Wisconsin—Milwaukee | Western Michigan University | California State University—Sacramento |
| University of Kansas | West Virginia University | University of Kansas | Utah State University | University of Maryland—Baltimore County | Western Michigan University | University of Wisconsin—Milwaukee | California State University—Los Angeles |
| Western Michigan University | University of Wisconsin—Milwaukee | Southern Illinois University | University of Nevada—Reno | University of Wisconsin—Milwaukee | University of Maryland—Baltimore County | University of Kansas | University of Maryland—Baltimore County |
| West Virginia University | University of Maryland—Baltimore County | University of Houston—Clearlake | University of North Carolina—Wilmington | University of Nevada—Reno | Rutgers University | Queen’s College | University of North Texas |
| Southern Illinois University | University of Houston—Clearlake | Florida Institute of Technology | University of Kansas | Rutgers University | Southern Illinois University | Youngstown State University | Chicago School of Professional Psychology |
| University of Wisconsin—Milwaukee | University of Nevada—Reno | Ohio State University | University of North Texas | University of North Texas | University of Houston—Clearlake | Spalding University | University of the Pacific |
| University of Nevada—Reno | Western Michigan University | University of South Florida | University of Florida | California State University—Los Angeles | Seven schoolsᵃ | California State University—Los Angeles | Westfield State University |
| Florida Institute of Technology | University of Texas—Austin | University of Wisconsin—Milwaukee | University of Wisconsin—Milwaukeeᵇ | University of Florida | West Virginia Universityᵇ | Southern Illinois Universityᵇ | |
| Queen’s Collegeᵇ | Columbia University Teachers Collegeᵇ | Queen’s Collegeᵇ | | | | | |
| Jacksonville State Universityᵇ | Caldwell Collegeᵇ | Western New England Universityᵇ | | | | | |
| McNeese State Universityᵇ | California State University—Stanislausᵇ | | | | | | |

ᵃ More than four programs are tied for tenth in total publications

ᵇ Programs that are tied for tenth in total publications
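Because several positions in Table 2 involve ties, a top-10 list and its share of all publications depend on how ties at the cutoff are handled. The sketch below assumes that every program tied with the tenth-ranked value is kept. The text does not say whether the 60.4 % figure counts such tied programs, and the helper names and totals are hypothetical.

```python
def top_k_with_ties(totals: dict[str, int], k: int = 10) -> list[str]:
    """Return the k highest-scoring names plus any names tied with the k-th score."""
    ranked = sorted(totals, key=totals.get, reverse=True)  # stable for equal scores
    if len(ranked) <= k:
        return ranked
    cutoff = totals[ranked[k - 1]]
    return [name for name in ranked if totals[name] >= cutoff]


def share_of_total(totals: dict[str, int], names: list[str]) -> float:
    """Proportion of all publications contributed by the listed names."""
    return sum(totals[n] for n in names) / sum(totals.values())


# Hypothetical program totals; P3 and P4 tie for fourth place.
totals = {"P1": 50, "P2": 30, "P3": 10, "P4": 10, "P5": 25, "P6": 5}
top = top_k_with_ties(totals, k=4)
print(top)                                    # ['P1', 'P2', 'P5', 'P3', 'P4']
print(round(share_of_total(totals, top), 3))  # 0.962
```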

Different programs tended to publish frequently in different journals, which may say something about the type of research conducted within those programs; for present purposes, however, we did not examine article content. Program-level publication counts were nonetheless correlated across several journals (Table 3). In particular, program faculty who published frequently in BAP also published frequently in JABA and TBA, and program faculty who published frequently in TBA also published frequently in JEAB and TPR.

Table 3.

Correlation matrix of publications by programs across major behavior analytic journals

| | Total | JABA | JEAB | TBA | BAP | TPR | AVB |
|---|---|---|---|---|---|---|---|
| Total | 1 | | | | | | |
| JABA | .892ᵃ | 1 | | | | | |
| JEAB | .631ᵃ | .302ᵃ | 1 | | | | |
| TBA | .716ᵃ | .428ᵃ | .592ᵃ | 1 | | | |
| BAP | .733ᵃ | .666ᵃ | .306ᵃ | .696ᵃ | 1 | | |
| TPR | .492ᵃ | .210 | .338ᵃ | .534ᵃ | .324ᵇ | 1 | |
| AVB | .459ᵃ | .239ᵇ | .360ᵃ | .410ᵃ | .193 | .577ᵃ | 1 |

ᵃ Correlation is significant at the 0.01 level (2-tailed)

ᵇ Correlation is significant at the 0.05 level (2-tailed)
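A correlation matrix like Table 3 can be sketched as follows, assuming Pearson correlations computed across the 74 programs (the text does not name the coefficient). The helper names are hypothetical. Each coefficient is tested against zero with t = r√(n − 2) / √(1 − r²) on n − 2 degrees of freedom.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_for_r(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing whether r differs from zero."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


def lower_triangle(columns: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Correlations for every journal pair on or below the diagonal."""
    names = list(columns)
    return {
        (row, col): pearson_r(columns[row], columns[col])
        for i, row in enumerate(names)
        for col in names[: i + 1]
    }


# With 74 programs (df = 72), the two-tailed .05 critical t is about 1.99.
print(round(t_for_r(0.239, 74), 2))  # 2.09
print(round(t_for_r(0.210, 74), 2))  # 1.82
```

Under these assumptions, r = .239 clears the .05 threshold and r = .210 does not, which agrees with how Table 3 marks the AVB–JABA and TPR–JABA coefficients.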

Publications by Individual Faculty Members

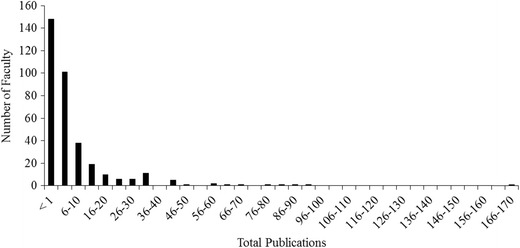

The mean number of publications authored by individual faculty members was 7.2 (Mdn = 1.0, SD = 15.6). Figure 2 displays a frequency distribution of the number of publications authored by individual faculty members, in intervals of five articles (0, 1–5, 6–10, etc.). Individual productivity varied considerably. Eight faculty members (2.3 % of the total) had each published more than 50 articles in the target behavior analysis journals, whereas more than two thirds of faculty had published between 0 and 5 articles (N = 249, 70.5 %), and 147 (41.6 %) had published no articles in any of the target journals.

Fig. 2.

Histogram showing the distribution across behavior analysis faculty (X) in the number of publications in major behavior analytic journals (Y)

Table 4 lists the ten most prolific faculty members overall and in each target behavior analysis journal. Across all journals, these individuals accounted for 29 % of the total articles, although individual faculty tended to specialize in particular journals rather than publish frequently in several. Table 5 shows some moderate correlations in article counts across journals. For example, top publishing faculty members in BAP also tended to be among the top publishers in JABA, and top publishers in TBA were also among the top publishers in JEAB and TPR.

Table 4.

Top 10 publishing faculty across journals

| Total | JABA | JEAB | TBA | BAP | TPR | AVB |
|---|---|---|---|---|---|---|
| Brian A Iwata | Brian A Iwata | Kennon A Lattal | Edward K Morris | Derek D Reed | Linda J Parrott Hayes | Caio Miguel |
| Kennon A Lattal | Gregory Hanley | A Charles Catania | Kennon A Lattal | Gregory Hanley | Alan Poling | Henry Schlinger |
| Alan Poling | Dorothea C Lerman | Alan Poling | Alan Poling | Dorothea C Lerman | Mark Dixon | A Charles Catania |
| A Charles Catania | Nancy Neef | Steven C Hayes | Henry Schlinger | Douglas W Woods | Ruth Anne Rehfeldt | Richard Malott |
| Gregory Hanley | Rachel Thompson | Mark Galizio | A Charles Catania | Alan Polingᵃ | John (Jay) C Moore | Alan Poling |
| Dorothea C Lerman | Raymond Miltenberger | Greg Madden | Steven C Hayes | Brian A Iwataᵃ | Edward K Morris | John Eshleman |
| Edward K Morris | Timothy R Vollmer | Amy Odum | Jonathan Kanter | Mark Dixonᵃ | David Morgan | Matthew Normand |
| Mark Dixon | Iser DeLeon | Michael Perone | Sigrid Glenn | Claire S P Pipkinᵃ | Steven C Hayes | Roger Tudor |
| Nancy A Neef | Mark Dixon | John (Jay) C Moore | John (Jay) C Moore | Amanda Karstenᵃ | Michael Clayton | Steven C Hayes |
| Rachel Thompson | Jeffrey Tiger | Jesse Dallery | Brian A Iwata | Megan Heinickeᵃ | Kenneth Reeve | Ruth Anne Rehfeldtᵃ |
| Jeffrey Tigerᵃ | Sigrid Glennᵃ | | | | | |
| Nicholas Vanselowᵃ | | | | | | |
| Frank Birdᵃ | | | | | | |

ᵃ Faculty tied for tenth in total publications

Table 5.

Correlation matrix of publications by individual faculty members across major behavior analytic journals

| | Total | JABA | JEAB | TBA | BAP | TPR | AVB |
|---|---|---|---|---|---|---|---|
| Total | 1 | | | | | | |
| JABA | .810ᵃ | 1 | | | | | |
| JEAB | .521ᵃ | .024 | 1 | | | | |
| TBA | .575ᵃ | .163ᵃ | .453ᵃ | 1 | | | |
| BAP | .391ᵃ | .413ᵃ | .017 | .115ᵇ | 1 | | |
| TPR | .442ᵃ | .074 | .286ᵃ | .499ᵃ | .093 | 1 | |
| AVB | .324ᵃ | .038 | .305ᵃ | .348ᵃ | .065 | .317ᵃ | 1 |

ᵃ Correlation is significant at the 0.01 level (2-tailed)

ᵇ Correlation is significant at the 0.05 level (2-tailed)

Accreditation and Publications

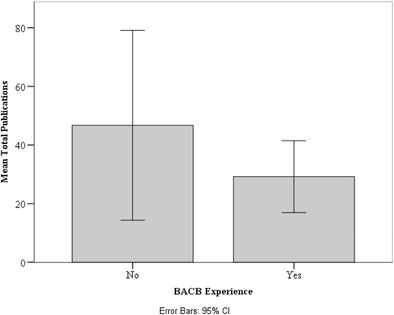

Figure 3 compares the mean number of publications in target journals authored by the faculty of programs that do and do not offer BACB-supervised field experience hours. Faculty of programs that offer BACB-supervised field experience published fewer articles on average (N = 52, M = 29.2, Mdn = 11.5, SD = 44.0) than faculty of programs that do not (N = 22, M = 46.7, Mdn = 11.0, SD = 73.0); however, the difference was not statistically significant (t (72) = 1.27, p = .20).

Fig. 3.

The mean number of publications across programs that offer BACB experience hours and programs that do not. Error bars represent a 95 % confidence interval

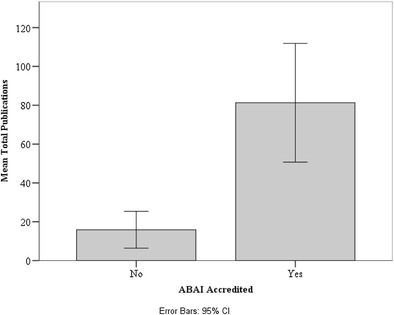

Figure 4 displays the mean number of publications by faculty of programs that are ABAI accredited and programs that are not. Faculty of accredited programs published significantly more articles (N = 21, M = 81.2, Mdn = 60, SD = 67.1) than faculty of nonaccredited programs (N = 53, M = 15.8, Mdn = 5, SD = 34.2) according to an independent samples t test (t (59) = 5.53, p < .001).

Fig. 4.

The mean number of publications across programs that are ABAI accredited and those that are not. Error bars represent a 95 % confidence interval
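The field-experience comparison can be reproduced from the reported summary statistics alone. The sketch below assumes a pooled-variance (Student’s) independent-samples t test, which is consistent with the reported 72 degrees of freedom (52 + 22 - 2). `pooled_t` is a hypothetical helper, not the analysis software used.

```python
from math import sqrt


def pooled_t(n1: int, m1: float, sd1: float,
             n2: int, m2: float, sd2: float) -> tuple[float, int]:
    """Student's independent-samples t from summary statistics; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Programs without vs. with BACB-supervised field experience (values from the text).
t, df = pooled_t(22, 46.7, 73.0, 52, 29.2, 44.0)
print(round(t, 2), df)  # 1.27 72
```

Because the published means and SDs are rounded to one decimal place, values recomputed this way may differ from published ones in the second decimal.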

Discussion

In comparing graduate training programs, a critical feature of any proposed quality metric is that it distinguishes among programs. Our analyses show that research productivity, measured via counts of articles published in selected behavior analysis journals, clearly does this: There is a great deal of variation in the productivity of program faculty collectively and of individual program faculty members. For consumers who wish to determine which training programs offer a strong research culture, productivity measured in this way may be a useful place to start.

Although we maintain that a program’s research culture matters, the present analysis cannot speak to how much it matters. We are aware of no objective means by which to decide how much a program’s research culture should be valued relative to other considerations such as cost, teaching quality, and the success of graduates in obtaining desirable employment. All that can be asserted based on the present analyses is that the faculty of different programs appear to be differentially engaged in the process of “knowledge creation” that sustains a knowledge-based industry like ABA. It is reasonable to infer that the quality of research-related training that a student can expect to receive covaries with faculty research engagement. To the extent that this is true, programs with high research productivity may be judged as “better,” along this one important dimension, than programs with lower research productivity.

We hope that the data presented here will promote a thoughtful discussion among behavior analysts about the proper role of research training for future practitioners, as well as about the best ways of quantifying the research culture in training programs. On the latter point, it may well be possible to improve upon our analyses. For example, we quantified the productivity of individual faculty members in terms of cumulative career publication records. This approach is suitable for faculty members who spend all or most of their careers at a single institution, but possibly not for those who move between institutions. Consider a faculty member who has just begun working at University A after several years at University B. In our analyses, this person’s publication record while at University B would count within University A’s data. Moreover, our data reflect career accomplishments rather than snapshots of current research productivity. A faculty member who was productive early in his or her career but has recently ceased doing research would be portrayed favorably in our results (similarly, an individual who has only recently become productive in research, perhaps after moving to an academic position from a purely clinical appointment, would be portrayed unfavorably). Such measurement ambiguities will need to be resolved before research productivity can be considered a reliable measure of program quality.
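The attribution problem described above can be made concrete by comparing crediting rules. The sketch below is hypothetical throughout. The `Publication` record, the function names, and the example data for Universities A and B are illustrative assumptions, not the authors' data or procedure. `career_credit` mirrors the cumulative, current-affiliation approach described above, while `affiliation_credit` and `recent_credit` are two possible alternatives.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Publication:
    # Hypothetical record: one article by one faculty member.
    author: str
    year: int
    affiliation: str  # institution listed on the article when it appeared


def career_credit(pubs, current_affiliation):
    """Credit every article to the author's current program, regardless of when it appeared."""
    return dict(Counter(current_affiliation[p.author] for p in pubs))


def affiliation_credit(pubs):
    """Credit each article to the institution listed at the time of publication."""
    return dict(Counter(p.affiliation for p in pubs))


def recent_credit(pubs, current_affiliation, since_year):
    """Snapshot of current productivity: only articles from since_year onward, credited to the current program."""
    recent = [p for p in pubs if p.year >= since_year]
    return career_credit(recent, current_affiliation)


# Hypothetical example: X moved from University B to University A;
# Y has always been at University A but has not published recently.
pubs = [
    Publication("X", 2008, "University B"),
    Publication("X", 2010, "University B"),
    Publication("X", 2012, "University B"),
    Publication("X", 2015, "University A"),
    Publication("Y", 2005, "University A"),
    Publication("Y", 2006, "University A"),
]
current = {"X": "University A", "Y": "University A"}

print(career_credit(pubs, current))
print(affiliation_credit(pubs))
print(recent_credit(pubs, current, since_year=2013))
```

In this toy example, career crediting gives University A all six articles, and affiliation crediting splits them three and three. A snapshot starting in 2013 credits University A with only the single article written there. The choice of rule can therefore change a program's apparent productivity substantially.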

The preliminary nature of our data limits the conclusions that may be drawn from them, but we can illustrate some of the types of issues that such data can bring into focus. For example, once training programs are objectively distinguished in terms of research productivity, it is possible to debate the degree to which this information should affect program approval by external bodies. Our data, combined with the BACB and ABAI mission statements mentioned in the Introduction, suggest that research productivity is weighted more heavily in ABAI program accreditation than in BACB course-sequence approval. Whether this is a desirable state of affairs can be the topic of an interesting discussion.

The program-level variability in research productivity that we described raises questions about how the culture of our scientist-practitioner field may be affected by the inclusion of individuals who graduated from programs that provide relatively little research emphasis. Our data suggest that students in many BACB-approved course sequences will not be taught by faculty members who actively engage in behavior analytic research. It is, however, a matter of possible contention whether it is necessary for each ABA professional to embody the “scientist” in the scientist-practitioner model, and if so, to what degree.

It is also possible, of course, to debate what is implied and desired when discussing a program’s “research emphasis.” A student may develop skills for critically evaluating research without necessarily conducting research. Our analyses emphasized the amount of publishable research that program faculty have been conducting, which can differ from what students in a program can expect to learn about research. Related to this concern is the reality that active researchers are not necessarily the best teachers. From our own experiences, we are aware of accomplished researchers who devoted too little effort to teaching, or who were unable to connect their specialized expertise to the educational needs of students. This raises the possibility that tradeoffs could exist between a program’s research emphasis and other desirable features. As another example, we found possible evidence that faculty in programs that offer supervised practical experience publish less than faculty in programs that do not. Why this may be the case is impossible to deduce from our data. Do the demands of supervision interfere with conducting research? Do faculty who conduct research tend to shy away from providing field supervision? The present analyses cannot answer such questions, but they are important to pose, because they begin the process of better defining the contributions to practitioner training that active researchers are expected to make.

To summarize, the current data are presented not to serve as the final word on research productivity and its disparity across BACB training programs, but rather as a starting point for critical discussions in our field. The landscape of behavior analysis is dynamic, with increasing demand for qualified practitioners and new training programs coming online every year. Although the “knowledge creation” mission of behavior analysis continues to be met with new research, our data suggest that a large proportion of new research is being produced by a relatively small number of faculty members, which results in an unsettlingly large proportion of new practitioners being trained by faculty with little or no research track record. The conjunction of these factors raises thorny questions about the path that lies ahead for our field. For now, we can only identify a stark disconnect: To appreciate and understand research, clinicians in training require immersion in a research culture that many ABA graduate training programs currently do not possess. Now is an appropriate time for the behavior analysis community to begin a general dialog on the factors that define high-quality training programs, and a specific discussion of the role of research training in the development of future practitioners.

References

- About the BACB. (n.d.). Retrieved March 11, 2015, from http://www.bacb.com/index.php?page=1.

- Altbach, P. G. (2010, November 11). The state of the rankings. Inside Higher Ed. https://www.insidehighered.com/views/2010/11/11/altbach.

- Association for Behavior Analysis International. (2015). Guidelines for the accreditation and reaccreditation of programs in behavior analysis. Retrieved March 11, 2015, from https://www.abainternational.org/media/67232/abai_accreditation_manual_2013.pdf.

- Baer, D. M., Wolf, M. M., & Risley, T. R. (1968). Some current dimensions of applied behavior analysis. Journal of Applied Behavior Analysis, 1(1), 91–97. doi:10.1901/jaba.1968.1-91.

- Bailey, J. S., & Burch, M. R. (2002). Research methods in applied behavior analysis. Thousand Oaks: Sage.

- Behavior Analyst Certification Board. (2013, May). BACB certificants now exceed 13,000 worldwide! BACB Newsletter. Retrieved from http://www.bacb.com/newsletter/BACB_Newsletter_5-13.pdf.

- Critchfield, T. S., Doepke, K. J., & Campbell, R. L. (2015). Origins of clinical innovations: Why practice needs science and how science reaches practice. In F. D. DiGennaro Reed & D. D. Reed (Eds.), Bridging the gap between science and practice in autism service delivery. New York: Springer.

- Dorsey, M. F., Weinberg, M., Zane, T., & Guidi, M. M. (2009). The case for licensure of applied behavior analysts. Behavior Analysis in Practice, 2(1), 53. doi:10.1007/BF03391738.

- Mace, F. C., & Critchfield, T. S. (2010). Translational research in behavior analysis: Historical traditions and imperative for the future. Journal of the Experimental Analysis of Behavior, 93(3), 293–312. doi:10.1901/jeab.2010.93-293.

- Recent Developments. (n.d.). Retrieved March 11, 2015, from http://www.bacb.com/index.php?page=100179.

- Sidman, M. (2011). Can an understanding of basic research facilitate the effectiveness of practitioners? Reflections and personal perspectives. Journal of Applied Behavior Analysis, 44(4), 973–991. doi:10.1901/jaba.2011.44-973.

- Williamson, I. O., & Cable, D. M. (2003). Predicting early career research productivity: The case of management faculty. Journal of Organizational Behavior, 24(1), 25–44. doi:10.1002/job.178.