Abstract

Quality improvement (QI) efforts affect a broader range of people than we often assume. These are the potential stakeholders for QI and its evaluation, and they have valuable perspectives to offer when they are consulted in planning, conducting and interpreting evaluations. QI practitioners are accustomed to consulting stakeholders to assess unintended consequences or patient experiences of care, but in many cases there are additional benefits to a broad inclusion of stakeholders. These benefits are better adherence to ethical standards, by assuring that all legitimate interests take part; more useful and relevant evaluation information; and better political buy-in to improve impact. Balancing various stakeholder needs for information requires skill in both politics and research management. These challenges have few pat answers, but there are several preferred practices, which are illustrated with practical examples.

Keywords: Communication, Decision making, Evaluation methodology, Quality improvement

This article explains and illustrates the benefits of consulting a broad range of stakeholders in evaluation of quality improvement (QI). Stakeholders are defined as those with an interest or ‘stake’ in an activity or its evaluation.1 QI practitioners are already encouraged to think about all the people who might be affected by a project, especially those who are adversely affected. And pragmatically, they know QI needs the buy-in of other professionals to get anything done. It may seem obvious that stakeholders should be engaged in evaluation, and it may come naturally in many cases. In other cases it may seem ‘nice to have’ but not essential. Yet it is dangerous to assume this. More deliberate focus on stakeholder consultation often has many benefits, as we will illustrate.

Stakeholder consultation means involving stakeholders in the conduct of evaluation, a central guiding principle of the evaluation profession.2 3 Stakeholder consultation can include a wide variety of activities, including articulating the evaluation's purpose, selecting questions and methods, probing assumptions, facilitating data collection and interpreting results.4 Stakeholders may simply need information or they may need recognition of their specific interest in a QI effort. They may also want a role in interpreting and framing the results. Given that ‘what gets measured gets done’, stakeholders may want to examine particular aspects that, if recognised, might further their own agendas. Too often, consultation is confined to just a few groups, or to gathering pro forma input, after which stakeholders may receive the findings in already-digested form. Then the evaluators wonder why their reports sit on a shelf gathering dust.

Consulting more stakeholders would have helped: an example

Consider the case of a collaborative improvement initiative in 29 primary care centres in the USA.5 The project aimed to increase patient and family support for self-management of chronic conditions. The evaluation of this initiative revealed many positive changes in the delivery of care, and patients improved their goal-setting. However, the centres varied widely in terms of patient behavioural changes, satisfaction and the self-management services themselves. QI practitioners reported two factors affecting this variation: a lack of payment and limited staff time for self-management services. These factors threatened sustainability as well.

The evaluators concluded that many of the centres had not consulted officials, payers and managers to include a business case for the improvement. A business case would address questions such as: what is the ancillary cost and allocation of staff time for this improvement? Is it worth the additional cost and labour? Are there more cost-effective alternatives? Does the improvement disrupt other processes? By the end of the evaluation of this QI initiative, many practitioners reported an urgent need to present a business case. The QI practitioners had an opportunity to collect data on the business case, but only 48% did so, while an additional 16% were planning to do so. In retrospect, the importance of a business case may be obvious; but QI is difficult, and people were preoccupied with achieving an improvement, not justifying it.

Consulting a wider variety of stakeholders confers three kinds of benefits.1–4 6 7 First, ethical practice is better assured, given that QI affects a broader range of people than evaluators might assume. Second, an evaluation is more likely to be useful, given that various perspectives and evaluation questions are considered from the start and employed to make sense of the results.6 7 Third, as this example demonstrates, there is a better chance that evaluation will have impact, because political buy-in is more likely—or at least, the danger spots will be known well in advance.

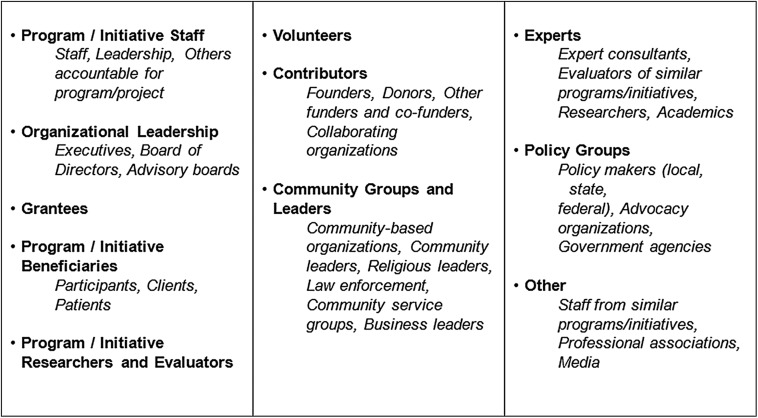

In the primary care example, the obvious stakeholders for QI evaluation were the healthcare organisations’ patients, front-line practitioners and line managers. Yet other stakeholders had power over the situation, both for the improvement and for sustainability. Powerful stakeholders in other situations might include taxpayers through their political representatives, patient care advocacy groups and those who have fiduciary responsibility system-wide to assure high-quality care. Even for highly specific, local QI evaluations, why not highlight the effort to others, such as QI teachers and researchers, or those who might want to adopt the QI strategy elsewhere? A generic list of stakeholders, from a useful guide, can be seen in figure 1.8

Figure 1. Types of stakeholders. Adapted from Ref. 8.

Anticipating stakeholder conflicts

Some readers may object that such a preoccupation with stakeholders is overkill, especially for local QI efforts that appear completely straightforward. Yet stakeholder needs sometimes do conflict, sometimes with major consequences for the quality and usefulness of evaluations. In their postmortem of eight large-scale, multisite evaluations of QI initiatives in the USA, Mittman and Salem-Schatz interviewed the evaluators, programme directors and front-line QI practitioners.9 They described legitimate tensions over the purpose of evaluation, priorities for data collection and, consequently, the use of the evaluations’ resources. Those doing QI often employed rapid-cycle improvement; therefore, they wanted to collect, learn and act on data addressing a variety of issues, in as close to ‘real-time’ as possible. The evaluators, by contrast, needed credible evaluation designs and uniform data collection in order to influence outside stakeholders: other practitioners who might adopt these QI methods and the policymakers who might encourage QI. In several cases, a conflict over the purpose of the evaluation and resources for data collection impaired both the data and its interpretation.

This is familiar ground in evaluation practice.1 In conducting and overseeing hundreds of evaluations, we have witnessed tensions among every possible combination of stakeholders. Political skill is needed to manage these tensions, but so is skill in managing evaluation resources. The very worst course of action is to ignore the tensions. They can be anticipated and managed through careful inclusion of stakeholders and transparency about decisions. By transparency, we mean careful and, if necessary, repeated explanations and updates about the choices being made for the study questions and methods.

Negotiating the use of evaluation resources

The stakeholders are potentially many, their questions and interests often differ and budgets for evaluation are limited. How then do we begin to balance stakeholder information needs? For managing evaluation resources, there are several potential avenues. Data collection is usually the biggest cost in an evaluation study. Although automation can reduce this cost, as in the case of electronic health records (EHR), the fact remains that data must be obtained, cleaned and often validated or audited. Furthermore, some important kinds of data may not be recorded in the EHR, thus adding to data collection cost. Sometimes it is acceptable for certain stakeholder questions to be addressed in less detail or with lower data quality than others. At a minimum, various stakeholder views can be better represented in both planning evaluations and interpreting findings, which are minor components of evaluation cost and offer potentially large returns.

Negotiation can help address which stakeholders’ questions get answered, in what sequence and at what level of detail, quality and expense. A very positive example comes from a multisite evaluation of efforts to incorporate effective counselling about healthy behaviour (diet, physical activity, tobacco and alcohol use) into routine primary care. Phase I supported the evaluation of individual, site-level approaches. Phase II integrated several of the most promising approaches from Phase I. By Phase II, practitioner-researchers had developed a common interest in data collection and conducted credible multisite studies of patient behavioural changes and cost-effectiveness of the interventions.10 11 We believe that there is great potential for such win-win situations, especially in QI evaluation, where the needs of various stakeholder groups are often logically linked.

Empowering the powerless: ethical and useful too

QI practitioners generally consult patients, families and communities as stakeholders in order to avoid harm, and they recognise patients’ experience of care as an important quality indicator. But the purpose of this article is to demonstrate additional benefits. For example, Salud America! is a research network to prevent Latino childhood obesity in the USA. The network started with input from hundreds of Latino community leaders from around the country, and now includes thousands. It was essential for Latino leaders to decide the priority topics for the network to study and evaluate. The ethical imperative was clear, given Latinos’ often marginal position in American society. Relevance and evaluation quality were equally served, because so little prevention had focused on Latinos to date, yet prevention strategies clearly needed to be tailored to Latino cultures and life situations. The wisdom of communities about their own situation could be tapped through their leaders, who participated in a nominal group process. Community processes, when authentic, can be extremely messy. Yet this effort was orderly—more than feasible, because the infrastructure was in place to obtain stakeholder input.12

Evaluation is usually a publicly financed or philanthropic activity, presumed to benefit society as a whole. In a representative democracy, the range of interests all contend in a marketplace of ideas.13 Yet social justice is at issue, too. We know that the power and privilege of some stakeholders tend to drown other voices out, frustrating a democratic process. This tension is acutely seen in healthcare today. Consensus is emerging that patients, their families and caretakers need a greater voice in the evaluation of QI, consistent with their greater participation in healthcare decision-making generally. Special effort is required precisely because patients tend to have fewer opportunities to get their voices heard, or may not have the expertise that some regard as necessary, so they can be marginalised.14 Because nurses and other healthcare workers can also be marginalised, evaluators committed to social justice would give priority to their concerns as well.

As seen in the Salud America! example, including such stakeholders can be beneficial for evaluation quality and relevance. Value judgements are inherent in every aspect of evaluation; by giving voice to marginalised groups, participatory evaluators aim to counteract the biases of the powerful.15

In evaluations of education and social welfare, challenging government officials’ bias has been found to be one of the most useful features of evaluation.1 It could be very helpful to QI: for example, let us say a hospital wants to translate patient education materials into the languages of recent immigrants. In most cases, there is a need to incorporate cultural meaning to assure understanding. If asked to evaluate the materials, immigrants might suggest revisions, but they might also challenge the assumption that a pamphlet would be effective; instead they might suggest that the hospital employ some immigrants to communicate directly and impart greater trust.

Writers on participatory evaluation offer useful strategies to give voice to marginalised stakeholders.15–17 First and foremost, they convey respect, eliminate the power differential between the professional and participants and recognise participants’ wisdom about their own needs and assets. They take the time necessary, carefully eliciting participants’ interests and questions, not just by providing information but by actively involving participants in every phase of the evaluation. Many helpful resources for participatory evaluation are available from the Community-Campus Partnerships for Health, https://ccph.memberclicks.net/.

Coping with complex systems, finding the leverage

Some elements of QI strategy transcend the project-level tactics: for example, how to create and support an organisational culture of QI. For such problems, we are still groping toward better strategy, and the opportunities are emerging, not fixed.18 19 Under these circumstances, broad stakeholder consultation is crucial, to make sense of findings from multiple perspectives. A recent multisite evaluation offers an example of sense-making together with stakeholders to better understand complexity. Thirteen US hospitals used a rapid-cycle approach to develop, test, modify and refine over 100 improvements in their medical and surgical units. As the work progressed, the evaluator realised that the approach was especially effective in empowering and engaging nurses on the frontlines of QI. In consultation with stakeholders, the evaluator shifted to focus on nurse engagement in QI, as well as overall improvements in healthcare quality. The reported outcomes (in particular, reduced falls and reduced nurse turnover) provided a success story that allowed the approach to flourish and spread.20

Another example of finding leverage in complex systems comes from the evaluation of Aligning Forces for Quality.21 Over an 8-year period, the initiative offered financial support and technical assistance to multi-stakeholder alliances for QI in the USA. In preparing for the evaluation of the initiative, the evaluators surveyed participating alliance members, as well as federal stakeholders charged with strategy to support QI, about their priority questions. The survey revealed that almost all stakeholders urgently needed to better understand the goals and meaning of patient-centred care, as well as strategies to achieve it. In the evaluation that followed, substantial attention was paid to this issue.22

Finding powerful motivations to implement a QI could also provide leverage. Low-income clinics in the USA often struggle with patients’ social needs that they are ill-equipped to handle. These painful problems (eg, lack of housing and transportation) impede health, make staff feel powerless to help and take precious time for little result. The Health Leads model recruits university volunteers to assist patients in finding help for these social needs, thus freeing up clinical staff time. Health Leads is currently undergoing evaluation. While Health Leads may demonstrate improved health outcomes, the model will likely spread more quickly if it is found to be effective in optimising clinic staff time and improving staff morale.

Conclusion

We urge QI evaluators to think more broadly about the stakeholders they consult. Different levels of engagement are appropriate for different kinds of challenges. Sometimes consultation comes naturally, as when QI practitioners evaluate unintended side effects. Sometimes it is ‘nice to have, but not crucial’. In still other cases, as we have shown, it is more than that, satisfying an ethical imperative, improving the chances for useful evaluation and helping to gain political buy-in for potential impact.

Acknowledgments

The authors are grateful to editors and anonymous reviewers for comments on an earlier draft of this paper.

Footnotes

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Shadish WR, Cook TD, Leviton LC. Foundations of program evaluation: theorists and their theories. Thousand Oaks, CA: Sage, 1991.

- 2. Yarbrough DB, Shulha LM, Hopson RK, et al. The program evaluation standards: a guide for evaluators and evaluation users. 3rd edn. Thousand Oaks, CA: Sage, 2011.

- 3. American Evaluation Association. Guiding Principles for Evaluators (Revised) 2004. http://www.eval.org/Publications/GuidingPrinciples.asp (accessed 15 Aug 2015).

- 4. Brandon PR, Fukunaga LL. The state of the empirical research literature on stakeholder involvement in program evaluation. Amer J Eval 2014;35:26–44. doi:10.1177/1098214013503699

- 5. White Mountain Research Associates. Adoption, sustainability and spread of self-management supports: an evaluation of the impact of the new health partnerships and quality allies learning communities on systems of clinical care delivery and patient outcomes. Walpole, NH: Author, 2008. http://www.rwjf.org/en/library/research/2008/07/adoption--sustainability-and-spread-of-self-management-supports.html (accessed 10 Aug 2015).

- 6. Cousins JB, Leithwood KA. Current empirical research on evaluation utilization. Review Ed Research 1986;56:331–64.

- 7. Ottoson JM, Hawe P. Knowledge utilization, diffusion, implementation, transfer, and translation: implications for evaluation. New Directions for Evaluation 2009;2009(124).

- 8. Preskill H, Jones N. A practical guide for engaging stakeholders in developing evaluation questions. Princeton, NJ: Robert Wood Johnson Foundation, 2009. http://www.rwjf.org/en/library/research/2009/12/a-practical-guide-for-engaging-stakeholders-in-developing-evalua.html (accessed 22 Aug 2014).

- 9. Mittman B, Salem-Schatz S. Improving research and evaluation around continuous quality improvement in health care. Princeton, NJ: Robert Wood Johnson Foundation, 2012. http://www.rwjf.org/content/dam/farm/reports/reports/2012/rwjf403010 (accessed 25 Nov 2014).

- 10. Fernald DH, Froshaug DB, Dickinson LM, et al. Common measures, better outcomes (COMBO): a field test of brief health behavior measures in primary care. Am J Prev Med 2008;35(Suppl):S414–22. doi:10.1016/j.amepre.2008.08.006

- 11. Dodoo MS, Krist AH, Cifuentes M, et al. Start-up and incremental practice expenses for behavior change interventions in primary care. Am J Prev Med 2008;35(Suppl):S423–30. doi:10.1016/j.amepre.2008.08.007

- 12. Leviton LC, Lavizzo-Mourey R. A research network to prevent obesity among Latino children. Am J Prev Med 2013;44(Suppl 3):S173–4. doi:10.1016/j.amepre.2012.11.021

- 13. Shadish WR, Leviton LC. Descriptive values and social justice. In: Benson A, Hinn DM, Lloyd C, eds. Visions of quality: how evaluators define, understand, and represent program quality. Oxford, UK: JAI Press, 2001:181–200.

- 14. Smith M, Saunders R, McGinnis JM. Best care at lower cost: the path to continuously learning health care in America. Washington DC: National Academy Press, 2013.

- 15. House ER. Evaluating: values, biases and practical wisdom. Charlotte, NC: Information Age Publishing, 2014.

- 16. Greene JC. Evaluation, democracy and social change. In: Shaw I, Greene JC, Mark M, eds. The SAGE Handbook of Evaluation. Thousand Oaks, CA: Sage, 2006:118–40.

- 17. Minkler M, Wallerstein N. Community-based participatory research for health: from process to outcomes. 2nd edn. San Francisco, CA: John Wiley, 2008.

- 18. Dixon-Woods M, McNicol S, Martin G. Ten challenges in improving quality in healthcare: lessons from the Health Foundation's programme evaluations and relevant literature. BMJ Qual Saf 2012;21:876–84. doi:10.1136/bmjqs-2011-000760

- 19. Hawe P. Lessons from complex interventions to improve health. Annu Rev Public Health 2015;36:307–23. doi:10.1146/annurev-publhealth-031912-114421

- 20. Pearson ML, Upenieks V, Yee T, et al. Spreading innovations in health care: approaches for disseminating transforming care at the bedside. Princeton, NJ: Robert Wood Johnson Foundation, 2008. http://www.rwjf.org/content/dam/farm/reports/issue_briefs/2008/rwjf35088 (accessed 13 Jan 2014).

- 21. Scanlon DP, Beich J, Alexander JA, et al. The aligning forces for quality initiative: background and evolution from 2005 to 2012. Am J Manag Care 2012;18(Suppl):s115–25.

- 22. McHugh M, Harvey JB, Kang R, et al. Community-level quality improvement and the patient experience for chronic illness care. Health Serv Res 2016;51:76–97. doi:10.1111/1475-6773.12315