Abstract

Objective

To elicit prescribers' preferences for behavioural economics interventions designed to reduce inappropriate antibiotic prescribing, and compare these to actual behaviour.

Design

Discrete choice experiment (DCE).

Setting

47 primary care centres in Boston and Los Angeles.

Participants

234 primary care providers, with an average of 20 years of practice.

Main outcomes and measures

Results of a behavioural economic intervention trial were compared to prescribers' stated preferences for the same interventions relative to monetary and time rewards for improved prescribing outcomes. In the randomised controlled trial (RCT) component, the 3 computerised prescription order entry-triggered interventions studied included: Suggested Alternatives (SA), an alert that populated non-antibiotic treatment options if an inappropriate antibiotic was prescribed; Accountable Justifications (JA), which prompted the prescriber to enter a justification for an inappropriately prescribed antibiotic that would then be documented in the patient's chart; and Peer Comparison (PC), an email periodically sent to each prescriber comparing his/her antibiotic prescribing rate with those who had the lowest rates of inappropriate antibiotic prescribing. A DCE study component was administered to determine whether prescribers felt SA, JA, PC, pay-for-performance or additional clinic time would most effectively reduce their inappropriate antibiotic prescribing. Willingness-to-pay (WTP) was calculated for each intervention.

Results

In the RCT, PC and JA were found to be the most effective interventions to reduce inappropriate antibiotic prescribing, whereas SA was not significantly different from controls. In the DCE however, regardless of treatment intervention received during the RCT, prescribers overwhelmingly preferred SA, followed by PC, then JA. WTP estimates indicated that each intervention would be significantly cheaper to implement than pay-for-performance incentives of $200/month.

Conclusions

Prescribing behaviour and stated preferences are not concordant, suggesting that relying on stated preferences alone to inform intervention design may eliminate effective interventions.

Trial registration number

NCT01454947; Results.

Keywords: discrete choice, conjoint analysis, stated preference, revealed preference, antibiotic prescribing

Strengths and limitations of this study

In this discrete choice experiment, prescribers were asked about which interventions they felt would be most effective in reducing their rates of inappropriate antibiotic prescriptions for acute respiratory infections; such information can be valuable when developing clinical quality improvement interventions.

This is one of few studies in the healthcare literature that not only elicits prescriber preferences using a discrete choice experiment but also compares these stated preferences to actual prescribing behaviour as observed in a randomised controlled trial.

Results indicate that stated and revealed preferences are not concordant, suggesting that clinical quality improvement programmes should not rely solely on clinician input, but instead combine expert-driven and clinician-driven approaches.

The discrete choice experiment may not necessarily capture true preference, but instead be a reflection of convenience and ease of use of a specific clinical quality improvement intervention.

Stated preferences for this group of healthcare providers may not necessarily reflect those of a national sample of providers.

Introduction

According to the Centers for Disease Control and Prevention, up to 50% of antibiotics are not optimally prescribed, leading to an estimated 2 million illnesses and 23 000 deaths due to antibiotic resistance alone.1 Most antibiotics in the USA are prescribed for acute respiratory tract infections (ARIs), and about half of these prescriptions are issued to patients with non-bacterial diagnoses.2 3 Despite attempts to curb inappropriate antibiotic prescribing through interventions such as physician and patient education, electronic clinical decision support and financial incentives, these have only resulted in modest reductions in antibiotic prescribing rates for non-bacterial ARIs.4

Clinical quality improvement interventions frequently rely upon changing clinicians' practice behaviours, such as reducing orders for inappropriate treatments or diagnostic tests. The consensus-recommended best practices in design and implementation of quality improvement interventions include some component of ‘local participatory’ approaches, that is, working with frontline staff, including the target population.5–7 This engagement may directly or indirectly influence the intervention design, but it is unclear if this practice yields the optimal design. For example, theory and survey results suggest that physicians are likely to indicate preferences for direct financial incentives (bonuses).8–10 However, studies of effectiveness have shown mixed results of direct incentives in practice.10 11 This raises questions regarding whether stated preferences for intervention features elicited in a participatory process should be incorporated into design.

One approach to changing prescribing behaviour applies ideas from the behavioural sciences, using social cues and subtle changes in the clinic environment to influence clinical decision-making.12 13 In fact, the UK government incorporates behavioural economics ‘nudges’ into its health services, and a recent study in the UK found that social norm feedback from England's Chief Medical Officer was highly effective in reducing inappropriate antibiotic prescribing at a low cost.14–16 In the USA, the ‘Use of behavioral economics and social psychology to improve treatment of acute respiratory infections (BEARI)’ study, a multisite cluster randomised controlled trial (RCT), applied behavioural techniques and assessed the impact of various behavioural interventions on the rates of inappropriate antibiotic prescribing in various practices in Illinois, Massachusetts and Southern California.17 18 As part of the BEARI study, a discrete choice experiment (DCE) was conducted to elicit prescribers' stated preferences for one intervention compared with another.

The use of discrete choice methods to elicit preferences for various programmes and interventions in healthcare has significantly increased in recent years.19–22 These methods have been used in the context of health system reforms or quality improvement programmes, and healthcare policies, programmes, services, incentives and interventions.9 20 While they provide valuable feedback regarding factors that should be considered for a given programme or intervention, few studies in healthcare have evaluated how stated preferences compare to real-life behaviour. Those that have assessed external validity have generally found that stated preferences are consistent with actual decision-making behaviour on an aggregate level, while individual-level concordance is limited.23 24 Previous studies evaluating an individual's decisions for vaccination or disease screening reported a positive predictive value for DCEs of 85% but a negative predictive value of only 26%; however, the authors noted that the majority of people opt for preventive healthcare in these situations, yielding the overall predictive values of the DCE.21 25 26 Furthermore, only one study has specifically considered physician decision-making, the ultimate driver of quality and cost of care.27

Based on these studies, DCE-elicited responses may generally reflect real-life behaviours. However, few studies have tested behaviour in an RCT and compared the results to DCE-elicited preferences for the same group of individuals (particularly physicians) to validate the DCE responses.28 Thus, the objective of this study was to elicit prescriber preferences for different behavioural economics interventions to reduce antibiotic prescribing, and compare these to actual prescribing behaviour as revealed in the BEARI study.

Methods

Design

Details for the BEARI study have been described elsewhere.17 Briefly, this was a multicentre trial conducted in Illinois, Massachusetts and Southern California to determine whether behavioural economic interventions could influence prescriber behaviour. The interventions implemented in the BEARI study included: (1) Suggested Alternatives (SA), which used computerised clinical decision support to suggest over-the-counter and prescription non-antibiotic treatment choices to clinicians prescribing an antibiotic for an ARI; (2) Accountable Justifications (JA), which prompted clinicians to enter an explicit justification when prescribing an antibiotic for an ARI that would then appear in the patient's electronic health record; and (3) Peer Comparison (PC), which was an email sent periodically to each prescriber that compared his/her rate of inappropriate antibiotic prescribing relative to top-performing peers.17 All clinicians received an education module reviewing ARI diagnosis and treatment guidelines, and each was assigned to 0, 1, 2 or all 3 interventions. Interventions were in place for 18 months at each practice site.

Upon completion of the study, all prescribers were asked to complete a computerised exit survey, which assessed prescribers' level of satisfaction with each of the interventions, and included the DCE. A DCE is used to determine an individual's preferences for specific alternatives given a specific scenario, rather than observing an individual's behaviour in real markets to determine his/her revealed preferences.29 The individual is presented with a pair of choices with varying levels of attributes and is asked to choose the preferred alternative based on the attributes presented (refer to Supplementary materials for details).

bmjopen-2016-012739supp.pdf (483.5KB, pdf)

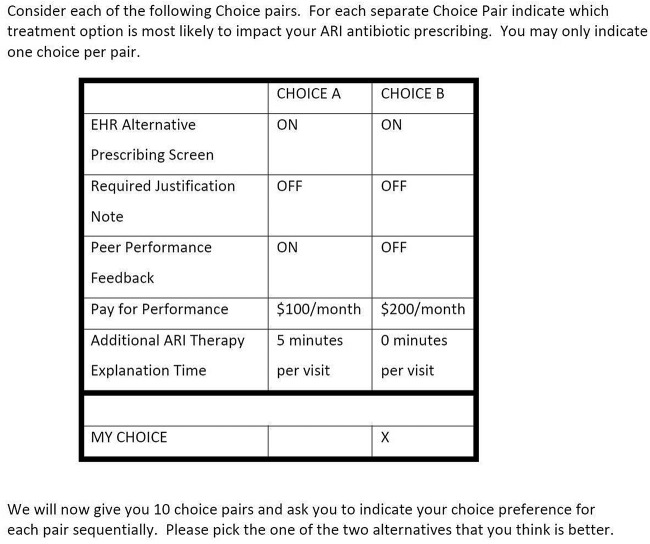

In the DCE, participants were presented with the following scenario, followed by a set of 10 choice pairs from which prescribers had to choose a preferred alternative that they felt would most likely reduce their ARI antibiotic prescribing:

Suppose that your Health Care Organization implements various measures to reduce overprescribing of antibiotics for acute respiratory infections (e.g. Acute bronchitis, Acute Pharyngitis, Sinusitis, etc.). Assume implementation with an electronic health record system with electronic prescribing options and physician monitoring that can be automatically enabled or disabled. You will be provided with a short patient handout that discusses antibiotic overprescribing for acute respiratory infections.

The five attributes included for each alternative were JA, SA, PC, pay-for-performance ($100, $200, or $0) and additional time spent with the patient (5 or 0 min) (table 1).

Table 1.

Attributes and descriptions

| Attribute | Description |
|---|---|
| SA | If you enter a target ARI diagnosis into the patient electronic health record, the EHR will prominently display a list of appropriate non-antibiotic prescription and non-prescription treatment alternatives to antibiotics |
| JA | If you order an antibiotic for a patient with an ARI, a new EHR screen will pop up that asks you to enter a short written justification for why the antibiotic prescription was necessary. What you write in this screen becomes part of the patient's permanent medical record, and therefore visible to other providers |
| PC | Each other week, you and your peers will receive in your work mailboxes (or email) an updated ranking comparing your antibiotic prescribing rate to the top-performing (ie, ‘best’) decile of your clinical peers |
| P4P | If you are able to reduce your rate of antibiotic prescribing for all of your patients with ARI to the lowest 10th centile of your peers, you will receive a predetermined monthly reward payment of either $100 or $200 |
| AT | Your productivity management record system will change the RVU/visit time allowed for each patient with ARI to increase by 5 min per visit as you respond to the patient's concerns regarding the alternative treatments for their ARI diagnosis. If you achieve the antibiotic prescription reduction goal of reducing your antibiotic prescriptions by 50%, this 5 min per visit time will be preserved |

ARI, acute respiratory tract infection; AT, additional time; EHR, Electronic Health Record; JA, Accountable Justifications; P4P, pay-for-performance; PC, Peer Comparison; RVU, relative value units; SA, Suggested Alternatives.

These attributes and their levels were chosen based on interviews with prescriber focus groups in Los Angeles. Each attribute was toggled on or off in the choice pairs presented to respondents (figure 1). Respondents were asked to evaluate a total of 10 choice pairs which were assigned using a fractional factorial design with orthogonality of main effects.30
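As an illustration of what "fractional factorial design with orthogonality of main effects" means, the following is a minimal sketch and not the design actually used in the study. It makes simplifying assumptions: all five attributes are treated as two-level (on/off) factors coded as -1/+1, although pay-for-performance had three levels in the survey, and the generators `P4P = SA*JA` and `AT = SA*PC` are hypothetical choices made only for this example. It builds 8 profiles instead of the full 32 and checks that every pair of attribute columns is uncorrelated.

```python
from itertools import product

# Attribute names follow table 1; coding each as -1 (off) / +1 (on) is an
# assumption made for this sketch.
FACTORS = ["SA", "JA", "PC", "P4P", "AT"]


def fractional_factorial():
    """Build a 2^(5-2) fractional factorial design with 8 profiles.

    The first three factors form a full factorial; the last two are
    generated as products of earlier columns (hypothetical generators).
    """
    runs = []
    for sa, ja, pc in product((-1, 1), repeat=3):
        runs.append((sa, ja, pc, sa * ja, sa * pc))
    return runs


def gram(runs):
    """Return X'X for the design matrix; off-diagonal zeros mean the
    main-effect columns are mutually orthogonal."""
    k = len(runs[0])
    return [[sum(r[i] * r[j] for r in runs) for j in range(k)] for i in range(k)]


design = fractional_factorial()
G = gram(design)

for row in design:
    print(dict(zip(FACTORS, row)))
print(G)
```

In a design like this, each attribute's main effect can be estimated independently of the others, which is the property the orthogonality requirement is meant to secure.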

Figure 1.

DCE treatment scenarios. DCE, discrete choice experiment.

A pilot survey was administered to a small number of prescribers in Los Angeles and Boston who were not involved in the BEARI study and thus not exposed to any of the interventions, and to a subset of prescribers in Chicago who did receive exposure to the BEARI interventions. The DCE was then administered as part of the exit survey for all prescribers involved in the BEARI study.

Data analysis

Baseline provider characteristics were collected at the start of the study, and information about the clinic environment and patient population mix were collected during the exit survey. Descriptive statistics were performed on these data.

Preference data from the DCE were analysed using multinomial logit and mixed logit models drawn 500 times to obtain a robust output. In addition, the models were run on the subset of responders who completed 6 or more of the 10 choice tasks, and the results compared to those of the entire sample. The impact of intervention assignment on stated preference was evaluated by including intervention assignment as an explanatory variable in the mixed logit model, and interacting intervention assignment on each programme. Willingness-to-pay (WTP) for each intervention type was calculated by taking the ratio of the estimated coefficient for a given attribute to the cost coefficient (here, the pay-for-performance coefficient), for example: $\mathrm{WTP}_{SA} = -\beta_{SA}/\beta_{P4P}$, with the sign following the convention used in table 4. This calculation was performed for each intervention to quantify the monetary value of each attribute included in the DCE.31
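The WTP ratio described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' Stata code: the coefficients are the rounded control-group values reported in table 3, the pay-for-performance coefficient is taken as the cost coefficient (dollars per month), and the negative sign is inferred from the sign convention of table 4. Because the inputs are rounded to three decimals, the output only approximates the published estimates.

```python
# Rounded mixed logit coefficients for the control group, copied from table 3.
CONTROL_COEFS = {
    "SA": 0.729,
    "JA": -0.079,
    "PC": 0.447,
    "Additional time": 0.082,
}
# Assumption: the pay-for-performance coefficient (per dollar of monthly
# reward) plays the role of the cost coefficient.
COST_COEF = 0.006


def wtp(attr_coef, cost_coef):
    """WTP as the negative ratio of attribute to cost coefficient.

    The minus sign is an assumption chosen to match table 4, where
    preferred attributes carry negative dollar values.
    """
    return -attr_coef / cost_coef


monthly = {name: wtp(beta, COST_COEF) for name, beta in CONTROL_COEFS.items()}
annual = {name: 12 * value for name, value in monthly.items()}

for name in CONTROL_COEFS:
    print(f"{name}: {monthly[name]:.2f} per month, {annual[name]:.2f} per year")
```

For the control group this gives roughly −$121.50 per month for SA and +$13.17 per month for JA, close to the −$120.52 and $12.98 in table 4; the small gaps are what rounding of the published coefficients would be expected to produce.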

All data were analysed in Stata V.12.0 (StataCorp. Stata Statistical Software: Release 12. StataCorp LP: College Station, Texas, 2011).

Results

Details for the outcomes of the BEARI study have been described elsewhere.18 Briefly, the BEARI study found that the most significant reduction in the rates of inappropriate antibiotic prescribing was achieved with JA and PC, when compared to the education control. SA yielded a non-significant reduction in inappropriate antibiotic prescribing rates.18 These results suggest that PC and JA may be the most effective interventions to influence prescribing.

Of the 253 prescribers recruited in the BEARI RCT, 234 responded to the DCE survey, although only 157 provided responses to all 10 treatment scenarios. Of the responders, 26 were exposed to all three BEARI interventions, 35 to SA and JA, 32 to SA and PC, and 23 to JA and PC in the BEARI RCT. Prescribers were primarily medical doctors with an average of 20 years of clinical practice, with a higher proportion of men in the control group (table 2).

Table 2.

Baseline demographics

| Characteristics | Total (n=234), number (%) | Control (n=24), number (%) | Treatment (n=210), number (%) |
|---|---|---|---|
| Age (years, mean) | 48.5 | 47.8 | 48.6 |
| Gender | | | |
| Female | 137 (58.5%) | 12 (50.0%) | 125 (59.5%) |
| Male | 81 (34.6%) | 11 (45.8%) | 70 (33.3%) |
| No response | 16 (6.8%) | 1 (4.2%) | 15 (7.1%) |
| Years of practice (mean) | 19.9 | 19.0 | 21.2 |
| Professional credentials | | | |
| Medical doctor | 174 (74.4%) | 18 (75.0%) | 156 (74.3%) |
| Nurse practitioner | 24 (10.3%) | 2 (8.3%) | 22 (10.5%) |
| Physician assistant | 13 (5.6%) | 2 (8.3%) | 11 (5.2%) |
| Doctor of osteopathy | 6 (2.6%) | 1 (4.2%) | 5 (2.4%) |
| No response | 17 (7.3%) | 1 (4.2%) | 16 (7.6%) |
| Specialty | | | |
| Internal medicine | 126 (53.5%) | 14 (58.3%) | 112 (53.3%) |
| Paediatrics | 23 (9.8%) | 2 (8.3%) | 21 (10%) |
| Family/general practice | 28 (12.0%) | 3 (12.5%) | 25 (11.9%) |
| Geriatrics | 3 (1.3%) | 0 | 3 (1.3%) |
| Rheumatology | 1 (0.43%) | 0 | 1 (0.43%) |
| Preventive medicine | 1 (0.43%) | 0 | 1 (0.43%) |
| Infectious disease | 1 (0.43%) | 0 | 1 (0.43%) |
| Midwife | 1 (0.43%) | 0 | 1 (0.43%) |
| No response/other | 50 (21.4%) | 5 (20.8%) | 45 (21.4%) |

Results of the mixed logit model are presented in table 3.

Table 3.

BEARI study mixed logit regression results

| Exposure group | OR (95% CI) | Coefficient | SE (95% CI) | p Value | p Value* |
|---|---|---|---|---|---|
| Full sample (n=239) | | | | | <0.001 |
| Pay-for-performance | 1.007 (1.006 to 1.008) | 0.007 | 0.001 (0.006 to 0.008) | 0.000 | |
| SA | 2.108 (1.727 to 2.573) | 0.746 | 0.102 (0.546 to 0.945) | 0.000 | |
| JA | 1.092 (0.85 to 1.404) | 0.088 | 0.128 (−0.162 to 0.339) | 0.490 | |
| PC | 1.446 (1.184 to 1.766) | 0.369 | 0.102 (0.169 to 0.568) | 0.000 | |
| Additional time | 1.159 (1.105 to 1.216) | 0.147 | 0.024 (0.1 to 0.195) | 0.000 | |
| Controls (n=24) | | | | | 0.414 |
| Pay-for-performance | 1.006 (1.003 to 1.009) | 0.006 | 0.002 (0.003 to 0.009) | 0.000 | |
| SA | 2.074 (1.302 to 3.303) | 0.729 | 0.237 (0.264 to 1.195) | 0.002 | |
| JA | 0.924 (0.512 to 1.67) | −0.079 | 0.302 (−0.67 to 0.513) | 0.794 | |
| PC | 1.563 (1.01 to 2.421) | 0.447 | 0.223 (0.01 to 0.884) | 0.045 | |
| Additional time | 1.085 (0.99 to 1.191) | 0.082 | 0.047 (−0.01 to 0.174) | 0.082 | |
| SA (n=135) | | | | | <0.001 |
| Pay-for-performance | 1.007 (1.006 to 1.009) | 0.007 | 0.001 (0.006 to 0.009) | 0.000 | |
| SA | 2.199 (1.626 to 2.973) | 0.788 | 0.154 (0.486 to 1.09) | 0.000 | |
| JA | 1.009 (0.719 to 1.418) | 0.009 | 0.173 (−0.33 to 0.349) | 0.957 | |
| PC | 1.552 (1.173 to 2.054) | 0.440 | 0.143 (0.159 to 0.72) | 0.002 | |
| Additional time | 1.192 (1.117 to 1.274) | 0.176 | 0.034 (0.11 to 0.242) | 0.000 | |
| JA (n=121) | | | | | <0.001 |
| Pay-for-performance | 1.006 (1.005 to 1.008) | 0.006 | 0.001 (0.005 to 0.008) | 0.000 | |
| SA | 1.955 (1.504 to 2.54) | 0.670 | 0.134 (0.408 to 0.932) | 0.000 | |
| JA | 1.451 (1.061 to 1.984) | 0.372 | 0.160 (0.059 to 0.685) | 0.020 | |
| PC | 1.429 (1.097 to 1.861) | 0.357 | 0.135 (0.093 to 0.621) | 0.008 | |
| Additional time | 1.174 (1.095 to 1.259) | 0.160 | 0.036 (0.09 to 0.23) | 0.000 | |
| PC (n=101) | | | | | <0.001 |
| Pay-for-performance | 1.007 (1.005 to 1.009) | 0.007 | 0.001 (0.005 to 0.009) | 0.000 | |
| SA | 2.589 (1.819 to 3.685) | 0.951 | 0.180 (0.598 to 1.304) | 0.000 | |
| JA | 1.079 (0.684 to 1.703) | 0.076 | 0.233 (−0.38 to 0.532) | 0.744 | |
| PC | 1.285 (0.901 to 1.832) | 0.250 | 0.181 (−0.105 to 0.605) | 0.167 | |
| Additional time | 1.200 (1.103 to 1.305) | 0.182 | 0.043 (0.098 to 0.266) | 0.000 | |

Results indicate the OR of each alternative relative to the control group, broken down by exposure group (full sample, controls, SA, JA and PC).

*p Value for significance across all coefficients for that sample.

BEARI, use of behavioral economics and social psychology to improve treatment of acute respiratory infections; JA, Accountable Justifications; PC, Peer Comparison; SA, Suggested Alternatives.

The χ2 statistic indicates that coefficients were significantly different from one another (p<0.001). Overall, prescribers tended to prefer any of the presented alternatives to prescriber education and guideline review, regardless of exposure group. Prescribers did not prefer PC as much as other interventions for reducing inappropriate antibiotic prescriptions. Instead, prescribers consistently preferred SA regardless of which intervention they were actually exposed to, including those in the control group. These results match those found in the pilot study; those who were not exposed to any of the BEARI interventions also strongly preferred SA (OR=2.75, p=0.003). Results were similar in the pilot group exposed to the interventions, though slightly less pronounced (OR=2.07, p=0.018) (see online supplementary table S1). These trends remained consistent whether prescribers were exposed to just one, two or all of the interventions, although the magnitude and significance of preference varied compared to the results by individual intervention (see online supplementary table S2).

In contrast, PC was not strongly preferred as an intervention most likely to reduce ARI antibiotic prescribing, though still statistically significant among controls (OR=1.56, p=0.045), and those exposed to SA (OR=1.55, p=0.002) and JA (OR=1.43, p=0.008). In fact, those who were exposed to PC had a non-significant preference for this intervention (OR=1.285, p=0.167), even though it was highly effective in reducing inappropriate antibiotic prescribing rates in the BEARI experiment. Overall, prescribers were either indifferent to or did not prefer JA (although this was non-significant), unless they were exposed to that intervention in the study, in which case there was a significant preference for the intervention (OR=1.45, p=0.02). In contrast, the pilot study showed that JA was non-preferred among non-exposed prescribers (OR=0.68, p=0.049), though preferred among those exposed (OR=1.79, p=0.247).

Pay-for-performance was marginally preferred among prescribers across all groups (control and treatment) with an OR=1.007, implying that each additional $100 in financial incentives would increase the probability of preferring pay-for-performance by 70%. This suggests that clinicians feel that every $100 increase in compensation would improve their inappropriate antibiotic prescribing rates by 70% relative to the control group. These results also generally held true in the pilot study. Additional office time was more strongly preferred than pay-for-performance, although this preference was non-significant in the control group (OR=1.085, p=0.082). Among those exposed to SA, JA and PC, additional office time was highly significantly preferred, with OR=1.19, 1.17 and 1.2, respectively (p<0.05). These results imply that overall, clinicians felt that additional time spent with a patient to form an appropriate diagnosis and treatment plan would be more effective than financial incentives to reduce inappropriate antibiotic prescribing.
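One way to read the 70% figure, assuming the pay-for-performance coefficient of 0.007 in table 3 is expressed per dollar of monthly reward, is as a scaling of the log-odds: 100 × 0.007 = 0.7. The short sketch below shows that arithmetic and, for comparison, the corresponding OR for a $100 increase obtained by exponentiating. The variable names are illustrative only.

```python
import math

# Rounded full-sample pay-for-performance coefficient from table 3
# (assumed here to be per dollar of monthly reward).
beta_per_dollar = 0.007

# OR for a $1 increase; close to the reported OR of 1.007.
or_per_dollar = math.exp(beta_per_dollar)

# Scaling to a $100 increase on the log-odds scale gives 0.7,
# which appears to be the source of the 70% figure in the text.
log_odds_per_100 = 100 * beta_per_dollar

# Exponentiating gives the OR for a $100 increase, about 2.01.
or_per_100 = math.exp(log_odds_per_100)

print(round(or_per_dollar, 3), round(log_odds_per_100, 2), round(or_per_100, 2))
```

On this reading, the 70% is a change in log-odds rather than in probability; the change in choice probability itself depends on the baseline probability of choosing an alternative.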

When adjusted for intervention in the interacted model, trends in stated preferences remained stable (see online supplementary table S3). Those exposed to JA did not favour SA as strongly as those exposed to SA or PC (OR=1.889, p<0.001, vs OR=2.085, p<0.001 and OR=2.406, p<0.001, respectively), as in the base case model. In addition, JA remained non-preferred even when stratified by exposure group, except for those exposed to JA in the BEARI trial.

WTP estimates indicate the value of each intervention to prescribers (table 4).

Table 4.

WTP estimates

| WTP | Monthly | Annually |
|---|---|---|
| Controls | | |
| SA | −$120.52 | −$1446.28 |
| JA | $12.98 | $155.81 |
| PC | −$73.84 | −$886.09 |
| Additional time | −$13.55 | −$162.61 |
| SA | | |
| SA | −$108.21 | −$1298.56 |
| JA | −$1.29 | −$15.48 |
| PC | −$60.38 | −$724.56 |
| Additional time | −$24.17 | −$290.04 |
| JA | | |
| SA | −$109.21 | −$1310.46 |
| JA | −$60.65 | −$727.81 |
| PC | −$58.16 | −$697.90 |
| Additional time | −$26.10 | −$313.23 |
| PC | | |
| SA | −$129.43 | −$1553.13 |
| JA | −$10.34 | −$124.06 |
| PC | −$34.07 | −$408.80 |
| Additional time | −$24.79 | −$297.43 |

Negative values indicate the value of that particular alternative to the prescriber; thus, the absolute value is the amount the prescriber is willing to accept; a positive number implies that the prescriber would be willing to give up that dollar amount rather than use the alternative presented.

JA, Accountable Justifications; PC, Peer Comparison; SA, Suggested Alternatives; WTP, willingness-to-pay.

SA was equivalent to $1299–$1553 in financial incentives per year, whereas PC was only worth $408–$886 per year. Each minute of additional office time for patient education was only worth an average $265 per year. These estimates imply that prescribers could be paid an average of $1400, $650 or $265 per year to achieve the same results as SA, PC and additional time, respectively. These results also imply that SA is equivalent to an additional 5–6 min of office time, and PC 3 min. In contrast, prescribers in the control group would rather sacrifice $13 of pay per month (or $156 annually) than be required to enter a justification for every inappropriate antibiotic prescription (JA).

Compared to the results of the DCE, the exit survey yielded an overwhelmingly ambivalent attitude towards the BEARI study as a whole, and towards each individual intervention. While 60% of prescribers agreed that providing feedback on clinician performance and using electronic decision support tools are effective ways to improve quality care, 42% of prescribers felt that the BEARI interventions were neither useful nor useless in improving antibiotic prescribing practices. When asked about the usefulness of the PC emails, 37% of prescribers responded neutrally, while 14% felt the information was not at all useful, and only 7% found it very useful. For SA and JA, 30% of prescribers responded neutrally, ∼20% felt the interventions were not at all useful and only 10% found the interventions to be very useful.

Discussion

Overall, the DCE-elicited preferences did not reflect actual behaviour as revealed in the RCT of prescriber interventions to alter antibiotic prescribing. Although the DCE overwhelmingly favoured SA as the most effective method for reducing inappropriate antibiotic prescriptions across treatment and controls, this intervention yielded similar antibiotic prescribing rates to controls throughout the duration of the BEARI trial.18 On the other hand, most prescribers generally felt that JA was ineffective in reducing their ARI antibiotic prescribing, despite its significant impact on inappropriate antibiotic prescription rates in the trial. PC was generally significantly preferred to provider education, although this preference was not as strong as that for SA. Regardless of the intervention group to which participants were assigned in the trial, the general trends in stated preference were similar across all groups in the DCE survey, while the trial showed that the trajectory of antibiotic prescribing rates for JA and PC relative to the control group consistently decreased compared to SA.

Differences between stated and revealed preferences may be due to a number of factors. Prescribers did not get to choose their intervention in the randomised trial; therefore, prescribing behaviour may not represent the prescriber's true ‘revealed preference’. Another consideration is that in the DCE task, prescribers may not be choosing the intervention that best reduces inappropriate antibiotic prescribing; instead, the stated preference might be for the intervention that is the least inconvenient for the prescriber. The DCE results are also reflected in the exit survey, which indicated that the majority of prescribers rated the usefulness of each intervention as 1, 2 or 3 on a scale of 1–5 (1 being not at all useful, 5 being very useful). Only 10% of prescribers found SA to be very useful (rating of 5 on a scale of 1–5), while just 8% and 6% found JA and PC to be very useful interventions, respectively. Given the lack of enthusiasm in the survey responses, it is unsurprising that even SA, the most preferred intervention according to the DCE, was only worth up to $1500 annually to clinicians, and the other interventions even less.

SA may be the most preferred intervention overall because it is a clinical decision support tool, as opposed to a socially motivated intervention such as JA or PC. The stated preference for SA is consistent with design recommendations that if Electronic Health Record (EHR) alerts are used, they should include actionable information.32 33 On the other hand, socially motivated interventions force the prescriber to conform to ‘social norms’ due to a concern about his/her social reputation and/or an awareness of the social norm of cooperation.34 Rather than applying social pressure, SA bears no social consequences if the prescriber chooses to ignore the alternatives presented. In contrast, prescribers' dislike of JA is consistent with findings describing the phenomenon of ‘alert fatigue’, and relatively poor efficacy of alerts that do not include actionable recommendations.33 35 However, JA in BEARI was actually highly effective in the RCT, and differs from similar studies that do not include peer accountability.33

One limitation of the BEARI study is that there were many incomplete responses to the DCE task. However, results for the entire sample were compared to results for those who completed six or more choice tasks, and there were no significant differences. In addition, geographic variation in prescribing habits and patient attitudes may have affected the rate at which inappropriate antibiotics were prescribed, regardless of the presence of a behavioural economic intervention. However, it is assumed that such attitudes and behaviours would have been balanced among all treatment groups after randomisation. Responses may have been different had the DCE been administered before the RCT took place, since prescribers would not have actually experienced the interventions prior to being asked about them. The pilot DCE was administered to prescribers who did not receive any of the interventions, yet it showed similar statistically significant stated preferences to those elicited from prescribers who participated in the trial. This, along with the fact that actual intervention assignment did not affect the overall trend of stated preferences, suggests that prescribers appear to express the same preferences regardless of exposure to the BEARI interventions. Finally, contrary to consensus regarding the inclusion of an opt-out option in DCE to reflect a respondent's choice in real life, no opt-out option was included.36 37 However, one study empirically evaluated the effect of an opt-out option on attribute preference, and found that while attribute value estimates differed, there were no notable differences in the relative order of the attributes (when compared to each other).36 Based on their findings, the authors recommended that an opt-out option always be included in a DCE if non-participation is an option in real life as well. 
In BEARI, prescribers did not have the option to opt out of participation, and it is likely that if the BEARI interventions were implemented in practice, prescribers would also be unable to opt out.

Compared to previous studies attempting to evaluate the external validity of DCEs in healthcare, this study provides stronger evidence that stated and revealed preferences can be non-concordant. Specifically, revealed preferences were determined through an RCT, and the same individuals were then asked to complete the DCE. Participants were not given a choice as to which intervention they wished to be implemented in clinic; instead, real-life behaviour was captured in the trial. Prescribers were also from various types of practice settings across the country, increasing the external validity of the results, rather than eliciting responses from a single practice site. Previous studies did not use an experimental design to determine revealed preferences, and thus, the results are not as robust.

Ultimately, had the BEARI trial relied upon the DCE preferences of the clinicians unexposed to the interventions to inform intervention selection, the least effective intervention (SA) would have been adopted and one of the effective interventions (JA or PC) would have been rejected. Relying on the exit survey responses would have led to none of the interventions being adopted. Our findings are consistent with recommendations that quality improvement interventions combining local participatory approaches with expert-driven approaches are likely to be most effective.38 In particular, approaches that engage targets in the implementation strategy rather than the design and development of interventions may optimally ensure that interventions are deployed in a pragmatic way without being influenced by the stated preferences of clinicians.

Conclusion

This study is part of a growing body of literature comparing stated and revealed preferences in a healthcare setting. Consistent with similar studies evaluating the external validity of DCE-stated preferences, this study showed that stated and revealed preferences are not concordant, and suggests that quality improvement interventions should not rely on frontline staff input alone. Future work should continue to test the external validity of DCE preferences in healthcare and the factors that contribute to differences between stated and revealed preferences.

Footnotes

Contributors: CLG and JWH made substantial contributions to the conception and design of this project. CLG, JWH, DM and JND assisted with data acquisition, analysis and interpretation of data for this manuscript. CLG drafted the manuscript, and JWH, DM and JND revised it critically for important intellectual content. All authors approved of the final version to be published. CLG is the guarantor.

Funding: The original BEARI trial was supported by the American Recovery & Reinvestment Act of 2009 (RC4 AG039115) from the National Institutes of Health/National Institute on Aging and Agency for Healthcare Research and Quality (Dr Doctor, University of Southern California). The project also benefited from technology funded by the Agency for Healthcare Research and Quality through the American Recovery & Reinvestment Act of 2009 (R01 HS19913-01) (Dr Ohno-Machado, University of California, San Diego). Data for the project were collected by the University of Southern California's Medical Information Network for Experimental Research (Med-INFER) which participates in the Patient Scalable National Network for Effectiveness Research (pSCANNER) supported by the Patient-Centered Outcomes Research Institute (PCORI), Contract CDRN-1306-04819 (Dr Ohno-Machado). JND has support from NIH and AHRQ for the submitted work.

Competing interests: None declared.

Ethics approval: The study was approved by the University Park Institutional Review Board at the University of Southern California (HS 11-00249).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Shapiro DJ, Hicks LA, Pavia AT et al. Antibiotic prescribing for adults in ambulatory care in the USA, 2007–09. J Antimicrob Chemother 2014;69:234–40. doi:10.1093/jac/dkt301

- 2. Steinman M. Changing use of antibiotics in community-based outpatient practice, 1991–1999. Ann Intern Med 2003;138:525–33. doi:10.7326/0003-4819-138-7-200304010-00008

- 3. Grijalva CG, Nuorti JP, Griffin MR. Antibiotic prescription rates for acute respiratory tract infections in US ambulatory settings. JAMA 2009;302:758–66. doi:10.1001/jama.2009.1163

- 4. Ranji SR, Steinman MA, Shojania KG et al. Antibiotic prescribing behavior Vol. 4: closing the quality gap: a critical analysis of quality improvement strategies. Technical Review 9 (Prepared by Stanford University-UCSF Evidence-based Practice Center under Contract No. 290-02-0017). Rockville, MD: Agency for Healthcare Research and Quality, 2006.

- 5. Van Bokhoven MA, Kok G, Van Der Weijden T. Designing a quality improvement intervention: a systematic approach. Qual Saf Health Care 2003;12:215–20. doi:10.1136/qhc.12.3.215

- 6. Demakis J, Mcqueen L, Kizer KW et al. Quality Enhancement Research Initiative (QUERI): a collaboration between research and clinical practice. Med Care 2000;38(Suppl 1):I17. doi:10.1097/00005650-200006001-00003

- 7. Rubenstein LV, Mittman BS, Yano EM et al. From understanding health care provider behavior to improving health care: the QUERI framework for quality improvement. Quality Enhancement Research Initiative. Med Care 2000;38(Suppl 1):I129–41. doi:10.1097/00005650-200006001-00013

- 8. Robinson JC. Theory and practice in the design of physician payment incentives. Milbank Q 2001;79:149–77, III. doi:10.1111/1468-0009.00202

- 9. Scott A. Eliciting GPs’ preferences for pecuniary and non-pecuniary job characteristics. J Health Econ 2001;20:329–47. doi:10.1016/S0167-6296(00)00083-7

- 10. Armour BS, Pitts MM, Maclean R et al. The effect of explicit financial incentives on physician behavior. Arch Intern Med 2001;161:1261–6. doi:10.1001/archinte.161.10.1261

- 11. Town R, Kane R, Johnson P et al. Economic incentives and physicians’ delivery of preventive care: a systematic review. Am J Prev Med 2005;28:234–40. doi:10.1016/j.amepre.2004.10.013

- 12. Loewenstein G, Brennan T, Volpp K. Asymmetric paternalism to improve health behaviors. JAMA 2007;298:2415–17. doi:10.1001/jama.298.20.2415

- 13. Frolich A, Talavera JA, Broadhead P et al. A behavioral model of clinician responses to incentives to improve quality. Health Policy 2007;80:179–93. doi:10.1016/j.healthpol.2006.03.001

- 14. Thaler RH, Sunstein CR. Nudge: improving decisions about health, wealth, and happiness. New York: Penguin Books, 2009.

- 15. Who We Are (cited 19 May 2016). http://www.behaviouralinsights.co.uk/about-us/

- 16. Hallsworth M, Chadborn T, Sallis A et al. Provision of social norm feedback to high prescribers of antibiotics in general practice: a pragmatic national randomised controlled trial. Lancet 2016;387:1743–52. doi:10.1016/S0140-6736(16)00215-4

- 17. Persell S, Friedberg M, Meeker D et al. Use of behavioral economics and social psychology to improve treatment of acute respiratory infections (BEARI): rationale and design of a cluster randomized controlled trial - study protocol and baseline practice and provider characteristics. BMC Infect Dis 2013;13:290–300. doi:10.1186/1471-2334-13-290

- 18. Meeker D, Linder JA, Fox CR et al. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices. JAMA 2016;315:562–70. doi:10.1001/jama.2016.0275

- 19. Ryan M, Gerard K. Using discrete choice experiments to value health care programmes: current practice and future research reflections. Appl Health Econ Health Policy 2003;2:55–64.

- 20. Whitty JA, Lancsar E, Rixon K et al. A systematic review of stated preference studies reporting public preferences for healthcare priority setting. Patient 2014;7:365–86. doi:10.1007/s40271-014-0063-2

- 21. De Bekker-Grob E, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ 2012;21:145–72. doi:10.1002/hec.1697

- 22. Clark MD, Determann D, Petrou S et al. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics 2014;32:883–902. doi:10.1007/s40273-014-0170-x

- 23. Krucien N, Gafni A, Pelletier-Fleury N. Empirical testing of the external validity of a discrete choice experiment to determine preferred treatment option: the case of sleep apnea. Health Econ 2015;24:951–65. doi:10.1002/hec.3076

- 24. Linley W, Hughes D. Decision-makers’ preferences for approving new medicines in Wales: a discrete-choice experiment with assessment of external validity. Pharmacoeconomics 2013;31:345–55. doi:10.1007/s40273-013-0030-0

- 25. Ryan M, Watson V. Comparing welfare estimates from payment card contingent valuation and discrete choice experiments. Health Econ 2009;18:389–401. doi:10.1002/hec.1364

- 26. Lambooij MH, Irene A, Veldwijk J et al. Consistency between stated and revealed preferences: a discrete choice experiment and a behavioural experiment on vaccination behaviour compared. BMC Med Res Methodol 2015;15:19. doi:10.1186/s12874-015-0010-5

- 27. Mark TL, Swait J. Using stated preference modeling to forecast the effect of medication attributes on prescriptions of alcoholism medications. Value Health 2003;6:474–82. doi:10.1046/j.1524-4733.2003.64247.x

- 28. Arrow K, Solow R, Portney PR et al. Report of the NOAA Panel on Contingent Valuation, US National Oceanic and Atmospheric Administration (NOAA), 1993. http://www.economia.unimib.it/DATA/moduli/7_6067/materiale/noaa%20report.pdf

- 29. Louviere J, Flynn T, Carson R. Discrete choice experiments are not conjoint analysis. J Choice Model 2010;3:57–72. doi:10.1016/S1755-5345(13)70014-9

- 30. Louviere J, Hensher D, Swait J. Stated choice methods analysis and applications. Cambridge, UK: Cambridge University Press, 2000.

- 31. Lancaster KJ. A new approach to demand theory. J Polit Econ 1966;74:132–57. doi:10.1086/259131

- 32. Maviglia SM, Zielstorff RD, Paterno M et al. Automating complex guidelines for chronic disease: lessons learned. J Am Med Inform Assoc 2003;10:154–65. doi:10.1197/jamia.M1181

- 33. Kawamoto K, Houlihan CA, Balas EA et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330:765. doi:10.1136/bmj.38398.500764.8F

- 34. Kerr N. Anonymity and social control in social dilemmas. In: Foddy M, Smithson M, Schneider S et al., eds. Resolving social dilemmas: dynamics, structural, and intergroup aspects. Philadelphia, PA: Psychology Press, 1999:103–9.

- 35. Kesselheim AS, Cresswell K, Phansalkar S et al. Clinical decision support systems could be modified to reduce ‘alert fatigue’ while still minimizing the risk of litigation. Health Aff (Millwood) 2011;30:2310–7. doi:10.1377/hlthaff.2010.1111

- 36. Veldwijk J, Lambooij MS, De Bekker-Grob EW et al. The effect of including an opt-out option in discrete choice experiments. PLoS ONE 2014;9:e111805. doi:10.1371/journal.pone.0111805

- 37. Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user's guide. Pharmacoeconomics 2008;26:661–77. doi:10.2165/00019053-200826080-00004

- 38. Parker LE, Ritchie MJ, Kirchner JAE et al. Balancing health care evidence and art to meet clinical needs: policymakers’ perspectives. J Eval Clin Pract 2009;15:970–5. doi:10.1111/j.1365-2753.2009.01209.x

Supplementary Materials

bmjopen-2016-012739supp.pdf (483.5KB, pdf)