ABSTRACT

Background

Implementation of the educational milestones benefits from mobile technology that facilitates ready assessments in the clinical environment. We developed a point-of-care resident evaluation tool, the Mobile Medical Milestones Application (M3App), and piloted it in 8 North Carolina family medicine residency programs.

Objective

We sought to examine variations in the use of the tool across programs and to explore the experiences of program directors, faculty, and residents to better understand the perceived benefits and challenges of implementing the new tool.

Methods

Residents and faculty completed presurveys and postsurveys about the tool and the evaluation process in their program. Program directors were interviewed individually. Interviews and open-ended survey responses were analyzed and coded using the constant comparative method, and responses were tabulated under themes.

Results

Common perceptions included increased data collection, enhanced efficiency, and increased perceived quality of the information gathered with the M3App. Residents appreciated the timely, high-quality feedback they received. Faculty reported becoming more comfortable with the tool over time, and a more favorable evaluation of the tool was associated with higher utilization. Program directors reported improvements in faculty knowledge of the milestones and resident satisfaction with feedback.

Conclusions

Faculty and residents credited the M3App with improving the quality and efficiency of resident feedback. Residents appreciated the frequency, proximity, and specificity of feedback, and faculty reported the app improved their familiarity with the milestones. Implementation challenges included lack of a physician champion and competing demands on faculty time.

What was known and gap

Implementation of the educational milestones benefits from mobile technology to facilitate real-time assessments in clinical settings, yet few of these have been tested to date.

What is new

Examination of the implementation of a mobile phone–based milestone evaluation tool to assess variations in use as well as to understand benefits and implementation challenges.

Limitations

Single specialty study may reduce generalizability.

Bottom line

Faculty and residents reported enhanced efficiency of assessment and quality of feedback; challenges included lack of physician champions and competing demands.

Introduction

The new accreditation system requires programs to evaluate residents using the educational milestones. The potential volume of evaluations required presents a new challenge for programs and their clinical competency committees (CCCs) [1]. Busy resident educators need efficient tools to enhance their ability to provide feedback to residents in real time, and CCCs need information from multiple sources to complete the required semiannual milestone-based resident evaluations. The ubiquity of computers, especially mobile devices [2], offers potential to achieve these goals. A few early pilots have shown promise in assessing progress toward milestones in emergency medicine [3] and in providing real-time feedback to surgery residents [4]. One recent Canadian study demonstrated successful use of narrative descriptions of competency-based behaviors in assessing resident performance [5].

We developed the Mobile Medical Milestones Application (M3App) with 3 objectives: (1) to facilitate recording narrative descriptions of resident behavior in real time by multiple observers (preceptors, staff, peers) in multiple settings (outpatient clinic, inpatient service, home visits, conferences); (2) to link narrative observations to specific subcompetencies in the Accreditation Council for Graduate Medical Education Milestones; and (3) to compile observations for each resident by milestone and subcompetency for use by the CCC.

In piloting the M3App it became evident (from periodic consultations with program directors, formal surveys, and usage tracking) that there was substantial variation in the volume of app usage and in the way programs used M3App reports. Because implementation of the M3App involved considerable change for program directors, faculty, and residents, it is important to consider the pilot implementation of the app in the context of what is known about change management. Although implementing change in medicine is recognized as difficult [6], Kotter's 8 Steps for Leading Change [7] provide an accepted framework for understanding change as a process.

In this article we describe the experience of program directors, faculty, and residents in implementing the M3App, with particular attention to how implementation varied and how that variation may be understood from the standpoint of change management.

Methods

The Mobile Application

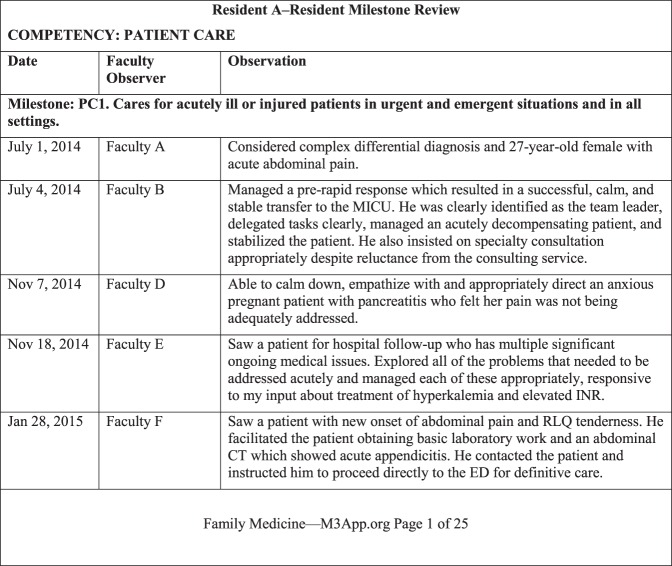

The M3App was developed and alpha-tested by the Department of Family Medicine at the University of North Carolina. It has an administrative interface and a user interface. The administrative interface allows programs to establish user accounts, store data, and generate reports. The user interface is accessible to faculty via any Internet-connected device. After logging in, a faculty member selects a resident, enters an observation, and is prompted to select and assess 1 or more competencies and appropriate subcompetencies (figure). Residency administrators generate monthly reports for each resident, organized by milestone subcompetency, listing all faculty observations (figure). These are sent monthly to each resident and are made available to the CCC for the twice-yearly resident reviews.
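The report-compilation step described above can be illustrated with a minimal sketch. It is not the M3App's actual implementation, which the article does not describe; the `Observation` type, the `compile_report` function, and the subcompetency labels such as "PC-1" are hypothetical names chosen for illustration only. The sketch assumes each observation is linked to 1 or more subcompetencies and therefore appears under each of them in the resident's report.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Observation:
    """One narrative observation entered by an observer (hypothetical schema)."""
    resident: str
    observer: str
    note: str
    subcompetencies: Tuple[str, ...]  # 1 or more labels, e.g. ("PC-1", "MK-2")


def compile_report(observations: List[Observation],
                   resident: str) -> Dict[str, List[Observation]]:
    """Group one resident's observations by subcompetency, in entry order."""
    grouped: Dict[str, List[Observation]] = defaultdict(list)
    for obs in observations:
        if obs.resident != resident:
            continue
        # An observation linked to several subcompetencies is listed under each.
        for label in obs.subcompetencies:
            grouped[label].append(obs)
    # Sort the subcompetency labels so the report has a stable layout.
    return dict(sorted(grouped.items()))


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    sample = [
        Observation("Resident 1", "Faculty A", "Example note 1", ("PC-1", "MK-2")),
        Observation("Resident 2", "Faculty B", "Example note 2", ("PC-1",)),
        Observation("Resident 1", "Faculty C", "Example note 3", ("PC-1",)),
    ]
    for label, items in compile_report(sample, "Resident 1").items():
        print(label, [o.note for o in items])
```

Grouping by subcompetency rather than by date or observer mirrors the report layout described in the text, in which each resident's report lists all faculty observations under the relevant milestone subcompetency.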

Figure.

Sample of the M3App Resident Report

Abbreviations: M3App, Mobile Medical Milestones Application; PC, patient care; MICU, medical intensive care unit; INR, international normalized ratio; RLQ, right lower quadrant; CT, computed tomography; ED, emergency department.

Setting and Participants

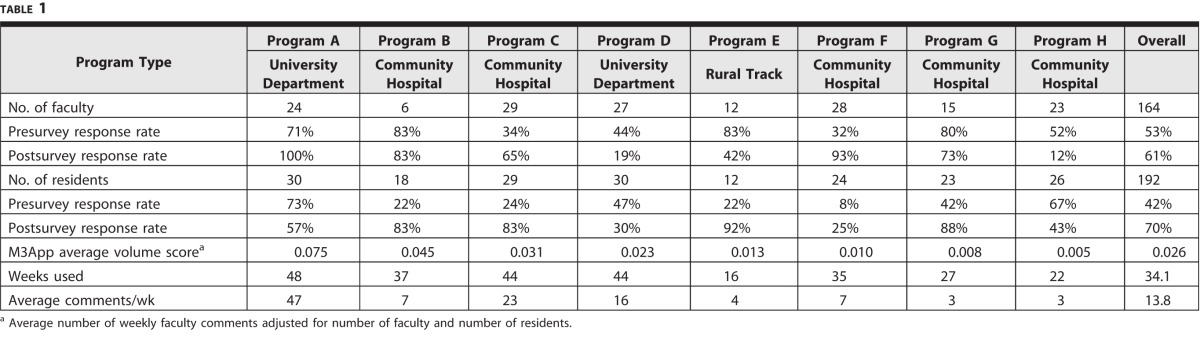

The M3App pilot ran from June 2014 to May 2015, and included residents and faculty from 8 family medicine residency programs across North Carolina (table 1).

Table 1.

Program Type, Survey Response Rates, and Mobile Medical Milestones Application (M3App) Volume Scores (In Descending Order of App Volume)

The University of North Carolina at Chapel Hill Institutional Review Board approved our research protocol.

Data and Analysis

Prior to implementation, we surveyed all residency faculty and trainees in each program via e-mail. The faculty survey included items about teaching settings and learner groups, and concluded with an open-ended item for comments on the program's existing evaluation process. Residents were asked to rate their satisfaction with the written descriptive feedback they currently received, and to describe in an open-ended item the changes they would like to see in that feedback.

At the conclusion of the pilot in May 2015, 1 of the authors (C.L.C.) conducted 20-minute, semistructured, key informant telephone interviews with program directors. Using a standard interview guide developed by the core project team, the interviews addressed 4 areas: (1) program director's personal use of the M3App and its functionality; (2) resident evaluation and feedback processes prior to implementation of the M3App; (3) impressions of use, acceptability, and utility of the M3App and its use during CCC meetings; and (4) future directions for the tool. As key informants, program directors represented a range of perspectives in a single interview. Concurrently, faculty and residents were resurveyed. Items from the preimplementation survey were repeated for comparison, and faculty were asked to comment on desired changes to the M3App.

Program director interviews were transcribed for analysis. Under the supervision of 1 of the authors (C.L.C.), a graduate student with prior experience in qualitative methods developed a coding scheme, using the codebook method described by Crabtree and Miller [8]. Coding of each interview transcript was reviewed for face validity and consistency. Coded transcripts were distributed among the core project team (C.P.P., A.R., C.L.C.) who met to develop themes. Responses to the open-ended survey items also were coded by program, and then sorted into the themes developed from the program director interviews. The principal investigator (C.P.P.) kept notes throughout the pilot of conversations with program directors as well as records of e-mail exchanges about the challenges and successes of the implementation process. These notes and records were not formally analyzed, but they provided additional context for triangulation of survey and interview data.

In the final step, the weekly number of faculty comments entered in the M3App by each program during 50 weeks of the project pilot phase was obtained from the M3App database. Weekly program numbers were divided by the number of faculty, then by the number of residents to produce an adjusted weekly volume score for each program.
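The adjustment described above amounts to simple arithmetic, sketched below under stated assumptions. The function names and the sample numbers are hypothetical and are not taken from the M3App database. The article does not say how the weekly values were combined into a single program score, so averaging across weeks is an assumption made here for illustration.

```python
from typing import List


def adjusted_weekly_volume(weekly_comments: List[int],
                           n_faculty: int,
                           n_residents: int) -> List[float]:
    """Divide each weekly comment count by the number of faculty, then by the number of residents."""
    if n_faculty <= 0 or n_residents <= 0:
        raise ValueError("faculty and resident counts must be positive")
    return [count / n_faculty / n_residents for count in weekly_comments]


def mean_volume_score(weekly_scores: List[float]) -> float:
    """Average the adjusted weekly values (assumed aggregation; not stated in the article)."""
    if not weekly_scores:
        raise ValueError("at least one week of data is required")
    return sum(weekly_scores) / len(weekly_scores)


if __name__ == "__main__":
    # Invented counts for a hypothetical program with 6 faculty and 3 residents.
    weekly = adjusted_weekly_volume([12, 18, 6], n_faculty=6, n_residents=3)
    print(weekly)
    print(mean_volume_score(weekly))
```

Dividing by both faculty and resident counts makes volumes comparable across programs of different sizes: a small program entering a handful of comments per week can score as high as a large program entering many more.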

Results

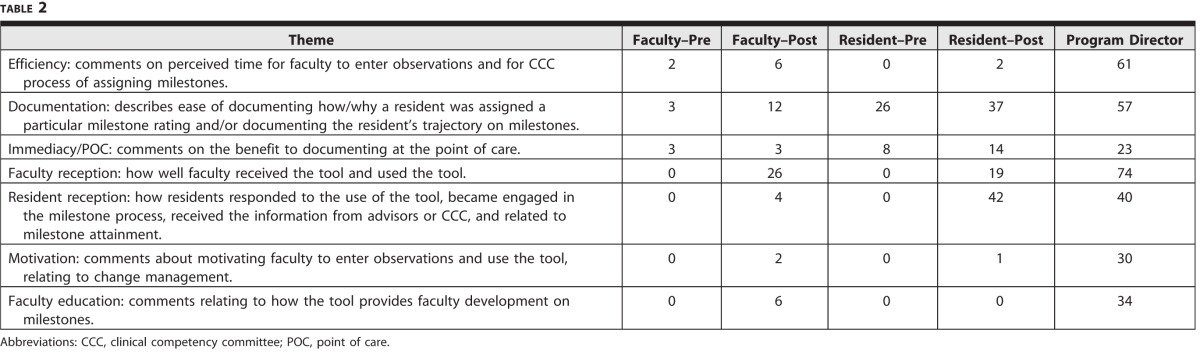

Program type, survey response rates, and weekly app volume for the 8 participating programs are shown in table 1. Faculty survey items covering teaching settings and learner groups were used to identify respondents who did not teach residents, and their responses were not included in the analysis. Table 2 lists themes identified in open-ended survey items for faculty and residents, along with frequency of occurrence. Seven themes that emerged from the program director interviews provide the framework for presenting the results.

Table 2.

Themes and Frequency of Occurrence From Program Director Interviews and Survey Open-Ended Comments

Efficiency of Documentation and Compilation of Data

Efficiency, together with improved CCC discussions, was 1 key theme. One program cited a substantial decrease in the time required for faculty assessment of milestone achievement (an average of 7 minutes per resident versus 30 minutes previously), noting at the same time that the additional face-to-face faculty time could be focused on areas for improvement and professional development for residents. Another program director stated that having the data from the M3App decreased stress associated with CCC meetings. Residents also reported improvement in the efficiency of documentation of the milestones. One resident commented that “the M3App . . . [is] a timely, simple way to share feedback with us.”

We found 2 adoption patterns for the M3App (table 1). Programs A through D used the M3App regularly and intensively. Three of the lower-volume programs (F–H) used the M3App consistently, but to a lesser extent. A program director explained, “We have . . . used the CCC to identify areas we were not assessing well by other means and are trying to focus on those with the app.” Others encouraged faculty to reference the M3App for specific comments. “We shortened or eliminated some of the other evaluations [we] ask faculty to do as a trade [off] for doing more M3App observations.” We refer to those programs as “fill-in-the-gaps” adopters. Program E was unable to sustain regular use of the tool, in large part because it was required to implement a new electronic evaluation system concurrent with the M3App rollout, and could not fully address both changes. Faculty at the other programs commented on the inability to integrate the M3App with the program's existing required electronic evaluation system, but reported that they were able to use both systems.

Quality and Specificity of Milestone Documentation

In the 7 programs that consistently used the M3App, program directors indicated that the app helped improve the quality of resident evaluations. One program director stated, “We had very little written descriptive comments on our routine evaluations and now we're able to give really meaningful, very specific observations to our residents and they are grateful for it.” The M3App also improved transparency for assignment of a certain level on the milestones. A program director commented, “At our first CCC meeting back in the fall, the M3App was the only thing we had to really point to as evidence to say why the CCC made a decision for assigning the various milestones.” Residents also noted a change in the feedback that they received after implementation of the app. In 1 postsurvey a resident noted, “Overall [it] has been a great improvement. In general, just the [more] specific the feedback can be the more helpful it is.”

Another aspect of feedback quality common in residents' comments concerned identification of needs for improvement. One-third of resident comments on the preimplementation survey indicated a desire for more specific, constructive feedback. As 1 resident put it, “specific examples on ways to improve, not just ‘doing well’ or ‘keep reading.’” This theme recurred in more than one-fourth of postimplementation comments. Despite an overall improvement in quality and quantity of feedback, residents continued to voice a desire for added feedback, especially critical feedback.

Immediacy/Point of Care

An important objective of the M3App is to facilitate faculty evaluation of residents in real time, rather than at the end of a rotation. In presurvey open-ended items, nearly one-fourth of residents' comments reflected a desire for more frequent feedback. As 1 resident wrote, “I would like more frequent feedback that is applicable to situations in real time.” Faculty also appreciated this aspect of the tool. One commented on the postimplementation survey: “. . . when someone is on call 1 night, or they are doing something in clinic, or you see them doing an outside activity, or volunteering, there's all kind[s] of different things that we don't formally evaluate, so it's a good opportunity to [capture] those types of things.”

Resident Receptivity to M3App

Programs providing M3App feedback directly to residents noticed an increase in residents asking for M3App feedback, reinforcing the action. In interviews, 4 program directors commented that faculty were more comfortable entering constructive observations when residents were aware and engaged in the M3App process. For example, residents at 1 program developed the slogan “M3Me!” to remind faculty to use the app. One resident appreciatively captured the new energy of faculty for entering observations in writing: “I just wanted you to know this is engaging faculty in my patient care in a way that they hadn't before.”

Motivation of Learners and Faculty

We found that faculty and resident enthusiasm was associated with an increased usage volume of the M3App, and that the 3 programs with the earliest implementation and highest volume showed the most enthusiasm for the app. Directors of the 3 highest-use programs cited the importance of a faculty champion in achieving and maintaining consistent use of the app. Directors of programs with lower app use likewise cited the value of a faculty champion. As 1 noted, “You have to have a champion, or in our case, 2 or 3. If you don't, there's just no way it's going to work.” Faculty found motivation in resident engagement as well. One program director observed: “The faculty like the easy accessibility, and now I have some residents who actually will ask me to document on M3App.” Another faculty member described how preparing an advisee's assessment helped clarify the value of the M3App: “Giving residents feedback through the app had been an abstract idea—just an electronic form of what I think I do in person. . . . [T]hat meant my internal incentive was low to use the app. While assessing my advisee within each milestone, it became crystal clear how vital specific comments by faculty were within each milestone. I really see that value of the app now, and it really is easy to use.”

Faculty Education on Use of Milestones

Several program directors commented that the more faculty used the M3App, the more specific their observations and their selection of milestones became. Program directors also indicated that the app was a useful tool in familiarizing and educating faculty about the milestones; the ability to start with an observation, then select the corresponding milestone by toggling between the observation and the milestone, simplified the process. As 1 program director said, “I can definitely see that those who used the M3App more frequently have a higher comfort level with the milestones and evaluating the residents on that.”

Discussion

Our results offer important insights from resident, faculty, and organizational perspectives. Residents emphasized the importance of feedback that is frequent, as close as possible to real time, specific, and critical. They appreciated the M3App's ability to improve each of these areas, and to provide a platform that facilitates continued efforts to help faculty constructively identify residents' strengths and improvement needs. It is perhaps for these reasons that residents valued written feedback as much as feedback given in person. Residents' continuing desire for critical feedback, and faculty members' apparent reluctance to provide it, reflects a common pattern [9] and an opportunity for faculty development. Faculty showed an appreciation for the same aspects of feedback as residents, were also appreciative of a tool that allowed them to provide it, and found the M3App helpful in becoming familiar with milestones. Additionally, many programs found that the data collected in the M3App allowed them to shorten the time to review each resident in the CCC.

From an organizational standpoint, our results emphasize much of what is already known about implementing change. Within Kotter's framework [7], the new accreditation system and the milestones create both urgency (Step 1) and a vision for change (Step 3). Other factors identified by program directors include the importance of local champions who, as change leaders, help form powerful coalitions (Step 2) and identify and help remove obstacles (Step 5); clearly communicated goals (Step 4: communicate the vision for change); strategic timing (Step 6: create short-term wins; Step 7: build on change); and the importance of institutional buy-in (Step 8: anchor change in the institution's culture).

The feedback collected from the pilot implementation resulted in improvements to the M3App. It has been enhanced to automatically generate monthly reports to residents and their advisers (relieving program coordinators of the task), as well as reminders to residents prior to CCC meetings, encouraging them to ask faculty for feedback. Overall, the tool was well received and its use is being expanded to additional specialties.

Limitations of our study include the fact that data are limited to learner and faculty perceptions, and do not include data on residents' attainment of the milestones. It is possible that the results may be biased by more positive responses from higher-use programs, as well as a general reluctance to give negative feedback. Faculty who did not use the app, or who had a negative view of it, may not have completed the surveys or taken the time to provide constructive comments. Although faculty explicitly credited the M3App with increased comfort in using the milestones, we have no information on other evaluation tools used by participating programs, and the absence of a control group makes it difficult to separate the effect of the M3App from a possible general increase in faculty familiarity with the milestones.

Further study is needed to address validity, quality, and frequency of M3App faculty observations. Additionally, the value of the app for use in real-time 360-degree evaluations (peer, nursing, patient, care manager, self) should be explored.

Conclusion

The M3App provides, in a single evaluation tool, a narrative assessment that allows observers to record observations of resident behavior in a variety of settings and to link them to specific milestone subcompetencies. The app compiles narrative observations, organized by milestone and subcompetency, for feedback to residents and for use by CCCs. Faculty and residents credited the M3App with improving both the quality and efficiency of resident feedback, with residents appreciating the frequency, proximity, and specificity of feedback, and program directors reporting that the app improved familiarity with the milestones. Common challenges to implementation included lack of a physician champion and competing program priorities.

References

- 1. Hauer KE, Chesluk B, Iobst W, et al. Reviewing residents' competence: a qualitative study of the role of clinical competency committees in performance assessment. Acad Med. 2015;90(8):1084–1092.

- 2. Franko O, Tirrell TF. Smartphone app use among medical providers in ACGME training programs. J Med Syst. 2012;36(5):3135–3139.

- 3. Yarris LM, Jones D, Kornegay JG, et al. The Milestones passport: a learner-centered application of the Milestone framework to prompt real-time feedback in the emergency department. J Grad Med Educ. 2014;6(3):555–560.

- 4. Cooney CM, Cooney DS, Bello RJ, et al. Comprehensive observations of resident evolution: a novel method for assessing procedure-based residency training. Plast Reconstr Surg. 2016;137(2):673–678.

- 5. Ross S, Poth CN, Donoff M, et al. Competency-based achievement system: using formative feedback to teach and assess family medicine residents' skills. Can Fam Physician. 2011;57(9):e323–e330.

- 6. Steinert Y, Cruess RL, Cruess SR, et al. Faculty development as an instrument of change: a case study on teaching professionalism. Acad Med. 2007;82(11):1057–1064.

- 7. Kotter JP. Leading Change. Boston, MA: Harvard Business School Press; 1996.

- 8. Crabtree BF, Miller WL. Using codes and code manuals: a template organizing style of interpretation. In: Crabtree BF, Miller WL, eds. Doing Qualitative Research. 2nd ed. Thousand Oaks, CA: Sage; 1999:163–178.

- 9. Zenger J, Folkman J. Your employees want the negative feedback you hate to give. Harvard Business Review. January 15, 2014. https://hbr.org/2014/01/your-employees-want-the-negative-feedback-you-hate-to-give. Accessed April 22, 2016.