ABSTRACT

Background

There is limited information on the impact of widespread adoption of the electronic health record (EHR) on graduate medical education (GME).

Objective

To identify areas of consensus among education experts on where use of the EHR affects GME, with the goal of developing strategies and tools to enhance GME teaching and learning in the EHR environment.

Methods

Information was solicited in 3 steps from experienced US physician educators who use the EPIC EHR: 2 rounds of online surveys using the Delphi technique, followed by telephone interviews. The survey contained 3 stem questions and 52 items with Likert-scale responses. Consensus was defined by predetermined cutoffs. A second survey reassessed items for which consensus was not initially achieved. Common themes for improving GME in settings with an EHR were compiled from the telephone interviews.

Results

The panel included 19 physicians in 15 states in Round 1, 12 in Round 2, and 10 for the interviews. Ten items were rated important for teaching and learning; balancing focus on EHR documentation with patient engagement was the only item to achieve 100% consensus. Other items achieving consensus included adequate learning time, balancing EHR data with verbal history and physical examination, communicating clinical thought processes, hands-on EHR practice, minimizing data repetition, and development of shortcuts and templates. Teaching strategies incorporating both online software and face-to-face solutions were identified during the interviews.

Conclusions

New strategies are needed for effective teaching and learning of residents and fellows, capitalizing on the potential of the EHR, while minimizing any unintended negative impact on medical education.

What was known and gap

The impact of the electronic health record (EHR) on graduate medical education is not well understood.

What is new

A Delphi study of experts explored teaching strategies and aspects of the EHR that enhance education or create challenges for it.

Limitations

An abbreviated Delphi approach and the use of a single EHR system may reduce generalizability.

Bottom line

Teaching in an EHR setting calls for new strategies that capitalize on advantages of the technology while minimizing barriers and drawbacks.

Introduction

The use of the electronic health record (EHR) has risen dramatically in the last decade. Undergraduate and graduate medical education (GME) learners, along with their teachers, are increasingly required to use the EHR for patient care, a shift predicated on evidence that EHR use has clinical benefits.1 Yet the introduction of the EHR has also brought unintended negative consequences, such as heightened susceptibility to automation bias, lower-quality notes due to copying and pasting, and disruption of the patient-physician relationship.2 Moreover, despite widespread adoption of this technology, its impact on medical education remains understudied.3–6

The limited existing literature suggests that instruction on meaningful use of the EHR is key when an institution first introduces it.2,6,7 The responsibility has fallen on educators to develop and incorporate effective teaching strategies that accommodate both navigation of a complicated, at times onerous, EHR and the simultaneous provision of patient care and GME. Best practices and strategies for teaching medical trainees in the EHR setting have not been identified or widely shared with the medical education community.

We sought to investigate best practices and teaching strategies for education in the EHR setting. To this end, we used the Delphi technique to query a national panel of medical education experts and identify consensus on which techniques and tools are most effective for teaching while using the EHR. The Delphi technique is used to obtain consensus from a group of experts on topics for which there is little or no definitive evidence,8 which makes it well suited as an initial step toward improving teaching and learning while using the EHR.

Methods

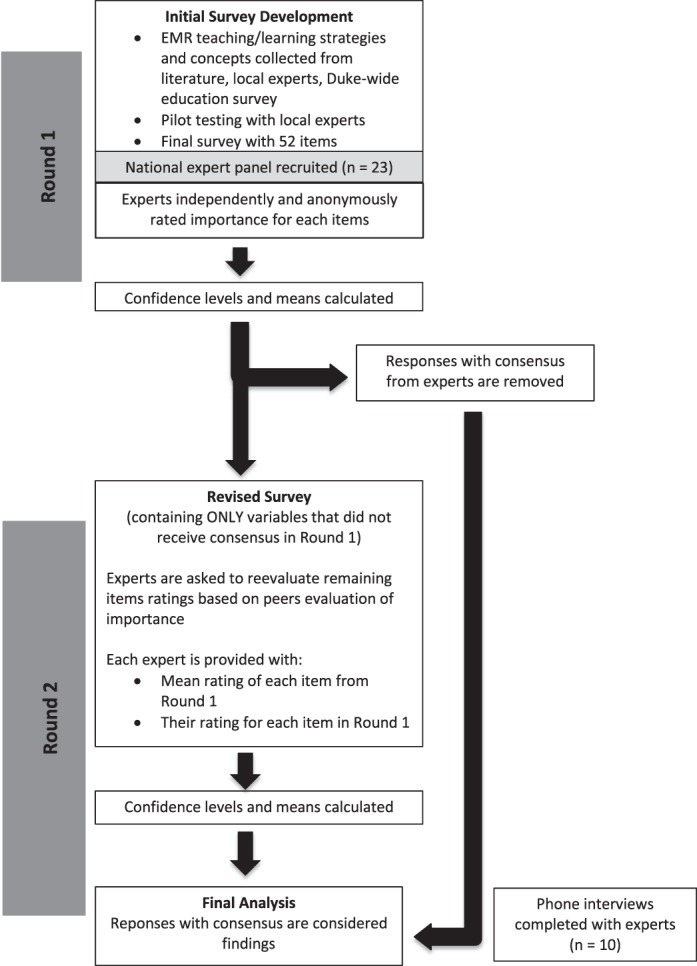

We conducted a 3-part survey of GME experts. It consisted of 2 rounds of online surveys of the expert panel (using the Delphi technique), followed by telephone interviews of experts by the study team (figure).

Figure.

Summary of Study Design

Note: A Delphi survey design was used for the study. A national panel of graduate medical education (GME) experts was recruited. Using an online survey, the panel rated the importance of aspects of GME that involve the electronic health record. Responses were analyzed for consensus among the panel. A revised online survey containing the items that did not reach consensus was then distributed to the same panel; it included the mean score of the whole panel, giving panelists the opportunity to change their rating in light of the group's score. After completion of the second round, panelists took part in individual phone interviews with the study team to share their strategies.

Creation of the Survey

The survey was developed by identifying aspects of clinical care, documentation, and GME from several sources: a literature review;2–5,9,10 several small group discussions with education experts at 1 institution (8 individuals, including residency program directors and GME administrators) to identify items based on the ACGME core competencies; and an open-ended survey of the faculty at our institution. Next, the authors (A.R.A., M.R., J.A.R.) developed question stems, 4- and 5-point scales, and statement wording in consultation with a survey methodology expert at the Duke University Social Science Research Institute. The survey was pilot tested for clarity and length on faculty not involved in its development (4 residency program directors and core clinical faculty), and duplicate items and items that lacked clarity were removed.

The Round 1 survey (provided as online supplemental material) included 3 stem questions and 52 individual items covering aspects of clinical care and resident and fellow teaching and learning in settings using the EPIC EHR (EPIC, Verona, WI). Twenty-nine items used a 4-level scale asking about the importance of the item to trainees' teaching and learning, and 23 items used a 5-level scale asking whether the item would enhance or represent a concern for the teaching and learning of residents and fellows in an EHR setting. Expert consensus on an item was defined by predetermined cutoffs on the 95% confidence interval (CI) and standard deviation (SD) of its ratings. We considered an item positively relevant if its lower 95% confidence limit was ≥ 3 and its SD < 0.8 (4-point scale), or its lower 95% confidence limit was ≥ 4 and its SD < 1 (5-point scale).11 Items were considered negatively relevant if the upper 95% confidence limit was ≤ 2 (4-point scale) or ≤ 1 (5-point scale).
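The consensus rule above can be expressed as a small decision function. The sketch below is a minimal illustration, not the authors' SAS analysis: it assumes ratings are coded as numbers on each scale and uses the sample SD. Because the text does not say how the 95% CI was computed, it assumes a normal-approximation interval (mean ± 1.96·SD/√n); a t-based interval would be slightly wider for a panel of this size. The names `CUTOFFS`, `summarize`, and `classify` are hypothetical.

```python
import math
import statistics
from statistics import NormalDist
from typing import Sequence, Tuple

# Predetermined cutoffs as described in the Methods text (hypothetical structure).
# pos_lower: minimum lower 95% confidence limit for positive relevance
# pos_sd:    SD must be strictly below this value for positive relevance
# neg_upper: maximum upper 95% confidence limit for negative relevance
CUTOFFS = {
    4: {"pos_lower": 3.0, "pos_sd": 0.8, "neg_upper": 2.0},
    5: {"pos_lower": 4.0, "pos_sd": 1.0, "neg_upper": 1.0},
}


def summarize(ratings: Sequence[float]) -> Tuple[int, float, float, Tuple[float, float]]:
    """Return n, mean, sample SD, and a 95% CI for the mean.

    Assumption: normal approximation (z ~ 1.96); the source does not state
    which interval was used.
    """
    n = len(ratings)
    mean = statistics.mean(ratings)
    sd = statistics.stdev(ratings)  # sample SD, divides by n - 1
    z = NormalDist().inv_cdf(0.975)
    half_width = z * sd / math.sqrt(n)
    return n, mean, sd, (mean - half_width, mean + half_width)


def classify(ratings: Sequence[float], scale: int) -> str:
    """Label one item 'positive', 'negative', or 'no consensus'."""
    cut = CUTOFFS[scale]
    _, _, sd, (lower, upper) = summarize(ratings)
    if lower >= cut["pos_lower"] and sd < cut["pos_sd"]:
        return "positive"
    if upper <= cut["neg_upper"]:  # the text states no SD condition for this case
        return "negative"
    return "no consensus"


if __name__ == "__main__":
    print(classify([4] * 19, scale=4))          # positive (unanimous top rating)
    print(classify([1, 2, 3, 4] * 5, scale=4))  # no consensus (ratings spread out)
```

Requiring both a high lower confidence limit and a small SD means an item can have a high mean yet still fail the positive criterion if a few panelists rated it low, which is one reason most items in a small panel may not reach consensus.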

The Round 2 survey consisted of items from the Round 1 survey where there was not clear consensus within the expert panel. For these items, panelists were shown their individual prior response and the mean score of the panel, and given the option of changing their score or leaving it unchanged.

Survey Administration

The survey was distributed by the Duke University Office of Clinical Research via e-mail using REDCap12 to a national panel of physician educators with experience using EPIC software in GME settings. Panelists were identified through consultation with EPIC representatives, networking with colleagues at academic institutions, review of the literature, and referral by other panelists. Panelists included faculty from 15 states. The survey invitation stated that the expert panel would be acknowledged in a publication of the work and that panelist responses would be kept confidential. Panelists were given the option of providing their contact information for a follow-up phone interview with a study team member. A brief telephone interview (approximately 10 to 15 minutes) based on an interview guide (provided as online supplemental material) was conducted after completion of both survey rounds. Three authors (A.R.A., M.R., J.A.R.) performed content analysis of the interview notes to identify patterns and themes in the aggregate data. Data were coded and sorted to identify themes regarding effective and ineffective teaching strategies.

The study was determined to be exempt by the Duke University Institutional Review Board.

Statistical Analysis

Data from both survey rounds were analyzed using SAS software (SAS Institute Inc, Cary, NC). Ratings of individual items were summarized with descriptive statistics (n, mean, SD, 95% CI). For items left unanswered in Round 2, the item's Round 1 mean was used in the analysis.
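One reading of the missing-data rule above is that an unanswered Round 2 rating is replaced by that item's Round 1 panel mean before the descriptive statistics are computed. The sketch below illustrates that reading in Python; it is not the authors' SAS program, and the interpretation itself is an assumption. `fill_round2`, `describe`, and the item name `item_A` are hypothetical.

```python
import statistics
from typing import Dict, List, Optional


def fill_round2(
    round1: Dict[str, List[float]],
    round2: Dict[str, List[Optional[float]]],
) -> Dict[str, List[float]]:
    """Replace unanswered Round 2 ratings (None) with that item's Round 1 mean."""
    filled = {}
    for item, responses in round2.items():
        r1_mean = statistics.mean(round1[item])
        filled[item] = [r1_mean if r is None else r for r in responses]
    return filled


def describe(ratings: List[float]) -> Dict[str, float]:
    """Per-item descriptive statistics: n, mean, and sample SD."""
    return {
        "n": len(ratings),
        "mean": statistics.mean(ratings),
        "sd": statistics.stdev(ratings),
    }


if __name__ == "__main__":
    round1 = {"item_A": [3, 4, 4, 3]}  # hypothetical Round 1 ratings, mean = 3.5
    round2 = {"item_A": [4, None, 3]}  # one panelist skipped item_A in Round 2
    filled = fill_round2(round1, round2)
    print(filled["item_A"])            # [4, 3.5, 3]
    print(describe(filled["item_A"]))
```

Mean substitution keeps every Round 2 respondent in the item's n, but it pulls the item mean toward its Round 1 value and tends to shrink the SD, which can make an SD-based consensus cutoff easier to meet.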

Results

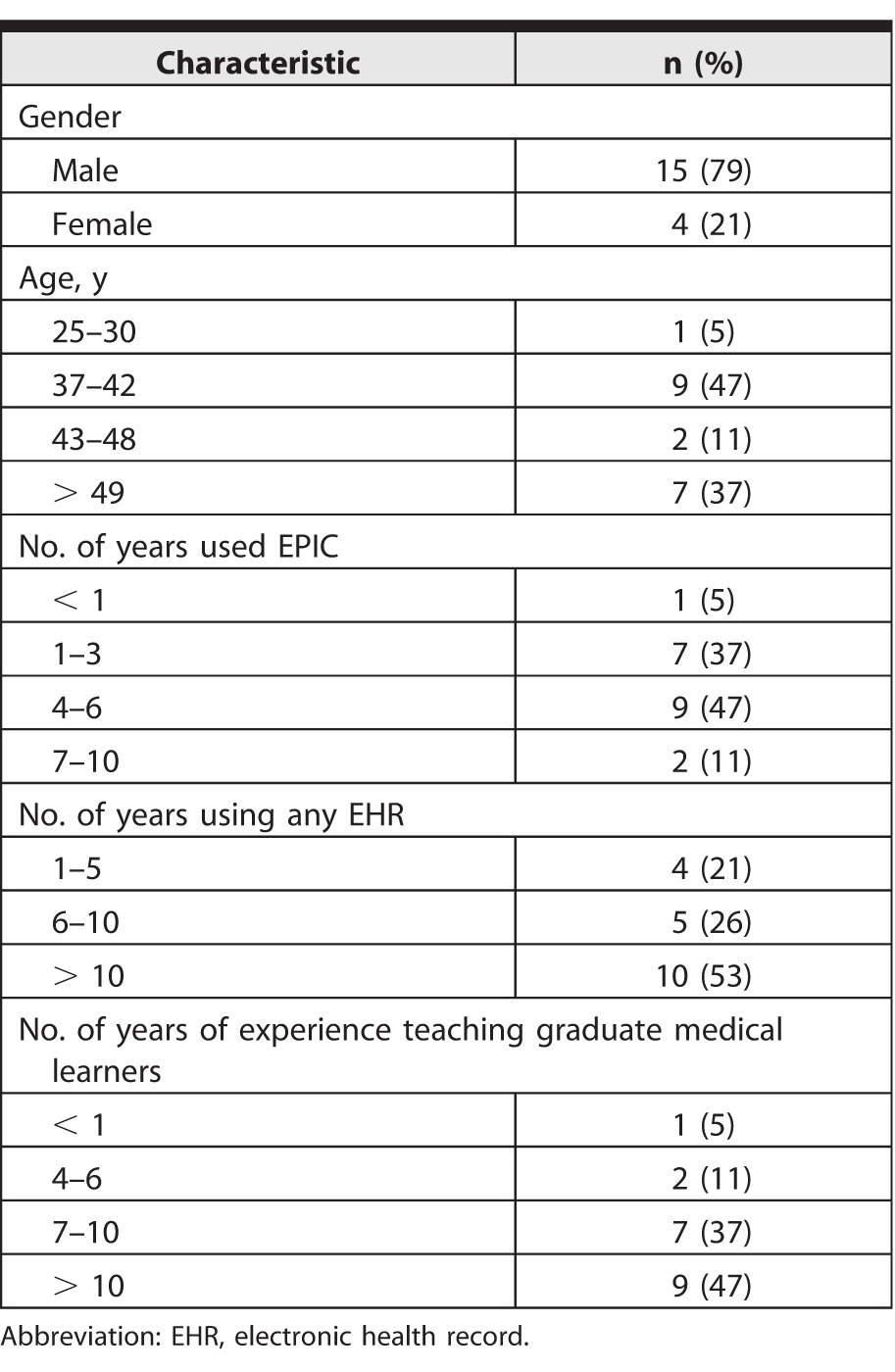

Nineteen physicians participated in Round 1 of the Delphi survey (table 1), 12 participated in Round 2, and 10 participated in the telephone interview about best practices.

Table 1.

Participant Demographics (n = 19)

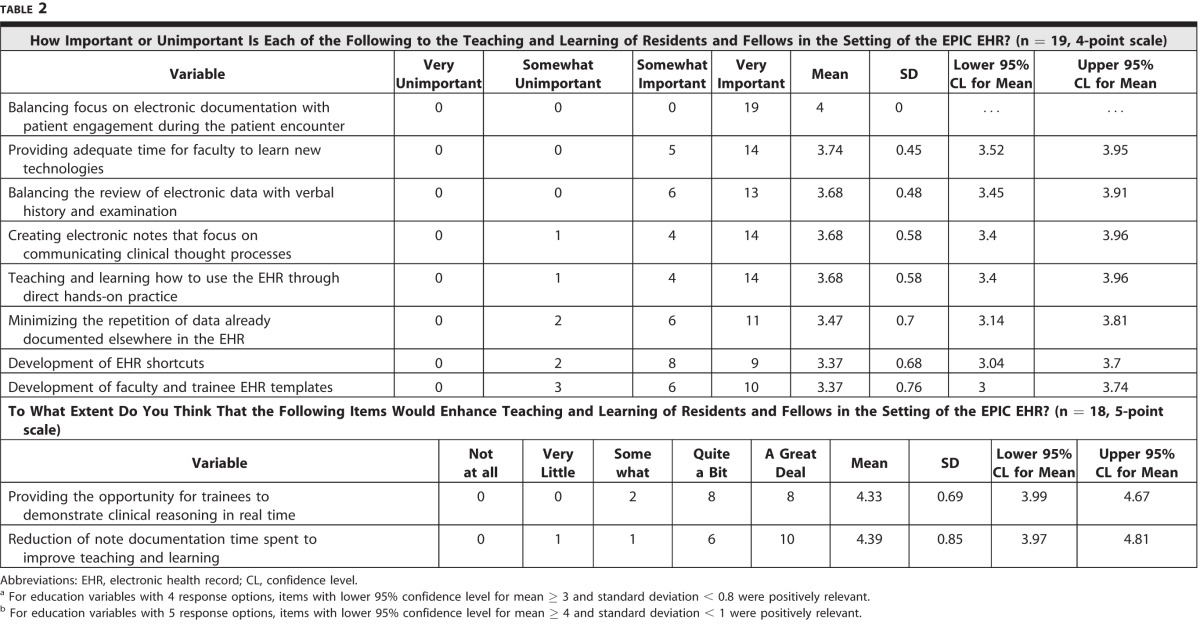

Delphi Survey Rounds

Ten of the 52 education items were identified in Round 1 as important to the teaching and learning of trainees in settings with an EHR, or as items that would significantly enhance the teaching and learning of trainees (table 2). “Balancing focus on electronic documentation with patient engagement during the patient encounter” had the highest consensus and was the only item that the panel unanimously rated “very important.” Round 1 produced no education items considered unimportant to the teaching and learning of trainees in settings with an EHR, and no items that might detract from it (negatively relevant). The 13 items with the least consensus (the quartile with the highest SDs) were eliminated, as these were the least likely to reach consensus in Round 2 of the survey (provided as online supplemental material). In Round 2, we identified 6 additional education items that did not meet the strict criteria for group consensus but came very close; of these, 5 were rated important and 1 unimportant (provided as online supplemental material).

Table 2.

Delphi Survey Round 1 Education Variables With Consensus

Best Practices Identified by Telephone Conversation

Panelists' responses about their experience teaching with the EHR were qualitatively analyzed and fell into 2 categories: effective strategies and ineffective strategies (box). Among effective strategies, themes related to chart and image review, shared lists, faculty modeling, and use of templates were identified. Ineffective strategies related to photographic images in the EHR, communication through the EHR, use of templates, and instruction on use of the EHR. Notably, panelists commented that time was a barrier to using the EHR. Suggested future EHR upgrades included “search backward” and “track changes” functions, which might enhance the educational aspects of the system.

Box.

Best Practices Identified by Telephone Interviews

Effective Strategies

Chart Review

• Review notes with trainee

• Compare amended note to initial note

• Provide feedback on the trainee's notes, with the ability to edit them

Review imaging with the team/trainee

Create a shared list of interesting cases for the team

Faculty model to trainees how to use electronic health record (EHR)

Templates

• Use common templates

• Use templates as teaching tool for billing

• Leverage the templates, order sets, and smart phrases

Ineffective Strategies

Using photos slowed down the system (institution specific)

Relying on the EHR in place of verbal communication

Using templates for procedure notes (as opposed to step-by-step description each time the procedure is done)

Classroom instruction on use of the EHR is not as effective as just-in-time learning

Discussion

Although most survey items did not achieve consensus, 10 of the 52 reached expert consensus. The panelists unanimously agreed that balancing EHR use with patient interaction was very important to the teaching and learning of residents and fellows in EHR settings, but their opinions differed on other aspects of the EHR in education. Panelists agreed on the importance of using the EHR to assess the learner's clinical reasoning in real time. They also agreed that increasing teaching time with learners was important, as were developing shortcuts, minimizing repetition of data already documented in the EHR, and decreasing documentation time to free up time for teaching.

Telephone interviews with the expert panelists revealed that use of templates was a controversial topic: on the one hand, panelists thought templates helped learners understand the best workup for a particular problem, were useful for teaching, and provided a teaching model for billing. On the other hand, some felt that templates allowed the learner to “skip” the repetition and reinforcement of constructing a note de novo, particularly with regard to learning the steps involved in medical procedures.

There are several limitations to our study. It used an abbreviated Delphi design with 2 rounds, whereas at least 3 rounds are typically conducted. We omitted a third round to minimize further panelist dropout, and because Round 2 added little consensus. Our surveys used 4-point and 5-point scales, whereas a 5-point scale is standard. We chose the 4-point scale, which has no neutral option, to force panelists to choose between a positive and a negative response. Conducting our study with the EPIC EHR may reduce generalizability to other systems and vendors. Finally, it is unclear why some questions lacked consensus when several closely related topics reached it; panelists may have found some questions unclear or ambiguous.

Future research should include developing teaching interventions based on the identified strategies with stakeholders, such as residents, fellows, and clinical faculty, and then testing their efficacy in the education of trainees.

Conclusion

We utilized the Delphi method to explore consensus among a diverse group of GME experts, and identified areas of consensus and shared strategies on EHR use in GME. New teaching and learning strategies are needed to capitalize on the potential of the EHR, while minimizing its possible negative impact on medical education.

Supplementary Material

References

- 1. King J, Patel V, Jamoom EW, et al. Clinical benefits of electronic health record use: national findings. Health Serv Res. 2014;49(1, pt 2):392–404.

- 2. Pageler NM, Friedman CP, Longhurst CA. Refocusing medical education in the EMR era. JAMA. 2013;310(21):2249–2250.

- 3. Schenarts PJ, Schenarts KD. Educational impact of the electronic medical record. J Surg Educ. 2012;69(1):105–112.

- 4. Tierney MJ, Pageler NM, Kahana M, et al. Medical education in the electronic medical record (EMR) era: benefits, challenges, and future directions. Acad Med. 2013;88(6):748–752.

- 5. Peled JU, Sagher O, Morrow JB, et al. Do electronic health records help or hinder medical education? PLoS Med. 2009;6(5):e1000069.

- 6. Keenan CR, Nguyen HH, Srinivasan M. Electronic medical records and their impact on resident and medical student education. Acad Psychiatry. 2006;30(6):522–527.

- 7. Goveia J, Van Stiphout F, Cheung Z, et al. Educational interventions to improve the meaningful use of Electronic Health Records: a review of the literature: BEME Guide No. 29. Med Teach. 2013;35(11):e1551–e1560.

- 8. Thangaratinam S, Redman CWE. The Delphi technique. Obstetrician Gynaecol. 2005;7(2):120–125.

- 9. Ellaway RH, Graves L, Greene PS. Medical education in an electronic health record-mediated world. Med Teach. 2013;35(4):282–286.

- 10. Brickner LM, Clement M, Patton M. Resident supervision and the electronic medical record [author reply in Arch Intern Med. 2008;168(10):1118]. Arch Intern Med. 2008;168(10):1117–1118.

- 11. Lindblad CI, Hanlon JT, Gross CR, et al. Clinically important drug-disease interactions and their prevalence in older adults. Clin Ther. 2006;28(8):1133–1143.

- 12. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381.
