Abstract

Background Despite the recent focus on improving the quality of patient information, there is no rigorous method of assessing quality of written patient information that is applicable to all information types and that prescribes the action that is required following evaluation.

Objective The aims of this project were to develop a practical measure of the presentation quality for all types of written health care information and to provide preliminary validity and reliability of the measure in a paediatric setting.

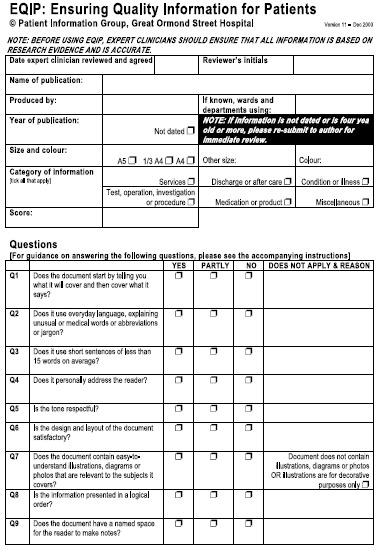

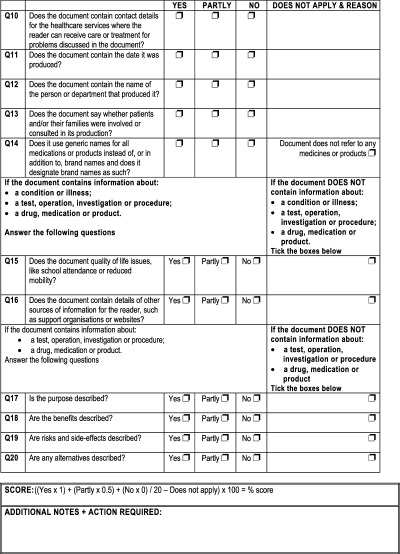

Methods The Ensuring Quality Information for Patients (EQIP) tool was developed through a process of item generation, testing for concurrent validity, inter‐rater reliability and utility. Patient information managers and health care professionals tested EQIP in three annual audits of health care leaflets produced by a children's hospital.

Results The final tool comprised 20 items. Kendall's τ B rank correlation between EQIP and DISCERN was 0.56 (P = 0.001). There was strong agreement between intuitive rating and the EQIP score (Kendall's τ B = 0.78, P = 0.009). Internal consistency using Cronbach's α was 0.80. There was good agreement between pairs of raters (mean κ = 0.60; SD = 0.18) with no differences based on types of leaflets. Audits showed significant improvement in the number of leaflets achieving a higher quality EQIP rating over a 3‐year period.

Conclusions EQIP demonstrated good preliminary validity, reliability and utility when used by patient information managers and healthcare professionals for a wide variety of written health care information. EQIP uniquely identifies actions to be taken as a result of the quality assessment. Use of EQIP improved the quality of written health care information in a children's hospital. Wider evaluation of EQIP with written information for other populations and settings is recommended.

Keywords: pamphlets, patient education, patient information, information management, information services, quality assessment

Research has shown that patients may forget half of what they have been told within 5 min of a medical consultation and retain only 20% of the information conveyed to them. 1 , 2 , 3 However, patient retention of information can be improved by 50% if supplemental written information is provided. 3 , 4 Providing patients and families with written information may reduce anxiety, improve use of preventative or self care measures, increase adherence to therapy, prevent communication problems between health care providers and patients, and lead to more appropriate and effective use of health care services. 5 , 6 , 7 , 8 , 9

The importance of written patient information has been recognized by the Department of Health and the NHS. The NHS Plan 10 states that patient information is an integral part of the patient journey. The Good Practice in Consent implementation guide 11 also provides guidance regarding clinician communication with patients undergoing medical or surgical treatment and emphasizes the importance of written information for patients.

Defining ‘high quality’ written health care information

The Centre for Health Information Quality 12 identifies three key attributes of quality health care information materials: 13 (a) the information should be clearly communicated; (b) be evidence based; and (c) involve patients in the development of the materials. The King's Fund identifies eight criteria for judging the quality of health care information materials 14 , 15 that include evaluation of the content and presentation of the information. Standards 3.1.1 and 3.2.1 from the Clinical Negligence Scheme for Trusts Clinical Risk Management Standards 16 state that ‘appropriate information is provided to patients on the risks and benefits of the proposed treatment or investigation, and the alternatives available, before a signature on a consent form is sought’ and that ‘proposals for treatment should be supported by written information’.

In 2002, the Department of Health published the ‘Toolkit for producing patient information’, 17 which provides detailed guidelines for writing and designing health care information. The toolkit includes guidance in the form of ‘points to consider’ and checklists for presentation of various types of information.

Readability is often mentioned as a measure of the quality of written health care information and several scales have been developed to evaluate the reading level of written information. 18 The lower the reading level, the more likely that the information can be read and understood by a large proportion of the public. However, readability is only one aspect of reading comprehension. 19 Furthermore, a leaflet with a low readability score may not have sufficient depth to meet the information needs of patients with chronic and complex conditions who have become familiar with health terminology. It is also challenging to provide written information for people from diverse ethnic and cultural backgrounds, or for people with learning difficulties.

The criteria described above provide overall standards of best practices for development of written health care information. However, they give little specific guidance on how to achieve the standards in practice. Furthermore, most of the guidance emphasizes the need for a strong evidence base and focuses on the use of written information for patient decision‐making. However, in many instances written patient information is intended to inform patients about health care services and self‐care that may or may not be underpinned by a research evidence base. Nevertheless, written patient information of all types should meet core standards of completeness and clarity of presentation. 17

Measuring the quality of written health care information

Numerous tools have been developed to facilitate more objective measurement of the quality of written health care information. Most of these instruments have a very specific focus, such as the evaluation of written information about medicines, 20 , 21 , 22 and robust validity and reliability testing is generally lacking.

An extensive literature search revealed only three tools developed for evaluation of written health care information other than medicines. The Area Health Educator Center tool 23 was designed for health professionals to evaluate the appropriateness of information for unskilled readers, focusing on organization, writing style, appearance and appeal of the information. However, no details are available about the methods used to rate the information or about the psychometric properties of the tool.

Gibson et al. 24 developed a pair of assessment tools for health professionals and patients to evaluate the appearance, content and usefulness of a large sample of family practice patient education materials. The tools showed good reliability; however, only a moderately low correlation was found between professional and patient assessments of usefulness, and no correlation was found between their assessments of appearance and content.

DISCERN was developed for health professionals and consumers to evaluate the quality of written information specifically about treatment choices. 25 , 26 , 27 It does not evaluate readability or design aspects of the written materials. Adequate to good inter‐rater reliability of DISCERN was found in several studies of written health care information about treatment choices: information produced by patient self‐help groups, 25 information about prostate cancer, 28 and information provided by general family practitioners. 29 Although the authors suggest that it can be used to evaluate other types of health care information, 26 DISCERN does not contain questions pertinent to other important topics such as diagnostic tests, health care services or discharge from hospital. Furthermore, no published literature was found on the use of DISCERN to evaluate written health care information for parents or caretakers of children.

From our discussions with patient information managers and health care professionals, the need emerged for a more comprehensive and practical tool to evaluate the large volume of written health care information produced within the health service. The tool also needed to help the user decide how urgently written information should be revised, in order to prioritize limited resources and minimize costs. Therefore, the aims of this project were to develop a practical measure of the presentation quality for all types of written health care information and to provide preliminary validity and reliability of the measure in a paediatric setting.

Methods

Design

The project was conducted in three phases. The first stage involved operationally defining presentation quality for written health care information from the literature and development of the draft tool by two patient information experts, and initial usability testing. In the second phase, the tool was pilot‐tested for concurrent and criterion validity. The third phase evaluated the inter‐rater reliability and utility of the tool using large diverse samples of written health care information.

Phase 1

The first stage involved operationally defining presentation quality for written health care information from a comprehensive review of the literature and development of the draft tool (Ensuring Quality Information for Patients, EQIP) by the two patient information experts (BM, a masters‐qualified librarian and patient information manager with a background in information science; and HB, a masters‐qualified nurse with extensive experience of clinical audit). An extensive search of bibliographic databases (AMED, Embase, Medline, CINAHL, PsycINFO and LISA) was carried out using the following search terms: patient education, information services, pamphlets, patient information (Embase), client education, information (PsycINFO) and consumer health information (CINAHL), alongside a hand search of relevant books and grey literature. The search was limited to articles written in English. The review of the literature informed the development of the first draft of the EQIP tool.

A purposive sample of nine individuals evaluated five randomly selected health care information leaflets produced by the children's hospital to establish usability of the draft EQIP tool. The sample included two parents of young patients, five hospital volunteers with varying educational backgrounds, and two members of the hospital clinical staff. The parents and volunteers were used to test whether the tool was comprehensible and usable by lay people; the clinicians tested whether the tool was usable by clinical staff as well as patient information managers.

Phase 2

In the second phase, the revised EQIP tool was pilot‐tested for concurrent and criterion‐related validity to determine whether EQIP was able to distinguish between information of poor and high quality and whether it correlated with other measures of information quality. 30 A randomly selected sample of 85 patient and family information leaflets (33% of the total number published by the children's hospital in 2000) was independently evaluated by two of the investigators using the revised EQIP and DISCERN. DISCERN was the most widely used tool in the UK at the time of this study and is broadly similar in its objectives to the new EQIP tool. 25 To further establish criterion‐related validity, another expert rater was independently asked to give a rating of the quality of the information and the actions required for each of the leaflets.

Phase 3

The third phase evaluated the inter‐rater reliability to ensure that different raters would give consistent scores using the tool. 31 Pairs of raters from a group of five volunteer health care professionals rated each of the 85 leaflets (17 leaflets per pair). Following additional revisions of the items to improve clarity, the inter‐rater reliability of the EQIP tool was tested in the 2001 annual audit of 165 leaflets published by the children's hospital trust by three patient information experts and two volunteer health care professionals.

In the 2002 annual audit of leaflets published by the children's hospital, the final EQIP tool (Table 2) was evaluated for adequacy of the training package (available from the corresponding author), scoring, and utility across different types of health care information. This was performed by eight patient information experts and two volunteer health care professionals. Lastly, 21 leaflets covering the following types of information (health care services, diseases or conditions, discharge and after care, procedures, and medications) were each evaluated by two raters to further assess inter‐rater reliability.

Table 2.

Results of annual audits of information leaflets ¹

| EQIP action | 2000 (n = 60 leaflets) | 2001 (n = 259 leaflets) | 2002 (n = 155 leaflets) |
|---|---|---|---|
| Continue to stock; review in 2–3 years | 14 | 18 | 43 |
| Continue to stock; review in 1–2 years | 24 | 106 | 85 |
| Continue to stock; begin review/revision now and replace within 6 months to 1 year | 18 | 98 | 26 |
| Remove from circulation immediately | 4 | 37 | 1 |

EQIP, Ensuring Quality Information for Patients.

¹ χ² = 69.12, P = 0.001.

Results

Phase 1

The literature review was a comprehensive qualitative review because there was insufficient research literature for formal systematic review and critique. Key themes were extracted from the literature review and tabulated. Based on the review, the authors decided to limit the quality assessment tool to evaluation of the presentation of the written information, specifically completeness, appearance, understandability and usefulness. Twenty criteria that addressed these key concepts were identified from the literature (Table 1). The rationale for this decision was that the assessment of the quality of the research evidence and accuracy of the information could only be performed by a clinical expert within the specific field. Therefore, the accuracy of information had to be a prerequisite of the presentation quality assessment.

Table 1.

Quality criteria for written patient information

| Quality criterion | Rationale | Question in EQIP |
|---|---|---|
| Have clearly stated aims and achieve them | • To aid patient and family in deciding if information is suitable 17 | 1 |
| | • To aid clinician in deciding appropriateness of the material for patient and family 15 | |
| Written using ‘everyday’ language, explaining unusual or medical words or abbreviations or jargon | • To achieve the widest possible range of readership, the reading age should be taken into account seriously 34 and should not exceed a reading age of 12 35 , 36 | 2 |
| Written using short sentences | • To achieve the widest possible range of readership, the reading age should be taken into account seriously 34 and should not exceed a reading age of 12 35 , 36 | 3 |
| | • Shorter sentences (of around 15 words) are easier to understand 17 , 37 | |
| Written so that it personally addresses the reader | • In written information, patients prefer to be addressed personally, for example ‘you’ rather than ‘the patient’ 15 , 17 | 4 |
| | • Active sentences are easier to understand than passive sentences 17 | |
| Written so that the tone is respectful | • Information should not offend the reader, nor discriminate on grounds of race, religion, sex, gender or disability 14 | 5 |
| | • Information should not patronize the reader 17 | |
| Design of information satisfactory | • Complies with local and national design guidelines 14 , 17 , 20 , 35 , 38 | 6 |
| Contains easy‐to‐understand illustrations, diagrams or photos that are relevant to the subject of the information | • A good illustration will simplify or reinforce information 14 , 17 | 7 |
| | • Illustrations can aid information recall 39 | |
| Presented in a logical order | • To make the information easier to read and understand 40 | 8 |
| | • To locate information quickly and easily 17 | |
| Contain a space to make notes | • To reinforce partnership between clinician and patient 15 | 9 |
| | • To remind patient to make notes of questions to ask or instructions to follow 41 | |
| Contain contact details for health care services | • To ensure reader can contact ward or department in case of questions or emergency 17 | 10 |
| Contain the date information was produced | • To enable reader to decide if information is recently produced 17 | 11 |
| | • To enable author to review and update information regularly 17 | |
| Contain name of person or department that produced information | • To indicate whether relevant department produced information 17 | 12 |
| | • To increase credibility 42 | |
| Indicates whether information was produced with assistance from users of service | • Patient information needs may differ from those of clinicians 15 , 43 , 44 | 13 |
| Contains reference to quality of life issues | • To enable self‐care if possible 17 | 14 |
| | • To give realistic expectations 45 | |
| Uses generic names for medications or products, or identifies brand names as such | • To eliminate any possibility of bias towards one company or product 46 | 15 |
| | • To reduce risk of information becoming obsolete when a medication or product is no longer used 17 | |
| Contains details of other sources of information | • To encourage reader to obtain a full understanding of treatment or condition 17 | 16 |
| Describes the purpose | • To comply with CNST Clinical Risk Management Standard 3 (criteria 3.1.1 and 3.2.1) 17 , 47 , 48 | 17 |
| Describes the benefits | • To comply with CNST Clinical Risk Management Standard 3 (criteria 3.1.1 and 3.2.1) 17 , 47 , 48 | 18 |
| Describes risks and side effects | • To comply with CNST Clinical Risk Management Standard 3 (criteria 3.1.1 and 3.2.1) 17 , 47 , 48 | 19 |
| Describes alternatives | • To comply with CNST Clinical Risk Management Standard 3 (criteria 3.1.1 and 3.2.1) 17 , 47 , 48 | 20 |

CNST, Clinical Negligence Scheme for Trusts.

The criteria were then rewritten as questions to be answered ‘yes’ or ‘no’ and a four‐level scoring algorithm was devised. A ‘partly’ option was added at later stages of the validation in response to comments from the usability raters. The scoring formula gave more weight to ‘yes’ answers than to ‘partly’ answers. The denominator of the formula varied with the type of information. For instance, the denominator for a piece of information about a service would be 14, whereas the denominator for a piece of information about an operation would be 20 (Figure 1).
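The scoring idea described here can be sketched in a few lines of Python. This is an illustrative sketch only: the text does not give the actual weights, so the values 1 for ‘yes’, 0.5 for ‘partly’ and 0 for ‘no’ are assumptions, as is the ‘n/a’ label used to drop inapplicable questions from the denominator. The function name `eqip_score` is hypothetical.

```python
from typing import Iterable

# Assumed weights: the text only states that 'yes' counts for more than 'partly'.
WEIGHTS = {"yes": 1.0, "partly": 0.5, "no": 0.0}


def eqip_score(answers: Iterable[str]) -> float:
    """Return a percentage score from 'yes' / 'partly' / 'no' / 'n/a' answers.

    Questions answered 'n/a' are excluded from the denominator, so a leaflet
    with six inapplicable questions is scored out of 14 rather than 20.
    """
    applicable = [a.lower() for a in answers if a.lower() != "n/a"]
    if not applicable:
        raise ValueError("no applicable questions to score")
    total = sum(WEIGHTS[a] for a in applicable)
    return 100.0 * total / len(applicable)


# Hypothetical service leaflet: 14 applicable questions, 6 not applicable.
service_leaflet = ["yes"] * 10 + ["partly"] * 2 + ["no"] * 2 + ["n/a"] * 6
print(round(eqip_score(service_leaflet), 1))
```

Under these assumed weights the hypothetical leaflet scores 11 points out of 14, which is why the denominator matters: the same answers scored out of 20 would look considerably worse.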

Figure 1.

Four action statements were developed to give patient information or service managers guidance on actions to take according to the quality score. These were assigned by quartile. Scores in the upper quartile indicated well‐written high quality presentation and were linked with the action statement: ‘Continue to stock and review or revise within two to three years’. Scores in the second quartile indicated good quality presentation with some minor problems that could be addressed when stocks were depleted and were given the action: ‘Continue to stock and review or revise within one to two years’. Scores in the third quartile indicated more serious problems with the quality of the presentation and were given the action: ‘Continue to stock and begin review or revision immediately so that it is replaced within six months to a year’. Scores in the bottom quartile indicated severe problems with the presentation that warranted its removal and were given the action statement: ‘Remove from circulation immediately’.
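The mapping from score to action statement can be sketched as a simple lookup. The cut points used here (above 75%, above 50%, above 25%) are an assumption about how the quartiles of the percentage scale were bounded; the text does not state where each boundary falls. The function name `eqip_action` is hypothetical.

```python
def eqip_action(score_percent: float) -> str:
    """Map a percentage score to one of the four action statements.

    The boundaries at 75, 50 and 25 are assumed, not taken from the paper.
    """
    if not 0.0 <= score_percent <= 100.0:
        raise ValueError("score must lie between 0 and 100")
    if score_percent > 75:
        return "Continue to stock and review or revise within two to three years"
    if score_percent > 50:
        return "Continue to stock and review or revise within one to two years"
    if score_percent > 25:
        return ("Continue to stock and begin review or revision immediately "
                "so that it is replaced within six months to a year")
    return "Remove from circulation immediately"


print(eqip_action(62.5))
```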

Comments on the initial version of the EQIP tool by the usability evaluators were generally very positive, with the majority understanding the questions and being able to answer them adequately. The main area of concern was the difficulty in evaluating the accuracy and completeness of the information presented in the leaflet by evaluators who are not experts in the field. This was in response to a particular question in the original version of EQIP: ‘Are there any gaps or uncertainties in the information?’ Respondents queried whether they would be able to answer this without having any clinical knowledge. This question was subsequently removed from the tool, as it was inconsistent with the decision to ensure accuracy of information prior to the evaluation of presentation quality. Suggestions by the usability evaluators to improve the layout of the tool were incorporated into the next version of the tool.

Phase 2

In the second phase, Kendall's τ B rank correlation coefficient between EQIP and DISCERN total scores was 0.56 (P = 0.001), demonstrating adequate correlation between the two measures. There was also strong agreement between the expert rater's judgment of quality and actions required and the EQIP score (Kendall's τ B = 0.78, P = 0.009). These findings support the concurrent and criterion‐related validity of EQIP relative to other methods of evaluation.
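For readers unfamiliar with the statistic, the following is a minimal pure-Python sketch of Kendall's τ B, which corrects the rank correlation for tied scores. The scores in the example are invented for illustration and are not the study data; the function name `kendall_tau_b` is hypothetical.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b for two equal-length sequences of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    concordant = discordant = tied_x_only = tied_y_only = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied on both measures: excluded from every count
        if dx == 0:
            tied_x_only += 1
        elif dy == 0:
            tied_y_only += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    pairs = concordant + discordant
    denominator = sqrt((pairs + tied_x_only) * (pairs + tied_y_only))
    if denominator == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return (concordant - discordant) / denominator


# Invented scores for four leaflets on two instruments.
first_tool = [1, 2, 3, 4]
second_tool = [1, 2, 2, 4]
print(round(kendall_tau_b(first_tool, second_tool), 3))
```

A value of 1 means the two instruments rank every pair of leaflets in the same order; ties pull the coefficient slightly below 1 even when no pair is ranked in opposite order.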

Phase 3

The final stage evaluated the inter‐rater reliability and utility of the tool. There was moderate agreement between the pairs of raters (mean κ = 0.545; SD = 0.20). The 2001 annual audit showed internal consistency reliability using Cronbach's α was 0.80. The 2002 annual audit showed there was good agreement between the pairs of raters (mean κ = 0.60; SD = 0.18) and no differences based on type of information.
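The two reliability statistics reported here can be sketched as follows. This assumes that κ refers to Cohen's unweighted kappa for a pair of raters and that Cronbach's α was computed from item-level scores across leaflets; the paper does not spell out either detail. All data in the example are invented, and the function names are hypothetical.

```python
from collections import Counter
from statistics import variance


def cohen_kappa(rater_a, rater_b):
    """Cohen's unweighted kappa for two raters' categorical answers."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("need two non-empty sequences of equal length")
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement from each rater's marginal answer frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


def cronbach_alpha(rows):
    """Cronbach's alpha; `rows` holds one list of item scores per leaflet."""
    k = len(rows[0])
    item_variance = sum(variance(column) for column in zip(*rows))
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance / total_variance)


# Invented answers from two raters to four questions about one leaflet.
print(cohen_kappa(["yes", "yes", "no", "no"], ["yes", "no", "no", "no"]))

# Invented item scores (three items) for four leaflets.
print(cronbach_alpha([[1, 1, 1], [0, 0, 0], [1, 1, 0], [0, 1, 0]]))
```

A mean κ such as the one reported would then be the average of `cohen_kappa` over all rater pairs.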

Table 2 shows the overall change over time in the quality assessment and actions taken based on the successive annual audits of health care information leaflets. There was a significant improvement in the number of leaflets achieving a higher quality rating over the 3‐year period.
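The kind of test behind the Table 2 footnote can be sketched with a Pearson chi-square test of independence on the audit counts. The paper does not state which test variant or software was used, so this is an assumption, and the statistic computed here need not match the footnoted value exactly. The function name `pearson_chi_square` is hypothetical.

```python
def pearson_chi_square(table):
    """Return (statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    column_totals = [sum(column) for column in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * column_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(column_totals) - 1)
    return statistic, dof


# Counts from Table 2: rows are EQIP actions, columns are 2000, 2001, 2002.
audit_counts = [
    [14, 18, 43],   # review in 2-3 years
    [24, 106, 85],  # review in 1-2 years
    [18, 98, 26],   # begin review/revision now
    [4, 37, 1],     # remove from circulation immediately
]
statistic, dof = pearson_chi_square(audit_counts)
print(f"chi-square = {statistic:.2f} on {dof} degrees of freedom")
```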

Discussion

Implementation of current guidance regarding the quality of written health care information in an objective and consistent manner is challenging. Although there are assessment tools to aid this process, EQIP is the first tool that indicates actions to be taken as a result of the assessment. It was developed using a variety of types of health care information, including leaflets about services, conditions, procedures and discharge planning. EQIP demonstrated reasonable reliability and validity over time with large samples of diverse leaflets from one institution. However, further reliability and validity testing on written health care information for other settings is needed. EQIP should also be tested as a method to evaluate the quality of health care information on the web.

The use of EQIP in annual audits of leaflets produced by a children's hospital demonstrated support for its utility in a practice setting. Health care information does not remain static and an ongoing audit programme is essential to ensure that standards of high quality written information are sustained over time.

EQIP does not rigorously assess readability or comprehension of written information. Therefore, other tools such as the FRE 32 and REALM 33 should be used in the initial development of written health care information. Furthermore, reading and comprehension levels of the intended audience should be periodically sampled to ensure that the information is written at the optimal level.
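For orientation, the Flesch Reading Ease (FRE) score mentioned above is conventionally computed from average sentence length and average syllables per word. The constants below are those of the standard published formula, not values specific to this study; higher scores indicate text that is easier to read.

$$
\mathrm{FRE} = 206.835 \;-\; 1.015 \left( \frac{\text{total words}}{\text{total sentences}} \right) \;-\; 84.6 \left( \frac{\text{total syllables}}{\text{total words}} \right)
$$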

EQIP was developed for use by patient information managers and health care professionals and requires at least some knowledge of the topics. However, the perspective of the health care consumer with little or no knowledge of the topic is also important in the quality assessment. The tool developed by Gibson et al. 24 for patients’ review of family practice patient education materials might be more broadly useful for consumers evaluating the quality of written health care information.

In summary, patient information is increasingly becoming a focus of patient‐centred care in the UK, with much advice and guidance being produced by organizations such as the Department of Health and the Centre for Health Information Quality. EQIP was developed to provide a more rigorous method of assessing the presentation quality of patient information that is applicable to all information types and that prescribes the action required following evaluation. EQIP demonstrated utility in one setting and has been incorporated into routine practice. However, wider evaluation of EQIP with written information for other populations and settings is recommended.

Acknowledgements

The authors would like to thank members of the GOSH patient information audit teams and the Patient Information Forum steering group for their assistance in the development of EQIP.

Research at the Institute of Child Health and Great Ormond Street Hospital for Children NHS Trust benefits from R&D funding received from the NHS Executive. The views expressed in this publication are those of the authors and are not necessarily those of the NHS Executive.

References

- 1. Johnson A. Do parents value and use written information? Neonatal, Paediatric and Child Health Nursing, 1999; 2: 3–7.

- 2. Little P, Griffin S, Kelly J, Dickson N, Sadler C. Effect of education leaflets and questions on knowledge of contraception in women taking the combined contraceptive pill: randomised controlled trial. British Medical Journal, 1998; 316: 1948–1952.

- 3. Entwistle VA, Watt IS. Disseminating information about health care effectiveness: a survey of consumer health information services. Quality in Health Care, 1998; 7: 124–129.

- 4. MacFarlane J, Holmes W, Gard P et al. Reducing antibiotic use for acute bronchitis in primary care: blinded, randomised controlled trial of a patient information leaflet. British Medical Journal, 2002; 324: 1–6.

- 5. Audit Commission. What Seems to be the Matter: Communication Between Hospitals and Patients. London: HMSO, 1993.

- 6. Kitching JB. Patient information leaflets – the state of the art. Journal of the Royal Society of Medicine, 1990; 83: 298–300.

- 7. Ley P. Satisfaction, compliance and communication. British Journal of Clinical Psychology, 1982; 21: 241–254.

- 8. Gauld VA. Written advice: compliance and recall. Journal of the Royal College of General Practitioners, 1981; 31: 553–556.

- 9. Patterson C, Teale C. Influence of written information on patients’ knowledge of their diagnosis. Age and Ageing, 1997; 26: 41–42.

- 10. Department of Health. The NHS Plan: A Plan for Investment, A Plan for Reform. London: HMSO, 2000.

- 11. Department of Health. Good Practice in Consent Implementation Guide: Consent to Examination or Treatment. London: HMSO, 2001.

- 12. Centre for Health Information Quality. About CHiQ. http://www.hfht.org/chiq/about_chiq.htm. Accessed on: 9 March 2004.

- 13. Centre for Health Information Quality. Quality Tools for Consumer Health Information. Winchester: Centre for Health Information Quality, 1997.

- 14. Duman M. Producing Patient Information: How to Research, Develop and Produce Effective Information Resources. London: King's Fund, 2003.

- 15. Coulter A, Entwistle VA, Gilbert D. Informing Patients: an Assessment of the Quality of Patient Information Materials. London: King's Fund, 1998.

- 16. NHS Litigation Authority. CNST Clinical Risk Management Standards. London: NHS Litigation Authority, 2002.

- 17. Department of Health. Toolkit for Producing Patient Information. London: HMSO, 2002.

- 18. Spadero DC. Assessing readability of patient information materials. Pediatric Nursing, 1983; 9: 274–278.

- 19. Doak CC, Doak LG, Root JH. Teaching Patients with Low Literacy Skills. Philadelphia: Lippincott, 1996.

- 20. Krass I, Svarstad BL, Bultman D. Using alternative methodologies for evaluating patient medication leaflets. Patient Education and Counseling, 2002; 47: 29–35.

- 21. Health Promotion Wales. Make a splash in your pharmacy. http://www.hpw.wales.gov.uk/tools/splash/home/_E.html. Accessed on: 9 March 2004.

- 22. Horne R, Hankins M, Jenkins R. The Satisfaction with Information about Medicines Scale (SIMS): a new measurement tool for audit and research. Quality and Safety in Health Care, 2001; 10: 135–140.

- 23. Wilson FL. Are patient information materials too difficult to read? Home Healthcare Nurse, 2000; 18: 107–115.

- 24. Gibson PA, Ruby C, Craig MD, Dow MM. A health/patient education database for family practice. Bulletin of the Medical Library Association, 1991; 79: 357–369.

- 25. Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. Journal of Epidemiology and Community Health, 1999; 53: 105–111.

- 26. Charnock D. The DISCERN Handbook. Oxford: Radcliffe Medical Press, 1998.

- 27. Shepperd S, Charnock D, Cook A. A 5 star system for rating the quality of information based on DISCERN. Health Information and Libraries Journal, 2002; 19: 201–220.

- 28. Rees CE, Ford JE, Sheard CE. Evaluating the reliability of DISCERN: a tool for assessing the quality of written information on treatment choices. Patient Education and Counseling, 2002; 47: 273–275.

- 29. Godolphin W, Towle A, McKendry R. Evaluation of the quality of patient information to support shared decision making. Health Expectations, 2001; 4: 235–242.

- 30. Huck SW. Reading Statistics and Research, 4th edn. Boston: Pearson Education, 2004.

- 31. Trochim WM. The Research Methods Knowledge Bases, 2nd edn. http://trochim.human.cornell.edu/kb/index.htm. Accessed on: 9 March 2004.

- 32. Flesch RE. A new readability yardstick. Journal of Applied Psychology, 1948; 32: 221–233.

- 33. Davis TC, Long SW, Jackson RH et al. Rapid estimate of adult literacy in medicine: a shortened screening instrument. Family Medicine, 1993; 25: 391–395.

- 34. Alderson P. As plain as can be. Health Service Journal, 1994; 104: 28–29.

- 35. Albert T, Chadwick S. How readable are practice leaflets? British Medical Journal, 1992; 305: 1266–1267.

- 36. Nicklin J. Improving the quality of written information for patients. Nursing Standard, 2002; 16: 39–44.

- 37. Walsh D, Shaw DG. The design of written information for cardiac patients: a review of the literature. Journal of Clinical Nursing, 2000; 9: 658–667.

- 38. Great Ormond Street Hospital for Children NHS Trust Patient Information Group. How to Produce Information for Patients and Families, 3rd edn. London: Great Ormond Street Hospital for Children NHS Trust, 2003.

- 39. Hussey LC. Strategies for effective patient education material design. Journal of Cardiovascular Nursing, 1997; 11: 37–46.

- 40. Wilson FL, Mood DW, Risk J, Kershaw T. Evaluation of educational materials using Orem's self‐care deficit theory. Nursing Science Quarterly, 2003; 16: 68–76.

- 41. Jones R, Pearson J, McGregor S et al. Does writing a list help cancer patients to ask relevant questions? Patient Education and Counseling, 2002; 47: 369–371.

- 42. Kenny T. A PIL for every ill? Patient information leaflets (PILs): a review of past, present and future use. Family Practice, 1998; 15: 471–479.

- 43. Perkins L. Developing a tool for health professionals involved in producing and evaluating nutrition education leaflets. Journal of Human Nutrition and Dietetics, 2000; 13: 41–49.

- 44. Coulter A. Evidence based patient information. British Medical Journal, 1998; 217: 225–226.

- 45. Morris KC. Psychological distress in carers of head injured individuals: the provision of written information. Brain Injury, 2001; 15: 239–254.

- 46. Meredith P, Emberton M, Wood C. New directions in information for patients. British Medical Journal, 1995; 311: 4–5.

- 47. Holmes‐Rovner M. Patient choice modules for summaries of clinical effectiveness. British Medical Journal, 2001; 322: 664–667.

- 48. Stevenson FA, Wallace G, Rivers P, Gerrett D. ‘It's the best of two evils’: a study of patients’ perceived information needs about oral steroids for asthma. Health Expectations, 1999; 2: 185–194.