Abstract

Background Interest in the involvement of members of the public in health services research is increasingly focussed on evaluation of the impact of involvement on the research process and the production of knowledge about health. Service user involvement in mental health research is well‐established, yet empirical studies into the impact of involvement are lacking.

Objective To investigate the potential to provide empirical evidence of the impact of service user researchers (SURs) on the research process.

Design The study uses a range of secondary analyses of interview transcripts from a qualitative study of the experiences of psychiatric patients detained under the Mental Health Act (1983) to compare the way in which SURs and conventional university researchers (URs) conduct and analyse qualitative interviews.

Results Analyses indicated some differences in the ways in which SURs and conventional URs conducted qualitative interviews. SURs were much more likely to code (analyse) interview transcripts in terms of interviewees’ experiences and feelings, while conventional URs coded the same transcripts largely in terms of processes and procedures related to detention. The limitations of a secondary analysis based on small numbers of researchers are identified and discussed.

Conclusions The study demonstrates the potential to develop a methodologically robust approach to evaluate empirically the impact of SURs on research process and findings, and is indicative of the potential benefits of collaborative research for informing evidence‐based practice in mental health services.

Keywords: collaborative research, knowledge production, mental health, psychiatric detention, secondary analysis, service user research

Background

Public and patient involvement in the development of health and social care services has become widespread internationally in recent years. 1 , 2 , 3 Within the UK concerted efforts have been made, at a policy level, to explore the impact of public involvement on service development in health and social care. 4 , 5 Similar efforts have been made to evaluate the impact of public and service user involvement in health and social care research, 6 the UK Department of Health establishing a resource (invoNET) ‘to advance evidence, knowledge and learning about public involvement in NHS, public health and social care research’. 7 Evidence – rather than description – of the impact of service user involvement in health and social care research has become the order of the day.

Within the field of mental health, research into the involvement of people who have themselves used mental health services in the research process has largely focused to date on identifying the range of potential benefits of such involvement. A systematic review of the literature on involving service users in the delivery and evaluation of mental health services found that service users can be involved in mental health services as researchers with some benefits to patients and providers. 8 Specific benefits – empowerment of the individual researchers; recommendations for service development that are valued by service users; improved communication between service users and providers – are widely documented. 9 , 10 , 11 However, there has been much less written about the impact of user involvement in research on the means of producing knowledge. A qualitative review of service user involvement in mental health research found that ‘service users can make a clear contribution to raising new research questions, by ensuring interventions are kept ‘user friendly’, and the selection of outcome measures’. 12

Attempts to conceptualize the impact of user involvement on research findings in mental health have focused on the status of knowledge claims that such research articulates. Rose suggests that service user researchers (SURs) offer new types of evidence on which to base practice, providing fresh insights ‘from the inside’, 13 while Simpson and House note that the greater reporting of dissatisfaction with mental health services to service user interviewers (in comparison to academic researchers) has been interpreted as bringing an enhanced validity to the research. 14 Beresford attributes this to a shorter distance between direct experience and its interpretation, stating that the resulting knowledge is less likely to be ‘inaccurate, unreliable and distorted’. 15 Faulkner and Thomas suggest that service users as researchers bring an ‘ecological’ or ‘real world’ validity to research, focussing on subjective, lived experience in contrast to the objectivities offered by the natural science type approach to research that generally informs evidence‐based medicine. 16 To advance a broader, more inclusive validity, they call for an evidence‐based medicine that responds to a research process that marries expertise by experience with expertise by profession: collaboration between the service user and the traditional clinical, academic researcher. While these views suggest a strong body of belief that service user involvement corrects a ‘distortion’ or imbalance in current mental health research, it is the purpose of this paper to investigate, empirically, whether SURs and conventional university researchers (URs) offer different interpretative perspectives in one research project.

The difficulties of evidencing empirically the impact of service user involvement in mental health studies have been acknowledged. 14 The few empirical studies that systematically explore – qualitatively or quantitatively – the impact of SURs on the research process in comparison to other researchers or to non‐involvement have largely focussed on the recruitment of research participants and are almost all outside the field of mental health. The benefits to recruitment of using peer researchers have been shown in both an Australian study of young injecting drug users, 17 and a qualitative study of the views and experiences of people using illegal drugs. 18 In a prostate cancer testing trial, a before and after design showed that involving members of the participant population in designing study information and the recruitment process increased recruitment rates from 40 to 70%. 19

Empirical studies in mental health exploring the impact of SURs on the research process are limited to a systematic review of patients’ perspectives on electroconvulsive therapy (ECT). 20 This review demonstrated that using a range of outcomes that were important to patients, rather than mental health professionals, led to differences in the levels of perceived benefits of ECT.

In an earlier pilot project undertaken by the authors, reflections by the team on the research process suggested that there were differences in the way SURs conducted qualitative interviews and analysed the transcripts of those interviews, compared to the other members of the research team (all of whom held conventional academic posts in universities). Furthermore, the team felt that those differences might be amenable to empirical observation if a subsequent study were specifically designed to collect secondary data on interviewing and analysis systematically. It is this process of secondary analysis that is reported in this paper.

Aims

To pilot a methodological approach in evaluating the impact of SURs on a qualitative mental health research project, and in particular to:

1. Measure the extent to which SURs carried out research (interviewing and analysis) differently to conventional URs;
2. Consider the impact of any differences on the research findings;
3. Consider the potential to use this methodological approach to measure more generally the impact of SURs on research in mental health.

Setting

A qualitative study, Understanding the Lived Experience of Detained Patients, was undertaken in a London Mental Health NHS Trust to explore patients’ experiences of ‘sectioning’ [detention under the terms of the Mental Health Act (1983)], and the practices of Control and Restraint, Rapid Tranquilisation and Seclusion. This study was undertaken by a collaborative team (the authors) comprising three mental health SURs and three conventional URs: a nursing researcher and two non‐clinical health services researchers. The study was designed to facilitate collection of data on approaches to interviewing and analysis of qualitative data for secondary analysis.

In the first phase of the original study, 19 semi‐structured interviews were conducted with inpatients detained under the Mental Health Act (1983) in a number of acute and forensic wards across the Trust. The three SURs and one UR (a non‐clinical health services researcher) were trained together in qualitative interview skills. The whole team collaborated in developing a semi‐structured interview schedule in the form of a topic list of questions that all interviewees should be asked. Interviewers would be free to ask follow‐up questions to explore those aspects of the detained patient experience that seemed to be relevant to each individual interviewee.

Interviews were conducted by various combinations of interviewer: UR alone (n = 12); UR as lead interviewer, with SUR as co‐interviewer (n = 2); pair of SURs (n = 2); SUR as lead interviewer, with UR as co‐interviewer (n = 3). All interviews involving SURs were conducted in pairs. This was required by the research ethics committee that approved the study as part of a comprehensive risk protocol. In all cases the nominated lead interviewer conducted the bulk of the interview, although co‐interviewers asked occasional additional follow‐up questions.

Methods

A range of secondary analyses of interview transcripts from the original ‘detained patients’ project were employed, comprising thematic and content analysis. 21 These analyses addressed the following specific research questions:

1. Did three SURs and a conventional university researcher (UR) conduct qualitative interviews differently for the ‘detained patients’ research project?
2. Did three SURs and three URs conduct qualitative analysis differently for the ‘detained patients’ research project?

Although this combination of thematic and content analysis would be somewhat unusual in a primary data qualitative interview study, for a secondary analysis it enabled us to categorize first of all the questions asked and analyses employed by researchers, and then to count their incidence for purposes of comparison. Strengths and weaknesses of the approach are considered in the Discussion.

Conducting qualitative interviews

Ten of the nineteen interviews completed for the ‘detained patients’ study were selected for secondary analysis. Ten interviews were used in order that all five interviews led by a SUR could be compared with five interviews led by the UR. The latter were selected to provide as full a range of interviewer combinations as possible. The selection of interviews and interviewer combinations is given in Table 1.

Table 1. Interviewer combinations

| Interviewee no. | Lead interviewer | Co‐interviewer |
|---|---|---|
| 102 | UR | SUR1 |
| 104 | SUR1 | UR |
| 105 | SUR1 | SUR3 |
| 107 | UR | |
| 108 | UR | |
| 109 | SUR1 | SUR3 |
| 110 | UR | SUR2 |
| 112 | UR | |
| 113 | SUR1 | UR |
| 116 | SUR3 | UR |

UR, university researcher; SUR1, service user researcher 1; SUR2, service user researcher 2; SUR3, service user researcher 3.

To facilitate comparison between the ways in which the SURs and UR conducted interviews, interviewers’ questions were subject to thematic analysis using categorization or ‘coding’ tools common to inductive thematic analysis. 22 A list of categories of follow‐up questions was generated that was sufficient in range to capture commonalities across the interviews, without forcing idiosyncratic questions to fit uncomfortably within categories.

In coding the follow‐up questions used by interviewers, original questions from the interview schedule were not coded. Functional questions or responses by interviewers that did not introduce or further develop substantive topics were also not coded. These included responses that offered simple affirmation (e.g. ‘yes’, ‘hmm’) as well as questions that sought to elicit more information within an existing topic (e.g. ‘what happened next?’). Coding focussed instead on follow‐up questions that pursued and developed explicit lines of enquiry. Extended questions were coded more than once where questions relating to more than one category were used by the interviewer.

Coding was undertaken by the first author, one of the URs who had not taken part in any of the interviews, to avoid any ‘coding bias’ that might arise from interviewing priorities that an individual interviewer might bring to the analysis. The coded transcripts were then shared with the interviewers – after completion of the main study – in order that they could reflect on the first author’s interpretation of their questioning. This process aimed to arrive at a balanced secondary analysis by ‘bridging the gap’ between the ‘distance’ offered by the secondary researcher (first author) and the intimate knowledge of the data held by primary researchers (interviewers). 23

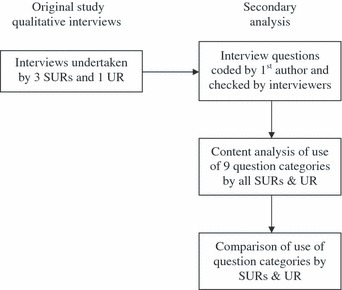

The second stage of the analysis employed a content analysis approach. 24 Questions asked by interviewers within each category were counted and aggregated separately for the five interviews led by SURs and the five interviews led by the UR. The number of questions asked by SURs within each category was then calculated as a percentage of the total number of questions that SURs had asked. Similarly, the number of questions asked by the UR within each category was calculated as a percentage of the total number of questions that the UR had asked. We used percentages, rather than absolute numbers of questions asked, to control for the variation in total numbers of questions asked in each interview. Interviews differed in length, with some interviewees responding freely to single questions, while others needed to be prompted with multiple follow‐up questions. Had we compared the absolute numbers of questions asked, a comparison of the extent to which SURs and the UR pursued different lines of enquiry could therefore have been biased by the length and dynamic of individual interviews. The percentages of total coded questions asked within each category by SURs were then compared with those asked by the UR. The analysis process is illustrated in Fig. 1.

Figure 1.

Secondary analysis process – conducting qualitative interviews.
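The percentage calculation described above can be sketched in a few lines of Python. This is an illustration only: the counts are invented and are not the study's data, and the function name `category_percentages` is hypothetical.

```python
from collections import Counter

def category_percentages(coded_questions):
    """Return each category's share (in %) of all coded follow-up questions."""
    counts = Counter(coded_questions)
    total = sum(counts.values())
    return {cat: 100 * n / total for cat, n in counts.items()}

# Hypothetical codes pooled across the interviews led by each interviewer type.
sur_codes = ["EXP"] * 6 + ["STA"] * 3 + ["ENV"] * 3   # 12 coded questions
ur_codes = ["EXP"] * 2 + ["STA"] * 5 + ["M/B"] * 3    # 10 coded questions

sur_profile = category_percentages(sur_codes)
ur_profile = category_percentages(ur_codes)

# Compare the two profiles category by category; unused categories count as 0%.
for cat in sorted(set(sur_profile) | set(ur_profile)):
    print(f"{cat}: SUR {sur_profile.get(cat, 0.0):.1f}%  UR {ur_profile.get(cat, 0.0):.1f}%")
```

Because each profile is expressed relative to its own total, a group that asked more questions overall does not automatically appear to favour every category.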

Conducting qualitative analysis

Primary analysis: as part of the collaborative approach to the original ‘detained patients’ study, the whole of the research team were involved in the preliminary stage of the analysis of interview transcripts. All six researchers were given the same set of extended extracts from transcripts to carry out a preliminary coding. Following common training, researchers were instructed to highlight any passages from the transcripts they thought of interest and importance, and to assign a short label – or code – to each passage. At a team meeting, all researchers presented their preliminary analysis and those codes were refined through a process of matrix analysis 25 until a list of 13 broad themes was produced. These themes were used by the team to complete the analysis of all nineteen interview transcripts.

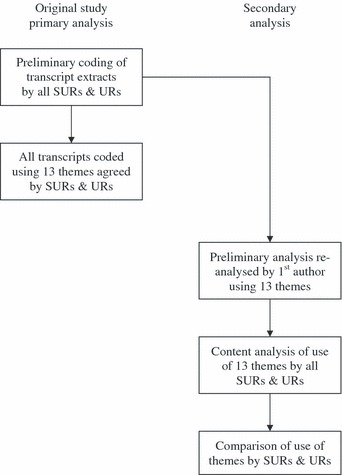

Secondary analysis: in the secondary analysis, we counted the number of codes that had been assigned, in the preliminary coding, to each of the thirteen themes by the three SURs and the three URs respectively. The number of times SURs coded to each theme was then calculated as a percentage of the total number of codes used by SURs. Similarly, the number of times URs coded to each theme was calculated as a percentage of the total number of codes used by URs. Again, we used percentages rather than absolute numbers to control for the different coding styles of individual researchers: some highlighted large numbers of very short fragments of text, assigning a code to each, while others coded a lesser number of whole sentences or paragraphs. A comparison of the extent to which SURs and URs used different themes while analysing interview data would have been biased by individual coding style had we based that comparison on absolute numbers. The percentage of total codes assigned to each theme by SURs was then compared with those used by URs. The full analysis process is illustrated in Fig. 2.

Figure 2.

Secondary analysis process – conducting qualitative analysis.
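The reason for preferring percentages to absolute counts can be shown with a small sketch. The two researchers and their code counts below are hypothetical, as is the function name `theme_percentages`; the point is only that different coding granularity changes the counts but not the proportions.

```python
def theme_percentages(theme_counts):
    """Convert a {theme: number of codes} mapping into percentages of the total."""
    total = sum(theme_counts.values())
    return {theme: round(100 * n / total, 1) for theme, n in theme_counts.items()}

# Hypothetical researcher A codes many short fragments of text ...
fine_grained = {"FEE": 30, "POL": 10}
# ... while hypothetical researcher B codes a few whole paragraphs.
coarse_grained = {"FEE": 6, "POL": 2}

print(theme_percentages(fine_grained))    # {'FEE': 75.0, 'POL': 25.0}
print(theme_percentages(coarse_grained))  # {'FEE': 75.0, 'POL': 25.0}
```

On absolute numbers researcher A would appear to give five times more attention to each theme, while the percentage profiles are identical.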

Results

Conducting qualitative interviews

A set of nine categories of follow‐up question was developed, as described above, to enable comparison of interviewers’ use of follow‐up questions. Those categories are listed below, along with brief definitions of their content:

1. Environment (ENV). Questions about physical environment, mood on the ward, the extent to which the ward felt restrictive and feeling safe on the ward;
2. Staff (STA). Questions exploring relationships with the staff, staff attitudes and therapeutic engagement of patients by staff;
3. Service and treatment (SER). Questions eliciting descriptions of the service and treatment provided on the ward, and opinions of the service;
4. Agency (AGE). Questions exploring the extent to which interviewees felt in control during their detention, felt able to express opinions and ask for what they needed, and felt able to exercise their rights under the terms of their detention;
5. Life events and mental health (L&M). Questions exploring the relationship between events in interviewees’ lives (on the ward and previously; relationships with friends and family; work and living arrangements) and their mental health;
6. Alternatives (ALT). Questions exploring alternative approaches to coercive and restrictive procedures, and alternatives to the existing service;
7. Experiences and feelings (EXP). Questions exploring interviewees’ personal accounts of being detained, coercive or restrictive practices they experienced or witnessed while detained, and how those experiences made them feel;
8. Procedures (PRO). Questions about the procedures around detention, coercive and restrictive practices;
9. Medical and behavioural approaches (M/B). Questions eliciting details about medical treatments, as well as attempting to understand interviewees’ experiences from a behavioural perspective.

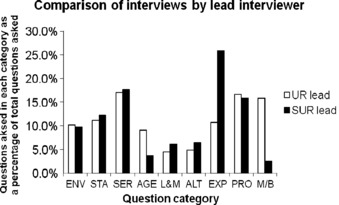

A comparison of questions coded to each category, as percentages of total questions asked, by SURs and by the UR, is given in Fig. 3.

Figure 3.

Comparison of interviews by lead interviewer. UR, university researcher; SUR, service user researcher; ENV, environment; STA, staff; SER, services and treatment; AGE, agency; L&M, life events and mental health; ALT, alternatives; EXP, experiences and feelings; PRO, procedures; M/B, medical and behavioural approaches.

There is little difference between SURs and the UR in terms of the percentages of total questions coded to most of the categories of follow‐up questions. However, a small number of exceptions stand out:

1. Service user researchers were proportionately more likely to ask questions about interviewees’ Experiences and Feelings about detention and coercion than the UR (with 25.9% of the total questions SURs asked coded to EXP, compared to only 10.8% of the total questions the UR asked);
2. The UR was proportionately more likely to ask questions that reflected Medical and Behavioural Approaches to understanding interviewees’ experiences of their detention than the SURs (with 15.8% of the total questions the UR asked coded to M/B, compared to only 2.5% of the total questions SURs asked);
3. The UR was also proportionately more likely to ask questions that addressed the issue of interviewees’ sense of Agency over their own experiences of detention than SURs (with 9.1% of the total questions the UR asked coded to AGE, compared to only 3.6% of the total questions SURs asked).
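The size of each of these three gaps can be read off directly as a difference in percentage points. The short sketch below simply restates the reported percentages and subtracts them; the variable names are ours.

```python
# Percentages of total coded follow-up questions, as reported in the text above.
reported = {
    "EXP": {"SUR": 25.9, "UR": 10.8},
    "M/B": {"SUR": 2.5, "UR": 15.8},
    "AGE": {"SUR": 3.6, "UR": 9.1},
}

# Positive gap: SURs asked proportionately more; negative gap: the UR did.
gaps = {cat: round(v["SUR"] - v["UR"], 1) for cat, v in reported.items()}

for cat, gap in gaps.items():
    print(f"{cat}: {gap:+.1f} percentage points")
```

This gives gaps of 15.1 points for EXP in favour of SURs, and 13.3 and 5.5 points for M/B and AGE respectively in favour of the UR.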

Interviewers also asked interviewees what they felt about being interviewed by a SUR, where that had been the case. All interviewees described the experience as positive – of feeling ‘more comfortable’ with a SUR – and some stated that they found it personally encouraging meeting a service user working as a researcher. However, interviewees were unsure that it made a difference to how they answered the questions.

Conducting qualitative analysis

The list of thirteen themes used to analyse interview transcripts in the primary analysis of the original study is given below with a brief definition of each theme:

1. Background circumstances (BAC). Personal circumstances of interviewee prior to sectioning;
2. Being sectioned (SEC). Interviewee’s experiences of being sectioned;
3. Violence & mental health (VIO). Violence experienced or witnessed by detained patients while using mental health services;
4. Medication (MED). The prescription of medication (including compulsory administration of medication), choosing whether or not to take medication, side‐effects, etc;
5. Feelings about detention (FEE). Interviewees’ feelings more generally about being detained;
6. Staff/patient relationships (S/P). Relationships between detained patients and ward staff (both interviewees and more general observations);
7. Ward environment (ENV). All aspects of the ward environment;
8. Alternatives to coercion (ALT). Observations on alternatives to coercive practice, both as offered and observed by interviewees;
9. Communication (COM). How and what information was communicated to detained patients about their section, treatment, etc;
10. Implementation of policies & procedures (POL). The implementation of Trust and ward policies and procedures by ward staff;
11. Education & training of staff (E&T). Learnt practice demonstrated by ward staff, as evidenced in interviewees’ accounts;
12. Playing the game (GAM). Detained patients self‐consciously exploiting the knowledge of the ‘way things work’ on the ward;
13. Patient insight (INS). Patient insight into their own mental health and changes in their mental health.

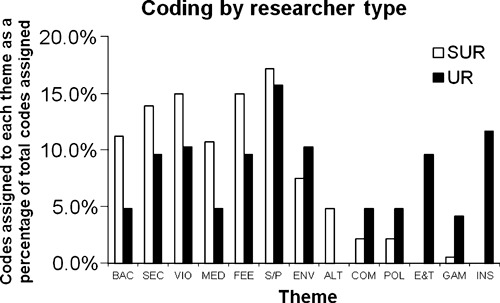

A comparison of codes assigned to each theme, as percentages of total codes assigned, by SURs and by URs is given in Fig. 4.

Figure 4.

Coding by researcher type. SUR, service user researchers; UR, university researchers; BAC, background circumstances; SEC, being sectioned; VIO, violence and mental health; MED, medication; FEE, feelings about detention; S/P, staff/patient relationships; ENV, ward environment; ALT, alternatives to coercion; COM, communication; POL, implementation of policies and procedures; E&T, education and training of staff; GAM, playing the game; INS, patient insight.

Service user researchers and URs assigned very different percentages of the total number of codes used to many of the thirteen themes. Those differences can be summarized as follows:

1. Service user researchers assigned, proportionally, more codes to the themes of Background Circumstances, Medication, Being Sectioned, Violence and Mental Health and Feelings about Detention than did URs [11.2% (BAC), 10.7% (MED), 13.9% (SEC), 15.0% (VIO) and 15.0% (FEE) of total codes assigned by SURs, compared to 4.8% (BAC), 4.8% (MED), 9.6% (SEC), 10.3% (VIO) and 9.6% (FEE) of total codes assigned by URs];
2. Only SURs assigned codes to the theme of Alternatives to Coercion (4.8% of total codes assigned by SURs);
3. University researchers assigned, proportionally, more codes to the themes of Communication, Implementation of Policies and Procedures, and Playing the Game than did SURs [4.8% (COM), 4.8% (POL) and 4.1% (GAM) of total codes assigned by URs, compared to 2.1% (COM), 2.1% (POL) and 0.5% (GAM) of total codes assigned by SURs];
4. Only URs assigned codes to the themes of Education and Training of Staff (9.6% of total codes assigned by URs) and Patient Insight (11.6% of total codes assigned by URs).
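The list above gives figures for eleven of the thirteen themes; Staff/patient relationships (S/P) and Ward environment (ENV) are not itemized. As a rough check, the combined share left for those two themes can be derived by subtraction. The remainders below are our own arithmetic from the reported percentages, not values stated in the study, and they inherit any rounding in the reported figures.

```python
# Percentages of total codes per theme, as reported in the list above.
# A value of 0.0 reflects the statements that only one group used a theme.
sur = {"BAC": 11.2, "MED": 10.7, "SEC": 13.9, "VIO": 15.0, "FEE": 15.0,
       "ALT": 4.8, "COM": 2.1, "POL": 2.1, "GAM": 0.5, "E&T": 0.0, "INS": 0.0}
ur = {"BAC": 4.8, "MED": 4.8, "SEC": 9.6, "VIO": 10.3, "FEE": 9.6,
      "ALT": 0.0, "COM": 4.8, "POL": 4.8, "GAM": 4.1, "E&T": 9.6, "INS": 11.6}

# Share left over for the two themes without reported figures (S/P and ENV).
sur_remainder = round(100 - sum(sur.values()), 1)
ur_remainder = round(100 - sum(ur.values()), 1)

print(f"SURs: {sur_remainder}% of codes fall under S/P and ENV combined")
print(f"URs:  {ur_remainder}% of codes fall under S/P and ENV combined")
```

On these figures roughly a quarter of each group's codes (24.7% for SURs, 26.0% for URs) fall under the two themes for which no difference is highlighted.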

Discussion

The findings presented above suggest that, in one qualitative research project, there were some differences in the way three SURs carried out qualitative interviewing compared to a conventional university researcher, and that there was more difference in the way in which the SURs analysed qualitative interview transcripts compared to three conventional URs. However, in a study of a very small number of interview transcripts, undertaken and analysed by a small number of researchers, any findings are necessarily a reflection of these interviews by these researchers, rather than findings that can be generalized to qualitative research as a whole. It was not possible to test any of the comparisons made above for their statistical significance because the number of cases for comparison under consideration would necessarily be the number of researchers (of which there was a maximum of three SURs and three URs in our study), rather than the number of questions asked or codes used. Nonetheless, it remains possible to reflect on the potential of the methodological approach piloted here to offer a robust, empirical evaluation of the impact of SURs on research process and findings more generally.
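One way to see why so few researchers preclude significance testing is to consider an exact permutation test with the researcher as the unit of analysis. This is our illustrative choice of test, not a method used in the study, and the function name is hypothetical. With so few units, the number of distinct ways of assigning researchers to groups caps how small a p-value can be.

```python
from math import comb

def smallest_one_sided_p(n_a, n_b):
    """Smallest p-value an exact one-sided permutation test can return
    when n_a + n_b researchers are split into groups of n_a and n_b."""
    # Every way of choosing which researchers form the first group is
    # equally likely under the null hypothesis of no group difference.
    arrangements = comb(n_a + n_b, n_a)
    # The observed split is one arrangement, so p can never drop below 1/arrangements.
    return 1 / arrangements

print(smallest_one_sided_p(3, 3))  # analysis: three SURs vs three URs -> 0.05
print(smallest_one_sided_p(3, 1))  # interviewing: three SURs vs one UR -> 0.25
```

Even in the most extreme case, three researchers against three cannot produce a one-sided p-value below 0.05, and a two-sided test can only be less favourable.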

The challenges in evaluating the impact of SURs on interviewing are twofold. First, secondary analysis of a greater number of interviews conducted by more researchers would address to a certain extent the possibility that comparisons were made here between individual interviewers, rather than ‘types’ of interviewer. However, greater numbers alone would not address a lack of direct comparison inherent in the methodology: that comparisons are between different interview transcripts, and that apparent differences in interviewing might simply be the result of different interviewees having different things to talk about (an ‘interviewee bias’). There would be considerable methodological challenges in designing a study in which individual interviewees answered the same set of questions asked by both a SUR and a UR. Alternatively, a certain amount of matching of interviewees for secondary analysis by characteristics relevant to the study might reduce interviewee bias.

Secondly, problems with what has been described as a ‘free list’ approach to content analysis can be identified: 24 primarily that without multiple raters agreeing on the definition and boundaries of categories within the list of codes generated, any content analysis is inherently unreliable. Attempts to address that were undertaken here, with the interviewers checking and amending the coding frame developed by the first author. A more robust study should therefore involve a number of both secondary and primary researchers bringing a range of perspectives to the process of generating a coding frame to be used in content analysis. 23
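Agreement between raters of the kind called for here is often summarized with a chance-corrected statistic such as Cohen's kappa. The sketch below is a minimal illustration under stated assumptions: the study did not report kappa, the two raters and their codes are hypothetical, and each question is assumed to receive exactly one category (whereas in this study extended questions could be coded more than once).

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one category to each item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters chose the same category.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)

# Hypothetical codes given by two raters to the same eight follow-up questions.
rater_1 = ["EXP", "EXP", "STA", "PRO", "EXP", "M/B", "STA", "PRO"]
rater_2 = ["EXP", "STA", "STA", "PRO", "EXP", "M/B", "EXP", "PRO"]

kappa = cohens_kappa(rater_1, rater_2)
print(round(kappa, 3))
```

A kappa of 1 indicates perfect agreement and 0 indicates agreement no better than chance; in this invented example the raters agree on six of eight questions.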

Evaluation of the impact of SURs on analysis demonstrated more methodological strength. First, all members of the team analysed the same extracts from the same interviews, and so direct comparisons between the analyses of different team members were being made. Secondly, the analyses of three SURs were compared with the analyses of three conventional URs, reducing the possibility that comparisons were being made between individual researchers. A larger study can be envisaged that controls carefully for greater numbers of researchers within a range of researcher types, further enhancing the reliability of any comparisons made. Finally, the coding frame developed here in order to compare analyses between team members was generated by a robust ‘matrix’ approach to analysis, 25 explicitly designed to systematically synthesize the range of interpretations brought to the analysis process by different team members.

It is also possible to reflect on the impact on findings of research involving mental health service users as researchers, while once again limiting those observations to this particular study because of the small scale of the primary research project. Results suggest the possibility that data generated in SUR‐led interviews is, to some extent, qualitatively different from the university researcher‐led interviews. A similar study that failed to include either SURs or URs would not have collected the same range of data, using the same interview tool, from the same sample of detained patients. Either a certain amount of data exploring experiences and feelings about detention and coercive practices would be missing, or data around medication, diagnoses or patient behaviours would be lacking. However, much of the interviewing was similar, borne out by interviewees’ reporting that they were unsure if they would have answered questions differently if they had been asked by non‐service user interviewers.

More strikingly, results suggest that the same set of data is interpreted very differently by SURs and conventional URs, evidenced by the very different analysis ‘profiles’ illustrated in Fig. 4. A similar study that lacked either SURs or URs would have drawn very different conclusions from the same data set. Either the same weight would not have been given to experiential or emotional perspectives on detention had the SURs been absent from the team, or procedural and conceptual interpretations would have been largely missing without the conventional university members of the team. While there is no space to report substantive findings here, in the original study the research team used these contrasting analytical perspectives to articulate different accounts of the detained patient experience. This informed the design of a clinical staff training intervention that incorporated both experiential (patient perspective) and procedural (practice perspective) elements.

The implications of the study are twofold. First, as a methodological pilot the study demonstrates that it is possible to develop a methodologically robust approach to the empirical evaluation of the impact of SURs on the research process: evidence was produced that different researchers did interview and analyse qualitative data differently in this study. Secondary analyses of a larger number of qualitative interviews conducted by a larger number of SURs and non‐SURs, with the development of coding frames undertaken by a range of raters to enhance reliability, would enable the methodology to be subjected to a more rigorous empirical test.

Secondly, these findings support the idea that a collaborative approach to research can be productive of more complex data and analyses, offering a more comprehensive insight into the research question under investigation: the ‘ecological’ or ‘real world’ evidence base for change advocated by Faulkner and Thomas. 16 With the greater reliability that a larger study based on this pilot would offer, practitioners, commissioners and policy makers acting on the evidence provided by collaborative research would be confident that this was knowledge produced through a proper synthesis of service user, clinical and academic expertise.

Conflict of interest

No conflicts of interest have been declared.

Source of funding

Funding for this study was provided by South West London & St George’s Mental Health NHS Trust, London, UK.

References

- 1. Freil M, Knudsen J. Brugerinddragelse i sundhedsvaesenet. User involvement in the Danish health care sector. Ugeskrift for Laeger, 2009; 171: 1663–1666.

- 2. Sibitz I, Swoboda H, Schrank B et al. Einbeziehung von Betroffenen in Therapie‐ und Versorgungsentscheidungen: professionelle HelferInnen zeigen sich optimistisch. Mental health service user involvement in therapeutic and service delivery decisions: professional service staff appear optimistic. Psychiatrische Praxis, 2008; 35: 128–134.

- 3. Kim YD, Ross L. Developing service user involvement in the South Korean disability services: lessons from the experience of community care policy and practice in UK. Health & Social Care in the Community, 2008; 16: 188–196.

- 4. Carr S. Participation, power, conflict and change: theorizing dynamics of service user participation in the social care system of England and Wales. Critical Social Policy, 2007; 27: 266–276.

- 5. Doel M, Carroll C, Chambers E et al. Participation: finding out what difference it makes. London: Social Care Institute for Excellence, 2007.

- 6. Boote J, Telford R, Cooper C. Consumer involvement in health research: a review and research agenda. Health Policy, 2002; 61: 213–236.

- 7. INVOLVE. The impact of public involvement on research. Available at: http://www.invo.org.uk/pdfs/EKLdiscussionpaperfinal170707.pdf, accessed 11 November 2009.

- 8. Simpson E, House A. Involving users in the delivery and evaluation of mental health services: a systematic review. British Medical Journal, 2002; 325: 1265–1269.

- 9. Baxter L, Thorne L, Mitchell A. Small Voices; Big Ideas. Exeter: Washington Singer Press, 2001.

- 10. Faulkner A. Capturing the experiences of those involved in the TRUE project: a story of colliding worlds. Eastleigh: INVOLVE, 2004.

- 11. Turner M, Beresford P. User Controlled Research: Its Meanings and Potential. Brunel: Brunel University, 2005.

- 12. Trivedi P, Wykes T. From passive subjects to equal partners: qualitative review of user involvement in research. British Journal of Psychiatry, 2002; 181: 468–472.

- 13. Rose D. Collaborative research between users and professionals: peaks and pitfalls. Psychiatric Bulletin, 2003; 27: 404–406.

- 14. Simpson E, House A. User and carer involvement in mental health services: from rhetoric to science. British Journal of Psychiatry, 2003; 183: 89–91.

- 15. Beresford P. Developing the theoretical basis for service user/survivor‐led research and equal involvement in research. Epidemiologia e Psichiatria Sociale, 2005; 14: 4–9. [DOI] [PubMed] [Google Scholar]

- 16. Faulkner A, Thomas P. User‐led research and evidence based medicine. British Journal of Psychiatry, 2002; 180: 1–3. [DOI] [PubMed] [Google Scholar]

- 17. Coupland H, Maher L, Enriquez J et al. Clients or colleagues? Reflections on the process of participatory action research with young injecting drug users International Journal of Drug Policy, 2005; 16: 191–198. [Google Scholar]

- 18. Elliot E, Watson AJ, Harries U. Harnessing expertise: involving peer interviews in qualitative research with hard‐to‐reach populations. Health Expectations, 2002; 5: 172–178. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Donovan J, Mills N, Smith M et al. Improving design and conduct of randomized controlled trials by embedding them in qualitative research: ProtecT (prostate testing for cancer and treatment) study. British Medical Journal, 2002; 325: 766–770. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Rose D, Fleischmann P, Wykes T et al. Patients’ perspectives on electroconvulsive therapy: systematic review. British Medical Journal, 2003; 326: 1363–1367. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Heaton J. Reworking Qualitative Data. London: Sage, 2004. [Google Scholar]

- 22. Ritchie J, Lewis J. Qualitative Research Practice. London: Sage, 2003. [Google Scholar]

- 23. Emslie C, Ridge D, Ziebland S, Hunt K. Men’s accounts of depression: reconstructing or resisting hegemonic masculinity? Social Science & Medicine, 2005; 62: 2246–2257. [DOI] [PubMed] [Google Scholar]

- 24. Ryan G, Bernard H. Data management and analysis methods In: Denzin N. (ed) Collecting and Interpreting Qualitative Materials, 2nd edn London: Sage, 2003: 259–309. [Google Scholar]

- 25. Averill J. Matrix analysis as a complementary analytic strategy in qualitative inquiry. Qualitative Health Research, 2002; 12: 855–866. [DOI] [PubMed] [Google Scholar]