Abstract

Background Evidence suggests that in decision contexts characterized by uncertainty and time constraints (e.g. health‐care decisions), fast and frugal decision‐making strategies (heuristics) may perform better than complex rules of reasoning.

Objective To examine whether it is possible to design deliberation components in decision support interventions using simple models (fast and frugal heuristics).

Design The ‘Take The Best’ heuristic (i.e. selection of a ‘most important reason’) and the ‘Tallying’ algorithm (i.e. unit weighting of pros and cons) were used to develop two deliberation components embedded in a Web‐based decision support intervention for women facing amniocentesis testing. Ten researchers (of 15 invited), nine health‐care providers (of 28 invited) and ten pregnant women (of 14 invited) who had recently been offered amniocentesis testing appraised evolving versions of ‘your most important reason’ (Take The Best) and ‘weighing it up’ (Tallying).

Results Most researchers found the tools useful in facilitating decision making, although they emphasized the need for simple instructions and clear layouts. Health‐care providers, however, expressed concerns regarding the usability and clarity of the tools. By contrast, 7 out of 10 pregnant women found the tools useful for weighing up the pros and cons of each option and helpful in structuring and clarifying their thoughts and visualizing their decision efforts. Several pregnant women felt that ‘weighing it up’ and ‘your most important reason’ were not appropriate when facing such a difficult and emotional decision.

Conclusion Theoretical approaches based on fast and frugal heuristics can be used to develop deliberation tools that provide helpful support to patients facing real‐world decisions about amniocentesis.

Keywords: amniocentesis, decision support, decision making, fast and frugal, heuristics, prenatal diagnosis

Introduction

How best to support people making difficult health decisions is an area of considerable research interest. Decision support interventions, commonly known as decision aids, have been developed to help individuals learn about the features and implications of their treatment or screening options while improving communication with their health‐care providers. The number of published decision support interventions has tripled since 1999, 1 yet there is uncertainty around the nature of the cognitive processes that might help people make informed preference‐sensitive decisions. 2 , 3 There is currently no consensus on, and no widely accepted method for, developing decision support interventions that best support patients’ preference‐sensitive decision making. 4 There is documented evidence that decision support interventions increase knowledge, realistic expectations and participation in decision making, and reduce decisional conflict, compared with usual practice, 5 but do not systematically influence actual treatment or screening decisions. 6 In addition, Molenaar et al. 7 established that decision support interventions had only limited effects on patients’ satisfaction with the decision, their decisional uncertainty and final health outcomes.

One reason for such heterogeneous findings may be the lack of theoretical and conceptual underpinning for the development and evaluation of decision support interventions and their associated deliberation tools. 8 , 9 , 10 Only 34% of the interventions included in a Cochrane systematic review of patient decision aids were based on a theoretical framework relevant to supporting decision making. 5 , 11 Where theory was used, it was often based on rational models of decision making. For instance, most deliberation tools embedded in decision support interventions, such as value‐/preference‐clarification exercises 12 or probability trade‐off techniques, are inspired by models of optimization. These complex models regard deliberation as optimal only if people consider all relevant information and weigh it according to its relative importance for the outcome. Although clarifying personal values is a central task in preference‐sensitive decision making, it is still unclear whether complex deliberation tools lead to better decisions than simple intuitive devices. To date, and as far as can be determined, no study has examined whether a complex, formalized clarification exercise leads to better decisions than a more intuitive one. In fact, there is good reason to doubt that complex approaches optimize people’s preference‐sensitive decision making. Research on consumers’ preference‐sensitive decision making suggests that, in certain situations, too much deliberation and attention to detail may disrupt people’s ability to focus on the relevant information and lead to poor decisions. 2 , 3 , 13 Further, research suggests that several value clarification techniques are prone to scoring inconsistency and poor stability of measurement at the individual level, which raises the question of what is actually being measured. 14 , 15

One may wonder why simpler alternative models, such as heuristics, have never been tested in the development of decision support interventions. A well‐known programme of research on simple models is the ‘fast and frugal heuristics’ programme, based on the theory of probabilistic mental models developed by Gigerenzer et al. 16 , 17 These models recognize that humans have limited reasoning and computational abilities and may have to make decisions under conditions of limited knowledge and time. Fast and frugal heuristics are simple rules of reasoning that are mainly non‐compensatory: information search is limited, possibly minimal, and is ended by simple stopping rules. Although heuristics have been considered sources of bias and judgement errors, 18 , 19 fast and frugal heuristics and other simple models have been found to match the predictive accuracy of complex models in several situations and sometimes even to outperform them. 20 , 21 , 22 , 23 These findings contradict the assumption, shared by most optimization models, that the quality of decisions (or predictions) always improves, and never diminishes, as more information is processed. The relation between the quantity of information and the quality of an inferential prediction is often an inverted U‐shaped curve: 24 too little information can harm decision quality, but so can too much. Especially where uncertainty is high, as in medical decision making, ignoring information can yield more robust predictions. 25 , 26 , 27 , 28 This has been established in various contexts, 17 , 21 , 29 including medicine. For coronary care unit assignments 30 and macrolide prescription for children, 31 a simple ‘fast and frugal tree’ enabled physicians to make decisions that were as accurate as, or more accurate than, those supported by a decision aid resting on a complex model.
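
To make the non‐compensatory structure concrete, the following minimal Python sketch implements a generic fast‐and‐frugal tree: binary cues are checked one at a time in a fixed order, and each level can exit with a decision, so a later cue can never overturn an earlier exit. The cue names, exits and decisions are hypothetical placeholders; this is not a reconstruction of the coronary care or macrolide trees cited above.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Mapping, Sequence


@dataclass(frozen=True)
class Node:
    cue: str        # name of the binary cue checked at this level
    exit_on: bool   # cue value that triggers an immediate exit
    decision: str   # decision returned on that exit


def classify(tree: Sequence[Node], case: Mapping[str, bool], fallback: str) -> tuple[str, int]:
    """Return (decision, number of cues inspected); `fallback` is the final exit."""
    for depth, node in enumerate(tree, start=1):
        if case[node.cue] == node.exit_on:
            return node.decision, depth  # stopping rule: leave as soon as an exit fires
    return fallback, len(tree)


# Hypothetical three-cue tree; cue names and decisions are placeholders.
EXAMPLE_TREE = [
    Node("cue_a", True, "high risk"),
    Node("cue_b", False, "low risk"),
    Node("cue_c", True, "high risk"),
]
```

For example, `classify(EXAMPLE_TREE, {"cue_a": False, "cue_b": True, "cue_c": False}, "low risk")` inspects all three cues and falls through to the final exit, whereas any case with `cue_a` set to `True` exits after a single cue.
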
While heuristics have been shown to facilitate inferential decision making, it has not yet been established whether these simple models can also support preference‐sensitive decision making.

The present study aims to close this gap. Our goal was to develop deliberation tools using fast and frugal models to support pregnant women deciding whether to undergo amniocentesis testing. This is a complex and emotionally charged decision involving risk, complex information and far‐reaching consequences. In the United Kingdom, prenatal screening tests for Down’s syndrome (i.e. blood tests or ultrasound scan) are routinely offered to all pregnant women between 15 and 18 weeks of pregnancy to determine their risk of foetal chromosomal abnormality. Women who receive a higher‐risk result are offered amniocentesis testing. The procedure, which is reported to carry a 1% risk of miscarriage, may lead to the detection of a chromosomal abnormality, and hence to further decisions about whether to continue the pregnancy, or it may result in foetal loss. 32 , 33 , 34 The chromosome tests performed on the amniotic fluid identify most common chromosomal abnormalities (e.g. trisomies 13, 18 and 21, and exchanges of material between chromosomes) but do not detect small changes in chromosomes (e.g. microdeletions) and do not indicate the severity of the abnormality detected. The trade‐off between the 1% miscarriage risk and the information gained from the chromosome test results is not always clear to women considering amniocentesis. Evidence suggests that women who are offered amniocentesis are not given sufficient information and are unable to make informed decisions in this area. 35 The amniocentesis decision is therefore often made under conditions of limited knowledge and time, as well as heightened stress and anxiety.
Recognizing the need for a Web‐based decision support intervention for amniocentesis testing (amnioDex), we aimed to develop deliberation tools based on fast and frugal heuristics and to field‐test these tools with researchers, health‐care professionals and pregnant women facing amniocentesis testing.

Methods

The study was divided into four stages: (i) development of prototypes of two deliberation tools, (ii) field testing of the prototypes with researchers, (iii) field testing with health professionals and (iv) field testing with pregnant women facing a decision about whether to undergo amniocentesis testing.

Prototype development

We considered eight decision algorithms as a possible basis for the deliberation tools: four heuristic‐based algorithms (Take The Best, Take the Last, Minimalist and Tallying) and four integration algorithms (Unit Weight Linear Model, Weighted Tallying, Weighted Linear Model and Multiple Regression). In the literature, these decision algorithms (see Table 1) have been compared by simulating their performance on real‐world questions under conditions of limited knowledge and time. 16 Our criteria for selecting algorithms to translate into deliberation tools were their reported performance and predictive accuracy on inferential decision tasks 16 and current research in this area. 36 This research suggested that the heuristic‐based algorithms 37 ‘Take The Best’ and ‘Tallying of Positive Evidence’ performed better than complex integration algorithms. 16 Both were retained to guide the development of two deliberation tools embedded in a decision support intervention for women facing amniocentesis testing (amnioDex).

Table 1.

Algorithms considered for the development of deliberation tools

| Algorithm | Type | Description |
|---|---|---|
| Take The Best | Heuristic‐based | Cues¹ or attributes are subjectively ranked according to their validity/importance. The highest‐ranking cue (best cue) is retrieved from memory. If the cue discriminates between options, the search stops and an option is chosen. If it does not, the process is repeated with the next cue until a cue is found that discriminates. |
| Take the Last | Heuristic‐based | The cue that discriminated between options the last time a decision was made is chosen. If this cue does not discriminate, the second most recently used cue is chosen. The process is repeated until a cue is found that discriminates. |
| Minimalist | Heuristic‐based | Cues are selected in random order until a cue is found that discriminates between options. The Minimalist heuristic requires even less knowledge than Take The Best and Take the Last. |
| Tallying | Heuristic‐based | For each option, all positive cues are summed, and the option with the largest number of positive cues is chosen. All cues have the same value or importance with regard to the decision. |
| Weighted Tallying | Integration | Unlike Tallying, where all cues carry the same weight, Weighted Tallying weighs each cue according to its ecological validity. The ecological validity specifies the cue’s predictive power, that is, the frequency with which the cue successfully predicts the choice. 22 |
| Unit Weight Linear Model | Integration | Similar to Tallying, except that the assignment of values to option a and option b differs (see Gigerenzer and Goldstein 18 ). This algorithm has been considered a good approximation of weighted linear models. |
| Weighted Linear Model | Integration | Similar to the Unit Weight Linear Model and Tallying, except that the cue values of options a and b are multiplied by the cues’ ecological validities. |
| Multiple Regression | Integration | Accounts for the different validities of the cues: it assigns a weight to each cue that takes into account the covariance between the cues. |

¹Cues refer to the attributes of each option.
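The algorithms in Table 1 can be sketched for a paired comparison between options a and b. The Python below is illustrative only and assumes binary cue values (1 = positive, 0 = negative, no missing values); the function names and the `validity` and `memory` parameters are hypothetical. Take The Best, Take the Last and Minimalist share one search‐stop‐decide loop and differ only in the order in which cues are searched, while Tallying and Weighted Tallying integrate all cues. The Unit Weight and Weighted Linear Models and Multiple Regression are not sketched.

```python
import random
from typing import List, Optional, Sequence, Tuple

Profile = Sequence[int]  # one value per cue: 1 = positive, 0 = negative (assumed binary)


def _search(a: Profile, b: Profile, order: Sequence[int]) -> Tuple[Optional[str], Optional[int]]:
    """Shared loop: search cues in `order`, stop at the first discriminating cue, decide."""
    for i in order:
        if a[i] != b[i]:
            return ("a" if a[i] > b[i] else "b"), i
    return None, None  # no cue discriminates: the heuristic would have to guess


def take_the_best(a: Profile, b: Profile, validity: Sequence[float]) -> Optional[str]:
    # Search cues from most to least valid (a subjective ranking in the deliberation setting).
    order = sorted(range(len(a)), key=lambda i: validity[i], reverse=True)
    return _search(a, b, order)[0]


def take_the_last(a: Profile, b: Profile, memory: List[int]) -> Optional[str]:
    """`memory` holds every cue index, most recently discriminating first; updated in place."""
    choice, cue = _search(a, b, list(memory))
    if cue is not None:
        memory.remove(cue)
        memory.insert(0, cue)  # this cue is now the first to be tried next time
    return choice


def minimalist(a: Profile, b: Profile, rng: random.Random) -> Optional[str]:
    # Cues are searched in a random order; no validity knowledge is needed.
    return _search(a, b, rng.sample(range(len(a)), len(a)))[0]


def _larger(score_a: float, score_b: float) -> Optional[str]:
    if score_a == score_b:
        return None  # tie: guess
    return "a" if score_a > score_b else "b"


def tallying(a: Profile, b: Profile) -> Optional[str]:
    return _larger(sum(a), sum(b))  # every positive cue counts once


def weighted_tallying(a: Profile, b: Profile, validity: Sequence[float]) -> Optional[str]:
    # Each positive cue counts with its ecological validity instead of a unit weight.
    score_a = sum(v for x, v in zip(a, validity) if x)
    score_b = sum(v for y, v in zip(b, validity) if y)
    return _larger(score_a, score_b)
```

With a = [1, 0, 1, 0], b = [0, 1, 1, 1] and validities [0.9, 0.8, 0.7, 0.6], Take The Best chooses a on the single most valid cue, whereas Tallying and Weighted Tallying choose b, illustrating how lexicographic and integrating rules can disagree on the same information.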

Key theoretical constructs of the Take The Best heuristic and the Tallying algorithm guided the design of ‘your most important reason’ and ‘weighing it up’, respectively. The cognitive steps of each algorithm were isolated and translated into a graphic‐based interactive deliberation tool. The first mental step of the Take The Best algorithm 16 requires that all available attributes be ranked according to their importance with regard to the decision. In the second step, the attributes are reviewed in order of importance until one is found that discriminates between options. The Tallying algorithm 38 requires that all determining attributes for each option be summed; the option with the largest number of attributes is chosen, and all attributes have the same value or importance with regard to the decision.

The deliberation tools were developed over a period of 12 months in collaboration with a Web‐design company and a small group of researchers. Several prototypes were developed for each deliberation tool, and each new prototype was discussed and adapted. Because of recurrent technical and graphic design issues, informal piloting continued until the first usable prototype of each deliberation tool was ready for field testing.

The International Patient Decision Aids Standards (IPDAS) collaboration has highlighted the need to field‐test decision support interventions and their specific interactive components (e.g. deliberation tools). 39 Field testing is increasingly recognized as a necessary step in assessing and validating the quality and usability of interactive interventions and deliberation tools. 40 It is described as the ‘live’ testing of a prototype decision support intervention, in which the newly developed intervention or its components are shown to potential users, who comment on content and usability so that the prototype can be amended accordingly. 40

Prototype testing with researchers

The first working prototypes of the deliberation tools, ‘weighing it up’ version 1 and ‘your most important reason’ version 1, were piloted with a stakeholder group of researchers from multidisciplinary backgrounds (medicine, psychology, health psychology, sociology and health informatics). The invited sample (n = 15) consisted of two researchers specializing in shared decision making, eight specializing in health communication, two specializing in health informatics and three sociologists. A group interview was used to discuss each deliberation tool separately, and the researchers were asked to comment on the design and usability of each tool. The data were analysed using thematic content analysis. Version 1 of ‘weighing it up’ and ‘your most important reason’ was amended following the researchers’ comments to create the second working prototypes of the deliberation tools (i.e. version 2).

Prototype testing with health professionals

The planned sample of health professionals (n = 28) consisted of five consultants in obstetrics and gynaecology, a sonographer, a clinical nurse specialist, ten midwives, two geneticists, six coordinators of the national antenatal screening programmes in Wales, England and Scotland, a patient representative and two professionals from national charities offering information and support during the diagnostic phase of pregnancy (Antenatal Results and Choices, Down’s Syndrome Association). An email was sent to all 28 individuals asking them to review the deliberation tools online and to complete a short 18‐item questionnaire, giving written feedback on each item. The data were analysed using thematic content analysis. 41 For the purpose of this analysis, we focused only on the items addressing the deliberation tools. The deliberation tools were amended accordingly to produce version 3.

Prototype testing with women facing the amniocentesis decision

Pregnant women who had been offered an amniocentesis were invited to use amnioDex and the deliberation tools (version 3). In two antenatal clinics, midwives informed pregnant women (of any age) who had been offered an amniocentesis about the study, regardless of whether the offer followed screening tests for Down’s syndrome (maternal serum screening, nuchal translucency scan) or other indications (advanced maternal age, mid‐pregnancy ultrasound scan). Women who could not read English were excluded. Women who expressed interest gave consent and were given an interview date. Interviews with pregnant women considering amniocentesis testing were conducted in two phases and generated more data than the field tests with researchers and health professionals. First, participants were asked to use the deliberation tools while verbalizing their thoughts using the ‘think aloud’ method. 42 , 43 This method requires participants to voice their thoughts as they use the tools; it provides insight into the usability of the products and into how the steps required to navigate new technologies affect users’ cognitions and emotions. 44 , 45 , 46 Second, participants took part in a short semi‐structured interview. The interview schedule consisted of eight open‐ended questions focusing on women’s reactions to the deliberation tools, navigation of the website, comprehension of content and suggestions for improvements. The interview data were analysed qualitatively using a two‐step thematic content analysis derived from descriptive phenomenology, 41 , 47 , 48 assisted by the software ATLAS.ti (version 5.2). The deliberation tools were amended accordingly, and version 4 of ‘weighing it up’ and ‘your most important reason’ was developed.

Results

Prototype development

Key theoretical constructs of the Take The Best and Tallying algorithms guided the design of ‘your most important reason’ and ‘weighing it up’, respectively. To increase usability, the terminology was simplified: the term ‘attributes’ was replaced by ‘reasons’. The reasons displayed in both tools were selected from accounts provided in a detailed needs assessment reported separately. 49

‘Your most important reason’

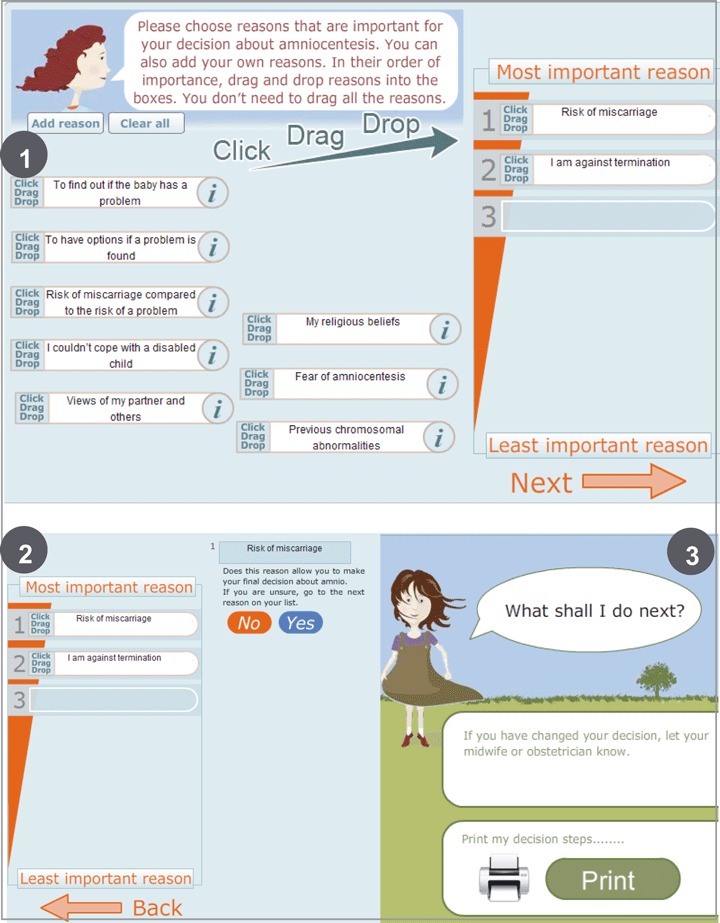

In ‘your most important reason’ version 1 (see Fig. 1), users were presented with a series of ‘important reasons’ considered influential in arriving at a decision to accept or decline amniocentesis. The reasons were displayed in boxes with clickable information buttons, and users could add further reasons. Users were asked to choose their ‘important reasons’ and to rank them in order of importance. The top‐ranked reason was then automatically selected, and a short question was generated: ‘Does this reason allow you to make your final decision about amniocentesis?’ Users who chose ‘yes’ were asked to indicate their decision and were offered suggestions for next steps, such as informing their health‐care provider, reading more about amniocentesis, printing their deliberation pathway or watching enacted quotations from women’s stories. Users who indicated indecision about amniocentesis were offered further advice and support (i.e. discussing decision difficulties with health professionals, using another deliberation tool, obtaining support from the Antenatal Results and Choices helpline or finding more information).

Figure 1.

Your most important reason version 1.
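
The flow of ‘your most important reason’ version 1 can be outlined as follows. This is a hypothetical Python sketch, not the amnioDex implementation: the `decides` callback stands in for the user's answer to the on‐screen question, and the reason texts and option labels are placeholders. The `reasons_to_check` parameter is an assumption; set to 1 it mirrors our reading of version 1, in which only the top‐ranked reason is queried, while larger values would step through further reasons in rank order, as the second step of Take The Best prescribes.

```python
from typing import Callable, Optional, Sequence

# Placeholder labels paraphrasing the next steps and support options described above.
NEXT_STEPS = (
    "inform your health-care provider",
    "read more about amniocentesis",
    "print your deliberation pathway",
    "watch women's stories",
)
SUPPORT = (
    "discuss decision difficulties with a health professional",
    "use another deliberation tool",
    "contact the Antenatal Results and Choices helpline",
    "find more information",
)


def most_important_reason(
    ranked_reasons: Sequence[str],
    decides: Callable[[str], Optional[str]],
    reasons_to_check: int = 1,  # hypothetical: 1 mirrors version 1 as described
) -> dict:
    """Query the user's reasons in rank order, highest first.

    `decides(reason)` stands in for the question 'Does this reason allow you to make
    your final decision about amniocentesis?' and returns 'accept', 'decline' or None.
    """
    for reason in ranked_reasons[:reasons_to_check]:
        decision = decides(reason)
        if decision is None:
            continue  # this reason does not settle the decision
        if decision not in ("accept", "decline"):
            raise ValueError(f"unexpected decision: {decision!r}")
        return {"decision": decision, "deciding_reason": reason, "offer": NEXT_STEPS}
    # Indecision: offer further advice and support instead of next steps.
    return {"decision": None, "deciding_reason": None, "offer": SUPPORT}
```

Keeping the user's answer behind a callback separates the deliberation logic from the interface, which is the part that field testing repeatedly changed.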

‘Weighing it up’

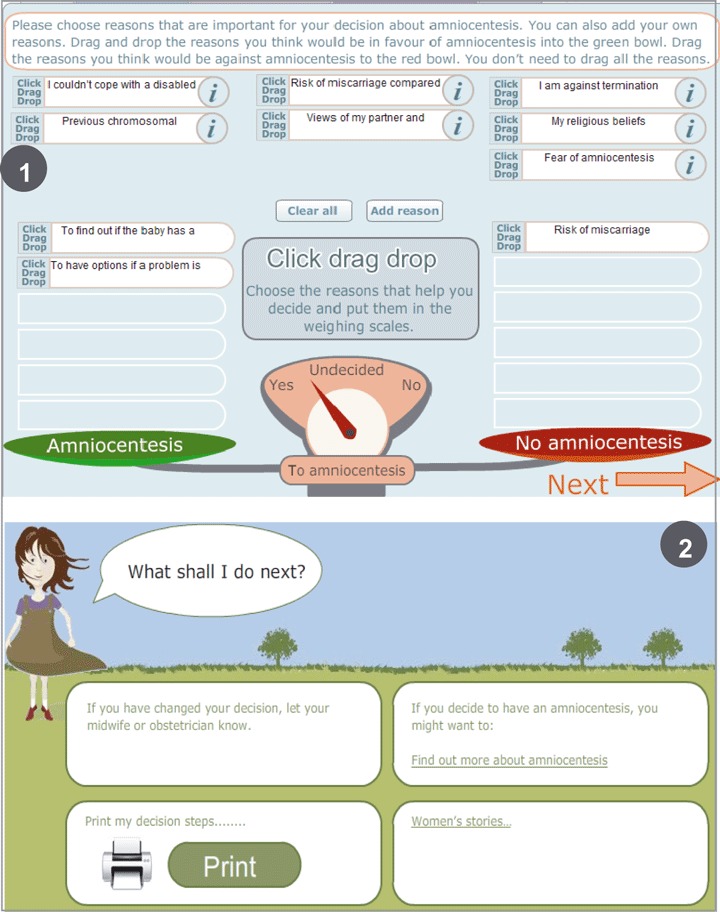

In ‘weighing it up’ version 1 (see Fig. 2), users were presented with the same series of ‘important reasons’ as in the previous deliberation tool and were asked to select the reasons relevant to their decision. When a reason was selected, a weight appeared on a weighing scale, indicating whether the reason acted in favour of or against having an amniocentesis. Users were then asked whether they had made a decision about amniocentesis. Users who chose ‘yes’ were asked to indicate their decision (yes or no to amniocentesis) and were offered suggestions for next steps. Users who indicated indecision about amniocentesis were offered further advice and support, as described previously.

Figure 2.

Weighing it up version 1.
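
The scale in ‘weighing it up’ follows the unit weighting of Tallying. The minimal Python sketch below assumes that each reason is pre‐labelled as acting for or against amniocentesis and that every selected reason adds one unit of weight; the encoding and the output labels are hypothetical. It records the position of the scale after each selection and reports which way the scale leans at the end.

```python
from typing import Iterable, List, Mapping, Tuple


def weigh_it_up(selected: Iterable[str], side: Mapping[str, str]) -> Tuple[List[int], str]:
    """Return the scale position after each selected reason and the final reading.

    `side` maps each reason to 'for' or 'against' amniocentesis (hypothetical encoding).
    Every reason carries the same unit weight, as in Tallying.
    """
    position, trace = 0, []
    for reason in selected:
        if side[reason] == "for":
            position += 1
        elif side[reason] == "against":
            position -= 1
        else:
            raise ValueError(f"reason {reason!r} has no side")
        trace.append(position)  # the scale moves after each selection
    if position > 0:
        reading = "leaning towards amniocentesis"
    elif position < 0:
        reading = "leaning against amniocentesis"
    else:
        reading = "balanced"
    return trace, reading
```

The running trace corresponds to the movement of the scale during deliberation, and the final reading to the direction in which it comes to rest.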

Prototype testing with researchers

Fifteen researchers were invited and 10 agreed to take part. Most researchers reacted positively to both deliberation tools and did not express a preference for either. Three researchers suggested presenting the reasons in two columns to distinguish between reasons for and against amniocentesis, as they believed this would help users differentiate between options. All remaining researchers felt that it was appropriate to display the reasons in a random order. The layout was kept unchanged, to maintain the random presentation of reasons and to avoid increasing the number of reasons displayed or altering the design. Most researchers considered that the instructions and textual content of ‘weighing it up’ and ‘your most important reason’ were not sufficiently clear and self‐explanatory. The textual content and instructions on the first page of each tool were amended to increase usability and meet the requirements of the Plain English Campaign. 50 , 51 Further, following a researcher’s suggestion to demonstrate how to use the deliberation tools, a short demonstration sequence (animated video clip) was added. All amendments were integrated into the second versions of the deliberation tools.

Prototype testing with health professionals

Twenty‐eight health professionals were invited, and nine agreed to review the website and the embedded deliberation tools (version 2). The sample consisted of two midwives, a consultant in obstetrics and gynaecology, five professionals from the national screening programmes in England, Scotland and Wales, and the director of a national charity (Antenatal Results and Choices). Five health professionals expressed concerns regarding the clarity and usability of the tools; for both tools, the instructions were judged unclear and confusing. Two professionals even questioned the need to integrate such tools into the website, as they feared the tools would confuse rather than help pregnant women. One of the nine professionals reported preferring ‘weighing it up’ to ‘your most important reason’. Four professionals considered that both deliberation tools would prove beneficial in clarifying women’s thoughts and facilitating decision making. Two professionals insisted on the need to watch the demonstration before using the tools and suggested integrating a mandatory demonstration into each deliberation tool. Following their comments, the textual content and instructions were amended to increase clarity and usability, and the demonstration button was made more prominent to encourage users to watch the short animated video first. These changes were incorporated into the third versions of the deliberation tools.

Prototype testing with women facing the amniocentesis decision

In the participating antenatal clinics, 14 pregnant women who had recently been offered an amniocentesis were invited to take part, and 10 agreed to be interviewed. Pregnant women used version 3 of the deliberation tools. Nine participants were interviewed by phone and one face to face. Pregnant women were interviewed between 4 and 20 days (mean 11 days) after the counselling session in which amniocentesis testing was offered and discussed. All pregnant women interviewed had already made a decision about amniocentesis testing: five decided to undergo amniocentesis and five declined the test. Interviews lasted between 17 and 75 min (mean 29 min). The mean age of the women in the sample was 36.7 years. The demographic characteristics of the participants are summarized in Table 2. Five themes were identified: benefits of the deliberation tools, disadvantages of the deliberation tools, difficulties using the deliberation tools, preferences for one tool over the other and suggestions for improvement (see Table 3).

Table 2.

Demographic characteristics of women who were interviewed (n = 10)

| Characteristic | n (unless stated otherwise) |
|---|---|
| Amniocentesis: accepted | 5 |
| Amniocentesis: declined | 5 |
| Maternal age (years) | Range 34–41; mean 36.7 |
| Gestational age (weeks) | Range 17–19; mean 18 |
| Marital status: married or engaged | 5 |
| Marital status: living with partner | 5 |
| Nationality: British | 9 |
| Nationality: other (Filipina) | 1 |
| Number of children: 0 | 2 |
| Number of children: 1 | 6 |
| Number of children: 2 | 2 |
| Existing children with a chromosome disorder | 0 |
| Obstetric history: previous miscarriage | 1 |
| Obstetric history: previous amniocentesis | 0 |

Table 3.

Themes identified in interviews with pregnant women

| Themes | Sub‐themes |
|---|---|
| Benefits of the deliberation tools | Decision‐making process facilitated |
| | Decision outcome visualized |
| | Increased clarity |
| | List of reasons |
| Preferences for one tool over the other | Advantages of weighing it up |
| Disadvantages of the deliberation tools | Complexity |
| | Incompatible with such an emotional decision |
| | Artificial process |
| Difficulties using the deliberation tools | Usability |
| | Understanding difficulties |
| | Technical difficulties |

All participants used both deliberation tools. In contrast to the views of health professionals, seven out of ten pregnant women found the deliberation tools helpful for weighing the pros and cons of options (n = 4), making a decision (n = 2), confirming the decision made (n = 2), providing a comprehensive list of reasons (n = 2) and generally facilitating understanding (n = 1).

It was good to do that and see that, for me, everything went towards the no, not having it. I mean, it [weighing it up] just helped me make the decision basically. It’s just nice to be able to make the decision by using different ways of doing it, just to understand it a little bit more. (F, age 37, declined amniocentesis)

Seven out of ten pregnant women felt the instructions were clear and the tools easy to use. The majority of pregnant women did not watch the demo, but nine out of ten women thoroughly read the instructions.

‘It was fine to use, dead simple!’ (F, age 34, undertook amniocentesis)

Two pregnant women considered the list of reasons helpful in clarifying their thoughts about amniocentesis testing. Although they already knew the main reasons for accepting or declining an amniocentesis, seeing the list laid out was deemed helpful in reaching a decision.

The best bit which helped compared to the leaflets was those little cartoons down at the bottom [‘weighing it up’ and ‘your most important reason’]. It’s got all the reasons that you were thinking of, in your brain, that were all messed up, so it lists it, so you know what those reasons are, you just couldn’t think straight at the time. (F, age 34, undertook amniocentesis)

Further, seven out of ten pregnant women expressed preferences towards ‘weighing it up’ over ‘your most important reason’.

‘I like the weighing scales. I found that one a little better to use, just because it’s more visible as you do it rather than the other one, you’ve got to wait till the end to know what the result is.’ (F, age 34, declined amniocentesis).

They felt that ‘weighing it up’ was more immediate, intuitive and helpful in visualizing the decision. They perceived the movements of the weighing scales as facilitating the trade‐off between options. Pregnant women considered that ‘weighing it up’ enabled them to visualize their decision‐making process (movements of the scales during deliberation) and the final outcome, as reflected by the arrow on the weighing scale: leaning towards amniocentesis or not.

I think the first one [weighing it up] was more immediate in, kind of, putting it visually in front of you, in making a decision and putting down the pros and cons. (F, age 37, declined amniocentesis)

Three pregnant women indicated that ‘your most important reason’ was more complex and instructions seemed less clear than ‘weighing it up’. Two out of ten pregnant women reported difficulties ranking their reasons in order of importance.

Three pregnant women out of ten found the tools unhelpful in making a decision about amniocentesis. They felt the tools were overly complex, too clinical and did not facilitate understanding.

I think it’s such an emotive subject. Would I like to actually physically weigh the pros and cons and things like that? No, because it feels too clinical. (F, age 35, undertook amniocentesis)

Three out of ten pregnant women reported difficulties understanding how to use the tools. They considered that the layout and design of the tools were too complex and experienced difficulties navigating the tools. They also felt that the tools required a high level of concentration that was not necessarily possible at this stage of the pregnancy.

Especially for people who are not working with computers, they’re going to find that hard. The thing is, sometimes when you’re pregnant, you are all over the place, do you see what I mean? My concentration is not as good.

Two out of ten women experienced difficulties dragging and dropping the boxes in the column (‘your most important reason’) or on the weighing scales (‘weighing it up’).

I am not sure about that [pointing to the click, drag and drop box] I find that quite complicated and I work on computers but I think until you’re familiar with it…I find that part quite difficult (F, age 37, undertook amniocentesis)

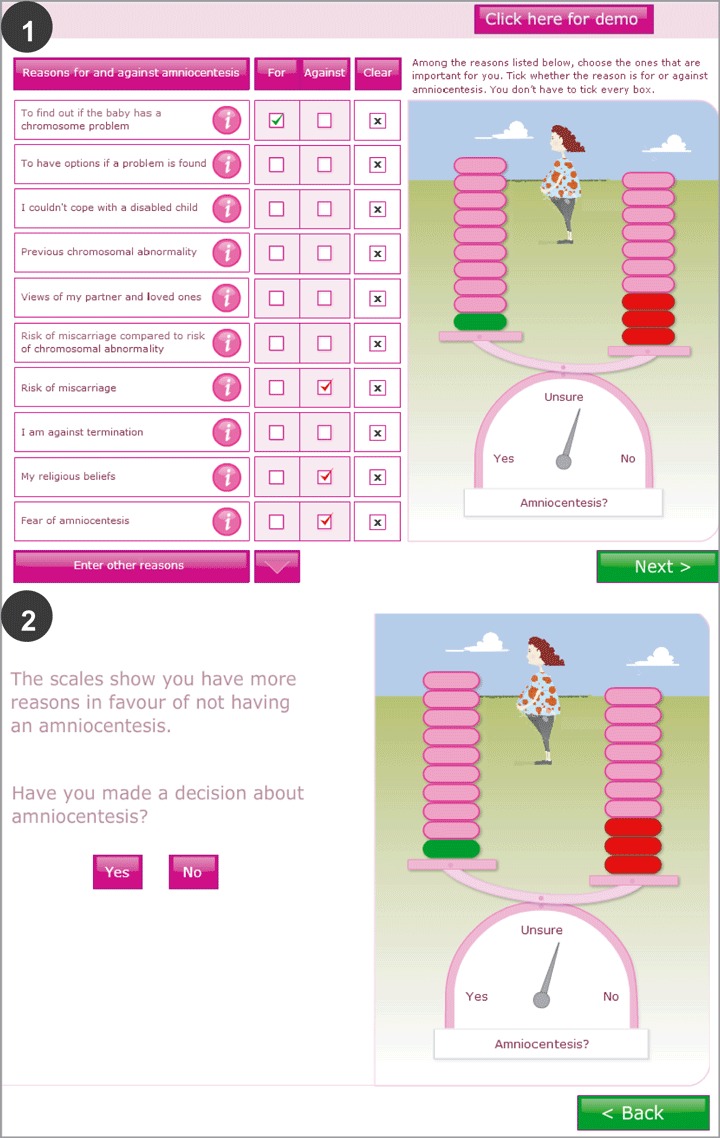

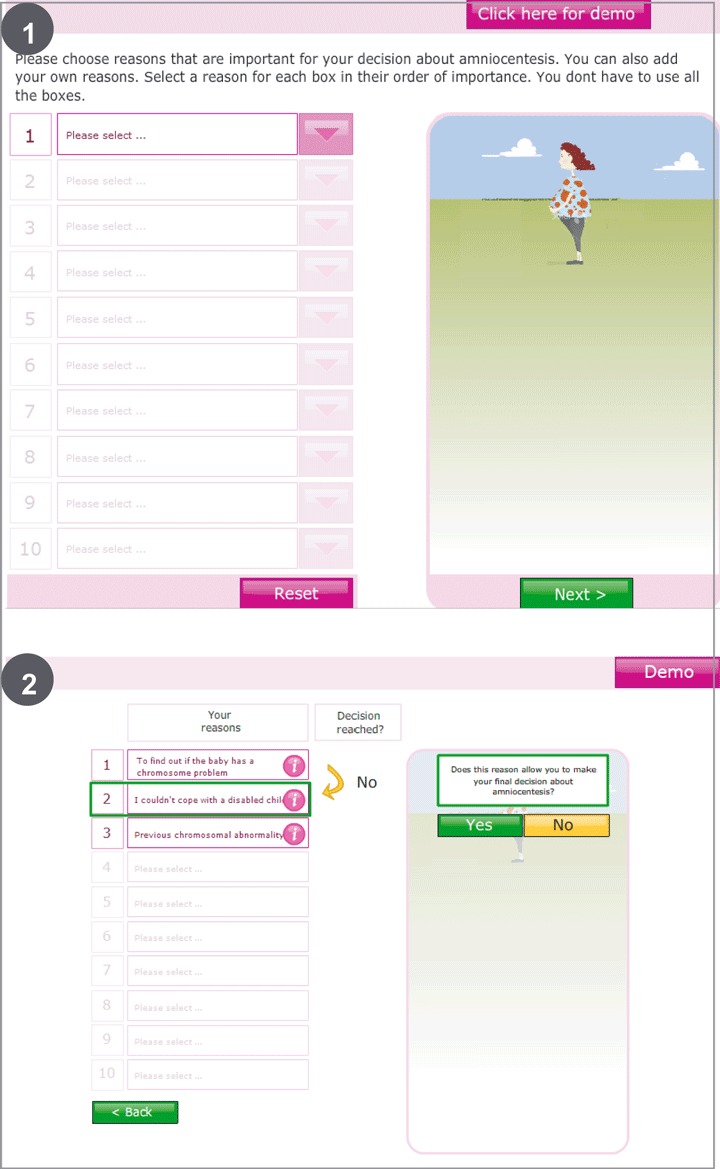

The deliberation tools were modified according to women’s comments. The ‘drag and drop’ action was replaced by a column in which users tick the reasons that apply to them. The overall design and layout of the tools were simplified to increase usability, and version 4 of the deliberation tools (final version) was created (see Figs 3 and 4).

Figure 3.

Weighing it up version 4.

Figure 4.

Your most important reason version 4.

Discussion

The present findings indicate that heuristic‐based algorithms can successfully guide the design of interactive deliberation tools although difficulties may occur when attempting to translate heuristic constructs into usable interactive methods. The evaluation of the deliberation tools differed across the stakeholder groups. Both tools were positively received by most researchers and pregnant women, while the majority of health professionals expressed concerns about the tools’ clarity and usability. While ‘your most important reason’ (Take The Best) was based on a simpler decision algorithm than ‘weighing it up’ (Tallying), the majority of women explicitly preferred ‘weighing it up’.

To the best of our knowledge, this study is the first to translate heuristic‐based algorithms (Take The Best and Tallying) into interactive deliberation tools. Our results suggest that the success of this translation largely depends on dealing effectively with the challenges the process generates. Translating abstract mental steps into an acceptable interface proved difficult for the Web designers and researchers involved in the development process; each mental step required extensive discussion and iterative modification. To comply with the principles of the fast and frugal heuristics programme, the tools had to remain simple and fast while mirroring each algorithm’s cognitive steps. For instance, representing graphically the second step of the Take The Best algorithm (in which attributes are reviewed in order of importance until one is found that discriminates between options) was difficult, because the step is abstract and ambiguous and lends itself to many possible graphic representations.

During the field test, health professionals and pregnant women raised practical issues with the tools. Only a minority of pregnant women reported concerns about the complexity of the deliberation tools (in particular, ‘your most important reason’). By contrast, complexity was a major concern for health professionals, presumably because the tools appraised by pregnant women (version 3) drew on health professionals’ suggestions for improvement and had consequently achieved higher usability. The divergent perceptions may also be attributed to differences between pregnant women’s and health professionals’ opinions, interests and information needs. Previous research revealed that health professionals had strong and often diverging opinions about the nature and quantity of information needed on topics such as the range of chromosomal abnormalities tested for, elective termination of pregnancy and which risk of miscarriage to quote. 52 Because both deliberation tools provided comprehensive information about potentially controversial topics (e.g. elective pregnancy termination), professionals may have feared that this type of resource would interfere with the information given during the medical consultation. Furthermore, processes or content that health professionals do not find particularly insightful or relevant (e.g. a clear list of reasons) may nonetheless have facilitated decision making among pregnant women. The structure and guidance provided by the deliberation tools may offer a form of decision support that health professionals do not necessarily recognize or consider helpful (e.g. visualizing the decision‐making process and outcome on the weighing scale). This gap between pregnant women’s and health professionals’ perceptions and interests has been documented in the literature and is consistent with the present findings. 53 , 54

While stakeholders and health professionals did not express clear preferences for one tool over the other, most pregnant women preferred ‘weighing it up’. Pregnant women felt that it offered a more immediate and intuitive way of weighing the pros and cons of amniocentesis and visualizing the decision, and the movement of the weighing scales was deemed helpful in making the trade‐off between options. With ‘your most important reason’, some women reported difficulty ranking the reasons in order of importance and understanding the instructions. According to the Take The Best algorithm, ranking cues in order of importance and finding a cue that discriminates between options should be fast and simple and should require limited cognitive effort. The translation of the algorithm into ‘your most important reason’ did not meet these principles. The present findings therefore point to a paradox: although the Take The Best heuristic is simpler and requires less cognitive effort than Tallying, its translation into ‘your most important reason’ proved more complex and less intuitive. This highlights the difficulty of translating abstract theoretical constructs into usable tools and the need to field‐test complex interventions before assuming that they are appropriate for, and usable by, patients.
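For contrast with Take The Best, Tallying (unit weighting of pros and cons) can be sketched in a few lines of Python. The sketch assumes each ticked reason favours exactly one option and counts as one unit, and it treats the option with the most ticked reasons as the side towards which a weighing scale would tip; that mapping to the ‘weighing it up’ interface is an assumption, and `tally` and the example reasons are hypothetical.

```python
from __future__ import annotations

from typing import Dict, Iterable, Optional, Tuple


def tally(
    ticked: Iterable[Tuple[str, str]]
) -> Tuple[Optional[str], Dict[str, int]]:
    """Weigh options with the Tallying heuristic (unit weights).

    Each ticked reason is (reason, option it favours) and adds exactly
    one unit to that option; no reason counts more than another.
    Returns the option with the most reasons (None on a tie or when
    nothing is ticked) together with the per-option counts.
    """
    counts: Dict[str, int] = {}
    for _reason, option in ticked:
        counts[option] = counts.get(option, 0) + 1  # every reason weighs 1
    if not counts:
        return None, counts
    top = max(counts.values())
    leaders = [option for option, n in counts.items() if n == top]
    return (leaders[0] if len(leaders) == 1 else None), counts


# Hypothetical ticked reasons, for illustration only.
ticked_reasons = [
    ("Knowing for certain about chromosomal conditions", "test"),
    ("Having time to prepare if a condition is found", "test"),
    ("Avoiding the miscarriage risk of the procedure", "decline"),
]
verdict, counts = tally(ticked_reasons)
print(verdict, counts)  # test {'test': 2, 'decline': 1}
```

Tallying uses every ticked reason but needs no ranking, whereas Take The Best depends on the user ordering reasons by importance, which is the step some women reported finding difficult.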

The development of heuristic‐based deliberation tools has not been documented in the literature. However, one study compared the effectiveness of a heuristic‐based decision aid (Take The Best) with a decision aid based on the analytic hierarchy process (a method derived from a normative theory of decision making) for the decision to undergo colorectal cancer screening. 55 The analytic hierarchy process decision aid described options and attributes and consisted of pairwise comparisons of all options and attributes. 56 The Take The Best version of the decision aid described options and attributes and asked users to select the most important attribute and identify the option that best satisfied it. Participants were asked to read one of three decision aids and indicate their current screening decision. The Take The Best decision aid predicted the final decision better than the analytic hierarchy process decision aid. Because that study does not describe how the Take The Best heuristic was translated into a practical intervention, a comparison with ‘your most important reason’ is not possible at this stage. Nevertheless, it suggests that heuristic‐based approaches can predict choices in the context of medical decision making.
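The difference in effort between the two approaches can be illustrated with a small sketch of how the analytic hierarchy process turns pairwise judgements into attribute weights. The sketch uses the row geometric‐mean approximation to the priority vector (Saaty’s original method uses the principal eigenvector) and is not necessarily the procedure used in the cited decision aid; the attributes and judgement values are hypothetical.

```python
import math
from typing import List, Sequence


def ahp_weights(pairwise: Sequence[Sequence[float]]) -> List[float]:
    """Approximate AHP priority weights from a reciprocal pairwise matrix.

    pairwise[i][j] states how much more important attribute i is than
    attribute j, with pairwise[j][i] == 1 / pairwise[i][j]. Uses the
    row geometric-mean approximation, normalised so weights sum to 1.
    """
    n = len(pairwise)
    geo_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def comparisons_needed(n_items: int) -> int:
    """Number of distinct pairwise judgements a user must make."""
    return n_items * (n_items - 1) // 2


# Hypothetical judgements on Saaty's 1-9 scale for three attributes.
attributes = ["accuracy", "procedure risk", "discomfort"]
judgements = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 3.0],
    [1 / 5, 1 / 3, 1.0],
]
for name, weight in zip(attributes, ahp_weights(judgements)):
    print(f"{name}: {weight:.2f}")
print("judgements required for 6 attributes:", comparisons_needed(6))
```

The number of judgements grows as n(n − 1)/2 with the number of attributes, while the Take The Best version described above asks only for the single most important attribute.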

Strengths and limitations

Strengths of the study were the innovative approach used to develop the deliberation tools and the diversity of the sample. As far as we could determine, heuristic‐based algorithms have never before been used to develop deliberation tools, and the present study is one of the rare attempts to translate theoretical constructs into usable components of decision support interventions. Furthermore, the deliberation tools were field‐tested with three different groups of users: stakeholders, health professionals and women considering amniocentesis testing. Major usability problems and comprehension difficulties are therefore likely to have been identified and addressed from all relevant viewpoints.

One limitation was that the iterative approach of the field test made comparisons difficult: the groups of users evaluated successive versions of the deliberation tools, which precluded direct comparisons between groups. Another possible limitation is the use of multiple data collection methods. However, given stakeholders’ and health professionals’ time constraints and the overall recruitment difficulties, adopting methods that were convenient for each group seemed essential and non‐negotiable.

Conclusions

The translation of theoretical constructs into graphic‐based deliberation tools is possible. However, the field test demonstrated that the tools’ usability depends heavily on the accuracy and feasibility of the translation. The ‘translation difficulties’ inherent in this process may be a major obstacle to designing theory‐based interventions. Successfully translating theory into practical interventions will require significant commitment from stakeholders and user groups to develop usable interventions collaboratively. There is scope for examining the translation issues associated with theory‐based interactive decision tools. At this stage, we cannot assume that one approach (Take The Best or Tallying) is superior to the other, and more research into the development and evaluation of theory‐based decision support interventions and their components is needed. The effect of the deliberation tools will be examined in an online randomized controlled trial of amnioDex, in which pregnant women will be randomized to either a minimum information version of amnioDex (excluding all deliberation tools) or a standard version that includes all deliberation tools. Web‐log data will also be recorded.

Funding

MA‐D’s PhD, of which this study was a part, was funded by the Sir Halley Stewart Trust, Cambridge, UK.

Acknowledgements

We thank the midwives at the antenatal clinics for their ongoing support, for approaching participants and for distributing information leaflets.

References

- 1. O’Connor AM, Bennett C, Stacey D et al. Do patient decision aids meet effectiveness criteria of the international patient decision aid standards collaboration? A systematic review and meta‐analysis. Medical Decision Making, 2007; 27: 554–574.

- 2. Dijksterhuis A, Bos MW, Nordgren LF, van Baaren RB. On making the right choice: the deliberation‐without‐attention effect. Science, 2006; 311: 1005–1007.

- 3. Wilson TD, Schooler JW. Thinking too much: introspection can reduce the quality of preferences and decisions. Journal of Personality and Social Psychology, 1991; 60: 181–192.

- 4. Elwyn G, Kreuwel I, Durand MA et al. How to develop web‐based decision support interventions for patients: a process map. Patient Education and Counseling, 2010; Epub ahead of print.

- 5. O’Connor AM, Stacey D, Entwistle V et al. Decision aids for people facing health treatment or screening decisions (Review). The Cochrane Library, 2006; 4: 1–110.

- 6. Estabrooks C, Goel V, Thiel E, Pinfold P, Sawka C, Williams I. Decision aids: are they worth it? A systematic review. Journal of Health Services Research & Policy, 2001; 6: 170–182.

- 7. Molenaar S, Sprangers MA, Postma‐Schuit FC et al. Feasibility and effects of decision aids. Medical Decision Making, 2000; 20: 112–127.

- 8. Bekker H, Thornton JG, Airey CM et al. Informed decision making: an annotated bibliography and systematic review. Health Technology Assessment, 1999; 3: 1–156.

- 9. Bowen DJ, Allen JD, Vu T, Johnson RE, Fryer‐Edwards K, Hart A. Theoretical foundations for interventions designed to promote informed decision making for cancer screening. Annals of Behavioral Medicine, 2006; 32: 202–210.

- 10. Elwyn G, Stiel M, Durand MA, Boivin J. The design of patient decision support interventions: addressing the theory‐practice gap. Journal of Evaluation in Clinical Practice, 2010; Epub ahead of print.

- 11. Durand MA, Stiel M, Boivin J, Elwyn G. Where is the theory? Evaluating the theoretical frameworks described in decision support technologies. Patient Education and Counseling, 2008; 71: 125–135.

- 12. O’Connor AM, Wells GA, Tugwell P, Laupacis A, Elmslie T, Drake E. The effects of an ‘explicit’ values clarification exercise in a woman’s decision aid regarding postmenopausal hormone therapy. Health Expectations, 1999; 2: 21–32.

- 13. Wilson TD, Lisle DJ, Schooler JW, Hodges SD, Klaaren KJ, LaFleur SJ. Introspecting about reasons can reduce post‐choice satisfaction. Personality & Social Psychology Bulletin, 1993; 19: 331–339.

- 14. Souchek J, Stacks JR, Brody B et al. A trial for comparing methods for eliciting treatment preferences from men with advanced prostate cancer: results from the initial visit. Journal of Medical Care, 2000; 2: 161–165.

- 15. Rutten‐van Molken MP, Bakker M, Hidding A, van Doorslaer EK, van der Linden S. Methodological issues of patient utility measurement. Journal of Medical Care, 1995; 33: 922–937.

- 16. Gigerenzer G, Goldstein DG. Reasoning the fast and frugal way: models of bounded rationality. Psychological Review, 1996; 103: 650–669.

- 17. Gigerenzer G, Todd PM, the ABC Research Group. Simple Heuristics that Make Us Smart. New York: Oxford University Press, 1999.

- 18. Dougherty MR, Franco‐Watkins AM, Thomas R. Psychological plausibility of the theory of probabilistic mental models and the fast and frugal heuristics. Psychological Review, 2008; 115: 199–213.

- 19. Kaplan BJ, Shayne VT. Unsafe sex: decision‐making biases and heuristics. AIDS Education and Prevention, 1993; 5: 294–301.

- 20. Brighton H, Gigerenzer G. How heuristics exploit uncertainty. In: Todd PM, Gigerenzer G, the ABC Research Group (eds) Ecological Rationality: Intelligence in the World. New York: Oxford University Press, in press.

- 21. Martignon L, Katsikopoulos KV, Woike JK. Categorization with limited resources: a family of simple heuristics. Journal of Mathematical Psychology, in press.

- 22. Beilock SL, Carr TH, MacMahon C, Starkes JL. When paying attention becomes counterproductive: impact of divided versus skill‐focused attention on novice and experienced performance of sensorimotor skills. Journal of Experimental Psychology: Applied, 2002; 8: 6–16.

- 23. Johnson JG, Raab M. Take the first: option generation and resulting choices. Organizational Behavior and Human Decision Processes, 2003; 91: 215–229.

- 24. Pitt MA, Myung IJ, Zhang S. Toward a method for selecting among computational models for cognition. Psychological Review, 2002; 109: 472–491.

- 25. Goldstein DG, Gigerenzer G. The recognition heuristic: how ignorance makes us smart. In: Gigerenzer G, Todd PM, the ABC Research Group (eds) Simple Heuristics that Make Us Smart. New York: Oxford University Press, 1999: 37–58.

- 26. Borges B, Goldstein DG, Ortmann A, Gigerenzer G. Can ignorance beat the stock market? In: Gigerenzer G, Todd PM, the ABC Research Group (eds) Simple Heuristics that Make Us Smart. New York: Oxford University Press, 1999: 59–72.

- 27. Geman SE, Bienenstock E, Doursat R. Neural networks and the bias/variance dilemma. Neural Computation, 1992; 4: 1–58.

- 28. Foster M, Sober E. How to tell when simpler, more unified, or less ad hoc theories will provide more accurate predictions. British Journal of Philosophical Science, 1994; 45: 1–35.

- 29. Brighton H. Robust inference with simple cognitive models. In: Lebiere C, Wray R (eds) Between a Rock and a Hard Place: Cognitive Science Principles Meet AI‐hard Problems. Papers from the AAAI Spring Symposium (AAAI Tech. Rep. No. SS‐06‐03, pp. 17–22). Menlo Park, CA: AAAI Press, 2006.

- 30. Fischer JE, Steiner F, Zucol F et al. Use of simple heuristics to target macrolide prescription in children with community‐acquired pneumonia. Archives of Pediatrics and Adolescent Medicine, 2002; 156: 1005–1008.

- 31. Pozen MW, D’Agostino RB, Selker HP, Sytkowski PA, Hood WB. A predictive instrument to improve coronary‐care‐unit admission practices in acute ischemic heart disease. The New England Journal of Medicine, 1984; 310: 1273–1278.

- 32. Gaudry P, Grange G, Lebbar A et al. Fetal loss after amniocentesis in a series of 5,780 procedures. Fetal Diagnosis and Therapy, 2008; 23: 217–221.

- 33. Tabor A, Philip J, Madsen M, Bang J, Obel EB, Norgaard‐Pedersen B. Randomised controlled trial of genetic amniocentesis in 4606 low‐risk women. Lancet, 1986; 7: 1287–1293.

- 34. Asch A. Prenatal diagnosis and selective abortion: a challenge to practice and policy. American Journal of Public Health, 1999; 89: 649–657.

- 35. Green JM, Hewison J, Bekker HL, Bryant LD, Cuckle HS. Psychosocial aspects of genetic screening of pregnant women and newborns: a systematic review. Health Technology Assessment, 2004; 8: 1–128.

- 36. Wegwarth O, Elwyn G. Fast & frugal models for designing decision support tools: helpful companions or bad underminers. 2009, submitted.

- 37. Gigerenzer G. Why heuristics work. Perspectives on Psychological Science, 2008; 3: 20–29.

- 38. Goldstein D. The less‐is‐more Effect in Inference. Unpublished Master’s Thesis: University of Chicago, Chicago, 1994.

- 39. Elwyn G, O’Connor A, Stacey D et al. Developing a quality criteria framework for patient decision aids: online international Delphi consensus process. BMJ, 2006; 333: 417.

- 40. Evans R, Elwyn G, Edwards A, Watson E, Austoker J, Grol R. Toward a model for field‐testing patient decision‐support technologies: a qualitative field‐testing study. Journal of Medical Internet Research, 2007; 9: e21.

- 41. Holloway I. Qualitative Research in Health Care. Maidenhead: Open University Press, 2005.

- 42. Cotton D, Gresty K. Reflecting on the think‐aloud method for evaluating e‐learning. British Journal of Educational Technology, 2006; 37: 45–54.

- 43. Davison GC, Vogel RS, Coffman SG. Think‐aloud approaches to cognitive assessment and the articulated thoughts in simulated situations paradigm. Journal of Consulting and Clinical Psychology, 1997; 65: 950–958.

- 44. Ericsson KA, Simon HA. Protocol Analysis: Verbal Reports as Data. Cambridge, MA: The MIT Press, 1984.

- 45. Fonteyn M, Fisher A. Use of think aloud method to study nurses’ reasoning and decision making in clinical practice settings. Journal of Neuroscience Nursing, 1995; 27: 124–128.

- 46. Funkesson KH, Anbacken EM, Ek AC. Nurses’ reasoning process during care planning taking pressure ulcer prevention as an example. A think‐aloud study. International Journal of Nursing Studies, 2007; 44: 1109–1119.

- 47. Denzin NK, Lincoln YS. Handbook of Qualitative Research. Thousand Oaks, CA: Sage Publications, 2000.

- 48. Pope C, Ziebland S, Mays N. Qualitative research in health care. Analysing qualitative data. BMJ, 2000; 320: 114–116.

- 49. Durand MA, Boivin J, Elwyn G. A review of decision support technologies for amniocentesis. Human Reproduction Update, 2008; 14: 659–668.

- 50. Plain English Campaign. How to write in plain English. Plain English Campaign.

- 51. Cutts M. The Plain English Guide. Oxford: Oxford University Press, 1995.

- 52. Durand MA, Stiel M, Boivin J, Elwyn G. Information and decision support need of parents considering amniocentesis: a qualitative study of pregnant women and health professionals. Health Expectations, 2009; in press.

- 53. Hunt LM, De Voogd KB, Castaneda H. The routine and the traumatic in prenatal genetic diagnosis: does clinical information inform patient decision‐making. Patient Education and Counseling, 2005; 56: 303–312.

- 54. St‐Jacques S, Grenier S, Charland M, Forest JC, Rousseau F, Legare F. Decisional needs assessment regarding Down syndrome prenatal testing: a systematic review of the perceptions of women, their partners and health professionals. Prenatal Diagnosis, 2008; 28: 1183–1203.

- 55. Galesic M, Cesario J, Gigerenzer G. Take‐the‐best approach to decision aids. 30th Annual Meeting of the Society for Medical Decision Making, Philadelphia, 2008.

- 56. Dolan JG, Frisina S. Randomized controlled trial of a patient decision aid for colorectal cancer screening. Medical Decision Making, 2002; 22: 125–139.