Abstract

Objective

To introduce a new online generic decision support system based on multicriteria decision analysis (MCDA), implemented in practical and user‐friendly software (Annalisa©).

Background

All parties in health care lack a simple and generic way to picture and process the decisions to be made in pursuit of improved decision making and more informed choice within an overall philosophy of person‐ and patient‐centred care.

Methods

The MCDA‐based system generates patient‐specific clinical guidance in the form of an opinion as to the merits of the alternative options in a decision, which are all scored and ranked. The scores for each option combine, in a simple expected value calculation, the best estimates available now for the performance of those options on patient‐determined criteria, with the individual patient's preferences, expressed as importance weightings for those criteria. The survey software within which the Annalisa file is embedded (Elicia©) customizes and personalizes the presentation and inputs. Principles relevant to the development of such decision‐specific MCDA‐based aids are noted and comparisons with alternative implementations presented. The necessity to trade‐off practicality (including resource constraints) with normative rigour and empirical complexity, in both their development and delivery, is emphasized.

Conclusion

The MCDA‐/Annalisa‐based decision support system represents a prescriptive addition to the portfolio of decision‐aiding tools available online to individuals and clinicians interested in pursuing shared decision making and informed choice within a commitment to transparency in relation to both the evidence and preference bases of decisions. Some empirical data establishing its usability are provided.

Keywords: decision aid, decision support, multicriteria decision analysis, patient preferences

Introduction: multicriteria decision making

Asked how they make a decision, health professionals, either individually or as part of a multidisciplinary medical team, will often say something like:

Together with the patient we look at the available options to see how well each performs on the main effect benefit, then take into account the side effect and adverse event harms, the burdens of the treatment and so on, finally weighing the benefits and harms and any other considerations to arrive at a conclusion as to the best option. We naturally bear in mind what the most recent relevant high quality guidelines have to say.

A patient responding to the same question will probably come up with something similar, albeit expressed in different words, such as ‘taking the pros and cons into account’ and ‘giving all the considerations due weight’.

Clearly, these are not accurate characterizations of all clinical decision‐making processes, but would seem to be reasonably descriptive of many. More importantly, they would certainly be common responses when the prescriptive question is asked: ‘How should a clinical decision be made?’

These sorts of statements indicate that we operate in a health‐care system where some form of shared decision making is accepted as the aim. The majority of health professionals routinely ‘talk the talk’ of informed choice and patient‐centred care, increasingly emphasizing ‘patient‐important outcomes’ as promoted by the Grading of Recommendations Assessment, Development, and Evaluation (GRADE) collaboration1 and the newly established Patient‐Centered Outcomes Research Institute (PCORI) (http://www.pcori.org) among many other individuals and groups. They do so with genuine conviction and intent, but find it more difficult to ‘walk the walk’2, 3 and even to agree on what the key steps should be in terms of pace, direction and support.

The presence of cultural and socio‐economic variations, together with great individual heterogeneity within cultures and classes, is at the heart of the challenge posed in pursuing shared decision making (and informed choice) within an overall philosophy of person‐ and patient‐centred care. The challenge to the professionals is mirrored by that of the individuals with whom they engage. All parties lack a simple and generic way to picture and communicate about the decisions that need to be made in health care. We seek to address this major handicap to progress towards all three goals: shared decision making, informed choice and person‐centred care. For convenience, the discussion is focused on the encounter between individual clinician and patient, but we regard our proposal as applying beyond the microclinical setting, to the meso‐ and macrolevels of health‐care decision and policymaking.

Two broad types of decision technology are compatible with shared decision making. The first is that captured in the opening quotes. As it takes some form of argumentation conducted in words, even if it refers to numbers as inputs, we feel an appropriate shorthand term for it is verbal multicriteria decision deliberation (MCDD). It embraces all forms of decision making that occur through deliberative processes, including those which are based on decision aids and support grounded in descriptive theories of human decision behaviour, usually involving descriptive theories of expert decision making.4 It dominates recent work in relation to shared decision making and patient‐centred decision support.5, 6, 7 MCDD is a useful term because it highlights the key similarities and differences with the alternative decision (and decision support) technology that we argue should be included in the portfolio of clinical decision‐making competencies of both health professionals and patients. This alternative is based on the well‐established, theoretically grounded, prescriptive technique of multicriteria decision analysis (MCDA).8 To make the comparison with verbal MCDD even clearer, we can imagine the adjective numerical preceding it.

In short, we are suggesting, along with Dolan,9 van Hummel and Ijzerman10 and Liberatore and Nydick,11 that numerical MCDA (hereafter simply MCDA) be added to the competency portfolio of all those involved in clinical decision making. We regard their studies as establishing that MCDA‐based clinical decision support systems can be successfully developed and deployed. However, despite the high quality of the efforts of these researchers, the implementation of MCDA‐based decision support in health care has been fairly limited – not that the success of MCDD‐based aids in routine practice has been spectacular to date.12, 13 The reasons for this undoubtedly include the limited usability and communicability of current software implementations of the MCDA technique; computerization is a necessary condition for applying MCDA, in contrast to MCDD. We therefore believe it reasonable to infer that an implementation which is superior in these respects will be more successful and can be regarded a priori as a workable clinical decision support system. But the reasons also trace back to the fundamentally different theoretical paradigm from which MCDA itself emanates, compared with that underlying current clinical practice and the majority of decision aids built for use within it (a comprehensive inventory of patient decision aids is available at http://decisionaid.ohri.ca/index.html). It is vital to keep this in mind in any attempted evaluation.

MCDD and MCDA: similarities and differences

There are two key similarities between the two broad modes of multicriteria decision making (MCDM), the umbrella term covering both. First, both imply that in every clinical decision, two sorts of judgement are needed: (i) on the performance of each of the available options on each of the multiple relevant considerations and (ii) on the relative importance of those multiple considerations. Second, both imply that these conceptually different types of input must be integrated/synthesized/combined in some way to arrive at a decision.

The key differences are reflected in the final words of the labels – deliberation and analysis – and in the preceding, implied adjectives – verbal and numerical. (In this paper, we include a graphical representation of data within the scope of the latter term.)

It might be asked why we characterize the distinction as a ‘verbal/numerical’ contrast, rather than a ‘qualitative/quantitative’ one. We do so because it is crucial to accept that MCDD is replete with the quantification of magnitudes. This quantification is simply done in predominantly verbal ways during the decision‐making parts of the discourse. This applies in relation to the performance magnitude judgements, for example, of different medications reducing the chance of pain, where terms such as ‘low probability’, ‘good chance’ and ‘very likely’ are used to characterize the chances of the criterion being met for this patient. It also applies to the relative importance judgements, for example, of the importance of pain reduction relative to medication side effects, where again a variety of terms such as ‘paramount’, ‘trivial’ and ‘major’ – or simply ‘very important’ and ‘not very important’ – are deployed.

The word analysis is used because the explicit aim in MCDA (and indeed in any version of decision analysis, including its cost‐effectiveness and cost‐utility forms) is to arrive at a result – an opinion is our preferred term – by a process of analytical calculation on the basis of numerical judgements. Of course, the process of arriving at those numerical judgements almost certainly involves extensive verbal, non‐numerical elements and hence deliberation, in the same way that the deliberative discourse of MCDD may contain many judgements of magnitudes, including some expressed numerically. Deliberation, on the other hand, is an interpersonal process where the provenance of the emerging conclusion inheres in the social process adopted and the participants involved in it. Unless the deliberation is structured as an MCDA,14 the conclusion cannot be detached from them in the form of a graphic summary, or equation, or set of numerical option scores.

A process that would benefit from the perceived strengths of each approach is an attractive prospect, and such a hybrid form has been implemented by Proctor and Drechsler in the context of environmental policy formation.14 A ‘stakeholder jury’ was used to structure an MCDA through a deliberative process and populate it with the help of experts. MCDD then followed as the final stage. The extensive time and resources involved, as well as the environmental policy context, make the empirical conclusions of limited relevance, but the hybrid case is well made in general. However, such a hybrid involves compromise from both sides, and this is not easy once it becomes clear that paradigmatic principles are involved, not merely syntactic or semantic differences that can be addressed by ‘translation’, for example, of verbal magnitude quantifiers into numerical ones. These paradigmatic differences need to be articulated before proceeding.

The objective of decision support is to improve decisions. This means establishing whether improvements can be made, identifying where improvements could be made and providing support that will lead to improvements. The concept of improvement means one cannot avoid being prescriptive. Purely descriptive approaches, which focus on describing how decisions are made – by individuals or by organizations, communities and other groups – provide no basis for change as they have no basis for identifying what would be an improvement.15 Purely normative approaches, which focus on establishing, without reference to how decisions are made, the fundamental principles and processes that an ideal decision maker would implement, are simply impractical.

On what basis can such desirable, potentially decision‐improving prescriptions for decision‐making processes be identified? There are two main possible bases.

One basis is provided by the normative principles of decision theory and decision analysis. Decision analysis is essentially the ideal processes of decision theory converted into processes that are practical, given the time, resource and cognitive constraints of the real world. Lipshitz and Cohen4 call prescription arrived at on this basis analysis‐based prescription, and this is exactly what we mean when we say MCDA‐based decision aids are ‘prescriptive’. They produce an opinion which reflects, as closely as practicable – for many reasons this may not be very close at all – the logical processes of an idealized decision maker. Interestingly, many people endorse these principles and processes when asked how a decision should be made, even when they do not follow them themselves.4

The other possible basis of prescriptions for improvement in decision making is the description of the decision processes of expert decision makers, in contrast to those of non‐expert decision makers. The former are defined as those whose decisions generally produce good results and outcomes. Identifying the differences between them – what makes the experts expert – can lead to what Lipshitz and Cohen call expertise‐based prescription. Despite the accuracy of this term, in the world of decision support the term ‘prescriptive’ is almost exclusively associated with the analysis‐based approach. Those who favour the expertise‐based approach prefer to characterize themselves as operating within a descriptive approach, which in many ways is true, even though, by definition, prescription is necessary to distinguish experts from non‐experts and good from not‐good results.16

Expertise‐based description/prescription has been virtually the only route to improvement in decisions considered professionally acceptable in clinical medicine, and this is reflected in the curricula of medical schools and in clinical practice. Decision analysis is rare to non‐existent in both the curricula of medical schools and in clinical practice. It is also the basis for the regular attacks on the ‘expected utility/value’ principle which underlies analysis‐based prescription. These critiques, most recently that of Russell and Schwartz,17 are always derived from the descriptive inadequacies of the expected value principle. But such inadequacies are ultimately irrelevant within a prescriptive paradigm because it is not derived from actual behaviour. As it is hard to conceive of unbiased cross‐paradigm evaluation, it is not surprising that this is never proposed in such critiques, which ultimately reflect the intuitive appeal of descriptive approaches that seek to ‘take into account’ the complex characteristics, history and contexts of the individual. The issue is not whether these inadequacies and complexities exist – are descriptively true – but whether a user would prefer to be supported by analysis‐based prescription or expertise‐based description. The ethical responsibility is to make clear the paradigmatic origins of the type of support offered.

The analysis‐based prescriptive approach has one compelling advantage in the provision of patient/person‐centred care and genuinely shared decision making. In its multicriteria form, decision analysis provides a generic approach to all decisions, that is, it is not condition specific and does not mandate the reasoning expertise and knowledge acquisition in the particular area (e.g. a disease) required to follow and share expertise‐based prescriptions. As long as expertise‐based prescription is the sole basis of the clinical encounter, patient empowerment will be a very difficult and demanding task. An MCDA‐based prescriptive approach allows the person/patient to input their preferences as importance weights for criteria in a straightforward manner and to have them transparently combined with the published evidence and the clinician's expertise.

Developing an MCDA‐based decision support system

This paper focuses on MCDA as an appropriate technique for facilitating person‐centred health care in relation to the adoption decision – deciding what to do given the available options. It is important to distinguish this decision from two other decisions where we also regard MCDA as an appropriate support technique. One is the quality decision – deciding how good the decision just taken was, given the decision technology used. An MCDA‐based instrument for measuring decision quality has been developed and is presented in J. Dowie, M. Kjer Kaltoft, G. Salkeld and M. Cunich (submitted). The other is the decision decision – deciding how to decide, given the available decision technologies. In our case, the decision decision is whether the adoption decision is to be made by the exercise of the health professional's ‘clinical judgment’, by some form of MCDD‐based decision making, or in conjunction with some type of MCDA‐based decision support. We return to this central, ‘meta’ question later.

A great number of software implementations of MCDA exist, reflecting both widely varying versions of the technique and particular judgements about the extent and type of complexity to be catered for and the time and cognitive resources required.18, 19 These range from implementations of SMART (Simple Multi‐Attribute Rating Technique) in a simple spreadsheet, through implementations of the analytic hierarchy process (AHP) executed either in a spreadsheet template or in a dedicated software package, notably Expert Choice (http://expertchoice.com/products-services/expert-choice-desktop/), to specific MCDA implementations such as V.I.S.A (http://www.visadecisions.com), HiView (http://www.catalyze.co.uk/index.php/software/hiview3/), Web‐Hipre (http://hipre.aalto.fi/) and Logical Decisions (http://www.logicaldecisions.com/). The latter two packages also contain an AHP option. The prime motivation for developing an MCDA decision support system in the form of Annalisa was that none of the existing implementations of MCDA had, despite proving themselves as clinical decision support systems, made significant progress in health care. That was the situation when Annalisa was first conceived and we feel it remains true now, despite the growing research in this area (see Dolan,9 Maarten Ijzerman and colleagues,20 and other examples cited in the Liberatore review).11 Most of the increasing use of MCDA in health care is at the policy and health technology assessment level; the recent developments within the EVIDEM framework and software, which are at the forefront,21, 22 are confined to this setting.

In deliberately implementing the simplest, compensatory ‘weighted‐sum’ version of the MCDA technique, we make no claim that it is innovative as a decision model. It is, in essential respects, an enhanced interface for any SMART‐type matrix, of the kind that can easily be developed in a spreadsheet. However, Annalisa seeks to provide enhanced interactive online usability by way of the numerous customizing and personalizing functionalities provided in the survey program Elicia, into which the Annalisa file is normally embedded. For example, Annalisa enables personalization of the performance ratings of options on criteria on the basis of patient characteristics and personalization of the weightings of the criteria by the patient at the point of decision.

Thus, the focus of this paper is not to re‐introduce MCDA or confirm its value as the basis for clinical decision support systems, but to introduce a particular software template, Annalisa, as a practical and person‐centred implementation for use at the individual level, not only in shared decision making, but also in the community, especially in relation to cancer and other disease screening decisions and policies.

The AHP has been the MCDA implementation used most widely in the clinical health‐care context and warrants special mention. In an extensive series of papers, James Dolan has expounded and investigated the ways it can contribute to both shared decision making and the wider issues involved in clinical decision support.8, 9, 23 However, in its standard form, AHP involves a level of complexity that imposes high demands on both the developers and implementers/users of AHP‐based support systems. Primarily responsible for this increased complexity is the hierarchical attribute structure which AHP permits and indeed encourages (hence its name) and the unique pairwise comparison method used to establish criteria importance weights and performance ratings, devised by its founder, Thomas Saaty.24 While this increased complexity can be seen as leading to a high level of performance by some standards of normative rigour and comprehensiveness,25 it creates the difficulties in the development and delivery of AHP‐based decision support26 that have hindered its wider dissemination.
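To make the difference in elicitation burden concrete, the following is a minimal sketch of one standard way AHP turns pairwise comparisons into importance weights: taking the principal eigenvector of a reciprocal comparison matrix, approximated here by power iteration. It assumes a single level of criteria and omits Saaty's consistency check; it is our illustration, not the algorithm of Expert Choice or any other package.

```python
# Illustrative sketch (our assumption, not code from any MCDA package):
# AHP priority weights as the principal eigenvector of a reciprocal
# pairwise comparison matrix, found by power iteration.


def ahp_weights(pairwise, iterations=1000, tol=1e-12):
    """Return importance weights summing to 1 from a pairwise matrix.

    pairwise[i][j] says how many times more important criterion i is
    than criterion j, with pairwise[j][i] == 1 / pairwise[i][j].
    """
    n = len(pairwise)
    weights = [1.0 / n] * n  # start from equal weights
    for _ in range(iterations):
        # Multiply the matrix by the current weight vector ...
        product = [sum(pairwise[i][j] * weights[j] for j in range(n))
                   for i in range(n)]
        total = sum(product)
        # ... and rescale so the weights again sum to 1.
        updated = [x / total for x in product]
        if max(abs(a - b) for a, b in zip(updated, weights)) < tol:
            return updated
        weights = updated
    return weights


def comparisons_needed(n_criteria):
    """Pairwise judgements AHP elicits for n criteria at one level."""
    return n_criteria * (n_criteria - 1) // 2


# A perfectly consistent matrix built from weights 0.5, 0.3 and 0.2.
matrix = [[1, 5 / 3, 5 / 2],
          [3 / 5, 1, 3 / 2],
          [2 / 5, 2 / 3, 1]]
print([round(w, 3) for w in ahp_weights(matrix)])
print(comparisons_needed(4), comparisons_needed(10))
```

For the four criteria of Figures 1 and 2 this means 6 pairwise judgements, and for Annalisa's maximum of 10 criteria it means 45, against 4 and 10 direct weightings respectively. Real AHP elicitation also has to handle inconsistent judgements, which this sketch ignores.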

French and Rios Insua27 propose five key characteristics that any ‘good’ implementation of decision analysis, single‐ or multicriteria, should possess. We use them, as summarized by Riabacke et al.,28 to highlight the basis of the claims of Annalisa in each respect.

Axiomatic basis. The axiomatic bases underlying Annalisa are those of decision theory. All the implementations of MCDA mentioned in this paper embody some version of the weighted‐sum principle, which is at the centre of decision theory. Annalisa implements this principle in a very simple way while retaining its essential features.

Feasibility. Feasibility has been the main driving force in the development of Annalisa. The template was explicitly designed to avoid the complexity – and resulting cognitive demands – that is possible in, and tends to be encouraged by, the increasingly sophisticated implementations within the other available packages.

Robustness. No provision for sensitivity analysis is built into Annalisa, but both the weightings and ratings can be directly varied and the effect on scores instantly observed.
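As Annalisa offers no built‐in sensitivity analysis, the kind of check a user makes by adjusting an input and watching the scores can be sketched separately. This minimal sketch varies one rating (the new treatment's side‐effect rating, taken from the illustrative example in Figures 1 and 2) and also solves for the value at which the two options tie. The weightings are hypothetical, since the text does not state those used in the figures, and the helper names are our own.

```python
# One-way sensitivity sketch for a weighted-sum model (not an Annalisa feature).
# Ratings follow the illustrative example of Figures 1 and 2; WEIGHTS are
# hypothetical, because the text does not state the figures' weightings.

RATINGS = {
    "new treatment": {"benefit": 0.70, "side effects": 0.20,
                      "adverse events": 0.90, "burden": 0.80},
    "current treatment": {"benefit": 0.50, "side effects": 0.50,
                          "adverse events": 0.90, "burden": 0.70},
}
WEIGHTS = {"benefit": 0.30, "side effects": 0.40,
           "adverse events": 0.15, "burden": 0.15}  # hypothetical


def score(ratings, weights):
    """Weighted sum of one option's ratings."""
    return sum(weights[c] * ratings[c] for c in weights)


def best_option(ratings_by_option, weights):
    """Name of the highest-scoring option."""
    scores = {opt: score(r, weights) for opt, r in ratings_by_option.items()}
    return max(scores, key=scores.get)


def vary_rating(option, criterion, value):
    """Copy of RATINGS with a single rating replaced."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("ratings must lie between 0 and 1")
    varied = {opt: dict(r) for opt, r in RATINGS.items()}
    varied[option][criterion] = value
    return varied


def break_even_rating(option, rival, criterion):
    """Rating of `option` on `criterion` at which it ties with `rival`.

    A score is linear in any single rating, with slope equal to that
    criterion's weight, so the tie point can be solved for directly.
    """
    w = WEIGHTS[criterion]
    rest = score(RATINGS[option], WEIGHTS) - w * RATINGS[option][criterion]
    return (score(RATINGS[rival], WEIGHTS) - rest) / w


for value in (0.2, 0.3, 0.4):
    varied = vary_rating("new treatment", "side effects", value)
    print(f"side-effect rating {value:.1f}: best = {best_option(varied, WEIGHTS)}")
print(break_even_rating("new treatment", "current treatment", "side effects"))
```

Under these assumed weightings the current treatment stays ahead until the new treatment's side‐effect rating rises above 0.3125. The same approach applies to any single weighting, provided the others are adjusted so that all still sum to 1.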

Transparency to users. In best practice implementation, the weighted‐sum principle is explained and illustrated not only before the user's interaction with Annalisa, but also during it where appropriate, for example, when and whether the individual panels (Weightings, Ratings, Scores) are opened or closed at different stages during engagement. Application of the weighted‐sum principle is manifestly transparent on any populated Annalisa screen.

Compatibility with a wider philosophy. This criterion relates to the model developed in the software rather than to its basic functionalities. It is up to the user of Annalisa to develop a model relevant to their context and setting. The embedding of Annalisa in the online survey program Elicia makes interactivity and cyclical iteration simple and efficient and leaves the amount of each entirely in the user's control.

The final point requires further development in the light of the increasing interest in the comparison and evaluation of decision aids on the basis of multiple criteria relating to development, performance, accessibility and impact.

Within its essential prioritization of simplicity over complexity, the functionalities provided by the Annalisa‐in‐Elicia software create the possibility of building decision aids that should perform well on most criteria of adaptability and personalization. According to the Eiring et al. coding scheme for the personalization of decision aids,29 the basic components of personalization are media content, user features, user model construction and representation and adaptive system behaviour. User features can broadly be classified into the user's knowledge level, interests, preferences, goals/tasks, background, individual traits and context. Adaptive system behaviours include adaptive navigation support, adaptive selection, organisation and presentation of content, adaptive search, adaptive collaboration and personalized recommendations. Used in conjunction with Elicia, any implementation of Annalisa should provide a medium to high degree of personalization in all these respects and hence compare favourably with the 10 of the initial 259 decision aids that were subject to detailed classification in the Eiring‐led study.

It is up to the developer of a decision support tool within this software to determine what degree and type of adaptational flexibility and personalization – to individuals or groups – is to be offered in terms of attributes (such as content, language, connectivity and presentation). It is also up to the developer to determine whether these are to be provided on an opt‐in or opt‐out basis. While there are limitations in all these respects compared with some ideal decision aid, a tool built in the Annalisa software is capable of technically matching, or possibly surpassing, any of the actual decision aids subjected to intensive analysis in the Eiring‐led project. Most differences that arise will not be for reasons of functionality, but will be traceable to their MCDA basis, because this is what influences what is offered and required – and how it is offered and required – by way of adaptation and personalization.

This point is worth emphasizing because it is important that a particular decision aid is assessed as an implementation of the underlying technique and philosophy within which it is built. A tool using Annalisa is based on the paradigm of analysis‐based prescription, as opposed to that of expertise‐based description within which all the other aids examined by Eiring et al. have been constructed. What is paramount in the development and use of a particular decision support tool or system is to make the potential user very clear about its underlying paradigm, so that questions concerning lower levels of functionality are addressed in relation to it. The appropriate evaluation of Annalisa‐based aids is therefore in comparison with other MCDA‐based ones, and such a comparison, involving Annalisa and AHP (using Expert Choice), carried out within HiView, has been undertaken by Pozo‐Martin (personal communication).

This makes the 2005 French and Xu30 survey of the five MCDA packages cited earlier – HiView, V.I.S.A, Web‐Hipre, Expert Choice and Logical Decisions – the most relevant available published comparison at a functional level. A survey of current decision analysis software, including full technical and operational details, is provided biennially by INFORMS. The 2012 survey results are available at http://www.orms-today.org/surveys/das/das.html. Fifteen packages offer some form of multicriteria DA, but this is a purely descriptive listing of information provided by 24 vendors, with no comments or assessments added. Of the five MCDA packages in the French and Xu comparison, V.I.S.A. does not appear.

French and Xu compared the five programs in terms of the aspects in which they differed, notably (using Annalisa terminology) decision structuring, weighting elicitation, rating elicitation, data presentation and sensitivity analysis.

When Annalisa, in conjunction with Elicia, is added to the comparison, two things stand out. First, the package fails to provide the vast majority of the functionalities and features that these five offer, considerably augmented in the 8 years since the survey. These functionalities and features are entirely appropriate where complex analysis is of benefit, such as in major projects with numerous stakeholders involved and large amounts of resources used, but even in such contexts the limited use of these packages either in practice or as the basis of decision support templates is noteworthy. And even where used, the complexity of the analysis is rarely matched in, or warranted by, the extensive deliberation that follows, as exemplified in a Swedish exercise in participatory democracy.31 Somewhat paradoxically it is the failure of Annalisa to provide alternative and/or more sophisticated and complex methods for key tasks (including determining the criteria and eliciting weights) that we regard as its positive virtue, because it will provide the potential for much wider use. The growth of product comparison websites and recommender systems within e‐commerce32, 33, 34, 35 is a clear sign that multicriteria analysis is eminently accessible to large sections of the population, but only at an appropriate level of complexity. William Buxton has pointed out that the speed of technological progress captured in Moore's Law (a technology generation is 18 months and decreasing) is in complete contrast to his ‘God's law’, which states that ‘the capacity of human beings is limited and does not increase over time – our neurons do not fire any faster, our memory doesn't increase in capacity, and we do not learn or think faster as time progresses’.36 The problem this creates for the evaluation of innovative and disruptive systems of all kinds is succinctly captured in Martin Buxton's law ‘It is always too early [for rigorous evaluation], until suddenly it's too late’.37

The second difference is closely associated with the pace of change in both the hardware and connectivity within which any MCDA software will operate. Both French and Xu and an earlier study by Belton and Hodgkin38 in 1999 saw three main settings for use of an MCDA package: Do‐It‐Yourself use by a single individual, an analyst‐facilitated group meeting, and ‘off‐line’ analysis by a consultant sandwiched between face‐to‐face meetings with decision makers. Subsequent developments in communication technology and connectivity mean that there are now many more possibilities, including one in which a pre‐structured (options, criteria) and evidence‐populated MCDA‐based decision aid is made available online. Decision makers then need only to have their preferences (utilities, importance weights) elicited to obtain an opinion on the merits of the alternative options. In terms of data presentation and display, as well as interactivity, Annalisa benefits from being developed specifically for online use within the latest technology. Now written in HTML5, with tablet use prominently in mind, it has mobile presence on touch‐operated devices using iOS, Android and other operating systems. Such interactive mobile accessibility is likely to trump most other functional considerations in the coming years, making a level of complexity compatible with such operation a paramount consideration.

Annalisa was designed to embody the following practical principles:

It should be possible to undertake an analysis within a very short time, such as the 5–10 min often available in time‐ and resource‐pressured situations, so that the possible benefits of even a modicum of ‘slow thinking’ are not lost.39 This was in no way, of course, intended to prevent weeks or months being devoted to generating the detailed structure and inputs – if the time and other resources are available.

Irrespective of the time available at the point of decision (and therefore including 5–10 min), the decision owner should not be asked to make the necessary trade‐offs among more than 7 ± 2 criteria.40, 41 (Annalisa actually has a maximum of 10.)

All the elements of the decision (preferences and evidence) and the outcome (best option) should be simultaneously visible on the screen, providing a complete picture of all elements of the decision and with the effects of changing any weighting or rating dynamically visible in real time.

Pop‐ups on the screen should provide access to additional information, especially the provenance of the option performance ratings (including external links where appropriate).

Giving higher weight to practical considerations, Annalisa adopts the simplest and most colloquially familiar form of MCDA. In the decision matrix ‘weighted‐sum’ approach, all attributes exist at the same level (there is no hierarchy of criteria and sub‐criteria); the performance of each option is directly rated on each attribute; the importance of each attribute is directly weighted in relation to that of all the other attributes; and the option Scores are calculated by summing an option's ratings on the attributes multiplied by the attribute weightings.
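In symbols, using our own notation rather than any given in the original, let n be the number of criteria (at most 10 in Annalisa), w_i the importance weighting of criterion i and r_ij the rating of option j on criterion i. The Score of option j is then:

$$
S_j = \sum_{i=1}^{n} w_i \, r_{ij}, \qquad \text{with} \quad \sum_{i=1}^{n} w_i = 1, \quad 0 \le w_i \le 1, \quad 0 \le r_{ij} \le 1 .
$$

Because the weightings are non‐negative and sum to 1, each Score is a weighted average of the option's ratings and so also lies between 0 and 1.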

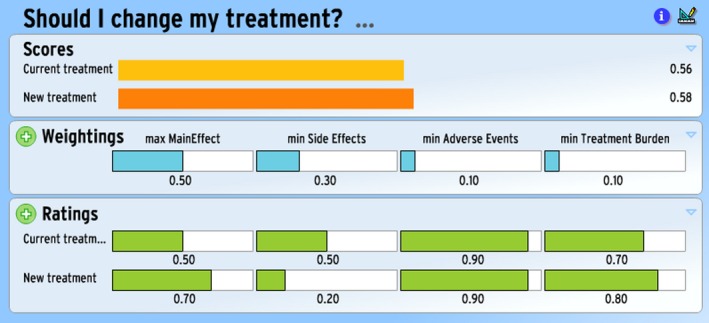

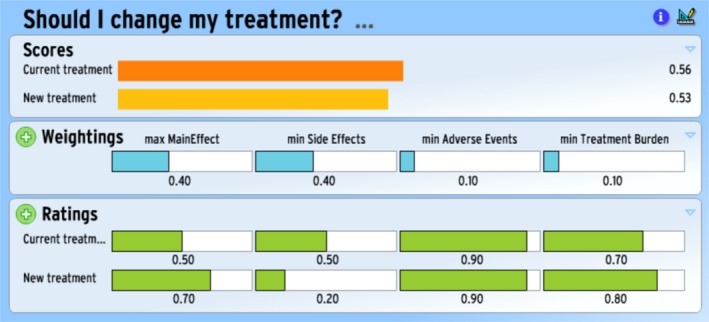

An illustrative example of a completed Annalisa screen is provided in Figures 1 and 2. These might be seen either as the screens for two different patients or as those of the same patient at two points in time (where Fig. 1 is produced at Time 0 and Fig. 2 at the next encounter, i.e. Time 1). In the ratings panel of both, we can see that new treatment is better than current treatment at maximizing the main effect benefit (0.70 vs. 0.50) and at minimizing treatment burden (0.80 vs. 0.70), but is worse at minimizing side effects (0.20 vs. 0.50). (Longer bars mean the particular option does better.) The two are equally good at minimizing adverse events (both 0.90).

Figure 1.

Example of Annalisa for hypothetical Patient 1 at Time 0.

Figure 2.

Example of Annalisa for hypothetical Patient 1 at Time 1.

Given the relative weightings of the four attributes in Fig. 1, new treatment emerges with the highest score in a simple expected value calculation.

Figure 2 presents the scores when the weight assigned to minimizing side‐effect harm is increased, with a correspondingly reduced weight for maximizing main effect benefit. (The weightings for the set of attributes must sum to 1.) Current treatment now has the highest score, and so it is the option favoured in the opinion emerging from Annalisa.
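The switch between Figs 1 and 2 can be reproduced from the ratings quoted above. The text does not report the weightings shown in the figures, so the weights below are hypothetical values, chosen only so that new treatment scores highest at Time 0 and current treatment scores highest once weight is moved from main effect benefit to side effects, as in the figures. The attribute keys and the function names `option_scores` and `best_option` are likewise our own.

```python
# Ratings from the ratings panel of Figs 1 and 2 (0..1; higher means the
# option does better on that attribute).
RATINGS = {
    "New treatment": {
        "main_effect": 0.70, "burden": 0.80, "side_effects": 0.20, "adverse_events": 0.90,
    },
    "Current treatment": {
        "main_effect": 0.50, "burden": 0.70, "side_effects": 0.50, "adverse_events": 0.90,
    },
}


def option_scores(weights):
    """Weighted-sum score for each option; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return {
        option: sum(w * ratings[attr] for attr, w in weights.items())
        for option, ratings in RATINGS.items()
    }


def best_option(weights):
    """Name of the highest-scoring option under the given weights."""
    scores = option_scores(weights)
    return max(scores, key=scores.get)


# HYPOTHETICAL weights: the paper does not report the weights in the figures.
time0 = {"main_effect": 0.40, "burden": 0.20, "side_effects": 0.20, "adverse_events": 0.20}
# Time 1: move 0.15 of weight from main effect benefit to side effects.
time1 = dict(time0, main_effect=0.25, side_effects=0.35)

print(best_option(time0))  # prints New treatment
print(best_option(time1))  # prints Current treatment
```

With these hypothetical weights, new treatment leads 0.66 to 0.62 at Time 0 and trails 0.585 to 0.62 at Time 1; current treatment's score does not move because it is rated 0.50 on both attributes involved. Since the two options differ by +0.20 on main effect benefit and −0.30 on side effects, each unit of weight shifted from the first attribute to the second lowers new treatment's advantage by 0.5, so in this example the ranking flips once more than 0.08 of weight has been moved.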

From wherever and however they are derived, both the ratings and the weightings entered into Annalisa are treated as measures on a ratio scale running from a (true) zero to 1 or 100%. Zero on the ratings scale means either zero probability (literally, and in many cases logically, no chance) or zero fulfilment of the attribute concerned; 1 means 100% probability or complete fulfilment. Similarly, zero on the weightings scale means of no importance whatsoever, and 1 means all‐important to the exclusion of all other attributes.
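The scale conventions above (ratings and weights on a 0 to 1 ratio scale, weights summing to 1), together with the maximum of 10 attributes noted earlier, can be expressed as simple input checks. The sketch below is illustrative: `validate_inputs`, `normalize` and `MAX_CRITERIA` are hypothetical names, and rescaling raw importance points into weights is one common way of obtaining weights that sum to 1, not necessarily how Annalisa elicits them.

```python
import math

# Annalisa's stated maximum number of attributes (criteria).
MAX_CRITERIA = 10


def normalize(raw_importance):
    """Rescale non-negative raw importance points so that they sum to 1.

    Illustrative assumption only: one common way of turning raw importance
    judgements into weights on the 0..1 scale.
    """
    if any(v < 0 for v in raw_importance.values()):
        raise ValueError("importance points must be non-negative")
    total = sum(raw_importance.values())
    if total <= 0:
        raise ValueError("at least one attribute needs positive importance")
    return {attr: v / total for attr, v in raw_importance.items()}


def validate_inputs(weights, ratings, tol=1e-9):
    """Raise ValueError if weights or ratings break the 0..1 ratio-scale rules.

    weights: {attribute: weight}, each in [0, 1], summing to 1.
    ratings: {option: {attribute: rating}}, each rating in [0, 1],
             with every option rated on exactly the weighted attributes.
    """
    if len(weights) > MAX_CRITERIA:
        raise ValueError(f"at most {MAX_CRITERIA} attributes, got {len(weights)}")
    for attr, w in weights.items():
        if not 0.0 <= w <= 1.0:
            raise ValueError(f"weight for {attr!r} outside [0, 1]: {w}")
    total = sum(weights.values())
    if not math.isclose(total, 1.0, abs_tol=tol):
        raise ValueError(f"weights must sum to 1, got {total}")
    for option, by_attr in ratings.items():
        if set(by_attr) != set(weights):
            raise ValueError(f"{option!r} must be rated on exactly the weighted attributes")
        for attr, r in by_attr.items():
            if not 0.0 <= r <= 1.0:
                raise ValueError(f"rating of {option!r} on {attr!r} outside [0, 1]: {r}")


# Usage with hypothetical attributes: 3 points for benefit, 1 for burden.
weights = normalize({"benefit": 3, "burden": 1})
print(weights)  # prints {'benefit': 0.75, 'burden': 0.25}
validate_inputs(weights, {"Option A": {"benefit": 0.6, "burden": 0.4}})  # passes silently
```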

The choice of the simple weighted‐sum approach, among many other decisions in the design and development of the Annalisa template, was made in the light of our value judgements as to the weight to be assigned to particular considerations. Pre‐eminently, we have assigned high weights to relevant practical considerations in both development and delivery, in full awareness that these may lead to poorer ratings on other criteria, notably those concerned with normative rigour. We do not see rigour/relevance and practicality/normativity as dichotomies requiring binary choices, but as matters of weighting, and hence as preference‐sensitive. Annalisa, like any implementation of MCDM (including MCDD), embodies a particular view as to the criteria and weights to be used in ‘deciding how to decide’. This point continues to apply even when choosing among the candidates within the field of MCDA.

Deciding how to decide: the decision decision

Given that a patient faces multiple options and regards multiple criteria as relevant to choosing among them, should they stick with MCDD, the currently dominant decision technology, or move to MCDA, at least as a decision support technology?

As discussed earlier, the two basic forms of MCDM and their many internal variations differ in important ways, as well as having key similarities. But from the clinical decision‐making standpoint, we should not be thinking of making a choice between them at some general and abstract level. It hardly makes any sense to ask whether MCDD or MCDA is better in general as a technique. Neither is it particularly useful to ask whether AHP/Expert Choice or weighted‐sum/Annalisa is a ‘better’ template implementation of MCDA. We need rather to focus on particular instantiations of each technique template in a tool for a particular clinical decision setting, where ‘setting’ embraces such things as the organization, the professional, the patient and the condition involved.

This implies we need to establish the decisional criteria relevant to a setting. These criteria will probably include such higher‐level considerations as evidential strength and coverage, theoretical grounding, explicitness, precision, transparency, communicability and potential for social or institutional biasing. But given that this is clinical decision support, they should also include the basic resource requirements, such as the time and cognitive effort and commitment required from all parties, as well as any financial implications for them.

It can be taken for granted that the performance of particular implementations of MCDD and MCDA will vary on these criteria, not least because of conscious value judgement‐based trade‐offs regarding the selection and weighting of the criteria made by individual parties in the case of MCDD and by the developers and implementers in the case of MCDA‐based decision support. For example, an MCDA‐based aid – or MCDD‐based appointment – designed to take no more than 20 min will (should) perform less well on a criterion such as ‘coverage of the evidence’ than one assumed to have 40 min at its disposal. The various interactive decision support systems we are developing all allow customization of the support process to the time and other resources available, as well as personalization of the weightings by, and ratings for, the specific patient on the selected criteria. They explicitly assume, indeed emphasize, that such customization choices will impact on which aspects of the decision support will be accessed and that the personalization of weightings will affect the outcome (opinion) emerging from the analysis.

Thus, given that the decision on what decision procedure or decision support system to adopt involves multiple criteria and is therefore preference‐sensitive, it does not make sense to ask whether Annalisa has been, or ever can be, shown to work in some overall or average sense as the basis of a clinical decision support system. The answer will vary as a function of the particular decision maker's preferences in the particular context, as well as the quality of the instantiation. Empirically, we can note that in a study with a small number of Australian GPs, 80% agreed that the demonstrated Annalisa‐based tool for prostate cancer screening would be useful in discussions with their patients, and half thought it would be useful and could be recommended for use in decisions on any health matter.42 Pozo‐Martin has recently established the preference sensitivity of decision support evaluation in a comparison of Annalisa and the Analytic Hierarchy Process for developing and delivering decision support for patients with advanced lung cancer in some Spanish hospitals.43 Finally, while it is not appropriate to report the full set of results of an RCT involving Annalisa‐based decision aids for PSA testing here, Table 1 provides information on the age of participants and their ratings on various criteria, such as difficulty in responding to the key items on criteria weighting, that confirm its accessibility.

Table 1.

Age of participants and individual ratings on criteria relating to usability of Annalisa decision aids for PSA testing

| | All, n = 1447 | | Annalisa for PSA Testing with a Fixed Set of Attributes Chosen by Researchers, n = 727 (50.2%) | | Annalisa for PSA Testing with Attributes Chosen by Study Participants, n = 720 (49.8%) | |
|---|---|---|---|---|---|---|
| | n | % | n | % | n | % |
| Age | | | | | | |
| 40–49 years | 591 | 40.8 | 282 | 38.8 | 309 | 42.9 |
| 50–59 years | 487 | 33.7 | 248 | 34.1 | 239 | 33.2 |
| 60–69 years | 369 | 25.5 | 197 | 27.1 | 172 | 23.9 |
| Difficulty deciding on the Weights for Criteria | | | | | | |
| Very difficult | 93 | 6.4 | 52 | 7.2 | 41 | 5.7 |
| Fairly difficult | 282 | 19.5 | 140 | 19.3 | 142 | 19.7 |
| Neither difficult nor easy | 466 | 32.2 | 235 | 32.3 | 231 | 32.1 |
| Fairly easy | 433 | 29.9 | 209 | 28.8 | 224 | 31.1 |
| Very easy | 173 | 12.0 | 91 | 12.5 | 82 | 11.4 |
| Difficulty entering the Weights for Criteria | | | | | | |
| Very difficult | 100 | 6.9 | 55 | 7.6 | 45 | 6.3 |
| Fairly difficult | 323 | 22.3 | 159 | 21.9 | 164 | 22.8 |
| Neither difficult nor easy | 467 | 32.3 | 242 | 33.3 | 225 | 31.3 |
| Fairly easy | 392 | 27.1 | 184 | 25.3 | 208 | 28.9 |
| Very easy | 165 | 11.4 | 87 | 12.0 | 78 | 10.8 |
| Contents of Evidence panel met needs for information | | | | | | |
| Very well | 362 | 25.0 | 163 | 22.4 | 199 | 27.6 |
| Well | 847 | 58.5 | 433 | 59.6 | 414 | 57.5 |
| Not very well | 238 | 16.5 | 131 | 18.0 | 107 | 14.9 |

Given the resource requirements of decision making and decision supporting, and hence their opportunity costs, we suggest there is a strong case for approaching ‘deciding how to decide’ analytically, as an exercise in ‘decision resource–decision effectiveness analysis’. This simply parallels, for the decision decision (should we adopt this or that way of deciding whether, for example, to adopt this new drug or device technology?), the use of conventional cost‐effectiveness analysis for the adoption decision (should we, for example, adopt this new drug or device technology or not?). As implied above, both the numerator and the denominator in decision resource–decision effectiveness analysis are appropriately conceptualized as multicriterial indexes. It follows that MCDA is the appropriate analytical technique for decision resource–decision effectiveness analysis.
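As a rough illustration of this analogy, the sketch below builds a resource index and an effectiveness index as weighted sums and compares two ways of deciding with an incremental ratio, modelled on the incremental cost‐effectiveness ratio. Almost everything here is an assumption: the criteria are drawn loosely from those listed earlier (time, cognitive effort, financial implications, evidential coverage, transparency, explicitness), but the weights, the ratings, the two ‘ways of deciding’ and the function names are hypothetical, and the paper does not specify how the two indexes should be constructed or combined.

```python
def weighted_index(weights, values):
    """Weighted-sum index: sum over criteria of weight * value (weights sum to 1)."""
    return sum(w * values[k] for k, w in weights.items())


# HYPOTHETICAL criteria weights, for illustration only.
RESOURCE_WEIGHTS = {"time": 0.5, "cognitive_effort": 0.3, "financial": 0.2}
EFFECTIVENESS_WEIGHTS = {"evidence_coverage": 0.4, "transparency": 0.3, "explicitness": 0.3}

# HYPOTHETICAL 0..1 ratings for two ways of deciding.
# For resource criteria, a higher value means MORE of that resource is used.
WAYS = {
    "20-min aid": {
        "resources": {"time": 0.3, "cognitive_effort": 0.4, "financial": 0.2},
        "effectiveness": {"evidence_coverage": 0.5, "transparency": 0.8, "explicitness": 0.7},
    },
    "40-min aid": {
        "resources": {"time": 0.6, "cognitive_effort": 0.6, "financial": 0.4},
        "effectiveness": {"evidence_coverage": 0.8, "transparency": 0.8, "explicitness": 0.8},
    },
}


def indexes(way):
    """Return (resource index, effectiveness index) for one way of deciding."""
    data = WAYS[way]
    return (
        weighted_index(RESOURCE_WEIGHTS, data["resources"]),
        weighted_index(EFFECTIVENESS_WEIGHTS, data["effectiveness"]),
    )


def incremental_ratio(baseline, alternative):
    """Extra resource index per extra unit of effectiveness index (ICER-style)."""
    r0, e0 = indexes(baseline)
    r1, e1 = indexes(alternative)
    if e1 == e0:
        raise ValueError("equal effectiveness: ratio undefined")
    return (r1 - r0) / (e1 - e0)


print(round(incremental_ratio("20-min aid", "40-min aid"), 2))  # prints 1.67
```

Read like an incremental cost‐effectiveness ratio, the longer format in this made‐up example uses about 1.67 units of the resource index per unit of effectiveness index gained; whether that is worth it remains a preference‐sensitive judgement.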

Conclusion

A template designed to facilitate generic online multicriteria decision support in person‐centred health care is presented in this paper as a valid addition to the portfolio of decision support systems available to clinicians and their patients.

It is essential that any comparative evaluation of decision support systems makes the theoretical basis of each aid and process very clear to all respondents and decision stakeholders. In the context of person‐centred care, this comparison will involve multiple criteria, of which the paradigmatic basis of the aid or process is a crucial one. The choice will be preference sensitive, with the weighting sometimes leading to an instantiation of multicriteria decision deliberation emerging as the best way of deciding and at other times to an implementation of multicriteria decision analysis. Ultimately whether Annalisa and similar templates have a role to play in person‐centred care is not a question with a binary answer. The empirical question, which will need to be iteratively asked and re‐asked as technology and attitudes change, concerns the precise roles it can play in the increasingly complex world of translational health. This is what we are researching.

Sources of Support

The contribution of Professor Salkeld and Dr Cunich was supported by the Screening and Diagnostic Test Evaluation Program (STEP), funded by the National Health and Medical Research Council of Australia under program Grant number 633003.

Conflict of Interest

Jack Dowie has a financial interest in the Annalisa software, but Annalisa and Elicia are ©Maldaba Ltd. (http://maldaba.co.uk/products/annalisa)

Acknowledgement

The authors acknowledge the helpful insights provided by Øystein Eiring.

References

- 1. Guyatt GH, Oxman AD, Kunz R et al. GRADE guidelines: 2. Framing the question and deciding on important outcomes. Journal of Clinical Epidemiology, 2011; 64: 395–400.

- 2. Mulley A, Trimble C, Elwyn G. Patients' Preferences Matter: Stop the Silent Misdiagnosis. London: King's Fund, 2012.

- 3. Lin G, Trujillo L, Frosch DL. Consequences of not respecting patient preferences for cancer screening. Archives of Internal Medicine, 2012; 172: 393–394.

- 4. Lipshitz R, Cohen MS. Warrants for prescription: analytically and empirically based approaches to improving decision making. Human Factors, 2005; 47: 102–120.

- 5. Elwyn G, Frosch D, Volandes AE, Edwards A, Montori VM. Investing in deliberation: a definition and classification of decision support interventions for people facing difficult health decisions. Medical Decision Making, 2011; 30: 701–711.

- 6. Witt J, Elwyn G, Wood F, Brain K. Decision making and coping in healthcare: the Coping in Deliberation (CODE) framework. Patient Education and Counseling, 2012; 88: 256–261.

- 7. Elwyn G, Lloyd A, Joseph‐Williams N et al. Option grids: shared decision making made easier. Patient Education and Counseling, 2012. [cited 2012 Nov 7]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/22854227.

- 8. Dolan JG. Multi‐criteria clinical decision support: a primer on the use of multiple criteria decision making methods to promote evidence‐based, patient‐centered healthcare. Patient, 2010; 3: 229–248.

- 9. Dolan JG. Shared decision‐making–transferring research into practice: the Analytic Hierarchy Process (AHP). Patient Education and Counseling, 2008; 73: 418–425.

- 10. van Hummel JM, Ijzerman MJ. The use of the Analytic Hierarchy Process in Health Care Decision Making. Enschede: University of Twente, 2009.

- 11. Liberatore M, Nydick R. The analytic hierarchy process in medical and health care decision making: a literature review. European Journal of Operational Research, 2008; 189: 194–207.

- 12. Elwyn G, Rix A, Holt T, Jones D. Why do clinicians not refer patients to online decision support tools? Interviews with front line clinics in the NHS. BMJ Open, 2012; 2: 1–7.

- 13. Elwyn G. The implementation of patient decision support interventions into routine clinical practice: a systematic review. Implementation Science, 2013; Available at http://ipdas.ohri.ca/IPDAS-Implementa.

- 14. Proctor W, Drechsler M. Deliberative multicriteria evaluation. Environment and Planning C: Government and Policy, 2006; 24: 169–190.

- 15. Dowie J. Researching doctors' decisions. Quality and Safety in Health Care, 2004; 13: 411–412.

- 16. Feldman‐Stewart D, Tong C, Siemens R et al. The impact of explicit values clarification exercises in a patient decision aid emerges after the decision is actually made: evidence from a randomized controlled trial. Medical Decision Making, 2012; 32: 616–626.

- 17. Russell LB, Schwartz A. Looking at patients' choices through the lens of expected utility: a critique and research agenda. Medical Decision Making, 2012; 32: 527–531.

- 18. De Montis A, De Toro P, Droste‐Franke B, Omann I, Stagl S. Assessing the quality of different MCDA methods. In: Getzner M, Spash CL, Stagl S. (eds) Alternatives for Environmental Evaluation. Abingdon, Oxon: Routledge, 2005: 99–133.

- 19. Wallenius J, Dyer JS, Fishburn PC, Steuer RE, Zionts S, Deb K. Multiple criteria decision making, multiattribute utility theory: recent accomplishments and what lies ahead. Management Science, 2008; 54: 1336–1349.

- 20. Van Til JA, Renzenbrink GJ, Dolan JG, Ijzerman MJ. The use of the analytic hierarchy process to aid decision making in acquired equinovarus deformity. Archives of Physical Medicine and Rehabilitation, 89: 457–462.

- 21. Goetghebeur MM, Wagner M, Khoury H, Rindress D, Grégoire J‐P, Deal C. Combining multicriteria decision analysis, ethics and health technology assessment: applying the EVIDEM decision‐making framework to growth hormone for Turner syndrome patients. Cost Effectiveness and Resource Allocation, 2010; 8: 4.

- 22. Tony M, Wagner M, Khoury H et al. Bridging health technology assessment (HTA) with multicriteria decision analyses (MCDA): field testing of the EVIDEM framework for coverage decisions by a public payer in Canada. BMC Health Services Research, 2011; 11: 329.

- 23. Dolan JG, Boohaker E, Allison J, Imperiale TF. Patients' preferences and priorities regarding colorectal cancer screening. Medical Decision Making, 2013; 53: 59–70.

- 24. Saaty TL. Decision making with the analytic hierarchy process. International Journal of Services Sciences, 2008; 1: 83–98.

- 25. Richman MB, Forman EH, Bayazit Y, Einstein DB, Resnick MI, Stovsky MD. A novel computer based expert decision making model for prostate cancer disease management. The Journal of Urology, 2005; 174: 2310–2318.

- 26. van Hummel JM, Snoek GJ, Van Til JA, Van Rossum W, Ijzerman MJ. A multicriteria decision analysis of augmentative treatment of upper limbs in persons with tetraplegia. Journal of Rehabilitation Research and Development, 2005; 42: 635–644.

- 27. French S, Insua DR. Statistical Decision Theory. New York: Oxford University Press, 2000.

- 28. Riabacke M, Danielson M, Ekenberg L. State‐of‐the‐art prescriptive criteria weight elicitation. Advances in Decision Sciences, 2012; 2012: 1–24.

- 29. Eiring Ø, Kumar H, Kim H, Drag S, Slaughter L. Personalization in patient decision aids: state of the art and potential. 6th International Shared Decision Making conference 2011. Maastricht.

- 30. French S, Xu D. Comparison study of multi‐attribute decision analytic software. Journal of Multi‐Criteria Decision Analysis, 2005; 13: 65–80.

- 31. Danielson M, Ekenberg L. Decision process support for participatory democracy. Journal of Multi‐Criteria Decision Analysis, 2008; 30: 15–30.

- 32. Laffey D, Gandy A. Comparison websites in UK retail financial services. Journal of Financial Services Marketing, 2009; 14: 173–186.

- 33. Tsafarakis S, Lakiotaki K, Matsatsinis N. Applications of MCDA in Marketing and e‐Commerce. Handbook of Multicriteria Analysis: Applied Optimization, 2010; 103: 425–448.

- 34. Manouselis N, Costopoulou C. Analysis and classification of multi‐criteria recommender systems. World Wide Web, 2007; 10: 415–441.

- 35. Venkatesh V, Morris M, Davis G, Davis F. User acceptance of information technology: toward a unified view. MIS Quarterly, 2003; 27: 425–478.

- 36. Buxton W. Less is more (more or less). In: Denning P. (ed) The Invisible Future: The Seamless Integration of Technology in Everyday Life. New York: McGraw‐Hill, 2001: 145–179.

- 37. Buxton M. Problems in the economic appraisal of new health technology: the evaluation of heart transplants in the UK. In: Drummond M. (ed.) Economic Appraisal of Health Technology in the European Community. Oxford: Oxford Medical Publications, 1987: 103–118.

- 38. Belton V, Hodgkin J. Facilitators, decision makers, D.I.Y. users: Is intelligent multicriteria decision support for all feasible or desirable? European Journal of Operational Research, 1999; 113: 247–260.

- 39. Kahneman D. Thinking, Fast and Slow. London: Penguin, 2012.

- 40. Miller GA. The magical number seven, plus or minus two: some limits on our capacity for processing information. Psychological Review, 1956; 63: 81–97.

- 41. Higgins J, Green S. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011] [Internet]. The Cochrane Collaboration; 2011. Available from: www.cochrane-handbook.org.

- 42. Cunich M, Salkeld G, Dowie J et al. Integrating evidence and individual preferences using a web‐based multi‐criteria decision analytic tool: an application to prostate cancer screening. Patient, 2011; 4: 1–10.

- 43. Pozo‐Martin F. Multi‐Criteria Decision Analysis (MCDA) as the basis for the development, implementation and evaluation of interactive patient decision aids. PhD thesis, 2013; London School of Hygiene and Tropical Medicine.