Abstract

A common view in evolutionary biology is that mutation rates are minimised. However, studies in combinatorial optimisation and search have shown a clear advantage of using variable mutation rates as a control parameter to optimise the performance of evolutionary algorithms. Much biological theory in this area is based on the work of Ronald Fisher, who used Euclidean geometry to study the relation between mutation size and expected fitness of the offspring in infinite phenotypic spaces. Here we reconsider this theory based on the alternative geometry of discrete and finite spaces of DNA sequences. First, we consider the geometric case of fitness being isomorphic to distance from an optimum, and show how problems of optimal mutation rate control can be solved exactly or approximately depending on additional constraints of the problem. Then we consider the general case of fitness communicating only partial information about the distance. We define weak monotonicity of fitness landscapes and prove that this property holds in all landscapes that are continuous and open at the optimum. This theoretical result motivates our hypothesis that optimal mutation rate functions in such landscapes will increase when fitness decreases in some neighbourhood of an optimum, resembling the control functions derived in the geometric case. We test this hypothesis experimentally by analysing approximately optimal mutation rate control functions in 115 complete landscapes of binding scores between DNA sequences and transcription factors. Our findings support the hypothesis and show that the increase of mutation rate is more rapid in landscapes that are less monotonic (more rugged). We discuss the relevance of these findings to living organisms.

Electronic supplementary material

The online version of this article (doi:10.1007/s00285-016-0995-3) contains supplementary material, which is available to authorized users.

Keywords: Adaptation, Fitness landscape, Mutation rate, Population genetics

Introduction

Mutation is one of the most important biological processes that influence evolutionary dynamics. During replication, mutation leads to a loss of information between the offspring and its parent, but it also allows the offspring to acquire new features. These features are likely to be deleterious, but have the potential to be beneficial for adaptation. Thus, mutation can be seen as a process of innovation, which is particularly important as the number of all living organisms is tiny relative to the number of all possible organisms. A question that naturally arises with regard to mutation is whether there is an optimal balance between the amount of information lost and the potential fitness gained.

The seminal mathematical work to investigate biological mutation is by Fisher (1930), who considered mutation as a random motion in Euclidean space, the points of which are vectors representing collections of phenotypic traits of organisms. Using the geometry of Euclidean space, Fisher showed that the probability of adaptation decreases exponentially as a function of mutation size (defined using the ratio of mutation radius and distance to the optimum), and concluded, therefore, that adaptation is more likely to occur by small mutations. Several studies, however, suggested that large mutations can be quite frequent in nature, thereby prompting re-examination of the theory (Orr 2005). Thus, Kimura (1980) extended the theory to take into account differences in probabilities of fixation for mutations of small and large size. Subsequently, Orr (1998) considered the effect of mutation across several replications. Interestingly, while Fisher had a critical role in developing mathematical theory around discrete alleles, in his geometric model he used the Euclidean space of traits as the domain of mutation, which is uncountably infinite and unbounded. This important issue only became apparent after the realisation that biological evolution occurs in a countable or even finite space of discrete molecular sequences (Smith 1970). However, subsequent geometric models based on Fisher’s, while explicitly modelling discrete mutational steps (e.g. Orr 2002), continue to assume that these steps occur within the same infinite Euclidean space. This issue may contribute to the fact that the predictions of such models have at best only been partially verified in actual biological systems (McDonald et al. 2011; Bataillon et al. 2011; Kassen and Bataillon 2006; Rokyta et al. 2008). In this and previous work, we consider mutation using the geometry and combinatorics of Hamming spaces (Belavkin et al. 2011; Belavkin 2011), which are finite, and this leads to a radically different view about the role of large mutations.

Independent of such biological concerns, researchers in evolutionary computation and operations research have a long history of considering variable mutation rates in genetic algorithms (GAs) (e.g. see Eiben et al. 1999; Ochoa 2002; Falco et al. 2002; Cervantes and Stephens 2006; Vafaee et al. 2010, for reviews). In particular, Ackley (1987) suggested that mutation probability is analogous to temperature in simulated annealing, which decreases with time through optimisation. A gradual reduction of mutation rate was also proposed by Fogarty (1989). Markov chain analysis of GAs was used by Yanagiya (1993) to show that a sequence of optimal mutation rates maximising the probability of obtaining the global solution exists in any problem. Bäck (1993) studied the probability of adaptation in the space of binary strings and derived optimal mutation rates depending on the distance from the global optimum. Numerical methods have also been used to optimise a mutation operator (Vafaee et al. 2010) based on the Markov chain model of GA by Nix and Vose (1992), although the complexity of this method may restrict its application to small spaces and populations. More recently, several authors have analysed the run-time of the so-called -evolutionary algorithm using constant and adaptive mutation rates, demonstrating some advantages of the latter (Böttcher et al. 2010; Sutton et al. 2011). Thus, the idea of using variable mutation rates to optimise evolutionary dynamics is not new. Unfortunately, these results in the field of evolutionary computation (EC) have a specifically computational focus, which limits their appeal for biology.

First, theoretical work on EC has focused almost exclusively on systems of binary strings. Optimisation of mutation rates of DNA strings, which have an alphabet of four bases, involves significantly more difficult combinatorics and geometry. Previously, we presented some results on optimal mutation rates (Belavkin et al. 2011; Belavkin 2011), which used formula (2) for the intersection of spheres in general Hamming spaces. Here we give the derivation of this formula in Appendix 1 and show how it can be used to generalise Fisher’s geometric model of adaptation in Sect. 2.

Second, the run-time analysis and optimisation of evolutionary algorithms is concerned with their long-term behaviour, which may have little relevance for biological systems. For example, Böttcher et al. (2010) show that the run time of the -evolutionary algorithm is on the order of , where l is the length of a binary string. In biological organisms, the typical length of a DNA sequence is (and the alphabet size is 4). Assuming the minimum of 20 minutes between replications, the run-time of order will significantly exceed years, the estimated time after which stars will cease to exist in the Universe (Adams and Laughlin 1997). Moreover, biological landscapes may fail to have a global optimum to converge to, because the set of all DNA sequences with variable lengths is infinite. In addition, biological landscapes are not static, and change on a regular basis. Thus, the short-term behaviour, perhaps within one or several replications, is more important for optimisation of parameters in biological systems. Here we develop these insights regarding mutation rate variation towards the particular issues presented by biological systems.

In Sect. 2 we show how the problem of optimal control of mutation rate can be defined in different ways leading to different solutions. In some cases, these solutions can be obtained analytically. For example, in the idealised geometric model, when maximisation of fitness is equivalent to minimisation of distance to a global optimum, the optimal mutation rates can be derived as functions of the distance (Belavkin 2012, 2013). This, however, is not the case for more realistic landscapes, which can be rugged. In Sect. 3, we address how the control functions can be obtained numerically. Although fitness landscapes have been analysed and classified in terms of hardness for evolutionary algorithms (He et al. 2015), there is no general theory about optimal mutation rates in arbitrary landscapes. The development of such theory is the main focus and contribution of this paper. In Sect. 4, we consider a fitness landscape as a communication channel between fitness values and distances from a nearest optimum. We introduce various notions of monotonicity of a fitness landscape, and discuss how these properties are related to the genotype-phenotype mapping. The main theoretical result is a theorem about weak monotonicity of continuous landscapes, which establishes the condition for a similarity between fitness and distance to an optimum in a broad class of landscapes. This suggests a similarity between fitness-based and distance-based optimal control functions for mutation rates.

These theoretical results allow us to formulate hypotheses about monotonicity and mutation rate control in biological fitness landscapes. We test these hypotheses by numerically obtaining optimal mutation rate control functions for 115 published complete landscapes of transcription factor binding (Badis et al. 2009). Our results presented in Sect. 5 show that all the optimal mutation rate control functions in these biological landscapes do indeed converge to non-trivial forms consistent with the theory developed here. We also observe differences among optimal mutation rate control functions, variation that relates to variation in the landscapes’ monotonic properties. We conclude in Sect. 6 by discussing how mutation rate control as considered here may be manifested in living organisms.

Fisher’s geometric model of adaptation in Hamming space

In this section, we consider an abstract problem, in which organisms are represented as points in some metric space, adaptation as a motion in this space towards some target point (an optimal organism), and fitness as the negative distance to the target. Minimisation of distance to the target is therefore equivalent to maximisation of fitness. The geometry of the metric space allows us to solve the optimisation problem exactly. These abstract results will be used in the following sections to develop the theory further, bringing it closer to biology.

Representation and assumptions

Let $\Omega$ be the set of all possible genotypes representing organisms. This set is usually equipped with a metric $d : \Omega \times \Omega \to [0, \infty)$ related to the mutation operator, such that large mutations correspond to large distance $d(a, b)$ and vice versa. For example, the set of all DNA sequences of length $l$ can be represented by vectors in the Hamming space $\mathcal{H}_\alpha^l := \{1, \dots, \alpha\}^l$ with $\alpha = 4$, equipped with the Hamming metric $d(a, b)$ counting the number of different letters in two strings. This choice of metric is particularly suitable for a simple point mutation, which will be the focus of this paper. A sphere $S(a, r)$ and a closed ball $B[a, r]$ of radius $r \geq 0$ around $a \in \Omega$ are defined as usual:

$$S(a, r) := \{b \in \Omega : d(a, b) = r\}, \qquad B[a, r] := \{b \in \Omega : d(a, b) \leq r\}.$$

We refer to $r = d(a, b)$ as the mutation radius.

The environment defines a preference relation $\lesssim$ on $\Omega$ (a total pre-order), so that $a \lesssim b$ means that genotype $b$ represents an organism that is at least as well adapted to, or replicates at least as fast in, a given environment as an organism represented by genotype $a$. We shall consider only countable or even finite $\Omega$, so that there always exists a real function $f : \Omega \to \mathbb{R}$ such that

$$a \lesssim b \iff f(a) \leq f(b).$$

In game theory, such a function is called utility, but in the biological context it is called fitness, and usually it is assumed to have non-negative values representing replication rates of the organisms. The non-negativity assumption is not essential, however, because the preference relation induced by $f$ does not change under a strictly increasing transformation of $f$. Thus, our interpretation of fitness simply as a numerical representation of a preference relation is distinct from population genetic definitions of fitness (e.g. see Orr 2009). We shall also assume that there exists a top (optimal) genotype $\top \in \Omega$ such that $f(\top) \geq f(a)$ for all $a \in \Omega$, which represents the most adapted or most quickly replicating organism. Note that a finite set $\Omega$ always contains at least one top element $\top$ as well as at least one bottom element $\bot$.

Generally, one should also consider the set of all environments (including other organisms), because different environments impose different preference relations on $\Omega$, which have to be represented by different fitness functions. In this paper, however, we shall assume that fitness in any particular environment has been fixed.

During replication, genotype $a$ can mutate into $b$ with transition probability $P(b \mid a)$. Mutation can have different effects on fitness: it can be deleterious, if $f(b) < f(a)$; neutral, if $f(b) = f(a)$; or beneficial, if $f(b) > f(a)$.

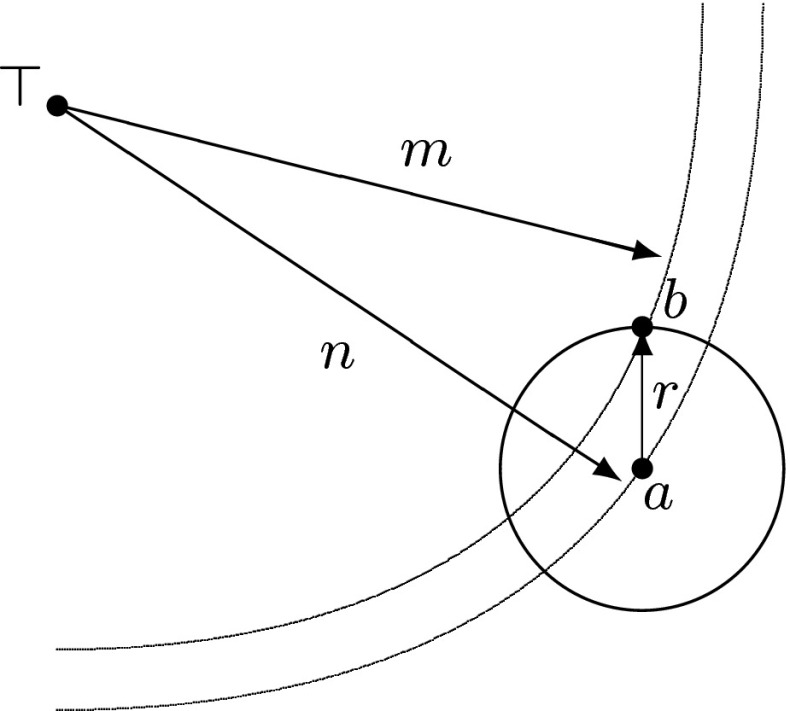

In this section, we consider the simple case $f(a) = -d(\top, a)$, so that maximisation of fitness is equivalent to minimisation of distance $d(\top, a)$, and adaptation (beneficial mutation) corresponds to a transition from a sphere of radius $n = d(\top, a)$ into a sphere of a smaller radius $m = d(\top, b) < n$, which is depicted in Fig. 1. This geometric view of mutation and adaptation is based on Ronald Fisher’s idea (Fisher 1930), which was, perhaps, the earliest mathematical work on the role of mutation in adaptation. Fisher represented individual organisms by points of the Euclidean space $\mathbb{R}^l$ of traits, equipped with the Euclidean metric $d(a, b) = \|a - b\|$. The top element $\top$ was identified with the origin in $\mathbb{R}^l$, and fitness with the negative distance $f(a) = -\|a\|$. Then Fisher used the geometry of the Euclidean space to show that the probability of beneficial mutation decreases exponentially as the mutation radius $r$ increases, and therefore mutations of small radii are more likely to be beneficial. Despite subsequent development of the theory (Orr 2005), the use of Euclidean space for representation was not revised.

Fig. 1.

Mutation of point $a$ in a metric space into $b$ with mutation radius $r = d(a, b)$. The distances $n = d(\top, a)$ and $m = d(\top, b)$ from an optimal element $\top$ define the fitnesses of $a$ and $b$

Euclidean space is unbounded (and therefore non-compact), and the interior of any ball always has a smaller volume than its exterior. Therefore, assuming mutation in random directions, a point on the surface of a ball around an optimum is always more likely to mutate into the exterior than the interior of this ball. This simple property is key for Fisher’s conclusion that adaptation is more likely to occur by small mutations. We showed previously, however, that the geometry of a finite space, such as the Hamming space of strings, implies a different relation between the radius of mutation and adaptation (Belavkin et al. 2011; Belavkin 2011). In particular, the mutation radius maximising the probability of adaptation varies as a function of the distance to the optimum.

Probability of adaptation in a Hamming space

Consider mutation of genotype $a \in S(\top, n)$ in a Hamming space $\mathcal{H}_\alpha^l$ into $b \in S(\top, m)$ with mutation radius $r = d(a, b)$, as shown on Fig. 1. Assuming equal probabilities for all points in the sphere $S(a, r)$, the probability that the offspring is in the sphere $S(\top, m)$ is given by the number of points in the intersection of spheres $S(\top, m)$ and $S(a, r)$:

$$P(m \mid n, r) := \frac{|S(\top, m) \cap S(a, r)|}{|S(a, r)|} \tag{1}$$

where $|\cdot|$ denotes cardinality of a set (the number of its elements). The cardinality of the intersection, under the condition $d(\top, a) = n$, is computed as follows:

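$$|S(\top, m) \cap S(a, r)| = \sum_{r_+,\, r_-,\, r_0} (\alpha - 2)^{r_0} (\alpha - 1)^{r_-} \binom{n}{r_+} \binom{n - r_+}{r_0} \binom{l - n}{r_-} \tag{2}$$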

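where summation runs over indexes $r_- \in \{0, \dots, \lfloor (r - n + m)/2 \rfloor\}$ and $r_+ \in \{0, \dots, \lfloor (r + n - m)/2 \rfloor\}$ (here $\lfloor \cdot \rfloor$ denotes the floor operation) and $r_0 \in \{0, \dots, r\}$ satisfying conditions $r_+ - r_- = n - m$ and $r_+ + r_- + r_0 = r$, with the conventions $\binom{k}{j} = 0$ for $j > k$ and $0^0 = 1$. See Appendix 1 for the derivation of this combinatorial result. We point out that for $b \in S(\top, m) \cap S(a, r)$, the indexes $r_+$, $r_-$ and $r_0$ count respectively the numbers of beneficial, deleterious and neutral substitutions in $a$.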
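Eq. (2) can be checked numerically on small spaces. The Python sketch below is our own illustration, not the authors' code: letters are encoded as integers $0, \dots, \alpha - 1$, $\top$ is assumed to be the all-zero string, and the names `intersection_size`, `hamming` and `brute_force_size` are hypothetical. It loops over the number $r_-$ of deleterious substitutions, obtains $r_+$ and $r_0$ from the two constraints, and compares the sum with a brute-force enumeration of the intersection.

```python
# Illustrative sketch; function names are hypothetical, not taken from the paper.
from itertools import product
from math import comb


def intersection_size(l: int, alpha: int, n: int, m: int, r: int) -> int:
    """|S(top, m) ∩ S(a, r)| for a parent a with d(top, a) = n, following Eq. (2)."""
    total = 0
    for r_minus in range(l - n + 1):          # deleterious: correct letter -> wrong letter
        r_plus = r_minus + n - m              # beneficial: wrong letter -> correct letter
        r_zero = r - r_plus - r_minus         # neutral: wrong letter -> another wrong letter
        if r_plus < 0 or r_zero < 0 or r_plus + r_zero > n:
            continue                          # no substitution pattern with these counts
        total += (comb(n, r_plus) * comb(n - r_plus, r_zero) * (alpha - 2) ** r_zero
                  * comb(l - n, r_minus) * (alpha - 1) ** r_minus)
    return total


def hamming(a, b) -> int:
    """Number of positions in which strings a and b differ."""
    return sum(x != y for x, y in zip(a, b))


def brute_force_size(l: int, alpha: int, n: int, m: int, r: int) -> int:
    """Count the same set by enumerating all alpha**l strings (small l only)."""
    top = (0,) * l
    a = (1,) * n + (0,) * (l - n)             # one representative parent at distance n
    return sum(1 for b in product(range(alpha), repeat=l)
               if hamming(a, b) == r and hamming(top, b) == m)


if __name__ == "__main__":
    l = 4
    for alpha in (2, 3, 4):
        for n, m, r in product(range(l + 1), repeat=3):
            assert intersection_size(l, alpha, n, m, r) == brute_force_size(l, alpha, n, m, r)
    print("Eq. (2) agrees with enumeration for l = 4 and alpha = 2, 3, 4")
```

Because the count depends on $a$ only through $n = d(\top, a)$, a single representative parent suffices for the comparison.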

The cardinality of the sphere $S(a, r)$ is

$$|S(a, r)| = (\alpha - 1)^r \binom{l}{r} \tag{3}$$

Equations (1)–(3) allow us to compute the probability of adaptation, which is the probability that the offspring is in the interior of the ball $B[\top, n]$, that is, $m < n$:

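$$P_+(r \mid n) := \sum_{m=0}^{n-1} P(m \mid n, r) = \frac{1}{(\alpha - 1)^r \binom{l}{r}} \sum_{m=0}^{n-1} |S(\top, m) \cap S(a, r)| \tag{4}$$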
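A minimal sketch of Eqs. (1)–(4), assuming integer-coded letters; `prob_adaptation` and `sphere_size` are hypothetical names, and exact fractions are used so that small cases can be checked by hand. For example, in the binary space with $l = 10$ and $n = 5$, a radius-2 mutation improves only if both substitutions are beneficial, giving $\binom{5}{2}/\binom{10}{2} = 2/9$. The usage part prints $P_+(r \mid n)$ for a few values of $n$ in $\mathcal{H}_4^{10}$, an arbitrary choice of space.

```python
# Illustrative sketch; function names are hypothetical, not taken from the paper.
from fractions import Fraction
from math import comb


def intersection_size(l, alpha, n, m, r):
    """|S(top, m) ∩ S(a, r)| for a parent at distance n from top, as in Eq. (2)."""
    total = 0
    for r_minus in range(l - n + 1):          # deleterious substitutions
        r_plus = r_minus + n - m              # beneficial substitutions
        r_zero = r - r_plus - r_minus         # neutral substitutions
        if r_plus < 0 or r_zero < 0 or r_plus + r_zero > n:
            continue
        total += (comb(n, r_plus) * comb(n - r_plus, r_zero) * (alpha - 2) ** r_zero
                  * comb(l - n, r_minus) * (alpha - 1) ** r_minus)
    return total


def sphere_size(l, alpha, r):
    """|S(a, r)| from Eq. (3)."""
    return comb(l, r) * (alpha - 1) ** r


def prob_adaptation(l, alpha, n, r):
    """P_+(r | n) from Eq. (4): the offspring lands strictly inside B[top, n]."""
    inside = sum(intersection_size(l, alpha, n, m, r) for m in range(n))
    return Fraction(inside, sphere_size(l, alpha, r))


if __name__ == "__main__":
    l, alpha = 10, 4
    for n in (2, 5, 8, 10):
        row = [float(prob_adaptation(l, alpha, n, r)) for r in range(l + 1)]
        print(n, " ".join(f"{p:.3f}" for p in row))
```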

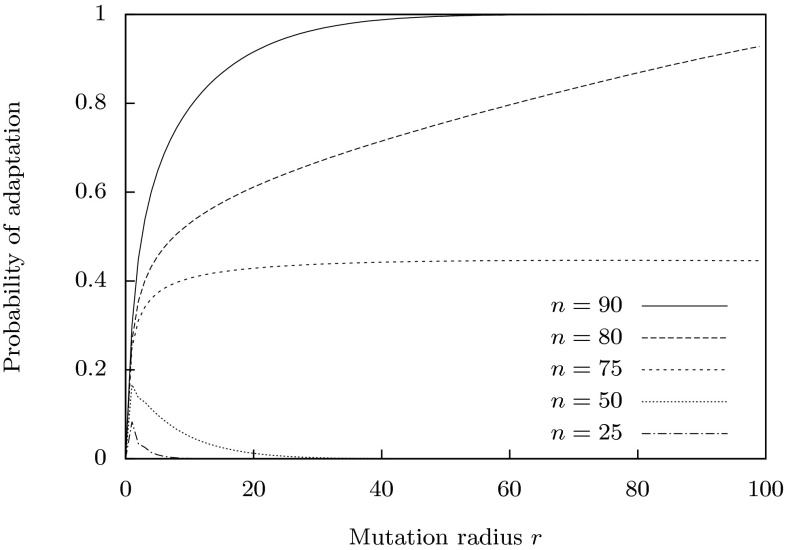

Figure 2 shows the probability of adaptation $P_+(r \mid n)$ for Hamming space as a function of mutation radius $r$ for different values of $n$. One can see that when (more generally when ), the probabilities of adaptation decrease with increasing radius $r$, similar to Fisher’s conclusion for the Euclidean space. However, for there is no such decrease, and when (i.e. for ), the probability of adaptation actually increases with $r$. This is due to the fact that, unlike Euclidean space, Hamming space is finite, and the interior of the ball $B[\top, n]$ can be larger than its exterior. The geometry of a Hamming space has a number of interesting properties (Ahlswede and Katona 1977). For example, every point $a \in \mathcal{H}_\alpha^l$ has $(\alpha - 1)^l$ diametric opposite points $\neg a$, such that $d(a, \neg a) = l$, and the complement of a ball $B[a, r]$ in $\mathcal{H}_\alpha^l$ is the union of the balls $B[\neg a, l - r - 1]$.

Fig. 2.

Probability of adaptation $P_+(r \mid n)$ in the Hamming space as a function of mutation radius $r$. Different curves show $P_+(r \mid n)$ for different distances $n$ of the parent string from the optimum

Random mutation

By mutation we understand a random process of transforming the parent string $a$ into offspring $b$, so that the mutation radius $r = d(a, b)$ is a random variable. The simplest form of mutation, called point mutation, is the random process of independently substituting each letter in the parent string by any of the other $\alpha - 1$ letters with probability $\mu$. At its simplest, with one parameter, there is an equal probability $\mu/(\alpha - 1)$ of mutating to each of the $\alpha - 1$ letters. The parameter $\mu \in [0, 1]$ is called the mutation rate. For point mutation, the probability of mutating by radius $r$ is given by the binomial distribution:

$$P_\mu(r \mid n) = \binom{l}{r} \mu^r (1 - \mu)^{l - r} \tag{5}$$

We assume that the mutation rate $\mu$ may depend on the distance $n$ from the top string, and therefore the probability $P_\mu(r \mid n)$ is also conditional on $n$.

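Optimisation of the mutation rate requires knowledge of the probability $P_\mu(m \mid n)$ that mutation of $a$ into $b$ leads to a transition from sphere $S(\top, n)$ into $S(\top, m)$. This transition probability can be expressed as follows:

$$P_\mu(m \mid n) = \sum_{r=0}^{l} P(m \mid n, r)\, P_\mu(r \mid n).$$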

Substituting (1) and (5) into the above equation, and taking into account (3), we obtain the following expression:

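$$P_\mu(m \mid n) = \sum_{r=0}^{l} |S(\top, m) \cap S(a, r)| \left(\frac{\mu}{\alpha - 1}\right)^{r} (1 - \mu)^{l - r} \tag{6}$$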

where the number $|S(\top, m) \cap S(a, r)|$ is given by Eq. (2). The case $\alpha = 2$ was investigated previously by several authors (e.g. Bäck 1993; Braga and Aleksander 1994). The expressions for arbitrary alphabets were first presented in (Belavkin et al. 2011) (see also Belavkin 2011).
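Eq. (6) can be evaluated directly. The sketch below (hypothetical function names; the control $\mu(n)$ is passed as a Python function) builds the matrix of transition probabilities $P_\mu(m \mid n)$. It can be sanity-checked in two ways: every row must sum to one, and for constant $\mu$ the mean of row $n$ should equal $n - n\mu/(\alpha - 1) + (l - n)\mu$, because each of the $n$ wrong letters is corrected with probability $\mu/(\alpha - 1)$ and each of the $l - n$ correct letters is replaced with probability $\mu$.

```python
# Illustrative sketch; function names are hypothetical, not taken from the paper.
from math import comb, fsum


def intersection_size(l, alpha, n, m, r):
    """|S(top, m) ∩ S(a, r)| for a parent at distance n from top, as in Eq. (2)."""
    total = 0
    for r_minus in range(l - n + 1):          # deleterious substitutions
        r_plus = r_minus + n - m              # beneficial substitutions
        r_zero = r - r_plus - r_minus         # neutral substitutions
        if r_plus < 0 or r_zero < 0 or r_plus + r_zero > n:
            continue
        total += (comb(n, r_plus) * comb(n - r_plus, r_zero) * (alpha - 2) ** r_zero
                  * comb(l - n, r_minus) * (alpha - 1) ** r_minus)
    return total


def transition_matrix(l, alpha, mu):
    """T[n][m] = P_mu(m | n) from Eq. (6); mu is a control function n -> rate in [0, 1]."""
    T = []
    for n in range(l + 1):
        rate = mu(n)
        q = rate / (alpha - 1)                # probability of one particular substituted letter
        T.append([fsum(intersection_size(l, alpha, n, m, r) * q ** r * (1 - rate) ** (l - r)
                       for r in range(l + 1))
                  for m in range(l + 1)])
    return T


if __name__ == "__main__":
    T = transition_matrix(10, 4, lambda n: 0.1)
    for n, row in enumerate(T):
        # each row is a distribution over offspring distances m: print its total and mean
        print(n, round(sum(row), 12), round(sum(m * p for m, p in enumerate(row)), 4))
```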

We note that simple, one-parameter point mutation is optimal in a certain sense: it is the solution of a variational problem of minimisation of expected distance between points $a$ and $b$ in a Hamming space subject to a constraint on mutual information between $a$ and $b$ (see Belavkin 2011, 2013). The constraint on mutual information between strings $a$ and $b$ represents the fact that perfect copying is not possible. The optimal solutions to this problem are conditional probabilities having exponential form $P_\beta(b \mid a) = e^{-\beta d(a, b)} / Z(\beta)$, where $Z(\beta) = \sum_{b} e^{-\beta d(a, b)}$ is the normalising constant and the parameter $\beta$, called the inverse temperature, is related to the mutation rate and is defined from the constraint on mutual information. This exponential solution in the Hamming space corresponds to independent substitutions with the same probability because the Hamming metric is computed as the sum $d(a, b) = \sum_{i=1}^{l} \delta(a_i, b_i)$ of elementary distances between letters $a_i$ and $b_i$ in the $i$th position in the string, and the values $\delta(a_i, b_i)$ are equal to zero or one independent of the specific letters of the alphabet or their position $i$. Other, more complex mutation operators, which incorporate multiple parameters or non-independent substitutions (the phenomenon known in biology as epistasis) can be considered as optimal solutions of the same variational problem, but applied to a different representation space with a different metric.
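The correspondence between the exponential solution and point mutation can be verified numerically. Since $d(a, b)$ is a sum of per-position terms, $e^{-\beta d(a, b)}$ factorises over positions, and normalising each factor gives the per-letter substitution probability $\mu = (\alpha - 1)e^{-\beta} / (1 + (\alpha - 1)e^{-\beta})$; this explicit formula is our own derivation for illustration, not quoted from the cited papers. The sketch (hypothetical function names) enumerates a small Hamming space and compares the normalised kernel with the point-mutation probabilities $(\mu/(\alpha - 1))^{d}(1 - \mu)^{l - d}$.

```python
# Illustrative sketch; function names are hypothetical, not taken from the paper.
from itertools import product
from math import exp, isclose


def hamming(a, b):
    """Hamming distance: the sum of 0/1 elementary distances over positions."""
    return sum(x != y for x, y in zip(a, b))


def mutation_rate_from_beta(alpha, beta):
    """Per-letter substitution probability implied by P(b|a) proportional to exp(-beta d(a, b))."""
    w = (alpha - 1) * exp(-beta)
    return w / (1 + w)


def exponential_matches_point_mutation(l, alpha, beta):
    """Compare the normalised exponential kernel with independent point mutation."""
    a = (0,) * l
    strings = list(product(range(alpha), repeat=l))
    weights = [exp(-beta * hamming(a, b)) for b in strings]
    Z = sum(weights)                          # normalising constant Z(beta)
    mu = mutation_rate_from_beta(alpha, beta)
    for b, w in zip(strings, weights):
        d = hamming(a, b)
        point = (mu / (alpha - 1)) ** d * (1 - mu) ** (l - d)
        if not isclose(w / Z, point, rel_tol=1e-9):
            return False
    return True


if __name__ == "__main__":
    for beta in (0.0, 0.5, 2.0, 5.0):
        print(beta, round(mutation_rate_from_beta(4, beta), 4),
              exponential_matches_point_mutation(4, 4, beta))
```

Note that $\beta = 0$ gives $\mu = 1 - 1/\alpha$, i.e. a uniformly random offspring, while $\beta \to \infty$ gives $\mu \to 0$, i.e. perfect copying.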

Optimal control of mutation rates

The fact that the transition probability $P_\mu(m \mid n)$, defined by Eq. (6), depends on the mutation rate $\mu$ introduces the possibility of organisms maximising the expected fitness of their offspring by controlling the mutation rate. We call the collection of pairs $(n, \mu)$ the mutation rate control function $\mu(n)$. To see how such control acts on a population, let $P_t(a)$ be the distribution of parent genotypes in $\Omega$ at time $t$, and let $P_t(n)$ be the distribution of their distances from the optimum. Transition probabilities $P_\mu(m \mid n)$ define a linear transformation $T_\mu$ of distribution $P_t(n)$ into distribution $P_{t+1}(m)$ of distances of their offspring at time $t + 1$:

$$P_{t+1}(m) = \sum_{n=0}^{l} P_\mu(m \mid n)\, P_t(n).$$

If this transformation does not change with time, then the distribution after $s$ generations is defined by $T_\mu^s$, the $s$th power of $T_\mu$. The optimal mutation rates can be found (at least in principle) by minimising the expected distance subject to additional constraints, such as the time horizon $s$:

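$$\mu^*(\cdot) = \arg\min_{\mu(\cdot)} \sum_{m=0}^{l} m\, P_{t+s}(m), \qquad P_{t+s} = T_\mu^s P_t \tag{7}$$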
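The objective in problem (7) only requires iterating the distance distribution. A minimal sketch, assuming point mutation with rate $\mu(n)$: rather than re-evaluating Eq. (6), it builds $P_\mu(m \mid n)$ from the equivalent per-position description of point mutation (each of the $n$ wrong letters is corrected with probability $\mu/(\alpha - 1)$, and each of the $l - n$ correct letters is replaced by a wrong one with probability $\mu$), and then applies $T_\mu$ $s$ times. The function names and the uniform initial distribution are illustrative choices.

```python
# Illustrative sketch; function names are hypothetical, not taken from the paper.
from math import comb


def binom_pmf(k, n, p):
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


def transition_matrix(l, alpha, mu):
    """T[n][m] = P_mu(m | n) for point mutation whose rate mu(n) depends on distance n."""
    T = [[0.0] * (l + 1) for _ in range(l + 1)]
    for n in range(l + 1):
        rate = mu(n)
        for good in range(n + 1):             # wrong letters corrected
            p_good = binom_pmf(good, n, rate / (alpha - 1))
            for bad in range(l - n + 1):      # correct letters spoiled
                T[n][n - good + bad] += p_good * binom_pmf(bad, l - n, rate)
    return T


def expected_distance(p0, T, s):
    """Expected distance after s generations: apply P_{t+1}(m) = sum_n P_mu(m|n) P_t(n) s times."""
    p = list(p0)
    for _ in range(s):
        p = [sum(p[n] * T[n][m] for n in range(len(p))) for m in range(len(p))]
    return sum(m * pm for m, pm in enumerate(p))


if __name__ == "__main__":
    l, alpha = 10, 4
    p0 = [1 / (l + 1)] * (l + 1)              # equal mass on every distance
    for rate in (0.0, 0.05, 0.2):
        T = transition_matrix(l, alpha, lambda n, rate=rate: rate)
        print(rate, [round(expected_distance(p0, T, s), 3) for s in (1, 10, 100)])
```

Minimising this quantity over all control functions $\mu(n)$ is what problem (7) asks for; the sketch only evaluates the objective for a given control.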

For example, mutation rates minimising the expected distance at generation $t + 1$ (that is, for $s = 1$) should depend on $n$ according to the following step function:

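$$\mu(n) = \begin{cases} 0 & \text{if } n < l(1 - 1/\alpha), \\ \text{any value in } [0, 1] & \text{if } n = l(1 - 1/\alpha), \\ 1 & \text{if } n > l(1 - 1/\alpha). \end{cases} \tag{8}$$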
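Why the one-generation optimum is a step function can be seen from linearity of expectation: for point mutation, $\mathbb{E}\{m \mid n\} = n - n\mu/(\alpha - 1) + (l - n)\mu$, which is linear in $\mu$. Its minimum over $\mu \in [0, 1]$ therefore sits at an end point and switches where the slope $(l - n) - n/(\alpha - 1)$ changes sign, namely at $n = l(1 - 1/\alpha)$. The sketch below (hypothetical function names) checks this by direct grid search; at the threshold every rate gives the same expected distance, so the value returned there is an arbitrary placeholder.

```python
# Illustrative sketch; function names are hypothetical, not taken from the paper.

def expected_offspring_distance(l, alpha, n, mu):
    """E{m | n} under point mutation with rate mu: the n wrong letters are each corrected
    with probability mu/(alpha-1); the l-n correct letters are each spoiled with probability mu."""
    return n - n * mu / (alpha - 1) + (l - n) * mu


def step_control(l, alpha, n):
    """One-generation optimal rate; at n = l(1 - 1/alpha) every rate is optimal, and 0.5
    is returned only as an arbitrary placeholder."""
    threshold = l * (1 - 1 / alpha)
    if n < threshold:
        return 0.0
    if n > threshold:
        return 1.0
    return 0.5


def grid_argmin(l, alpha, n, steps=100):
    """Best rate on a uniform grid over [0, 1], found by direct search."""
    grid = [i / steps for i in range(steps + 1)]
    return min(grid, key=lambda mu: expected_offspring_distance(l, alpha, n, mu))


if __name__ == "__main__":
    l, alpha = 10, 4
    for n in range(l + 1):
        print(n, step_control(l, alpha, n), grid_argmin(l, alpha, n))
```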

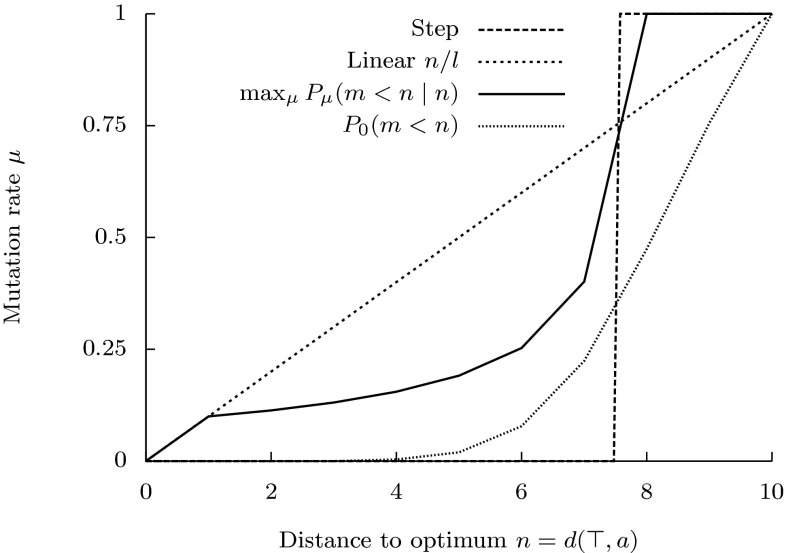

This function is shown on Fig. 3. The sudden change of the optimal mutation rate from $\mu = 0$ at $n < l(1 - 1/\alpha)$ to $\mu = 1$ at $n > l(1 - 1/\alpha)$ corresponds to the sudden change of the effect of the mutation radius on the probability of adaptation shown on Fig. 2. Note that this mutation control function is not optimal for minimisation of the expected distance up to $s > 1$ generations, because strings that are closer to the optimum than $l(1 - 1/\alpha)$ do not mutate, so that there is no chance of improvement.

Fig. 3.

Different optimal mutation rate control functions derived mathematically to optimise different criteria in Hamming space : step function minimising expected distance to optimum in one generation, linear function maximising probability of mutating directly into optimum, a function maximising conditional probability that an offspring is closer to optimum than its parent, cumulative distribution function minimising expected distance to optimum subject to an information constraint (Belavkin 2012)

The variational problem for the optimal control of the mutation rate, such as problem (7), can be formulated in different ways optimising different criteria (e.g. instantaneous or cumulative expected distance, probability of adaptation, probability of mutating directly into the optimum) or taking into account additional constraints (e.g. the time horizon, information constraints), and generally they lead to different solutions. Previously, we investigated various types of such problems and obtained their solutions (Belavkin et al. 2011; Belavkin 2011, 2012), some of which are shown on Fig. 3. One can see that there is no single optimal mutation rate control function. However, it is also evident that all these control functions have a common property of monotonically increasing mutation rate with increasing distance from the optimum. The main question we address in this paper is whether such monotonic control of mutation rate is beneficial in a broader class of landscapes, when fitness is not equivalent to distance. In Sect. 4, we shall further develop the theory from the simple case considered in this section to more general fitness landscapes and formulate hypotheses which will be tested in biological landscapes in Sect. 5. To generate data for this testing, we develop an evolutionary technique in Sect. 3 to obtain approximations to the optimal control functions in a broad class of problems, when the derivation of exact solutions is impractical or impossible.

Evolutionary optimisation of mutation rate control functions

Analytical approaches cannot always be applied to derive optimal mutation rate control functions due to high problem complexity. Moreover, when fitness is not equivalent to negative distance, the transition probabilities between fitness levels may be unknown, so that analytical solutions are impossible. Another approach is to use numerical optimisation to obtain approximately optimal solutions. In this section, we describe an evolutionary technique that uses two genetic algorithms. The first, which we refer to as the Inner-GA, evolves individual strings, with the mutation rate controlled by a function $\mu(y)$ that maps the fitness value $y$ of a string to its mutation rate $\mu$. The second, which we refer to as the Meta-GA, evolves a population of such mutation rate control functions for better performance of the Inner-GAs. Note that the Inner-GA can use any fitness function. In this section, we shall apply the technique to the case when fitness is equivalent to negative distance from an optimum (a selected point in a Hamming space). The purpose of this exercise is to demonstrate that the functions evolved by the Meta-GA have monotonic properties, similar to those possessed by the optimal mutation rate control function obtained analytically. Later we shall apply the technique to more general fitness landscapes.

Inner-GA

The Inner-GA is a simple generational genetic algorithm, where each genotype is a string in Hamming space , and the optimal string is defined by a fitness function $f$. The initial population of 100 individuals had equal numbers of individuals at each fitness value, and they were evolved by the Inner-GA for 500 generations using simple point mutation. The mutation rates were controlled according to the function $\mu(y)$, specified by the Meta-GA, with fitness values $y$ as the input. In the experiments described, we used no selection and no recombination in order to isolate the effect of mutation rate control on evolution from that of other evolutionary operators.
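A minimal Python sketch of the Inner-GA as described above, with no selection and no recombination, so that each generation simply replaces every individual by a point-mutated copy whose rate is read from the control function $\mu(y)$. The fitness $f(a) = -d(\top, a)$, the all-zero optimum, the integer letter coding and the helper names (`point_mutate`, `string_at_distance`, `inner_ga`) are illustrative assumptions; the population size and number of generations are parameters (100 and 500 in the experiments described).

```python
# Illustrative sketch; names and the example landscape are hypothetical.
import random


def point_mutate(s, mu, alpha, rng):
    """Replace each letter independently with probability mu by one of the other alpha-1 letters."""
    out = []
    for x in s:
        if rng.random() < mu:
            y = rng.randrange(alpha - 1)
            out.append(y if y < x else y + 1)  # uniform over the letters different from x
        else:
            out.append(x)
    return tuple(out)


def string_at_distance(top, n, alpha, rng):
    """A random string differing from top in exactly n positions."""
    s = list(top)
    for i in rng.sample(range(len(top)), n):
        s[i] = (s[i] + 1 + rng.randrange(alpha - 1)) % alpha
    return tuple(s)


def inner_ga(fitness, control, population, alpha, generations, rng):
    """Return the mean fitness of the final generation (no selection, no recombination)."""
    pop = list(population)
    for _ in range(generations):
        pop = [point_mutate(a, control(fitness(a)), alpha, rng) for a in pop]
    return sum(fitness(a) for a in pop) / len(pop)


if __name__ == "__main__":
    l, alpha, rng = 10, 4, random.Random(1)
    top = (0,) * l
    fitness = lambda a: -sum(x != y for x, y in zip(a, top))   # f(a) = -d(top, a)
    # equal numbers of individuals at each fitness value: here 10 at each distance 0..9
    init = [string_at_distance(top, n, alpha, rng) for n in range(l) for _ in range(10)]
    step = lambda y: 0.0 if -y < l * (1 - 1 / alpha) else 1.0  # a control expressed via fitness
    print(inner_ga(fitness, step, init, alpha, 500, rng))
```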

Note that the parameters of the Inner-GA (e.g. population size, the number of generations) were chosen empirically to satisfy two conflicting objectives: On one hand, the parameters should be large enough to get any sort of convergence at the Meta-GA level; on the other hand, the parameters should be small enough for the system to obtain satisfactory results in feasible time (in our case several months of run-time using a cluster of 72 GPUs).

Meta-GA

The Meta-GA is a simple generational genetic algorithm that uses tournament selection, chosen because it is robust to arbitrary scaling and shifting of fitness scores and because it is suitable for highly parallel implementation. Each genotype in the Meta-GA is a mutation rate function $\mu(y)$ of fitness values $y$. The domain of $\mu(y)$ is an ordered partition of the range of the Inner-GA fitness function. Thus, individuals in the Meta-GA are strings of real values representing probabilities of mutation at different fitnesses, as used in the Inner-GA.

At each generation of the Meta-GA, multiple copies of the Inner-GA were evolved for 500 generations, with the mutation rate in each copy controlled by a different function taken from the Meta-GA population. We used populations of 100 individual functions, which were initialised to . All runs within the same Meta-GA generation were seeded with the same initial population of the Inner-GA. The Meta-GA evolved functions for generations to maximise the average fitness in the final generation of the Inner-GA.

The Meta-GA used the following selection, recombination and mutation methods:

Randomly select (without replacement) three individuals from the population and replace the least fit of these with a mutated crossover of the other two; repeat with the remaining individuals until all individuals from the population have been selected or fewer than three remain.

Crossover recombines the start of the numerical string representing one mutation rate function with the end of another using a single cut point chosen randomly, excluding the possibility of being at either end, so that there are no clones.

Mutation adds a uniform-random number to one randomly selected value (mutation rate) on the individual mutation rate function, but then bounds that value to be within [0, 1].
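The three operators listed above can be sketched as follows. This is our reading of the description, not the original implementation: the step size `delta` of the uniform perturbation is a hypothetical parameter (its value is not given here), and `evaluate` stands in for running the Inner-GAs and averaging their final fitness.

```python
# Illustrative sketch; delta, evaluate and the toy objective are hypothetical.
import random


def crossover(p1, p2, rng):
    """Single cut point, never at either end, so the child is not a clone of a parent."""
    cut = rng.randrange(1, len(p1))
    return list(p1[:cut]) + list(p2[cut:])


def mutate(genes, rng, delta):
    """Add a uniform random number in [-delta, delta] to one rate and clip it to [0, 1]."""
    g = list(genes)
    i = rng.randrange(len(g))
    g[i] = min(1.0, max(0.0, g[i] + rng.uniform(-delta, delta)))
    return g


def meta_generation(population, evaluate, rng, delta=0.1):
    """One generation of the three-way tournament: the loser of each random triple is
    replaced by a mutated crossover of the two winners."""
    scores = [evaluate(g) for g in population]
    order = list(range(len(population)))
    rng.shuffle(order)                          # random triples, drawn without replacement
    new_pop = [list(g) for g in population]
    for k in range(0, len(order) - 2, 3):       # leftover individuals (< 3) are skipped
        loser, p1, p2 = sorted(order[k:k + 3], key=lambda i: scores[i])
        new_pop[loser] = mutate(crossover(population[p1], population[p2], rng), rng, delta)
    return new_pop


if __name__ == "__main__":
    rng = random.Random(0)
    # toy objective (closeness to an arbitrary target vector) instead of running Inner-GAs
    target = [i / 9 for i in range(10)]
    evaluate = lambda g: -sum((x - t) ** 2 for x, t in zip(g, target))
    pop = [[0.5] * 10 for _ in range(30)]
    for _ in range(300):
        pop = meta_generation(pop, evaluate, rng)
    print([round(x, 2) for x in max(pop, key=evaluate)])
```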

The Meta-GA returns the fittest mutation rate function $\mu(y)$. In this study, the parameters of the Meta-GA were not optimised, as this would probably take more computational time than conducting the study itself. However, given that the Meta-GA converged to the same result, the only difference the parameters could make was how quickly the result was found.

Evolved control functions

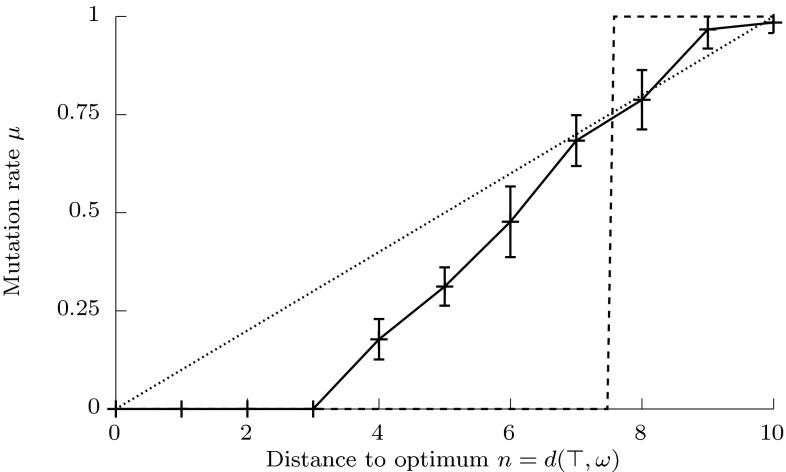

The kind of mutation rate control function the Meta-GA evolves depends greatly on properties of the fitness landscape used in the Inner-GA. In Sect. 2.4 we showed theoretically that for fitness $f(a) = -d(\top, a)$, corresponding to negative distance to the optimum $\top$, the optimal mutation rate increases with the distance $n = d(\top, a)$. Therefore, the population of mutation rate functions in the Meta-GA should evolve the same characteristics in such a landscape. Figure 4 shows the average and standard deviations of the fittest control functions evolved in 20 runs of the Meta-GA using Inner-GAs with strings in (i.e. , ) and fitness defined by $f(a) = -d(\top, a)$. As predicted, the mutation rate increases with $n$. We shall now consider more complex landscapes.

Fig. 4.

Means and standard deviations of mutation rates evolved to minimise expected distance to the optimum in Hamming space after 500 generations. The results are based on 20 runs of the Meta-GA, each evolving mutation rates for generations. Each generation of the Meta-GA involved running the Inner-GAs for 500 generations with 100 individuals. Dashed lines represent theoretical functions optimising short-term (step) and long-term (linear) criteria

Weakly monotonic fitness landscapes

The derivation of variable (or adaptive) optimal mutation rates described in Sect. 2.4 was based on the assumption that fitness is equivalent to negative distance from the top genotype. Biological fitness landscapes, however, can be rugged (Lobkovsky et al. 2011), meaning that fitness may have very little relation to distance in the space of genotypes. In this section, we consider a more general relation between fitness and distance to study the effect of variable mutation rates on adaptation in more biologically realistic landscapes. We begin by considering fitness as a noisy or partially observed distance, and then discuss the monotonic relation between these ordered random variables. We introduce several notions of monotonicity and then prove a theorem on weak monotonicity in a general class of fitness landscapes.

Fitness-distance communication

If fitness $y = f(a)$ is not isomorphic with distance $n = d(\top, a)$, but there is some degree of dependency between the two variables, then one could try to estimate the unobserved distance from observed values of fitness and employ the control function of mutation rate based on the estimated distance. Such a control becomes $\varepsilon$-optimal, where $\varepsilon$ represents some deviation from optimality. The estimation of distance could be done sequentially using, for example, filtering theory (Stratonovich 1959). Here, however, we shall limit our discussion to the simple case of using just the current fitness value instead of the current distance to control the mutation rate.

Given a distribution of strings in $\Omega$ (e.g. a uniform distribution on a Hamming space $\mathcal{H}_\alpha^l$), the fitness $y = f(a)$ and distance $n = d(\top, a)$ form a pair of random variables with joint distribution $P(y, n)$. Note that if $f$ has multiple optima $\top_1, \top_2, \dots$, then $n$ should be understood as the distance from the nearest optimum: $n = \min_i d(\top_i, a)$. The joint distribution $P(y, n)$ defines conditional probabilities $P(y \mid n)$ and $P(n \mid y)$ by the Bayes formula. Mutation of string $a$ into string $b$ results in the change of distance from $n = d(\top, a)$ to $m = d(\top, b)$ and the change of fitness from $y = f(a)$ to $z = f(b)$. If fitness does not communicate more information about the distance than distance itself (i.e. fitness is a ‘noisy’ distance), then one can show that fitness and distance are conditionally independent, so that $y \to n \to m \to z$ forms a Markov chain (see Remark 1 in Appendix 2). In this case, the transition probability $P_\mu(z \mid y)$ between fitness values is expressed using the following composition of transition probabilities $P(n \mid y)$, $P_\mu(m \mid n)$ and $P(z \mid m)$:

$$P_\mu(z \mid y) = \sum_{n} \sum_{m} P(z \mid m)\, P_\mu(m \mid n)\, P(n \mid y)$$

(see Appendix 2 for details). The transition probability $P_\mu(m \mid n)$ is defined by the geometry of the mutation operator in the space of genotypes $\Omega$, and for simple point mutation in a Hamming space it is given by Eq. (6). Conditional probabilities $P(n \mid y)$ and $P(z \mid m)$ are defined by the fitness landscape, and they represent the dependency between fitness and distance.
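With finitely many fitness values and distances, the composition above is a product of three stochastic matrices. The sketch below is a minimal illustration under that assumption: the landscape is summarised by a hypothetical joint table `joint[y][n]` of counts (for example, obtained by enumerating a small Hamming space), the Bayes formula gives $P(n \mid y)$ and $P(y \mid n)$, and the offspring's $P(z \mid m)$ is read from the same table as the parent's $P(y \mid n)$. Function names are our own.

```python
# Illustrative sketch; names and the example table are hypothetical.

def matmul(A, B):
    """Product of matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def conditionals(joint):
    """Bayes formula on a joint table joint[y][n] (counts or probabilities):
    returns P(n | y) (rows indexed by y) and P(y | n) (rows indexed by n).
    Every fitness level and every distance must occur at least once."""
    p_n_given_y = [[c / sum(row) for c in row] for row in joint]
    col = [sum(row[n] for row in joint) for n in range(len(joint[0]))]
    p_y_given_n = [[joint[y][n] / col[n] for y in range(len(joint))] for n in range(len(col))]
    return p_n_given_y, p_y_given_n


def fitness_transition(joint, T):
    """P_mu(z | y) = sum_n sum_m P(n | y) P_mu(m | n) P(z | m), with T[n][m] = P_mu(m | n)."""
    p_n_given_y, p_y_given_n = conditionals(joint)
    return matmul(matmul(p_n_given_y, T), p_y_given_n)


if __name__ == "__main__":
    joint = [[2, 1, 0], [1, 3, 2]]            # hypothetical counts: 2 fitness levels x 3 distances
    T = [[0.8, 0.2, 0.0], [0.1, 0.8, 0.1], [0.0, 0.3, 0.7]]
    for row in fitness_transition(joint, T):
        print([round(p, 4) for p in row])
```

When fitness determines distance one-to-one, both conditional matrices are identities and $P_\mu(z \mid y)$ reduces to $P_\mu(m \mid n)$.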

The simplest and, perhaps, the most important such relationship is linear dependency, represented by correlation. The fitness-distance correlation has been used previously to describe problem difficulty for evolutionary algorithms (Jones and Forrest 1995; Jansen 2001) and neutral mutations (Poli and Galvan-Lopez 2012). The fitness-distance correlation reflects a global monotonic dependency between the pair of ordered random variables. In a biological context, however, such a global measure of monotonicity may be less important, because selection tends to keep biological organisms in neighbourhoods of local optima of fitness landscapes. We therefore define the concepts of local and weak monotonicity relative to a chosen metric. We shall also prove that all landscapes that are continuous and open at local optima are weakly monotonic. This result will allow us to formulate three hypotheses about the control of mutation rates in biological landscapes, which we shall test experimentally in Sect. 5.

Monotonicity of fitness and distance

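We first consider monotonic relationships between the values of a fitness function $f$ at points $a, b \in \Omega$ and their distances to an arbitrary point $\top \in \Omega$ given by a metric $d$ (e.g. a Hamming metric in $\mathcal{H}_\alpha^l$). If all $a$ and $b$ inside some ball $B(\top, r)$, $r > 0$, satisfy the properties below, we say that:

- $f$ is locally monotonic relative to metric $d$ at $\top$ if:

  $$d(\top, a) \le d(\top, b) \;\Longrightarrow\; f(a) \ge f(b)$$

- $d$ is locally monotonic relative to $f$ at $\top$ if:

  $$f(a) \ge f(b) \;\Longrightarrow\; d(\top, a) \le d(\top, b)$$

$f$ and $d$ are locally isomorphic at $\top$ if both implications hold.

We say that $d$ or $f$ are globally monotonic (isomorphic) at $\top$ relative to each other if the relevant property holds over the whole space $\Omega$.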
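For finite spaces these definitions can be checked by brute force over all pairs in a ball. The Python sketch below assumes closed Hamming balls and uses three hypothetical toy landscapes on binary strings of length 4, chosen to mirror the three panels of Fig. 5; the quadratic pair loop is only practical for small balls.

```python
import itertools


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def ball(strings, top, r):
    """Closed Hamming ball B(top, r)."""
    return [s for s in strings if hamming(s, top) <= r]


def f_locally_monotonic(strings, f, top, r):
    """d(top, a) <= d(top, b)  =>  f(a) >= f(b)  for all a, b in B(top, r)."""
    B = ball(strings, top, r)
    return all(f[a] >= f[b] for a in B for b in B
               if hamming(top, a) <= hamming(top, b))


def d_locally_monotonic(strings, f, top, r):
    """f(a) >= f(b)  =>  d(top, a) <= d(top, b)  for all a, b in B(top, r)."""
    B = ball(strings, top, r)
    return all(hamming(top, a) <= hamming(top, b) for a in B for b in B
               if f[a] >= f[b])


def locally_isomorphic(strings, f, top, r):
    return (f_locally_monotonic(strings, f, top, r)
            and d_locally_monotonic(strings, f, top, r))


# Hypothetical toy landscapes on H_2^4, mirroring the cases of Fig. 5
strings = ["".join(p) for p in itertools.product("01", repeat=4)]
top = "0000"
iso = {s: -hamming(s, top) for s in strings}                           # isomorphic
plateau = {s: -min(hamming(s, top), 2) for s in strings}               # plateaus
cliff = {s: -2 * hamming(s, top) + int(s[0] == "1") for s in strings}  # cliffs
```

Here `plateau` is monotonic in $f$ but not in $d$ over the whole space, whereas `d_locally_monotonic(strings, plateau, top, 2)` holds, illustrating how a property can hold only locally.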

The three monotonic relations between fitness and distance defined above are illustrated in Fig. 5. These cases represent idealised situations, because usually the value of distance does not define the value of fitness uniquely and vice versa. Indeed, the pre-image of distance $n$ is the sphere $S(\top, n) = \{a \in \Omega : d(\top, a) = n\}$, and there can be strings with different fitness values within the sphere. Similarly, the pre-image of fitness $x$ is the level set $f^{-1}(x) = \{a \in \Omega : f(a) = x\}$, and strings within this set may have different distances from $\top$. Thus, to describe monotonicity in realistic landscapes, one can modify the definitions by considering the 'average' (i.e. expected) fitness or distance within these sets. In particular, we shall denote the average fitness at distance $n$ and the average distance at fitness $x$ respectively as follows:

$$\bar f(n) := \frac{1}{|S(\top, n)|} \sum_{a \in S(\top, n)} f(a), \qquad \bar d(x) := \frac{1}{|f^{-1}(x)|} \sum_{a \in f^{-1}(x)} d(\top, a)$$

The above averages are particular cases of conditional expectations under the assumption of a uniform distribution of strings in a Hamming space $\mathcal{H}_\alpha^l$. Appendix 3 gives definitions for an arbitrary Borel probability measure on a metric space.
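Under the uniform distribution, the two averages can be computed by grouping strings by distance or by fitness value. The Python sketch below computes the average fitness over each sphere and the average distance over each level set; the four-string landscape is hypothetical.

```python
import itertools
from collections import defaultdict
from statistics import mean


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def average_fitness_at_distance(strings, f, top):
    """bar f(n): mean fitness over the sphere S(top, n)."""
    groups = defaultdict(list)
    for s in strings:
        groups[hamming(s, top)].append(f[s])
    return {n: mean(v) for n, v in sorted(groups.items())}


def average_distance_at_fitness(strings, f, top):
    """bar d(x): mean distance to top over the level set f^{-1}(x)."""
    groups = defaultdict(list)
    for s in strings:
        groups[f[s]].append(hamming(s, top))
    return {x: mean(v) for x, v in sorted(groups.items())}


# Hypothetical example on H_2^2: fitness rewards only the first letter
strings = ["".join(p) for p in itertools.product("01", repeat=2)]
f = {s: int(s[0] == "0") for s in strings}
print(average_fitness_at_distance(strings, f, "00"))
print(average_distance_at_fitness(strings, f, "00"))
```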

Fig. 5.

Schematic representation of monotonic properties described. Abscissae represent string space, ordinates represent fitness. a Fitness is monotonic relative to distance to optimum (fitness landscape can have ‘plateaus’); b distance to optimum is monotonic relative to fitness (landscape can have ‘cliffs’); c fitness and distance to optimum are isomorphic (neither cliffs nor plateaus are allowed)

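In what follows, we shall use the specific notation $\bar f(a)$, with the letter '$a$' instead of $n$, to denote the average fitness at distance $n = d(\top, a)$ (instead of $\bar f(d(\top, a))$). Similarly, we shall use the notation $\bar d(a)$, with the letter '$a$' instead of $x$, to denote the average distance at fitness $x = f(a)$ (instead of $\bar d(f(a))$). Such notation is convenient for defining average (mean) monotonicity. If all $a$ and $b$ inside some ball $B(\top, r)$, $r > 0$, satisfy the properties below, we say that:

- $f$ is on average locally monotonic relative to metric $d$ at $\top$ if:

  $$d(\top, a) \le d(\top, b) \;\Longrightarrow\; \bar f(a) \ge \bar f(b)$$

- $d$ is on average locally monotonic relative to $f$ at $\top$ if:

  $$f(a) \ge f(b) \;\Longrightarrow\; \bar d(a) \le \bar d(b)$$

$f$ and $d$ are on average locally isomorphic at $\top$ if both implications hold.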
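The average versions can be checked in the same brute-force way. Because $\bar f(a)$ depends only on $d(\top, a)$ and $\bar d(a)$ only on $f(a)$, the Python sketch below compares sphere averages and level-set averages instead of individual pairs. It assumes, following the definition of $\bar d$, that level sets are taken over the whole space; the `plateau` and `rugged` landscapes are hypothetical.

```python
import itertools
from collections import defaultdict
from statistics import mean


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def average_fitness_at_distance(strings, f, top):
    groups = defaultdict(list)
    for s in strings:
        groups[hamming(s, top)].append(f[s])
    return {n: mean(v) for n, v in groups.items()}


def average_distance_at_fitness(strings, f, top):
    groups = defaultdict(list)
    for s in strings:
        groups[f[s]].append(hamming(s, top))
    return {x: mean(v) for x, v in groups.items()}


def f_monotonic_on_average(strings, f, top, r):
    """d(top,a) <= d(top,b) => bar f(a) >= bar f(b) in B(top, r)."""
    fbar = average_fitness_at_distance(strings, f, top)
    radii = [n for n in fbar if n <= r]
    return all(fbar[n] >= fbar[m] for n in radii for m in radii if n <= m)


def d_monotonic_on_average(strings, f, top, r):
    """f(a) >= f(b) => bar d(a) <= bar d(b) in B(top, r); bar d is averaged
    over the whole level set f^{-1}(f(a))."""
    dbar = average_distance_at_fitness(strings, f, top)
    values = {f[s] for s in strings if hamming(s, top) <= r}
    return all(dbar[x] <= dbar[y] for x in values for y in values if x >= y)


strings = ["".join(p) for p in itertools.product("01", repeat=4)]
top = "0000"
plateau = {s: -min(hamming(s, top), 2) for s in strings}
rugged = {s: 5 if s == top else hamming(s, top) for s in strings}
```

The `plateau` landscape fails strong monotonicity of $d$ relative to $f$ but is isomorphic on average, while `rugged` is on average monotonic only inside the ball of radius one.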

If $\top$ is a local optimum in a finite space (e.g. a Hamming space), then fitness and distance are always locally monotonic relative to each other (and hence isomorphic) at least inside the ball of radius one (otherwise, $\top$ cannot be a local optimum). However, if the space is large, then this neighbourhood becomes negligible, and therefore the notions of local monotonicity become less important in larger landscapes. For larger neighbourhoods one can speak only about the probability that the implications above are true for some pair of points $a$ and $b$. Thus, for larger neighbourhoods we can define monotonicity in probability (or in measure). However, because monotonicity always holds with some (possibly zero) probability, and it holds trivially with probability one at each point (i.e. in a zero-radius ball $B(\top, 0)$), such a definition becomes useful only if it distinguishes, for example, landscapes in which the probability of monotonicity gradually increases as points get closer to a local optimum. We refer to this notion as weak monotonicity.
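In a finite space, monotonicity in probability can be estimated by counting pairs. The Python sketch below computes, for pairs drawn uniformly from a closed Hamming ball, the fraction satisfying the first local-monotonicity implication among the pairs satisfying its premise. The noisy landscape is hypothetical; the value is trivially 1 at $r = 0$, and weak monotonicity concerns how it behaves as $r$ shrinks towards zero.

```python
import itertools
import random


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def monotonicity_probability(strings, f, top, r):
    """Empirical P{ f(a) >= f(b) | d(top,a) <= d(top,b) } for a, b drawn
    uniformly and independently from the closed ball B(top, r)."""
    B = [s for s in strings if hamming(s, top) <= r]
    premise = holds = 0
    for a in B:
        for b in B:
            if hamming(top, a) <= hamming(top, b):
                premise += 1
                holds += f[a] >= f[b]
    return holds / premise


# Hypothetical rugged landscape on H_4^3: -distance plus Gaussian noise
alpha, l, top = 4, 3, "AAA"
strings = ["".join(p) for p in itertools.product("ACGT", repeat=l)]
rng = random.Random(0)
f = {s: -hamming(s, top) + rng.gauss(0.0, 1.0) for s in strings}
f[top] = max(f.values()) + 1.0  # make the top string the global optimum
for r in range(l + 1):
    print(r, round(monotonicity_probability(strings, f, top, r), 3))
```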

Let $\{a_i\}_{i \in \mathbb{N}}$ be a sequence of points such that the distances $d(\top, a_i)$ converge to zero or the fitness values $f(a_i)$ converge to $f(\top)$. If points $a$, $b$ of any such sequence satisfy the properties below, we say that:

- $f$ is weakly monotonic relative to metric $d$ at $\top$ if:

- $d$ is weakly monotonic relative to $f$ at $\top$ if:

$f$ and $d$ are weakly isomorphic at $\top$ if both conditions hold.

Weak monotonicity is implied by average local monotonicity in some ball $B(\top, r)$ with $r > 0$, because the latter means that the implications above hold with probability one in $B(\top, r)$. Average local monotonicity is in turn implied by (strong) local monotonicity. The relation between the three notions is shown by the implications below:

$$\text{local monotonicity} \;\Longrightarrow\; \text{average local monotonicity} \;\Longrightarrow\; \text{weak monotonicity}$$

Moreover, one may consider an increasing sequence of finite landscapes such that in the limit the landscape is modelled by a continuum metric space. In this case, fitness may fail to be monotonic at every point, including the global optimum, even if fitness is a continuous function. Indeed, it is well known that almost all continuous functions are nowhere differentiable (Banach 1931; Mazurkiewicz 1931), and therefore they are also nowhere monotonic, because a function that is monotonic on an interval is differentiable almost everywhere on it (Lebesgue's theorem). However, as the theorem below shows, weak monotonicity may still hold in such landscapes.

Like weak monotonicity, fitness-distance correlation can also be applied to infinite landscapes, including nowhere monotonic landscapes. However, while fitness-distance correlation describes a global property of a landscape, weak monotonicity effectively describes a gradual increase of fitness-distance correlation in decreasing neighbourhoods of a point. Thus, although weak monotonicity is related to fitness-distance correlation, these notions are not equivalent. In fact, unlike fitness-distance correlation, weak monotonicity holds in a very broad class of landscapes, including infinite landscapes.

Theorem 1

Let $(\Omega, d)$ be a metric space equipped with a Borel probability measure $P$, and let $f : \Omega \to \mathbb{R}$ be $P$-measurable. Let $\top \in \Omega$ be a local optimum: $f(\top) \ge f(a)$ for all $a$ in some ball $B(\top, r)$, $r > 0$. Then

If $f$ is continuous at $\top$, then $f$ is weakly monotonic relative to $d$ at $\top$.

If $f$ maps open balls to open intervals, then $d$ is weakly monotonic relative to $f$ at $\top$.

If $f$ satisfies both conditions, then $f$ and $d$ are weakly isomorphic at $\top$.

The proof of this theorem is given in Appendix 3; it is based on the construction of a decreasing sequence of radii around $\top$ for any increasing sequence of fitness values converging to $f(\top)$, which is guaranteed by the continuity of $f$ at $\top$. Note that we used a metric in the theorem because metric spaces are well understood, but the theorem and its proof can be reformulated in terms of a quasi-pseudometric. Every quasi-uniform space with a countable base (and hence every corresponding topological space) is quasi-pseudometrisable (e.g. see Fletcher and Lindgren 1982, Theorem 1.5), which probably subsumes any topology on DNA or RNA structures (Stadler et al. 2001).

Weak monotonicity implies increasing probability of positive correlation between fitness and negative distance to a local or global optimum in decreasing neighbourhoods. This suggests that the fitness-based control of mutation rate in any continuous and open landscape should resemble the distance-based control in some neighbourhood of an optimum. This forms our first hypothesis:

Hypothesis 1

Optimal mutation rate increases with a decrease in fitness in some neighbourhood of an optimum for realistic fitness landscapes (e.g. biological landscapes), where fitness is not globally isomorphic to distance.

Further, the more monotonic the landscape, the more the optimal mutation rate control function will resemble theoretical functions derived and discussed in Sect. 2; this forms our second hypothesis:

Hypothesis 2

The larger the neighbourhood of weak monotonicity, the more mutation rate control may contribute to evolution towards high fitness.

We test these hypotheses in Sect. 5.

On the role of genotype-phenotype mapping

Mutation occurs at the microscopic level as a random change of a genotype, whereas fitness is defined by the interaction of an organism with its environment, and is therefore a property of the phenotype rather than the genotype. If we denote by $X$ the set of all phenotypes, then the fitness of genotypes can be factorised into a composition $f = \varphi \circ g$ of a genotype-phenotype mapping $g : \Omega \to X$ and phenotypic fitness $\varphi : X \to \mathbb{R}$. We use a function for the genotype-phenotype mapping because we assume, for simplicity, that one genotype cannot be decoded into two or more phenotypes. On the other hand, there are usually many genotypes corresponding to the same phenotype (Schuster et al. 1994). The genotype-phenotype mapping can be seen as a black-box model of DNA decoding via transcription and translation.

The set $X$ of phenotypes is pre-ordered by the values of phenotypic fitness ($u \preceq v$ iff $\varphi(u) \le \varphi(v)$), while the set $\Omega$ of genotypes is pre-ordered by the values of distance from the nearest top genotype ($a \preceq b$ iff $d(\top, a) \ge d(\top, b)$). It is clear from the factorisation that the relation between the fitness $f$ of genotypes and their distance from an optimum depends on the monotonic properties of the genotype-phenotype mapping. For example, genotypic fitness is order-isomorphic with distance when the genotype-phenotype mapping satisfies the condition: $g(a) \preceq g(b)$ if and only if $a \preceq b$.
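The role of the mapping can be illustrated with two hypothetical decodings of the same binary genotypes and one increasing phenotypic fitness; the names `g_count`, `g_binary` and `phi` are illustrative only. In this Python sketch, only the decoding that preserves the distance pre-order yields a genotypic fitness $f = \varphi \circ g$ that is order-isomorphic with distance.

```python
import itertools


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def compose(phi, g):
    """Genotypic fitness f = phi o g."""
    return lambda a: phi(g(a))


def is_order_isomorphic(strings, f, top):
    """f(a) <= f(b)  iff  d(top, a) >= d(top, b), for all pairs of genotypes."""
    return all((f(a) <= f(b)) == (hamming(top, a) >= hamming(top, b))
               for a in strings for b in strings)


def g_count(a):
    """Hypothetical decoding: number of '1' letters (order-preserving here)."""
    return a.count("1")


def g_binary(a):
    """Hypothetical decoding: the genotype read as a binary number."""
    return int(a, 2)


def phi(x):
    """Hypothetical phenotypic fitness, increasing for x >= 0."""
    return x ** 2


strings = ["".join(p) for p in itertools.product("01", repeat=4)]
top = "1111"
print(is_order_isomorphic(strings, compose(phi, g_count), top))   # True
print(is_order_isomorphic(strings, compose(phi, g_binary), top))  # False
```

For example, `g_binary` decodes "0111" (one substitution from the top) below "1000" (three substitutions away), which breaks the isomorphism.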

The factorisation shows that part of the fitness function, specifically the genotype-phenotype mapping $g$, is a property of an organism, and therefore a monotonic relation between fitness and distance can be an adaptive and evolving property. This forms our third hypothesis:

Hypothesis 3

The extent to which mutation rate control may contribute to the evolution of high fitness is itself a trait, which will evolve across biological organisms.

We analyse data that may support this hypothesis in Sect. 5.

Evolving fitness-based mutation rate control functions

In this section, we conduct a computational experiment using landscapes with biological origins to test the hypotheses arising from our theory in Sect. 4. We used the Meta-GA technique described earlier (see Sect. 3) to evolve approximately optimal mutation rate control functions for 115 published complete landscapes of transcription factor (TF) binding (Badis et al. 2009). This also allows us to establish the range of fitness values over which monotonicity of the optimal mutation rate holds, quantifying the extent to which Hypothesis 1 holds for these biological landscapes. TFs have evolved over very long periods to bind to specific DNA sequences. The landscapes show experimentally measured strengths of interaction (DNA-TF binding scores) between double-stranded DNA sequences of length $l = 8$ base pairs each and a particular transcription factor. Thus, we represent the set of all DNA sequences by the Hamming space $\mathcal{H}_4^8$ (i.e. $\alpha = 4$, $l = 8$), and consider the DNA-TF binding score as their fitness, which is clearly different from the negative Hamming distance from the top string (the sequence with the maximum DNA-TF binding score).

Evolved control functions

We used the Meta-GA evolutionary optimisation technique, described in Sect. 3, to obtain for each landscape an approximately optimal mutation rate control function maximising the average DNA-TF binding score in the population (expected fitness) after 500 replications. Our experiments showed that 16 replicate runs were sufficient to achieve satisfactory convergence in feasible time for each of the 115 transcription factor landscapes.

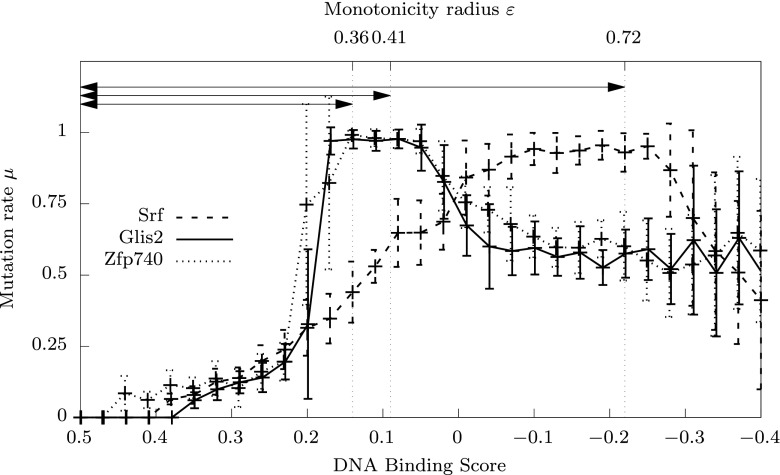

Figure 6 shows the mean values and standard deviations of the evolved mutation rates for three transcription factors: Srf, Glis2 and Zfp740. Evolved functions for all landscapes are shown in supplementary Fig. 9. One can see that the evolved function for each transcription factor landscape is approximately monotonic in the direction predicted: close to zero mutation at the maximum fitness, rising to high levels further from the maximum fitness value. This supports Hypothesis 1 as developed from the theory in Sect. 4.

Fig. 6.

Examples of GA-evolved optimal mutation rate control functions. Data are shown for the transcription factors Srf, Glis2 and Zfp740. Each curve represents the average of 16 independently evolved optimal mutation rate functions on a particular transcription factor DNA-binding landscape (Badis et al. 2009). Error bars represent standard deviations from the mean. Similar curves for all 115 landscapes are shown in supplementary Fig. 9. The arrows indicate the monotonicity radius, which defines an interval of fitness values below the maximum where the mutation rate increases monotonically as fitness decreases

Small standard deviations indicate good convergence to a particular control function. Observe that convergence is poor in low-fitness areas of the landscape, which are poorly explored by the genetic algorithm. Once the mutation rate has peaked near its maximum value, the mutation rates tend to decrease and become erratic. As will be shown in the next section, this occurs at lower fitness values, at which the landscape is no longer monotonic (i.e. further from the peak of fitness).

Landscapes for transcription factors

The variation in the evolved mutation rate control functions is clearly related to a variation in the properties of the landscapes. Our theoretical analysis suggests that the main property affecting mutation rate control is monotonicity of the landscape relative to a metric measuring the mutation radius. In particular, the radius of point mutation is measured by the Hamming metric, and we shall look into the local and weak monotonic properties of the transcription factor landscapes relative to the Hamming metric.

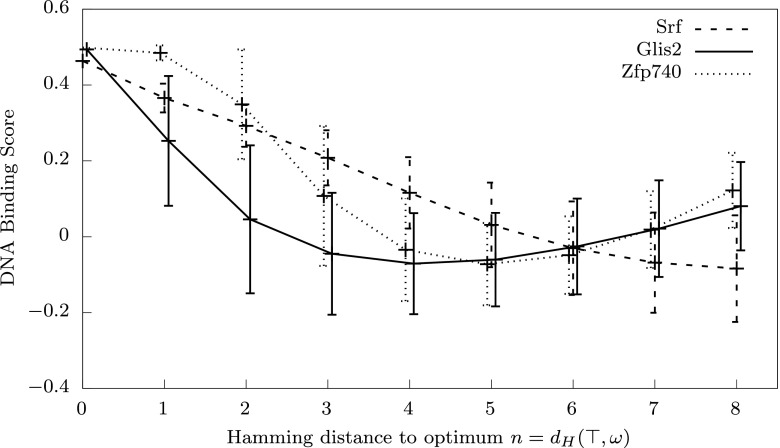

Figure 7 shows average DNA-TF binding scores within spheres around the optimal string as a function of Hamming distance from the optimum. Data are shown for three transcription factors: Srf, Glis2 and Zfp740. Lines connect average values at discrete distances for visualisation purposes. Error bars show standard deviations of the DNA-TF binding scores within the spheres. Distributions of fitness with respect to Hamming distance for all 115 transcription factors are shown in supplementary Fig. 10.

Fig. 7.

Examples of fitness landscapes based on the binding score between DNA sequences and transcription factors (TF) from (Badis et al. 2009). Data are shown for the transcription factors: Srf, Glis2 and Zfp740. Lines connect mean values of the binding score shown as functions of the Hamming distance from the top string (a sequence with the highest DNA-TF binding score). Error bars represent standard deviations. Similar curves for all 115 landscapes are shown in supplementary Fig. 10

One can see from Fig. 7 that the landscape for the Srf factor has monotonic properties: the average values increase steadily for strings that are closer to the optimum, and the deviations from the mean within the spheres are relatively small. This is in contrast to the other two landscapes. We note also that the average values for Glis2 decrease quite sharply around the optimum, while the landscape for Zfp740 has a relatively flat plateau area around the optimum, which means that there are many sequences with a high DNA-TF binding score. This difference may explain the different gradients of optimal mutation rates near the maximum fitness shown in Fig. 6.

Monotonicity and controllability

Our results show that the evolved optimal mutation rates rise from zero to very high levels as fitness decreases from the maximum value $f(\top)$ to some value (see Fig. 6 and supplementary Fig. 9). We refer to the corresponding value as the monotonicity radius, as it defines the neighbourhood of the optimum $\top$, in terms of fitness values, in which the evolved mutation rate control function has monotonic properties. We find substantial variation in the monotonicity radius among transcription factors.
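The text does not spell out how the monotonicity radius is read off an evolved curve, so the Python sketch below uses one simple, hypothetical rule: walk down from the maximum fitness while the mean mutation rate does not decrease, and report the fitness drop covered. The example curve is made up, and noisy curves such as those in Fig. 6 would probably need smoothing or a tolerance before applying such a rule.

```python
def monotonicity_radius(fitness, rates):
    """Hypothetical operationalisation of the monotonicity radius.

    fitness: fitness values in strictly decreasing order (fitness[0] is the
             maximum); rates: mean evolved mutation rates at these values.
    Returns fitness[0] - fitness[k], where k is the largest index such that
    the rates are non-decreasing along fitness[0..k].
    """
    if not fitness or len(fitness) != len(rates):
        raise ValueError("fitness and rates must be non-empty and of equal length")
    if any(x <= y for x, y in zip(fitness, fitness[1:])):
        raise ValueError("fitness must be strictly decreasing")
    k = 0
    while k + 1 < len(rates) and rates[k + 1] >= rates[k]:
        k += 1
    return fitness[0] - fitness[k]


# Illustrative (made-up) control function, not data from the paper
fitness = [1.0, 0.9, 0.8, 0.7, 0.6]
rates = [0.0, 0.1, 0.4, 0.3, 0.5]
print(monotonicity_radius(fitness, rates))
```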

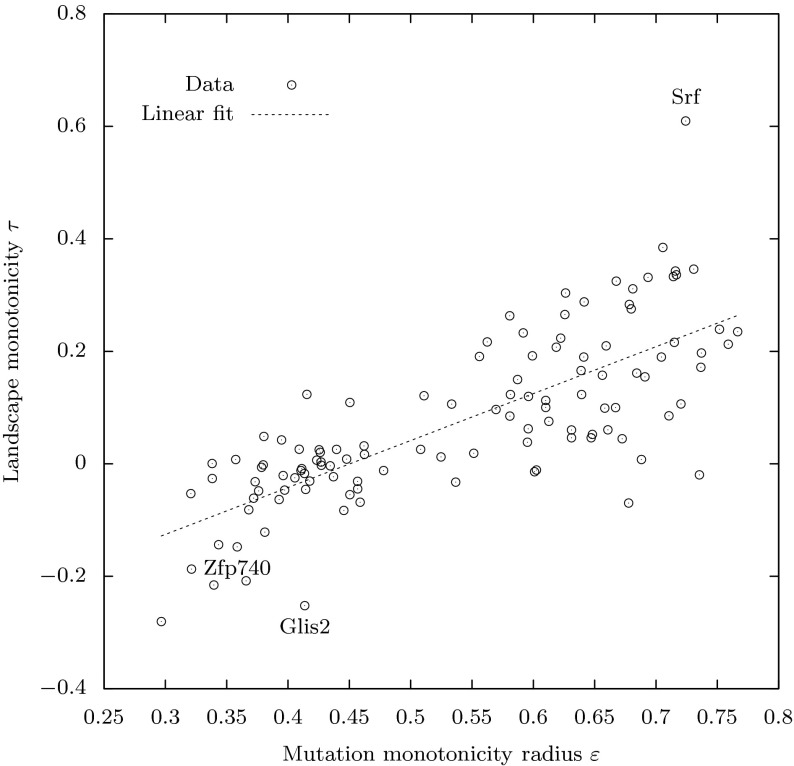

We hypothesised that the variation in the optimal mutation rate control functions relates to variation in the monotonicity of the transcription factor landscapes (Hypothesis 2). Various measures have been proposed for the roughness of biological landscapes (Lobkovsky et al. 2011). Here we focus on Kendall's $\tau$ correlation, which is directly concerned with monotonicity: $\tau$ compares the proportion of mutations that, in moving closer to the optimum in string space, also increase in fitness with the proportion that decrease in fitness. As shown in Fig. 8, we find that the value of $\tau$ of a landscape is indeed related to the monotonicity radius of the evolved mutation rate control functions (Spearman's rank correlation), supporting Hypothesis 2.

Fig. 8.

Linear relation between monotonicity of the landscapes measured by Kendall's $\tau$ correlation (ordinates) and the monotonicity radius (abscissae) of the corresponding evolved mutation rate control functions. Three labels show data for the three transcription factors shown in Figs. 6 and 7
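Kendall's $\tau$ between fitness and negative distance can be computed directly on a complete landscape. Because many strings share the same distance, the Python sketch below uses the tie-corrected tau-b variant over all pairs of strings; whether the study used this variant, or this set of pairs, is an assumption. For the full $\mathcal{H}_4^8$ landscapes, an $O(N \log N)$ routine such as `scipy.stats.kendalltau` (which computes tau-b by default) would be preferable to this quadratic loop.

```python
import itertools
import math


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def kendall_tau_b(xs, ys):
    """Kendall's tau-b (tie-corrected), computed over all pairs in O(N^2)."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in itertools.combinations(zip(xs, ys), 2):
        dx, dy = x1 - x2, y1 - y2
        ties_x += dx == 0
        ties_y += dy == 0
        if dx * dy > 0:
            concordant += 1
        elif dx * dy < 0:
            discordant += 1
    n0 = len(xs) * (len(xs) - 1) // 2
    denom = math.sqrt((n0 - ties_x) * (n0 - ties_y))
    if denom == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return (concordant - discordant) / denom


def landscape_tau(strings, f, top):
    """tau between fitness and negative Hamming distance to the top string."""
    return kendall_tau_b([f[s] for s in strings],
                         [-hamming(s, top) for s in strings])


strings = ["".join(p) for p in itertools.product("ACGT", repeat=3)]
top = "AAA"
iso = {s: -hamming(s, top) for s in strings}  # hypothetical isomorphic landscape
print(landscape_tau(strings, iso, top))  # 1.0
```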

Finally, we investigated whether these related features of the TF landscapes and mutation rate functions themselves relate to the biological evolution of these TF systems. To test this, we looked at the evolutionary origins of the TF families to which the 115 TFs tested above belonged, using an integer scale indicating key splits in the tree of eukaryotic life (Weirauch and Hughes 2011). We find a significant relationship between this scale of biological evolution and the monotonicity radius (Spearman's rank correlation). This indicates that TFs in families that originated more recently (e.g. in families restricted to Deuterostomes, rather than being present across all eukaryotic life) tend to have broader regions over which the optimal mutation rate monotonically increases with distance from the binding optimum. This is consistent with Hypothesis 3, indicating that the extent to which mutation rate control may contribute to the evolution of high fitness itself evolves through the tree of life.

Discussion

In this paper we have developed and tested theory relating to the control of the mutation rate in biological sequence landscapes. To do so, we had to move the theory closer to the biology in three ways. Firstly (in Sect. 2), we generalised Fisher's geometric model of adaptation from its Euclidean space (continuous and infinite) to a discrete, finite Hamming space of strings. Doing so demonstrated that, in contrast to the behaviour in Euclidean space, where the probability of beneficial mutation behaves similarly at different distances from the optimum (Orr 2003), the probability of beneficial mutation, for a given mutation size, varies markedly depending on the distance from the optimum (Fig. 2). Secondly, we analytically derived functions for optimal control of the mutation rate minimising the expected Hamming distance to a particular point (optimal string). We also demonstrated a variation of these control functions dependent on specific formulations of the optimisation problem. Nonetheless we observed consistency: all optimal functions increase monotonically (Fig. 3). Thirdly, we developed theory concerning monotonic properties of fitness landscapes and established sufficient conditions for weak monotonicity. The theory demonstrated that all biological landscapes over discrete spaces, however rugged, are characterised by monotonic properties in some neighbourhood of the optimum. Therefore, optimal solutions to the geometric problem of optimal mutation rate control based on distance can be applied more broadly to problems of $\varepsilon$-optimal control of mutation rate based on fitness in biological systems.

Empirical biological fitness landscapes mapping genotypes to fitness values within a small, defined subset of genotypic space are becoming increasingly available (de Visser and Krug 2014). Here we use the test case of the affinities of 115 different transcription factors for all possible eight-base-pair DNA sequences (Badis et al. 2009). We used these landscapes to test hypotheses arising from the theory, relating to the nature of optimal mutation rate functions (Hypotheses 1–3; Figs. 6, 7, 8). In each case we find evidence to support the hypothesis, consistent with the idea that our theory is not only correct but, as expected, substantively relevant to such biological fitness landscapes.

Given that we find this theory to be relevant to biological fitness landscapes, we need to ask how it might manifest itself within biology. There are several requirements if biological organisms are to exert any approximation to optimal mutation rate control. The first requirement is variation in mutation rate. There is evidence for abundant variation in biological mutation rates, both across species (Sung et al. 2012) and among populations of a species (Bjedov et al. 2003). Variation is therefore possible. However, for this theory to be relevant, that variation needs to be controllable by the organism. This in turn requires that mutation rate varies right down to the level of an individual genotype, i.e. mutation rate plasticity (MRP). There is evidence for MRP in ‘stress-induced mutagenesis’ (Galhardo et al. 2007) and related phenomena, such as the increased number of mutations in sperm from older males (Kong et al. 2012). However, while this constitutes MRP, the possibility of control requires that this plasticity is not merely the inevitable result of an organism’s environment (e.g. the accumulation of damage with time or due to stress factors), but controllable by the organism in response to that environment. The proximate and ultimate causes of stress-induced mutagenesis are much debated, but that they include any form of ‘control’ is far from clear (MacLean et al. 2013). Clearer evidence of control is, however, present in a novel example of MRP we described recently (Krašovec et al. 2014). In this case, there is environmentally dependent MRP that can be switched on or off by the presence or absence of a particular gene (luxS).

The next requirement for a biological analogue of the theory described here is that control of the mutation rate may be exercised as a decreasing function of fitness. This requires that an organism can somehow assay its own fitness. This is a non-trivial requirement in that fitness is a function of one or more generations of an organism’s offspring, not of an organism itself. Various proxies are conceivable that might give an organism an indication of its fitness. These include counting its offspring relative to some internal or external clock, counting the population as a whole, or testing aspects of the environment that may correlate with the future likelihood of offspring. The last of these could include stressors, meaning that stress-induced mutagenesis might meet this requirement. In our recently identified example, the aspect of the environment with which mutation rate varies is the density of a bacterial culture. Population density can act as a good proxy for fitness in some circumstances (e.g. in a fixed volume bacterial culture), and the mutation rate does indeed decrease with increasing density (Krašovec et al. 2014), consistent with the fitness-associated control of mutation rate we here determine to be optimal.

The final requirement for the existence of biological mutation-rate control of the sort addressed here is that it is possible for it to evolve and be maintained by the processes of biological evolution. This is not trivial in that it involves the evolution of plasticity, which is not as straightforward or common in biology as might be expected (Scheiner and Holt 2012). It also involves so-called 'second-order selection' (Tenaillon et al. 2001). This is because any particular mutation rate or MRP is unlikely to affect an individual's fitness (and therefore selection) directly; rather, MRP must be selected for indirectly via the genetic effects it produces. Nonetheless, phenotypic plasticity occurs widely and, while rare, there are clear examples of second-order selection occurring in biology (Woods et al. 2011). Furthermore, here we demonstrate MRP rapidly evolving de novo to particular forms (Fig. 6). The genetic algorithm (GA) in this case was not created to mimic biology, and the group selection used by the outer GA in particular is rather un-biological. However, others, working with explicitly biological population genetic models, also find the evolution of MRP (Ram and Hadany 2012). This implies that not only is the MRP predicted here possible for biological organisms, but it may reasonably be expected to evolve and be maintained. It remains to be tested whether the precise range and nature of the MRP identified by Krašovec et al. (2014) does indeed fulfil this role, i.e. to enable populations to evolve faster and/or further in realised, whole-organism biological fitness landscapes in a similar fashion to the evolutionary advantage seen for the in silico molecular interaction landscapes tested here (Fig. 6). Nonetheless, such density-dependent MRP (Krašovec et al. 2014) is a prime candidate for a biological manifestation of the mutation rate control which we have addressed here.

We have focused on fitness-associated control of mutation rate. However, mutation is only one evolutionary process where fitness-associated control may be beneficial. Recombination and dispersal are also evolutionary processes that may be under the control of the individual and therefore open to similar effects. Fitness-associated recombination has been demonstrated to be advantageous theoretically (Hadany and Beker 2003; Agrawal et al. 2005) and identified in biology (Agrawal and Wang 2008; Zhong and Priest 2011). Similarly, the idea that dispersal associated with low fitness might be advantageous has a basis in simulation of spatially differentiated populations (Aktipis 2004, 2011). This association might perhaps be framed more generally in terms of ‘fitness-associated dispersal’. Thus, the framework for control of mutation rate in response to fitness that we have developed here may in future be applicable to both recombination and dispersal.

Overall, our development of theory and testing its predictions in silico not only clarifies ideas around the monotonicity of fitness landscapes and mutation rate control, it leads directly to hypotheses about specific systems in living organisms. At the same time there is the potential for greater insight through further development of the theory. Three directions seem particularly likely to be fruitful.

First, while it is striking how effective mutation rate control is at enabling adaptive evolution, without invoking selection in our in silico experiments, it will be important to consider the role of selection strategies. Such strategies may implicitly modify fitness functions. For instance, one of the analytically derived functions shown in Fig. 3 is the mutation rate function for a DNA space ($\alpha = 4$) which maximises the probability of adaptation (as derived by Bäck (1993) for binary strings). As outlined in Sect. 2.4, maximising the probability of adaptation is equivalent to maximising expected fitness of the offspring relative to its parent. This effect may be implicit in a selection strategy that removes the offspring of reduced fitness that will inevitably be produced by maximising offspring expected fitness. Given the importance of selection in biology, we therefore anticipate that such functions may be closer to mutation rate control functions in living organisms. This requires further work.

A second area for development is in variable adaptive landscapes. The importance of time-varying adaptive landscapes in biological evolution is becoming increasingly appreciated (Mustonen and Lässig 2009; Collins 2011) and variable mutation rates have a particular role here (Stich et al. 2010). It is worth noting, however, that our derivation of optimal mutation rate functions is not dependent on a fixed landscape, as it depends only on the fitness values. Nonetheless, as we demonstrate for the transcription factor landscapes, variation in landscapes' monotonic properties relates to the shape of mutation rate functions in predictable ways (Fig. 8). This deserves further exploration both theoretically and empirically: measuring variation in the monotonic properties of real biological landscapes will be informative about optimal mutation rate functions and vice versa.

Finally, there is potential to develop theory around the role of the genotype-phenotype mapping. Landscape monotonicity, as explored here, is not absolute; it may depend on this mapping. That is, if the decoding of DNA changes, it may be possible to convert a non-monotonic landscape into a monotonic one. Biology uses a variety of such decoding schemes which may themselves evolve. For the transcription factor landscapes used here, the decoding scheme is defined by the biochemical interactions between the transcription factor (a protein molecule) and DNA. Thus, evolution of transcription factors constitutes evolution of DNA-decoding, and indeed we do find a relationship between the evolutionary age of gene families and the monotonic properties of the associated landscapes. A more familiar example is the genetic code, where there is much existing work on its evolution (e.g. Freeland et al. 2000). Determining how evolution of such codes affects the monotonic properties of biological landscapes as explored here may, therefore, provide novel insights into large-scale evolutionary patterns. Ultimately, theory such as this that identifies analytically or empirically optimal mutation rate control functions may help make predictions about evolutionary responses to future environmental change (Chevin et al. 2010) or inferences about the environment(s) within which particular organisms evolved. In the meantime, mutation rate control as developed here may assist directed evolution within biological and other complex landscapes, for instance in the evolution of DNA-protein binding (Knight et al. 2009).


Acknowledgments

This work was supported by the Engineering and Physical Sciences Research Council [grant number EP/H031936/1]; and the Biotechnology and Biological Sciences Research Council [grant numbers BB/L009579/1, BB/M020975/1, BB/M021106/1, BB/M021157/1]. CGK was partially supported by a fellowship from the Wellcome Trust [grant number 082453/Z/07/Z]. The dataset underpinning the results is openly available from Zenodo at http://doi.org/bd2w.

Appendix 1: Intersection of spheres in Hamming space

Proposition 1

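Let $S(a, r)$ and $S(c, m)$ be two spheres in $\mathcal{H}_\alpha^l$ with Hamming distance between the centres $d(a, c) = n$. Then the cardinality of their intersection is

$$|S(a, r) \cap S(c, m)| = \sum_{\substack{r_- + r_+ + r_0 = r \\ r_+ - r_- = m - n}} \binom{n}{r_-} \binom{n - r_-}{r_0} (\alpha - 2)^{r_0} \binom{l - n}{r_+} (\alpha - 1)^{r_+} \tag{9}$$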

Proof

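The intersection is formed by points $b \in S(a, r) \cap S(c, m)$, which form triangles together with the centres of the spheres $a$ and $c$:

$$d(a, b) = r, \qquad d(c, b) = m, \qquad d(a, c) = n$$

Point $b$ can be obtained equivalently by substituting $r$ letters in $a$ or by substituting $m$ letters in $c$, and we shall count using the smallest number of substitutions, $\min\{r, m\}$; without loss of generality, let this be $r$, the number of substitutions in $a$.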

Consider a substitution of a letter of string a into letter . There are three possible cases:

- $a_i \neq c_i$, $b_i = c_i$:

Such substitutions contribute to a decrease of distance between the strings, and we refer to them as beneficial substitutions. There are $n$ letters in $a$ such that $a_i \neq c_i$. The number of $r_+$ beneficial substitutions out of $n$ letters in $a$ is $\binom{n}{r_+}$.

- $a_i = c_i$, $b_i \neq c_i$:

Such substitutions contribute to an increase of distance between the strings, and we refer to them as deleterious substitutions. There are $l - n$ letters in $a$ such that $a_i = c_i$, and each of them can be substituted into $\alpha - 1$ letters $b_i \neq a_i$. The number of $r_-$ deleterious substitutions out of $l - n$ letters in $a$ is $\binom{l-n}{r_-}(\alpha-1)^{r_-}$.

- $a_i \neq c_i$, $b_i \neq c_i$:

Such substitutions do not change the distance between the strings, and we refer to them as neutral substitutions. After $r_+$ beneficial substitutions, there are $n - r_+$ letters in $a$ such that $a_i \neq c_i$, and each of them can be substituted into $\alpha - 2$ letters $b_i \notin \{a_i, c_i\}$. The number of $r_0$ neutral substitutions out of $n - r_+$ letters in $a$ is $\binom{n-r_+}{r_0}(\alpha-2)^{r_0}$.

The product of these three numbers gives the number of points $b$ obtained by $r_+$ beneficial, $r_-$ deleterious and $r_0$ neutral substitutions. For fixed points $a$ and $c$ with $d(a, c) = n$, the third point $b \in S(a, r) \cap S(c, m)$ can be obtained using different values of $r_+$, $r_-$ and $r_0$. However, not all values of $r_+$, $r_-$ and $r_0$ are admissible.

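First, let us establish the range for $r_+$ and $r_-$. Using the triangle inequalities for the Hamming metric we have $d(a, c) \le d(a, b) + d(b, c)$ and $d(b, c) \le d(b, a) + d(a, c)$, or (substituting the values $n$, $m$ and $r$):

$$n \le r + m, \qquad m \le r + n.$$

This gives inequalities $n + r - m \ge 0$ and $m + r - n \ge 0$. Observe that

$$\frac{n + r - m}{2} + \frac{m + r - n}{2} = r.$$

If $m = n - r$, then $(n + r - m)/2 = r$, which means that all $r$ substitutions are beneficial. Thus, $r_+$ is bounded above by $\lfloor (n + r - m)/2 \rfloor$.

If $m = n + r$, then $(m + r - n)/2 = r$, which means that all $r$ substitutions are deleterious. Thus, $r_-$ is bounded above by $\lfloor (m + r - n)/2 \rfloor$.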

Given $r_+$ and $r_-$, the number of neutral substitutions is computed from the condition:

$$r_+ + r_- + r_0 = r \quad\Longrightarrow\quad r_0 = r - r_+ - r_-.$$

Finally, because neutral substitutions do not change the distances, the difference $r_+ - r_-$ represents the change of the distance to $c$ (i.e. the change $d(a, c) - d(b, c) = n - m$, if $b \in S(a, r) \cap S(c, m)$). Thus,

$$r_+ - r_- = n - m.$$

These conditions are the required guards in the summation (9).
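Formula (9) can be checked numerically. The Python sketch below evaluates the guarded sum and compares it with a brute-force count over all $\alpha^l$ strings of a small space. The function names `intersection_size`, `hamming` and `brute_force` are illustrative only, and placing $a$ at the all-zero string (with $c$ differing from it in the first $n$ positions) is an assumption that should lose no generality, since the count depends only on $d(a, c)$.

```python
from itertools import product
from math import comb


def intersection_size(l: int, alpha: int, n: int, r: int, m: int) -> int:
    """|S(a, r) ∩ S(c, m)| for d(a, c) = n, following the guarded sum (9).

    Strings have length l over an alphabet of alpha >= 2 letters.
    """
    total = 0
    # Enumerate the number of deleterious substitutions r_minus; the guards
    # r_+ - r_- = n - m and r_0 = r - r_+ - r_- then fix the other two counts.
    for r_minus in range(l - n + 1):
        r_plus = r_minus + n - m
        r_zero = r - r_plus - r_minus
        # r_zero >= 0 enforces the upper bounds on r_plus and r_minus.
        if r_plus < 0 or r_plus > n or r_zero < 0 or r_zero > n - r_plus:
            continue
        total += (comb(n, r_plus)                                     # beneficial
                  * comb(n - r_plus, r_zero) * (alpha - 2) ** r_zero  # neutral
                  * comb(l - n, r_minus) * (alpha - 1) ** r_minus)    # deleterious
    return total


def hamming(u, v) -> int:
    """Number of positions at which u and v differ."""
    return sum(x != y for x, y in zip(u, v))


def brute_force(l: int, alpha: int, n: int, r: int, m: int) -> int:
    """Count the intersection directly by enumerating all alpha**l strings."""
    a = (0,) * l
    c = (1,) * n + (0,) * (l - n)  # a centre at Hamming distance n from a
    return sum(1 for b in product(range(alpha), repeat=l)
               if hamming(a, b) == r and hamming(c, b) == m)


# Exhaustive comparison in a small space of DNA-like strings (alpha = 4, l = 4).
for n, r, m in product(range(5), repeat=3):
    assert intersection_size(4, 4, n, r, m) == brute_force(4, 4, n, r, m)
```

For instance, with $\alpha = 4$, $l = 4$ and $n = r = m = 2$ the sum gives 16 points: 4 from two neutral substitutions and 12 from one beneficial plus one deleterious substitution.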

Appendix 2: Memoryless communication

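Let us consider an $\mathcal{X} \times \mathcal{Y}$-valued stochastic process $\{(x_t, y_t)\}$, where $\mathcal{X}$ and $\mathcal{Y}$ are measurable sets. Our interest is in the 'similarity' between the marginal processes $\{x_t\}$ and $\{y_t\}$ under special assumptions on the communication between $X$ and $Y$. Recall that a Markov transition kernel from $\mathcal{X}$ to $\mathcal{Y}$ is a conditional probability measure $P(\cdot \mid x)$ on $\mathcal{Y}$ such that $P(B \mid x)$ is a measurable function of $x$ for each measurable set $B \subseteq \mathcal{Y}$. We shall use the measure-theoretic notation $P(dy \mid x)$ for a transition kernel.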

Proposition 2

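Let $\mathcal{X}$ and $\mathcal{Y}$ be measurable sets, and let $\{(x_t, y_t)\}$ be an $\mathcal{X} \times \mathcal{Y}$-valued stochastic process such that elements of the marginal process $\{y_t\}$ are conditionally independent given $\{x_t\}$:

$$P(dy_t, dy_s \mid x_t, x_s) = P(dy_t \mid x_t)\, P(dy_s \mid x_s).$$

Then the transition kernel $P(dy_s \mid y_t)$ can be expressed as a composition of transition kernels $P(dx_t \mid y_t)$, $P(dx_s \mid x_t)$ and $P(dy_s \mid x_s)$ as follows:

$$P(dy_s \mid y_t) = \int_{\mathcal{X}}\int_{\mathcal{X}} P(dy_s \mid x_s)\, P(dx_s \mid x_t)\, P(dx_t \mid y_t).$$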

This transition kernel has the following properties:

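- If $X$ and $Y$ are statistically independent, then $P(dy_s \mid y_t)$ is independent of $y_t$: $P(dy_s \mid y_t) = P(dy_s)$.

- If $P(dy \mid x)$ corresponds to a function $f: \mathcal{X} \to \mathcal{Y}$, then $P(y_s \in B \mid y_t) = \int_{\mathcal{X}} P(x_s \in f^{-1}(B) \mid x_t)\, P(dx_t \mid y_t)$ for every measurable $B \subseteq \mathcal{Y}$.

- If $P(dx \mid y)$ corresponds to a function $g: \mathcal{Y} \to \mathcal{X}$, then $P(dy_s \mid y_t) = \int_{\mathcal{X}} P(dy_s \mid x_s)\, P(dx_s \mid g(y_t))$.

- If $P(dy \mid x)$ corresponds to a bijection $f: \mathcal{X} \to \mathcal{Y}$, then $P(y_s \in B \mid y_t) = P(x_s \in f^{-1}(B) \mid x_t = f^{-1}(y_t))$.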
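For finite $\mathcal{X}$ and $\mathcal{Y}$, transition kernels are row-stochastic matrices and the composition in Proposition 2 reduces to a matrix product. The sketch below compares that product with $P(y_s \mid y_t)$ computed directly from a joint distribution that satisfies the conditional-independence assumption. The kernels `p_xt`, `T` and `E` are hypothetical values chosen only for illustration, and the observation kernel $P(dy \mid x)$ is assumed to be the same at times $t$ and $s$. Exact rational arithmetic (`fractions.Fraction`) makes the check an equality rather than a tolerance test.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical finite example: X = {0, 1, 2}, Y = {0, 1}.
p_xt = [F(1, 2), F(1, 3), F(1, 6)]            # P(x_t)
T = [[F(1, 2), F(1, 4), F(1, 4)],             # P(x_s | x_t), row = x_t
     [F(1, 3), F(1, 3), F(1, 3)],
     [F(0), F(1, 2), F(1, 2)]]
E = [[F(3, 4), F(1, 4)],                      # P(y | x), row = x (same at t and s)
     [F(1, 2), F(1, 2)],
     [F(1, 5), F(4, 5)]]


def matmul(A, B):
    """Compose two finite kernels (row-stochastic matrices)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def posterior(p_x, E):
    """P(x_t | y_t) by the Bayes formula; row = y_t (assumes P(y_t) > 0)."""
    p_y = [sum(p_x[x] * E[x][y] for x in range(len(p_x))) for y in range(len(E[0]))]
    return [[p_x[x] * E[x][y] / p_y[y] for x in range(len(p_x))] for y in range(len(p_y))]


# Composition: P(dx_t | y_t), then P(dx_s | x_t), then P(dy_s | x_s).
K = matmul(matmul(posterior(p_xt, E), T), E)

# Joint law in which y_t and y_s are conditionally independent given (x_t, x_s).
joint = {(xt, xs, yt, ys): p_xt[xt] * T[xt][xs] * E[xt][yt] * E[xs][ys]
         for xt, xs, yt, ys in product(range(3), range(3), range(2), range(2))}


def direct(ys, yt):
    """P(y_s = ys | y_t = yt) obtained by marginalising the joint law."""
    num = sum(v for (_, _, a, b), v in joint.items() if a == yt and b == ys)
    den = sum(v for (_, _, a, _), v in joint.items() if a == yt)
    return num / den


assert all(K[yt][ys] == direct(ys, yt) for yt in range(2) for ys in range(2))
```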

Proof

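The transition kernel $P(dy_s \mid y_t)$ can generally be expressed as follows:

$$P(dy_s \mid y_t) = \int_{\mathcal{X}}\int_{\mathcal{X}} P(dy_s \mid x_s, x_t, y_t)\, P(dx_s \mid x_t, y_t)\, P(dx_t \mid y_t).$$

Using the Bayes formula and conditional independence gives

$$P(dy_s \mid x_s, x_t, y_t) = P(dy_s \mid x_s), \qquad P(dx_s \mid x_t, y_t) = \frac{P(dy_t \mid x_t, x_s)\, P(dx_s \mid x_t)}{P(dy_t \mid x_t)} = P(dx_s \mid x_t).$$

Thus, $P(dy_s \mid y_t)$ can be expressed using the composition of transition kernels $P(dx_t \mid y_t)$, $P(dx_s \mid x_t)$ and $P(dy_s \mid x_s)$. We now consider four important cases.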

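- If $X$ and $Y$ are independent, then $P(dy \mid x) = P(dy)$ and $P(dx \mid y) = P(dx)$, and therefore

$$P(dy_s \mid y_t) = P(dy_s) \int_{\mathcal{X}}\int_{\mathcal{X}} P(dx_s \mid x_t)\, P(dx_t) = P(dy_s).$$

- If $P(dy \mid x) = \delta_{f(x)}(dy)$, then

$$P(y_s \in B \mid y_t) = \int_{\mathcal{X}}\int_{\mathcal{X}} \delta_{f(x_s)}(B)\, P(dx_s \mid x_t)\, P(dx_t \mid y_t) = \int_{\mathcal{X}} P(x_s \in f^{-1}(B) \mid x_t)\, P(dx_t \mid y_t),$$

which gives the resulting expression.

- If $P(dx \mid y) = \delta_{g(y)}(dx)$, then

$$P(dy_s \mid y_t) = \int_{\mathcal{X}}\int_{\mathcal{X}} P(dy_s \mid x_s)\, P(dx_s \mid x_t)\, \delta_{g(y_t)}(dx_t).$$

The resulting expression is obtained by integrating over $x_t$ for each $x_s$ and $y_s$.

- The case of a bijection $f$ follows from the previous two cases, because then $P(dx \mid y) = \delta_{f^{-1}(y)}(dx)$.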
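Two of these cases can be checked in a finite setting. In the sketch below (again with hypothetical kernels chosen only for illustration), an observation kernel whose rows all equal one distribution `q` makes $X$ and $Y$ independent, so every row of $P(y_s \mid y_t)$ should equal `q`. A permutation matrix encoding a bijection $f$, here the hypothetical $f(x) = (x + 1) \bmod 3$, should give $P(y_s \mid y_t) = P(x_s = f^{-1}(y_s) \mid x_t = f^{-1}(y_t))$.

```python
from fractions import Fraction as F


def matmul(A, B):
    """Compose two finite kernels (row-stochastic matrices)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def posterior(p_x, E):
    """P(x_t | y_t) by the Bayes formula; row = y_t (assumes P(y_t) > 0)."""
    p_y = [sum(p_x[x] * E[x][y] for x in range(len(p_x))) for y in range(len(E[0]))]
    return [[p_x[x] * E[x][y] / p_y[y] for x in range(len(p_x))] for y in range(len(p_y))]


def compose(p_x, T, E):
    """P(y_s | y_t) as the composition of Proposition 2 (finite case)."""
    return matmul(matmul(posterior(p_x, E), T), E)


# Hypothetical law of x_t and transition kernel P(x_s | x_t).
p_xt = [F(1, 2), F(1, 3), F(1, 6)]
T = [[F(1, 2), F(1, 4), F(1, 4)],
     [F(1, 3), F(1, 3), F(1, 3)],
     [F(0), F(1, 2), F(1, 2)]]

# Independent case: every row of P(y | x) is the same distribution q.
q = [F(2, 5), F(3, 5)]
K_indep = compose(p_xt, T, [q, q, q])
assert all(row == q for row in K_indep)

# Bijection case: P(y | x) is the permutation matrix of f(x) = (x + 1) mod 3.
f = [1, 2, 0]
f_inv = [f.index(y) for y in range(3)]
E_bij = [[F(int(f[x] == y)) for y in range(3)] for x in range(3)]
K_bij = compose(p_xt, T, E_bij)
assert all(K_bij[yt][ys] == T[f_inv[yt]][f_inv[ys]]
           for yt in range(3) for ys in range(3))
```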

Remark 1

The assumption of conditional independence is common in the theory of non-linear filtering or hidden Markov models (Stratonovich 1959), and it is equivalent to the assumption that the observed process $\{y_t\}$ does not provide more information about the hidden process than $x$ itself:

$$P(dy_s \mid x_s, x_t, y_t) = P(dy_s \mid x_s).$$

The idea is that $y$ is a 'noisy' version of $x$. Then, using the Bayes formula gives:

$$P(dx_t \mid y_t) = \frac{P(dy_t \mid x_t)\, P(dx_t)}{\int_{\mathcal{X}} P(dy_t \mid x)\, P(dx)}.$$

Note that it is also very common to assume that the hidden process $\{x_t\}$ is Markov, and together the two assumptions imply that the joint process $\{(x_t, y_t)\}$ is also Markov (the observed process $\{y_t\}$, however, is usually not Markov but a conditional Markov process). Note that Proposition 2 does not require any of the stochastic processes $\{x_t\}$, $\{y_t\}$ or $\{(x_t, y_t)\}$ to be Markov. In the context of Sect. 4, the unobserved variable $x$ is the distance to the optimum, and the observed variable $y$ is fitness.

Appendix 3: Proof of Theorem 1

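Let us consider a sequence of closed balls $B[\top, \lambda_n]$ of decreasing radii $\lambda_1 > \lambda_2 > \cdots$, centred at a point $\top$ of a metric space $(\Omega, d)$, such that the balls are nested:

$$B[\top, \lambda_1] \supset B[\top, \lambda_2] \supset \cdots \supset B[\top, \lambda_n] \supset B[\top, \lambda_{n+1}] \supset \cdots$$

The difference $S_n := B[\top, \lambda_n] \setminus B[\top, \lambda_{n+1}]$ will be referred to as a 'sphere' of radius $\lambda_n$. Let $P$ be a Borel probability measure on $\Omega$ (i.e. defined on the $\sigma$-algebra generated by the topology on $\Omega$). We shall denote by $P_n$ the conditional probability associated with the sphere $S_n$:

$$P_n(A) := P(A \mid S_n) = \frac{P(A \cap S_n)}{P(S_n)}.$$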

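Given a $P$-measurable function $f: \Omega \to \mathbb{R}$, we shall denote by $\bar f_n$ the conditional expectation $\mathbb{E}_{P_n}\{f\}$:

$$\bar f_n := \int_\Omega f(\omega)\, P_n(d\omega).$$

For example, if $P$ is a uniform distribution on a finite $\Omega$, then $P_n(\{\omega\}) = 1/|S_n|$ for each $\omega \in S_n$, and $\bar f_n$ is the average value of $f$ in $S_n$.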

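Similarly, we consider a sequence of intervals $I_n \subset \mathbb{R}$ and conditional probabilities $P^f_n$ defined by their pre-images $f^{-1}(I_n)$. We shall denote by $\bar d_n$ the conditional expectation $\mathbb{E}_{P^f_n}\{d\}$:

$$P^f_n(A) := P(A \mid f^{-1}(I_n)), \qquad \bar d_n := \int_\Omega d(\top, \omega)\, P^f_n(d\omega),$$

where $\top$ denotes the common centre of the balls. For example, if $P$ is a uniform distribution, then $\bar d_n$ is the average distance $d(\top, \omega)$ in $f^{-1}(I_n)$. We now prove the theorem.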
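On a finite Hamming space with the uniform distribution, $\bar f_n$ and $\bar d_n$ are plain averages over spheres and over fitness pre-images. The sketch below computes both for a hypothetical optimum `TOP` and a hypothetical weighted-match fitness `f` with weights `W`; neither comes from the transcription-factor data. Because this `f` is integer-valued, the pre-image of the interval $(v - 1, v]$ is simply the level set $f = v$.

```python
from fractions import Fraction
from itertools import product

ALPHA, L = 4, 4
TOP = (0, 0, 0, 0)   # hypothetical optimum, the common centre of the balls
W = (3, 2, 2, 1)     # hypothetical per-position weights of the toy fitness


def d(omega):
    """Hamming distance from omega to TOP."""
    return sum(a != b for a, b in zip(omega, TOP))


def f(omega):
    """Hypothetical fitness: total weight of positions that match TOP."""
    return sum(w for w, a, b in zip(W, omega, TOP) if a == b)


space = list(product(range(ALPHA), repeat=L))  # uniform P on all ALPHA**L strings


def average(values):
    """Exact mean of a non-empty iterable of integers."""
    values = list(values)
    return Fraction(sum(values), len(values))


# bar f_n: mean fitness on each sphere d = r.
f_bar = {r: average(f(o) for o in space if d(o) == r) for r in range(L + 1)}

# bar d_n: mean distance on each pre-image f^{-1}((v - 1, v]), i.e. the level set f = v.
d_bar = {v: average(d(o) for o in space if v - 1 < f(o) <= v)
         for v in sorted({f(o) for o in space})}
```

For this toy $f$, each position of a point on the sphere of radius $r$ mismatches the optimum with probability $r/l$, so $\bar f_n$ falls linearly from 8 at $r = 0$ to 0 at $r = 4$, while $\bar d_n$ is non-increasing in fitness.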

Proof of Theorem 1

For convenience, we shall assume that the centre $\top$ of the balls is a global optimum, so that the local-optimality condition can be omitted. If the space contains multiple optima, then by the distance $d$ we understand the distance to the nearest optimum.


- Let be a decreasing sequence of (i.e. ), and let be defined as follows:

Such exists for each by continuity of f at , and because for all . The sequence is also decreasing (otherwise is non-decreasing or f is not continuous at ). Observe that we already have monotonicity with respect to balls in the following sense:

We need to prove monotonicity of conditional expectations within spheres . Let so that . There are two mutually exclusive cases:

The first case corresponds to monotonicity and non-negative difference ; otherwise, the difference is negative . Using the Markov inequality for conditional probability and the fact that we derive the following bounds for :

On the other hand . These inequalities allow us to give the following bounds on the difference :

Substituting we obtain

Conditional probability converges to zero for any decreasing sequence , which proves that the probability of non-negative difference converges to one. In other words, the implication is true with probability one as .

- Consider the function . The pre-image of each open interval is an open ball . Because f maps open balls to open intervals by our assumption, the composition is an open mapping of open intervals . Therefore, the inverse function is continuous at . This means that for any decreasing sequence we can construct the corresponding decreasing sequence by setting

The rest of the proof is identical to that of the first implication. Specifically, using the Markov inequality we derive the following bounds for :

Using bounds we obtain the following bounds on the difference :

Because conditional probability converges to zero for any decreasing sequence , this proves that the probability of non-negative difference converges to one. In other words, the implication is true with probability one as .

If f is both continuous and open at the optimum, then both implications are true in probability, which means that f and d are weakly isomorphic at the optimum.

Footnotes

We used a multiple of 4 because 4 GPUs were used in one node.

Contributor Information

Roman V. Belavkin, Email: r.belavkin@mdx.ac.uk

Alastair Channon, Email: a.d.channon@keele.ac.uk

Elizabeth Aston, Email: e.j.aston@keele.ac.uk

John Aston, Email: j.aston@statslab.cam.ac.uk

Rok Krašovec, Email: rok.krasovec@manchester.ac.uk.

Christopher G. Knight, Email: chris.knight@manchester.ac.uk

References

- Ackley DH. An empirical study of bit vector function optimization. In: Davis L, editor. Genetic algorithms and simulated annealing. Pitman; 1987. chap 13, pp. 170–204.

- Adams FC, Laughlin G. A dying universe: the long-term fate and evolution of astrophysical objects. Rev Mod Phys. 1997;69:337–372.

- Agrawal AF, Wang AD. Increased transmission of mutations by low-condition females: evidence for condition-dependent DNA repair. PLoS Biol. 2008;6(2):e30. doi: 10.1371/journal.pbio.0060030.

- Agrawal AF, Hadany L, Otto SP. The evolution of plastic recombination. Genetics. 2005;171(2):803–812. doi: 10.1534/genetics.105.041301.

- Ahlswede R, Katona G. Contributions to the geometry of Hamming spaces. Discrete Math. 1977;17(1):1–22.

- Aktipis CA. Know when to walk away: contingent movement and the evolution of cooperation. J Theor Biol. 2004;231(2):249–260. doi: 10.1016/j.jtbi.2004.06.020.

- Aktipis CA. Is cooperation viable in mobile organisms? Simple walk away rule favors the evolution of cooperation in groups. Evol Hum Behav. 2011;32(4):263–276. doi: 10.1016/j.evolhumbehav.2011.01.002.

- Bäck T. Optimal mutation rates in genetic search. In: Forrest S, editor. Proceedings of the 5th international conference on genetic algorithms. Burlington: Morgan Kaufmann; 1993. pp. 2–8.

- Badis G, Berger MF, Philippakis AA, Talukder S, Gehrke AR, Jaeger SA, Chan ET, Metzler G, Vedenko A, Chen X, Kuznetsov H, Wang CF, Coburn D, Newburger DE, Morris Q, Hughes TR, Bulyk ML. Diversity and complexity in DNA recognition by transcription factors. Science. 2009;324(5935):1720–1723. doi: 10.1126/science.1162327.

- Banach S. Über die Baire’sche Kategorie gewisser Funktionenmengen. Studia Math. 1931;3:174–179.

- Bataillon T, Zhang T, Kassen R. Cost of adaptation and fitness effects of beneficial mutations in Pseudomonas fluorescens. Genetics. 2011;189(3):939–949. doi: 10.1534/genetics.111.130468.

- Belavkin RV. Mutation and optimal search of sequences in nested Hamming spaces. In: IEEE information theory workshop. New York: IEEE; 2011.

- Belavkin RV. Dynamics of information and optimal control of mutation in evolutionary systems. In: Sorokin A, Murphey R, Thai MT, Pardalos PM, editors. Dynamics of information systems: mathematical foundations. Springer proceedings in mathematics and statistics, vol 20. Berlin: Springer; 2012. pp. 3–21.

- Belavkin RV. Minimum of information distance criterion for optimal control of mutation rate in evolutionary systems. In: Accardi L, Freudenberg W, Ohya M, editors. Quantum bio-informatics V. QP-PQ: quantum probability and white noise analysis, vol 30. Singapore: World Scientific; 2013. pp. 95–115.

- Belavkin RV, Channon A, Aston E, Aston J, Knight CG. Theory and practice of optimal mutation rate control in Hamming spaces of DNA sequences. In: Lenaerts T, Giacobini M, Bersini H, Bourgine P, Dorigo M, Doursat R, editors. Advances in artificial life, ECAL 2011: proceedings of the 11th European conference on the synthesis and simulation of living systems. Cambridge: MIT Press; 2011. pp. 85–92.

- Bjedov I, Tenaillon O, Gerard B, Souza V, Denamur E, Radman M, Taddei F, Matic I. Stress-induced mutagenesis in bacteria. Science. 2003;300(5624):1404–1409. doi: 10.1126/science.1082240.

- Böttcher S, Doerr B, Neumann F. Optimal fixed and adaptive mutation rates for the LeadingOnes problem. In: Schaefer R, Cotta C, Kołodziej J, Rudolph G, editors. Parallel problem solving from nature, PPSN XI. Lecture Notes in Computer Science. Berlin Heidelberg: Springer; 2010. pp. 1–10.

- Braga ADP, Aleksander I. Determining overlap of classes in the -dimensional Boolean space. In: 1994 IEEE international conference on neural networks, IEEE world congress on computational intelligence, vol 7. 1994. pp. 8–13.

- Cervantes J, Stephens CR. ‘Optimal’ mutation rates for genetic search. In: Cattolico M, editor. Proceedings of the genetic and evolutionary computation conference (GECCO-2006). Seattle: ACM; 2006. pp. 1313–1320.

- Chevin LM, Lande R, Mace GM. Adaptation, plasticity, and extinction in a changing environment: towards a predictive theory. PLoS Biol. 2010;8(4):e1000357.

- Collins S. Many possible worlds: expanding the ecological scenarios in experimental evolution. Evol Biol. 2011;38(1):3–14.

- de Visser JA, Krug J. Empirical fitness landscapes and the predictability of evolution. Nat Rev Genet. 2014;15(7):480–490. doi: 10.1038/nrg3744.

- Eiben AE, Hinterding R, Michalewicz Z. Parameter control in evolutionary algorithms. IEEE Trans Evol Comput. 1999;3(2):124–141.

- Falco ID, Cioppa AD, Tarantino E. Mutation-based genetic algorithm: performance evaluation. Appl Soft Comput. 2002;1(4):285–299.

- Fisher RA. The genetical theory of natural selection. Oxford: Oxford University Press; 1930.

- Fletcher P, Lindgren WF. Quasi-uniform spaces. Lecture notes in pure and applied mathematics, vol 77. New York: Marcel Dekker; 1982.

- Fogarty TC. Varying the probability of mutation in the genetic algorithm. In: Schaffer JD, editor. Proceedings of the 3rd international conference on genetic algorithms. Morgan Kaufmann; 1989. pp. 104–109.

- Freeland SJ, Knight RD, Landweber LF, Hurst LD. Early fixation of an optimal genetic code. Mol Biol Evol. 2000;17(4):511–518. doi: 10.1093/oxfordjournals.molbev.a026331.

- Galhardo RS, Hastings PJ, Rosenberg SM. Mutation as a stress response and the regulation of evolvability. Crit Rev Biochem Mol Biol. 2007;42(5):399–435. doi: 10.1080/10409230701648502.

- Hadany L, Beker T. On the evolutionary advantage of fitness-associated recombination. Genetics. 2003;165(4):2167–2179. doi: 10.1093/genetics/165.4.2167.

- He J, Chen T, Yao X. On the easiest and hardest fitness functions. IEEE Trans Evol Comput. 2015;19(2):295–305.

- Jansen T. On classifications of fitness functions. In: Kallel L, Naudts B, Rogers A, editors. Theoretical aspects of evolutionary computing. Natural computing series. Berlin: Springer; 2001. pp. 371–385.

- Jones T, Forrest S. Fitness distance correlation as a measure of problem difficulty for genetic algorithms. In: Eshelman L, editor. Proceedings of the sixth international conference on genetic algorithms. San Francisco; 1995. pp. 184–192.

- Kassen R, Bataillon T. Distribution of fitness effects among beneficial mutations before selection in experimental populations of bacteria. Nat Genet. 2006;38(4):484–488. doi: 10.1038/ng1751.

- Kimura M. Average time until fixation of a mutant allele in a finite population under continued mutation pressure: studies by analytical, numerical, and pseudo-sampling methods. Proc Natl Acad Sci. 1980;77(1):522–526. doi: 10.1073/pnas.77.1.522.

- Knight CG, Platt M, Rowe W, Wedge DC, Khan F, Day PJ, McShea A, Knowles J, Kell DB. Array-based evolution of DNA aptamers allows modelling of an explicit sequence-fitness landscape. Nucl Acids Res. 2009;37(1):e6. doi: 10.1093/nar/gkn899.