Abstract

Introduction:

Significant event analysis (SEA) is well established in many primary care settings but can be poorly implemented. Reasons include the emotional impact on clinicians and limited knowledge of systems thinking in establishing why events happen and formulating improvements. To enhance SEA effectiveness, we developed and tested “guiding tools” based on human factors principles.

Methods:

Mixed-methods development of guiding tools (Personal Booklet—to help with emotional demands and apply a human factors analysis at the individual level; Desk Pad—to guide a team-based systems analysis; and a written Report Format) by a multiprofessional “expert” group and testing with Scottish primary care practitioners who submitted completed enhanced SEA reports. Evaluation data were collected through questionnaire, telephone interviews, and thematic analysis of SEA reports.

Results:

Overall, 149/240 care practitioners tested the guiding tools and submitted completed SEA reports (62.1%). Reported understanding of how to undertake SEA improved postintervention (P < .001), while most agreed that the Personal Booklet was practical (88/123, 71.5%) and relevant to dealing with related emotions (93/123, 75.6%). The Desk Pad tool helped focus the SEA on systems issues (85/123, 69.1%), while most found the Report Format clear (94/123, 76.4%) and would recommend it (88/123, 71.5%). Most SEA reports adopted a systems approach to analyses (125/149, 83.9%), care improvement (74/149, 49.7%), or planned actions (42/149, 28.2%).

Discussion:

Applying human factors principles to SEA potentially enables care teams to gain a systems-based understanding of why things go wrong, which may help with related emotional demands and with more effective learning and improvement.

Keywords: adverse events, patient safety, primary care, incident analysis, team learning, emotional demands, human factors and ergonomics, systems thinking

A recent scoping review estimates that 1%–2% of primary care consultations may involve a significant event.1 Such safety incidents clearly affect the health and well-being of patients (and relatives), and sometimes devastatingly so,2 but they may also have profound psychological impacts on the care practitioners involved.3 A “significant event” is an umbrella term that covers “adverse events” in which patients were unintentionally harmed (physically or psychologically, regardless of severity) and “near misses,” where patients could have been harmed.4–7 Significant event analysis (SEA) is a well-established team safety investigation and quality improvement tool used in certain international primary care settings (eg, United Kingdom, Ireland, Australia, and New Zealand) to help understand the event and why it happened, and direct subsequent learning and improvement efforts.4,8

However, existing evidence suggests that the SEA process is poorly implemented by many primary care teams, resulting in missed opportunities for learning and improvement to enhance care performance, system design, and patient safety.4 A range of overlapping psychological, methodological, and sociocultural issues contribute to these problems, but two main factors operate to decrease the effectiveness of the SEA process.4,6,9–14

First, there is limited knowledge among care practitioners about taking a “systems approach” to understanding how and why significant events occur.6 The evidence suggests that many do not give sufficient attention to broader systems influences, but focus excessively on individual performance and behaviors without appreciating the wider human interactions and work environment factors that combine to contribute to significant events. This view is supported by human factors science, in which the conceptual and evidence-based approach to “human error” suggests that individuals are not the “cause” of significant events. Instead, in complex sociotechnical systems (such as many primary care settings) underlying interacting contributing factors (eg, inadequately designed care process, poorly designed technology interfaces, and workplace culture) lead to these types of events because systems are often intractable and unpredictable, and work goals are frequently conflicted and subject to many constraints.15–18

Second, for care practitioners involved in a significant event, this can be analogous to receiving a form of “negative feedback” or “bad news” on their performance.9,10 The first response to such an event is usually, and understandably, an emotional one. Research shows that emotional responses to bad news are normal, and further, that the emotional response needs to be acknowledged, worked through, and assimilated before the practitioner can move on to addressing the situation in an analytical manner. This can be a challenging task. In some cases, the emotional distress may not be alleviated and may produce longer term impact on the emotional well-being of care practitioners. Sometimes, this presents as the so-called “second victim” syndrome3 leading to increased stress levels and a reluctance to highlight safety issues because of concerns about punitive action, professional embarrassment, or guilt.11–13 Many are also highly selective in the types of significant events that they highlight for analysis.4,6,14

There is a clear need, therefore, for a conceptual and structured process to guide care practitioners undertaking SEA. First, the need is to minimize any unnecessary or inappropriate focus on their personal role in a significant event,4,6,14,16 which may generate emotional barriers to applying an objective perspective to the analysis. It is important, therefore, for the practitioner to recognise and reflect on how the event has made them feel and to consider how they might achieve sufficient “psychological readiness” to enter into a constructive analysis. Reflection on one's experiences and one's emotions, in a structured manner, is a valuable approach for enhancing awareness and for learning.19,20 Second, by considering the wider systems issues contributing to the event, they gain a more balanced and objective understanding of the contexts and constraints influencing the event rather than continuing to focus on their personal involvement alone.15–18 The next stage would, where possible, involve a team-based event analysis which would also be guided by a systems-centred process, potentially leading to more informed analytical insights and risk reduction strategies than is presently the case.4,14

Against this background, we aimed to design, develop, and test a conceptual and practical process intervention, informed largely by human factors principles,15 to support and enhance the implementation of SEA by practitioners in primary care settings. Specifically, our study set out to examine the impact of a structured SEA process and a range of supporting educational materials on:

The individual practitioner: prompting those involved in a significant event to consider and reflect on the possible emotional impacts (as appropriate), the wider systems influences contributing to the event and their personal role within this whole context. The intent is that such an approach will provide a more objective and balanced perspective of why the event happened. In turn, this insight will decrease negative emotional reactions and increase the practitioner's psychological readiness to move on and engage more effectively with the SEA process and raise the issue with other members of the care team (as appropriate).

The care team level: prompting the team to consider and reflect on the possible emotional impacts on all concerned, and to take a systems approach to the SEA process. The intent is that this will lead to more meaningful engagement with the process and result in better informed analytical insights into why the significant event occurred and, consequently, improved risk reduction strategies.

METHODS

We used a mixed-methods approach in two phases: phase 1, to develop the conceptual framework and practical intervention (guiding tools and a structured approach to the SEA process); and phase 2, to evaluate their use and implementation by care practitioners.

Phase 1: Developing the Conceptual Framework and Practical Intervention (Guiding Tools and a Structured Approach to the SEA Process)

Phase 1 comprised the following four activities:

Comprehensive Literature Review

The literature search was designed to identify incident investigation approaches that could be adapted to suit primary care settings. We conducted a formal search of the following electronic databases: PsycINFO, Embase, Medline, Web of Science, and CINAHL for English-language peer-reviewed journal articles published between 1990 and 2014. The search was strongly informed by a related review17 and used the following terms: “incident investigation,” “incident analysis,” “accident analysis,” “event analysis,” “critical incident technique,” “root cause analysis,” “medical error,” “adverse events,” “patient safety,” and “significant event.” The abstracts of identified studies were reviewed and full texts of relevant articles obtained. A manual search of relevant online journals was also undertaken (eg, BMJ Quality & Safety, Quality in Primary Care, Safety Science, Applied Ergonomics, Journal of Patient Safety, and Clinical Risk). Although a small number of articles of general relevance were identified to help inform thinking around the study, only two studies were of specific value in outlining different sociotechnical “systems-based” conceptual models that could potentially be adapted and simplified for the primary care context.21,22

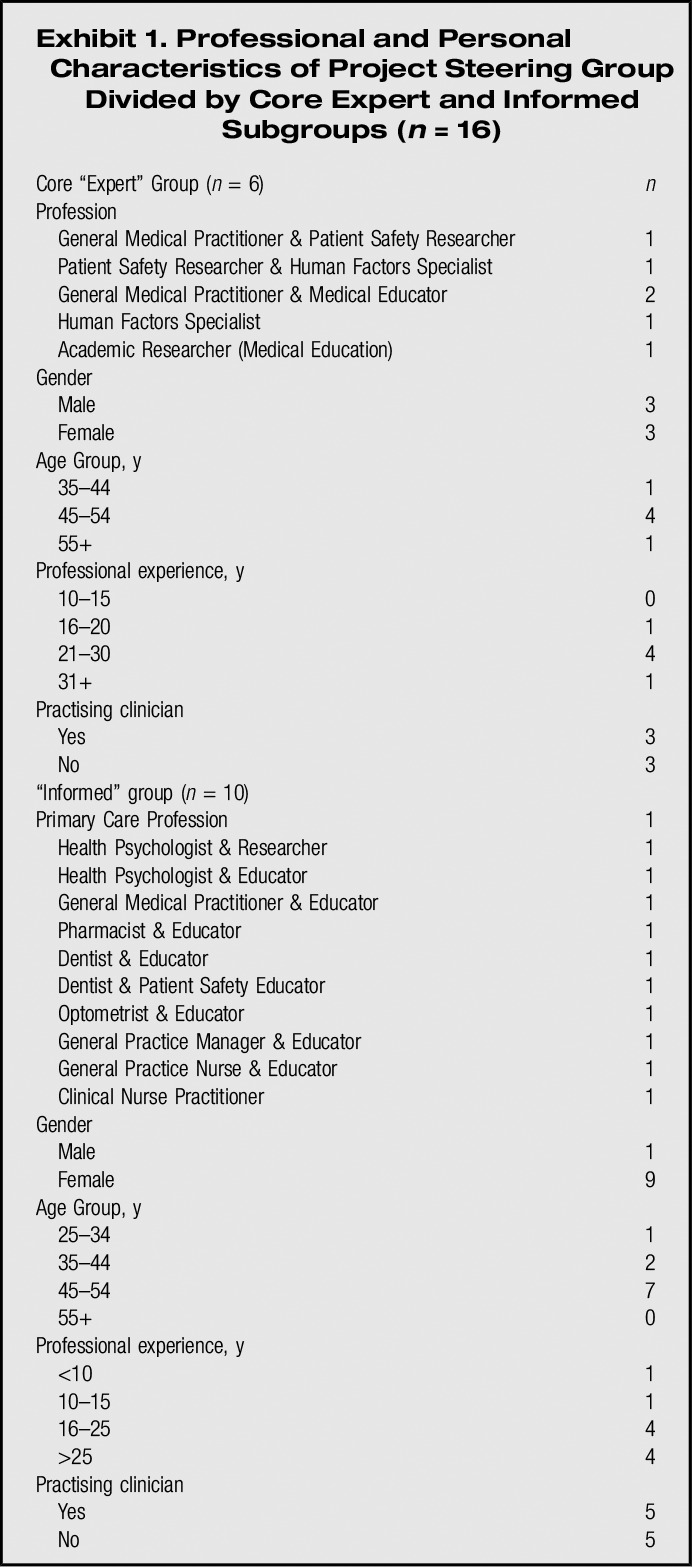

Interprofessional “Expert” Development Group

A multiprofessional expert project steering group consisting of experienced interprofessional clinical educators, patient safety researchers, and human factors specialists was formed to oversee the design, development, and testing of the intervention (Exhibit 1). The group adopted a participatory design approach,23 a core human factors principle, to innovating and developing the proposed interventions. This is a user-centred method which seeks to actively involve frontline groups with subject matter expertise in a codesign process, so the outcome meets their needs, and usability concerns are addressed iteratively before implementation.

Exhibit 1.

Professional and Personal Characteristics of Project Steering Group Divided by Core “Expert” and “Informed” Subgroups (n = 16)

Development of a Conceptual Model for a Systems Approach to SEA

The development of the enhanced SEA conceptual framework (Fig. 1) was initially undertaken by the core expert subgroup in May 2013. The design was influenced by the two similar sociotechnical systems models identified in the literature review: Vincent et al (The London Protocol)21 and Carayon et al (SEIPS Model).22 A preliminary model was developed and agreed by this group after in-depth debate during two 4-hour workshops. A key objective was to integrate a fundamental, easily identifiable, and memorable human factors principle within the model to clearly illustrate the complex systems interactions underpinning why things go wrong in the workplace. The group achieved this by reviewing and condensing relevant elements of the London Protocol (7-factor model which includes “patient, individual, task and technology, team, work environment, organisation and management, and institutional context”) and the SEIPS model (5-factor model which includes “person, task, technology and tools, environment, and organisation”). This led to the creation of a more pragmatic 3-factor solution (ie, people, activity, and environment), intended to make the model easier to understand, remember, and implement for frontline care practitioners working in busy, time-pressured clinical environments (rather than, for example, specialist incident investigators who would take a more in-depth, time-consuming approach).

FIGURE 1.

Conceptual model of a systems approach to enhanced SEA in primary care settings

The preliminary model was then presented to the wider steering group. Final consensus on the design and content of the conceptual framework was achieved over three face-to-face workshop meetings informed by the mini-Delphi technique. During this process, different iterations of the model were forwarded by electronic mail to group members between meetings to review and provide feedback for improvement until full group agreement was reached.24 The group agreed to name the new systems-based approach “enhanced” SEA to differentiate it from the existing approach.4

Development of the Intervention (Guiding Tools and Process)

The steering group incorporated the principles of the systems-centred approach underpinning the conceptual model into the development of the educational intervention: a series of three “guiding tools.” These are described in Exhibit 2 and were intended to act as practical aids to assist in the implementation of the enhanced SEA concept by individual practitioners and care teams. In developing the practical design and content of the guiding tools, the group also used their professional experiences in the workplace to consider the specific contexts and constraints of primary care work settings (eg, in clinical workspace design, limited time availability, and how meetings tend to happen) to make them potentially functional, informative, and useful for frontline colleagues.

Exhibit 2.

Enhanced SEA Intervention: Description of “Guiding Tools” and Educational Support

Guiding Tools

The main intervention was named enhanced significant event analysis (enhanced SEA) by the multiprofessional development group, and was divided into three parts or tools:

At the Personal Level: A small (A5 size) 12-page Personal Booklet to be kept and read by participants following their involvement in a significant event (SE). The Booklet contains information and guidance on how we can often feel and what to expect emotionally in terms of coping with SE involvement (as a form of “negative feedback”), and an illustrated practice example of a SE case study that has been analysed using human factors principles. On first reading, the case study suggests, for example, that a nurse practitioner was to “blame” for a mistake, but in-depth analysis clearly demonstrates other contributory factors at play. Its overall purpose is to lessen self-blame and provide a more objective self-analysis by guiding participants through the common initial emotional stages of SE involvement and to demonstrate that there are most often other systems-based factors contributing to the occurrence of the event which have interacted to influence their actions or inactions at the time. Finally, having gone through this process there is guidance on how to take the next steps in raising the event with colleagues. The Booklet also contained a set of three small cards (titled People, Activity and Environment) which could be used as supporting or alternative guiding analytical prompts by individuals or teams.

At the Team Level: A Desk Pad Sheet Booklet (A3 size) featuring a centre-piece Venn-diagram that illustrates the interacting nature of People, Activity and Environment factors in the workplace which combine and contribute to SEs. Its purpose was to act as a constant reminder and guiding prompt to adopt a structured systems-based analysis of the SE during team-based meetings and to be mindful of the emotional impact the event may have had on work colleagues. The Desk Pad Sheets could be torn off by individuals and also used as worksheets to make notes on reasons why the event happened and agreed actions to minimise risks of reoccurrence.

At the Personal Level: A standard A4 enhanced SEA Report Form was developed from a previously published example. Its purpose was to act as a “forcing function” to guide individuals when writing up their event analysis to adopt a systems approach to understanding why the event occurred, identifying learning needs and taking meaningful action for improvement, where necessary.

Educational Support and Process

The guiding tools were supported by a further intervention which could be accessed by participants online at www.nes.scot.nhs.uk/shine/. The website acted as a “one-stop-shop” to which all participants, steering group members, and other stakeholders were directed to view and download relevant project information and outputs, and access relevant educational guidance on Human Factors (eg, undertake a short, very basic 20-minute e-learning intervention to raise awareness of the enhanced SEA approach; and read relevant academic papers).

Phase 2: Evaluation of Their Use and Implementation by Care Practitioners

Study Design and Setting

A mixed-methods evaluation of the pilot testing of the enhanced SEA intervention was undertaken from October 2013 to February 2014. The study design was informed by a pragmatic approach to combining and linking cross-sectional survey data (mainly quantitative) with telephone interview data (qualitative), which can provide a more in-depth understanding of issues than would be the case if relying on either approach by itself.25

Recruitment of Participants

Clinical educational representatives on the project steering group identified suitable professional groups from which to recruit volunteer participants. Those groups were approached using established professional and organizational networks across Scotland (eg, specialty training programmes or vocational training schemes). The goal was to recruit a diverse mix of clinical trainees, qualified health care practitioners, managers, and clinicians furthering their training in education to represent groups in the primary care workforce who would be involved in interprofessional SEA activity and in teaching the concept and process. We aimed for a pragmatically determined sample size of 140 participants. Participants were mailed printed copies of the guiding tools and notified by email that these could also be downloaded in PDF format from a dedicated project website, which contained further supporting information (www.nes.scot.nhs.uk/shine/).

Data Collection Approaches

We used three data collection tools. They included the following:

Preintervention and postintervention questionnaires

The purpose of the preintervention questionnaire was to collect participant experiences of SEA and attitudes to a range of patient safety issues. The postintervention questionnaire revisited some of these issues to enable comparisons but also established experiences and perceptions of the guiding tools and their usability and impacts. Questionnaire items and statements were adapted from published questionnaires concerned with patient safety-related attitudes, beliefs, and experiences of health care professionals associated with, for example, their exposure to significant events, incident investigation, and understanding of why things go wrong in the workplace,26–28 which were directly relevant to the intervention. Seven-point Likert scales were used to measure attitudinal responses to these statements. Data were collected on experiences of being involved in SEA; attitudes to, perceptions and experiences of, patient safety issues and related improvement; and perceived usability (defined as the ease of use and learnability of a human-made object) and impact of the enhanced SEA guiding tools on coping with the emotional consequences of a significant event and subsequently taking a systems approach to learning and improvement. Potential participants were prompted on visiting the dedicated project website to click on a link to complete a preintervention online questionnaire (hosted by Questback). The questionnaire was pilot tested with six colleagues; no issues were identified in their feedback, so it remained unchanged.

Enhanced SEA report submissions

Completed written reports using the new structured format for enhanced SEA were submitted by participants through a dedicated email address. Reports were analyzed to determine whether the guiding principles of the new approach had been used by participants. The following data were collected: type of significant event; reasons cited for event occurrence; involvement of external agencies/individuals; level of patient harm (death, severe, moderate, low, and none); type of learning issues identified; and type of actions taken.

Telephone interviews

After submission of their enhanced SEA reports and completion of the postintervention questionnaire, participants received an email invitation to take part in a telephone interview. All willing participants were interviewed. These interviews explored views, experiences, and impacts of SEA in general and the enhanced SEA approach specifically. The semistructured telephone interviews were conducted by experienced health psychologists and qualitative researchers (D.H., J.F., S.S., and J.W.) and were digitally recorded and transcribed with consent. Interviews lasted 15 minutes on average (range 12–20 minutes).

Analysis of Data

We used both quantitative and qualitative analytical approaches. Quantitative questionnaire data were exported to SPSS version 22. Descriptive and comparative analyses were conducted to provide background data and an overview of the responses received to the questions and statements. Differences between preintervention and postintervention responses for all respondents were calculated along with 95% CIs. For questionnaire statements related to each of the three guiding tools with a response rating of >4 on the 7-point Likert scale (indicative of good to strong positive agreement), the number of responses was aggregated, and a mean “usability” score (with standard deviation) calculated for each tool to provide a measure of perceived usefulness.
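The aggregated “usability” score described above can be illustrated with a minimal Python sketch. It assumes that, for each tool, the percentage of respondents rating each statement >4 is computed first, and that the mean and standard deviation are then taken across those per-statement percentages. The function names, the statement labels, the example ratings, and the choice of the sample (n − 1) standard deviation are all assumptions made for illustration; the study itself used SPSS and does not specify these details.

```python
from statistics import mean, stdev

AGREE_THRESHOLD = 4  # ratings strictly above 4 on the 7-point scale count as agreement


def percent_agree(ratings, threshold=AGREE_THRESHOLD):
    """Percentage of ratings strictly above the threshold."""
    if not ratings:
        raise ValueError("no ratings supplied")
    agreed = sum(1 for r in ratings if r > threshold)
    return 100.0 * agreed / len(ratings)


def usability_score(statement_ratings):
    """Mean and sample SD of per-statement agreement percentages for one tool.

    statement_ratings maps a statement label to its list of 1-7 ratings.
    Using the sample SD (n - 1 denominator) is an assumption.
    """
    percentages = [percent_agree(r) for r in statement_ratings.values()]
    spread = stdev(percentages) if len(percentages) > 1 else 0.0
    return mean(percentages), spread


# Made-up ratings for a hypothetical tool; not data from the study
example_tool = {
    "practical": [5, 6, 7, 4, 3, 6, 5, 7, 2, 6],
    "relevant": [6, 6, 5, 5, 4, 7, 7, 3, 6, 5],
}
score, sd = usability_score(example_tool)
print(f"mean = {score:.1f}%, SD = {sd:.2f}")
```

Because a rating of exactly 4 is the scale midpoint, the sketch treats it as non-agreement, in line with the >4 cut-off stated in the text.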

Our analysis of the questionnaire data generated questions that were incorporated into our interviews. Issues addressed in the interviews included the following: general views of SEA; barriers/facilitators to engaging with SEA; impact of enhanced SEA on quality and safety of care; provision of emotional and professional support following use of enhanced SEA; and experiences and perceptions of the enhanced SEA tools.

Telephone interview data were transcribed and subjected to basic thematic analysis by the interview team (D.H., J.F., S.S., and J.W.).29 Data were coded and themes generated on an iterative basis and checked and verified against the coding framework by P. Bowie. Where disagreements occurred, these were resolved by consensus. Each written SEA report was thematically analyzed jointly by four researchers (E.F., D.H., J.W., and S.S.) during a series of meetings. A preliminary coding framework was applied based on the content of the enhanced SEA conceptual model and SEA research in primary care for judging and classifying significant events, contributory factors, learning needs identified, and actions taken. In this way, we were able to collect and categorize data to demonstrate to some extent if a systems approach had been undertaken, and sufficient learning and improvement had reportedly taken place. Where there was disagreement between researchers, a consensus was reached through discussion on the codes assigned. To enhance validity, a fifth researcher (P.B.) independently analyzed one-in-five reports and the associated coding before meeting with the other researchers to triangulate final agreement on the data collected.

Ethical Review

The study was prescreened by the West of Scotland Research Ethics Committee but judged to be a service development that did not require ethical approval.

RESULTS

Survey and Interview Participation Rates

In total, 240 health care professionals completed the preintervention online questionnaire survey, of whom 159 were women (66.3%). Of this group, 149 were sufficiently self-motivated to subsequently participate in the pilot study by accessing the guiding tools, undertaking enhanced SEA in the workplace, and returning a completed written report (62.1%). The postintervention questionnaire was then completed by 123 participants (80.9%). The largest participant group was general medical practitioners (80/149, 53.7%), followed by general dental practitioners (34/149, 22.8%). Twenty-three participants were interviewed, including members of the dental profession (n = 13), general medical practitioners (n = 7), general practice nurses (n = 2), and a community pharmacist (n = 1). Key themes from interview data included attitudes toward and experiences of SEA; blame culture; and usability of guiding tools.

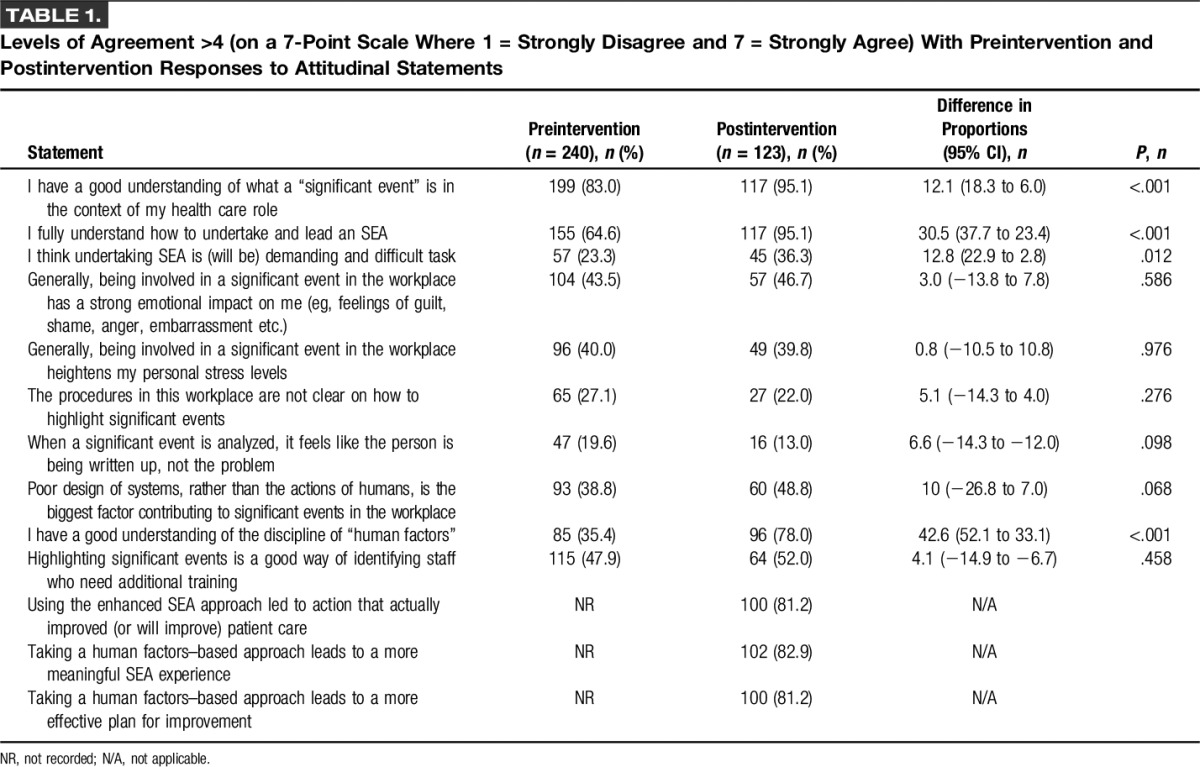

Attitudes Toward and Knowledge of SEA and the Human Factors Discipline

Preintervention and postintervention attitudinal comparisons are outlined in Table 1. Statistically significant differences were apparent in the reported understanding of a significant event (P < .001), of how to undertake and lead an event analysis (P < .001), and of the discipline of human factors (P < .001). The great majority of postintervention respondents indicated that the pilot approach tested leads to a more meaningful SEA experience (102/123, 82.9%) and to effective plans for improvements in patient care (100/123, 81.2%). Our interview data provided illustrations of how the approach enhanced meaningfulness, especially with regard to the outcome:

People become jittery when we are drilling down into something that has happened but seeing at the end of it that there is a positive outcome, instead of someone getting a “kicking” is where we are supposed to be getting to (Practice Nurse 1—Interview).

I think the great thing about it is that it emphasises from the start that everything is being looked at positively, and that it is not down to human failings, it's down to people being put in the wrong situation and generally it is designed to move forward and improve things (Dentist 8—Interview).

I think the human factors model is a very useful approach to looking at an SEA and without doubt caused me to think differently about this SEA and feel the outcomes were more meaningful (General Medical Practitioner 2).

TABLE 1.

Levels of Agreement >4 (on a 7-Point Scale Where 1 = Strongly Disagree and 7 = Strongly Agree) With Preintervention and Postintervention Responses to Attitudinal Statements
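As an illustration of how a difference in agreement between preintervention and postintervention responses might be reported with a 95% CI, the following Python sketch computes a Wald interval for the difference between two proportions. This is an assumption on our part: the text does not state which interval method was used in SPSS or whether the two response sets were treated as independent. The function name and the counts are hypothetical and are not taken from Table 1.

```python
from math import sqrt

Z_95 = 1.96  # standard normal quantile for a two-sided 95% interval


def agreement_difference_ci(pre_agree, pre_total, post_agree, post_total):
    """Return (difference, lower, upper) for post minus pre agreement proportions.

    Uses a Wald interval and treats the two groups as independent samples;
    both choices are assumptions, not the study's documented method.
    """
    if pre_total <= 0 or post_total <= 0:
        raise ValueError("group sizes must be positive")
    p_pre = pre_agree / pre_total
    p_post = post_agree / post_total
    diff = p_post - p_pre
    se = sqrt(p_pre * (1 - p_pre) / pre_total + p_post * (1 - p_post) / post_total)
    return diff, diff - Z_95 * se, diff + Z_95 * se


# Made-up counts for illustration only; not taken from the study
diff, lower, upper = agreement_difference_ci(60, 100, 80, 100)
print(f"difference = {diff:.3f}, 95% CI {lower:.3f} to {upper:.3f}")
```

An interval that excludes zero is consistent with a change in agreement, although a paired analysis would be needed if the same individuals answered both questionnaires.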

Perceived Usability and Impact of the Enhanced SEA Guiding Tools

A full breakdown of responses to the perceived usability and impact of the enhanced SEA guiding tools is outlined in Table 2. The mean aggregated (grouped) score for the percentage of respondents rating related statements >4 (on the 7-point scale) for each of the guiding tools was as follows: Report Format (mean = 89.7%, SD = 3.79), Personal Booklet (mean = 83.9%, SD = 17.6), and Desk Pad (mean = 73.8%, SD = 15.0). Comments illustrating ways in which the enhanced tools contributed to an improved analysis included the following:

…use of the tool enlightens staff, never a case where just one person is blamed…others were unsure about discussing events in a group, the tool might make people more aware and allow them to provide support (General Practitioner 5).

I think it'll get people to think more into just why significant event analysis happens and it's like a big elephant in the room, of course you're embarrassed and you will have these emotions and I think it encourages people to realise that those emotions are there, we're not working as robots if you like (Pharmacist 1).

TABLE 2.

Perceived Usability of Each Interventional Guiding Tool (Including Aggregated Mean Score) by Study Participants (n = 123)

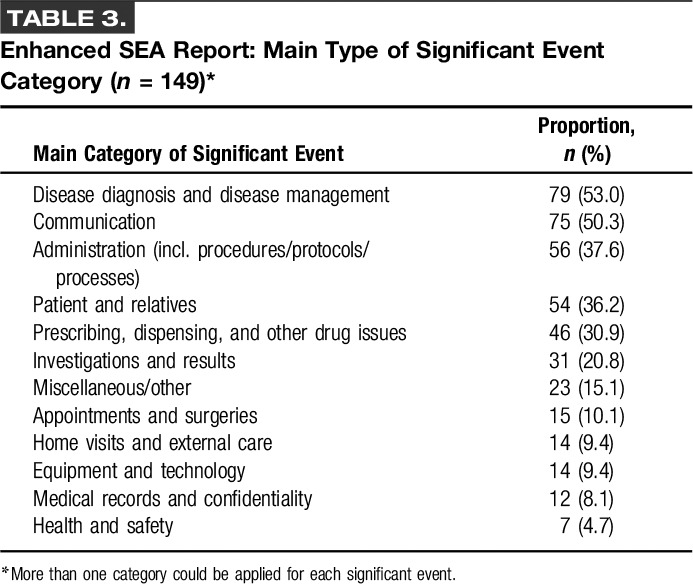

Evaluation of Enhanced SEA Report Submissions

Overall, 149 completed report submissions (149/240, 62.1%) were received. Most were submitted from general medical practice (82, 55.0%) followed by general dental practice (34, 27.9%). Further submissions were received from general practice nursing (15, 9.9%), community pharmacy (10, 6.6%), practice managers (10, 6.6%), and a single optometrist (0.6%). The main significant event categories for all submissions are described in Table 3, with most focusing on disease diagnosis and management issues (79, 53%), communication (75, 50.3%), and administrative system problems (56, 37.6%).

TABLE 3.

Enhanced SEA Report: Main Type of Significant Event Category (n = 149)*

Just over half of submissions were concerned with “near miss” harm incidents (77, 51.0%). In four cases, the event analysis focused on a patient's death (2.6%) with a further three categorized as “severe harm” (2.5%). There were 17 additional cases of “moderate harm” (11.3%) with a further 31 categorized as “low harm” events (20.5%).

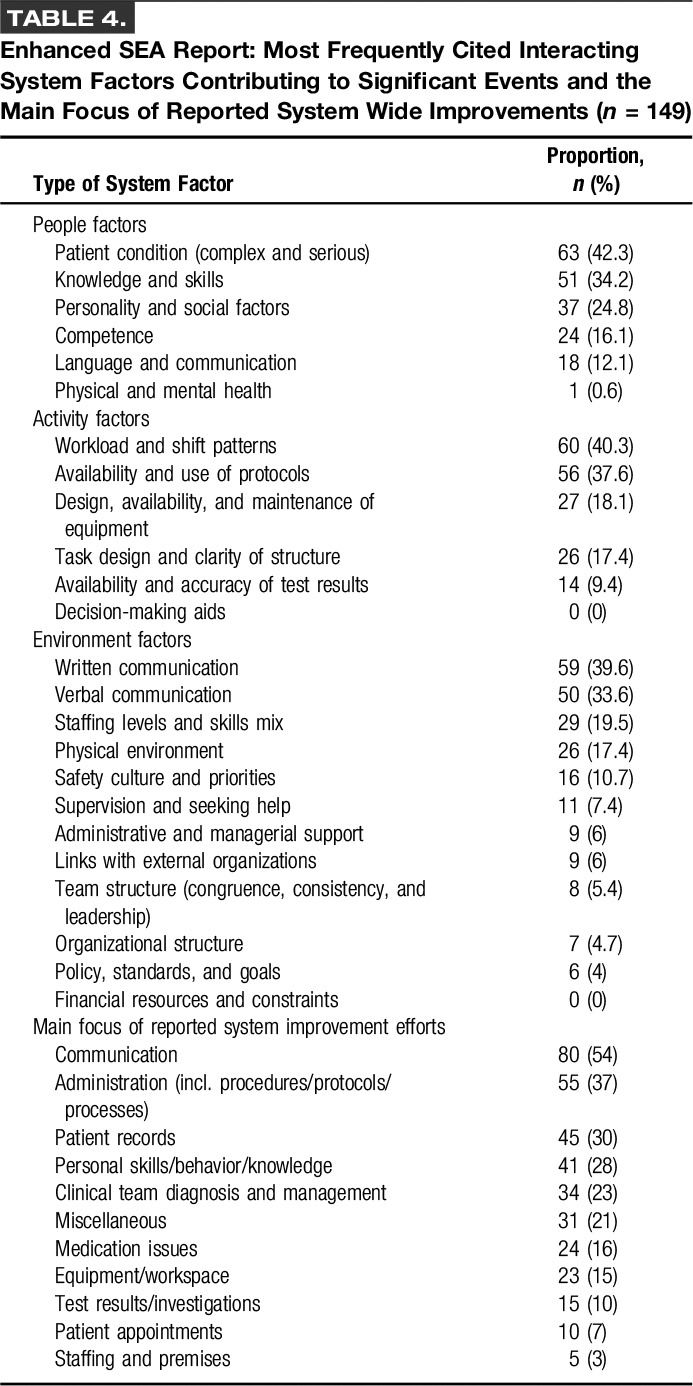

The most frequently cited interacting system factors contributing to why the events occurred are outlined in Table 4. The most common contributory issues related to the patient's condition (63, 42.3%), workload and shift patterns (60, 40.3%), and written communication processes (59, 39.6%).

TABLE 4.

Enhanced SEA Report: Most Frequently Cited Interacting System Factors Contributing to Significant Events and the Main Focus of Reported System Wide Improvements (n = 149)

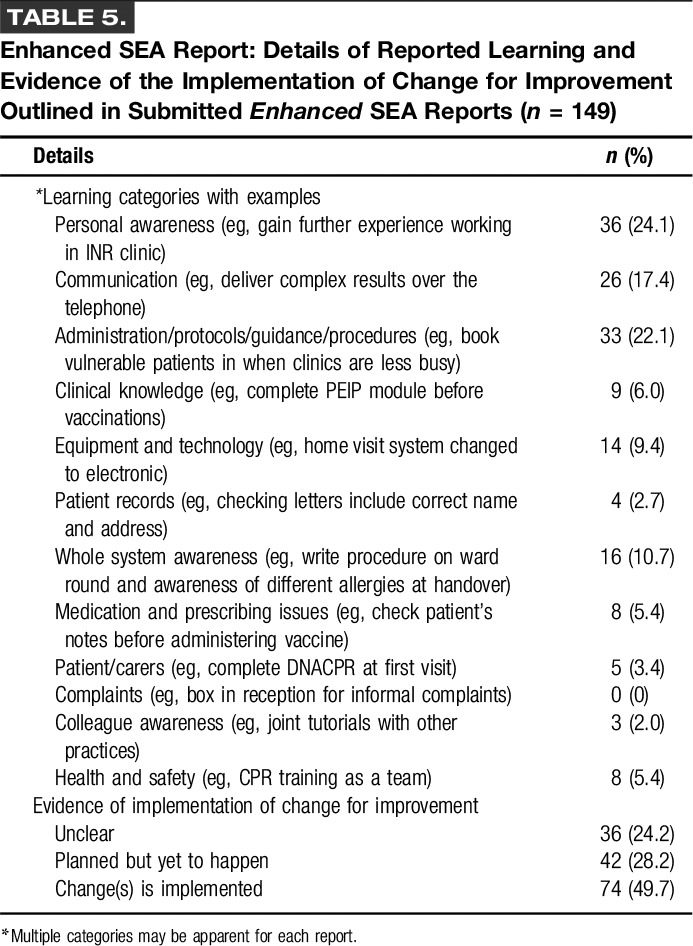

In total, 107 reports were judged to have demonstrated clear evidence of team involvement in the event analysis in question (107/149, 71.8%), with most also involving two or more professional groups (98/149, 65.8%). In most submissions (125, 83.9%), it was determined that a systems approach to identifying the range of factors (and their interrelationships) contributing to how and why significant events occurred was undertaken by participants. A range of system-wide learning issues were also evident, whereas change(s) for improvement was considered to have been implemented in 74 submitted reports (49.7%) with improvement actions being planned in a further 42 cases (28.2%) (Table 5).

TABLE 5.

Enhanced SEA Report: Details of Reported Learning and Evidence of the Implementation of Change for Improvement Outlined in Submitted Enhanced SEA Reports (n = 149)

DISCUSSION

We developed and tested an enhanced SEA approach which sought to help care practitioners recognize and reflect on related emotional impacts and use systems thinking to understand why significant events happen, and to facilitate a more objective and constructive learning and improvement approach to minimize future risks. After intervention, most participants reported an improved understanding of how to undertake and lead the SEA process. Most were also supportive of the newly integrated human factors–based approach, and were generally positive about the related guiding tools and their impact on enhancing the meaningfulness and improvement potential of SEA. However, it is also clear that, for many participants, undertaking SEA may remain a challenging and stressful task regardless of the “success” or otherwise of this intervention.

Both the Personal Booklet and Desk Pad tools were seen as relevant to understanding the emotions involved in a significant event for care practitioners. However, just over half of participants did not feel that the tools reduced their own personal stress. Plausible reasons for this could be, for example, that the severity and consequences of the significant event chosen by the participant for analysis were low and did not have a high emotional impact. Alternatively, the event could have had high-stakes professional or regulatory implications for the care practitioner, which may focus on individual behaviors and actions rather than wider system contributory factors.

However, focusing on addressing the emotional reactions and negative consequences experienced by many care practitioners involved in significant events seems to be a novel concept as part of incident investigations. In summary, the findings indicate that the conceptual framework and guiding tools developed were positively received by most and can support a systems-centred approach to SEA, although design improvements are necessary based on feedback gathered.

The range of categories among the significant events selected for analysis reflects how everyday human performance can be affected by a wide array of complex interactions with other functions and activities across the primary care work system. The identification by most participants of a plethora of system-wide factors contributing to these significant events, and the variety of system-centred improvement efforts that they devised and outlined in SEA reports, arguably add further weight to this assertion. In addition, the details of the reported knowledge gained and the evidence of actual or planned implementation of change described in submitted SEA reports (as judged by the authors) demonstrate, to some extent, that the intervention has led to meaningful individual and collective learning and to improvements in care processes and, by extension, possible enhancements in the quality and safety of the patient care provided.

This potentially indicates that participants considered everyday problems in the context of wider system interactions, rather than focusing narrowly on their own individual performance (for example, by highlighting actions or inactions related to personal decision making). Although this personal approach may be useful, it is also limited, from a human factors perspective, because of what we know about the need to identify and consider the impact of wider systemic factors on individual (and organizational) performance and well-being.

Study Strengths and Limitations

The participatory codesign process ensured that the development was “grounded” and relevant to health care practice given the involvement of frontline care practitioners and clinical educators, which was augmented by human factors expertise. Use of underlying theory and evidence to guide the development process ensured that it was based on human factors and safety science principles. Mixed-methods evaluation enabled diverse data collection from different sources to provide a more informed understanding of relevant study issues than a single method may have offered.

Respondent bias may be evident given that the participants were few in number, unrepresentative, and largely volunteer “early adopters.” The characteristics of those who were invited but declined to participate are unknown. Evaluation data were self-reported and unverifiable, while enhanced SEA outcomes may be influenced by personal and political agendas, meaning findings should be interpreted with caution. We also compared the evaluation questionnaire data from our preintervention group (n = 240) with the postintervention group (n = 123), when it may have been more methodologically sound to compare the latter with responses from the 149 actual study participants within the preintervention group. However, the technical setup of our survey tool made it difficult to identify and match these two specific groups for comparison. Evaluation of submitted written reports was open to bias through the strong likelihood of variations in interrater judgements, although there will also be variation in participants' writing abilities and in how accurately they convey findings.

Comparison With Existing Literature

The evaluation findings corresponded with evidence around care practitioners experiencing personal stress and other emotional impacts associated with significant events.4,11–14 The event types analyzed by participants also mirrored the most common categories described in research,6,7,30–32 including medico-legal evidence,33 that is, issues around disease diagnosis and management, medications, and communications. This may demonstrate the preparedness of participants to tackle the most serious types of safety incidents using the enhanced SEA approach. Arguably, this study was the first attempt to outline and categorize the full range of factors across multiprofessional primary care work systems that reportedly combined to contribute to the significant events that were analyzed. A plausible interpretation is that this range is indicative of systems thinking by most study participants. Whereas SEA research6 notes that learning opportunities “were often non-specific professional issues not shared with the wider practice team,” the study evidence suggests a shift toward a more systems-centred approach given the greater focus on involving colleagues and identifying communication and administration learning needs.

Implications for Practice

The development and testing of the enhanced SEA method is a small start in improving the rigor with which this type of learning and improvement is traditionally undertaken, with further scope for facilitating interprofessional education and transformative learning.34 However, decision makers in NHS Scotland have already shown strong interest in, or agreed to implement, this approach, for example, to support medical appraisal for revalidation, training of general practice educational supervisors, learning from complaints to the public sector ombudsman, and contributing to the national agenda on reporting and learning from adverse events. Spread and dissemination of the approach have also led to the design of a prototype “train-the-trainer” package for patient safety incident investigators and educators. This intervention has a strong focus on the potential emotional consequences of incidents for care practitioners and the need to take a “systems” (rather than a linear “cause and effect”) approach to analyzing safety incidents in complex systems.21,22

Future Research and Development

Further development of this work is already underway in incorporating the reported feedback to improve related interventions and design new approaches (eg, design and usability testing of a digital mobile and desktop app containing the guiding tools and improvement of the resources contained on the dedicated webpage, including the related e-learning module). Spread and dissemination of the enhanced SEA method within the Scottish Health Service has commenced, with the new approach being integrated within the curricula of a range of speciality and vocational training schemes and in support of continuing professional development and appraisal arrangements across all the participating professional groups. The potential for this intervention to contribute to the evolving national policy on the reporting and management of adverse events is also being explored.35 However, further testing and evaluation of the new approach at scale (and also in health and social care settings beyond the original primary care test sites) is required to gain a greater understanding of its acceptability, feasibility, and impact on the well-being of care practitioners, patient safety, and team performance. How well care teams apply the analytical process, problem-solve and critique, and implement potential solutions will be pivotal to its success,4,16 and exploring these issues will likely require ethnographic research approaches.36

Conclusions

The pilot study provided encouraging evidence that applying human factors principles to the SEA process may offer care practitioners and teams a more objective and constructive means of gaining a deeper, systems-based understanding of why things go wrong. This may help to depersonalize the incident and focus attention on the “true” contributory factors—that is, how the complexity of everyday people, activity, and wider environment issues can interact to increase the risk of health care error and avoidable harm.16–18,37 Understanding these core principles is vital in adopting a mature and constructive response to related learning and action for improvement to minimize the risks of incident recurrence.4,16

Lessons for Practice

■ Care practitioners may be emotionally affected by involvement in significant events, which can affect both their personal well-being and preparedness to raise and critically analyze these issues.

■ The analyses of significant events by primary care practitioners often lack a systems approach to understanding why things went wrong, which can limit subsequent learning and improvement efforts.

■ By integrating human factors principles into the existing method of significant event analysis—primarily through adopting a systems approach—we may be able to prompt care practitioners and teams to better consider and manage any related emotional reactions, and undertake more meaningful event analyses leading to improved care quality and safety.

ACKNOWLEDGMENTS

The authors offer sincere thanks to all the health care professionals who participated in this pilot study and who provided valuable feedback to help us with the development and testing process. They also thank The Health Foundation for funding their work and for providing ongoing support and encouragement, and are grateful to their employing organization, NHS Education for Scotland, for additional funding.

Footnotes

Disclosures: The authors declare no conflict of interest. P. Bowie, E. McNaughton, and D. Bruce conceived the idea, acquired funding, and led the study which was project managed by D. Holly. The data collection and analysis process was undertaken by D. Holly, E. Forrest, S. Stirling, J. Ferguson, and J. Wakeling, with additional data interpretation by P. Bowie, J. McKay, E. McNaughton, and D. Bruce. P. Bowie drafted the initial manuscript, and all authors contributed to the study design, conceptual model, guiding tool developments, and critical review, drafting, and final approval of the manuscript. The study was funded by the Health Foundation (www.health.org.uk) as part of a 2012/13 SHINE Programme Award.

REFERENCES

- 1. The Health Foundation. Evidence Scan: Levels of Harm in Primary Care. London, United Kingdom; 2011. Available at: http://www.health.org.uk/publications/levels-of-harm-in-primary-care/. Accessed September 14, 2015.

- 2. Francis R. Report of the Mid Staffordshire NHS Foundation Trust Public Inquiry. London, United Kingdom: The Stationery Office; 2013.

- 3. O'Beirne M, Sterling P, Palacios-Derflingher L, et al. Emotional impact of patient safety incidents on family physicians and their office staff. J Am Board Fam Med. 2012;25:177–183.

- 4. Bowie P, Pope L, Lough M. A review of the current evidence base for significant event analysis. J Eval Clin Pract. 2008;14:520–536.

- 5. Pringle M, Bradley C, Carmichael C, et al. Significant Event Auditing: A Study of the Feasibility and Potential of Case-based Auditing in Primary Medical Care. Occasional Paper No. 70. London, United Kingdom: Royal College of General Practitioners; 1995.

- 6. McKay J, Bradley N, Lough M, et al. A review of significant events analysed in general medical practice: implications for the quality and safety of patient care. BMC Fam Pract. 2009;10:61.

- 7. Cox SJ, Holden JD. A retrospective review of significant events reported in one district in 2004–2005. Br J Gen Pract. 2007;57:732–736.

- 8. Scott I. What are the most effective strategies for improving quality and safety of health care? Intern Med J. 2009;39:389–400.

- 9. Sargeant J, Mann K, Sinclair D, et al. Understanding the influence of emotions and reflection upon multi-source feedback acceptance and use. Adv Health Sci Educ Theory Pract. 2008;13:275–288.

- 10. Kluger AN, DeNisi A. Effects of feedback intervention on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol Bull. 1996;119:254–284.

- 11. Scott SD, Hirschinger LE, Cox KR, et al. The natural history of recovery for the healthcare provider “second victim” after adverse patient events. Qual Saf Health Care. 2009;18:325–330.

- 12. Wu AW, Steckelberg RC. Medical error, incident investigation and the second victim: doing better but feeling worse? BMJ Qual Saf. 2012;21:267–270.

- 13. Dekker S. Second Victim: Error, Guilt, Trauma and Resilience. Boca Raton, FL: CRC Press; 2013.

- 14. Bowie P, McKay J, Dalgetty E, et al. A qualitative study of why general practitioners may participate in significant event analysis and educational peer review. Qual Saf Health Care. 2005;14:185–189.

- 15. Wilson JR. Fundamentals of systems ergonomics/human factors. Appl Ergon. 2014;45:5–13.

- 16. Anderson JE, Kodate N. Learning from patient safety incidents in incident review meetings: organisational factors and indicators of analytic process effectiveness. Saf Sci. 2015. Available at: http://dx.doi.org/10.1016/j.ssci.2015.07.012. Accessed March 1, 2016.

- 17. Woloshynowych M, Rogers S, Taylor-Adams S, Vincent C. The investigation and analysis of critical incidents and adverse events in healthcare. Health Technol Assess. 2005;9:1–143.

- 18. Dekker S. The Field Guide to Understanding Human Error. Hampshire, England: Ashgate Publishing Limited; 2006.

- 19. Sargeant J, Mann K, van der Vleuten C, et al. Reflection: a link between receiving and using assessment feedback. Adv Health Sci Educ Theor Pract. 2009;3:399–410.

- 20. Boud D, Cohen R, Simpson J. Peer learning and assessment. Assess Eval High Educ. 1999;24:413–426.

- 21. Vincent C, Taylor-Adams S, Stanhope N. Framework for analysing risk and safety in clinical medicine. BMJ. 1998;11:1154–1157.

- 22. Carayon P, Schoofs Hundt A, Karsh BT, et al. Work system design for patient safety: the SEIPS model. Qual Saf Health Care. 2006;15(suppl 1):i50–i58.

- 23. Hignett S, Wilson JR, Morris W. Finding ergonomic solutions–participatory approaches. Occup Med (Lond). 2005;5:200–207.

- 24. Hannifin S. Review of literature on the Delphi technique. Available at: http://www.dcya.gov.ie/documents/publications/Delphi_Technique_A_Literature_Review.pdf. Accessed March 23, 2015.

- 25. Johnson R, Onwuegbuzie A. Mixed methods research: a research paradigm whose time has come. Educ Res. 2004;33:14–26.

- 26. Bowie P, McKay J, Norrie J, et al. Awareness and analysis of a significant event by general practitioners: a cross sectional survey. Qual Saf Health Care. 2004;13:102–107.

- 27. McKay J, Bowie P, Murray L, et al. Barriers to significant event analysis: an attitudinal survey of principals in general practice. Qual Prim Care. 2003;11:189–198.

- 28. McKay J, Bowie P, Murray L, et al. Attitudes to the identification and reporting of significant events in general practice. Clin Governance: An Int J. 2004;9:96–100.

- 29. Saldana J. The Coding Manual for Qualitative Researchers. Thousand Oaks, CA: Sage; 2009.

- 30. Elder NC, Dovey SM. Classification of medical errors and preventable adverse events in primary care: a synthesis of the literature. J Fam Pract. 2002;51:927–932.

- 31. Jacobs S, O'Beirne M, Derflingher LP, et al. Errors and adverse events in family medicine: developing and validating a Canadian taxonomy of errors. Can Fam Physician. 2007;53:271e6–271e270.

- 32. Makeham MA, Kidd MR, Salman DC, et al. The Threats to Patient Safety (TAPs) study: incidence of reported errors in general practice. Med J Aust. 2006;185:95–98.

- 33. Silk N. An Analysis of 1000 Consecutive General Practice Negligence Claims. Leeds, United Kingdom: Medical Protection Society (MPS); 2000.

- 34. Sargeant J. Theories to aid understanding and implementation of interprofessional education. J Contin Educ Health Prof. 2009;29:178–184.

- 35. Healthcare Improvement Scotland. A National Approach to Learning From Adverse Events Through Reporting and Review. Edinburgh, Scotland: Healthcare Improvement Scotland. Available at: http://www.healthcareimprovementscotland.org/our_work/governance_and_assurance/management_of_adverse_events1.aspx. Accessed September 14, 2015.

- 36. Goodson L, Vassar M. An overview of ethnography in healthcare and medical education research. Educ Eval Health Prof. 2011;8:4.

- 37. Leveson NG. Applying systems thinking to analyze and learn from events. Saf Sci. 2011;49:55–64.