Abstract

Objective

To provide a model for conducting cost‐effectiveness analyses in medical education. The model was based on a randomised trial examining the effects on patients' waiting times of training midwives to perform cervical length measurement (CLM), as compared with obstetrician‐performed CLM.

Methods

The model included four steps: (i) gathering data on training outcomes, (ii) assessing total costs and effects, (iii) calculating the incremental cost‐effectiveness ratio (ICER) and (iv) estimating cost‐effectiveness probability for different willingness to pay (WTP) values. To provide a model example, we conducted a randomised cost‐effectiveness trial. Midwives were randomised to CLM training (midwife‐performed CLMs) or no training (initial management by midwife, and CLM performed by obstetrician). Intervention‐group participants underwent simulation‐based and clinical training until they were proficient. During the following 6 months, waiting times from arrival to admission or discharge were recorded for women who presented with symptoms of pre‐term labour. Outcomes for women managed by intervention and control‐group participants were compared. These data were then used for the remaining steps of the cost‐effectiveness model.

Results

Intervention‐group participants needed a mean 268.2 (95% confidence interval [CI], 140.2‒392.2) minutes of simulator training and a mean 7.3 (95% CI, 4.4‒10.3) supervised scans to attain proficiency. Women who were scanned by intervention‐group participants (n = 50) had significantly shorter waiting times than those managed by the control group (n = 65; mean difference, 36.6 [95% CI 7.3‒65.8] minutes; p = 0.008), which corresponded to an ICER of EUR 0.45 minute−1. For WTP values below EUR 0.26 minute−1, obstetrician‐performed CLM was the most cost‐effective strategy, whereas midwife‐performed CLM was the most cost‐effective strategy for WTP values above EUR 0.73 minute−1.

Conclusion

Cost‐effectiveness models can be used to link quality of care to training costs. The example used in the present study demonstrated that different training strategies could be recommended as the most cost‐effective depending on administrators' willingness to pay per unit of the outcome variable.


Introduction

Health professions education involves training and certifying groups of care providers in specific procedures. The associated costs are considerable and have been estimated globally at more than 80 billion Euros per year.1 Because some training is usually more effective than no training2 but is often associated with considerable monetary and time costs, identifying the most cost‐effective strategy can be challenging.3 Many institutions must nonetheless balance the need to train new health care providers in certain procedures against the costs of that training.4 Cost‐effectiveness analyses are suited to these types of decisions; however, only a few studies have attempted to link training costs to quality of care.5, 6 Experimental research in medical education provides evidence of the effectiveness of different training interventions and programmes,7, 8 but the costs of these programmes often differ substantially. For example, simulation‐based training may improve learners' anatomical knowledge,9 but so does reading a textbook, at a fraction of the cost. Administrators and educators therefore have to ask not only whether an educational intervention works but also whether the associated change in the outcome of interest is large enough to justify a difference in training costs.10 Consequently, unless the costs of training are reported and compared in experimental trials, the ability of such trials to inform and guide educators and administrators on future practice is limited. Without this information, educators and administrators may become reluctant to invest in effective training programmes because the return on investment is not readily apparent.11 Even worse, costly training programmes may be adopted over cheaper but equally effective alternatives when cost‐effectiveness data are lacking. The discrepancy between how education scientists evaluate training programmes (on the basis of their effectiveness) and how administrators decide which programmes to adopt (on the basis of cost) may eventually lead to a gap between education evidence and actual practice.11, 12

Previous studies have attempted to establish the cost‐effectiveness of simulation programmes13, 14 and of different simulator fidelity levels.3 Despite these efforts, there is still no consensus on how to assess cost‐effectiveness in medical education.15 This may in part be attributed to difficulties in linking training interventions to patient outcomes.16 Accurately estimating the total effects of training may also prove challenging because of skill decay over time, study participant attrition, organisational changes and residual training effects that persist after the trial period has ended. Moreover, the effects of training are not always immediately apparent but may instead result in better preparation for future learning.17, 18 Likewise, cost estimates are prone to differences between countries, institutions and populations, which may limit the generalisability of cost‐effectiveness studies in medical education.19

The underlying principle of cost‐effectiveness analysis is to compare the difference in costs between two training programmes with the difference in their effectiveness.20 Administrators and educators may be prepared to pay only a certain amount for each unit of the outcome variable. This amount is known as the willingness to pay (WTP): the maximum amount that an administrator is prepared to pay to achieve or avoid a certain outcome. A new programme, intervention or training concept may be considered cost‐effective if the cost per unit of the outcome of interest is less than what administrators are willing to pay.19 Because both costs and effects are estimated with uncertainty, probabilistic models have been developed in health economics to account for it.21, 22, 23 Using these models, the likelihood that a given intervention is cost‐effective can be calculated and translated into concrete recommendations to administrators. However, the majority of existing economic evaluations in medical education research3 have not included probabilistic analyses, which weakens their recommendations.

As indicated above, there are multiple threats to the internal and external validity of cost‐effectiveness research in health professions education. This underlines the need for models that can guide educators, administrators and decision makers in choosing and prioritising training strategies. Based on current health economics theory19, 20, 21, 22, 23 and existing cost‐effectiveness studies in medical education,3, 4, 5, 6, 10, 11, 13, 14, 15 we propose a general model for conducting cost‐effectiveness studies in health professions education, the Programme Effectiveness and Cost Generalization (PRECOG) model. The PRECOG model includes four steps that represent increasing levels of recommendation strength, from assessment of programme effectiveness and cost to generalisation of cost‐effectiveness evidence. Each step of the PRECOG model is described in further detail in the following sections. In addition, we provide an example of a cost‐effectiveness trial of simulation‐based training in an obstetric emergency unit.

Methods

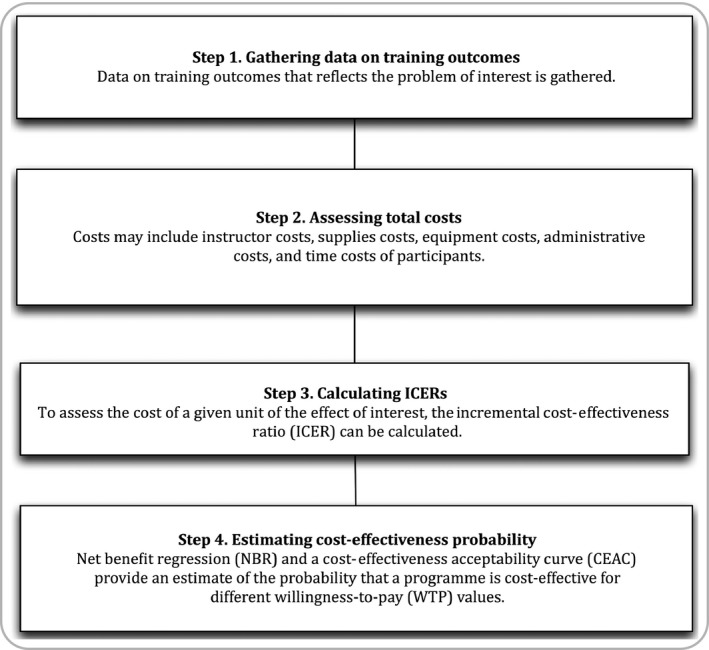

The four steps of the PRECOG model of collecting cost‐effectiveness evidence include: (i) gathering data on training outcomes, (ii) assessing total costs, (iii) calculating incremental cost‐effectiveness ratios, and (iv) estimating cost‐effectiveness probability (Fig. 1). Each step of the model was developed through a synthesis of current health economics theory19, 20, 21, 22, 23 and existing cost‐effectiveness studies in medical education.3, 4, 5, 6, 10, 11, 13, 14, 15, 24

Figure 1.

The Programme Effectiveness and Cost Generalization (PRECOG) model for cost‐effectiveness studies in medical education

Step 1. Gathering data on training outcomes

The first step includes gathering relevant data on training outcomes of interest. The purpose of this step is to estimate the effect of different training programmes and it follows standard research methods in health professions education. However, special consideration must be given to selection of the primary outcome of interest because it is placed in the denominator of a cost‐effectiveness ratio and any recommendation will be based on potential changes in this outcome. A particular challenge to gathering data on training outcomes is that effects are often measured immediately upon completion of training but may persist long after the study period has ended.25 The true effects of training are therefore underestimated if residual effects are omitted when estimating total training effects.

Step 2. Assessing total costs

The viewpoint for the cost analysis is rarely reported but is nonetheless important as the cost of training varies depending on who bears it (e.g. patients, hospitals, the national health service, trainees, etc.).19, 26 Existing health professions education literature has reported direct costs in terms of implementation cost,27 equipment cost,3, 13 personnel cost11, 28 and operating costs (e.g. operating theatre costs).28, 29, 30 Unrelated costs that may arise secondary to training are usually not reported. Such costs may involve consequences of ineffective training programmes for future practice (e.g. diagnostic errors or surgical complications). At some point, however, an artificial boundary must be drawn around the cost analyses and the role of unrelated costs is therefore debated in the health economics literature.19

Step 3. Determining the incremental cost‐effectiveness ratio (ICER)

When the effects and costs of different interventions have been established, the cost of one additional unit of the outcome of interest can be calculated as the incremental cost‐effectiveness ratio (ICER): the difference in training costs between two training programmes (ΔC) divided by the difference in their effectiveness (ΔE), that is, ICER = ΔC/ΔE.20 The resulting ratio is, however, a relative measure with no indication of uncertainty, and the generalisability of raw cost‐effectiveness ratios may therefore be limited.10
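The ratio above can be sketched in Python as follows. This is a minimal illustration, assuming that the total cost and total effect of each strategy have already been estimated in Steps 1 and 2; the `Strategy` class and the function names are hypothetical. The sign check reflects the quadrants of the cost‐effectiveness plane: a negative ICER is ambiguous on its own, because it arises both when the new strategy is cheaper and more effective and when it is costlier and less effective.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    """Total cost and total effect of one training strategy (hypothetical container)."""
    cost: float    # e.g. EUR
    effect: float  # e.g. minutes of waiting time saved


def icer(new: Strategy, comparator: Strategy) -> float:
    """Incremental cost-effectiveness ratio, ICER = delta C / delta E."""
    delta_c = new.cost - comparator.cost
    delta_e = new.effect - comparator.effect
    if delta_e == 0:
        raise ValueError("ICER is undefined when both strategies are equally effective")
    return delta_c / delta_e


def classify(new: Strategy, comparator: Strategy) -> str:
    """Locate the comparison on the cost-effectiveness plane before reading the ICER."""
    delta_c = new.cost - comparator.cost
    delta_e = new.effect - comparator.effect
    if delta_c == 0 and delta_e == 0:
        return "equivalent"
    if delta_c <= 0 and delta_e >= 0:
        return "dominant"   # no more costly and no less effective
    if delta_c >= 0 and delta_e <= 0:
        return "dominated"  # no cheaper and no more effective
    return "trade-off"      # the ICER must be compared with the willingness to pay


# Usage with the totals reported in the Results (control group: no training cost)
training = Strategy(cost=2688.3, effect=5990)
no_training = Strategy(cost=0.0, effect=0.0)
print(classify(training, no_training), round(icer(training, no_training), 2))
# prints: trade-off 0.45
```

Only the "trade-off" quadrant calls for the WTP comparison described in Step 4; a dominant strategy is preferred at any WTP.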

Step 4. Estimating cost‐effectiveness probability

A new programme, intervention or training concept may be considered cost‐effective if the cost of one additional unit of the outcome of interest is less than the administrator's willingness to pay (WTP), the maximum amount that an administrator is prepared to pay to achieve or avoid a certain outcome.21 Because uncertainty is present in each of the preceding steps, probabilistic models have been developed in health economics to account for it.22, 23 Using an estimate of this uncertainty, the likelihood that a given intervention is cost‐effective can be calculated for different WTP values and then used to provide concrete recommendations to administrators and educators. Uncertainty can be estimated using net benefit regression (NBR) models, which take different WTP values, effects and costs into consideration. The net benefit of each observation is calculated as Net Benefit = WTP × E − C, where E and C are the observed effect and cost, respectively, and net benefit is then regressed on an indicator of the training strategy to estimate the incremental net benefit and its uncertainty. Based on the variances of effects and costs, a cost‐effectiveness acceptability curve (CEAC) can then be generated from the NBR model to show graphically the probability that a training strategy is cost‐effective at different WTP values.19, 22, 23
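The net benefit regression idea can be sketched with the Python standard library. With only an intercept and a 0/1 strategy indicator as regressors, the ordinary least squares coefficient on the indicator equals the difference in mean net benefit between groups, and its standard error is the pooled two‐sample standard error, so both are computed directly here instead of fitting a regression. This is a simplified sketch: the per‐participant data are hypothetical, covariates are omitted, and a normal approximation replaces the t distribution when converting the estimate into a probability of cost‐effectiveness.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def incremental_net_benefit(wtp, e_new, c_new, e_ctl, c_ctl):
    """Return (INB, SE) for one WTP value.

    nb_i = wtp * e_i - c_i for every observation; INB is the difference in
    mean net benefit, i.e. the OLS coefficient on a 0/1 strategy indicator.
    """
    nb_new = [wtp * e - c for e, c in zip(e_new, c_new)]
    nb_ctl = [wtp * e - c for e, c in zip(e_ctl, c_ctl)]
    n1, n0 = len(nb_new), len(nb_ctl)
    inb = mean(nb_new) - mean(nb_ctl)
    # Residual variance of the dummy-variable regression = pooled within-group variance
    pooled = ((n1 - 1) * variance(nb_new) + (n0 - 1) * variance(nb_ctl)) / (n1 + n0 - 2)
    se = sqrt(pooled * (1 / n1 + 1 / n0))
    return inb, se


def prob_cost_effective(wtp, e_new, c_new, e_ctl, c_ctl):
    """One point on the CEAC: P(INB > 0) under a normal approximation."""
    inb, se = incremental_net_benefit(wtp, e_new, c_new, e_ctl, c_ctl)
    if se == 0:
        return 1.0 if inb > 0 else 0.0
    return NormalDist().cdf(inb / se)


# Hypothetical per-participant effects (minutes saved) and costs (EUR)
e_new, c_new = [30, 40, 35, 45], [10, 12, 11, 13]
e_ctl, c_ctl = [0, 5, -5, 0], [0, 0, 0, 0]
curve = {wtp: prob_cost_effective(wtp, e_new, c_new, e_ctl, c_ctl)
         for wtp in (0.0, 0.1, 0.3, 0.5, 1.0)}
```

Plotting `curve` against WTP gives the CEAC; in practice a regression library would be used so that covariates can be added to the model.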

Example of Step 1: gathering data on training outcomes

Purpose

The purpose of the example study was to determine the cost‐effectiveness of training midwives to perform cervical scans compared with obstetrician‐performed cervical scans with regard to time from patient arrival to admission or discharge.

Design, participants and randomisation

A randomised study was performed to explore the effects of training a group of midwives to assess cervical length with regard to time from arrival to discharge or admission for women with symptoms of preterm onset of labour. The study was approved by the Danish Data Protection Agency (Protocol No. 2007‐58‐0015), registered at clinicaltrials.gov (Identifier NCT02001467), and was exempt from ethical approval by the Regional Ethical Committee for the Capital Region (Protocol No. H‐4‐2013‐FSP). Participants included 12 certified midwives who volunteered for the study and provided informed consent. The eligibility criteria were employment at the Obstetric Emergency Unit, Nordsjaelland's University Hospital, Hillerød, and no prior transvaginal ultrasound experience. Participants were randomised to ultrasound training or no training in a 1 : 1 ratio.

Intervention and control

Intervention‐group participants completed two types of simulation‐based ultrasound training. They were trained using a virtual reality (VR) simulator (Scantrainer™, Medaphor, Cardiff, UK) and a physical manikin (BluePhantom™, Sarasota, USA) until predefined performance levels were attained. Validity evidence for the simulator test scores and the performance levels used had been collected in previous studies and included content evidence, internal structure, relations to other variables and consequences of testing.31, 32, 33, 34 A simulator instructor provided feedback during all simulation‐based training. After completing the simulator training, the participants were trained and assessed by a foetal medicine consultant (LNN). Training was completed when three consecutive scans were rated above a predefined performance level.33 Control‐group participants did not receive any ultrasound training.

Outcomes

Once training was completed, intervention‐group participants independently performed CLMs on women who presented with symptoms of preterm onset of labour. Women with a long cervix (cervical length above 25 mm) could be discharged without consulting an obstetrician, whereas an obstetrician was consulted for further diagnostics or follow‐up if the cervix was shortened (cervical length 25 mm or less). Control‐group participants provided standard patient care and consulted an obstetrician if a CLM was needed. Over the next 6 months, all study participants recorded each woman's time of arrival and time of discharge or admission (waiting time; primary outcome), as well as who performed the CLM (midwife, obstetrician, or midwife followed by obstetrician), to determine the number of changes in the responsible health care provider (gaps in continuity of care; secondary outcome). The primary outcome was chosen because managing women with preterm onset of labour is time‐sensitive with regard to initiation of treatment and because we expected the uncertainty during the waiting time to cause patients considerable psychological stress.

The total effects of training were not limited to the first 6 months because the participants continued to perform CLMs after the study period was completed. To account for this residual effect, the number of patients treated by intervention‐group participants over a 60‐month period was estimated from the average number of patients managed per participant per month and the observed monthly participant attrition rate. This number was multiplied by the mean reduction in waiting time to determine the total number of minutes saved. Between‐group differences in mean waiting time and in changes in the responsible health care provider were tested using Student's t‐test and the chi‐squared test, respectively.
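The extrapolation described above can be sketched as follows. The article does not report the monthly patient volume, the attrition rate or the exact formula used, so this sketch assumes a constant monthly attrition proportion, under which the expected number of active participants in month m is n × (1 − attrition)^m; all parameter names and values are hypothetical.

```python
def expected_patients(n_participants: int, patients_per_month: float,
                      monthly_attrition: float, months: int = 60) -> float:
    """Expected number of patients managed by trained participants over the horizon.

    Assumes a constant monthly attrition proportion, so month m (m = 0, 1, ...)
    has n_participants * (1 - monthly_attrition) ** m participants still active,
    each managing patients_per_month patients.
    """
    if not 0 <= monthly_attrition < 1:
        raise ValueError("monthly_attrition must be in [0, 1)")
    return sum(n_participants * (1 - monthly_attrition) ** m * patients_per_month
               for m in range(months))


def total_minutes_saved(n_patients: float, mean_reduction_minutes: float) -> float:
    """Total effect: expected patients multiplied by the mean waiting-time reduction."""
    return n_patients * mean_reduction_minutes


# Hypothetical inputs, for illustration only
patients = expected_patients(n_participants=6, patients_per_month=1.0,
                             monthly_attrition=0.05)
minutes = total_minutes_saved(patients, mean_reduction_minutes=36.6)
```

Under this assumption the sum also has the closed form n·p·(1 − (1 − a)^M)/a for a > 0, where p is patients per participant per month and M the number of months; the loop is kept for readability and to make it easy to add, for example, a skill‐decay factor per month.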

Example of Step 2: assessing total costs

The viewpoint for the cost‐effectiveness analysis was that the hospital carried the costs of training. To estimate the implementation costs, participants' time expenditure was assessed. The total number of simulator instructor and clinician hours per participant was estimated, and time costs were converted into monetary costs by multiplying the number of hours used by the cost per hour for the midwives, simulator instructor and clinician teacher. The total costs were estimated by adding implementation costs and equipment costs (i.e. simulator costs). Equipment costs were depreciated over 5 years, and the cost per month was calculated.
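The cost aggregation can be sketched in Python using the hours, hourly rates and purchase prices listed in Table 1. Straight‐line depreciation over 60 months is assumed, as the 5‐year depreciation described above implies, and the function and variable names are hypothetical. Because the sketch multiplies unrounded hours by the published rates, its totals may differ from Table 1 by less than EUR 1, presumably because the table reports rounded intermediate values.

```python
def total_training_cost(staff, equipment, study_months=1, depreciation_months=60):
    """Return (implementation, equipment, total) costs from the hospital's viewpoint.

    staff:     {role: (hours, cost_per_hour)}
    equipment: {item: purchase_price}, depreciated straight-line per month
    """
    implementation = sum(h * rate for h, rate in staff.values())
    monthly_equipment = sum(price / depreciation_months for price in equipment.values())
    equipment_cost = monthly_equipment * study_months
    return implementation, equipment_cost, implementation + equipment_cost


def hours(h, m=0):
    """Convert hours and minutes to decimal hours."""
    return h + m / 60


staff = {
    "midwives (n = 6)": (hours(34, 41), 17.4),
    "simulator instructor": (hours(26, 37), 29.1),
    "clinician teacher": (hours(7, 20), 38.2),
}
equipment = {"VR simulator": 56_979.0, "physical manikin": 4_841.8}

impl, equip, total = total_training_cost(staff, equipment)
print(f"{impl:.1f} {equip:.1f} {total:.1f}")
```

Changing `study_months` shows how the equipment share grows if the simulators are dedicated to the programme for longer than the 1‐month study period.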

Example of Step 3: determining the incremental cost‐effectiveness ratio (ICER)

An ICER was calculated for the primary and secondary outcomes. The costs of the training programme minus those of the control group (no cost) were divided by the difference in their outcomes (mean difference in waiting times between groups and difference in frequency of gaps in continuity of care).
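The two ratios reported in the Results can be reproduced from the published figures, as sketched below. For waiting time, the denominator is the extrapolated total of minutes saved. For gaps in continuity of care, the article does not state the denominator explicitly; the sketch assumes it is the expected number of avoided gaps, that is, the 164 extrapolated women multiplied by the 84‐percentage‐point reduction in gaps. This reading is an assumption made here, but it reproduces the reported EUR 19.51.

```python
def example_icers(total_cost=2688.3, control_cost=0.0, minutes_saved=5990,
                  women_managed=164, gap_rate_new=0.16, gap_rate_control=1.00):
    """ICERs for both outcomes of the example trial (inputs taken from the Results).

    The denominator for gaps in continuity of care (women x reduction in the
    proportion with a gap) is an assumption, not stated in the article.
    """
    delta_cost = total_cost - control_cost
    icer_time = delta_cost / minutes_saved                    # EUR per minute saved
    avoided_gaps = women_managed * (gap_rate_control - gap_rate_new)
    icer_gaps = delta_cost / avoided_gaps                     # EUR per avoided gap
    return icer_time, icer_gaps


icer_time, icer_gaps = example_icers()
print(f"EUR {icer_time:.2f} per minute, EUR {icer_gaps:.2f} per avoided gap")
# prints: EUR 0.45 per minute, EUR 19.51 per avoided gap
```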

Example of Step 4: estimating cost‐effectiveness probability for different WTP values

A template available online for Excel 2011 was used to generate the NBR estimates.35 To determine the distribution of NBR estimates for different WTP values, Monte Carlo simulations were performed using the Cholesky decomposition technique, based on the observed means and standard deviations of costs and effects in the intervention and control groups. A CEAC was generated based on the Monte Carlo simulations and the NBR model.35 One‐sided confidence intervals were used to assess which strategy was cost‐effective for different WTP values.
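The Monte Carlo step can be illustrated with the Python standard library. For two correlated normal variables, the Cholesky factor of the covariance matrix has a closed form, so correlated draws of incremental cost and incremental effect can be built from two independent standard normal draws. This is a simplified sketch rather than the Excel template used in the study: it samples incremental cost and effect directly (not group‐level costs and effects), and the means, standard deviations and correlation are hypothetical.

```python
import random
from math import sqrt


def correlated_draws(mean_c, sd_c, mean_e, sd_e, rho, n, seed=2015):
    """Draw (delta_cost, delta_effect) pairs from a bivariate normal distribution.

    The covariance matrix [[sd_c**2, rho*sd_c*sd_e], [rho*sd_c*sd_e, sd_e**2]]
    has Cholesky factor L = [[sd_c, 0], [rho*sd_e, sd_e*sqrt(1 - rho**2)]];
    multiplying L by two independent N(0, 1) draws gives the correlated pair.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        d_cost = mean_c + sd_c * z1
        d_effect = mean_e + sd_e * (rho * z1 + sqrt(1 - rho ** 2) * z2)
        draws.append((d_cost, d_effect))
    return draws


def ceac(draws, wtp_values):
    """For each WTP, the share of draws with positive incremental net benefit."""
    return [sum(wtp * d_e - d_c > 0 for d_c, d_e in draws) / len(draws)
            for wtp in wtp_values]


def wtp_threshold(draws, wtp_values, level=0.95):
    """Smallest WTP at which the new strategy is cost-effective with probability >= level."""
    for wtp, p in zip(wtp_values, ceac(draws, wtp_values)):
        if p >= level:
            return wtp
    return None


# Hypothetical inputs: incremental cost in EUR, incremental effect in minutes
draws = correlated_draws(mean_c=100.0, sd_c=10.0, mean_e=200.0, sd_e=50.0,
                         rho=0.3, n=5000)
wtp_grid = [i / 100 for i in range(0, 201)]  # EUR 0.00 to 2.00 per minute
probabilities = ceac(draws, wtp_grid)
```

`wtp_threshold(draws, wtp_grid)` corresponds to the one‐sided 95% limit in the text: above it, the new strategy is cost‐effective with at least 95% probability. The mirror‐image limit for the comparator is the largest WTP at which the probability is 5% or less.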

Results

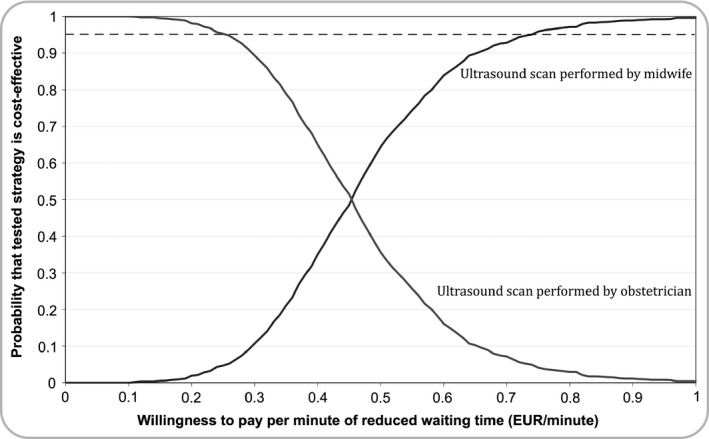

Intervention‐group participants needed a mean 268.2 (95% confidence interval [CI], 140.2‒392.2) minutes of simulator training and a mean 7.3 (95% CI, 4.4‒10.3) supervised scans to attain proficiency. Table 1 lists implementation, equipment and total training costs. Patients who were scanned by the intervention group had significantly shorter waiting times (n = 50; mean 80.7 [95% CI 67.4‒93.9] minutes) than those managed by the control group (n = 65; mean 117.2 [95% CI 90.5‒144.0] minutes; mean difference between groups, 36.6 [95% CI 7.3‒65.8] minutes; p = 0.008). Gaps in continuity of care were reduced by 84% for patients managed by the intervention group as compared with the control group (16% versus 100%, p < 0.001). The estimated total number of women managed by the intervention group over a 60‐month period was 164, corresponding to a total of 5990 saved minutes (99 hours 50 minutes). The ICER for time saved was EUR 0.45 minute−1, and the ICER for gaps in continuity of care was EUR 19.51 per avoided gap. Figure 2 shows the CEAC. For WTP values below EUR 0.26 minute−1, there was a 95% probability that obstetrician‐performed CLM was the most cost‐effective strategy. For WTP values above EUR 0.73 minute−1, there was a 95% probability that midwife‐performed CLM was the most cost‐effective strategy.
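The chi‐squared comparison of gaps in continuity of care can be checked with a short standard‐library function. The article reports percentages rather than counts, so the counts below are an assumption: 8 of 50 women (16%) in the intervention group and 65 of 65 (100%) in the control group, treating each woman as one observation. Whether a continuity correction was applied is also not stated, and none is used here.

```python
from math import erfc, sqrt


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared test without continuity correction for [[a, b], [c, d]].

    Rows are groups; columns are 'gap in continuity of care' / 'no gap'.
    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 df the statistic is Z**2, so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))
    return chi2, erfc(sqrt(chi2 / 2))


# Assumed counts: intervention 8 gaps / 42 none; control 65 gaps / 0 none
statistic, p_value = chi_squared_2x2(8, 42, 65, 0)
```

With these assumed counts the statistic is far above the 1‐df critical value, consistent with the reported p < 0.001.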

Table 1.

Implementation costs and total costs of training. Costs per hour were based on contract‐regulated wages for health providers in 2013

| Cost item | Value |
|---|---|
| Implementation costs | |
| Midwives | |
| Training time for all midwives (n = 6) | 34 hours 41 minutes |
| Cost per hour | EUR 17.4 |
| Total cost of all midwives (n = 6) | EUR 603.2 |
| Simulator instructor | |
| Total number of hours of instruction | 26 hours 37 minutes |
| Cost per hour | EUR 29.1 |
| Total cost of instructor | EUR 774.6 |
| Clinician teacher | |
| Number of hours of supervision | 7 hours 20 minutes |
| Cost per hour | EUR 38.2 |
| Total cost of clinician teacher | EUR 280.1 |
| Total implementation costs | EUR 1657.9 |
| Equipment costs | |
| Virtual reality simulator (Scantrainer, Medaphor): total cost / cost per month over 5 years | EUR 56 979.0 / EUR 949.7 |
| Physical manikin (BluePhantom, CAE): total cost / cost per month over 5 years | EUR 4841.8 / EUR 80.7 |
| Equipment costs during study period (1 month) | EUR 1030.4 |
| Total costs (implementation costs + equipment costs during study period) | EUR 2688.3 |

Figure 2.

A cost‐effectiveness acceptability curve (CEAC) showing the probability that the training strategies were cost‐effective for different willingness‐to‐pay values. The dashed line denotes the 95% confidence limit that the training strategy is cost‐effective.

Discussion

We provided a methodological basis for conducting future cost‐effectiveness studies in order to meet the calls for economic evaluations in health professions education.5, 6 We demonstrated a 4‐step model to determine cost effectiveness of training interventions (the PRECOG model). The model included gathering training outcomes, assessing costs, calculating cost‐effectiveness ratios, and estimating the probability that the training strategies were cost‐effective for different willingness to pay values.

A substantial challenge for cost‐effectiveness studies in medical education is the limited generalisability of results due to differences in training costs, study context and curriculum design across institutions and countries.3, 19 Systematic reviews and meta‐analyses may overcome some of these limitations, but experimental research in medical education often fails to report the costs of training interventions, which makes economic evaluations across studies difficult. Furthermore, comparisons of effects may be complicated by different evaluation methods, outcome measures6 and follow‐up durations. We proposed a model in which the total effects of training were estimated by extrapolating from immediate effects while adjusting for participant attrition and, when applicable, skill decay. If only immediate effects are taken into account, the cost per unit of the outcome variable may be overestimated. To account for decision uncertainty, we used a probabilistic method for estimating cost‐effectiveness. The importance of this final step was highlighted in the example provided, in which either training strategy could be recommended as the most cost‐effective depending on administrators' willingness to pay. If administrators were willing to pay more than EUR 0.73 per minute of reduced waiting time, there was a high probability that training a group of midwives to perform CLMs would be cost‐effective. Conversely, if administrators were willing to pay less than EUR 0.26 per minute, there was a high probability that maintaining obstetrician‐performed CLMs would be cost‐effective.

The example used in this study involved training a group of midwives to perform CLMs to reduce patient waiting time before admission or discharge and to reduce gaps in continuity of care. Time is an important parameter when administering corticosteroids to women with preterm onset of labour,36 and gaps in continuity of care are associated with errors during patient handover.37 Both parameters were therefore proxy indicators of care quality. However, we did not attempt to explore their effects on patient outcomes such as neonatal morbidity or the number of errors committed during patient handover.

Strengths of this study include the use of patient‐level data, a randomised design, standardised protocols for training, assessment and certification, and robust statistical models. There are also some limitations. First, the small sample size and single‐centre design limit the generalisability of the study results. Second, only direct effects of the training intervention were assessed, not indirect effects such as the reduced workload for obstetricians and the increased workload for midwives. Third, the equipment costs may have been slightly overestimated because we assumed that the simulators could not be used for other purposes during the study period. Finally, the model does not provide a one‐size‐fits‐all approach to economic evaluations in medical education, but it may be used to improve the methodological rigour of future cost‐effectiveness studies. We chose the current model over competing models because it enabled us to link training outcomes to costs and to provide a generalisable estimate of the probability that the intervention was cost‐effective. However, the general principles of the model may equally well be applied with other methods for estimating effects, costs, uncertainty and generalisation.

Providing evidence of cost‐effectiveness in future experimental medical education trials may bridge the gap between best practice and actual practice,12 enabling administrators to prioritise training interventions on the basis of cost‐effectiveness evidence rather than effectiveness evidence alone. However, this requires cost estimates to be reported in future effectiveness studies.

Conclusion

Cost‐effectiveness models can be used to link quality of care to training costs. We demonstrated a 4‐step model to determine the cost‐effectiveness of training interventions. In the example used in the present study, training midwives to perform cervical length measurements was cost‐effective for WTP values above EUR 0.73 per minute of reduced waiting time, whereas maintaining obstetrician‐performed cervical length measurements was cost‐effective for WTP values below EUR 0.26 per minute of reduced waiting time.

Contributors

MGT, LNN and AT contributed to the conception of the study. MGT, LN, AT and MEM contributed to the study design. MGT, MEM, CW and LD contributed to the data acquisition. CR contributed to data interpretation. MGT prepared the first draft of the article. CR and AT contributed to the first draft and CR, AT, LN, MEM, CW and LD critically revised the subsequent drafts. All authors approved the final manuscript and agree to be accountable for all aspects of the work.

Funding

This study was funded by the Tryg Foundation.

Conflicts of interest

None.

Ethical approval

The study was approved by the Danish Data Protection Agency (Protocol No. 2007‐58‐0015) and was exempt from ethical approval by the Regional Ethical Committee for the Capital Region (Protocol No. H‐4‐2013‐FSP).

Acknowledgements

None.

Medical Education 2015: 49: 1263–1271 doi: 10.1111/medu.12882

References

- 1. Frenk J, Chen L, Bhutta ZA, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet 2010;376:1923–58.

- 2. Cook DA. If you teach them, they will learn: why medical education needs comparative effectiveness research. Adv Health Sci Educ Theory Pract 2012;17:305–10.

- 3. Isaranuwatchai W, Brydges R, Carnahan H, Backstein D, Dubrowski A. Comparing the cost‐effectiveness of simulation modalities: a case study of peripheral intravenous catheterization training. Adv Health Sci Educ 2013;19:219–32.

- 4. Magee SR, Shields R, Nothnagle M. Low cost, high yield: simulation of obstetric emergencies for family medicine training. Teach Learn Med 2013;25:207–10.

- 5. Zendejas B, Wang AT, Brydges R, Hamstra SJ, Cook DA. Cost: the missing outcome in simulation‐based medical education research: a systematic review. Surgery 2013;153:160–76.

- 6. Walsh K, Levin H, Jaye P, Gazzard J. Cost analyses approaches in medical education: there are no simple solutions. Med Educ 2013;47:962–8.

- 7. Cook DA, Hatala R, Brydges R, Zendejas B. Technology‐enhanced simulation for health professions education: a systematic review and meta‐analysis. JAMA 2011;306:978–88.

- 8. McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ. A critical review of simulation‐based medical education research: 2003–2009. Med Educ 2009;44:50–63.

- 9. Knobe M, Carow JB, Ruesseler M, Leu BM, Simon M, Beckers SK, et al. Arthroscopy or ultrasound in undergraduate anatomy education: a randomized cross‐over controlled trial. BMC Med Educ 2012;12:85.

- 10. Fletcher JD, Wind AP. Cost considerations in using simulations for medical training. Mil Med 2013;178:37–46.

- 11. Wynn BO, Smalley R, Cordasco KM. Does it cost more to train residents or to replace them? RAND Corporation; 2013. www.rand.org [Accessed 15 June 2015].

- 12. van der Vleuten CPM, Driessen EW. What would happen to education if we take education evidence seriously? Perspect Med Educ 2014;3:222–32.

- 13. Cohen ER, Feinglass J, Barsuk JH, Barnard C, O'Donnell A, McGaghie WC, et al. Cost savings from reduced catheter‐related bloodstream infection after simulation‐based education for residents in a medical intensive care unit. Simul Healthc 2010;5:98–102.

- 14. Stefanidis D, Hope WW, Korndorffer JR, Markley S, Scott DJ. Initial laparoscopic basic skills training shortens the learning curve of laparoscopic suturing and is cost‐effective. J Am Coll Surg 2010;210:436–40.

- 15. Iribarne A, Easterwood R, Russo MJ, Wang YC. Integrating economic evaluation methods into clinical and translational science award consortium comparative effectiveness educational goals. Acad Med 2011;86:701–5.

- 16. Cook DA, West CP. Perspective: reconsidering the focus on "outcomes research" in medical education: a cautionary note. Acad Med 2013;88:162–7.

- 17. Bransford JD, Schwartz DL. Rethinking transfer: a simple proposal with multiple implications. Rev Res Educ 2009;24:153–66.

- 18. Mylopoulos M, Woods N. Preparing medical students for future learning using basic science instruction. Med Educ 2014;48:667–73.

- 19. Drummond MF, Sculpher MJ, Torrance GW, O'Brien BJ, Stoddart GL. Methods for the Economic Evaluation of Health Care Programmes. Oxford: Oxford University Press; 2005.

- 20. Gold MR, Siegel JE, Russell LB, Weinstein MC. Cost‐Effectiveness in Health and Medicine: Report of the Panel on Cost‐Effectiveness in Health and Medicine. New York: Oxford University Press; 1996.

- 21. Hoch JS, Rockx MA, Krahn AD. Using the net benefit regression framework to construct cost‐effectiveness acceptability curves: an example using data from a trial of external loop recorders versus Holter monitoring for ambulatory monitoring of "community acquired" syncope. BMC Health Serv Res 2006;6:68.

- 22. O'Brien BJ, Drummond MF, Labelle RJ, Willan A. In search of power and significance: issues in the design and analysis of stochastic cost‐effectiveness studies in health care. Med Care 1994;32:150–63.

- 23. Van Hout BA, Al MJ, Gordon GS. Costs, effects and C/E‐ratios alongside a clinical trial. Health Econ 1994;3:309–19.

- 24. Brown CA, Belfield CR, Field SJ. Cost effectiveness of continuing professional development in health care: a critical review of the evidence. BMJ 2002;324:652–5.

- 25. Tolsgaard MG, Ringsted C, Dreisler E, Nørgaard LN, Petersen JH, Madsen ME, et al. Sustained effect of simulation‐based ultrasound training on clinical performance: a randomized trial. Ultrasound Obstet Gynecol 2015;46(3):312–8.

- 26. Harrison EM. The cost of surgical training. ASGBI News Lett 2006:1–17. http://www.asit.org [Accessed 15 June 2015].

- 27. Danzer E, Dumon K, Kolb G, Pray L, Selvan B, Resnick AS, et al. What is the cost associated with the implementation and maintenance of an ACS/APDS‐based surgical skills curriculum? J Surg Educ 2011;68:519–25.

- 28. von Strauss Und Torney M, Dell‐Kuster S, Mechera R, Rosenthal R, Langer I. The cost of surgical training: analysis of operative time for laparoscopic cholecystectomy. Surg Endosc 2012;26:2579–86.

- 29. Babineau TJ, Becker J, Gibbons G, Sentovich S, Hess D, Robertson S, et al. The "cost" of operative training for surgical residents. Arch Surg 2004;139:366–9.

- 30. Goodwin AT, Birdi I, Ramesh TP, Taylor GJ, Nashef SA, Dunning JJ, et al. Effect of surgical training on outcome and hospital costs in coronary surgery. Heart 2001;85:454–7.

- 31. Madsen ME, Konge L, Nørgaard LN, Tabor A, Ringsted C, Klemmensen AK, et al. Assessment of performance measures and learning curves for use of a virtual‐reality ultrasound simulator in transvaginal ultrasound examination. Ultrasound Obstet Gynecol 2014;44:693–9.

- 32. Tolsgaard MG, Todsen T, Sorensen JL, Ringsted C, Lorentzen T, Ottesen B, et al. International multispecialty consensus on how to evaluate ultrasound competence: a Delphi consensus survey. PLoS ONE 2013;8:e57687.

- 33. Tolsgaard MG, Ringsted C, Dreisler E, Klemmensen A, Loft A, Sorensen JL, et al. Reliable and valid assessment of ultrasound operator competence in obstetrics and gynecology. Ultrasound Obstet Gynecol 2014;43:437–43.

- 34. Todsen T, Tolsgaard MG, Olsen BH, Henriksen BM, Hillingsø JG, Konge L, et al. Reliable and valid assessment of point‐of‐care ultrasonography. Ann Surg 2014;261:309–15.

- 35. Barton GR, Briggs AH, Fenwick E. Optimal cost‐effectiveness decisions: the role of the Cost‐Effectiveness Acceptability Curve (CEAC), the Cost‐Effectiveness Acceptability Frontier (CEAF). Value Health 2008;11:886–97.

- 36. Roberts D, Dalziel S. Antenatal corticosteroids for accelerating fetal lung maturation for women at risk of preterm birth. Cochrane Database Syst Rev 2006;3:CD004454.

- 37. Cook RI, Render M, Woods DD. Gaps in the continuity of care and progress on patient safety. BMJ 2000;320:791–4.