Abstract

Background

We describe our use of cognitive interviews in developing a measure of “preventive misconception” to demonstrate the importance of this approach to researchers developing surveys in empirical bioethics. The preventive misconception involves research participants’ false beliefs about a prevention trial, including beliefs that the interventions being tested will certainly be effective.

Methods

We developed and refined a measure of the preventive misconception using qualitative interviews that focused on cognitive testing of proposed survey items with HIV prevention trial participants.

Results

Two main problems emerged during initial interviews. First, the phrase “reduce your risk,” used to elicit beliefs about risk reduction from the use of study medications, was interpreted as relating to a reduction of risky behaviors. Second, the phrase “participating in this study,” intended to elicit beliefs about trial group assignment, was interpreted as relating to personal behavior changes associated with study participation. In additional interviews using a revised measure, these problems no longer arose, and participants felt the response options were appropriate for conveying their answers.

Conclusions

These findings underscore the importance of cognitive testing in developing surveys for empirical bioethics.

Keywords: Bioethics, Empirical Research, Interview, Qualitative Research, HIV/AIDS, Health Risk Behaviors

INTRODUCTION

Survey research accounts for most empirical research in bioethics (Sugarman and Sulmasy 2010). Cognitive interviewing has become an established means of helping to ensure that survey instruments are sound, which is an essential step in generating meaningful results (DeWalt et al. 2007; Willis 2005). Although a variety of approaches are used in cognitive interviewing, the technique essentially involves qualitative assessments of the understandability and usability of particular survey items and materials to gather information about a particular domain of interest. Within bioethics, Willis (2006) has suggested that cognitive interviewing can be used to enhance informed consent. In addition, cognitive interviews have been used in developing information materials for disclosing financial conflicts of interest in research (Weinfurt et al. 2007) and consent for biobanking (Beskow and Dean 2008). In this paper, we describe our use of cognitive interviews in developing a measure of “preventive misconception” to demonstrate the importance of this approach to those developing surveys in empirical bioethics.

The “preventive misconception” has been defined as “the overestimate in probability or level of personal protection that is afforded by being enrolled in a trial of a preventive intervention” (Simon et al. 2007, 371). Having a preventive misconception suggests the possibility of a problem with informed consent and may contribute to behavioral changes that could put the research participant and others at risk. Thus, harboring a preventive misconception could be problematic in any prevention trial. However, it may be especially problematic in HIV prevention trials, in which a change in risk behaviors could increase the likelihood of becoming infected.

Mounting evidence suggests that the preventive misconception exists in HIV prevention research. For example, in a trial of a vaginal microbicide for HIV prevention conducted in Malawi and Zimbabwe, the possibility of a preventive misconception was identified among 29% of participants who were interviewed (Woodsong et al. 2012). In another vaginal microbicide trial in South Africa, approximately 15% of participants who completed exit interviews appeared to have a preventive misconception (Dellar et al. 2014). The study found a significant association between risk behaviors and having a preventive misconception. The preventive misconception has also been reported in interviews with adolescents in the United States who were undergoing a consent process for a hypothetical HIV vaccine trial (Ott et al. 2013) and in key informant interviews and focus groups about willingness to participate in an HIV vaccine trial with men who have sex with men in India (Chakrapani et al. 2012).

Despite evidence supporting the presence of the preventive misconception, the findings to date have arisen through interviews and focus groups that were not standardized across studies. Accordingly, in this project, we developed and refined a quantitative measure of the preventive misconception by conducting qualitative interviews focused on cognitive testing of the draft instruments with participants in an HIV prevention trial.

METHODS

Development of a Conceptual Model to Guide Instrument Design

In the original description of the preventive misconception, Simon et al. (2007) proposed two subtypes: (1) overestimation by the participant of the probability that he or she will receive the experimental intervention and (2) overestimation by the participant of the probability of receiving personal benefit from the experimental intervention. Building on this previous work, the authors developed a draft conceptual model and discussed components of the model with members of an expert advisory panel assembled for this project (Table 1). As a result of these discussions, the authors developed a conceptual model and drafted a set of survey items for qualitative testing (Table 2).

Table 1.

Members of the Advisory Panel

| Panel Member | Institutional Affiliation |
|---|---|
| Noel T. Brewer, PhD | University of North Carolina at Chapel Hill |
| David Celentano, ScD, MHS | Johns Hopkins University |
| Thomas J. Coates, PhD | University of California, Los Angeles |
| Joel E. Gallant, MD, MPH | Johns Hopkins University |
| Nancy E. Kass, ScD | Johns Hopkins University |
| Kathleen M. MacQueen, PhD, MPH | FHI360 |
| Kenneth H. Mayer, MD | Brown University |
| Steven Wakefield | HIV Vaccine Trials Network |
| Mitchell Warren | AVAC: Global Advocacy for HIV Prevention |

Table 2.

Draft Measure of Preventive Misconception

| Item No. | Question | Response Options |
|---|---|---|
| dQ1 | Will participating in this study prevent you from getting HIV? | yes; no; maybe; don’t know/unsure |
| dQ2 | How much will participating in this study prevent you from getting HIV? | not at all; a little; a lot; don’t know/unsure |
| dQ3 | How confident are you that participating in this study will reduce your risk of getting HIV? | 0–100 (0 = not at all confident, 100 = very confident); don’t know/unsure |
| dQ4 | Imagine 100 people who are participating in this study. About how many of them will have their risk of getting HIV reduced by participating in the study? | 0–100; don’t know/unsure |
| dQ5 | Is this your first time participating in an HIV prevention study? | yes; no; don’t know/unsure |
| dQ6 | Before you began this study, what did you believe was your risk of getting HIV? | 0–100 (0 = no risk of getting HIV; 100 = will get HIV); don’t know/unsure |
| dQ7 | Now that you are participating in this study, what do you believe is your risk of getting HIV? | 0–100 (0 = no risk of getting HIV; 100 = will get HIV); don’t know/unsure |
| dQ8 | Participants in this study were put into the following groups. Which group do you think you are in? | maraviroc (Selzentry); maraviroc (Selzentry) plus emtricitabine (Emtriva); maraviroc (Selzentry) plus tenofovir (Viread); tenofovir plus emtricitabine (Truvada); don’t know/unsure |
| dQ9 | How confident are you that you are in that group? | 0–100 (0 = not at all confident; 100 = very confident); don’t know/unsure |
| dQ10 | Imagine 100 people who are participating in this study. About how many of them do you think would be put into that group? | 0–100; don’t know/unsure |

The model was based on two key concepts. The first is that the beliefs that could be subsumed under the preventive misconception vary in their level of generality. At the most general level is the notion that the investigational interventions in the trial will necessarily confer a prevention benefit. At the next level of generality, there are two beliefs: (1) that the participant received the investigational intervention and (2) that the investigational intervention will prevent the participant from acquiring HIV. Beliefs at the next lowest level refer to more detailed ways of expressing these two more general beliefs.

Another key concept in the model is that these more detailed beliefs can be expressed in different ways, with correspondingly different notions of probability. For example, one can ask a participant to estimate how many people on average out of 100 will have their likelihood of acquiring HIV reduced by participating in the prevention study, which elicits a frequency-type probability (Hacking 2001; Weinfurt 2004). Alternatively, one can ask a participant how confident she is that participating in the prevention study will reduce her risk of acquiring HIV, which elicits a belief-type probability (Hacking 2001; Weinfurt 2004). However, it was not known whether and how study participants would understand questions about frequency- or belief-type probabilities. Similarly, it was uncertain whether different probability formulations would be relevant to the way participants construed their experiences.

Qualitative Interviews

We focused on assembling a convenience sample of participants in an actual HIV prevention trial. Specifically, participants in the qualitative interviews were enrollees at three sites in HPTN 069/ACTG 5305 (ClinicalTrials.gov identifier NCT01505114), a multicenter HIV prevention trial in the United States involving pre-exposure prophylaxis in men and women who have sex with men and are at risk of HIV infection. The sites were Baltimore, Maryland; Chapel Hill, North Carolina; and Washington, D.C. The institutional review boards of the Duke University Health System, George Washington University, Johns Hopkins Medicine, and the University of North Carolina at Chapel Hill approved the study. All participants provided written informed consent.

After completing assessments associated with the week 4 trial visit, which included a survey consisting of the draft items listed in Table 2, participants were asked to join this qualitative interview study. Parent study staff approached all research participants who completed their week 4 visit in the study, and interviews were continued until informational saturation was obtained. We did not track response rates; to maintain confidentiality, we only interviewed those who were willing to be contacted by our team. That said, to our knowledge, no participant who was asked declined to participate.

In a semi-structured, audio-recorded interview, the interviewer presented the draft survey items the participant had completed during the trial visit and asked for the participant’s responses. Following a modified cognitive interviewing methodology (Willis 2005), the interviewer also asked the participant questions about each item to assess understanding and to elicit information about response construction. For example, to help assess understanding, participants could be asked: “What is this question asking you?” and “Can you put this question in your own words?” Next, the interviewer would probe on key words or concepts in the particular item. To assess response construction, participants could be asked: “How did you come up with the answer to this question?” and “Was this a difficult question to answer?” Subsequently, interviewers would probe on the participant’s responses to assess whether they were consistent with both the question and the participant’s intent.

All interviewers received in-person training on how to conduct the interviews. The interviews lasted 20 to 30 minutes. Throughout the study, members of the study team reviewed audio recordings of the interviews to ensure consistency and quality, and the study team held meetings with the interviewers as needed to discuss challenges and to help ensure that the interviews were consistent across sites.

To facilitate analysis, a member of the study team listened to the audio recordings of the interviews and paraphrased participants’ responses to the questions. Members of the study team reviewed these summaries to identify potential problems with the interpretation of the items and their responses. To address the problems identified after the completion of the first 17 interviews, the study team revised some items and removed some items. The revised draft measure was used in the remaining interviews.

RESULTS

The study team conducted a total of 44 qualitative interviews in 2012 and 2013. Table 3 shows the characteristics of the participants.

Table 3.

Characteristics of the Participants

| Characteristic | Site*: George Washington University (n = 15) | Site*: Johns Hopkins University (n = 10) | Site*: University of North Carolina at Chapel Hill (n = 19) | All Sites (N = 44) |
|---|---|---|---|---|
| Age (years) | | | | |
| Median (IQR) | 23 (22–25) | 44 (36–49) | 27 (23–33) | 26 (22–34) |
| Mean (SD) | 23 (3) | 42 (10) | 29 (8) | 30 (10) |
| Men, No. (%) | 14 (93) | 10 (100) | 18 (95) | 42 (95) |
| Race, No. (%) | | | | |
| Black or African American | 11 (73) | 7 (70) | 6 (32) | 24 (55) |
| White | 3 (20) | 3 (30) | 10 (53) | 16 (36) |
| More than one race | 1 (7) | 0 | 3 (16) | 4 (9) |
| Hispanic or Latino ethnicity | 4 (27) | 0 | 2 (11) | 6 (14) |
| Original interview, No. (%) | 0 | 2 (20) | 15 (79) | 17 (39) |
| Revised interview, No. (%) | 15 (100) | 8 (80) | 4 (21) | 27 (61) |

Note. IQR = interquartile range; SD = standard deviation.

*Total enrollment in the parent trial at the time recruitment was completed for cognitive interviews at each site was as follows: George Washington University (n = 54); Johns Hopkins University (n = 20); and University of North Carolina at Chapel Hill (n = 38). However, some participants in the parent trial had completed their week 4 visit prior to recruitment for the cognitive interviews at that site.
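As a quick arithmetic check on Table 3, the "All Sites" counts and percentages can be recomputed from the per-site counts. The sketch below is illustrative only: the counts are transcribed from the table, the variable and function names are hypothetical, and it assumes percentages were rounded to whole numbers.

```python
# Site counts transcribed from Table 3, in the order George Washington
# University, Johns Hopkins University, University of North Carolina at
# Chapel Hill. All names below are illustrative, not part of the study.
SITE_N = (15, 10, 19)

SITE_COUNTS = {
    "Men": (14, 10, 18),
    "Black or African American": (11, 7, 6),
    "White": (3, 3, 10),
    "More than one race": (1, 0, 3),
    "Hispanic or Latino ethnicity": (4, 0, 2),
    "Original interview": (0, 2, 15),
    "Revised interview": (15, 8, 4),
}


def pooled(counts, site_n=SITE_N):
    """Return (total count, whole-number percentage) across all sites."""
    total = sum(counts)
    return total, round(100 * total / sum(site_n))


# Pooled "All Sites" values for every count row of the table.
ALL_SITES = {label: pooled(counts) for label, counts in SITE_COUNTS.items()}

for label, (count, pct) in ALL_SITES.items():
    print(f"{label}: {count} ({pct})")
```

Under these assumptions, the recomputed values match the "All Sites" column, and the original and revised interview counts sum to the 44 interviews reported.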

The original draft measure included 10 items relating to trial participation and its potential effects on HIV risk reduction. In the first 17 interviews, some items prompted several participants to focus on personal behavior change rather than on the efficacy of the study medications and the use of randomization in the trial, which were the concepts assumed in the conceptual model and which the items were designed to assess. For example, some questions were designed to elicit beliefs about risk reduction resulting from the use of study medications. Item dQ2 asked, “How much will participating in this study reduce your risk of getting HIV?” When asked how they came up with their responses to this question, several participants focused on risk reduction as a function of their own agency in reducing high-risk behaviors during participation in the trial, such as condom use, instead of focusing on the potential role of study medications in reducing risk.

One participant explained his response to item dQ2 as follows: “For each visit, you know, you kind of pretty much are getting information about ways to have safe sex, you’re getting condoms, as well as the medications to possibly prevent the transmission of HIV.” Another participant explained how he came up with his response to the item in this exchange:

Participant: “Just based on my own personal risk factors.”

Interviewer: “What do you think your personal risk factors are?”

Participant: “Every once in a while there is a slipup, but generally I know the risk of getting HIV.”

Interviewer: “When you say ‘slipup,’ do you just mean having unprotected sex?”

Participant: “Correct.”

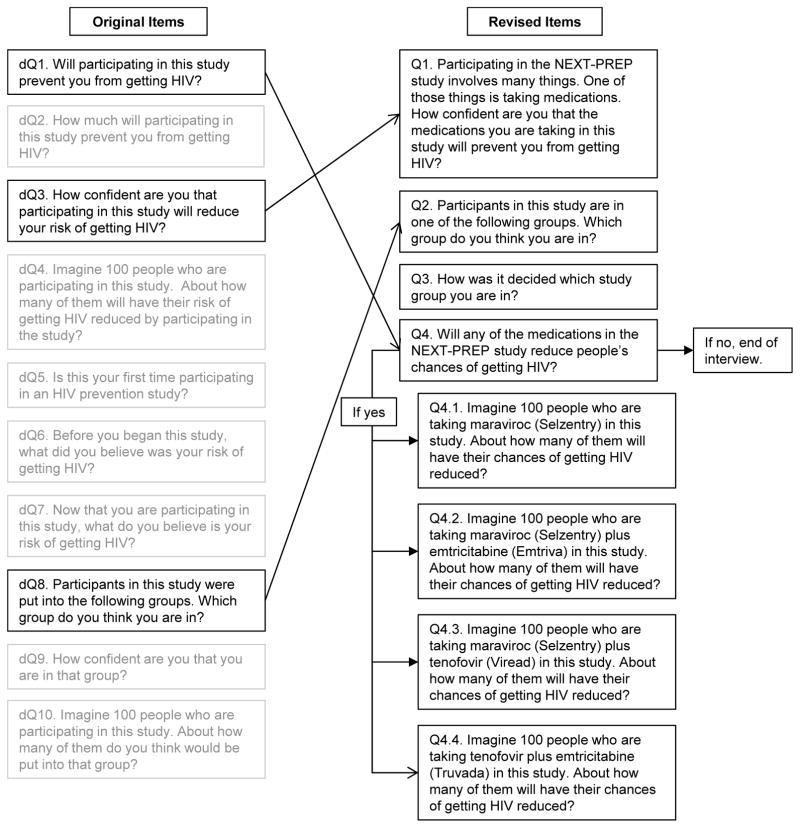

The study team hypothesized that the main problem was with the phrase “reduce your risk,” and specifically with the word “risk.” That is, for some participants like the one quoted above, the word “risk” was associated with their thinking about their own risk behaviors. To address this issue, the study team changed item phrasings from “reduce your risk” to “reduce your chance” in an effort to prevent participants from focusing on personal risk behaviors. The original and revised items are shown in Figure 1.

Figure 1.

Revisions to Measure

In another example, some items were designed to elicit beliefs about trial group assignment and participation. Consistent with the conceptual model, the intent of these items was to understand how participants think about what it means to be randomly assigned to a study group in a clinical trial, and the implications for their participation in the trial as a whole. The problem involved items with the phrase “participating in this study.” For example, item dQ7 asked, “Now that you are participating in this study, what do you believe is your risk of getting HIV?” Likewise, item dQ1 asked, “Will participating in this study prevent you from getting HIV?”

Instead of focusing on what was being tested in the trial in regard to HIV prevention, several participants interpreted these questions as relating to personal behavior changes associated with study participation, such as increased awareness of HIV risk factors. For example, one participant explained:

By getting tested more often and being able to be honest with my partners in describing the study, and then also being counseled regularly and having the opportunity to have safe sexual practices more often—all those things put together, I think, have overall drastically reduced my risk of getting HIV.

To address this problem, the study team changed the phrase “participating in this study” to variations of “taking medications in this study” (Figure 1).

Finally, several items were removed from the draft measure because they did not provide information of value to achieve the study team’s purpose. For example, item dQ4 in the original draft measure asked, “Imagine 100 people who are participating in this study. About how many of them will have their risk of getting HIV reduced by participating in this study?” After reflecting more carefully on the conceptual model, the study team realized that asking about overall (as opposed to experimental group-specific) risks and prevention benefits did not provide incremental value to the purpose of measuring a potential preventive misconception. Similarly, items that addressed confidence in group assignment (dQ9) and understanding of the proportion of participants assigned to the same trial arm as the participant (dQ10) did not provide useful information in regard to measuring a preventive misconception, so they were removed.

Item dQ3 was revised (to Q1) by changing the language about participating in the study to a specific reference to taking the study medication. Responses to this question (Q1) serve as an overall assessment of the benefit the person believes he or she is receiving from the medications received (an answer of zero would indicate no preventive misconception). Additionally, Q1 might be useful for understanding how people’s beliefs regarding their personal prevention benefit are related to their subsequent engagement in risk behaviors. Nevertheless, because it remained important to assess participants’ understanding of group assignment and randomization, item dQ8 was retained and an item from the Quality of Informed Consent (QUIC) measure was added (Joffe et al. 2001). Item dQ5, “Is this your first time participating in an HIV prevention study?” while important for research use, was not intended to help assess preventive misconception, and was removed.

Therefore, the revised items address participants’ overestimation of personal benefit (the second subtype described by Simon et al. 2007) directly by asking about the perceived chance of prevention benefit in revised items Q1 and Q4. The revised items also address overestimation of the probability of receiving the experimental intervention (the first subtype) by asking about group assignment and its basis in items Q2 and Q3. Table 4 shows the revised measure.

Table 4.

Revised Measure of Preventive Misconception

| Item No. | Question | Response Options |
|---|---|---|
| Q1 | Participating in the NEXT-PREP study involves many things. One of those things is taking medications. How confident are you that the medications you are taking in this study will prevent you from getting HIV? | 0–100 (0 = not at all confident; 100 = very confident); don’t know/unsure |
| Q2 | Participants in this study are in one of the following groups. Which group do you think you are in? | maraviroc (Selzentry); maraviroc (Selzentry) plus emtricitabine (Emtriva); maraviroc (Selzentry) plus tenofovir (Viread); tenofovir plus emtricitabine (Truvada); don’t know/unsure |
| Q3 | How was it decided which study group you are in? | a study doctor chose the group that would be best for me; randomly (by chance); other; don’t know/unsure |
| Q4 | Will any of the medications in the NEXT-PREP study reduce people’s chances of getting HIV? | yes; no; maybe; don’t know/unsure |
| Q4.1a | Imagine 100 people who are taking maraviroc (Selzentry) in this study. About how many of them will have their chances of getting HIV reduced? | 0–100; don’t know/unsure |
| Q4.2a | Imagine 100 people who are taking maraviroc (Selzentry) plus emtricitabine (Emtriva) in this study. About how many of them will have their chances of getting HIV reduced? | 0–100; don’t know/unsure |
| Q4.3a | Imagine 100 people who are taking maraviroc (Selzentry) plus tenofovir (Viread) in this study. About how many of them will have their chances of getting HIV reduced? | 0–100; don’t know/unsure |
| Q4.4a | Imagine 100 people who are taking tenofovir plus emtricitabine (Truvada) in this study. About how many of them will have their chances of getting HIV reduced? | 0–100; don’t know/unsure |

Note. Items marked with “a” (Q4.1 through Q4.4) were asked only if the answer to item Q4 was “yes,” “maybe,” or “don’t know/unsure.”
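The skip pattern in Table 4 can be sketched in a few lines of code for anyone administering the measure electronically. This is a minimal illustration, not the study team's implementation: the function and constant names are hypothetical, and the normalization of answer text (lowercasing, straight versus curly apostrophes) is an assumption.

```python
# Hypothetical sketch of the Table 4 skip pattern. Names are illustrative only.

# Answers to Q4 that trigger the follow-up items, per the table note.
FOLLOW_UP_TRIGGERS = {"yes", "maybe", "don't know/unsure"}

# Study groups referenced in follow-up items Q4.1 through Q4.4.
FOLLOW_UP_ITEMS = {
    "Q4.1": "maraviroc (Selzentry)",
    "Q4.2": "maraviroc (Selzentry) plus emtricitabine (Emtriva)",
    "Q4.3": "maraviroc (Selzentry) plus tenofovir (Viread)",
    "Q4.4": "tenofovir plus emtricitabine (Truvada)",
}


def follow_up_items(q4_answer):
    """Return the follow-up item IDs to administer after item Q4."""
    # Assumed normalization: trim, lowercase, and map curly apostrophes
    # to straight ones so "Don’t know/unsure" matches the trigger set.
    answer = q4_answer.strip().lower().replace("\u2019", "'")
    if answer in FOLLOW_UP_TRIGGERS:
        return list(FOLLOW_UP_ITEMS)
    return []  # an answer of "no" skips Q4.1 through Q4.4
```

In this sketch, only an answer of “no” skips the group-specific items; a trial adapting the measure would replace the group names with its own study arms.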

In the additional 27 interviews conducted with the revised measure, participants’ responses converged to focus on the effects of the study medications on reduction of risk of HIV infection. The kinds of responses that focused on personal agency and behavior change in the initial set of interviews were no longer present. Additionally, participants had no difficulty using the items’ response options and felt they were appropriate for conveying their answers.
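For readers who plan to field the measure, the 0–100 response format used in item Q1 could be coded at data entry roughly as follows. This is a sketch under stated assumptions: the function names are hypothetical, responses are assumed to arrive as text, and the only interpretive rule encoded is the one stated above, namely that an answer of zero on Q1 would indicate no preventive misconception. No other cutoff is implied.

```python
from typing import Optional

# Text of the non-numeric response option (straight apostrophe assumed).
DONT_KNOW = "don't know/unsure"


def code_confidence(raw: str) -> Optional[int]:
    """Code a 0-100 confidence response; None represents don't know/unsure."""
    text = raw.strip().lower().replace("\u2019", "'")
    if text == DONT_KNOW:
        return None
    value = int(text)  # raises ValueError for non-numeric input
    if not 0 <= value <= 100:
        raise ValueError(f"confidence must be between 0 and 100, got {value}")
    return value


def indicates_no_misconception(coded: Optional[int]) -> bool:
    """True only for an answer of zero; don't know/unsure is not treated as zero."""
    return coded == 0
```

Keeping “don’t know/unsure” separate from zero is a deliberate choice in this sketch, since the two responses carry different meanings for the measure.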

DISCUSSION

This study was conducted to develop a measure of preventive misconception. The work began with the development of a conceptual model to guide exploration of the ways people might express their beliefs about participation in HIV prevention research. Using qualitative interviews that had features of both open-ended and cognitive interview methodologies, findings emerged that helped to sharpen the measure of the preventive misconception.

First, participation in HIV prevention research can be accurately considered in terms of a range of lifestyle changes in addition to simply taking an experimental agent, such as counseling to avoid risky behaviors and encourage safer practices (e.g., condom use), which are commonplace in these trials. While this is apparent to those working in HIV prevention research, it has important implications for measurement of the preventive misconception. For example, to query beliefs about an investigational agent in particular, there must be specificity regarding this particular issue. Otherwise, participants will understandably include the baseline prevention benefit of participation in their answers, which would inappropriately inflate the possibility of identifying a preventive misconception. That is, the answers might erroneously combine accurate conceptions about risk reduction with misconceptions related to the certain prevention benefits of an experimental intervention.

Second, language in items about “reducing risk” tends to be associated with participants thinking about what they can do themselves to reduce risk, such as changing behavior. In contrast, asking whether something will “prevent you from getting HIV” does not seem to be associated with this type of response. Similarly, asking about “chance” rather than “risk” reduces the tendency to think about personal efforts toward reducing risk, perhaps because of the connotations of “risk” in regard to HIV prevention.

These findings are consistent with some of the challenges faced by others in qualitatively assessing the preventive misconception. For example, in exit interviews for a vaginal microbicide trial in South Africa, 90% of participants believed there was an HIV prevention benefit by virtue of participating in the trial. However, on closer examination, not all of these participants seemed to have a misconception. Rather, most seemed to have an accurate understanding of the prevention benefits of participation, but approximately 15% did harbor a preventive misconception. As described earlier, these participants also seemed to have an increase in risk behaviors (Dellar et al. 2014). Although the authors describe this latter group as an “expanded” definition of preventive misconception, we believe it is in fact a more refined, or accurate, assessment of preventive misconception because it accounts for what is being misconceived and not accurately apprehended.

In addition, a mental model based upon data derived from focus groups and interviews with men who have sex with men in India suggests that participants viewed prevention trials as health interventions themselves, but also overestimated the likelihood of the efficacy of an experimental vaccine and the chance of receiving the experimental product (Chakrapani et al. 2013).

Assessing a preventive misconception necessitates disaggregating misconceptions from correct conceptions of prevention benefit related to trial participation. Further, our findings underscore the need to conduct cognitive interviews of items aimed at addressing difficult concepts such as these.

In creating and refining the measure, the study team was able to take the larger set of candidate concepts and items and reduce them to a manageable set of items that can be tailored to specific studies. For example, items that include the trial’s name (Q1 and Q4) can, and should, be tailored to any trial without any reason to suspect that it would alter the characteristics of the instrument. Similarly, the particular names of experimental agents (Q4.1, etc.) should be changed to reflect those used in the trial and the study design.

Limitations

A strength of our approach to instrument development is that we engaged actual participants in an active HIV prevention trial, a setting in which issues related to a preventive misconception can reasonably be expected to be salient. However, this opportunity also put some limitations on the study population, namely those who were participating in a single trial being conducted in the United States that primarily enrolled men at the time we conducted our work. In addition, in order to minimize respondent burden, we did not ask about educational level of interviewees. While having a more broadly representative population will be important when evaluating the measure, the present sample should be sufficient for this stage of instrument development. However, it will be important to assess whether and how the measure performs in other cultures, languages, and settings.

In addition, although substantial progress has been made in assessing the preventive misconception in the context of HIV prevention trials that include testing biomedical interventions, the current measure is not designed for use in HIV prevention trials that are solely testing behavioral interventions. Consequently, future work should be directed toward developing such a measure that will necessarily need to be sensitive to the issues we identified in our qualitative interviews.

CONCLUSIONS

The study team is currently fielding the measure of preventive misconception in two HIV prevention studies, HPTN 069/ACTG 5305 and MTN-020 (ClinicalTrials.gov identifier NCT01617096), a Phase 3 trial of a vaginal ring containing dapivirine. Once these trials are completed, the study team will be able to assess the performance of the measure and the potential presence of the preventive misconception and to explore associations between different aspects of the preventive misconception and both adherence and the initiation of risk behaviors after enrollment in the studies.

The findings of this project underscore the importance of conducting cognitive interviews when developing survey instruments for empirical bioethics research. As described in this paper, this approach helped to identify phrasing of items that were problematic in regard to assessing components of the conceptual model. Further, iterative reflection on the intent of the measure helped to eliminate unnecessary survey items, which would have increased respondent burden without any expectation of better measurement. Accordingly, given the inherent complexity of the issues addressed in empirical bioethics, those interested in developing sound surveys should be encouraged to develop appropriate expertise in cognitive testing and describe its use in published reports so that those using the findings can be more confident of the validity of the data.

Acknowledgments

The authors thank the HPTN 069/ACTG 5305 study team, especially the leadership (Roy Gulick, Kenneth Mayer, and Timothy Wilkin) and core staff at FHI360 (Marybeth McCauley) for facilitating work on this project. The authors also thank the site leadership for assisting in this collaboration: Linda Apuzzo and Joel Gallant, Johns Hopkins University; Manya Magnus, Irene Kuo, and Marc Siegel, George Washington University, with support and guidance from the District of Columbia Developmental Center for AIDS Research, an NIH-funded Program (P30AI087714); and Cheryl Marcus and Kristine Patterson, University of North Carolina at Chapel Hill. The authors are especially grateful to the interviewers: Joe Ali, Johns Hopkins University; Jonathan Oakes, University of North Carolina at Chapel Hill; and James Peterson and Christopher Watson, George Washington University. This work would not have been possible without the willingness of the participants to be interviewed. Members of the advisory panel (listed in Table 1) provided helpful input at the outset of the project.

FUNDING: This work was supported by grant R21MH092253 from the National Institute of Mental Health (NIMH). In addition, HPTN 069/ACTG 5305 provided support through collaboration. The HPTN 069 study is supported by the HIV Prevention Trials Network (HPTN) and by cooperative agreements from the National Institute of Allergy and Infectious Diseases (NIAID) to the HPTN Leadership and Operations Center (UM1AI068619), the HPTN Laboratory Center (UM1AI068613), and the HPTN Statistical and Data Management Center (UM1AI068617). ACTG 5305 is supported by grant U01AI068636 from NIAID and by NIMH and the National Institute of Dental and Craniofacial Research (NIDCR).

Footnotes

PREVIOUS PRESENTATION: The work was presented as a poster at the 20th International AIDS Conference, Melbourne, Australia (July 24, 2014).

AUTHOR CONTRIBUTIONS: Jeremy Sugarman conceived and designed the research, interpreted data, drafted parts of the manuscript, and made critical revisions to it. Damon Seils supervised data collection, participated in data analysis, and drafted parts of the manuscript. J. Kemp Watson-Ormond participated in data collection and analysis and drafted part of the manuscript. Kevin Weinfurt conceived and designed the research, supervised data analysis and interpretation, and made critical revisions to the paper. All authors approved of the final version of the manuscript.

ROLE OF THE SPONSOR: The sponsor had no role in study design; collection, analysis, and interpretation of data; writing the report; or the decision to submit the report for publication.

DISCLAIMER: The content is solely the responsibility of the authors and does not necessarily represent the official views of NIMH, NIAID, NIDCR, or the National Institutes of Health (NIH).

CONFLICTS OF INTEREST: None reported.

ETHICAL APPROVAL: This study was approved by the institutional review boards at the Duke University Health System, George Washington University, Johns Hopkins Medicine, and the University of North Carolina at Chapel Hill.

References

- Beskow LM, Dean E. Informed consent for biorepositories: assessing prospective participants’ understanding and opinions. Cancer Epidemiology Biomarkers and Prevention. 2008;17(6):1440–1451. doi: 10.1158/1055-9965.EPI-08-0086.

- Chakrapani V, Newman PA, Singhal N, Jerajani J, Shunmugam M. Willingness to participate in HIV vaccine trials among men who have sex with men in Chennai and Mumbai, India: a social ecological approach. PLoS One. 2012;7(12):e51080. doi: 10.1371/journal.pone.0051080.

- Chakrapani V, Newman PA, Singhal N, Nelson R, Shunmugam M. “If it’s not working, why would they be testing it?”: mental models of HIV vaccine trials and preventive misconception among men who have sex with men in India. BMC Public Health. 2013;13(1):731. doi: 10.1186/1471-2458-13-731.

- Dellar RC, Abdool Karim Q, Mansoor LE, et al. The preventive misconception: experiences from CAPRISA 004. AIDS and Behavior. 2014;18(9):1746–1752. doi: 10.1007/s10461-014-0771-6.

- DeWalt DA, Rothrock N, Yount S, Stone AA. Evaluation of item candidates: the PROMIS qualitative item review. Medical Care. 2007;45(5 Suppl 1):S12–S21. doi: 10.1097/01.mlr.0000254567.79743.e2.

- Hacking I. An introduction to probability and inductive logic. Cambridge University Press; Cambridge, UK: 2001.

- Joffe S, Cook EF, Cleary PD, Clark JW, Weeks JC. Quality of informed consent: a new measure of understanding among research subjects. Journal of the National Cancer Institute. 2001;93(2):139–147. doi: 10.1093/jnci/93.2.139.

- Ott MA, Alexander AB, Lally LM, Steever JB, Zimet GD. Adolescent Medicine Trials Network (ATN) for HIV/AIDS Interventions. Preventive misconception and adolescents’ knowledge about HIV vaccine trials. Journal of Medical Ethics. 2013;39(12):765–771. doi: 10.1136/medethics-2012-100821.

- Simon AE, Wu AW, Lavori PW, Sugarman J. Preventive misconception: its nature, presence, and ethical implications for research. American Journal of Preventive Medicine. 2007;32(5):370–374. doi: 10.1016/j.amepre.2007.01.007.

- Sugarman J, Sulmasy D, editors. Methods in medical ethics. 2nd ed. Washington, DC: Georgetown University Press; 2010.

- Weinfurt KP. Discursive versus information-processing perspectives on a bioethical problem: the case of ‘unrealistic’ patient expectations. Theory & Psychology. 2004;14(2):191–203.

- Weinfurt KP, Allsbrook JS, Friedman JY, et al. Developing model language for disclosing financial interests to potential clinical research participants. IRB. 2007;29(1):1–5.

- Willis GB. Cognitive interviewing. Thousand Oaks, California: Sage; 2005.

- Willis GB. Cognitive interviewing as a tool for improving the informed consent process. Journal of Empirical Research on Human Research Ethics. 2006;1(1):9–24. doi: 10.1525/jer.2006.1.1.9.

- Woodsong C, Alleman P, Musara P, et al. Preventive misconception as a motivation for participation and adherence in microbicide trials: evidence from female participants and male partners in Malawi and Zimbabwe. AIDS and Behavior. 2012;16(3):785–790. doi: 10.1007/s10461-011-0027-7.