Abstract

Background

Journal abstracts, including those reporting systematic reviews (SRs), should contain sufficiently clear and accurate information for adequate comprehension and interpretation. We aimed to compare the quality of reporting of abstracts of SRs including meta-analyses published in high-impact general medicine journals before and after the publication of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) extension for abstracts (PRISMA-A), released in April 2013.

Methods

We searched PubMed for SRs including meta-analyses of randomized controlled trials published in 2012, 2014, and 2015 in top-tier general medicine journals. Two reviewers selected abstracts and extracted data based on the PRISMA-A guidelines, which recommend reporting 12 items. The primary outcome was the adjusted mean number of items reported; secondary outcomes were the reporting of each item and the factors associated with better reporting. Adjustment was made for abstract word count and format, number of authors, PRISMA endorsement, and publication on behalf of a group.

Results

We included 84 abstracts from 2012, 59 from 2014, and 61 from 2015. The mean number of items reported in 2015 (7.5; standard deviation [SD] 1.6) differed from that reported in 2012 (6.7; SD 1.6), whereas the mean reported in 2014 (6.8; SD 1.6) did not; adjusted mean differences were 0.9 (95 % CI 0.4; 1.3) and −0.1 (95 % CI −0.6; 0.4), respectively. From 2012 to 2014, the quality of reporting declined for “strengths and limitations of evidence” and “funding” and remained unchanged for the other items. Between 2012 and 2015, the quality of reporting improved for “description of the effect”, “synthesis of results”, “interpretation”, and “registration”, declined for “strengths and limitations of evidence”, and remained unchanged for the other items. Better overall reporting was associated with abstracts structured in the 8-headings format in 2014 and with abstracts of fewer than 300 words in 2014 and 2015.

Conclusions

Not surprisingly, the quality of reporting did not improve in 2014 and improved only modestly in 2015. There is still room for improvement to meet the standards of the PRISMA-A guidelines. Stricter adherence to these guidelines by authors, reviewers, and journal editors is highly warranted and should contribute to better reporting.

Electronic supplementary material

The online version of this article (doi:10.1186/s13643-016-0356-8) contains supplementary material, which is available to authorized users.

Keywords: Abstract, PRISMA, Systematic review, Randomized controlled trial, Meta-analysis, General medicine journal

Background

Systematic reviews and meta-analyses of randomized controlled trials (RCTs) are fundamental tools for generating reliable summaries of health care information for clinicians, decision makers, and patients [1]. By design, RCTs generally provide higher-quality evidence for health care decisions about interventions than observational studies [2, 3]. As such, RCTs, as well as their systematic reviews and meta-analyses, should be reported according to the highest possible pre-defined standards. Systematic reviews of RCTs provide information on the clinical benefits and harms of interventions, inform the development of clinical recommendations, and help to identify future research needs. Moreover, because of their methodological rigor, they are the reference standard for synthesizing evidence about health care interventions [4]. Clinicians read them to keep up to date with their field [5, 6]. As with any other research, the value of a systematic review depends on what was done, what was found, and the clarity of its reporting; poor reporting can limit readers’ ability to assess a review’s strengths and weaknesses.

Faced with an overwhelming day-to-day workload, large volumes of scientific publications, and limited access to many full-text articles, healthcare professionals often rely on the information contained in abstracts to guide or support their decisions [7, 8]. In PubMed queries, most readers look only at titles, and only half of searches result in any click on content [9]. Worse still, the average number of titles clicked on to obtain the abstract or full text is less than five, even across several consecutive searches, and among those clicks, abstracts are accessed about 2.5 times more often than full texts [9].

Abstracts can be useful for screening by study type, facilitating quick assessment of validity, enabling efficient perusal of electronic search results, clarifying to which patients and settings the results apply, providing readers and peer reviewers with explicit summaries of results, facilitating the pre-publication peer review process, and increasing the precision of computerized searches [10–13]. Consequently, journal and conference abstracts must contain sufficiently clear and accurate information to permit adequate comprehension and interpretation of the findings of systematic reviews and meta-analyses [14].

After observing that the quality of abstracts of systematic reviews remained poor [15], the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) for Abstracts Group developed an extension to the PRISMA Statement to provide guidance on how to write and present abstracts of systematic reviews and meta-analyses [14]. These guidelines were published on April 9, 2013. To the best of our knowledge, no published study has yet examined the impact of the release of PRISMA for Abstracts (PRISMA-A) by comparing the quality of abstracts published before (2012) and after (2014 and 2015) this release. Consequently, to promote high-quality reporting of abstracts of systematic reviews and meta-analyses of RCTs, our primary objective was to compare the quality of reporting of such abstracts in high-impact general medicine journals before and after the publication of PRISMA-A. Secondary objectives were to compare the reporting of each PRISMA-A item before and after its publication and to investigate factors associated with better adherence to the PRISMA-A guidelines.

Methods

Design

We identified abstracts of systematic reviews published in 2012, 2014, and 2015 in high-impact general medicine journals. We assessed the reporting of these abstracts according to PRISMA-A recommendations [14]. We compared reporting in 2012 (before PRISMA-A) with reporting in 2014 and 2015 (after PRISMA-A). The present review was not registered. The PRISMA guidelines served as the template for reporting the present review [16]; the PRISMA checklist is provided in Additional file 1.

Data sources

Based on the impact factors published by Thomson Reuters in 2015 [17], the top nine high-impact general medicine journals were selected for this study, namely: New England Journal of Medicine (NEJM), The Lancet, Journal of the American Medical Association (JAMA), Annals of Internal Medicine, British Medical Journal (BMJ), Archives of Internal Medicine (renamed JAMA Internal Medicine in 2013), PLOS Medicine, BMC Medicine, and Mayo Clinic Proceedings. On the PRISMA website, NEJM, Annals of Internal Medicine, Archives of Internal Medicine, and Mayo Clinic Proceedings are not listed as endorsers of the PRISMA guidelines, in contrast to the other journals, which are PRISMA endorsers [18]. Journals were not excluded because they did not endorse the PRISMA statement. We conducted a PubMed search of all systematic reviews and meta-analyses published in 2012, 2014, and 2015. The search strategy combined “meta-analysis” as publication type with the journal names, limited to the time periods of interest (2012/01/01 to 2012/12/31, 2014/01/01 to 2014/12/31, and 2015/01/01 to 2015/12/31). The full search strategy is presented in Additional file 2.

Study selection and data extraction

Two reviewers (JJRB and LNU) independently selected abstracts of systematic reviews of RCTs including meta-analyses. The same two reviewers independently extracted data in compliance with the PRISMA-A recommendations [14]: for each of the 12 items recommended by the PRISMA-A guidelines, a “yes” or “no” answer was assigned, indicating whether the authors had reported the item. Inter-reviewer agreement was measured using the kappa statistic [19]. Disagreements were resolved by consensus after discussion between the authors.
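Agreement between two reviewers on yes/no codes is commonly summarized with Cohen's kappa, which corrects the observed agreement for the agreement expected by chance from each reviewer's marginal frequencies. The Python sketch below is illustrative only: the study itself used SPSS, and the function name `cohens_kappa` and the toy ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same items (e.g. True = reported)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty sequences of equal length")
    n = len(rater_a)
    # Observed agreement: proportion of items coded identically by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over codes.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / n**2
    if p_expected == 1:
        # Both raters used one identical code throughout; kappa is undefined,
        # and 1.0 is returned here by convention for this perfect-agreement case.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical yes/no codes for one PRISMA-A item across ten abstracts.
reviewer_1 = [True, True, False, False, True, False, True, True, False, True]
reviewer_2 = [True, False, False, False, True, False, True, True, True, True]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # 0.58
```

With these toy ratings, observed agreement is 0.80 and chance agreement is 0.52, so kappa is (0.80 − 0.52)/(1 − 0.52) ≈ 0.58.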

In addition to the PRISMA-A items, we collected the journal name, number of authors, type of abstract format (Introduction, Methods, Results, and Discussion [IMRAD]; or 8-headings [objective, design, setting, participants, intervention, main outcome measures, results, and conclusions]), PRISMA endorser journal (yes or no), and actual observed abstract word count (<300 versus ≥300).

Outcomes measured

The primary outcome was the number of items reported out of the 12 items recommended by the PRISMA-A guidelines; the secondary outcome was the proportion of abstracts reporting each of these 12 items.
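Both outcomes reduce to simple counts over the yes/no codes. A minimal sketch, assuming each abstract is stored as a dictionary mapping the 12 item labels of Table 3 to True/False; this data layout and the function names are hypothetical, and items missing from a record are treated as not reported.

```python
# The 12 PRISMA-A items, labelled as in Table 3 of this article.
PRISMA_A_ITEMS = (
    "title", "objectives", "eligibility criteria", "information sources",
    "risk of bias", "included studies", "synthesis of results",
    "description of the effect", "strengths and limitations of evidence",
    "interpretation", "funding", "registration",
)


def items_reported(abstract):
    """Primary outcome: number of the 12 items coded 'yes' for one abstract."""
    return sum(bool(abstract.get(item, False)) for item in PRISMA_A_ITEMS)


def proportion_reporting(abstracts, item):
    """Secondary outcome: share of abstracts in which a given item is reported."""
    if not abstracts:
        raise ValueError("no abstracts supplied")
    return sum(bool(a.get(item, False)) for a in abstracts) / len(abstracts)


full = {item: True for item in PRISMA_A_ITEMS}
partial = {"title": True, "objectives": True, "funding": False}
print(items_reported(full), items_reported(partial))  # 12 2
print(proportion_reporting([full, partial], "funding"))  # 0.5
```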

Statistical analysis

Data were entered and analyzed using the Statistical Package for the Social Sciences (SPSS) version 21.0 for Windows (IBM Corp. Released 2012. IBM SPSS Statistics for Windows, Version 21.0. Armonk, NY: IBM Corp.). Categorical variables were expressed as numbers (N) with percentages (%). Continuous variables were expressed as means with standard deviation (SD).

We expressed the number of items reported for each year as mean (SD) and estimated the unadjusted and adjusted differences using the independent two-sample Student t test and generalized estimating equations (GEE), respectively [20]. Mean differences and adjusted means were reported with their 95 % confidence intervals (95 % CI) and p values. For continuous outcomes (e.g., the number of items reported), we assumed a normal distribution with an identity link (linear model). We compared compliance with each of the 12 PRISMA-A items in 2014 and in 2015 versus 2012 using the chi-squared test or Fisher’s exact test where necessary. Unadjusted analyses were followed by adjusted analyses using GEE. For binary outcomes, we assumed a binomial distribution and an unstructured correlation matrix. We reported measures of association as crude odds ratios (cOR) for univariable analyses and adjusted odds ratios (aOR) for multivariable analyses, together with their 95 % CI and p values. Factors associated with better overall reporting were investigated using GEE, assuming a Poisson distribution for the number of items reported; the magnitude of association between better reporting and the investigated factors was measured with the adjusted incidence rate ratio (aIRR) and its 95 % CI. Additionally, we conducted sensitivity analyses to determine factors associated with better reporting of the “Methods” and “Results” sections of abstracts in 2014 and 2015.

For GEE, adjustments were made for actual observed abstract word count (<300 versus ≥300), PRISMA endorser journal (yes versus no), abstract format (IMRAD versus 8-headings), publication on behalf of a group (yes versus no), and number of authors (≤6 versus >6), with journal as the grouping factor to account for clustering of articles published in the same journal. A two-tailed p value <0.05 was considered statistically significant. The 300-word cut-off was chosen because, for other abstract reporting guidelines such as the Consolidated Standards of Reporting Trials (CONSORT) extension for abstracts, a word count of around 300 has been shown to be sufficient to cover all items [20]. We adjusted for abstract format because previous studies have reported a relationship between abstract format and reporting quality [21, 22]. We also adjusted for number of authors and publication on behalf of a group because an association between higher-quality work and a larger number of collaborators has been reported [23, 24], although other studies did not find this association [25, 26].
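A minimal sketch of how the five covariates could be coded as binary indicators, with 0 marking the reference categories used in Table 2 (<300 words, non-endorser journal, IMRAD format, not on behalf of a group, ≤6 authors). The `AbstractRecord` type, its field names, and `code_covariates` are hypothetical; the models themselves were fitted in SPSS.

```python
from dataclasses import dataclass


@dataclass
class AbstractRecord:
    word_count: int
    prisma_endorser: bool
    eight_headings: bool       # False means the IMRAD format
    on_behalf_of_group: bool
    n_authors: int
    journal: str               # grouping (cluster) variable in the GEE models


def code_covariates(rec):
    """Return 0/1 indicators; 0 is the reference category for every covariate."""
    return {
        "word_count_ge_300": int(rec.word_count >= 300),   # exactly 300 falls in ">=300"
        "prisma_endorser": int(rec.prisma_endorser),
        "eight_headings": int(rec.eight_headings),
        "on_behalf_of_group": int(rec.on_behalf_of_group),
        "authors_gt_6": int(rec.n_authors > 6),            # exactly 6 falls in "<=6"
        "journal": rec.journal,
    }


print(code_covariates(AbstractRecord(300, True, False, False, 6, "BMJ")))
```

Keeping the journal name alongside the indicators reflects its role as the clustering variable rather than as a covariate.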

Results

General characteristics of abstracts selected

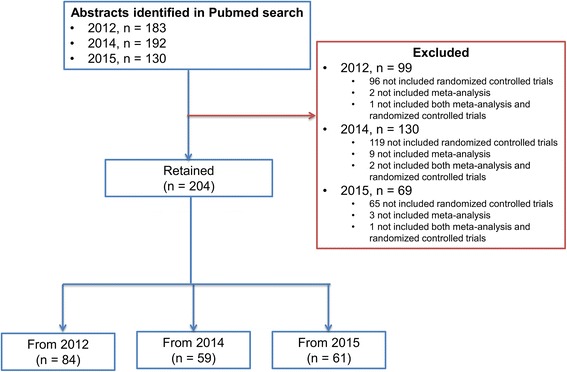

Our search yielded 505 articles, of which 183 were published in 2012, 192 in 2014, and 130 in 2015. Three hundred and one abstracts did not meet our inclusion criteria and were excluded, mostly because the meta-analysis did not include RCTs (n = 280). In total, we included 204 abstracts: 84 from 2012, 59 from 2014, and 61 from 2015, as shown in the flow diagram (Fig. 1). Agreement between reviewers on all PRISMA-A items was high (kappa = 0.79, p < 0.001). Additional file 3 lists all abstracts included in this review.

Fig. 1.

Flow chart of studies considered for inclusion

Regarding journals, 67 (32.8 %) abstracts were published in BMJ (Table 1). The 8-headings format was used in 132 (64.7 %) abstracts. Overall, 163 (79.9 %) abstracts had an actual observed word count ≥300, 19 (9.3 %) were published on behalf of a group, and 150 (73.5 %) were from journals that endorsed PRISMA. Six of the 10 journal titles (counting Archives of Internal Medicine and JAMA Internal Medicine separately) were PRISMA endorsers. The mean number of authors per article was 8.5 (SD 5.4) (Table 1).

Table 1.

Distribution of abstracts by year and characteristics

| Characteristic | 2012 (n = 84) | 2014 (n = 59) | 2015 (n = 61) | All (N = 204) |
|---|---|---|---|---|
| Journals | | | | |
| - Annals of Internal Medicine | 12 (14.3) | 13 (22.0) | 11 (18.0) | 36 (17.6) |
| - BMC Medicine | 4 (4.8) | 8 (13.6) | 10 (16.4) | 22 (10.8) |
| - BMJ | 31 (36.9) | 18 (30.5) | 18 (29.5) | 67 (32.8) |
| - JAMA | 6 (7.1) | 6 (10.2) | 4 (6.6) | 16 (7.8) |
| - JAMA Internal Medicine/Archives of Internal Medicine | 13 (15.5) | 11 (18.6) | 4 (6.6) | 28 (13.7) |
| - Mayo Clinic Proceedings | 1 (1.2) | 3 (5.1) | 0 | 4 (2.0) |
| - NEJM | 1 (1.2) | 0 | 0 | 1 (0.5) |
| - PLOS Medicine | 6 (7.1) | 0 | 0 | 6 (2.9) |
| - The Lancet | 10 (11.9) | 0 | 14 (23.0) | 24 (11.8) |
| Mean number of authors | 8.2 (5.8) | 8.4 (5.4) | 9.1 (4.9) | 8.5 (5.4) |
| Abstract format | | | | |
| - IMRAD | 35 (41.7) | 12 (20.3) | 25 (41.0) | 72 (35.3) |
| - 8-headings | 49 (58.3) | 47 (79.7) | 36 (59.0) | 132 (64.7) |
| PRISMA endorser journals | | | | |
| - Yes | 57 (67.9) | 43 (72.9) | 50 (82.0) | 150 (73.5) |
| - No | 27 (32.1) | 16 (27.1) | 11 (18.0) | 54 (26.5) |
| Publication on behalf of a group | | | | |
| - Yes | 8 (9.5) | 4 (6.8) | 7 (11.5) | 19 (9.3) |
| - No | 76 (90.5) | 55 (93.2) | 54 (88.5) | 185 (90.7) |
| Actual observed abstract word count | | | | |
| - <300 | 14 (16.7) | 16 (27.1) | 11 (18.0) | 41 (20.1) |
| - ≥300 | 70 (83.3) | 43 (72.9) | 50 (82.0) | 163 (79.9) |

Data are n (%) or mean (standard deviation)

Comparison of quality of abstract reporting between 2014 and 2012 and between 2015 and 2012

The mean number of items reported in 2014 (mean 6.8; SD 1.6) did not differ significantly from that reported in 2012 (mean 6.7; SD 1.6): mean difference (MD) 0.1; 95 % CI −0.4; 0.7; p = 0.694. The difference remained non-significant after adjustment for actual observed abstract word count, PRISMA endorsement, abstract format, publication on behalf of a group, and number of authors (MD −0.1; 95 % CI −0.6; 0.4; Bonferroni-corrected p = 1.0). The mean number of items reported in 2015 (mean 7.5; SD 1.6) differed significantly from that reported in 2012 (MD 0.8; 95 % CI 0.2; 1.3; p = 0.007), and this difference remained significant after adjustment (MD 0.9; 95 % CI 0.4; 1.3; Bonferroni-corrected p = 0.002) (Table 2). An exploratory comparison of abstracts published in 2015 with those published in 2014 also showed a statistically significant difference in the overall quality of reporting (MD 0.63; 95 % CI 0.05; 1.21; p = 0.034).
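The unadjusted comparison can be recomputed from the summary statistics in Table 2 with the pooled-variance (Student) formula. This standard-library Python sketch has no t distribution available, so it approximates the 97.5th percentile of t with the first two terms of the Cornish-Fisher expansion around the normal quantile, which is accurate to better than 1e-3 for roughly 30 or more degrees of freedom (here about 140). The function names are hypothetical, and because the inputs are rounded means and SDs, the results match the published figures only approximately.

```python
import math
from statistics import NormalDist


def t_quantile_975(df):
    """Approximate 97.5th percentile of Student's t (two-term Cornish-Fisher expansion)."""
    z = NormalDist().inv_cdf(0.975)
    g1 = (z**3 + z) / 4
    g2 = (5 * z**5 + 16 * z**3 + 3 * z) / 96
    return z + g1 / df + g2 / df**2


def mean_difference_ci(m1, sd1, n1, m0, sd0, n0):
    """Mean difference m1 - m0 with a pooled-variance 95 % CI and the t statistic."""
    df = n1 + n0 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n0 - 1) * sd0**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n0))
    md = m1 - m0
    margin = t_quantile_975(df) * se
    return md, md - margin, md + margin, md / se


# 2014 (6.8, SD 1.6, n = 59) versus 2012 (6.7, SD 1.6, n = 84), from Table 2.
md, lower, upper, t_stat = mean_difference_ci(6.8, 1.6, 59, 6.7, 1.6, 84)
print(f"MD {md:.1f} (95% CI {lower:.2f}; {upper:.2f}), t = {t_stat:.2f}")
```

For 2014 versus 2012 this gives an MD of 0.1 with a 95 % CI of about −0.44 to 0.64, close to the published 0.1 (−0.4; 0.7); the small gap reflects rounding of the inputs.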

Table 2.

Comparison of the mean number of PRISMA for Abstracts items reported in abstracts of meta-analyses of RCTs

| Variable | N | Mean (SD)ᵃ | Mean difference (95 % CI)ᵃ | p valueᵃ | Adjusted mean (95 % CI)ᵇ | Adjusted mean difference (95 % CI)ᵇ | p valueᵇ |
|---|---|---|---|---|---|---|---|
| Year | | | | | | | |
| - 2012 (ref) | 84 | 6.7 (1.6) | | | 7.0 (6.5; 7.5) | | <0.001* |
| - 2014 | 59 | 6.8 (1.6) | 0.1 (−0.4; 0.7) | 0.694 | 6.9 (6.4; 7.4) | −0.1 (−0.6; 0.4) | 0.711** |
| - 2015 | 61 | 7.5 (1.6) | 0.8 (0.2; 1.3) | 0.007 | 7.8 (7.4; 8.3) | 0.9 (0.4; 1.3) | 0.001*** |
| Abstract word count | | | | | | | |
| - <300 (ref) | 30 | 8.3 (2.0) | | | 6.9 (6.3; 7.4) | | |
| - ≥300 | 113 | 6.7 (1.4) | −0.3 (−1.3; −1.0) | <0.001 | 7.6 (6.8; 8.4) | 0.7 (−0.4; 1.8) | 0.184 |
| PRISMA endorsement | | | | | | | |
| - Non-endorser journals (ref) | 43 | 7.9 (1.9) | | | 7.7 (7.1; 8.2) | | |
| - Endorser journals | 100 | 6.6 (1.4) | −1.3 (−1.9; −0.7) | <0.001 | 6.8 (6.2; 7.4) | −0.8 (−1.7; 0.04) | 0.063 |
| Abstract format | | | | | | | |
| - IMRAD (ref) | 47 | 6.4 (1.6) | | | 6.9 (6.3; 7.4) | | |
| - 8-headings | 96 | 7.3 (1.6) | 0.8 (0.4; 1.3) | <0.001 | 7.6 (7.2; 8.0) | 0.7 (0.2; 1.2) | 0.008 |
| Publication on behalf of a group | | | | | | | |
| - No (ref) | 131 | 7.0 (1.7) | | | 7.3 (7.0; 7.7) | | |
| - Yes | 12 | 6.7 (1.4) | −0.3 (−1.1; 0.4) | 0.394 | 7.1 (6.5; 7.8) | −0.2 (−0.8; 0.5) | 0.557 |
| Number of authors | | | | | | | |
| - ≤6 (ref) | 64 | 6.9 (1.6) | | | 7.2 (6.7; 7.6) | | |
| - >6 | 79 | 7.0 (1.7) | 0.1 (−0.4; 0.6) | 0.659 | 7.3 (6.9; 7.7) | 0.2 (−0.3; 0.6) | 0.464 |

Ref reference for mean difference calculation, PRISMA preferred reporting items for systematic review and meta-analysis, IMRAD introduction, methods, results, and discussion

ᵃ Student’s t test; * global p value

ᵇ Generalized estimating equations with journal as grouping variable. Goodness of fit: Corrected Quasi-likelihood under Independence Model Criterion = 1827.12; Quasi-likelihood under Independence Model Criterion = 1829.28

After Bonferroni correction for multiple comparisons, values are **1.0 and *** 0.002
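The footnote values can be reproduced with a Bonferroni adjustment over two comparisons (2014 versus 2012 and 2015 versus 2012). That the correction covered exactly these two comparisons is an inference from the reported values, not a detail stated in the Methods. A minimal sketch with a hypothetical function name:

```python
def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p value by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Adjusted-model p values for 2014 vs 2012 and 2015 vs 2012 from Table 2.
print(bonferroni([0.711, 0.001]))  # [1.0, 0.002]
```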

Seven items were reported in more than 50 % of the abstracts: title, objectives, eligibility criteria, included studies, synthesis of results, description of the effect, and interpretation. Four items were reported in less than 50 % of the abstracts: information sources, strengths and limitations of evidence, funding, and registration. Reporting of risk of bias hovered around 50 % and varied across years, from 49.2 % to 67.8 % (Table 3).

Table 3.

Reporting quality of items of the PRISMA for abstracts of meta-analyses of RCT

| Item | Criterion | 2015 (n = 61) | 2014 (n = 59) | 2012 (n = 84) |
|---|---|---|---|---|
| Title | Identify the report as a meta-analysis | 60 (98.4) | 58 (98.3) | 78 (92.9) |
| Objectives | The research question including components such as participants, interventions, comparators, and outcomes | 52 (85.2) | 45 (76.3) | 68 (81.0) |
| Eligibility criteria | Study and report characteristics used as criteria for inclusion | 53 (86.9) | 51 (86.4) | 76 (90.5) |
| Information sources | Key databases searched and search dates | 7 (11.5) | 15 (25.4) | 11 (13.1) |
| Risk of bias | Methods of assessing risk of bias | 30 (49.2) | 40 (67.8) | 43 (51.2) |
| Included studies | Number and type of included studies and participants and relevant characteristics of studies | 36 (59.0) | 35 (59.3) | 54 (64.3) |
| Synthesis of results | Results for main outcomes (benefits and harms), including summary measures and confidence intervals | 61 (100) | 42 (71.2) | 57 (67.9) |
| Description of the effect | Direction of the effect and size of the effect in terms meaningful to clinicians and patients | 46 (75.4) | 34 (57.6) | 51 (60.7) |
| Strengths and limitations of evidence | Brief summary of strengths and limitations of evidence | 12 (19.7) | 18 (30.5) | 33 (39.3) |
| Interpretation | General interpretation of the results and important implications | 61 (100) | 51 (86.4) | 69 (82.1) |
| Funding | Primary source of funding for the review | 24 (39.3) | 12 (20.3) | 24 (28.6) |
| Registration | Registration number and registry name | 14 (23.0) | 3 (5.1) | 2 (2.4) |

In univariable analysis, the only statistically significant change in abstract reporting in 2014 compared with 2012 was an improvement for “risk of bias” (crude OR [cOR] 2.0; 95 % CI 1.003–4.0). After adjustment, “risk of bias” was no longer statistically significant, whereas “strengths and limitations of evidence” (adjusted OR [aOR] 0.23; 95 % CI 0.07–0.71) and “funding” (aOR 0.25; 95 % CI 0.07–0.81) were statistically associated with poorer reporting in 2014 compared with 2012 (Table 4).
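The crude odds ratio for “risk of bias” can be checked from the counts in Table 3 (2014: 40 of 59 abstracts; 2012: 43 of 84). The standard-library Python sketch below uses the Woolf (log) method for the 95 % CI and the Pearson chi-squared test without continuity correction. These are common textbook choices assumed here, since the article does not state which variants SPSS applied, and the function names are hypothetical.

```python
import math
from statistics import NormalDist

Z_975 = NormalDist().inv_cdf(0.975)


def crude_or_woolf(a, b, c, d):
    """Crude OR (a*d)/(b*c) with a Woolf 95 % CI.

    a, b: abstracts reporting / not reporting the item in the later year;
    c, d: the same counts for 2012.
    """
    if 0 in (a, b, c, d):
        raise ValueError("Woolf CI is undefined when a cell is empty")
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_or = math.log(odds_ratio)
    return odds_ratio, math.exp(log_or - Z_975 * se_log), math.exp(log_or + Z_975 * se_log)


def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-squared statistic (no continuity correction) and its p value, df = 1."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For one degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return chi2, math.erfc(math.sqrt(chi2 / 2))


print(crude_or_woolf(40, 19, 43, 41))
print(pearson_chi2_2x2(40, 19, 43, 41))
```

This yields an OR of about 2.01 (95 % CI 1.003 to 4.02) and p ≈ 0.048, in line with the “risk of bias” row of Table 4.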

Table 4.

Comparison of reporting quality of items of the PRISMA for abstracts of meta-analyses of RCT

| Item | 2014 vs 2012: crude OR (95 % CI)ᵃ | p | 2015 vs 2012: crude OR (95 % CI)ᵃ | p | 2014 vs 2012: adjusted OR (95 % CI)ᵇ | p | 2015 vs 2012: adjusted OR (95 % CI)ᵇ | p |
|---|---|---|---|---|---|---|---|---|
| Title | 4.5 (0.52; 38.1) | 0.240 | 4.6 (0.54; 39.4) | 0.239 | 4.4 (0.55; 35.1) | 0.163 | 4.5 (0.46; 43.7) | 0.199 |
| Objectives | 0.76 (0.34; 1.7) | 0.499 | 1.4 (0.56; 3.3) | 0.499 | 0.78 (0.33; 1.8) | 0.564 | 1.6 (0.66; 4.1) | 0.286 |
| Eligibility criteria | 0.67 (0.24; 1.9) | 0.451 | 0.70 (0.25; 2.0) | 0.496 | 0.58 (0.17; 2.0) | 0.390 | 0.75 (0.25; 2.3) | 0.617 |
| Information sources | 2.3 (0.95; 5.4) | 0.060 | 0.86 (0.31; 2.4) | 0.770 | 2.0 (0.70; 5.5) | 0.197 | 0.82 (0.26; 2.6) | 0.740 |
| Risk of bias | 2.0 (1.003; 4.0) | 0.048 | 0.92 (0.48; 1.8) | 0.811 | 1.2 (0.55; 2.7) | 0.625 | 0.79 (0.36; 1.7) | 0.550 |
| Included studies | 0.81 (0.41; 1.6) | 0.547 | 0.80 (0.41; 1.6) | 0.519 | 1.1 (0.52; 2.2) | 0.834 | 0.98 (0.50; 2.7) | 0.956 |
| Synthesis of results | 1.2 (0.57; 2.4) | 0.671 | Not estimable | <0.001 | 1.1 (0.52; 2.5) | 0.738 | Not estimable | |
| Description of the effect | 0.88 (0.45; 1.7) | 0.711 | 2.0 (0.96; 4.1) | 0.063 | 0.93 (0.45; 1.9) | 0.849 | 2.7 (1.2; 6.1) | 0.014 |
| Strengths and limitations of evidence | 0.68 (0.34; 1.4) | 0.281 | 0.38 (0.18; 0.82) | 0.012 | 0.23 (0.07; 0.71) | 0.011 | 0.13 (0.03; 0.66) | 0.013 |
| Interpretation | 1.4 (0.07; 2.8) | 0.474 | Not estimable | <0.001 | 1.9 (0.58; 5.9) | 0.297 | Not estimable | |
| Funding | 0.64 (0.29; 1.4) | 0.264 | 1.6 (0.81; 3.3) | 0.174 | 0.25 (0.07; 0.81) | 0.021 | 1.5 (0.56; 4.0) | 0.425 |
| Registration | 2.2 (0.36; 13.6) | 0.404 | 12.2 (2.7; 56.1) | <0.001 | 1.9 (0.30; 12.4) | 0.485 | 10.8 (2.3; 49.6) | 0.002 |

CI confidence interval, OR odds ratio

ᵃ Chi-squared test or Fisher’s exact test where necessary

ᵇ Generalized estimating equations with journal as grouping variable, adjusted for abstract word count (<300 versus ≥300), PRISMA endorser journal (yes versus no), abstract format (IMRAD versus 8-headings), publication on behalf of a group (yes versus no), and number of authors (≤6 versus >6)

In univariable analysis, reporting improved significantly in 2015 compared with 2012 for “synthesis of results” (cOR not estimable, p < 0.001), “interpretation” (cOR not estimable, p < 0.001), and “registration” (cOR 12.2; 95 % CI 2.7–56.1), whereas it declined for “strengths and limitations of evidence” (cOR 0.38; 95 % CI 0.18–0.82). After adjustment, “synthesis of results” (aOR not estimable, p < 0.001), “description of the effect” (aOR 2.7; 95 % CI 1.2–6.1), “interpretation” (aOR not estimable, p < 0.001), and “registration” (aOR 10.8; 95 % CI 2.3–49.6) were statistically associated with better reporting in 2015 compared with 2012, while “strengths and limitations of evidence” was statistically associated with poorer reporting (aOR 0.13; 95 % CI 0.03–0.66) (Table 4).

In 2014, factors statistically associated with better overall reporting were structuring the abstract in the 8-headings format rather than the IMRAD format (aIRR 1.26; 95 % CI 1.02–1.56) and an abstract word count <300 (aIRR 1.20; 95 % CI 1.09–1.35). The only factor statistically associated with better reporting of the “Methods” section was an abstract word count <300 (aIRR 1.43; 95 % CI 1.16–1.72). None of the investigated factors was statistically associated with better reporting of the “Results” section (Table 5).
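Table 5 reports word count with <300 words as the reference (≥300: aIRR 0.83; 95 % CI 0.74–0.92), whereas the text expresses the same association with ≥300 as the reference (aIRR 1.20; 95 % CI 1.09–1.35). For ratio measures from log-linear models, swapping the reference category turns exp(β) into exp(−β), so the estimate and both confidence limits are simply inverted (and the limits swap places). A minimal sketch with a hypothetical helper name:

```python
def flip_reference(estimate, lower, upper):
    """Re-express a ratio (IRR or OR) and its CI with the reference category swapped."""
    return 1 / estimate, 1 / upper, 1 / lower


# Table 5, abstract word count >=300 versus <300 (overall and "Methods" models).
for label, ratio in [("Overall", (0.83, 0.74, 0.92)), ("Methods", (0.70, 0.58, 0.86))]:
    est, lo, hi = flip_reference(*ratio)
    print(f"{label}: aIRR {est:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

This reproduces the values quoted in the text: 1.20 (1.09–1.35) overall and 1.43 (1.16–1.72) for the “Methods” section.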

Table 5.

Factors associated with a better reporting of items of PRISMA for abstracts published in 2014

| Variable | Overall reporting: aIRR (95 % CI)ᵃ | p | Methods reporting: aIRR (95 % CI)ᵃ | p | Results reporting: aIRR (95 % CI)ᵃ | p |
|---|---|---|---|---|---|---|
| Abstract word count | | | | | | |
| <300 (ref) | 1 | | 1 | | 1 | |
| ≥300 | 0.83 (0.74; 0.92) | <0.001 | 0.70 (0.58; 0.86) | <0.001 | 1.02 (0.78; 1.33) | 0.878 |
| Abstract format | | | | | | |
| IMRAD (ref) | 1 | | 1 | | 1 | |
| 8-headings | 1.26 (1.02; 1.56) | 0.036 | 1.40 (0.94; 2.10) | 0.099 | 1.41 (0.91; 2.20) | 0.126 |
| Publication on behalf of a group | | | | | | |
| No (ref) | 1 | | 1 | | 1 | |
| Yes | 1.01 (0.88; 1.15) | 0.911 | 0.85 (0.52; 1.38) | 0.497 | 0.99 (0.62; 1.58) | 0.965 |
| Number of authors | | | | | | |
| ≤6 (ref) | 1 | | 1 | | 1 | |
| >6 | 1.02 (0.91; 1.14) | 0.761 | 0.89 (0.73; 1.08) | 0.242 | 1.05 (0.78; 1.41) | 0.743 |

Ref reference category, aIRR adjusted incidence rate ratio, PRISMA preferred reporting items for systematic review and meta-analysis, IMRAD introduction, methods, results, and discussion

ᵃ Generalized estimating equations with journal as grouping variable. The PRISMA endorser variable was excluded from the model because it was redundant

In 2015, the only factor statistically associated with better overall reporting was an abstract word count <300 (≥300 versus <300: aIRR 0.80; 95 % CI 0.74–0.87). Factors statistically associated with better reporting of the “Methods” section were an abstract word count <300 (p < 0.001), structuring the abstract in the 8-headings format rather than the IMRAD format (aIRR 1.33; 95 % CI 1.08–1.65), and not publishing on behalf of a group (aIRR 1.37; 95 % CI 1.04–1.82). No factor was statistically associated with better reporting of the “Results” section (Table 6).

Table 6.

Factors associated with a better reporting of items of PRISMA for abstracts published in 2015

| Variable | Overall reporting: aIRR (95 % CI)ᵃ | p | Methods reporting: aIRR (95 % CI)ᵃ | p | Results reporting: aIRR (95 % CI)ᵃ | p |
|---|---|---|---|---|---|---|
| Abstract word count | | | | | | |
| <300 (ref) | 1 | | 1 | | 1 | |
| ≥300 | 0.80 (0.74; 0.87) | <0.001 | 0.86 (0.59; 0.79) | <0.001 | 1.03 (0.87; 1.22) | 0.731 |
| Abstract format | | | | | | |
| IMRAD (ref) | 1 | | 1 | | 1 | |
| 8-headings | 1.06 (0.96; 1.17) | 0.279 | 1.33 (1.08; 1.65) | 0.008 | 1.04 (0.87; 1.24) | 0.701 |
| Publication on behalf of a group | | | | | | |
| No (ref) | 1 | | 1 | | 1 | |
| Yes | 0.90 (0.80; 1.00) | 0.055 | 0.73 (0.55; 0.96) | 0.027 | 0.85 (0.64; 1.13) | 0.271 |
| Number of authors | | | | | | |
| ≤6 (ref) | 1 | | 1 | | 1 | |
| >6 | 1.04 (0.97; 1.12) | 0.251 | 1.03 (0.89; 1.19) | 0.721 | 1.02 (0.88; 1.18) | 0.790 |

Ref reference category, aIRR adjusted incidence rate ratio, PRISMA preferred reporting items for systematic review and meta-analysis, IMRAD introduction, methods, results, and discussion

ᵃ Generalized estimating equations with journal as grouping variable. The PRISMA endorser variable was excluded from the model because it was redundant

Discussion

This study aimed to assess, according to the PRISMA-A checklist, differences in the quality of reporting of abstracts of systematic reviews and meta-analyses of RCTs published in top-tier general medicine journals before (in 2012) and after (in 2014 and 2015) publication of the PRISMA extension for abstracts [14]. Our findings show that the overall reporting quality of abstracts did not improve in 2014 compared with the pre-PRISMA-A period, although it improved slightly in 2015. In 2014, none of the 12 items improved and two regressed; in 2015, four items improved and one regressed. Factors associated with better overall reporting were the 8-headings format (in 2014) and an abstract word count <300 (in 2014 and 2015).

These results are consistent with previous studies that reported inconsistent and suboptimal reporting quality of abstracts across journals and fields of medicine over time [21, 27–35]. As demonstrated by Hopewell and colleagues, there are serious deficiencies in the reporting of abstracts of systematic reviews, which make it difficult for readers to reliably assess study findings [36], although endorsement of the PRISMA guidelines has been reported to increase adherence to its recommendations [37].

Use of the 8-headings format was associated with better overall adherence to the PRISMA-A guidelines in 2014 and with better reporting of the “Methods” section in 2015. Indeed, structured abstracts have been advocated to improve reporting quality [21, 22]. Surprisingly, an abstract word count <300 was associated with better overall and “Methods” section reporting in both 2014 and 2015. This suggests that 300 words is not too short to provide useful, complete, and comprehensive information; for the CONSORT guidelines, it has likewise been shown that checklist items can easily fit within a limit of 250 to 300 words [20]. We found that endorsement of PRISMA was not statistically associated with better abstract reporting. Therefore, editors, reviewers, and authors should ensure that the guidelines for reporting abstracts are respected, not only those for full texts, as Hopewell and colleagues found large discrepancies in reporting quality between abstracts and full texts [36].

Our study has some limitations. First, it was based on a limited number of published studies; nonetheless, it included all abstracts of meta-analyses of RCTs from high-impact general medicine journals found in PubMed. Second, our sample may not be representative of all medical journals because we selected only general medicine journals with high impact factors. Further studies are needed to investigate all types of systematic reviews and to screen randomly selected abstracts, not only those from top-tier journals. However, given that the reporting quality of abstracts of systematic reviews including meta-analyses is suboptimal even in these top journals, it may well be lower in other journals; hence, this call for better reporting standards may apply more broadly.

Conclusions

The reporting quality of abstracts of systematic reviews including meta-analyses of RCTs in leading general medicine journals did not improve in 2014 after the publication of the PRISMA-A guidelines and improved only slightly in 2015. There is still room for improvement to meet the standards of the PRISMA-A guidelines. Better structuring of abstracts and stricter adherence to PRISMA-A by authors, reviewers, and journal editors are highly warranted.

Acknowledgements

None.

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Availability of data and materials

Additional file 3 contains the complete list of PMIDs of the included studies, with journal names. Other data are available on request.

Authors’ contributions

JJRB contributed to the study conception and design and statistical analysis. JJRB and LNU contributed to the study selection and data extraction. JJRB and JRNN contributed to the data interpretation. JJRB drafted the manuscript. JJRB, LNU and JRNN revised the manuscript. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Not applicable.

Abbreviations

- CONSORT

Consolidated Standards of Reporting Trials

- GEE

Generalized estimating equations

- IMRAD

Introduction, Methods, Results, and Discussion

- PRISMA-A

Preferred Reporting Items for Systematic Reviews and Meta-Analyses for Abstracts

- RCT

Randomized controlled trial

Additional files

Additional file 1: PRISMA reporting checklist. (DOC 63 kb)

Additional file 2: Search strategy for systematic reviews including meta-analysis of randomized controlled trials published in 2012, 2014 and 2015 in high-impact general medicine journals. (DOCX 15 kb)

Additional file 3: PMID list of included studies with the corresponding journal names and years of publication. (XLSX 14 kb)

Contributor Information

Jean Joel R. Bigna, Email: bignarimjj@yahoo.fr

Lewis N. Um, Email: lumnyobe@yahoo.fr

Jobert Richie N. Nansseu, Email: jobertrichie_nansseu@yahoo.fr

References

1. Hutton B, Salanti G, Caldwell DM, Chaimani A, Schmid CH, Cameron C, Ioannidis JP, Straus S, Thorlund K, Jansen JP, et al. The PRISMA extension statement for reporting of systematic reviews incorporating network meta-analyses of health care interventions: checklist and explanations. Ann Intern Med. 2015;162(11):777–84. doi: 10.7326/M14-2385.

2. Concato J, Shah N, Horwitz RI. Randomized, controlled trials, observational studies, and the hierarchy of research designs. N Engl J Med. 2000;342(25):1887–92. doi: 10.1056/NEJM200006223422507.

3. Stolberg HO, Norman G, Trop I. Randomized controlled trials. AJR Am J Roentgenol. 2004;183(6):1539–44. doi: 10.2214/ajr.183.6.01831539.

4. Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, Shekelle P, Stewart LA. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015;4:1. doi: 10.1186/2046-4053-4-1.

5. Oxman AD, Cook DJ, Guyatt GH. Users’ guides to the medical literature. VI. How to use an overview. Evidence-Based Medicine Working Group. JAMA. 1994;272(17):1367–71. doi: 10.1001/jama.1994.03520170077040.

6. Swingler GH, Volmink J, Ioannidis JP. Number of published systematic reviews and global burden of disease: database analysis. BMJ. 2003;327(7423):1083–4. doi: 10.1136/bmj.327.7423.1083.

7. Smith H, Bukirwa H, Mukasa O, Snell P, Adeh-Nsoh S, Mbuyita S, Honorati M, Orji B, Garner P. Access to electronic health knowledge in five countries in Africa: a descriptive study. BMC Health Serv Res. 2007;7:72. doi: 10.1186/1472-6963-7-72.

8. The impact of open access upon public health. PLoS Med. 2006;3(5):e252.

9. Islamaj Dogan R, Murray GC, Neveol A, Lu Z. Understanding PubMed user search behavior through log analysis. Database (Oxford). 2009;2009:bap018. doi: 10.1093/database/bap018.

10. Froom P, Froom J. Deficiencies in structured medical abstracts. J Clin Epidemiol. 1993;46(7):591–4. doi: 10.1016/0895-4356(93)90029-Z.

11. Hartley J. Clarifying the abstracts of systematic literature reviews. Bull Med Libr Assoc. 2000;88(4):332–7.

12. A proposal for more informative abstracts of clinical articles. Ad Hoc Working Group for Critical Appraisal of the Medical Literature. Ann Intern Med. 1987;106(4):598–604.

13. Haynes RB, Mulrow CD, Huth EJ, Altman DG, Gardner MJ. More informative abstracts revisited. Cleft Palate Craniofac J. 1996;33(1):1–9. doi: 10.1597/1545-1569-33.1.1.

14. Beller EM, Glasziou PP, Altman DG, Hopewell S, Bastian H, Chalmers I, Gotzsche PC, Lasserson T, Tovey D. PRISMA for Abstracts: reporting systematic reviews in journal and conference abstracts. PLoS Med. 2013;10(4):e1001419. doi: 10.1371/journal.pmed.1001419.

15. Beller EM, Glasziou PP, Hopewell S, Altman DG. Reporting of effect direction and size in abstracts of systematic reviews. JAMA. 2011;306(18):1981–2. doi: 10.1001/jama.2011.1620.

16. Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. J Clin Epidemiol. 2009;62(10):1006–12. doi: 10.1016/j.jclinepi.2009.06.005.

17. Impact Factor List 2014. http://www.citefactor.org/. Accessed 25 Apr 2016.

18. PRISMA Endorsers. http://www.prisma-statement.org/Endorsement/PRISMAEndorsers.aspx. Accessed 28 Apr 2016.

19. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360–3.

20. Hopewell S, Clarke M, Moher D, Wager E, Middleton P, Altman DG, Schulz KF. CONSORT for reporting randomised trials in journal and conference abstracts. Lancet. 2008;371(9609):281–3. doi: 10.1016/S0140-6736(07)61835-2.

21. Ghimire S, Kyung E, Lee H, Kim E. Oncology trial abstracts showed suboptimal improvement in reporting: a comparative before-and-after evaluation using CONSORT for Abstract guidelines. J Clin Epidemiol. 2014;67(6):658–66. doi: 10.1016/j.jclinepi.2013.10.012.

22. Dupuy A, Khosrotehrani K, Lebbe C, Rybojad M, Morel P. Quality of abstracts in 3 clinical dermatology journals. Arch Dermatol. 2003;139(5):589–93. doi: 10.1001/archderm.139.5.589.

23. Figg WD, Dunn L, Liewehr DJ, Steinberg SM, Thurman PW, Barrett JC, Birkinshaw J. Scientific collaboration results in higher citation rates of published articles. Pharmacotherapy. 2006;26(6):759–67. doi: 10.1592/phco.26.6.759.

24. Willis DL, Bahler CD, Neuberger MM, Dahm P. Predictors of citations in the urological literature. BJU Int. 2011;107(12):1876–80. doi: 10.1111/j.1464-410X.2010.10028.x.

25. Lee SY, Teoh PJ, Camm CF, Agha RA. Compliance of randomized controlled trials in trauma surgery with the CONSORT statement. J Trauma Acute Care Surg. 2013;75(4):562–72. doi: 10.1097/TA.0b013e3182a5399e.

26. Camm CF, Chen Y, Sunderland N, Nagendran M, Maruthappu M, Camm AJ. An assessment of the reporting quality of randomised controlled trials relating to anti-arrhythmic agents (2002–2011). Int J Cardiol. 2013;168(2):1393–6. doi: 10.1016/j.ijcard.2012.12.020.

27. Can OS, Yilmaz AA, Hasdogan M, Alkaya F, Turhan SC, Can MF, Alanoglu Z. Has the quality of abstracts for randomised controlled trials improved since the release of Consolidated Standards of Reporting Trial guideline for abstract reporting? A survey of four high-profile anaesthesia journals. Eur J Anaesthesiol. 2011;28(7):485–92. doi: 10.1097/EJA.0b013e32833fb96f.

28. Samaan Z, Mbuagbaw L, Kosa D, Borg Debono V, Dillenburg R, Zhang S, Fruci V, Dennis B, Bawor M, Thabane L. A systematic scoping review of adherence to reporting guidelines in health care literature. J Multidiscip Healthc. 2013;6:169–88. doi: 10.2147/JMDH.S43952.

29. Hopewell S, Ravaud P, Baron G, Boutron I. Effect of editors’ implementation of CONSORT guidelines on the reporting of abstracts in high impact medical journals: interrupted time series analysis. BMJ. 2012;344:e4178. doi: 10.1136/bmj.e4178.

30. Fleming PS, Buckley N, Seehra J, Polychronopoulou A, Pandis N. Reporting quality of abstracts of randomized controlled trials published in leading orthodontic journals from 2006 to 2011. Am J Orthod Dentofacial Orthop. 2012;142(4):451–8. doi: 10.1016/j.ajodo.2012.05.013.

31. Knobloch K, Vogt PM. Adherence to CONSORT abstract reporting suggestions in surgical randomized-controlled trials published in Annals of Surgery. Ann Surg. 2011;254(3):546. doi: 10.1097/SLA.0b013e31822ad829.

32. Seehra J, Wright NS, Polychronopoulou A, Cobourne MT, Pandis N. Reporting quality of abstracts of randomized controlled trials published in dental specialty journals. J Evid Based Dent Pract. 2013;13(1):1–8. doi: 10.1016/j.jebdp.2012.11.001.

33. Chen Y, Li J, Ai C, Duan Y, Wang L, Zhang M, Hopewell S. Assessment of the quality of reporting in abstracts of randomized controlled trials published in five leading Chinese medical journals. PLoS One. 2010;5(8):e11926. doi: 10.1371/journal.pone.0011926.

34. Ghimire S, Kyung E, Kang W, Kim E. Assessment of adherence to the CONSORT statement for quality of reports on randomized controlled trial abstracts from four high-impact general medical journals. Trials. 2012;13:77. doi: 10.1186/1745-6215-13-77.

35. Mbuagbaw L, Thabane M, Vanniyasingam T, Borg Debono V, Kosa S, Zhang S, Ye C, Parpia S, Dennis BB, Thabane L. Improvement in the quality of abstracts in major clinical journals since CONSORT extension for abstracts: a systematic review. Contemp Clin Trials. 2014;38(2):245–50. doi: 10.1016/j.cct.2014.05.012.

36. Hopewell S, Boutron I, Altman DG, Ravaud P. Deficiencies in the publication and reporting of the results of systematic reviews presented at scientific medical conferences. J Clin Epidemiol. 2015;68(12):1488–95. doi: 10.1016/j.jclinepi.2015.03.006.

37. Panic N, Leoncini E, de Belvis G, Ricciardi W, Boccia S. Evaluation of the endorsement of the preferred reporting items for systematic reviews and meta-analysis (PRISMA) statement on the quality of published systematic review and meta-analyses. PLoS One. 2013;8(12):e83138. doi: 10.1371/journal.pone.0083138.
